
- Number of slides: 17

GridX1: A Canadian Computational Grid for HEP Applications

A. Agarwal, P. Armstrong, M. Ahmed, B. L. Caron, A. Charbonneau, R. Desmarais, I. Gable, L. S. Groer, R. Haria, R. Impey, L. Klektau, C. Lindsay, G. Mateescu, Q. Matthews, A. Norton, W. Podaima, S. Popov, D. Quesnel, S. Ramage, R. Simmonds, R. J. Sobie, B. St. Arnaud, D. C. Vanderster, M. Vetterli, R. Walker

- CANARIE Inc., Ottawa, Ontario, Canada
- Institute of Particle Physics of Canada
- National Research Council, Ottawa, Ontario, Canada
- TRIUMF, Vancouver, British Columbia, Canada
- University of Alberta, Edmonton, Canada
- University of Calgary, Canada
- Simon Fraser University, Burnaby, British Columbia, Canada
- University of Toronto, Ontario, Canada
- University of Victoria, British Columbia, Canada

HEPiX Fall 2006, JLab, Oct 9-13

Ian Gable, University of Victoria/HEPnet Canada


Overview

- Motivation
- The GridX1 Framework
  - Middleware, Metascheduling, Monitoring
- User Applications
  - BaBar and ATLAS
- Web Services for GridX1


Motivation

- Particle physics (HEP) simulations are “embarrassingly parallel”: multiple instances of serial (integer) jobs
- We want to exploit the unused cycles at non-HEP sites
  - Support dedicated and shared facilities
  - Each shared facility may have unique configuration requirements
  - Minimal software demands on sites
- We want to develop a general grid
  - Open to other applications (serial, integer)


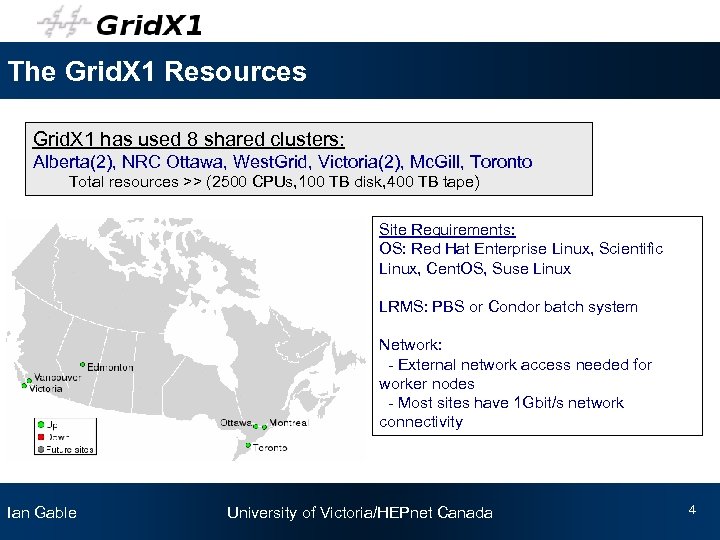

The GridX1 Resources

GridX1 has used 8 shared clusters: Alberta (2), NRC Ottawa, WestGrid, Victoria (2), McGill, Toronto

Total resources >> (2500 CPUs, 100 TB disk, 400 TB tape)

Site Requirements:

- OS: Red Hat Enterprise Linux, Scientific Linux, CentOS, SUSE Linux
- LRMS: PBS or Condor batch system
- Network:
  - External network access needed for worker nodes
  - Most sites have 1 Gbit/s network connectivity


The GridX1 Infrastructure

- Grid Middleware
  - Virtual Data Toolkit (VDT): packaged version of Globus Toolkit 2.4
    - VDT is more stable than vanilla GT2
  - We are evaluating GT4 and web services (more on this later)
- Security and User Management
  - GridX1 hosts require an X.509 certificate issued by the Grid Canada Certificate Authority
  - User certificates from trusted CAs around the world are accepted
    - Authorization is managed at site level in a grid-mapfile
    - User certificates are mapped to local Unix accounts
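The mapping from user certificates to local accounts can be illustrated with a minimal Python sketch. It assumes the usual Globus grid-mapfile layout: one entry per line, with a double-quoted certificate subject followed by one or more comma-separated local account names. The function names, sample subjects, and account names are hypothetical and are not taken from any GridX1 site.

```python
import shlex


def parse_grid_mapfile(text):
    """Return {certificate subject DN: [local account names]}."""
    mapping = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and comments
        # shlex handles the double-quoted DN, which may contain spaces.
        parts = shlex.split(line)
        if len(parts) != 2:
            raise ValueError(f"malformed grid-mapfile line: {raw!r}")
        dn, accounts = parts
        mapping[dn] = accounts.split(",")
    return mapping


def authorize(mapping, dn):
    """Return the first local account mapped to dn, or None if not listed."""
    accounts = mapping.get(dn)
    return accounts[0] if accounts else None


# Hypothetical entries, for illustration only.
SAMPLE = '''
# subject DN                                  local account(s)
"/C=CA/O=Grid/OU=example.ca/CN=Jane Doe" babar001
"/C=CA/O=Grid/OU=example.ca/CN=John Roe" atlas001,atlas002
'''

if __name__ == "__main__":
    site_map = parse_grid_mapfile(SAMPLE)
    print(authorize(site_map, "/C=CA/O=Grid/OU=example.ca/CN=Jane Doe"))
```

Because each site keeps its own file, a site can accept a certificate from any trusted CA while still deciding locally which users get an account.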


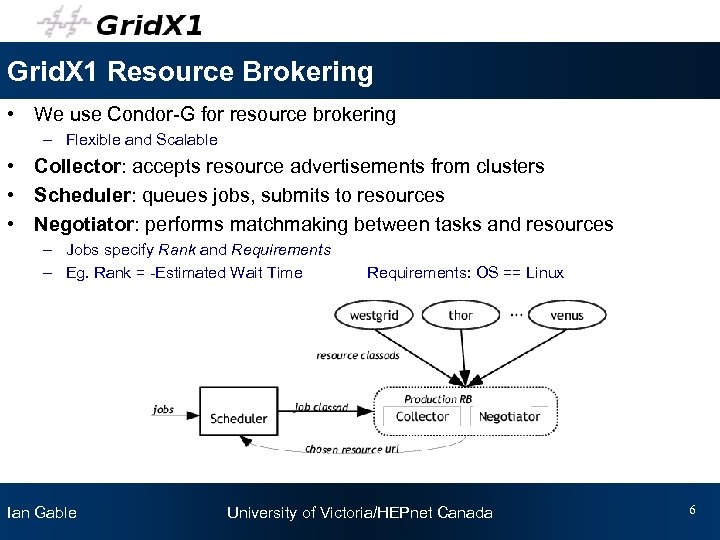

GridX1 Resource Brokering

- We use Condor-G for resource brokering
  - Flexible and scalable
    - Collector: accepts resource advertisements from clusters
    - Scheduler: queues jobs, submits to resources
    - Negotiator: performs matchmaking between tasks and resources
  - Jobs specify Rank and Requirements
  - E.g. Rank = -Estimated Wait Time, Requirements: OS == Linux
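As a rough illustration of the matchmaking step, the sketch below filters resource advertisements by a job's Requirements and then picks the one with the highest Rank. It is a simplified model written in plain Python, not Condor code: real Condor matchmaking evaluates ClassAd expressions and also lets resources state their own requirements. The attribute names and cluster names here are hypothetical.

```python
def matchmake(job, resources):
    """Return the eligible resource with the highest rank, or None."""
    # Requirements: keep only resources that the job accepts.
    eligible = [r for r in resources if job["requirements"](r)]
    if not eligible:
        return None
    # Rank: the highest value wins among the eligible resources.
    return max(eligible, key=job["rank"])


# Hypothetical resource advertisements, as a collector might hold them.
RESOURCES = [
    {"Name": "cluster-a", "OS": "Linux", "EstimatedWaitTime": 1200},
    {"Name": "cluster-b", "OS": "Linux", "EstimatedWaitTime": 300},
    {"Name": "cluster-c", "OS": "Solaris", "EstimatedWaitTime": 10},
]

# Mirrors the slide's example: Requirements: OS == Linux,
# Rank = -Estimated Wait Time (a shorter wait gives a higher rank).
JOB = {
    "requirements": lambda r: r["OS"] == "Linux",
    "rank": lambda r: -r["EstimatedWaitTime"],
}

if __name__ == "__main__":
    best = matchmake(JOB, RESOURCES)
    print(best["Name"] if best else "no match")
```

Negating the wait time turns "shortest queue" into "highest rank", so cluster-c is excluded by Requirements even though its wait is shortest.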


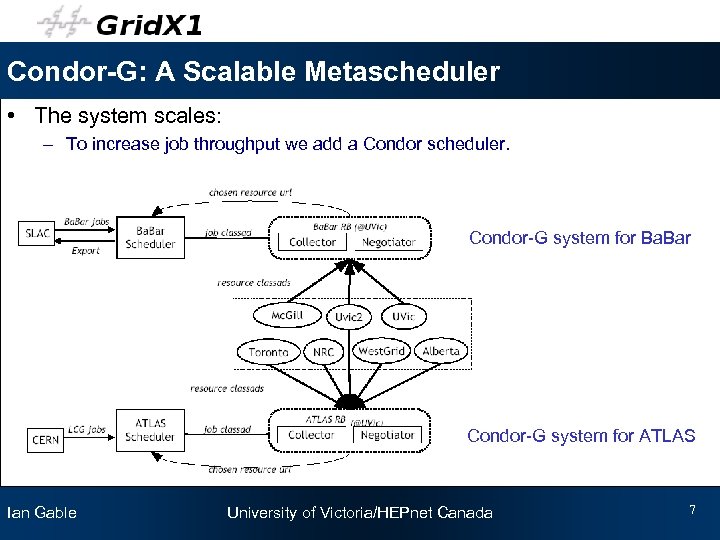

Condor-G: A Scalable Metascheduler

- The system scales:
  - To increase job throughput we add a Condor scheduler.

(Diagram panels: Condor-G system for BaBar; Condor-G system for ATLAS)


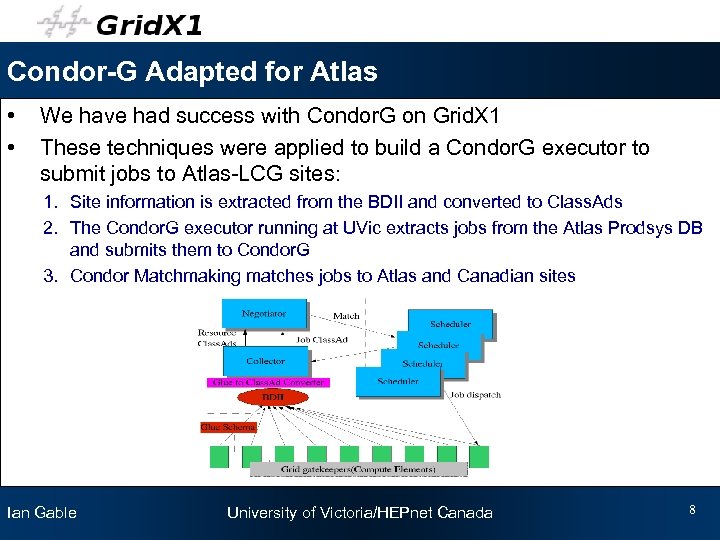

Condor-G Adapted for ATLAS

- We have had success with Condor-G on GridX1
- These techniques were applied to build a Condor-G executor to submit jobs to ATLAS-LCG sites:
  1. Site information is extracted from the BDII and converted to ClassAds
  2. The Condor-G executor running at UVic extracts jobs from the ATLAS Prodsys DB and submits them to Condor-G
  3. Condor matchmaking matches jobs to ATLAS and Canadian sites
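Step 1 above can be sketched as follows. This is only an illustration of the idea, not the executor's actual code: it assumes a BDII entry has already been fetched over LDAP and is available as a dictionary of GLUE attribute strings, and it emits a ClassAd in the simple `Attribute = value` text form. The ClassAd attribute names on the output side and the sample host are hypothetical.

```python
def glue_to_classad(entry):
    """Map a few GLUE CE attributes (LDAP strings) to ClassAd-style values."""
    return {
        "Name": entry["GlueCEUniqueID"],
        # LDAP returns strings, so numeric attributes are converted to int.
        "FreeCPUs": int(entry["GlueCEStateFreeCPUs"]),
        "WaitingJobs": int(entry["GlueCEStateWaitingJobs"]),
        "EstimatedWaitTime": int(entry["GlueCEStateEstimatedResponseTime"]),
    }


def render_classad(ad):
    """Render a dict as ClassAd text: strings are quoted, numbers are bare."""
    lines = []
    for key, value in ad.items():
        if isinstance(value, str):
            escaped = value.replace("\\", "\\\\").replace('"', '\\"')
            lines.append(f'{key} = "{escaped}"')
        else:
            lines.append(f"{key} = {value}")
    return "\n".join(lines)


# Hypothetical BDII entry, for illustration only.
SAMPLE_ENTRY = {
    "GlueCEUniqueID": "ce.example.org:2119/jobmanager-pbs-atlas",
    "GlueCEStateFreeCPUs": "12",
    "GlueCEStateWaitingJobs": "4",
    "GlueCEStateEstimatedResponseTime": "300",
}

if __name__ == "__main__":
    print(render_classad(glue_to_classad(SAMPLE_ENTRY)))
```

Once site information is in ClassAd form, a job's Rank and Requirements expressions can refer to it in the same way as for native GridX1 resources.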


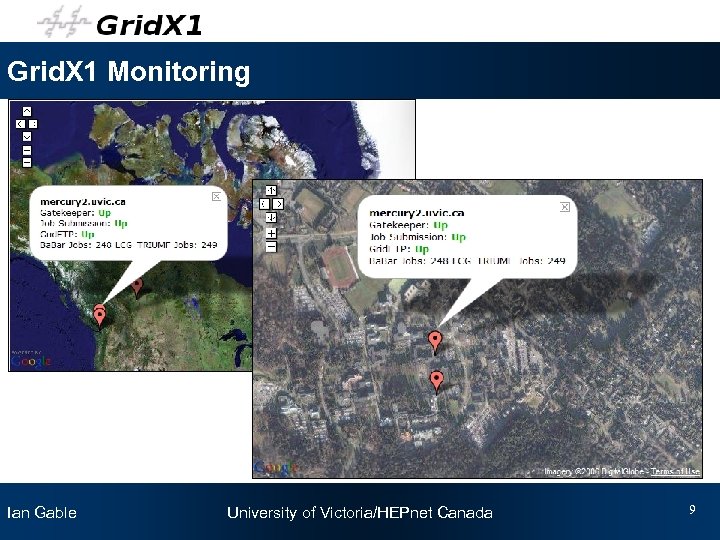

GridX1 Monitoring

GridX1 is monitored using a Google Maps mashup


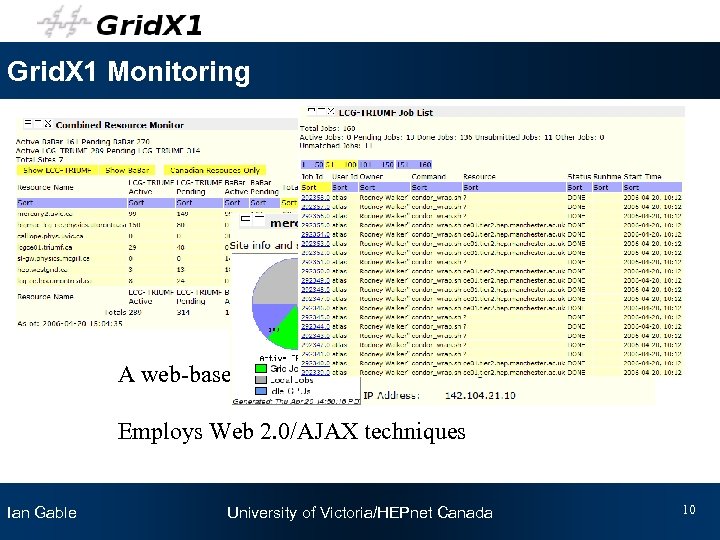

GridX1 Monitoring

- A web-based dynamic resource monitor
- Employs Web 2.0/AJAX techniques


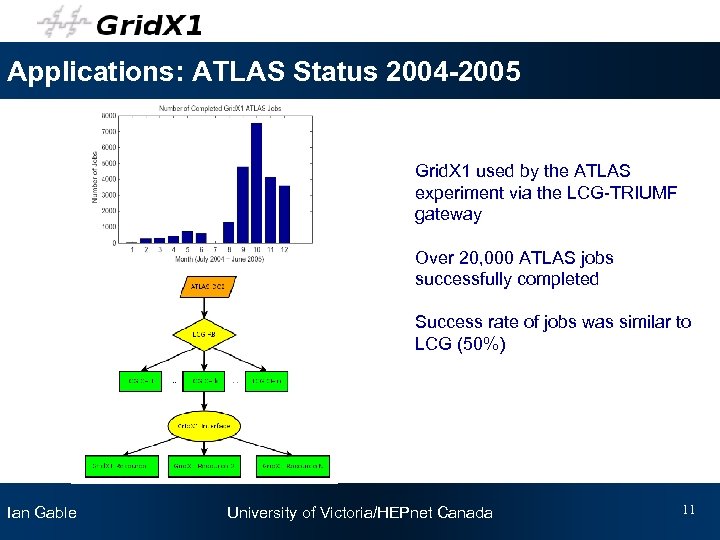

Applications: ATLAS Status 2004-2005

- GridX1 used by the ATLAS experiment via the LCG-TRIUMF gateway
- Over 20,000 ATLAS jobs successfully completed
- Success rate of jobs was similar to LCG (50%)


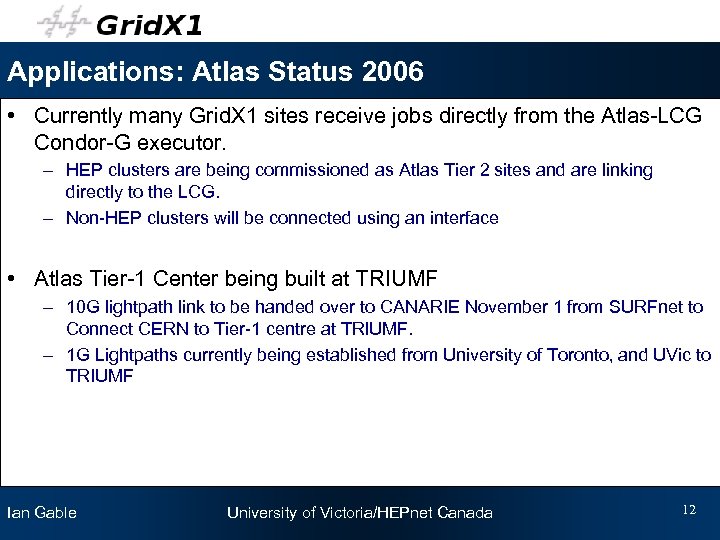

Applications: ATLAS Status 2006

- Currently many GridX1 sites receive jobs directly from the ATLAS-LCG Condor-G executor.
  - HEP clusters are being commissioned as ATLAS Tier-2 sites and are linking directly to the LCG.
  - Non-HEP clusters will be connected using an interface
- ATLAS Tier-1 centre being built at TRIUMF
  - 10G lightpath link connecting CERN to the Tier-1 centre at TRIUMF to be handed over from SURFnet to CANARIE on November 1.
  - 1G lightpaths currently being established from University of Toronto and UVic to TRIUMF


Applications: ATLAS Future Plans

- Effort will be focused on recommissioning a GridX1 interface to facilitate addition of non-HEP sites
  - Non-LCG resources are integrated into LCG without all LCG middleware
  - Greatly simplifies the management of shared resources
- VMs such as Xen can be used to simplify the requirements at non-HEP sites
- CHEP 2006 paper: Evaluation of Virtual Machines for HEP Grids
  - We showed that the ATLAS kit validation suffered a negligible performance penalty when run on a Xen virtual machine.
  - We plan to research deploying pre-packaged ATLAS and BaBar images to GridX1 sites.


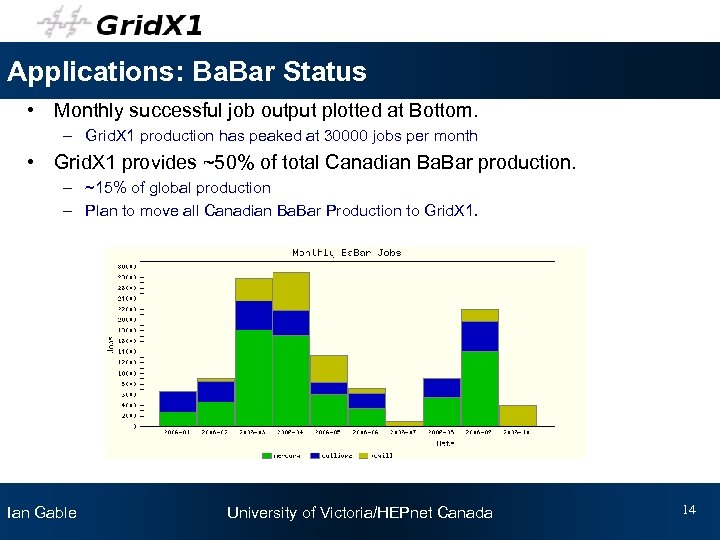

Applications: BaBar Status

- Monthly successful job output plotted at bottom.
  - GridX1 production has peaked at 30,000 jobs per month
- GridX1 provides ~50% of total Canadian BaBar production.
  - ~15% of global production
  - Plan to move all Canadian BaBar production to GridX1.


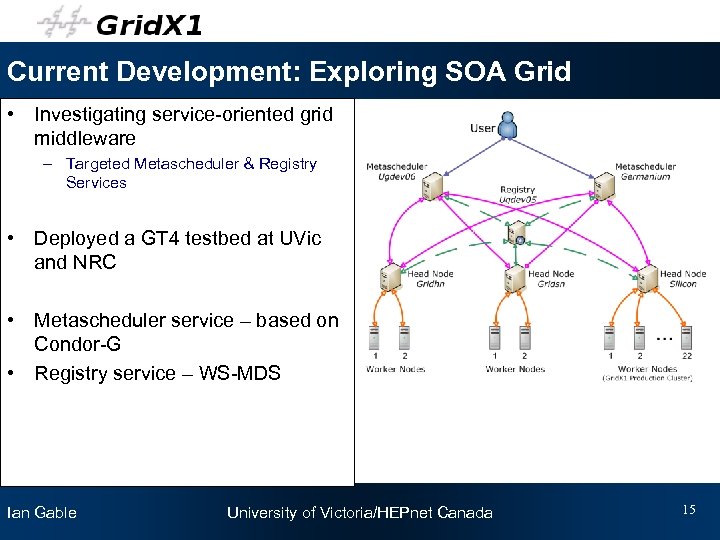

Current Development: Exploring SOA Grid

- Investigating service-oriented grid middleware
  - Targeted Metascheduler & Registry Services
- Deployed a GT4 testbed at UVic and NRC
- Metascheduler service
  - Based on Condor-G
- Registry service
  - WS-MDS


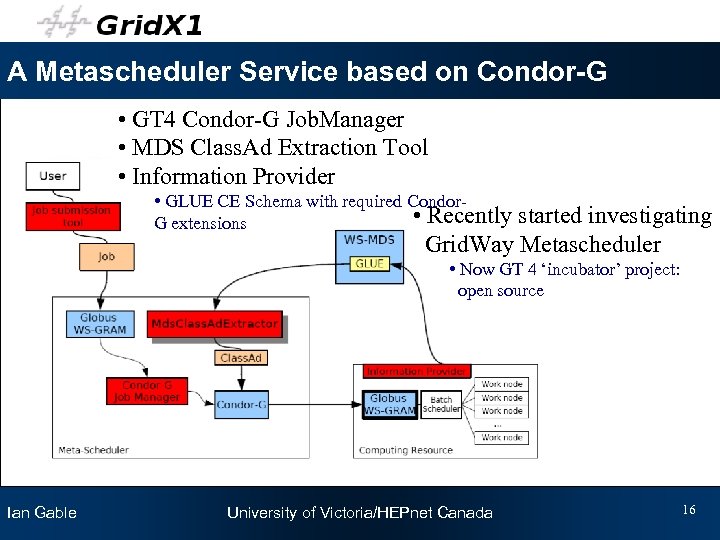

A Metascheduler Service based on Condor-G

- GT4 Condor-G JobManager
- MDS ClassAd Extraction Tool
- Information Provider
- GLUE CE Schema with required Condor-G extensions
- Recently started investigating GridWay Metascheduler
- Now GT4 ‘incubator’ project: open source Condor-G Job Manager


Summary

- Built upon proven technologies: VDT, Condor-G
- GridX1 allows us to exploit unused resources at HEP and non-HEP sites
- Dynamic grid monitor available.
- GridX1 usage by ATLAS and BaBar applications is successful
  - Used for ATLAS DC2 from July 2004 to June 2005
  - Receiving jobs from the ATLAS executor in 2006
  - ~1000 BaBar jobs run daily
- Moving towards a Web Services based architecture.
