- Number of slides: 18

Grid Tutorial, NIKHEF Amsterdam, 3-4 June 2004
www.eu-egee.org
HEP Use Cases for Grid Computing
J. A. Templon
Undecided (NIKHEF)
EGEE is a project funded by the European Union under contract IST-2003-508833

Contents
• The HEP Computing Problem
• How it matches the Grid Computing Idea
• Some HEP “Use Cases” & Approaches
NIKHEF Grid Tutorial, 3-4 June 2004 - 2

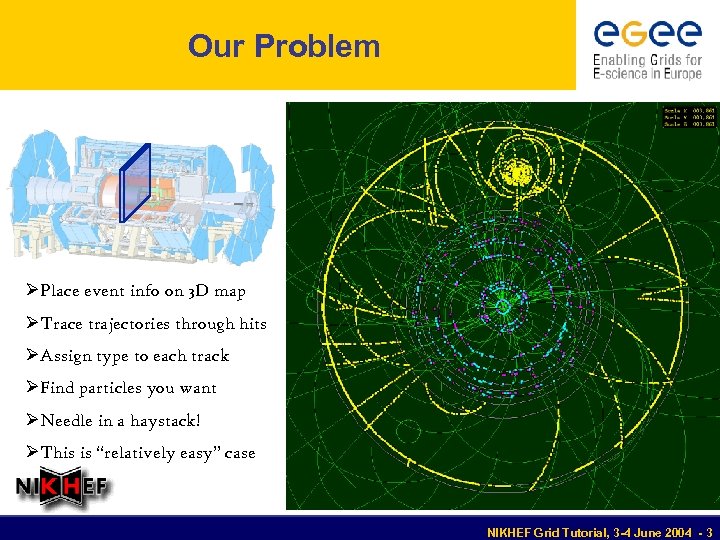

Our Problem
Ø Place event info on a 3D map
Ø Trace trajectories through hits
Ø Assign a type to each track
Ø Find the particles you want
Ø Needle in a haystack!
Ø This is the “relatively easy” case
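
The “trace trajectories through hits” step can be illustrated, in a heavily simplified form, as a least-squares straight-line fit to hit positions. This is a minimal sketch that assumes a field-free detector (straight tracks) and hits already assigned to a single track. Real reconstruction must handle curved tracks and pattern recognition. The helper name `fit_straight_track` is hypothetical.

```python
from typing import List, Tuple


def fit_straight_track(hits: List[Tuple[float, float]]) -> Tuple[float, float]:
    """Least-squares fit of x = slope * z + intercept to (z, x) hit positions.

    Hypothetical helper: assumes straight tracks (no magnetic field) and that
    all hits belong to the same particle.
    """
    n = len(hits)
    if n < 2:
        raise ValueError("need at least two hits to define a track")
    sum_z = sum(z for z, _ in hits)
    sum_x = sum(x for _, x in hits)
    sum_zz = sum(z * z for z, _ in hits)
    sum_zx = sum(z * x for z, x in hits)
    denom = n * sum_zz - sum_z ** 2
    if denom == 0:
        raise ValueError("hits must be at distinct z positions")
    slope = (n * sum_zx - sum_z * sum_x) / denom
    intercept = (sum_x - slope * sum_z) / n
    return slope, intercept


if __name__ == "__main__":
    # Hits lying exactly on x = 2z + 1, one per detector layer at z = 0..3
    hits = [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0), (3.0, 7.0)]
    print(fit_straight_track(hits))  # (2.0, 1.0)
```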

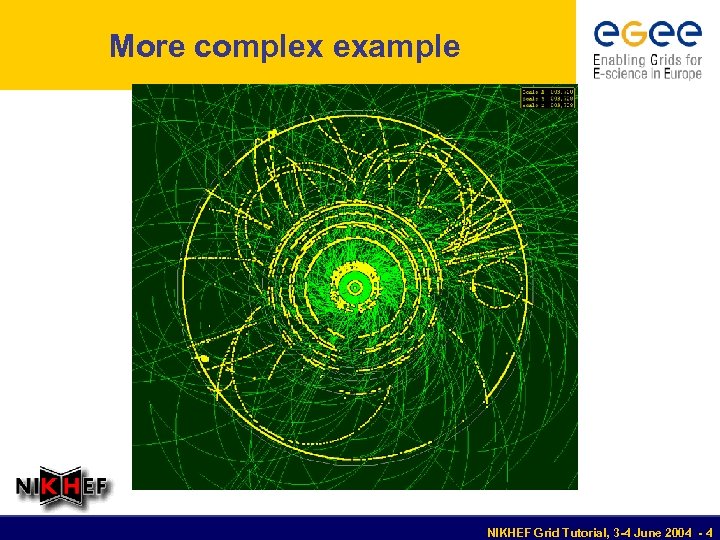

More complex example

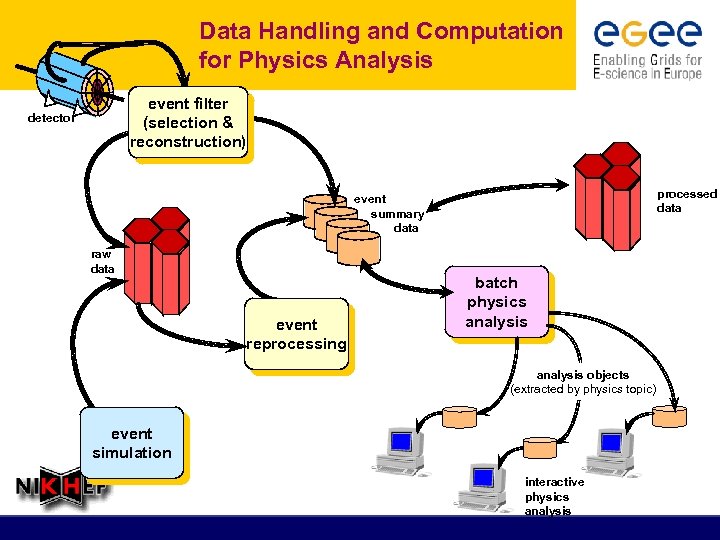

Data Handling and Computation for Physics Analysis
Diagram labels: event filter (selection & reconstruction); detector; processed data; event summary data; raw data; event reprocessing; batch physics analysis; analysis objects (extracted by physics topic); event simulation; interactive physics analysis

Scales
• To reconstruct and analyze 1 event takes about 90 seconds
• Maybe only a few out of a million are interesting. But we have to check them all!
• The analysis program needs lots of calibration, determined from inspecting the results of the first pass.
• Each event will be analyzed several times!

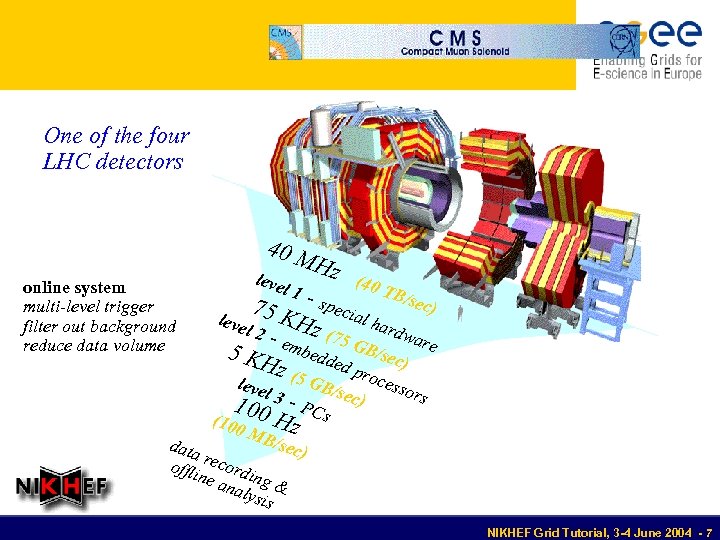

One of the four LHC detectors
Online system: multi-level trigger to filter out background and reduce data volume
• 40 MHz (40 TB/sec) → level 1: special hardware
• 75 kHz (75 GB/sec) → level 2: embedded processors
• 5 kHz (5 GB/sec) → level 3: PCs
• 100 Hz (100 MB/sec) → data recording & offline analysis
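
The rates and bandwidths on this slide can be cross-checked with a few lines of arithmetic. Dividing each bandwidth by its event rate gives the implied event size. Successive rate ratios give each trigger level's rejection factor. This is a minimal sketch that assumes decimal units (1 TB = 10^12 bytes) and uses only the numbers shown on the slide.

```python
# (stage, event rate in Hz, bandwidth in bytes/s), numbers taken from the slide;
# decimal units are an assumption (1 TB = 1e12 bytes, 1 GB = 1e9, 1 MB = 1e6)
STAGES = [
    ("detector output", 40e6, 40e12),
    ("after level 1", 75e3, 75e9),
    ("after level 2", 5e3, 5e9),
    ("after level 3", 100.0, 100e6),
]


def event_sizes(stages):
    """Implied event size in bytes at each stage: bandwidth / rate."""
    return [bw / rate for _, rate, bw in stages]


def rejection_factors(stages):
    """Rate reduction achieved by each successive trigger level."""
    return [stages[i][1] / stages[i + 1][1] for i in range(len(stages) - 1)]


if __name__ == "__main__":
    print(event_sizes(STAGES))        # about 1 MB per event at every stage
    print(rejection_factors(STAGES))  # level 1, level 2, level 3
    total = STAGES[0][1] / STAGES[-1][1]
    print(f"overall rejection: {total:.0f}")  # 400000
```

The event size stays at about 1 MB throughout. The volume reduction therefore comes entirely from keeping fewer events, not from shrinking each one.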

Scales (2)
• 90 seconds per event to reconstruct and analyze
• 100 incoming events per second
• To keep up, need either:
  § A computer that is nine thousand times faster, or
  § nine thousand computers working together
• Moore’s Law: wait 20 years and computers will be 9000 times faster (we need them in 2007!)
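
The “nine thousand” figure is the processing time per event multiplied by the incoming event rate. The Moore’s-law remark implies a performance doubling time of roughly 18 months. The check below works through both numbers from the slide. The function names are illustrative only.

```python
import math

SECONDS_PER_EVENT = 90   # reconstruction + analysis time per event (slide)
EVENTS_PER_SECOND = 100  # incoming event rate (slide)


def cpus_to_keep_up(seconds_per_event, events_per_second):
    """CPUs needed so that processing keeps pace with data taking."""
    return seconds_per_event * events_per_second


def implied_doubling_time(speedup, years):
    """Doubling time (years) needed for performance to grow by `speedup` in `years`."""
    return years / math.log2(speedup)


if __name__ == "__main__":
    need = cpus_to_keep_up(SECONDS_PER_EVENT, EVENTS_PER_SECOND)
    print(need)                                       # 9000
    print(round(implied_doubling_time(need, 20), 2))  # 1.52 (years)
```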

Computational {Impli, Compli}cations
• Four LHC experiments – roughly 36k CPUs needed
• BUT: accelerator not always “on” – need fewer
• BUT: multiple passes per event – need more!
• BUT: haven’t accounted for Monte Carlo production – more!!
• AND: haven’t addressed the needs of “physics users” at all!
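
One way to read this slide is as a baseline (four experiments at roughly 9,000 CPUs each) scaled by correction factors that push the estimate down or up. The sketch below assumes that the corrections simply multiply. The factor names are hypothetical, and the slide gives no values for them, so they default to 1.

```python
def cpu_estimate(n_experiments=4, cpus_per_experiment=9000,
                 duty_cycle=1.0, passes_per_event=1.0, mc_factor=1.0):
    """Rough CPU count; all correction factors are hypothetical multipliers.

    duty_cycle < 1 models the accelerator not always being "on" (need fewer),
    passes_per_event > 1 models multiple passes per event (need more), and
    mc_factor > 1 models extra Monte Carlo production (need more).
    Physics-user analysis is deliberately not modelled, as on the slide.
    """
    return (n_experiments * cpus_per_experiment
            * duty_cycle * passes_per_event * mc_factor)


if __name__ == "__main__":
    print(cpu_estimate())  # 36000.0, the slide's "roughly 36k CPUs"
```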

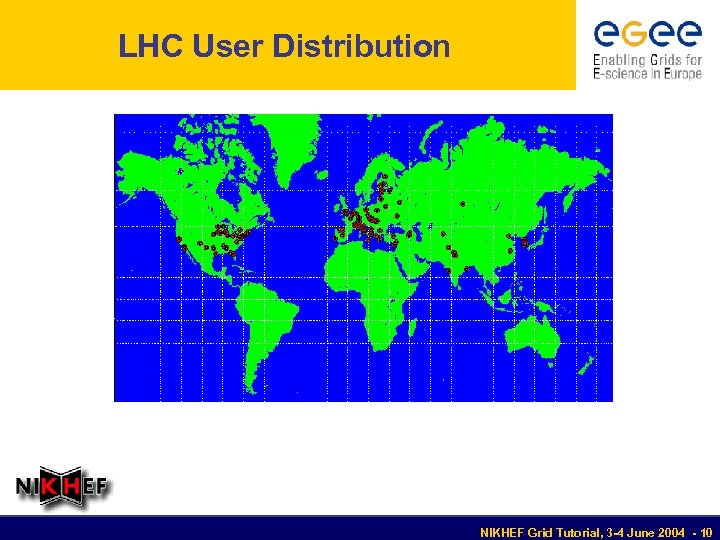

LHC User Distribution

Classic Motivation for Grids
• Trivially parallel problem
• Large Scales: 100k CPUs, petabytes of data
  § (If we’re only talking ten machines, who cares?)
• Large Dynamic Range: bursty usage patterns
  § Why buy 25k CPUs if 60% of the time you only need 900 CPUs?
• Multiple user groups (& purposes) on a single system
  § Can’t “hard-wire” the system for your purposes
• Wide-area access requirements
  § Users not in the same lab or even on the same continent
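
A utilization estimate makes the dynamic-range question concrete. The slide only states that 900 CPUs suffice 60% of the time. Purely for illustration, the sketch assumes that the full 25k CPUs are busy for the remaining 40% of the time. This two-level demand profile is an assumption, not data from the talk.

```python
def average_utilization(peak_cpus, base_cpus, base_fraction):
    """Fraction of an owned peak-sized farm that is busy on average.

    Assumes demand is base_cpus for base_fraction of the time and
    peak_cpus for the rest (an illustrative two-level demand profile).
    """
    average_demand = base_fraction * base_cpus + (1 - base_fraction) * peak_cpus
    return average_demand / peak_cpus


if __name__ == "__main__":
    u = average_utilization(peak_cpus=25_000, base_cpus=900, base_fraction=0.6)
    print(f"{u:.0%} of purchased CPUs busy on average")  # 42%
```

Under this assumption, a farm sized for the peak would sit idle more than half the time.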

Solution using Grids
• Trivially parallel: break up the problem into “appropriate”-sized pieces
• Large Scales: 100k CPUs, petabytes of data
  § Assemble 100k+ CPUs and petabytes of mass storage
  § Don’t need to be in the same place!
• Large Dynamic Range: bursty usage patterns
  § When you need less than you have, others use the excess capacity
  § When you need more, use others’ excess capacity
• Multiple user groups on a single system
  § “Generic” grid software services (think web server here)
• Wide-area access requirements
  § Public Key Infrastructure for authentication & authorization
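
“Trivially parallel” means that each piece can be processed without communicating with the others, and the results are simply combined at the end. The minimal local sketch below assumes a stand-in per-event function (`process_event` is hypothetical). It uses Python's `concurrent.futures` process pool in place of grid worker nodes.

```python
from concurrent.futures import ProcessPoolExecutor


def process_event(event_id):
    """Hypothetical stand-in for reconstructing one event."""
    return event_id % 7 == 0  # pretend every 7th event is "interesting"


def process_chunk(event_ids):
    """One job: handle a contiguous block of events independently."""
    return sum(1 for e in event_ids if process_event(e))


def split(n_events, chunk_size):
    """Break the event range into pieces of chunk_size (the last may be shorter)."""
    return [range(start, min(start + chunk_size, n_events))
            for start in range(0, n_events, chunk_size)]


if __name__ == "__main__":
    chunks = split(n_events=10_000, chunk_size=1_000)
    with ProcessPoolExecutor() as pool:
        # Chunks share nothing, so they can run in any order on any worker
        selected = sum(pool.map(process_chunk, chunks))
    print(len(chunks), selected)
```

The choice of `chunk_size` is the “appropriate size” trade-off. Pieces that are too small pay scheduling overhead on every job. Pieces that are too large lose more work when a job fails.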

HEP Use Cases
• Simulation
• Data (Re)Processing
• Physics Analysis
General ideas presented here … contact us for detailed info

Simulation
• The easiest use case
  § No input data
  § Output can be to a central location
  § Bookkeeping not really a problem (lost jobs OK)
• Define program version and parameters
• Tune # of events produced per run to a “reasonable” value
• Submit (needed events)/(events per job) jobs
• Wait
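
The submission recipe amounts to a ceiling division plus a loop. The sketch below assumes a hypothetical `submit_job` callback, which is not a real grid middleware call. It also gives each job its own random seed so that the runs produce independent events. The job-description fields are illustrative.

```python
def plan_simulation(needed_events, events_per_job, program_version, base_seed=1):
    """Return one (hypothetical) job description per run."""
    # Integer ceiling division: enough jobs to cover every needed event
    n_jobs = -(-needed_events // events_per_job)
    jobs = []
    for i in range(n_jobs):
        # The last job may produce fewer events so the total matches exactly
        n = min(events_per_job, needed_events - i * events_per_job)
        jobs.append({"version": program_version, "events": n, "seed": base_seed + i})
    return jobs


def submit_all(jobs, submit_job):
    """Submit every job; lost simulation jobs are acceptable, so no retry logic."""
    for job in jobs:
        submit_job(job)


if __name__ == "__main__":
    jobs = plan_simulation(needed_events=1_000_000, events_per_job=5_000,
                           program_version="v1")
    submit_all(jobs, submit_job=lambda job: None)  # placeholder submitter
    print(len(jobs))  # 200
```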

Data (Re)Processing
• Quite a bit more challenging: there are input files, and you can’t lose jobs
• One job per input file (so far)
• Data distribution strategy
• Monitoring and bookkeeping
• Software distribution
• Traceability of output (“provenance”)
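
Reprocessing jobs cannot be lost, so each input file needs a bookkeeping record that closes only once its output is confirmed. The minimal in-memory sketch below assumes a hypothetical `run_job` callable that returns True on success. A production system would persist this state rather than keep it in memory. The provenance record here (input file, software version, attempt number) is an illustrative choice.

```python
from dataclasses import dataclass, field


@dataclass
class Bookkeeper:
    """Track one job per input file until every file has been processed."""
    max_attempts: int = 3
    attempts: dict = field(default_factory=dict)
    done: dict = field(default_factory=dict)  # input file -> provenance record

    def run_all(self, input_files, run_job, software_version):
        pending = list(input_files)
        while pending:
            f = pending.pop(0)
            self.attempts[f] = self.attempts.get(f, 0) + 1
            if run_job(f):
                # Provenance: which software produced the output from which input
                self.done[f] = {"input": f, "version": software_version,
                                "attempt": self.attempts[f]}
            elif self.attempts[f] < self.max_attempts:
                pending.append(f)  # resubmit rather than lose the file
        # Files missing from `done` exhausted their retries and need attention
        return [f for f in input_files if f not in self.done]


if __name__ == "__main__":
    flaky = {"raw_002.dat": 1}  # hypothetical: this file's first job fails

    def run_job(f):
        if flaky.get(f, 0) > 0:
            flaky[f] -= 1
            return False
        return True

    bk = Bookkeeper()
    failed = bk.run_all(["raw_001.dat", "raw_002.dat"], run_job, "v2")
    print(failed, bk.done["raw_002.dat"]["attempt"])  # [] 2
```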

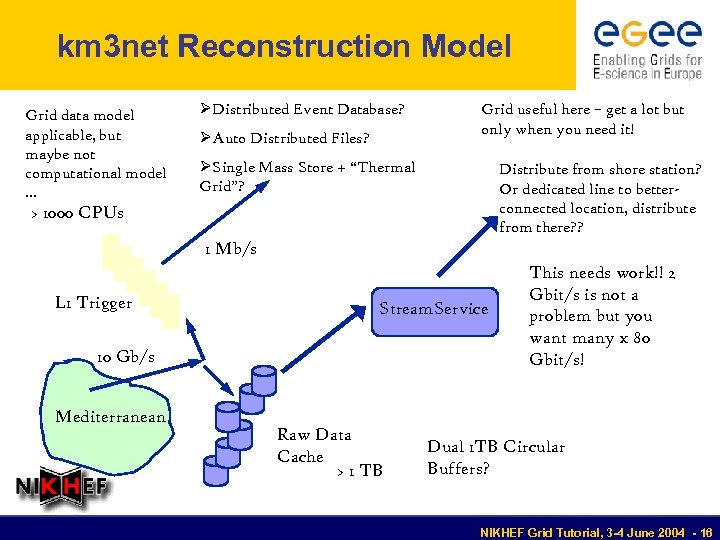

km3net Reconstruction Model
Grid data model applicable, but maybe not computational model …
Ø Distributed Event Database?
Ø Auto Distributed Files?
Grid useful here – get a lot but only when you need it!
Ø Single Mass Store + “Thermal Grid”?
Distribute from shore station? Or dedicated line to a better-connected location, distribute from there?
Diagram labels: > 1000 CPUs; 1 Mb/s; L1 Trigger; StreamService; 10 Gb/s; Mediterranean; Raw Data Cache > 1 TB; Dual 1 TB Circular Buffers?
Diagram note: This needs work!! 2 Gbit/s is not a problem but you want many x 80 Gbit/s!
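
A buffer's fill time is its capacity divided by the incoming bandwidth, which gives a feel for the buffering question. The sketch uses the slide's 1 TB cache and its 10 Gb/s and 80 Gbit/s figures. It assumes decimal units (1 TB = 10^12 bytes, 1 Gb/s = 10^9 bits/s) and that nothing is drained while the buffer fills.

```python
def fill_time_seconds(capacity_bytes, bandwidth_bits_per_s):
    """Seconds until a buffer of the given capacity is full (assumes no draining)."""
    return capacity_bytes * 8 / bandwidth_bits_per_s


if __name__ == "__main__":
    one_tb = 1e12  # decimal terabyte (assumption)
    for gbps in (10, 80):
        t = fill_time_seconds(one_tb, gbps * 1e9)
        print(f"{gbps} Gb/s fills 1 TB in {t:.0f} s ({t / 60:.1f} min)")
```

At 10 Gb/s a 1 TB cache holds only about 800 s (roughly 13 minutes) of data, and at 80 Gbit/s only 100 s. This is consistent with the slide flagging the link and buffer design as needing work.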

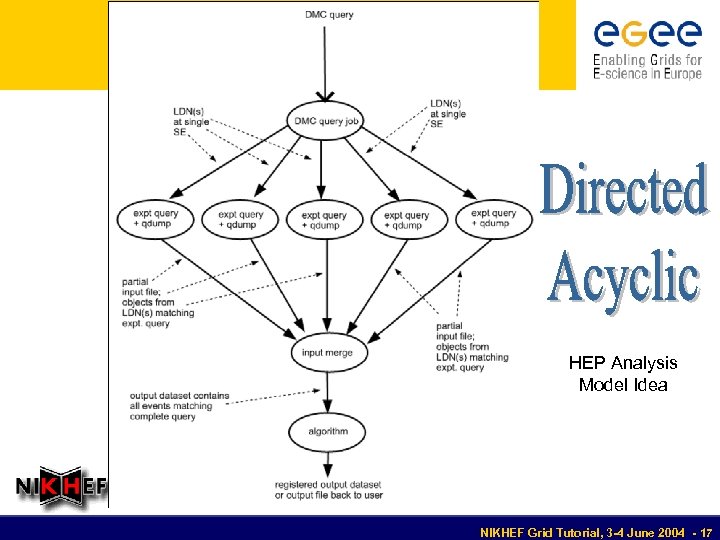

HEP Analysis Model Idea

Conclusions
• HEP computing is well-suited to Grids
• HEP is using Grids now
• There is a lot of (fun) work to do!
