- Number of slides: 24

Grid Projects: EU DataGrid and LHC Computing Grid
Oxana Smirnova, Lund University
October 29, 2003, Košice
oxana.smirnova@hep.lu.se

Outlook
- Precursors: attempts to meet the tasks of HEP computing
- EDG: the first global Grid development project
- LCG: deploy a computing environment for the LHC experiments

Characteristics of HEP computing
- Event independence
  - Data from each collision is processed independently: trivial parallelism
  - Mass of independent problems with no information exchange
- Massive data storage
  - Modest event size: 1 to 10 MB (not ALICE though)
  - Total is very large: petabytes for each experiment
- Mostly read only
  - Data is never changed after recording to tertiary storage
  - But it is read often! A tape is mounted at CERN every second!
- Resilience rather than ultimate reliability
  - Individual components should not bring down the whole system
  - Jobs on failed equipment are rescheduled
- Modest floating point needs
  - HEP computations involve decision making rather than calculation
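A minimal sketch of the "trivial parallelism" point, assuming a hypothetical `process_event` function and made-up event records (neither comes from the slides). Each event is handled on its own, with no information exchanged between events, so the work can be spread over any number of workers.

```python
from concurrent.futures import ThreadPoolExecutor


def process_event(event):
    """Hypothetical per-event analysis: a selection decision, not heavy arithmetic."""
    # Accept the event if any energy deposit exceeds a made-up threshold.
    return {"id": event["id"], "accepted": max(event["deposits"]) > 50.0}


def process_run(events, workers=4):
    """Process every event independently; no state is shared between events."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # map() preserves input order, so results line up with the events.
        return list(pool.map(process_event, events))


# Made-up sample events for illustration only.
events = [
    {"id": 1, "deposits": [12.0, 75.5]},
    {"id": 2, "deposits": [3.2, 8.9]},
    {"id": 3, "deposits": [51.0]},
]
results = process_run(events)
accepted_ids = [r["id"] for r in results if r["accepted"]]
```

A thread pool is used here only to keep the sketch short; on a real cluster or Grid the same pattern would map events, or files of events, onto separate processes or jobs.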

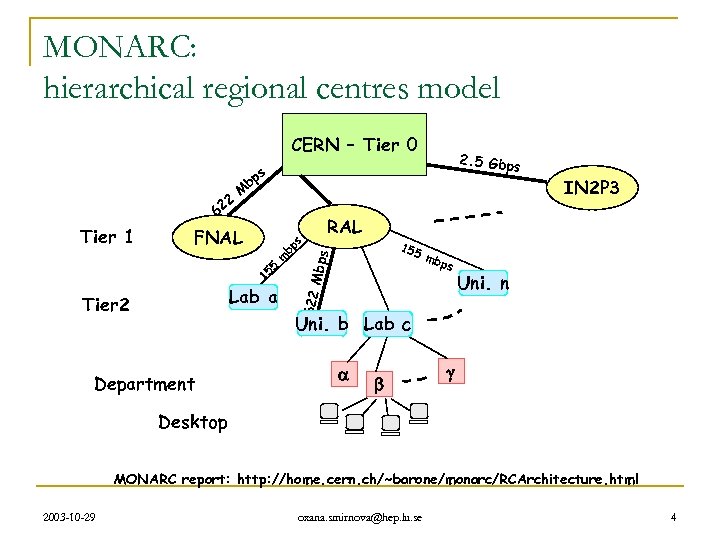

MONARC: hierarchical regional centres model
[Diagram: CERN as Tier 0; Tier 1 centres RAL, IN2P3 and FNAL; Tier 2 sites such as Lab a, Uni b, Lab c and Uni n; below them Department and Desktop. Link labels in the figure include 622 Mbps, 155 Mbps and 2.5 Gbps.]
MONARC report: http://home.cern.ch/~barone/monarc/RCArchitecture.html

EU DataGrid project
- In certain aspects it was initiated as a MONARC follow-up, introducing Grid technologies
- Started on January 1, 2001, to deliver by the end of 2003
  - Aim: to develop Grid middleware suitable for High Energy Physics, Earth Observation, biomedical applications and live demonstrations
  - 9.8 MEuros of EU funding over 3 years
  - Development based on existing tools, e.g., Globus, LCFG, GDMP etc.
- Maintains development and applications testbeds, which include several sites across Europe

EDG overview: main partners (slide by EU DataGrid)
- CERN (international, Switzerland/France)
- CNRS (France)
- ESA/ESRIN (international, Italy)
- INFN (Italy)
- NIKHEF (The Netherlands)
- PPARC (UK)

EDG overview: assistant partners (slide by EU DataGrid)
- Industrial partners
  - Datamat (Italy)
  - IBM-UK (UK)
  - CS-SI (France)
- Research and academic institutes
  - CESNET (Czech Republic)
  - Commissariat à l'énergie atomique (CEA), France
  - Computer and Automation Research Institute, Hungarian Academy of Sciences (MTA SZTAKI)
  - Consiglio Nazionale delle Ricerche (Italy)
  - Helsinki Institute of Physics, Finland
  - Institut de Fisica d'Altes Energies (IFAE), Spain
  - Istituto Trentino di Cultura (IRST), Italy
  - Konrad-Zuse-Zentrum für Informationstechnik Berlin, Germany
  - Royal Netherlands Meteorological Institute (KNMI)
  - Ruprecht-Karls-Universität Heidelberg, Germany
  - Stichting Academisch Rekencentrum Amsterdam (SARA), Netherlands
  - Swedish Research Council, Sweden

EDG work-packages
- WP 1: Workload Management System
- WP 2: Data Management
- WP 3: Grid Monitoring / Grid Information Systems
- WP 4: Fabric Management
- WP 5: Storage Element, MSS support
- WP 6: Testbed and demonstrators
- WP 7: Network Monitoring
- WP 8: High Energy Physics Applications
- WP 9: Earth Observation
- WP 10: Biology
- WP 11: Dissemination
- WP 12: Management

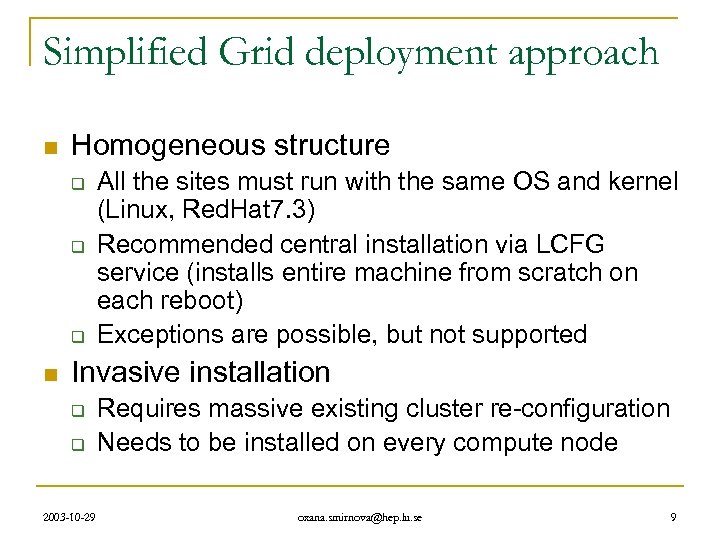

Simplified Grid deployment approach
- Homogeneous structure
  - All sites must run the same OS and kernel (Linux, RedHat 7.3)
  - Recommended central installation via the LCFG service (installs the entire machine from scratch on each reboot)
  - Exceptions are possible, but not supported
- Invasive installation
  - Requires massive re-configuration of existing clusters
  - Needs to be installed on every compute node

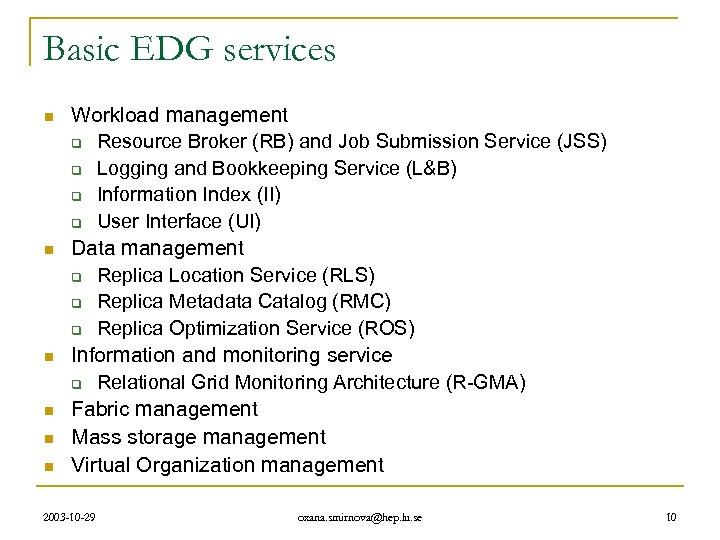

Basic EDG services
- Workload management
  - Resource Broker (RB) and Job Submission Service (JSS)
  - Logging and Bookkeeping Service (L&B)
  - Information Index (II)
  - User Interface (UI)
- Data management
  - Replica Location Service (RLS)
  - Replica Metadata Catalog (RMC)
  - Replica Optimization Service (ROS)
- Information and monitoring service
  - Relational Grid Monitoring Architecture (R-GMA)
- Fabric management
- Mass storage management
- Virtual Organization management
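The slide only names the data management services, so the following is a rough sketch of how the three could cooperate, under stated assumptions: the metadata catalog maps a logical file name to an identifier, the location service maps that identifier to physical replicas, and the optimization service picks the cheapest replica. The dictionaries, URLs, host names and cost figures are all hypothetical stand-ins, not EDG interfaces.

```python
# Hypothetical in-memory stand-ins for the three data management services.
# Replica Metadata Catalog role: logical file name -> unique identifier.
rmc = {"lfn:higgs-candidates.root": "guid-0001"}

# Replica Location Service role: identifier -> physical replicas on Storage Elements.
rls = {
    "guid-0001": [
        "srm://se.site-a.example/data/higgs-candidates.root",
        "srm://se.site-b.example/data/higgs-candidates.root",
    ]
}

# Made-up access costs (e.g. estimated transfer seconds) seen from the job's site.
access_cost = {"se.site-a.example": 40.0, "se.site-b.example": 12.5}


def host_of(replica_url):
    """Extract the Storage Element host name from a replica URL."""
    return replica_url.split("://", 1)[1].split("/", 1)[0]


def best_replica(lfn):
    """Resolve a logical name and pick the cheapest replica (the ROS role)."""
    guid = rmc[lfn]
    replicas = rls[guid]
    return min(replicas, key=lambda url: access_cost[host_of(url)])


chosen = best_replica("lfn:higgs-candidates.root")
```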

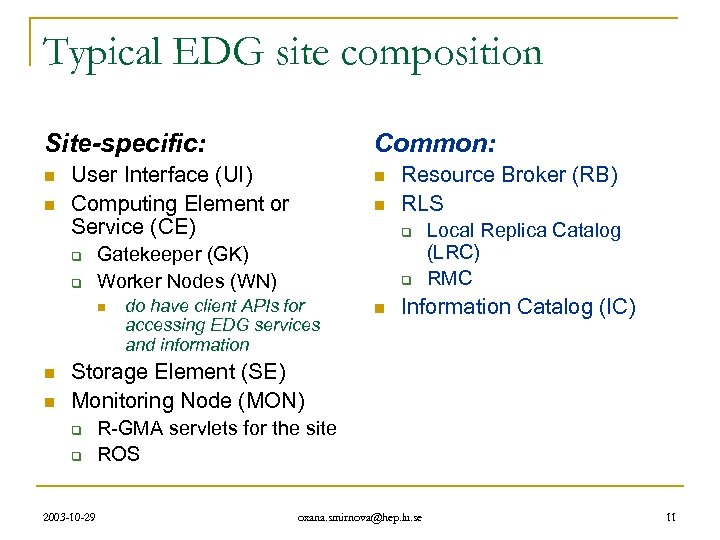

Typical EDG site composition
- Site-specific:
  - User Interface (UI)
  - Computing Element or Service (CE)
    - Gatekeeper (GK)
    - Worker Nodes (WN): do have client APIs for accessing EDG services and information
  - Storage Element (SE)
  - Monitoring Node (MON)
    - R-GMA servlets for the site
    - ROS
- Common:
  - Resource Broker (RB)
  - RLS
    - Local Replica Catalog (LRC)
  - RMC
  - Information Catalog (IC)

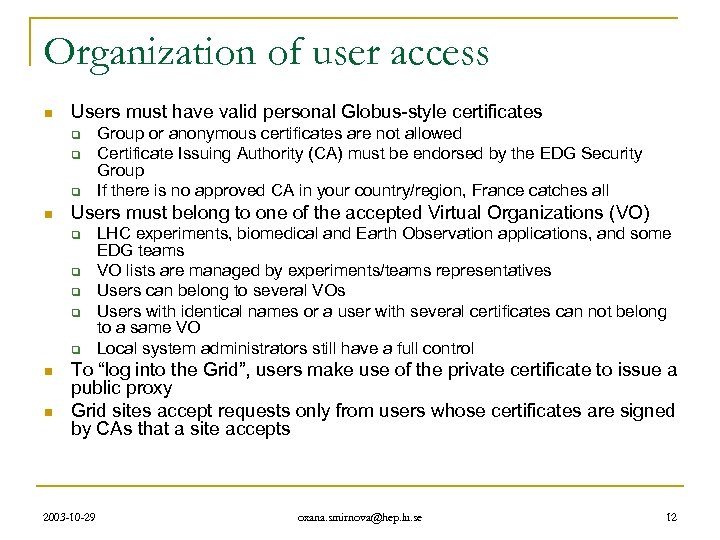

Organization of user access
- Users must have valid personal Globus-style certificates
  - Group or anonymous certificates are not allowed
  - The Certificate Issuing Authority (CA) must be endorsed by the EDG Security Group
  - If there is no approved CA in your country/region, the French CA acts as a catch-all
- Users must belong to one of the accepted Virtual Organizations (VO)
  - LHC experiments, biomedical and Earth Observation applications, and some EDG teams
  - VO lists are managed by representatives of the experiments/teams
  - Users can belong to several VOs
  - Users with identical names, or a user with several certificates, cannot belong to the same VO
- Local system administrators still have full control
- To "log into the Grid", users make use of the private certificate to issue a public proxy
- Grid sites accept requests only from users whose certificates are signed by CAs that the site accepts
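The certificate, CA and VO rules above can be read as a single admission check. The sketch below is an illustration only: the `Certificate` class, the CA names, the subject strings and the VO membership table are all hypothetical, and proxy handling is left out.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Certificate:
    subject: str    # made-up subject string identifying the user
    issuer_ca: str  # name of the CA that signed the certificate
    personal: bool  # False for group or anonymous certificates


# Hypothetical site configuration: trusted CAs and VO membership lists.
ACCEPTED_CAS = {"Example-CA", "CatchAll-CA"}
VO_MEMBERS = {
    "atlas": {"/O=Grid/O=Example/CN=Jane Doe"},
    "biomed": set(),
}


def site_accepts(cert, vo):
    """Apply the certificate, CA and VO rules in turn."""
    if not cert.personal:
        return False  # group or anonymous certificates are not allowed
    if cert.issuer_ca not in ACCEPTED_CAS:
        return False  # the site must accept the signing CA
    return cert.subject in VO_MEMBERS.get(vo, set())  # VO membership


jane = Certificate("/O=Grid/O=Example/CN=Jane Doe", "Example-CA", True)
shared = Certificate("/O=Grid/O=Example/CN=Group Account", "Example-CA", False)
```

Here `site_accepts(jane, "atlas")` passes all three rules, while the group certificate `shared` is rejected at the first one.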

EDG applications testbed
- EDG is committed to creating a stable testbed to be used by applications for real tasks
  - This started to materialize in August 2002 and coincided with the ATLAS DC1
  - CMS joined in December; ALICE and LHCb ran smaller scale tests
- At the moment (October 2003) it consists of ca. 15 sites in 8 countries
- Most sites are installed from scratch using the EDG tools (require/install RedHat 7.3)
  - Some have installations on top of existing resources
  - A lightweight EDG installation is available
- Central element: the Resource Broker (RB), which distributes jobs between the resources
  - Most often, a single RB is used
  - Some tests used RBs "attached" to User Interfaces
  - In future, there may be an RB per Virtual Organization (VO) and/or per user?
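To illustrate what "distributes jobs between the resources" can mean, here is a toy broker, not the EDG Resource Broker itself. The matching rule (the VO must be supported and enough CPUs must be free), the ranking rule (most free CPUs wins), and all site names and numbers are assumptions made for the example.

```python
def broker(job, computing_elements):
    """Pick a Computing Element for a job, or None if nothing matches."""
    candidates = [
        ce for ce in computing_elements
        if job["vo"] in ce["vos"] and ce["free_cpus"] >= job["cpus"]
    ]
    if not candidates:
        return None
    # Made-up ranking rule: prefer the CE with the most free CPUs.
    best = max(candidates, key=lambda ce: ce["free_cpus"])
    best["free_cpus"] -= job["cpus"]  # book the slots for this job
    return best["name"]


# Hypothetical resources and jobs.
ces = [
    {"name": "ce.site-a.example", "vos": {"atlas", "cms"}, "free_cpus": 4},
    {"name": "ce.site-b.example", "vos": {"atlas"}, "free_cpus": 10},
]
jobs = [
    {"id": "j1", "vo": "atlas", "cpus": 8},
    {"id": "j2", "vo": "cms", "cpus": 2},
    {"id": "j3", "vo": "atlas", "cpus": 6},
]
assignments = {job["id"]: broker(job, ces) for job in jobs}
```

With a single broker, every job passes through the same matching step, which is one reason the slide raises the option of one RB per VO or per user.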

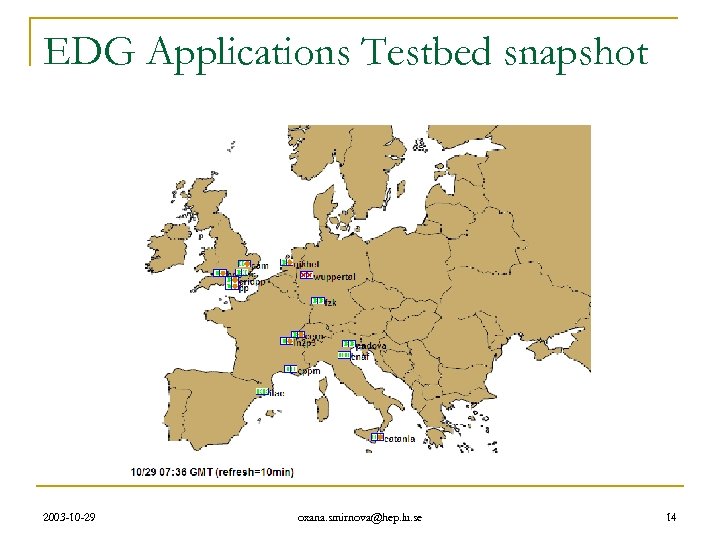

EDG Applications Testbed snapshot

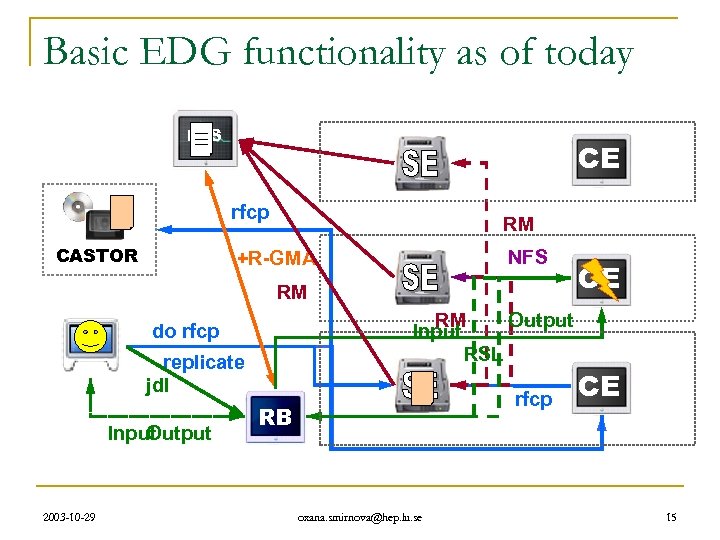

Basic EDG functionality as of today
[Diagram; recoverable labels: UI, jdl, RB, RSL, CE, RM, RLS, R-GMA, rfcp, "do rfcp", replicate, CASTOR, NFS, Input, Output]

EDG status
- EDG 1 was not a very satisfactory prototype
  - Highly unstable behavior
  - Somewhat late deployment
  - Many missing features and functionalities
- EDG 2 was released and deployed for applications on October 20, 2003
  - Many services have been re-written since EDG 1
  - Some functionality has been added, but some has been lost
  - Stability is still an issue, especially the Information System performance
  - Little has been done to streamline deployment of the applications environment
  - No production-scale tasks have been shown to perform reliably yet
- No development will be done beyond this point
  - Bug fixing will continue for a while
  - Some "re-engineering" is expected to be done by the next EU-sponsored project, EGEE

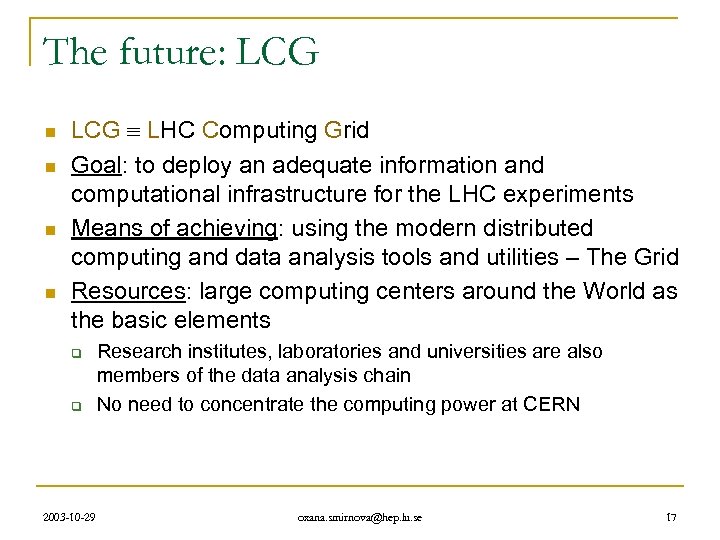

The future: LCG
- LCG: LHC Computing Grid
- Goal: to deploy an adequate information and computational infrastructure for the LHC experiments
- Means of achieving it: modern distributed computing and data analysis tools and utilities, i.e. the Grid
- Resources: large computing centers around the world as the basic elements
  - Research institutes, laboratories and universities are also members of the data analysis chain
  - No need to concentrate the computing power at CERN

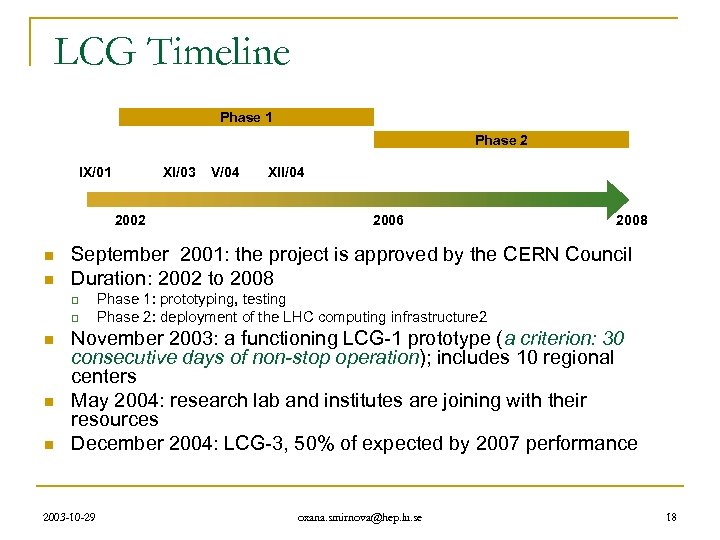

LCG Timeline
[Timeline figure: Phase 1 and Phase 2, with marks at IX/01, 2002, XI/03, V/04, XII/04, 2006 and 2008]
- September 2001: the project is approved by the CERN Council
- Duration: 2002 to 2008
  - Phase 1: prototyping, testing
  - Phase 2: deployment of the LHC computing infrastructure
- 2 November 2003: a functioning LCG-1 prototype (a criterion: 30 consecutive days of non-stop operation); includes 10 regional centers
- May 2004: research labs and institutes join with their resources
- December 2004: LCG-3, 50% of the performance expected by 2007
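The LCG-1 acceptance criterion of 30 consecutive days of non-stop operation can be checked mechanically from a daily operation log. The sketch below assumes a hypothetical log format, one boolean per day; the slides do not say how operation was actually recorded.

```python
def longest_uptime_streak(daily_status):
    """Length of the longest run of consecutive days marked as up (True)."""
    best = current = 0
    for up in daily_status:
        # Extend the current run on an up day, reset it on an outage.
        current = current + 1 if up else 0
        best = max(best, current)
    return best


def meets_criterion(daily_status, required_days=30):
    """True if some unbroken run of up days reaches the required length."""
    return longest_uptime_streak(daily_status) >= required_days


# Made-up operation log: 12 good days, one outage, then 31 good days.
log = [True] * 12 + [False] + [True] * 31
```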

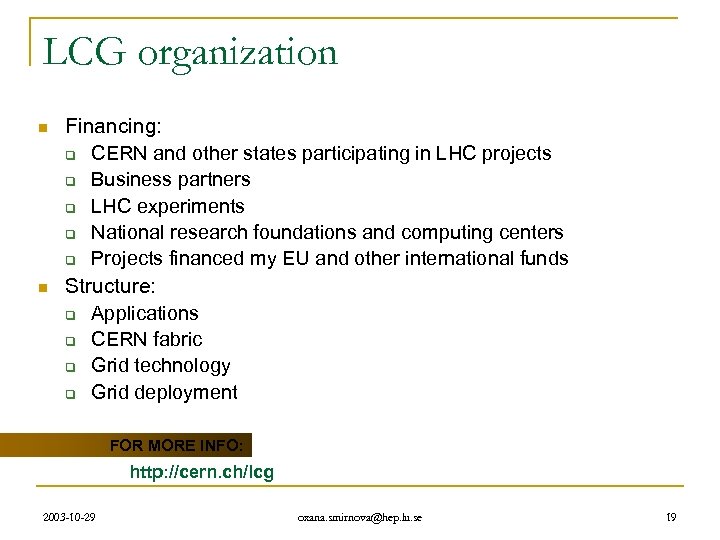

LCG organization
- Financing:
  - CERN and other states participating in LHC projects
  - Business partners
  - LHC experiments
  - National research foundations and computing centers
  - Projects financed by EU and other international funds
- Structure:
  - Applications
  - CERN fabric
  - Grid technology
  - Grid deployment

For more info: http://cern.ch/lcg

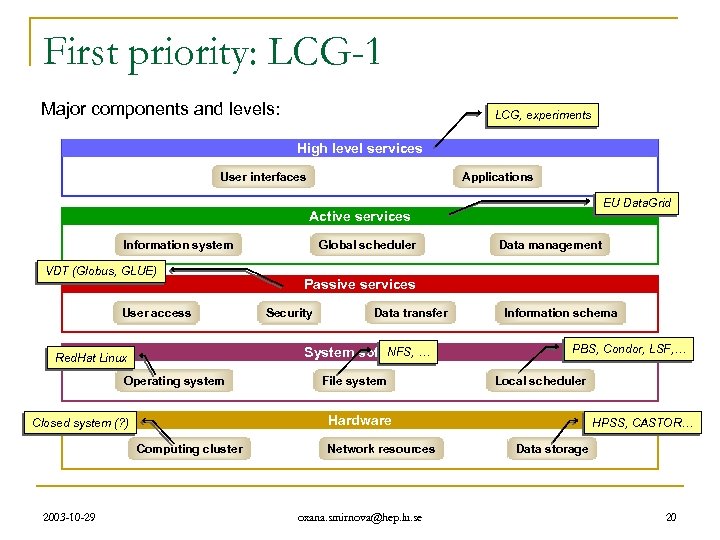

First priority: LCG-1
Major components and levels (diagram; labels as recovered, layout not fully recoverable):
- Levels: High level services, Active services, Passive services, System software, Hardware
- Providers named in the diagram: LCG and experiments; EU DataGrid; VDT (Globus, GLUE); Closed system (?)
- Components: User interfaces, Applications, Information system, Global scheduler, Data management, User access, Security, Data transfer, Information schema, Operating system (RedHat Linux), File system (NFS, …), Local scheduler (PBS, Condor, LSF, …), Computing cluster, Network resources, Data storage (HPSS, CASTOR…)

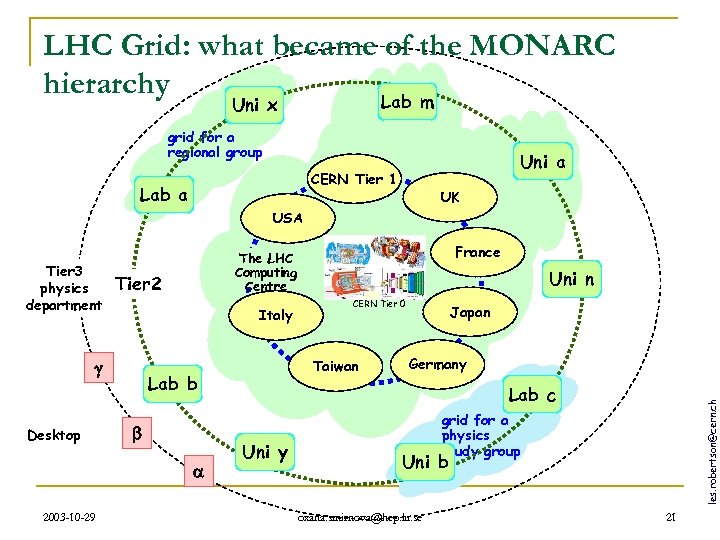

LHC Grid: what became of the MONARC hierarchy
[Diagram, credited to les.robertson@cern.ch: the LHC Computing Centre (CERN Tier 0 and CERN Tier 1) surrounded by Tier 1 centres in the UK, USA, Italy, Taiwan, Japan, Germany and France; Tier 2 sites (Lab a, Lab b, Lab c, Lab m, Uni a, Uni b, Uni n, Uni x, Uni y); Tier 3 physics department; Desktop. Two groupings are marked: "grid for a regional group" and "grid for a physics study group".]

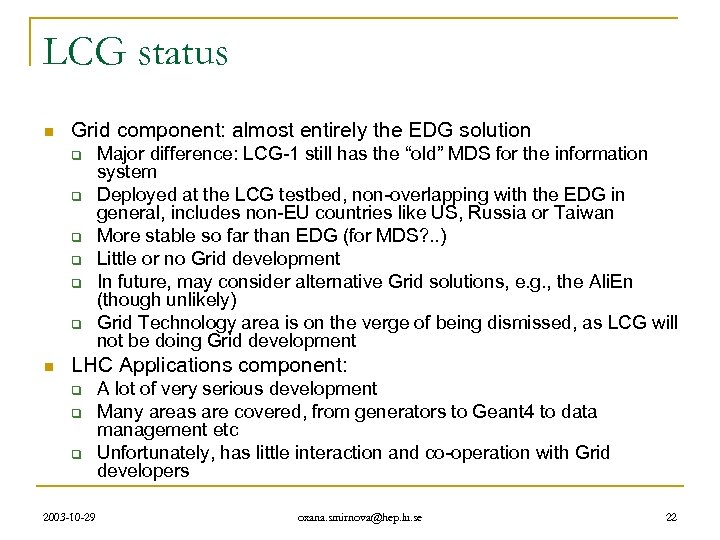

LCG status
- Grid component: almost entirely the EDG solution
  - Major difference: LCG-1 still has the "old" MDS for the information system
  - Deployed at the LCG testbed, which in general does not overlap with the EDG one and includes non-EU countries like the US, Russia or Taiwan
  - More stable so far than EDG (because of MDS?)
  - Little or no Grid development
  - In future, may consider alternative Grid solutions, e.g., AliEn (though unlikely)
  - The Grid Technology area is on the verge of being dismissed, as LCG will not be doing Grid development
- LHC Applications component:
  - A lot of very serious development
  - Many areas are covered, from generators to Geant4 to data management etc.
  - Unfortunately, has little interaction and co-operation with Grid developers

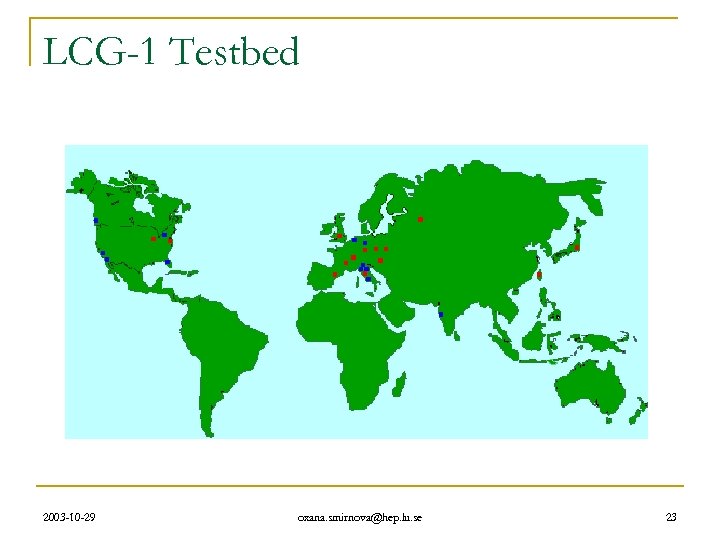

LCG-1 Testbed

Summary
- Initiated by CERN, EDG came as the first global Grid R&D project aiming at deploying working services
- Sailing in uncharted waters, EDG ultimately provided a set of services that allow a Grid infrastructure to be constructed
- Perhaps the most notable EDG achievement is the introduction of authentication and authorization standards, now recognized worldwide
- LCG took a bold decision to deploy EDG as its Grid component for the LCG-1 release
- Grid development does not stop with EDG: LCG is open to new solutions, with a strong preference for OGSA
