- Number of slides: 24

Grid Packaging

Peter F. Couvares
Associate Researcher, Condor Team
Computer Sciences Department
University of Wisconsin-Madison
http://www.cs.wisc.edu/condor

SURA Cyberinfrastructure Workshop Series: Grid Technology: The Rough Guide
December 8 & 9, 2005, Austin, TX

What I Hope to Tell You

- What is the VDT? What is Pacman? What is the NMI Build & Test System?
- Why are they all being mentioned together?
- Why should I care? What can they do for me?

Why Should I Care?

- When it comes to grid computing, the state of the art is pretty poor.
  - New technologies, often immature software
- Making and testing grid software is hard.
  - Tons of platforms (see “Why I Hate Linux”), tons of interdependencies, ever-changing environments
- Distributing grid software is hard.
  - On the grid, every user’s environment is different.
- Installing grid software is hard.
  - Every grid component has its own way of doing things, and they conflict.
- Our goals:
  - Try to make all three easier for real users:
    - grid software developers
    - grid software stack packagers
    - grid software users

In a Nutshell…

- Pacman is a software packaging system
  - describes where to get software, how to install it, and what its dependencies are
  - designed for the grid
- The VDT is a supported grid software distribution
  - designed for the scientific community
  - seeks to make it easy to install and configure an integrated stack of many standard grid clients and services
- The NMI Build & Test Facility automates the building and testing of grid software on multiple platforms
  - goal is to make grid software and grid software distributions more reliable
- How are they related?
  - Pacman is used by the VDT, and the VDT is built using the automated NMI B&T system.

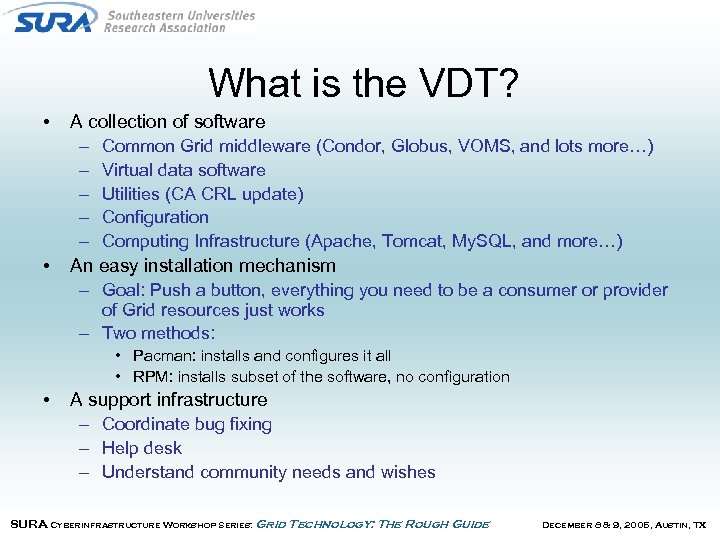

What is the VDT?

- A collection of software
  - Common Grid middleware (Condor, Globus, VOMS, and lots more…)
  - Virtual data software
  - Utilities (CA CRL update)
  - Configuration
  - Computing infrastructure (Apache, Tomcat, MySQL, and more…)
- An easy installation mechanism
  - Goal: push a button, and everything you need to be a consumer or provider of Grid resources just works
  - Two methods:
    - Pacman: installs and configures it all
    - RPM: installs a subset of the software, with no configuration
- A support infrastructure
  - Coordinate bug fixing
  - Help desk
  - Understand community needs and wishes

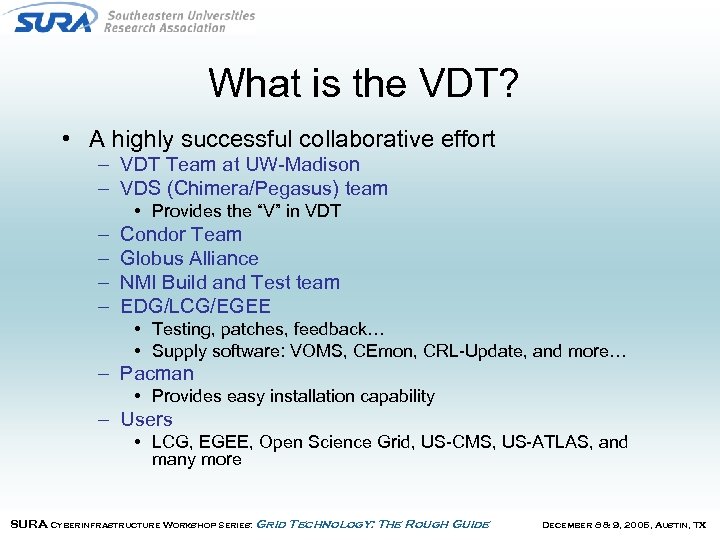

What is the VDT?

- A highly successful collaborative effort
  - VDT Team at UW-Madison
  - VDS (Chimera/Pegasus) team
    - Provides the “V” in VDT
  - Condor Team
  - Globus Alliance
  - NMI Build and Test team
  - EDG/LCG/EGEE
    - Testing, patches, feedback…
    - Supply software: VOMS, CEmon, CRL-Update, and more…
  - Pacman
    - Provides easy installation capability
  - Users
    - LCG, EGEE, Open Science Grid, US-CMS, US-ATLAS, and many more

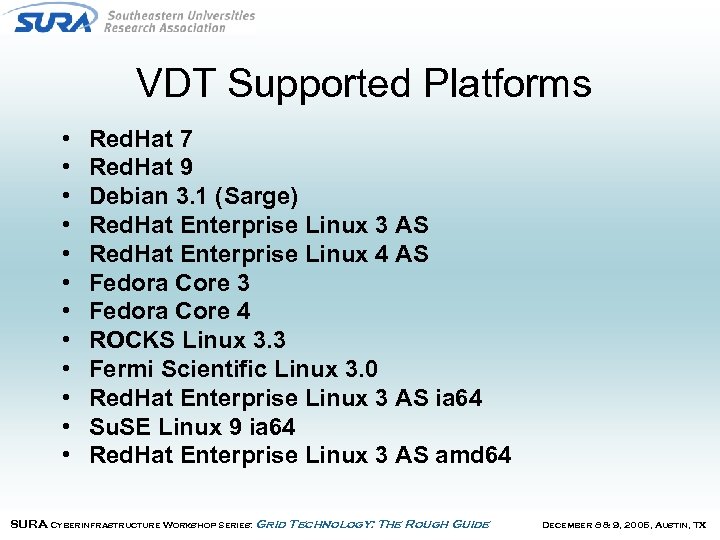

VDT Supported Platforms

- RedHat 7
- RedHat 9
- Debian 3.1 (Sarge)
- RedHat Enterprise Linux 3 AS
- RedHat Enterprise Linux 4 AS
- Fedora Core 3
- Fedora Core 4
- ROCKS Linux 3.3
- Fermi Scientific Linux 3.0
- RedHat Enterprise Linux 3 AS ia64
- SuSE Linux 9 ia64
- RedHat Enterprise Linux 3 AS amd64

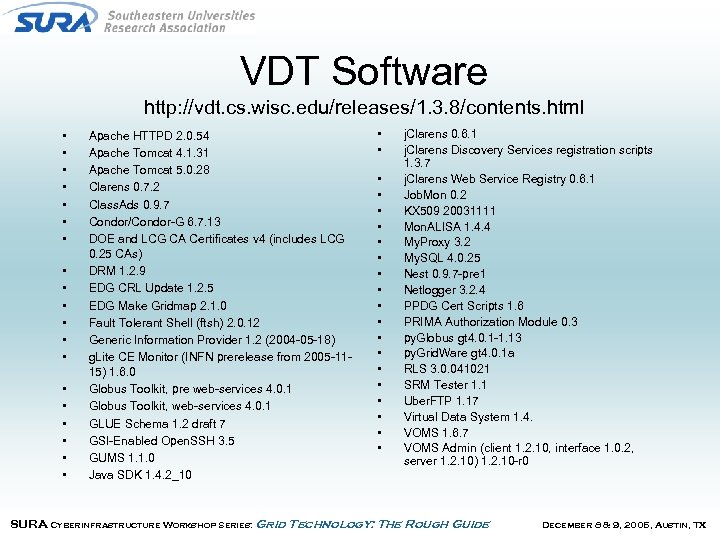

VDT Software (http://vdt.cs.wisc.edu/releases/1.3.8/contents.html)

- Apache HTTPD 2.0.54
- Apache Tomcat 4.1.31
- Apache Tomcat 5.0.28
- Clarens 0.7.2
- ClassAds 0.9.7
- Condor/Condor-G 6.7.13
- DOE and LCG CA Certificates v4 (includes LCG 0.25 CAs)
- DRM 1.2.9
- EDG CRL Update 1.2.5
- EDG Make Gridmap 2.1.0
- Fault Tolerant Shell (ftsh) 2.0.12
- Generic Information Provider 1.2 (2004-05-18)
- gLite CE Monitor (INFN prerelease from 2005-11-15) 1.6.0
- Globus Toolkit, pre web-services 4.0.1
- Globus Toolkit, web-services 4.0.1
- GLUE Schema 1.2 draft 7
- GSI-Enabled OpenSSH 3.5
- GUMS 1.1.0
- Java SDK 1.4.2_10
- jClarens 0.6.1
- jClarens Discovery Services registration scripts 1.3.7
- jClarens Web Service Registry 0.6.1
- JobMon 0.2
- KX509 20031111
- MonALISA 1.4.4
- MyProxy 3.2
- MySQL 4.0.25
- Nest 0.9.7-pre1
- Netlogger 3.2.4
- PPDG Cert Scripts 1.6
- PRIMA Authorization Module 0.3
- pyGlobus gt4.0.1-1.13
- pyGridWare gt4.0.1a
- RLS 3.0.041021
- SRM Tester 1.1
- UberFTP 1.17
- Virtual Data System 1.4.
- VOMS 1.6.7
- VOMS Admin (client 1.2.10, interface 1.0.2, server 1.2.10) 1.2.10-r0

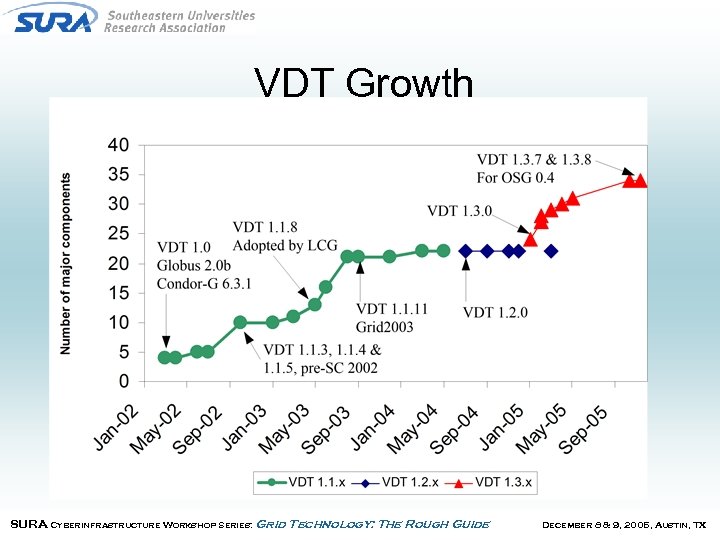

VDT Growth

Pacman (http://physics.bu.edu/~youssef/pacman/)

- Saul Youssef, Boston University
- A Pacman file:
  - Describes how to install some software
  - Specifies what software to install first (dependencies)
  - Is (usually) located on a web page
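
Because a Pacman file names the software that must be installed first, an installer has to turn those per-package lists into a single install order. The Python below is a purely illustrative sketch and is not Pacman's own code. It resolves dependencies depth-first so that each dependency comes before the package that needs it, and it rejects cycles. The package names and the `DEPS` table are hypothetical.

```python
from typing import Dict, List, Set


def install_order(deps: Dict[str, List[str]], target: str) -> List[str]:
    """Return packages so that every dependency precedes the package needing it.

    Depth-first topological sort; raises ValueError on a dependency cycle.
    """
    order: List[str] = []
    done: Set[str] = set()
    in_progress: Set[str] = set()

    def visit(pkg: str) -> None:
        if pkg in done:
            return
        if pkg in in_progress:
            raise ValueError(f"dependency cycle involving {pkg!r}")
        in_progress.add(pkg)
        for dep in deps.get(pkg, []):  # install what this package needs first
            visit(dep)
        in_progress.discard(pkg)
        done.add(pkg)
        order.append(pkg)

    visit(target)
    return order


# Hypothetical cache contents: package -> packages that must be installed first.
DEPS = {"Python": ["GCC", "Make"], "GCC": [], "Make": []}

if __name__ == "__main__":
    print(install_order(DEPS, "Python"))  # ['GCC', 'Make', 'Python']
```

A depth-first walk is one common way to do this. A real packager also has to handle versions, already-installed packages, and failures partway through.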

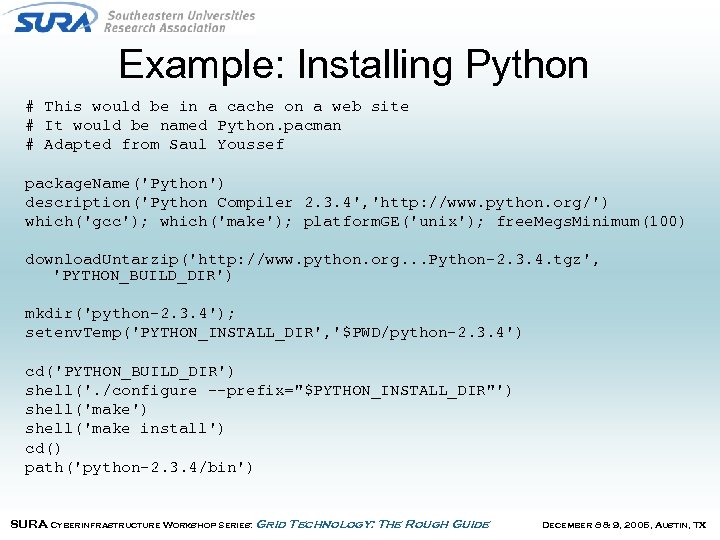

Example: Installing Python

    # This would be in a cache on a web site
    # It would be named Python.pacman
    # Adapted from Saul Youssef
    packageName('Python')
    description('Python Compiler 2.3.4', 'http://www.python.org/')
    # Preconditions: gcc and make available, a Unix platform, at least 100 MB free
    which('gcc'); which('make'); platformGE('unix'); freeMegsMinimum(100)
    # Fetch and unpack the source tarball
    downloadUntarzip('http://www.python.org...Python-2.3.4.tgz', 'PYTHON_BUILD_DIR')
    # Create the install directory and set a temporary PYTHON_INSTALL_DIR pointing at it
    mkdir('python-2.3.4'); setenvTemp('PYTHON_INSTALL_DIR', '$PWD/python-2.3.4')
    # Configure, build, and install from the unpacked source directory
    cd('PYTHON_BUILD_DIR')
    shell('./configure --prefix="$PYTHON_INSTALL_DIR"')
    shell('make install')
    # Return to the starting directory and add the new bin directory to the PATH
    cd()
    path('python-2.3.4/bin')
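
The first lines of the Pacman example above are precondition checks: required tools, the platform, and free disk space. The sketch below shows roughly what such checks amount to, written in plain Python with `shutil.which` and `shutil.disk_usage`. It is an approximation and not Pacman's implementation. In particular, treating any POSIX OS as satisfying `platformGE('unix')` is an assumption.

```python
import os
import shutil
from typing import List


def check_preconditions(tools: List[str], min_free_mb: int, path: str = ".") -> List[str]:
    """Return a list of problems; an empty list means every check passed."""
    problems: List[str] = []
    for tool in tools:
        # Rough analogue of which('gcc'): the tool must be found on PATH.
        if shutil.which(tool) is None:
            problems.append(f"missing tool: {tool}")
    # Crude stand-in for platformGE('unix'): any POSIX-like OS counts.
    if os.name != "posix":
        problems.append("not a Unix-like platform")
    # Analogue of freeMegsMinimum(100): free space where we will install.
    free_mb = shutil.disk_usage(path).free // (1024 * 1024)
    if free_mb < min_free_mb:
        problems.append(f"only {free_mb} MB free, need {min_free_mb}")
    return problems


if __name__ == "__main__":
    issues = check_preconditions(["gcc", "make"], min_free_mb=100)
    print("ready to build" if not issues else "\n".join(issues))
```

The function collects every failed check instead of stopping at the first one. This gives the user a single complete report before any download starts.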

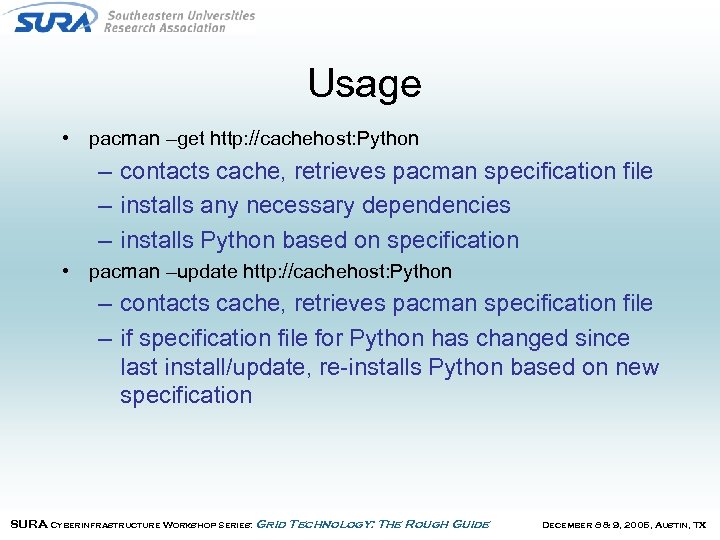

Usage

- `pacman -get http://cachehost:Python`
  - contacts the cache and retrieves the Pacman specification file
  - installs any necessary dependencies
  - installs Python based on the specification
- `pacman -update http://cachehost:Python`
  - contacts the cache and retrieves the Pacman specification file
  - if the specification file for Python has changed since the last install/update, re-installs Python based on the new specification
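
The `-update` behaviour above depends on knowing whether a specification has changed since the last install. The slide does not say how Pacman detects this. The sketch below therefore assumes one simple approach: record a SHA-256 digest of the spec text at install time and compare it on each update. The `update` function and the `installed` mapping are hypothetical.

```python
import hashlib
from typing import Dict


def spec_digest(spec_text: str) -> str:
    """Fingerprint of a specification file's contents."""
    return hashlib.sha256(spec_text.encode("utf-8")).hexdigest()


def update(package: str, spec_text: str, installed: Dict[str, str]) -> bool:
    """Re-install only if the spec is new or has changed since the last install.

    `installed` maps package name -> digest of the spec used last time.
    Returns True when an (imagined) install was performed.
    """
    digest = spec_digest(spec_text)
    if installed.get(package) == digest:
        return False  # unchanged since last install/update: nothing to do
    # A real installer would run the spec's install steps here.
    installed[package] = digest
    return True


if __name__ == "__main__":
    state: Dict[str, str] = {}
    print(update("Python", "description('Python 2.3.4')", state))  # True
    print(update("Python", "description('Python 2.3.4')", state))  # False
    print(update("Python", "description('Python 2.4')", state))    # True
```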

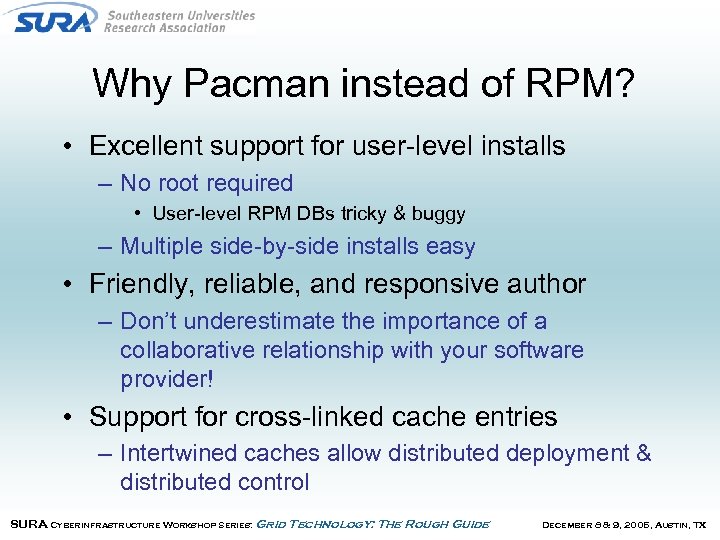

Why Pacman instead of RPM?

- Excellent support for user-level installs
  - No root required
    - User-level RPM databases are tricky and buggy
  - Multiple side-by-side installs are easy
- Friendly, reliable, and responsive author
  - Don’t underestimate the importance of a collaborative relationship with your software provider!
- Support for cross-linked cache entries
  - Intertwined caches allow distributed deployment and distributed control

Intertwined Pacman Caches

- The VDT cache has no dependencies on other caches.
- Open Science Grid installs a subset of the VDT from the VDT cache, then installs extra software.
- This is a really good model for people who want some of the VDT plus some of their own software.
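
The cache arrangement above can be modelled as packages that refer to each other by `cache:package` names. In this model, one cache (VDT) is self-contained and another (OSG) pulls some packages from it. The Python below is a hypothetical model for illustration only. The cache contents, the package names, and the `cache:package` reference format are all assumptions and do not describe the real VDT or OSG caches. The model also assumes the references contain no cycles.

```python
from typing import Dict, List, Optional, Set, Tuple

# Hypothetical caches: cache -> {package -> ["cache:package", ...] it needs first}.
CACHES: Dict[str, Dict[str, List[str]]] = {
    "VDT": {
        "Condor": [],
        "Globus-Client": [],
        "Globus-Server": ["VDT:Globus-Client"],
    },
    "OSG": {
        "site-config": [],
        "ce": ["VDT:Condor", "VDT:Globus-Server", "OSG:site-config"],
    },
}


def parse(ref: str) -> Tuple[str, str]:
    """Split a 'cache:package' reference into its two parts."""
    cache, _, package = ref.partition(":")
    return cache, package


def referenced_caches(cache_name: str) -> Set[str]:
    """Other caches that packages in `cache_name` depend on."""
    refs = {parse(dep)[0] for deps in CACHES[cache_name].values() for dep in deps}
    return refs - {cache_name}


def closure(ref: str, out: Optional[List[str]] = None) -> List[str]:
    """Every 'cache:package' needed for `ref`, dependencies first (assumes no cycles)."""
    if out is None:
        out = []
    if ref in out:
        return out
    cache, package = parse(ref)
    for dep in CACHES[cache][package]:
        closure(dep, out)
    out.append(ref)
    return out


if __name__ == "__main__":
    print(referenced_caches("VDT"))  # set(): self-contained
    print(referenced_caches("OSG"))  # {'VDT'}
    print(closure("OSG:ce"))
```

In this model, OSG controls only its own entries while reusing the VDT's. That is the "some of VDT, some of their own stuff" pattern described above.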

NMI Build & Test System

- NMI (NSF Middleware Initiative) statement:
  - Purpose: to develop, deploy, and sustain a set of reusable and expandable middleware functions that benefit many science and engineering applications in a networked environment
  - The program encourages open source software development and the development of middleware standards

Why should you care?

From our experience, the functionality, robustness, and maintainability of a production-quality software component depend on the effort involved in building, deploying, and testing the component.

- If this is true for a component, it is definitely true for a software stack.
- Doing it right is much harder than it appears from the outside.
- Most of us have very little experience in this area.

The Build Challenge

- Automation: “build the component at the push of a button!”
  - There is always more to it than just “configure” and “make” (e.g., ssh to host, cvs checkout, untar, setenv, etc.)
- Reproducibility: “build the version we released 2 years ago!”
  - Well-managed and comprehensive source repository
  - Know your “externals” and keep them around
- Portability: “build the component on X.nmi.wisc.edu!”
  - No dependencies on “local” capabilities
  - Understand your hardware and software requirements
- Manageability: “run the build daily on 12 platforms and email me the outcome!”
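
The automation and manageability points above can be illustrated with a small sketch. A build becomes an ordered list of commands that stops at the first failure, and the per-platform outcomes are collapsed into a one-line-per-platform report of the kind you might email daily. This is a hypothetical illustration, not the NMI system. The platform names are made up, and the steps run locally, whereas real builds would run on remote hosts.

```python
import subprocess
import sys
from dataclasses import dataclass, field
from typing import List


@dataclass
class BuildResult:
    platform: str
    ok: bool = True
    log: List[str] = field(default_factory=list)


def run_build(platform: str, steps: List[List[str]]) -> BuildResult:
    """Run each build step in order, stopping at the first failure."""
    result = BuildResult(platform)
    for argv in steps:
        proc = subprocess.run(argv, capture_output=True, text=True)
        result.log.append(f"{' '.join(argv)} -> exit {proc.returncode}")
        if proc.returncode != 0:
            result.ok = False
            break
    return result


def summarize(results: List[BuildResult]) -> str:
    """One line per platform, e.g. for a daily status email."""
    return "\n".join(f"{r.platform}: {'PASS' if r.ok else 'FAIL'}" for r in results)


if __name__ == "__main__":
    # Stand-in steps; a real recipe would check out sources, untar, configure, make...
    ok_step = [sys.executable, "-c", "pass"]
    bad_step = [sys.executable, "-c", "import sys; sys.exit(2)"]
    results = [
        run_build("x86_rhel_3", [ok_step, ok_step]),
        run_build("x86_64_rhel_4", [ok_step, bad_step, ok_step]),
    ]
    print(summarize(results))
```

Recording every step's exit status in `log` also supports reproducibility: a failed nightly build shows exactly which step broke.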

The Testing Challenge

- All the same challenges as builds (automation, reproducibility, portability, manageability), plus:
- Flexibility: “test our RHEL 3 binaries on RHEL 4!”
  - It is important to clearly separate build and test functions.
  - Making tests just a part of a build, instead of an independent step, makes it difficult or impossible to:
    - run new tests against old builds
    - test one platform’s binaries on another platform
    - run different tests at different frequencies
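
One way to keep build and test separate, as recommended above, is to have builds publish artifacts keyed by build and platform, and to schedule tests against any stored artifact on any platform. The sketch below is a hypothetical data model and does not describe the NMI facility's internals. The class names, build IDs, and platform labels are illustrative.

```python
from dataclasses import dataclass
from typing import Dict, Tuple


@dataclass(frozen=True)
class Artifact:
    build_id: str
    built_on: str  # platform that produced the binaries


@dataclass(frozen=True)
class TestRun:
    artifact: Artifact
    suite: str
    run_on: str  # platform the tests execute on; may differ from built_on


class ArtifactStore:
    """Builds publish binaries here; tests fetch them later, as a separate step."""

    def __init__(self) -> None:
        self._by_key: Dict[Tuple[str, str], Artifact] = {}

    def publish(self, build_id: str, built_on: str) -> Artifact:
        artifact = Artifact(build_id, built_on)
        self._by_key[(build_id, built_on)] = artifact
        return artifact

    def fetch(self, build_id: str, built_on: str) -> Artifact:
        return self._by_key[(build_id, built_on)]  # KeyError if never built


def schedule_test(store: ArtifactStore, build_id: str, built_on: str,
                  suite: str, run_on: str) -> TestRun:
    """A test needs only a stored artifact, never a fresh build."""
    return TestRun(store.fetch(build_id, built_on), suite, run_on)


if __name__ == "__main__":
    store = ArtifactStore()
    store.publish("old-release", "rhel_3")  # built once, long ago
    # New test suite, old build, different platform: all possible because
    # testing is decoupled from building.
    print(schedule_test(store, "old-release", "rhel_3", "new-suite", "rhel_4"))
```

Since a test only references a stored artifact, running different suites at different frequencies becomes a scheduling decision rather than a change to the build.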

Goals of the Build & Test Facility

- Design, develop, and deploy a build system (hardware and software) capable of performing daily builds of a suite of middleware packages on a heterogeneous (hardware, OS, libraries, …) collection of platforms
  - Dependable
  - Traceable
  - Manageable
  - Portable
  - Extensible
  - Schedulable

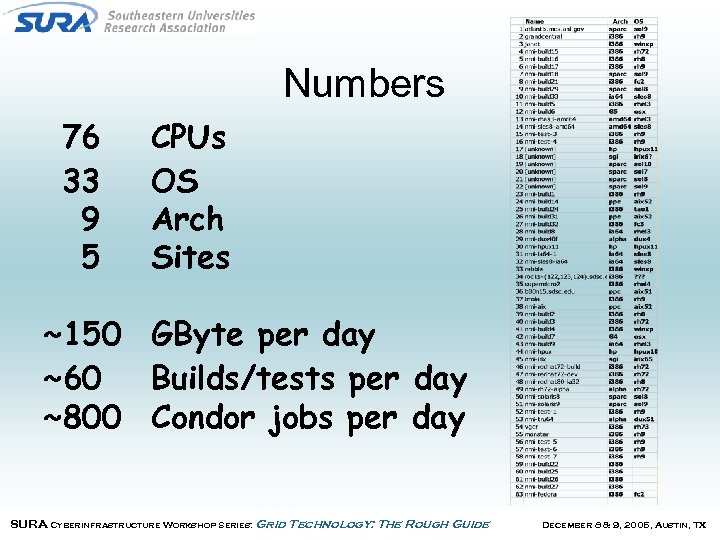

Numbers

- 76 CPUs
- 33 operating systems
- 9 architectures
- 5 sites
- ~150 GB per day
- ~60 builds/tests per day
- ~800 Condor jobs per day

Who is on board and who is getting on…

- "Production" users
  - VDT
    - regular builds of all grid software components
  - Condor
    - nightly builds and tests for many different Condor "customers"
  - Globus
    - nightly builds and tests of the new GT4 release
  - NMI GRIDS Center Software Suite
    - regular builds of grid software components for the R7 release, and verification tests of release candidates
- Users “getting on board”
  - TeraGrid
  - LEAD (produced a custom NMI bundle for their testbed)
  - LIGO
  - OMII
  - NEES, Pacman, Paradyn, more!

“Eating Our Own Dogfood”

- The NMI Build & Test Lab is built using grid technologies to automate the build, deploy, and test cycle:
  - Globus GSI/OpenSSH: remote login, start/stop services
  - Globus GridFTP: copy/move files
  - Condor: schedule build and testing tasks
  - DAGMan: manage build and testing workflow
  - Hawkeye and ClassAd triggers for monitoring
- Construct and manage a dedicated, heterogeneous, and distributed facility
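
DAGMan expresses a workflow as jobs plus parent/child edges, so "manage build and testing workflow" can mean something like the sketch below. It generates a DAGMan input file in which each platform's test job runs only after that platform's build job succeeds. `JOB`, `PARENT ... CHILD`, and `RETRY` are standard DAGMan keywords. The submit-file names, job names, and platform labels are hypothetical, and the generator itself is an illustration, not the NMI lab's tooling.

```python
from typing import List


def make_dag(platforms: List[str], retries: int = 1) -> str:
    """Text of a DAGMan input file: each platform's test runs after its build."""
    lines: List[str] = []
    for p in platforms:
        lines.append(f"JOB build_{p} build_{p}.sub")  # hypothetical submit files
        lines.append(f"JOB test_{p} test_{p}.sub")
    for p in platforms:
        lines.append(f"PARENT build_{p} CHILD test_{p}")
        lines.append(f"RETRY build_{p} {retries}")  # re-run a failed build up to `retries` times
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Saved to a file, this could be submitted with condor_submit_dag.
    print(make_dag(["x86_rhel_3", "x86_64_rhel_4"]), end="")
```

Each platform gets its own independent build-then-test chain. A failure on one platform therefore does not block the others.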

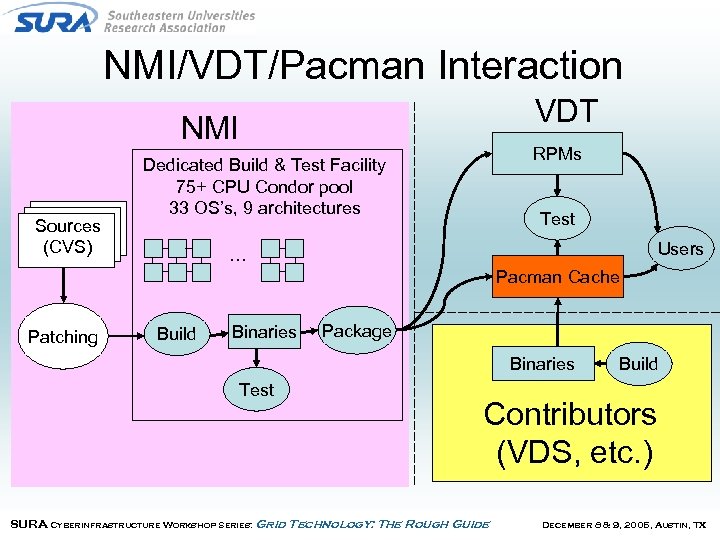

NMI/VDT/Pacman Interaction [diagram; recoverable labels: VDT; NMI; Contributors (VDS, etc.); Sources (CVS); Patching; Build; Test; Package; Binaries; Pacman Cache; RPMs; Users; Dedicated Build & Test Facility: 75+ CPU Condor pool, 33 OSes, 9 architectures]

Grid Software Installation

- Typical grid software installation experience…
- NMI-built, Pacman-enabled VDT installation experience!
