96c9768ec7114097b8934cf08c711cae.ppt

- Number of slides: 29

GRID, Cloud Technologies, Big Data
V. V. Korenkov, Director of LIT JINR, Head of the Department of Distributed Information and Computing Systems (RIVS), Dubna University

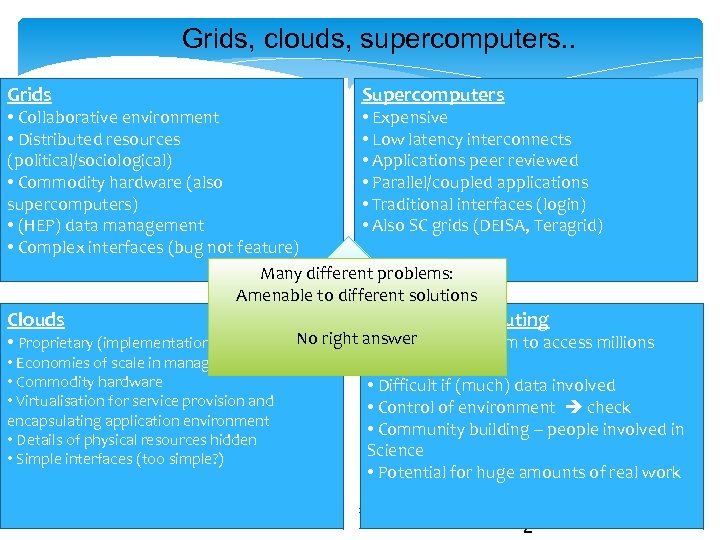

Grids, clouds, supercomputers, etc.

Supercomputers
- Expensive
- Low latency interconnects
- Applications peer reviewed
- Parallel/coupled applications
- Traditional interfaces (login)
- Also SC grids (DEISA, Teragrid)

Grids
- Collaborative environment
- Distributed resources (political/sociological)
- Commodity hardware (also supercomputers)
- (HEP) data management
- Complex interfaces (bug not feature)

Clouds
- Proprietary (implementation)
- Economies of scale in management
- Commodity hardware
- Virtualisation for service provision and encapsulating application environment
- Details of physical resources hidden
- Simple interfaces (too simple?)

Volunteer computing
- Simple mechanism to access millions of CPUs
- Difficult if (much) data involved
- Control of environment ✓
- Community building: people involved in science
- Potential for huge amounts of real work

Many different problems, amenable to different solutions. No right answer.

(Ian Bird; Mirco Mazzucato, DUBNA-19-1209)

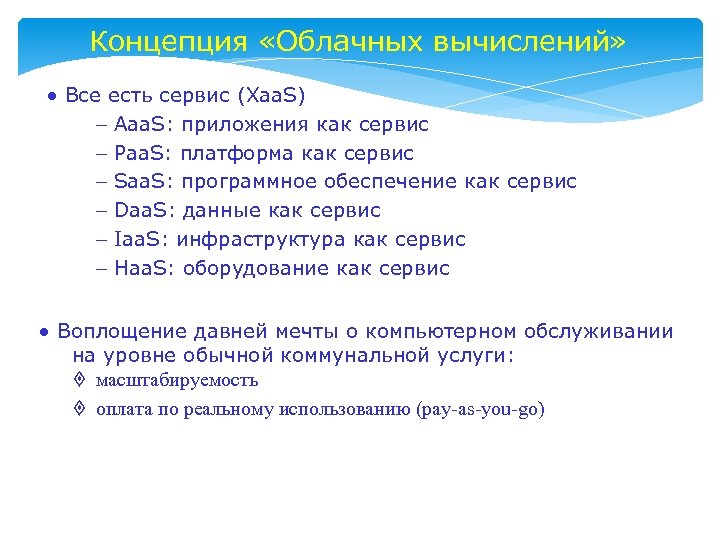

The concept of "cloud computing": everything as a service (XaaS)
- AaaS: applications as a service
- PaaS: platform as a service
- SaaS: software as a service
- DaaS: data as a service
- IaaS: infrastructure as a service
- HaaS: hardware as a service

The realization of a long-standing dream of computing delivered as an ordinary utility service: scalability and payment for actual usage (pay-as-you-go).
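To make "pay-as-you-go" concrete, here is a minimal Python sketch comparing a bill for the core-hours actually consumed with the cost of provisioning for the peak hour around the clock. The usage profile, the price per core-hour and the function names are hypothetical illustration values, not figures from the slides.

```python
# Hypothetical illustration: pay-as-you-go vs. provisioning for peak load.
# Prices are in abstract "units" per core-hour; none of these numbers come from the slides.


def pay_as_you_go_cost(cores_per_hour: list[int], price_per_core_hour: int) -> int:
    """Bill only the core-hours that were actually used."""
    return sum(cores_per_hour) * price_per_core_hour


def peak_provisioned_cost(cores_per_hour: list[int], price_per_core_hour: int) -> int:
    """Pay for enough cores to cover the peak hour, for every hour of the period."""
    return max(cores_per_hour) * len(cores_per_hour) * price_per_core_hour


usage = [10, 10, 200, 10]  # hypothetical load with one short burst
price = 2                  # hypothetical price per core-hour
print("pay-as-you-go:", pay_as_you_go_cost(usage, price))        # 230 core-hours * 2 = 460
print("peak-provisioned:", peak_provisioned_cost(usage, price))  # 200 cores * 4 hours * 2 = 1600
```

The burstier the load, the larger the gap; with a perfectly flat load the two models cost the same.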

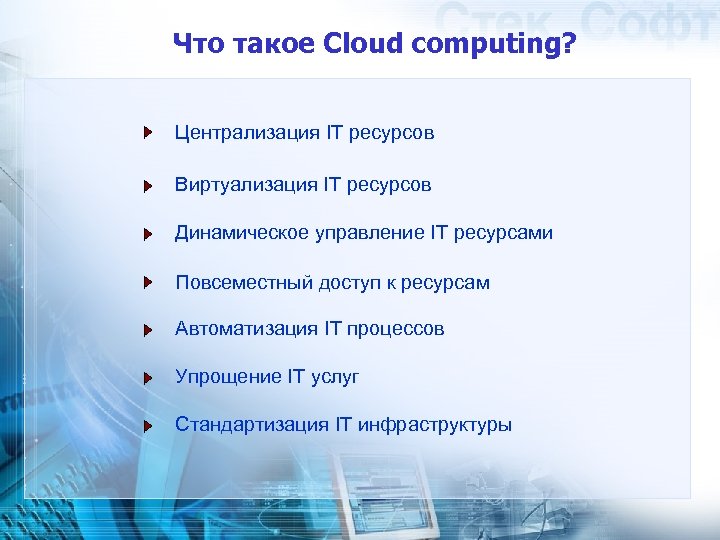

What is cloud computing?
- Centralization of IT resources
- Virtualization of IT resources
- Dynamic management of IT resources
- Ubiquitous access to resources
- Automation of IT processes
- Simplification of IT services
- Standardization of IT infrastructure

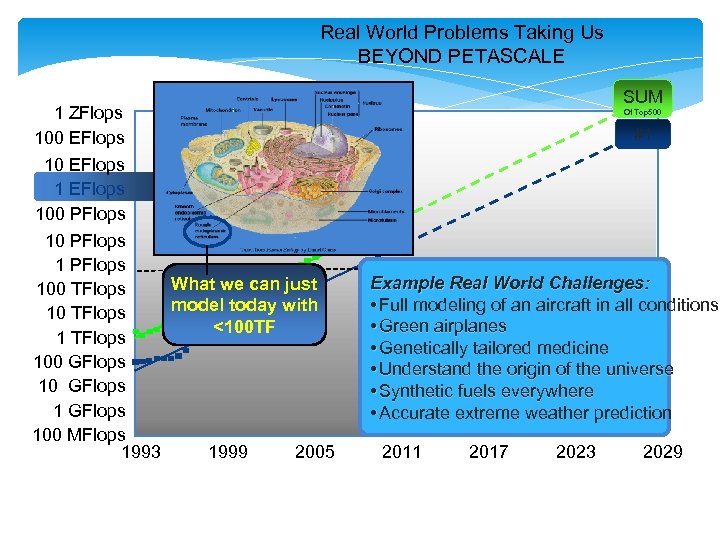

Real World Problems Taking Us BEYOND PETASCALE

[Chart: performance of the #1 system and of the sum of the Top 500, 1993–2029, on an axis from 100 MFlops to 1 ZFlops; "what we can just model today" is <100 TFlops.]

Example real-world applications and the performance they require:
- Aerodynamic Analysis: 1 Petaflops
- Laser Optics: 10 Petaflops
- Molecular Dynamics in Biology: 20 Petaflops
- Aerodynamic Design: 1 Exaflops
- Computational Cosmology: 10 Exaflops
- Turbulence in Physics: 100 Exaflops
- Computational Chemistry: 1 Zettaflops

Example real-world challenges:
- Full modeling of an aircraft in all conditions
- Green airplanes
- Genetically tailored medicine
- Understanding the origin of the universe
- Synthetic fuels everywhere
- Accurate extreme weather prediction

Source: Dr. Steve Chen, "The Growing HPC Momentum in China", June 30th, 2006, Dresden, Germany

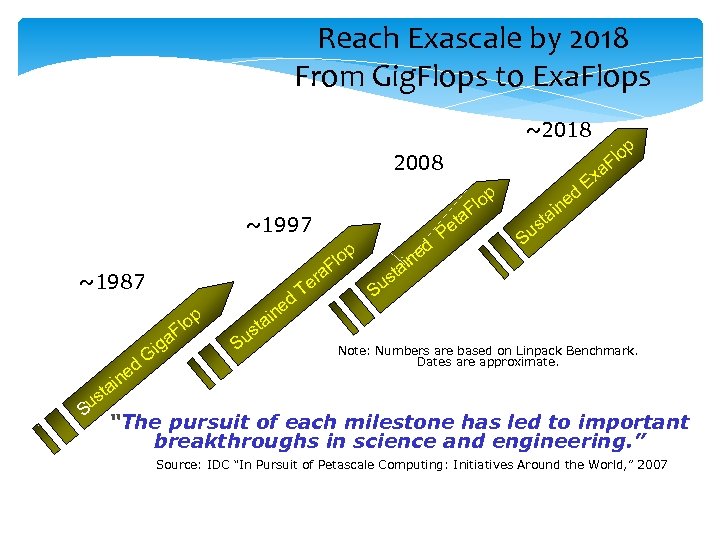

Reach Exascale by 2018: From GigaFlops to ExaFlops
- ~1987: GigaFlops sustained
- ~1997: TeraFlops sustained
- 2008: PetaFlops sustained
- ~2018: ExaFlops sustained

Note: Numbers are based on the Linpack benchmark. Dates are approximate.

"The pursuit of each milestone has led to important breakthroughs in science and engineering." Source: IDC, "In Pursuit of Petascale Computing: Initiatives Around the World," 2007
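Each milestone is a 1000× step in sustained Linpack performance. A minimal Python sketch, assuming smooth exponential growth between the approximate dates on the slide (and treating ~2018 as the slide's projection rather than an achieved result), turns those steps into doubling times:

```python
import math

# Approximate milestone dates from the slide (sustained Linpack performance, flop/s).
MILESTONES = [
    ("GigaFlops", 1987, 1e9),
    ("TeraFlops", 1997, 1e12),
    ("PetaFlops", 2008, 1e15),
    ("ExaFlops", 2018, 1e18),  # projected date, not a measured result
]


def doubling_time(year_a: int, flops_a: float, year_b: int, flops_b: float) -> float:
    """Years per doubling of performance, assuming steady exponential growth."""
    return (year_b - year_a) / math.log2(flops_b / flops_a)


for (name_a, y_a, f_a), (name_b, y_b, f_b) in zip(MILESTONES, MILESTONES[1:]):
    years = doubling_time(y_a, f_a, y_b, f_b)
    print(f"{name_a} -> {name_b}: doubling every {years:.2f} years")
```

All three intervals come out at roughly one year per doubling, since 1000 ≈ 2^10 and each step took about ten years.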

Top 500

| # | Site | System | Cores | Rmax (TFlop/s) | Rpeak (TFlop/s) | Power (kW) |
|---|---|---|---|---|---|---|
| 1 | National University of Defense Technology, China | Tianhe-2 (MilkyWay-2) - TH-IVB-FEP Cluster, Intel Xeon E5-2692 12C 2.200 GHz, TH Express-2, Intel Xeon Phi 31S1P (NUDT) | 3,120,000 | 33,862.7 | 54,902.4 | 17,808 |
| 2 | DOE/SC/Oak Ridge National Laboratory, United States | Titan - Cray XK7, Opteron 6274 16C 2.200 GHz, Cray Gemini interconnect, NVIDIA K20x (Cray Inc.) | 560,640 | 17,590.0 | 27,112.5 | 8,209 |
| 3 | DOE/NNSA/LLNL, United States | Sequoia - BlueGene/Q, Power BQC 16C 1.60 GHz, Custom (IBM) | 1,572,864 | 17,173.2 | 20,132.7 | 7,890 |
| 4 | RIKEN Advanced Institute for Computational Science (AICS), Japan | K computer, SPARC64 VIIIfx 2.0 GHz, Tofu interconnect (Fujitsu) | 705,024 | 10,510.0 | 11,280.4 | 12,660 |
| 5 | DOE/SC/Argonne National Laboratory, United States | Mira - BlueGene/Q, Power BQC 16C 1.60 GHz, Custom (IBM) | 786,432 | 8,586.6 | 10,066.3 | 3,945 |
| 6 | Texas Advanced Computing Center/Univ. of Texas, United States | Stampede - PowerEdge C8220, Xeon E5-2680 8C 2.700 GHz, Infiniband FDR, Intel Xeon Phi SE10P (Dell) | 462,462 | 5,168.1 | 8,520.1 | 4,510 |
| 7 | Forschungszentrum Juelich (FZJ), Germany | JUQUEEN - BlueGene/Q, Power BQC 16C 1.600 GHz, Custom Interconnect (IBM) | 458,752 | 5,008.9 | 5,872.0 | 2,301 |
| 8 | DOE/NNSA/LLNL, United States | Vulcan - BlueGene/Q, Power BQC 16C 1.600 GHz, Custom Interconnect (IBM) | 393,216 | 4,293.3 | 5,033.2 | 1,972 |
| 9 | Leibniz Rechenzentrum, Germany | SuperMUC - iDataPlex DX360M4, Xeon E5-2680 8C 2.70 GHz, Infiniband FDR (IBM) | 147,456 | 2,897.0 | 3,185.1 | 3,423 |
| 10 | National Supercomputing Center in Tianjin, China | Tianhe-1A - NUDT YH MPP, Xeon X5670 6C 2.93 GHz, NVIDIA 2050 (NUDT) | 186,368 | 2,566.0 | 4,701.0 | 4,040 |
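The Top 500 figures above support two standard derived metrics: Linpack efficiency (Rmax / Rpeak) and energy efficiency (Rmax per watt). A minimal Python sketch over the slide's numbers; the `System` class is just a convenient container introduced here for illustration:

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class System:
    name: str
    rmax_tflops: float   # measured Linpack performance (Rmax)
    rpeak_tflops: float  # theoretical peak performance (Rpeak)
    power_kw: float

    @property
    def linpack_efficiency(self) -> float:
        """Fraction of theoretical peak reached on Linpack (Rmax / Rpeak)."""
        return self.rmax_tflops / self.rpeak_tflops

    @property
    def gflops_per_watt(self) -> float:
        """TFlop/s per kW is numerically equal to GFlop/s per W."""
        return self.rmax_tflops / self.power_kw


# Rmax, Rpeak and power as listed in the Top 500 table on the slide.
TOP10 = [
    System("Tianhe-2", 33862.7, 54902.4, 17808),
    System("Titan", 17590.0, 27112.5, 8209),
    System("Sequoia", 17173.2, 20132.7, 7890),
    System("K computer", 10510.0, 11280.4, 12660),
    System("Mira", 8586.6, 10066.3, 3945),
    System("Stampede", 5168.1, 8520.1, 4510),
    System("JUQUEEN", 5008.9, 5872.0, 2301),
    System("Vulcan", 4293.3, 5033.2, 1972),
    System("SuperMUC", 2897.0, 3185.1, 3423),
    System("Tianhe-1A", 2566.0, 4701.0, 4040),
]

for s in TOP10:
    print(f"{s.name:<11} {s.linpack_efficiency:6.1%} of peak, {s.gflops_per_watt:.2f} GFlop/s per W")
```

On these figures the K computer reaches about 93% of its peak but stays under 1 GFlop/s per W, while the accelerator-based Tianhe-2 reaches about 62% of peak at roughly 1.9 GFlop/s per W.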

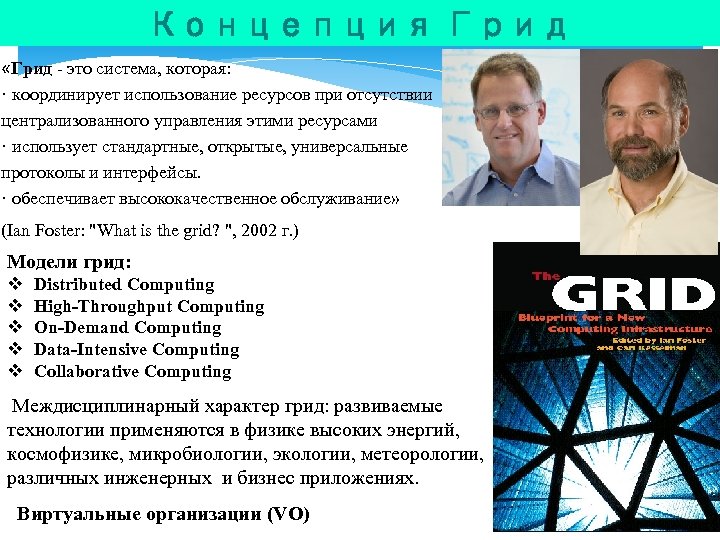

The Grid concept

"A grid is a system that:
- coordinates the use of resources in the absence of centralized control over those resources;
- uses standard, open, general-purpose protocols and interfaces;
- delivers nontrivial qualities of service" (Ian Foster, "What is the Grid?", 2002)

Grid models:
- Distributed Computing
- High-Throughput Computing
- On-Demand Computing
- Data-Intensive Computing
- Collaborative Computing

The interdisciplinary nature of the grid: the technologies being developed are applied in high-energy physics, astrophysics, microbiology, ecology, meteorology, and various engineering and business applications. Virtual organizations (VO).

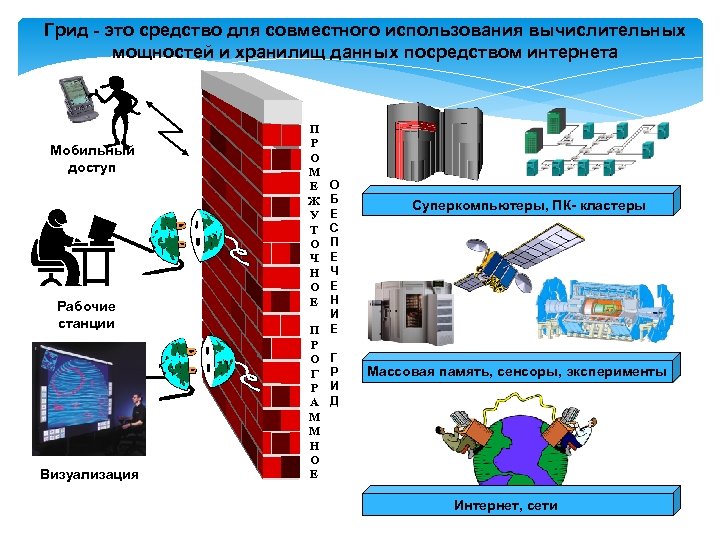

A grid is a means of sharing computing power and data storage over the Internet.

[Diagram: grid middleware linking mobile access, workstations and visualization with supercomputers and PC clusters, mass storage, sensors and experiments, over the Internet and networks.]

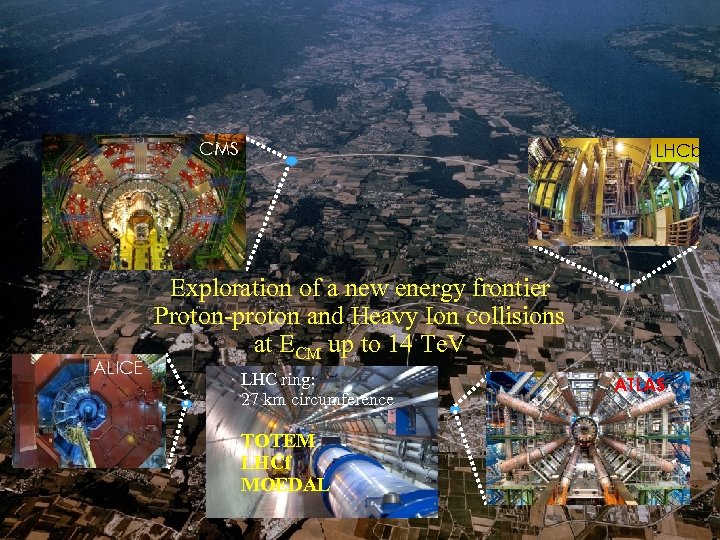

Enter a New Era in Fundamental Science

The Large Hadron Collider (LHC), one of the largest and truly global scientific projects ever built, is the most exciting turning point in particle physics.
- Exploration of a new energy frontier: proton-proton and heavy-ion collisions at E_CM up to 14 TeV
- LHC ring: 27 km circumference
- Experiments: ATLAS, CMS, ALICE, LHCb, TOTEM, LHCf, MoEDAL

(Korea and CERN / July 2009)

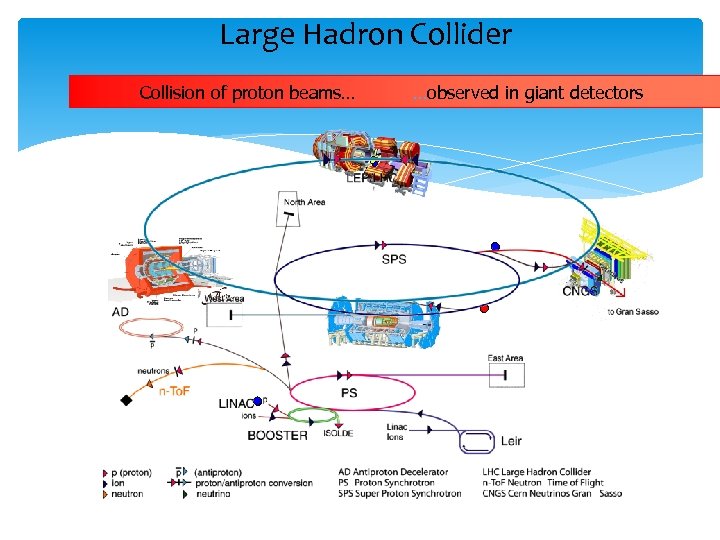

Large Hadron Collider Collision of proton beams… …observed in giant detectors

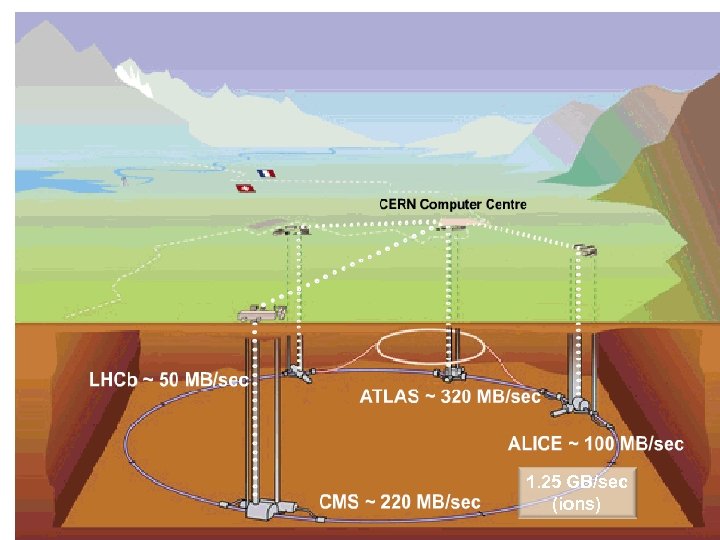

1.25 GB/sec (ions) (Ian.Bird@cern.ch)
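To put 1.25 GB/sec in perspective, a back-of-the-envelope Python sketch, assuming (unrealistically) that the rate is sustained around the clock and using decimal units (1 TB = 1000 GB):

```python
SECONDS_PER_DAY = 24 * 60 * 60  # 86,400 s


def volume_tb(rate_gb_per_s: float, seconds: float = SECONDS_PER_DAY) -> float:
    """Volume in decimal TB accumulated at a constant rate over `seconds`."""
    return rate_gb_per_s * seconds / 1000


print(volume_tb(1.25))  # 108.0 TB per day at a constant 1.25 GB/s
```

The LHC does not deliver beam continuously, so real daily volumes are lower; the function only scales a rate by a duration.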

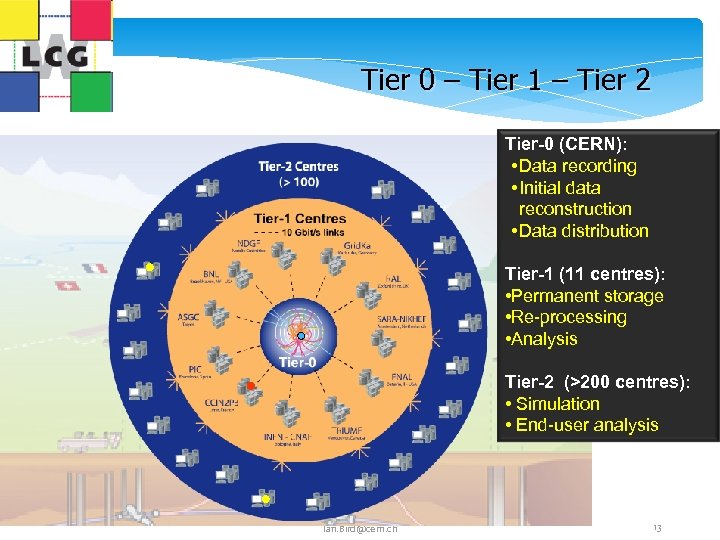

Tier 0 – Tier 1 – Tier 2
- Tier-0 (CERN): data recording; initial data reconstruction; data distribution
- Tier-1 (11 centres): permanent storage; re-processing; analysis
- Tier-2 (>200 centres): simulation; end-user analysis

(Ian.Bird@cern.ch)

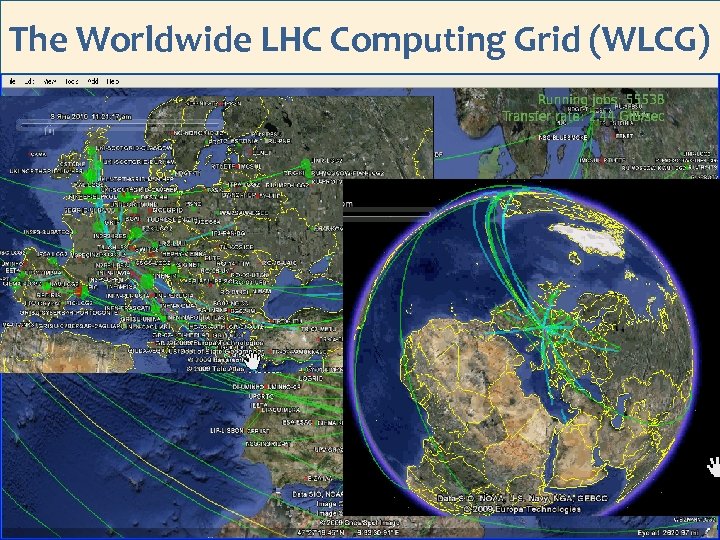

The Worldwide LHC Computing Grid (WLCG)

GRID, as a brand-new understanding of the possibilities of computers and computer networks, envisages a global integration of information and computing resources. At the initiative of CERN, the EU DataGrid project started in January 2001 with the purpose of testing and developing advanced grid technologies. JINR took part in this project. The LCG (LHC Computing Grid) project was a continuation of the EU DataGrid project. The main task of the new project was to build a global infrastructure of regional centres for processing, storing and analysing data from the physics experiments at the Large Hadron Collider (LHC).
- 2003: the Russian consortium RDIG (Russian Data Intensive Grid) was established to provide full-scale participation of JINR and Russia in the implementation of the LCG/EGEE project.
- 2004: the EGEE (Enabling Grids for E-sciencE) project was started. CERN is its lead organization, and JINR is one of its participants.
- 2010: the EGI-InSPIRE project (Integrated Sustainable Pan-European Infrastructure for Researchers in Europe) was launched.

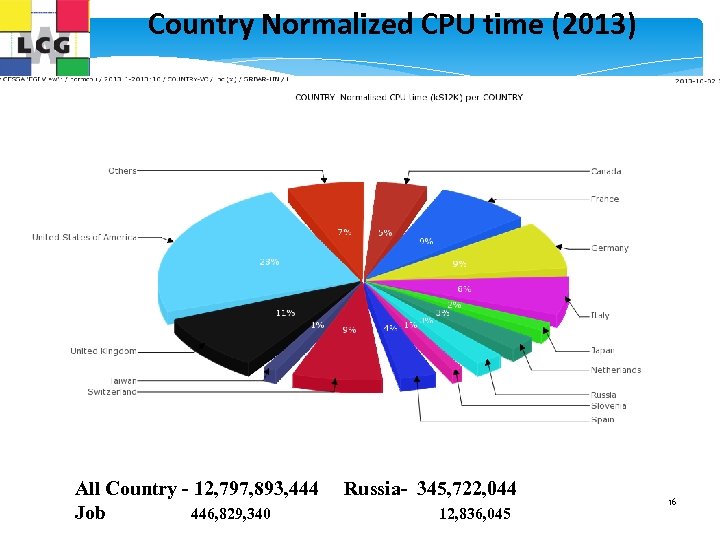

Normalized CPU time by country (2013)

| | Normalized CPU time | Jobs |
|---|---|---|
| All countries | 12,797,893,444 | 446,829,340 |
| Russia | 345,722,044 | 12,836,045 |
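Reading the two "Job" figures as the all-country and Russian job counts (an assumption about the flattened slide layout), Russia's share of the 2013 totals follows from a simple ratio. A minimal Python sketch:

```python
# 2013 figures from the slide; the "Job" numbers are read as (all countries, Russia).
TOTAL_CPU, RUSSIA_CPU = 12_797_893_444, 345_722_044
TOTAL_JOBS, RUSSIA_JOBS = 446_829_340, 12_836_045


def share(part: int, total: int) -> float:
    """Fraction of the total contributed by one country."""
    return part / total


cpu_share = share(RUSSIA_CPU, TOTAL_CPU)
job_share = share(RUSSIA_JOBS, TOTAL_JOBS)
print(f"Russia: {cpu_share:.2%} of normalized CPU time, {job_share:.2%} of jobs")
# -> Russia: 2.70% of normalized CPU time, 2.87% of jobs
```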

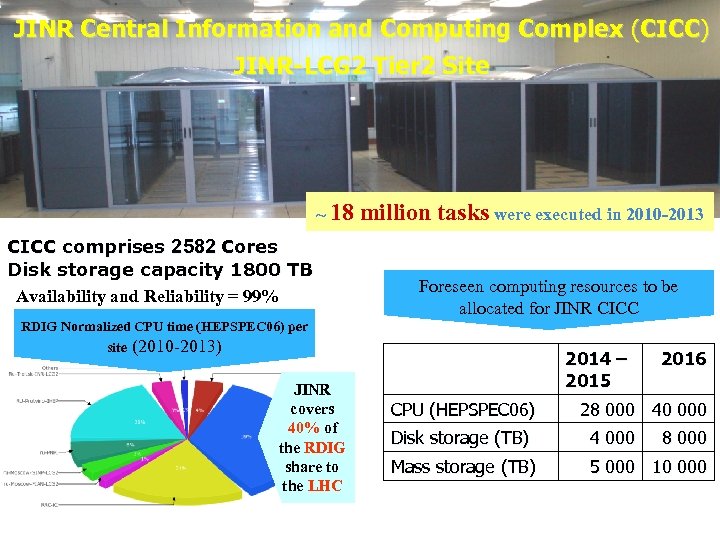

JINR Central Information and Computing Complex (CICC): JINR-LCG2 Tier 2 site
- CICC comprises 2582 cores
- Disk storage capacity: 1800 TB
- Availability and reliability: 99%
- ~18 million tasks were executed in 2010–2013
- RDIG normalized CPU time (HEPSPEC06) per site (2010–2013): JINR covers 40% of the RDIG share to the LHC

Foreseen computing resources to be allocated for JINR CICC:

| Resource | 2014–2015 | 2016 |
|---|---|---|
| CPU (HEPSPEC06) | 28,000 | 40,000 |
| Disk storage (TB) | 4,000 | 8,000 |
| Mass storage (TB) | 5,000 | 10,000 |
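The planned allocations grow unevenly: storage doubles from 2014–2015 to 2016, while CPU grows by about 43%. A small Python check over the table's numbers (the dictionary layout is just one convenient encoding):

```python
# Planned JINR CICC resources from the slide: (2014-2015, 2016).
CICC_PLAN = {
    "CPU (HEPSPEC06)": (28_000, 40_000),
    "Disk storage (TB)": (4_000, 8_000),
    "Mass storage (TB)": (5_000, 10_000),
}


def growth_factor(before: float, after: float) -> float:
    """Ratio of the 2016 allocation to the 2014-2015 allocation."""
    return after / before


for resource, (before, after) in CICC_PLAN.items():
    print(f"{resource}: x{growth_factor(before, after):.2f}")
```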

Multifunctional centre for data processing, analysis and storage:
- Tier 2 level grid infrastructure to support the experiments at the LHC (ATLAS, ALICE, CMS, LHCb), FAIR (CBM, PANDA) and other large-scale experiments;
- a distributed infrastructure for the storage, processing and analysis of experimental data from the NICA accelerator complex;
- a cloud computing infrastructure;
- a hybrid-architecture supercomputer;
- an educational and research infrastructure for distributed and parallel computing.

Collaboration in the area of WLCG monitoring
- The Worldwide LHC Computing Grid (WLCG) today includes more than 170 computing centers, where more than 2 million jobs are executed daily and petabytes of data are transferred between sites.
- Monitoring of the LHC computing activities and of the health and performance of the distributed sites and services is vital to the success of LHC data processing.
- For several years, CERN (IT department) and JINR have collaborated on developing applications for WLCG monitoring:
  - WLCG Transfer Dashboard
  - Monitoring of the XRootD federations
  - WLCG Google Earth Dashboard
  - Tier 3 monitoring toolkit
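A transfer dashboard essentially aggregates many individual file transfers into per-link statistics. The Python sketch below illustrates that aggregation step only; the `Transfer` record layout, the function name and the toy numbers are hypothetical and do not reflect the WLCG Transfer Dashboard's actual schema or code.

```python
from collections import defaultdict
from typing import NamedTuple


class Transfer(NamedTuple):
    # Hypothetical record layout for illustration only; not the Dashboard's real schema.
    src: str
    dst: str
    bytes_moved: int
    seconds: float


def per_link_summary(transfers):
    """Group transfers by (source, destination) link.

    Returns {link: (total_bytes, mean_rate_mb_s)}, where the mean rate is total bytes
    divided by the summed transfer durations (the average per-transfer rate).
    """
    totals = defaultdict(lambda: [0, 0.0])  # link -> [bytes, seconds]
    for t in transfers:
        acc = totals[(t.src, t.dst)]
        acc[0] += t.bytes_moved
        acc[1] += t.seconds
    return {
        link: (nbytes, nbytes / secs / 1e6 if secs > 0 else 0.0)
        for link, (nbytes, secs) in totals.items()
    }


# Toy records (made-up numbers).
records = [
    Transfer("CERN", "JINR", 2_000_000_000, 20.0),
    Transfer("CERN", "JINR", 1_000_000_000, 10.0),
    Transfer("JINR", "CERN", 500_000_000, 5.0),
]
for link, (nbytes, rate) in per_link_summary(records).items():
    print(link, nbytes, f"{rate:.1f} MB/s")
```

Summing durations gives a per-transfer average; a real monitor measuring aggregate link throughput would instead divide by a wall-clock time window, since transfers run concurrently.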

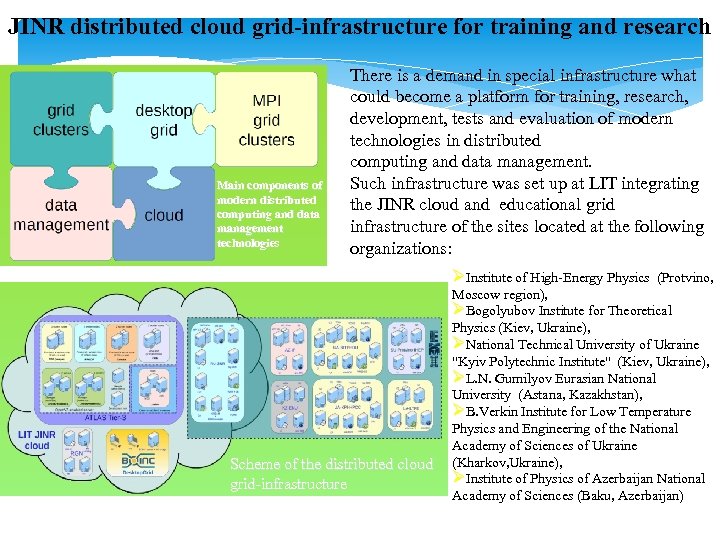

JINR distributed cloud grid infrastructure for training and research

[Diagram: main components of modern distributed computing and data management technologies; scheme of the distributed cloud grid infrastructure.]

There is demand for a special infrastructure that could become a platform for training, research, development, testing and evaluation of modern technologies in distributed computing and data management. Such an infrastructure was set up at LIT, integrating the JINR cloud and the educational grid infrastructure of sites at the following organizations:
- Institute of High-Energy Physics (Protvino, Moscow region)
- Bogolyubov Institute for Theoretical Physics (Kiev, Ukraine)
- National Technical University of Ukraine "Kyiv Polytechnic Institute" (Kiev, Ukraine)
- L. N. Gumilyov Eurasian National University (Astana, Kazakhstan)
- B. Verkin Institute for Low Temperature Physics and Engineering of the National Academy of Sciences of Ukraine (Kharkov, Ukraine)
- Institute of Physics of Azerbaijan National Academy of Sciences (Baku, Azerbaijan)

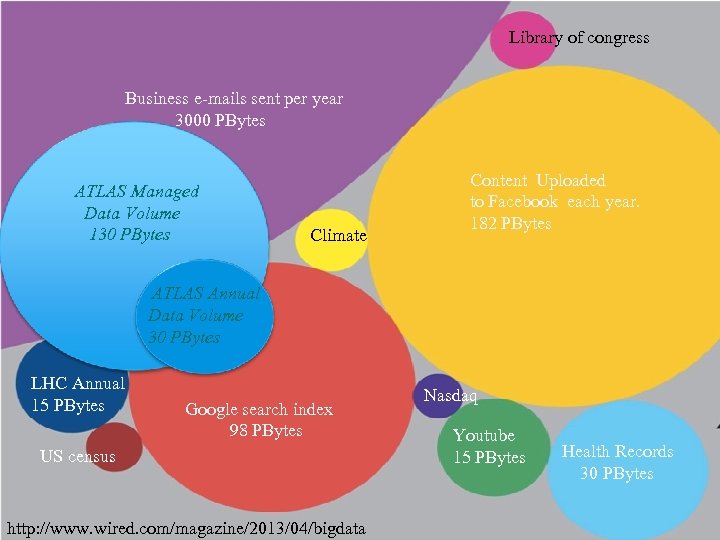

Big Data Has Arrived at an Almost Unimaginable Scale

Data volumes compared on the slide:
- Business e-mails sent per year: 3000 PBytes
- Content uploaded to Facebook each year: 182 PBytes
- ATLAS managed data volume: 130 PBytes
- Google search index: 98 PBytes
- ATLAS annual data volume: 30 PBytes
- Health records: 30 PBytes
- LHC annual: 15 PBytes
- YouTube: 15 PBytes
- Also shown (no value recovered): Library of Congress, climate, US census, Nasdaq

Source: http://www.wired.com/magazine/2013/04/bigdata (NEC 2013, 9/12/13)

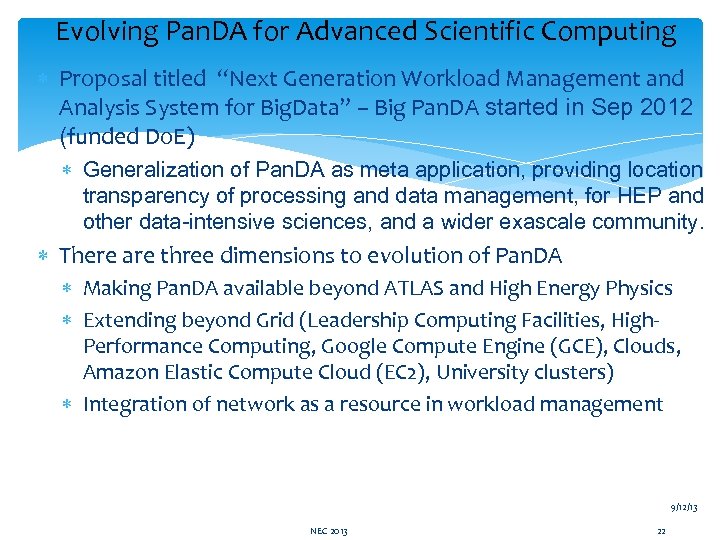

Evolving PanDA for Advanced Scientific Computing

The proposal titled "Next Generation Workload Management and Analysis System for BigData" (BigPanDA) started in Sep 2012 (funded by DoE). It generalizes PanDA as a meta-application, providing location transparency of processing and data management, for HEP and other data-intensive sciences, and for a wider exascale community. There are three dimensions to the evolution of PanDA:
- Making PanDA available beyond ATLAS and High Energy Physics
- Extending beyond the Grid (Leadership Computing Facilities, High-Performance Computing, Google Compute Engine (GCE), Clouds, Amazon Elastic Compute Cloud (EC2), university clusters)
- Integration of the network as a resource in workload management

(NEC 2013, 9/12/13)
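Location transparency means the user names the data and the system decides where the job runs. The toy broker below illustrates that idea under loudly stated assumptions: the `Site` class, the `choose_site` policy and the site and dataset names are all hypothetical and do not reproduce PanDA's actual brokerage algorithm or API.

```python
from dataclasses import dataclass, field


@dataclass
class Site:
    name: str
    free_slots: int
    datasets: set[str] = field(default_factory=set)  # datasets with a local replica


def choose_site(dataset: str, sites: list[Site]) -> tuple[str, bool]:
    """Pick a site for a job reading `dataset`; return (site name, needs_data_transfer).

    Toy policy (hypothetical, far simpler than real PanDA brokerage): prefer sites that
    already hold the data; among the candidates, take the one with the most free slots.
    """
    available = [s for s in sites if s.free_slots > 0]
    if not available:
        raise RuntimeError("no site has free slots")
    with_data = [s for s in available if dataset in s.datasets]
    pool = with_data or available
    best = max(pool, key=lambda s: s.free_slots)
    return best.name, not with_data


sites = [
    Site("SITE_A", free_slots=10, datasets={"data.run1"}),
    Site("SITE_B", free_slots=50),
    Site("SITE_C", free_slots=5, datasets={"data.run1"}),
]
print(choose_site("data.run1", sites))  # ('SITE_A', False): data already local
print(choose_site("data.run2", sites))  # ('SITE_B', True): data must be moved
```

Treating the network as a resource, the third dimension above, would amount to replacing the "prefer local data" rule with a cost that weighs transfer time against queue time.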

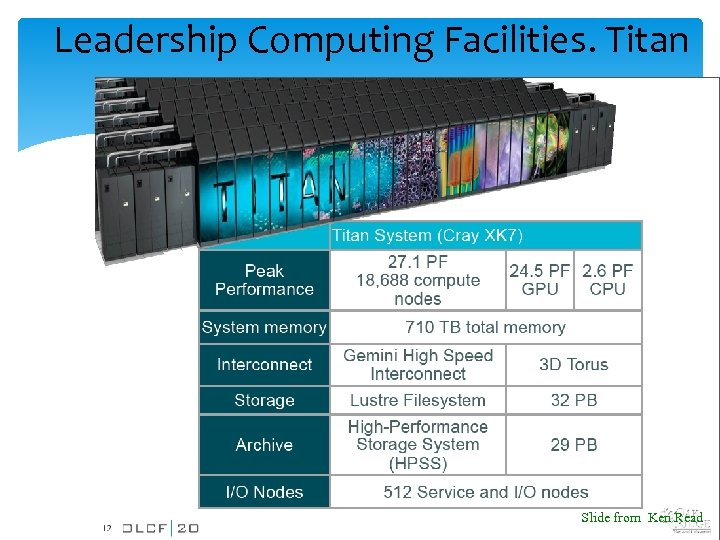

Leadership Computing Facilities: Titan (slide from Ken Read, Big Data Workshop, 8/6/13)

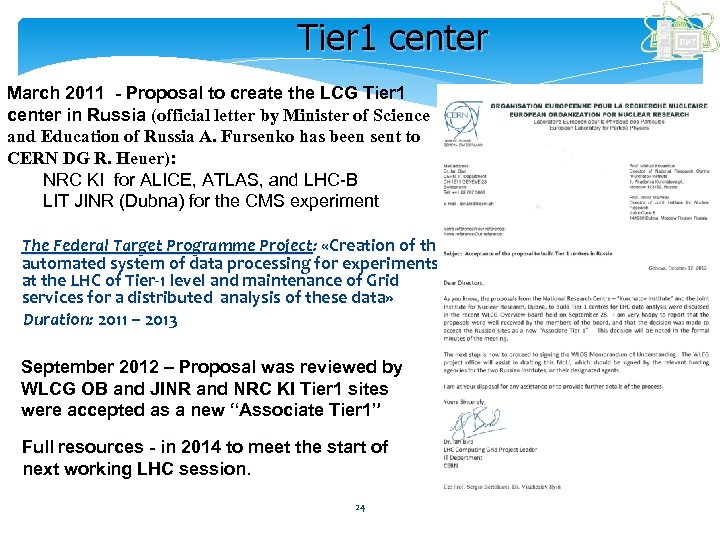

Tier 1 center

March 2011: proposal to create an LCG Tier 1 center in Russia (an official letter from the Minister of Education and Science of Russia, A. Fursenko, was sent to CERN DG R. Heuer):
- NRC KI for ALICE, ATLAS and LHCb;
- LIT JINR (Dubna) for the CMS experiment.

The Federal Target Programme project «Creation of the automated system of data processing for experiments at the LHC of Tier-1 level and maintenance of Grid services for a distributed analysis of these data». Duration: 2011–2013.

September 2012: the proposal was reviewed by the WLCG OB, and the JINR and NRC KI Tier 1 sites were accepted as new "Associate Tier 1" sites. Full resources are due in 2014, to meet the start of the next LHC run.

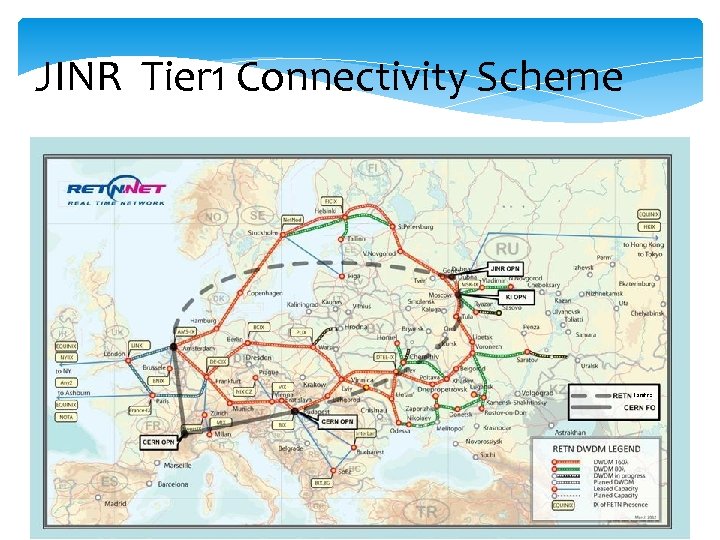

JINR Tier 1 Connectivity Scheme

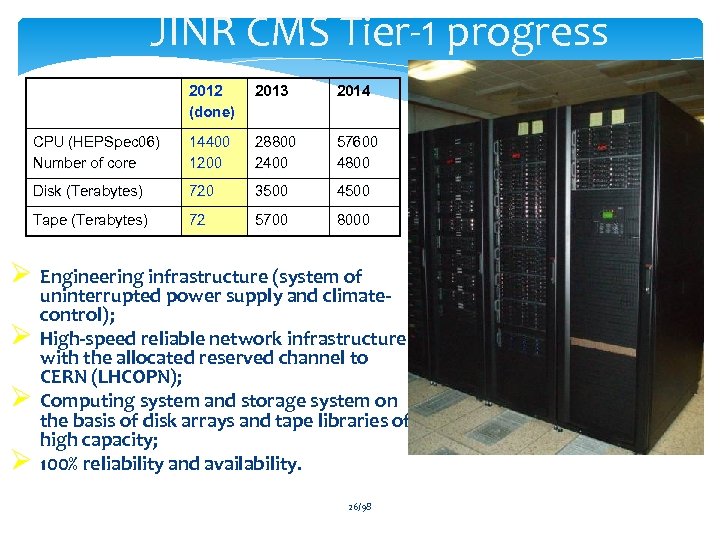

JINR CMS Tier-1 progress

| | 2012 (done) | 2013 | 2014 |
|---|---|---|---|
| CPU (HEPSpec06) | 14,400 | 28,800 | 57,600 |
| Number of cores | 1,200 | 2,400 | 4,800 |
| Disk (Terabytes) | 720 | 3,500 | 4,500 |
| Tape (Terabytes) | 72 | 5,700 | 8,000 |

- Engineering infrastructure (uninterruptible power supply and climate control);
- High-speed reliable network infrastructure with a dedicated reserved channel to CERN (LHCOPN);
- Computing system and storage system based on high-capacity disk arrays and tape libraries;
- 100% reliability and availability.
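The Tier-1 figures imply a constant rating of 12 HEPSpec06 per core, with CPU capacity doubling each year. A short Python check over the slide's numbers (the `TIER1_PLAN` dictionary is just one convenient encoding of the table):

```python
# Planned JINR CMS Tier-1 resources, copied from the table on the slide.
TIER1_PLAN = {
    2012: {"hs06": 14_400, "cores": 1_200, "disk_tb": 720, "tape_tb": 72},
    2013: {"hs06": 28_800, "cores": 2_400, "disk_tb": 3_500, "tape_tb": 5_700},
    2014: {"hs06": 57_600, "cores": 4_800, "disk_tb": 4_500, "tape_tb": 8_000},
}


def hs06_per_core(year: int) -> float:
    """Benchmark capacity per core implied by the plan for a given year."""
    row = TIER1_PLAN[year]
    return row["hs06"] / row["cores"]


years = sorted(TIER1_PLAN)
for prev, cur in zip(years, years[1:]):
    growth = TIER1_PLAN[cur]["hs06"] / TIER1_PLAN[prev]["hs06"]
    print(f"{cur}: {hs06_per_core(cur):.0f} HS06/core, CPU x{growth:.1f} vs {prev}")
```

Storage grows differently: tape jumps from 72 TB to 5,700 TB in 2013, while disk growth slows after 2013.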

Map of CERN and the WLCG Tier-1 centres: CERN, US-BNL, Amsterdam/NIKHEF-SARA, Bologna/CNAF, Ca-TRIUMF, Taipei/ASGC, Russia: NRC KI and JINR, NDGF, US-FNAL, Barcelona/PIC, De-FZK, Lyon/CCIN2P3, UK-RAL

Frameworks of JINR's grid cooperation
- Worldwide LHC Computing Grid (WLCG)
- Enabling Grids for E-sciencE (EGEE), now EGI-InSPIRE
- RDIG Development
- Project with BNL, ANL, UTA: "Next Generation Workload Management and Analysis System for BigData"
- Tier 1 Center in Russia (NRC KI, LIT JINR)
- 6 projects at CERN
- BMBF grant "Development of the grid-infrastructure and tools to provide joint investigations performed with participation of JINR and German research centers"
- "Development of grid segment for the LHC experiments", supported within the JINR–South Africa cooperation agreement
- Development of a grid segment at Cairo University and its integration into the JINR GridEdu infrastructure
- JINR – FZU AS Czech Republic project "The grid for the physics experiments"
- NASU-RFBR project "Development and support of LIT JINR and NSC KIPT grid infrastructures for distributed CMS data processing of the LHC operation"
- JINR–Romania cooperation: Hulubei-Meshcheryakov programme
- JINR–Moldova cooperation (MD-GRID, RENAM)
- JINR–Mongolia cooperation (Mongol-Grid)
- Project GridNNN (National Nanotechnological Net)

Summary: training highly qualified IT specialists
- A modern infrastructure (supercomputers, grid, cloud) for education, training and carrying out projects;
- Centres of advanced IT technologies that serve as the basis for scientific schools and for high-level projects carried out in broad international cooperation with the involvement of students and postgraduates;
- Participation in megaprojects (LHC, FAIR, NICA, PIC), within which new IT technologies are created (WWW, Grid, Big Data);
- International student schools and summer internships at high-tech organizations (CERN, JINR, NRC "Kurchatov Institute").
