5843fe782888fd99a4eb8edc0494e168.ppt

- Number of slides: 50

Grid-based Digital Libraries and Cheshire 3

Ray R. Larson, University of California, Berkeley, School of Information

ISGC - Taiwan, 2006.05.04

SLIDE 1

Overview

- The Grid, Text Mining and Digital Libraries
  - Grid Architecture
  - Grid IR Issues
- Cheshire 3: Building an Architecture and Bringing Search to Grid-Based Digital Libraries
  - Overview
  - Cheshire 3 Architecture
  - Distributed Workflows
  - Grid Experiments
- Many of the slides in this presentation were created by others, including Ian Foster, Eric Yen, Michael Buckland, Reagan Moore, Paul Watry and Clare Llewellyn

SLIDE 2

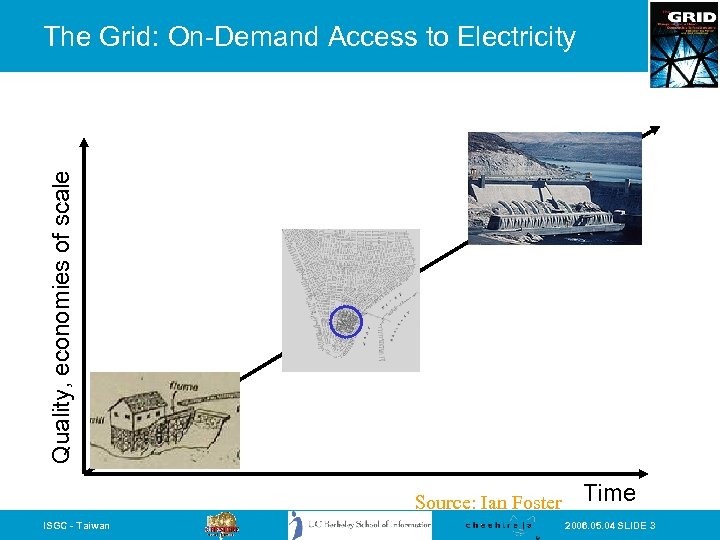

The Grid: On-Demand Access to Electricity

[Figure: quality and economies of scale plotted against time. Source: Ian Foster]

SLIDE 3

By Analogy, A Computing Grid

- Decouples production and consumption
  - Enable on-demand access
  - Achieve economies of scale
  - Enhance consumer flexibility
  - Enable new devices
- On a variety of scales
  - Department
  - Campus
  - Enterprise
  - Internet

Source: Ian Foster

SLIDE 4

What is the Grid?

“The short answer is that, whereas the Web is a service for sharing information over the Internet, the Grid is a service for sharing computer power and data storage capacity over the Internet. The Grid goes well beyond simple communication between computers, and aims ultimately to turn the global network of computers into one vast computational resource.”

Source: The Global Grid Forum

SLIDE 5

The Foundations are Being Laid

[Figure: map of UK Grid sites (Edinburgh, Glasgow, DL, Belfast, Newcastle, Manchester, Cambridge, Oxford, Cardiff, RAL, Hinxton, London, Soton). Legend: Tier 0/1, Tier 2 and Tier 3 facilities; 10 Gbps, 2.5 Gbps, 622 Mbps and other links]

SLIDE 6

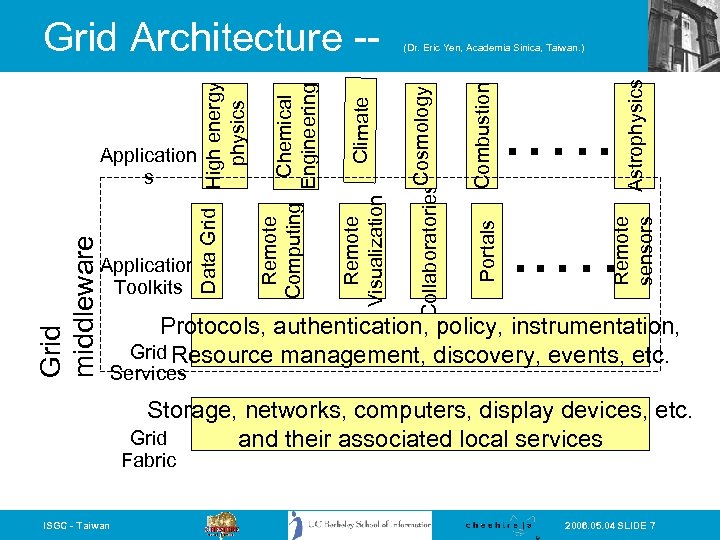

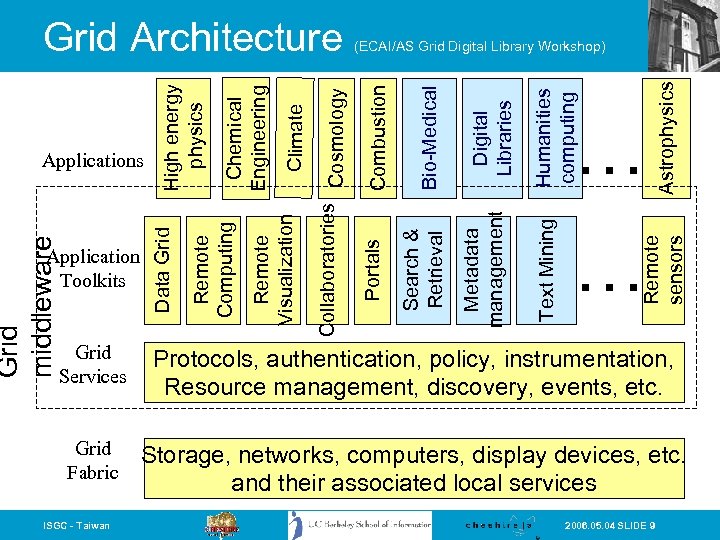

Grid Architecture

(Dr. Eric Yen, Academia Sinica, Taiwan)

- Applications: Chemical Engineering, Climate, High energy physics, Cosmology, Astrophysics, Combustion, ...
- Application Toolkits: Data Grid, Remote Computing, Remote Visualization, Collaboratories, Remote sensors, Portals, ...
- Grid Services (Grid middleware): protocols, authentication, policy, instrumentation, resource management, discovery, events, etc.
- Grid Fabric: storage, networks, computers, display devices, etc., and their associated local services

SLIDE 7

But… what about…

- Applications and data that are NOT for scientific research?
- Things like:

SLIDE 8

Grid Architecture

(ECAI/AS Grid Digital Library Workshop)

- Applications: Chemical Engineering, Climate, High energy physics, Cosmology, Astrophysics, Combustion, ...
- Application Toolkits: Data Grid, Remote Computing, Remote Visualization, Collaboratories, Remote sensors, Portals, ...
- Also shown: Humanities computing, Text Mining, Digital Libraries, Metadata management, Bio-Medical, Search & Retrieval
- Grid Services (Grid middleware): protocols, authentication, policy, instrumentation, resource management, discovery, events, etc.
- Grid Fabric: storage, networks, computers, display devices, etc., and their associated local services

SLIDE 9

Grid-Based Digital Libraries: Needs

- Large-scale distributed storage requirements and technologies
- Organizing distributed digital collections
- Shared metadata: standards and requirements
- Managing distributed digital collections
- Security and access control
- Collection replication and backup
- Distributed Information Retrieval support and algorithms

SLIDE 10

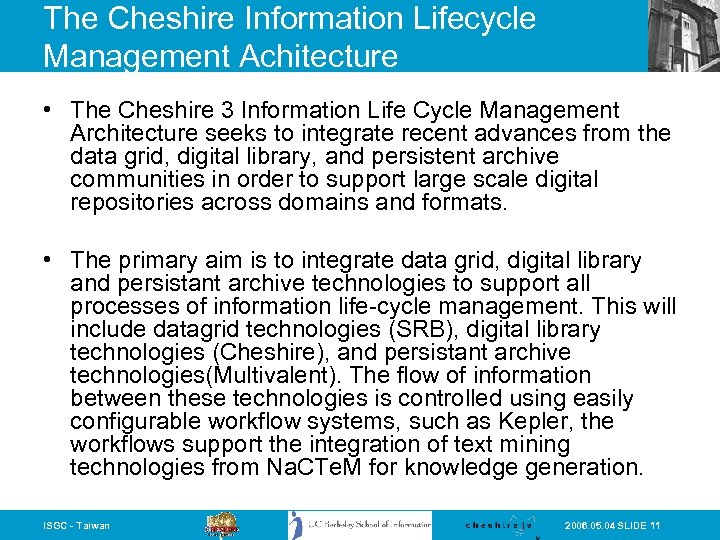

The Cheshire Information Lifecycle Management Architecture

- The Cheshire 3 Information Life Cycle Management Architecture seeks to integrate recent advances from the data grid, digital library, and persistent archive communities in order to support large-scale digital repositories across domains and formats.
- The primary aim is to integrate data grid, digital library and persistent archive technologies to support all processes of information life-cycle management. This will include data grid technologies (SRB), digital library technologies (Cheshire), and persistent archive technologies (Multivalent). The flow of information between these technologies is controlled using easily configurable workflow systems, such as Kepler. The workflows support the integration of text mining technologies from NaCTeM for knowledge generation.

SLIDE 11

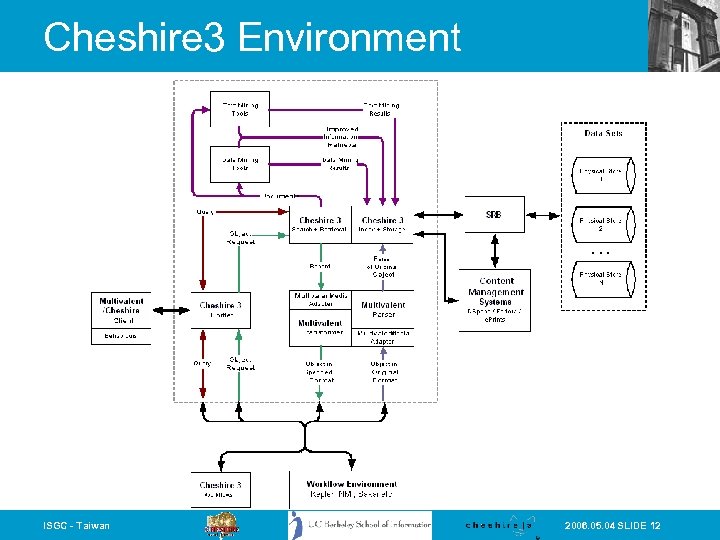

Cheshire 3 Environment

SLIDE 12

Text and Data Mining Systems

- The Cheshire system is being used in the UK National Text Mining Centre (NaCTeM) as a primary means of integrating information retrieval systems with text mining and data analysis systems.
- The framework will seek to integrate text and data mining tools, and implement these on highly parallel grid infrastructures. We intend to further supplement these capabilities by incorporating support for high-dimensional data sets.
- Such support will extend the capabilities of the Cheshire system to extract and relate semantic information efficiently and effectively.

SLIDE 13

Digital Preservation Technologies

- The architecture is now being used for multiple persistent archive projects, including the NARA Persistent Archives and NPACI collaboration project; the NSDL Digital Preservation Life-cycle Management project; the NSDL Persistent Archive Testbed project; and the AHDS prototype.
- We are working on a knowledge generation infrastructure, which may be used to characterise knowledge relationships. The outcome of this development may make it possible to extract and process knowledge from massive data collections, which will increase the rate of discovery.
- The architecture incorporates the Multivalent digital object management system as a novel solution to long-term preservation requirements. This component will validate, parse, and render objects from the original bit stream.

SLIDE 14

Data Grid Technologies

- Storage capabilities of the framework will be primarily based on the data grid technologies provided by the SDSC Storage Resource Broker (SRB).
- The SRB offers storage, archiving and version control of heterogeneous data in containers to allow aggregated data movement. It is able to create multiple geographically distributed backups of data via a transparent interface.
- The SRB is emerging as the de facto standard for data grid applications, and is already in use by:
  - The World University Network
  - The Biomedical Informatics Research Network (BIRN)
  - The UK eScience Centre (CCLRC)
  - The National Partnership for Advanced Computational Infrastructure (NPACI)
  - The NASA Information Power Grid

SLIDE 15

![Data Grid Problem](https://present5.com/presentation/5843fe782888fd99a4eb8edc0494e168/image-16.jpg)

Data Grid Problem

- “Enable a geographically distributed community [of thousands] to pool their resources in order to perform sophisticated, computationally intensive analyses on Petabytes of data”
- Note that this problem:
  - Is common to many areas of science
  - Overlaps strongly with other Grid problems

SLIDE 16

Examples of Desired Data Grid Functionality

- High-speed, reliable access to remote data
- Automated discovery of “best” copy of data
- Manage replication to improve performance
- Co-schedule compute, storage, network
- “Transparency” with respect to delivered performance
  - Particularly important for IR applications where an end user may be waiting for results
- Enforce access control on data
- Allow representation of “global” resource allocation policies

SLIDE 17

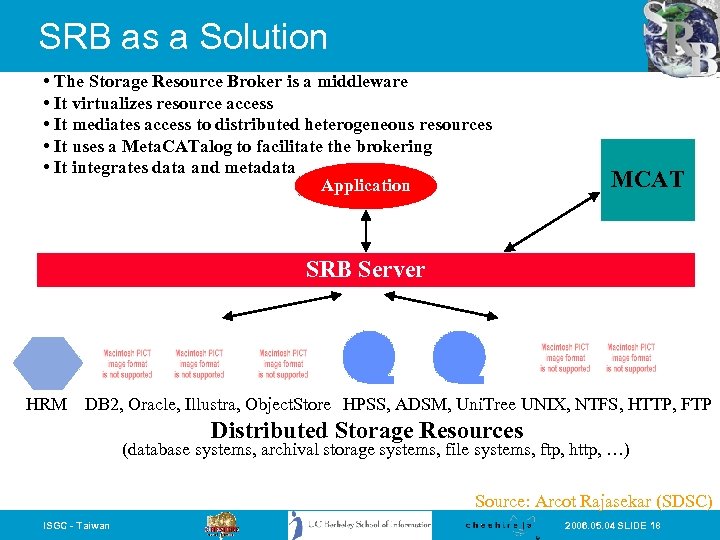

SRB as a Solution

- The Storage Resource Broker is middleware
- It virtualizes resource access
- It mediates access to distributed heterogeneous resources
- It uses a MetaCATalog (MCAT) to facilitate the brokering
- It integrates data and metadata

[Figure: an Application talks to the SRB Server, which consults the MCAT and brokers access to distributed storage resources (database systems, archival storage systems, file systems, ftp, http, …): HRM; DB2, Oracle, Illustra, ObjectStore; HPSS, ADSM, UniTree; UNIX, NTFS, HTTP, FTP]

Source: Arcot Rajasekar (SDSC)

SLIDE 18

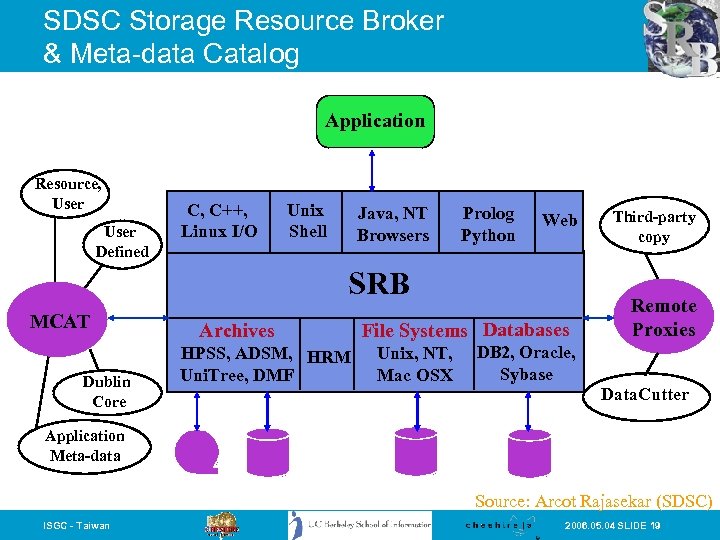

SDSC Storage Resource Broker & Meta-data Catalog

[Figure: SRB and MCAT between applications and storage. Labels:
- Application interfaces: C, C++, Linux I/O; Unix Shell; Java, NT Browsers; Prolog; Python; Web
- Metadata: MCAT; Dublin Core; Resource, User Defined; Application Meta-data
- Services: Third-party copy; Remote Proxies; DataCutter
- Archives: HPSS, ADSM, HRM, UniTree, DMF
- File Systems: Unix, NT, Mac OSX
- Databases: DB2, Oracle, Sybase]

Source: Arcot Rajasekar (SDSC)

SLIDE 19

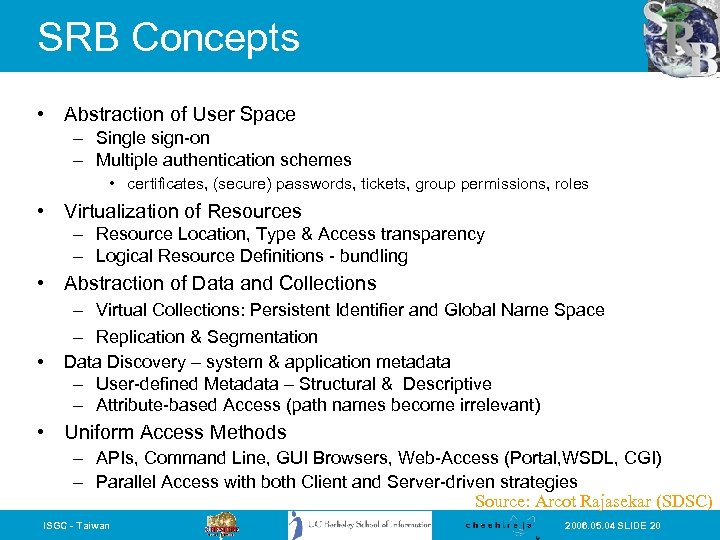

SRB Concepts

- Abstraction of User Space
  - Single sign-on
  - Multiple authentication schemes: certificates, (secure) passwords, tickets, group permissions, roles
- Virtualization of Resources
  - Resource Location, Type & Access transparency
  - Logical Resource Definitions (bundling)
- Abstraction of Data and Collections
  - Virtual Collections: Persistent Identifier and Global Name Space
  - Replication & Segmentation
- Data Discovery
  - System & application metadata
  - User-defined Metadata
  - Structural & Descriptive
  - Attribute-based Access (path names become irrelevant)
- Uniform Access Methods
  - APIs, Command Line, GUI Browsers, Web-Access (Portal, WSDL, CGI)
  - Parallel Access with both Client and Server-driven strategies

Source: Arcot Rajasekar (SDSC)

SLIDE 20

DL Collection Management

- Content management systems, such as DSpace and Fedora, are currently being extended to make use of the SRB for data grid storage. This will ensure their collections can in future be of virtually unlimited size, and be stored, replicated, and accessible via federated grid technologies.
- By supporting the SRB, we have ensured that the Cheshire framework will be able to integrate with these systems, thereby facilitating digital content ingestion, resource discovery, content management, dissemination, and preservation within a data grid environment.

SLIDE 21

Workflow Environments

- Workflow environments, such as Kepler, are designed to allow researchers to design and execute flexible processing sequences for complex data analysis. They provide a graphical user interface that lets users at any level, from a variety of disciplines, design these workflows in a drag-and-drop manner.
- In particular, we intend to provide a platform that can integrate text mining techniques and methodologies, either as part of an internal Cheshire workflow or as an external workflow configured using a system such as Kepler.

SLIDE 22

Grid IR Issues

- We want to preserve the same retrieval performance (precision/recall) while hopefully increasing efficiency (i.e., speed)
- Very large-scale distribution of resources is (still) a challenge for sub-second retrieval
- Unlike most other typical Grid processes, IR is potentially less computing intensive and more data intensive
- In many ways Grid IR replicates the process (and problems) of metasearch or distributed search

SLIDE 23
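A minimal sketch of the first point, assuming set-based precision and recall for a single query. The document identifiers and the two result lists are hypothetical; the check illustrates that distributing the search should leave both measures unchanged even if results arrive in a different order.

```python
def precision_recall(retrieved, relevant):
    """Return (precision, recall) for one query, treating results as sets."""
    retrieved_set = set(retrieved)
    relevant_set = set(relevant)
    hits = len(retrieved_set & relevant_set)
    precision = hits / len(retrieved_set) if retrieved_set else 0.0
    recall = hits / len(relevant_set) if relevant_set else 0.0
    return precision, recall


# Hypothetical data: the same query run on one node and on a grid.
single_node = ["d1", "d2", "d3", "d4"]
grid_run = ["d3", "d1", "d4", "d2"]   # same documents, different arrival order
relevant = ["d1", "d3", "d9"]

# Retrieval performance is preserved if both runs score identically.
same_quality = precision_recall(single_node, relevant) == precision_recall(grid_run, relevant)
```

Rank-sensitive measures (for example average precision) would additionally require the merged grid results to be re-sorted by score before comparison.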

Cheshire Digital Library System

- Cheshire was originally created at UC Berkeley and more recently co-developed at the University of Liverpool. The system itself is widely used in the United Kingdom for production digital library services including:
  - Archives Hub
  - JISC Information Environment Service Registry
  - Resource Discovery Network
  - British Library ISTC service
- The Cheshire system has recently gone through a complete redesign into its current incarnation, Cheshire 3, enabling Grid-based IR over the Data Grid

SLIDE 24

Cheshire 3 IR Overview

- XML Information Retrieval Engine
  - 3rd generation of the UC Berkeley Cheshire system, as co-developed at the University of Liverpool.
  - Uses Python for flexibility and extensibility, but uses C/C++ based libraries for processing speed.
  - Standards based: XML, XSLT, CQL, SRW/U, Z39.50, OAI, to name a few.
  - Grid capable. Uses distributed configuration files, workflow definitions and PVM or MPI to scale from one machine to thousands of parallel nodes.
  - Free and Open Source Software.
  - http://www.cheshire3.org/ (under development!)

SLIDE 25

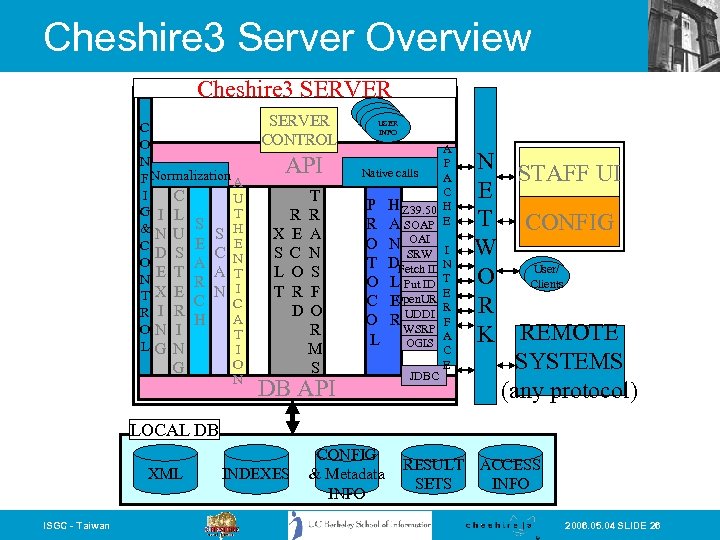

Cheshire 3 Server Overview

[Figure: Cheshire 3 server architecture. Readable labels: server control; API; DB API; XSLT; normalization; native calls; protocols Z39.50, SOAP, OAI, SRW, OpenURL, UDDI, WSRP, OGIS, JDBC; Fetch ID and Put ID; staff UI; config; users/clients; remote systems (any protocol); local DB; XML config and metadata info; indexes; result sets; access info; user info]

SLIDE 26

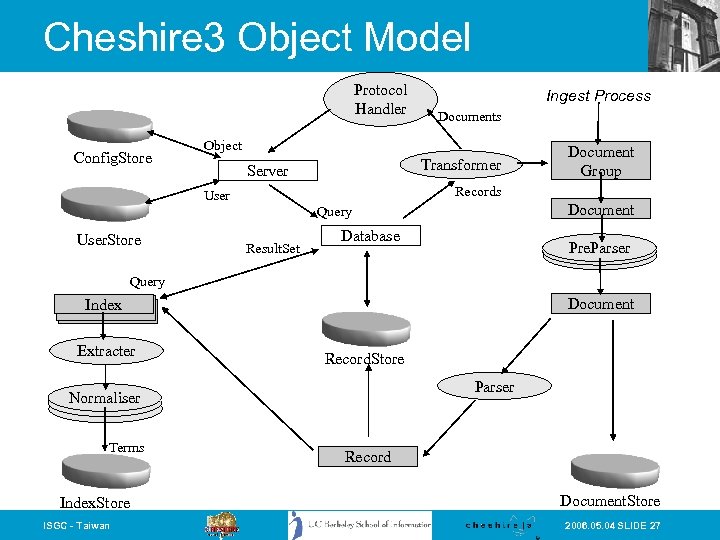

Cheshire 3 Object Model

[Figure: object model diagram. Objects shown: Server, Protocol Handler, ConfigStore, User, UserStore, Database, Query, ResultSet, Ingest Process, DocumentGroup, Document, DocumentStore, PreParser, Parser, Record, RecordStore, Transformer, Index, IndexStore, Extracter, Normaliser, Terms]

SLIDE 27

Workflow Objects

- Workflows are first-class objects in Cheshire 3 (though not represented in the model diagram) and now support integration with Kepler workflows
- All Process and Abstract objects have individual XML configurations with a common base schema with extensions
- We can treat configurations as Records and store them in regular RecordStores, allowing access via regular IR protocols.

SLIDE 28

Workflow References

- Workflows contain a series of instructions to perform, with reference to other Cheshire 3 objects
- Reference is via pseudo-unique identifiers. They are "pseudo" because they are unique only within the current context (Server vs Database)
- Workflows are objects, so this enables server-level workflows to call database-specific workflows with the same identifier

SLIDE 29
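The context-scoped lookup described above can be sketched as a chain of scopes, where a database scope is consulted before its parent server scope. This is an illustration only: the `Context` class, its methods and the identifier `indexWorkflow` are hypothetical names, not the Cheshire 3 API.

```python
class Context:
    """A configuration scope (e.g. a server or a database) holding named objects."""

    def __init__(self, name, parent=None):
        self.name = name
        self.parent = parent
        self.objects = {}

    def register(self, identifier, obj):
        """Bind an identifier inside this scope only."""
        self.objects[identifier] = obj

    def resolve(self, identifier):
        """Look in this scope first, then walk up through parent scopes."""
        scope = self
        while scope is not None:
            if identifier in scope.objects:
                return scope.objects[identifier]
            scope = scope.parent
        raise KeyError(identifier)


# The same identifier names a different workflow in each context.
server = Context("server")
database = Context("db_nara", parent=server)
server.register("indexWorkflow", lambda rec: "server-default:" + rec)
database.register("indexWorkflow", lambda rec: "db-specific:" + rec)

result = database.resolve("indexWorkflow")("record-1")
```

Resolving from the database scope picks the database-specific workflow, while a database that registers nothing falls back to the server-level one.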

Distributed Processing

- Each node in the cluster instantiates the configured architecture, potentially through a single ConfigStore
- Master nodes then run a high-level workflow to distribute the processing amongst Slave nodes by reference to a subsidiary workflow
- As object interaction is well defined in the model, the result of a workflow is equally well defined. This allows for the easy chaining of workflows, either locally or spread throughout the cluster

SLIDE 30
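A minimal sketch of the master/slave pattern above, assuming a local thread pool as a stand-in for the PVM or MPI nodes used in practice. The function names and the trivial per-record work are hypothetical; the point is that the master only distributes inputs and collects well-defined results from a subsidiary workflow.

```python
from concurrent.futures import ThreadPoolExecutor


def subsidiary_workflow(record):
    """Stand-in for the per-record workflow a slave node runs."""
    return record.strip().lower().split()


def master_workflow(records, n_workers=4):
    """Distribute records to workers and collect results in input order."""
    with ThreadPoolExecutor(max_workers=n_workers) as pool:
        return list(pool.map(subsidiary_workflow, records))


tokens = master_workflow(["Grid IR ", "Cheshire 3"], n_workers=2)
```

Because each result has a fixed shape (here, a list of tokens), the output of `master_workflow` could be fed straight into another workflow, which is the chaining property the slide describes.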

External Integration

- Cheshire workflows now allow integration with existing cross-service workflow systems, in particular Kepler/Ptolemy
- Integration at two levels:
  - Cheshire 3 as a Kepler service (black box): identify a workflow to call
  - Cheshire 3 objects as services (duplicate existing C3 workflow functions using Kepler)

SLIDE 31

Cheshire 3 Grid Tests

- Initial experiments: 20 processors in Liverpool using PVM (Parallel Virtual Machine)
- Using 16 processors with one “master” and 22 “slave” processes we were able to parse and index MARC data at about 13,000 records per second
- 610 Mb of TEI data can be parsed and indexed in seconds
- More recently, 30 processors using MPI have shown even better results, unsurprisingly.

SLIDE 32

SRB and SDSC Experiments

- In conjunction with SDSC, we have implemented SRB support for processed data storage and data acquisition
- We are continuing work with SDSC to run further evaluations using the TeraGrid through a “small” grant for 30,000 CPU hours
  - SDSC's TeraGrid cluster currently consists of 256 IBM cluster nodes, each with dual 1.5 GHz Intel® Itanium® 2 processors, for a peak performance of 3.1 teraflops. The nodes are equipped with four gigabytes (GB) of physical memory per node. The cluster is running SuSE Linux and is using Myricom's Myrinet cluster interconnect network.
- Large-scale test collections now include MEDLINE, NSDL, and the NARA preservation prototype, and we hope to use CiteSeer and the “million books” collections of the Internet Archive
- Tests with Medline showed that we were able to index 750,000 Medline records, including part-of-speech tagging, in 2 minutes, 45 seconds (using 1 master, 59 slaves)

SLIDE 33
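As a worked check of the Medline figure, the reported numbers translate into throughput as follows. The per-slave rate assumes the 59 slaves shared the load evenly and that the master did no indexing itself; neither assumption is stated in the source.

```python
records = 750_000
elapsed_seconds = 2 * 60 + 45      # 2 minutes 45 seconds = 165 s
slaves = 59

throughput = records / elapsed_seconds   # records per second, whole cluster
per_slave = throughput / slaves          # records per second, per slave (assumed even split)

print(f"{throughput:.0f} records/s overall, about {per_slave:.0f} records/s per slave")
```

This comes to roughly 4,500 records per second overall. The rate is lower than the MARC figure quoted for the Liverpool tests, which is plausible given that this run includes part-of-speech tagging.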

NARA Prototype

- The NARA (National Archives and Records Administration) preservation prototype technology demonstration…
- The current demo version is not designed to show the fastest possible search times of the collection, but instead to show that the system can scale while retaining usable search times and interacting directly with the preserved, original objects rather than a migrated version.
- The collection was generated by a web crawl for documents relating to the Space Shuttle Columbia tragedy in 2003, and includes both federal documents (such as the CAIB site and other information from NASA) and third-party discussion such as news sites (BBC, CNN, etc.) and reputable information sources (Wikipedia, Space.com). As such, it is a preservation of the popular memory of the events as well as the official perspective.

SLIDE 34

NARA Prototype: Introduction

- To ensure scalability and longevity, the design criteria for the prototype were:
  - Use the TeraGrid for processing
  - Use the Storage Resource Broker for all data storage
  - Use Cheshire 3 for a standards-based, flexible information architecture
  - Use Multivalent for document processing and display
  - Use standards wherever possible to promote architecture independence.
- The use of the prototype system has two distinct phases. First, the TeraGrid is used to process the documents stored in the SRB and store the processed information back into SRB collections. In the second phase, the user performs a search on the processed data stored in the SRB, and then interacts with the original documents directly.

SLIDE 35

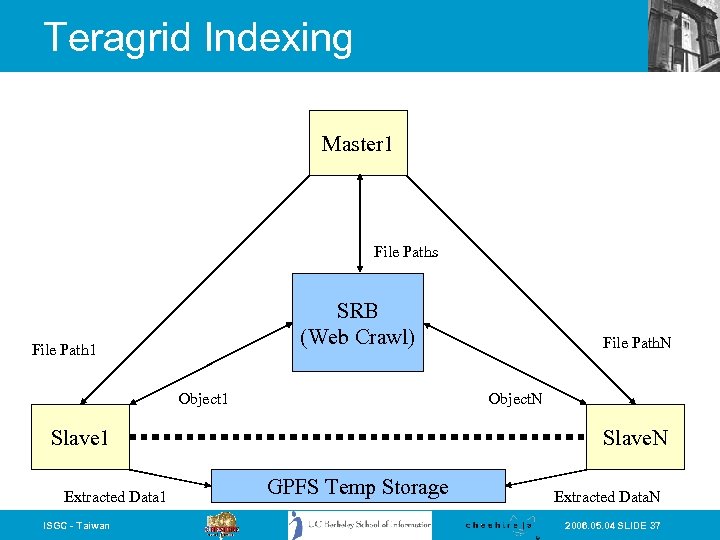

TeraGrid Indexing

- The following figure shows the initial data processing steps performed on the TeraGrid.
  - Once machines in the grid have been allocated for processing, each machine builds an instance of the Cheshire 3 architecture. The architecture is as we described previously.
  - One machine is designated as the 'Master' and controls the behaviour of all other machines, called 'Slaves'. For much larger scale datasets, multiple master nodes could be assigned.
  - The Master acquires the list of documents to process from the SRB and distributes these amongst the slaves according to the SRB URL scheme.

SLIDE 36

TeraGrid Indexing

[Figure: Master 1 obtains the file paths from the SRB (Web Crawl) and sends File Path 1 … File Path N to Slave 1 … Slave N. Each slave fetches its object (Object 1 … Object N) from the SRB and writes Extracted Data 1 … Extracted Data N to GPFS Temp Storage]

SLIDE 37

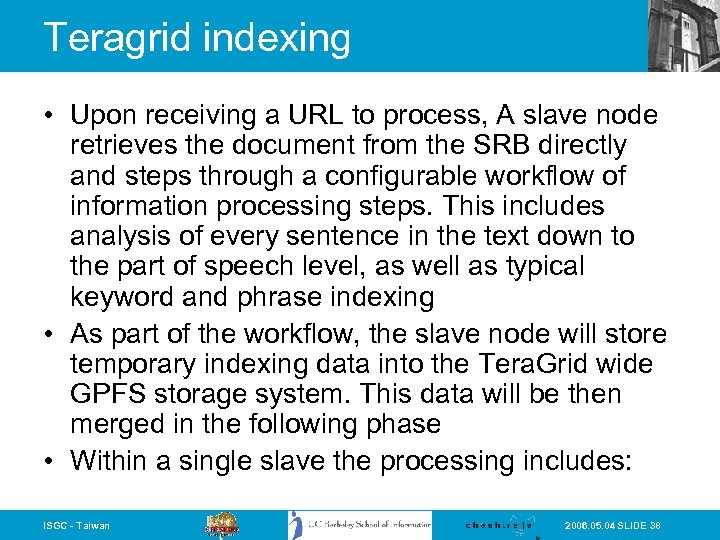

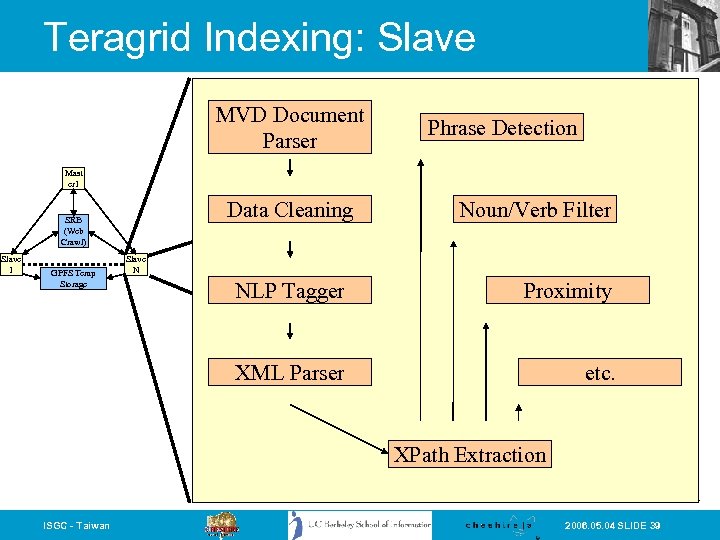

TeraGrid Indexing

- Upon receiving a URL to process, a slave node retrieves the document from the SRB directly and steps through a configurable workflow of information processing steps. This includes analysis of every sentence in the text down to the part-of-speech level, as well as typical keyword and phrase indexing
- As part of the workflow, the slave node will store temporary indexing data in the TeraGrid-wide GPFS storage system. This data will then be merged in the following phase
- Within a single slave the processing includes:

SLIDE 38

TeraGrid Indexing: Slave

[Figure: processing inside one slave, between the SRB (Web Crawl) and GPFS Temp Storage, under Master 1 alongside Slave 1 … Slave N. Steps shown: MVD Document Parser, Data Cleaning, XML Parser, XPath Extraction, NLP Tagger, Noun/Verb Filter, Phrase Detection, Proximity, etc.]

SLIDE 39
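The idea of a configurable workflow of processing steps inside a slave can be sketched as an ordered list of functions applied in turn. The three steps below are simplified, hypothetical stand-ins (whitespace cleaning and naive tokenisation) and do not reproduce the Multivalent parsing or NLP tagging steps named in the figure.

```python
from functools import reduce


def clean(text):
    """Collapse runs of whitespace (stand-in for data cleaning)."""
    return " ".join(text.split())


def tokenise(text):
    """Split on spaces, strip simple punctuation and lowercase each token."""
    return [tok.strip(".,;:!?").lower() for tok in text.split()]


def drop_empty(tokens):
    """Remove tokens that were punctuation only."""
    return [tok for tok in tokens if tok]


def run_workflow(steps, document):
    """Feed the output of each step into the next one."""
    return reduce(lambda data, step: step(data), steps, document)


# The step order is configuration: steps can be added, removed or reordered.
WORKFLOW = [clean, tokenise, drop_empty]
terms = run_workflow(WORKFLOW, "  The Shuttle,  Columbia. ")
```

Keeping the workflow as data rather than hard-coded calls is what lets each slave run whatever sequence the master's configuration names.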

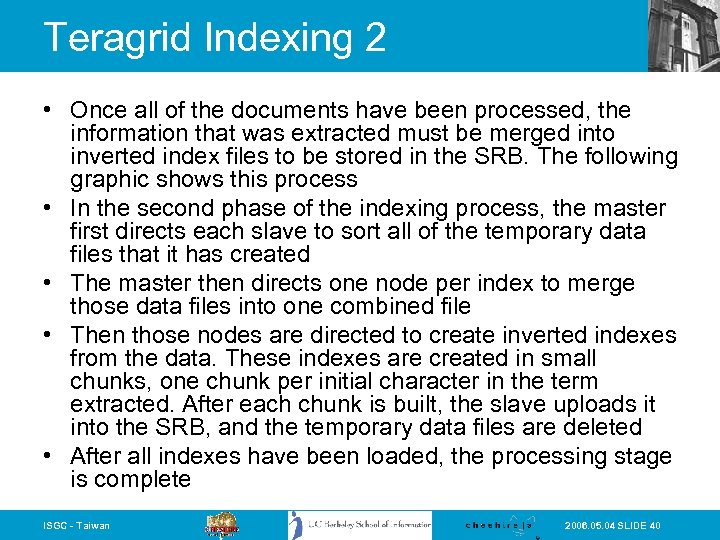

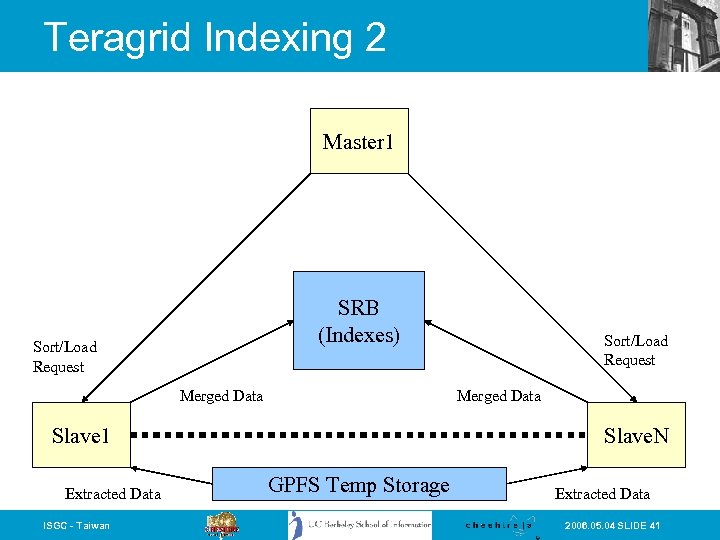

TeraGrid Indexing 2

- Once all of the documents have been processed, the information that was extracted must be merged into inverted index files to be stored in the SRB. The following graphic shows this process
- In the second phase of the indexing process, the master first directs each slave to sort all of the temporary data files that it has created
- The master then directs one node per index to merge those data files into one combined file
- Then those nodes are directed to create inverted indexes from the data. These indexes are created in small chunks, one chunk per initial character of the extracted term. After each chunk is built, the slave uploads it into the SRB, and the temporary data files are deleted
- After all indexes have been loaded, the processing stage is complete

SLIDE 40
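The sort, merge and chunk steps above can be sketched with in-memory lists standing in for the temporary GPFS files. The data layout, sorted `(term, doc_id)` pairs per slave, is an assumption made for illustration, as are the function names; uploading chunks to the SRB is omitted.

```python
import heapq
from collections import defaultdict
from itertools import groupby


def merge_sorted_runs(runs):
    """Merge per-slave sorted lists of (term, doc_id) pairs into one sorted stream."""
    return heapq.merge(*runs)


def build_chunks(postings):
    """Group a sorted (term, doc_id) stream into {initial_char: {term: [doc_ids]}}.

    Assumes every term is a non-empty string.
    """
    chunks = defaultdict(dict)
    for term, group in groupby(postings, key=lambda pair: pair[0]):
        doc_ids = sorted({doc_id for _, doc_id in group})
        chunks[term[0]][term] = doc_ids
    return dict(chunks)


# Each slave sorts its own extracted data first (phase 2, step 1).
slave_1 = sorted([("shuttle", "d1"), ("columbia", "d1")])
slave_2 = sorted([("shuttle", "d2"), ("nasa", "d2")])

# One node then merges the runs and builds one chunk per initial character.
index_chunks = build_chunks(merge_sorted_runs([slave_1, slave_2]))
```

Chunking by initial character means a later search for a term only needs to fetch the one chunk whose key matches the term's first character, rather than the whole index.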

TeraGrid Indexing 2

[Figure: Master 1 sends a Sort/Load Request to Slave 1 … Slave N. The slaves read their Extracted Data from GPFS Temp Storage, and the Merged Data is loaded into the SRB (Indexes)]

SLIDE 41

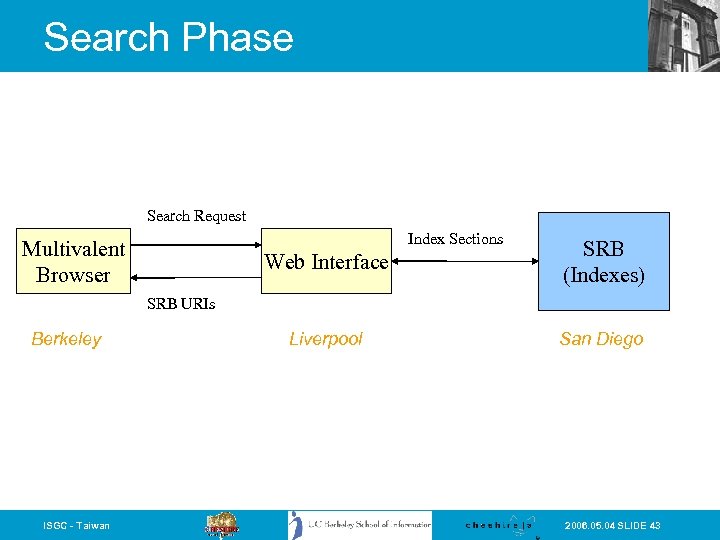

NARA Search Process

- In the Discovery phase, a user interacts with a front end to the system. This phase does not use the TeraGrid for processing, because it cannot be predicted when a user will want to search, and hence when use of the grid should be requested
- In the first step, the user performs a search via the interface. The front-end system retrieves the chunks of indexes necessary to fulfil the user's request from the SRB and returns a set of SRB URLs.
- The interaction between the Fab4 Browser and the front end is performed via the SRW/U information retrieval standard. The response is XML, which is rendered as HTML via XSLT stylesheets. By modifying the stylesheets, different user interfaces can quickly be generated without modifying the server. By using a standard protocol, we can ensure that the response can be interpreted by different clients or generated by different front-end architectures. To verify this, you may load the pages with a regular web browser and select 'View Source' to see the underlying XML.
- The search may sometimes be a little slower than expected if the index chunks needed have not been retrieved from the data grid. Please note that the data is not replicated, so the chunks are being transferred from San Diego to Liverpool in this particular setup.

SLIDE 42
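To make the SRW/U interaction concrete, the following sketch builds an SRU (the URL-based form of SRW) `searchRetrieve` request. The parameter names are those defined by SRU 1.1. The base URL is the prototype address listed later in this deck and may no longer be reachable, and the CQL query is a hypothetical example, so the code only constructs the URL and does not send it.

```python
from urllib.parse import urlencode


def sru_search_url(base_url, cql_query, maximum_records=10, start_record=1):
    """Build an SRU 1.1 searchRetrieve URL for a CQL query."""
    params = {
        "operation": "searchRetrieve",
        "version": "1.1",
        "query": cql_query,
        "startRecord": start_record,
        "maximumRecords": maximum_records,
    }
    return base_url + "?" + urlencode(params)


# Hypothetical query against the prototype endpoint named in this deck.
url = sru_search_url("http://srw.cheshire3.org/services/nara", "columbia and shuttle")
```

A server that implements SRU answers such a request with XML, which is why the same response can be styled with XSLT for a browser or consumed directly by another client.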

Search Phase

[Figure: the Multivalent Browser (Berkeley) sends a Search Request to the Web Interface (Liverpool), which fetches Index Sections from the SRB (Indexes) in San Diego and returns SRB URIs]

SLIDE 43

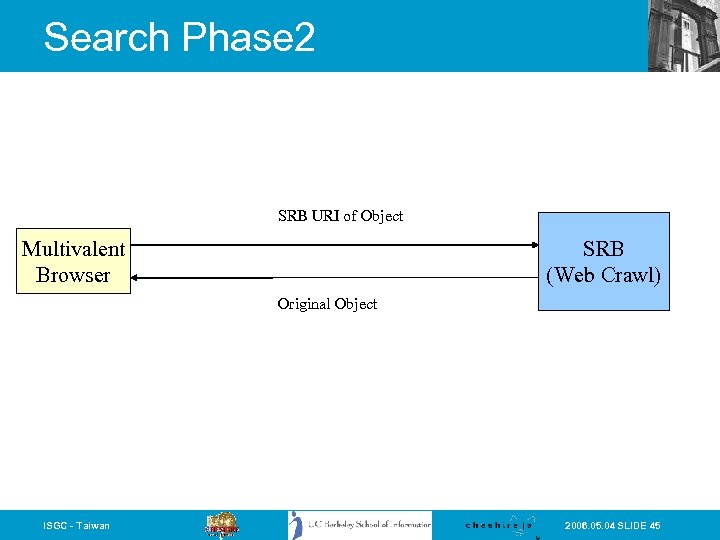

Search Process 2

- Once the SRB URLs have been returned to the users, they may interact directly with the original documents stored in the SRB. Fab4 connects to the SRB and retrieves the document for display, rather than going through a web proxy interface
- (Note that this display is not available in conventional browsers)

SLIDE 44

Search Phase 2

[Figure: the Multivalent Browser sends the SRB URI of an object to the SRB (Web Crawl) and receives the Original Object]

SLIDE 45

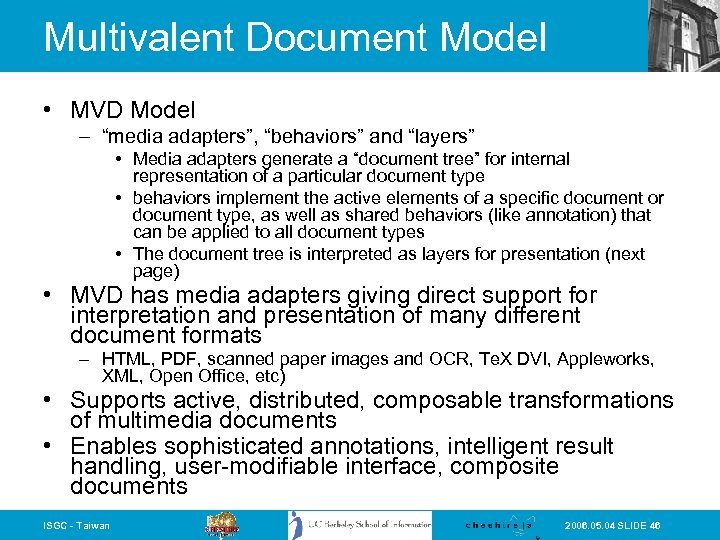

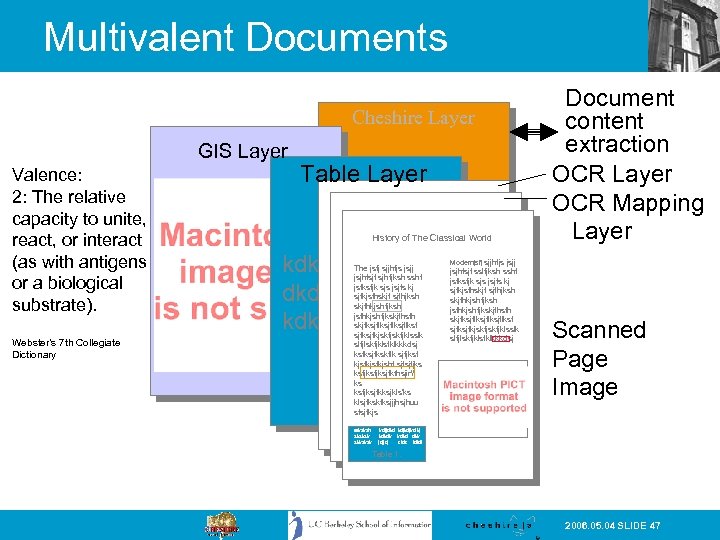

Multivalent Document Model

- MVD Model: “media adapters”, “behaviors” and “layers”
- Media adapters generate a “document tree” for internal representation of a particular document type
- Behaviors implement the active elements of a specific document or document type, as well as shared behaviors (like annotation) that can be applied to all document types
- The document tree is interpreted as layers for presentation (next page)
- MVD has media adapters giving direct support for interpretation and presentation of many different document formats (HTML, PDF, scanned paper images and OCR, TeX DVI, Appleworks, XML, OpenOffice, etc.)
- Supports active, distributed, composable transformations of multimedia documents
- Enables sophisticated annotations, intelligent result handling, user-modifiable interface, composite documents

SLIDE 46

Multivalent Documents

Valence: 2: The relative capacity to unite, react, or interact (as with antigens or a biological substrate). (Webster’s 7th Collegiate Dictionary)

[Figure: a sample document ("History of The Classical World", with Table 1) shown as stacked layers. Labels: Cheshire Layer, GIS Layer, Table Layer, OCR Layer, OCR Mapping Layer, Scanned Page Image, Document content extraction]

SLIDE 47

NARA Prototype

- Fab4 Multivalent browser available at http://bodoni.lib.liv.ac.uk:8080/fab4/
- NARA prototype at http://srw.cheshire3.org/services/nara

SLIDE 48

Summary

- Indexing and IR work very well in the Grid environment, with the expected scaling behavior for multiple processes
- We are working on other large-scale indexing projects (such as the NSDL) and will also be running evaluations of retrieval performance using IR test collections such as the TREC “Terabyte track” collection

SLIDE 49

Thank you!

Available via http://www.cheshire3.org

SLIDE 50
