
- Number of slides: 26

Grid3 and a few thoughts on federating grids

Rob Gardner, University of Chicago, rwg@hep.uchicago.edu

GGF PGM, May 7, 2004


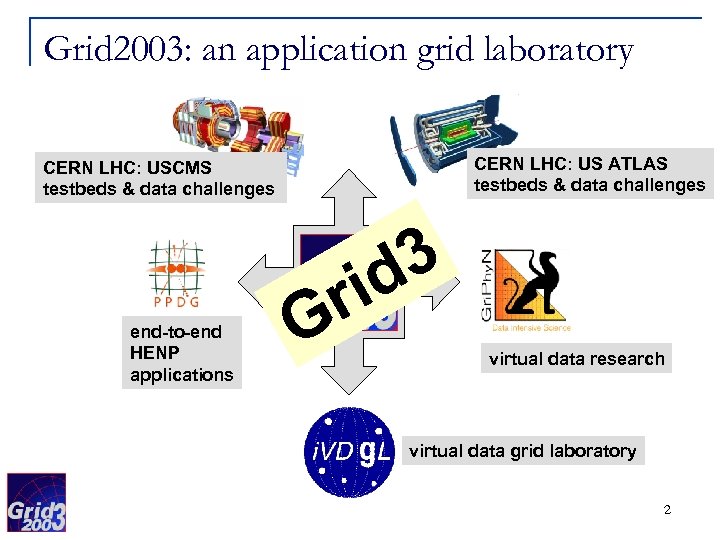

Grid2003: an application grid laboratory

- CERN LHC: US ATLAS testbeds & data challenges
- CERN LHC: USCMS testbeds & data challenges
- end-to-end HENP applications
- virtual data research
- virtual data grid laboratory


Grid3 at a Glance

- Grid environment built from core Globus and Condor middleware, as delivered through the Virtual Data Toolkit (VDT)
  - GRAM, GridFTP, MDS, RLS, VDS, VOMS, …
- …equipped with VO and multi-VO security, monitoring, and operations services
- …allowing federation with other Grids where possible, e.g. CERN LHC Computing Grid (LCG)
  - USATLAS: GriPhyN VDS execution on LCG sites
  - USCMS: storage element interoperability (SRM/dCache)


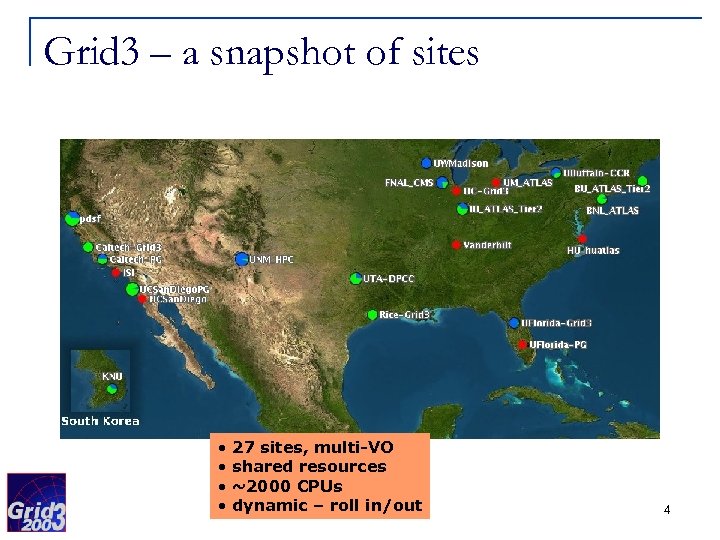

Grid3 – a snapshot of sites

- 27 sites, multi-VO shared resources
- ~2000 CPUs, dynamic – roll in/out


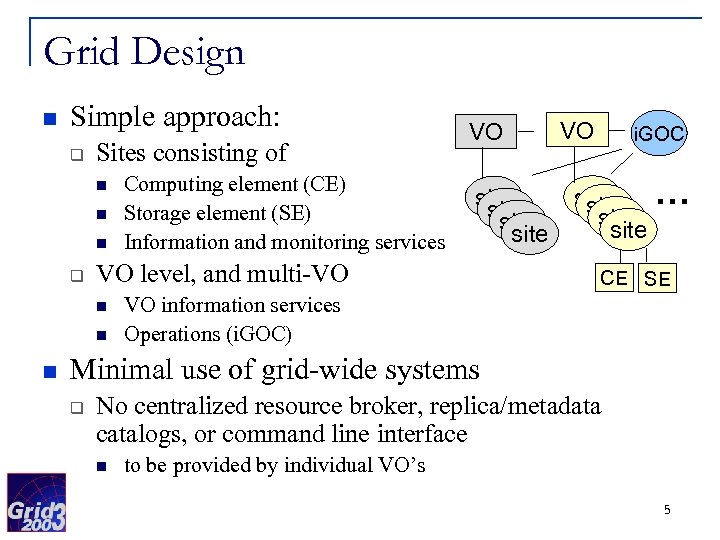

Grid Design

- Simple approach:
  - Sites consisting of
    - Computing element (CE)
    - Storage element (SE)
    - Information and monitoring services
  - VO level, and multi-VO
    - VO information services
    - Operations (iGOC)
- Minimal use of grid-wide systems
  - No centralized resource broker, replica/metadata catalogs, or command line interface
    - to be provided by individual VOs

[Diagram labels: VO, VO, iGOC; site … site; CE, SE]


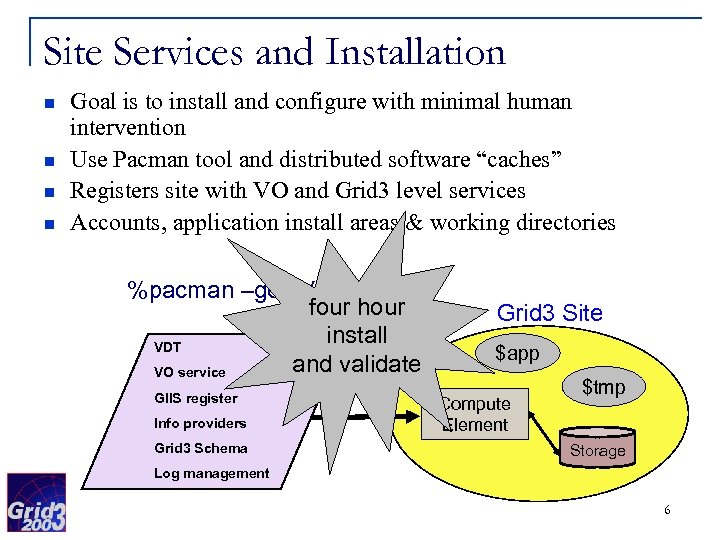

Site Services and Installation

- Goal is to install and configure with minimal human intervention
- Use Pacman tool and distributed software “caches”
- Registers site with VO and Grid3 level services
- Accounts, application install areas & working directories

% pacman -get iVDGL:Grid3

Four hour install and validate.

[Diagram labels: VDT; VO service; GIIS register; Info providers; Grid3 Schema; Log management; Grid3 Site: $app, Compute Element, $tmp, Storage]


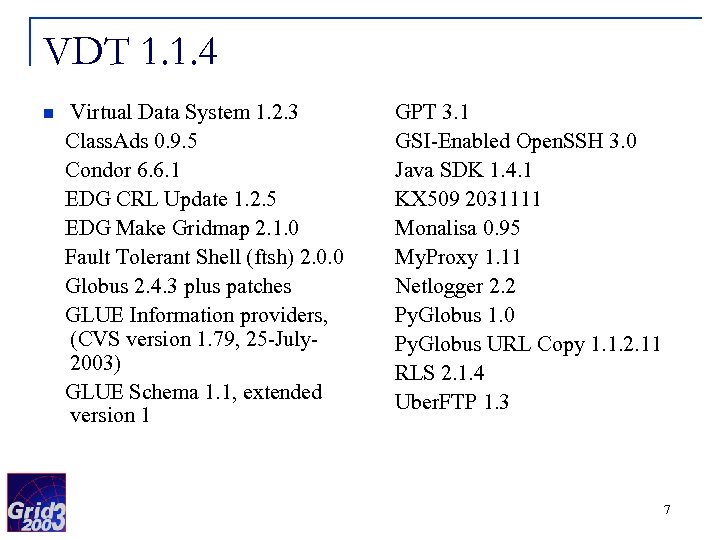

VDT 1.1.4

- Virtual Data System 1.2.3
- ClassAds 0.9.5
- Condor 6.6.1
- EDG CRL Update 1.2.5
- EDG Make Gridmap 2.1.0
- Fault Tolerant Shell (ftsh) 2.0.0
- Globus 2.4.3 plus patches
- GLUE Information providers (CVS version 1.79, 25-July 2003)
- GLUE Schema 1.1, extended version 1
- GPT 3.1
- GSI-Enabled OpenSSH 3.0
- Java SDK 1.4.1
- KX509 2031111
- MonALISA 0.95
- MyProxy 1.11
- Netlogger 2.2
- PyGlobus 1.0
- PyGlobus URL Copy 1.1.2.11
- RLS 2.1.4
- UberFTP 1.3


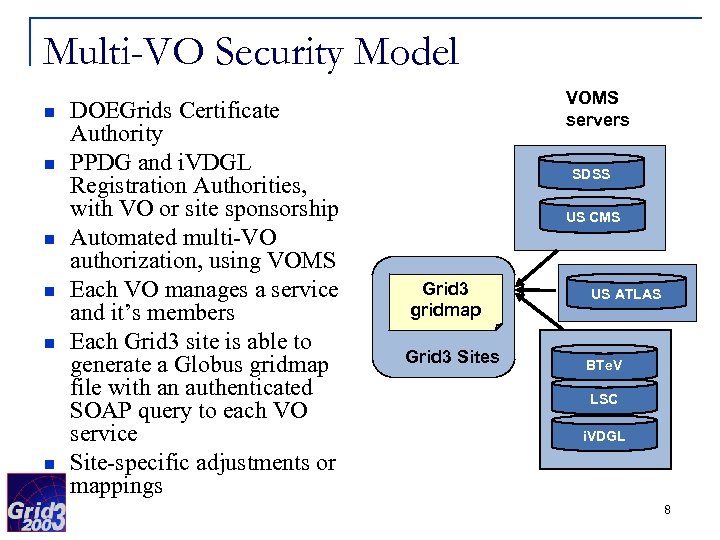

Multi-VO Security Model

- DOEGrids Certificate Authority
- PPDG and iVDGL Registration Authorities, with VO or site sponsorship
- Automated multi-VO authorization, using VOMS
- Each VO manages a service and its members
- Each Grid3 site is able to generate a Globus gridmap file with an authenticated SOAP query to each VO service
- Site-specific adjustments or mappings

[Diagram labels: VOMS servers (SDSS, US CMS, US ATLAS, BTeV, LSC, iVDGL); Grid3 gridmap; Grid3 Sites]


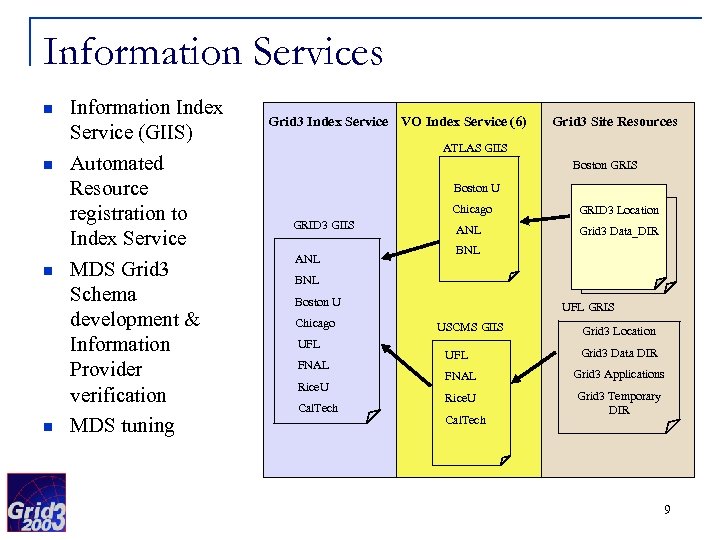
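
The gridmap generation step on the Multi-VO Security Model slide can be sketched roughly as follows. This is a minimal illustration and not the actual Grid3 tooling: the per-VO member lists are hard-coded stand-ins for the authenticated SOAP queries, and the function name, DNs, and account names are hypothetical. Only the output line format (a quoted certificate DN followed by a local account) follows the usual Globus grid-mapfile convention.

```python
def build_gridmap(vo_members, vo_accounts, overrides=None):
    """Return grid-mapfile text mapping certificate DNs to local accounts.

    vo_members:  {vo_name: [subject DN, ...]}; stands in for the result of
                 querying each VO service (hypothetical data, no SOAP here).
    vo_accounts: {vo_name: local account}; a mapping chosen by the site.
    overrides:   {DN: account or None}; site-specific adjustments, where
                 None removes the DN from the map at this site.
    """
    overrides = overrides or {}
    mapping = {}
    for vo in sorted(vo_members):
        for dn in vo_members[vo]:
            # If a DN appears in several VOs, the first VO in sorted order wins.
            mapping.setdefault(dn, vo_accounts[vo])
    for dn, account in overrides.items():
        if account is None:
            mapping.pop(dn, None)
        else:
            mapping[dn] = account
    # One line per user: "quoted DN" local_account
    return "".join(f'"{dn}" {acct}\n' for dn, acct in sorted(mapping.items()))


# Hypothetical example data
alice = "/DC=org/DC=example/OU=People/CN=Alice Example"
bob = "/DC=org/DC=example/OU=People/CN=Bob Example"
members = {"usatlas": [alice], "uscms": [bob]}
accounts = {"usatlas": "usatlas1", "uscms": "uscms01"}
print(build_gridmap(members, accounts), end="")
```

Keeping the site-specific adjustments as a separate final pass mirrors the slide's ordering: VO membership is fetched automatically, and the site has the last word.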

Information Services

- Information Index Service (GIIS)
- Automated resource registration to Index Service
- MDS Grid3 Schema development & Information Provider verification
- MDS tuning

[Diagram labels: Grid3 Index Service; VO Index Service (6); Grid3 Site Resources; GRID3 GIIS; ATLAS GIIS; USCMS GIIS; Boston GRIS; UFL GRIS; sites: ANL, BNL, Boston U, Chicago, UFL, FNAL, RiceU, CalTech; Grid3 Location, Grid3 Data DIR, Grid3 Applications, Grid3 Temporary DIR]


Monitoring Services and Tools

- Globus MDS
  - GLUE and Grid3 schema based information services
- Ganglia
  - cluster monitoring information such as CPU and network load, memory and disk usage
- MonALISA
  - Agent based monitoring gathering system
- ACDC Job Monitoring System
  - Grid submitted jobs to query the job managers and collect information
- Metrics Data Viewer (MDViewer)
  - Plots information collected by the different monitoring systems


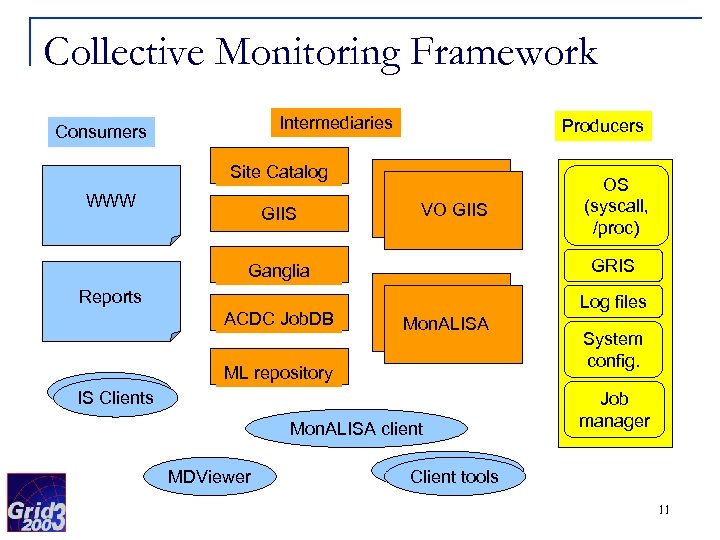

Collective Monitoring Framework

[Diagram with three groups: Producers, Intermediaries, Consumers. Labels: OS (syscall, /proc), Log files, System config., Job manager, GRIS, Ganglia, MonALISA, ACDC JobDB, GIIS, VO GIIS, ML repository, Site Catalog, WWW, Reports, IS Clients, MonALISA client, MDViewer, Client tools]


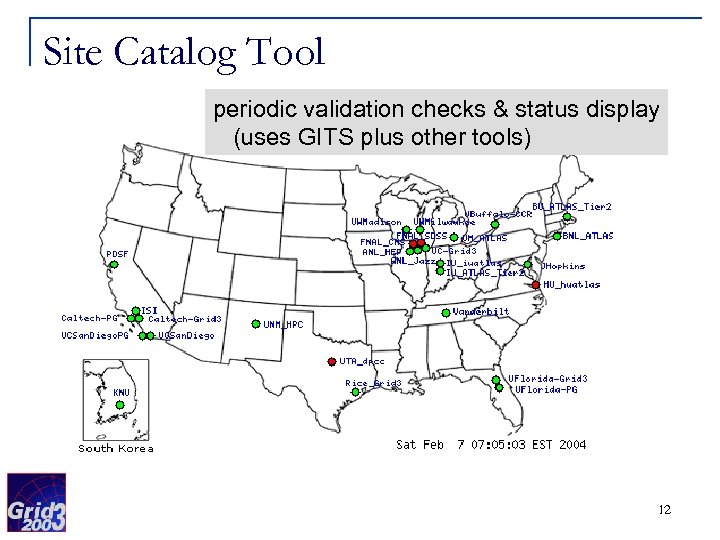

Site Catalog Tool

- periodic validation checks & status display (uses GITS plus other tools)


iVDGL Operations Center (iGOC)

- Co-located with Abilene NOC, hosted by Indiana University
- Hosts/manages multi-VO services:
  - top level Ganglia collectors
  - MonALISA web server and archival service
  - top level GIIS
  - VOMS servers for iVDGL, BTeV, SDSS
  - Site Catalog service
  - iVDGL Pacman caches
- Trouble ticket systems
  - phone (24 hr), web and email based collection and reporting system
  - Investigation and resolution of grid middleware problems at the level of 16-20 contacts per week
- Weekly operations meetings for troubleshooting


Scientific Applications

- Rule: install through grid jobs, encourage self-contained environments
- 11 applications
  - LHC (ATLAS, CMS)
  - Astrophysics (LIGO/SDSS)
  - Biochemical
    - Molecular X-ray diffraction
    - GADU/Gnare: compares protein sequences
  - Computer Science
    - Adaptive data placement and scheduling algorithms
    - Grid Exerciser
- Over 100 users authorized to run on Grid3


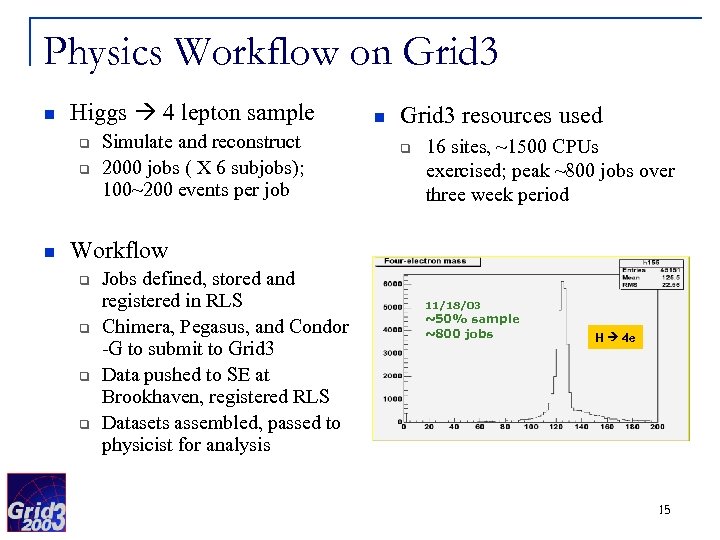

Physics Workflow on Grid3

- Higgs 4 lepton sample
  - Simulate and reconstruct
  - 2000 jobs (× 6 subjobs); 100~200 events per job
- Grid3 resources used
  - 16 sites, ~1500 CPUs exercised; peak ~800 jobs over three week period
- Workflow
  - Jobs defined, stored and registered in RLS
  - Chimera, Pegasus, and Condor-G to submit to Grid3
  - Data pushed to SE at Brookhaven, registered in RLS
  - Datasets assembled, passed to physicist for analysis

[Plot labels: 11/18/03; ~50% sample; ~800 jobs; H 4e]


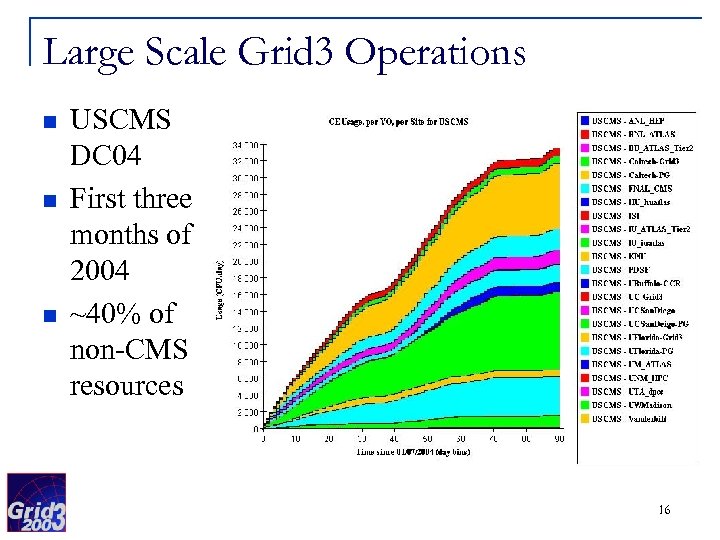
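
The job counts on the Physics Workflow slide imply the following totals. This is a back-of-the-envelope sketch that reads "100~200 events per job" as a range, which is an interpretation of the slide rather than something it states; the variable names are illustrative.

```python
jobs = 2000                    # jobs in the Higgs 4 lepton sample
subjobs_per_job = 6            # "× 6 subjobs"
events_per_job = (100, 200)    # assumed to be a (low, high) range

total_subjobs = jobs * subjobs_per_job
total_events = tuple(jobs * n for n in events_per_job)

print(f"subjobs: {total_subjobs}")
print(f"events: {total_events[0]} to {total_events[1]}")
```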

Large Scale Grid3 Operations

- USCMS DC04
- First three months of 2004
- ~40% of non-CMS resources


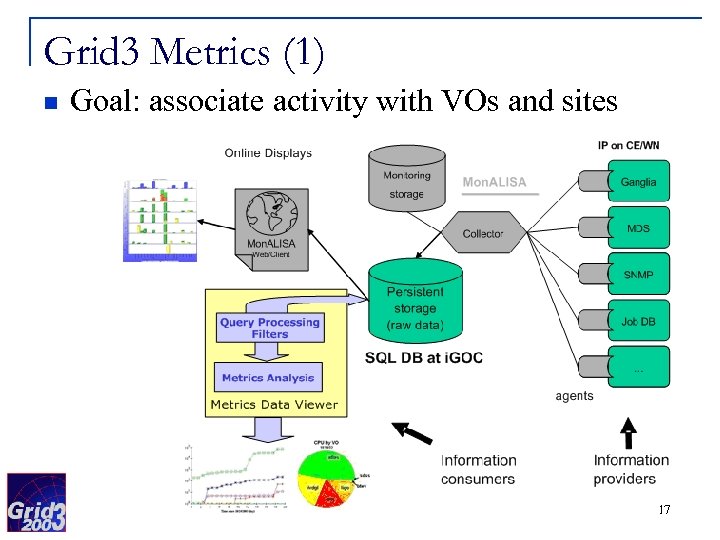

Grid3 Metrics (1)

- Goal: associate activity with VOs and sites


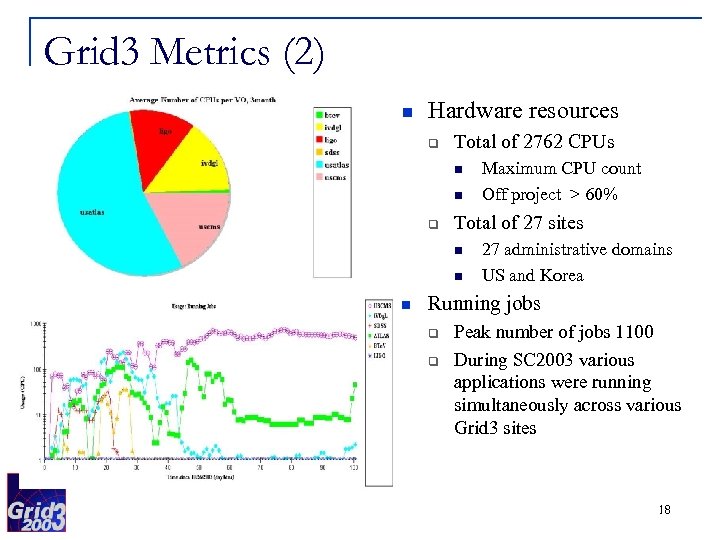
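
Associating activity with VOs and sites amounts to grouping job records by a (VO, site) key. A minimal sketch, assuming each record is a dictionary with `vo`, `site`, and `cpu_hours` fields; this record layout and the sample values are hypothetical and not taken from the Grid3 monitoring systems.

```python
from collections import defaultdict


def cpu_hours_by_vo_and_site(job_records):
    """Sum CPU hours per (VO, site) pair from an iterable of job records."""
    totals = defaultdict(float)
    for rec in job_records:
        totals[(rec["vo"], rec["site"])] += rec["cpu_hours"]
    return dict(totals)


# Hypothetical job records for illustration only
records = [
    {"vo": "usatlas", "site": "BNL", "cpu_hours": 2.0},
    {"vo": "usatlas", "site": "BNL", "cpu_hours": 3.0},
    {"vo": "uscms", "site": "FNAL", "cpu_hours": 4.0},
]
for (vo, site), hours in sorted(cpu_hours_by_vo_and_site(records).items()):
    print(vo, site, hours)
```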

Grid3 Metrics (2)

- Hardware resources
  - Total of 2762 CPUs
    - Maximum CPU count
    - Off project > 60%
  - Total of 27 sites
    - 27 administrative domains
    - US and Korea
- Running jobs
  - Peak number of jobs 1100
  - During SC2003 various applications were running simultaneously across various Grid3 sites


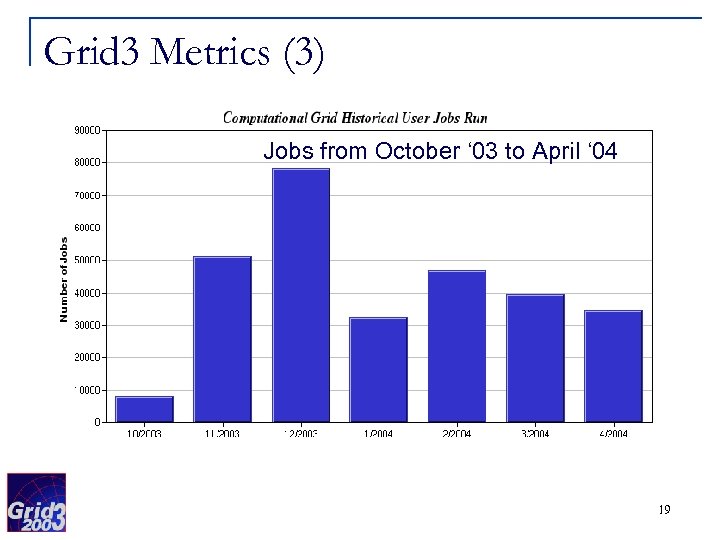

Grid3 Metrics (3)

- Jobs from October ’03 to April ’04


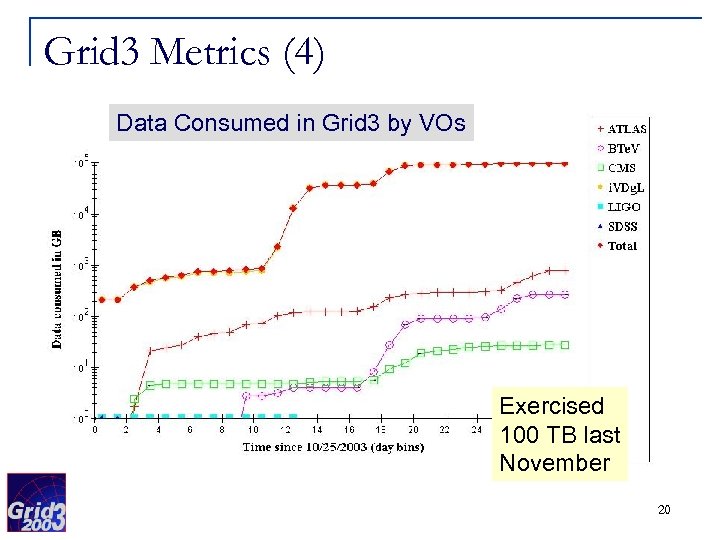

Grid3 Metrics (4)

- Data consumed in Grid3 by VOs
- Exercised 100 TB last November


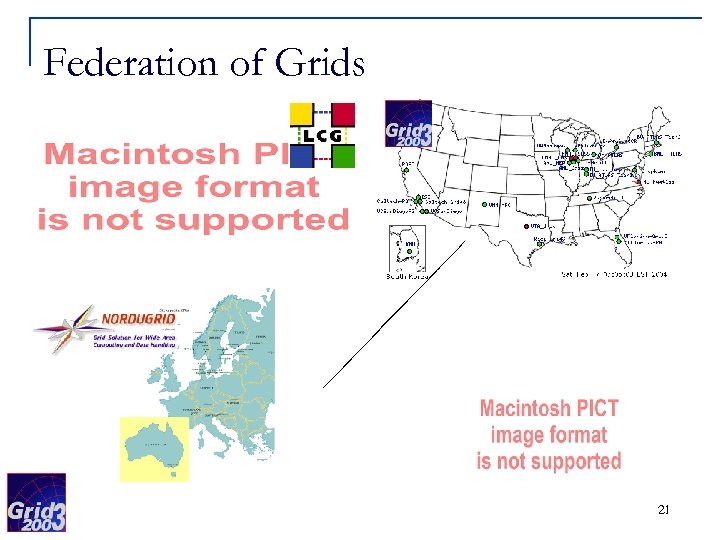

Federation of Grids


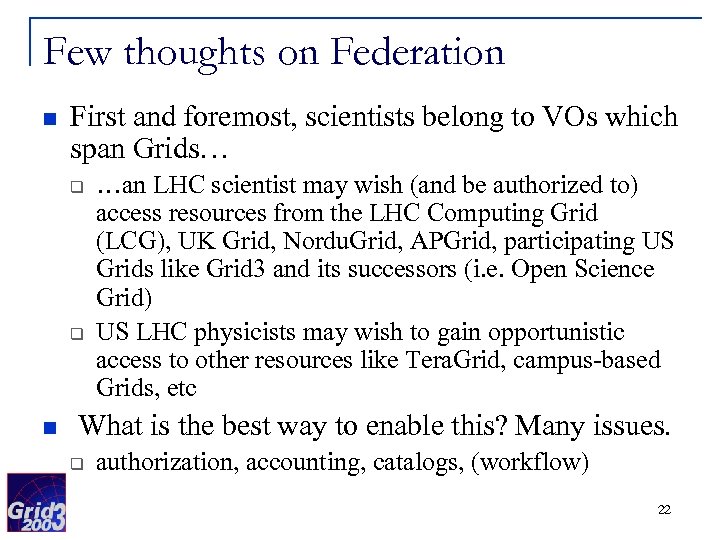

Few thoughts on Federation

- First and foremost, scientists belong to VOs which span Grids…
  - …an LHC scientist may wish (and be authorized) to access resources from the LHC Computing Grid (LCG), UK Grid, NorduGrid, APGrid, participating US Grids like Grid3 and its successors (i.e. Open Science Grid)
  - US LHC physicists may wish to gain opportunistic access to other resources like TeraGrid, campus-based Grids, etc.
- What is the best way to enable this? Many issues.
  - authorization, accounting, catalogs, (workflow)


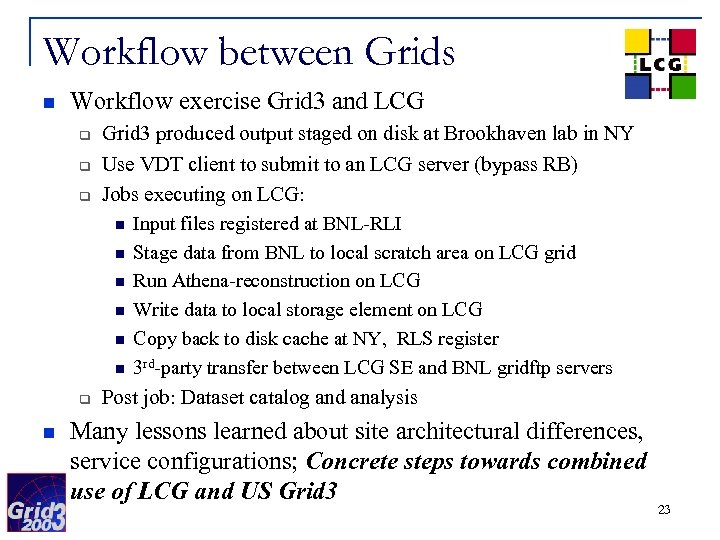

Workflow between Grids

- Workflow exercise Grid3 and LCG
  - Grid3 produced output staged on disk at Brookhaven lab in NY
  - Use VDT client to submit to an LCG server (bypass RB)
- Jobs executing on LCG:
  - Input files registered at BNL-RLI
  - Stage data from BNL to local scratch area on LCG grid
  - Run Athena-reconstruction on LCG
  - Write data to local storage element on LCG
  - Copy back to disk cache at NY, RLS register
  - 3rd-party transfer between LCG SE and BNL gridftp servers
- Post job: Dataset catalog and analysis
- Many lessons learned about site architectural differences, service configurations; concrete steps towards combined use of LCG and US Grid3


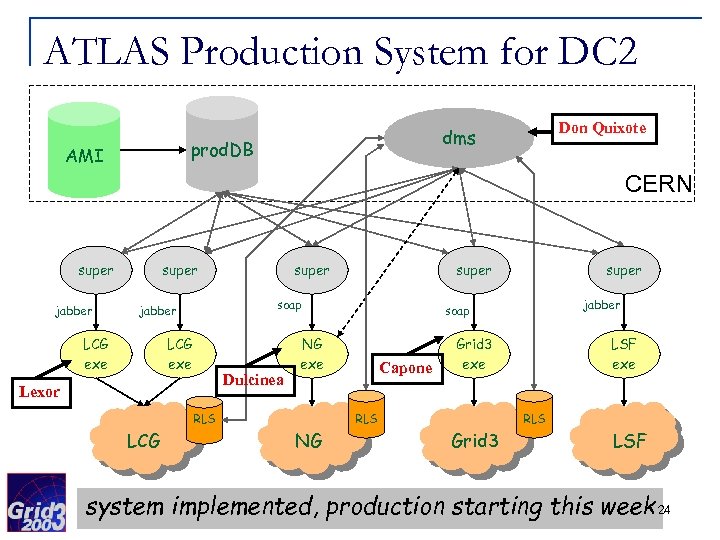
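
The copy-back step in the inter-grid workflow relies on a third-party GridFTP transfer, in which a client directs two servers to move data between themselves. A minimal sketch of how such a command line could be assembled, assuming the `globus-url-copy` client from the Globus Toolkit, which takes a source URL and a destination URL. The host names and paths are placeholders, not the actual LCG or BNL endpoints, and the command is only built here, not executed.

```python
def third_party_copy_cmd(src_host, src_path, dst_host, dst_path):
    """Build a globus-url-copy argv for a server-to-server GridFTP transfer.

    Both URLs use the gsiftp:// scheme, so the data flows directly
    between the two servers rather than through the submitting client.
    Paths are expected to be absolute (start with "/").
    """
    src = f"gsiftp://{src_host}{src_path}"
    dst = f"gsiftp://{dst_host}{dst_path}"
    return ["globus-url-copy", src, dst]


# Placeholder endpoints: an LCG storage element and a disk cache at BNL
cmd = third_party_copy_cmd(
    "se.lcg.example.org", "/data/reco/output.root",
    "gridftp.bnl.example.org", "/cache/reco/output.root",
)
print(" ".join(cmd))
```

Returning an argument list rather than a shell string makes it straightforward to hand the command to `subprocess.run` later without quoting issues.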

ATLAS Production System for DC2

[Diagram labels: prodDB (CERN); AMI; Don Quixote (dms); supervisors ("super") linked by jabber and soap; executors: Lexor, Dulcinea, Capone, LCG exe, NG exe, Grid3 exe, LSF exe; RLS; resources: LCG, NG, Grid3, LSF]

- System implemented, production starting this week


Conclusions

- Grid2003 project created a persistent, production scale grid infrastructure, Grid3
- Continuous use since 10/03
- Grid3 will undergo adiabatic upgrades, and continue operations for 2004 (Grid3+)
- Outlook:
  - Follow-on project will involve more sites and VOs
  - Larger “consortia” forming, Open Science Grid (OSG)
  - New projects will be defined in this larger framework


Acknowledgements

- the entire Grid2003 team which did all the work!
  - site administrators
  - application developers
  - iGOC team
  - coordinators and project teams
- Slides I’ve taken from presentations by:
  - Leigh Grundhoefer (Indiana, iVDGL)
  - Ian Fisk (Fermilab, USCMS)
  - Ruth Pordes (Fermilab, PPDG)
  - Jorge Rodriguez (Florida, USCMS/iVDGL)
