8ef08f8bad9965781df795b205e849aa.ppt

- Number of slides: 51

GriPhyN: Grid Physics Network and iVDGL: International Virtual Data Grid Laboratory
SCHOOL OF INFORMATION UNIVERSITY OF MICHIGAN

Collaboratory Basics
• Two NSF-funded Grid projects in HENP (high energy and nuclear physics) and computer science
  – MPS and CISE have oversight
• GriPhyN and iVDGL are too closely related to discuss one without discussing the other
  – One is CS research and test application, the other is to build an international scale facility to do these tests, and to address other goals as well
  – Share vision, personnel and components
• These two collaboratories are part of a larger effort to develop the components and infrastructure for supporting data intensive science

Some Science Drivers
• Computation is becoming an increasingly important tool of scientific discovery
  – Computationally intense analyses
  – Global collaborations
  – Large datasets
• The increasing importance of computation in science is more pronounced in some fields
  – Complex (e.g. climate modeling) and high volume (HEP) simulations
  – Detailed rendering (e.g. biomedical informatics)
  – Data intensive science (e.g. astronomy and physics)
• GriPhyN and iVDGL were founded to provide the models and software for the data management infrastructure for four large projects

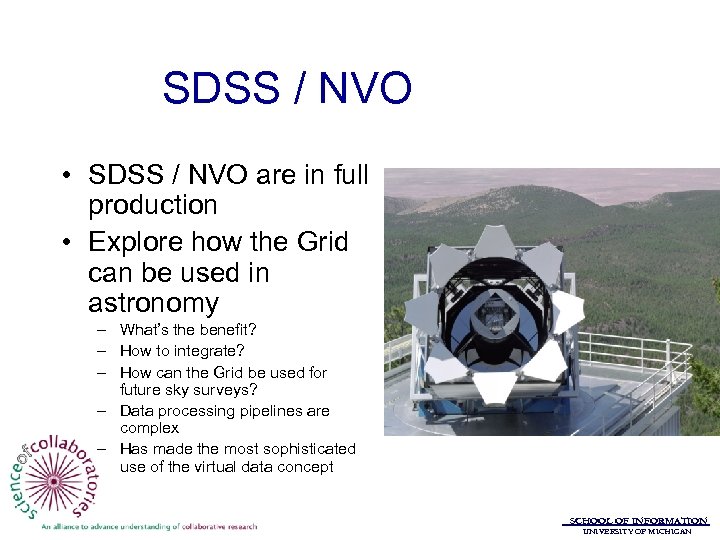

SDSS / NVO
• SDSS / NVO are in full production
• Explore how the Grid can be used in astronomy
  – What’s the benefit?
  – How to integrate?
  – How can the Grid be used for future sky surveys?
  – Data processing pipelines are complex
  – Has made the most sophisticated use of the virtual data concept

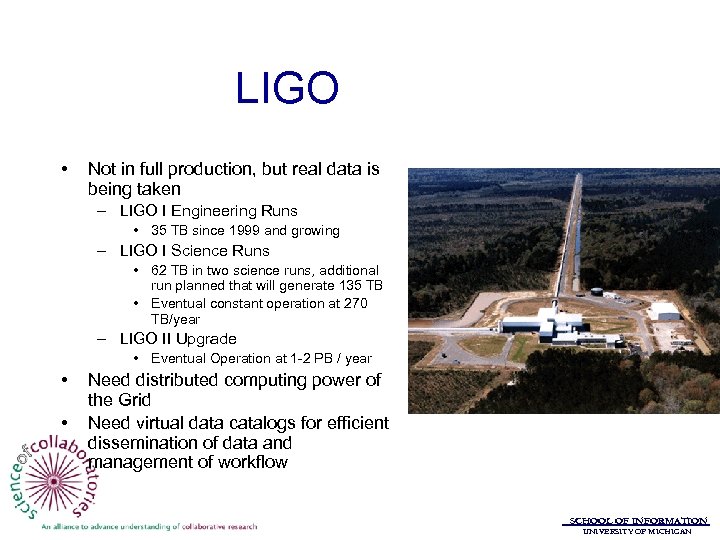

LIGO
• Not in full production, but real data is being taken
  – LIGO I Engineering Runs
    • 35 TB since 1999 and growing
  – LIGO I Science Runs
    • 62 TB in two science runs, additional run planned that will generate 135 TB
    • Eventual constant operation at 270 TB/year
  – LIGO II Upgrade
    • Eventual operation at 1-2 PB/year
• Need distributed computing power of the Grid
• Need virtual data catalogs for efficient dissemination of data and management of workflow
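To put the yearly volumes above in perspective, they can be converted to an average sustained data rate. The sketch below is only back-of-the-envelope arithmetic: it assumes decimal units (1 TB = 10^12 bytes, 1 PB = 1000 TB) and data spread evenly over a 365-day year, neither of which the slide states. The function name is illustrative.

```python
# Assumption: decimal units and a perfectly even spread over a 365-day year.
SECONDS_PER_YEAR = 365 * 24 * 3600


def sustained_mb_per_s(tb_per_year: float) -> float:
    """Average rate in MB/s needed to move tb_per_year terabytes in one year."""
    bytes_per_year = tb_per_year * 1e12
    return bytes_per_year / SECONDS_PER_YEAR / 1e6


if __name__ == "__main__":
    for label, tb in [("LIGO I, 270 TB/year", 270),
                      ("LIGO II, 1 PB/year", 1000),
                      ("LIGO II, 2 PB/year", 2000)]:
        print(f"{label}: {sustained_mb_per_s(tb):.1f} MB/s sustained")
```

Under these assumptions 270 TB/year is roughly 8.6 MB/s around the clock, and 1-2 PB/year is roughly 32-63 MB/s, before any replication to other sites.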

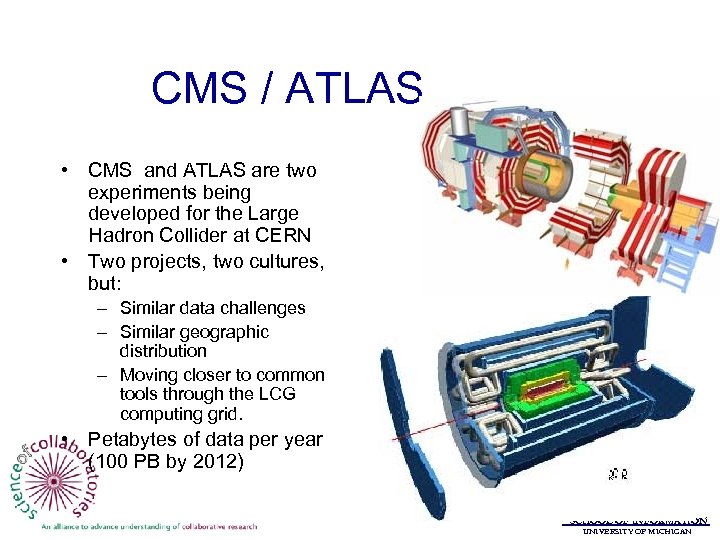

CMS / ATLAS
• CMS and ATLAS are two experiments being developed for the Large Hadron Collider at CERN
• Two projects, two cultures, but:
  – Similar data challenges
  – Similar geographic distribution
  – Moving closer to common tools through the LCG computing grid
• Petabytes of data per year (100 PB by 2012)

Function Types
• GriPhyN
  – Distributed Research Center
• iVDGL
  – Community Data System

GriPhyN

GriPhyN Funding
• Funded in 2000 through NSF ITR program
• $11.9M + $1.6M matching

GriPhyN Project Team
• Led by U. Florida and U. Chicago
  – PDs Paul Avery (UF) and Ian Foster (UC)
• 22 participant institutions
  – 13 funded
  – 9 unfunded
• Roughly 82 people involved
• 2/3 of activity computer science, 1/3 physics

• Funded Institutions
  – U. Florida
  – U. Chicago
  – Caltech
  – U. Wisconsin - Madison
  – USC / ISI
  – Indiana U.
  – Johns Hopkins U.
  – Texas A & M
  – UT Brownsville
  – UC Berkeley
  – U. Wisconsin - Milwaukee
  – SDSC
• Unfunded Institutions
  – Argonne NL
  – Fermi NAL
  – Brookhaven NL
  – UC San Diego
  – U. Pennsylvania
  – U. Illinois - Chicago
  – Stanford
  – Harvard
  – Boston U.
  – Lawrence Berkeley Lab

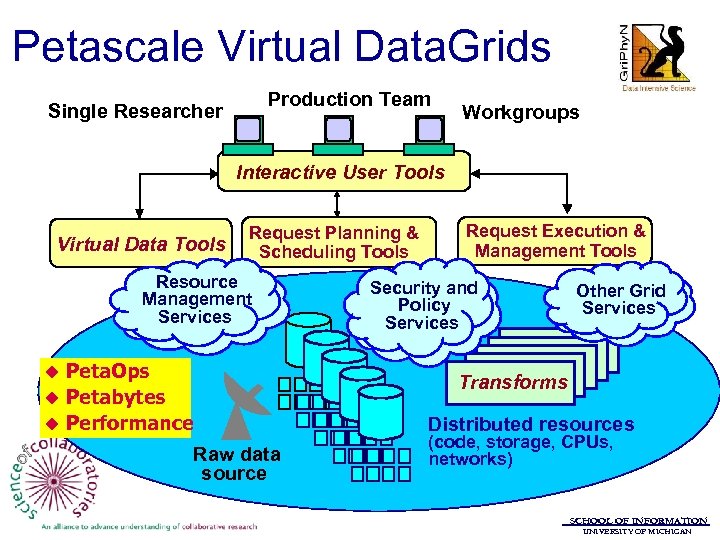

Technology
• GriPhyN’s science drivers demand timely access to very large datasets and the computer cycles and information management infrastructure needed to manipulate and transform those datasets in a meaningful way
• Data Grids are an approach to data management and resource sharing in environments where datasets are very large
  – Policy-driven resource sharing, distributed storage, distributed computation, replication and provenance tracking
• GriPhyN and iVDGL aim to enable petascale virtual data grids

Petascale Virtual DataGrids
[Architecture diagram; labels as extracted, grouped for readability]
• Users: Production Team, Single Researcher, Workgroups
• Tools: Interactive User Tools; Virtual Data Tools; Request Planning & Scheduling Tools; Request Execution & Management Tools
• Services: Resource Management Services; Security and Policy Services; Other Grid Services
• Resources: Transforms; Raw data source; Distributed resources (code, storage, CPUs, networks)
• Targets: PetaOps, Petabytes, Performance

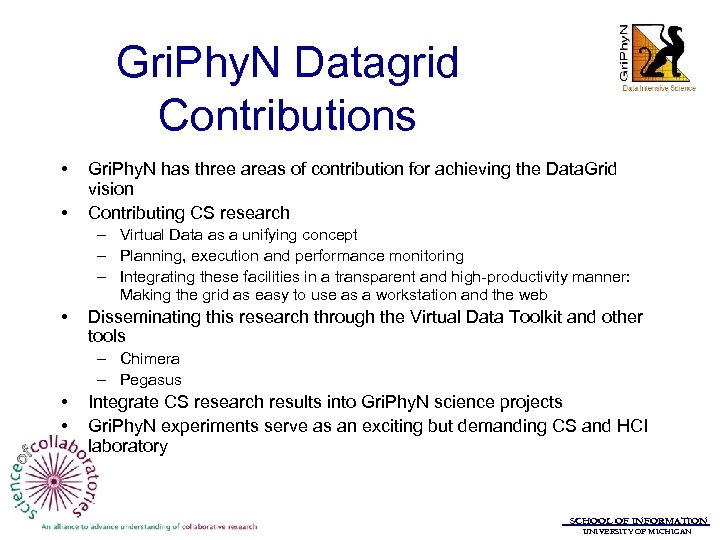

GriPhyN DataGrid Contributions
• GriPhyN has three areas of contribution for achieving the DataGrid vision
• Contributing CS research
  – Virtual Data as a unifying concept
  – Planning, execution and performance monitoring
  – Integrating these facilities in a transparent and high-productivity manner: making the grid as easy to use as a workstation and the web
• Disseminating this research through the Virtual Data Toolkit and other tools
  – Chimera
  – Pegasus
• Integrate CS research results into GriPhyN science projects
• GriPhyN experiments serve as an exciting but demanding CS and HCI laboratory

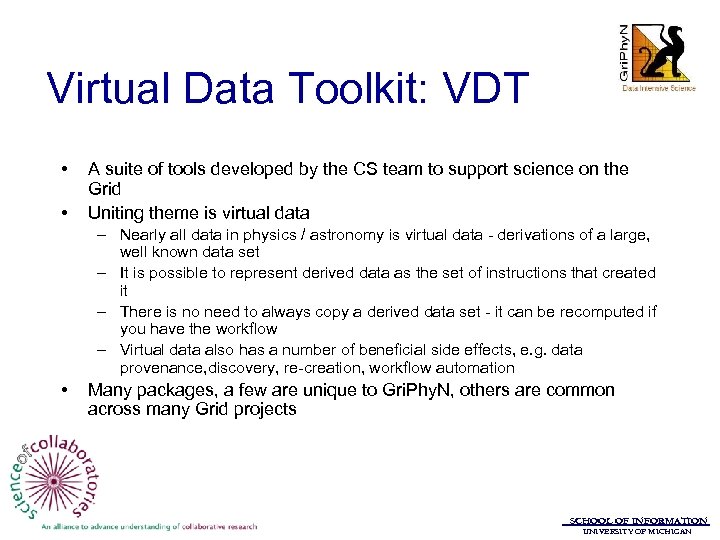

Virtual Data Toolkit: VDT
• A suite of tools developed by the CS team to support science on the Grid
• Uniting theme is virtual data
  – Nearly all data in physics / astronomy is virtual data: derivations of a large, well known data set
  – It is possible to represent derived data as the set of instructions that created it
  – There is no need to always copy a derived data set: it can be recomputed if you have the workflow
  – Virtual data also has a number of beneficial side effects, e.g. data provenance, discovery, re-creation, workflow automation
• Many packages; a few are unique to GriPhyN, others are common across many Grid projects
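The virtual data idea above (a derived dataset is represented by the instructions that created it and can be recomputed on demand) can be illustrated with a small sketch. This is a toy in-memory model, not the VDT or Chimera API: the class `VirtualDataCatalog`, its methods, and the dataset names are all hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Tuple


@dataclass(frozen=True)
class Derivation:
    """Recipe for one derived dataset: a transformation plus named inputs."""
    transform: Callable[..., object]
    inputs: Tuple[str, ...]


class VirtualDataCatalog:
    """Hypothetical toy catalog: stores raw data and recipes, not derived copies."""

    def __init__(self) -> None:
        self.raw: Dict[str, object] = {}
        self.recipes: Dict[str, Derivation] = {}
        self.cache: Dict[str, object] = {}
        self.runs = 0  # number of transformations actually executed

    def add_raw(self, name: str, value: object) -> None:
        self.raw[name] = value

    def define(self, name: str, transform: Callable[..., object], *inputs: str) -> None:
        self.recipes[name] = Derivation(transform, tuple(inputs))

    def materialize(self, name: str) -> object:
        """Return the dataset, recomputing it from its recipe if no copy exists."""
        if name in self.raw:
            return self.raw[name]
        if name in self.cache:
            return self.cache[name]
        recipe = self.recipes[name]
        args = [self.materialize(dep) for dep in recipe.inputs]
        self.runs += 1
        self.cache[name] = recipe.transform(*args)
        return self.cache[name]

    def discard(self, name: str) -> None:
        """Drop a materialized copy; the recipe stays, so nothing is lost."""
        self.cache.pop(name, None)


catalog = VirtualDataCatalog()
catalog.add_raw("survey", [3, 1, 4, 1, 5, 9, 2, 6])
catalog.define("bright", lambda xs: [x for x in xs if x >= 4], "survey")
catalog.define("bright_count", len, "bright")
print(catalog.materialize("bright_count"))
```

Because the catalog keeps the recipe for `bright` and `bright_count`, their materialized copies can be discarded and later recreated; the same recipes also serve as a provenance record of how each result was produced.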

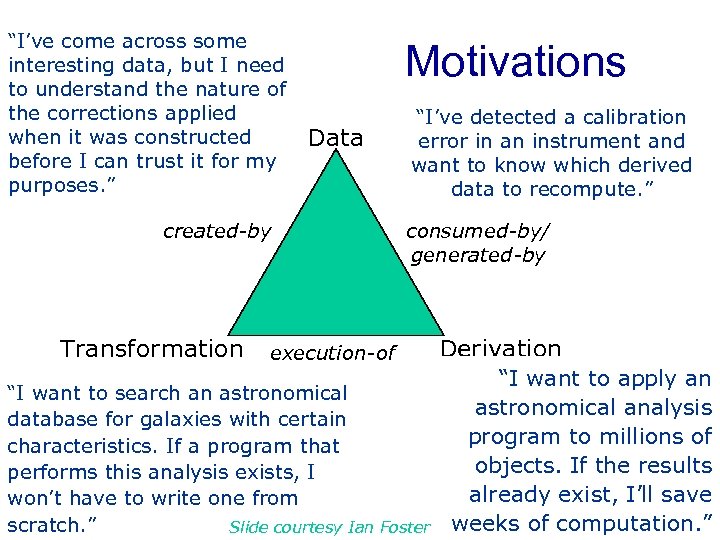

Motivations
• “I’ve come across some interesting data, but I need to understand the nature of the corrections applied when it was constructed before I can trust it for my purposes.”
• “I’ve detected a calibration error in an instrument and want to know which derived data to recompute.”
• “I want to search an astronomical database for galaxies with certain characteristics. If a program that performs this analysis exists, I won’t have to write one from scratch.”
• “I want to apply an astronomical analysis program to millions of objects. If the results already exist, I’ll save weeks of computation.”
[Diagram: Data, Transformation and Derivation, linked by the relations created-by, consumed-by/generated-by and execution-of]
Slide courtesy Ian Foster
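The calibration-error question above ("which derived data to recompute?") amounts to a reachability query over recorded provenance. The following is a minimal sketch under the assumption that provenance is available as a mapping from each derived dataset to the inputs it consumed; the function name and the dataset names are hypothetical and this is not Chimera's query interface.

```python
from collections import defaultdict, deque
from typing import Dict, List, Set


def downstream(derivations: Dict[str, List[str]], bad_input: str) -> Set[str]:
    """Return every dataset derived, directly or transitively, from bad_input.

    derivations maps each derived dataset name to the list of inputs it consumed.
    """
    # Invert the "consumed" relation: input -> datasets generated from it.
    consumers: Dict[str, Set[str]] = defaultdict(set)
    for output, inputs in derivations.items():
        for name in inputs:
            consumers[name].add(output)

    stale: Set[str] = set()
    queue = deque([bad_input])
    while queue:  # breadth-first walk along the provenance edges
        current = queue.popleft()
        for output in consumers[current]:
            if output not in stale:
                stale.add(output)
                queue.append(output)
    return stale


provenance = {
    "calibrated": ["raw", "calib_table"],
    "clusters": ["calibrated"],
    "histogram": ["clusters"],
    "sky_map": ["raw"],
}
print(sorted(downstream(provenance, "calib_table")))
```

Here a faulty `calib_table` invalidates `calibrated`, `clusters` and `histogram`, while `sky_map`, which was derived from the raw data alone, does not need to be recomputed.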

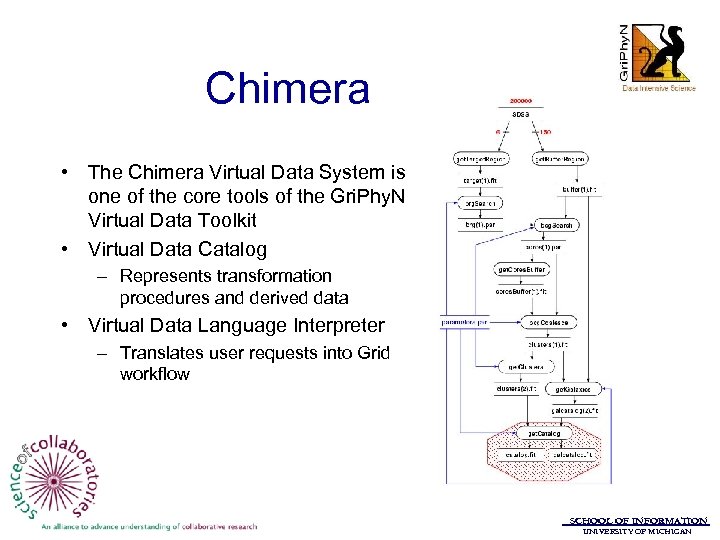

Chimera
• The Chimera Virtual Data System is one of the core tools of the GriPhyN Virtual Data Toolkit
• Virtual Data Catalog
  – Represents transformation procedures and derived data
• Virtual Data Language Interpreter
  – Translates user requests into Grid workflow

Pegasus
• Planning Execution in Grids
• Tool for mapping complex workflows onto the Grid
• Converts abstract Chimera workflow into a concrete workflow, which is sent to DAGMan for execution
  – DAGMan is the Condor meta-scheduler
  – Determines sites and data transfers
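The step from an abstract workflow to a concrete one (choosing sites and adding data transfers) can be sketched as follows. This is a simplified illustration, not Pegasus's algorithm or API: the function `plan`, the greedy "run where most inputs already are" rule, and the site and file names are all assumptions, and the result is a flat ordered list rather than the DAG a real planner would hand to DAGMan.

```python
from typing import Dict, List, Set, Tuple

AbstractJob = Tuple[str, str, List[str], str]  # (job name, transformation, inputs, output)


def plan(jobs: List[AbstractJob],
         replicas: Dict[str, str],
         sites: Dict[str, Set[str]]) -> List[tuple]:
    """Map abstract jobs (given in dependency order) to sites and insert transfers.

    replicas: file name -> site currently holding a copy
    sites:    site name -> transformations installed there
    """
    location = dict(replicas)
    steps: List[tuple] = []
    for name, transformation, inputs, output in jobs:
        candidates = sorted(s for s, installed in sites.items()
                            if transformation in installed)
        if not candidates:
            raise ValueError(f"no site can run {transformation!r}")
        for f in inputs:
            if f not in location:
                raise KeyError(f"no known replica of {f!r}")
        # Greedy assumption: prefer the site already holding the most inputs.
        site = max(candidates,
                   key=lambda s: sum(location[f] == s for f in inputs))
        for f in inputs:
            if location[f] != site:
                steps.append(("transfer", f, location[f], site))
                location[f] = site
        steps.append(("run", name, site))
        location[output] = site
    return steps


abstract = [
    ("j1", "extract", ["raw.dat"], "fields.dat"),
    ("j2", "cluster", ["fields.dat"], "clusters.dat"),
]
concrete = plan(abstract,
                replicas={"raw.dat": "fnal"},
                sites={"fnal": {"extract"}, "uchicago": {"extract", "cluster"}})
for step in concrete:
    print(step)
```

The abstract workflow names only transformations and logical files; the concrete plan adds the two things the slide attributes to planning, namely a site for each job and the transfers needed to stage its inputs.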

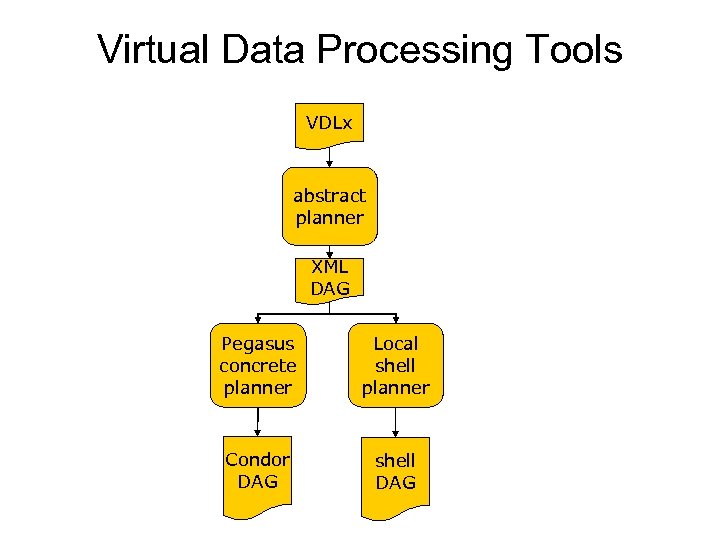

Virtual Data Processing Tools
[Flow diagram; labels as extracted: VDLx; abstract planner; XML DAG; Pegasus concrete planner; Local shell planner; Condor DAG; shell DAG]

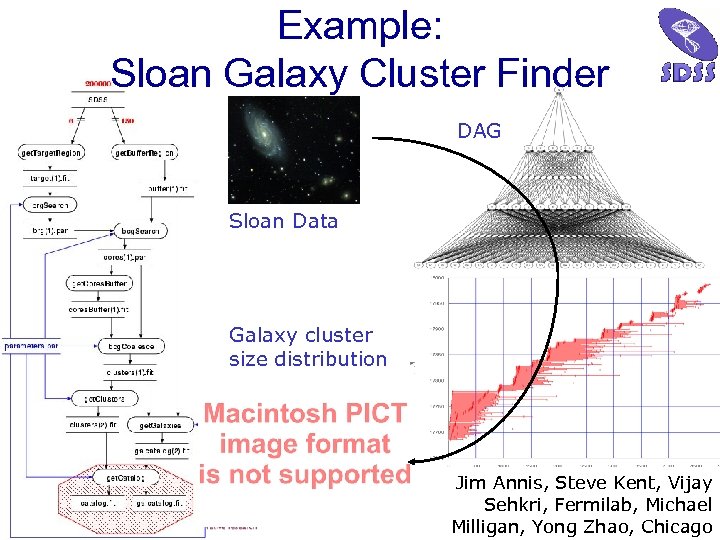

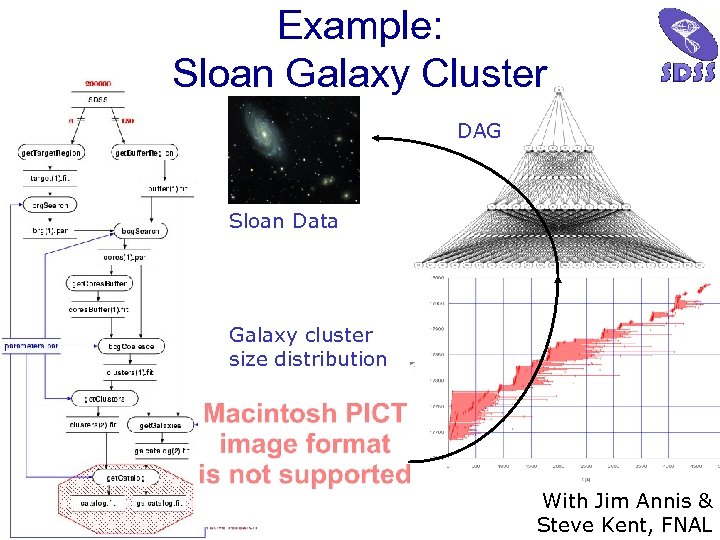

Example: Sloan Galaxy Cluster Finder
[Figure labels: DAG; Sloan Data; Galaxy cluster size distribution]
Jim Annis, Steve Kent, Vijay Sekhri, Fermilab; Michael Milligan, Yong Zhao, Chicago

Example: Sloan Galaxy Cluster
[Figure labels: DAG; Sloan Data; Galaxy cluster size distribution]
With Jim Annis & Steve Kent, FNAL

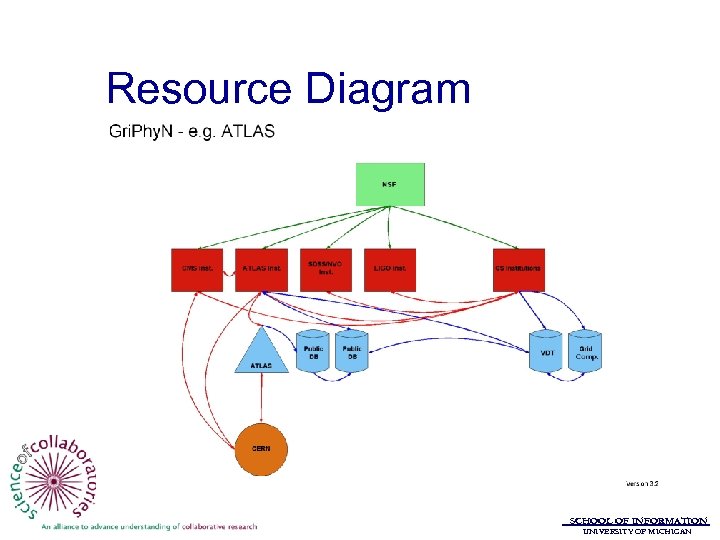

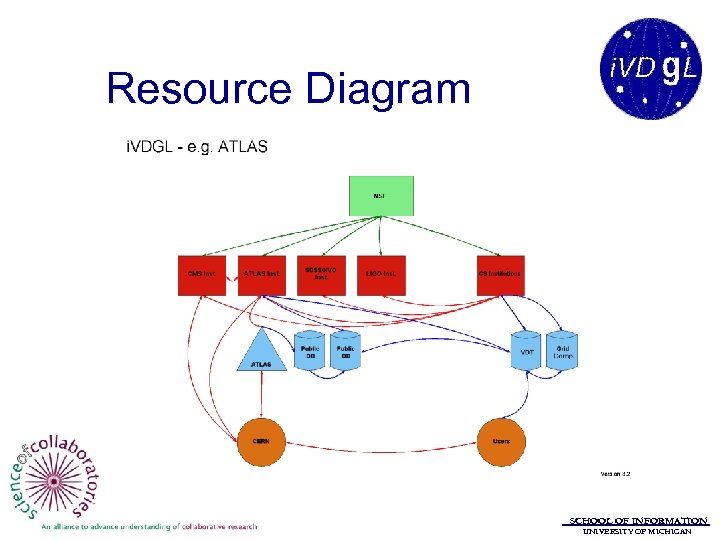

Resource Diagram

International Virtual Data Grid Laboratory: iVDGL

Some Context
• There is much more to the DataGrid world than GriPhyN
• Broad problem space, with many cooperative projects
  – U.S.
    • Particle Physics Data Grid (PPDG)
    • GriPhyN
  – Europe
    • DataTAG
    • EU DataGrid
  – International
    • iVDGL

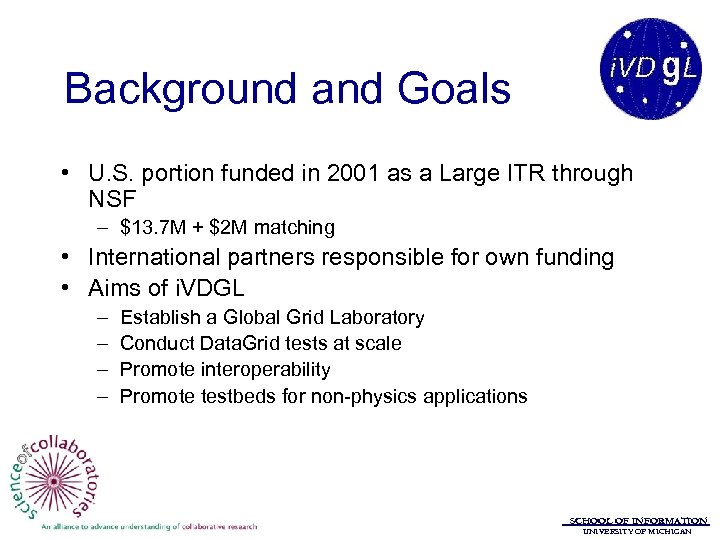

Background and Goals
• U.S. portion funded in 2001 as a Large ITR through NSF
  – $13.7M + $2M matching
• International partners responsible for own funding
• Aims of iVDGL
  – Establish a Global Grid Laboratory
  – Conduct DataGrid tests at scale
  – Promote interoperability
  – Promote testbeds for non-physics applications

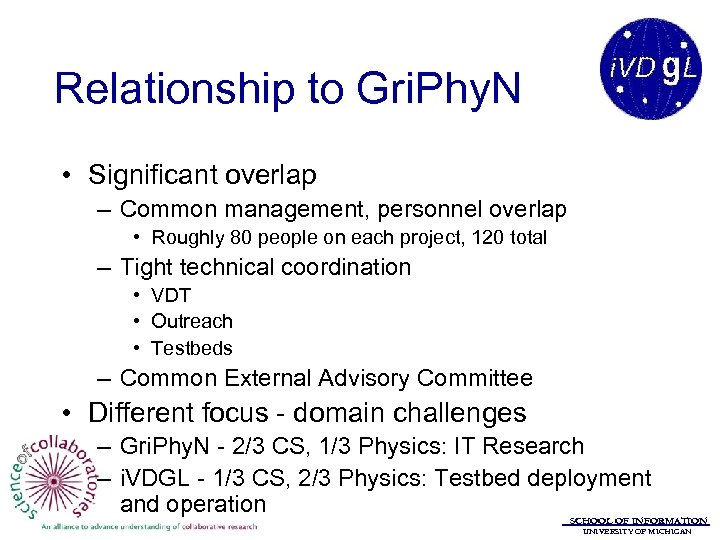

Relationship to GriPhyN
• Significant overlap
  – Common management, personnel overlap
    • Roughly 80 people on each project, 120 total
  – Tight technical coordination
    • VDT
    • Outreach
    • Testbeds
  – Common External Advisory Committee
• Different focus: domain challenges
  – GriPhyN: 2/3 CS, 1/3 Physics: IT research
  – iVDGL: 1/3 CS, 2/3 Physics: testbed deployment and operation

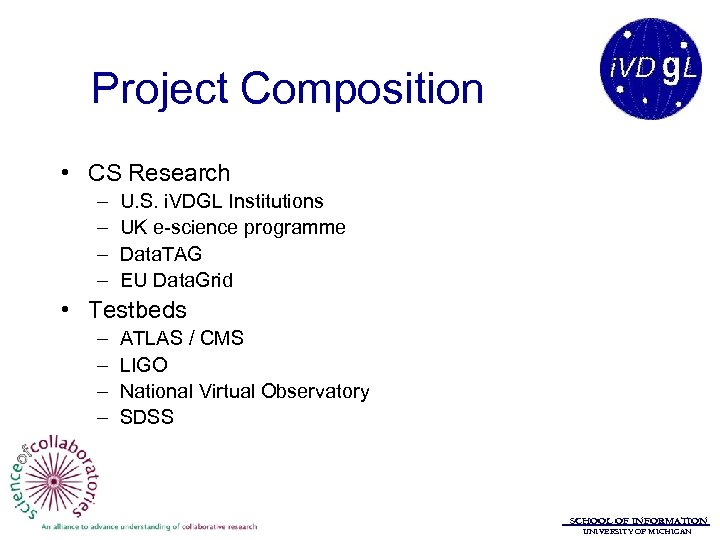

Project Composition
• CS Research
  – U.S. iVDGL Institutions
  – UK e-science programme
  – DataTAG
  – EU DataGrid
• Testbeds
  – ATLAS / CMS
  – LIGO
  – National Virtual Observatory
  – SDSS

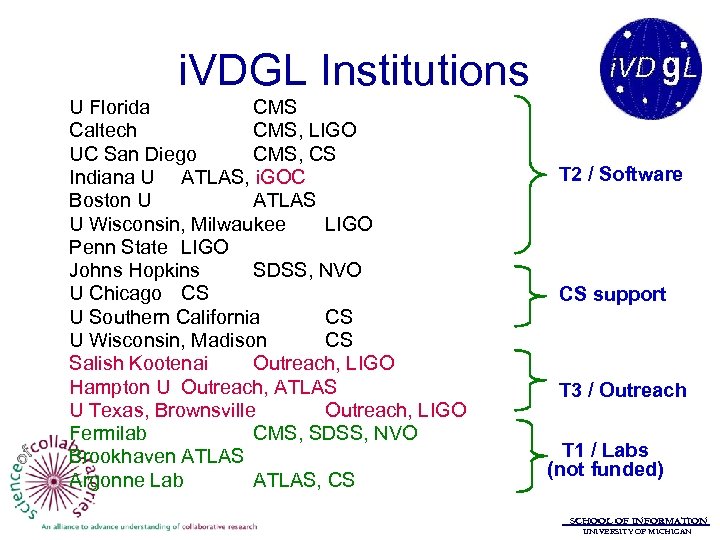

iVDGL Institutions
• U Florida: CMS
• Caltech: CMS, LIGO
• UC San Diego: CMS, CS
• Indiana U: ATLAS, iGOC
• Boston U: ATLAS
• U Wisconsin, Milwaukee: LIGO
• Penn State: LIGO
• Johns Hopkins: SDSS, NVO
• U Chicago: CS
• U Southern California: CS
• U Wisconsin, Madison: CS
• Salish Kootenai: Outreach, LIGO
• Hampton U: Outreach, ATLAS
• U Texas, Brownsville: Outreach, LIGO
• Fermilab: CMS, SDSS, NVO
• Brookhaven: ATLAS
• Argonne Lab: ATLAS, CS
Legend: T2 / Software; CS support; T3 / Outreach; T1 / Labs (not funded)

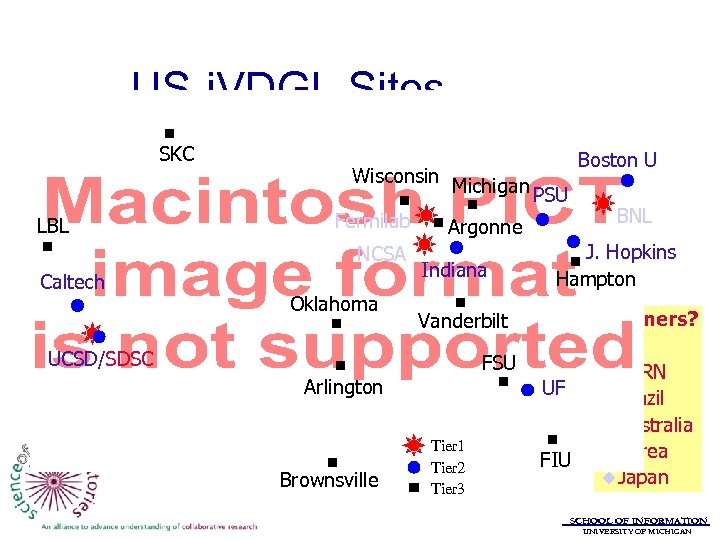

US-iVDGL Sites
[Map of sites: SKC, LBL, Wisconsin, Michigan, PSU, Fermilab, Argonne, NCSA, Caltech, Boston U, Oklahoma, Indiana, J. Hopkins, Hampton, Vanderbilt, UCSD/SDSC, FSU, Arlington, Brownsville, BNL, UF, FIU]
Legend: Tier 1; Tier 2; Tier 3
Partners?
• EU
• CERN
• Brazil
• Australia
• Korea
• Japan

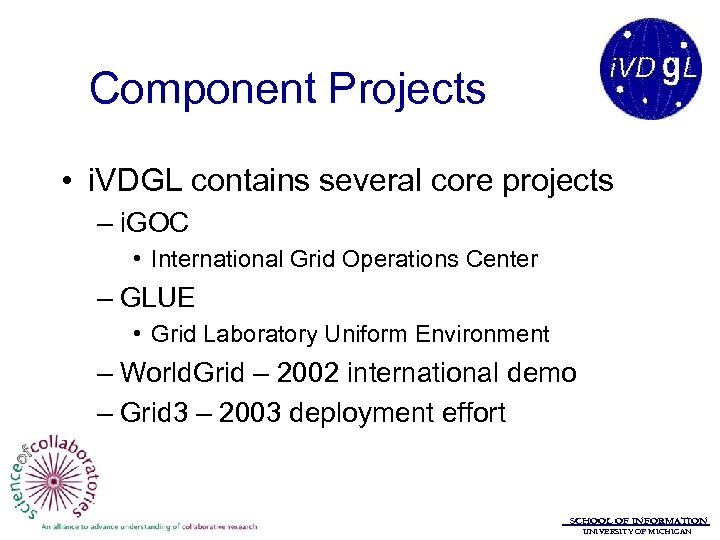

Component Projects
• iVDGL contains several core projects
  – iGOC
    • International Grid Operations Center
  – GLUE
    • Grid Laboratory Uniform Environment
  – WorldGrid: 2002 international demo
  – Grid3: 2003 deployment effort

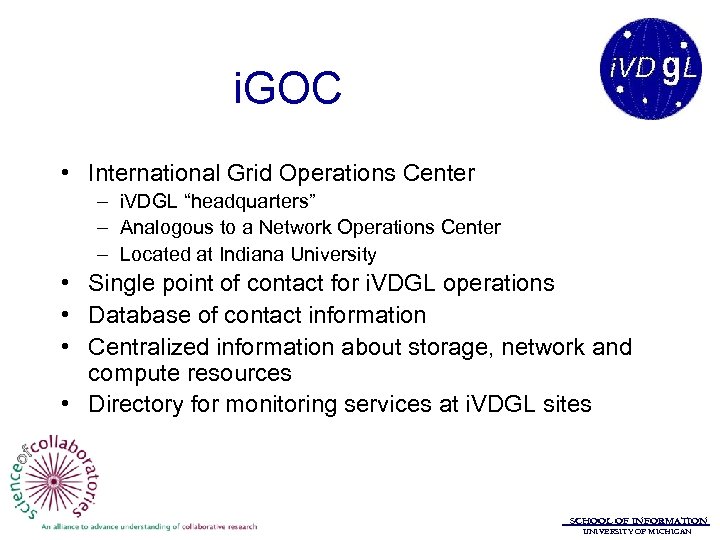

iGOC
• International Grid Operations Center
  – iVDGL “headquarters”
  – Analogous to a Network Operations Center
  – Located at Indiana University
• Single point of contact for iVDGL operations
• Database of contact information
• Centralized information about storage, network and compute resources
• Directory for monitoring services at iVDGL sites

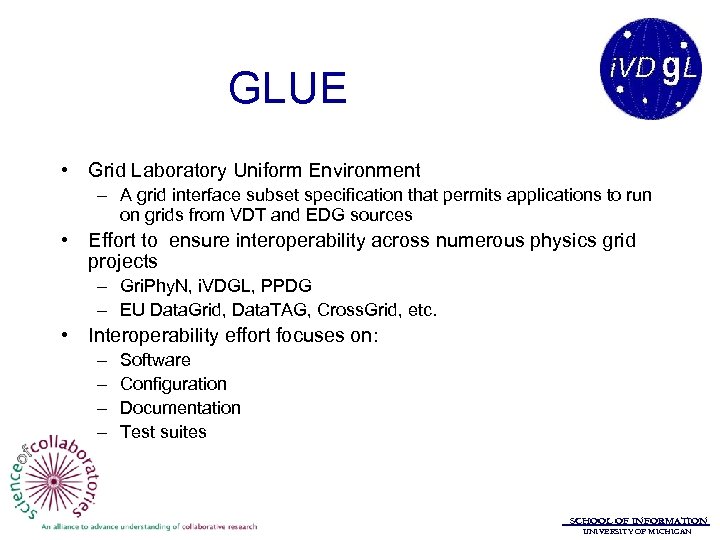

GLUE
• Grid Laboratory Uniform Environment
  – A grid interface subset specification that permits applications to run on grids from VDT and EDG sources
• Effort to ensure interoperability across numerous physics grid projects
  – GriPhyN, iVDGL, PPDG
  – EU DataGrid, DataTAG, CrossGrid, etc.
• Interoperability effort focuses on:
  – Software
  – Configuration
  – Documentation
  – Test suites

WorldGrid
• Effort at a worldwide DataGrid
• Easy to deploy and administer
  – Middleware based on VDT
  – Chimera development
  – Scalability
• Demo at SC2002
  – United DataTAG and iVDGL

Resource Diagram

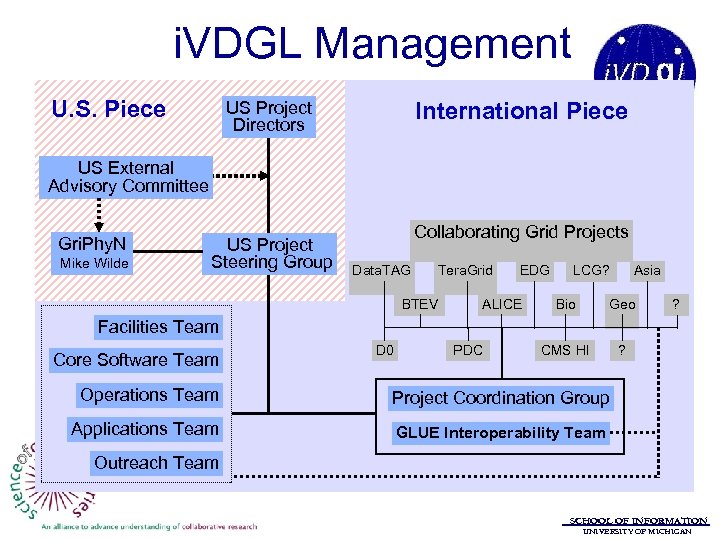

iVDGL Management
[Organization chart; labels as extracted: U.S. Piece; International Piece; US Project Directors; US External Advisory Committee; GriPhyN Mike Wilde; US Project Steering Group; Collaborating Grid Projects; DataTAG; TeraGrid; BTEV; EDG; ALICE; LCG?; Asia; Bio; Geo; CMS HI; ?; ?; Facilities Team; Core Software Team; D0; PDC; Operations Team; Project Coordination Group; Applications Team; GLUE Interoperability Team; Outreach Team]

Issues across projects
• Technical readiness
• Infrastructure readiness
• Collaboration readiness
• Common ground
• Coupling of tasks
• Incentives

Technical readiness
• Very high
• Physics and CS are both very high on the adoption curve, generally
• Long history of infrastructure development to support national and global experiments

Infrastructure readiness
• Also quite high
• Not all of the pieces are in place to meet demand
• The expertise exists within these communities to build and maintain the necessary infrastructure
  – Community is inventing the infrastructure
• Real understanding in the project that interoperability and standards are part of infrastructure

Collaboration readiness
• Again, quite high
• Physicists have a long history of large scale collaboration
• CS collaborations built on old relationships with long time collaborators

Common ground
• Perhaps a bit too high
• What you can do with a physics background:
  – Win the ACM Turing Award
  – Co-invent the World Wide Web
  – Direct the development of the Abilene backbone
• Because application community has a strong understanding of the required work and the technical aspects of the work, some friction about how work separates
  – History of physicists building computational tools, e.g. ROOT

Coupling of tasks
• Tasks decompose into subtasks that are somewhat tightly coupled
  – Locate tightly coupled tasks at individual sites

Incentives
• Both groups are well motivated, but for different reasons
• CS is engaging in extremely cutting edge research across a large range of activities
  – Funded for deployment as well as development
• Physics is structurally committed to global collaborations

Some successes
• Lessons in infrastructure development
• Outreach and engagement
• Community buy-in / investment
• Achieving the CS research goals for Virtual Data and Grid execution

Infrastructure Dev
• Looking at the history of the Grid (electrical, not computational)
  – Long phases
    • Invention
    • Initial production use
    • Adaptation
    • Standardization / regulation
  – Geographically bounded dominant design
    • I.e. 220 vs. 110

Infrastructure Dev
• We don’t see this with GriPhyN / iVDGL
  – Projects concurrent, not consecutive
  – Pipeline approach to phases of infrastructure development
  – Real efforts at cooperation with other DataGrid communities
• Why?
  – Deep understanding at high levels of project that building it alone is not enough
  – Directive and funding from NSF to do deployment

Outreach
• The GriPhyN / iVDGL community is extremely active in outreach to other projects and communities
  – Evangelizing virtual data
  – Distributed tools
• This is a huge win for building CI that others can use

Community buy-in
• Together, these projects are funded at nearly $30M over 6 years
• This does not represent the total investment that was needed to make this work
  – Leveraged FTE
  – Unfunded testbed sites
  – International partners
  – Lots of collaboration with PPDG; starting some with Alliance Expeditions, etc.
• This kind of community commitment is necessary for a project of this size to succeed

Challenges
• Staying relevant
• Building infrastructure with term limited funding

Staying Relevant (1)
• The application communities are fast paced, high power groups of people
  – Real danger in those communities developing tools that satisfice while they wait for the tools that are optimal and fit into a greater cyberinfrastructure
  – Each experiment ideally wants tools perfectly tailored to their needs
• Maintaining user engagement and meeting the needs of each community is critical, but difficult

Staying Relevant (2)
• In addition to staying relevant to the experiments, GriPhyN must also be relevant to the greater scientific community
  – To CS researchers
  – To similarly data-intensive projects
• Easy to understand code, concepts, APIs, etc.
• How do you accommodate both a focused client community and the broader scientific community?
• Common challenge across many CI initiatives

Limited Term Investment
• These projects are both funded under the NSF ITR mechanism
  – 5 year limit
• Would you buy your telephone service from a company that was going to shut down after 5 years?
• Challenge to find a sustainable support mechanism for CI