
- Number of slides: 111

Global Data Services: Developing Data-Intensive Applications Using Globus Software
Ian Foster
Computation Institute, Argonne National Lab & University of Chicago

Acknowledgements
- Thanks to Bill Allcock, Ann Chervenak, Neil P. Chue Hong, Mike Wilde, and Carl Kesselman for slides
- I present the work of many Globus contributors: see www.globus.org
- Work supported by NSF and DOE

Context
- Science is increasingly about massive &/or complex data
- Turning data into insight requires more than data access: we must connect data with people & computers

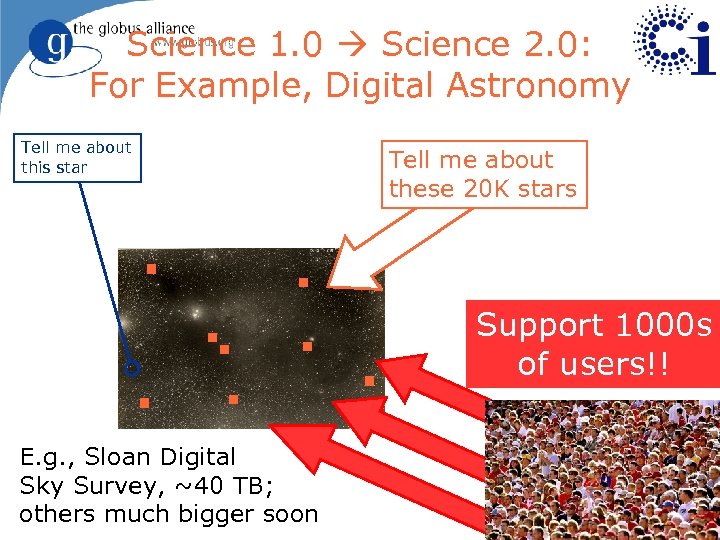

Science 1.0, Science 2.0: For Example, Digital Astronomy
- Tell me about this star
- Tell me about these 20K stars
- Support 1000s of users!!
- E.g., Sloan Digital Sky Survey, ~40 TB; others much bigger soon

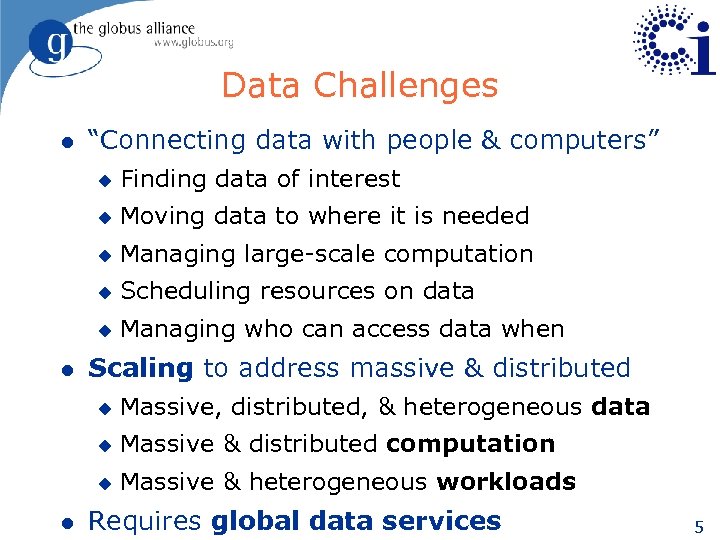

Data Challenges
- “Connecting data with people & computers”
  - Finding data of interest
  - Moving data to where it is needed
  - Managing large-scale computation
  - Scheduling resources on data
  - Managing who can access data when
- Scaling to address massive & distributed
  - Massive, distributed, & heterogeneous data
  - Massive & distributed computation
  - Massive & heterogeneous workloads
- Requires global data services

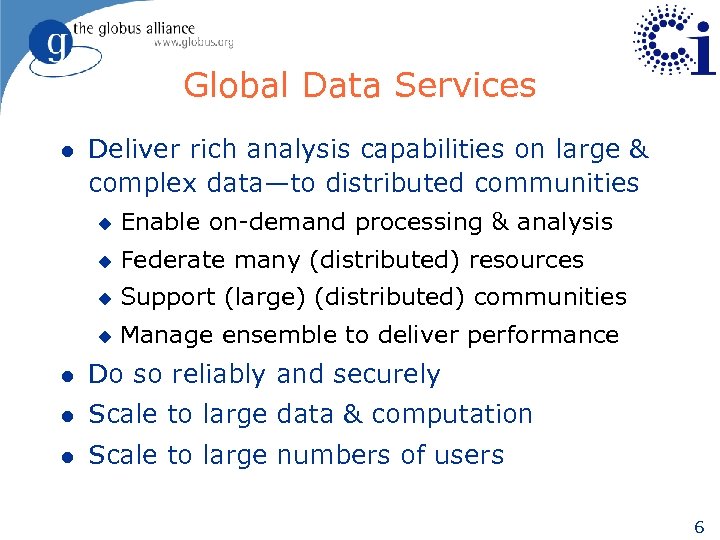

Global Data Services
- Deliver rich analysis capabilities on large & complex data, to distributed communities
  - Enable on-demand processing & analysis
  - Federate many (distributed) resources
  - Support (large) (distributed) communities
  - Manage ensemble to deliver performance
- Do so reliably and securely
- Scale to large data & computation
- Scale to large numbers of users

Overview
- Global data services
- Globus building blocks
- Building higher-level services
- Application case studies
- Summary

Overview
- Global data services
- Globus building blocks
  - Overview
  - GridFTP
  - Reliable File Transfer Service
  - Replica Location Service
  - Data Access and Integration Services
- Building higher-level services
- Application case studies
- Summary

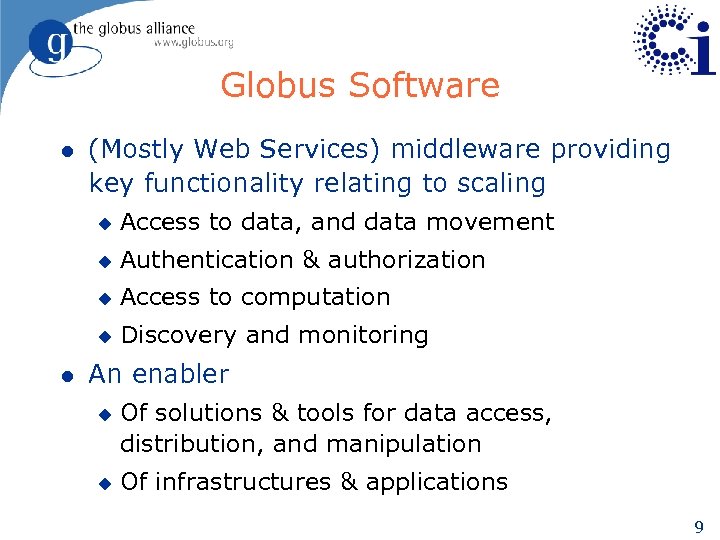

Globus Software
- (Mostly Web Services) middleware providing key functionality relating to scaling
  - Access to data, and data movement
  - Authentication & authorization
  - Access to computation
  - Discovery and monitoring
- An enabler
  - Of solutions & tools for data access, distribution, and manipulation
  - Of infrastructures & applications

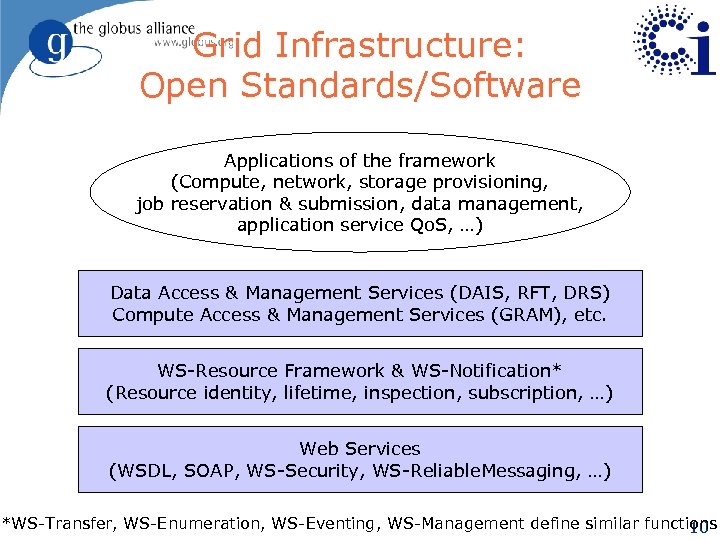

Grid Infrastructure: Open Standards/Software
- Applications of the framework (compute, network, storage provisioning, job reservation & submission, data management, application service QoS, …)
- Data Access & Management Services (DAIS, RFT, DRS); Compute Access & Management Services (GRAM); etc.
- WS-Resource Framework & WS-Notification* (resource identity, lifetime, inspection, subscription, …)
- Web Services (WSDL, SOAP, WS-Security, WS-ReliableMessaging, …)

*WS-Transfer, WS-Enumeration, WS-Eventing, WS-Management define similar functions

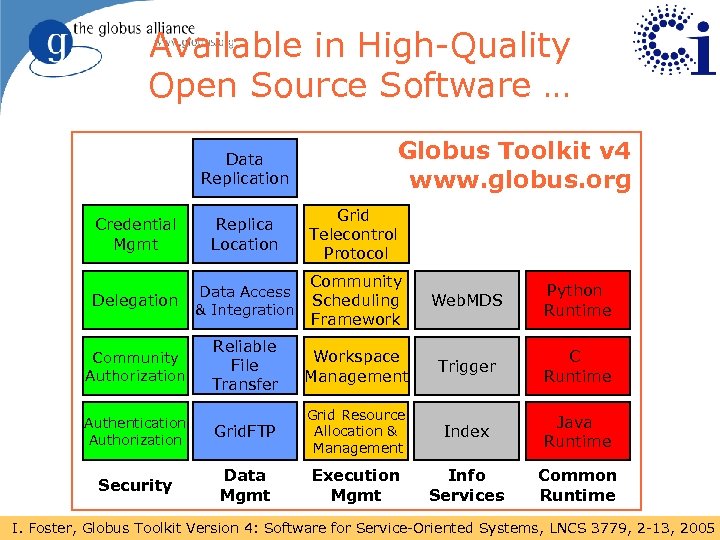

Available in High-Quality Open Source Software …
Globus Toolkit v4 (www.globus.org)
- Security: Authentication Authorization, Community Authorization, Delegation, Credential Mgmt
- Data Mgmt: GridFTP, Reliable File Transfer, Data Access & Integration, Replica Location, Data Replication
- Execution Mgmt: Grid Resource Allocation & Management, Workspace Management, Community Scheduling Framework, Grid Telecontrol Protocol
- Info Services: Index, Trigger, WebMDS
- Common Runtime: Java Runtime, C Runtime, Python Runtime

I. Foster, Globus Toolkit Version 4: Software for Service-Oriented Systems, LNCS 3779, 2-13, 2005

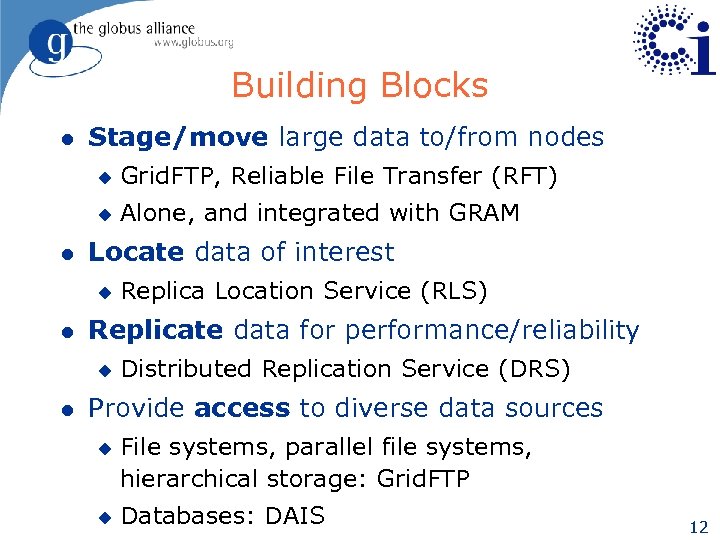

Building Blocks
- Stage/move large data to/from nodes
  - GridFTP, Reliable File Transfer (RFT)
  - Alone, and integrated with GRAM
- Locate data of interest
  - Replica Location Service (RLS)
- Replicate data for performance/reliability
  - Distributed Replication Service (DRS)
- Provide access to diverse data sources
  - File systems, parallel file systems, hierarchical storage: GridFTP
  - Databases: DAIS


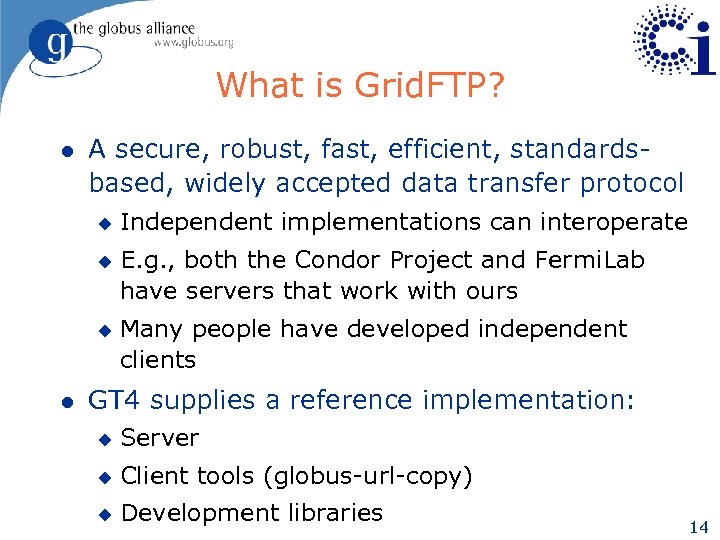

What is GridFTP?
- A secure, robust, fast, efficient, standards-based, widely accepted data transfer protocol
  - Independent implementations can interoperate
  - E.g., both the Condor Project and FermiLab have servers that work with ours
  - Many people have developed independent clients
- GT4 supplies a reference implementation:
  - Server
  - Client tools (globus-url-copy)
  - Development libraries

GridFTP: The Protocol
- FTP protocol is defined by several IETF RFCs
- Start with most commonly used subset
  - Standard FTP: get/put etc., 3rd-party transfer
- Implement standard but often unused features
  - GSS binding, extended directory listing, simple restart
- Extend in various ways, while preserving interoperability with existing servers
  - Striped/parallel data channels, partial file, automatic & manual TCP buffer setting, progress monitoring, extended restart

GridFTP: The Protocol (cont)
- Existing FTP standards
  - RFC 959: File Transfer Protocol
  - RFC 2228: FTP Security Extensions
  - RFC 2389: Feature Negotiation for the File Transfer Protocol
  - Draft: FTP Extensions
- New standard
  - GridFTP: Protocol Extensions to FTP for the Grid
  - Grid Forum Recommendation, GFD.20
  - www.ggf.org/documents/GWD-R/GFD-R.020.pdf

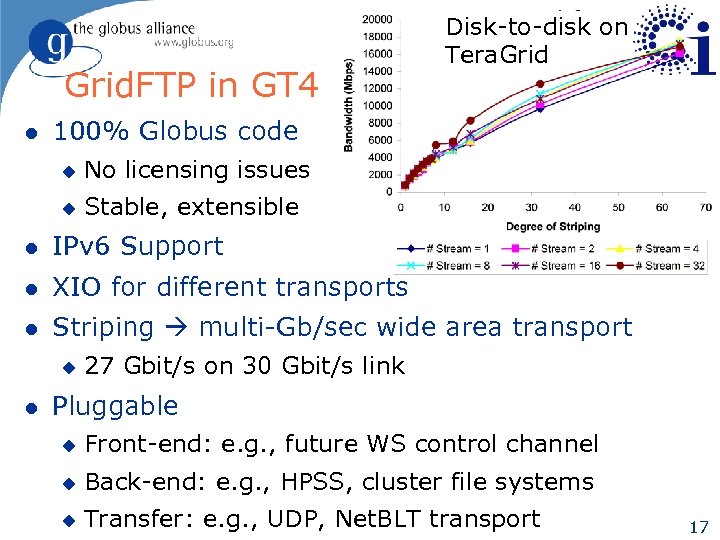

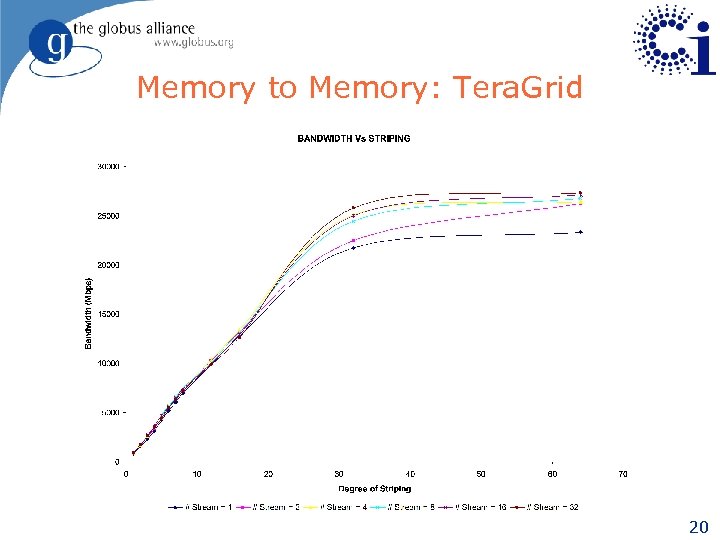

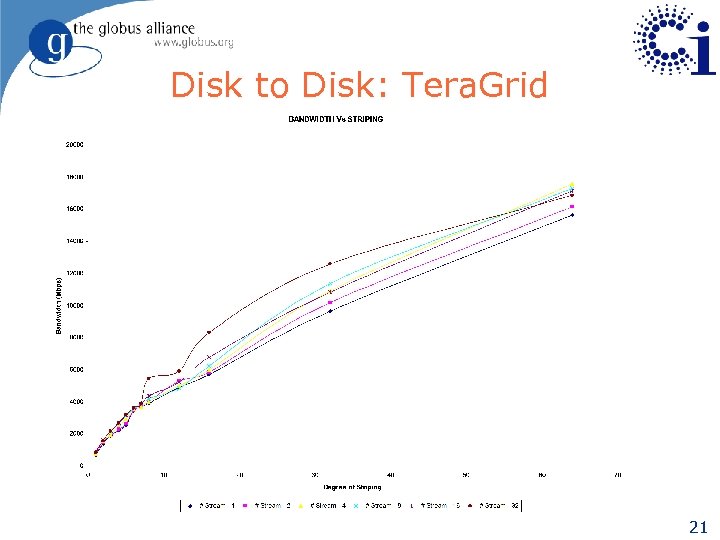

GridFTP in GT4
- 100% Globus code
  - No licensing issues
  - Stable, extensible
- IPv6 support
- XIO for different transports
- Striping: multi-Gb/sec wide area transport
  - 27 Gbit/s on 30 Gbit/s link
- Pluggable
  - Front-end: e.g., future WS control channel
  - Back-end: e.g., HPSS, cluster file systems
  - Transfer: e.g., UDP, NetBLT transport

(Figure: disk-to-disk on TeraGrid)

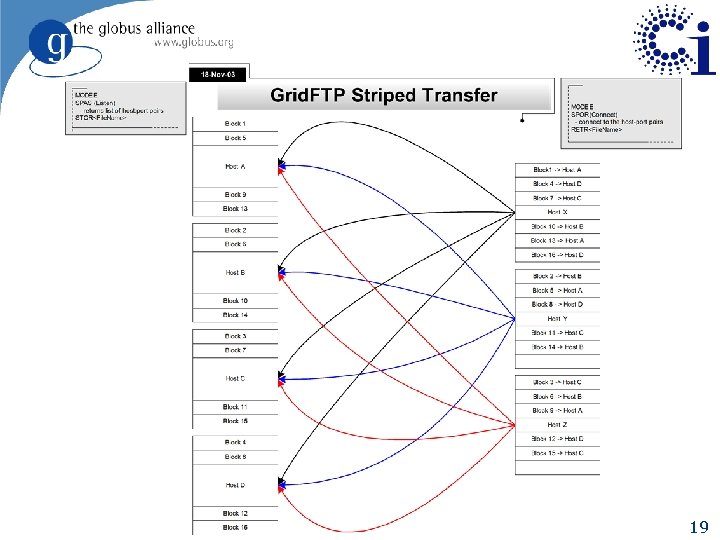

Striped Server Mode
- Multiple nodes work together on a single file and act as a single GridFTP server
- Underlying parallel file system allows all nodes to see the same file system
  - Must deliver good performance (usually the limiting factor in transfer speed); i.e., NFS does not cut it
- Each node then moves (reads or writes) only the pieces of the file for which it is responsible
- Allows multiple levels of parallelism: CPU, bus, NIC, disk, etc.
  - Critical to achieve >1 Gb/s economically
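The idea that each node moves only its own pieces of the file can be sketched as below. This is an illustration only: the round-robin block layout, the function name `stripe_ranges`, and the block size are assumptions, not the layout a GridFTP striped server actually uses.

```python
def stripe_ranges(file_size, n_nodes, block_size):
    """Return, for each node, the (offset, length) byte ranges it would move.

    Blocks are dealt out round-robin; this layout is an assumption
    chosen for illustration.
    """
    if n_nodes <= 0 or block_size <= 0:
        raise ValueError("n_nodes and block_size must be positive")
    ranges = [[] for _ in range(n_nodes)]
    offset = 0
    block_index = 0
    while offset < file_size:
        # The last block may be shorter than block_size.
        length = min(block_size, file_size - offset)
        ranges[block_index % n_nodes].append((offset, length))
        offset += length
        block_index += 1
    return ranges


# A 10-byte "file" striped over 3 nodes in 4-byte blocks.
layout = stripe_ranges(file_size=10, n_nodes=3, block_size=4)
for node, pieces in enumerate(layout):
    print(node, pieces)
```

Every byte is assigned to exactly one node, so the nodes can read or write their pieces in parallel against the shared file system.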


Memory to Memory: TeraGrid

Disk to Disk: TeraGrid

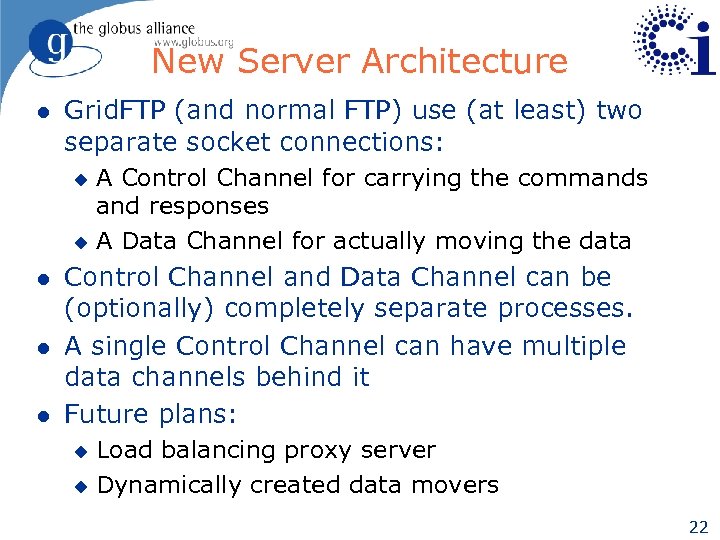

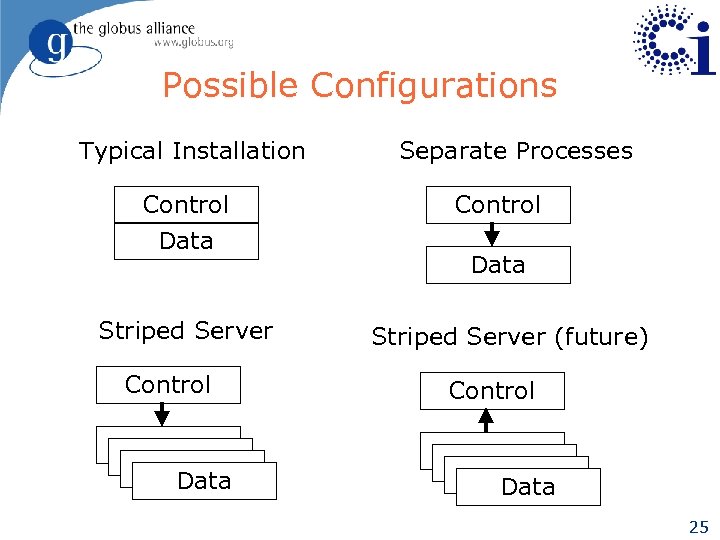

New Server Architecture
- GridFTP (and normal FTP) use (at least) two separate socket connections:
  - A Control Channel for carrying the commands and responses
  - A Data Channel for actually moving the data
- Control Channel and Data Channel can be (optionally) completely separate processes
- A single Control Channel can have multiple data channels behind it
- Future plans:
  - Load balancing proxy server
  - Dynamically created data movers

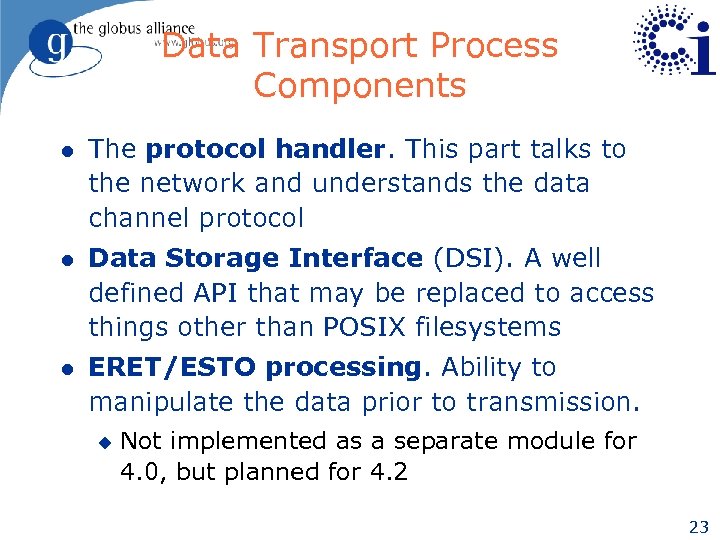

Data Transport Process Components
- The protocol handler. This part talks to the network and understands the data channel protocol
- Data Storage Interface (DSI). A well defined API that may be replaced to access things other than POSIX filesystems
- ERET/ESTO processing. Ability to manipulate the data prior to transmission
  - Not implemented as a separate module for 4.0, but planned for 4.2

Data Storage Interfaces (DSIs)
- POSIX file I/O
- HPSS (with LANL / IBM)
- NeST (with UWis / Condor)
- SRB (with SDSC)

Possible Configurations
(Figure: control and data channel layouts for four configurations)
- Typical Installation
- Separate Processes
- Striped Server
- Striped Server (future)

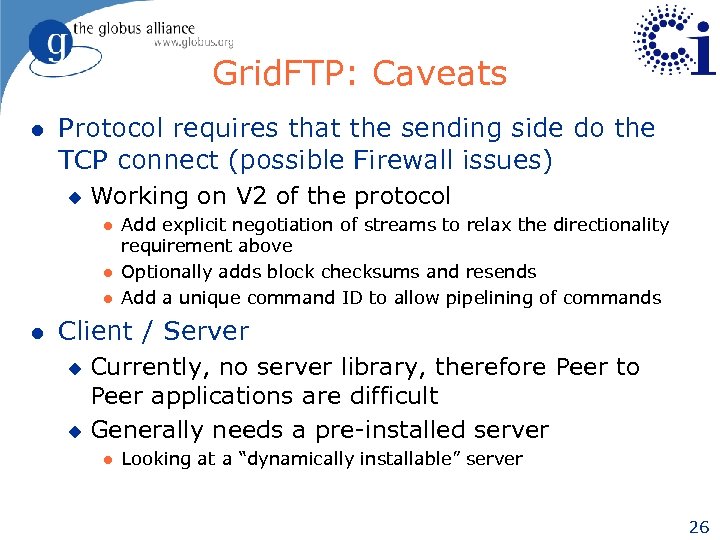

GridFTP: Caveats
- Protocol requires that the sending side do the TCP connect (possible firewall issues)
  - Working on V2 of the protocol
    - Add explicit negotiation of streams to relax the directionality requirement above
    - Optionally adds block checksums and resends
    - Add a unique command ID to allow pipelining of commands
- Client / Server
  - Currently, no server library, therefore peer-to-peer applications are difficult
  - Generally needs a pre-installed server
    - Looking at a “dynamically installable” server

Extensible IO (XIO) system
- Provides a framework that implements a Read/Write/Open/Close abstraction
- Drivers are written that implement the functionality (file, TCP, UDP, GSI, etc.)
- Different functionality is achieved by building protocol stacks
- GridFTP drivers allow 3rd-party applications to access files stored under a GridFTP server
- Other drivers could be written to allow access to other data stores
- Changing drivers requires minimal change to the application code
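A minimal sketch of the driver-stack idea follows. It is not the Globus XIO C API: the class names (`Driver`, `MemoryTransport`, `XorTransform`) are hypothetical, the in-memory transport stands in for file/TCP/UDP drivers, and the XOR transform is a toy placeholder for a security layer such as GSI.

```python
class Driver:
    """Base driver: forwards open/read/write/close to the driver below it."""

    def __init__(self, below=None):
        self.below = below

    def open(self):
        if self.below:
            self.below.open()

    def write(self, data):
        return self.below.write(data)

    def read(self):
        return self.below.read()

    def close(self):
        if self.below:
            self.below.close()


class MemoryTransport(Driver):
    """Bottom of a stack: keeps bytes in memory (stand-in for file/TCP/UDP)."""

    def __init__(self):
        super().__init__()
        self.buffer = b""

    def write(self, data):
        self.buffer += data
        return len(data)

    def read(self):
        data, self.buffer = self.buffer, b""
        return data


class XorTransform(Driver):
    """Toy transform driver: XORs each byte with a key on the way down and up."""

    def __init__(self, below, key=0x5A):
        super().__init__(below)
        self.key = key

    def _xor(self, data):
        return bytes(b ^ self.key for b in data)

    def write(self, data):
        return self.below.write(self._xor(data))

    def read(self):
        return self._xor(self.below.read())


def roundtrip(stack, payload):
    """Application code: identical whichever drivers are in the stack."""
    stack.open()
    stack.write(payload)
    result = stack.read()
    stack.close()
    return result


print(roundtrip(MemoryTransport(), b"hello"))
print(roundtrip(XorTransform(MemoryTransport()), b"hello"))
```

`roundtrip` never changes when a driver is added or swapped, which is the point of the last bullet above.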


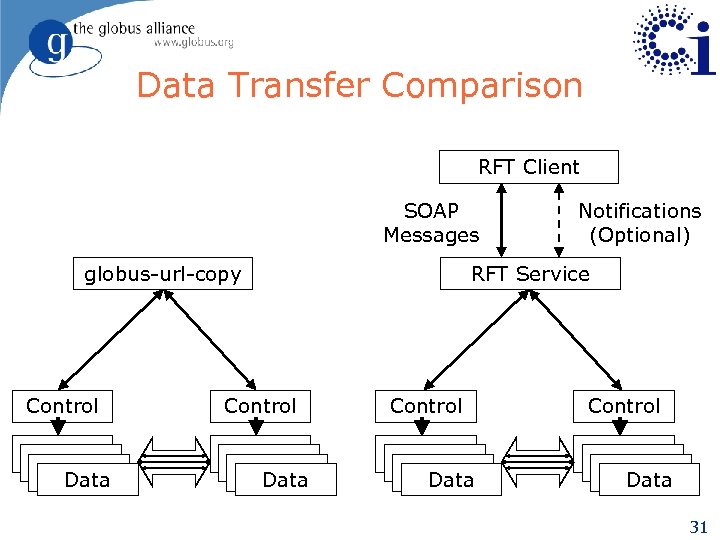

Reliable File Transfer
- Comparison with globus-url-copy
  - Supports all the same options (buffer size, etc.)
  - Increased reliability because state is stored in a database
  - Service interface: the client can submit the transfer request and then disconnect and go away
  - Think of this as a job scheduler for transfer jobs
- Two ways to check status
  - Subscribe for notifications
  - Poll for status (can check for missed notifications)

Reliable File Transfer
- RFT accepts a SOAP description of the desired transfer
- It writes this to a database
- It then uses the Java GridFTP client library to initiate 3rd-party transfers on behalf of the requestor
- Restart markers are stored in the database to allow for restart in the event of an RFT failure
- Supports concurrency, i.e., multiple files in transit at the same time, to give good performance on many small files
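The role of the database and restart markers can be sketched as below. This is only an illustration: the table layout, the function names, and the example URLs are assumptions, and SQLite stands in for whatever database an RFT deployment uses.

```python
import sqlite3


def open_state(path=":memory:"):
    """Open (or create) the persistent transfer-state table."""
    db = sqlite3.connect(path)
    db.execute(
        "CREATE TABLE IF NOT EXISTS transfers ("
        "source TEXT, dest TEXT, size INTEGER, "
        "bytes_done INTEGER DEFAULT 0, "
        "PRIMARY KEY (source, dest))"
    )
    return db


def submit(db, source, dest, size):
    """Record a requested transfer before any data moves."""
    db.execute(
        "INSERT OR IGNORE INTO transfers (source, dest, size) VALUES (?, ?, ?)",
        (source, dest, size),
    )
    db.commit()


def record_marker(db, source, dest, bytes_done):
    """Persist a restart marker: how much of the file has arrived so far."""
    db.execute(
        "UPDATE transfers SET bytes_done = ? WHERE source = ? AND dest = ?",
        (bytes_done, source, dest),
    )
    db.commit()


def pending(db):
    """After a restart: unfinished transfers and the offset to resume from."""
    rows = db.execute(
        "SELECT source, dest, bytes_done, size FROM transfers "
        "WHERE bytes_done < size ORDER BY source"
    )
    return rows.fetchall()


db = open_state()
submit(db, "gsiftp://a.example.org/f1", "gsiftp://b.example.org/f1", 100)
submit(db, "gsiftp://a.example.org/f2", "gsiftp://b.example.org/f2", 50)
record_marker(db, "gsiftp://a.example.org/f1", "gsiftp://b.example.org/f1", 40)
record_marker(db, "gsiftp://a.example.org/f2", "gsiftp://b.example.org/f2", 50)
print(pending(db))
```

Because the request and its markers are stored before the client disconnects, a restarted service only needs `pending` to know what to resume.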

Data Transfer Comparison
- globus-url-copy: Control, Data
- RFT Client: SOAP Messages, Notifications (Optional); RFT Service: Control, Data


Replica Management
- Data intensive applications produce terabytes or petabytes of data
  - Hundreds of millions of data objects
- Replicate data at multiple locations for:
  - Fault tolerance: avoid single points of failure
  - Performance: avoid wide area data transfer latencies; load balancing

A Replica Location Service
- A Replica Location Service (RLS) is a distributed registry that records the locations of data copies and allows replica discovery
  - RLS maintains mappings between logical identifiers and target names
  - Must perform and scale well: support hundreds of millions of objects, hundreds of clients
- RLS is one component of a Replica Management system
  - Other components include consistency services, replica selection services, reliable data transfer

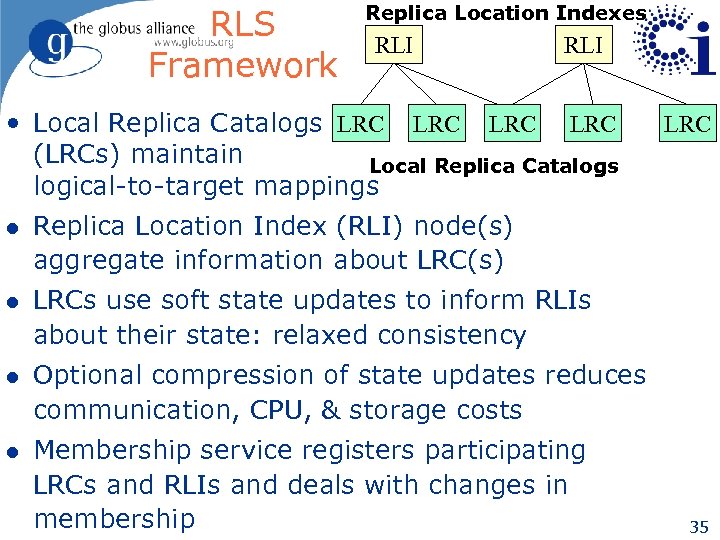

RLS Framework
- Local Replica Catalogs (LRCs) maintain logical-to-target mappings
- Replica Location Index (RLI) node(s) aggregate information about LRC(s)
- LRCs use soft state updates to inform RLIs about their state: relaxed consistency
- Optional compression of state updates reduces communication, CPU, & storage costs
- Membership service registers participating LRCs and RLIs and deals with changes in membership
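The two-level structure can be sketched as follows. The class and method names are hypothetical rather than the RLS client API, and the expiry timeout on the index is an assumption about how soft state is aged out.

```python
import time


class LocalReplicaCatalog:
    """Holds logical-name -> target-name mappings for one site."""

    def __init__(self, name):
        self.name = name
        self.mappings = {}

    def add(self, logical, target):
        self.mappings.setdefault(logical, set()).add(target)

    def query(self, logical):
        return sorted(self.mappings.get(logical, ()))

    def soft_state(self):
        """Summary sent to an index: just the logical names held here."""
        return set(self.mappings)


class ReplicaLocationIndex:
    """Records which LRCs claim to know each logical name."""

    def __init__(self, timeout_seconds=300.0, clock=time.monotonic):
        self.timeout = timeout_seconds  # assumed expiry for stale state
        self.clock = clock
        self.state = {}  # LRC name -> (logical names, time received)

    def receive_update(self, lrc_name, logical_names):
        self.state[lrc_name] = (set(logical_names), self.clock())

    def query(self, logical):
        """Return LRCs to ask next; entries older than the timeout are ignored."""
        now = self.clock()
        return sorted(
            name
            for name, (names, received) in self.state.items()
            if logical in names and now - received <= self.timeout
        )


lrc = LocalReplicaCatalog("lrc-a")
lrc.add("lfn://run42/events", "gsiftp://storage.example.org/data/run42.dat")
rli = ReplicaLocationIndex()
rli.receive_update(lrc.name, lrc.soft_state())
print(rli.query("lfn://run42/events"), lrc.query("lfn://run42/events"))
```

A client first asks the index which catalogs know a logical name, then asks those catalogs for the targets; the index may be briefly out of date, which is the relaxed consistency noted above.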

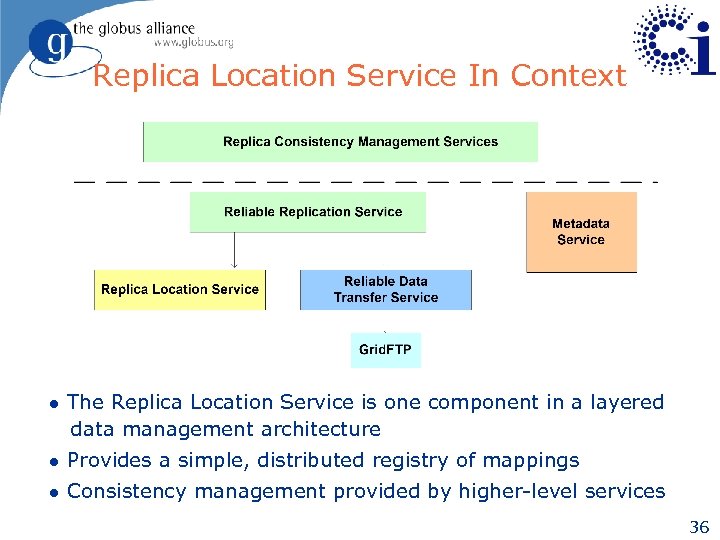

Replica Location Service In Context
- The Replica Location Service is one component in a layered data management architecture
- Provides a simple, distributed registry of mappings
- Consistency management provided by higher-level services

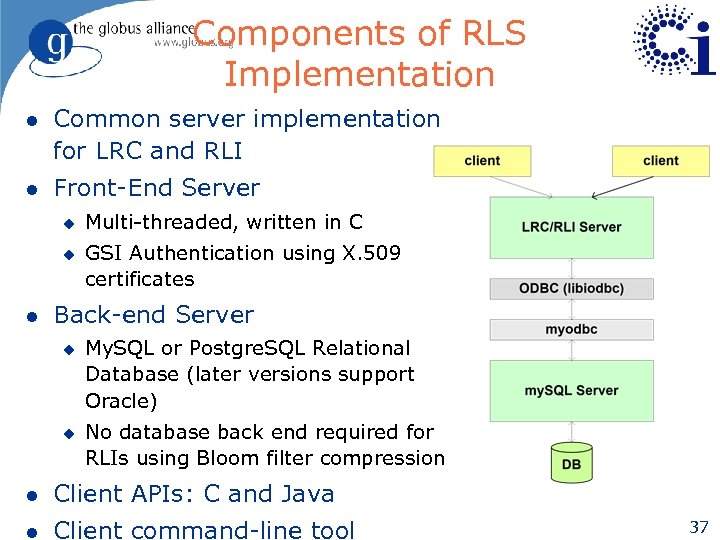

Components of RLS Implementation
- Common server implementation for LRC and RLI
- Front-end server
  - Multi-threaded, written in C
  - GSI authentication using X.509 certificates
- Back-end server
  - MySQL or PostgreSQL relational database (later versions support Oracle)
  - No database back end required for RLIs using Bloom filter compression
- Client APIs: C and Java
- Client command-line tool

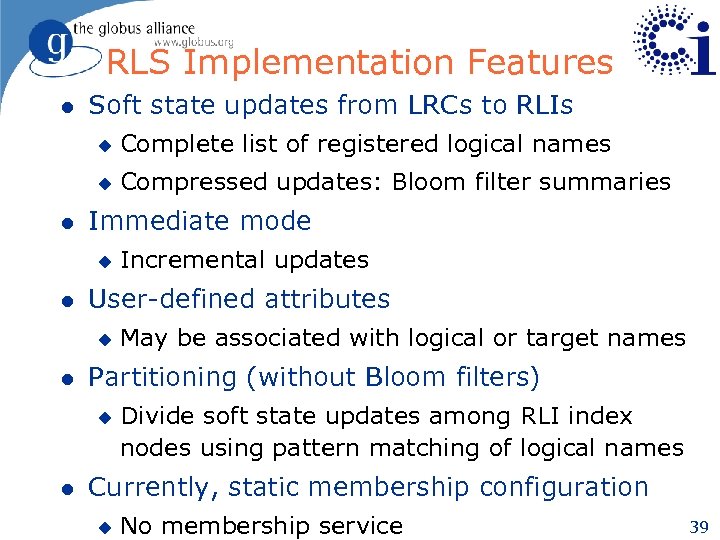

RLS Implementation Features
- Two types of soft state updates from LRCs to RLIs
  - Complete list of logical names registered in LRC
  - Compressed updates: Bloom filter summaries of LRC
- Immediate mode
  - Incremental updates
- User-defined attributes
  - May be associated with logical or target names
- Partitioning (without Bloom filters)
  - Divide soft state updates among RLI index nodes using pattern matching of logical names
- Currently, static membership configuration
  - No membership service

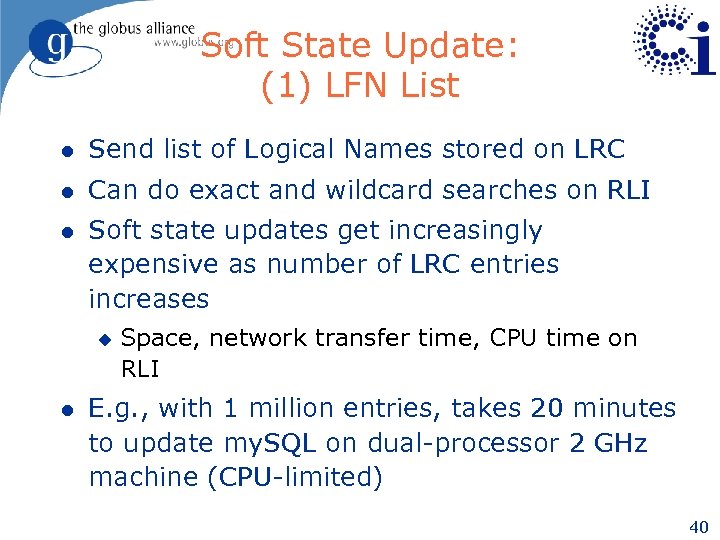

Soft State Update: (1) LFN List
- Send list of logical names stored on LRC
- Can do exact and wildcard searches on RLI
- Soft state updates get increasingly expensive as number of LRC entries increases
  - Space, network transfer time, CPU time on RLI
  - E.g., with 1 million entries, takes 20 minutes to update MySQL on dual-processor 2 GHz machine (CPU-limited)

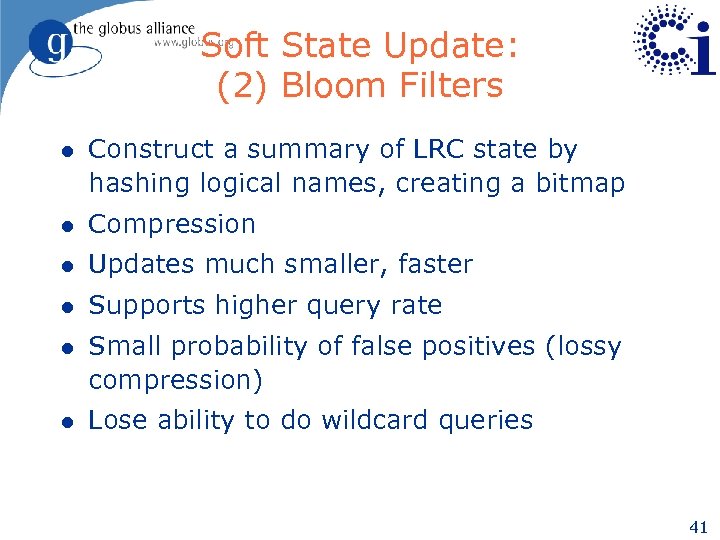

Soft State Update: (2) Bloom Filters
- Construct a summary of LRC state by hashing logical names, creating a bitmap
- Compression
- Updates much smaller, faster
- Supports higher query rate
- Small probability of false positives (lossy compression)
- Lose ability to do wildcard queries
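A minimal Bloom filter sketch shows why the summary is compact, why false positives are possible, and why wildcard queries are lost. The hash construction (salted SHA-256) and the parameters are assumptions for illustration, not the hash functions RLS uses.

```python
import hashlib


class BloomFilter:
    """Bitmap summary of a set of names; answers 'possibly present' or 'absent'."""

    def __init__(self, n_bits, n_hashes):
        self.n_bits = n_bits
        self.n_hashes = n_hashes
        self.bits = bytearray((n_bits + 7) // 8)

    def _positions(self, name):
        # Derive n_hashes bit positions by salting one hash function.
        for i in range(self.n_hashes):
            digest = hashlib.sha256(f"{i}:{name}".encode("utf-8")).digest()
            yield int.from_bytes(digest[:8], "big") % self.n_bits

    def add(self, name):
        for pos in self._positions(name):
            self.bits[pos // 8] |= 1 << (pos % 8)

    def might_contain(self, name):
        # True may be a false positive; False is always correct.
        return all(
            self.bits[pos // 8] & (1 << (pos % 8)) for pos in self._positions(name)
        )


names = [f"lfn://experiment/file{i:05d}" for i in range(1000)]
summary = BloomFilter(n_bits=10 * len(names), n_hashes=3)
for name in names:
    summary.add(name)

print(len(summary.bits), "bytes summarize", len(names), "logical names")
print(summary.might_contain(names[0]))
```

Only the bitmap travels to the index, so the logical names themselves are never available there; that is why pattern matching over names is no longer possible.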

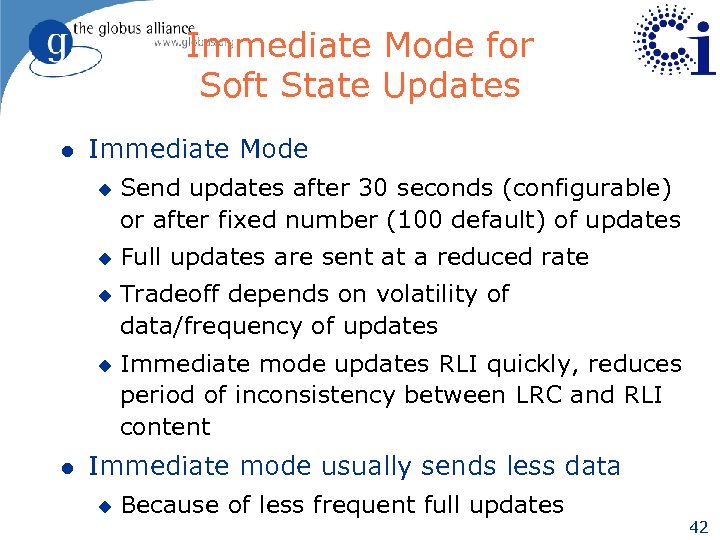

Immediate Mode for Soft State Updates
- Immediate mode
  - Send updates after 30 seconds (configurable) or after fixed number (100 default) of updates
  - Full updates are sent at a reduced rate
- Tradeoff depends on volatility of data/frequency of updates
- Immediate mode updates RLI quickly, reduces period of inconsistency between LRC and RLI content
- Immediate mode usually sends less data
  - Because of less frequent full updates
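The "30 seconds or 100 updates" rule can be sketched as a small buffer. The class name is hypothetical, and measuring the 30 seconds from the first unsent change is an assumption about how the timer is anchored.

```python
import time


class ImmediateModeBuffer:
    """Collects incremental changes and decides when a batch should be sent."""

    def __init__(self, max_age_seconds=30.0, max_pending=100, clock=time.monotonic):
        self.max_age = max_age_seconds
        self.max_pending = max_pending
        self.clock = clock
        self.pending = []
        self.first_pending_at = None

    def record(self, change):
        if not self.pending:
            # Start the timer at the first unsent change (assumed behavior).
            self.first_pending_at = self.clock()
        self.pending.append(change)

    def due(self):
        """True when either the count threshold or the age threshold is reached."""
        if not self.pending:
            return False
        if len(self.pending) >= self.max_pending:
            return True
        return self.clock() - self.first_pending_at >= self.max_age

    def flush(self):
        """Hand back the batch to send and reset the buffer."""
        batch, self.pending = self.pending, []
        self.first_pending_at = None
        return batch


buffer = ImmediateModeBuffer()
buffer.record(("add", "lfn://run42/events"))
if buffer.due():
    print("send", buffer.flush())
else:
    print("waiting;", len(buffer.pending), "change(s) buffered")
```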

Performance Testing (see HPDC paper)
- Performance of individual LRC (catalog) or RLI (index) servers
  - Client program submits requests to server
- Performance of soft state updates
  - Client LRCs send updates to index servers
- Software versions:
  - Replica Location Service Version 2.0.9
  - Globus Packaging Toolkit Version 2.2.5
  - libiODBC library Version 3.0.5
  - MySQL database Version 4.0.14
  - MyODBC library (with MySQL) Version 3.51.06

Testing Environment
- Local Area Network tests
  - 100 Megabit Ethernet
  - Clients (either client program or LRCs) on cluster: dual Pentium-III 547 MHz workstations with 1.5 GB memory running Red Hat Linux 9
  - Server: dual Intel Xeon 2.2 GHz processor with 1 GB memory running Red Hat Linux 7.3
- Wide Area Network tests (soft state updates)
  - LRC clients (Los Angeles): cluster nodes
  - RLI server (Chicago): dual Intel Xeon 2.2 GHz machine with 2 GB memory running Red Hat Linux 7.3

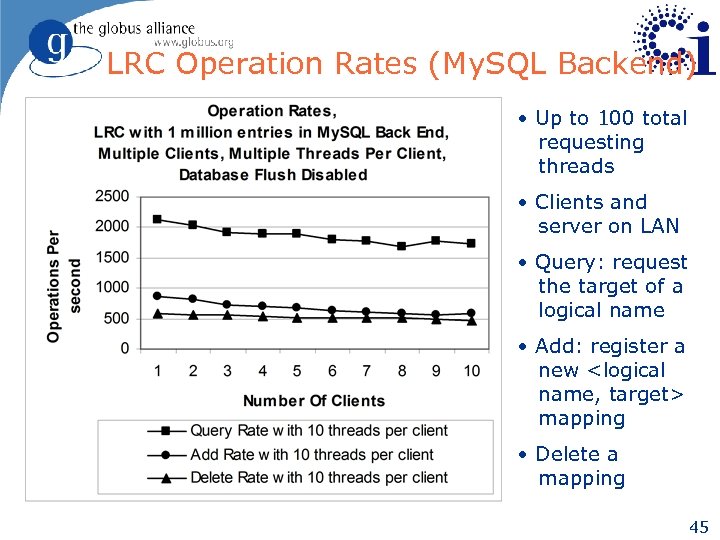

LRC Operation Rates (MySQL Backend)
- Up to 100 total requesting threads
- Clients and server on LAN
- Query: request the target of a logical name
- Add: register a new <logical name, target> mapping
- Delete a mapping

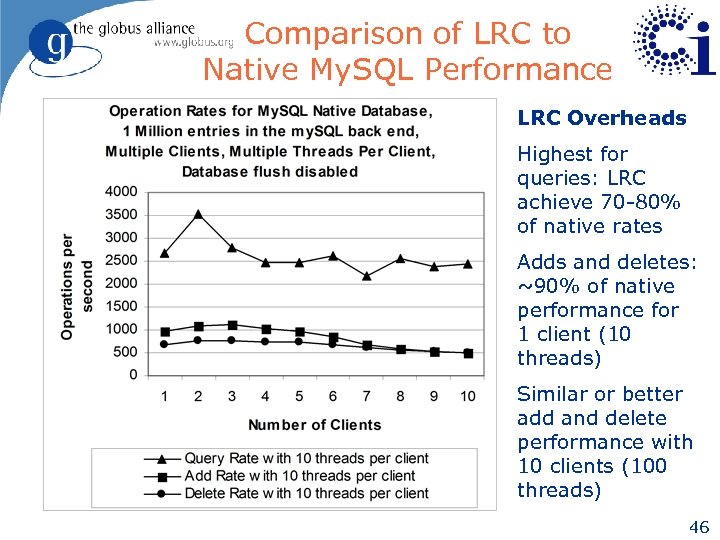

Comparison of LRC to Native MySQL Performance
- LRC overheads highest for queries: LRC achieves 70-80% of native rates
- Adds and deletes: ~90% of native performance for 1 client (10 threads)
- Similar or better add and delete performance with 10 clients (100 threads)

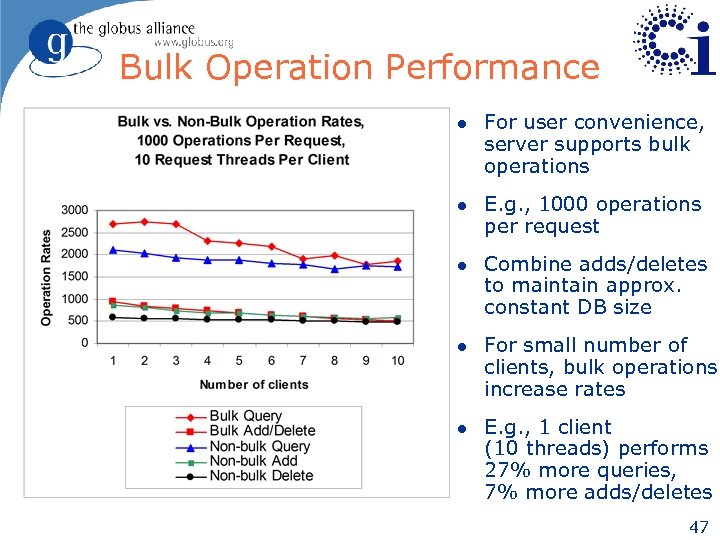

Bulk Operation Performance
- For user convenience, server supports bulk operations
- E.g., 1000 operations per request
- Combine adds/deletes to maintain approx. constant DB size
- For small number of clients, bulk operations increase rates
- E.g., 1 client (10 threads) performs 27% more queries, 7% more adds/deletes

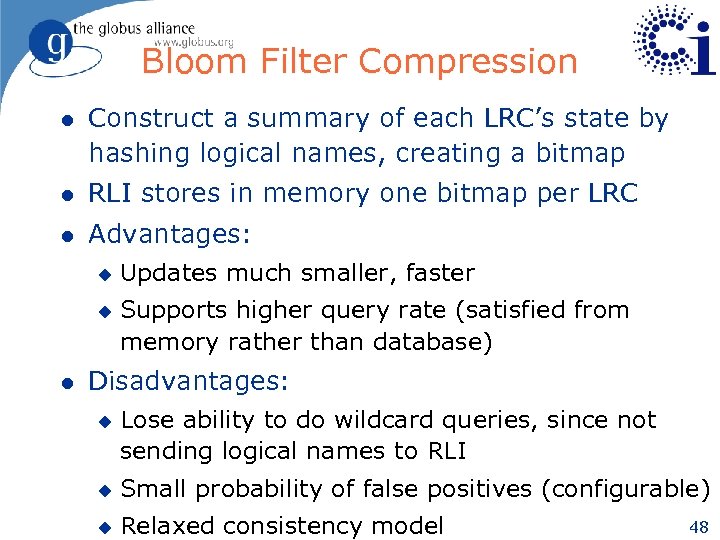

Bloom Filter Compression
- Construct a summary of each LRC’s state by hashing logical names, creating a bitmap
- RLI stores in memory one bitmap per LRC
- Advantages:
  - Updates much smaller, faster
  - Supports higher query rate (satisfied from memory rather than database)
- Disadvantages:
  - Lose ability to do wildcard queries, since not sending logical names to RLI
  - Small probability of false positives (configurable)
  - Relaxed consistency model

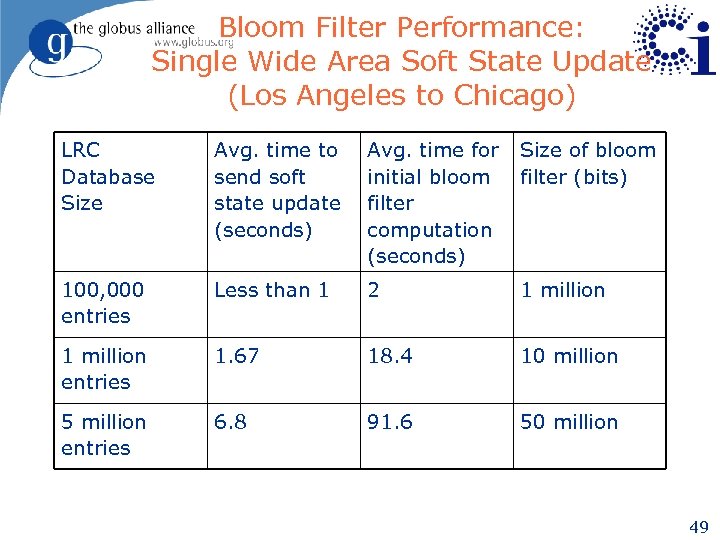

Bloom Filter Performance: Single Wide Area Soft State Update (Los Angeles to Chicago)

| LRC database size | Avg. time to send soft state update (seconds) | Avg. time for initial Bloom filter computation (seconds) | Size of Bloom filter (bits) |
|---|---|---|---|
| 100,000 entries | Less than 1 | 2 | 1 million |
| 1 million entries | 1.67 | 18.4 | 10 million |
| 5 million entries | 6.8 | 91.6 | 50 million |
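The filter sizes in this table work out to 10 bits per catalog entry. The sketch below applies the standard Bloom filter approximation for the false-positive probability, (1 - e^(-k/b))^k for b bits per entry and k hash functions. The slides do not state k, so the value 3 used here is an assumption for illustration only.

```python
import math


def false_positive_rate(bits_per_entry, n_hashes):
    """Standard approximation for a Bloom filter's false-positive probability."""
    return (1.0 - math.exp(-n_hashes / bits_per_entry)) ** n_hashes


# From the table: 10 million bits for 1 million entries.
bits_per_entry = 10_000_000 / 1_000_000

# n_hashes=3 is an assumed value; the deck does not give it.
rate = false_positive_rate(bits_per_entry, n_hashes=3)
print(f"{bits_per_entry:.0f} bits/entry, k=3: about {rate:.1%} false positives")
```

Under these assumptions the rate is a little under 2%, which is the kind of small, tunable error the "configurable" false-positive bullet refers to.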

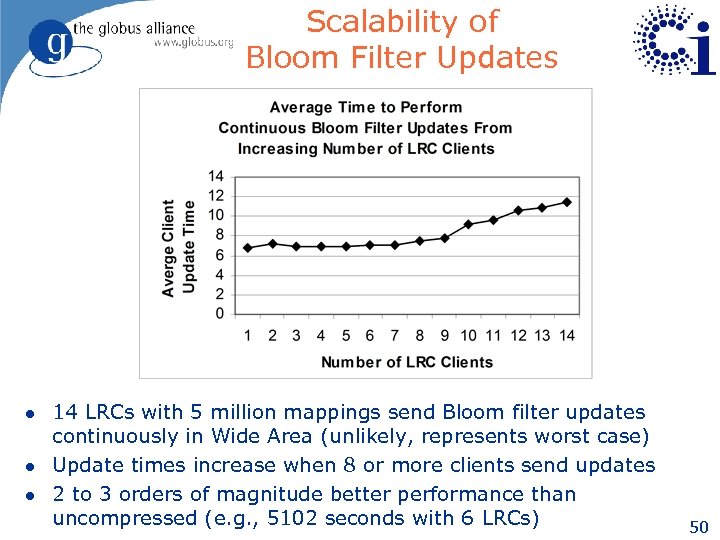

Scalability of Bloom Filter Updates
- 14 LRCs with 5 million mappings send Bloom filter updates continuously in wide area (unlikely, represents worst case)
- Update times increase when 8 or more clients send updates
- 2 to 3 orders of magnitude better performance than uncompressed (e.g., 5102 seconds with 6 LRCs)

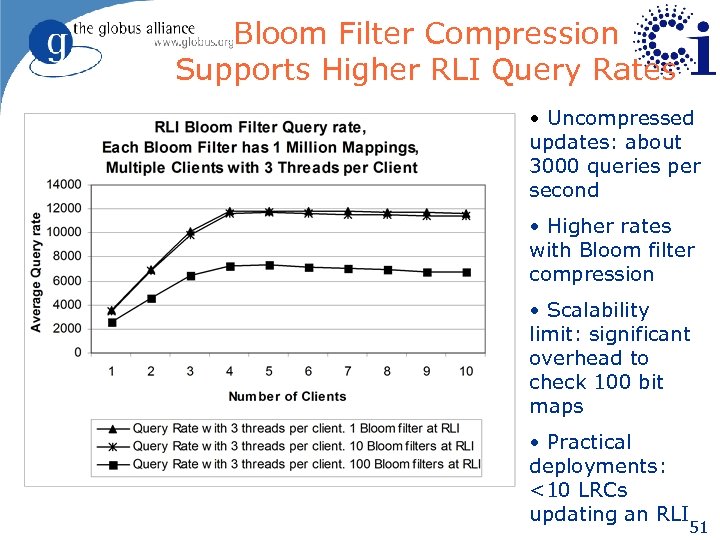

Bloom Filter Compression Supports Higher RLI Query Rates
- Uncompressed updates: about 3000 queries per second
- Higher rates with Bloom filter compression
- Scalability limit: significant overhead to check 100 bit maps
- Practical deployments: <10 LRCs updating an RLI

Data Services in Production Use: LIGO
- Laser Interferometer Gravitational Wave Observatory
- Currently use RLS servers at 10 sites
  - Contain mappings from 6 million logical files to over 40 million physical replicas
- Used in customized data management system: the LIGO Lightweight Data Replicator System (LDR)
  - Includes RLS, GridFTP, custom metadata catalog, tools for storage management and data validation

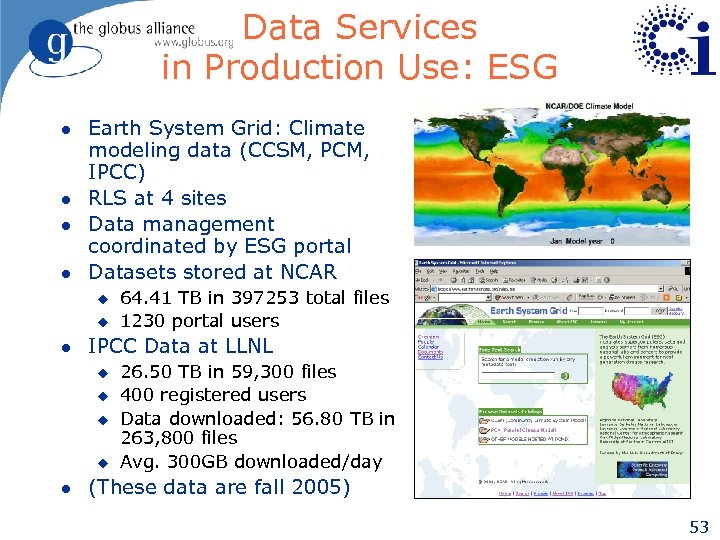

Data Services in Production Use: ESG
- Earth System Grid: climate modeling data (CCSM, PCM, IPCC)
- RLS at 4 sites
- Data management coordinated by ESG portal
- Datasets stored at NCAR
  - 64.41 TB in 397,253 total files
  - 1230 portal users
- IPCC data at LLNL
  - 26.50 TB in 59,300 files
  - 400 registered users
  - Data downloaded: 56.80 TB in 263,800 files
  - Avg. 300 GB downloaded/day
- (These data are from fall 2005)

Data Services in Production Use: Virtual Data System
- Virtual Data System (VDS)
  - Maps from a high-level, abstract definition of a workflow onto a Grid environment
  - Maps to a concrete or executable workflow in the form of a Directed Acyclic Graph (DAG)
  - Passes this concrete workflow to the Condor DAGMan execution system
- VDS uses RLS to
  - Identify physical replicas of logical files specified in the abstract workflow
  - Register new files created during workflow execution


OGSA-DAI: Data Access and Integration for the Grid
- Focus on structured data (e.g., relational, XML)
- Meet data requirements of Grid applications
  - Functionality, performance and reliability
  - Reduce development cost of data-centric apps
  - Provide consistent interfaces to data resources
- Acceptable and supportable by database providers
  - Trustable, imposed demand is acceptable, etc.
  - Provide a standard framework that satisfies standard requirements

OGSA-DAI Contd.
- A base for developing higher-level services
  - Data federation
  - Distributed query processing
  - Data mining
  - Data visualisation

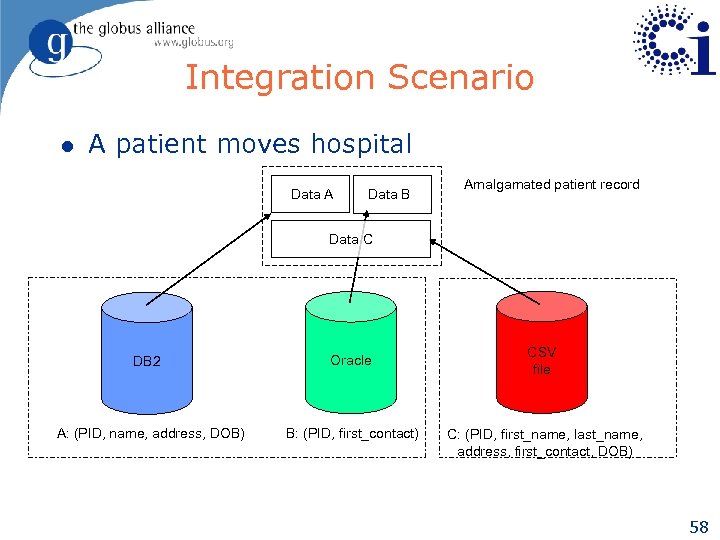

Integration Scenario
- A patient moves hospital
- Data A (DB2): (PID, name, address, DOB)
- Data B (Oracle): (PID, first_contact)
- Data C, amalgamated patient record (CSV file): (PID, first_name, last_name, address, first_contact, DOB)

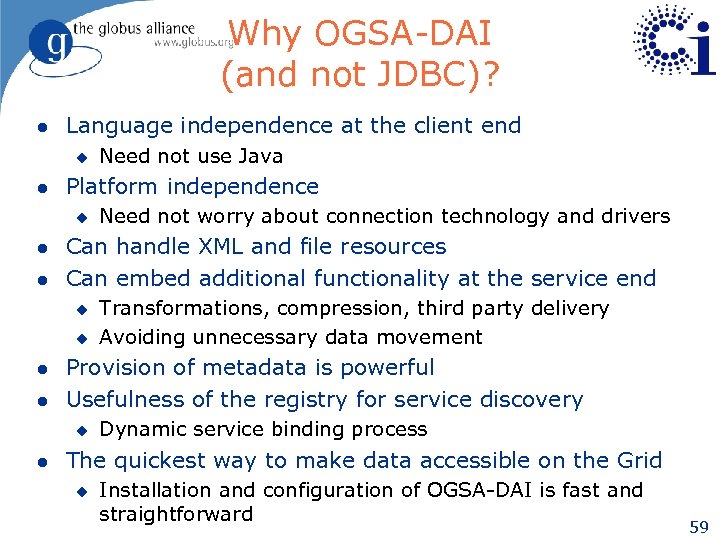

Why OGSA-DAI (and not JDBC)?
- Language independence at the client end
  - Need not use Java
- Platform independence
  - Need not worry about connection technology and drivers
- Can handle XML and file resources
- Can embed additional functionality at the service end
  - Transformations, compression, third party delivery
  - Avoiding unnecessary data movement
- Provision of metadata is powerful
- Usefulness of the registry for service discovery
  - Dynamic service binding process
- The quickest way to make data accessible on the Grid
  - Installation and configuration of OGSA-DAI is fast and straightforward

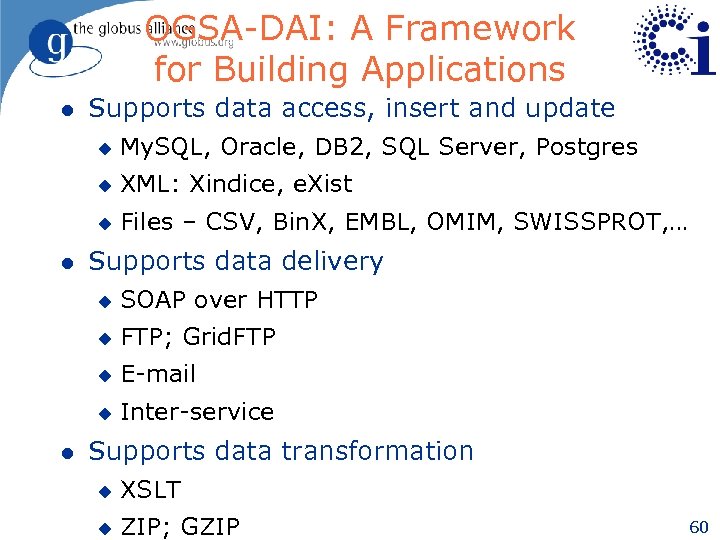

OGSA-DAI: A Framework for Building Applications
- Supports data access, insert and update
  - MySQL, Oracle, DB2, SQL Server, Postgres
  - XML: Xindice, eXist
  - Files: CSV, BinX, EMBL, OMIM, SWISSPROT, …
- Supports data delivery
  - SOAP over HTTP
  - FTP; GridFTP
  - E-mail
  - Inter-service
- Supports data transformation
  - XSLT
  - ZIP; GZIP

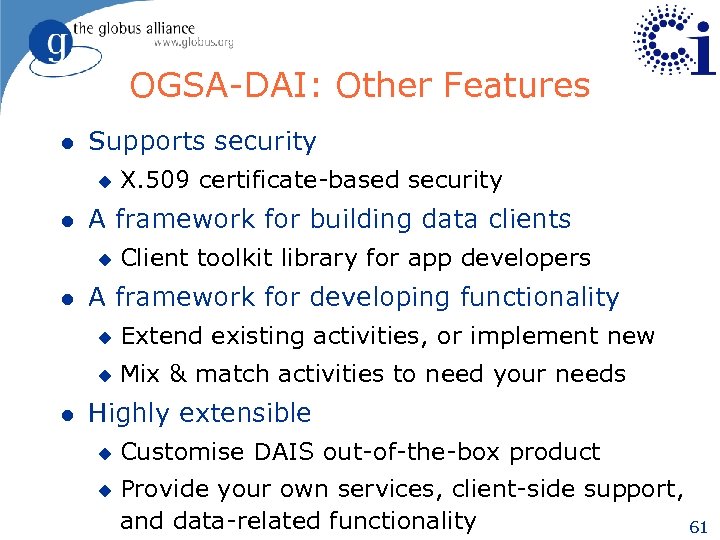

OGSA-DAI: Other Features
- Supports security
  - X.509 certificate-based security
- A framework for building data clients
  - Client toolkit library for app developers
- A framework for developing functionality
  - Extend existing activities, or implement new
  - Mix & match activities to meet your needs
- Highly extensible
  - Customise DAIS out-of-the-box product
  - Provide your own services, client-side support, and data-related functionality

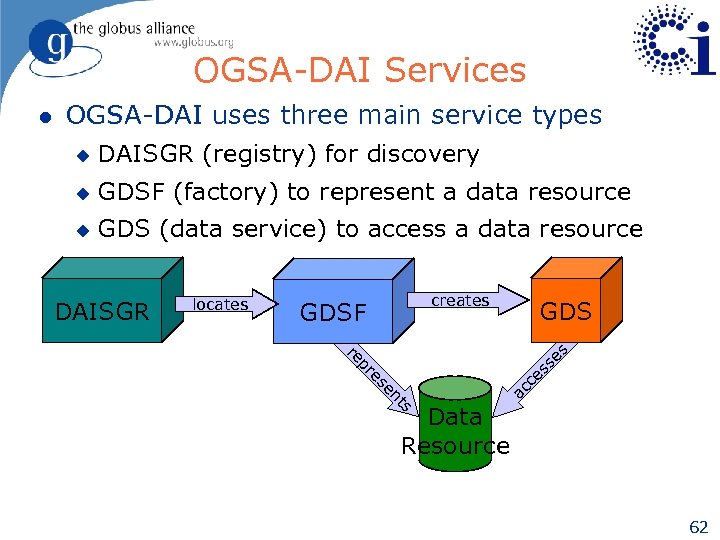

OGSA-DAI Services
- OGSA-DAI uses three main service types
  - DAISGR (registry) for discovery
  - GDSF (factory) to represent a data resource
  - GDS (data service) to access a data resource

(Figure: DAISGR locates GDSF; GDSF creates GDS and represents the data resource; GDS accesses the data resource)

Activities Express Tasks to be Performed by a GDS
- Three broad classes of activities
  - Statement
  - Transformations
  - Delivery
- Extensible
  - Easy to add new functionality
  - No modification to service interface required
  - Extensions operate within OGSA-DAI framework
- Functionality
  - Implemented at the service
  - Work where the data is (need not move data)

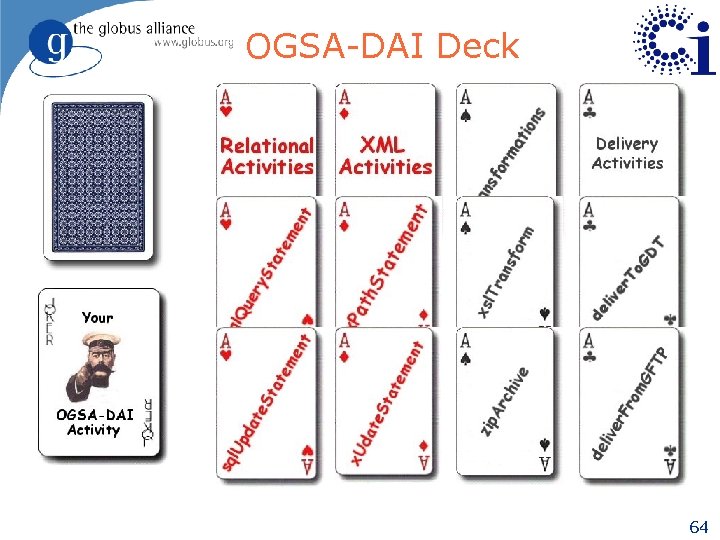

OGSA-DAI Deck

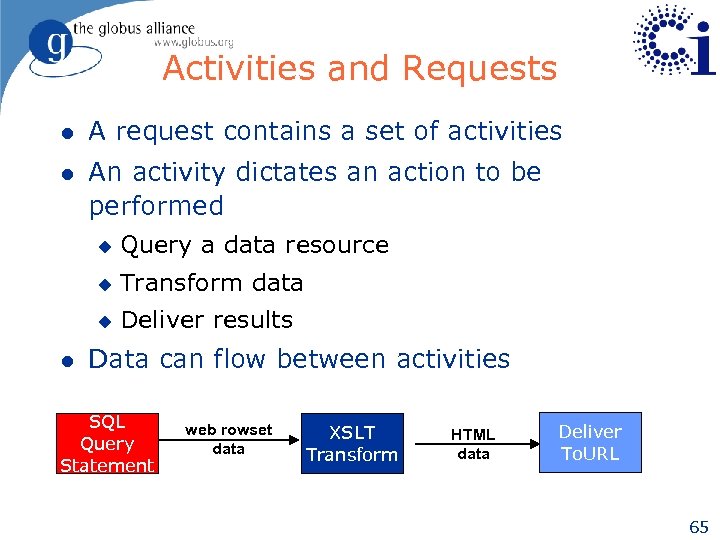

Activities and Requests
- A request contains a set of activities
- An activity dictates an action to be performed
  - Query a data resource
  - Transform data
  - Deliver results
- Data can flow between activities

(Figure: SQL Query Statement → web rowset data → XSLT Transform → HTML data → DeliverToURL)
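The flow of data between activities in one request can be sketched as a simple pipeline. This is not the OGSA-DAI API: the function names are hypothetical, an in-memory row list stands in for the data resource, a string template stands in for the XSLT transform, and a list stands in for the delivery target.

```python
import html


def sql_query_statement(rows):
    """Stand-in for a query activity: yields rows from an in-memory 'resource'."""
    def run(_unused_input):
        return list(rows)
    return run


def transform_to_html(rows):
    """Stand-in for a transformation activity: rows -> HTML table."""
    cells = "".join(
        f"<tr><td>{html.escape(str(name))}</td><td>{html.escape(str(value))}</td></tr>"
        for name, value in rows
    )
    return f"<table>{cells}</table>"


def deliver_to(sink):
    """Stand-in for a delivery activity: appends the data to a sink."""
    def run(data):
        sink.append(data)
        return data
    return run


def perform(request):
    """Run the activities in order, feeding each one's output to the next."""
    data = None
    for activity in request:
        data = activity(data)
    return data


delivered = []
request = [
    sql_query_statement([("protein", 42)]),
    transform_to_html,
    deliver_to(delivered),
]
print(perform(request))
```

All three steps run where the request is performed, so only the final result needs to leave the service.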

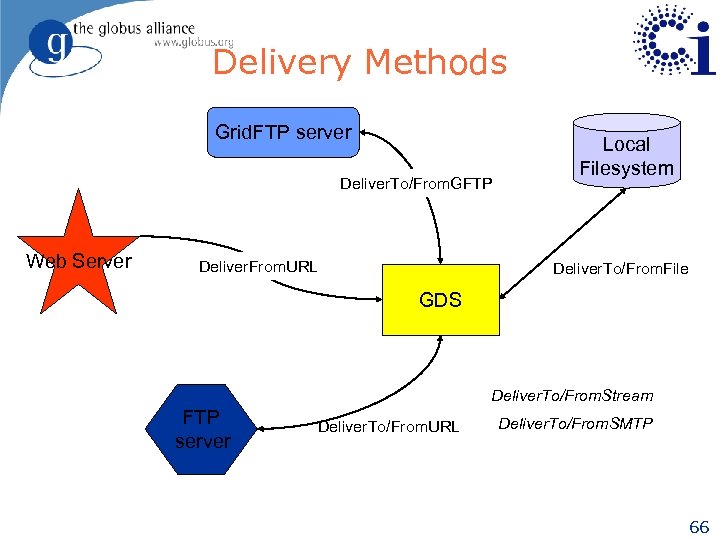

Delivery Methods
- GridFTP server: DeliverTo/FromGFTP
- Web server: DeliverFromURL
- Local filesystem: DeliverTo/FromFile
- GDS: DeliverTo/FromStream
- FTP server: DeliverTo/FromURL
- DeliverTo/FromSMTP

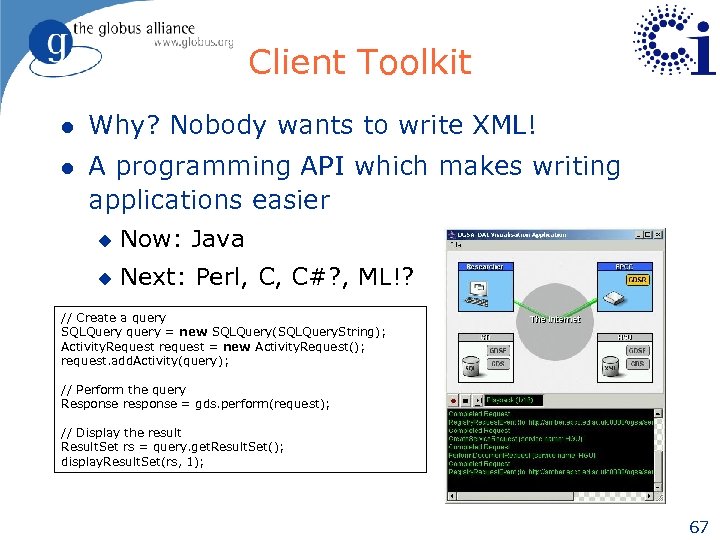

Client Toolkit
- Why? Nobody wants to write XML!
- A programming API which makes writing applications easier
  - Now: Java
  - Next: Perl, C, C#?, ML!?

    // Create a query
    SQLQuery query = new SQLQuery(SQLQueryString);
    ActivityRequest request = new ActivityRequest();
    request.addActivity(query);

    // Perform the query
    Response response = gds.perform(request);

    // Display the result
    ResultSet rs = query.getResultSet();
    displayResultSet(rs, 1);

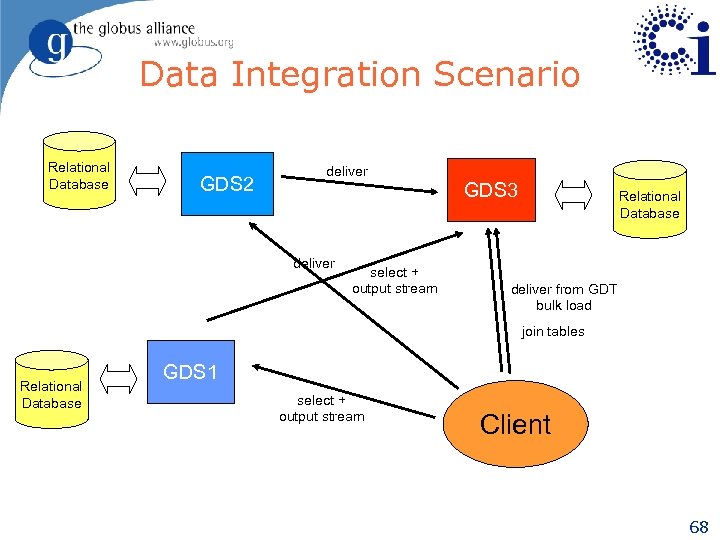

Data Integration Scenario
(Figure: a client and three data services, GDS1, GDS2, and GDS3, each with a relational database. Labels: select + output stream; deliver; deliver from GDT; bulk load; join tables)

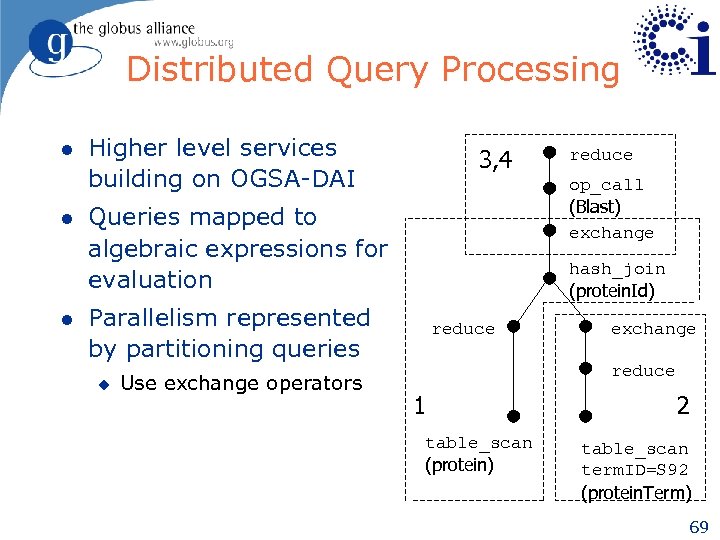

Distributed Query Processing
- Higher level services building on OGSA-DAI
- Queries mapped to algebraic expressions for evaluation
- Parallelism represented by partitioning queries
  - Use exchange operators

(Figure: query plan partitioned across nodes 1, 2 and 3, 4. Operators: table_scan (protein); table_scan termID=S92 (proteinTerm); reduce; exchange; hash_join (proteinId); op_call (Blast))

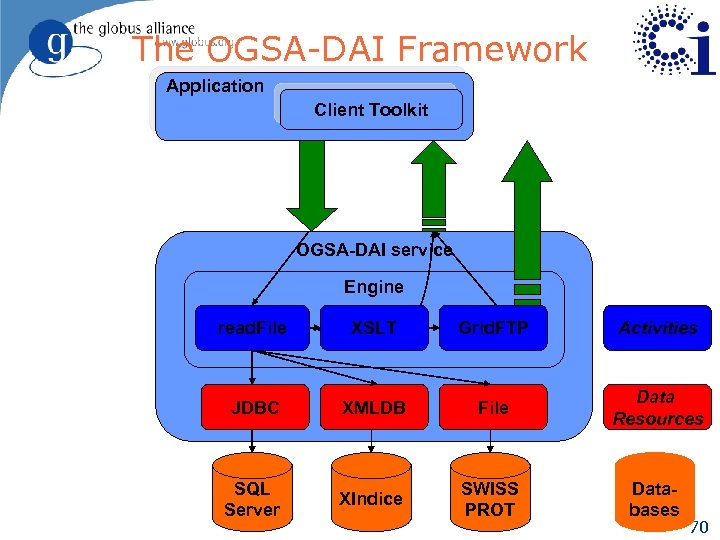

The OGSA-DAI Framework
- Application
- Client Toolkit
- OGSA-DAI service: Engine
- Activities: SQLQuery, readFile, XPath, XSLT, GZip, GridFTP
- Data Resources: JDBC, XMLDB, File
- Databases: MySQL, DB2, SQL Server, XIndice, SWISSPROT

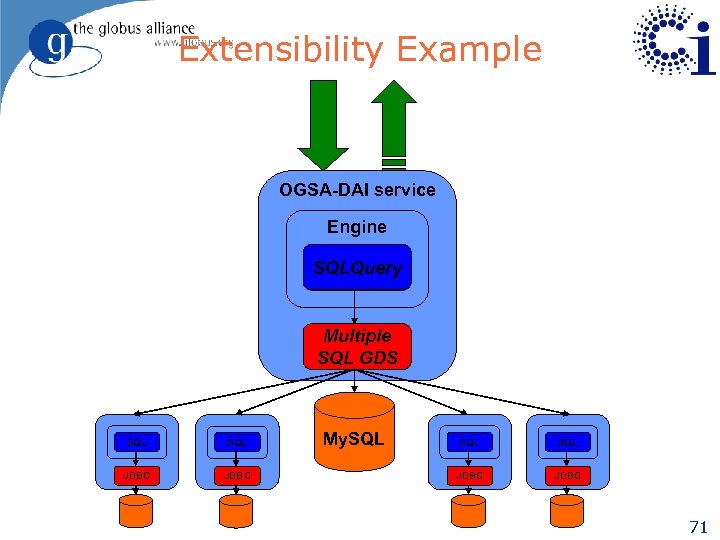

Extensibility Example
(Figure labels: OGSA-DAI service, Engine, SQLQuery, Multiple, JDBC, SQL, GDS, MySQL)

Overview
- Global data services
- Globus building blocks
- Building higher-level services
  - GRAM execution management service
  - Data replication service
  - Workflow management
- Application case studies
- Summary

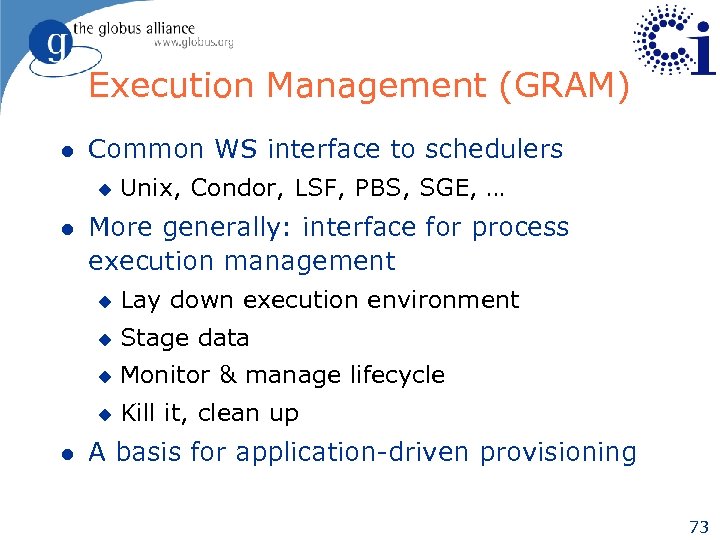

Execution Management (GRAM)
- Common WS interface to schedulers
  - Unix, Condor, LSF, PBS, SGE, …
- More generally: interface for process execution management
  - Lay down execution environment
  - Stage data
  - Monitor & manage lifecycle
  - Kill it, clean up
- A basis for application-driven provisioning

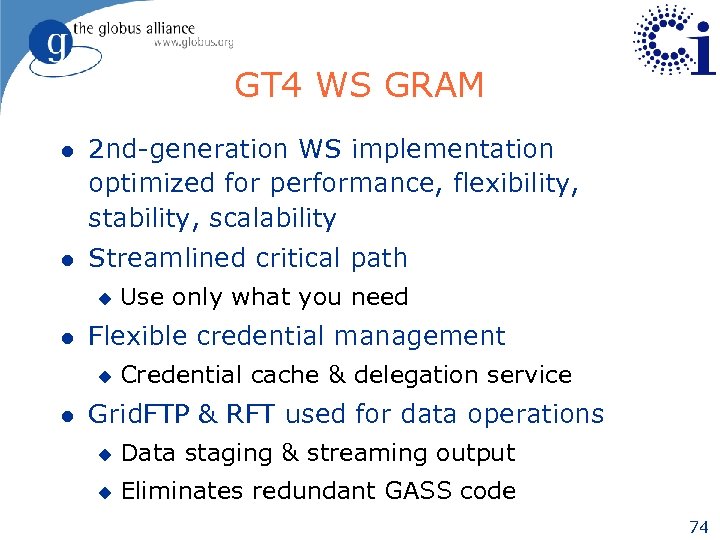

GT4 WS GRAM
- 2nd-generation WS implementation optimized for performance, flexibility, stability, scalability
- Streamlined critical path
  - Use only what you need
- Flexible credential management
  - Credential cache & delegation service
- GridFTP & RFT used for data operations
  - Data staging & streaming output
  - Eliminates redundant GASS code

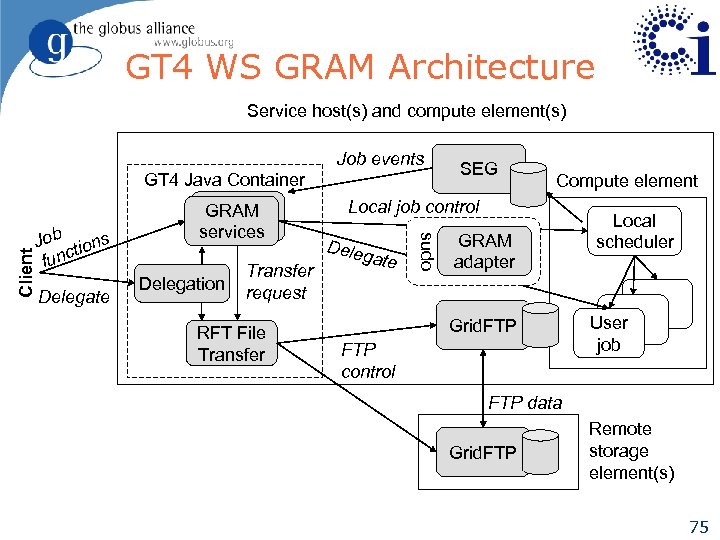

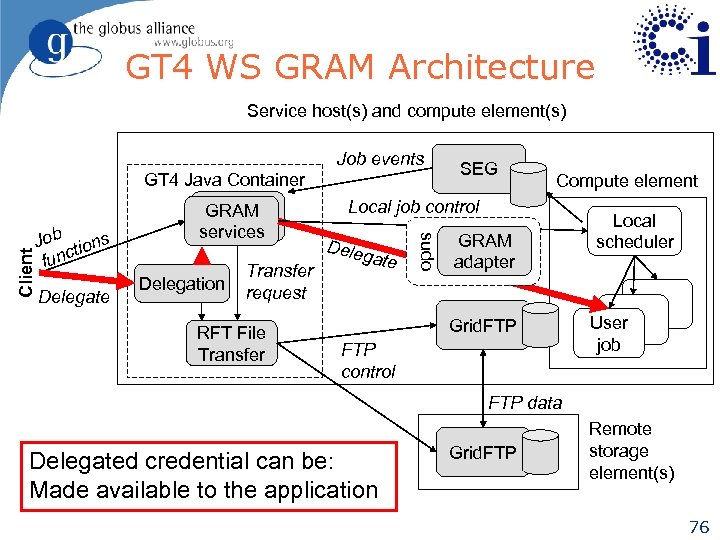

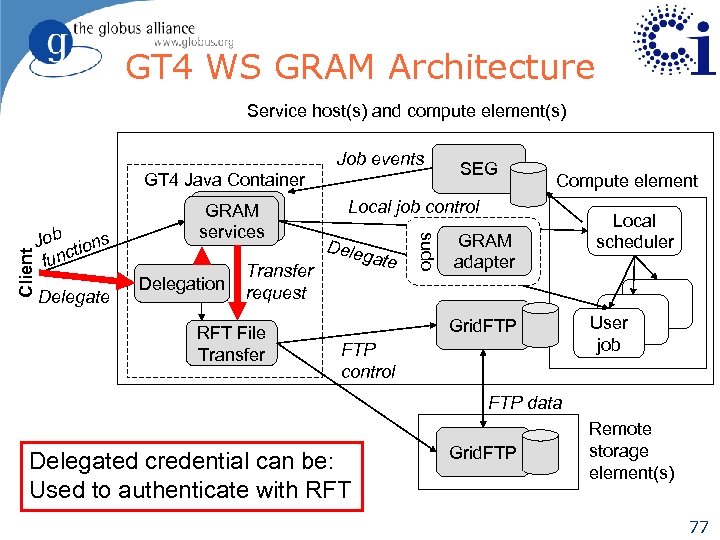

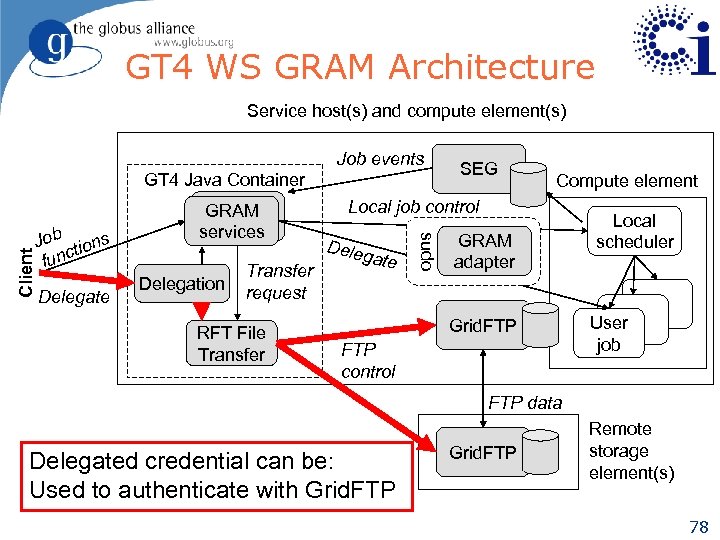

GT4 WS GRAM Architecture
(Figure: a client; service host(s) and compute element(s) running a GT4 Java Container with GRAM services, Delegation, and RFT File Transfer; SEG; GRAM adapter; sudo; local scheduler; user job on the compute element; GridFTP; remote storage element(s). Labels: Job functions, Delegate, Transfer request, Job events, Local job control, GridFTP control, FTP data)
- Delegated credential can be:
  - Made available to the application
  - Used to authenticate with RFT
  - Used to authenticate with GridFTP


Motivation for Data Replication Services
- Data-intensive applications need higher-level data management services that integrate lower-level Grid functionality
  - Efficient data transfer (GridFTP, RFT)
  - Replica registration and discovery (RLS)
  - Eventually validation of replicas, consistency management, etc.
- Provide a suite of general, configurable, higher-level data management services
  - Data Replication Service is the first of these

Data Replication Service
- Design based on the publication component of the Lightweight Data Replicator system
  - Scott Koranda, U. Wisconsin Milwaukee
- Ensures that specified files exist at a site
  - Compares contents of a local file catalog with a list of desired files
  - Transfers copies of missing files from other locations
  - Registers them in the local file catalog
- Uses a pull-based model
  - Localizes decision making; load balancing
  - Minimizes dependency on outside services
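The compare, transfer, register cycle can be sketched as below. The function and parameter names are hypothetical, dictionaries stand in for the local catalog and the replica lookup, and the `transfer` callable stands in for RFT/GridFTP; picking the first known source is an arbitrary simplification.

```python
def replicate_missing(desired, local_catalog, remote_replicas, transfer):
    """Pull files that are desired but absent locally, then register them.

    desired:         list of logical file names wanted at this site
    local_catalog:   dict logical name -> local target (updated in place)
    remote_replicas: dict logical name -> list of remote source URLs
    transfer:        callable(name, source_url) -> local target URL
    Returns the logical names for which no remote replica was found.
    """
    missing = [name for name in desired if name not in local_catalog]
    unavailable = []
    for name in missing:
        sources = remote_replicas.get(name, [])
        if not sources:
            unavailable.append(name)
            continue
        # Simplification: take the first known source.
        local_catalog[name] = transfer(name, sources[0])
    return unavailable


catalog = {"f1": "file:///data/f1"}
remote = {"f2": ["gsiftp://remote.example.org/store/f2"]}
not_found = replicate_missing(
    desired=["f1", "f2", "f3"],
    local_catalog=catalog,
    remote_replicas=remote,
    transfer=lambda name, source: f"file:///data/{name}",
)
print(catalog, not_found)
```

The site itself decides what to fetch and from where, which is what makes the model pull-based.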

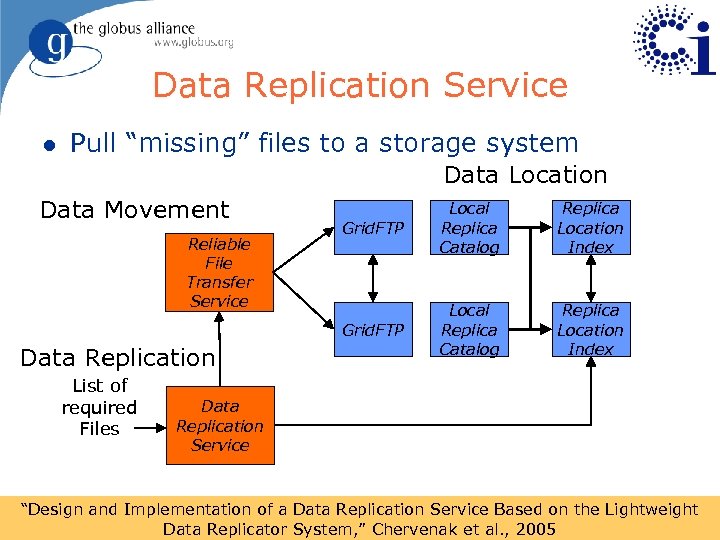

Data Replication Service
- Pull “missing” files to a storage system

(Figure: the Data Replication Service takes a list of required files. Data location: Replica Location Index, Local Replica Catalog. Data movement: Reliable File Transfer Service, GridFTP. Data replication: Data Replication Service)

“Design and Implementation of a Data Replication Service Based on the Lightweight Data Replicator System,” Chervenak et al., 2005

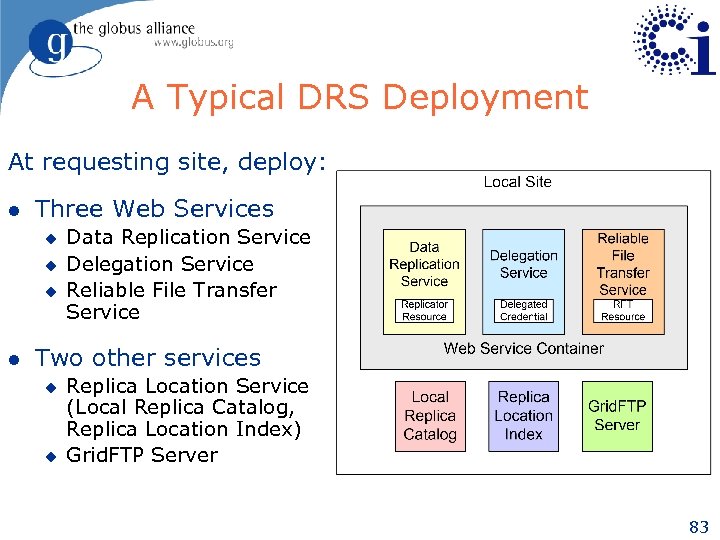

A Typical DRS Deployment
At requesting site, deploy:
- Three Web Services
  - Data Replication Service
  - Delegation Service
  - Reliable File Transfer Service
- Two other services
  - Replica Location Service (Local Replica Catalog, Replica Location Index)
  - GridFTP Server

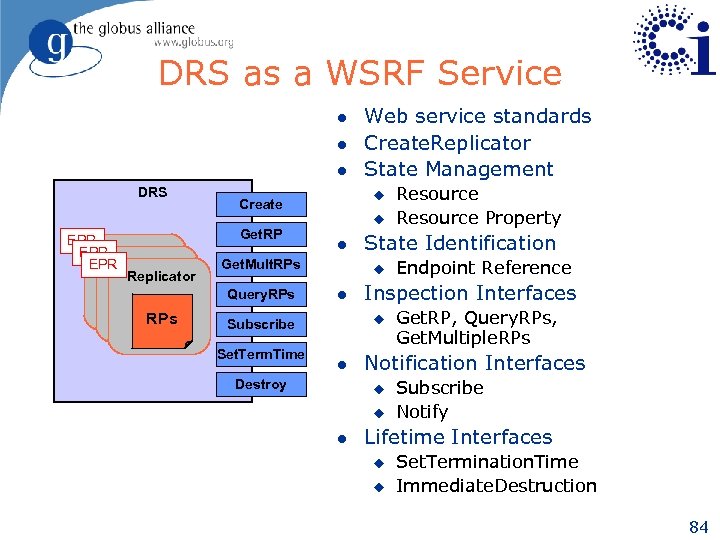

DRS as a WSRF Service
- Web service standards
- State Management
  - Resource Property
- State Identification
  - Endpoint Reference
- Inspection Interfaces
  - GetRP, QueryRPs, GetMultipleRPs
- Notification Interfaces
  - Subscribe
  - Notify
- Lifetime Interfaces
  - SetTerminationTime
  - ImmediateDestruction

(Figure labels: DRS, CreateReplicator, EPR, Replicator, Create, GetRP, GetMultRPs, QueryRPs, Subscribe, SetTermTime, Destroy)

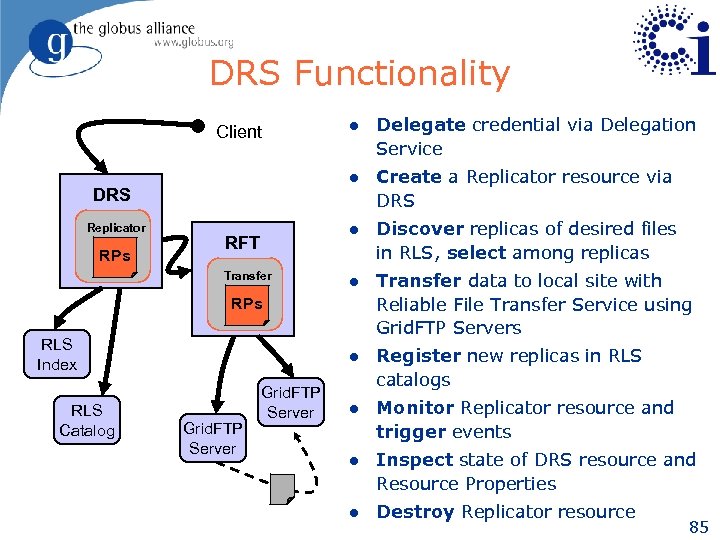

DRS Functionality
- Delegate credential via Delegation Service
- Create a Replicator resource via DRS
- Discover replicas of desired files in RLS, select among replicas
- Transfer data to local site with Reliable File Transfer Service using GridFTP servers
- Register new replicas in RLS catalogs
- Monitor Replicator resource and trigger events
- Inspect state of DRS resource and Resource Properties
- Destroy Replicator resource

(Figure labels: Client, Delegation, DRS, Replicator, RFT, Transfer, RPs, RLS Index, RLS Catalog, GridFTP Server)

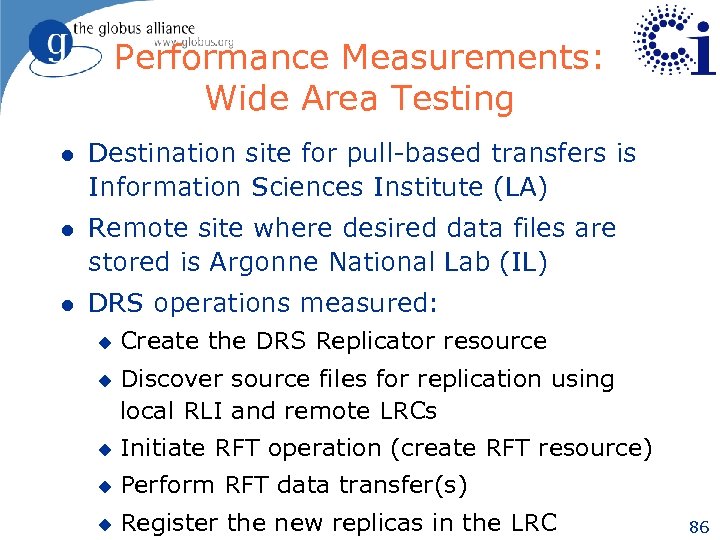

Performance Measurements: Wide Area Testing
- Destination site for pull-based transfers is Information Sciences Institute (LA)
- Remote site where desired data files are stored is Argonne National Lab (IL)
- DRS operations measured:
  - Create the DRS Replicator resource
  - Discover source files for replication using local RLI and remote LRCs
  - Initiate RFT operation (create RFT resource)
  - Perform RFT data transfer(s)
  - Register the new replicas in the LRC

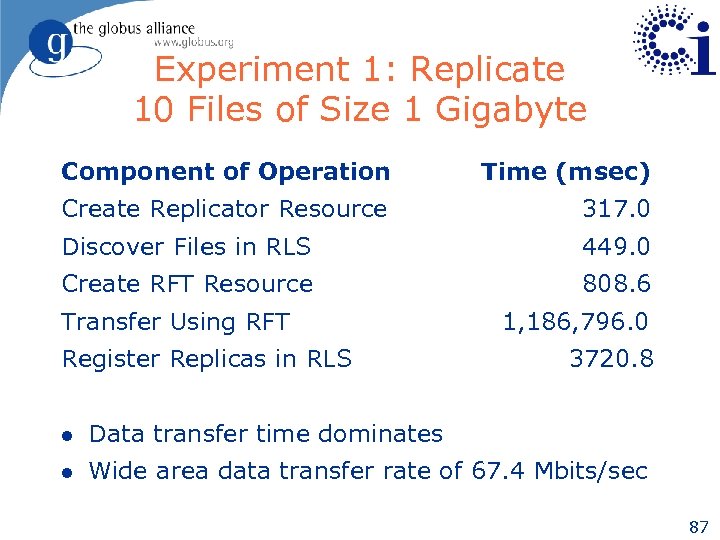

Experiment 1: Replicate 10 Files of Size 1 Gigabyte

| Component of operation | Time (msec) |
|---|---|
| Create Replicator Resource | 317.0 |
| Discover Files in RLS | 449.0 |
| Create RFT Resource | 808.6 |
| Transfer Using RFT | 1,186,796.0 |
| Register Replicas in RLS | 3720.8 |

- Data transfer time dominates
- Wide area data transfer rate of 67.4 Mbits/sec
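The quoted rate can be checked from the transfer time in the table. The sketch assumes decimal units (1 gigabyte = 10^9 bytes, 1 Mbit = 10^6 bits), which is the convention that reproduces the figure on the slide; the function name is hypothetical.

```python
def transfer_rate_mbits(n_files, bytes_per_file, elapsed_msec):
    """Average rate in Mbit/s (10^6 bits per second) for a batch of files."""
    bits = n_files * bytes_per_file * 8
    seconds = elapsed_msec / 1000.0
    return bits / seconds / 1e6


# 10 files of 1 GB (assumed 10**9 bytes) moved in 1,186,796.0 msec.
rate = transfer_rate_mbits(n_files=10, bytes_per_file=10**9, elapsed_msec=1_186_796.0)
print(f"{rate:.1f} Mbit/s")
```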

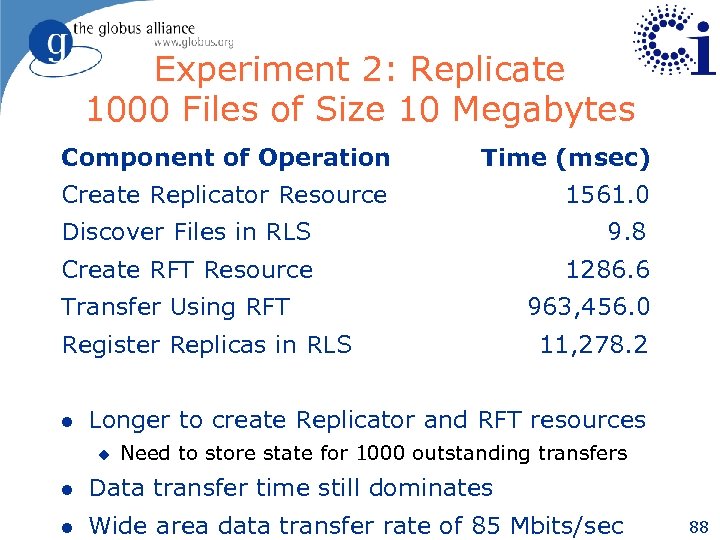

Experiment 2: Replicate 1000 Files of Size 10 Megabytes

| Component of operation | Time (msec) |
|---|---|
| Create Replicator Resource | 1561.0 |
| Discover Files in RLS | 9.8 |
| Create RFT Resource | 1286.6 |
| Transfer Using RFT | 963,456.0 |
| Register Replicas in RLS | 11,278.2 |

- Longer to create Replicator and RFT resources
  - Need to store state for 1000 outstanding transfers
- Data transfer time still dominates
- Wide area data transfer rate of 85 Mbits/sec

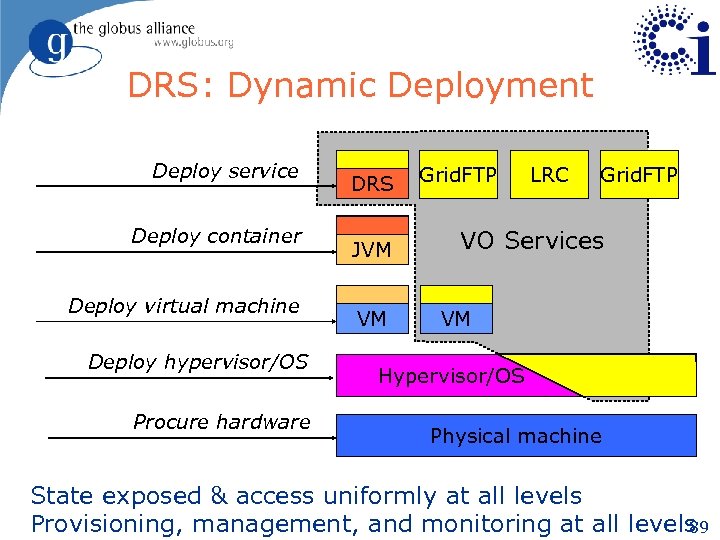

DRS: Dynamic Deployment
- Deploy service (DRS, GridFTP, LRC, VO services)
- Deploy container (JVM)
- Deploy virtual machine (VM)
- Deploy hypervisor/OS
- Procure hardware (physical machine)

State exposed & accessed uniformly at all levels
Provisioning, management, and monitoring at all levels


Data-Intensive Workflow (www.griphyn.org)
Enhance scientific productivity through…
- Discovery, application and management of data and processes at petabyte scale
- Using a worldwide data grid as a scientific workstation

The key to this approach is Virtual Data: creating and managing datasets through workflow “recipes” and provenance recording.

What Must We “Virtualize” to Compute on the Grid?

- Location-independent computing: represent all workflow in abstract terms
- Declarations not tied to specific entities:
  - Sites
  - File systems
  - Schedulers
- Failures: automated retry for data server and execution site unavailability
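
A small sketch may help show what "abstract terms" and "automated retry" mean in practice. The job below names only logical files; a site-specific binding is applied at run time, and a failure at one site triggers a retry at the next. All names (the site catalog, `bind`, `run_with_retry`, the `lfn:` prefix) are hypothetical and are not VDS interfaces.

```python
# Abstract job: logical file names only, no site, file system, or scheduler.
job = {"name": "normalize", "inputs": ["lfn:raw.img"], "outputs": ["lfn:norm.img"]}

# Hypothetical site catalog holding the site-specific details.
SITE_CATALOG = {
    "siteA": {"scheduler": "pbs", "storage": "/scratch/a"},
    "siteB": {"scheduler": "condor", "storage": "/data/b"},
}

def bind(job, site):
    """Turn an abstract job into a concrete plan for one site."""
    info = SITE_CATALOG[site]
    def to_path(lfn):
        return info["storage"] + "/" + lfn.split(":", 1)[1]
    return {
        "site": site,
        "scheduler": info["scheduler"],
        "inputs": [to_path(name) for name in job["inputs"]],
        "outputs": [to_path(name) for name in job["outputs"]],
    }

def run_with_retry(job, sites, run_at_site, max_attempts=3):
    """Try sites in turn until one succeeds or attempts run out."""
    errors = []
    for attempt in range(max_attempts):
        site = sites[attempt % len(sites)]
        try:
            return run_at_site(job, site)
        except RuntimeError as exc:
            errors.append((site, str(exc)))
    raise RuntimeError(f"{job['name']} failed: {errors}")

def fake_run(job, site):
    # Stand-in executor: siteA is always unavailable in this demo.
    if site == "siteA":
        raise RuntimeError("execution site unavailable")
    return bind(job, site)

plan = run_with_retry(job, ["siteA", "siteB"], fake_run)
print(plan)
```

The job description never changes; only the binding step knows about storage paths and schedulers, which is what allows the retry to move to a different site.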

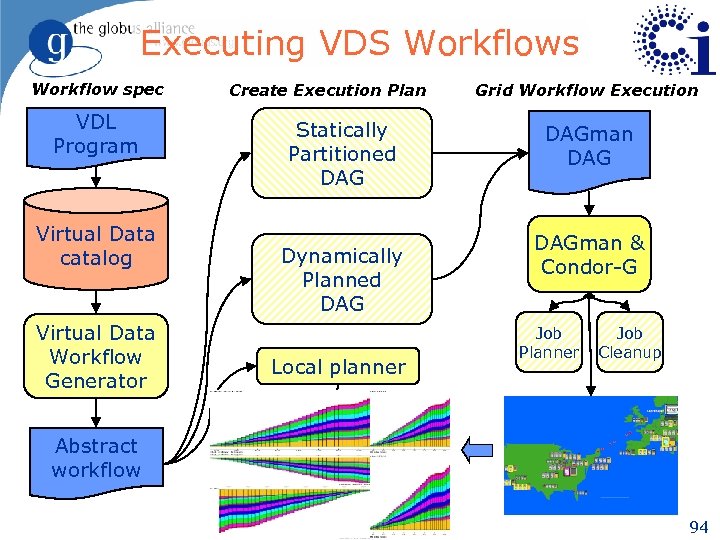

Executing VDS Workflows

[Diagram labels, grouped by stage. Workflow spec: VDL program, Virtual Data Catalog, Virtual Data Workflow Generator, abstract workflow. Create Execution Plan: job planner, job cleanup, local planner, statically partitioned DAG, dynamically planned DAG. Grid Workflow Execution: DAGMan & Condor-G.]
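
The central idea in this diagram is that a workflow becomes a DAG whose jobs run only after their dependencies finish. The sketch below shows that ordering step with Python's standard `graphlib` module (Python 3.9+). The job names are invented for illustration, and this is not how DAGMan or the VDS planners are implemented.

```python
from graphlib import TopologicalSorter

# Hypothetical workflow: each job maps to the set of jobs it depends on.
workflow = {
    "stage_in": set(),
    "job_a": {"stage_in"},
    "job_b": {"stage_in"},
    "merge": {"job_a", "job_b"},
    "cleanup": {"merge"},
}

def ready_batches(dag):
    """Group jobs into batches; jobs within a batch have no mutual dependencies."""
    sorter = TopologicalSorter(dag)
    sorter.prepare()  # raises graphlib.CycleError if the graph has a cycle
    batches = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())
        batches.append(ready)
        sorter.done(*ready)  # mark the batch finished so successors become ready
    return batches

batches = ready_batches(workflow)
for number, batch in enumerate(batches, start=1):
    print(number, batch)
```

Each batch could be submitted in parallel; a dynamic planner would differ mainly in choosing the execution site for a batch only when it becomes ready.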

OSG: The “target chip” for VDS Workflows

Supported by the National Science Foundation and the Department of Energy.

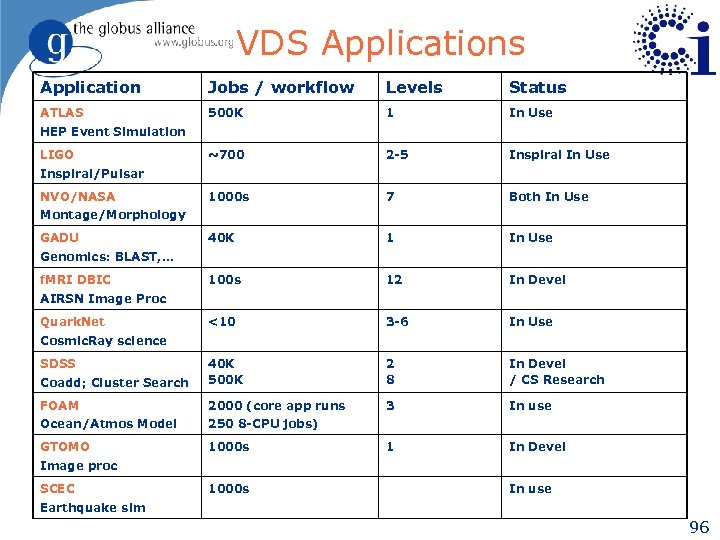

VDS Applications

| Application | Jobs / workflow | Levels | Status |
| --- | --- | --- | --- |
| ATLAS HEP Event Simulation | 500K | 1 | In Use |
| LIGO Inspiral/Pulsar | ~700 | 2-5 | Inspiral In Use |
| NVO/NASA Montage/Morphology | 1000s | 7 | Both In Use |
| GADU Genomics: BLAST, … | 40K | 1 | In Use |
| fMRI DBIC AIRSN Image Proc | 100s | 12 | In Devel |
| QuarkNet CosmicRay science | <10 | 3-6 | In Use |
| SDSS Coadd; Cluster Search | 40K; 500K | 2; 8 | In Devel / CS Research |
| FOAM Ocean/Atmos Model | 2000 (core app runs 250 8-CPU jobs) | 3 | In Use |
| GTOMO Image proc | 1000s | 1 | In Devel |
| SCEC Earthquake sim | 1000s | | In Use |

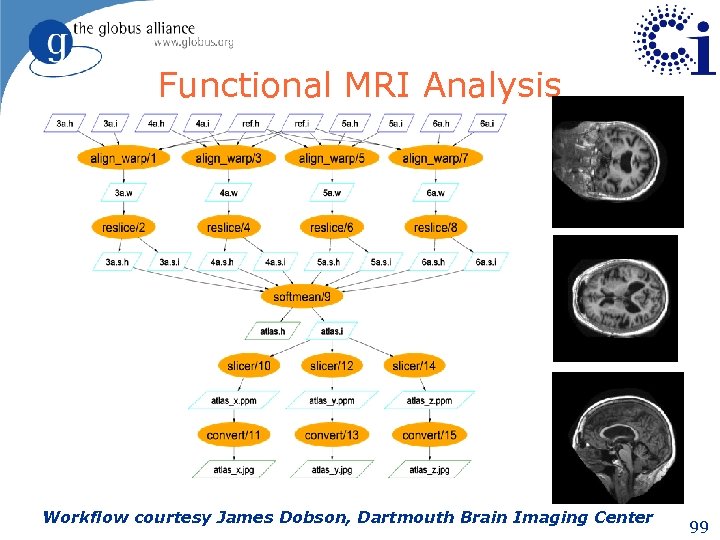

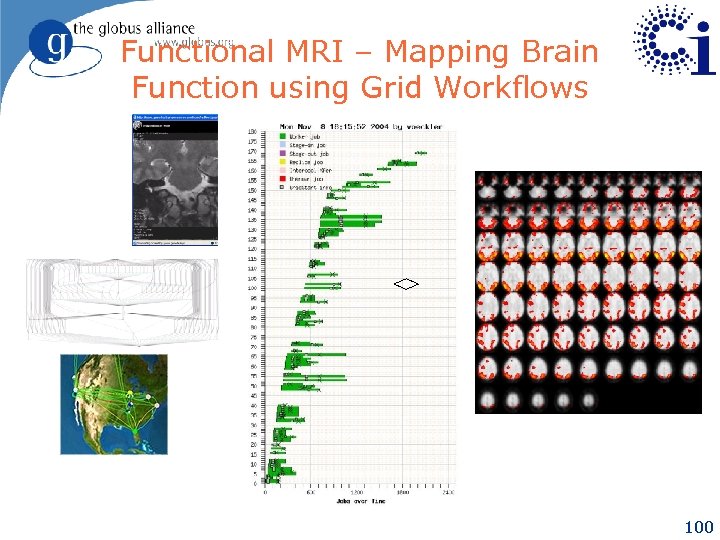

A Case Study: Functional MRI

- Problem: “spatial normalization” of images to prepare data from fMRI studies for analysis
- Target community is approximately 60 users at Dartmouth Brain Imaging Center
- Wish to share data and methods across the country with researchers at Berkeley
- Process data from arbitrary user and archival directories in the center’s AFS space; bring data back to the same directories
- Grid needs to be transparent to the users: literally, “Grid as a Workstation”

Functional MRI Analysis

Workflow courtesy James Dobson, Dartmouth Brain Imaging Center

Functional MRI: Mapping Brain Function Using Grid Workflows

Overview

- Global data services
- Building blocks
- Case studies
  - Earth System Grid
  - Southern California Earthquake Center
  - Cancer Bioinformatics Grid
  - AstroPortal stacking service
  - GADU bioinformatics service
- Summary

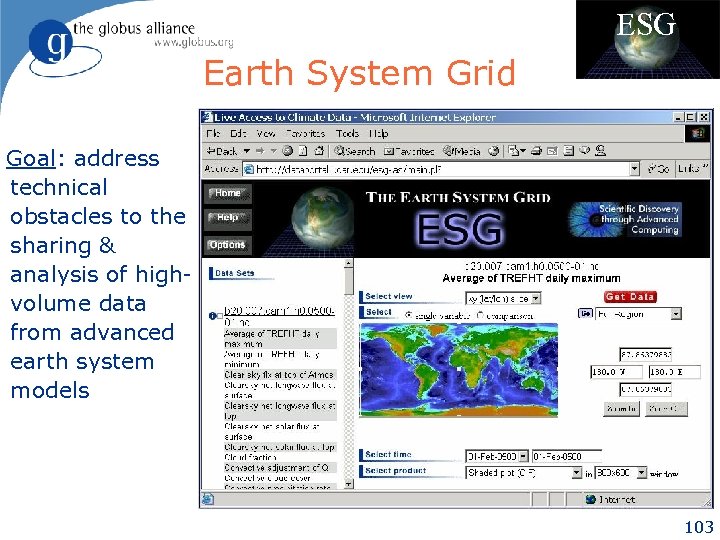

Earth System Grid (ESG)

Goal: address technical obstacles to the sharing & analysis of high-volume data from advanced earth system models

ESG Requirements

- Move data a minimal amount; keep it close to its computational point of origin when possible
- When we must move data, do it fast and with minimum human intervention
- Keep track of what we have, particularly what’s on deep storage
- Make use of the facilities available at multiple sites (centralization is not an option)
- Data must be easy to find and access using standard Web browsers

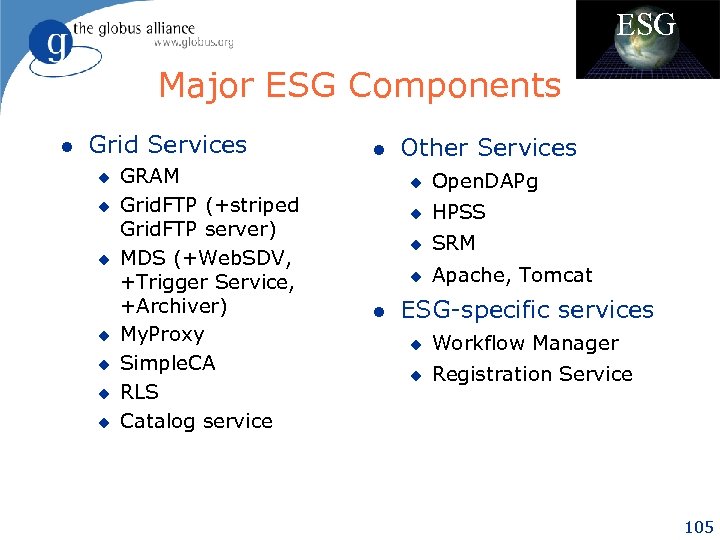

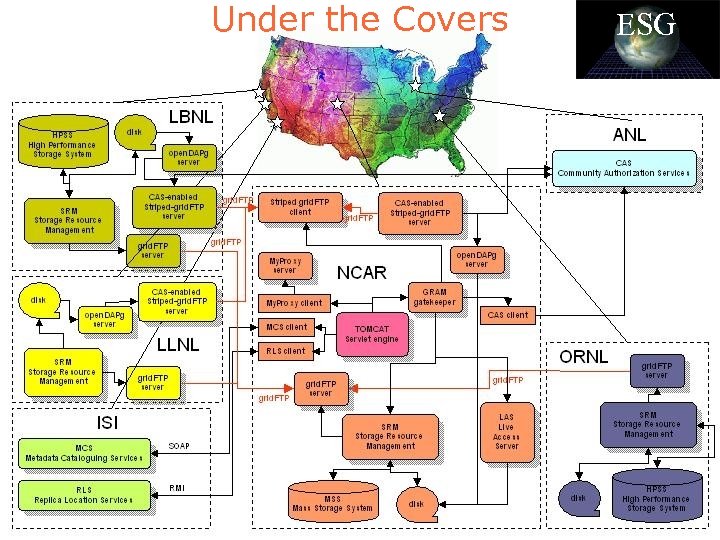

Major ESG Components

- Grid Services
  - GRAM
  - GridFTP (+striped GridFTP server)
  - MDS (+WebSDV, +Trigger Service, +Archiver)
  - MyProxy
  - SimpleCA
  - RLS
  - Catalog service
- Other Services
  - OpenDAPg
  - HPSS
  - SRM
  - Apache, Tomcat
- ESG-specific services
  - Workflow Manager
  - Registration Service

ESG: Under the Covers

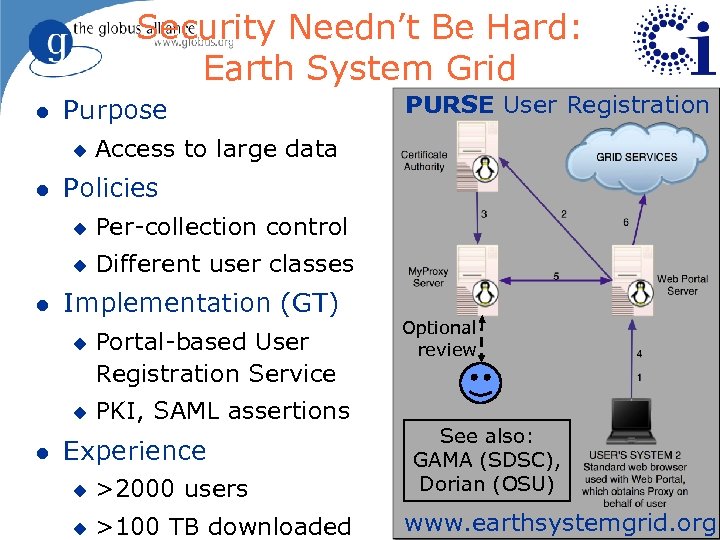

Security Needn’t Be Hard: Earth System Grid

- Purpose
  - Access to large data
- Policies
  - Per-collection control
  - Different user classes
- Implementation (GT)
  - Portal-based User Registration Service (PURSE)
  - PKI, SAML assertions
- Experience
  - >2000 users
  - >100 TB downloaded

[Diagram: PURSE user registration, with optional review]

See also: GAMA (SDSC), Dorian (OSU)

www.earthsystemgrid.org
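
The two policy items, per-collection control and different user classes, amount to a lookup from collection to the classes allowed to read it. The sketch below illustrates only that idea. The collection names, class names, and `may_download` function are hypothetical, and it does not model PURSE, PKI, or SAML assertions.

```python
# Hypothetical policy: which user classes may download from each collection.
POLICY = {
    "public-model-output": {"registered", "analyst"},
    "restricted-runs": {"analyst"},
}

def may_download(user_classes, collection, policy=POLICY):
    """Allow access if the user holds at least one class permitted for the collection.

    Unknown collections are denied by default.
    """
    allowed = policy.get(collection, set())
    return bool(allowed & set(user_classes))

print(may_download({"registered"}, "public-model-output"))
print(may_download({"registered"}, "restricted-runs"))
```

Denying unknown collections by default is a design choice made here for safety; the slide does not say how ESG handles that case.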

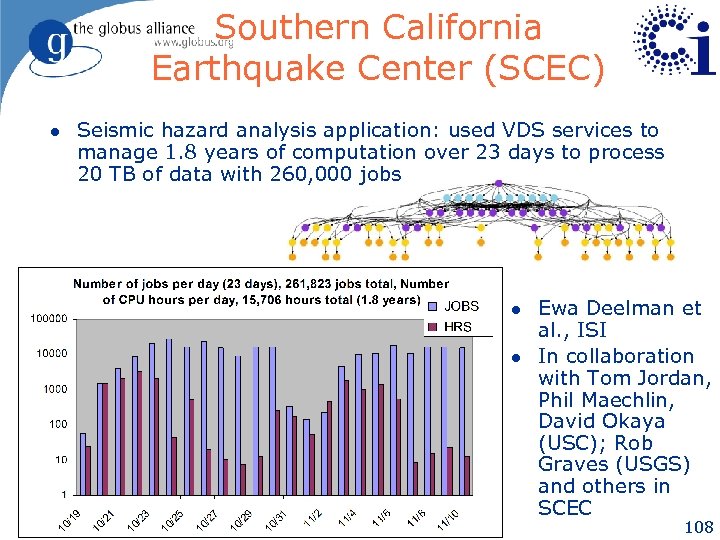

Southern California Earthquake Center (SCEC)

- Seismic hazard analysis application: used VDS services to manage 1.8 years of computation over 23 days to process 20 TB of data with 260,000 jobs
- Ewa Deelman et al., ISI
- In collaboration with Tom Jordan, Phil Maechling, David Okaya (USC); Rob Graves (USGS); and others in SCEC
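
Some back-of-the-envelope figures follow from the numbers on this slide. The sketch assumes that "1.8 years of computation" means aggregate CPU time, that a year is 365 days, and that 1 TB is 10^12 bytes. None of these conventions is stated on the slide, so the results are rough averages only.

```python
CPU_YEARS = 1.8      # assumption: aggregate CPU time
WALL_DAYS = 23
JOBS = 260_000
DATA_TB = 20

cpu_days = CPU_YEARS * 365                 # assumption: 365-day year
avg_concurrency = cpu_days / WALL_DAYS     # CPUs busy on average
avg_job_seconds = cpu_days * 86_400 / JOBS
avg_mb_per_job = DATA_TB * 1e6 / JOBS      # assumption: 1 TB = 10^6 MB

print(f"average concurrency: {avg_concurrency:.1f} CPUs")
print(f"average job length:  {avg_job_seconds:.0f} s")
print(f"average data/job:    {avg_mb_per_job:.1f} MB")
```

Under these assumptions the run kept roughly 29 processors busy on average, with jobs lasting a few minutes each, which is the kind of many-small-jobs workload where automated workflow management matters.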

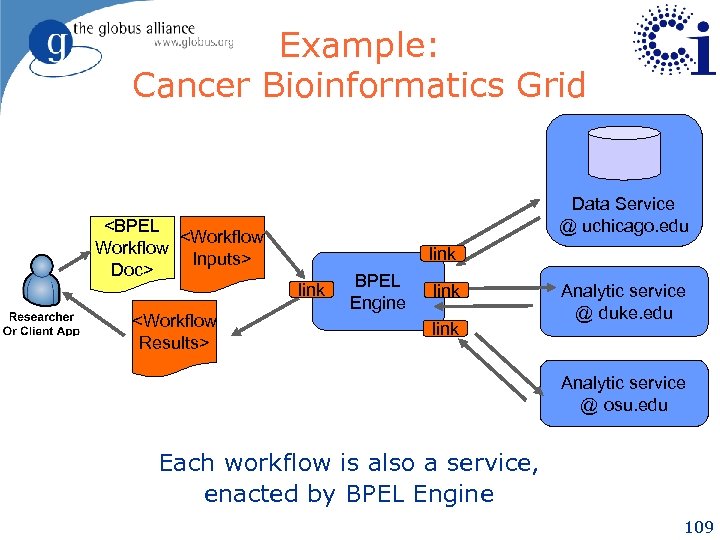

Example: Cancer Bioinformatics Grid

[Diagram: a BPEL workflow document and workflow inputs go to a BPEL Engine, which links to a data service @ uchicago.edu and analytic services @ duke.edu and @ osu.edu, and returns workflow results.]

Each workflow is also a service, enacted by the BPEL Engine
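
The statement that each workflow is also a service means a composed workflow can be used wherever a single service can, including as a step inside another workflow. The sketch below shows that property with plain Python callables. It is an analogy only: it is not BPEL, and the service functions are made-up stand-ins for the remote data and analytic services.

```python
def make_workflow(*steps):
    """Compose services into a workflow that is itself callable like a service."""
    def workflow(payload):
        for step in steps:
            payload = step(payload)
        return payload
    return workflow

# Hypothetical stand-ins for remote services.
def data_service(query):
    return [3.0, 1.0, 2.0]          # pretend result for any query

def normalize_service(values):
    total = sum(values)
    return [value / total for value in values]

def rank_service(values):
    return sorted(values, reverse=True)

# A workflow built from two services...
analysis = make_workflow(data_service, normalize_service)
# ...used as a single step inside a larger workflow.
pipeline = make_workflow(analysis, rank_service)

result = pipeline("example query")
print(result)
```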

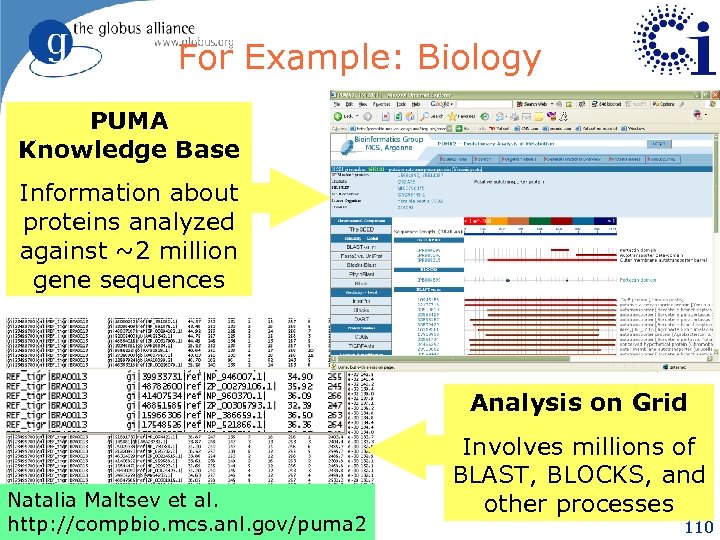

For Example: Biology

PUMA Knowledge Base: information about proteins analyzed against ~2 million gene sequences

Analysis on Grid: involves millions of BLAST, BLOCKS, and other processes

Natalia Maltsev et al., http://compbio.mcs.anl.gov/puma2

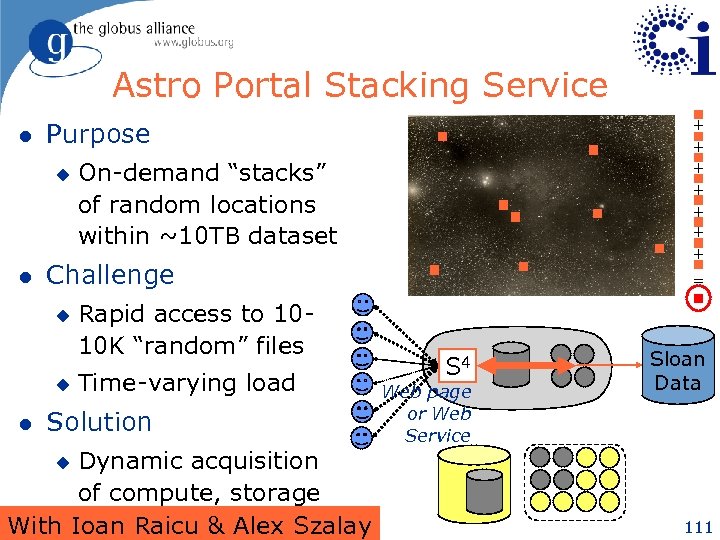

Astro Portal Stacking Service

- Purpose
  - On-demand “stacks” of random locations within ~10 TB dataset
- Challenge
  - Rapid access to 10 to 10K “random” files
  - Time-varying load
- Solution
  - Dynamic acquisition of compute, storage

[Diagram: cutouts from Sloan data are added together (+ + + + =) into a stack, requested through a Web page or Web Service]

With Ioan Raicu & Alex Szalay
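
Stacking, as drawn in the diagram, is a pixel-wise sum of small image cutouts. The sketch below shows that core operation on plain nested lists, assuming the cutouts have already been extracted and aligned to the same size. Reading the files and locating the sky positions, which is where the data-access challenge lies, is not modeled, and the `stack` function is illustrative rather than the AstroPortal code.

```python
def stack(cutouts):
    """Return the pixel-wise sum of equally sized 2-D cutouts (lists of rows)."""
    if not cutouts:
        raise ValueError("need at least one cutout")
    rows, cols = len(cutouts[0]), len(cutouts[0][0])
    total = [[0.0] * cols for _ in range(rows)]
    for image in cutouts:
        if len(image) != rows or any(len(row) != cols for row in image):
            raise ValueError("all cutouts must have the same shape")
        for r in range(rows):
            for c in range(cols):
                total[r][c] += image[r][c]
    return total

cutouts = [
    [[1, 2], [3, 4]],
    [[10, 20], [30, 40]],
]
stacked = stack(cutouts)
print(stacked)  # [[11.0, 22.0], [33.0, 44.0]]
```

Because each cutout contributes independently to the sum, the work can be split across many nodes and the partial sums combined, which fits the dynamic acquisition of compute and storage named under "Solution".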

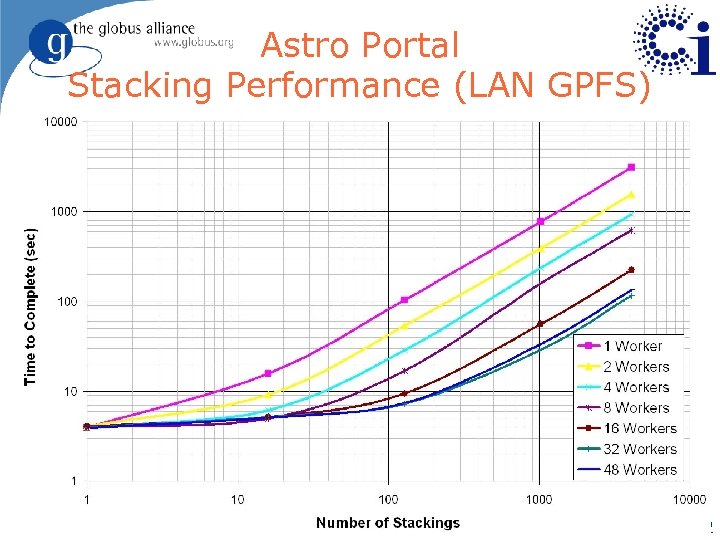

Astro Portal Stacking Performance (LAN GPFS)

Summary

- Global data services
  - Connecting data with people & computers, often on a large scale
- Globus building blocks
  - Core Web Services & security; enabling mechanisms for data access & manipulation
- Building higher-level services
  - E.g., Data replication service
- Application case studies
- Summary

For More Information

- Globus Alliance
  - www.globus.org
- Dev.Globus
  - dev.globus.org
- Global Grid Forum
  - www.ggf.org
- TeraGrid
  - www.teragrid.org
- Open Science Grid
  - www.opensciencegrid.org
- Background information
  - www.mcs.anl.gov/~foster

[Book cover: 2nd Edition, www.mkp.com/grid2]
