gist improvement_msg.pptx

- Количество слайдов: 28

Gi. ST node fan-out optimization Andrey Borodin Software engineer at Octonica Associate Professor at URFU

Beckman Report On Database Stonebraker’s report FIY: MS SQL SAP’s Columnar DB My. SQL With enhancements VmwarePivotal project

Stonebraker’s point • Old databases are optimized for efficiency of a slowest device • Currently we have plenty of RAM Other points: • Main memory format for storage • Hot tuples in RAM • Fail transaction on “miss”, heat tuples, rerun

Derivations from Stonebraker’s points 1. RAM and CPUs are used inefficiently 2. Tradeoff: Disk format for buffer

2. Tradeoff: Disk format for buffer If we choose NO DISK FORMAT: 1. Direct tuples references (no FK joins) 2. Columnar store: fast projection Hybrid way: columnar heap

1. RAM and CPU is used inefficiently If you do not think in terms of: • Number of cache lines involved for read and write • Memory bandwidth 1024 cores server suddenly becomes server with just 2 memory channels DB’s usually do not depend on arithmetic power of CPUs, but it is surprisingly easy to hit memory bandwidth. In some cases it is easier than hitting SSD performance bottleneck.

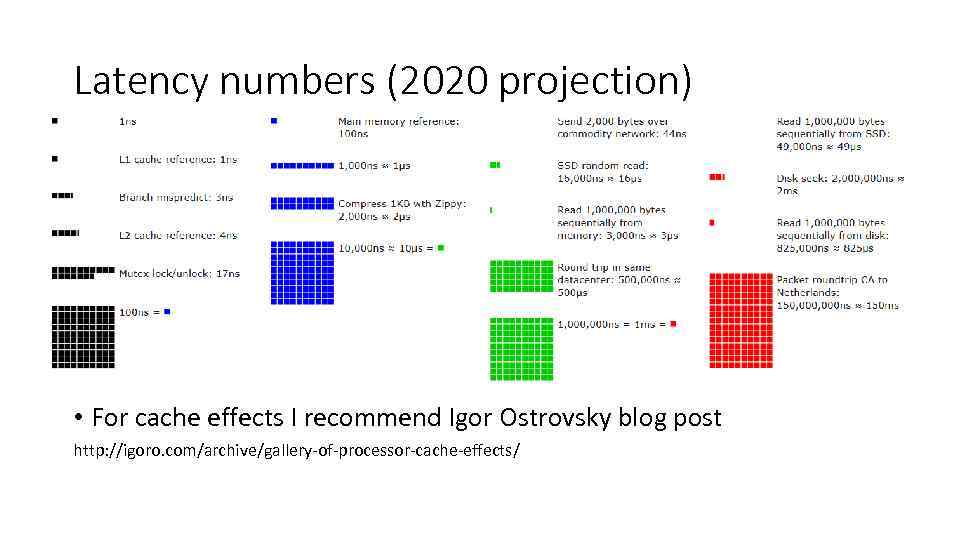

Latency numbers (2020 projection) • For cache effects I recommend Igor Ostrovsky blog post http: //igoro. com/archive/gallery-of-processor-cache-effects/

Multidimensional access methods select aggregate(column) from table where table. point is inside some region FYI:

MD access methods dichotomy • Spatial indexing: pretend we can have smart heuristics to organize data. In my experience this works with ~10 independent dimensions. • Bitmap indexing: prepare proper “brute force” search. • Hybrid approach: bitmap to search, spatial indexing for clustering data on storage. • Add-on strategy – precaching: Ra-trees, mipmap`ing, et c.

Generalized index search tree (Gi. ST) • Balanced-tree framework for different kinds “part of” relations • User must define “penalty of unification” for keys Marcel Kornacker was working on another index framework with APIcustomizable page layout (see Gi. ST-Based Index Extension Architecture) FYI: Kornacker thesis

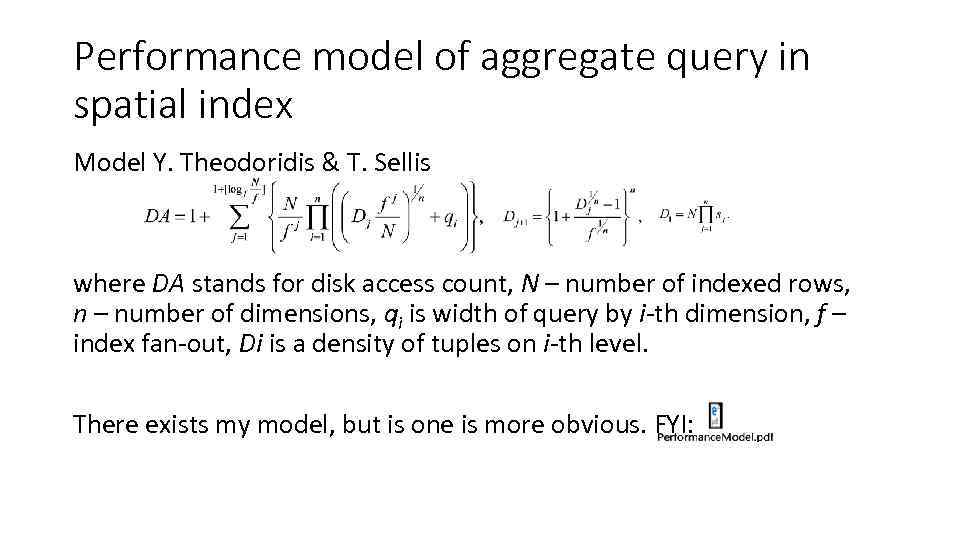

Performance model of aggregate query in spatial index Model Y. Theodoridis & T. Sellis where DA stands for disk access count, N – number of indexed rows, n – number of dimensions, qi is width of query by i-th dimension, f – index fan-out, Di is a density of tuples on i-th level. There exists my model, but is one is more obvious. FYI:

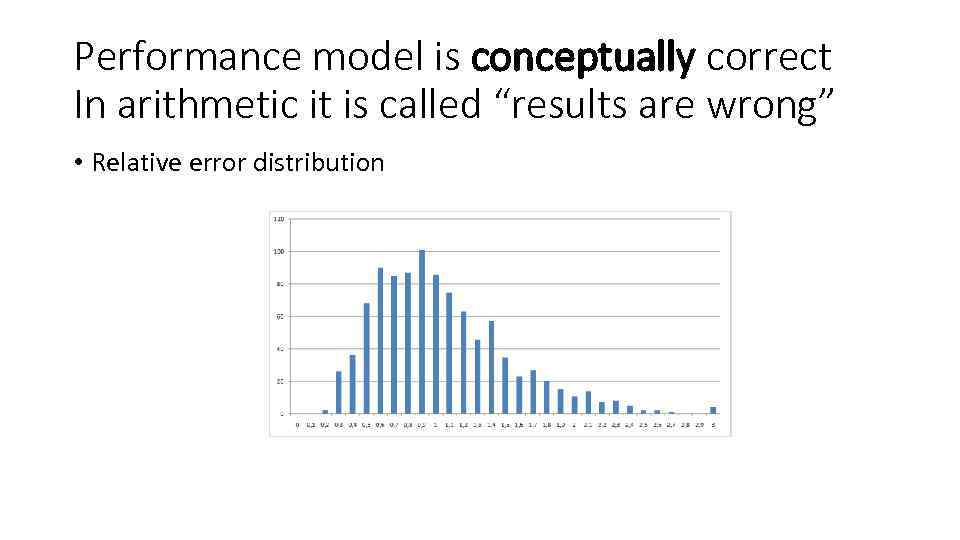

Performance model is conceptually correct In arithmetic it is called “results are wrong” • Relative error distribution

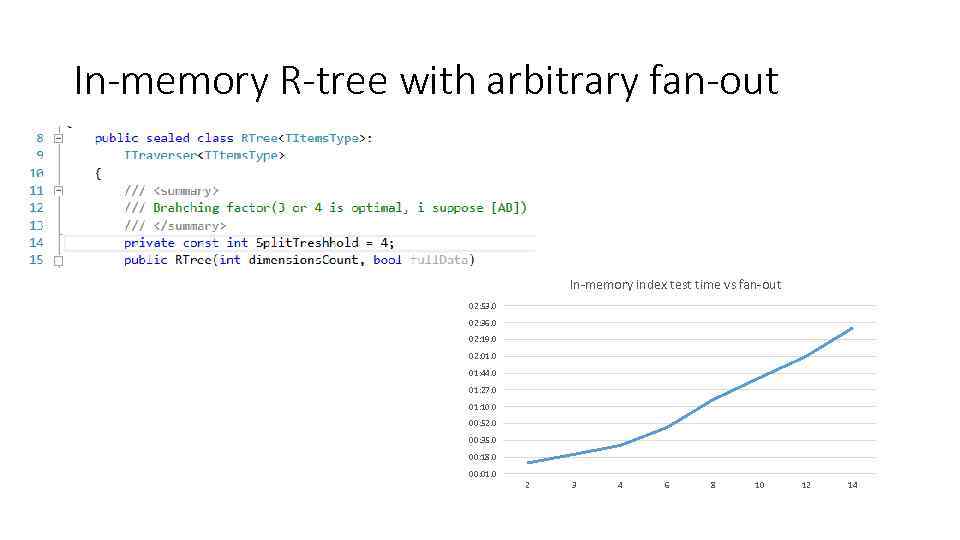

In-memory R-tree with arbitrary fan-out In-memory index test time vs fan-out 02: 53. 0 02: 36. 0 02: 19. 0 02: 01. 0 01: 44. 0 01: 27. 0 01: 10. 0 00: 52. 0 00: 35. 0 00: 18. 0 00: 01. 0 2 3 4 6 8 10 12 14

Theoretically optimal fan-out In a tree with fan-out f query returning exactly 1 row has to touch every tuple in a node, but in optimal tree path from root to leaf will take exactly 1 page on each level, so key-compares count K is equal to tree height h multiplied by f. K = f ∙ h = [f ∙ logf. N], which is optimal with f e ≈ 3. Without loss of generality this holds for cache lines count instead of key compares. As we saw above this does not work for f = 2. Probably, due to differences of constraints (experiment involved more than one row returned by aggregate query)

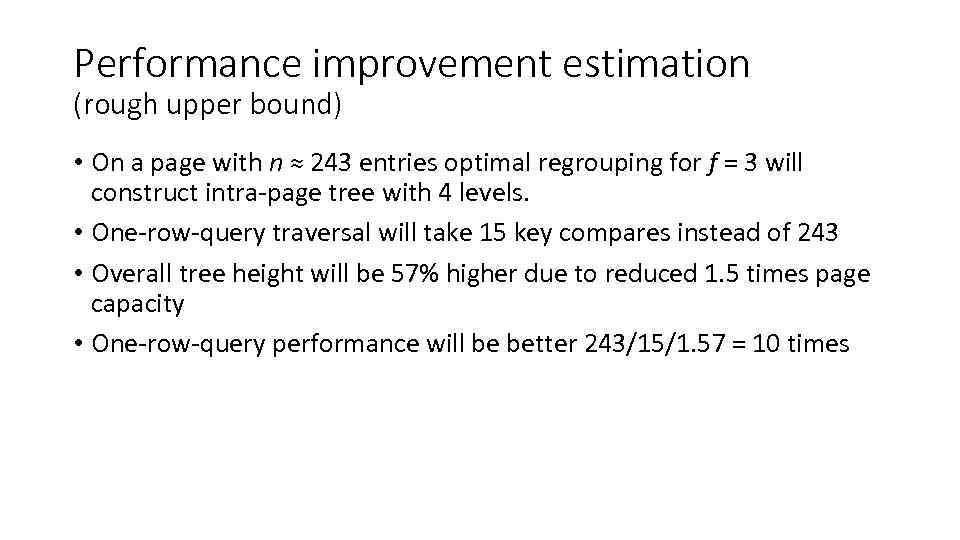

Performance improvement estimation (rough upper bound) • On a page with n ≈ 243 entries optimal regrouping for f = 3 will construct intra-page tree with 4 levels. • One-row-query traversal will take 15 key compares instead of 243 • Overall tree height will be 57% higher due to reduced 1. 5 times page capacity • One-row-query performance will be better 243/15/1. 57 = 10 times

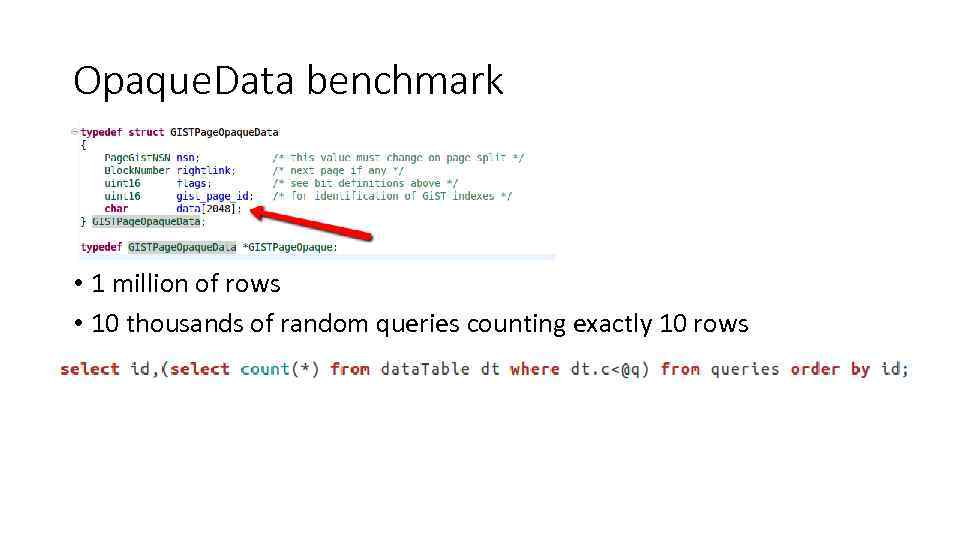

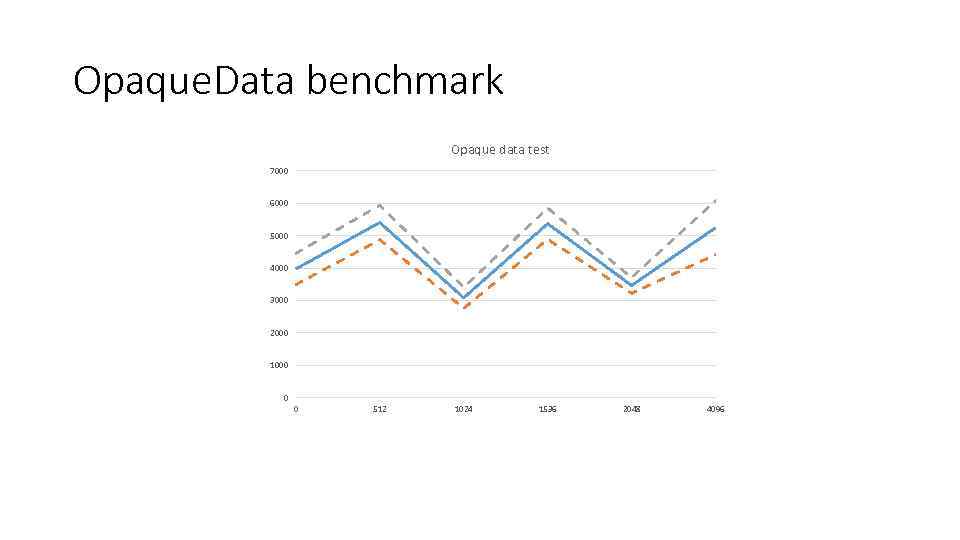

Opaque. Data benchmark • 1 million of rows • 10 thousands of random queries counting exactly 10 rows

Opaque. Data benchmark Opaque data test 7000 6000 5000 4000 3000 2000 1000 0 0 512 1024 1536 2048 4096

Page layout ways • Dense vs fragmented

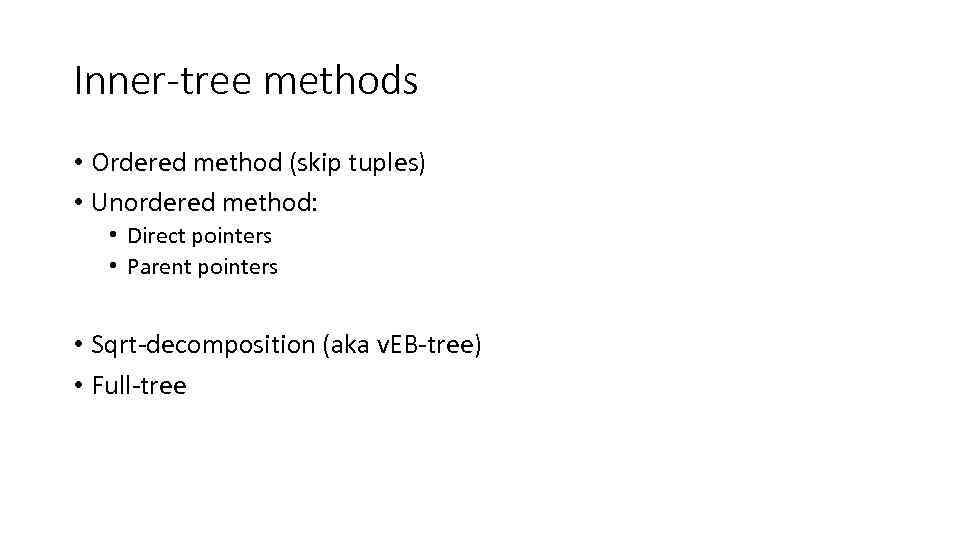

Inner-tree methods • Ordered method (skip tuples) • Unordered method: • Direct pointers • Parent pointers • Sqrt-decomposition (aka v. EB-tree) • Full-tree

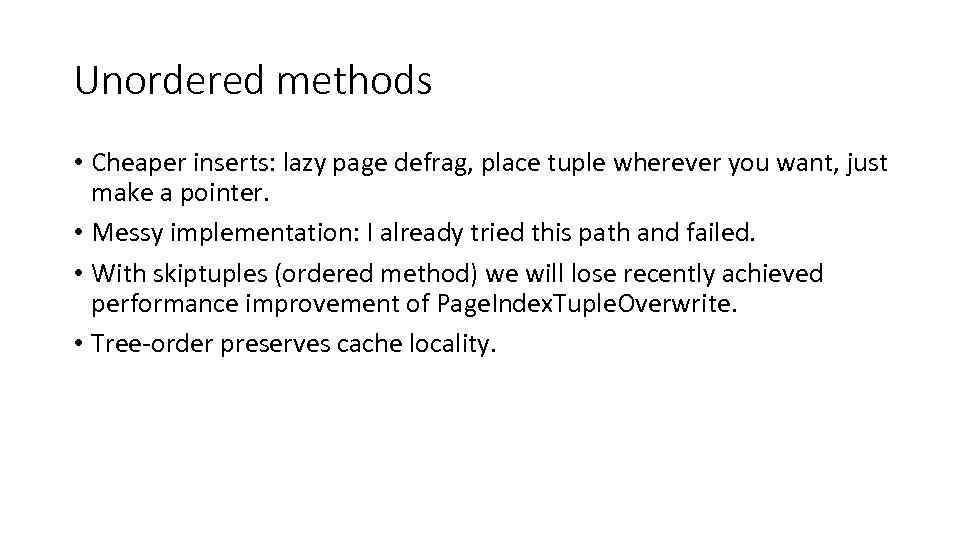

Unordered methods • Cheaper inserts: lazy page defrag, place tuple wherever you want, just make a pointer. • Messy implementation: I already tried this path and failed. • With skiptuples (ordered method) we will lose recently achieved performance improvement of Page. Index. Tuple. Overwrite. • Tree-order preserves cache locality.

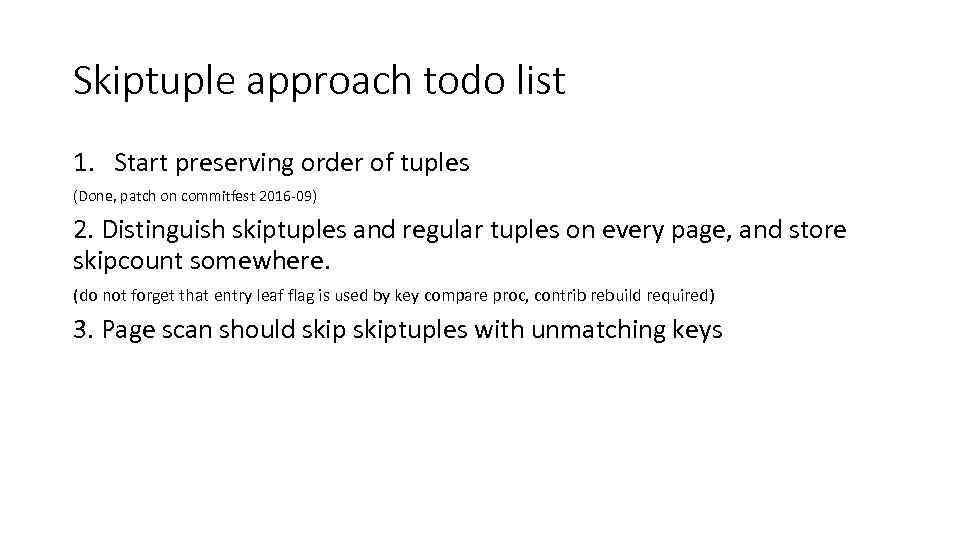

Skiptuple approach todo list 1. Start preserving order of tuples (Done, patch on commitfest 2016 -09) 2. Distinguish skiptuples and regular tuples on every page, and store skipcount somewhere. (do not forget that entry leaf flag is used by key compare proc, contrib rebuild required) 3. Page scan should skiptuples with unmatching keys

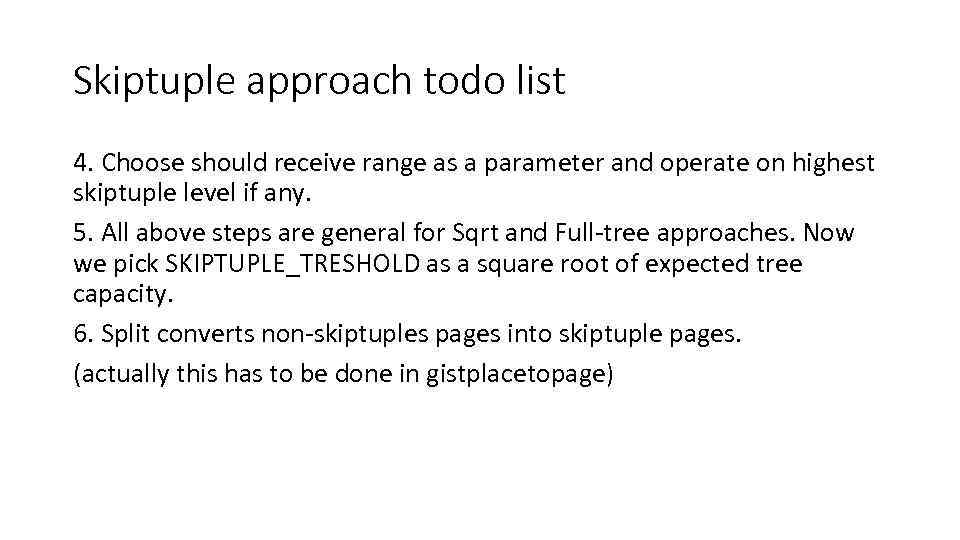

Skiptuple approach todo list 4. Choose should receive range as a parameter and operate on highest skiptuple level if any. 5. All above steps are general for Sqrt and Full-tree approaches. Now we pick SKIPTUPLE_TRESHOLD as a square root of expected tree capacity. 6. Split converts non-skiptuples pages into skiptuple pages. (actually this has to be done in gistplacetopage)

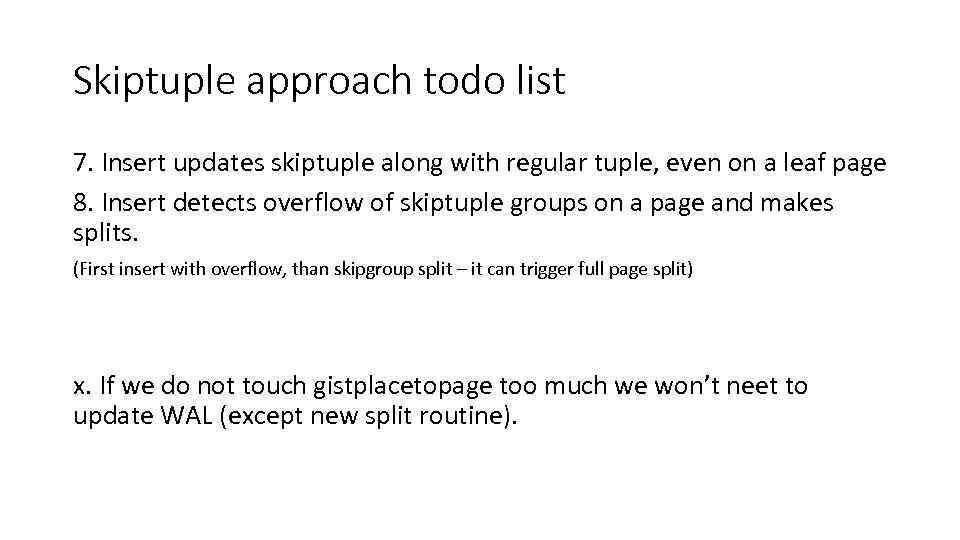

Skiptuple approach todo list 7. Insert updates skiptuple along with regular tuple, even on a leaf page 8. Insert detects overflow of skiptuple groups on a page and makes splits. (First insert with overflow, than skipgroup split – it can trigger full page split) x. If we do not touch gistplacetopage too much we won’t neet to update WAL (except new split routine).

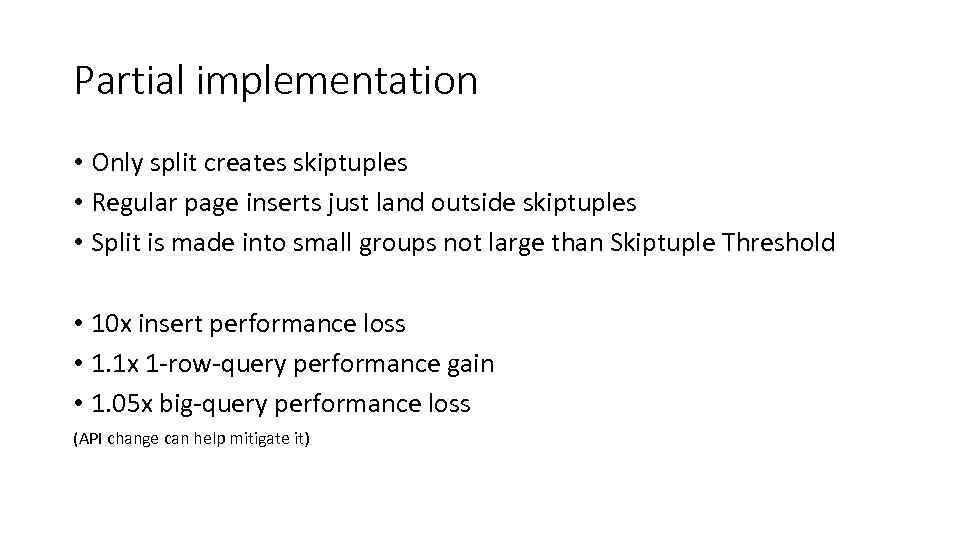

Partial implementation • Only split creates skiptuples • Regular page inserts just land outside skiptuples • Split is made into small groups not large than Skiptuple Threshold • 10 x insert performance loss • 1. 1 x 1 -row-query performance gain • 1. 05 x big-query performance loss (API change can help mitigate it)

Optimizing big queries • Now key compare answer is NO and MAY BE • If we have answer SURE (all tree branch data surely satisfies search) we skip many kay compares • For cache locality bonus it’s necessary to move TIDs to Item. Id

Bulk-loading • VAM-Split algorithm helps to build optimal multidimensional index fast. VAM is Variance and Median. • Neither variance, nor median exists in Gi. ST API. • We can design Gi. ST bulkload • probably extending Gi. ST API • FYI:

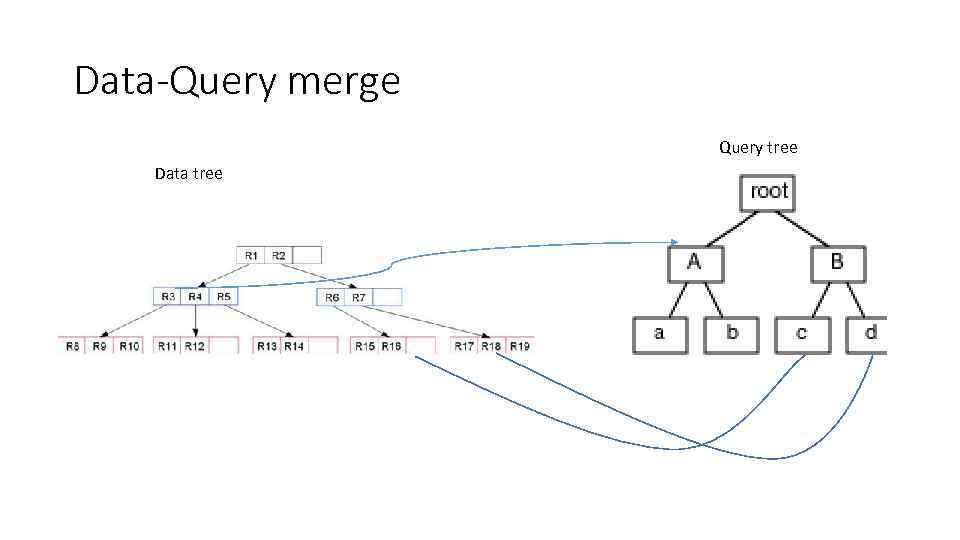

Data-Query merge Query tree Data tree

Practical point of view • Fan-out optimization is hard way (requires development of nonexistent algorithms) to gain constant performance improvement. • Though outcome constant may be high, there is a lot of places to optimize with less effort. • Remember R*-tree reinserts: it is near-optimal, but no one cares to implement it. FYI:

gist improvement_msg.pptx