- Number of slides: 60

Gesture Recognition in Complex Scenes
Vassilis Athitsos
Computer Science and Engineering Department
University of Texas at Arlington

Collaborators
- Jonathan Alon (ex-BU, now Negevtech, Israel).
- Jingbin Wang (ex-BU, now Google).
- Quan Yuan (Boston University).
- Alexandra Stefan (Boston University).
- Stan Sclaroff (Boston University).
- George Kollios (Boston University).
- Margrit Betke (Boston University).

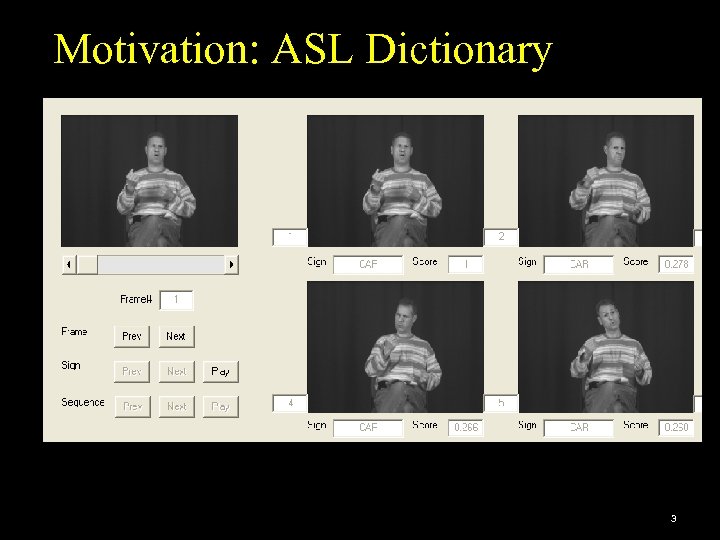

Motivation: ASL Dictionary
- Addresses needs of a large community:
  - 500,000 to 2 million ASL users in the US.
  - ??? in the European Union.
- Direct impact in education of Deaf children.
  - Most born to hearing parents, learn ASL at school.
- Challenging problems in vision, learning, database indexing.
  - Large-scale motion-based video retrieval.
  - Efficient large-scale multiclass recognition.
  - Learning complex patterns from few examples.

Sources of Information
- Hand motion.
- Hand pose.
  - Shape.
  - Orientation.
- Facial expressions.
- Body pose.

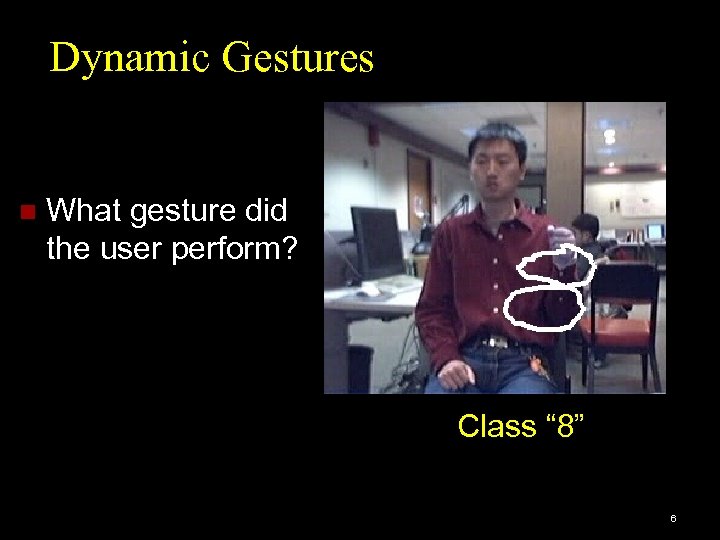

Dynamic Gestures
- What gesture did the user perform?
[Figure label: class “8”.]

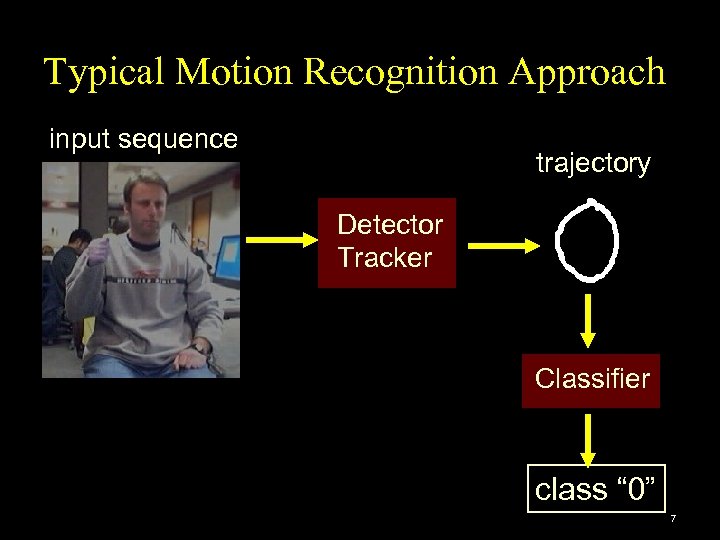

Typical Motion Recognition Approach
[Figure: pipeline from input sequence through Detector and Tracker to a trajectory, then through Classifier to class “0”.]

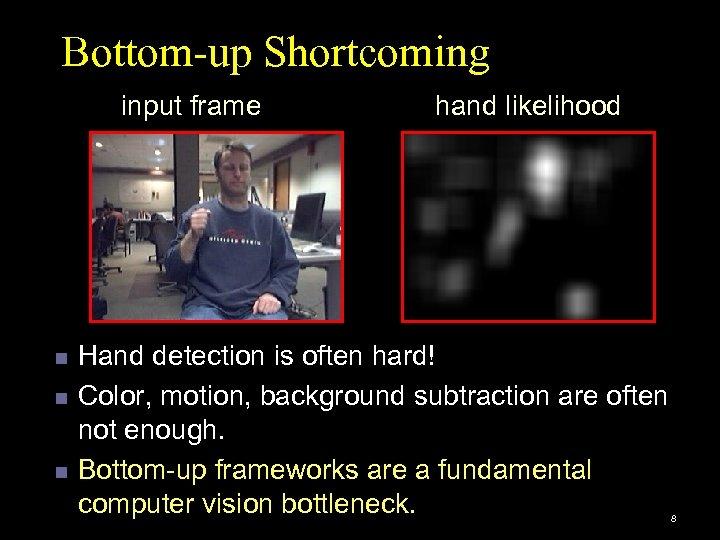

Bottom-up Shortcoming
[Figure labels: input frame, hand likelihood.]
- Hand detection is often hard!
- Color, motion, background subtraction are often not enough.
- Bottom-up frameworks are a fundamental computer vision bottleneck.

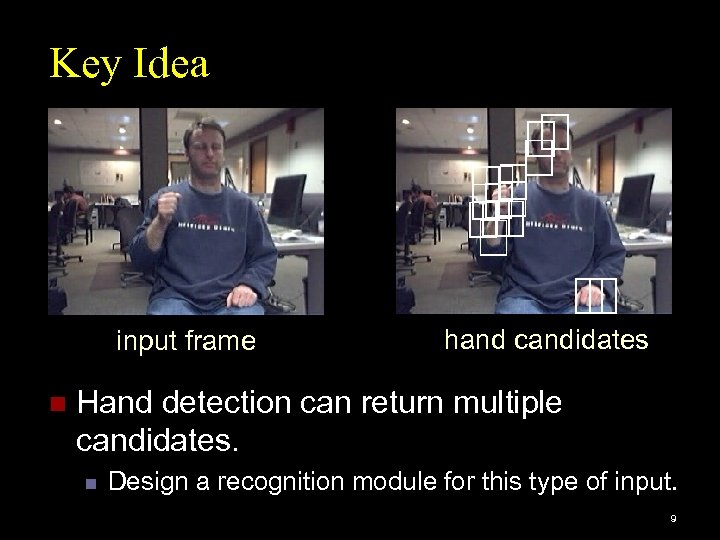

Key Idea
[Figure labels: input frame, hand candidates.]
- Hand detection can return multiple candidates.
- Design a recognition module for this type of input.

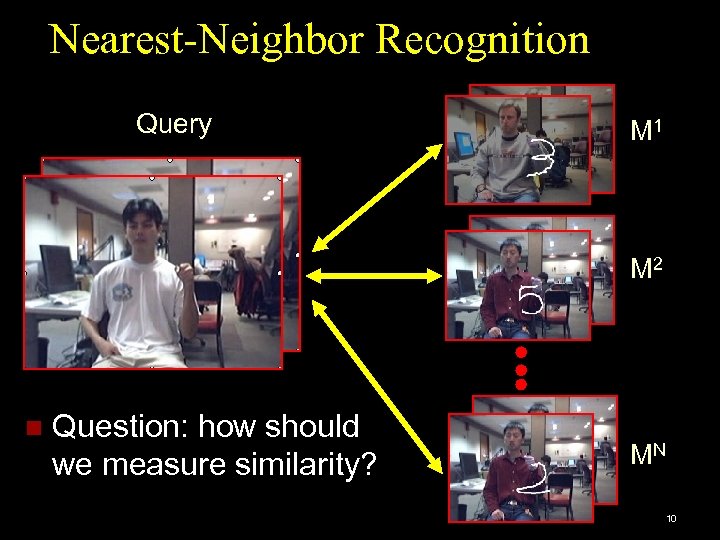

Nearest-Neighbor Recognition
[Figure labels: Query; database gestures $M_1, M_2, \ldots, M_N$.]
- Question: how should we measure similarity?
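A minimal sketch of nearest-neighbor recognition with a pluggable similarity measure, since the choice of measure is exactly the open question on this slide. The names `nearest_neighbor` and `frame_by_frame_distance` are hypothetical, and the frame-by-frame distance is only a placeholder that assumes equal-length sequences of 2D hand positions.

```python
import math

def frame_by_frame_distance(a, b):
    """Placeholder measure (an assumption): sum of distances between
    the i-th points of two equal-length trajectories."""
    return sum(math.dist(p, q) for p, q in zip(a, b))

def nearest_neighbor(query, database, distance):
    """Return the class label of the database example closest to the query.

    database is a list of (label, example) pairs.
    """
    label, _ = min(database, key=lambda item: distance(query, item[1]))
    return label

# Toy data, invented for illustration only.
database = [
    ("0", [(0, 0), (1, 1)]),
    ("8", [(5, 5), (6, 6)]),
]
print(nearest_neighbor([(5, 4), (6, 7)], database, frame_by_frame_distance))
```

Any other measure with the same two-argument signature can be passed in place of the placeholder.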

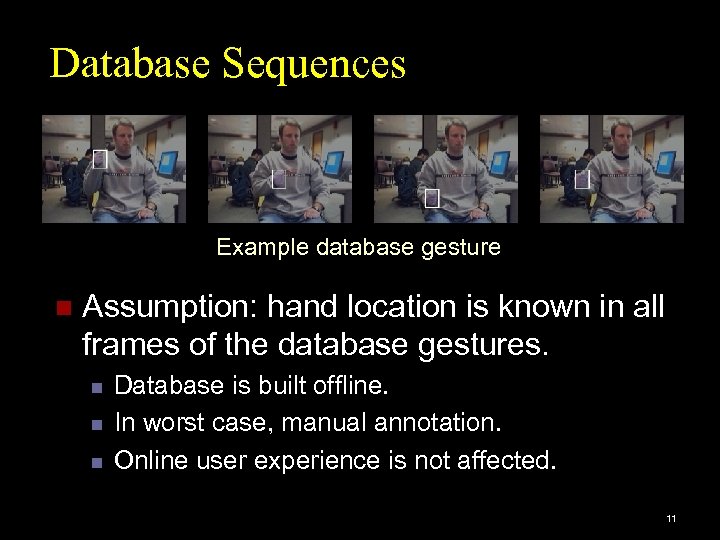

Database Sequences
[Figure label: example database gesture.]
- Assumption: hand location is known in all frames of the database gestures.
  - Database is built offline.
  - In worst case, manual annotation.
  - Online user experience is not affected.

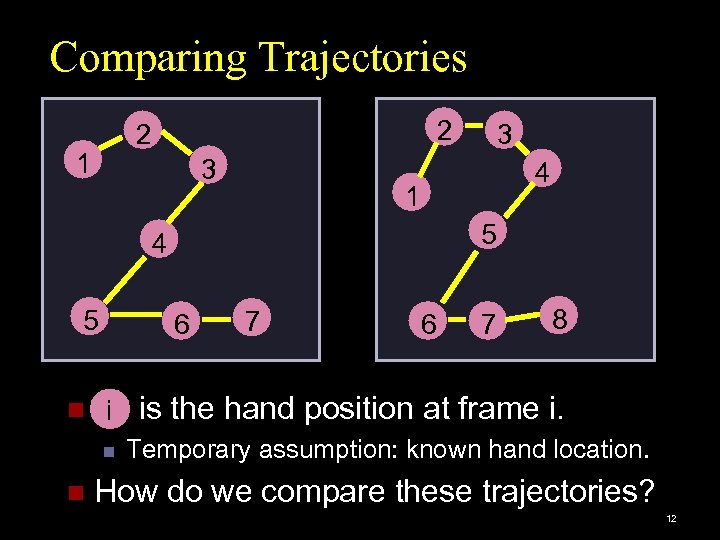

Comparing Trajectories
[Figure: two hand trajectories with numbered frames, one with frames 1 to 7 and one with frames 1 to 8.]
- The point labeled i is the hand position at frame i.
- Temporary assumption: known hand location.
- How do we compare these trajectories?

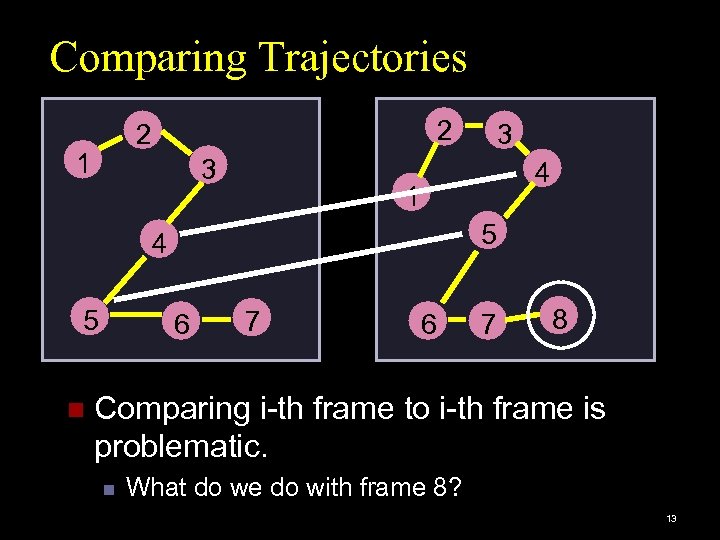

Comparing Trajectories
[Figure: the same two trajectories, with frames 1 to 7 and 1 to 8.]
- Comparing i-th frame to i-th frame is problematic.
  - What do we do with frame 8?

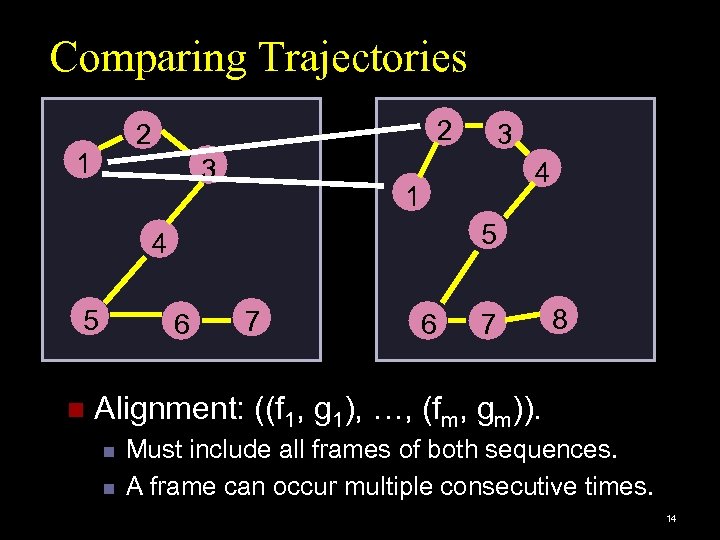

Comparing Trajectories
[Figure: the same two trajectories, with frames 1 to 7 and 1 to 8.]
- Alignment: $((f_1, g_1), \ldots, (f_m, g_m))$.
  - Must include all frames of both sequences.
  - A frame can occur multiple consecutive times.

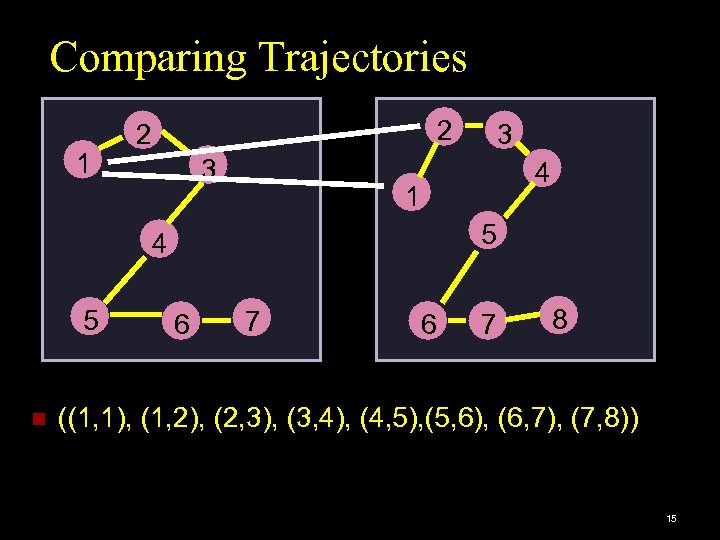

Comparing Trajectories
[Figure: the same two trajectories, with frames 1 to 7 and 1 to 8.]
- Example alignment: ((1, 1), (1, 2), (2, 3), (3, 4), (4, 5), (5, 6), (6, 7), (7, 8))
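The two alignment rules can be checked mechanically. The sketch below is illustrative only: the function name `is_valid_alignment` is hypothetical, and it additionally assumes the usual DTW conventions that an alignment starts at (1, 1), ends at the last frame of each sequence, and advances each index by at most one per step. Under those assumptions every frame of both sequences is covered.

```python
def is_valid_alignment(alignment, len_f, len_g):
    """Check an alignment given as a list of 1-based (f, g) frame pairs."""
    if not alignment:
        return False
    # Assumed boundary conditions: first frames paired, last frames paired.
    if alignment[0] != (1, 1) or alignment[-1] != (len_f, len_g):
        return False
    for (f0, g0), (f1, g1) in zip(alignment, alignment[1:]):
        # A frame may repeat (step 0) or advance by one; at least one
        # of the two indices must advance, so no frame is skipped.
        if (f1 - f0, g1 - g0) not in {(0, 1), (1, 0), (1, 1)}:
            return False
    return True

# The alignment shown on the slide: 7 frames against 8 frames.
slide_alignment = [(1, 1), (1, 2), (2, 3), (3, 4),
                   (4, 5), (5, 6), (6, 7), (7, 8)]
print(is_valid_alignment(slide_alignment, 7, 8))

# Frame-by-frame pairing leaves frame 8 unmatched, so it is rejected.
frame_by_frame = [(i, i) for i in range(1, 8)]
print(is_valid_alignment(frame_by_frame, 7, 8))
```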

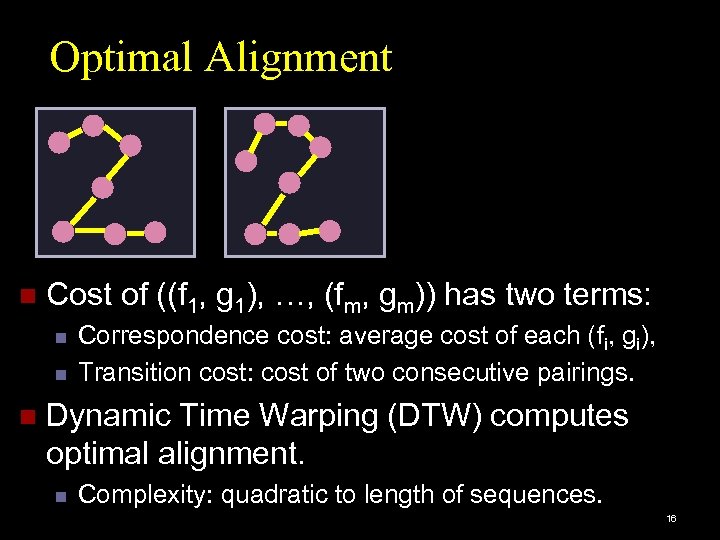

Optimal Alignment
- Cost of $((f_1, g_1), \ldots, (f_m, g_m))$ has two terms:
  - Correspondence cost: average cost of each $(f_i, g_i)$.
  - Transition cost: cost of two consecutive pairings.
- Dynamic Time Warping (DTW) computes the optimal alignment.
  - Complexity: quadratic in the length of the sequences.

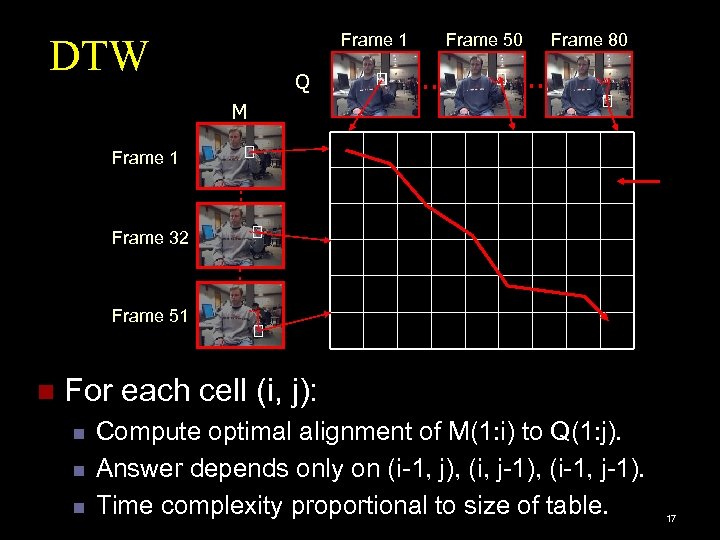

DTW
[Figure: DTW table. One axis holds the frames of Q (frame 1, …, frame 50, …, frame 80), the other the frames of M (frame 1, …, frame 32, …, frame 51).]
- For each cell (i, j):
  - Compute optimal alignment of M(1:i) to Q(1:j).
  - Answer depends only on (i-1, j), (i, j-1), (i-1, j-1).
  - Time complexity proportional to size of table.
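The table-filling recurrence can be sketched as follows. This is a simplified illustration, not the presenter's implementation: it assumes trajectories are lists of 2D hand positions, uses the sum of Euclidean distances as the correspondence cost, and omits the transition cost. The function name `dtw` and its arguments are hypothetical.

```python
import math

def dtw(model, query):
    """Return (cost, alignment) for two non-empty sequences of 2D points.

    The alignment is a list of 1-based (i, j) pairs.
    """
    m, q = len(model), len(query)
    if m == 0 or q == 0:
        raise ValueError("both sequences must be non-empty")
    inf = float("inf")
    # cost[i][j]: best cost of aligning model[:i] with query[:j].
    cost = [[inf] * (q + 1) for _ in range(m + 1)]
    back = [[None] * (q + 1) for _ in range(m + 1)]
    cost[0][0] = 0.0
    for i in range(1, m + 1):
        for j in range(1, q + 1):
            local = math.dist(model[i - 1], query[j - 1])
            # Only the three neighboring cells named on the slide matter.
            prev = min((i - 1, j), (i, j - 1), (i - 1, j - 1),
                       key=lambda c: cost[c[0]][c[1]])
            cost[i][j] = local + cost[prev[0]][prev[1]]
            back[i][j] = prev
    # Follow the back-pointers from the last cell to recover the alignment.
    alignment, cell = [], (m, q)
    while cell != (0, 0):
        alignment.append(cell)
        cell = back[cell[0]][cell[1]]
    alignment.reverse()
    return cost[m][q], alignment

model = [(0, 0), (1, 0), (2, 0)]
query = [(0, 0), (0, 0), (1, 0), (2, 0)]  # first frame repeated
print(dtw(model, query))
```

Each cell is filled once from three neighbors, so the running time is proportional to the table size, as stated on the slide.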

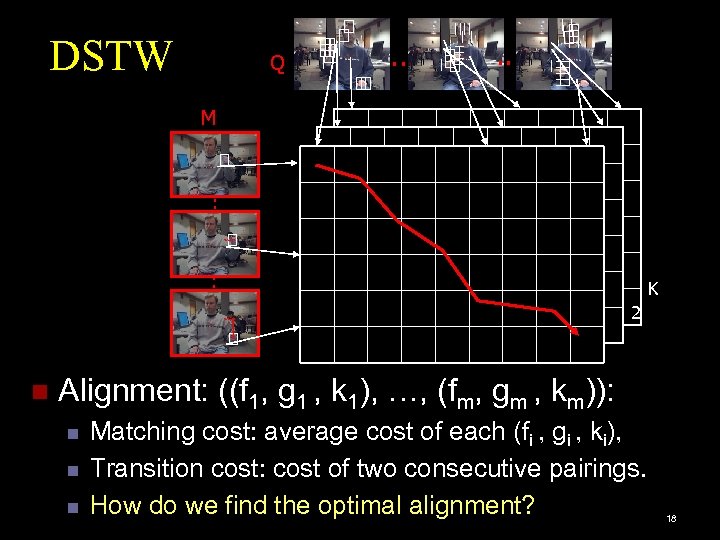

DSTW
[Figure: three-dimensional table with axes Q and M and candidate indices 1, 2, …, K; figure label W.]
- Alignment: $((f_1, g_1, k_1), \ldots, (f_m, g_m, k_m))$:
  - Matching cost: average cost of each $(f_i, g_i, k_i)$.
  - Transition cost: cost of two consecutive pairings.
- How do we find the optimal alignment?

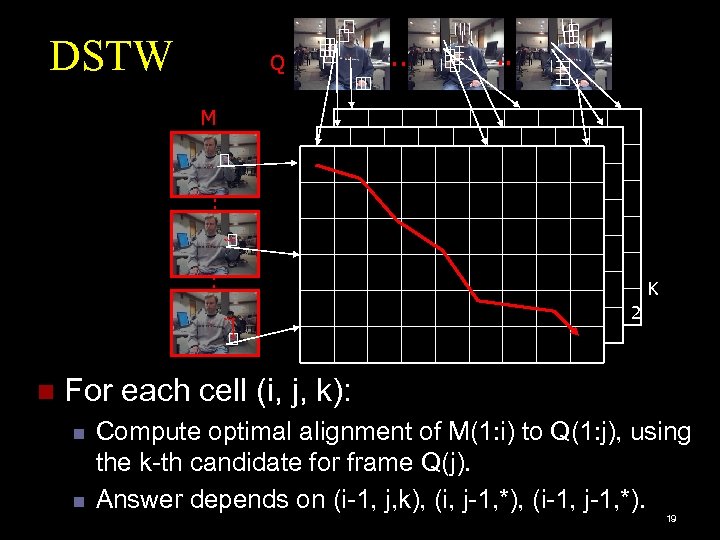

DSTW
[Figure: three-dimensional table with axes Q and M and candidate indices 1, 2, …, K; figure label W.]
- For each cell (i, j, k):
  - Compute optimal alignment of M(1:i) to Q(1:j), using the k-th candidate for frame Q(j).
  - Answer depends on (i-1, j, k), (i, j-1, *), (i-1, j-1, *).
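A sketch of the three-dimensional recurrence, under the same simplifying assumptions as plain DTW: 2D hand positions, summed Euclidean matching cost, no transition cost. The function name `dstw` and the data layout (one list of candidate positions per query frame) are hypothetical choices for illustration.

```python
import math

def dstw(model, candidates):
    """Align model (list of 2D points) to a query with several hand
    candidates per frame (candidates[j] is a list of 2D points).

    Returns (cost, alignment); alignment entries are 1-based (i, j, k).
    """
    m, q = len(model), len(candidates)
    if m == 0 or q == 0 or any(len(c) == 0 for c in candidates):
        raise ValueError("model and every query frame must be non-empty")
    cost, back = {}, {}
    for i in range(1, m + 1):
        for j in range(1, q + 1):
            for k, point in enumerate(candidates[j - 1], start=1):
                local = math.dist(model[i - 1], point)
                if i == 1 and j == 1:
                    cost[i, j, k], back[i, j, k] = local, None
                    continue
                # Predecessors from the slide:
                # (i-1, j, k), (i, j-1, *), (i-1, j-1, *).
                preds = [(i - 1, j, k)]
                if j > 1:
                    for pk in range(1, len(candidates[j - 2]) + 1):
                        preds.append((i, j - 1, pk))
                        preds.append((i - 1, j - 1, pk))
                preds = [p for p in preds if p in cost]  # drop out-of-table cells
                prev = min(preds, key=cost.get)
                cost[i, j, k] = local + cost[prev]
                back[i, j, k] = prev
    # The best final cell may use any candidate of the last query frame.
    end = min(((m, q, k) for k in range(1, len(candidates[-1]) + 1)),
              key=cost.get)
    alignment, cell = [], end
    while cell is not None:
        alignment.append(cell)
        cell = back[cell]
    alignment.reverse()
    return cost[end], alignment

model = [(0, 0), (1, 1), (2, 2)]
candidates = [[(5, 5), (0, 0)], [(1, 1), (9, 9)], [(7, 0), (2, 2)]]
total, alignment = dstw(model, candidates)
# The chosen candidate in each query frame is the recovered hand location.
hands = [candidates[j - 1][k - 1] for _, j, k in alignment]
print(total, alignment, hands)
```

With K candidates per frame, this direct version inspects up to 2K + 1 predecessors per cell.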

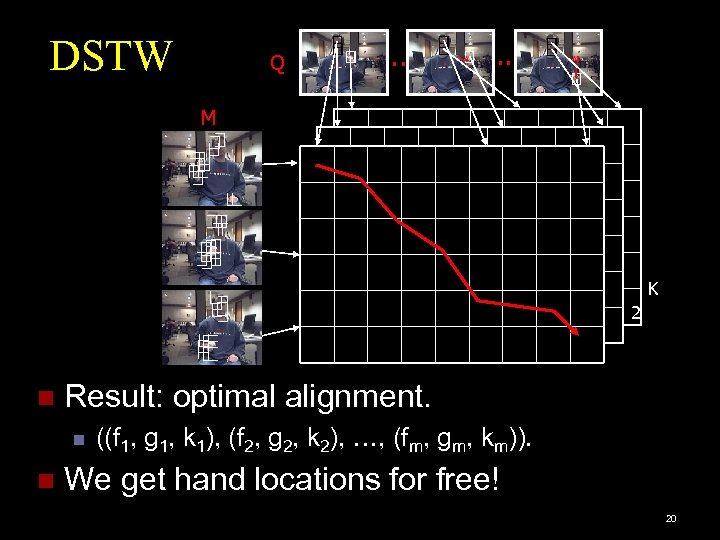

DSTW
[Figure: three-dimensional table with axes Q and M and candidate indices 1, 2, …, K; figure label W.]
- Result: optimal alignment.
  - $((f_1, g_1, k_1), (f_2, g_2, k_2), \ldots, (f_m, g_m, k_m))$.
  - We get hand locations for free!

Application: Gesture Recognition with Short Sleeves!

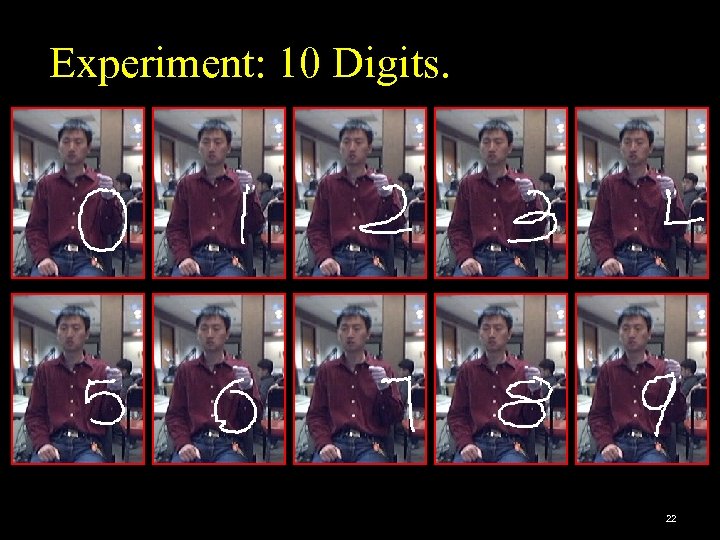

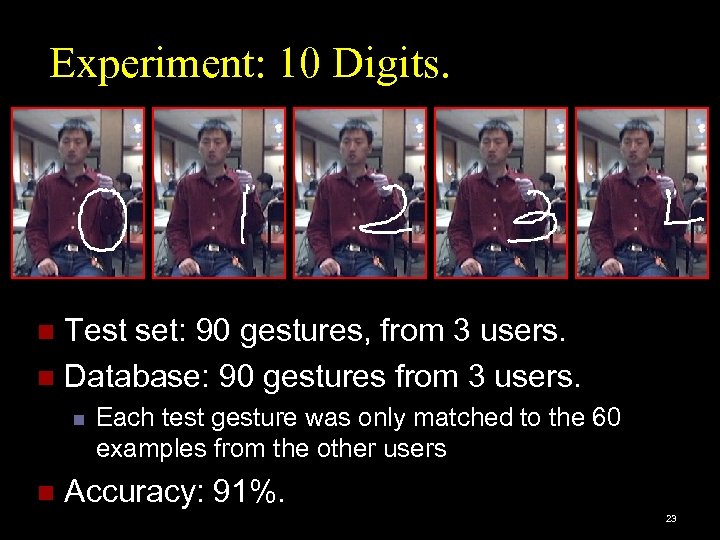

Experiment: 10 Digits.
- Test set: 90 gestures, from 3 users.
- Database: 90 gestures from 3 users.
  - Each test gesture was only matched to the 60 examples from the other users.
- Accuracy: 91%.

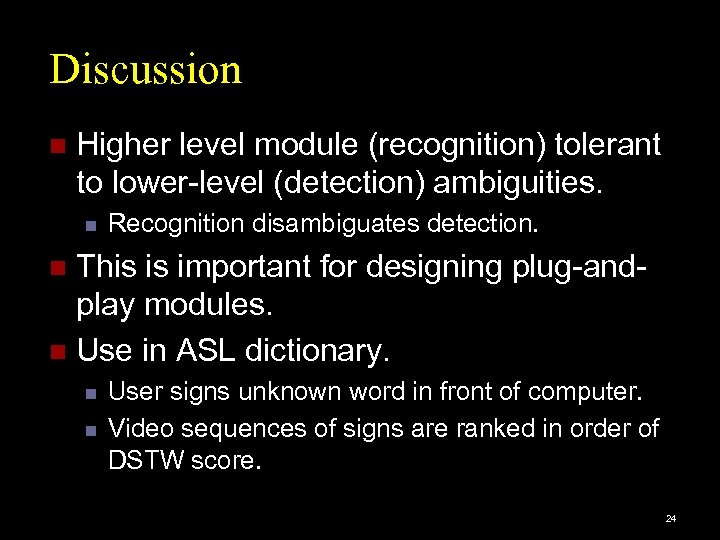

Discussion
- Higher-level module (recognition) tolerant to lower-level (detection) ambiguities.
  - Recognition disambiguates detection.
  - This is important for designing plug-and-play modules.
- Use in ASL dictionary.
  - User signs unknown word in front of computer.
  - Video sequences of signs are ranked in order of DSTW score.

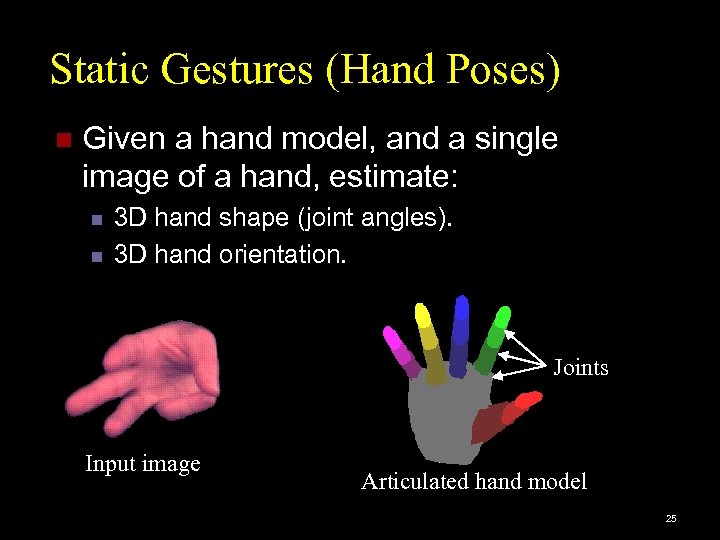

Static Gestures (Hand Poses)
- Given a hand model, and a single image of a hand, estimate:
  - 3D hand shape (joint angles).
  - 3D hand orientation.
[Figure labels: Joints, Input image, Articulated hand model.]

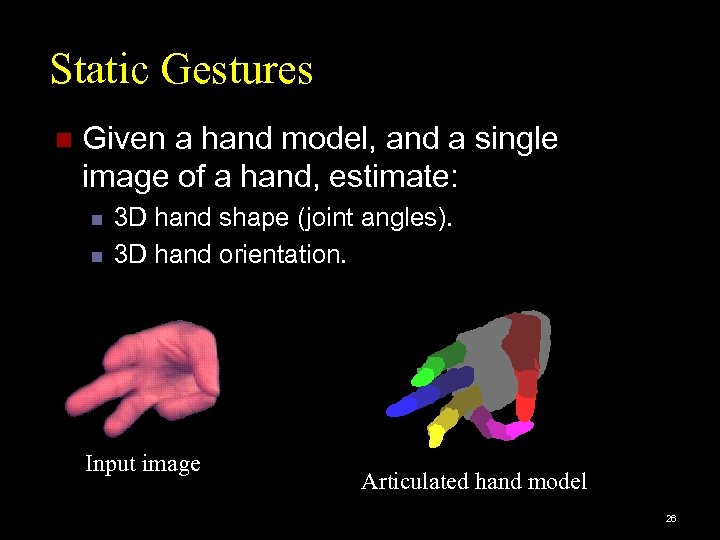

Static Gestures
- Given a hand model, and a single image of a hand, estimate:
  - 3D hand shape (joint angles).
  - 3D hand orientation.
[Figure labels: Input image, Articulated hand model.]

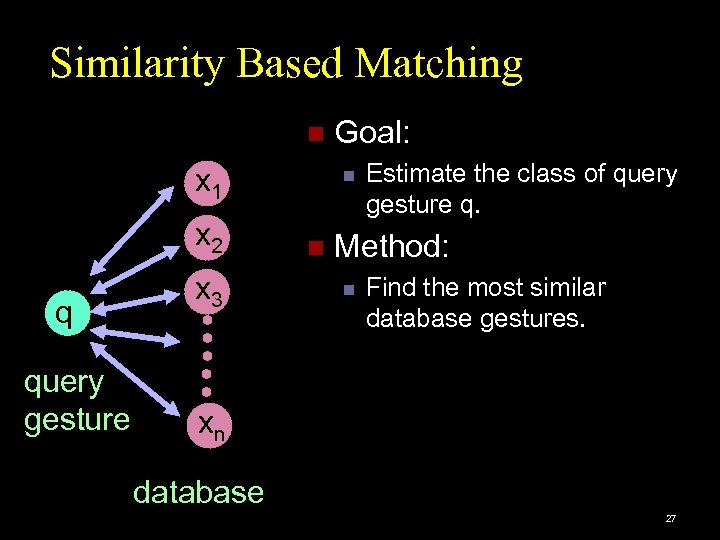

Similarity Based Matching
[Figure labels: query gesture q; database $x_1, x_2, x_3, \ldots, x_n$.]
- Goal:
  - Estimate the class of query gesture q.
- Method:
  - Find the most similar database gestures.

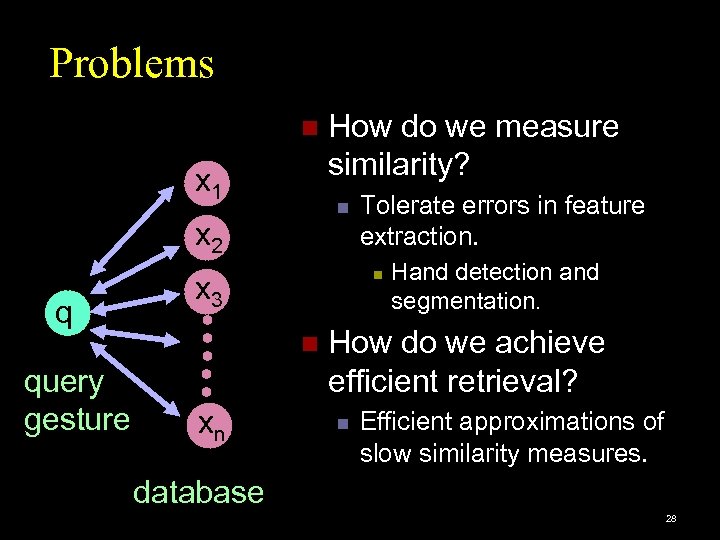

Problems
[Figure labels: query gesture q; database $x_1, x_2, x_3, \ldots, x_n$.]
- How do we measure similarity?
  - Tolerate errors in feature extraction.
  - Hand detection and segmentation.
- How do we achieve efficient retrieval?
  - Efficient approximations of slow similarity measures.

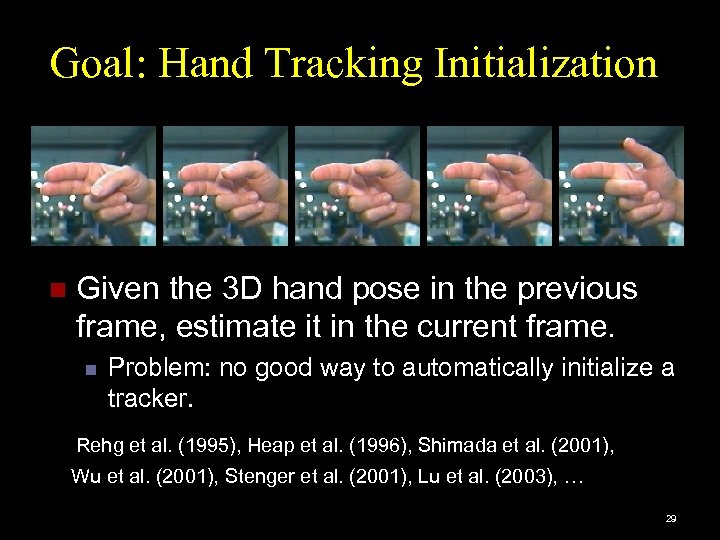

Goal: Hand Tracking Initialization
- Given the 3D hand pose in the previous frame, estimate it in the current frame.
  - Problem: no good way to automatically initialize a tracker.
Rehg et al. (1995), Heap et al. (1996), Shimada et al. (2001), Wu et al. (2001), Stenger et al. (2001), Lu et al. (2003), …

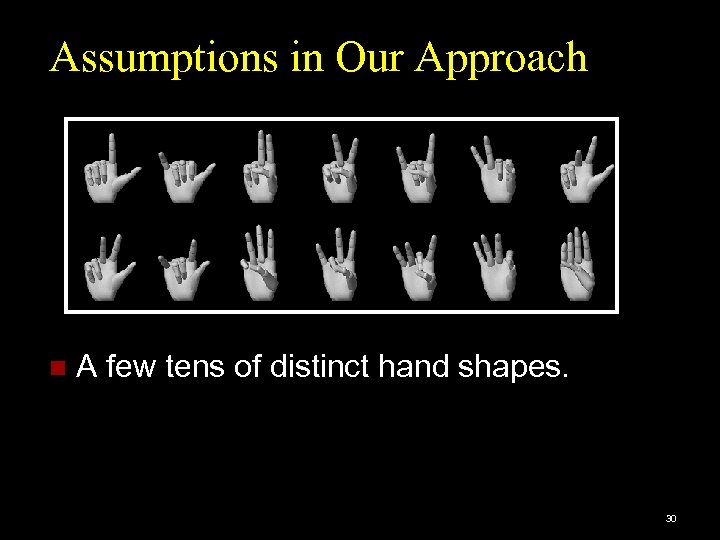

Assumptions in Our Approach
- A few tens of distinct hand shapes.

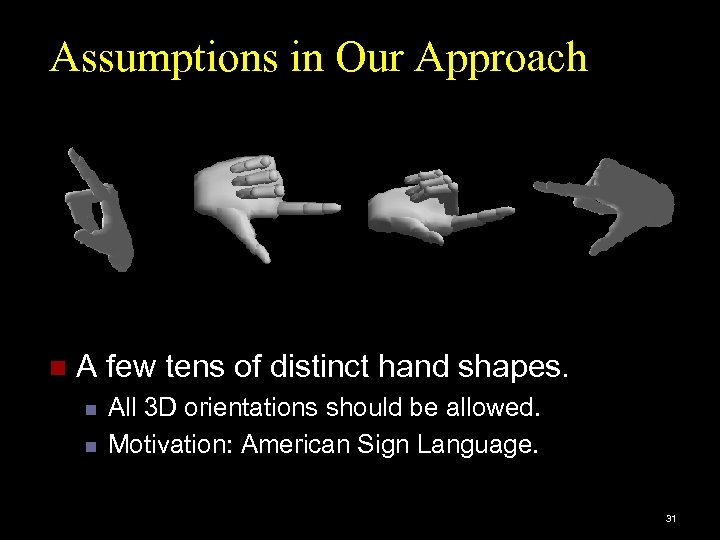

Assumptions in Our Approach
- A few tens of distinct hand shapes.
- All 3D orientations should be allowed.
- Motivation: American Sign Language.

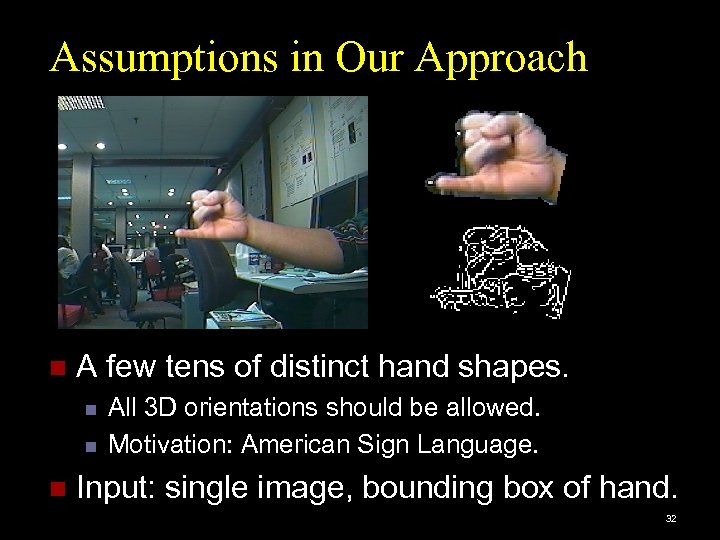

Assumptions in Our Approach
- A few tens of distinct hand shapes.
- All 3D orientations should be allowed.
- Motivation: American Sign Language.
- Input: single image, bounding box of hand.

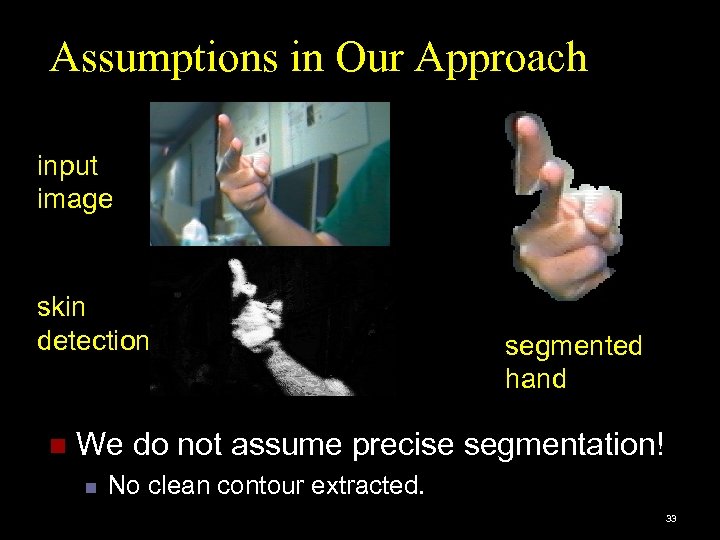

Assumptions in Our Approach
[Figure labels: input image, skin detection, segmented hand.]
- We do not assume precise segmentation!
  - No clean contour extracted.

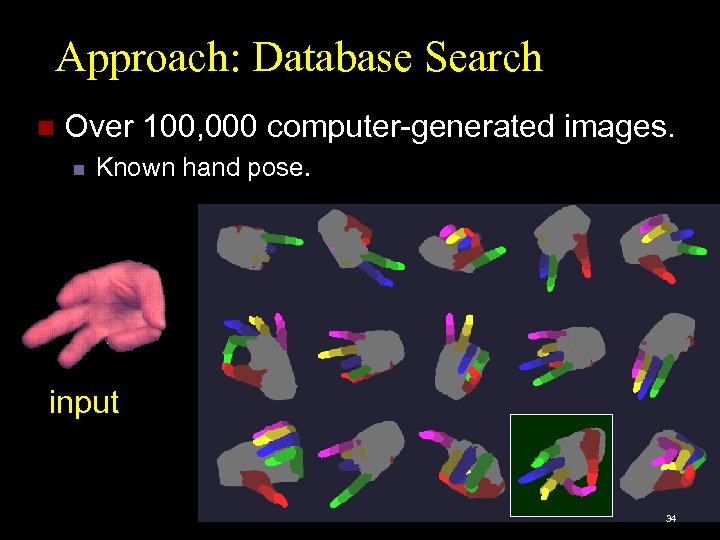

Approach: Database Search
- Over 100,000 computer-generated images.
  - Known hand pose.
[Figure label: input.]

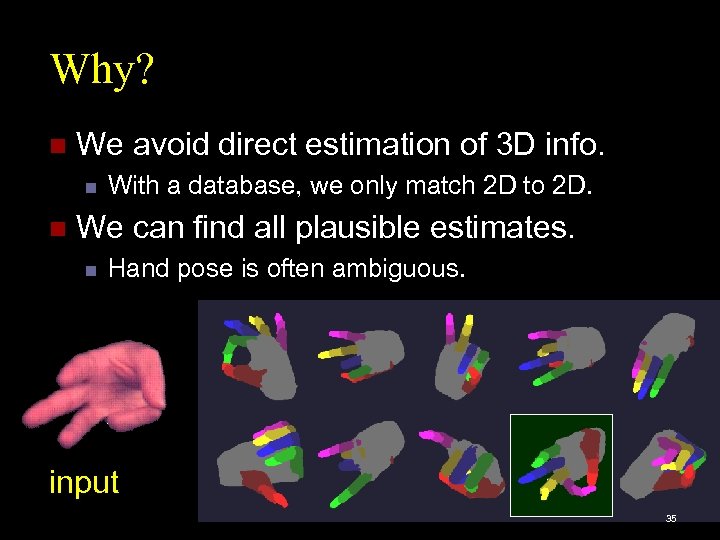

Why?
- We avoid direct estimation of 3D info.
  - With a database, we only match 2D to 2D.
- We can find all plausible estimates.
  - Hand pose is often ambiguous.
[Figure label: input.]

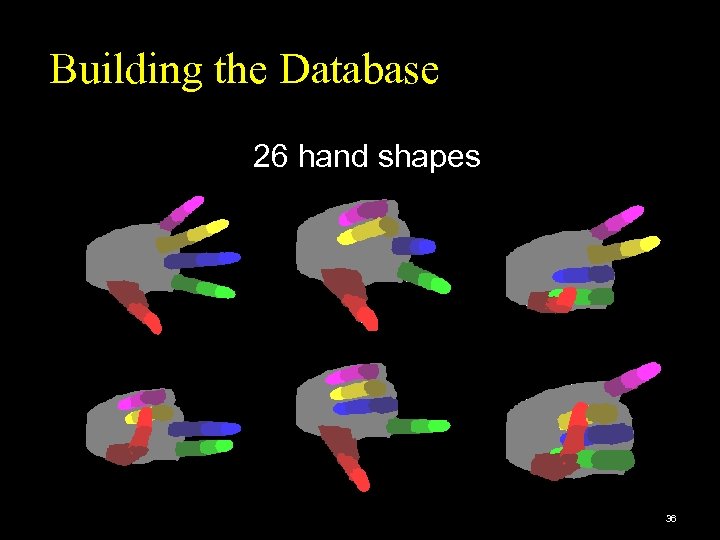

Building the Database
[Figure: 26 hand shapes.]

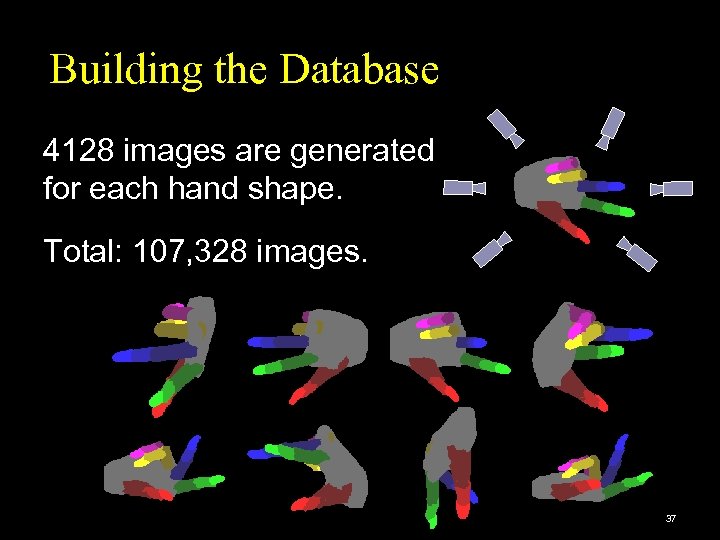

Building the Database
- 4128 images are generated for each hand shape.
- Total: 107,328 images.

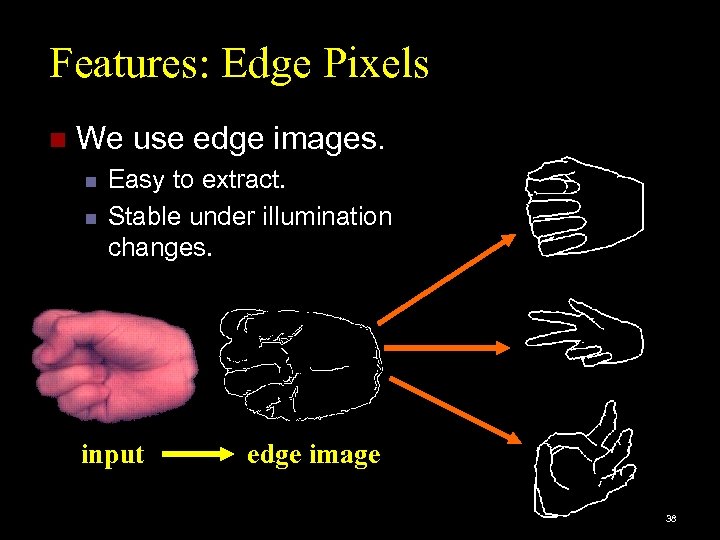

Features: Edge Pixels
- We use edge images.
  - Easy to extract.
  - Stable under illumination changes.
[Figure labels: input, edge image.]

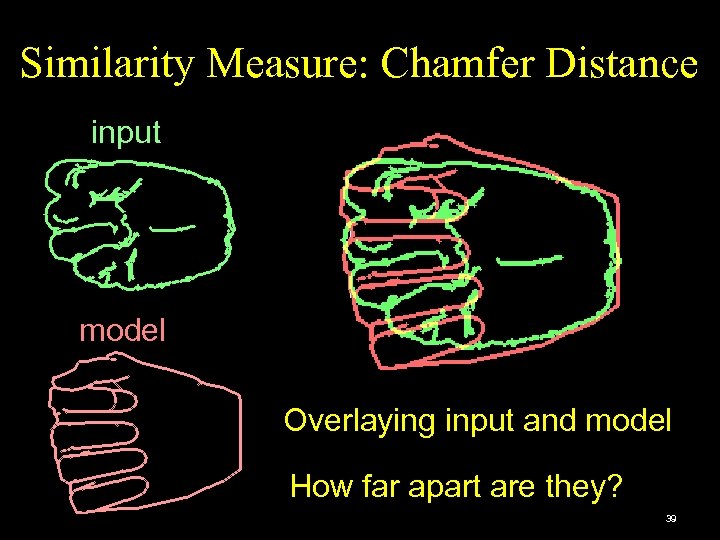

Similarity Measure: Chamfer Distance
[Figure labels: input, model, overlaying input and model.]
- How far apart are they?

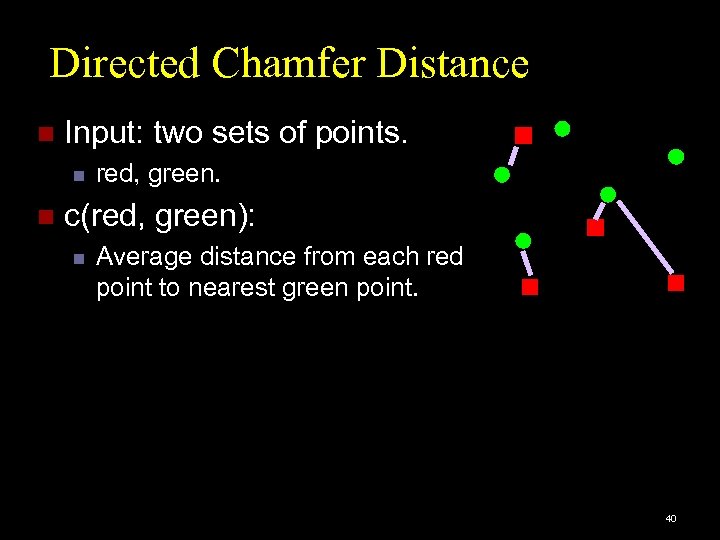

Directed Chamfer Distance
- Input: two sets of points.
  - red, green.
- c(red, green):
  - Average distance from each red point to nearest green point.

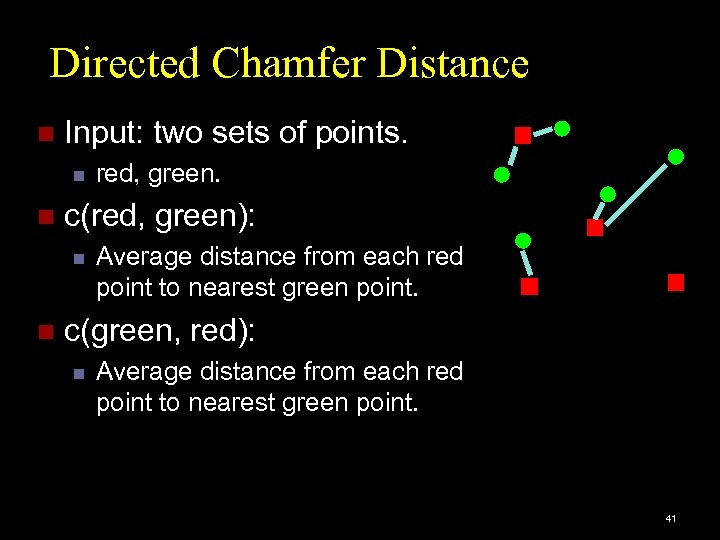

Directed Chamfer Distance
- Input: two sets of points.
  - red, green.
- c(red, green):
  - Average distance from each red point to nearest green point.
- c(green, red):
  - Average distance from each green point to nearest red point.

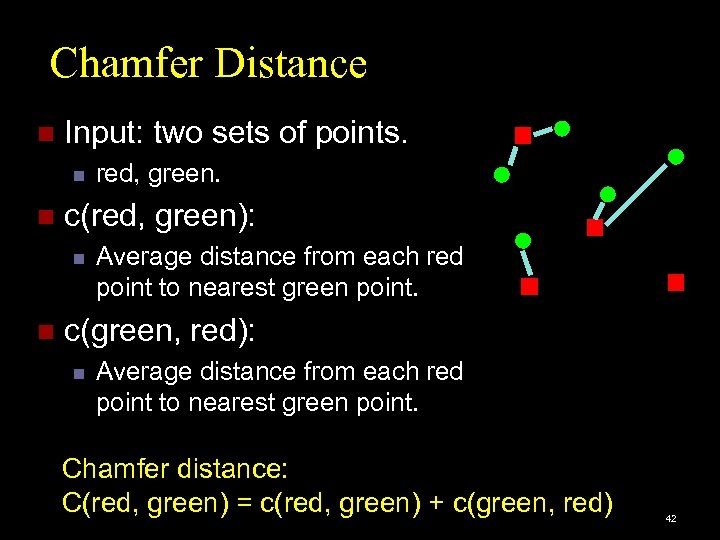

Chamfer Distance
- Input: two sets of points.
  - red, green.
- c(red, green):
  - Average distance from each red point to nearest green point.
- c(green, red):
  - Average distance from each green point to nearest red point.
- Chamfer distance: C(red, green) = c(red, green) + c(green, red)
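The definitions above translate directly into a brute-force sketch. The function names are hypothetical and the point sets are assumed to be non-empty lists of 2D edge-pixel coordinates. This version compares every pair of points; a precomputed distance transform is a common way to speed up the nearest-point lookup, which is not shown here.

```python
import math

def directed_chamfer(source, target):
    """c(source, target): average distance from each source point
    to its nearest target point."""
    return sum(min(math.dist(p, t) for t in target) for p in source) / len(source)

def chamfer(a, b):
    """C(a, b) = c(a, b) + c(b, a), which is symmetric in a and b."""
    return directed_chamfer(a, b) + directed_chamfer(b, a)

red = [(0, 0), (4, 0)]
green = [(0, 0)]
print(directed_chamfer(red, green))  # the far red point raises the average
print(directed_chamfer(green, red))  # the single green point lies on a red point
print(chamfer(red, green))
```

The toy example shows why both directions are summed: the directed distance alone is not symmetric.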

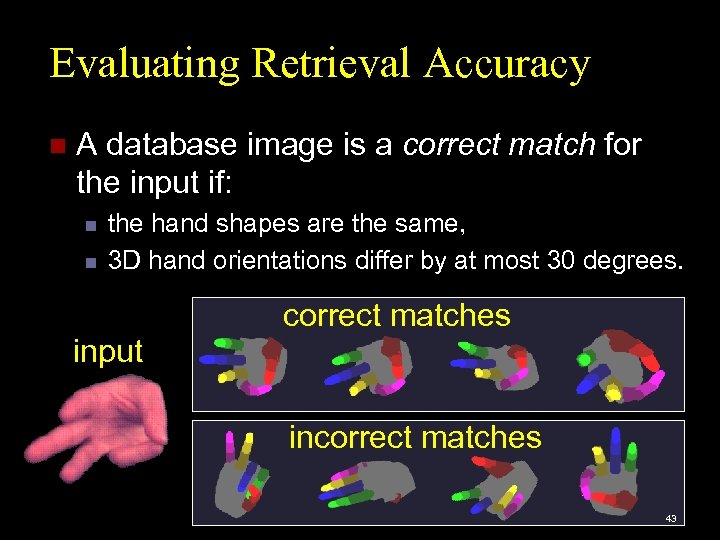

Evaluating Retrieval Accuracy
- A database image is a correct match for the input if:
  - the hand shapes are the same,
  - 3D hand orientations differ by at most 30 degrees.
[Figure labels: input, correct matches, incorrect matches.]

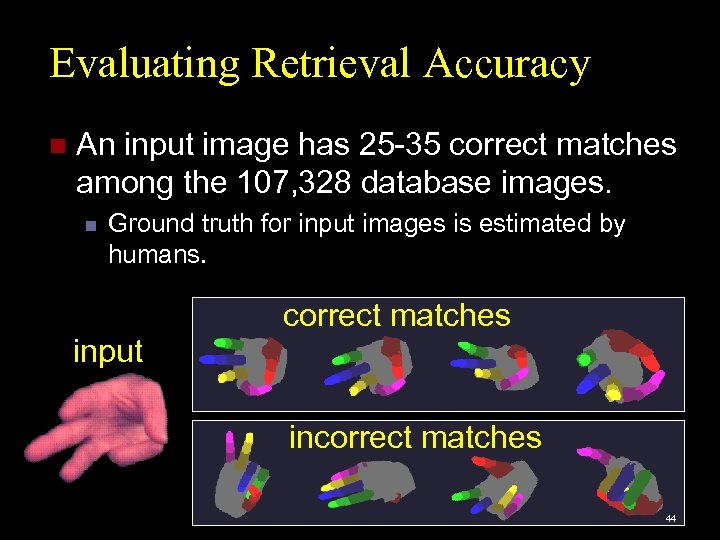

Evaluating Retrieval Accuracy
- An input image has 25-35 correct matches among the 107,328 database images.
  - Ground truth for input images is estimated by humans.
[Figure labels: input, correct matches, incorrect matches.]

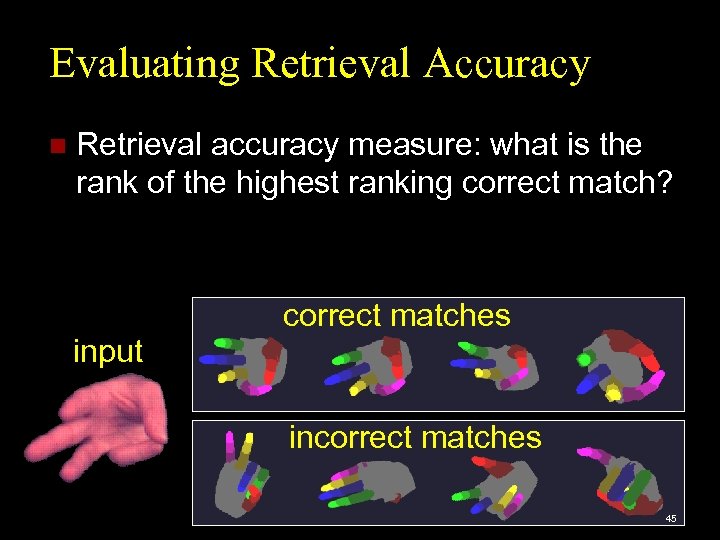

Evaluating Retrieval Accuracy
- Retrieval accuracy measure: what is the rank of the highest ranking correct match?
[Figure labels: input, correct matches, incorrect matches.]

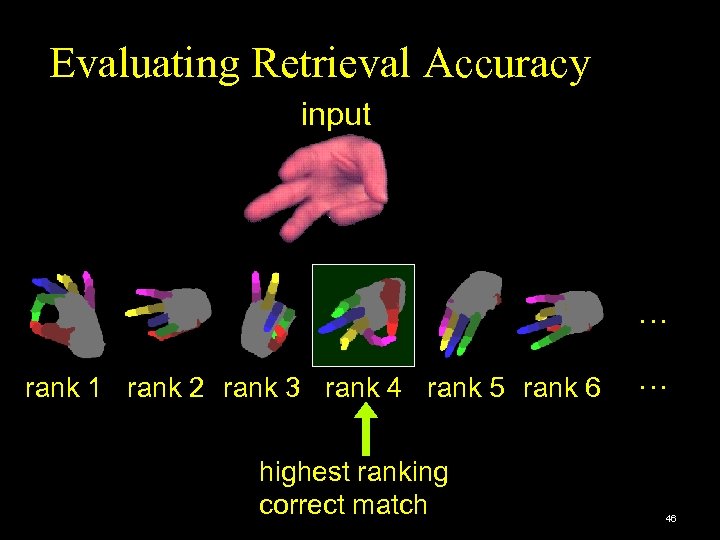

Evaluating Retrieval Accuracy
[Figure: input image followed by retrieved database images at rank 1, rank 2, rank 3, rank 4, rank 5, rank 6, …, with the highest ranking correct match marked.]
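The accuracy measure can be sketched in a few lines. The function names and the toy identifiers are hypothetical; the ranked list is assumed to be sorted from most to least similar.

```python
def rank_of_first_correct(ranked_ids, correct_ids):
    """1-based rank of the highest ranking correct match, or None."""
    correct = set(correct_ids)
    for rank, image_id in enumerate(ranked_ids, start=1):
        if image_id in correct:
            return rank
    return None

def fraction_within(ranks, cutoff):
    """Fraction of test images whose best correct match ranks within cutoff."""
    hits = sum(1 for r in ranks if r is not None and r <= cutoff)
    return hits / len(ranks)

# Toy retrieval result, invented for illustration.
print(rank_of_first_correct(["a", "b", "c", "d"], {"c", "d"}))
print(fraction_within([1, 5, 50, 644], 10))
```

Applying `fraction_within` with cutoffs 1, 10, and 100 yields the kind of summary reported in the results table.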

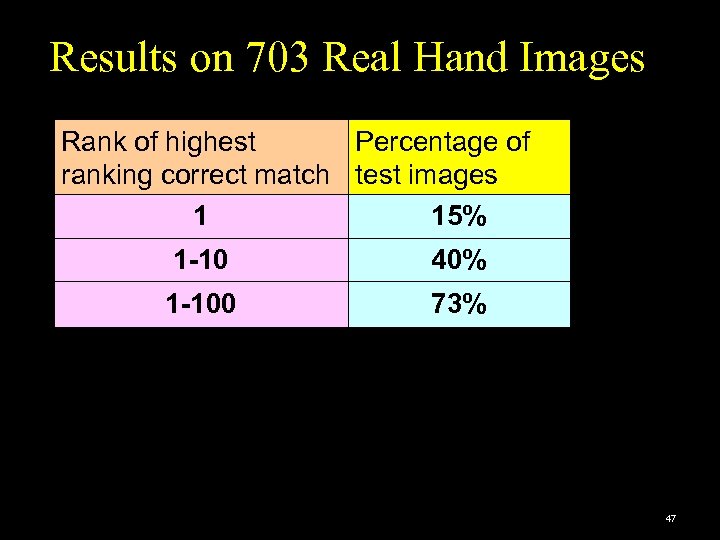

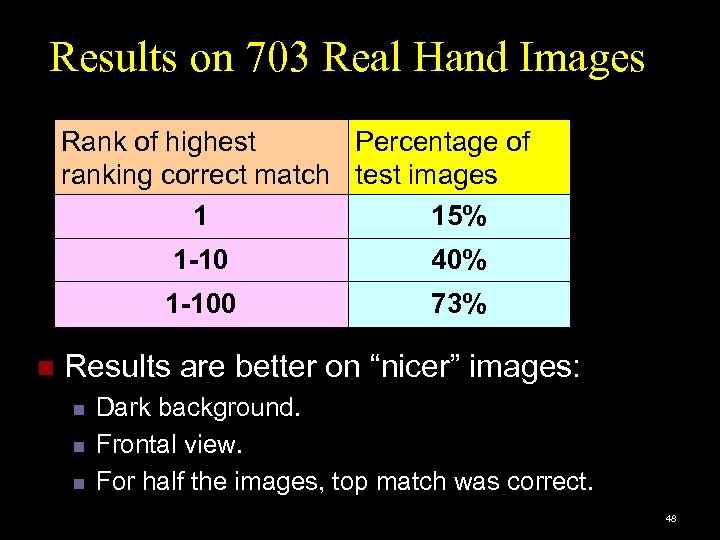

Results on 703 Real Hand Images

| Rank of highest ranking correct match | Percentage of test images |
|---|---|
| 1 | 15% |
| 1-10 | 40% |
| 1-100 | 73% |

- Results are better on “nicer” images:
  - Dark background.
  - Frontal view.
  - For half the images, top match was correct.

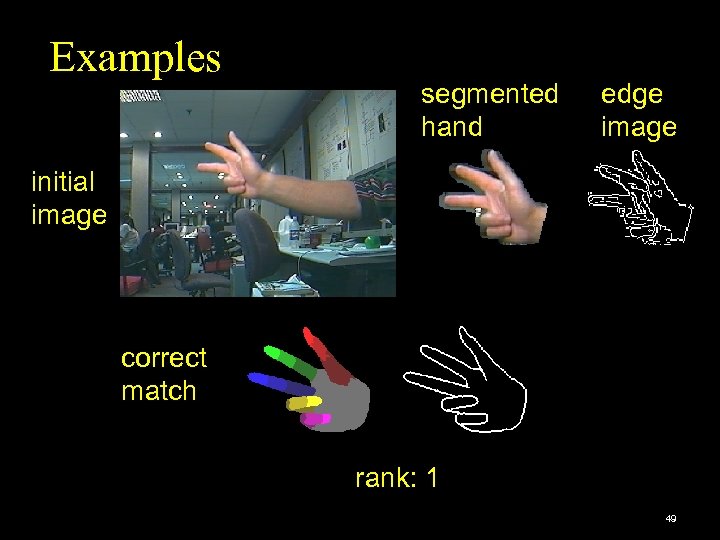

Examples
[Figure labels: segmented hand, edge image, initial image, correct match, rank: 1.]

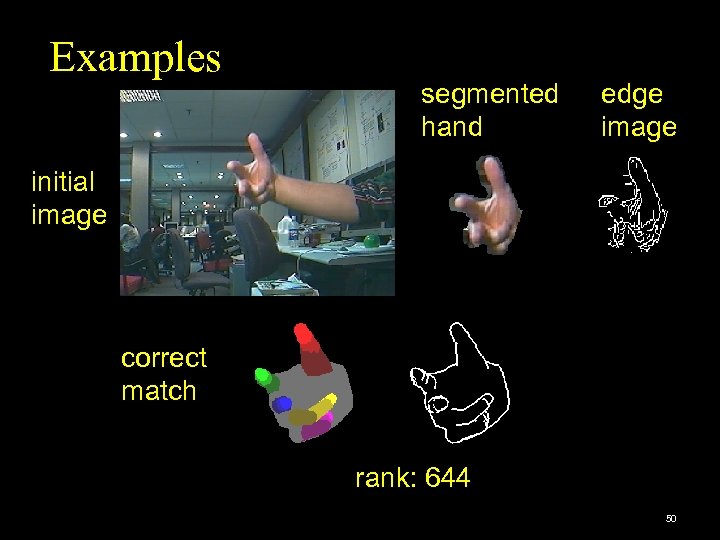

Examples
[Figure labels: segmented hand, edge image, initial image, correct match, rank: 644.]

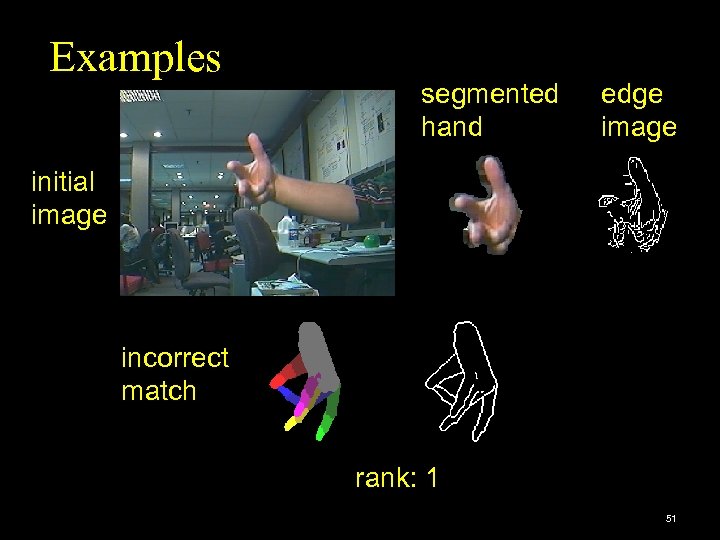

Examples
[Figure labels: segmented hand, edge image, initial image, incorrect match, rank: 1.]

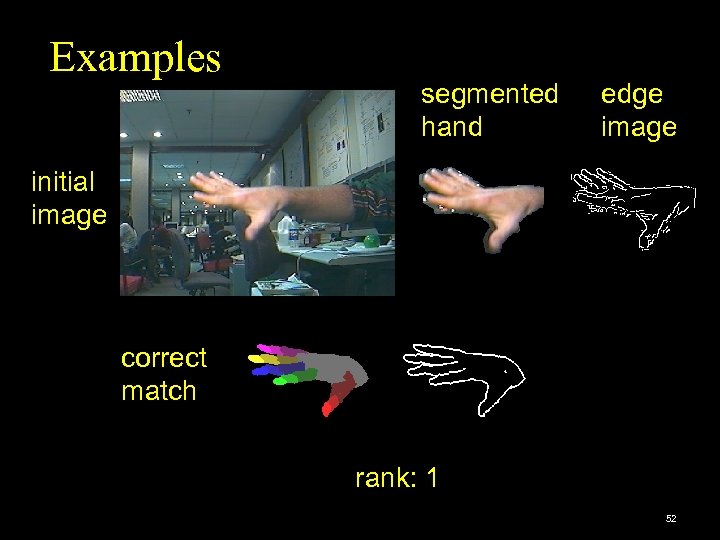

Examples
[Figure labels: segmented hand, edge image, initial image, correct match, rank: 1.]

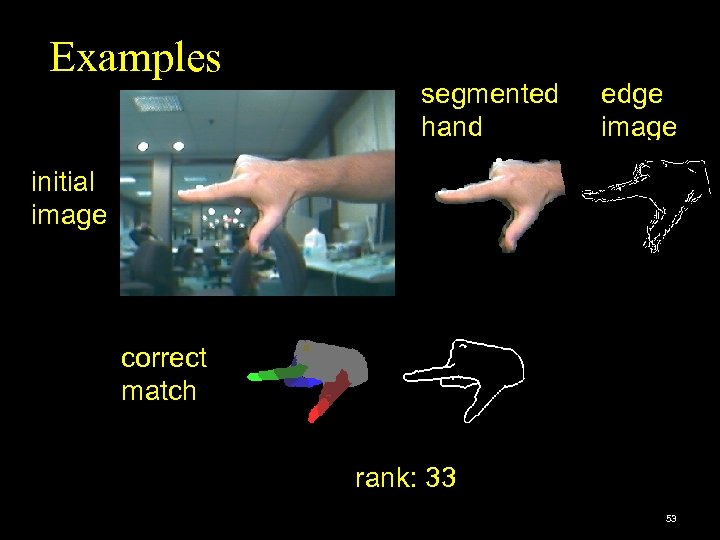

Examples
[Figure labels: segmented hand, edge image, initial image, correct match, rank: 33.]

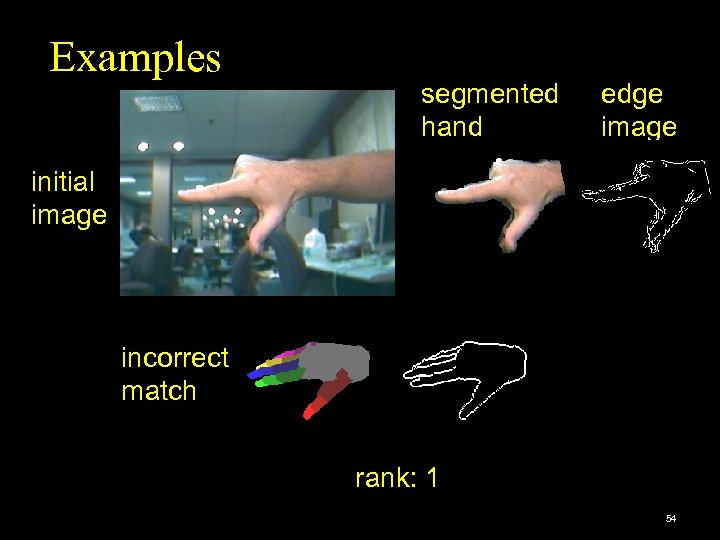

Examples
[Figure labels: segmented hand, edge image, initial image, incorrect match, rank: 1.]

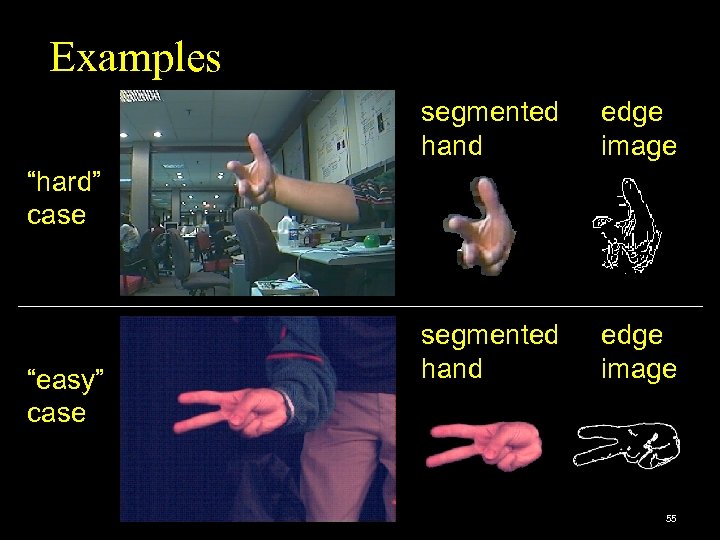

Examples
[Figure labels: segmented hand, edge image, “hard” case, “easy” case.]

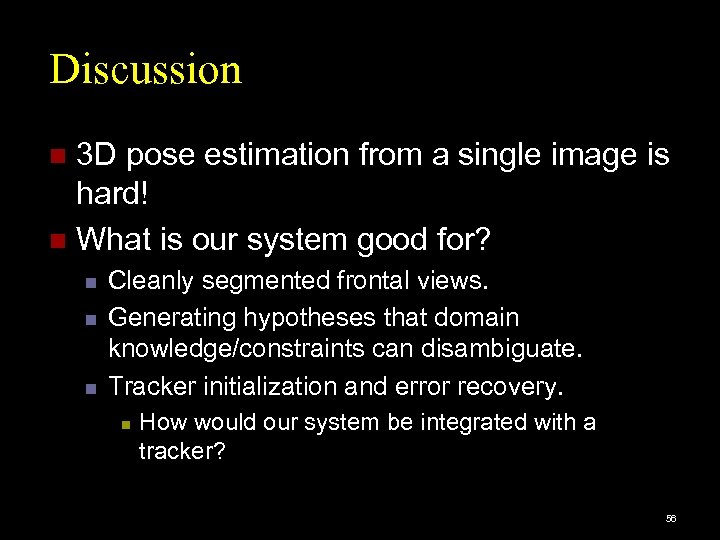

Discussion
- 3D pose estimation from a single image is hard!
- What is our system good for?
  - Cleanly segmented frontal views.
  - Generating hypotheses that domain knowledge/constraints can disambiguate.
  - Tracker initialization and error recovery.
    - How would our system be integrated with a tracker?

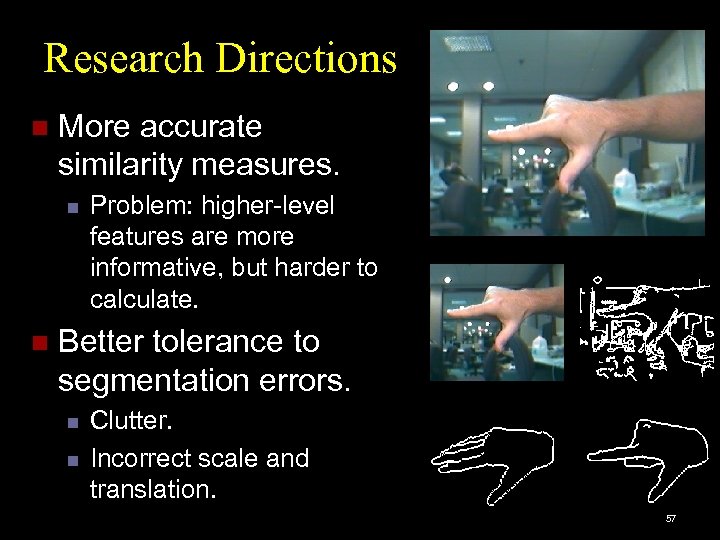

Research Directions
- More accurate similarity measures.
  - Problem: higher-level features are more informative, but harder to calculate.
- Better tolerance to segmentation errors.
  - Clutter.
  - Incorrect scale and translation.

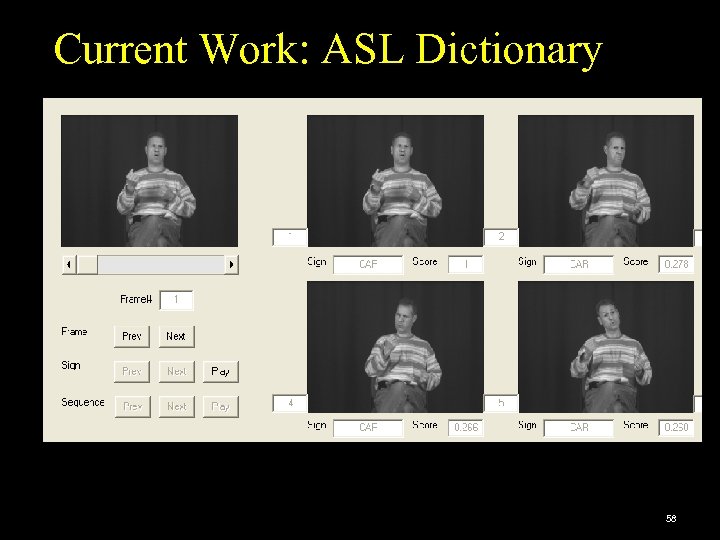

Current Work: ASL Dictionary
- Computer vision challenge:
  - Estimate hand pose and motion accurately and fast.
  - Our existing hand pose method leaves many questions unanswered.
- Machine learning challenge: learn models of signs.
  - Currently, in DSTW, there is no learning.
  - 4000 classes, 1-5 examples per sign.
- Data mining challenge:
  - Indexing methods for large numbers of classes.

END
- Comments, questions, complaints…
- E-mail: athitsos@uta.edu
- Web: http://crystal.uta.edu/~athitsos/