
- Number of slides: 88

George Mason University, Learning Agents Laboratory

Concepts, Issues, and Methodologies for Evaluating Mixed-initiative Intelligent Systems

Research Presentation by Ping Shyr
Research Director: Dr. Gheorghe Tecuci
May 3, 2004

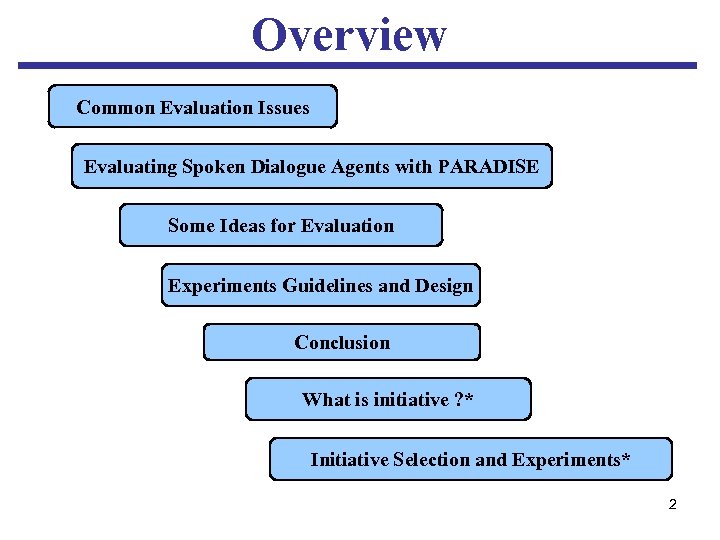

Overview

- Common Evaluation Issues
- Evaluating Spoken Dialogue Agents with PARADISE
- Some Ideas for Evaluation
- Experiments Guidelines and Design
- Conclusion
- What is initiative? *
- Initiative Selection and Experiments *

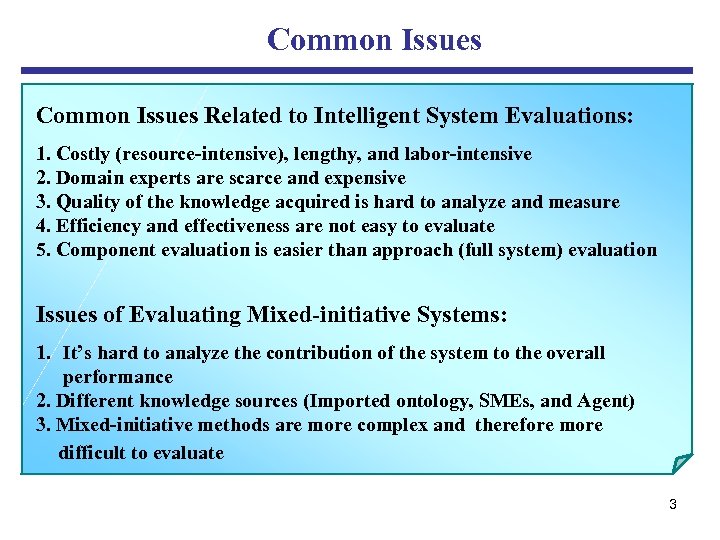

Common Issues Related to Intelligent System Evaluations:

1. Costly (resource-intensive), lengthy, and labor-intensive
2. Domain experts are scarce and expensive
3. Quality of the knowledge acquired is hard to analyze and measure
4. Efficiency and effectiveness are not easy to evaluate
5. Component evaluation is easier than approach (full system) evaluation

Issues of Evaluating Mixed-initiative Systems:

1. It is hard to analyze the contribution of the system to the overall performance
2. Different knowledge sources (imported ontology, SMEs, and agent)
3. Mixed-initiative methods are more complex and therefore more difficult to evaluate

General Evaluation Questions

1. When to conduct an evaluation?
2. What kind of evaluation should we use?
   - Summative/outcome evaluation? (predict/measure the final level of performance)
   - Diagnostic/process evaluation? (identify problems in the prototype/development system that may degrade the performance of the final system)
3. Where and when to collect evaluation data? Who should supply data?
4. Who should be involved in an evaluation?

Usability Evaluation

1. Usability is a critical factor in judging a system.
2. Usability evaluation provides important information for determining:
   - a) How easy the system (especially the human-computer/agent interface and interaction) is to learn and to use
   - b) The user’s confidence in the system results
3. Usability evaluation can also be used to predict whether an organization will use the system.

Issues in Usability Evaluation

1. To what extent does the interface meet acceptable Human Factors standards?
2. To what extent is the system easy to use?
3. To what extent is the system easy to learn how to use?
4. To what extent does the system decrease user workload?
5. To what extent does the explanation capability meet user needs?
6. Is the allocation of tasks to user and system appropriate?
7. Is the supporting documentation adequate?

Performance Evaluation

Performance evaluation helps us answer the question “Does the system meet user and organizational objectives and needs?”

It measures the system’s performance behavior.

Experimentation is the best (only) method that can appropriately evaluate the performance of the stable prototype and the final system.

Issues in Performance Evaluation

1. Is the system cost-effective?
2. To what extent does the system meet users’ needs?
3. To what extent does the system meet the organization’s needs?
4. How effective is the system in enhancing the user’s performance?
5. How effective is the system in enhancing organizational performance?
6. How effective is the system in [specific tasks]?

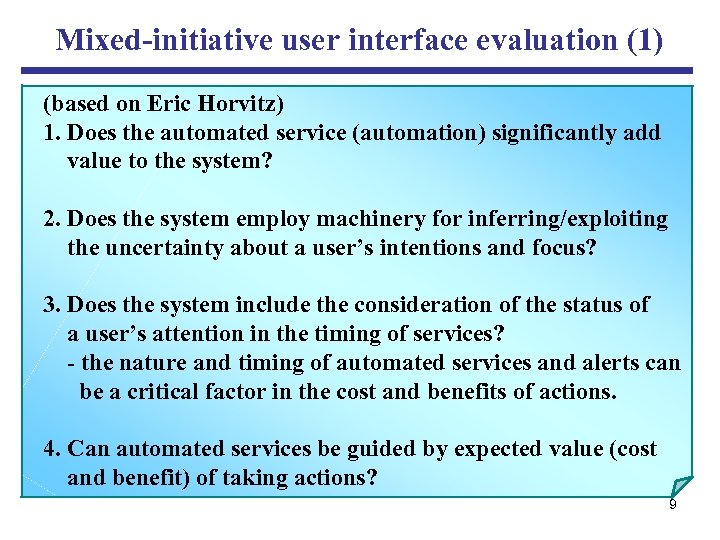

Mixed-initiative user interface evaluation (1) (based on Eric Horvitz)

1. Does the automated service (automation) significantly add value to the system?
2. Does the system employ machinery for inferring/exploiting the uncertainty about a user’s intentions and focus?
3. Does the system consider the status of a user’s attention in the timing of services? The nature and timing of automated services and alerts can be a critical factor in the costs and benefits of actions.
4. Can automated services be guided by the expected value (cost and benefit) of taking actions?

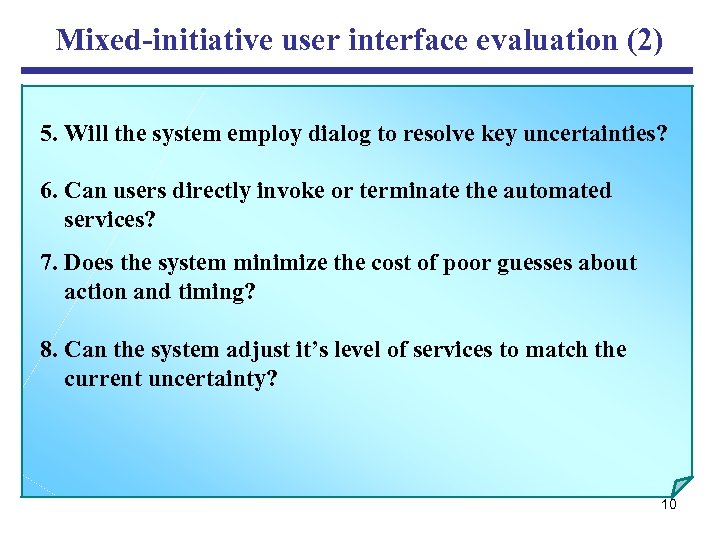

Mixed-initiative user interface evaluation (2)

5. Will the system employ dialog to resolve key uncertainties?
6. Can users directly invoke or terminate the automated services?
7. Does the system minimize the cost of poor guesses about action and timing?
8. Can the system adjust its level of services to match the current uncertainty?

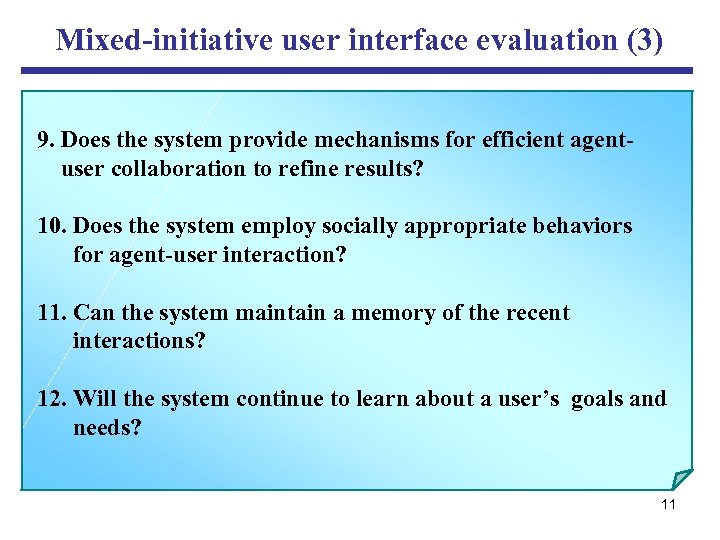

Mixed-initiative user interface evaluation (3)

9. Does the system provide mechanisms for efficient agent-user collaboration to refine results?
10. Does the system employ socially appropriate behaviors for agent-user interaction?
11. Can the system maintain a memory of the recent interactions?
12. Will the system continue to learn about a user’s goals and needs?

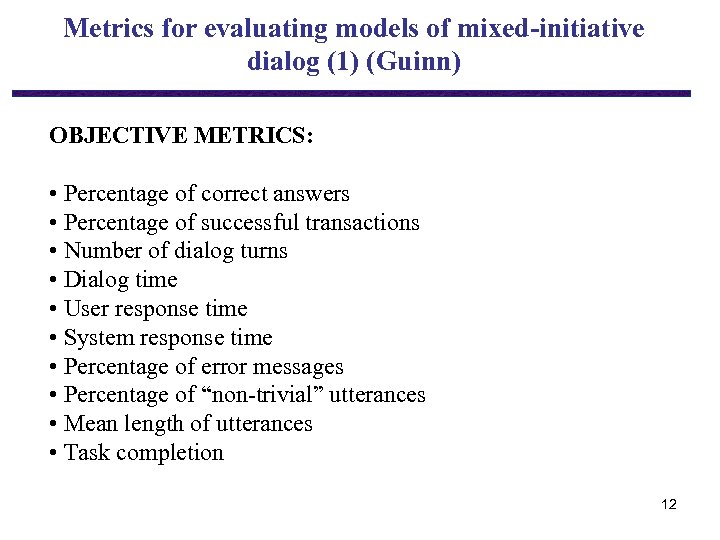

Metrics for evaluating models of mixed-initiative dialog (1) (Guinn)

OBJECTIVE METRICS:

- Percentage of correct answers
- Percentage of successful transactions
- Number of dialog turns
- Dialog time
- User response time
- System response time
- Percentage of error messages
- Percentage of “non-trivial” utterances
- Mean length of utterances
- Task completion

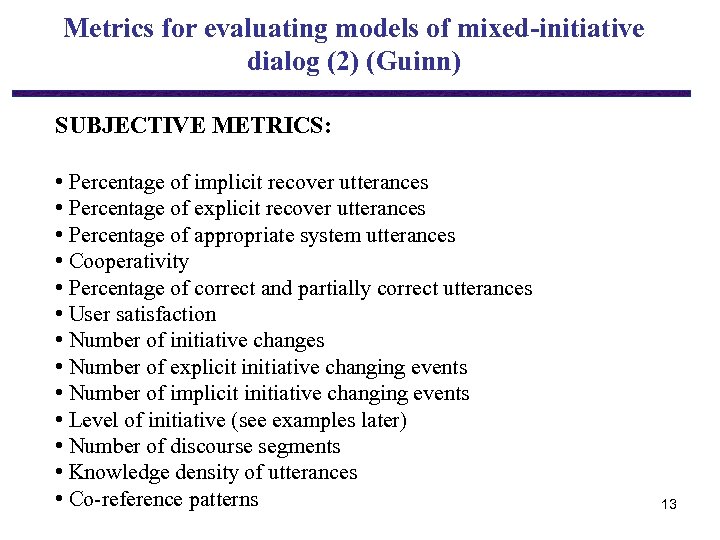

Metrics for evaluating models of mixed-initiative dialog (2) (Guinn)

SUBJECTIVE METRICS:

- Percentage of implicit recovery utterances
- Percentage of explicit recovery utterances
- Percentage of appropriate system utterances
- Cooperativity
- Percentage of correct and partially correct utterances
- User satisfaction
- Number of initiative changes
- Number of explicit initiative changing events
- Number of implicit initiative changing events
- Level of initiative (see examples later)
- Number of discourse segments
- Knowledge density of utterances
- Co-reference patterns

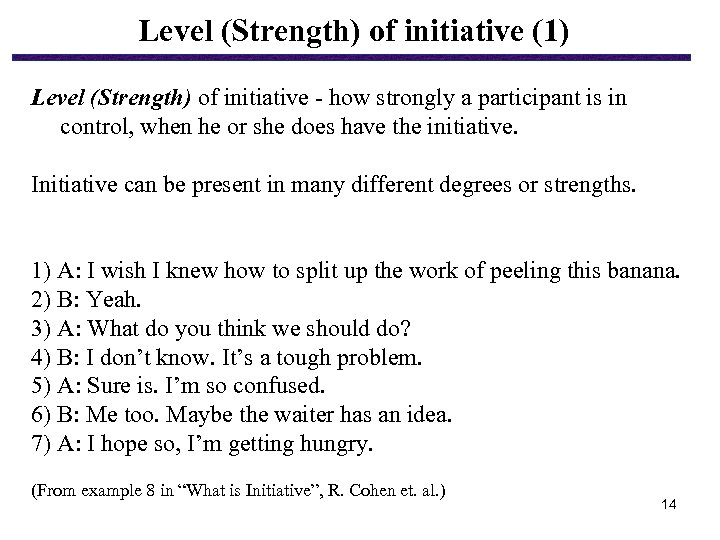

Level (Strength) of initiative (1)

Level (strength) of initiative: how strongly a participant is in control when he or she does have the initiative. Initiative can be present in many different degrees or strengths.

1) A: I wish I knew how to split up the work of peeling this banana.
2) B: Yeah.
3) A: What do you think we should do?
4) B: I don’t know. It’s a tough problem.
5) A: Sure is. I’m so confused.
6) B: Me too. Maybe the waiter has an idea.
7) A: I hope so, I’m getting hungry.

(From example 8 in “What is Initiative”, R. Cohen et al.)

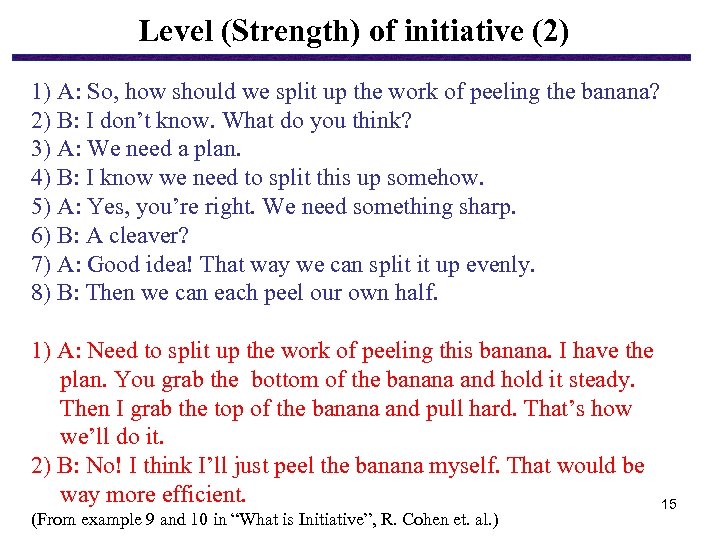

Level (Strength) of initiative (2)

First dialogue:

1) A: So, how should we split up the work of peeling the banana?
2) B: I don’t know. What do you think?
3) A: We need a plan.
4) B: I know we need to split this up somehow.
5) A: Yes, you’re right. We need something sharp.
6) B: A cleaver?
7) A: Good idea! That way we can split it up evenly.
8) B: Then we can each peel our own half.

Second dialogue:

1) A: Need to split up the work of peeling this banana. I have the plan. You grab the bottom of the banana and hold it steady. Then I grab the top of the banana and pull hard. That’s how we’ll do it.
2) B: No! I think I’ll just peel the banana myself. That would be way more efficient.

(From examples 9 and 10 in “What is Initiative”, R. Cohen et al.)


Evaluating Spoken Dialogue Agents with PARADISE (M. Walker)

PARADISE (PARAdigm for DIalogue System Evaluation) is a general framework for evaluating spoken dialogue agents. It:

- Decouples task requirements from an agent’s dialogue behaviors
- Supports comparisons among dialogue strategies
- Enables the calculation of performance over subdialogues and whole dialogues
- Specifies the relative contribution of various factors to performance
- Makes it possible to compare agents performing different tasks by normalizing for task complexity

Attribute Value Matrix (AVM)

An attribute value matrix (AVM) can represent many dialogue tasks. An AVM consists of the information that must be exchanged between the agent and the user during the dialogue, represented as a set of ordered pairs of attributes and their possible values.

Attribute-value pairs are annotated with the direction of information flow to represent who acquires the information.

Performance evaluation for an agent requires a corpus of dialogues between users and the agent, in which users execute a set of scenarios. Each scenario execution has a corresponding AVM instantiation indicating the task information that was actually obtained via the dialogue.

(from PARADISE, M. Walker)

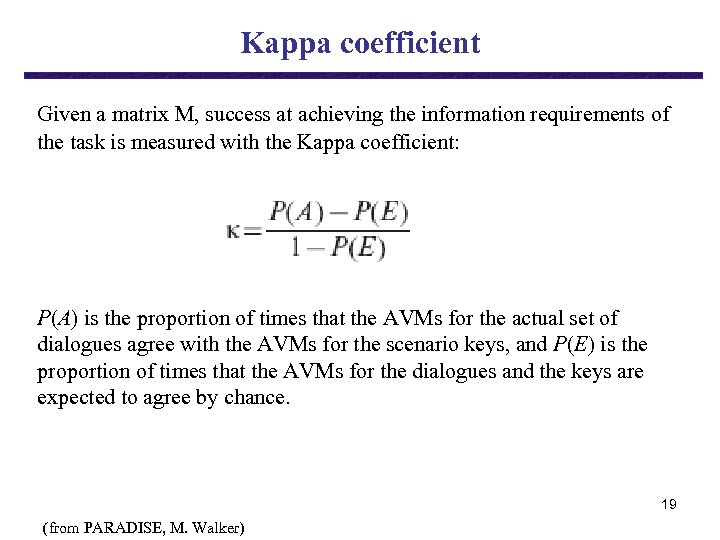

Kappa coefficient

Given a matrix M, success at achieving the information requirements of the task is measured with the Kappa coefficient:

$$\kappa = \frac{P(A) - P(E)}{1 - P(E)}$$

P(A) is the proportion of times that the AVMs for the actual set of dialogues agree with the AVMs for the scenario keys, and P(E) is the proportion of times that the AVMs for the dialogues and the keys are expected to agree by chance.

(from PARADISE, M. Walker)

Steps in PARADISE Methodology

1. Definition of a task and a set of scenarios;
2. Specification of the AVM task representation;
3. Experiments with alternate dialogue agents for the task;
4. Calculation of user satisfaction using surveys;
5. Calculation of task success using κ;
6. Calculation of dialogue cost using efficiency and qualitative measures;
7. Estimation of a performance function using linear regression and values for user satisfaction, κ, and dialogue costs;
8. Comparison with other agents/tasks to determine which factors generalize;
9. Refinement of the performance model.

(from PARADISE, M. Walker)

Objective Metrics for evaluating a dialog (Walker)

Objective metrics can be calculated without recourse to human judgment and, in many cases, can be logged by the spoken dialogue system so that they can be calculated automatically.

- Percentage of correct answers with respect to a set of reference answers
- Percentage of successful transactions or completed tasks
- Number of turns or utterances
- Dialogue time or task completion time
- Mean user response time
- Mean system response time
- Percentage of diagnostic error messages
- Percentage of “non-trivial” (more than one word) utterances
- Mean length of “non-trivial” utterances

(from PARADISE, M. Walker)

Subjective Metrics for evaluating a dialog (1)

- Percentage of implicit recovery utterances (where the system uses dialogue context to recover from errors of partial recognition or understanding)
- Percentage of explicit recovery utterances
- Percentage of contextually appropriate system utterances
- Cooperativity (the adherence of the system’s behavior to Grice’s conversational maxims [Grice, 1967])
- Percentage of correct and partially correct answers
- Percentage of appropriate and inappropriate system directive and diagnostic utterances
- User satisfaction (users’ perceptions about the usability of a system, usually assessed with multiple-choice questionnaires that ask users to rank the system’s performance on a range of usability features according to a scale of potential assessments)

(from PARADISE, M. Walker)

Subjective Metrics for evaluating a dialog (2)

Subjective metrics require subjects using the system and/or human evaluators to categorize the dialogue or utterances within the dialogue along various qualitative dimensions.

Because these metrics are based on human judgments, such judgments need to be reliable across judges in order to compete with the reproducibility of metrics based on objective criteria.

Subjective metrics can still be quantitative, as when a ratio between two subjective categories is computed.

(from PARADISE, M. Walker)

Limitations of Metrics & Current Methodologies for evaluating a dialog

- The use of reference answers makes it impossible to compare systems that use different dialogue strategies for carrying out the same task. This is because the reference answer approach requires canonical responses (i.e., a single “correct” answer) to be defined for every user utterance, even though there are potentially many correct answers.
- Interdependencies between metrics are not yet well understood.
- There is no way to trade off or combine the various metrics and to make generalizations.

(from PARADISE, M. Walker)

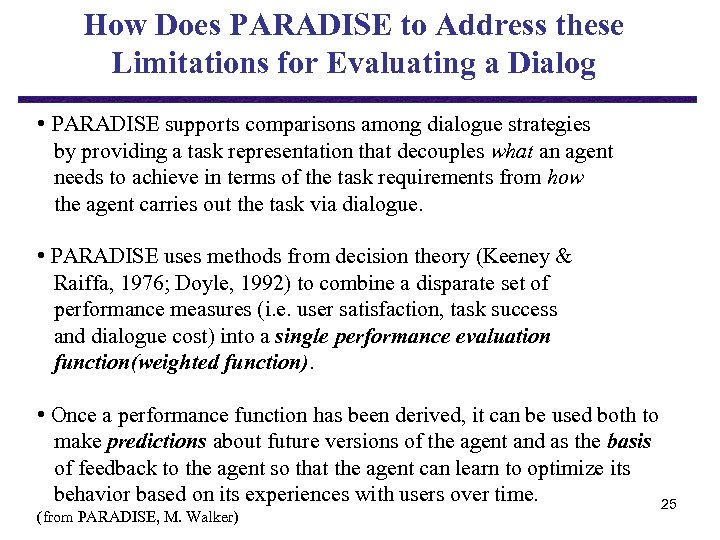

How Does PARADISE Address these Limitations for Evaluating a Dialog?

- PARADISE supports comparisons among dialogue strategies by providing a task representation that decouples what an agent needs to achieve in terms of the task requirements from how the agent carries out the task via dialogue.
- PARADISE uses methods from decision theory (Keeney & Raiffa, 1976; Doyle, 1992) to combine a disparate set of performance measures (i.e., user satisfaction, task success, and dialogue cost) into a single weighted performance evaluation function.
- Once a performance function has been derived, it can be used both to make predictions about future versions of the agent and as the basis of feedback to the agent, so that the agent can learn to optimize its behavior based on its experiences with users over time.

(from PARADISE, M. Walker)

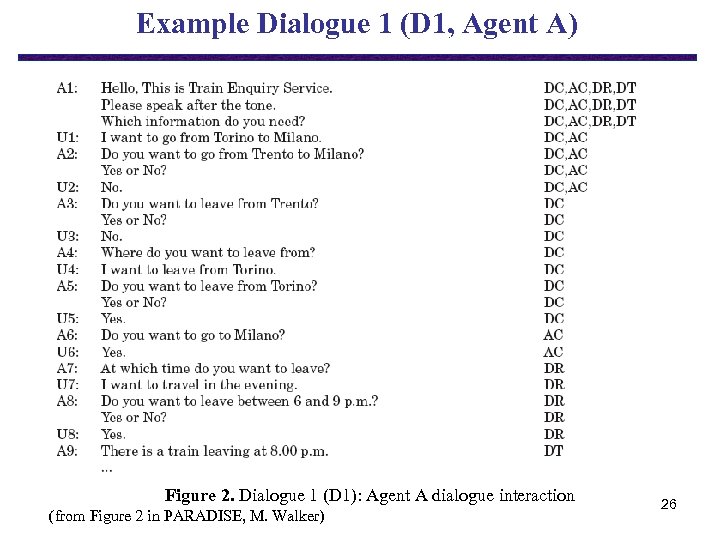

Example Dialogue 1 (D1, Agent A)

Figure 2. Dialogue 1 (D1): Agent A dialogue interaction (from Figure 2 in PARADISE, M. Walker)

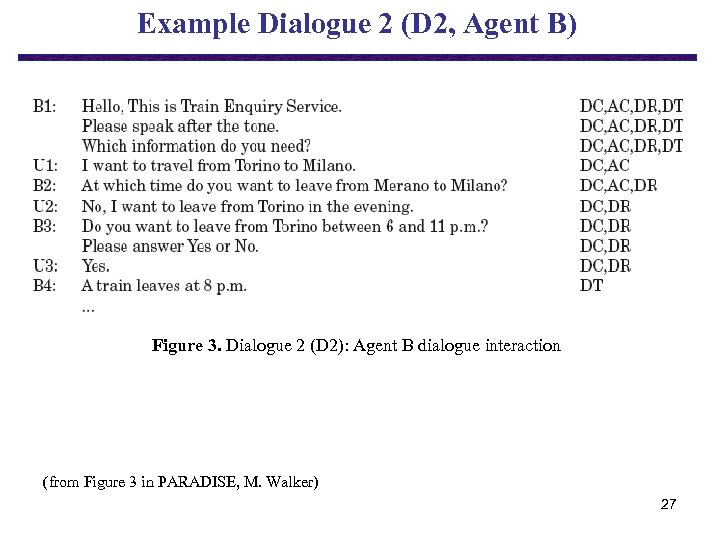

Example Dialogue 2 (D2, Agent B)

Figure 3. Dialogue 2 (D2): Agent B dialogue interaction (from Figure 3 in PARADISE, M. Walker)

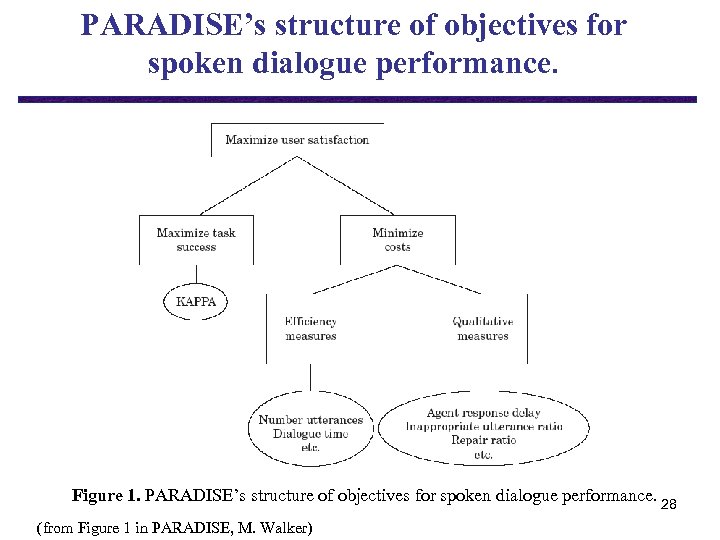

Figure 1. PARADISE’s structure of objectives for spoken dialogue performance. (from Figure 1 in PARADISE, M. Walker)

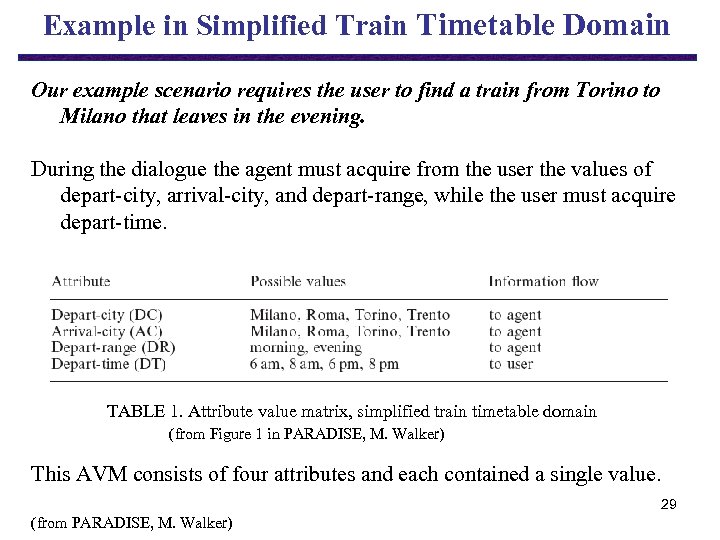

Example in Simplified Train Timetable Domain

Our example scenario requires the user to find a train from Torino to Milano that leaves in the evening. During the dialogue the agent must acquire from the user the values of depart-city, arrival-city, and depart-range, while the user must acquire depart-time.

TABLE 1. Attribute value matrix, simplified train timetable domain (from Figure 1 in PARADISE, M. Walker)

This AVM consists of four attributes, each of which contains a single value.

(from PARADISE, M. Walker)

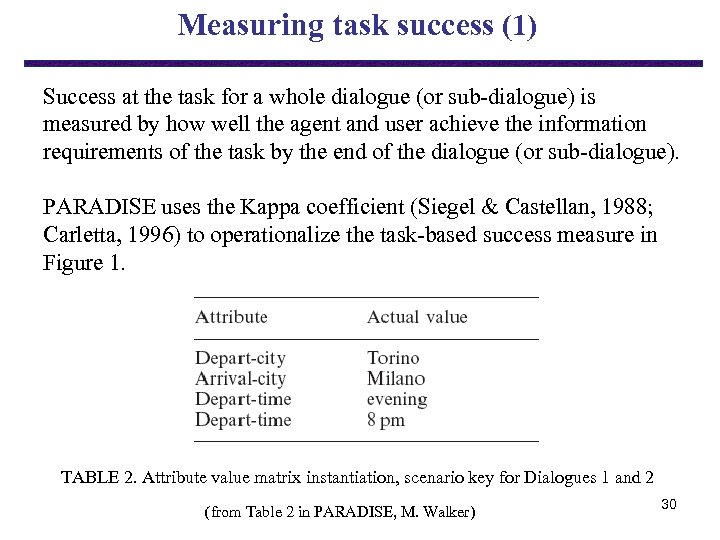

Measuring task success (1)

Success at the task for a whole dialogue (or sub-dialogue) is measured by how well the agent and user achieve the information requirements of the task by the end of the dialogue (or sub-dialogue).

PARADISE uses the Kappa coefficient (Siegel & Castellan, 1988; Carletta, 1996) to operationalize the task-based success measure in Figure 1.

TABLE 2. Attribute value matrix instantiation, scenario key for Dialogues 1 and 2 (from Table 2 in PARADISE, M. Walker)

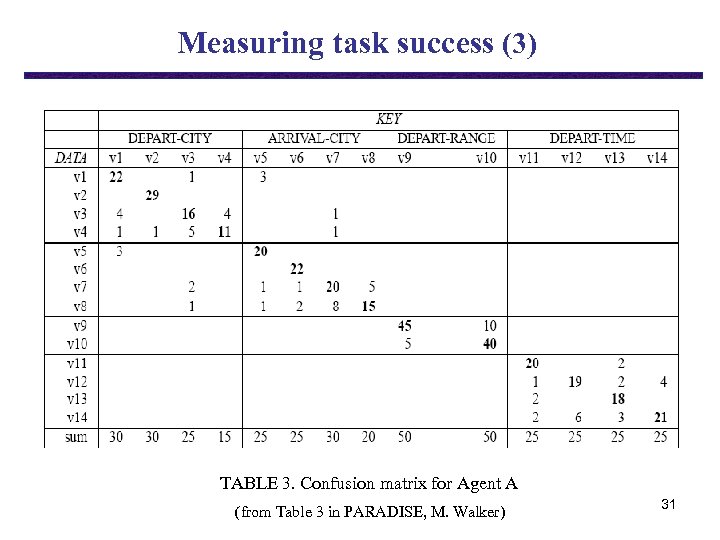

Measuring task success (3)

TABLE 3. Confusion matrix for Agent A (from Table 3 in PARADISE, M. Walker)

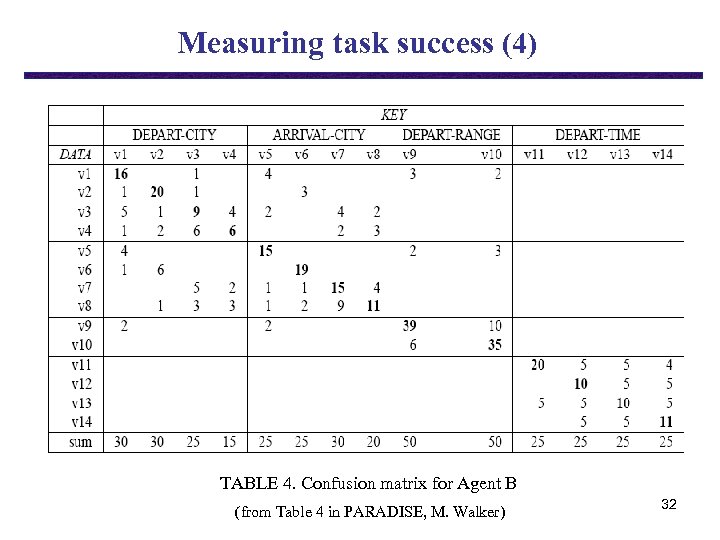

Measuring task success (4)

TABLE 4. Confusion matrix for Agent B (from Table 4 in PARADISE, M. Walker)

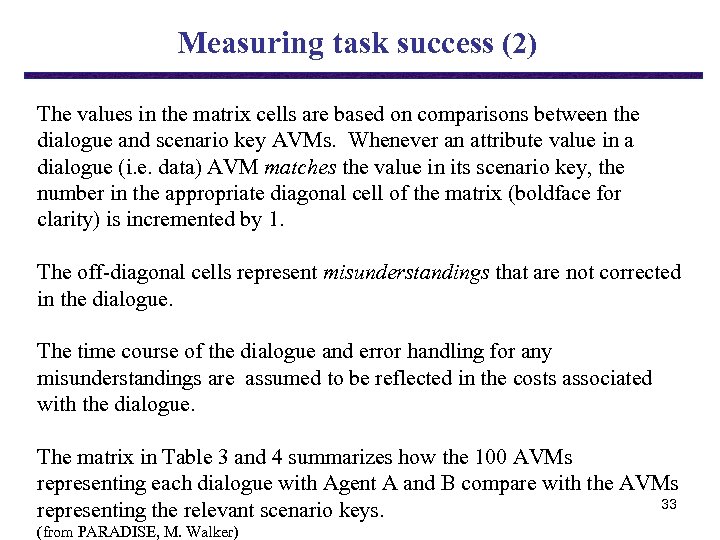

Measuring task success (2)

The values in the matrix cells are based on comparisons between the dialogue and scenario key AVMs. Whenever an attribute value in a dialogue (i.e., data) AVM matches the value in its scenario key, the number in the appropriate diagonal cell of the matrix (boldface for clarity) is incremented by 1.

The off-diagonal cells represent misunderstandings that are not corrected in the dialogue.

The time course of the dialogue and error handling for any misunderstandings are assumed to be reflected in the costs associated with the dialogue.

The matrices in Tables 3 and 4 summarize how the 100 AVMs representing each dialogue with Agents A and B compare with the AVMs representing the relevant scenario keys.

(from PARADISE, M. Walker)
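The counting rule above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function name, the row/column orientation (rows for dialogue values, columns for key values), and the sample observations are hypothetical, and only Torino and Milano are city names taken from the slides.

```python
def build_confusion_matrix(values, observations):
    """Count (dialogue value, key value) pairs into a square matrix.

    Rows index the value found in the dialogue AVM and columns index the
    value in the scenario key, so correct exchanges land on the diagonal.
    """
    index = {value: i for i, value in enumerate(values)}
    matrix = [[0] * len(values) for _ in values]
    for dialogue_value, key_value in observations:
        matrix[index[dialogue_value]][index[key_value]] += 1
    return matrix


# Hypothetical depart-city data: (value in dialogue AVM, value in scenario key).
cities = ["Milano", "Roma", "Torino", "Trento"]
observations = [
    ("Torino", "Torino"),  # understood correctly
    ("Torino", "Torino"),  # understood correctly
    ("Trento", "Torino"),  # misunderstanding never corrected in the dialogue
    ("Milano", "Milano"),  # understood correctly
]
matrix = build_confusion_matrix(cities, observations)
for row in matrix:
    print(row)
```

Keeping key values in the columns matches the later slide that estimates chance agreement from the column sums.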

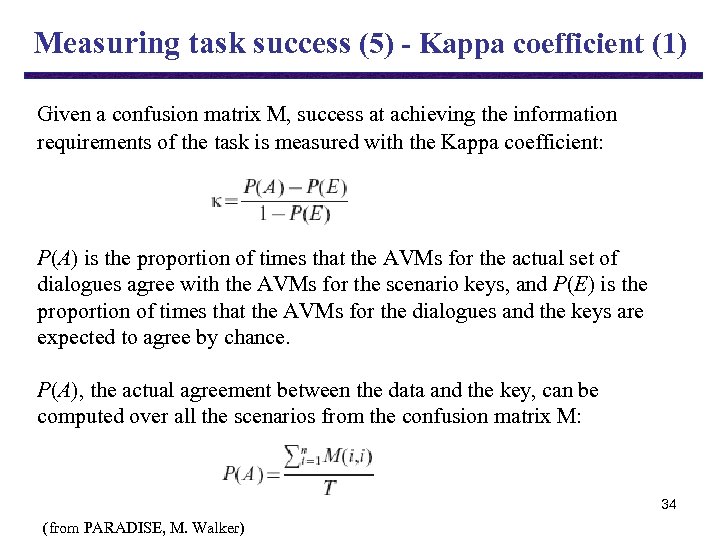

Measuring task success (5) - Kappa coefficient (1)

Given a confusion matrix M, success at achieving the information requirements of the task is measured with the Kappa coefficient:

$$\kappa = \frac{P(A) - P(E)}{1 - P(E)}$$

P(A) is the proportion of times that the AVMs for the actual set of dialogues agree with the AVMs for the scenario keys, and P(E) is the proportion of times that the AVMs for the dialogues and the keys are expected to agree by chance.

P(A), the actual agreement between the data and the key, can be computed over all the scenarios from the confusion matrix M:

$$P(A) = \frac{\sum_{i=1}^{n} M(i,i)}{T}$$

(from PARADISE, M. Walker)

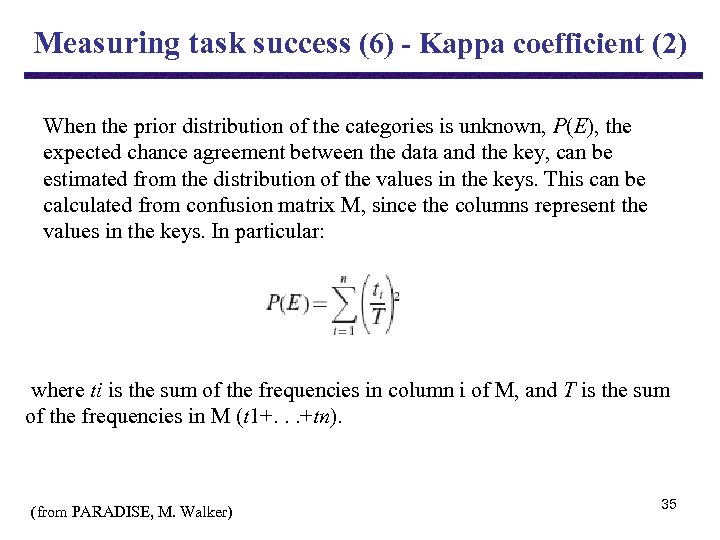

Measuring task success (6) - Kappa coefficient (2)

When the prior distribution of the categories is unknown, P(E), the expected chance agreement between the data and the key, can be estimated from the distribution of the values in the keys. This can be calculated from confusion matrix M, since the columns represent the values in the keys. In particular:

$$P(E) = \sum_{i=1}^{n} \left(\frac{t_i}{T}\right)^2$$

where $t_i$ is the sum of the frequencies in column i of M, and T is the sum of the frequencies in M ($t_1 + \dots + t_n$).

(from PARADISE, M. Walker)

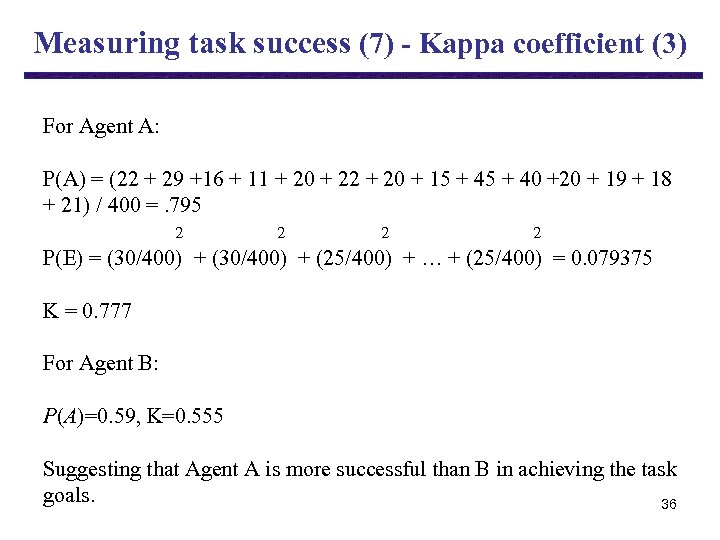

Measuring task success (7) - Kappa coefficient (3)

For Agent A:

$$P(A) = \frac{22 + 29 + 16 + 11 + 20 + 22 + 20 + 15 + 45 + 40 + 20 + 19 + 18 + 21}{400} = 0.795$$

$$P(E) = \left(\frac{30}{400}\right)^2 + \left(\frac{25}{400}\right)^2 + \dots + \left(\frac{25}{400}\right)^2 = 0.079375$$

$$\kappa = 0.777$$

For Agent B: $P(A) = 0.59$, $\kappa = 0.555$.

This suggests that Agent A is more successful than Agent B in achieving the task goals.
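As a cross-check of the figures on this slide, here is a minimal Python sketch of the Kappa calculation, with P(A) taken from the diagonal and P(E) from the squared column proportions. The function names and the 2×2 toy matrix are illustrative assumptions. The Agent B line reuses Agent A's P(E), which the slide does not state explicitly but which reproduces the quoted value.

```python
def kappa_from_rates(p_agree, p_chance):
    """Kappa = (P(A) - P(E)) / (1 - P(E))."""
    return (p_agree - p_chance) / (1 - p_chance)


def kappa_from_matrix(matrix):
    """Compute Kappa from a square confusion matrix (columns = key values)."""
    size = len(matrix)
    total = sum(sum(row) for row in matrix)                 # T
    p_agree = sum(matrix[i][i] for i in range(size)) / total
    column_sums = [sum(row[c] for row in matrix) for c in range(size)]  # t_i
    p_chance = sum((t / total) ** 2 for t in column_sums)
    return kappa_from_rates(p_agree, p_chance)


# Toy matrix (made up): P(A) = 0.8, P(E) = 0.5.
toy = [[40, 10], [10, 40]]
print(round(kappa_from_matrix(toy), 3))               # 0.6

# Values quoted on the slide.
print(round(kappa_from_rates(0.795, 0.079375), 3))    # Agent A: 0.777
print(round(kappa_from_rates(0.59, 0.079375), 3))     # Agent B: 0.555
```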

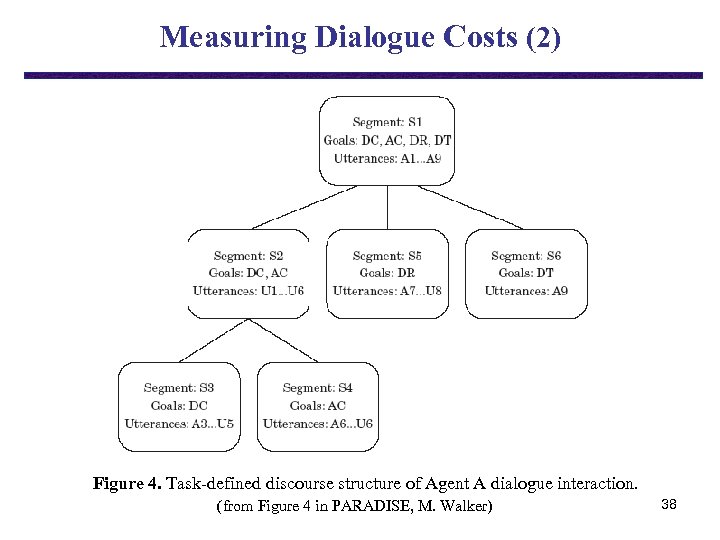

Measuring Dialogue Costs (1)

PARADISE represents each cost measure as a function $c_i$ that can be applied to any (sub)dialogue.

First, consider the simplest case of calculating efficiency measures over a whole dialogue. For example, let $c_1$ be the total number of utterances. For the whole dialogue D1 in Figure 2, $c_1(D1)$ is 23 utterances. For the whole dialogue D2 in Figure 3, $c_1(D2)$ is 10 utterances.

Tagging by AVM attributes is required to calculate costs over subdialogues, since for any subdialogue the task attributes define the subdialogue. For subdialogue S4 in Figure 4, which is about the attribute arrival-city and consists of utterances A6 and U6, $c_1(S4)$ is 2.

(from PARADISE, M. Walker)

Measuring Dialogue Costs (2)

Figure 4. Task-defined discourse structure of Agent A dialogue interaction. (from Figure 4 in PARADISE, M. Walker)

Measuring Dialogue Costs (3)

Tagging by AVM attributes is also required to calculate the cost of some of the qualitative measures, such as the number of repair utterances.

For example, let $c_2$ be the number of repair utterances. The repair utterances for the whole dialogue D1 in Figure 2 are A3 through U6, thus $c_2(D1)$ is 10 utterances and $c_2(S4)$ is two utterances.

The repair utterance for the whole dialogue D2 in Figure 3 is U2, but note that according to the AVM task tagging, U2 simultaneously addresses the information goals for depart-range. In general, if an utterance U contributes to the information goals of N different attributes, each attribute accounts for 1/N of any costs derivable from U. Thus, $c_2(D2)$ is 0.5.

(from PARADISE, M. Walker)
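The 1/N apportioning rule can be sketched as follows. The representation is an assumption made for illustration: each utterance is a list of `(attribute, is_repair)` tags, and the choice of arrival-city as the attribute that U2 repairs is hypothetical, since the slide names only depart-range.

```python
def repair_cost(utterances):
    """Sum repair costs, giving each tagged attribute 1/N of an utterance.

    Each utterance is a list of (attribute, is_repair) tags; an utterance
    tagged with N attributes contributes 1/N per attribute that is a repair.
    """
    cost = 0.0
    for tags in utterances:
        if not tags:
            continue  # untagged utterances carry no attributable cost
        share = 1 / len(tags)
        cost += sum(share for _, is_repair in tags if is_repair)
    return cost


# U2 in D2: repairs one attribute (hypothetically arrival-city) while also
# supplying new information for depart-range, so only half of it is repair.
u2 = [("arrival-city", True), ("depart-range", False)]
print(repair_cost([u2]))        # 0.5

# Subdialogue S4 in D1: utterances A6 and U6, both repairs on arrival-city.
s4 = [[("arrival-city", True)], [("arrival-city", True)]]
print(repair_cost(s4))          # 2.0
```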

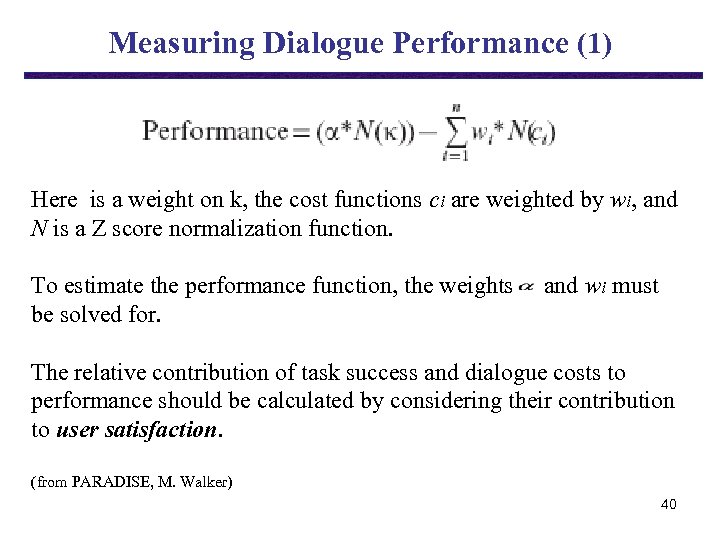

Measuring Dialogue Performance (1)

$$\text{Performance} = (\alpha \cdot N(\kappa)) - \sum_{i=1}^{n} w_i \cdot N(c_i)$$

Here α is a weight on κ, the cost functions $c_i$ are weighted by $w_i$, and N is a Z score normalization function.

To estimate the performance function, the weights α and $w_i$ must be solved for.

The relative contribution of task success and dialogue costs to performance should be calculated by considering their contribution to user satisfaction.

(from PARADISE, M. Walker)
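A minimal sketch of such a performance function, assuming it takes the form of a weighted, Z-score-normalized task-success term minus weighted, normalized costs. The function names, the use of the population standard deviation, the weights, and the two-dialogue data set are all illustrative assumptions and not values from the PARADISE study.

```python
from statistics import mean, pstdev


def z_scores(values):
    """Z score normalization: subtract the mean, divide by the std. deviation."""
    mu = mean(values)
    sigma = pstdev(values)  # assumption: population standard deviation
    return [(v - mu) / sigma for v in values]


def performance(kappas, costs, alpha, weights):
    """Per-dialogue performance: alpha * N(kappa) - sum_i w_i * N(c_i).

    kappas:  one task-success value per dialogue
    costs:   one list per cost measure, each with one value per dialogue
    """
    norm_kappa = z_scores(kappas)
    norm_costs = [z_scores(c) for c in costs]
    return [
        alpha * norm_kappa[d]
        - sum(w * nc[d] for w, nc in zip(weights, norm_costs))
        for d in range(len(kappas))
    ]


# Two hypothetical dialogues: the second has higher kappa and fewer repairs.
scores = performance(
    kappas=[0.2, 0.8],
    costs=[[20, 10]],   # a single cost measure, e.g. number of repair utterances
    alpha=1.0,
    weights=[1.0],
)
print(scores)
```

Normalizing every term puts task success and costs on a common scale, so the weights can be compared directly.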

Measuring Dialogue Performance (2)

User satisfaction is typically calculated with surveys that ask users to specify the degree to which they agree with one or more statements about the behavior or the performance of the system. A single user satisfaction measure can be calculated from a single question, as the mean of a set of ratings, or as a linear combination of a set of ratings.

The weights α and $w_i$ can be solved for using multivariate linear regression. Multivariate linear regression produces a set of coefficients (weights) describing the relative contribution of each predictor factor in accounting for the variance in a predicted factor.

Regression of the Table 5 data for both sets of users tests which of the factors κ, #utt, and #rep most strongly predict US.

(from PARADISE, M. Walker)
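To make the regression step concrete, the sketch below fits least-squares weights with the normal equations in plain Python. It is a simplified stand-in for a statistics package: it assumes the predictors and the satisfaction score are already normalized so that no intercept is needed, it performs no significance testing, and the data are synthetic values generated from made-up weights, not the Table 5 data.

```python
def fit_weights(rows, targets):
    """Ordinary least squares without an intercept.

    Solves (X^T X) w = X^T y by Gauss-Jordan elimination with partial
    pivoting. Raises ZeroDivisionError if the predictors are collinear.
    """
    k = len(rows[0])
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(k)] for i in range(k)]
    xty = [sum(r[i] * t for r, t in zip(rows, targets)) for i in range(k)]
    aug = [xtx[i] + [xty[i]] for i in range(k)]  # augmented matrix [X^T X | X^T y]
    for col in range(k):
        pivot = max(range(col, k), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        scale = aug[col][col]
        aug[col] = [v / scale for v in aug[col]]
        for r in range(k):
            if r != col:
                factor = aug[r][col]
                aug[r] = [a - factor * b for a, b in zip(aug[r], aug[col])]
    return [aug[i][k] for i in range(k)]


# Synthetic predictors per dialogue: (normalized kappa, normalized #rep).
rows = [(1.0, 0.0), (0.0, 1.0), (1.0, 1.0), (2.0, 1.0)]
# Synthetic satisfaction scores generated from made-up weights 0.4 and -0.8.
targets = [0.4 * k - 0.8 * rep for k, rep in rows]

weights = fit_weights(rows, targets)
print([round(w, 3) for w in weights])   # [0.4, -0.8]
```

A negative coefficient on a cost predictor corresponds to a positive weight $w_i$ in a performance function that subtracts costs.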

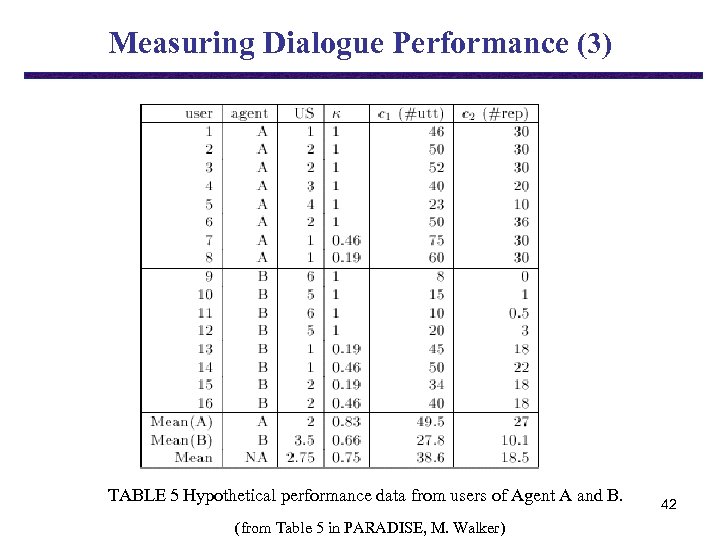

Measuring Dialogue Performance (3)

TABLE 5. Hypothetical performance data from users of Agents A and B. (from Table 5 in PARADISE, M. Walker)

Measuring Dialogue Performance (4)

In this illustrative example, the results of the regression with all factors included show that only κ and #rep are significant (p < 0.02).

In order to develop a performance function estimate that includes only significant factors and eliminates redundancies, a second regression including only the significant factors must then be done. In this case, a second regression yields the predictive equation:

The mean performance of A is −0.44 and the mean performance of B is 0.44, suggesting that Agent B may perform better than Agent A overall.

The evaluator must then, however, test these performance differences for statistical significance. In this case, a t-test shows that the differences are only significant at the p < 0.07 level, indicating a trend only.

(from PARADISE, M. Walker)

Experimental Design (1)

Both experiments required every user to complete a set of application tasks (3 tasks with 2 subtasks; 4 tasks) in conversations with a particular version of the agent.

Instructions to the users were given on a set of web pages; there was one page for each experimental task.

Each web page consisted of a brief general description of the functionality of the agent, a list of hints for talking to the agent, a task description, and information on how to call the agent.

(from PARADISE, M. Walker)

Experimental Design (2)

Each page also contained a form for specifying information acquired from the agent during the dialogue, and a survey, to be filled out after task completion, designed to probe the user’s satisfaction with the system.

Users read the instructions in their offices before calling the agent from their office phone.

All of the dialogues were recorded. The agent’s dialogue behavior was logged in terms of entering and exiting each state in the state transition table for the dialogue.

Users were required to fill out a web page form after each

Examples of survey questions (1)

- Was SYSTEM_NAME easy to understand in this conversation? (text-to-speech (TTS) Performance)
- In this conversation, did SYSTEM_NAME understand what you said? (automatic speech recognition (ASR) Performance)
- In this conversation, was it easy to find the message you wanted? (Task Ease)
- Was the pace of interaction with SYSTEM_NAME appropriate in this conversation? (Interaction Pace)
- How often was SYSTEM_NAME sluggish and slow to reply to you in this conversation? (System Response)

(from PARADISE, M. Walker)

Examples of survey questions (2)

- Did SYSTEM_NAME work the way you expected him to in this conversation? (Expected Behavior)
- In this conversation, how did SYSTEM_NAME’s voice interface compare to the touch-tone interface to voice mail? (Comparable Interface)
- From your current experience with using SYSTEM_NAME to get your e-mail, do you think you’d use SYSTEM_NAME regularly to access your mail when you are away from your desk? (Future Use)

(from PARADISE, M. Walker)

Summary of Measurements

- The User Satisfaction score is used as a measure of user satisfaction.
- Kappa measures actual task success.
- The measures of System Turns, User Turns, and Elapsed Time (the total time of the interaction) are efficiency cost measures.
- The qualitative measures are Completed, Barge Ins (how many times users barged in on agent utterances), Timeout Prompts (the number of timeout prompts that were played), ASR Rejections (the number of times that ASR rejected the user’s utterance), Help Requests, and Mean Recognition Score.

(from PARADISE, M. Walker)

Using the performance equation

One potentially broad use of the PARADISE performance function is as feedback to the agent, so that the agent can learn how to optimize its behavior automatically.

The basic idea is to apply the performance function to any dialogue $D_i$ in which the agent conversed with a user. Each dialogue then has an associated real-valued performance value $P_i$, which represents the performance of the agent for that dialogue.

If the agent can make different choices in the dialogue about what to do in various situations, this performance feedback can be used to help the agent learn automatically, over time, which choices are optimal.

Learning could be either on-line, so that the agent tracks its behavior on a dialogue-by-dialogue basis, or off-line, where the agent collects a lot of experience and then tries to learn from it.

(from PARADISE, M. Walker)


How to Evaluate a Mixed-initiative System?

Mike Pazzani’s caution: Don’t lose sight of the goal.

- The metrics are just approximations of the goal.
- Optimizing the metric may not optimize the goal.

Usability Evaluation - Sweeny et al., 1993 (1)

Three dimensions of usability evaluation:

1. Evaluation approach: user-based, expert-based, and theory-based approaches
2. Type of evaluation: diagnostic methods, summative evaluation, and certification approach
3. Time of evaluation: specification, rapid prototype, high-fidelity prototype, and operational system

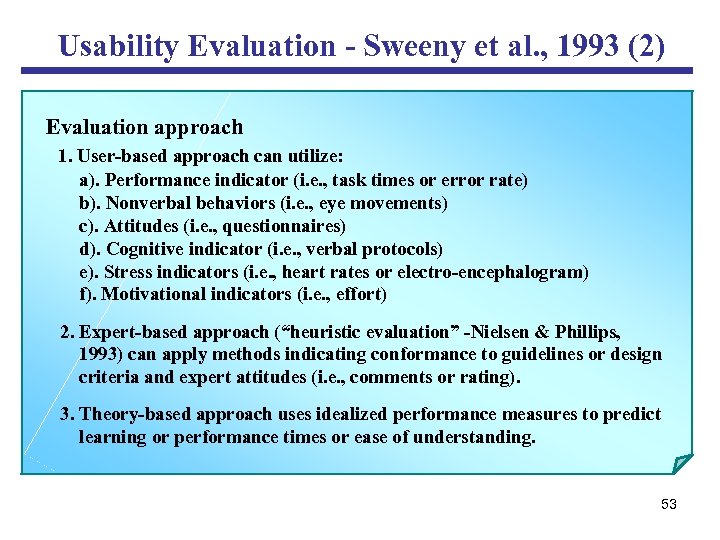

Usability Evaluation - Sweeny et al., 1993 (2)

Evaluation approach

1. The user-based approach can utilize:
   - a) Performance indicators (e.g., task times or error rates)
   - b) Nonverbal behaviors (e.g., eye movements)
   - c) Attitudes (e.g., questionnaires)
   - d) Cognitive indicators (e.g., verbal protocols)
   - e) Stress indicators (e.g., heart rates or electro-encephalogram)
   - f) Motivational indicators (e.g., effort)
2. The expert-based approach (“heuristic evaluation”, Nielsen & Phillips, 1993) can apply methods indicating conformance to guidelines or design criteria, and expert attitudes (e.g., comments or ratings).
3. The theory-based approach uses idealized performance measures to predict learning or performance times or ease of understanding.

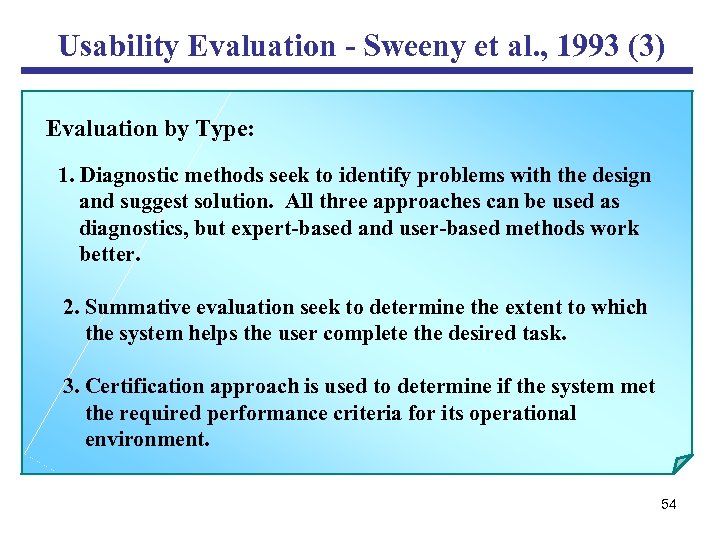

Usability Evaluation - Sweeny et al., 1993 (3)

Evaluation by type:

1. Diagnostic methods seek to identify problems with the design and suggest solutions. All three approaches can be used as diagnostics, but expert-based and user-based methods work better.
2. Summative evaluation seeks to determine the extent to which the system helps the user complete the desired task.
3. The certification approach is used to determine whether the system meets the required performance criteria for its operational environment.

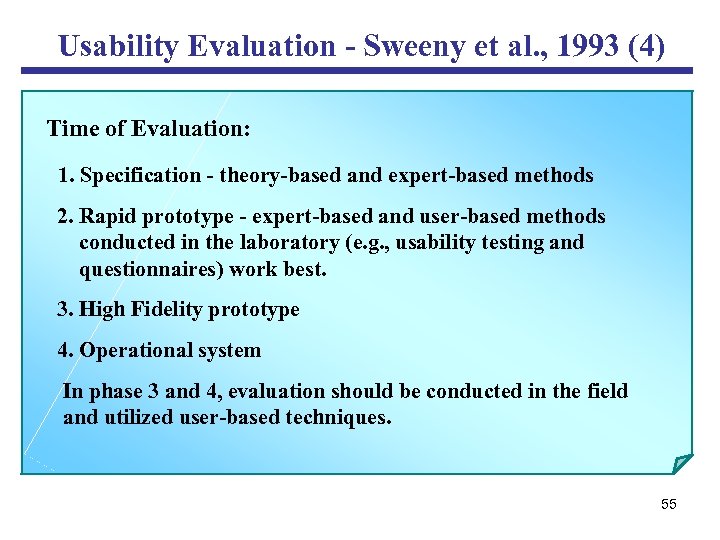

Usability Evaluation - Sweeny et al., 1993 (4)

Time of evaluation:

1. Specification: theory-based and expert-based methods
2. Rapid prototype: expert-based and user-based methods conducted in the laboratory (e.g., usability testing and questionnaires) work best
3. High-fidelity prototype
4. Operational system

In phases 3 and 4, evaluation should be conducted in the field and should utilize user-based techniques.

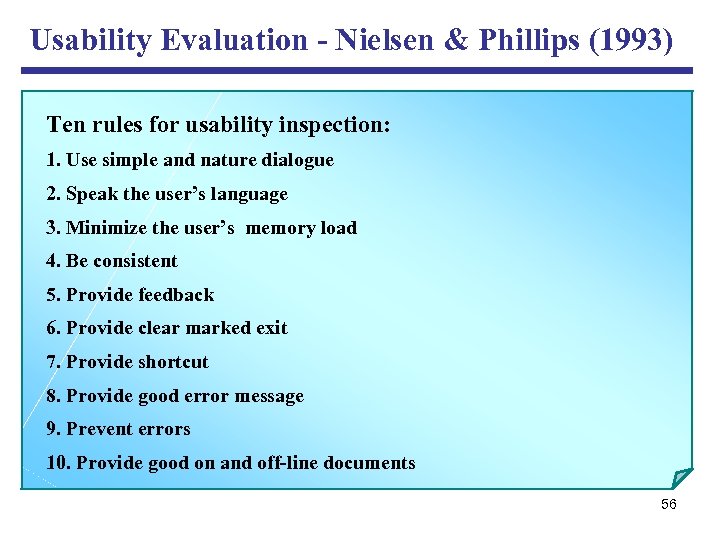

Usability Evaluation - Nielsen & Phillips (1993)

Ten rules for usability inspection:

1. Use simple and natural dialogue
2. Speak the user’s language
3. Minimize the user’s memory load
4. Be consistent
5. Provide feedback
6. Provide clearly marked exits
7. Provide shortcuts
8. Provide good error messages
9. Prevent errors
10. Provide good on-line and off-line documentation

Subjective Usability Evaluation Methods

Nine subjective evaluation methods:

1. Thinking aloud
2. Observation
3. Questionnaires
4. Interviews
5. Focus groups (5-9 participants, led by a moderator who is a member of the evaluation team)
6. User feedback
7. User diaries and log-books
8. Teaching back (having users try to teach others how to use a system)
9. Videotaping

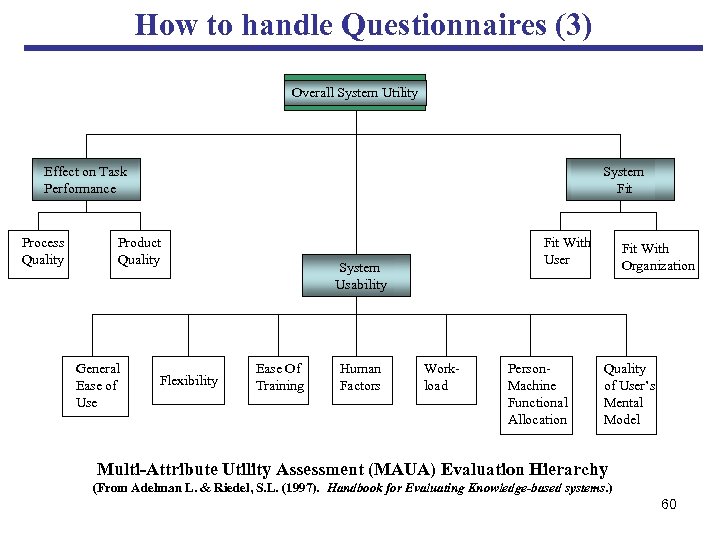

How to handle Questionnaires (1)

Adelman & Riedel (1997) suggested using the Multi-Attribute Utility Assessment (MAUA) approach:

1. The overall system utility is decomposed into three categories (dimensions):
   - Effect on task performance
   - System usability
   - System fit
2. Each dimension is decomposed into different criteria (e.g., fit with user and fit with organization in the system fit dimension), and each criterion may be further decomposed into specific attributes.

How to handle Questionnaires (2)

3. There are at least two questions for each bottom-level criterion and attribute in the hierarchy.
4. Each dimension is weighted equally, each criterion is weighted equally within its dimension, and each attribute is weighted equally within its criterion.
5. A simple arithmetic operation can be used to score and weight the results.
6. Sensitivity analysis can be performed by determining how sensitive the overall utility score is to changes in the relative weights on the criteria and dimensions, or to the system scores on the criteria and attributes.
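The "simple arithmetic operation" in step 5 can be read as an equal-weight average taken level by level up the hierarchy. The sketch below illustrates that reading only. The nested-dictionary representation, the function name, and the questionnaire scores (imagined on a 1-7 scale) are hypothetical and are not taken from Adelman & Riedel.

```python
def utility(node):
    """Equal-weight average up a MAUA hierarchy.

    A node is either a list of questionnaire scores (a bottom-level criterion
    or attribute) or a dict mapping child names to child nodes.
    """
    if isinstance(node, dict):
        child_scores = [utility(child) for child in node.values()]
        return sum(child_scores) / len(child_scores)
    return sum(node) / len(node)


# Hypothetical scores: two questions per bottom-level criterion.
hierarchy = {
    "Effect on task performance": {
        "Process quality": [5, 6],
        "Product quality": [4, 6],
    },
    "System usability": {
        "General ease of use": [6, 6],
        "Flexibility": [3, 5],
    },
    "System fit": {
        "Fit with user": [5, 5],
        "Fit with organization": [2, 4],
    },
}

print(utility(hierarchy))                  # overall utility: 4.75
print(utility(hierarchy["System fit"]))    # one dimension: 4.0
```

A sensitivity analysis along the lines of step 6 could replace the plain averages with explicit weights and re-run the calculation while varying them.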

How to handle Questionnaires (3)

Multi-Attribute Utility Assessment (MAUA) Evaluation Hierarchy

- Overall System Utility
  - Effect on Task Performance
    - Process Quality
    - Product Quality
  - System Usability
    - General Ease of Use
    - Flexibility
    - Ease of Training
    - Human Factors
    - Workload
    - Person-Machine Functional Allocation
    - Quality of User’s Mental Model
  - System Fit
    - Fit With User
    - Fit With Organization

(From Adelman, L. & Riedel, S. L. (1997). Handbook for Evaluating Knowledge-based Systems.)

Objective Usability Evaluation Methods

Objective data about how well users can actually use a system can be collected by empirical evaluation methods, and this kind of data is the best data one can gather to evaluate system usability.

Four evaluation methods are proposed:

1. Usability testing
2. Logging activity use (includes system use)
3. Activity analysis
4. Profile examination

Usability testing and logging system use have been identified as effective methods for evaluating a stable prototype (Adelman and Riedel, 1997).

Usability Testing (1)

1. Usability testing is the most common empirical evaluation method. It assesses a system’s usability on pre-defined objective performance measures.
2. It involves potential system users in a laboratory-like environment. Users are given either test cases or problems to solve after receiving proper training on the prototype.
3. The evaluation team collects objective data on the usability measures while users are performing the test cases or solving problems:
   - Users’ individual or group performance data
   - The relative position (e.g., how much time difference or how many times) of the current level of usability against (1) the best possible expected performance level and (2) the worst expected performance level. These are our upper and lower bound baselines.

Usability Testing (2)

4. The best possible expected performance level can be obtained by having development team members perform each of the tasks and recording their results (e.g., time).
5. The worst expected performance level is the lowest level of acceptable performance, that is, the lowest level at which the system could reasonably be expected to be used. We plan to use 1/6 of the best possible expected performance level as our worst expected performance level in our initial study. This proportion is based on the study in the “usability testing handbook” (Uehling, 1994).
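One way to express the "relative position" between the two baselines is as a value between 0 (worst expected level) and 1 (best expected level). The sketch below assumes the measure is task completion time and interprets "1/6 of the best performance level" as a worst-case time six times longer than the best time. That interpretation, the function name, and the clamping to the 0-1 range are assumptions made for illustration.

```python
def relative_usability(observed_time, best_time, worst_factor=6.0):
    """Position of an observed task time between the worst and best baselines.

    Returns 1.0 at the best expected time, 0.0 at the worst expected time
    (best_time * worst_factor), and is clamped to the range [0, 1].
    """
    worst_time = best_time * worst_factor
    position = (worst_time - observed_time) / (worst_time - best_time)
    return max(0.0, min(1.0, position))


# Hypothetical task: developers need 10 minutes, so the worst acceptable
# time under the 1/6 assumption is 60 minutes.
print(relative_usability(observed_time=35, best_time=10))   # 0.5
print(relative_usability(observed_time=10, best_time=10))   # 1.0
print(relative_usability(observed_time=90, best_time=10))   # 0.0
```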

Logging Activity Use (1)

Some of the important objective measures (system use) provided by Nielsen (1993) are listed below:

- The time users take to complete a specific task.
- The number of tasks of various kinds that can be completed within a given time period.
- The ratio between successful interactions and errors.
- The time spent recovering from errors.
- The number of user errors.
- The number of system features utilized by users.
- The frequency of use of the manuals and/or the help system, and the time spent using them.
- The number of times the user expresses clear frustration (or clear joy).
- The proportion of users who say that they would prefer using the system over some specified competitor.
- The number of times the user had to work around an unsolvable problem.

Logging Activity Use (2) The proposed logging activity use method not only collects information related to system features, but also records user behavior and the relationships between tasks/movements (e.g., sequence, preference, pattern, trend). This gives us better coverage at both the system and user levels. 65

Activity Analysis & Profile Examination Methods - The proposed activity analysis method analyzes user behavior (e.g., sequence or preference) while the system is in use. - Together with profile examination, this method will provide extremely useful feedback to users, developers, and the agent. - The profile examination method analyzes consolidated data from user logging files and provides summary-level results. - It reviews single-user profiles, group profiles, and the all-users profile; compares behaviors, trends, and progress for the same user; and provides comparisons among users, groups, and the best possible expected performance. 66
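Profile examination at the three levels mentioned above (single user, group, all users) can be sketched as a consolidation of logged task times. The record format, the `group_of` mapping, and the use of a mean as the summary statistic are assumptions made for illustration only.

```python
from collections import defaultdict
from statistics import mean


def build_profiles(records, group_of):
    """Consolidate (user, task_seconds) records into three profile levels."""
    by_user, by_group = defaultdict(list), defaultdict(list)
    for user, seconds in records:
        by_user[user].append(seconds)
        by_group[group_of[user]].append(seconds)
    return {
        "user": {u: mean(v) for u, v in by_user.items()},
        "group": {g: mean(v) for g, v in by_group.items()},
        "all": mean([s for _, s in records]),
    }


def ratio_to_best(mean_seconds, best_seconds):
    """How many times slower a profile is than the best expected time."""
    return mean_seconds / best_seconds


if __name__ == "__main__":
    records = [("ann", 60.0), ("ann", 40.0), ("bob", 100.0)]
    profiles = build_profiles(records, {"ann": "sme", "bob": "dev"})
    print(profiles)
    print(ratio_to_best(profiles["user"]["ann"], 25.0))
```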

Integration of System Prototyping and Evaluation Closely coupling evaluation utilities with research (prototype) systems and their design: the evaluation module becomes a key component (like the input module, for example) of the prototyping system. Comprehensive: all-time (real time), all-angle (local, group, and global), and automatic evaluation vs. phase-end, partial, manual evaluation 67

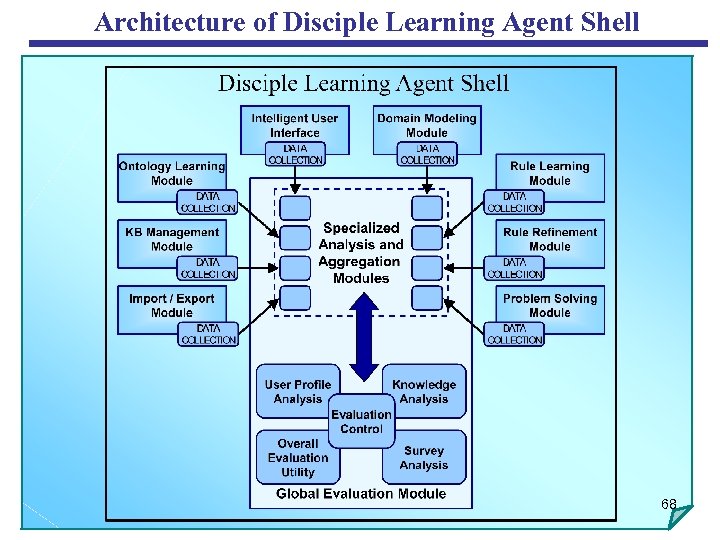

Architecture of Disciple Learning Agent Shell 68

Performance Evaluation 1. An experiment is the most appropriate method for evaluating the performance of the stable prototype (Adelman and Riedel, 1997; Shadbolt et al., 1999). 2. There are two major kinds of experiments: laboratory experiments and field experiments (the latter allow the evaluator to rigorously evaluate the system's effect in its operational environment). 3. Collect both objective data and subjective data for performance evaluation. 69

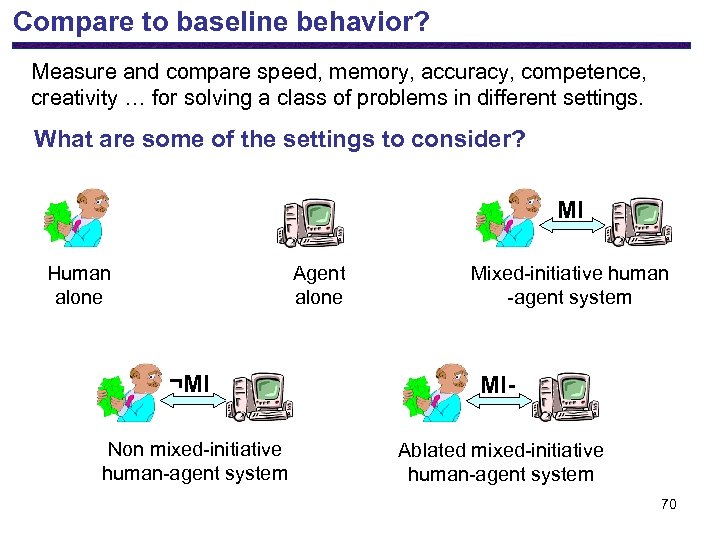

Compare to baseline behavior? Measure and compare speed, memory, accuracy, competence, creativity … for solving a class of problems in different settings. What are some of the settings to consider? - Human alone - Agent alone - Non mixed-initiative human-agent system (¬MI) - Mixed-initiative human-agent system (MI) - Ablated mixed-initiative human-agent system 70

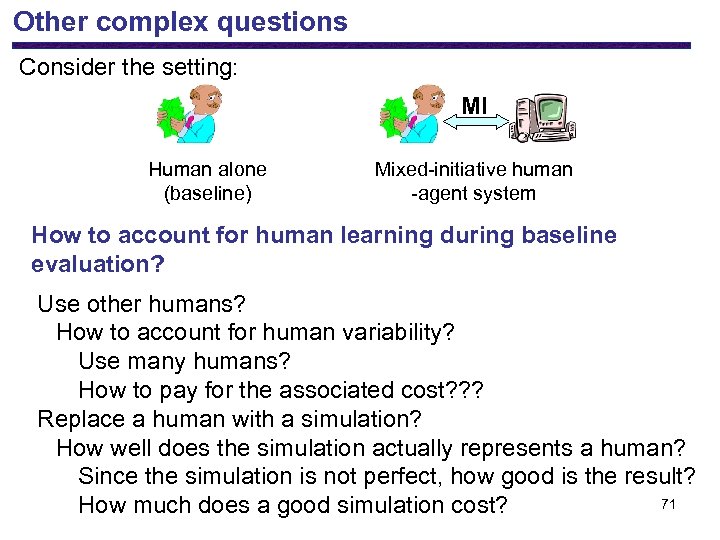

Other complex questions Consider the setting: Human alone (baseline) vs. mixed-initiative human-agent system (MI). How to account for human learning during baseline evaluation? Use other humans? How to account for human variability? Use many humans? How to pay for the associated cost? Replace a human with a simulation? How well does the simulation actually represent a human? Since the simulation is not perfect, how good is the result? How much does a good simulation cost? 71

Important Studies of Performance Evaluation (1) Several important studies are needed for a thorough performance evaluation: 1). Knowledge-level study - Analyzes the agent's overall behavior, knowledge formation rate, knowledge changes (addition, deletion, and modification), and reasoning. - Not only analyzes the size changes among KBs during the KB building process, but also examines the real content changes among KBs (e.g., the same rule (name) in different phases of the KB may cover different examples or knowledge elements). - Just comparing the number of rules (or even the names of rules) in different KBs will not give us the whole picture of knowledge changes. 72
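The point that rule counts alone hide content changes can be illustrated with a small comparison of two KB snapshots. The representation below (a dictionary from rule name to the set of examples the rule covers) is an assumption made for this sketch, not the Disciple knowledge base format.

```python
def kb_changes(old, new):
    """Compare two KB snapshots given as {rule_name: set_of_covered_examples}."""
    shared = old.keys() & new.keys()
    return {
        "added": sorted(new.keys() - old.keys()),
        "deleted": sorted(old.keys() - new.keys()),
        # Same rule name, but the rule now covers different examples.
        "modified": sorted(name for name in shared if old[name] != new[name]),
        "size_change": len(new) - len(old),
    }


if __name__ == "__main__":
    phase1 = {"r1": {"e1", "e2"}, "r2": {"e3"}}
    phase2 = {"r1": {"e1", "e4"}, "r3": {"e3"}}
    # The rule count is unchanged, yet one rule was added, one deleted,
    # and one kept its name while covering different examples.
    print(kb_changes(phase1, phase2))
```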

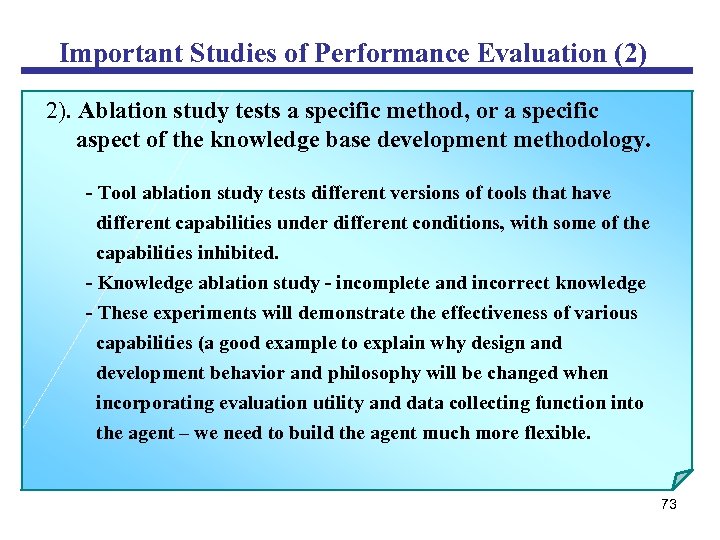

Important Studies of Performance Evaluation (2) 2). Ablation study tests a specific method, or a specific aspect of the knowledge base development methodology. - Tool ablation study tests different versions of tools that have different capabilities under different conditions, with some of the capabilities inhibited. - Knowledge ablation study tests the system with incomplete and incorrect knowledge. - These experiments will demonstrate the effectiveness of various capabilities. (They are also a good example of why design and development behavior and philosophy change when an evaluation utility and a data collection function are incorporated into the agent: we need to build the agent to be much more flexible.) 73

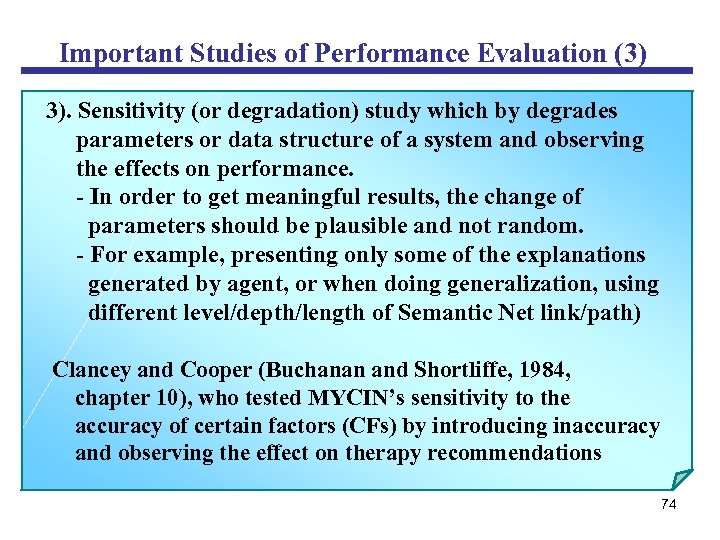

Important Studies of Performance Evaluation (3) 3). Sensitivity (or degradation) study, which degrades parameters or data structures of a system and observes the effects on performance. - In order to get meaningful results, the changes to parameters should be plausible and not random. - For example, presenting only some of the explanations generated by the agent or, when doing generalization, using a different level/depth/length of semantic net link/path. - A classic example is Clancey and Cooper (Buchanan and Shortliffe, 1984, chapter 10), who tested MYCIN's sensitivity to the accuracy of certainty factors (CFs) by introducing inaccuracy and observing the effect on therapy recommendations. 74
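A toy sensitivity study in the spirit of the certainty-factor example can be sketched as follows. It assumes one plausible, non-random degradation (rounding each CF to a coarser scale) and uses the standard combination rule for two positive certainty factors, a + b(1 - a). The hypotheses, evidence values, and function names are invented for illustration and do not reproduce the original MYCIN experiment.

```python
def combine(cfs):
    """Combine positive certainty factors with the rule a + b * (1 - a)."""
    total = 0.0
    for cf in cfs:
        total = total + cf * (1.0 - total)
    return total


def coarsen(cf, levels):
    """Round a CF in [0, 1] to the nearest of `levels` equal steps."""
    return round(cf * levels) / levels


def rank(evidence, levels=None):
    """Rank hypotheses by combined CF, optionally after degrading the CFs."""
    scores = {}
    for hypothesis, cfs in evidence.items():
        if levels is not None:
            cfs = [coarsen(c, levels) for c in cfs]
        scores[hypothesis] = combine(cfs)
    return sorted(scores, key=scores.get, reverse=True)


if __name__ == "__main__":
    # Hypothetical evidence for two candidate recommendations.
    evidence = {"therapy_a": [0.55, 0.32], "therapy_b": [0.45, 0.46]}
    print(rank(evidence))            # ranking with full-precision CFs
    print(rank(evidence, levels=5))  # ranking with CFs on a 5-step scale
```

Comparing the two rankings shows whether the recommendation is sensitive to the precision of the CFs for this particular, made-up input.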

Overview Common Evaluation Issues Evaluating Spoken Dialogue Agents with PARADISE Some Ideas for Evaluation Experiments Guidelines and Design Conclusion What is initiative ? * Initiative Selection and Experiments* 75

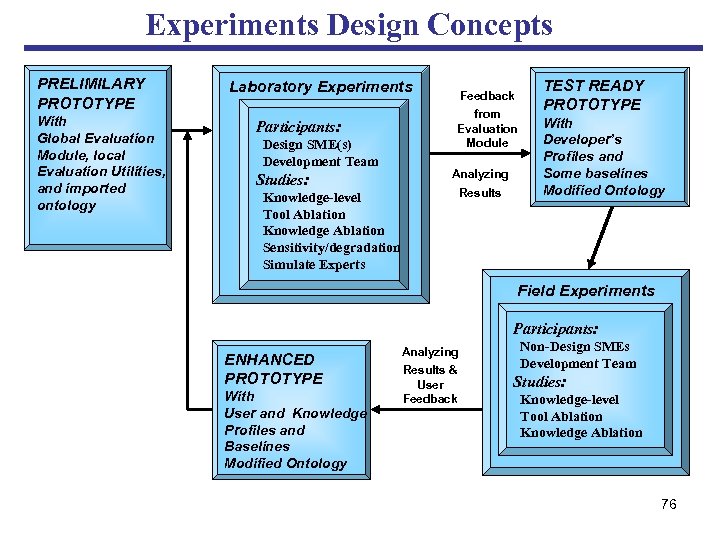

Experiments Design Concepts PRELIMINARY PROTOTYPE (with Global Evaluation Module, local Evaluation Utilities, and imported ontology) → Laboratory Experiments (Participants: Design SME(s), Development Team; Studies: Knowledge-level, Tool Ablation, Knowledge Ablation, Sensitivity/degradation, Simulate Experts) → Feedback from Evaluation Module; Analyzing Results → TEST READY PROTOTYPE (with Developer's Profiles and Some Baselines; Modified Ontology) → Field Experiments (Participants: Non-Design SMEs, Development Team; Studies: Knowledge-level, Tool Ablation, Knowledge Ablation) → Analyzing Results & User Feedback → ENHANCED PROTOTYPE (with User and Knowledge Profiles and Baselines; Modified Ontology) 76

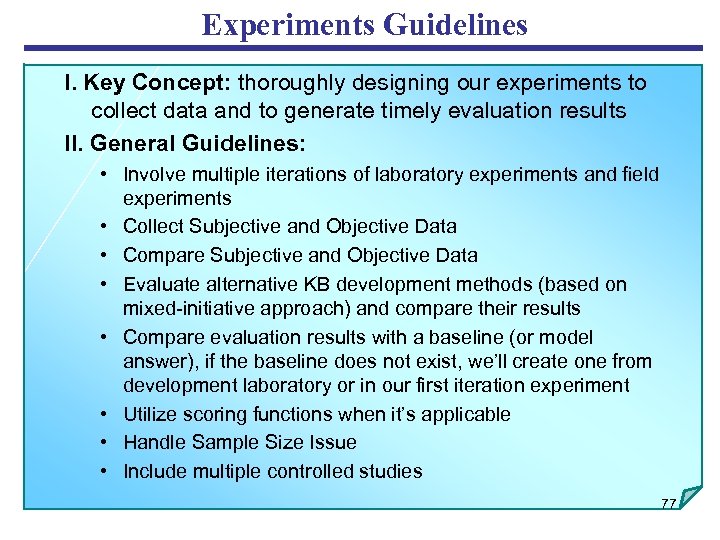

Experiments Guidelines I. Key Concept: thoroughly design our experiments to collect data and to generate timely evaluation results II. General Guidelines: • Involve multiple iterations of laboratory experiments and field experiments • Collect subjective and objective data • Compare subjective and objective data • Evaluate alternative KB development methods (based on the mixed-initiative approach) and compare their results • Compare evaluation results with a baseline (or model answer); if a baseline does not exist, we will create one in the development laboratory or in our first-iteration experiment • Utilize scoring functions where applicable • Handle the sample size issue • Include multiple controlled studies 77

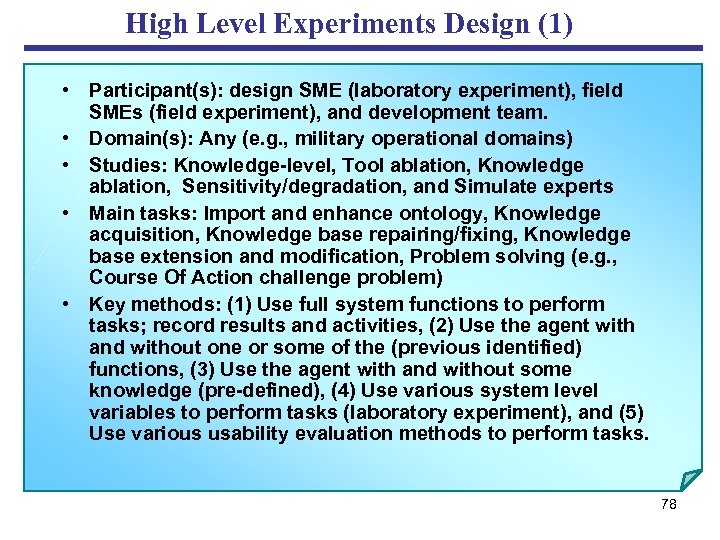

High Level Experiments Design (1) • Participant(s): design SME (laboratory experiment), field SMEs (field experiment), and development team. • Domain(s): Any (e.g., military operational domains) • Studies: Knowledge-level, Tool ablation, Knowledge ablation, Sensitivity/degradation, and Simulate experts • Main tasks: Import and enhance ontology, Knowledge acquisition, Knowledge base repairing/fixing, Knowledge base extension and modification, Problem solving (e.g., Course Of Action challenge problem) • Key methods: (1) Use full system functions to perform tasks; record results and activities, (2) Use the agent with and without one or more of the (previously identified) functions, (3) Use the agent with and without some (pre-defined) knowledge, (4) Use various system-level variables to perform tasks (laboratory experiment), and (5) Use various usability evaluation methods to perform tasks. 78

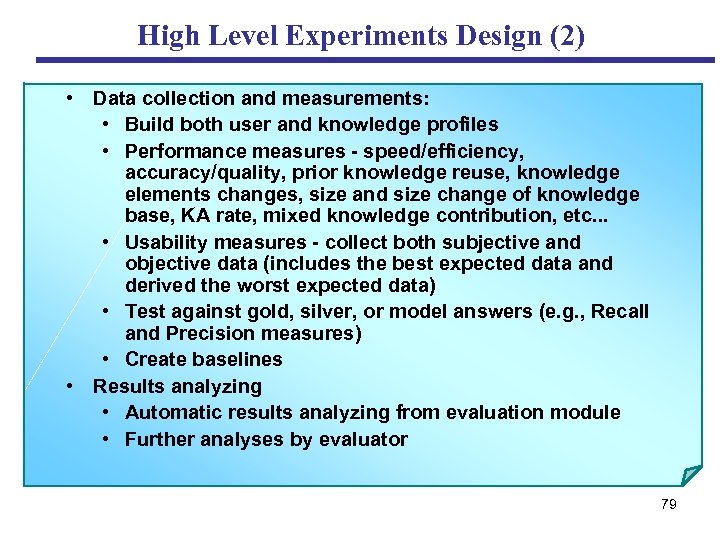

High Level Experiments Design (2) • Data collection and measurements: • Build both user and knowledge profiles • Performance measures - speed/efficiency, accuracy/quality, prior knowledge reuse, knowledge element changes, size and size change of the knowledge base, KA rate, mixed knowledge contribution, etc. • Usability measures - collect both subjective and objective data (including the best expected data and the derived worst expected data) • Test against gold, silver, or model answers (e.g., Recall and Precision measures) • Create baselines • Results analysis: • Automatic results analysis from the evaluation module • Further analyses by the evaluator 79

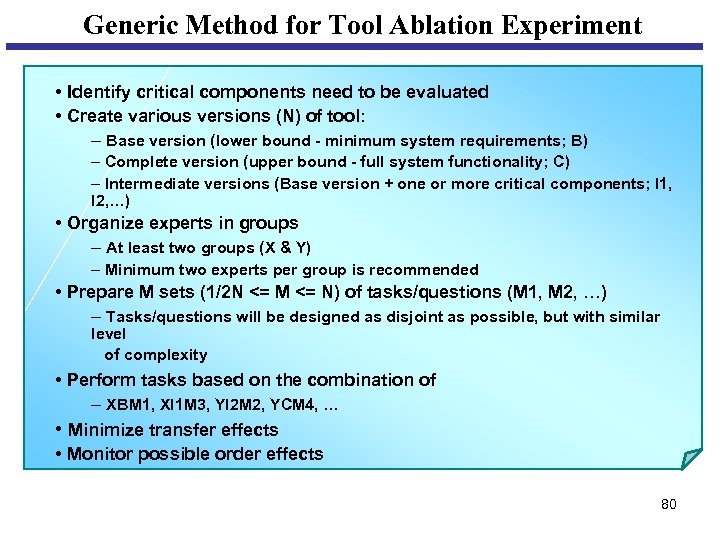

Generic Method for Tool Ablation Experiment • Identify the critical components that need to be evaluated • Create various versions (N) of the tool: – Base version (lower bound - minimum system requirements; B) – Complete version (upper bound - full system functionality; C) – Intermediate versions (Base version + one or more critical components; I1, I2, …) • Organize experts in groups – At least two groups (X & Y) – A minimum of two experts per group is recommended • Prepare M sets (N/2 <= M <= N) of tasks/questions (M1, M2, …) – Tasks/questions will be designed to be as disjoint as possible, but with a similar level of complexity • Perform tasks based on combinations such as – XBM1, XI1M3, YI2M2, YCM4, … • Minimize transfer effects • Monitor possible order effects 80
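One way to generate group/version/task-set combinations like those above is sketched below. The assignment policy (tool versions dealt to groups in turn, and no group given the same task set twice, as a simple guard against transfer effects) is an assumption of this sketch; the slide does not prescribe a particular policy, and the function name is hypothetical.

```python
def ablation_schedule(versions, groups, task_sets):
    """Return (group, tool_version, task_set) triples for a tool ablation run."""
    n, m = len(versions), len(task_sets)
    if len(groups) < 2:
        raise ValueError("at least two groups are required")
    if not (n / 2 <= m <= n):
        raise ValueError("need N/2 <= M <= N task sets for N tool versions")
    used = {g: 0 for g in groups}  # how many runs each group has so far
    schedule = []
    for i, version in enumerate(versions):
        gi = i % len(groups)
        group = groups[gi]
        # Consecutive task sets per group, offset by the group index, so a
        # group never receives the same task set twice.
        task = task_sets[(used[group] + gi) % m]
        used[group] += 1
        schedule.append((group, version, task))
    return schedule


if __name__ == "__main__":
    for row in ablation_schedule(["B", "I1", "I2", "C"], ["X", "Y"],
                                 ["M1", "M2", "M3", "M4"]):
        print("".join(row))
```

With at least two groups, each group runs at most about half of the N versions, which is why M >= N/2 task sets are enough to avoid repeating a task set within a group in this scheme.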

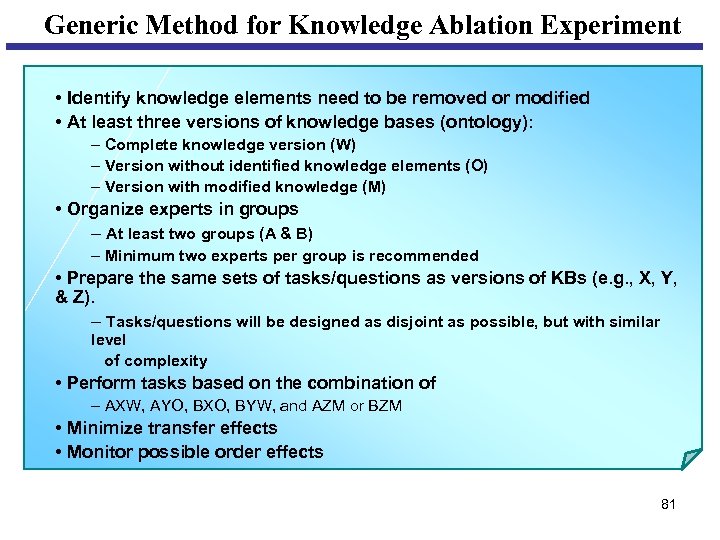

Generic Method for Knowledge Ablation Experiment • Identify the knowledge elements that need to be removed or modified • At least three versions of knowledge bases (ontology): – Complete knowledge version (W) – Version without the identified knowledge elements (O) – Version with modified knowledge (M) • Organize experts in groups – At least two groups (A & B) – A minimum of two experts per group is recommended • Prepare as many sets of tasks/questions as there are versions of KBs (e.g., X, Y, & Z). – Tasks/questions will be designed to be as disjoint as possible, but with a similar level of complexity • Perform tasks based on the combination of – AXW, AYO, BXO, BYW, and AZM or BZM • Minimize transfer effects • Monitor possible order effects 81
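The first four combinations listed above follow a counterbalanced (crossover) pattern: each group sees both task sets and both KB versions, but with opposite pairings. A minimal sketch of that pattern, assuming a simple rotation rule and a hypothetical function name, is:

```python
def crossover(groups, task_sets, kb_versions):
    """Pair task sets with KB versions, rotating the pairing for each group."""
    if len(task_sets) != len(kb_versions):
        raise ValueError("need one task set per KB version")
    k = len(kb_versions)
    return [
        group + task + kb_versions[(gi + ti) % k]
        for gi, group in enumerate(groups)
        for ti, task in enumerate(task_sets)
    ]


if __name__ == "__main__":
    # Groups A and B, task sets X and Y, KB versions W (complete) and
    # O (without the identified knowledge elements).
    print(crossover(["A", "B"], ["X", "Y"], ["W", "O"]))
```

The remaining combination (task set Z with the modified KB version M) is assigned to one of the two groups and is not covered by this rotation.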

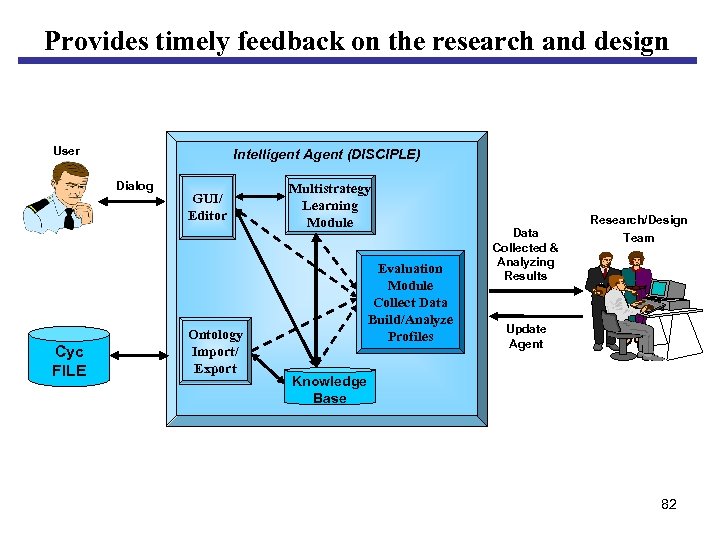

Provides timely feedback on the research and design [Diagram: the User holds a Dialog with the Intelligent Agent (DISCIPLE), whose components are the GUI/Editor, Ontology Import/Export (linked to Cyc and FILE), Multistrategy Learning Module, Knowledge Base, and Evaluation Module (Collect Data; Build/Analyze Profiles). Collected data and analysis results flow to the Research/Design Team, which updates the agent.] 82

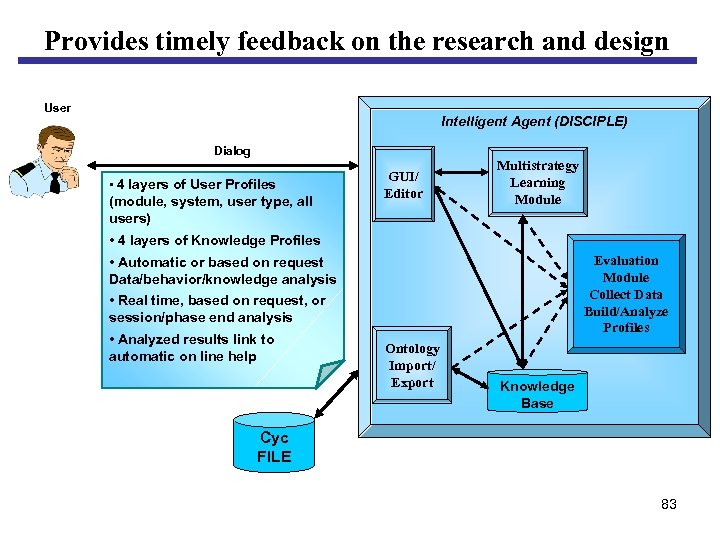

Provides timely feedback on the research and design [Diagram: User, Dialog, and the Intelligent Agent (DISCIPLE) with its GUI/Editor, Multistrategy Learning Module, Evaluation Module, Ontology Import/Export, Knowledge Base, Cyc, and FILE, annotated as follows.] • 4 layers of User Profiles (module, system, user type, all users) • 4 layers of Knowledge Profiles • Collect Data and Build/Analyze Profiles: automatic or based on request • Data/behavior/knowledge analysis: real time, based on request, or at session/phase end • Analyzed results link to automatic online help 83

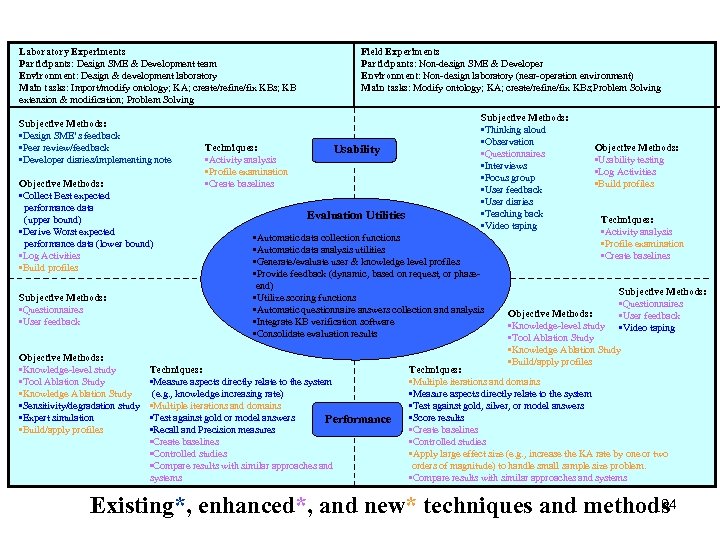

Laboratory Experiments Participants: Design SME & Development team Environment: Design & development laboratory Main tasks: Import/modify ontology; KA; create/refine/fix KBs; KB extension & modification; Problem Solving
Usability (laboratory) Subjective Methods: • Design SME's feedback • Peer review/feedback • Developer diaries/implementation notes Objective Methods: • Collect best expected performance data (upper bound) • Derive worst expected performance data (lower bound) • Log activities • Build profiles Techniques: • Activity analysis • Profile examination • Create baselines
Performance (laboratory) Subjective Methods: • Questionnaires • User feedback Objective Methods: • Knowledge-level study • Tool Ablation Study • Knowledge Ablation Study • Sensitivity/degradation study • Expert simulation • Build/apply profiles Techniques: • Measure aspects directly related to the system (e.g., knowledge increasing rate) • Multiple iterations and domains • Test against gold or model answers • Recall and Precision measures • Create baselines • Controlled studies • Compare results with similar approaches and systems
Field Experiments Participants: Non-design SME & Developer Environment: Non-design laboratory (near-operation environment) Main tasks: Modify ontology; KA; create/refine/fix KBs; Problem Solving
Usability (field) Subjective Methods: • Thinking aloud • Observation • Questionnaires • Interviews • Focus group • User feedback • User diaries • Teaching back • Video taping Objective Methods: • Usability testing • Log activities • Build profiles Techniques: • Activity analysis • Profile examination • Create baselines
Performance (field) Subjective Methods: • Questionnaires • User feedback • Video taping Objective Methods: • Knowledge-level study • Tool Ablation Study • Knowledge Ablation Study • Build/apply profiles Techniques: • Multiple iterations and domains • Measure aspects directly related to the system • Test against gold, silver, or model answers • Score results • Create baselines • Controlled studies • Apply a large effect size (e.g., increase the KA rate by one or two orders of magnitude) to handle the small sample size problem • Compare results with similar approaches and systems
Evaluation Utilities • Automatic data collection functions • Automatic data analysis utilities • Activity analysis • Generate/evaluate user & knowledge level profiles • Profile examination • Provide feedback (dynamic, based on request, or phase end) • Utilize scoring functions • Automatic questionnaire answers collection and analysis • Integrate KB verification software • Consolidate evaluation results
Existing*, enhanced*, and new* techniques and methods 84

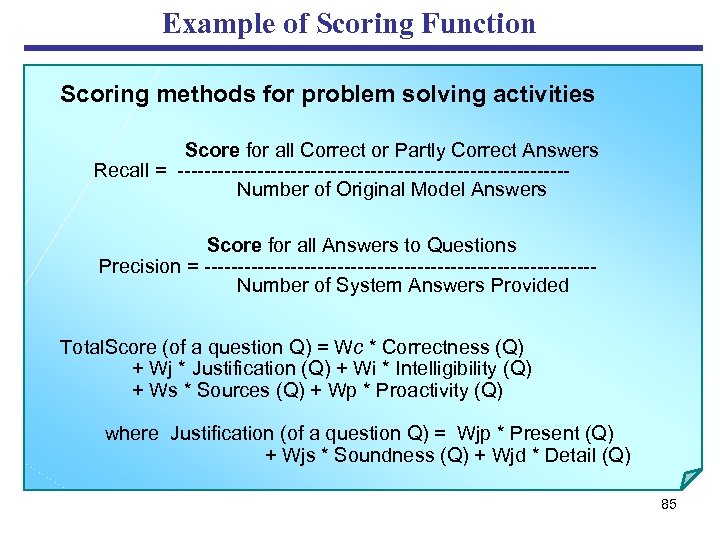

Example of Scoring Function Scoring methods for problem solving activities:

$$\text{Recall} = \frac{\text{Score for all correct or partly correct answers}}{\text{Number of original model answers}}$$

$$\text{Precision} = \frac{\text{Score for all answers to questions}}{\text{Number of system answers provided}}$$

$$\text{TotalScore}(Q) = W_c \cdot \text{Correctness}(Q) + W_j \cdot \text{Justification}(Q) + W_i \cdot \text{Intelligibility}(Q) + W_s \cdot \text{Sources}(Q) + W_p \cdot \text{Proactivity}(Q)$$

where, for a question Q,

$$\text{Justification}(Q) = W_{jp} \cdot \text{Present}(Q) + W_{js} \cdot \text{Soundness}(Q) + W_{jd} \cdot \text{Detail}(Q)$$

85
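The scoring functions above translate directly into code. In the sketch below, each system answer is assumed to carry a score in [0, 1] (1 for correct, a fraction for partly correct, 0 for wrong), and all weight values are hypothetical defaults chosen only so that they sum to 1; the slide does not specify them.

```python
def recall(scores, n_model_answers):
    """Summed score of correct or partly correct answers per model answer."""
    return sum(s for s in scores if s > 0) / n_model_answers


def precision(scores):
    """Summed score of all system answers per system answer provided."""
    return sum(scores) / len(scores)


def justification(present, soundness, detail, w_jp=0.2, w_js=0.5, w_jd=0.3):
    """Justification(Q) as a weighted sum of its three components."""
    return w_jp * present + w_js * soundness + w_jd * detail


def total_score(correctness, justification_score, intelligibility, sources,
                proactivity, w_c=0.4, w_j=0.3, w_i=0.1, w_s=0.1, w_p=0.1):
    """TotalScore(Q) as a weighted sum of the five criteria."""
    return (w_c * correctness + w_j * justification_score
            + w_i * intelligibility + w_s * sources + w_p * proactivity)


if __name__ == "__main__":
    scores = [1.0, 0.5, 0.0]  # three system answers, four model answers
    print(recall(scores, 4), precision(scores))
    j = justification(present=1.0, soundness=0.5, detail=0.5)
    print(total_score(1.0, j, 0.8, 0.5, 0.0))
```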

Overview Common Evaluation Issues Evaluating Spoken Dialogue Agents with PARADISE Some Ideas for Evaluation Experiments Guidelines and Design Conclusion What is initiative ? * Initiative Selection and Experiments* 86

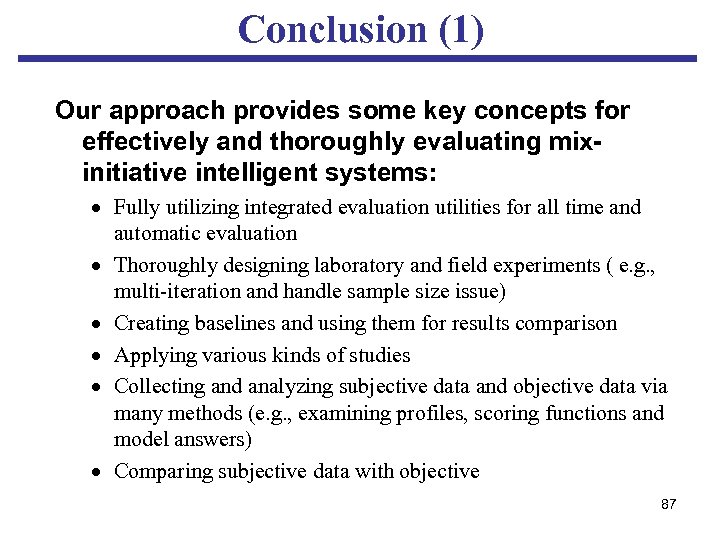

Conclusion (1) Our approach provides some key concepts for effectively and thoroughly evaluating mixed-initiative intelligent systems: · Fully utilizing integrated evaluation utilities for all-time and automatic evaluation · Thoroughly designing laboratory and field experiments (e.g., multiple iterations and handling the sample size issue) · Creating baselines and using them for results comparison · Applying various kinds of studies · Collecting and analyzing subjective data and objective data via many methods (e.g., examining profiles, scoring functions, and model answers) · Comparing subjective data with objective data 87

Conclusion (2) Our approach provides some key concepts for useful and timely feedback to users and developers: · Building data collection, data analysis, and evaluation utilities into the agent · Generating and evaluating user & knowledge data files and profiles · Providing three kinds of feedback - dynamic, based on request, and phase-end - to users, developers, and the agent 88
