fdd86e47511b316f9990b416873664a8.ppt

- Number of slides: 46

Geometry-based sampling in learning and classification. Or: Universal $\varepsilon$-approximators for integration of some nonnegative functions

Leonard J. Schulman (Caltech), joint with Michael Langberg (Open U. Israel). Work in progress.

Outline

1. Vapnik-Chervonenkis (VC) method / PAC learning; nets, $\varepsilon$-approximators. Shatter function as cover code.
2. $\varepsilon$-approximators (core-sets) for clustering; universal approximation of integrals of families of unbounded nonnegative functions.
3. Failure of the naive sampling approach. Small-variance estimators.
4. Sensitivity and total sensitivity.
5. Some results on sensitivity; the MIN operator on families.
6. Sensitivity for k-medians. Covering code for k-median.

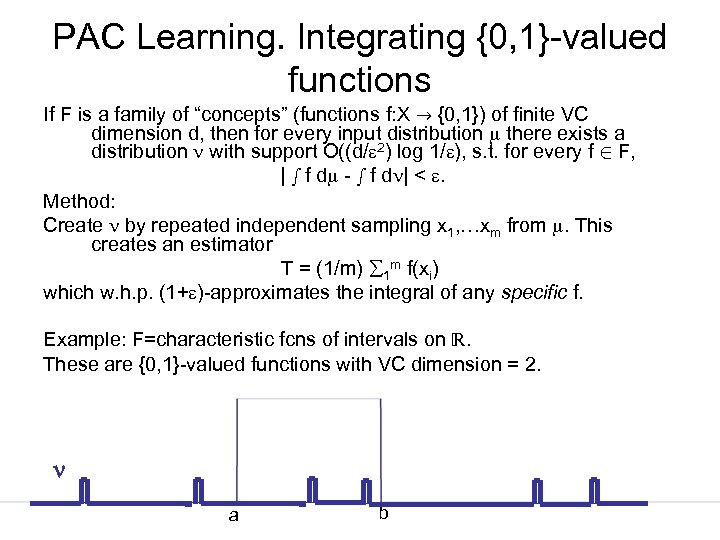

PAC Learning. Integrating $\{0,1\}$-valued functions

If $F$ is a family of “concepts” (functions $f: X \to \{0,1\}$) of finite VC dimension $d$, then for every input distribution $\mu$ there exists a distribution $\nu$ with support of size $O((d/\varepsilon^2)\log(1/\varepsilon))$ such that for every $f \in F$, $\left|\int f\,d\mu - \int f\,d\nu\right| < \varepsilon$.

Method: create $\nu$ by repeated independent sampling $x_1, \dots, x_m$ from $\mu$. This creates an estimator $T = \frac{1}{m}\sum_{i=1}^{m} f(x_i)$ which w.h.p. approximates the integral of any specific $f$ to within additive error $\varepsilon$.

Example: $F$ = characteristic functions of intervals $[a,b]$ on $\mathbb{R}$. These are $\{0,1\}$-valued functions with VC dimension 2.
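A minimal sketch of the sampling method for a single concept. It assumes, purely for illustration, that $\mu$ is the uniform distribution on $[0,1]$ and that $f$ is the indicator of $[0.2, 0.5]$; the helper names `empirical_integral` and `interval_indicator` are hypothetical. It checks one fixed $f$ only, which is exactly the limitation the following slide takes up.

```python
import random

def empirical_integral(f, samples):
    """Estimator T = (1/m) * sum_i f(x_i) over the sampled points x_1, ..., x_m."""
    return sum(f(x) for x in samples) / len(samples)

def interval_indicator(a, b):
    """Characteristic function of [a, b]: a {0, 1}-valued concept."""
    return lambda x: 1 if a <= x <= b else 0

rng = random.Random(0)                         # fixed seed for reproducibility
m = 20_000                                     # number of samples defining nu
samples = [rng.random() for _ in range(m)]     # mu = Uniform[0, 1] (assumption)

f = interval_indicator(0.2, 0.5)
true_value = 0.5 - 0.2                         # integral of f against Uniform[0, 1]
estimate = empirical_integral(f, samples)
print(f"true = {true_value:.3f}, estimate = {estimate:.3f}")
```

With $m = 20{,}000$ the standard deviation of $T$ here is about $0.003$, so the estimate lands close to $0.3$.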

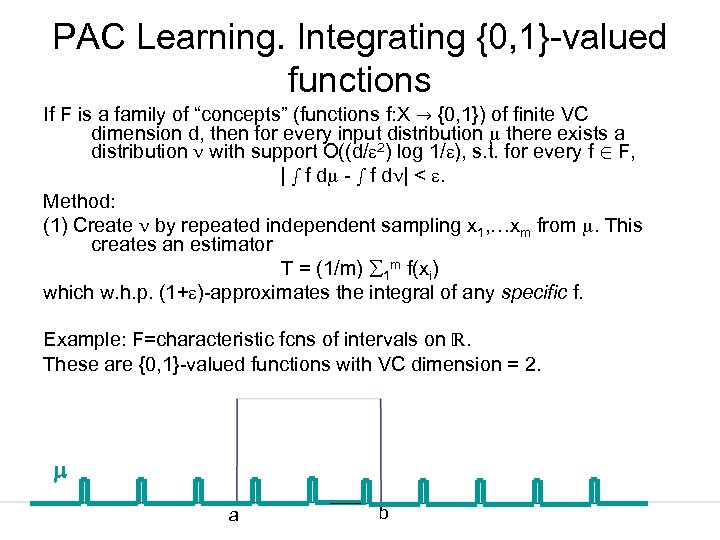

PAC Learning. Integrating $\{0,1\}$-valued functions (cont.)

It is easy to see that for any particular $f \in F$, w.h.p. $\left|\int f\,d\mu - \int f\,d\nu\right| < \varepsilon$. But how do we argue this is achieved simultaneously for all functions $f \in F$? We can’t take a union bound over infinitely many “bad events”; we need to express that there are few “types” of bad events. To conquer the infinite union bound, apply the “Red & Green Points” argument.

Sample $m = O((d/\varepsilon^2)\log(1/\varepsilon))$ “green” points $G$ from $\mu$. We will use $\nu = \mu_G$ = uniform distribution on $G$. Then
$P(\mu_G \text{ not an } \varepsilon\text{-approximator}) = P(\exists f \in F \text{ badly counted by } \mu_G) \le P(\exists f \in F: |\mu(f) - \mu_G(f)| > \varepsilon)$.

Suppose $\mu_G$ is not an $\varepsilon$-approximator: $\exists f: |\mu(f) - \mu_G(f)| > \varepsilon$. Sample another $m = O((d/\varepsilon^2)\log(1/\varepsilon))$ “red” points $R$ from $\mu$. With probability $> 1/2$, $|\mu(f) - \mu_R(f)| < \varepsilon/2$ (Markov inequality). So:
$P(\exists f \in F: |\mu(f) - \mu_G(f)| > \varepsilon) < 2\,P(\exists f \in F: |\mu_R(f) - \mu_G(f)| > \varepsilon/2)$.

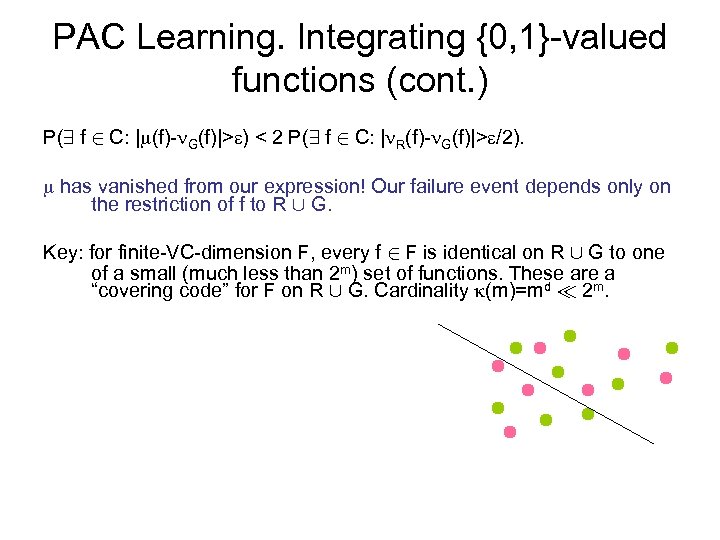

PAC Learning. Integrating $\{0,1\}$-valued functions (cont.)

$P(\exists f \in F: |\mu(f) - \mu_G(f)| > \varepsilon) < 2\,P(\exists f \in F: |\mu_R(f) - \mu_G(f)| > \varepsilon/2)$.

$\mu$ has vanished from our expression! Our failure event depends only on the restriction of $f$ to $R \cup G$. Key: for finite-VC-dimension $F$, every $f \in F$ is identical on $R \cup G$ to one of a small (much less than $2^m$) set of functions. These are a “covering code” for $F$ on $R \cup G$. Cardinality: $\Pi_F(m) = O(m^d) \ll 2^m$.
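For the interval example the covering code can be counted directly: on $m$ distinct points of a line, interval indicators realize only the runs of consecutive points plus the empty set, i.e. $m(m+1)/2 + 1$ distinct restrictions, polynomial in $m$ rather than $2^m$. A brute-force check (the function name `interval_patterns` is hypothetical):

```python
def interval_patterns(points):
    """Distinct restrictions of interval indicators 1[a <= x <= b] to `points`."""
    pts = sorted(points)
    # Candidate endpoints: one value left of all points, every midpoint between
    # consecutive points, and one value right of all points. The restriction of
    # any interval to `pts` is realized by some pair of these candidates.
    cuts = [pts[0] - 1.0]
    cuts += [(p + q) / 2 for p, q in zip(pts, pts[1:])]
    cuts += [pts[-1] + 1.0]
    patterns = set()
    for i, a in enumerate(cuts):
        for b in cuts[i:]:
            patterns.add(tuple(a <= x <= b for x in pts))
    return patterns

m = 10
pts = [float(i) for i in range(m)]
count = len(interval_patterns(pts))
print(count, m * (m + 1) // 2 + 1, 2 ** m)   # prints: 56 56 1024
```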

Integrating functions into larger ranges (still additive approximation)

Extensions of VC-dimension notions to families of $f: X \to \{0, \dots, n\}$: Pollard 1984; Natarajan 1989; Vapnik 1989; Ben-David, Cesa-Bianchi, Haussler, Long 1997.

Families of functions $f: X \to [0,1]$: an extension of the VC-dimension notion (analogous to the discrete definitions, but insisting on quantitative separation of values), “fat-shattering”: Alon, Ben-David, Cesa-Bianchi, Haussler 1993; Kearns, Schapire 1994; Bartlett, Long, Williamson 1996; Bartlett, Long 1998.

Function classes with finite “dimension” (as above) possess small core-sets for additive $\varepsilon$-approximation of integrals. The same method still works: simply construct $\nu$ by repeatedly sampling from $\mu$. This does not solve multiplicative approximation of nonnegative functions.

What classes of $\mathbb{R}^+$-valued functions possess core-sets for integration?

Why multiplicative approximation? In optimization we often wish to minimize a nonnegative loss function. It makes sense to settle for $(1+\varepsilon)$-multiplicative approximation (and this is often unavoidable because of hardness). Example: important optimization problems arise in classification. Choose $c_1, \dots, c_k$ to minimize one of the following, where $\|x - \{c_1, \dots, c_k\}\|$ denotes the distance from $x$ to the nearest center:

- k-median function: $\mathrm{cost}(f_{c_1,\dots,c_k}) = \int \|x - \{c_1, \dots, c_k\}\|\,d\mu(x)$
- k-means function: $\mathrm{cost}(f_{c_1,\dots,c_k}) = \int \|x - \{c_1, \dots, c_k\}\|^2\,d\mu(x)$
- or, for any $\alpha > 0$: $\mathrm{cost}(f_{c_1,\dots,c_k}) = \int \|x - \{c_1, \dots, c_k\}\|^\alpha\,d\mu(x)$
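A minimal sketch of these cost functions for a finitely supported $\mu$ (points with probability weights, uniform by default). The function name and signature are hypothetical; `alpha` plays the role of the exponent $\alpha$ above.

```python
import numpy as np

def clustering_cost(points, centers, alpha=1.0, weights=None):
    """Integral of ||x - {c_1, ..., c_k}||^alpha d mu(x) for a weighted point set.

    alpha = 1 gives the k-median cost, alpha = 2 the k-means cost. `points` is
    (n,) or (n, dim); `centers` is (k,) or (k, dim). `weights` defaults to uniform.
    """
    pts = np.asarray(points, dtype=float)
    if pts.ndim == 1:
        pts = pts[:, None]                       # treat 1-D data as column vectors
    ctr = np.asarray(centers, dtype=float).reshape(-1, pts.shape[1])
    n = pts.shape[0]
    w = np.full(n, 1.0 / n) if weights is None else np.asarray(weights, dtype=float)
    # Distance from every point to every center, then to the nearest center.
    dists = np.linalg.norm(pts[:, None, :] - ctr[None, :, :], axis=2)
    nearest = dists.min(axis=1)
    return float(np.sum(w * nearest ** alpha))

pts = [0.0, 1.0, 2.0, 10.0]
print(clustering_cost(pts, [1.0]))               # 1-median cost: (1 + 0 + 1 + 9) / 4
print(clustering_cost(pts, [1.0], alpha=2))      # 1-means cost: (1 + 0 + 1 + 81) / 4
print(clustering_cost(pts, [1.0, 10.0]))         # 2-median cost: (1 + 0 + 1 + 0) / 4
```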

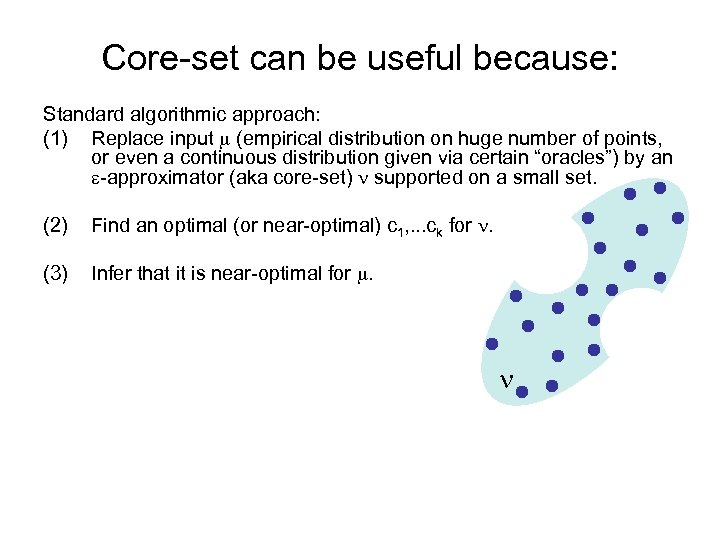

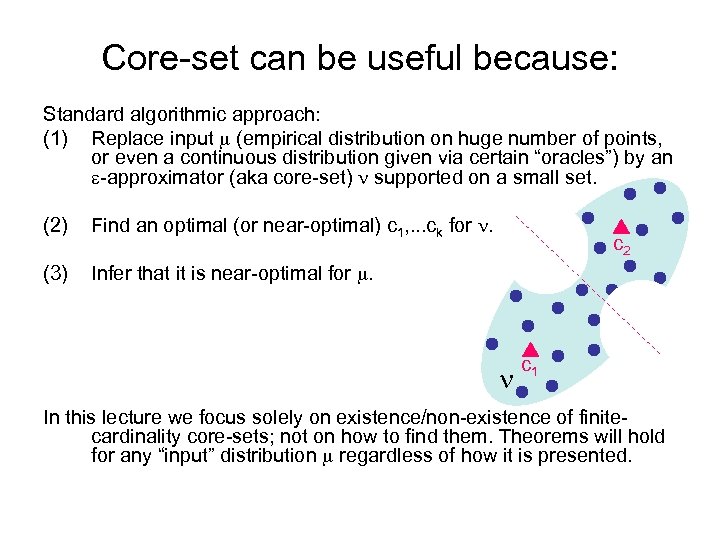

Core-sets can be useful because of the standard algorithmic approach:

1. Replace the input $\mu$ (an empirical distribution on a huge number of points, or even a continuous distribution given via certain “oracles”) by an $\varepsilon$-approximator $\nu$ (aka core-set) supported on a small set.
2. Find an optimal (or near-optimal) $c_1, \dots, c_k$ for $\nu$.
3. Infer that it is near-optimal for $\mu$.

(Figure: two centers $c_1$, $c_2$.) In this lecture we focus solely on the existence/non-existence of finite-cardinality core-sets, not on how to find them. Theorems will hold for any “input” distribution $\mu$ regardless of how it is presented.

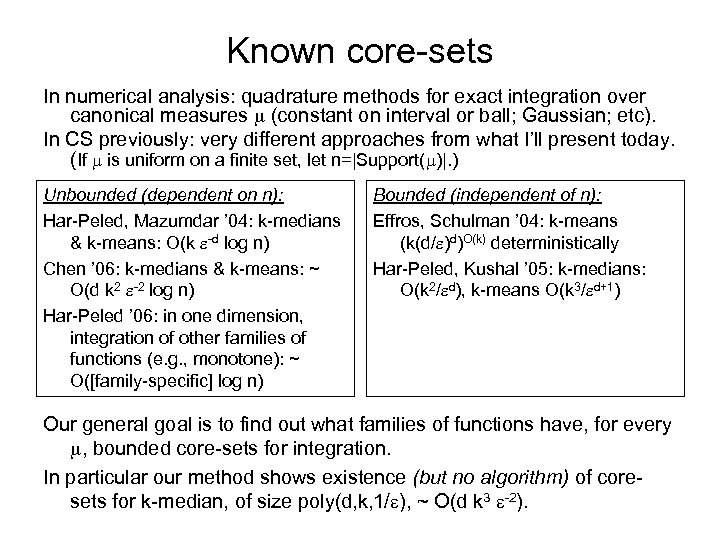

Known core-sets

In numerical analysis: quadrature methods for exact integration over canonical measures (constant on an interval or ball; Gaussian; etc.).

In CS previously: very different approaches from what I’ll present today. (If $\mu$ is uniform on a finite set, let $n = |\mathrm{Support}(\mu)|$.)

Unbounded (dependent on $n$):
- Har-Peled, Mazumdar ’04: k-medians & k-means: $O(k\varepsilon^{-d}\log n)$
- Chen ’06: k-medians & k-means: $\tilde O(dk^2\varepsilon^{-2}\log n)$
- Har-Peled ’06: in one dimension, integration of other families of functions (e.g., monotone): $\tilde O([\text{family-specific}]\log n)$

Bounded (independent of $n$):
- Effros, Schulman ’04: k-means: $(k(d/\varepsilon)^d)^{O(k)}$, deterministically
- Har-Peled, Kushal ’05: k-medians: $O(k^2/\varepsilon^d)$; k-means: $O(k^3/\varepsilon^{d+1})$

Our general goal is to find out which families of functions have, for every $\mu$, bounded core-sets for integration. In particular, our method shows the existence (but gives no algorithm) of core-sets for k-median of size $\mathrm{poly}(d, k, 1/\varepsilon)$, namely $\tilde O(dk^3\varepsilon^{-2})$.

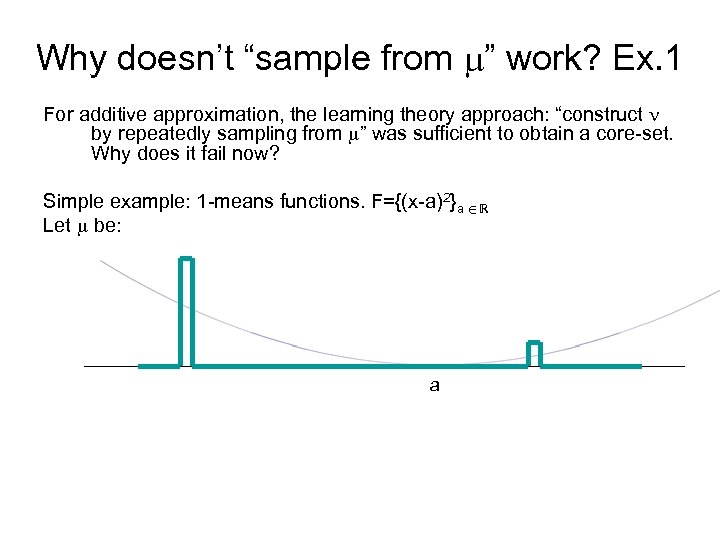

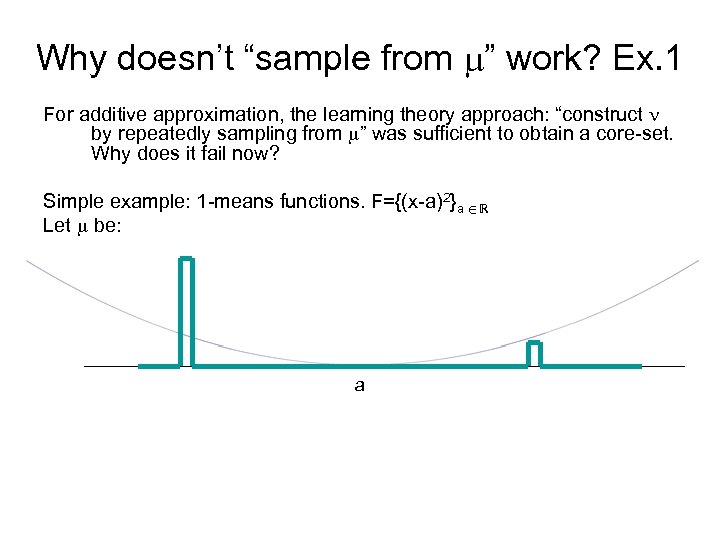

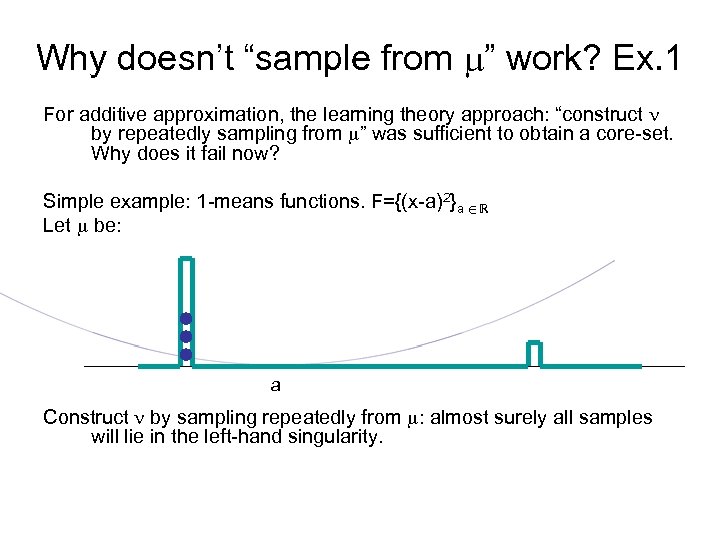

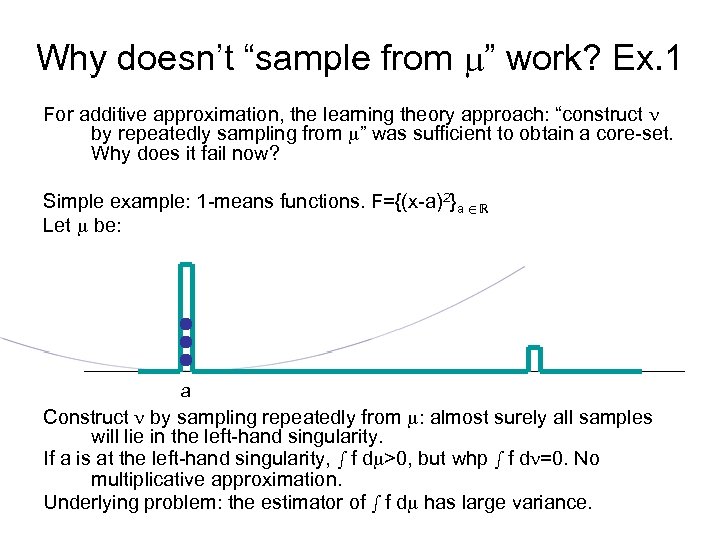

Why doesn’t “sample from $\mu$” work? Ex. 1

For additive approximation, the learning-theory approach “construct $\nu$ by repeatedly sampling from $\mu$” was sufficient to obtain a core-set. Why does it fail now? Simple example: 1-means functions, $F = \{(x-a)^2\}_{a \in \mathbb{R}}$. Let $\mu$ be as in the figure, with almost all of its mass in a left-hand singularity.

Construct $\nu$ by sampling repeatedly from $\mu$: almost surely all samples will lie in the left-hand singularity. If $a$ is at the left-hand singularity, $\int f\,d\mu > 0$, but w.h.p. $\int f\,d\nu = 0$. No multiplicative approximation. Underlying problem: the estimator of $\int f\,d\mu$ has large variance.
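A small simulation of this failure, assuming a concrete two-point stand-in for the pictured $\mu$: mass $1-\delta$ at $x=0$ (the left-hand singularity) and mass $\delta$ at $x=1$, with $f(x) = (x-a)^2$ and $a = 0$. All numbers are illustrative, not taken from the slide.

```python
import random

# Illustrative stand-in for the pictured mu: mass 1 - delta on x = 0 (the
# left-hand singularity) and mass delta on x = 1. f(x) = (x - a)^2 with a = 0.
delta = 1e-9
a = 0.0
true_integral = (1 - delta) * (0.0 - a) ** 2 + delta * (1.0 - a) ** 2   # = delta > 0

rng = random.Random(1)
m = 1000
samples = [1.0 if rng.random() < delta else 0.0 for _ in range(m)]
naive_estimate = sum((x - a) ** 2 for x in samples) / m

# Probability that all m samples land in the singularity, making the estimate 0.
p_all_zero = (1 - delta) ** m
print(true_integral, naive_estimate, p_all_zero)
```

Whatever $m$ is fixed in advance, shrinking $\delta$ makes the relative error $100\%$ with probability approaching 1.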

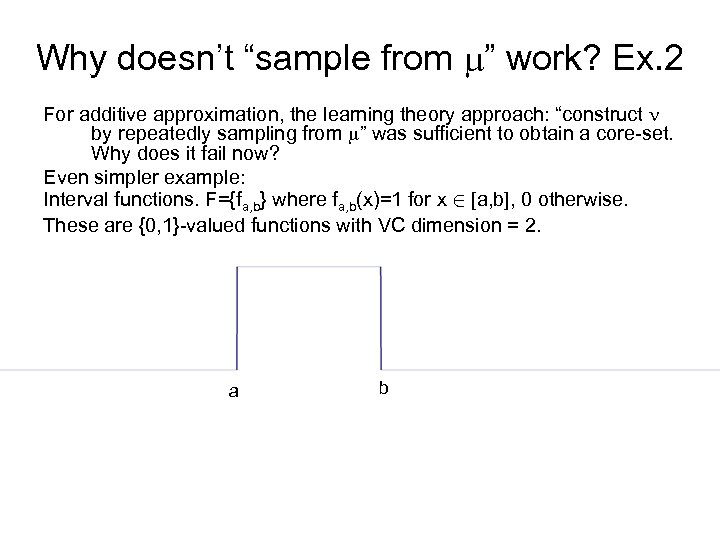

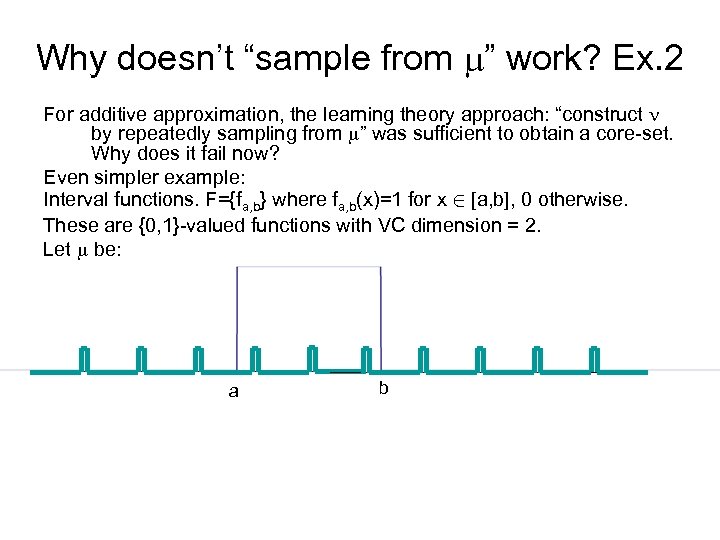

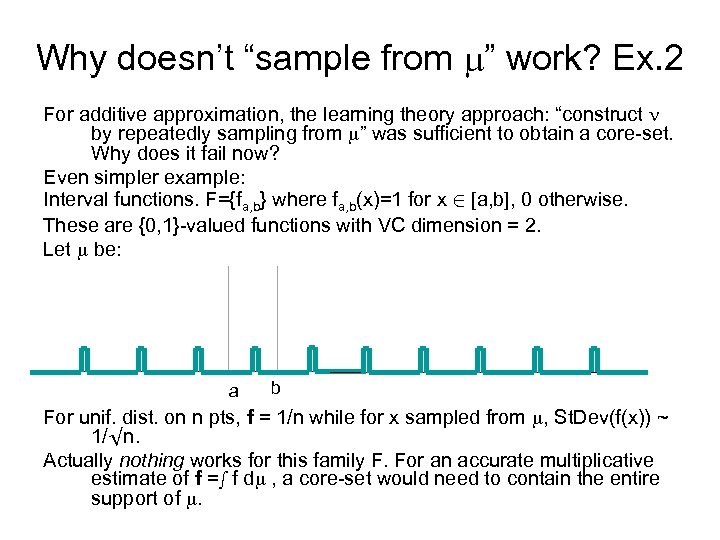

Why doesn’t “sample from $\mu$” work? Ex. 2

For additive approximation, the learning-theory approach “construct $\nu$ by repeatedly sampling from $\mu$” was sufficient to obtain a core-set. Why does it fail now? Even simpler example: interval functions. $F = \{f_{a,b}\}$, where $f_{a,b}(x) = 1$ for $x \in [a,b]$ and 0 otherwise. These are $\{0,1\}$-valued functions with VC dimension 2. Let $\mu$ be as in the figure.

For $\mu$ uniform on $n$ points and an interval containing exactly one of them, $\bar f = 1/n$, while for $x$ sampled from $\mu$, $\mathrm{StDev}(f(x)) \sim 1/\sqrt{n}$. Actually nothing works for this family $F$: for an accurate multiplicative estimate of $\bar f = \int f\,d\mu$, a core-set would need to contain the entire support of $\mu$.
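The variance claim can be checked exactly: for $\mu$ uniform on $n$ points and $f$ the indicator of an interval holding one of them, $f(x)$ with $x \sim \mu$ is Bernoulli($1/n$), so the ratio StDev/mean is $\sqrt{n-1}$. Averaging $m$ samples divides this ratio by $\sqrt m$, so a $(1\pm\varepsilon)$ estimate needs roughly $m \gtrsim n/\varepsilon^2$ samples, growing with the support size. A short sketch (the function name is hypothetical):

```python
import math

def relative_std_single_point_interval(n):
    """mu uniform on n points; f = indicator of an interval holding one point.

    f(x) for x ~ mu is Bernoulli(1/n): mean = 1/n, std = sqrt((1/n)(1 - 1/n)),
    so std / mean = sqrt(n - 1).
    """
    p = 1.0 / n
    mean = p
    std = math.sqrt(p * (1 - p))
    return std / mean

for n in (10, 1_000, 100_000):
    print(n, relative_std_single_point_interval(n), math.sqrt(n - 1))
```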

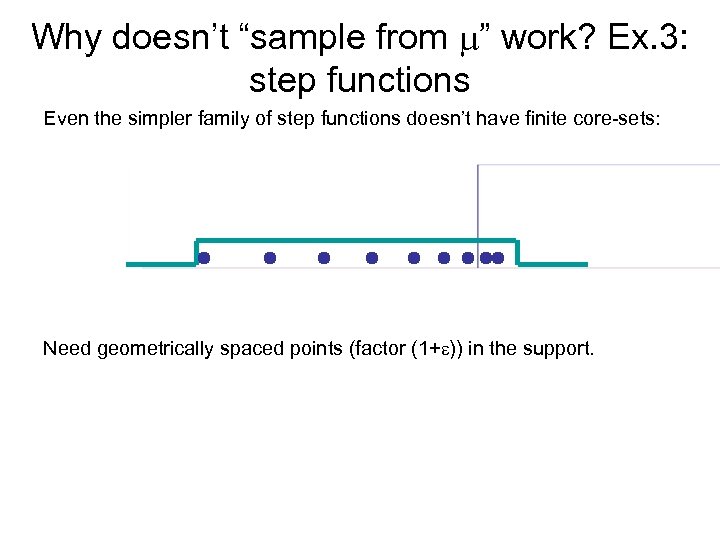

Why doesn’t “sample from $\mu$” work? Ex. 3: step functions

Even the simpler family of step functions doesn’t have finite core-sets: a core-set needs geometrically spaced points (factor $(1+\varepsilon)$) in the support.

Return to Ex. 1: show there exists a small-variance estimator of $\bar f = \int f\,d\mu$

General approach: weighted sampling. Sample not from $\mu$ but from a distribution $q$ that depends on both $\mu$ and $F$. Weighted sampling has long been used in clustering algorithms [Fernandez de la Vega, Kenyon ’98; Kleinberg, Papadimitriou, Raghavan ’98; Schulman ’98; Alon, Sudakov ’99; ...] to reduce the size of the data set. What we’re trying to explain is (a) for what classes of functions weighted sampling can provide an $\varepsilon$-approximator (core-set), and (b) what the connection is with the VC proof of existence of approximators in learning theory.

Return to Ex. 1: show there exists a small-variance estimator of $\bar f = \int f\,d\mu$

General approach: weighted sampling from a distribution $q$ that depends on both $\mu$ and $F$. Sample $x$ from $q$. The random variable $T = \mu_x f_x / q_x$ is an unbiased estimator of $\bar f$. Can we design $q$ so that $\mathrm{Var}(T)$ is small for all $f \in F$? Ideally: $\mathrm{Var}(T) \in O(\bar f^2)$.

For the case of “1-means in one dimension”, the optimization “given $\mu$, choose $q$ to minimize $\max_{f \in F} \mathrm{Var}(T)/\bar f^2$” can be solved (with mild pain) by Lagrange multipliers. Solution: let $\sigma^2 = \mathrm{Var}(\mu)$ and center $\mu$ at 0. Then sample from $q_x = \mu_x(\sigma^2 + x^2)/(2\sigma^2)$. (Note: this heavily weights the tails of $\mu$.) Calculation: $\mathrm{Var}(T) \le \bar f^2$. (Now average $O(1/\varepsilon^2)$ samples; for any specific $f$, this gives only $1 \pm \varepsilon$ error.)
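A numerical check of this solution, assuming $\mu$ is an empirical distribution on 200 hypothetical skewed sample points. For finite support, $T$ takes the value $\mu_x f_x/q_x$ with probability $q_x$, so $\mathbb{E}[T]$ and $\mathrm{Var}(T)$ can be computed exactly rather than by simulation. The helper name `mean_and_var_of_T` is hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)
x = rng.standard_exponential(200) ** 2        # skewed hypothetical support points
mu = np.full(x.size, 1.0 / x.size)            # mu: uniform weights on the sample
x = x - np.sum(mu * x)                        # center mu at 0
sigma2 = np.sum(mu * x ** 2)                  # sigma^2 = Var(mu)

q = mu * (sigma2 + x ** 2) / (2 * sigma2)     # q_x = mu_x (sigma^2 + x^2) / (2 sigma^2)

def mean_and_var_of_T(a):
    """Exact E[T] and Var(T) for f = (x - a)^2 when x is drawn from q."""
    f = (x - a) ** 2
    fbar = np.sum(mu * f)                     # exact integral of f against mu
    T = mu * f / q                            # value of T at each support point
    mean_T = np.sum(q * T)
    var_T = np.sum(q * T ** 2) - mean_T ** 2
    return fbar, mean_T, var_T

for a in (-5.0, 0.0, 0.3, 50.0):
    fbar, mean_T, var_T = mean_and_var_of_T(a)
    print(f"a={a:6.1f}  fbar={fbar:10.4f}  E[T]={mean_T:10.4f}  Var(T)/fbar^2={var_T / fbar**2:.4f}")
```

The printed ratio $\mathrm{Var}(T)/\bar f^2$ stays at most 1 however far $a$ moves, whereas sampling from $\mu$ itself has no such uniform bound.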

Can we generalize the success of Ex. 1?

For what classes $F$ of nonnegative functions does there exist, for all $\mu$, an estimator $T$ with $\mathrm{Var}(T) \in O(\bar f^2)$? E.g., what about nonnegative quartics, $f_x = (x-a)^2(x-b)^2$? We shouldn’t have to do Lagrange multipliers each time.

Key notion: sensitivity. Define the sensitivity of $x$ w.r.t. $(F, \mu)$ as $s_x = \sup_{f \in F} f_x / \bar f$, and the total sensitivity of $F$ as $S(F) = \sup_\mu \int s_x\,d\mu$. Sample from the distribution $q_x = \mu_x s_x / S$, where $S = \int s_x\,d\mu \le S(F)$ normalizes $q$. (This emphasizes sensitive $x$’s.)

Theorem 1: $\mathrm{Var}(T) \le (S-1)\,\bar f^2$. Proof omitted.

Exercise: for “parabolas”, $F = \{(x-a)^2\}$, show $S = 2$. Corollary: $\mathrm{Var}(T) \le \bar f^2$ (as previously obtained via Lagrange multipliers).

Theorem 2 (slightly harder): $T$ has a Chernoff bound (its distribution has exponential tails). We don’t need this today.
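The proof of Theorem 1 is omitted on the slide; for finitely supported $\mu$ it is a short calculation. A sketch, with $T = \mu_x f_x/q_x$ and $q_x = \mu_x s_x/S$ where $S = \sum_x \mu_x s_x$, assuming $s_x > 0$ wherever $\mu_x > 0$ and using $f_x/s_x \le \bar f$, which is immediate from the definition of $s_x$:

```latex
\begin{aligned}
\mathbb{E}[T]   &= \sum_x q_x \frac{\mu_x f_x}{q_x} = \sum_x \mu_x f_x = \bar f,\\
\mathbb{E}[T^2] &= \sum_x \frac{\mu_x^2 f_x^2}{q_x}
                 = S \sum_x \mu_x f_x \cdot \frac{f_x}{s_x}
                 \le S\,\bar f \sum_x \mu_x f_x = S\,\bar f^{\,2},\\
\operatorname{Var}(T) &= \mathbb{E}[T^2] - \bar f^{\,2} \le (S-1)\,\bar f^{\,2}.
\end{aligned}
```

For parabolas ($S = 2$) this gives $\mathrm{Var}(T) \le \bar f^2$, the corollary stated on the slide.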

Can we calculate $S$ for more examples?

Example 1. Let $V$ be a real or complex vector space of dimension $d$. For each $v = (\dots v_x \dots) \in V$ define an $f \in F$ by $f_x = |v_x|^2$. Theorem 3: $S(F) = d$. Proof omitted.

Corollary (again): quadratics in 1 dimension have $S(F) = 2$; quartics in 1 dimension have $S(F) = 3$; quadratics in $r$ dimensions have $S(F) = r + 1$.
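For finitely supported $\mu$ and a real space $V$ spanned by the columns of a matrix $B$ (one row $b_x$ per support point), the Cauchy-Schwarz inequality gives $s_x = b_x^\top M^{-1} b_x$ with $M = \sum_x \mu_x b_x b_x^\top$ (assumed invertible), i.e. $\mu$-weighted leverage scores, and then $\int s_x\,d\mu = \operatorname{tr}(M^{-1}M) = d$. A numerical sketch, assuming a random 1-D support with random weights and the polynomial bases $1, t$ and $1, t, t^2$ (whose squares include the parabolas and the quartics $(t-a)^2(t-b)^2$). The complex case is not covered, and the helper name is hypothetical.

```python
import numpy as np

def squared_linear_sensitivities(B, mu):
    """s_x = sup_c (b_x . c)^2 / sum_y mu_y (b_y . c)^2 = b_x^T M^{-1} b_x.

    B has one row b_x per support point; M = B^T diag(mu) B is assumed invertible.
    """
    M = B.T @ (mu[:, None] * B)
    Minv_Bt = np.linalg.solve(M, B.T)              # column x holds M^{-1} b_x
    return np.einsum("xd,dx->x", B, Minv_Bt)       # b_x^T M^{-1} b_x for each x

rng = np.random.default_rng(0)
n = 500
t = rng.normal(size=n)                             # support points of a hypothetical 1-D mu
mu = rng.random(n)
mu /= mu.sum()                                     # arbitrary probability weights

totals = {}
for dim in (2, 3):
    B = np.vander(t, dim, increasing=True)         # columns 1, t, ..., t^(dim-1)
    s = squared_linear_sensitivities(B, mu)
    totals[dim] = float(mu @ s)                    # total sensitivity for this mu
    # Sanity check: no random coefficient vector c beats the closed-form s_x.
    for _ in range(100):
        c = rng.normal(size=dim)
        f = (B @ c) ** 2
        assert np.all(f / (mu @ f) <= s * (1 + 1e-9) + 1e-12)
print(totals)                                      # both totals equal dim up to rounding
```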

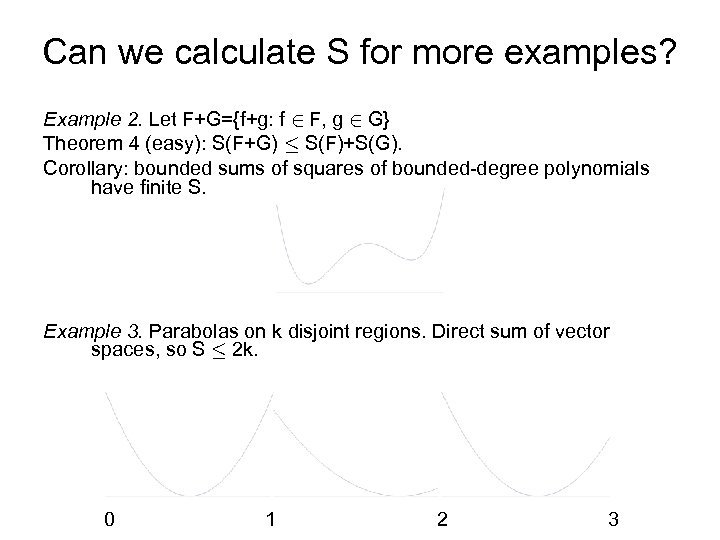

Can we calculate $S$ for more examples?

Example 2. Let $F + G = \{f + g : f \in F, g \in G\}$. Theorem 4 (easy): $S(F+G) \le S(F) + S(G)$. Corollary: bounded sums of squares of bounded-degree polynomials have finite $S$.

Example 3. Parabolas on $k$ disjoint regions. This is a direct sum of vector spaces, so $S \le 2k$.
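Theorem 4 follows from the mediant inequality $(f_x + g_x)/(\bar f + \bar g) \le \max(f_x/\bar f,\, g_x/\bar g)$, which gives $s^{F+G}_x \le s^F_x + s^G_x$ pointwise for every $\mu$. A sketch on a finite support, with two hypothetical random nonnegative families stored one function per row (the helper name `sensitivities` is hypothetical):

```python
import numpy as np

def sensitivities(funcs, mu):
    """s_x = max_f f_x / fbar for a finite family (one function per row of `funcs`)."""
    fbar = funcs @ mu                               # integral of each function against mu
    return np.max(funcs / fbar[:, None], axis=0)

rng = np.random.default_rng(1)
n = 50
mu = rng.random(n)
mu /= mu.sum()
F = rng.random((20, n)) ** 4                        # hypothetical family F
G = rng.random((30, n)) ** 4                        # hypothetical family G
FplusG = (F[:, None, :] + G[None, :, :]).reshape(-1, n)   # every f + g

s_F, s_G, s_FG = (sensitivities(H, mu) for H in (F, G, FplusG))
S_F, S_G, S_FG = (float(mu @ s) for s in (s_F, s_G, s_FG))
print(S_F, S_G, S_FG)                               # S_FG never exceeds S_F + S_G
```

Only one fixed $\mu$ is checked here; the theorem takes the supremum over $\mu$, which the pointwise inequality handles automatically.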

Can we calculate $S$ for more examples?

But all these examples don’t even handle the 1-median functions (figure), and certainly not the k-median functions (figure). We will return to this...

What about $\mathrm{MIN}(F, G)$?

Question: if $F$ and $G$ have finite total sensitivity, is the same true of $\mathrm{MIN}(F, G) = \{\min(f, g) : f \in F, g \in G\}$? We want this for optimization: e.g., k-means or k-median functions are constructed by MIN out of simple families. We know $S(\text{Parabolas}) = 2$; what is $S(\mathrm{MIN}(\text{Parabolas}, \text{Parabolas}))$?

Answer: unbounded. So total sensitivity does not remain finite under the MIN operator.

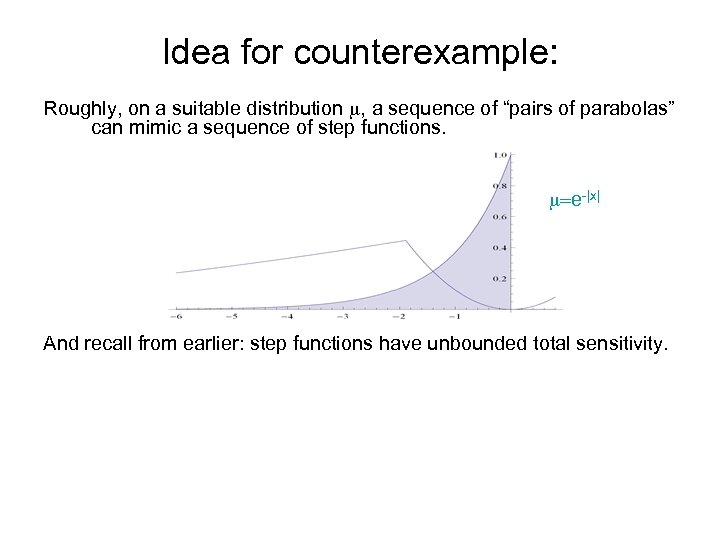

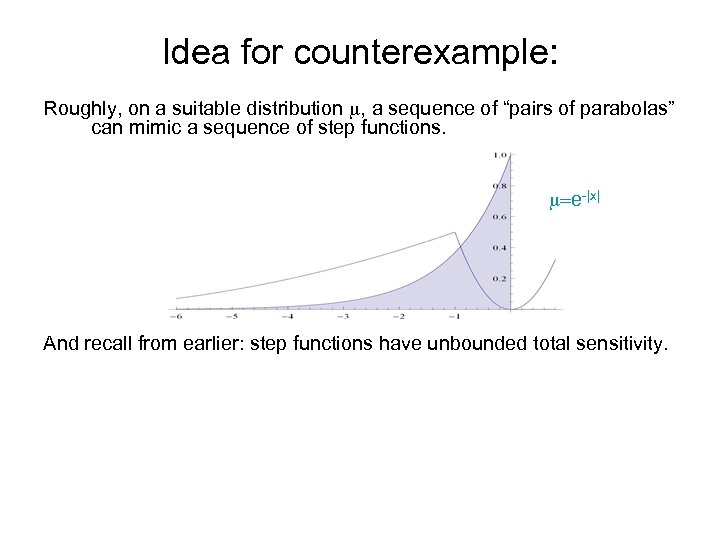

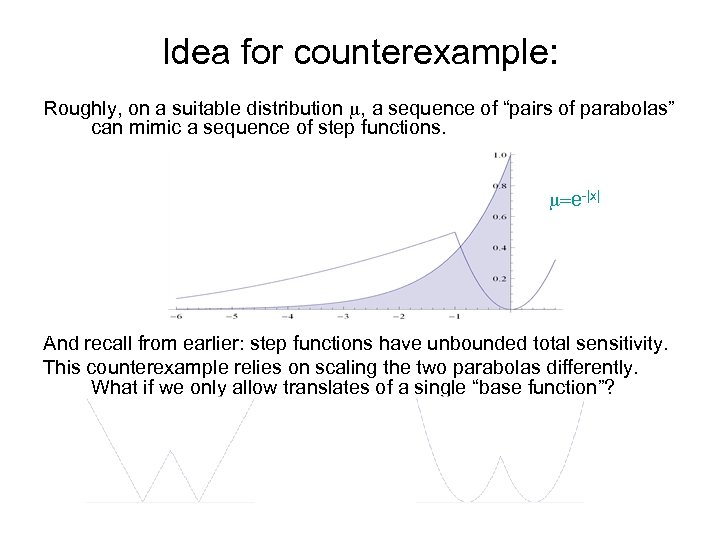

Idea for counterexample

Roughly, on a suitable distribution $\mu$ (here $\mu(x) \propto e^{-|x|}$), a sequence of “pairs of parabolas” can mimic a sequence of step functions. And recall from earlier: step functions have unbounded total sensitivity.

This counterexample relies on scaling the two parabolas differently. What if we only allow translates of a single “base function”?

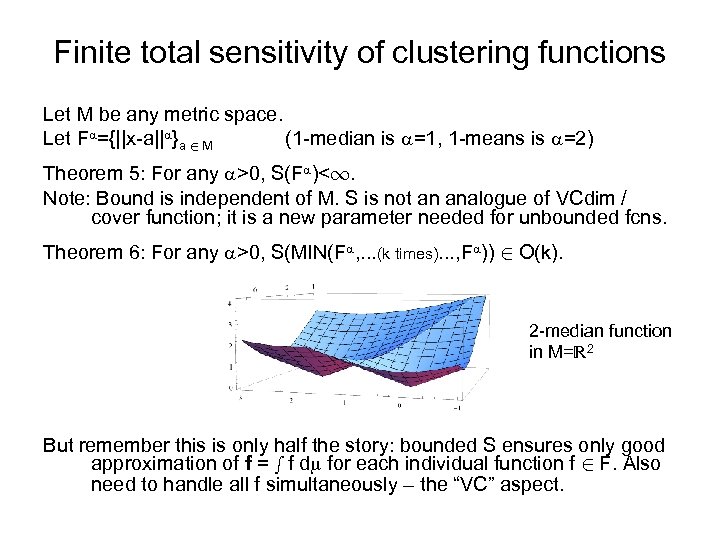

Finite total sensitivity of clustering functions

Let $M$ be any metric space. Let $F_\alpha = \{\|x - a\|^\alpha\}_{a \in M}$ (1-median is $\alpha = 1$, 1-means is $\alpha = 2$).

Theorem 5: for any $\alpha > 0$, $S(F_\alpha) < \infty$. Note: the bound is independent of $M$. $S$ is not an analogue of VC-dim / cover function; it is a new parameter needed for unbounded functions.

Theorem 6: for any $\alpha > 0$, $S(\mathrm{MIN}(F_\alpha, \dots (k \text{ times}) \dots, F_\alpha)) \in O(k)$. (Figure: a 2-median function in $M = \mathbb{R}^2$.)

But remember this is only half the story: bounded $S$ ensures only a good approximation of $\bar f = \int f\,d\mu$ for each individual function $f \in F$. We also need to handle all $f$ simultaneously: the “VC” aspect.

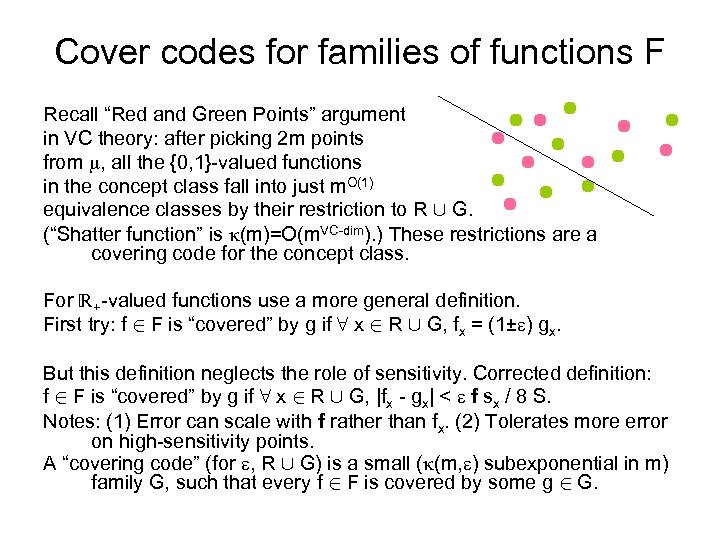

Cover codes for families of functions $F$

Recall the “Red and Green Points” argument in VC theory: after picking $2m$ points from $\mu$, all the $\{0,1\}$-valued functions in the concept class fall into just $m^{O(1)}$ equivalence classes by their restriction to $R \cup G$. (The “shatter function” is $\Pi(m) = O(m^{\text{VC-dim}})$.) These restrictions are a covering code for the concept class.

For $\mathbb{R}^+$-valued functions we use a more general definition. First try: $f \in F$ is “covered” by $g$ if $\forall x \in R \cup G$, $f_x = (1 \pm \varepsilon)\,g_x$. But this definition neglects the role of sensitivity. Corrected definition: $f \in F$ is “covered” by $g$ if $\forall x \in R \cup G$, $|f_x - g_x| < \varepsilon \bar f s_x / (8S)$. Notes: (1) the error can scale with $\bar f$ rather than $f_x$; (2) it tolerates more error on high-sensitivity points.

A “covering code” (for $\mu$, $R \cup G$) is a small family $\mathcal{G}$ (of size $\Pi(m, \varepsilon)$, subexponential in $m$) such that every $f \in F$ is covered by some $g \in \mathcal{G}$.
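A sketch of the corrected covering test, assuming (for illustration only) that $\mu$ is uniform on a hypothetical five-point $R \cup G$ and that $F$ is the 1-median family on the line. The sensitivities $s_x$ are estimated by maximizing over a finite grid of centers, which gives a lower estimate and hence a slightly stricter test. All names are hypothetical.

```python
import numpy as np

def is_covered(f_vals, g_vals, s_vals, fbar, S, eps):
    """True if |f_x - g_x| < eps * fbar * s_x / (8 S) at every point x of R u G."""
    f_vals, g_vals, s_vals = (np.asarray(v, dtype=float) for v in (f_vals, g_vals, s_vals))
    return bool(np.all(np.abs(f_vals - g_vals) < eps * fbar * s_vals / (8 * S)))

pts = np.array([0.0, 1.0, 2.0, 3.0, 10.0])          # hypothetical R u G
mu = np.full(pts.size, 1 / pts.size)                 # illustrative: mu uniform on these points
grid = np.linspace(-50.0, 60.0, 11001)               # candidate 1-median centers a
dist = np.abs(pts[None, :] - grid[:, None])          # f_x = |x - a| for each grid a
s = np.max(dist / (dist @ mu)[:, None], axis=0)      # grid lower estimate of s_x
S = float(mu @ s)

f = np.abs(pts - 1.0)                                # f = |x - 1|
fbar = float(mu @ f)
near = is_covered(f, np.abs(pts - 1.0001), s, fbar, S, eps=0.1)   # nearby center
far = is_covered(f, np.abs(pts - 5.0), s, fbar, S, eps=0.1)       # distant center
print(near, far)
```

The nearby center passes everywhere; the distant one fails, most visibly at the outlying point $x = 10$, even though that point has the largest error tolerance.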

Cover codes for families of functions $F$

So (now focusing on k-median) we need to prove two things:

Theorem 6: $S(\mathrm{MIN}(F_1, \dots (k \text{ times}) \dots, F_1)) \in O(k)$.

Theorem 7: (a) In $\mathbb{R}^d$, $\Pi(\mathrm{MIN}(F_1, \dots (k \text{ times}) \dots, F_1)) \in m^{\mathrm{poly}(k, 1/\varepsilon, d)}$. (b) Chernoff bound for $\int f_x/s_x\,d\mu_G$ as an estimator of $\int f_x/s_x\,d\mu_{R \cup G}$. (Recall $\mu_G$ = uniform distribution on $G$.)

Today we talk only about Theorem 6, and Theorem 7 in the case $k = 1$, $d$ arbitrary.

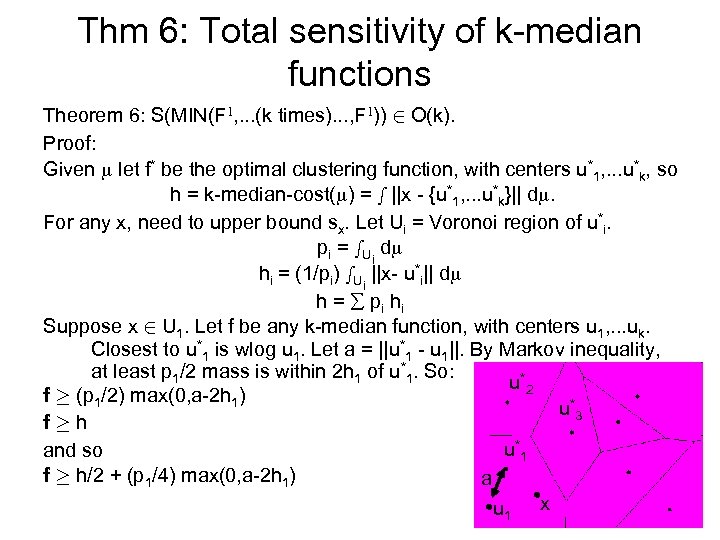

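Thm 6: Total sensitivity of k-median functions

Theorem 6: $S(\mathrm{MIN}(F_1, \dots (k \text{ times}) \dots, F_1)) \in O(k)$.

Proof: Given $\mu$, let $f^*$ be the optimal clustering function, with centers $u^*_1, \dots, u^*_k$, so $h = \text{k-median-cost}(\mu) = \int \|x - \{u^*_1, \dots, u^*_k\}\|\,d\mu$. For any $x$, we need to upper bound $s_x$. Let
- $U_i$ = Voronoi region of $u^*_i$,
- $p_i = \int_{U_i} d\mu$,
- $h_i = (1/p_i)\int_{U_i} \|x - u^*_i\|\,d\mu$, so that $h = \sum_i p_i h_i$.

Suppose $x \in U_1$. Let $f$ be any k-median function, with centers $u_1, \dots, u_k$; w.l.o.g. $u_1$ is the center closest to $u^*_1$. Let $a = \|u^*_1 - u_1\|$. By the Markov inequality, at least $p_1/2$ of the mass is within $2h_1$ of $u^*_1$, and each such point is at distance at least $a - 2h_1$ from every center of $f$. So $\bar f \ge (p_1/2)\max(0, a - 2h_1)$; also $\bar f \ge h$ by optimality of $f^*$; and so $\bar f \ge h/2 + (p_1/4)\max(0, a - 2h_1)$. (Figure: optimal centers $u^*_1, u^*_2, u^*_3$; center $u_1$ at distance $a$ from $u^*_1$; the point $x$.)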

Thm 6: Total sensitivity of k-median functions (cont.)

$\bar f \ge h/2 + (p_1/4)\max(0, a - 2h_1)$. From the definition of sensitivity,
$s_x = \max_f f_x/\bar f \le \max_f \|x - \{u_1, \dots, u_k\}\|/\bar f \le \max_f \|x - u_1\|/\bar f \le \dots$ (one can show the worst case is either $a = 2h_1$ or $a \to \infty$) $\dots \le 4h_1/h + 2\|x - u^*_1\|/h + 4/p_1$.

Thus
$S = \int s_x\,d\mu = \sum_i \int_{U_i} s_x\,d\mu \le \sum_i \int_{U_i} \left[4h_i/h + 2\|x - u^*_i\|/h + 4/p_i\right] d\mu = (4/h)\sum_i p_i h_i + (2/h)\int \|x - \{u^*_1, \dots, u^*_k\}\|\,d\mu + \sum_i 4 = 4 + 2 + 4k = 6 + 4k.$
(Best possible up to constants.)
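A numerical sketch of this computation in one dimension, assuming a heavy-tailed hypothetical sample. The identity $\int(\text{bound})\,d\mu = 6 + 4k$ holds for any centers whose Voronoi cells carry mass, whereas the pointwise bound on $s_x$ relies on optimality of $u^*$; so the sensitivity comparison is done only for $k = 1$, where the median is an optimal center, against a grid-based lower estimate of $s_x$. The helper name `theorem6_bound` is hypothetical.

```python
import numpy as np

def theorem6_bound(points, centers, mu):
    """Bound 4 h_i / h + 2 |x - u*_i| / h + 4 / p_i for each x in Voronoi cell U_i.

    1-D data for simplicity; `centers` play the role of u*_1, ..., u*_k and every
    Voronoi cell is assumed to carry positive mass. Returns the per-point bound and
    its integral against mu.
    """
    d = np.abs(points[:, None] - centers[None, :])
    cell = np.argmin(d, axis=1)                     # index i with x in U_i
    dist = d[np.arange(points.size), cell]          # |x - u*_i|
    k = centers.size
    p = np.array([mu[cell == i].sum() for i in range(k)])                      # p_i
    h_i = np.array([(mu * dist)[cell == i].sum() / p[i] for i in range(k)])   # h_i
    h = float(np.sum(p * h_i))                      # h = sum_i p_i h_i
    bound = 4 * h_i[cell] / h + 2 * dist / h + 4 / p[cell]
    return bound, float(mu @ bound)

rng = np.random.default_rng(2)
pts = rng.standard_cauchy(300)                      # heavy-tailed hypothetical sample
mu = np.full(pts.size, 1 / pts.size)

# k = 1: the median is an optimal 1-median center in one dimension.
bound1, total1 = theorem6_bound(pts, np.array([np.median(pts)]), mu)

# Grid estimate (a lower bound) of s_x = sup_a |x - a| / fbar(a).
grid = np.linspace(pts.min() - 100, pts.max() + 100, 4001)
dist_grid = np.abs(pts[None, :] - grid[:, None])
s_lower = np.max(dist_grid / (dist_grid @ mu)[:, None], axis=0)

# k = 3 with centers at data points: the integral identity gives 6 + 4k = 18.
_, total3 = theorem6_bound(pts, np.sort(pts)[[60, 150, 240]], mu)
print(total1, total3, bool(np.all(s_lower <= bound1)))
```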

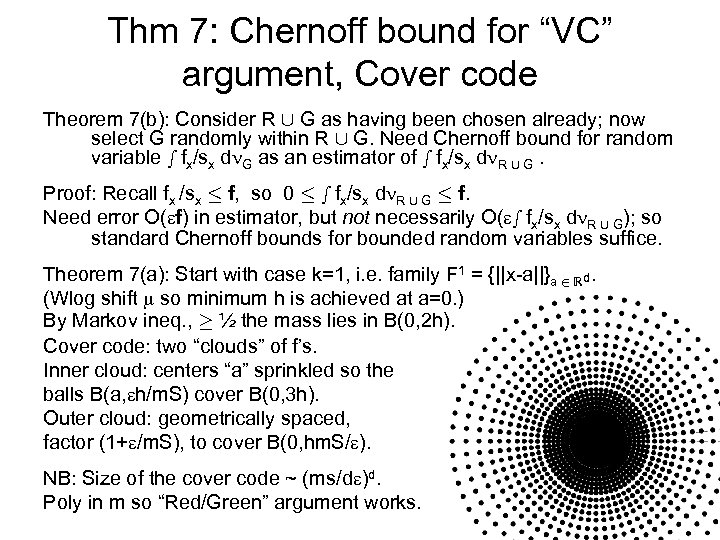

Thm 7: Chernoff bound for “VC” argument, cover code

Theorem 7(b): Consider $R \cup G$ as having been chosen already; now select $G$ randomly within $R \cup G$. We need a Chernoff bound for the random variable $\int f_x/s_x\,d\mu_G$ as an estimator of $\int f_x/s_x\,d\mu_{R \cup G}$. Proof: recall $f_x/s_x \le \bar f$, so $0 \le \int f_x/s_x\,d\mu_{R \cup G} \le \bar f$. We need error $O(\varepsilon \bar f)$ in the estimator, but not necessarily $O(\varepsilon \int f_x/s_x\,d\mu_{R \cup G})$, so standard Chernoff bounds for bounded random variables suffice.

Theorem 7(a): Start with the case $k = 1$, i.e., the family $F_1 = \{\|x - a\|\}_{a \in \mathbb{R}^d}$. (W.l.o.g. shift so that the minimum $h$ is achieved at $a = 0$.) By the Markov inequality, at least half the mass lies in $B(0, 2h)$. Cover code: two “clouds” of $f$’s. Inner cloud: centers $a$ sprinkled so that the balls $B(a, h/(mS))$ cover $B(0, 3h)$. Outer cloud: geometrically spaced, factor $(1 + \varepsilon/(mS))$, to cover $B(0, hmS/\varepsilon)$. NB: the size of the cover code is $\sim (mS/d\varepsilon)^d$, polynomial in $m$, so the “Red/Green” argument works.

Thm 7: Chernoff bound for “VC” argument, cover code (cont.)

Why is every $f = \|x - a\|$ covered by this code? In cases 1 and 2, $f$ is covered by the $g$ whose root $b$ is closest to $a$.

Case 1: $a \in$ inner ball $B(0, 3h)$. Then for all $x$, $|f_x - g_x|$ is bounded by the Lipschitz property.

Case 2: $a \in$ outer ball $B(0, hmS/\varepsilon)$. This forces $\bar f$ to be large (proportional to $\|a\|$ rather than $h$), which makes it easier to achieve $|f_x - g_x| \le \varepsilon \bar f$; again use the Lipschitz property.

Case 3: $a \notin$ outer ball $B(0, hmS/\varepsilon)$. In this case $f$ is covered by the constant function $g_x = \|a\|$. Again this forces $\bar f$ to be large (proportional to $\|a\|$ rather than $h$), but for $x$ far from 0 this is not enough. Use the inequality $h \ge \|x\|/s_x$: distant points have high sensitivity, so we take advantage of the extra tolerance for error on high-sensitivity points.

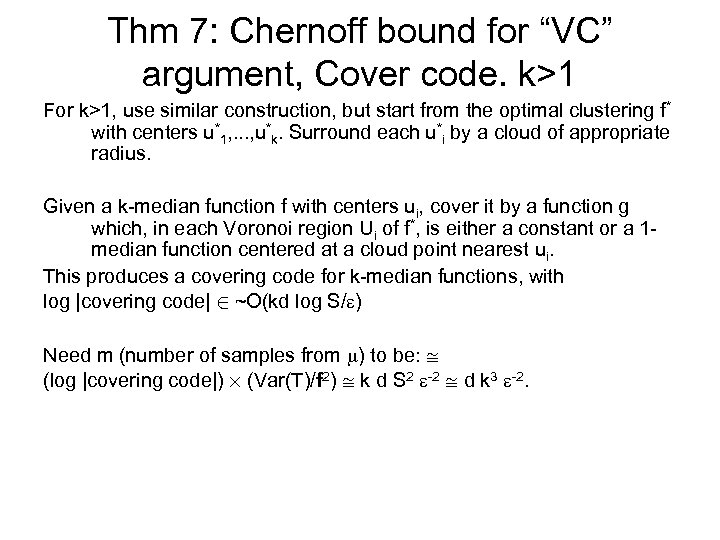

Thm 7: Chernoff bound for “VC” argument, cover code, $k > 1$

For $k > 1$, use a similar construction, but start from the optimal clustering $f^*$ with centers $u^*_1, \dots, u^*_k$. Surround each $u^*_i$ by a cloud of appropriate radius. Given a k-median function $f$ with centers $u_i$, cover it by a function $g$ which, in each Voronoi region $U_i$ of $f^*$, is either a constant or a 1-median function centered at a cloud point nearest $u_i$. This produces a covering code for k-median functions with $\log|\text{covering code}| \in \tilde O(kd\log(S/\varepsilon))$. We need $m$ (the number of samples from $\mu$) to be about $(\log|\text{covering code}|) \times (\mathrm{Var}(T)/\bar f^2) \approx kdS^2\varepsilon^{-2} \approx dk^3\varepsilon^{-2}$.

Some open questions

(1) An efficient algorithm to find a small $\varepsilon$-approximator? (Suppose $\mathrm{Support}(\mu)$ is finite.)

(2) For $\{0,1\}$-valued functions there was a finitary characterization of whether the cover function $\Pi_F$ was exponential or subexponential: the largest set shattered by $F$. Question: is there an analogous finitary characterization for the cover function for multiplicative approximation of $\mathbb{R}^+$-valued functions? (It is not sufficient that level sets have low VC dimension; step functions are a counterexample.)

fdd86e47511b316f9990b416873664a8.ppt