4d875758c4922aef03f190c7fd17b093.ppt

- Number of slides: 30

Generating Password Challenge Questions

Chuong Ngo

Online Services and the Problem of Account Security
- E-commerce, banking, e-mail, etc.
- Average user: 26 different online accounts, but only 5 unique passwords
- Users aged 25 to 30: 40+ accounts
- Online fraud cases in 2012: three times the 2010 figure

Passwords: So Secure You Can't Remember Them?
- Memorability and security are negatively correlated
- Password recovery systems are a must:
  - SMS
  - E-mail
  - Snail mail

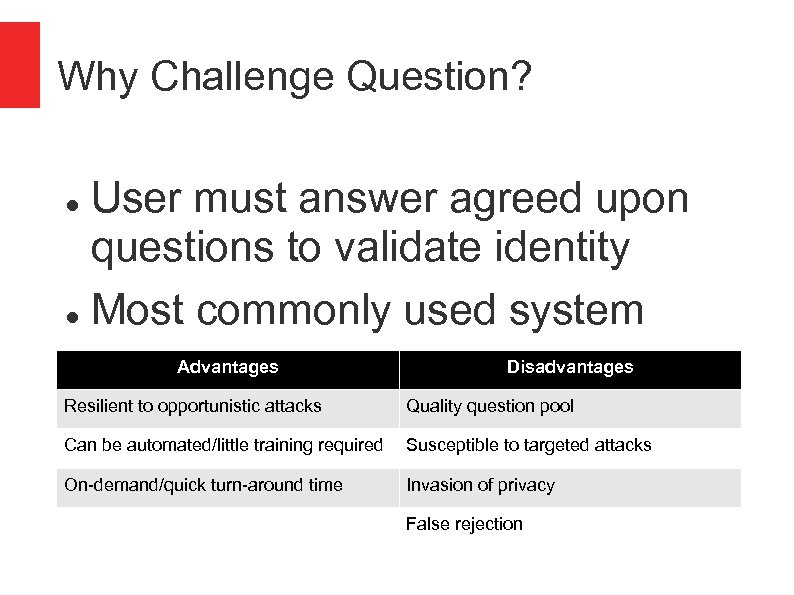

Why Challenge Questions?
- The user must answer agreed-upon questions to validate their identity.
- Most commonly used system.

| Advantages | Disadvantages |
|---|---|
| Resilient to opportunistic attacks | Depends on a quality question pool |
| Can be automated; little training required | Susceptible to targeted attacks |
| On-demand; quick turnaround time | Invasion of privacy |
| | False rejection |

Just How Safe and Secure?
- The system is weak and exploitable.
- Social media:
  - Answers are easy to obtain or already in the public domain.
  - 12% answerable using social media information.
- Applicability and repeatability problems.

Can It Be Salvaged?
- Treat challenge questions like passwords:
  - Must value memorability
  - Avoid too many "easy" answers
  - Large pool of challenge questions
- What if the questions were targeted and personal?

Targeted Challenge Questions
- Applicability and repeatability problems become negligible.
- More personal, and therefore more secure and memorable.
- Greater answer variety from long-form answers.

Goal: make a system that uses or generates challenge questions that target the user's strong, personal memories.

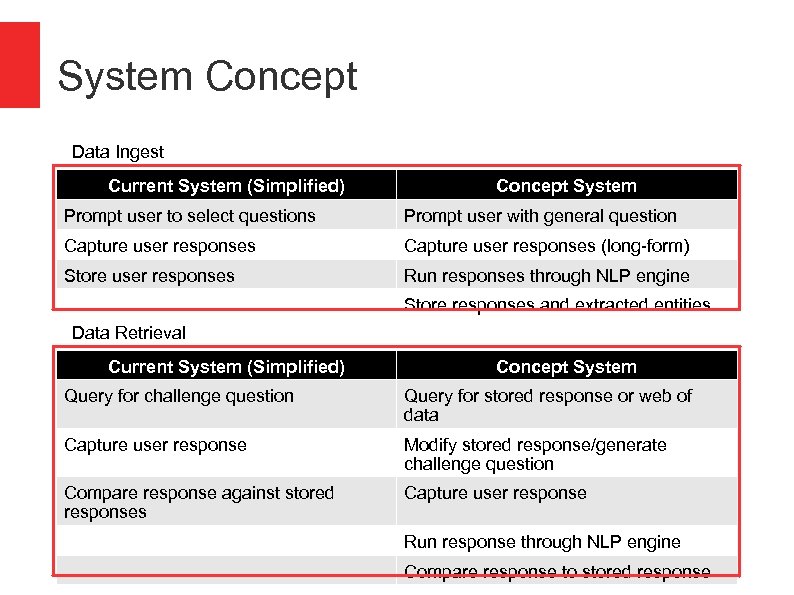

System Concept

Data ingest
- Current system (simplified): prompt user to select questions → capture user responses → store user responses
- Concept system: prompt user with a general question → capture long-form user responses → run responses through the NLP engine → store responses and extracted entities

Data retrieval
- Current system (simplified): query for the challenge question → capture user response → compare response against stored responses
- Concept system: query for stored response or web of data → modify stored response / generate challenge question → capture user response → run response through the NLP engine → compare response to stored response
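
The concept system's ingest and retrieval halves can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the author's implementation: `gazetteer` is a hypothetical stand-in for the CoreNLP NER stage, and the names `ingest`, `verify`, `StoredResponse`, the example prompt, and the 0.5 acceptance `threshold` are all illustrative choices.

```python
import re
from dataclasses import dataclass
from typing import Callable

# An extractor maps free text to the set of (lower-cased) entities found in it.
Extractor = Callable[[str], set]


@dataclass(frozen=True)
class StoredResponse:
    question: str
    text: str
    entities: frozenset  # entities extracted at ingest time


def gazetteer(names: set) -> Extractor:
    """Hypothetical stand-in for the NER stage: report which known names
    occur as whole words in the text."""
    wanted = {name.lower() for name in names}

    def extract(text: str) -> set:
        words = set(re.findall(r"[a-z]+", text.lower()))
        return wanted & words

    return extract


def ingest(question: str, response: str, extract: Extractor) -> StoredResponse:
    """Data ingest: store the long-form response together with its entities."""
    return StoredResponse(question, response, frozenset(extract(response)))


def verify(record: StoredResponse, answer: str, extract: Extractor,
           threshold: float = 0.5) -> bool:
    """Data retrieval: extract entities from the new answer and accept it if it
    recovers at least `threshold` of the entities stored at ingest time."""
    if not record.entities:
        return False
    recovered = extract(answer) & record.entities
    return len(recovered) / len(record.entities) >= threshold


story = ("Bob is a great uncle. He loved to fish near his home in Minnesota. "
         "The stream would later feed into the Mississippi River.")
extract = gazetteer({"Bob", "Minnesota", "Mississippi"})
record = ingest("Tell us about a relative.", story, extract)  # hypothetical prompt
print(verify(record, "My uncle Bob took me fishing in Minnesota.", extract))  # True (2 of 3 entities)
print(verify(record, "My aunt took me hiking in Texas.", extract))            # False
```

Running the new answer through the same extractor used at ingest is what makes the comparison meaningful; a real system would swap `gazetteer` for the NLP engine's NER output.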

The Natural Language Processing Engine at the Heart of It All

The NLP Engine
- Uses Stanford CoreNLP
- Pipeline includes:
  - Tokenizer
  - Sentence splitter
  - PoS tagger
  - Morpha annotator
  - NER

Notable Pipeline Absences
- No sentiment analyzer
  - Requires training for individuals
  - No real advantage
- No relationship analyzer
  - Beyond scope
- Limited use of the coreferencer and dependency tree

Fill-in-the-Blanks (FitB) Approach: A First Step

FitB Approach Overview
- The challenge question is open-ended and general.
- The user provides a long-form response.
- The system presents the user with a modified version of their answer to the challenge question.
- The user must "fill in the blanks" or correct the mistakes.

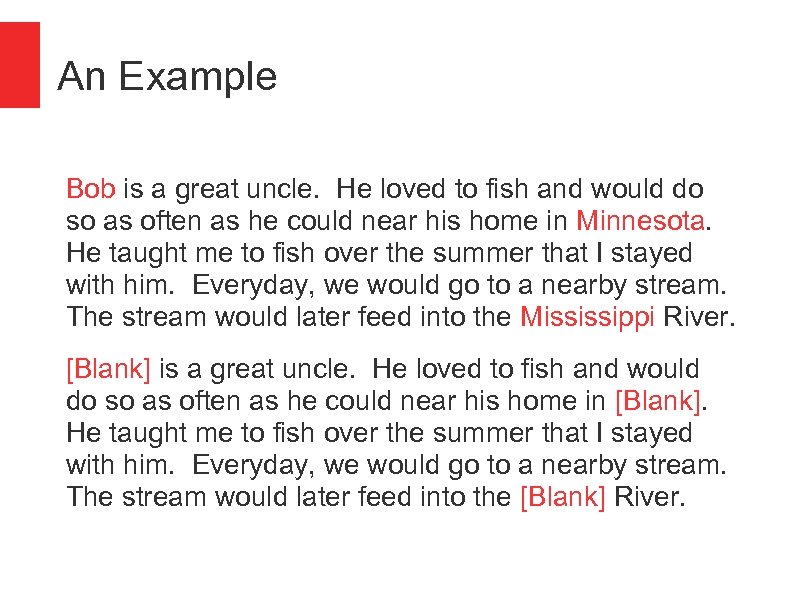

An Example

Stored response: Bob is a great uncle. He loved to fish and would do so as often as he could near his home in Minnesota. He taught me to fish over the summer that I stayed with him. Every day, we would go to a nearby stream. The stream would later feed into the Mississippi River.

Challenge presented to the user: [Blank] is a great uncle. He loved to fish and would do so as often as he could near his home in [Blank]. He taught me to fish over the summer that I stayed with him. Every day, we would go to a nearby stream. The stream would later feed into the [Blank] River.
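
The blanking and checking steps of the FitB approach can be sketched as follows. Assumptions: the entity list is passed in by hand (in the described system it would come from the NER stage), only blanking is shown (not the "correct the mistakes" variant), and `make_blanks`, `check_blanks`, and the exact, case-insensitive comparison are illustrative choices rather than the author's code.

```python
import re

BLANK = "[Blank]"


def make_blanks(text: str, entities: list) -> tuple:
    """Replace every whole-word occurrence of an entity with [Blank].

    Returns the challenge text and the expected answers in blank order."""
    if not entities:
        return text, []
    # Longest names first so "New York City" wins over "New York".
    alternatives = sorted(entities, key=len, reverse=True)
    pattern = re.compile(r"\b(?:" + "|".join(map(re.escape, alternatives)) + r")\b")
    answers = [match.group(0) for match in pattern.finditer(text)]
    return pattern.sub(BLANK, text), answers


def check_blanks(expected: list, filled: list) -> bool:
    """Accept only if every blank is filled in correctly (case-insensitive)."""
    return len(expected) == len(filled) and all(
        e.strip().casefold() == f.strip().casefold()
        for e, f in zip(expected, filled)
    )


story = ("Bob is a great uncle. He loved to fish and would do so as often as he "
         "could near his home in Minnesota. He taught me to fish over the summer "
         "that I stayed with him. Every day, we would go to a nearby stream. The "
         "stream would later feed into the Mississippi River.")
challenge, answers = make_blanks(story, ["Bob", "Minnesota", "Mississippi"])
print(challenge)
print(answers)  # ['Bob', 'Minnesota', 'Mississippi']
print(check_blanks(answers, ["bob", "Minnesota", "mississippi"]))  # True
print(check_blanks(answers, ["Bob", "Wisconsin", "Mississippi"]))  # False
```

Requiring every blank to match is the strictest policy; accepting a fraction of correct blanks would trade some security for fewer false rejections.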

Why Does It Work?
- It is a single story.
  - Multiple NNs (nouns) relate to the same idea.
- It is memorable.
  - The prompt helps kick-start memory.
- Simple and fast.

Where Does It Fall Short?
- Potentially low entropy in the question pool.
  - The question is not generated.
- No web of knowledge, and therefore no context.
- Unable to correlate multiple stored user responses.
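
The entropy concern can be made concrete with the standard Shannon formula. If the open-ended prompt is drawn from a pool of N questions with probabilities p_i, an attacker's uncertainty about which question will be asked is

$$
H = -\sum_{i=1}^{N} p_i \log_2 p_i \;\le\; \log_2 N,
$$

with equality when every question is equally likely. A pool of N = 8 general prompts therefore contributes at most 3 bits; the value 8 is only an illustrative number, not one taken from the original evaluation.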

Future Work
- Different user interfaces (e.g., pictures)
- Incorporate additional processors (e.g., a relationship analyzer)
- Increase the number of data points to match

Document Retrieval Approach: A Slight Twist

Document Retrieval Approach
- Similar to the FitB approach.
- The user is prompted to answer the same challenge question they originally wrote an answer for.
- The user's answers are run through the NLP engine, and NNs are extracted.
- NNs are used to search through all
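
The search step can be sketched as ranking the stored responses by how many extracted nouns they share with the new answer. This is only the simplest possible version and is exactly the kind of bag-of-words matching the following "Not Quite Right" slide says is insufficient on its own; the Jaccard score, the `min_score` cut-off, the document ids, and all function names are hypothetical choices, not the author's.

```python
def jaccard(a: set, b: set) -> float:
    """Shared nouns divided by all nouns in either set (0.0 if both are empty)."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def rank_responses(answer_nouns: set, stored: dict) -> list:
    """Score every stored response (id -> extracted nouns) against the new
    answer, best match first."""
    scored = [(doc_id, jaccard(answer_nouns, nouns)) for doc_id, nouns in stored.items()]
    return sorted(scored, key=lambda item: item[1], reverse=True)


def authenticate(answer_nouns: set, stored: dict, expected_id: str,
                 min_score: float = 0.3) -> bool:
    """Accept if the user's own stored response is the best match and scores
    at least a (hypothetical) minimum similarity."""
    ranked = rank_responses(answer_nouns, stored)
    return bool(ranked) and ranked[0][0] == expected_id and ranked[0][1] >= min_score


# Nouns as the NLP engine might extract them (illustrative data).
stored = {
    "uncle_story": {"bob", "uncle", "minnesota", "stream", "river", "summer"},
    "pet_story": {"dog", "yard", "ball", "summer"},
}
print(rank_responses({"uncle", "bob", "river", "fishing"}, stored))
# uncle_story scores 3/7 (about 0.43); pet_story scores 0.0
print(authenticate({"dog", "summer"}, stored, "uncle_story"))  # False
```

Because the score depends only on exact noun overlap, a truthful answer that uses different words ("creek" instead of "stream") scores poorly, which is the weakness noted on the next slide.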

Not Quite Right...
- Cannot use a regular bag-of-words approach.
  - The source document and the user-provided answer document may differ too much.
- Not backed by a web of knowledge.
- Does not reveal private information.

Future Work
- May benefit from existing search engine technologies (e.g., Lucene).
- May benefit from more data points to match.

Generating Questions from a Web of Knowledge (WOK) Approach: Now I Understand Why This Is Still Unsolved

WOK Approach Overview
- The NLP engine extracts the NNs from the user's initial response.
- The user is prompted to provide more information about the NNs.
- The information is stored in the WOK.
- Challenge questions are generated from the WOK.
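
The ingest side of the WOK approach can be sketched with a plain set of (subject, property, value) triples standing in for the OWL ontology. Assumptions: `ask` is a hypothetical callback representing the follow-up prompt shown to the user, and the canned answers below replay part of the example data from the later slide rather than a real session; `build_wok` and `facts_about` are illustrative names.

```python
from typing import Callable


def build_wok(nouns: list, ask: Callable[[str], dict]) -> set:
    """For each noun extracted from the initial response, ask the user for more
    details and record every non-empty answer as a (subject, property, value) triple."""
    wok = set()
    for noun in nouns:
        for prop, value in ask(noun).items():
            value = value.strip()
            if value:  # skip properties the user left blank
                wok.add((noun, prop, value))
    return wok


def facts_about(wok: set, subject: str) -> dict:
    """Collect the stored property values for one subject."""
    return {prop: value for s, prop, value in wok if s == subject}


# Replayed user answers (hypothetical stand-in for the interactive prompt).
canned = {
    "Bob": {"type": "Person", "relation": "Uncle", "livesIn": "Minnesota"},
    "Minnesota": {"type": "Location", "relation": "Bob's home"},
}
wok = build_wok(["Bob", "Minnesota"], lambda noun: canned.get(noun, {}))
print(facts_about(wok, "Minnesota"))
```

Triples keep the sketch dependency-free; an OWL store adds class hierarchies and typed properties on top of the same subject-property-value shape.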

Making the WOK
- Utilized Protege, a popular Java library for OWL and RDF.
- Information stored as OWL.

Generating the Questions
- A random class is chosen from the WOK.
- A question is generated using a property's label id and a template question.
- The user's response is matched against the property's value.

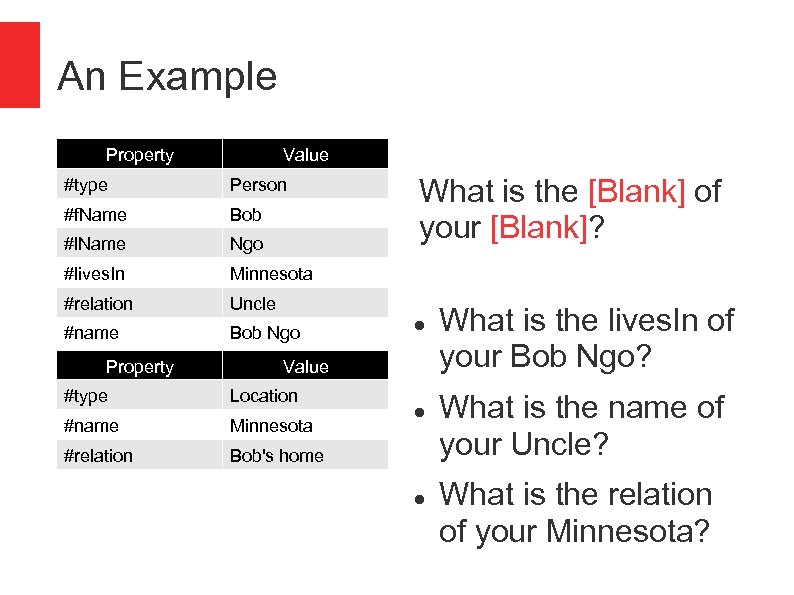

An Example

| Property | Value |
|---|---|
| #type | Person |
| #fName | Bob |
| #lName | Ngo |
| #livesIn | Minnesota |
| #relation | Uncle |
| #name | Bob Ngo |

| Property | Value |
|---|---|
| #type | Location |
| #name | Minnesota |
| #relation | Bob's home |

Template: What is the [Blank] of your [Blank]?

Generated questions:
- What is the livesIn of your Bob Ngo?
- What is the name of your Uncle?
- What is the relation of your Minnesota?
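
The naive template generator can be sketched in Python using the example data above. Assumptions: each WOK entry is a plain dict rather than an OWL individual, and the entry is referred to by the value of another, randomly chosen property; that reference rule is inferred from the three example questions and is not stated on the slides. `make_question`, `random_question`, and `check_answer` are hypothetical names.

```python
import random

TEMPLATE = "What is the {prop} of your {ref}?"

# Example WOK from the slide: property label id -> value.
WOK = {
    "person": {"type": "Person", "fName": "Bob", "lName": "Ngo",
               "livesIn": "Minnesota", "relation": "Uncle", "name": "Bob Ngo"},
    "location": {"type": "Location", "name": "Minnesota", "relation": "Bob's home"},
}


def make_question(entry: dict, prop: str, ref_prop: str) -> tuple:
    """Fill the template with the asked property's label id and the value of a
    second property; the expected answer is the asked property's value."""
    return TEMPLATE.format(prop=prop, ref=entry[ref_prop]), entry[prop]


def random_question(wok: dict, rng: random.Random) -> tuple:
    """Naive generator: random entry, random property to ask about, random other
    property to refer to the entry by (needs two non-"type" properties)."""
    entry = wok[rng.choice(sorted(wok))]
    props = sorted(p for p in entry if p != "type")
    prop = rng.choice(props)
    ref_prop = rng.choice([p for p in props if p != prop])
    return make_question(entry, prop, ref_prop)


def check_answer(expected: str, response: str) -> bool:
    """Match the user's response against the stored property value."""
    return expected.strip().casefold() == response.strip().casefold()


print(make_question(WOK["person"], "livesIn", "name"))
# ('What is the livesIn of your Bob Ngo?', 'Minnesota')
print(make_question(WOK["person"], "name", "relation"))
# ('What is the name of your Uncle?', 'Bob Ngo')
print(make_question(WOK["location"], "relation", "name"))
# ('What is the relation of your Minnesota?', "Bob's home")
```

Because the property label id is pasted in verbatim, the output has the stilted phrasing ("the livesIn of your Bob Ngo") that the next slide criticizes.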

Why Doesn't It Work?
- The question generation algorithm needs to be less naive.
- Generated questions are very impersonal.
- Not really an improvement over the current method.
- Creation of the WOK is not automatic or semi-automatic.

Future Work
- The question generation algorithm must be improved.
- Incorporate additional NLP technologies for a smarter WOK.
- Is ontology the wrong technology?

Conclusion
- The FitB approach is the most ready for deployment.
- Evaluation of the document retrieval approach is incomplete.
- The WOK approach needs a lot more work.

Questions?
