c8475281dde8c0ab63fc0400af866e18.ppt

- Number of slides: 30

Generalized Entropies
Renato Renner
Institute for Theoretical Physics, ETH Zurich, Switzerland

Collaborators
• Roger Colbeck (ETH Zurich)
• Nilanjana Datta (U Cambridge)
• Oscar Dahlsten (ETH Zurich)
• Patrick Hayden (McGill U, Montreal)
• Robert König (Caltech)
• Christian Schaffner (CWI, Amsterdam)
• Valerio Scarani (NUS, Singapore)
• Marco Tomamichel (ETH Zurich)
• Ligong Wang (ETH Zurich)
• Andreas Winter (U Bristol)
• Stefan Wolf (ETH Zurich)
• Jürg Wullschleger (U Bristol)

Why is Shannon / von Neumann entropy so widely used in information theory?
• operational interpretation: quantitative characterization of information processing tasks
• easy to handle: simple mathematical definition, intuitive entropy calculus

Operational interpretations of Shannon entropy (classical scenarios)
• data compression rate for a source $P_X$: rate $= S(X)$
• transmission rate of a channel $P_{Y|X}$: rate $= \max_{P_X} [S(X) - S(X|Y)]$
• secret key rate for a correlated source $P_{XYZ}$: rate $\geq S(X|Z) - S(X|Y)$
• many more …
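
The transmission-rate formula can be illustrated with a short numerical sketch. The binary symmetric channel with flip probability 0.1, the grid search over input distributions, and the function names are assumptions chosen for illustration; they do not come from the slides.

```python
from math import log2

def entropy(probs):
    """Shannon entropy in bits of a list of probabilities."""
    return -sum(p * log2(p) for p in probs if p > 0)

def transmission_rate(p_x, channel):
    """S(X) - S(X|Y) for input distribution p_x and channel[x][y] = P(y|x)."""
    joint = [[p_x[x] * channel[x][y] for y in range(len(channel[x]))]
             for x in range(len(p_x))]
    p_y = [sum(row[y] for row in joint) for y in range(len(joint[0]))]
    s_xy = entropy([p for row in joint for p in row])
    s_x_given_y = s_xy - entropy(p_y)  # S(X|Y) = S(XY) - S(Y)
    return entropy(p_x) - s_x_given_y

# Assumed example: binary symmetric channel with flip probability 0.1.
flip = 0.1
bsc = [[1 - flip, flip], [flip, 1 - flip]]

# Coarse grid search over binary input distributions (q, 1 - q).
grid = [i / 100 for i in range(1, 100)]
best_q = max(grid, key=lambda q: transmission_rate([q, 1 - q], bsc))
capacity = transmission_rate([best_q, 1 - best_q], bsc)
print(best_q, capacity)
```

For this channel the maximum is attained at the uniform input, where the rate equals one minus the binary entropy of the flip probability.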

Operational interpretations of von Neumann entropy (quantum scenarios)
• data compression rate for a source $\rho_A$: rate $= S(A)$
• state merging rate for a bipartite state $\rho_{AB}$: rate $= S(A|B)$
• randomness extraction rate for a cq state $\rho_{XE}$: rate $= S(X|E)$
• secret key rate for a cqq state $\rho_{XBE}$: rate $\geq S(X|E) - S(X|B)$
• …

Why is von Neumann entropy so widely used in information theory?
• operational meaning: quantitative characterization of information processing tasks
• easy to handle: simple mathematical definition, intuitive entropy calculus

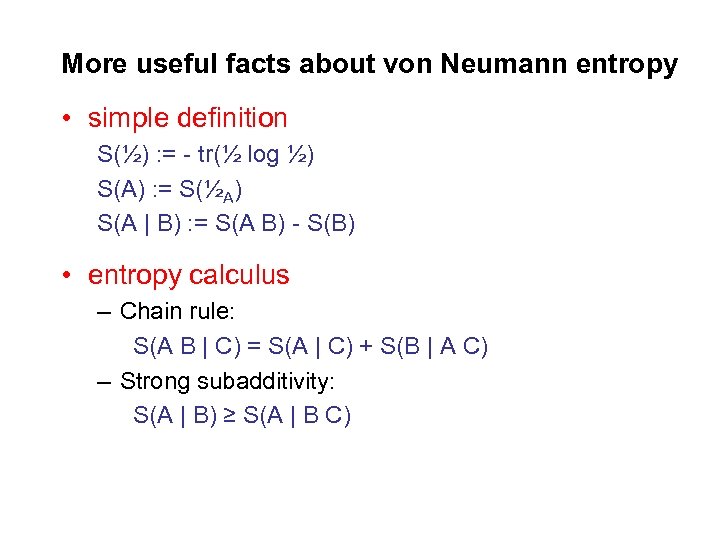

More useful facts about von Neumann entropy
• simple definition
  $S(\rho) := -\mathrm{tr}(\rho \log \rho)$
  $S(A) := S(\rho_A)$
  $S(A|B) := S(AB) - S(B)$
• entropy calculus
  – Chain rule: $S(AB|C) = S(A|C) + S(B|AC)$
  – Strong subadditivity: $S(A|B) \geq S(A|BC)$

Why is von Neumann entropy so widely used in information theory?
• operational meaning: quantitative characterization of information processing tasks
• easy to handle: simple mathematical definition, intuitive entropy calculus

Limitations of the von Neumann entropy
Claim: Operational interpretations are only valid under certain assumptions.
Typical assumptions (e.g., for source coding)
• i.i.d.: the source emits n independent and identically distributed pieces of data
• asymptotics: n is large ($n \to \infty$)
Formally: $P_{X_1 \ldots X_n} = (P_X)^{\times n}$ for $n \to \infty$

Can these assumptions be justified in realistic settings?
• i.i.d. assumption
  – approximation justified by de Finetti's theorem (permutation symmetry implies i.i.d. structure on almost all subsystems)
  – problematic in certain cryptographic scenarios (e.g., in the bounded storage model)
• asymptotics
  – realistic settings are always finite (small systems might be of particular interest in practice)
  – but might be OK if convergence is fast enough (convergence rate often unknown, problematic in cryptography)

Is the i.i.d. assumption really needed?
Example: distribution $P_X$ (figure, shown for $k = 4$)
• Randomness extraction: $H_{\mathrm{extr}}(X) = 1$ bit (depends on maximum probability)
• Data compression: $H_{\mathrm{compr}}(X) \geq k$ bits (depends on alphabet size)
• Shannon entropy: $S(X) = 1 + k/2$ (provides the right answer if $P_{X_1 \ldots X_n} = (P_X)^{\times n}$ for $n \to \infty$)
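
The three numbers on this slide can be reproduced with a small sketch. The figure itself is not available, so the distribution below is an assumption: one outcome with probability 1/2 plus $2^k$ outcomes sharing the remaining mass equally, which is one shape consistent with the stated values. The function names are hypothetical.

```python
from math import log2

def example_distribution(k):
    # Assumed shape (the slide's figure is missing): one outcome with
    # probability 1/2 and 2**k outcomes with probability 2**-(k+1) each.
    return [0.5] + [0.5 / 2**k] * 2**k

def min_entropy(probs):
    """-log2 of the largest probability (governs randomness extraction)."""
    return -log2(max(probs))

def log_alphabet_size(probs):
    """log2 of the support size (governs one-shot compression)."""
    return log2(sum(1 for p in probs if p > 0))

def shannon_entropy(probs):
    return -sum(p * log2(p) for p in probs if p > 0)

k = 4
p = example_distribution(k)
print(min_entropy(p), log_alphabet_size(p), shannon_entropy(p))
```

Under this assumed distribution the min-entropy stays at 1 bit while the support grows with k, and the Shannon entropy sits between the two at 1 + k/2.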

Features of von Neumann entropy
• operational interpretations
  – hold asymptotically under the i.i.d. assumption
  – but generally invalid
• easy to handle
  – simple definition
  – entropy calculus
(Obvious) question: Is there an entropy measure whose operational interpretations hold in general and which is still easy to handle?
Answer: Yes, $H_{\min}$

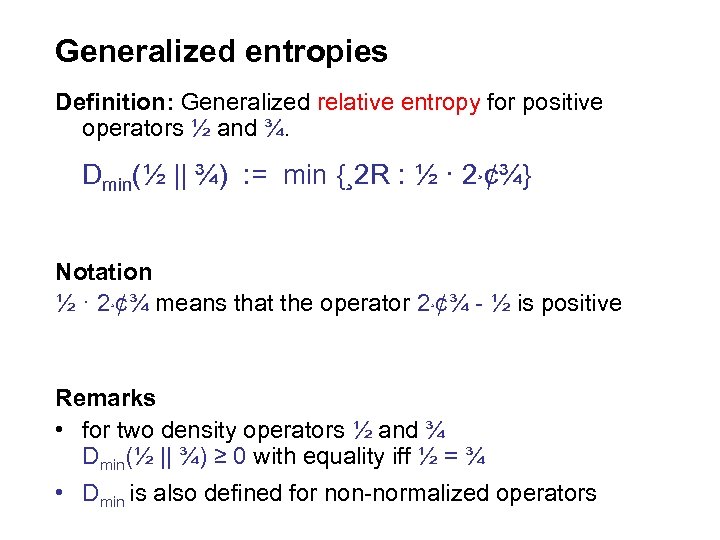

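Generalized entropies
Definition: Generalized relative entropy for positive operators $\rho$ and $\sigma$
$D_{\min}(\rho \| \sigma) := \min \{\lambda \in \mathbb{R} : \rho \leq 2^{\lambda} \cdot \sigma\}$
Notation: $\rho \leq 2^{\lambda} \cdot \sigma$ means that the operator $2^{\lambda} \cdot \sigma - \rho$ is positive.
Remarks
• for two density operators $\rho$ and $\sigma$: $D_{\min}(\rho \| \sigma) \geq 0$ with equality iff $\rho = \sigma$
• $D_{\min}$ is also defined for non-normalized operators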

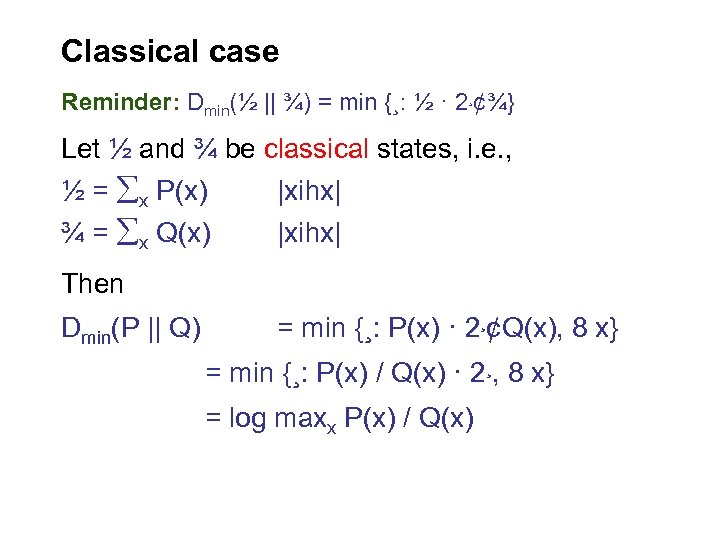

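Classical case
Reminder: $D_{\min}(\rho \| \sigma) = \min \{\lambda : \rho \leq 2^{\lambda} \cdot \sigma\}$
Let $\rho$ and $\sigma$ be classical states, i.e.,
$\rho = \sum_x P(x) |x\rangle\langle x|$, $\sigma = \sum_x Q(x) |x\rangle\langle x|$
Then
$D_{\min}(P \| Q) = \min \{\lambda : P(x) \leq 2^{\lambda} \cdot Q(x), \forall x\}$
$= \min \{\lambda : P(x) / Q(x) \leq 2^{\lambda}, \forall x\}$
$= \log \max_x P(x) / Q(x)$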

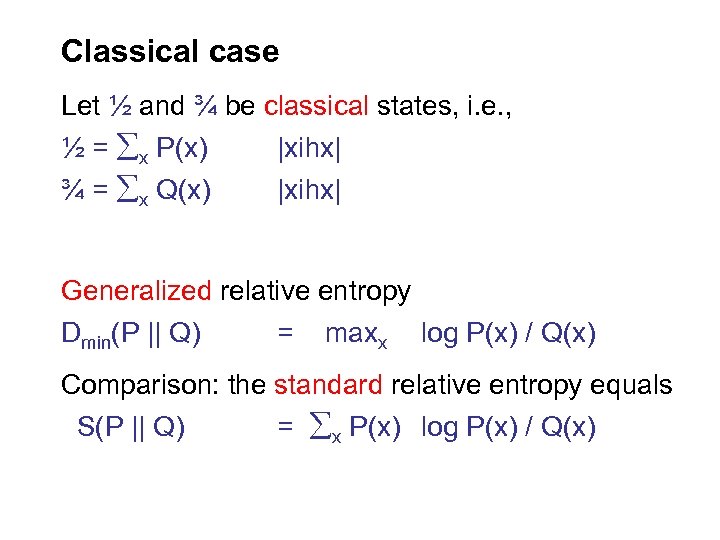

Classical case
Let $\rho$ and $\sigma$ be classical states, i.e.,
$\rho = \sum_x P(x) |x\rangle\langle x|$, $\sigma = \sum_x Q(x) |x\rangle\langle x|$
Generalized relative entropy: $D_{\min}(P \| Q) = \max_x \log \frac{P(x)}{Q(x)}$
Comparison: the standard relative entropy equals $S(P \| Q) = \sum_x P(x) \log \frac{P(x)}{Q(x)}$
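
The comparison between the worst-case log-ratio and the averaged log-ratio can be made concrete with a minimal sketch. The two distributions and the function names are assumptions picked for illustration, and logarithms are taken to base 2.

```python
from math import log2

def d_min(p, q):
    """log2 max_x P(x)/Q(x); assumes Q(x) > 0 wherever P(x) > 0."""
    return log2(max(px / qx for px, qx in zip(p, q) if px > 0))

def relative_entropy(p, q):
    """Standard relative entropy sum_x P(x) log2 P(x)/Q(x)."""
    return sum(px * log2(px / qx) for px, qx in zip(p, q) if px > 0)

# Assumed example distributions on a three-letter alphabet.
p = [0.5, 0.25, 0.25]
q = [0.25, 0.25, 0.5]
print(d_min(p, q), relative_entropy(p, q))
```

Because a maximum is never smaller than a P-weighted average of the same log-ratios, the generalized relative entropy upper-bounds the standard one in this classical setting.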

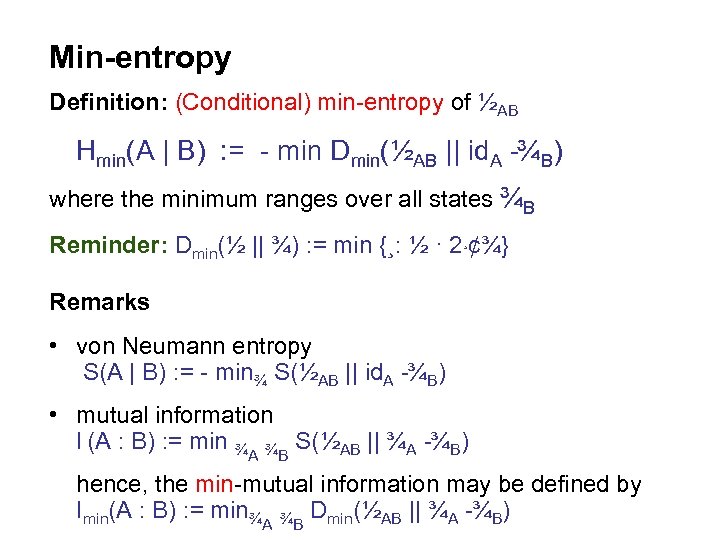

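Min-entropy
Definition: (Conditional) min-entropy of $\rho_{AB}$
$H_{\min}(A|B) := -\min_{\sigma_B} D_{\min}(\rho_{AB} \| \mathrm{id}_A \otimes \sigma_B)$
where the minimum ranges over all states $\sigma_B$.
Reminder: $D_{\min}(\rho \| \sigma) := \min \{\lambda : \rho \leq 2^{\lambda} \cdot \sigma\}$
Remarks
• von Neumann entropy: $S(A|B) = -\min_{\sigma_B} S(\rho_{AB} \| \mathrm{id}_A \otimes \sigma_B)$
• mutual information: $I(A:B) = \min_{\sigma_A, \sigma_B} S(\rho_{AB} \| \sigma_A \otimes \sigma_B)$; hence, the min mutual information may be defined by $I_{\min}(A:B) := \min_{\sigma_A, \sigma_B} D_{\min}(\rho_{AB} \| \sigma_A \otimes \sigma_B)$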

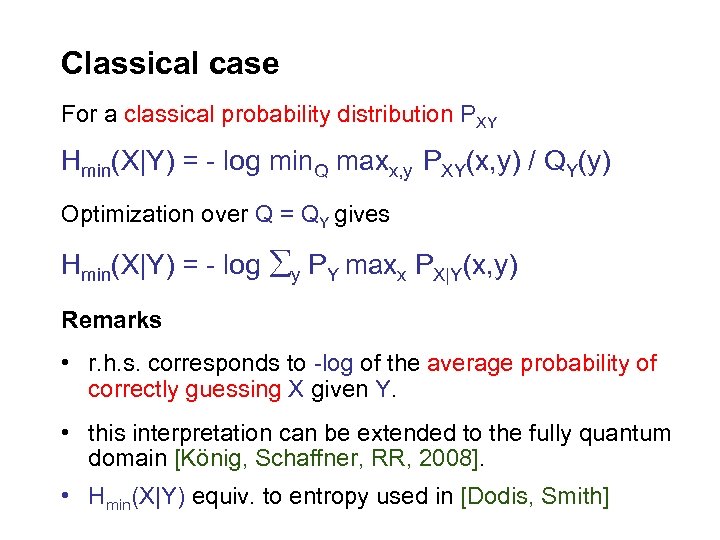

Classical case
For a classical probability distribution $P_{XY}$:
$H_{\min}(X|Y) = -\log \min_{Q} \max_{x,y} P_{XY}(x,y) / Q_Y(y)$
Optimization over $Q = Q_Y$ gives
$H_{\min}(X|Y) = -\log \sum_y P_Y(y) \max_x P_{X|Y}(x|y)$
Remarks
• the r.h.s. corresponds to $-\log$ of the average probability of correctly guessing X given Y
• this interpretation can be extended to the fully quantum domain [König, Schaffner, RR, 2008]
• $H_{\min}(X|Y)$ is equivalent to the entropy used in [Dodis, Smith]
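
The guessing-probability reading can be sketched in a few lines, using the identity $P_Y(y) \max_x P_{X|Y}(x|y) = \max_x P_{XY}(x,y)$. The joint distribution below (a uniform bit X observed through a binary symmetric channel with flip probability 0.25) and the function name are assumptions for illustration.

```python
from math import log2

def conditional_min_entropy(p_xy):
    """H_min(X|Y) = -log2 sum_y max_x P(x, y), with p_xy[x][y] the joint distribution."""
    n_y = len(p_xy[0])
    # For each observation y the best guess is the most likely x.
    p_guess = sum(max(row[y] for row in p_xy) for y in range(n_y))
    return -log2(p_guess)

# Assumed example: X is a uniform bit, Y equals X flipped with probability 0.25.
p_xy = [[0.375, 0.125],
        [0.125, 0.375]]
h = conditional_min_entropy(p_xy)
print(h, 2 ** (-h))
```

Here the optimal strategy guesses X = Y and succeeds with probability 0.75, so the min-entropy is $-\log_2 0.75$.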

Min-entropy without conditioning
$H_{\min}(X) = -\log \max_x P_X(x)$

Smoothing
Definition: Smooth entropy of $P_X$
$H^{\varepsilon}(X) := \max_{X'} H_{\min}(X')$
where the maximum is taken over all $P_{X'}$ with $\| P_X - P_{X'} \| \leq \varepsilon$
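
A minimal sketch of classical smoothing follows. The slide does not specify the norm, so this assumes the total variation distance (half the L1 norm) and a normalized $P_{X'}$ on the same alphabet. Under those assumptions the best $P_{X'}$ caps the largest probabilities at a common threshold and moves the removed mass, at most $\varepsilon$, onto less likely outcomes. The function name is hypothetical.

```python
from math import log2

def smooth_min_entropy(probs, eps):
    """Max of H_min(X') over distributions within total variation distance eps.

    Assumptions: total variation distance, normalized P_X' on the same alphabet.
    """
    ordered = sorted(probs, reverse=True)
    n = len(ordered)
    prefix = 0.0
    threshold = 1.0 / n  # the cap can never drop below the uniform value
    for k in range(1, n + 1):
        prefix += ordered[k - 1]
        # Cap the k largest probabilities so that exactly eps mass is removed.
        candidate = (prefix - eps) / k
        next_prob = ordered[k] if k < n else 0.0
        if candidate >= next_prob:
            threshold = max(candidate, 1.0 / n)
            break
    return -log2(threshold)

# Assumed example: one outcome with probability 1/2, sixteen with 1/32 each.
p = [0.5] + [1 / 32] * 16
print(smooth_min_entropy(p, 0.0), smooth_min_entropy(p, 0.25))
```

With $\varepsilon = 0$ the function returns the plain min-entropy. With $\varepsilon = 0.25$ the single heavy outcome is capped at 0.25, which raises the value from 1 bit to 2 bits.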

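Smoothing
Definition: Smooth relative entropy of $\rho$ and $\sigma$
$D^{\varepsilon}(\rho \| \sigma) := \min_{\rho'} D_{\min}(\rho' \| \sigma)$
where the minimum is taken over all $\rho'$ such that $\| \rho - \rho' \| \leq \varepsilon$.
Definition: Smooth min-entropy of $\rho_{AB}$
$H^{\varepsilon}(A|B) := -\min_{\sigma_B} D^{\varepsilon}(\rho_{AB} \| \mathrm{id}_A \otimes \sigma_B)$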

Von Neumann entropy as a special case
Consider an i.i.d. state $\rho_{A_1 \ldots A_n B_1 \ldots B_n} := \rho_{AB}^{\otimes n}$.
Lemma: $S(A|B) = \lim_{\varepsilon \to 0} \lim_{n \to \infty} \frac{1}{n} H^{\varepsilon}(A_1 \ldots A_n | B_1 \ldots B_n)$
Remark: The lemma can be extended to spectral entropy rates (see [Han, Verdu] for classical distributions and [Hayashi, Nagaoka, Ogawa, Bowen, Datta] for quantum states).
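
The lemma can be illustrated numerically in the simplest classical setting, with no side information B. The sketch below assumes n i.i.d. biased bits, smoothing in total variation distance, and a fixed $\varepsilon$; these choices and the function names are illustrative and not taken from the slides. It groups the $2^n$ sequences by their number of ones and caps the most likely sequences so that $\varepsilon$ probability mass is removed.

```python
from math import comb, log2

def binary_entropy(p):
    return -p * log2(p) - (1 - p) * log2(1 - p)

def smooth_min_entropy_rate(p, n, eps):
    """(1/n) * smooth min-entropy of n i.i.d. bits with P(1) = p.

    Assumes 0 < p < 0.5, so sequences with fewer ones are more likely,
    and smoothing with respect to total variation distance.
    """
    count = 0    # number of sequences capped so far
    mass = 0.0   # their total probability
    threshold = 2.0 ** -n
    for j in range(n + 1):
        prob = (1 - p) ** (n - j) * p ** j   # probability of one sequence with j ones
        c = comb(n, j)
        count += c
        mass += c * prob
        next_prob = (1 - p) ** (n - j - 1) * p ** (j + 1) if j < n else 0.0
        candidate = (mass - eps) / count     # common cap removing exactly eps mass
        if candidate >= next_prob:
            threshold = max(candidate, 2.0 ** -n)
            break
    return -log2(threshold) / n

p, eps = 0.1, 0.1
r10 = smooth_min_entropy_rate(p, 10, eps)
r200 = smooth_min_entropy_rate(p, 200, eps)
print(r10, r200, binary_entropy(p))
```

For fixed $\varepsilon$ the rate moves toward the Shannon entropy of a single bit as n grows, which is the behavior the lemma describes in the limit.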

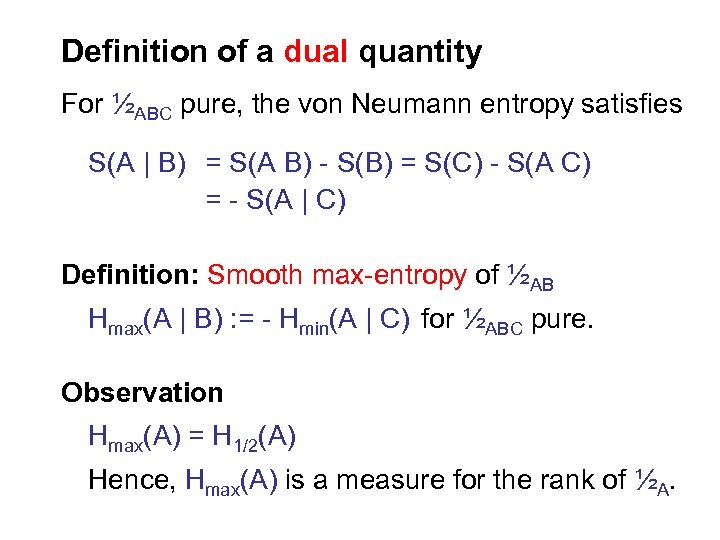

Definition of a dual quantity
For $\rho_{ABC}$ pure, the von Neumann entropy satisfies
$S(A|B) = S(AB) - S(B) = S(C) - S(AC) = -S(A|C)$
Definition: Smooth max entropy of $\rho_{AB}$
$H_{\max}(A|B) := -H_{\min}(A|C)$ for $\rho_{ABC}$ pure.
Observation: $H_{\max}(A) = H_{1/2}(A)$, the Rényi entropy of order 1/2.
Hence, $H_{\max}(A)$ is a measure for the rank of $\rho_A$.
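
For a classical distribution the Rényi entropy of order 1/2 is $2 \log \sum_x \sqrt{P(x)}$, which gives a quick way to see how $H_{\max}$ relates to the other quantities. The example distribution and function names below are assumptions for illustration.

```python
from math import log2, sqrt

def renyi_half(probs):
    """Renyi entropy of order 1/2 in bits: 2 * log2 sum_x sqrt(P(x))."""
    return 2 * log2(sum(sqrt(p) for p in probs if p > 0))

def min_entropy(probs):
    return -log2(max(probs))

def shannon_entropy(probs):
    return -sum(p * log2(p) for p in probs if p > 0)

def log_rank(probs):
    """log2 of the support size, the classical analogue of log rank."""
    return log2(sum(1 for p in probs if p > 0))

# Assumed example: one outcome with probability 1/2, sixteen with 1/32 each.
p = [0.5] + [1 / 32] * 16
print(min_entropy(p), shannon_entropy(p), renyi_half(p), log_rank(p))
```

The printed values are ordered as min-entropy, Shannon entropy, order-1/2 entropy, log of the support size, which shows that $H_{1/2}$ is bounded above by the log-rank.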

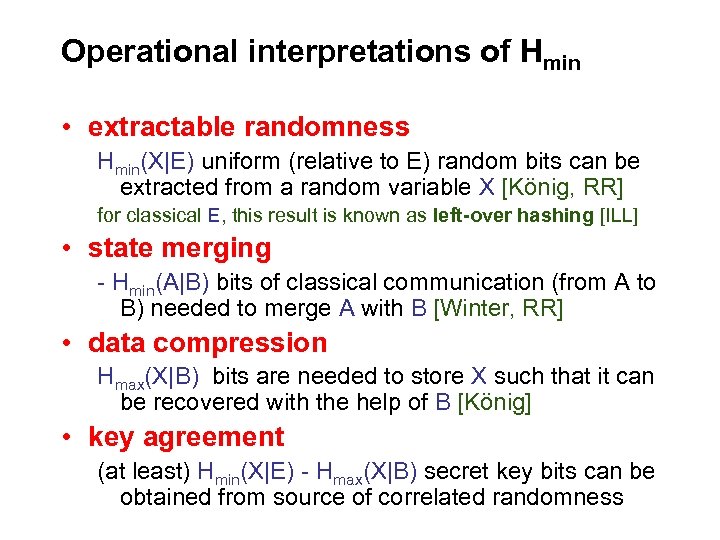

Operational interpretations of $H_{\min}$
• extractable randomness: $H_{\min}(X|E)$ uniform (relative to E) random bits can be extracted from a random variable X [König, RR]; for classical E, this result is known as left-over hashing [ILL]
• state merging: $H_{\min}(A|B)$ bits of classical communication (from A to B) are needed to merge A with B [Winter, RR]
• data compression: $H_{\max}(X|B)$ bits are needed to store X such that it can be recovered with the help of B [König]
• key agreement: (at least) $H_{\min}(X|E) - H_{\max}(X|B)$ secret key bits can be obtained from a source of correlated randomness

Features of $H_{\min}$
• operational meaning
• easy to handle?

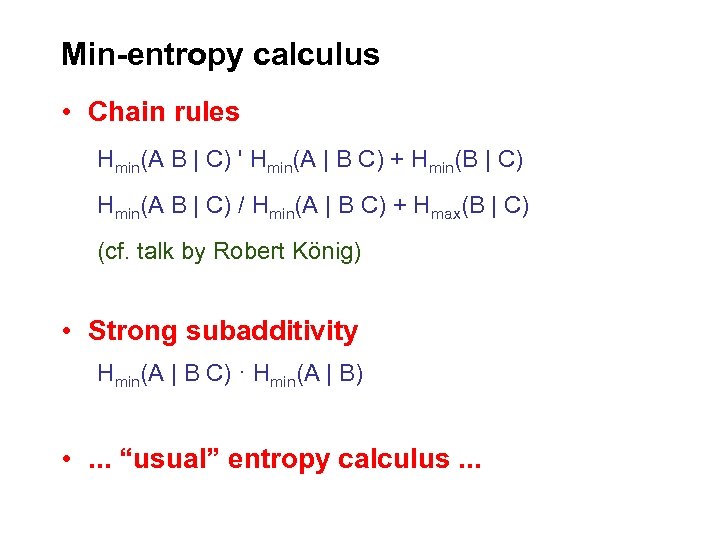

Min-entropy calculus
• Chain rules
  $H_{\min}(AB|C) \gtrapprox H_{\min}(A|BC) + H_{\min}(B|C)$
  $H_{\min}(AB|C) \lessapprox H_{\min}(A|BC) + H_{\max}(B|C)$
  (cf. talk by Robert König)
• Strong subadditivity: $H_{\min}(A|BC) \leq H_{\min}(A|B)$
• ... "usual" entropy calculus ...

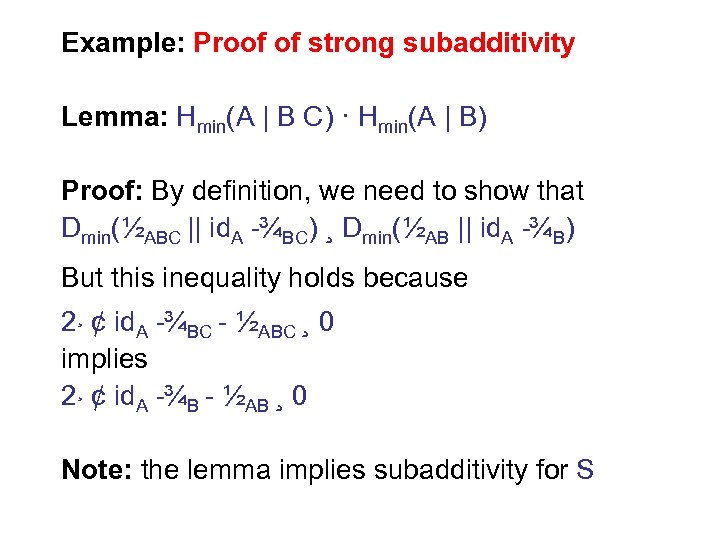

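Example: Proof of strong subadditivity
Lemma: $H_{\min}(A|BC) \leq H_{\min}(A|B)$
Proof: By definition, we need to show that
$D_{\min}(\rho_{ABC} \| \mathrm{id}_A \otimes \sigma_{BC}) \geq D_{\min}(\rho_{AB} \| \mathrm{id}_A \otimes \sigma_B)$
where $\sigma_B = \mathrm{tr}_C\, \sigma_{BC}$. But this inequality holds because
$2^{\lambda} \cdot \mathrm{id}_A \otimes \sigma_{BC} - \rho_{ABC} \geq 0$
implies
$2^{\lambda} \cdot \mathrm{id}_A \otimes \sigma_B - \rho_{AB} \geq 0$,
since the partial trace over C maps positive operators to positive operators.
Note: the lemma implies strong subadditivity for S.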
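
In the classical case the lemma says that extra side information can only help a guesser, and this can be checked numerically with the guessing-probability form $H_{\min}(X|Y) = -\log \sum_y \max_x P_{XY}(x,y)$. The joint distribution below (a uniform bit X with two independent noisy copies Y and Z) and the helper name are assumptions for illustration.

```python
from math import log2
from collections import defaultdict

def min_entropy_given(p_xyz, keep):
    """H_min(X | side info), where keep(y, z) selects the available side information."""
    joint = defaultdict(float)   # (x, side) -> probability
    for (x, y, z), prob in p_xyz.items():
        joint[(x, keep(y, z))] += prob
    best = defaultdict(float)    # side -> max_x P(x, side)
    for (x, side), prob in joint.items():
        best[side] = max(best[side], prob)
    return -log2(sum(best.values()))

# Assumed example: X uniform bit; Y flips X with prob. 0.25, Z flips X with prob. 0.1.
def flip(a, b, eps):
    return eps if a != b else 1 - eps

p_xyz = {(x, y, z): 0.5 * flip(x, y, 0.25) * flip(x, z, 0.1)
         for x in (0, 1) for y in (0, 1) for z in (0, 1)}

h_given_y = min_entropy_given(p_xyz, lambda y, z: y)
h_given_yz = min_entropy_given(p_xyz, lambda y, z: (y, z))
print(h_given_yz, h_given_y)
```

With access to both Y and Z the guessing probability rises from 0.75 to 0.9, so the min-entropy conditioned on YZ is the smaller of the two, as the lemma requires.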

Features of $H_{\min}$
• operational meaning
• easy to handle (often easier than von Neumann entropy)

Summary
$H_{\min}$ generalizes von Neumann entropy
Main features
• general operational interpretation
  – i.i.d. assumption not needed
  – no asymptotics
• easy to handle
  – simple definition
  – simpler proofs (e.g., strong subadditivity)

Applications
• quantum key distribution: min-entropy plays a crucial role in general security proofs
• cryptography in the bounded storage model: see talks by Christian Schaffner and by Robert König
• ...
Open questions
• Additivity conjecture: $H_{\max}$ corresponds to $H_{1/2}$, for which the additivity conjecture still might hold
• New entropy measures based on $H_{\min}$?

Thanks for your attention
