08321362b3c55ccbd6b3e3aee506f5c1.ppt

- Number of slides: 21

Fusion Testing: Maximizing Test Execution
By: James Tischart

About Me
• Director - Product Delivery SaaS at McAfee
• 15+ years of experience in testing and engineering
• Multiple certifications in Agile and Testing
• Passionate about testing as an engineering discipline, a science and an art
• Continue to challenge the status quo, test new approaches and always strive to improve
• Support the legitimacy of the testing professional in the broader engineering world

What is Fusion Testing?
Fusion: an occurrence that involves the production of a union
• Organization of structured testing
• Freedom of exploratory testing
• Rigors of automated testing
Combined into one test methodology
To achieve:
• Maximized code execution
• Increased test coverage
• Reduced test artifacts and documentation
• Higher quality for users
• Improved data for the organization

Fusion
Focus – start your day with 15 minutes of thought
Usage – how will your users work with the system
Scope – decide on the scope of everything
Initiate – just go and explore
Organize – create a plan & be ready to deviate from it
Note – keep track of your exploration to retrace steps

Fusion Testing Guidance
Maximize test execution with Fusion by:
1. Identifying goals for testing
2. Choosing the right mix of methodologies
3. Utilizing test lists to guide exploration
4. Automating the right things
5. Documenting at the right level of detail

Fusion Implementation
1. Guide your team through the change
   • Create a Change Message
   • Mentor your team members
2. Train the team in testing and engineering
3. Create Metrics to measure success & failure

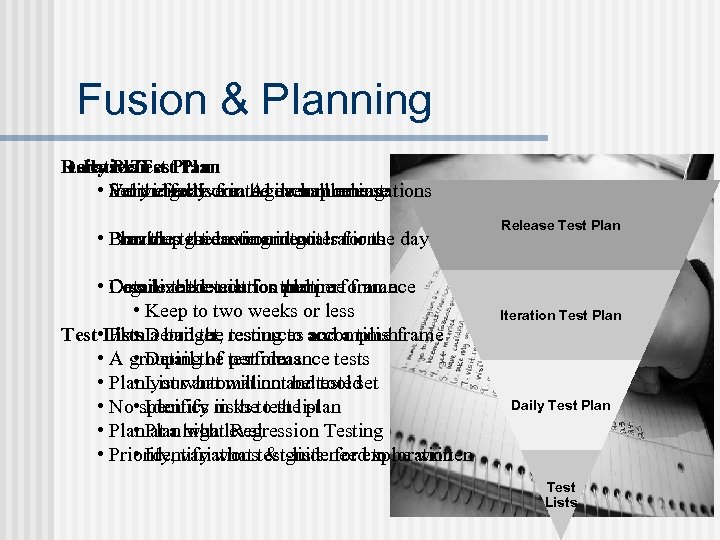

Fusion & Planning
Release Test Plan
• Set the goals for the overall release
• Plan the test environment
• Organize the metrics and performance
• Plan a budget, resources and a time frame
• Detail the performance tests
• Plan your automation and tool set
• List what will not be tested
• Identify risks to the plan
• Plan at a high level
Iterative Test Plan
• Very effective in Agile implementations
• Break up the testing into iterations
• Low level details for the time frame
• Keep to two weeks or less
• Plan what Regression Testing
• Identify what test-lists need to be written
Daily Plan
• Individually created each morning
• Provides guidance and goals for the day
• Details the execution plan
Test Lists
• Detail the testing to accomplish
• A grouping of test ideas
• No specifics in the test list
• Priority, variations & guide for exploration
Diagram labels: Release Test Plan, Iteration Test Plan, Daily Test Plan, Test Lists
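A test list as this slide describes it (a grouping of test ideas with a priority and variations, but no step-by-step specifics) could be kept in a small structure like the one below. This is only a sketch: the names `Idea`, `FusionTestList`, `priority` and `variations`, and the 1-is-highest priority scale, are illustrative assumptions and do not come from the slides.

```python
from dataclasses import dataclass, field


@dataclass
class Idea:
    """One test idea to explore; deliberately has no scripted steps."""
    summary: str
    priority: int = 2                      # assumed scale: 1 = highest
    variations: list[str] = field(default_factory=list)
    executed: bool = False


@dataclass
class FusionTestList:
    """A grouping of test ideas for one feature or area (hypothetical shape)."""
    name: str
    ideas: list[Idea] = field(default_factory=list)

    def by_priority(self) -> list[Idea]:
        # Highest-priority ideas first; sorted() is stable, so the original
        # order is kept within the same priority.
        return sorted(self.ideas, key=lambda idea: idea.priority)

    def remaining(self) -> list[Idea]:
        # Ideas not yet explored, in priority order.
        return [idea for idea in self.by_priority() if not idea.executed]


login = FusionTestList("Login", [
    Idea("Password reset flow", priority=2, variations=["expired link"]),
    Idea("Sign in with valid account", priority=1),
    Idea("Locked-out account messaging", priority=3),
])
login.ideas[1].executed = True
print([idea.summary for idea in login.remaining()])
```

A daily plan could then simply be the top few entries of `remaining()` across the test lists in scope for that day.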

The Power of Many
• Utilize different users of your system to bring fresh perspectives
  - Support personnel
  - Developers
  - User groups
  - Sales staff
  - Training
  - Product Management
• Help guide the testing by providing checklists, environments and goals
• Don’t give exact details or specific steps; this minimizes innovation
• Make the event fun and you will have many people continuing to help
• Have testing experts available to help the volunteers with problems

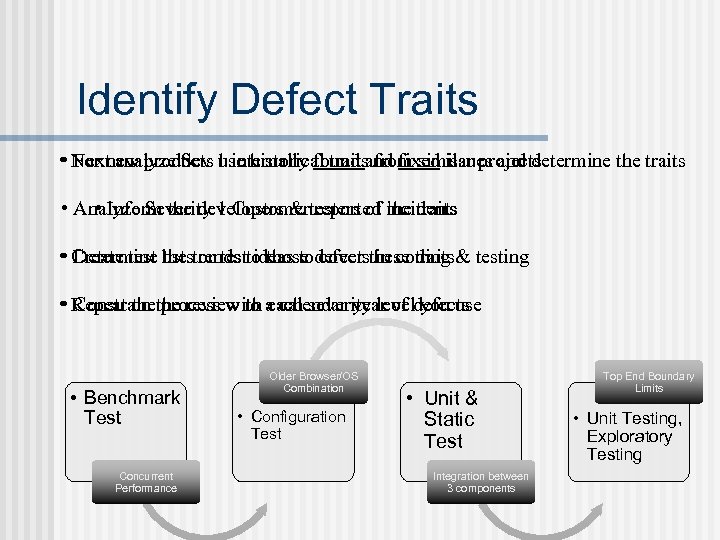

Identify Defect Traits
• Analyze Severity 1 customer-reported incidents
• Next analyze Sev 1 internally found and fixed issues and determine the traits
• Determine the trends to those defects in coding & testing
• Inform the developers & testers of the traits
• Create test lists or test ideas to cover these traits
• Repeat the process with each severity level you use
• For new products use historical traits from similar projects
• Constrain the review to a calendar year of defects
Example traits and the tests that cover them:
• Concurrent Performance: Benchmark Test
• Older Browser/OS Combination: Configuration Test
• Integration between 3 components: Unit & Static Test
• Top End Boundary Limits: Unit Testing, Exploratory Testing
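The trait analysis on this slide amounts to filtering defects by severity and calendar year and then counting the recurring traits. A minimal sketch follows; the record layout and the sample defects are hypothetical and only illustrate the counting step.

```python
from collections import Counter
from datetime import date

# Hypothetical defect records: (severity, date found, source, trait).
defects = [
    (1, date(2023, 2, 10), "customer", "concurrent performance"),
    (1, date(2023, 5, 3), "customer", "older browser/OS combination"),
    (1, date(2023, 7, 21), "internal", "concurrent performance"),
    (2, date(2023, 8, 1), "customer", "top end boundary limits"),
    (1, date(2021, 1, 15), "customer", "integration between components"),
]


def trait_counts(records, severity, year):
    """Count traits for one severity level, limited to one calendar year."""
    return Counter(
        trait
        for sev, found, _source, trait in records
        if sev == severity and found.year == year
    )


# Start with Severity 1, then repeat for each severity level in use.
sev1 = trait_counts(defects, severity=1, year=2023)
for trait, count in sev1.most_common():
    print(f"{trait}: {count}")
```

The most common traits are the natural candidates for new test lists or test ideas.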

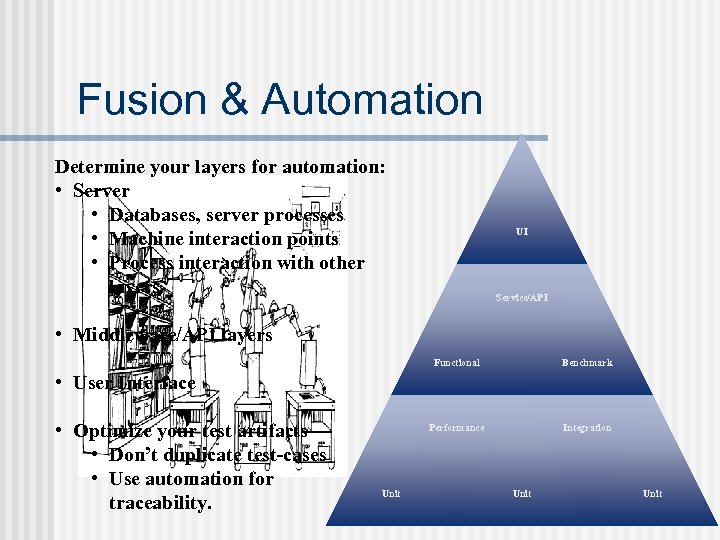

Fusion & Automation
Determine your layers for automation:
• Server
  - Databases, server processes
  - Machine interaction points
  - Process interaction with other layers
• Middleware/API layers
• User Interface
Optimize your test artifacts:
• Don’t duplicate test cases
• Use automation for traceability
Diagram labels: UI, Service/API, Unit; Functional, Benchmark, Performance, Integration

Test Results & Metrics
There will always be interest in the test data no matter the test structure. Since you can’t test everything, here are some ideas of results to report:
• # of Test Ideas Executed based on Priority
• Performance/Benchmark comparisons by build/iteration/release
• # of Test Cases passed/failed versus total coverage that the tests represent
• Team Quality Satisfaction Rating – get the gut feeling of the team
• # of Automated Tests Passed/Failed by # of executed times
• Open defects to highlight potential issues that your customers may find
Q: How can you accurately assess quality when the testing combinations exceed the particles of the universe?
A: You can’t! We need to present what was tested, what has not been tested and support the assessment used to make this prioritization.
Go to fusiontesting.blogspot.com for more details on these metrics
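Two of the results named on this slide, test ideas executed by priority and automated tests passed by number of executions, reduce to simple tallies. The sketch below assumes hypothetical record formats and sample values; it is not taken from the presentation or the referenced blog.

```python
from collections import defaultdict

# Hypothetical execution log: (test idea, priority, executed?)
ideas = [
    ("Sign in", 1, True),
    ("Password reset", 1, True),
    ("Bulk import", 2, True),
    ("Export to CSV", 2, False),
    ("Theme switcher", 3, False),
]

# Hypothetical automation history: test name -> (passed runs, total runs)
automation_runs = {"api_login": (48, 50), "ui_checkout": (27, 30)}


def executed_by_priority(records):
    """Return {priority: (executed, total)} for the test ideas."""
    totals = defaultdict(lambda: [0, 0])
    for _name, priority, executed in records:
        totals[priority][1] += 1
        if executed:
            totals[priority][0] += 1
    return {p: tuple(v) for p, v in sorted(totals.items())}


def pass_rate(runs):
    """Return {test: fraction of its executions that passed}."""
    return {name: passed / total for name, (passed, total) in runs.items()}


print(executed_by_priority(ideas))
print(pass_rate(automation_runs))
```

Reporting executed and total side by side keeps what has not been tested visible alongside what was.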

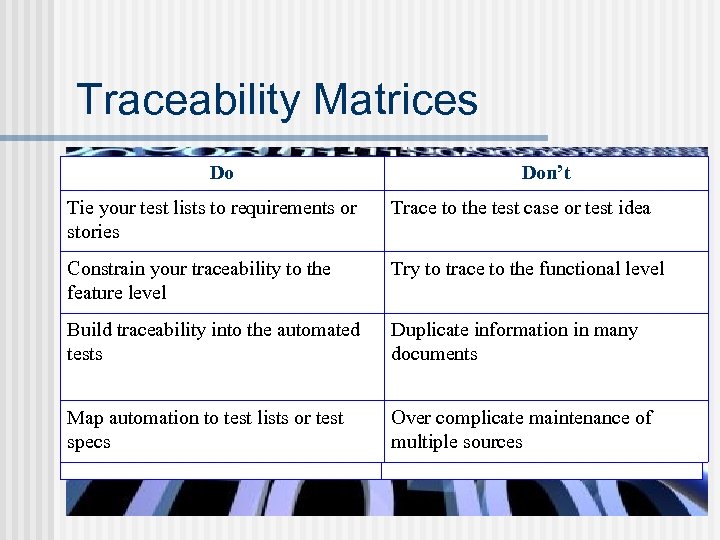

Traceability Matrices
Positives
• Can trace tests to requirement
• Displays what has been executed
• Shows relationship between tests and features
• Provides defect traceability to features
Negatives
• Time consuming to create and maintain
• Often out of date and misleading
• Duplicates information from test cases and requirements
• Dedication to frequent updates needed
Do
• Tie your test lists to requirements or coverage stories
• Constrain your traceability to the feature level
• Build traceability into the automated tests
• Map automation to test lists or test specs
Don’t
• Trace to the test case or test idea
• Try to trace to the functional level
• Duplicate information in many documents
• Over complicate maintenance of multiple sources
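One way to build traceability into the automated tests at the feature level, rather than maintaining a separate matrix, is to have each test declare the feature (and optionally the test list) it covers. The `covers` decorator, the `TRACE` registry and the placeholder checks below are hypothetical; this is a sketch of the idea, not a method prescribed by the slides.

```python
from collections import defaultdict

# feature -> [(automated test name, test list name or None)],
# filled in as the test functions are defined.
TRACE = defaultdict(list)


def covers(feature, test_list=None):
    """Hypothetical decorator: record which feature an automated test covers."""
    def register(func):
        TRACE[feature].append((func.__name__, test_list))
        return func
    return register


@covers("Login", test_list="Login test list")
def check_valid_sign_in():
    assert "user".upper() == "USER"        # placeholder check


@covers("Login")
def check_lockout_message():
    assert len("locked") == 6              # placeholder check


@covers("Export", test_list="Export test list")
def check_csv_header():
    assert "a,b".split(",") == ["a", "b"]  # placeholder check


# The feature-level trace is generated from the tests themselves.
for feature, tests in TRACE.items():
    print(feature, [name for name, _ in tests])
```

Because the mapping lives next to the test code, it cannot drift out of date the way a separately maintained document can.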

Implementation Challenges
Implementation challenges are concentrated in five areas:
1. Management
2. Product Management
3. Engineering Teams
4. Regulated Projects
5. Outsourcing

Challenge: Management
Identify the Challenges / Respond to Challenges:
• Reliance on historical metrics / Provide better metrics
• Understand the current processes and practices / Show new process improves quality
• Decision-making timelines / Prove how decisions can be made faster
• Need traceability to feel confident of data / Review traceability needs and support them

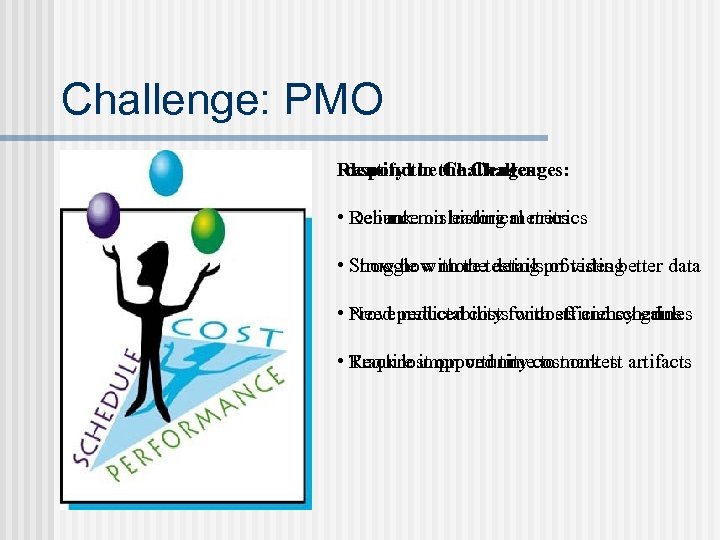

Challenge: PMO
Identify the Challenges / Respond to Challenges:
• Reliance on historical metrics / Debunk misleading metrics
• Struggle with the details of testing / Show more testing provides better data
• Need predictability for costs and schedules / Prove reduced costs with efficiency gains
• Require improved time to market / Track lost opportunity cost on test artifacts

Challenge: Engineering
Identify the Challenges / Respond to Challenges:
• Takes time away from coding / Test first design increases new coding time
• Testing can be tedious / Automated & exploratory tests are less tedious
• Not their specialization / Understanding testing improves code writing
• Rely on a serial approach to testing / Fewer defects will be logged with up-front tests

Challenge: Regulation
Diagram labels (Exploratory to Structured): Full Traceability and Meet all Standards; Defined Test Scenarios; Incorporate Exploratory Testing; Augment Documentation with Test-Lists; Formal Documentation; Rigorous Automation
Identify the Challenges / Respond to Challenges:
• Documentation Requirements / Lean towards more structured testing
• Formal or Standards Approval / Use exploratory but document results
• Full traceability / Plan for and work in shorter iterations
• Rigorous Automation & detailed test results / Automate more, document more

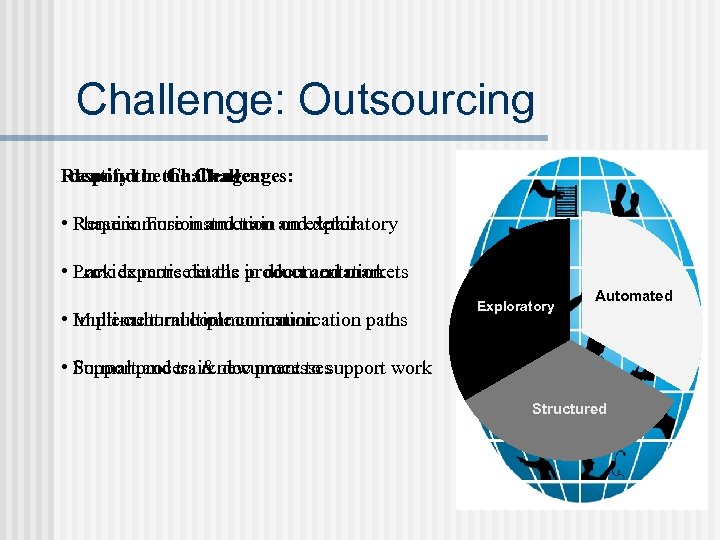

Challenge: Outsourcing
Identify the Challenges / Respond to Challenges:
• Require more instruction and detail / Phase in Fusion and train on exploratory
• Lack expertise in the product and markets / Provide more details in documentation
• Multi-cultural communication / Implement multiple communication paths
• Formal process & document to support work / Support and train new processes
Diagram labels: Exploratory, Automated, Structured

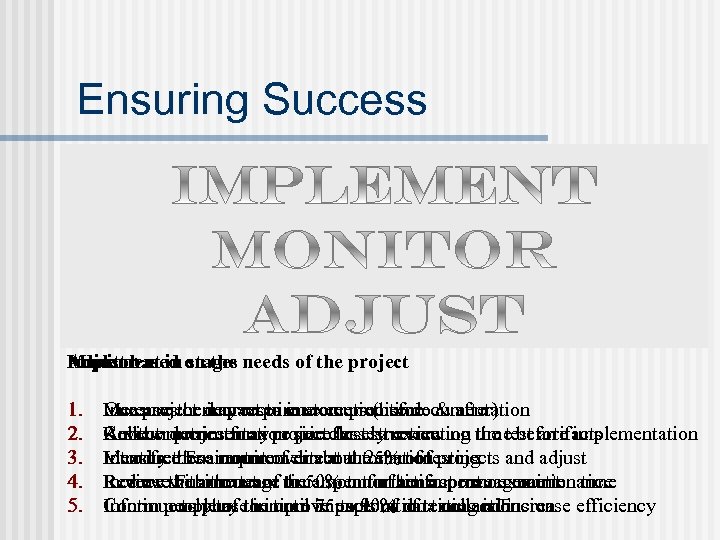

Ensuring Success
Implement in stages
1. Measure the impact to customers (before & after)
2. Collect metrics for a project for test execution time before implementation
3. Introduce Fusion to cover about 25% of testing
4. Increase Fusion usage to 50% to further improve execution time
5. Continue to phase in until 75 to 90% of testing is Fusion
Monitor
• Increases or decreases in execution time
• Review documentation size, closely reviewing the test artifacts
• Measure the amount of direct automation
• Review with the team the amount of time spent on maintenance
• Continuously try to improve exploration time and increase efficiency
Adjust based on the needs of the project
• One project may require more precise documentation
• Another project may require less structure
• Identify these requirements at the start of projects and adjust
• Reduce the amount of time spent on artifact management
• Inform people of the time impacts of data collection
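Monitoring a staged rollout comes down to comparing each stage against the baseline collected before implementation. The sketch below uses invented numbers purely for illustration and only reports the percent change; whether an increase or a decrease counts as an improvement depends on how the team defines execution time.

```python
# Hypothetical measurements: (share of testing done with Fusion,
# hours of test execution per iteration). The first entry is the
# baseline collected before implementation.
stages = [(0.0, 120.0), (0.25, 104.0), (0.50, 90.0), (0.75, 81.0)]


def change_vs_baseline(measurements):
    """Return [(share, percent change in execution time vs. the baseline)]."""
    baseline = measurements[0][1]
    return [
        (share, round((hours - baseline) / baseline * 100, 1))
        for share, hours in measurements[1:]
    ]


for share, pct in change_vs_baseline(stages):
    print(f"{share:.0%} Fusion: {pct:+.1f}% execution time")
```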

Five Keys to Fusion
1. Detail – the right level of detail at the right time
2. Planning – consistently plan, execute and adjust
3. Automate – spend time on specifics in automation
4. Report – measure what truly represents the team and customer
5. POM (Power of Many) – get more people involved to expand the breadth of testing

Conclusion
Thank you to:
• Rick Craig
• James Bach
• Cem Kaner
• Hung Nguyen
• QA&Test
• My family, friends and colleagues who have supported me!
