ef484e08cc258cf82f7672d27c23479b.ppt

- Number of slides: 21

Freddies: DHT-Based Adaptive Query Processing via Federated Eddies
Ryan Huebsch, Shawn Jeffery
CS 294-4 Peer-to-Peer Systems, 12/9/03

Outline
- Background: PIER
- Motivation: Adaptive Query Processing (Eddies)
- Federated Eddies (Freddies)
  - System Model
  - Routing Policies
  - Implementation
- Experimental Results
- Conclusions and Continuing Work

PIER
- Fully decentralized relational query processing engine
- Principles:
  - Relaxed consistency
  - Organic Scaling
  - Data in its Natural Habitat
  - Standard Schemas via Grassroots software
- Relational queries can be executed in a number of logically equivalent ways
  - Optimization step chooses the best performance-wise
  - Currently, PIER has no means to optimize queries

Adaptive Query Processing
- Traditional query optimization occurs at query time and is based on statistics. This is hard because:
  - Catalog (statistics) must be accurate and maintained
  - Cannot recover from poor choices
- The story gets worse!
  - Long-running queries:
    - Changing selectivity/costs of operators
    - Assumptions made at query time may no longer hold
  - Federated/autonomous data sources:
    - No control/knowledge of statistics
  - Heterogeneous data sources:
    - Different arrival rates
- Thus, Adaptive Query Processing systems attempt to change execution order during the query
  - Query Scrambling, Tukwila, Wisconsin, Eddies

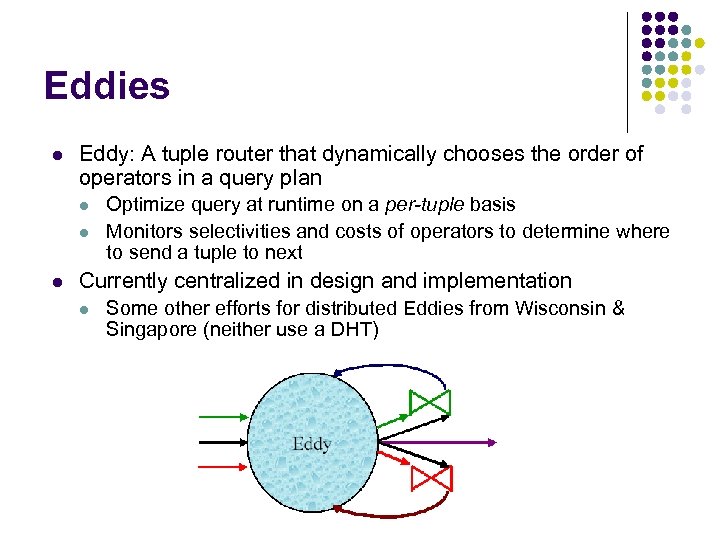

Eddies
- Eddy: A tuple router that dynamically chooses the order of operators in a query plan
  - Optimizes the query at runtime on a per-tuple basis
  - Monitors selectivities and costs of operators to determine where to send a tuple next
- Currently centralized in design and implementation
  - Some other efforts for distributed Eddies from Wisconsin & Singapore (neither uses a DHT)
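To make the per-tuple routing idea concrete, here is a minimal sketch of a centralized eddy over selection operators. It is an illustration under stated assumptions, not the Eddies implementation: the names `Operator` and `eddy` are hypothetical, and the policy used (always try the operator with the lowest observed pass rate first) is a simple greedy stand-in for whatever policy a real eddy uses.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class Operator:
    """Hypothetical selection operator plus the statistics the eddy observes."""
    name: str
    predicate: Callable[[Dict[str, int]], bool]
    seen: int = 0
    passed: int = 0

    def selectivity(self) -> float:
        # Fraction of tuples that survived so far; 1.0 until any data is seen.
        return self.passed / self.seen if self.seen else 1.0

    def apply(self, tup: Dict[str, int]) -> bool:
        self.seen += 1
        ok = self.predicate(tup)
        self.passed += int(ok)
        return ok


def eddy(tuples: List[Dict[str, int]], operators: List[Operator]) -> List[Dict[str, int]]:
    """Route each tuple through all operators, choosing the order per tuple."""
    output = []
    for tup in tuples:
        remaining = list(operators)
        alive = True
        while remaining and alive:
            # Assumed greedy policy: the operator that drops the most tuples goes first.
            op = min(remaining, key=lambda o: o.selectivity())
            remaining.remove(op)
            alive = op.apply(tup)
        if alive:
            output.append(tup)
    return output
```

With one operator that passes a tenth of the tuples and one that passes everything, the eddy quickly learns to run the selective operator first, so the unselective one is invoked only on the few survivors.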

Why use Eddies in P2P? (The easy answers)
- Much of the promise of P2P lies in its fully distributed nature
  - No central point of synchronization, so no central catalog
  - Distributed catalog with statistics helps, but does not solve all problems
    - Possibly stale, hard to maintain
    - Need CAP to do the best optimization
    - No knowledge of available resources or the current state of the system (load, etc.)
  - This is the PIER Philosophy!
- Eddies were designed for a federated query processor
  - Changing operator selectivities and costs
  - Federated/heterogeneous data sources

Why Eddies in P2P? (The not so obvious answers)
- Available compute resources in a P2P network are heterogeneous and dynamically changing
  - Where should the query be processed?
- In a large P2P system, local data distributions, arrival rates, etc. may differ from the global ones

Freddies: Federated Eddies
- A Freddy is an adaptive query processing operator within the PIER framework
- Goals:
  - Show feasibility of adaptive query processing in PIER
  - Build foundation and infrastructure for smarter adaptive query processing
  - Establish baseline for Freddy performance to improve upon with smarter routing policies

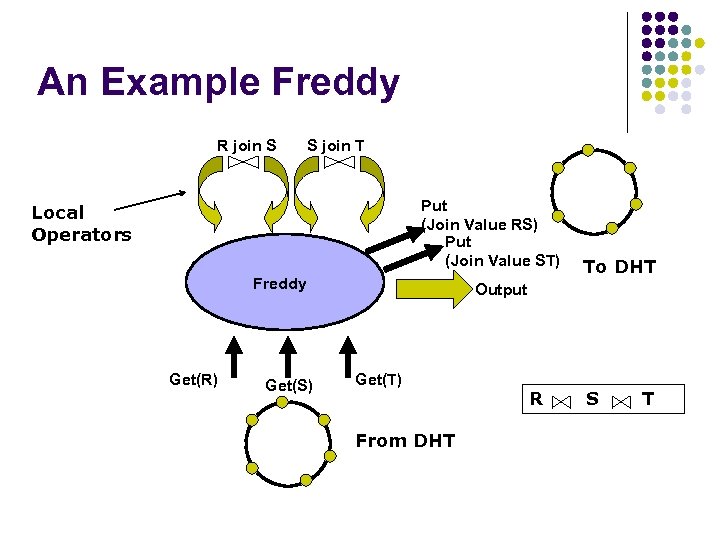

An Example Freddy

[Diagram: a Freddy connected to the local operators R join S and S join T and to Output. To DHT: Put (Join Value RS), Put (Join Value ST). From DHT: Get(R), Get(S), Get(T) over tables R, S, T.]

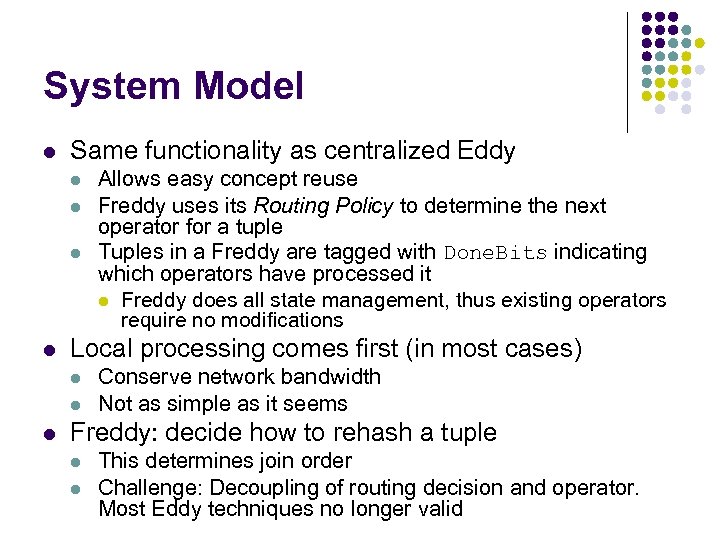

System Model
- Same functionality as centralized Eddy
  - Allows easy concept reuse
  - Freddy uses its Routing Policy to determine the next operator for a tuple
  - Tuples in a Freddy are tagged with DoneBits indicating which operators have processed them
    - Freddy does all state management, thus existing operators require no modifications
- Local processing comes first (in most cases)
  - Conserve network bandwidth
  - Not as simple as it seems
- Freddy: decide how to rehash a tuple
  - This determines join order
  - Challenge: Decoupling of routing decision and operator. Most Eddy techniques are no longer valid

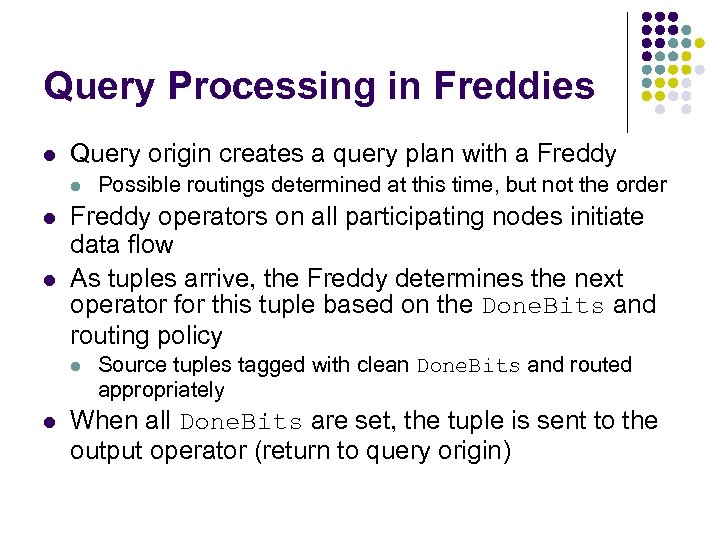

Query Processing in Freddies
- Query origin creates a query plan with a Freddy
  - Possible routings determined at this time, but not the order
- Freddy operators on all participating nodes initiate data flow
  - Source tuples tagged with clean DoneBits and routed appropriately
- As tuples arrive, the Freddy determines the next operator for each tuple based on the DoneBits and routing policy
- When all DoneBits are set, the tuple is sent to the output operator (return to query origin)
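The done-bit bookkeeping above can be sketched as a bitmask per tuple. This is a minimal illustration, not PIER code: `TaggedTuple`, `eligible`, `route`, and `mark_done` are hypothetical names, and the routing policy is assumed to be any callable that picks one index from the list of operators that have not yet seen the tuple.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional


@dataclass
class TaggedTuple:
    """A tuple plus its done bits; bit i set means operator i has processed it."""
    data: dict = field(default_factory=dict)
    done_bits: int = 0  # source tuples start "clean"


def eligible(t: TaggedTuple, n_ops: int) -> List[int]:
    """Indices of operators that have not yet processed this tuple."""
    return [i for i in range(n_ops) if not t.done_bits & (1 << i)]


def route(t: TaggedTuple, n_ops: int,
          policy: Callable[[List[int]], int]) -> Optional[int]:
    """Return the next operator index, or None when the tuple goes to output."""
    choices = eligible(t, n_ops)
    if not choices:
        return None  # all done bits set: send to the output operator
    return policy(choices)


def mark_done(t: TaggedTuple, op_index: int) -> None:
    """Record that operator op_index has processed the tuple."""
    t.done_bits |= 1 << op_index
```

Because all state lives in the tag and in the router, the operators themselves never need to know that they are being driven adaptively, which matches the point that existing operators require no modifications.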

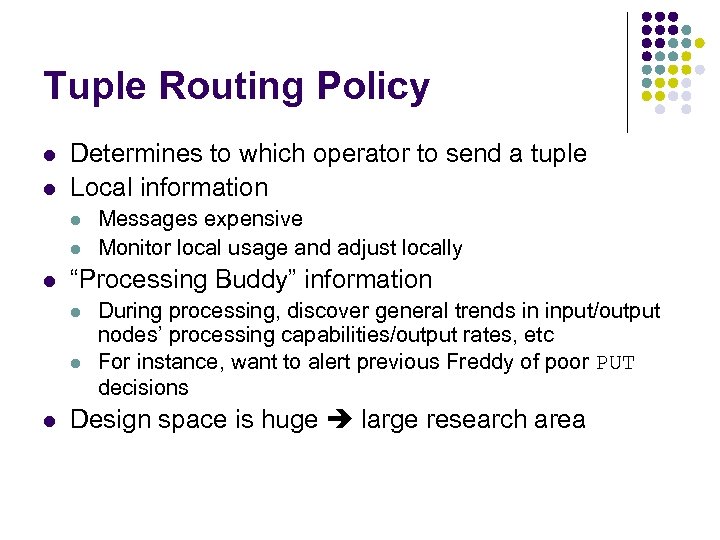

Tuple Routing Policy
- Determines to which operator to send a tuple
- Local information
  - Messages are expensive
  - Monitor local usage and adjust locally
- "Processing Buddy" information
  - During processing, discover general trends in input/output nodes' processing capabilities, output rates, etc.
  - For instance, want to alert the previous Freddy of poor PUT decisions
- Design space is huge, so this is a large research area

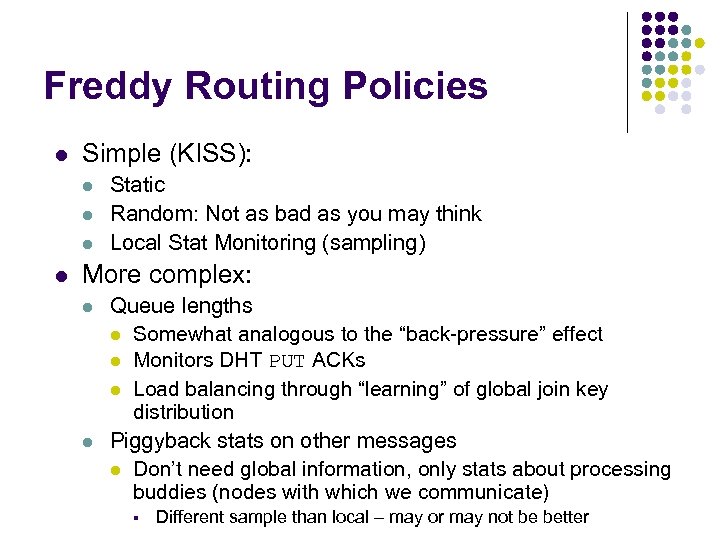

Freddy Routing Policies
- Simple (KISS):
  - Static
  - Random: Not as bad as you may think
  - Local Stat Monitoring (sampling)
- More complex:
  - Queue lengths
    - Somewhat analogous to the "back-pressure" effect
    - Monitors DHT PUT ACKs
    - Load balancing through "learning" of global join key distribution
  - Piggyback stats on other messages
    - Don't need global information, only stats about processing buddies (nodes with which we communicate)
      - Different sample than local; may or may not be better
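As a rough sketch of how three of these policies could differ in code, the classes below share a hypothetical `choose(choices)` interface. None of this is the PIER implementation: the class names are invented for illustration, and the queue-length policy simply assumes that "queue length" means the count of PUTs sent toward an operator that have not yet been acknowledged.

```python
import random
from collections import Counter
from typing import List, Optional


class StaticPolicy:
    """Always prefers operators in one fixed order (a static plan)."""

    def __init__(self, order: List[str]):
        self.order = order

    def choose(self, choices: List[str]) -> str:
        # Raises StopIteration if no eligible operator appears in the order.
        return next(op for op in self.order if op in choices)


class RandomPolicy:
    """Picks uniformly at random among the eligible operators."""

    def __init__(self, seed: Optional[int] = None):
        self.rng = random.Random(seed)

    def choose(self, choices: List[str]) -> str:
        return self.rng.choice(choices)


class QueueLengthPolicy:
    """Prefers the operator with the fewest outstanding (un-ACKed) PUTs."""

    def __init__(self) -> None:
        self.outstanding: Counter = Counter()

    def choose(self, choices: List[str]) -> str:
        op = min(choices, key=lambda o: self.outstanding[o])
        self.outstanding[op] += 1  # a PUT toward this operator is now in flight
        return op

    def ack(self, op: str) -> None:
        # Called when the DHT acknowledges a PUT for this operator.
        if self.outstanding[op] > 0:
            self.outstanding[op] -= 1
```

The queue-length variant needs no global statistics: slow or overloaded destinations acknowledge late, their counters stay high, and traffic drifts toward the alternatives, which is the back-pressure analogy.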

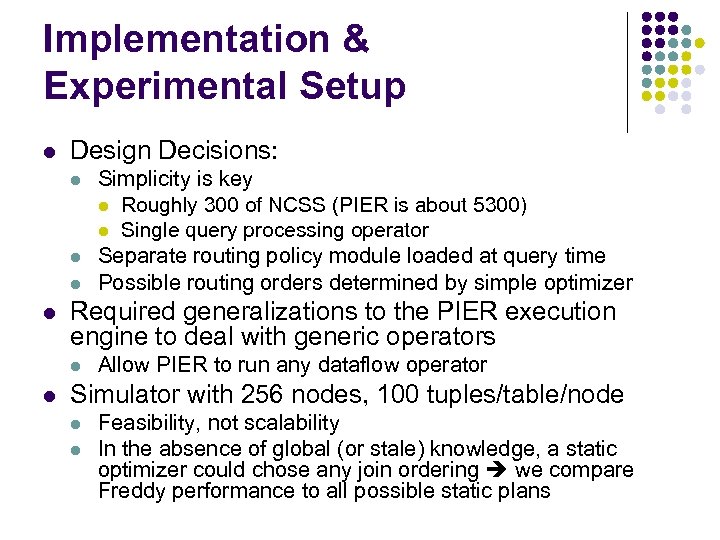

Implementation & Experimental Setup
- Design Decisions:
  - Simplicity is key
    - Roughly 300 NCSS (PIER is about 5300)
  - Single query processing operator
  - Separate routing policy module loaded at query time
  - Possible routing orders determined by simple optimizer
- Required generalizations to the PIER execution engine to deal with generic operators
  - Allow PIER to run any dataflow operator
- Simulator with 256 nodes, 100 tuples/table/node
  - Feasibility, not scalability
- With no global knowledge (or only stale knowledge), a static optimizer could choose any join ordering, so we compare Freddy performance to all possible static plans

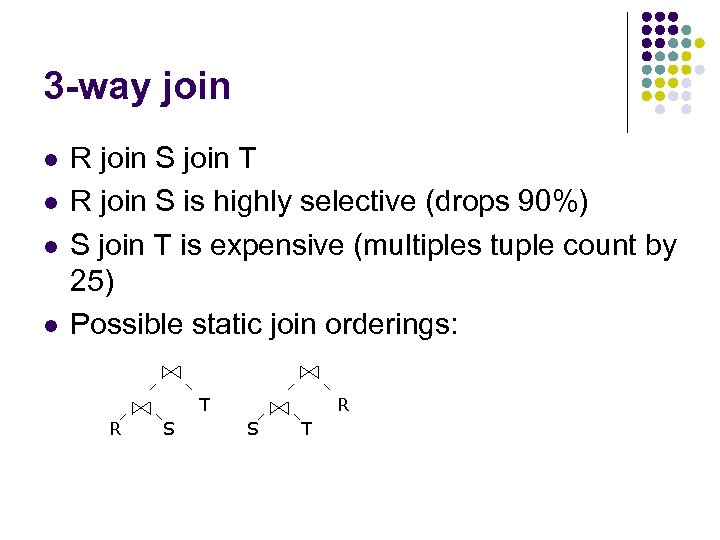

3-way join
- R join S join T
- R join S is highly selective (drops 90%)
- S join T is expensive (multiplies tuple count by 25)
- Possible static join orderings: [join-tree diagrams; extracted leaf labels: T, R, S, T]
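A back-of-the-envelope calculation shows why the ordering matters here. The sketch below is an assumption-laden illustration, not a reproduction of the experiment: it treats the two figures on the slide as multiplicative factors on a single stream of tuples, and it takes 256 × 100 = 25,600 tuples (the simulator's per-table size) as the input. The helper name `flow_sizes` is hypothetical.

```python
from fractions import Fraction
from typing import List

RS_FACTOR = Fraction(1, 10)  # "drops 90%": one tuple in ten survives R join S
ST_FACTOR = Fraction(25)     # "multiplies tuple count by 25" for S join T


def flow_sizes(input_tuples: int, factors: List[Fraction]) -> List[Fraction]:
    """Tuple count after each join, applying the factors in the given order."""
    sizes = []
    current = Fraction(input_tuples)
    for factor in factors:
        current *= factor
        sizes.append(current)
    return sizes


N = 256 * 100  # assumed input: 256 nodes x 100 tuples per table per node

rs_first = flow_sizes(N, [RS_FACTOR, ST_FACTOR])  # (R join S) first
st_first = flow_sizes(N, [ST_FACTOR, RS_FACTOR])  # (S join T) first
```

Under these assumptions both orders end with the same 64,000 result tuples, but the intermediate result is 2,560 tuples when R join S runs first versus 640,000 when S join T runs first, a 250-fold difference in tuples that must be rehashed through the DHT.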

3-Way Join Results

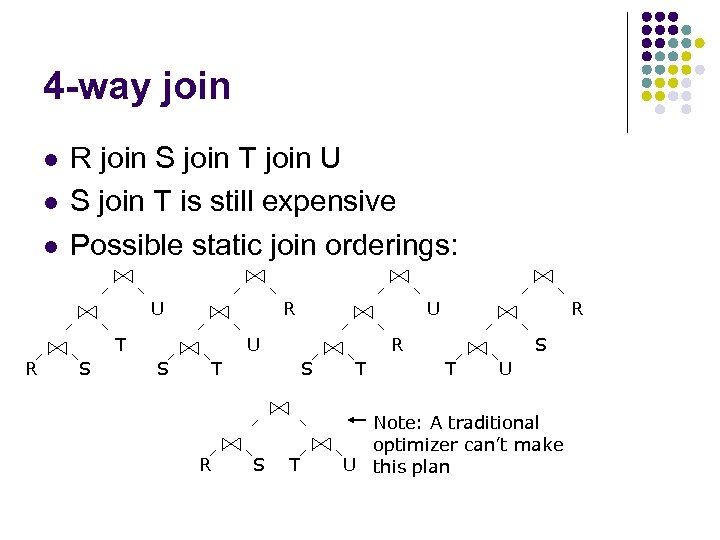

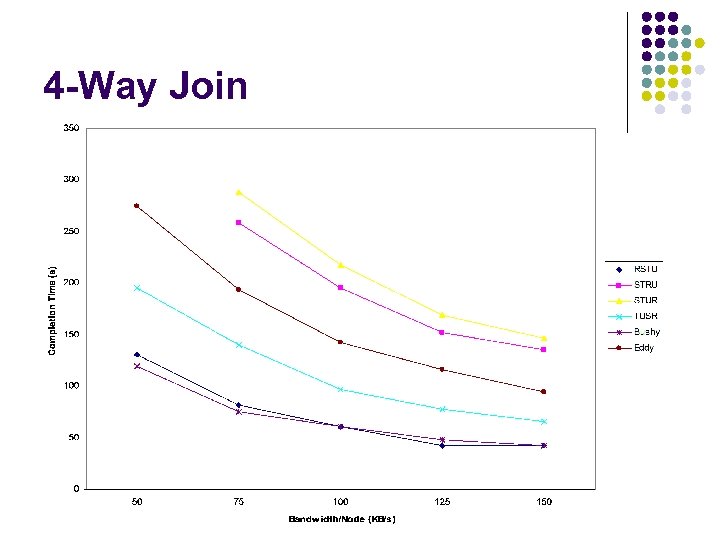

4-way join
- R join S join T join U
- S join T is still expensive
- Possible static join orderings: [join-tree diagrams; extracted leaf labels: U R T R S U U S R T R S S R T T S T U U]
- Note: A traditional optimizer can't make this plan

4-Way Join Results

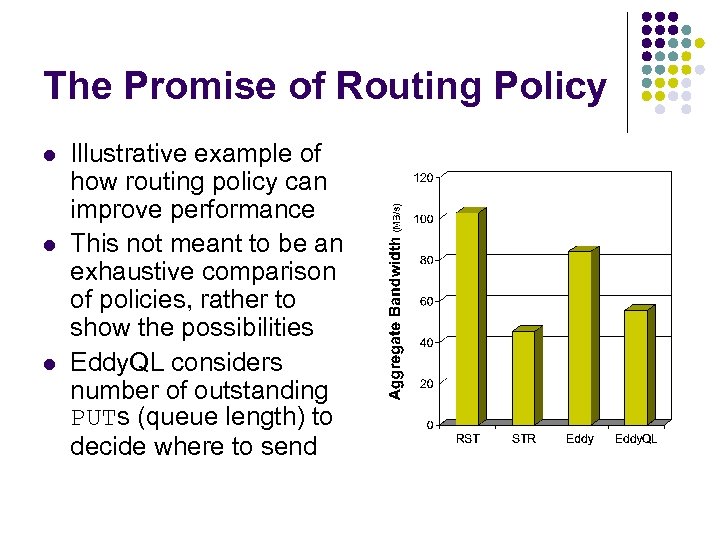

The Promise of Routing Policy
- Illustrative example of how routing policy can improve performance
- This is not meant to be an exhaustive comparison of policies, but rather to show the possibilities
- EddyQL considers the number of outstanding PUTs (queue length) to decide where to send a tuple

Conclusions and Continuing Work
- Freddies provide adaptable query processing in a P2P system
  - Require no global knowledge
  - Baseline performance shows promise for smarter policies
- In the future…
  - Explore Freddy performance in a dynamic environment
  - Explore more complex routing policies

Questions? Comments? Snide remarks for Ryan? Glorious praise for Shawn? Thanks!
