5a9eb01a1ce2d04da12bf985a279f279.ppt

- Number of slides: 48

Four Pillars of Success: Significance, Cost Benefits, Treatment Fidelity, and Public Policy
2008 REAP Conference, Santa Fe, New Mexico, March 19, 2008
Michael Gass, Ph.D., LMFT, University of New Hampshire

Apologies to the other forms of researchers/“house subcontractors”

Who is affected by these four pillars in the adventure field?
• Violence prevention
• Drug prevention and treatment
• Delinquency prevention and treatment
• Education programs - academic & social
• Youth Development
• Mental Health programs
• Employment & Welfare
• Child & Family services
• International development
• Adolescent Pregnancy prevention
• Healthy aging programs
• Developmental disabilities

“Evidence” behind the programming of my first “youth development” job
Our House Inc. - 1979
Greeley, Colorado
Group Home #2

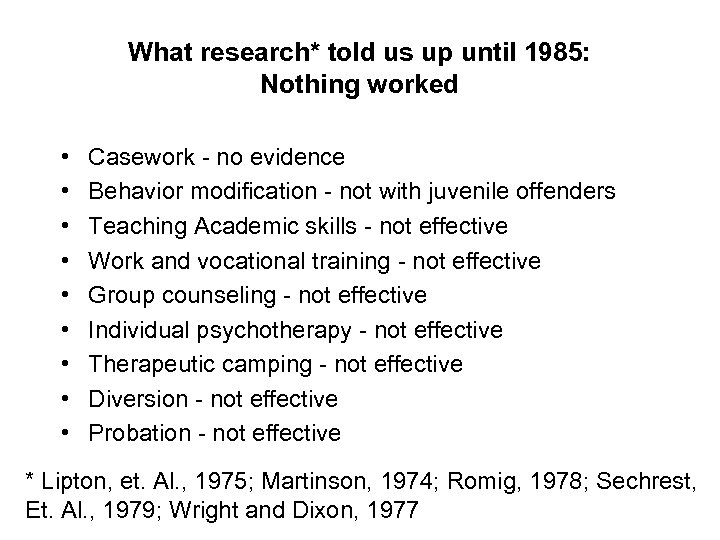

What research* told us up until 1985: Nothing worked
• Casework - no evidence
• Behavior modification - not with juvenile offenders
• Teaching academic skills - not effective
• Work and vocational training - not effective
• Group counseling - not effective
• Individual psychotherapy - not effective
• Therapeutic camping - not effective
• Diversion - not effective
• Probation - not effective
* Lipton et al., 1975; Martinson, 1974; Romig, 1978; Sechrest et al., 1979; Wright and Dixon, 1977

Pre-EBP youth era: Tail ‘em, Nail ‘em and Jail ‘em
• Incarceration until they were 18
• Clay Yeager - Burger King story

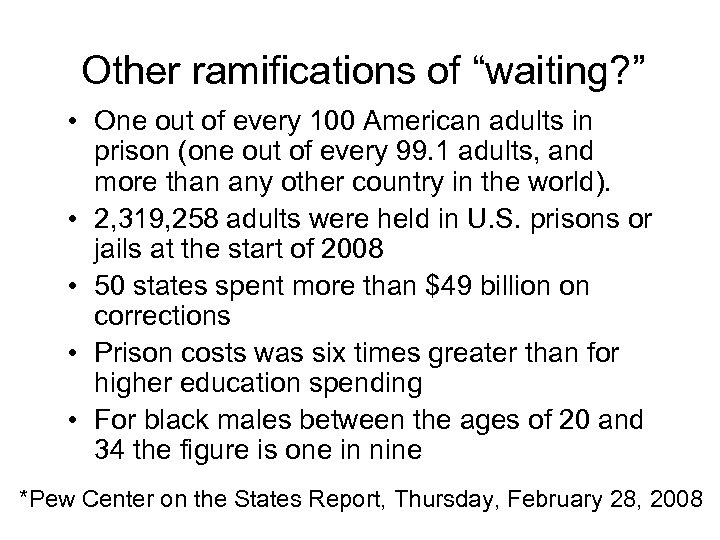

Other ramifications of “waiting”?
• One out of every 100 American adults is in prison (one out of every 99.1 adults, more than in any other country in the world).
• 2,319,258 adults were held in U.S. prisons or jails at the start of 2008
• The 50 states spent more than $49 billion on corrections
• The rate of increase for prison costs was six times greater than for higher education spending
• For black males between the ages of 20 and 34 the figure is one in nine
*Pew Center on the States Report, Thursday, February 28, 2008
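As a quick sanity check on the incarceration figures above, the sketch below works out the adult population that the two Pew numbers imply together. The variable names are illustrative only; the inputs are the two figures quoted on the slide, and nothing else is taken from the report.

```python
# Figures quoted on the slide (Pew Center on the States, 2008).
adults_in_custody = 2_319_258   # adults held in U.S. prisons or jails
adults_per_inmate = 99.1        # "one out of every 99.1 adults"

# Adult population implied by combining the two figures.
implied_adult_population = adults_in_custody * adults_per_inmate

# The same ratio expressed as a share of all adults.
incarceration_rate = 1 / adults_per_inmate

print(f"Implied adult population: {implied_adult_population / 1e6:.1f} million")
print(f"Share of adults in custody: {incarceration_rate:.2%}")
```

The two numbers are consistent with an adult population of roughly 230 million, which is why "one in 100" is a fair rounding of "one in 99.1".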

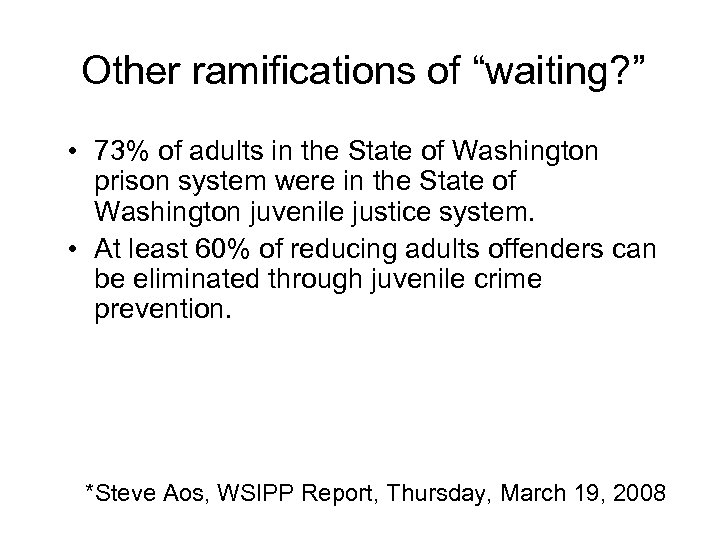

Other ramifications of “waiting”?
• 73% of adults in the State of Washington prison system had been in the State of Washington juvenile justice system.
• At least 60% of adult offending could be eliminated through juvenile crime prevention.
*Steve Aos, WSIPP Report, March 19, 2008

When did research “evidence” start to tell us something different?
• According to the OJJDP, Conrad & Hedin (1981) were among the first researchers to demonstrate the beneficial impact of positive youth development (see JEE).
• They demonstrated that “something different” than punitive measures worked
• Combined with positive psychology “sciences”
*http://www.dsgonline.com/mpg2.5/leadership_development_prevention.htm

JEE Conrad & Hedin article
• 4000 adolescents in 30 experiential education programs
• Six programs with comparison groups
• Increased differences in personal and social development, moral reasoning, self-esteem, attitudes toward community service and involvement.
• Elements to Mac Hall and Project Venture

What is significant?
• p < .05!
• YOU JUST SAVED $540 ON YOUR PROPERTY TAXES!
• YOU HAVE A TREATMENT MANUAL AND TRAINING PROGRAM THAT INFORMS ALL STAFF HOW TO WORK EFFECTIVELY WITH CLIENTS!
• YOUR PROGRAM IS LISTED AS A MODEL PROGRAM BY A FEDERAL AGENCY, ENABLING YOU TO RECEIVE FEDERAL FUNDING FOR PROGRAMMING AND TRAINING!

“Choice of Drug” paradigm: What do you choose?
• A drug backed by scientifically based evidence of effectiveness with proven results, or a drug that has shown no effectiveness?
• A drug that costs $400 or one that costs $1000?
• A drug that is the same no matter where you take it or who gives it to you, or one that does/may change with administration?
• A drug that has achieved approval from the American Medical Association and the Food and Drug Administration, or one that has not?

“Choice of Drug” paradigm: You choose…
• One with documented, unbiased evidence, with multiple tests done by different researchers
• One that is cost effective (and you can afford)
• One with fidelity, one that does not change with who administers it to you.
• One that is approved by the most highly regarded overseeing organizations.
• This “medical paradigm” is where the understanding of what is meant by “significant” begins.

Report card on what is significant for the “framing roof builders”
• Experimental design
• Evidence-based research evaluation
• Provides case studies or clinical samples
• Benefit-cost analysis
• Results reporting
• Training models
• Power of research design
• Proper instrumentation

Report card on what is significant for the “framing roof builders” (continued)
• Cultural variability
• Treatment/intervention fidelity
• Background literature support
• Replication
• Length of treatment effectiveness assessed

Progress for interested “framing roof builders” (and others)
• Rubric created for these 13 factors: http://www.shhs.unh.edu/kin_oe/Gass_(2007)_EBP_Rubric.doc
• Literature reviews with rubric analysis for:
- Adventure therapy (Young)
- K-12 educational settings (Shirilla)
- Wilderness programs (Beightol)
- Higher education programs (Fitch)
http://www.shhs.unh.edu/kin_oe/bibliographies.html

NATSAP Research and Evaluation Network: A Web-Based Practice Research Network and Archival Database
Michael Gass, Ph.D., Chair, Dept. of Kinesiology, University of New Hampshire, NATSAP Research Coordinator
Michael Young, M.Ed., Graduate Assistant, University of New Hampshire, NATSAP Research Coordinator

The NATSAP Research and Evaluation network
• Provide an affordable data collection tool for all NATSAP programs to use
• Create a research database that could be used to improve NATSAP program practices, especially EBP
• Attract the interest of other researchers in appropriately using a NATSAP research database.

The NATSAP Research and Evaluation network
Practice Research Network:
• Establish comparative benchmarking opportunities by establishing aggregate scores
• Build the “n” by having access to multiple program sites
Web-based Protocol:
• Research Coordinators, Program Staff, and Study Participants, including consent forms and de-identified assessments (OQ and ASEBA), through a web-site

The Measures:
• The database will rely on two “groups” of survey measures:
– 1) the Outcome Questionnaires and
– 2) Achenbach measures.
• Both are “gold standards” and are widely used in the industry.
• It is recommended that programs use both instruments for data collection, but it is possible to use only one.

• www.oqmeasures.com
• Used to track therapeutic progress of clients:
– Y-OQ is a parent-reported measure of a wide range of behaviors, situations, and moods which apply to troubled teenagers.
– SR Y-OQ is the adolescent self-report version
• Scales: Intrapersonal Distress, Somatic, Interpersonal Relations, Critical Items, Social Problems, Behavioral Dysfunction
• Aggregate Scale: Total Score

• One of the most widely used measures in child psychology
• About 110 items, < 10 minutes to complete
• Scales: Withdrawn/Depressed, Anxious/Depressed, Somatic Complaints, Social Problems, Attention Problems, Thought Problems, Aggression, Rule-Breaking Behaviors
• Aggregate Scales: Internalizing, Externalizing, Total Problems
• Reliability:
– Test-retest: 0.95 to 1.00
– Inter-rater reliability: 0.93 to 0.96
– Internal consistency: 0.78 to 0.97

• www.carepaths.com
• Supports the whole protocol
• Allows for addition of other forms (e.g., demographics, case-mix, other standardized assessments)
• Helps with e-mail reminders
• Provides additional “modules” (e.g., clinical reports for individual clients) if programs are interested

• De-identified aggregate data will be downloaded periodically to a UNH server
• This is where the archival database will sit and be accessible

For more info, contact:
Michael Gass, mgass@unh.edu, 603-862-2024
Michael Young, michael.young@unh.edu, 603-862-2007

Evidence means more than outcomes: cost-effectiveness measures (e.g., taxes)
• With programs that work,
• can you show a “bottom line” net gain?
• & deliver consistent, quality programs?
• Dr. Steve Aos, WSIPP http://www.wsipp.wa.gov/default.asp

Effects on other approaches/programs
Search for the actual “truth” or “outcomes” of well-designed and effective programs
• David Barlow (APA) (2004) landmark article:
– In the 1990s, large amounts of money were invested, with little supporting evidence, in programs addressing youth and adult violence that simply didn’t work.
– In some cases these intervention programs created more harm than no program at all.

Samples of well-known, ineffective programs
• The 1990s saw the emergence of ineffective but popular programs
• (1) Gun Buyback programs (two-thirds of the guns turned in did not work, and almost all of the people turning in guns had another gun at home)
• (2) Bootcamp programs (failed to produce any difference in juvenile recidivism rates compared with standard probation programs, but were four times as expensive)

Ineffective Programs continued
• (3) DARE programs - the traditional 5th grade program failed to decrease drug use, despite the fact that by 1998 the program was used in 48% of American schools with an annual budget of over $700 million (Greenwood, 2006).
• (4) Scared Straight programs - inculcated youth more directly into a criminal lifestyle, actually leading to increases in crime by participating youth, and required $203 in corrective programming to address and undo every dollar originally spent on programming.

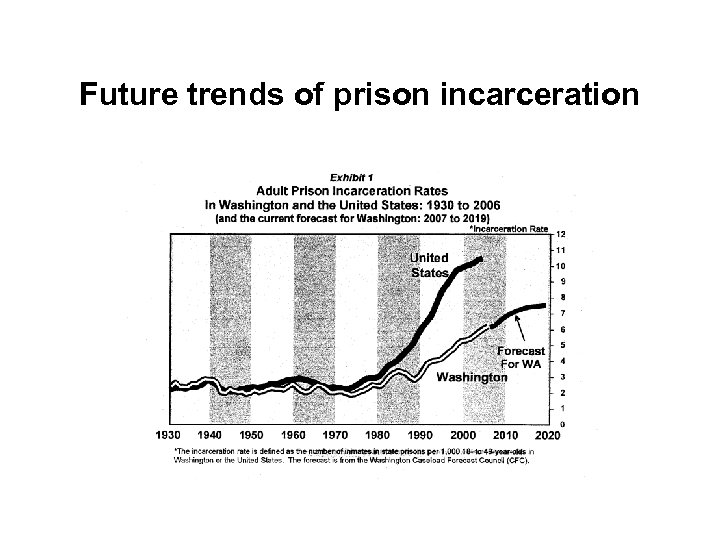

Future trends of prison incarceration

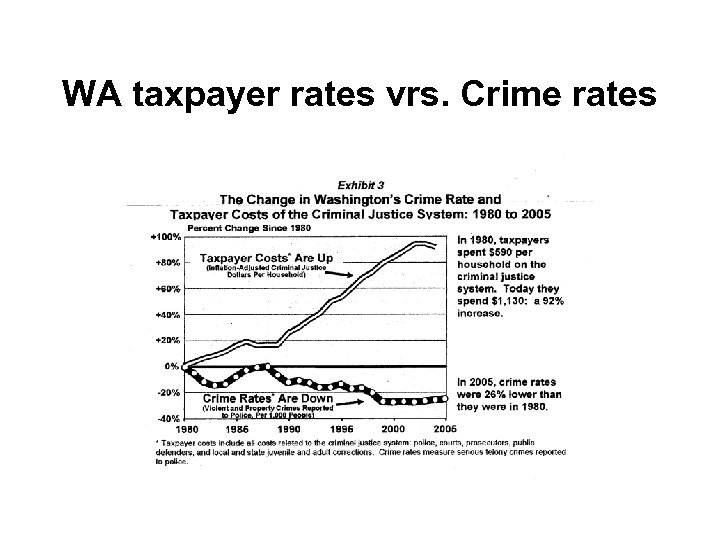

WA taxpayer rates vs. crime rates

Treatment Fidelity Experience
Stage 1 - Produce an acceptable model of a machine that would fly
Any different from how the Our House, Inc. program was started? From how most adventure programs are begun?

Treatment Fidelity Experience Stage 2 - Produce an acceptable model of a machine that would fly from the following model

Treatment Fidelity Experience Stage 3 - Produce an acceptable model of a machine that would fly from the following manualized version Know that you need to adhere to these guidelines accounting for some programmatic resources that fit within the program rationale

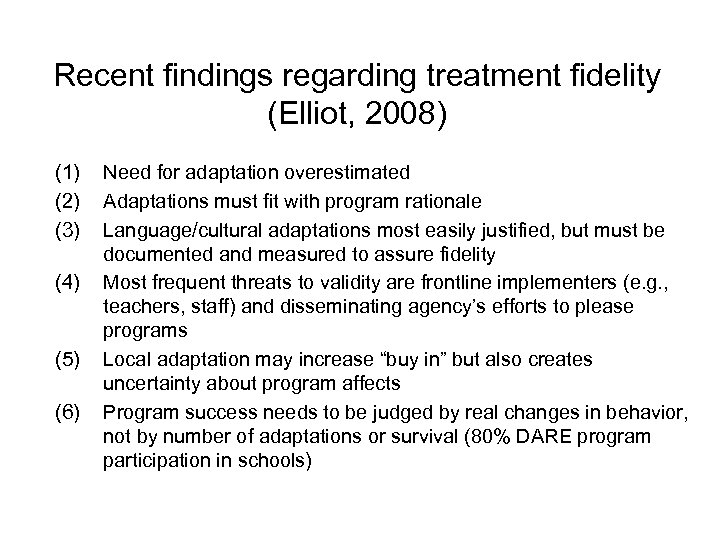

Recent findings regarding treatment fidelity (Elliot, 2008)
(1) Need for adaptation overestimated
(2) Adaptations must fit with program rationale
(3) Language/cultural adaptations most easily justified, but must be documented and measured to assure fidelity
(4) Most frequent threats to validity are frontline implementers (e.g., teachers, staff) and disseminating agency’s efforts to please programs
(5) Local adaptation may increase “buy in” but also creates uncertainty about program effects
(6) Program success needs to be judged by real changes in behavior, not by number of adaptations or survival (80% DARE program participation in schools)

Public Policy Welcome to Aleta Meyer and NIDA

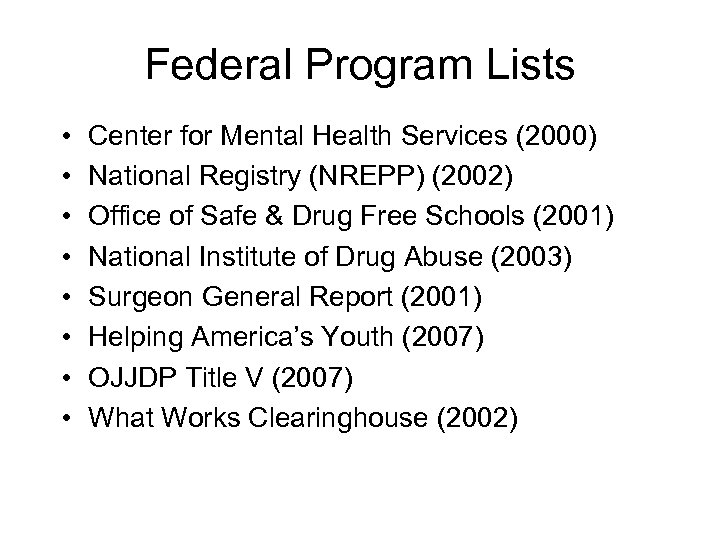

Federal Program Lists
• Center for Mental Health Services (2000)
• National Registry (NREPP) (2002)
• Office of Safe & Drug Free Schools (2001)
• National Institute on Drug Abuse (2003)
• Surgeon General Report (2001)
• Helping America’s Youth (2007)
• OJJDP Title V (2007)
• What Works Clearinghouse (2002)

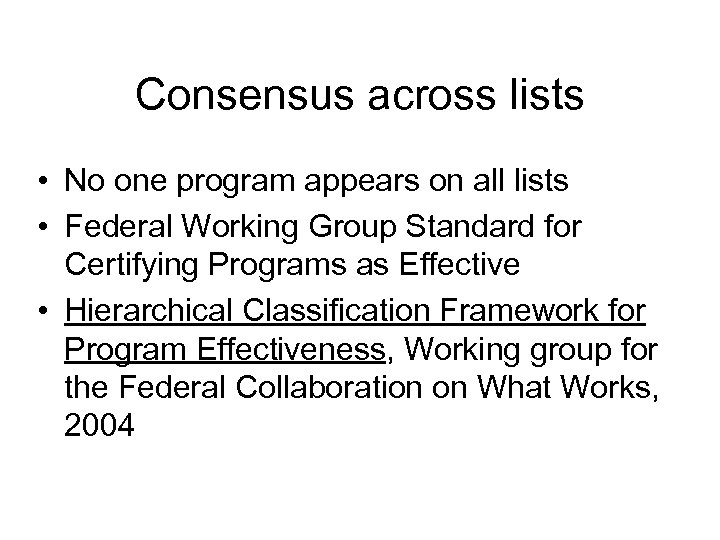

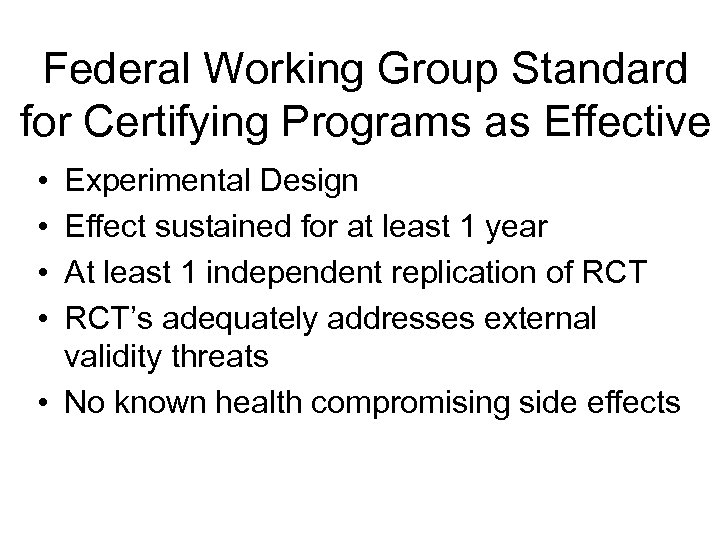

Consensus across lists • No one program appears on all lists • Federal Working Group Standard for Certifying Programs as Effective • Hierarchical Classification Framework for Program Effectiveness, Working group for the Federal Collaboration on What Works, 2004

Federal Working Group Standard for Certifying Programs as Effective
• Experimental design
• Effect sustained for at least 1 year
• At least 1 independent replication of RCTs adequately addresses external validity threats
• No known health-compromising side effects

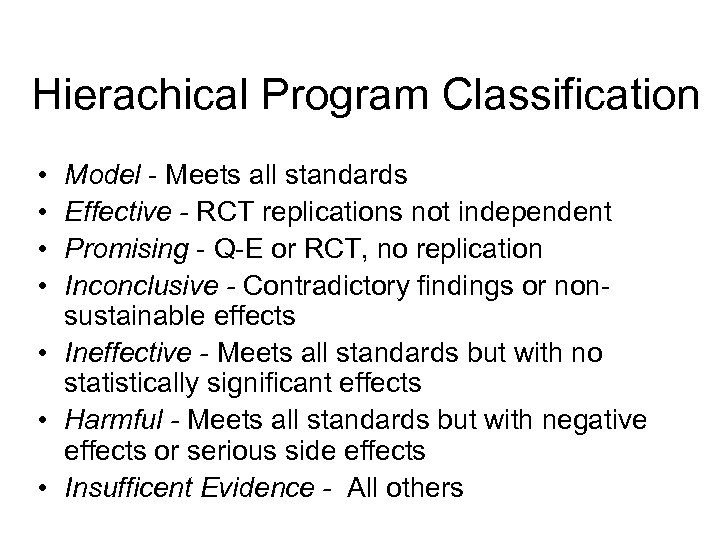

Hierarchical Program Classification
• Model - Meets all standards
• Effective - RCT replications not independent
• Promising - Q-E or RCT, no replication
• Inconclusive - Contradictory findings or nonsustainable effects
• Ineffective - Meets all standards but with no statistically significant effects
• Harmful - Meets all standards but with negative effects or serious side effects
• Insufficient Evidence - All others
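The classification above reads like a decision rule, so a minimal sketch of it as code may help programs see where they would land. Everything here is an assumption made for illustration: the `Evidence` fields, the `classify` function, the order in which the categories are checked, and the reading of "meets all standards" as "RCT with independent replication". It is not the Federal Working Group's own procedure.

```python
from dataclasses import dataclass


@dataclass
class Evidence:
    """Hypothetical summary of a program's research record."""
    design: str                   # "rct", "qe" (quasi-experimental), or "other"
    replication: str              # "none", "dependent", or "independent"
    sustained_one_year: bool      # effect held for at least 1 year
    contradictory_findings: bool  # studies disagree with each other
    significant_effect: bool      # statistically significant positive effect
    harmful_effect: bool          # negative effects or serious side effects


def classify(e: Evidence) -> str:
    """Map an evidence record to one of the seven categories (assumed ordering)."""
    # Assumption: "meets all standards" means an RCT with independent replication.
    rigorous = e.design == "rct" and e.replication == "independent"
    if rigorous and e.harmful_effect:
        return "Harmful"
    if rigorous and not e.significant_effect:
        return "Ineffective"
    if e.contradictory_findings or (e.significant_effect and not e.sustained_one_year):
        return "Inconclusive"
    if not e.significant_effect or e.harmful_effect:
        return "Insufficient Evidence"
    if rigorous:
        return "Model"
    if e.design == "rct" and e.replication == "dependent":
        return "Effective"
    if e.design in ("rct", "qe") and e.replication == "none":
        return "Promising"
    return "Insufficient Evidence"


# Example: a single quasi-experimental study with a lasting positive effect.
single_qe_study = Evidence("qe", "none", True, False, True, False)
print(classify(single_qe_study))
```

Under these assumptions, a program with one quasi-experimental study and no replication lands in "Promising", which is the tier many adventure programs would need to move beyond to reach the federal lists.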

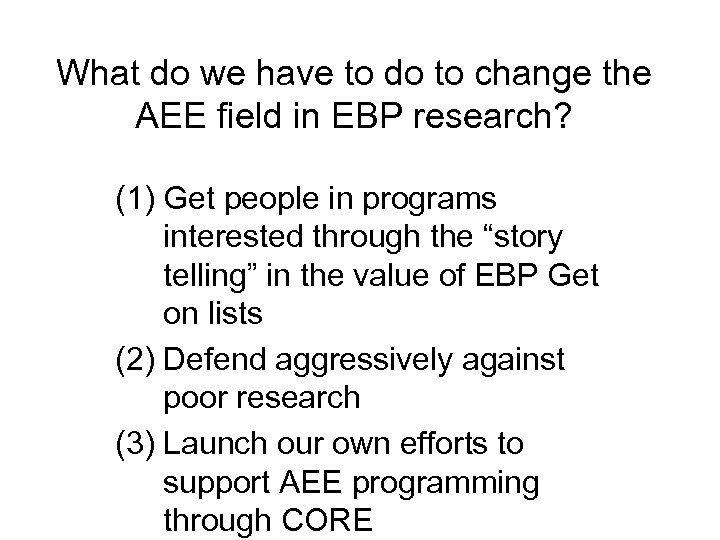

What do we have to do to change the AEE field in EBP research?
(1) Get people in programs interested through “storytelling” about the value of EBP
(2) Get on lists
(3) Defend aggressively against poor research
(4) Launch our own efforts to support AEE programming through CORE

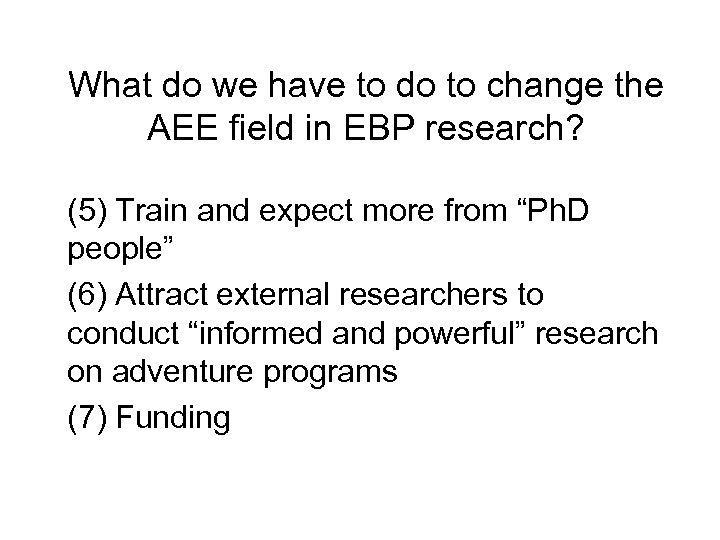

What do we have to do to change the AEE field in EBP research?
(5) Train and expect more from “Ph.D. people”
(6) Attract external researchers to conduct “informed and powerful” research on adventure programs
(7) Funding

What do we have to do to change the NATSAP field in EBP research?
(8) Make advances “outside” of our field
- APA journal articles
- Other conferences
- Be involved in “decision maker” conversations

What do we have to do to change the NATSAP field in EBP research?
(9) Create “teams of success”
- researchers (knowledge)
- funders (resources)
- programmers (access to populations)
(10) Current efforts follow-up

What stage of “buy in” for EBR are you in?
• Awareness stage – don’t know what it is, unaware of the benefits, or the controls dictated by EBP
• Decision-making stage – weigh pros and cons, but remain vague about actually making changes or choosing for the pro side
• Preparation stage – make a decision to implement this process, generated by a “value added” approach of sorts from a desire to have a more effective program or financial reasons
• Action stage – partner support structure in place to aid continuation

Questions? Thanks!
Michael Gass
NH Hall, 124 Main St., UNH
Durham, NH 03824
mgass@unh.edu
(603) 862-2024
