468d04a69f4771310af568431ecde975.ppt

- Number of slides: 31

Forschungszentrum Karlsruhe in der Helmholtz-Gemeinschaft. The German Tier-1, LHCC Review, 19/20-Nov-2007, stream B, part 2. Holger Marten, Forschungszentrum Karlsruhe GmbH, Institute for Scientific Computing (IWR), Postfach 3640, D-76021 Karlsruhe

0. Content

1. GridKa location & organization (skipped in the talk, but included in the slides)
2. Resources and networks
3. Mass storage & SRM
4. Grid Services
5. Reliability & 24 x 7 operations
6. Plans for 2008

LHCC Review, November 19-20, 2007

2. Resources and networks

![Current Resources in Production](https://present5.com/presentation/468d04a69f4771310af568431ecde975/image-4.jpg)

Current Resources in Production

| | CPU [kSI2k] | Disk [TB] | Tape [TB] |
|---|---|---|---|
| LCG | 1864 (55%) | 878 | 1007 |
| non-LCG HEP | 1270 (37%) | 443 | 585 |
| others | 264 (8%) | 60 | 120 |

October 2007 accounting (example):
- CPUs provided through fair share
- 1.6 million hours of wall time by 300k jobs on 2514 CPU cores
- 55% LCG, 45% non-LCG HEP
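
The percentages in the table and the October accounting line can be cross-checked with a little arithmetic. This is a minimal sketch, assuming (the slide does not say so) that all 2514 cores were in service for all 744 hours of October. Under that assumption the jobs used roughly 85% of the available core-hours at about 5.3 h of wall time per job, and the kSI2k figures reproduce the 55/37/8% split of the table. The function names are illustrative only.

```python
# Cross-check of the October 2007 accounting figures and CPU shares.
# Assumption (not on the slide): all 2514 cores were usable for the whole month.
CORES = 2514
HOURS_IN_OCTOBER = 31 * 24   # 744 h
WALL_HOURS = 1.6e6           # "1.6 million hours of wall time"
JOBS = 300_000               # "300k jobs"

# CPU capacity per user group in kSI2k, taken from the resource table
CPU_KSI2K = {"LCG": 1864, "non-LCG HEP": 1270, "others": 264}


def utilisation(wall_hours, cores, hours):
    """Fraction of the available core-hours consumed by jobs."""
    return wall_hours / (cores * hours)


def shares(table):
    """Percentage of the total capacity held by each user group."""
    total = sum(table.values())
    return {group: 100.0 * value / total for group, value in table.items()}


if __name__ == "__main__":
    # prints "utilisation: 85.5%"
    print(f"utilisation: {utilisation(WALL_HOURS, CORES, HOURS_IN_OCTOBER):.1%}")
    # prints "mean wall time per job: 5.3 h"
    print(f"mean wall time per job: {WALL_HOURS / JOBS:.1f} h")
    for group, pct in shares(CPU_KSI2K).items():
        print(f"{group}: {pct:.0f}%")   # LCG: 55%, non-LCG HEP: 37%, others: 8%
```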

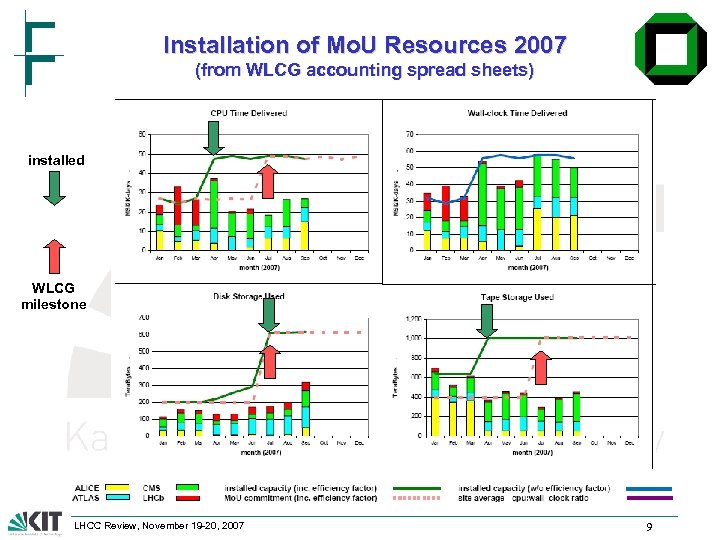

Installation of MoU Resources 2007 (from WLCG accounting spreadsheets); chart series: installed vs. WLCG milestone

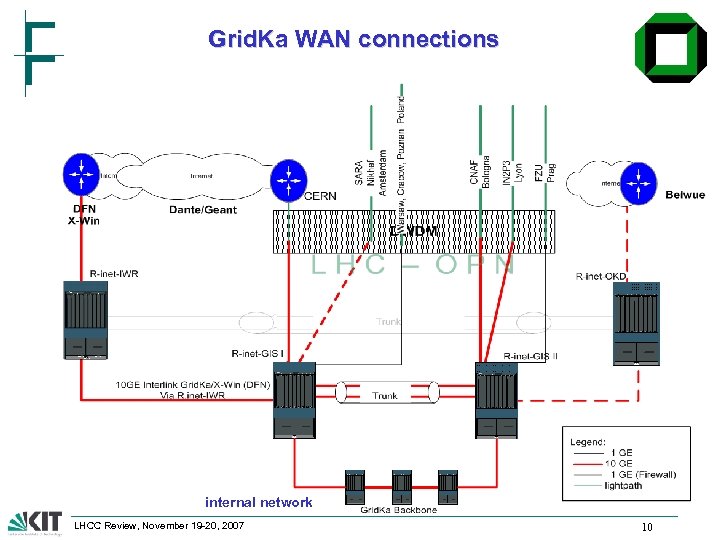

GridKa WAN connections (diagram; label: internal network)

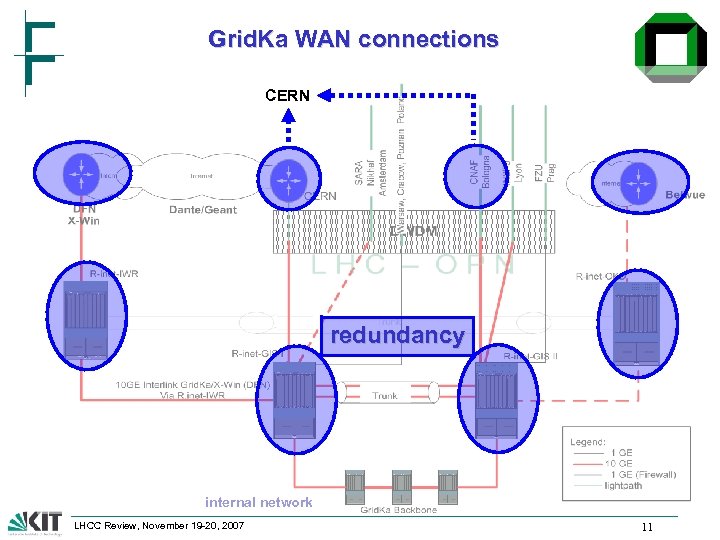

GridKa WAN connections: CERN redundancy (diagram; label: internal network)

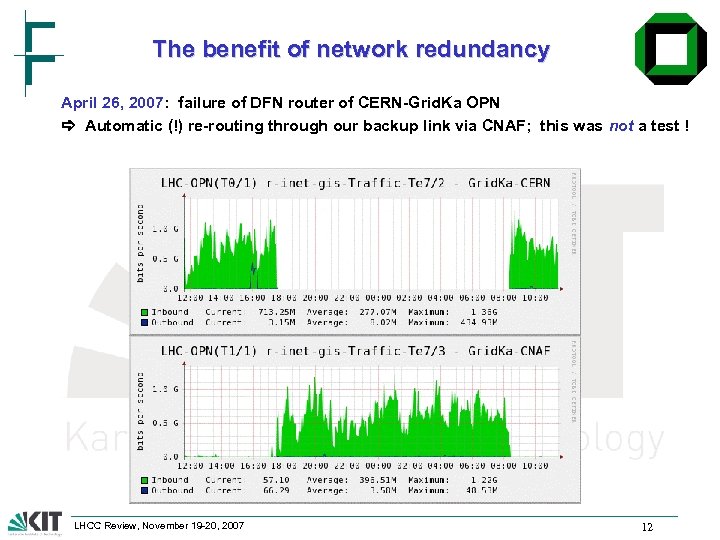

The benefit of network redundancy: on April 26, 2007, the DFN router on the CERN-GridKa OPN link failed. Traffic was automatically (!) re-routed through our backup link via CNAF; this was not a test!
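
The failover described above happened automatically in the network. Conceptually, traffic follows the most preferred route that is still up. The sketch below only illustrates that idea. The route names and cost values are hypothetical, and the slide does not say which routing protocol or metrics are actually used on the OPN.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Route:
    name: str
    cost: int         # illustrative preference metric: lower is preferred
    up: bool = True   # link/router state as seen by the routing layer


def select_route(routes: list[Route]) -> Route | None:
    """Return the cheapest route that is currently up, or None if all are down."""
    candidates = [r for r in routes if r.up]
    return min(candidates, key=lambda r: r.cost) if candidates else None


if __name__ == "__main__":
    routes = [
        Route("CERN-GridKa OPN (primary)", cost=10),
        Route("backup via CNAF", cost=100),
    ]
    print(select_route(routes).name)  # prints "CERN-GridKa OPN (primary)"
    routes[0].up = False              # e.g. a router failure on the primary path
    print(select_route(routes).name)  # prints "backup via CNAF"
```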

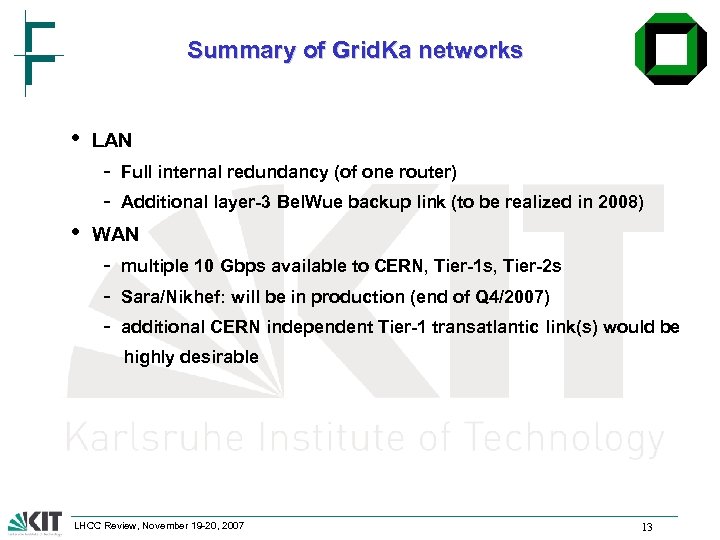

Summary of GridKa networks
- LAN
  - full internal redundancy (of one router)
  - additional layer-3 BelWü backup link (to be realized in 2008)
- WAN
  - multiple 10 Gbps links available to CERN, Tier-1s and Tier-2s
  - SARA/Nikhef: will be in production by the end of Q4/2007
  - additional CERN-independent Tier-1 transatlantic link(s) would be highly desirable

3. Mass storage & SRM

dCache & MSS at GridKa
- Long-standing instabilities with the SRM and gridFTP implementation
  - reduced availability because SAM critical tests fail; many patches since
- Dual effort for complex and labour-intensive software (data management)
  - running the unstable dCache SRM in production
  - running the next SRM 2.2 release in pre-production
  - in the end, SRM 2.2 was tested formally with F. Donno's S2 test suite, but only to a very limited extent by the experiments
- Disk-only storage (T0D1) is an administrative difficulty
  - full disks imply stopping the experiments' work => experiments ask for "temporary ad-hoc" conversions into T1D1
  - no failover or maintenance (reboot) is possible, otherwise jobs will crash
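
The TxDy labels are commonly read as "x tape copies, y disk copies that must stay online" (the WLCG SRM 2.2 storage classes). Under that reading a full T0D1 pool holds nothing that can be evicted without losing data, which is why a full disk stops the experiments' work. Below is a minimal sketch with hypothetical class and function names (this is not a dCache API); T1D0 is included only for contrast.

```python
from __future__ import annotations

import re
from dataclasses import dataclass


@dataclass(frozen=True)
class StorageClass:
    tape_copies: int   # x in TxDy
    disk_copies: int   # y in TxDy: disk copy guaranteed to stay online

    @classmethod
    def parse(cls, label: str) -> StorageClass:
        m = re.fullmatch(r"T(\d)D(\d)", label.strip().upper())
        if m is None:
            raise ValueError(f"not a TxDy storage class: {label!r}")
        return cls(int(m.group(1)), int(m.group(2)))

    def evictable_from_disk(self, migrated_to_tape: bool) -> bool:
        """Can the disk copy be dropped to free space without losing data?"""
        return self.tape_copies > 0 and self.disk_copies == 0 and migrated_to_tape


def reclaimable_bytes(files: list[tuple[str, int, bool]]) -> int:
    """Sum the sizes of files whose disk copy may be dropped.

    Each entry is (storage_class_label, size_in_bytes, migrated_to_tape).
    """
    return sum(size for label, size, on_tape in files
               if StorageClass.parse(label).evictable_from_disk(on_tape))


if __name__ == "__main__":
    files = [("T0D1", 10, False), ("T1D1", 20, True),
             ("T1D0", 30, True), ("T1D0", 40, False)]
    print(reclaimable_bytes(files))  # prints 30: only the migrated T1D0 file
```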

dCache & MSS at GridKa
- Migrated to dCache 1.8 with SRM 2.2 on Nov 6/7
  - very fruitful collaboration with dCache/SRM developers in situ
  - bug fix for globus-url-copy in combination with space reservation "on-the-fly" during the migration process
  - => many thanks to Timur Perelmutov and Tigran Mkrtchyan for their support
- Stability has to be verified during the coming months.
- Connection to tape (MSS) is fully functional and scalable for writes
  - read tests by the experiments have only started recently
  - it is difficult to estimate the tape resources needed to reach the required read throughput
  - workgroup with local experiment representatives to provide access patterns, tape classes and recall optimisation proposals
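
One common recall-optimisation strategy, shown here only as an illustration and not as the workgroup's actual proposal, is to batch pending requests per cartridge and read each tape in on-tape order, so that a tape is mounted once instead of once per file. The request fields (cartridge label, file position) and all names are hypothetical.

```python
from __future__ import annotations

from collections import defaultdict
from typing import NamedTuple


class Recall(NamedTuple):
    path: str
    tape: str       # cartridge label (hypothetical)
    position: int   # file sequence number on the cartridge (hypothetical)


def fifo_mounts(requests: list[Recall]) -> int:
    """Mounts needed if requests are served strictly in arrival order."""
    count, current = 0, None
    for req in requests:
        if req.tape != current:
            count, current = count + 1, req.tape
    return count


def plan_recalls(requests: list[Recall]) -> list[tuple[str, list[str]]]:
    """Batch requests per cartridge and read each tape front to back.

    Returns one (tape, [paths...]) entry per cartridge, i.e. one mount each.
    Tapes with the most requested files are served first (one possible policy).
    """
    by_tape: dict[str, list[Recall]] = defaultdict(list)
    for req in requests:
        by_tape[req.tape].append(req)
    ordered_tapes = sorted(by_tape, key=lambda t: (-len(by_tape[t]), t))
    plan = []
    for tape in ordered_tapes:
        files = sorted(by_tape[tape], key=lambda req: req.position)
        plan.append((tape, [req.path for req in files]))
    return plan


if __name__ == "__main__":
    reqs = [Recall("/a", "T02", 7), Recall("/b", "T01", 3), Recall("/c", "T02", 1),
            Recall("/d", "T01", 9), Recall("/e", "T02", 4)]
    print(fifo_mounts(reqs), len(plan_recalls(reqs)))  # prints "5 2"
```

For five requests alternating between two cartridges, serving them in arrival order needs five mounts, while the batched plan needs two.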

4. Grid Services

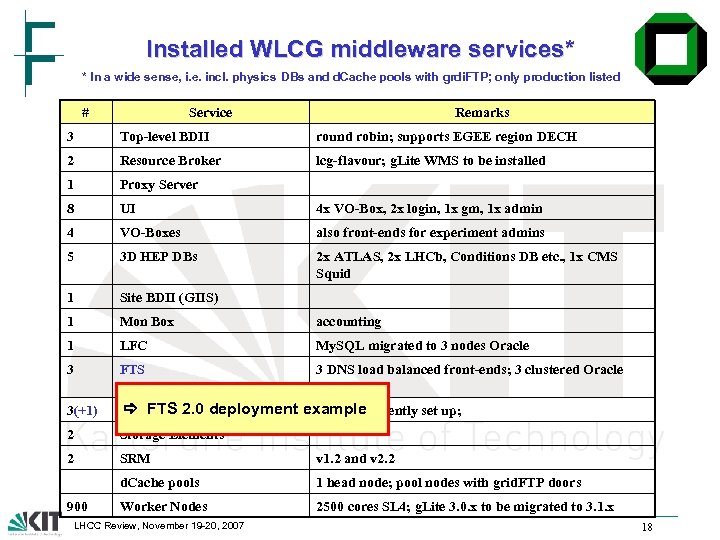

Installed WLCG middleware services*

| # | Service | Remarks |
|---|---|---|
| 3 | Top-level BDII | round robin; supports EGEE region DECH |
| 2 | Resource Broker | lcg flavour; gLite WMS to be installed |
| 1 | Proxy Server | |
| 8 | UI | 4 x VO-Box, 2 x login, 1 x gm, 1 x admin |
| 4 | VO-Boxes | also front-ends for experiment admins |
| 5 | 3D HEP DBs | 2 x ATLAS, 2 x LHCb, Conditions DB etc., 1 x CMS Squid |
| 1 | Site BDII (GIIS) | |
| 1 | Mon Box | accounting |
| 1 | LFC | MySQL migrated to 3-node Oracle |
| 3 | FTS | 3 DNS load-balanced front-ends; 3 clustered Oracle back-ends (FTS 2.0 deployment example) |
| 3(+1) | Compute Elements | 4th CE currently being set up |
| 2 | Storage Elements | SRM v1.2 and v2.2 |
| | dCache pools | 1 head node; pool nodes with gridFTP doors |
| 900 | Worker Nodes | 2500 cores; SL4; gLite 3.0.x to be migrated to 3.1.x |

\* In a wide sense, i.e. incl. physics DBs and dCache pools with gridFTP; only production services are listed.

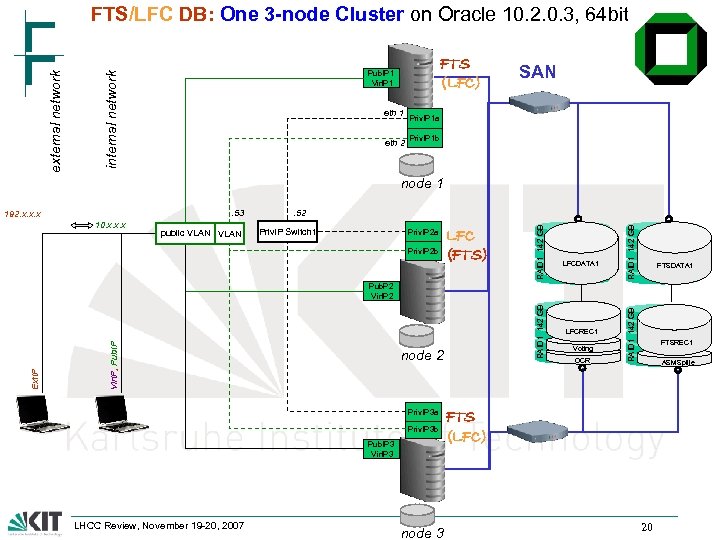

![FTS 2.0 [+LFC] deployment at GridKa](https://present5.com/presentation/468d04a69f4771310af568431ecde975/image-15.jpg)

FTS 2.0 [+LFC] deployment at GridKa: setup to ensure high availability
- Three nodes host the web services.
- VO and channel agents are distributed over the three nodes.
- The nodes are located in 2 different cabinets so that at least one node keeps working in case of a cabinet power failure or a network switch failure.
- 3-node RAC on Oracle 10.2.0.3, 64 bit
  - the RAC will be shared with the LFC database: two nodes preferred for FTS, one node preferred for LFC
  - distributed over several cabinets
  - mirrored disks in the SAN
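
The cabinet rule above can be checked mechanically for any node placement. This is a minimal sketch, assuming a simple mapping of node name to cabinet (the node and cabinet names are hypothetical). The same check works for network switches if nodes are mapped to switches instead of cabinets.

```python
from __future__ import annotations


def survives_single_failure(placement: dict[str, str], min_alive: int = 1) -> bool:
    """placement maps node name -> failure domain (e.g. cabinet or switch).

    Returns True if losing any single domain still leaves at least
    `min_alive` nodes running.
    """
    for failed in set(placement.values()):
        alive = [node for node, domain in placement.items() if domain != failed]
        if len(alive) < min_alive:
            return False
    return True


if __name__ == "__main__":
    two_cabinets = {"fts1": "cab-A", "fts2": "cab-A", "fts3": "cab-B"}
    one_cabinet = {"fts1": "cab-A", "fts2": "cab-A", "fts3": "cab-A"}
    print(survives_single_failure(two_cabinets))  # prints True
    print(survives_single_failure(one_cabinet))   # prints False
```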

Diagram: FTS/LFC DB, one 3-node cluster on Oracle 10.2.0.3, 64 bit. Nodes 1 and 2 serve FTS (LFC), node 3 serves LFC (FTS); each node has a public IP and a virtual IP on the external network (public VLAN) and two private IPs (eth1, eth2) on the internal network via a private-IP switch. Shared SAN storage on RAID 1 volumes (142 GB) holds FTSDATA1, FTSREC1, LFCDATA1, LFCREC1, the voting disk, the OCR and the ASM spfile.

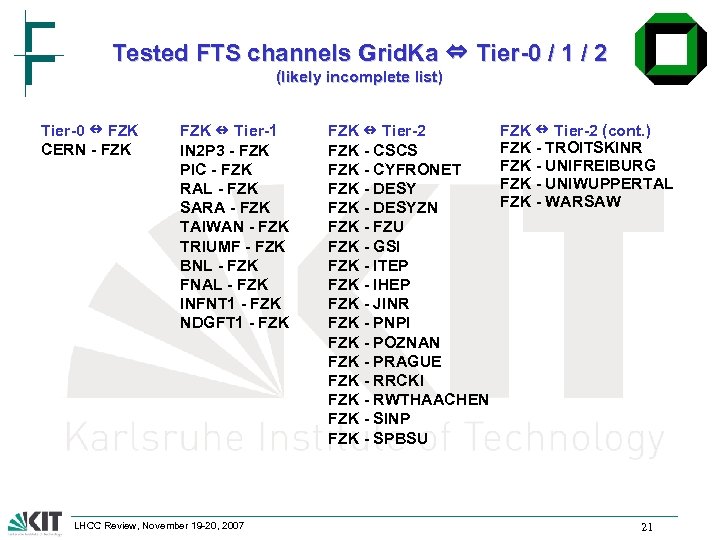

Tested FTS channels GridKa ⇔ Tier-0 / 1 / 2 (likely incomplete list)
- Tier-0: CERN-FZK
- Tier-1: IN2P3-FZK, PIC-FZK, RAL-FZK, SARA-FZK, TAIWAN-FZK, TRIUMF-FZK, BNL-FZK, FNAL-FZK, INFNT1-FZK, NDGFT1-FZK
- Tier-2: FZK-CSCS, FZK-CYFRONET, FZK-DESYZN, FZK-FZU, FZK-GSI, FZK-ITEP, FZK-IHEP, FZK-JINR, FZK-PNPI, FZK-POZNAN, FZK-PRAGUE, FZK-RRCKI, FZK-RWTHAACHEN, FZK-SINP, FZK-SPBSU, FZK-TROITSKINR, FZK-UNIFREIBURG, FZK-UNIWUPPERTAL, FZK-WARSAW

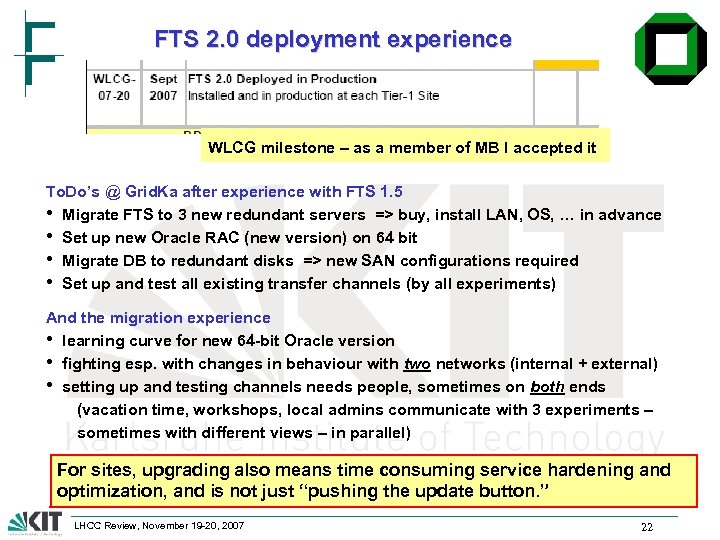

FTS 2.0 deployment experience
- WLCG milestone: as a member of the MB, I accepted it
- To-dos @ GridKa after the experience with FTS 1.5
  - migrate FTS to 3 new redundant servers => buy, install LAN, OS, … in advance
  - set up a new Oracle RAC (new version) on 64 bit
  - migrate the DB to redundant disks => new SAN configurations required
  - set up and test all existing transfer channels (by all experiments)
- And the migration experience
  - learning curve for the new 64-bit Oracle version
  - fighting especially with changes in behaviour with two networks (internal + external)
  - setting up and testing channels needs people, sometimes on both ends (vacation time, workshops; local admins communicate in parallel with 3 experiments, sometimes with different views)
- For sites, upgrading also means time-consuming service hardening and optimization; it is not just "pushing the update button."

5. Reliability & 24 x 7 operations

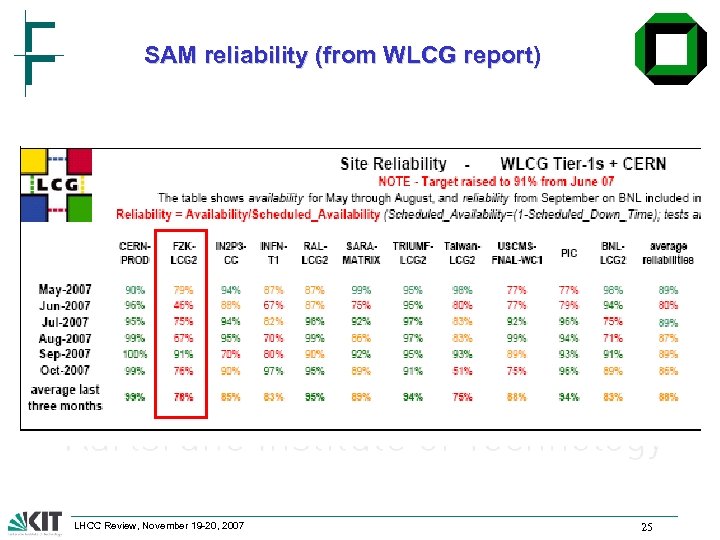

SAM reliability (from WLCG report)

SAM reliability
- Some examples with zero severity for the experiments
  - configuration changes of local or central services that result in failures for the OPS VO only
    - missing rpm 'lcg-version' in the new WN distribution
    - SAM tested CA certificates that had already become officially obsolete
- More severe examples
  - purely local hardware / software failures (redundancy required…)
  - scalability of services after resource upgrades or during heavy load
  - stability of "MSS-related" software pieces (SRM, gridFTP)
- Overall, a very complex hierarchy of dependencies
  - transient scalability and stability issues in particular are difficult to analyse
  - but this is necessary: analyse + fix instead of reboot! (sometimes at the expense of availability, though)
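
Availability and reliability differ in how scheduled downtime is treated: availability counts all time slots, while reliability, as commonly defined in WLCG reports, leaves scheduled downtime out of the denominator. This is a minimal sketch, assuming one status per time slot with the hypothetical labels 'up', 'down' (unscheduled) and 'sched' (scheduled downtime); SAM's "unknown" state is not modelled.

```python
from __future__ import annotations

from collections import Counter


def availability_and_reliability(samples: list[str]) -> tuple[float, float]:
    """samples: one status per time slot: 'up', 'down' or 'sched'.

    availability = up / all slots
    reliability  = up / (all slots - scheduled downtime slots)
    """
    counts = Counter(samples)
    unknown = set(counts) - {"up", "down", "sched"}
    if unknown:
        raise ValueError(f"unexpected status values: {unknown}")
    if not samples:
        raise ValueError("no samples")
    availability = counts["up"] / len(samples)
    denominator = len(samples) - counts["sched"]
    reliability = counts["up"] / denominator if denominator else float("nan")
    return availability, reliability


if __name__ == "__main__":
    # 24 hourly slots: 20 up, 2 h unscheduled outage, 2 h announced maintenance
    day = ["up"] * 20 + ["down"] * 2 + ["sched"] * 2
    a, r = availability_and_reliability(day)
    print(f"{a:.3f} {r:.3f}")  # prints "0.833 0.909"
```

Every extra hour spent analysing an unscheduled fault instead of rebooting is counted as "down" in both figures, which is the trade-off the slide mentions.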

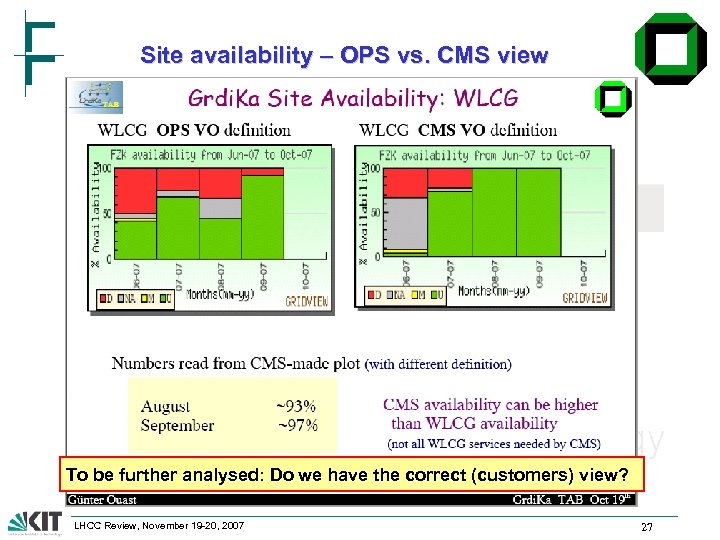

Site availability: OPS vs. CMS view. To be further analysed: do we have the correct (customer's) view?
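
Different VOs can see different availabilities for the same site because each VO counts a time slot as "up" only if the tests it considers critical have passed. This is a minimal sketch of that effect. The test names and the critical sets are hypothetical and are not the actual SAM test lists; a missing test result is treated as a failure (also an assumption).

```python
from __future__ import annotations


def vo_availability(results: list[dict[str, bool]], critical: set[str]) -> float:
    """results: per time slot, test name -> passed?

    A slot counts as 'up' for a VO only if every test in its critical set
    passed; a missing result counts as a failure.
    """
    if not results:
        raise ValueError("no time slots")
    up = sum(all(slot.get(test, False) for test in critical) for slot in results)
    return up / len(results)


# Hypothetical critical-test sets, for illustration only
OPS_CRITICAL = {"ce-job-submit", "ca-certs", "srm-put"}
CMS_CRITICAL = {"ce-job-submit", "srm-put"}

SLOTS = [
    {"ce-job-submit": True, "ca-certs": True, "srm-put": True},
    {"ce-job-submit": True, "ca-certs": False, "srm-put": True},  # e.g. obsolete CA flagged
    {"ce-job-submit": True, "ca-certs": True, "srm-put": False},  # storage problem
    {"ce-job-submit": True, "ca-certs": True, "srm-put": True},
]

if __name__ == "__main__":
    print(vo_availability(SLOTS, OPS_CRITICAL))  # prints 0.5
    print(vo_availability(SLOTS, CMS_CRITICAL))  # prints 0.75
```

A failure of a test that only one VO treats as critical lowers that VO's figure alone, which is one possible source of the OPS/CMS discrepancy.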

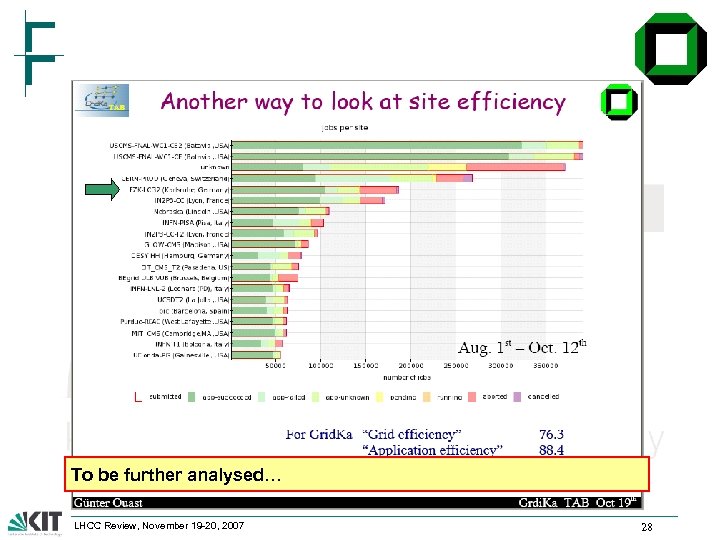

To be further analysed…

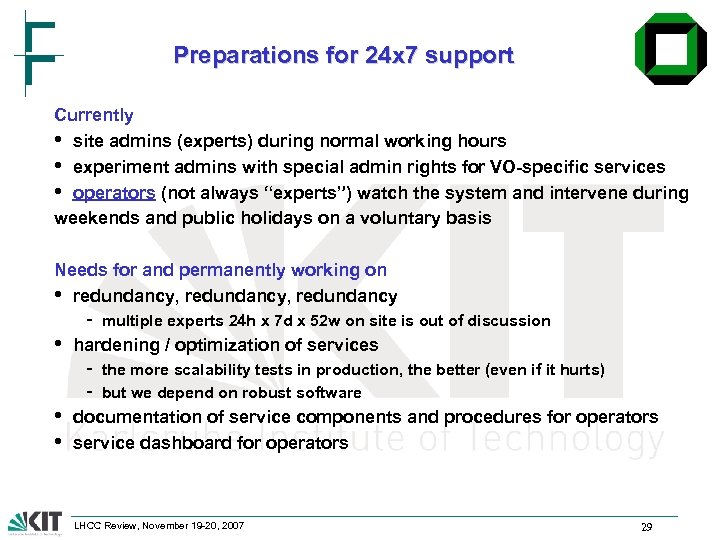

Preparations for 24 x 7 support
- Currently
  - site admins (experts) during normal working hours
  - experiment admins with special admin rights for VO-specific services
  - operators (not always "experts") watch the system and intervene during weekends and public holidays on a voluntary basis
- Needs, and what we are permanently working on
  - redundancy, redundancy
    - multiple experts on site 24 h x 7 d x 52 w is out of the question
  - hardening / optimization of services
    - the more scalability tests in production, the better (even if it hurts), but we depend on robust software
  - documentation of service components and procedures for operators
  - service dashboard for operators

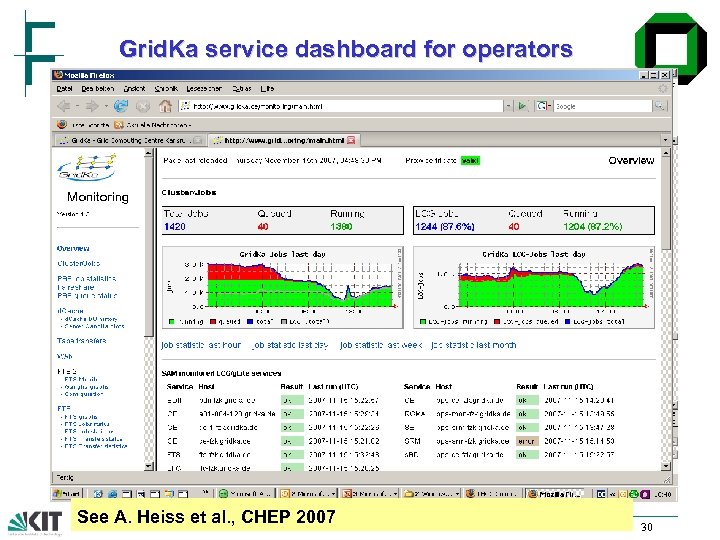

GridKa service dashboard for operators (see A. Heiss et al., CHEP 2007)

6. Plans for 2008

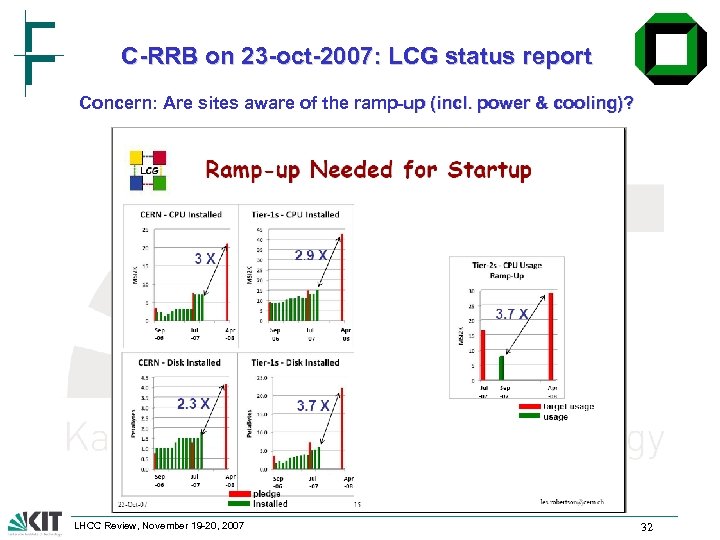

C-RRB on 23-Oct-2007: LCG status report. Concern: are sites aware of the ramp-up (incl. power & cooling)?

Electricity and cooling at GridKa
- Planning & upgrades done during the last 3 years
  - second (redundant) main power line available since 2007
  - 3 (+1 for redundancy) x 600 kW new chillers available
  - 1 MW of cooling (water cooling) capacity ready for 2008
- Capacity is not an issue, but we are concerned about running costs
  - started benchmarking of compute and electrical power in 2002
  - efficiency (ratio of SPECint / power consumption) has been part of our calls for tender since 2004 ("penalty" of 4 €/W at selection)
  - many discussions with providers (Intel, AMD, IBM, …)
  - contributing to the HEPiX benchmarking group and publishing results
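
One plausible way to fold the 4 €/W "penalty" into an offer comparison is to add it to the purchase price before normalising by benchmark performance. The slide does not give the exact selection formula, so the sketch below is an assumption, and the offer figures in the example are invented for illustration.

```python
from __future__ import annotations

from dataclasses import dataclass

PENALTY_EUR_PER_W = 4.0   # "penalty" of 4 EUR/W quoted on the slide


@dataclass
class Offer:
    vendor: str
    price_eur: float     # purchase price of the offered systems
    power_w: float       # measured power consumption under benchmark load
    specint_rate: float  # SPECint_rate of the offered systems

    def efficiency(self) -> float:
        """SPECint_rate per watt."""
        return self.specint_rate / self.power_w

    def evaluated_price(self) -> float:
        """Assumed comparison price: purchase price plus the power penalty."""
        return self.price_eur + PENALTY_EUR_PER_W * self.power_w

    def evaluated_price_per_specint(self) -> float:
        return self.evaluated_price() / self.specint_rate


def best_offer(offers: list[Offer]) -> Offer:
    """Pick the offer with the lowest penalised price per unit of performance."""
    return min(offers, key=Offer.evaluated_price_per_specint)


if __name__ == "__main__":
    a = Offer("A", price_eur=100_000, power_w=20_000, specint_rate=6_000)
    b = Offer("B", price_eur=90_000, power_w=26_000, specint_rate=6_000)
    print(a.evaluated_price(), b.evaluated_price())  # prints "180000.0 194000.0"
    print(best_offer([a, b]).vendor)                 # prints "A"
```

In this invented example offer A wins although B is cheaper to buy, because B draws 6 kW more for the same performance.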

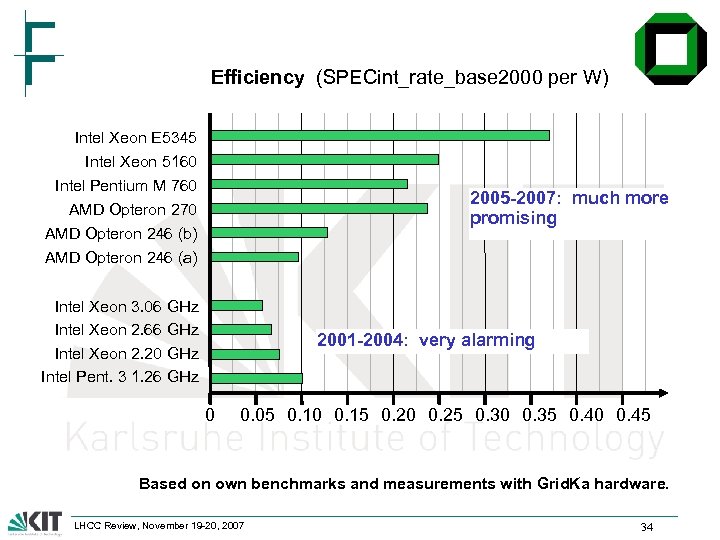

Chart: Efficiency (SPECint_rate_base2000 per W), based on own benchmarks and measurements with GridKa hardware. 2001-2004 ("very alarming"): Intel Pentium III 1.26 GHz, Intel Xeon 2.20 GHz, Intel Xeon 2.66 GHz, Intel Xeon 3.06 GHz. 2005-2007 ("much more promising"): AMD Opteron 246 (a), AMD Opteron 246 (b), AMD Opteron 270, Intel Pentium M 760, Intel Xeon 5160, Intel Xeon E5345.

Extensions for 04/2008: everything is bought!
- Oct '07
  - 40 new cabinets delivered and installed
  - 1/3 of CPUs (~130 machines) delivered
- Nov '07: arrival and base installation of
  - all new networking components (incl. cabling)
  - the remaining 2/3 of CPUs
  - tape cartridges & drives
- Nov/Dec '07
  - arrival of 2.3 PB of disks (incl. non-LHC) + servers
- Jan-Mar '08: installations, tests, acceptance, bug fixes, …

Summary
- GridKa contributes with full MoU 2007 resources
  - we are ready for the April '08 ramp-up
- Good collaboration with
  - sites, developers and experiments (e.g. local / remote VO admins)
- Much effort spent on
  - service hardening (redundancy …)
  - tools and procedures for operations
  - scalability and stability analysis
  - access performance optimization (e.g. tape reads)
- This is still a necessity, which requires
  - admins' time
  - patience and understanding from customers
  - …sometimes at the expense of reliability measures
