072dce30bf46b53e86eb4962c2c30407.ppt

- Number of slides: 88

Formal Methods and Computer Security John Mitchell Stanford University


Invitation

- I'd like to invite you to speak about the role of formal methods in computer security.
- This audience is … on the systems end …
- If you're interested, let me know and we can work out the details.

Outline

- What's a "formal method"?
- Java bytecode verification
- Protocol analysis
  - Model checking
  - Protocol logic
- Trust management
  - Access control policy language

Big Picture

- Biggest problem in CS
  - Produce good software efficiently
- Best tool
  - The computer
- Therefore
  - Future improvements in computer science/industry depend on our ability to automate software design, development, and quality control processes

Formal method

- Analyze a system from its description
  - Executable code
  - Specification (possibly not executable)
- Analysis based on correspondence between system description and properties of interest
  - Semantics of code
  - Semantics of specification language

Example: TCAS [Leveson, Dill, …]

- Specification
  - Many pages of logical formulas specifying how TCAS responds to sensor inputs
- Analysis
  - If module satisfies specification, and aircraft proceeds as directed, then no collisions will occur
- Method
  - Logical deduction, based on formal rules

Formal methods: good and bad

- Strengths
  - Formal rules capture years of experience
  - Precise, can be automated
- Weaknesses
  - Some subtleties are hard to formalize
  - Methods are cumbersome and time-consuming

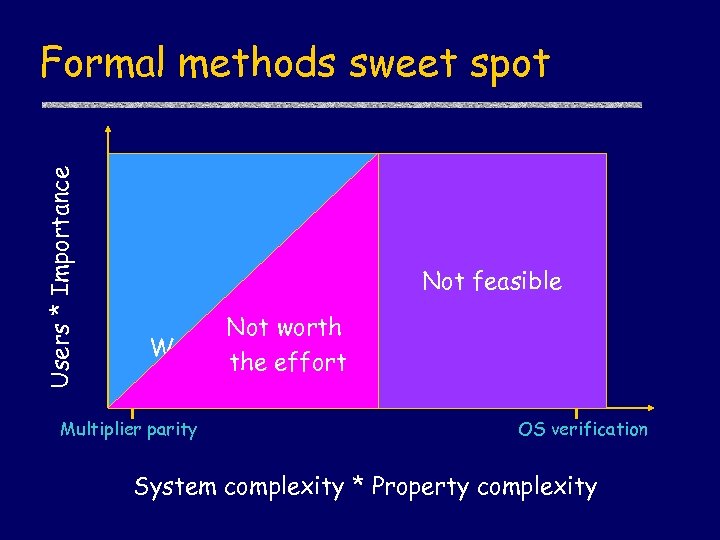

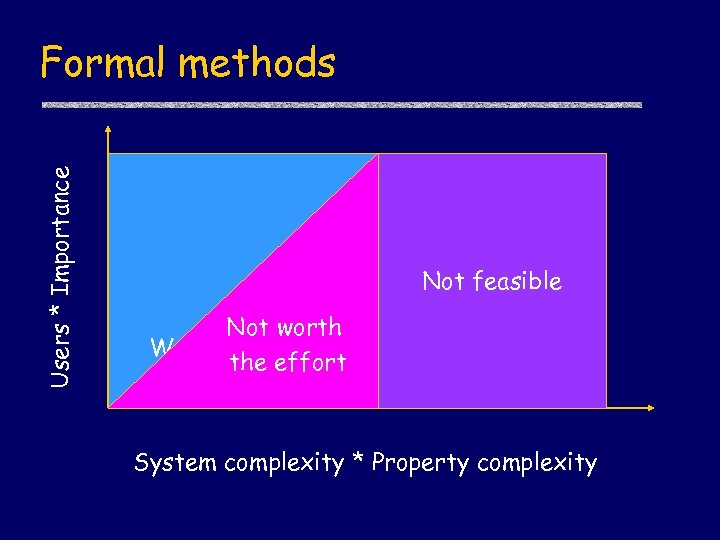

Formal methods sweet spot

Figure: vertical axis is Users × Importance; horizontal axis is System complexity × Property complexity. Regions: Not worth the effort, Worthwhile, Not feasible. Labeled examples: Multiplier, parity, OS verification.

Target areas

- Hardware verification
- Program verification
  - Prove properties of programs
  - Requirements capture and analysis
  - Type checking and "semantic analysis"
- Computer security
  - Mobile code security
  - Protocol analysis
  - Access control policy languages, analysis

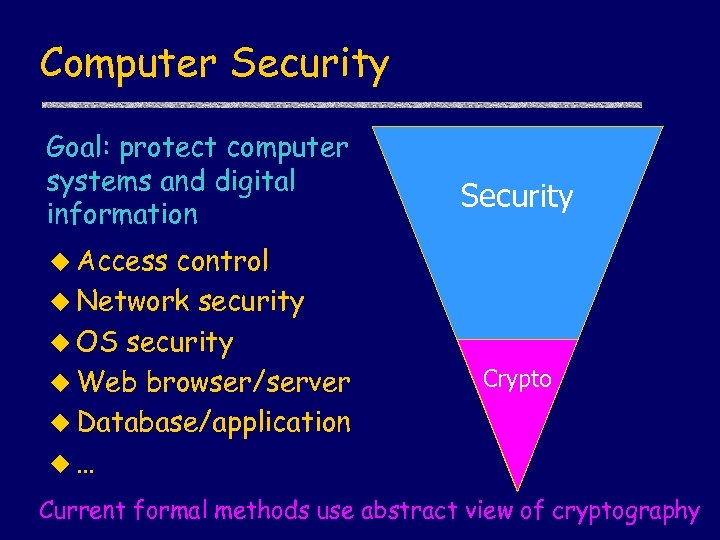

Computer Security

Goal: protect computer systems and digital information

- Access control
- Network security
- OS security
- Web browser/server
- Database/application
- …

Diagram labels: Security, Crypto

Current formal methods use an abstract view of cryptography.

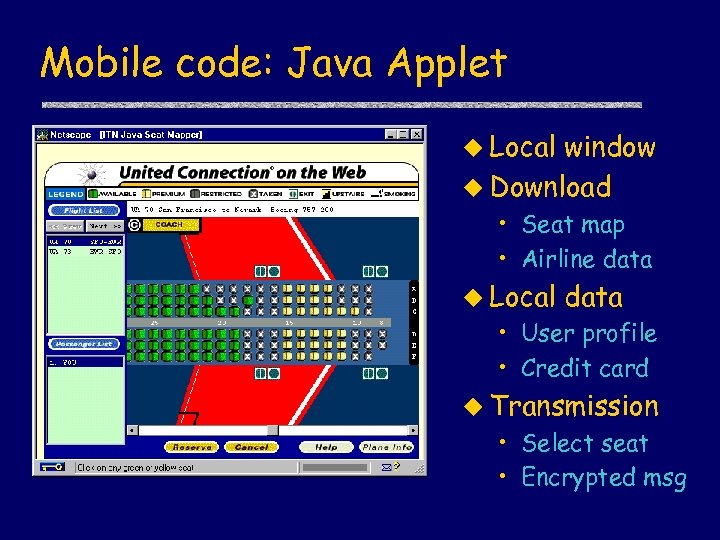

Mobile code: Java Applet

- Local window
- Download
  - Seat map
  - Airline data
- Local data
  - User profile
  - Credit card
- Transmission
  - Select seat
  - Encrypted msg

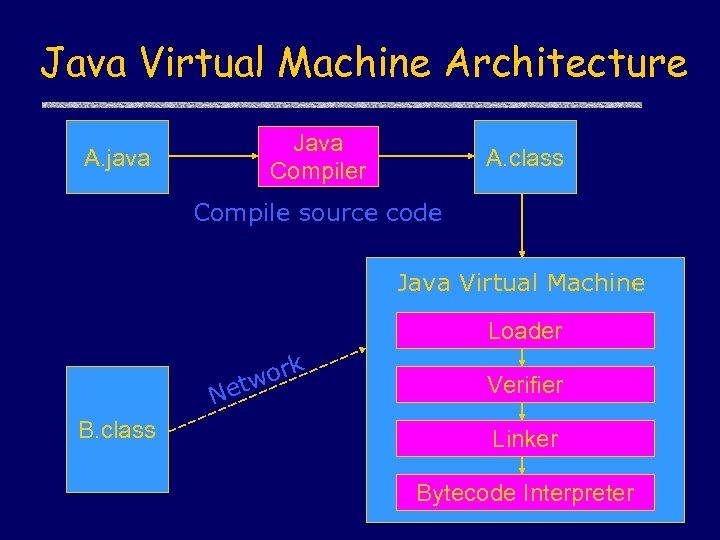

Java Virtual Machine Architecture

Diagram: the Java Compiler compiles source code (A.java to A.class). Inside the Java Virtual Machine: Loader, Verifier, Linker, Bytecode Interpreter. B.class arrives over the network.

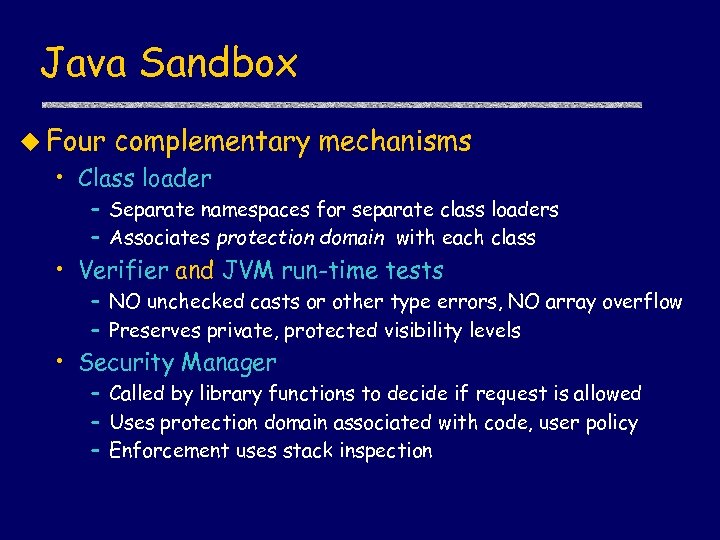

Java Sandbox

- Four complementary mechanisms
  - Class loader
    - Separate namespaces for separate class loaders
    - Associates protection domain with each class
  - Verifier and JVM run-time tests
    - NO unchecked casts or other type errors, NO array overflow
    - Preserves private, protected visibility levels
  - Security Manager
    - Called by library functions to decide if request is allowed
    - Uses protection domain associated with code, user policy
    - Enforcement uses stack inspection

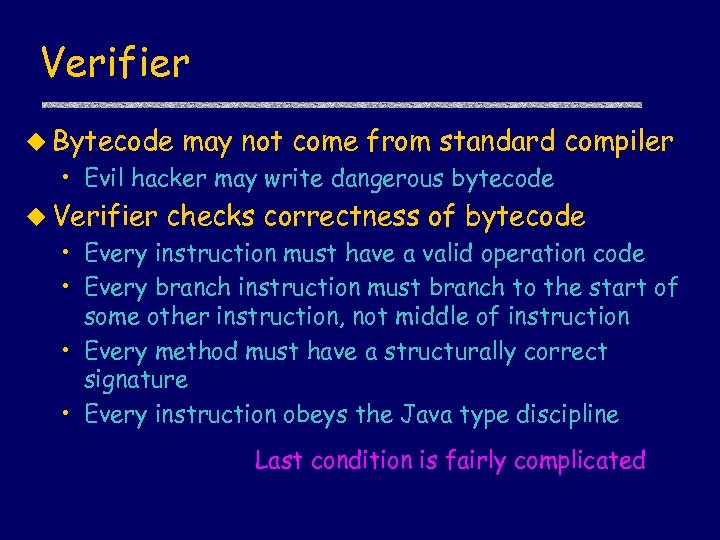

Verifier

- Bytecode may not come from standard compiler
  - Evil hacker may write dangerous bytecode
- Verifier checks correctness of bytecode
  - Every instruction must have a valid operation code
  - Every branch instruction must branch to the start of some other instruction, not the middle of an instruction
  - Every method must have a structurally correct signature
  - Every instruction obeys the Java type discipline

The last condition is fairly complicated.
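The first two checks (valid operation codes, branches landing on instruction starts) can be illustrated with a minimal Python sketch. The opcode table, the byte encoding, and the name `check_structure` are all invented for this illustration; they are not the real JVM instruction set or the actual verifier algorithm.

```python
# Toy structural checks in the spirit of the first two verifier conditions.
# The opcode table and byte encoding are invented for illustration only;
# they are NOT the real JVM instruction set.

# opcode byte -> (mnemonic, number of operand bytes)
OPCODES = {
    0x00: ("nop", 0),
    0x01: ("push", 1),   # push <byte>
    0x02: ("add", 0),
    0x03: ("goto", 1),   # goto <absolute byte offset>
    0x04: ("halt", 0),
}
BRANCHES = {"goto"}


def check_structure(code: bytes) -> list:
    """Return a list of violations; an empty list means both checks pass."""
    errors = []
    starts = set()     # byte offsets at which an instruction begins
    branches = []      # (offset of branch instruction, target offset)
    pc = 0
    while pc < len(code):
        if code[pc] not in OPCODES:
            errors.append(f"offset {pc}: invalid opcode {code[pc]:#04x}")
            return errors              # cannot decode any further
        name, width = OPCODES[code[pc]]
        if pc + 1 + width > len(code):
            errors.append(f"offset {pc}: truncated operand for {name}")
            return errors
        starts.add(pc)
        if name in BRANCHES:
            branches.append((pc, code[pc + 1]))
        pc += 1 + width
    # Every branch target must be the first byte of some instruction.
    for at, target in branches:
        if target not in starts:
            errors.append(f"offset {at}: target {target} is not an instruction start")
    return errors


good = bytes([0x01, 5, 0x03, 4, 0x04])        # push 5; goto 4; halt
bad_target = bytes([0x01, 5, 0x03, 1, 0x04])  # goto 1 lands inside "push 5"
bad_opcode = bytes([0x7F])
```

Here `check_structure(good)` reports no violations, while the other two inputs each trigger one of the checks. The type-discipline condition is not covered by this sketch.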

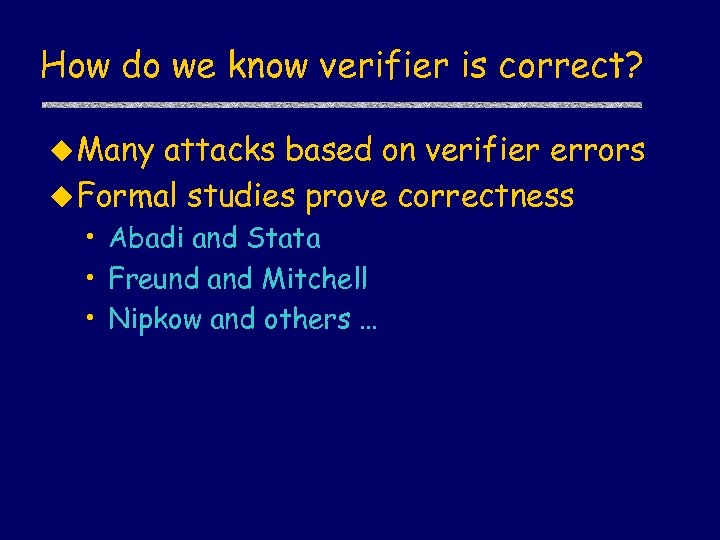

How do we know verifier is correct?

- Many attacks based on verifier errors
- Formal studies prove correctness
  - Abadi and Stata
  - Freund and Mitchell
  - Nipkow and others …

A type system for object initialization in the Java bytecode language Stephen Freund John Mitchell Stanford University (Raymie Stata and Martín Abadi, DEC SRC)


Bytecode/Verifier Specification

- Specifications from Sun/JavaSoft:
  - 30 page text description [Lindholm, Yellin]
  - Reference implementation (~3500 lines of C code)
- These are vague and inconsistent
- Difficult to reason about:
  - safety and security properties
  - correctness of implementation
- Type system provides formal spec

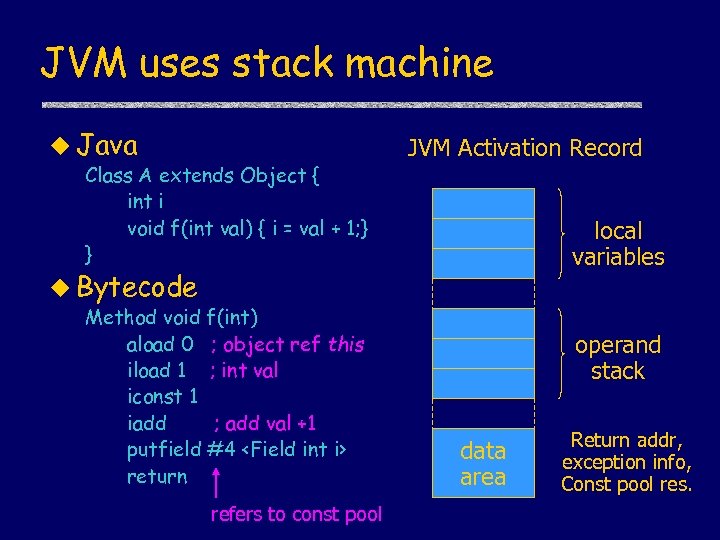

JVM uses stack machine

- Java

      class A extends Object {
          int i;
          void f(int val) { i = val + 1; }
      }

- Bytecode

      Method void f(int)
        aload 0      ; object ref this
        iload 1      ; int val
        iconst 1
        iadd         ; add val + 1
        putfield #4

Diagram label: JVM Activation Record (local variables)
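To make the stack discipline concrete, here is a small Python sketch that steps through the five instructions of `f` on an explicit operand stack. The object is modelled as a dictionary, and `putfield #4` is assumed to refer to field `i` (which matches `i = val + 1`); the function name `run_f` is hypothetical.

```python
def run_f(this: dict, val: int) -> None:
    """Execute the bytecode of A.f on a toy operand stack."""
    local_vars = [this, val]          # slot 0 = this, slot 1 = val
    stack = []
    program = [
        ("aload", 0),                 # push object reference "this"
        ("iload", 1),                 # push int val
        ("iconst", 1),                # push constant 1
        ("iadd", None),               # pop two ints, push their sum
        ("putfield", "i"),            # assumed meaning of constant-pool entry #4
    ]
    for op, arg in program:
        if op in ("aload", "iload"):
            stack.append(local_vars[arg])
        elif op == "iconst":
            stack.append(arg)
        elif op == "iadd":
            b = stack.pop()
            a = stack.pop()
            stack.append(a + b)
        elif op == "putfield":
            value = stack.pop()       # value is on top of the stack
            obj = stack.pop()         # object reference is below it
            obj[arg] = value
    assert stack == []                # the method leaves the stack empty


obj = {"i": 0}
run_f(obj, 41)
```

After the call, `obj["i"]` holds `val + 1`. The order of the two pops in `putfield` is why `aload 0` has to come before the arithmetic.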

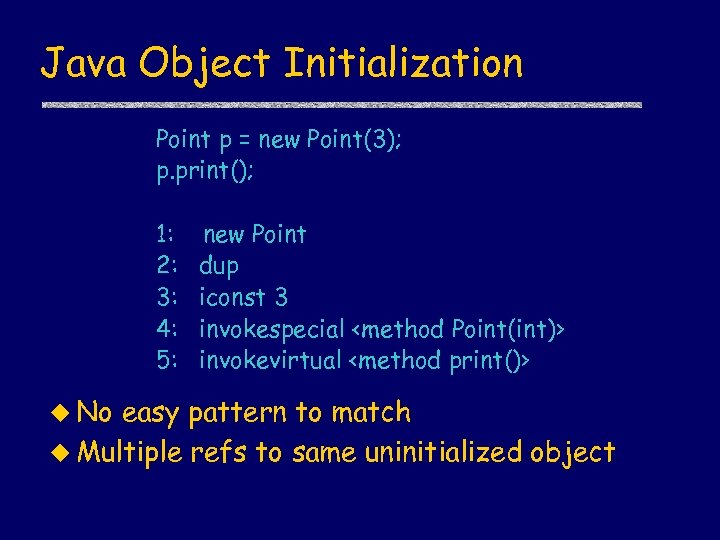

Java Object Initialization

    Point p = new Point(3);
    p.print();

    1: new Point
    2: dup
    3: iconst 3
    4: invokespecial
    5:

- No …

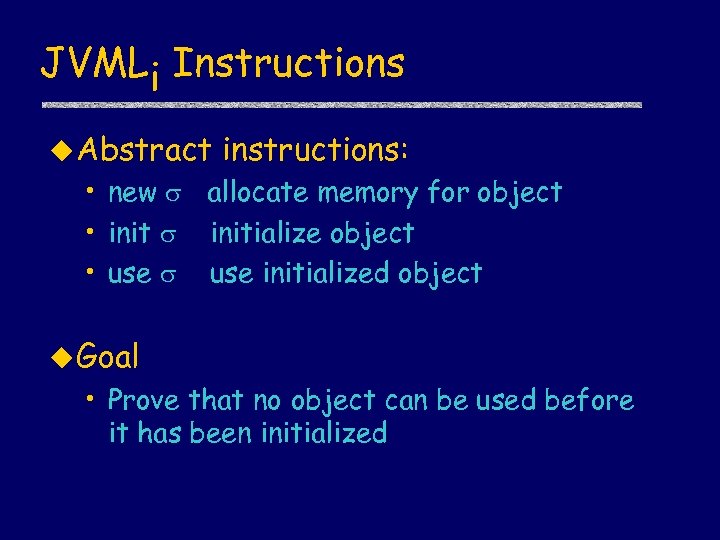

JVMLi Instructions

- Abstract instructions:
  - new σ: allocate memory for object
  - init σ: initialize object
  - use σ: use initialized object
- Goal
  - Prove that no object can be used before it has been initialized

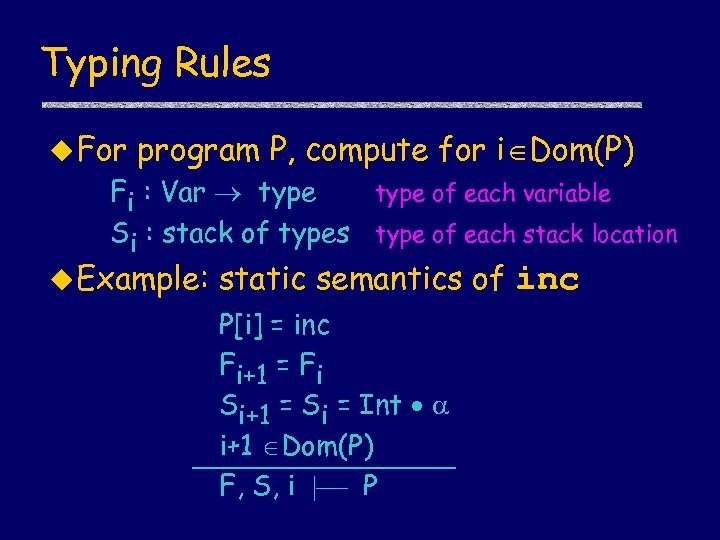

Typing Rules

- For program $P$, compute for each $i \in \mathrm{Dom}(P)$:
  - $F_i : \mathrm{Var} \to \text{type}$, the type of each variable
  - $S_i$ : stack of types, the type of each stack location
- Example: static semantics of inc

$$
\frac{P[i] = \mathtt{inc} \qquad F_{i+1} = F_i \qquad S_{i+1} = S_i = \mathrm{Int} \cdot \alpha \qquad i+1 \in \mathrm{Dom}(P)}{F, S, i \vdash P}
$$

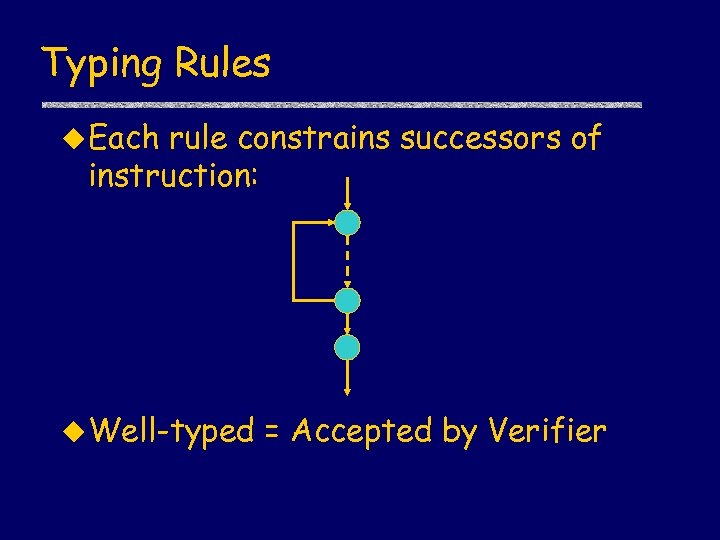

Typing Rules

- Each rule constrains successors of instruction
- Well-typed = Accepted by Verifier

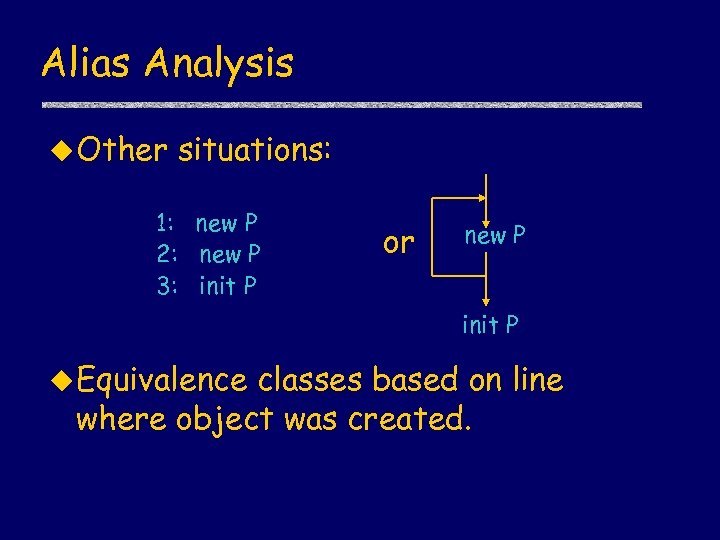

Alias Analysis

- Other situations:

      1: new P
      2: new P
      3: init P

  or

      new P
      init P

- Equivalence classes based on line where object was created.

The new Instruction

- Uninitialized object type placed on stack of types:

$$
\frac{P[i] = \mathtt{new}\ \sigma \qquad F_{i+1} = F_i \qquad S_{i+1} = \hat{\sigma}_i \cdot S_i \qquad \hat{\sigma}_i \notin S_i \qquad \hat{\sigma}_i \notin \mathrm{Range}(F_i) \qquad i+1 \in \mathrm{Dom}(P)}{F, S, i \vdash P}
$$

$\hat{\sigma}_i$ : uninitialized object of type $\sigma$ allocated on line $i$.

The init Instruction

- Substitution of initialized object type for uninitialized object type:

$$
\frac{P[i] = \mathtt{init}\ \sigma \qquad S_i = \hat{\sigma}_j \cdot \alpha,\ j \in \mathrm{Dom}(P) \qquad S_{i+1} = [\sigma/\hat{\sigma}_j]\,\alpha \qquad F_{i+1} = [\sigma/\hat{\sigma}_j]\,F_i \qquad i+1 \in \mathrm{Dom}(P)}{F, S, i \vdash P}
$$
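The new and init rules can be read as a checking procedure. The following Python sketch applies them to straight-line programs only (no branches or subroutines). The tuple encoding of the uninitialized type, the `load`/`store` instructions, and the name `check` are assumptions made for illustration, not the formal system itself.

```python
def is_uninit(t, cls):
    """True if t is the uninitialized-object type for class cls."""
    return isinstance(t, tuple) and t[:2] == ("uninit", cls)


def check(program):
    """Type-check a straight-line program; return None if it is well-typed,
    otherwise a string describing the first violation."""
    F = {}     # variable -> type
    S = []     # stack of types, top at the end of the list
    for i, (op, arg) in enumerate(program, start=1):
        if op == "new":
            fresh = ("uninit", arg, i)           # object allocated on line i
            if fresh in S or fresh in F.values():
                return f"line {i}: {fresh} already present"
            S.append(fresh)
        elif op == "init":
            if not S or not is_uninit(S[-1], arg):
                return f"line {i}: init expects an uninitialized {arg} on the stack"
            old = S.pop()
            # Substitute the initialized type for the uninitialized one everywhere.
            S = [arg if t == old else t for t in S]
            F = {x: (arg if t == old else t) for x, t in F.items()}
        elif op == "use":
            if not S or S[-1] != arg:
                return f"line {i}: use expects an initialized {arg} on the stack"
            S.pop()
        elif op == "store":
            if not S:
                return f"line {i}: store on empty stack"
            F[arg] = S.pop()
        elif op == "load":
            if arg not in F:
                return f"line {i}: load of unset variable {arg}"
            S.append(F[arg])
        else:
            return f"line {i}: unknown instruction {op}"
    return None


ok = [("new", "P"), ("store", 1), ("load", 1), ("init", "P"),
      ("load", 1), ("use", "P")]
bad = [("new", "P"), ("use", "P")]
```

In `ok`, the substitution step of init is what lets the copy held in variable 1 be used afterwards; `bad` is rejected at its second line because the object on the stack is still uninitialized.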

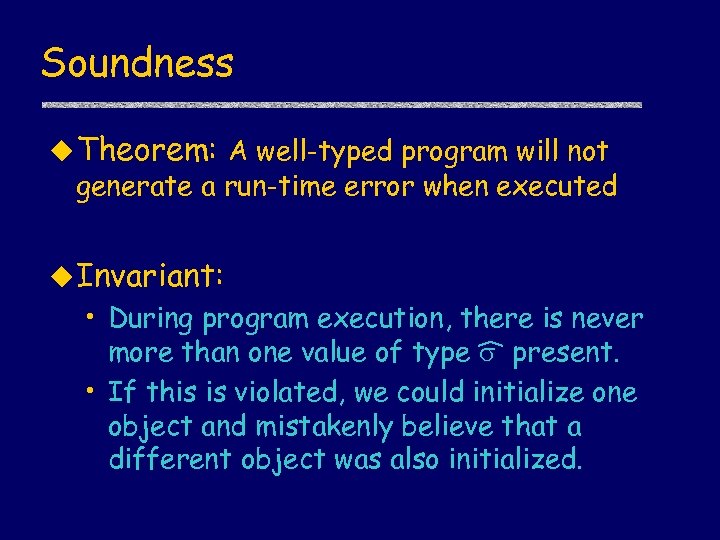

Soundness

- Theorem: A well-typed program will not generate a run-time error when executed
- Invariant:
  - During program execution, there is never more than one value of type $\hat{\sigma}_i$ present.
  - If this is violated, we could initialize one object and mistakenly believe that a different object was also initialized.

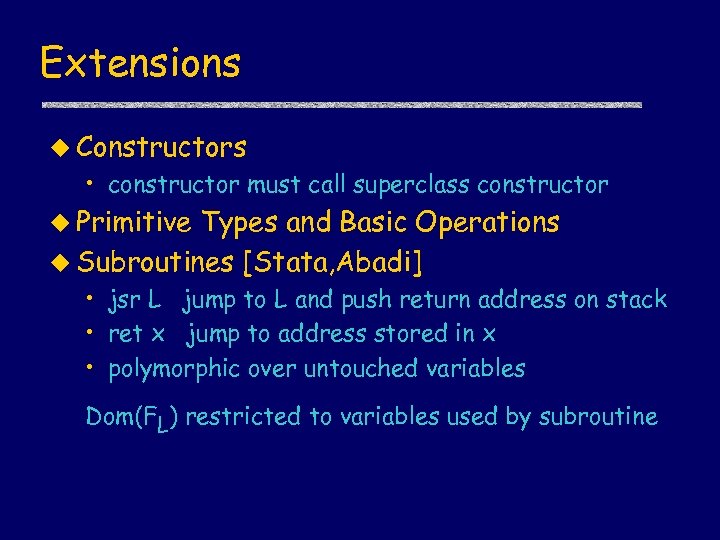

Extensions

- Constructors
  - constructor must call superclass constructor
- Primitive Types and Basic Operations
- Subroutines [Stata, Abadi]
  - jsr L: jump to L and push return address on stack
  - ret x: jump to address stored in x
  - polymorphic over untouched variables: $\mathrm{Dom}(F_L)$ restricted to variables used by subroutine

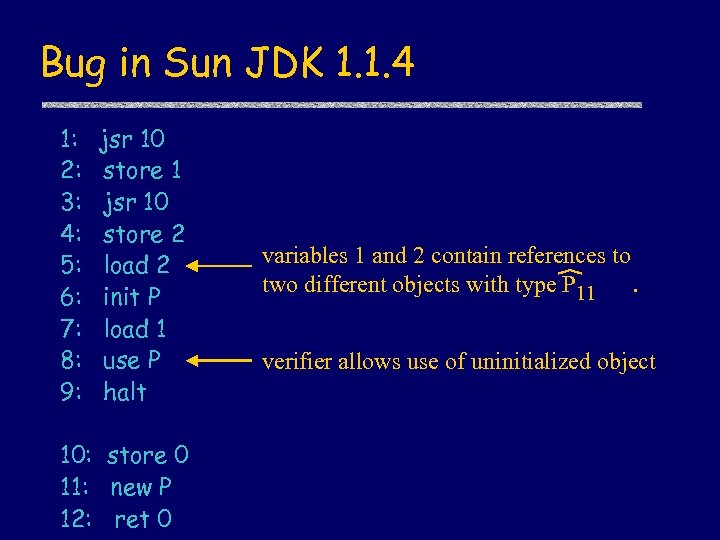

Bug in Sun JDK 1.1.4

     1: jsr 10
     2: store 1
     3: jsr 10
     4: store 2
     5: load 2
     6: init P
     7: load 1
     8: use P
     9: halt
    10: store 0
    11: new P
    12: ret 0

- variables 1 and 2 contain references to two different objects with type $\hat{P}_{11}$
- verifier allows use of uninitialized object
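Running the twelve-line program by hand shows why accepting it is unsafe. Below is a small Python interpreter for just these instructions; the dictionary-based object model and the name `run` are illustrative assumptions. It records every line at which an uninitialized object is used.

```python
PROGRAM = {
    1: ("jsr", 10), 2: ("store", 1), 3: ("jsr", 10), 4: ("store", 2),
    5: ("load", 2), 6: ("init", "P"), 7: ("load", 1), 8: ("use", "P"),
    9: ("halt", None),
    10: ("store", 0), 11: ("new", "P"), 12: ("ret", 0),
}


def run(program):
    """Run the program; return the lines that use an uninitialized object."""
    stack, variables, violations = [], {}, []
    pc = 1
    while True:
        op, arg = program[pc]
        nxt = pc + 1
        if op == "jsr":
            stack.append(pc + 1)      # push return address
            nxt = arg
        elif op == "ret":
            nxt = variables[arg]      # jump to address stored in variable
        elif op == "store":
            variables[arg] = stack.pop()
        elif op == "load":
            stack.append(variables[arg])
        elif op == "new":
            stack.append({"class": arg, "line": pc, "initialized": False})
        elif op == "init":
            stack.pop()["initialized"] = True
        elif op == "use":
            if not stack.pop()["initialized"]:
                violations.append(pc)
        elif op == "halt":
            return violations
        pc = nxt


violations = run(PROGRAM)
```

The subroutine at line 10 runs twice, so two distinct objects are created at line 11. Only the one in variable 2 is initialized at line 6; the object in variable 1 reaches `use P` at line 8 uninitialized, which is the single violation the interpreter reports.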

Related Work

- Java type systems
  - Java Language [DE 97], [Syme 97], ...
  - JVML [SA 98], [Qian 98], [HT 98], ...
- Other approaches
  - Concurrent constraint programs [Saraswat 97]
  - defensive-JVM [Cohen 97]
  - data flow analysis frameworks [Goldberg 97]
  - Experimental tests [SMB 97]
- TIL / TAL [Harper, Morrisett, et al.]

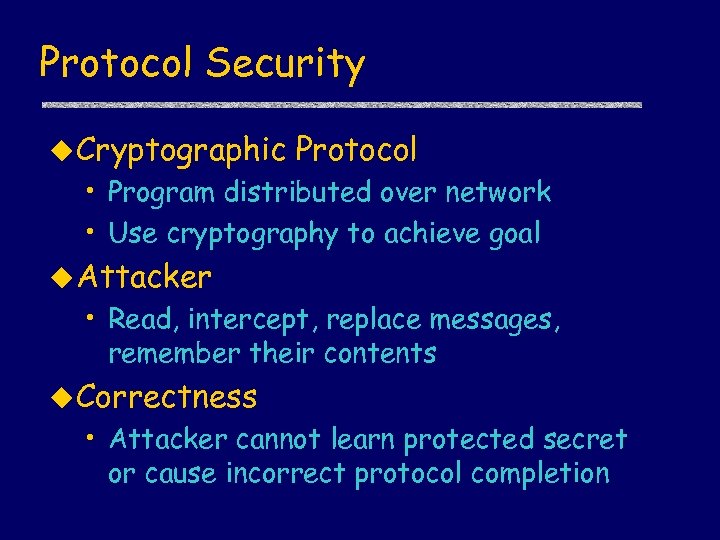

Protocol Security

- Cryptographic Protocol
  - Program distributed over network
  - Use cryptography to achieve goal
- Attacker
  - Read, intercept, replace messages, remember their contents
- Correctness
  - Attacker cannot learn protected secret or cause incorrect protocol completion

Example Protocols

- Authentication Protocols
  - Clark-Jacob report: >35 examples (1997)
  - ISO/IEC 9798, Needham-Schroeder, Denning-Sacco, Otway-Rees, Woo-Lam, Kerberos
- Handshake and data transfer
  - SSL, SSH, SFTP, FTPS, …
- Contract signing, funds transfer, …
- Many others

Characteristics

- Relatively simple distributed programs
  - 5-7 steps, 3-10 fields per message, …
- Mission critical
  - Security of data, credit card numbers, …
- Subtle
  - Attack may combine data from many sessions

Good target for formal methods. However: crypto is hard to model.

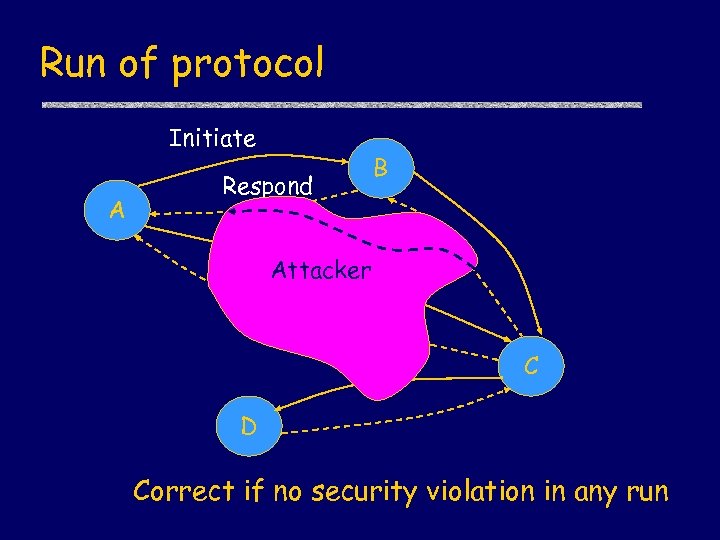

Run of protocol

Diagram: principals A, B, C, D and an attacker; A initiates, B responds.

Correct if no security violation in any run

Protocol Analysis Methods

- Non-formal approaches (useful, but no tools…)
  - Some crypto-based proofs [Bellare, Rogaway]
  - Communicating Turing Machines [Canetti]
- BAN and related logics
  - Axiomatic semantics of protocol steps
- Methods based on operational semantics
  - Intruder model derived from Dolev-Yao
  - Protocol gives rise to set of traces
    - Denotation of protocol = set of runs involving arbitrary number of principals plus intruder

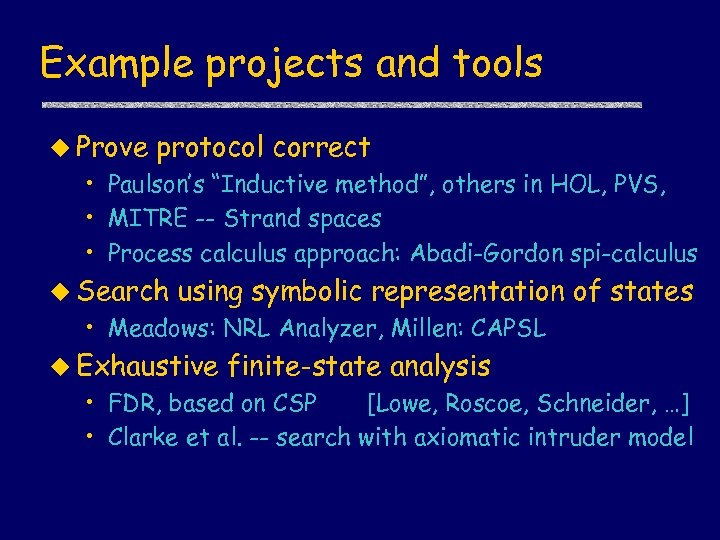

Example projects and tools

- Prove protocol correct
  - Paulson's "Inductive method", others in HOL, PVS, …
  - MITRE: Strand spaces
  - Process calculus approach: Abadi-Gordon spi-calculus
- Search using symbolic representation of states
  - Meadows: NRL Analyzer, Millen: CAPSL
- Exhaustive finite-state analysis
  - FDR, based on CSP [Lowe, Roscoe, Schneider, …]
  - Clarke et al.: search with axiomatic intruder model

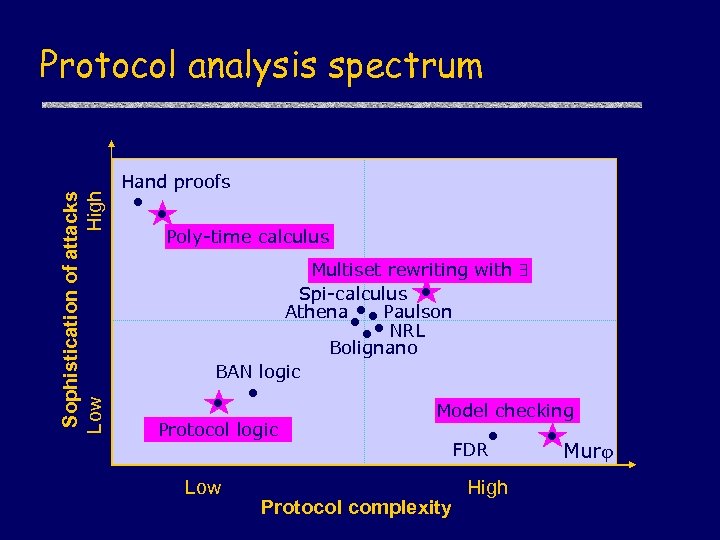

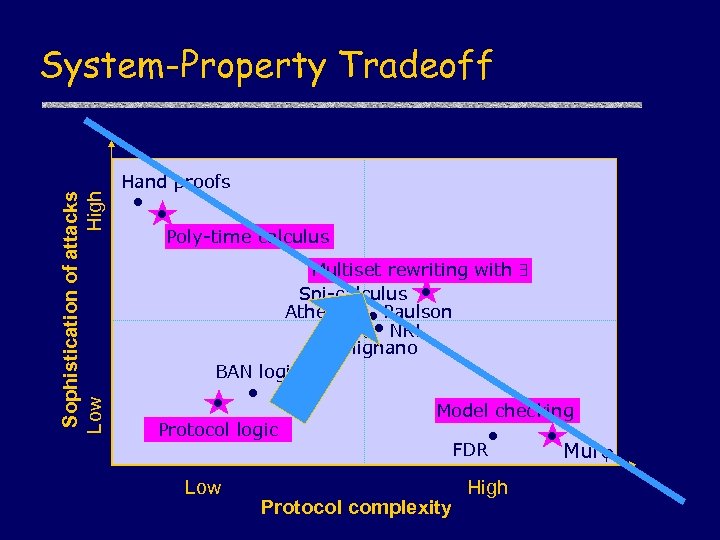

Protocol analysis spectrum

Figure: axes are sophistication of attacks (low to high) and protocol complexity (low to high). Approaches plotted: hand proofs, poly-time calculus, multiset rewriting with ∃, spi-calculus, Athena, Paulson, NRL, Bolignano, BAN logic, protocol logic, model checking, FDR, Murφ.

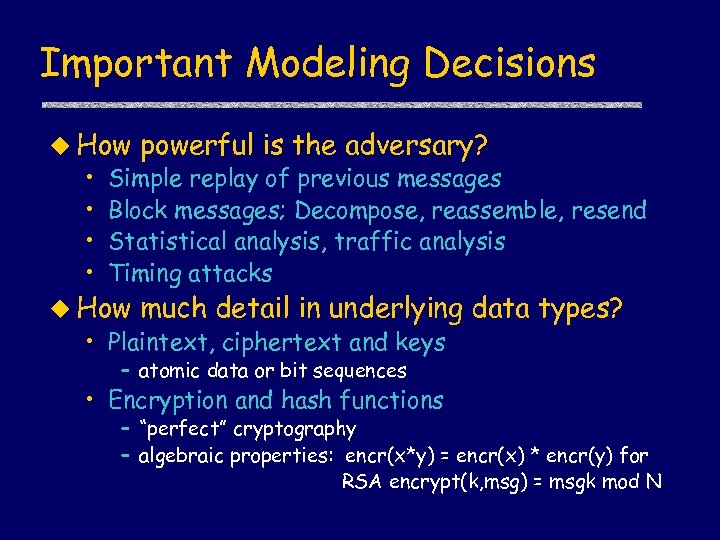

Important Modeling Decisions

- How powerful is the adversary?
  - Simple replay of previous messages
  - Block messages; decompose, reassemble, resend
  - Statistical analysis, traffic analysis
  - Timing attacks
- How much detail in underlying data types?
  - Plaintext, ciphertext and keys
    - atomic data or bit sequences
  - Encryption and hash functions
    - "perfect" cryptography
    - algebraic properties: encr(x*y) = encr(x) * encr(y) for RSA, where encrypt(k, msg) = msg^k mod N
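The RSA algebraic property follows directly from the definition: (x·y)^k mod N = (x^k · y^k) mod N. A quick numeric check in Python, using toy parameters chosen only for illustration (real moduli are far larger):

```python
# Toy RSA parameters, assumed for illustration only.
N = 61 * 53        # modulus
k = 17             # public exponent


def encrypt(k: int, msg: int) -> int:
    """Textbook RSA as on the slide: msg^k mod N (no padding)."""
    return pow(msg, k, N)


x, y = 42, 55
lhs = encrypt(k, (x * y) % N)
rhs = (encrypt(k, x) * encrypt(k, y)) % N
```

`lhs` and `rhs` are equal for every choice of `x` and `y`. A model that treats encryption as a "perfect" black box cannot express this identity, so attacks that exploit it fall outside such a model.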

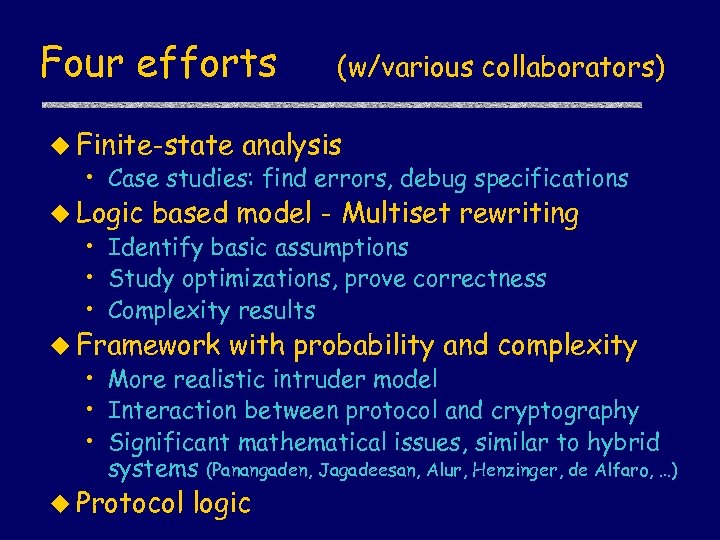

Four efforts (w/various collaborators)

- Finite-state analysis
  - Case studies: find errors, debug specifications
- Logic based model: Multiset rewriting
  - Identify basic assumptions
  - Study optimizations, prove correctness
  - Complexity results
- Framework with probability and complexity
  - More realistic intruder model
  - Interaction between protocol and cryptography
  - Significant mathematical issues, similar to hybrid systems (Panangaden, Jagadeesan, Alur, Henzinger, de Alfaro, …)
- Protocol logic

Rest of talk

- Model checking
  - Contract signing
- MSR
  - Overview, complexity results
- PPoly
  - Key definitions, concepts
- Protocol logic
  - Short overview

Likely to run out of time …

Contract-signing protocols

John Mitchell, Vitaly Shmatikov, Stanford University

Subsequent work by Chadha, Kanovich, Scedrov. Other analysis by Kremer, Raskin.

Example

Immunity deal

- Both parties want to sign the contract
- Neither wants to commit first

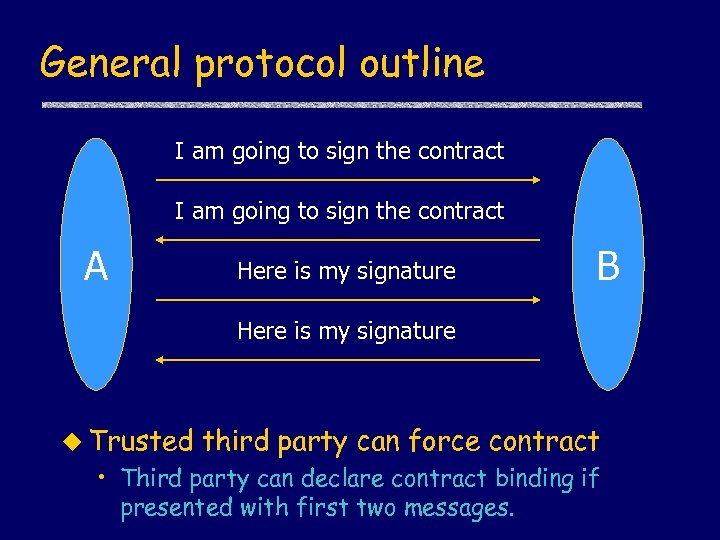

General protocol outline

Diagram: messages exchanged between A and B, in order:

1. I am going to sign the contract
2. Here is my signature
3. Here is my signature

- Trusted third party can force contract
  - Third party can declare contract binding if presented with first two messages.

Assumptions

- Cannot trust communication channel
  - Messages may be lost
  - Attacker may insert additional messages
- Cannot trust other party in protocol
- Third party is generally reliable
  - Use only if something goes wrong
  - Want TTP accountability

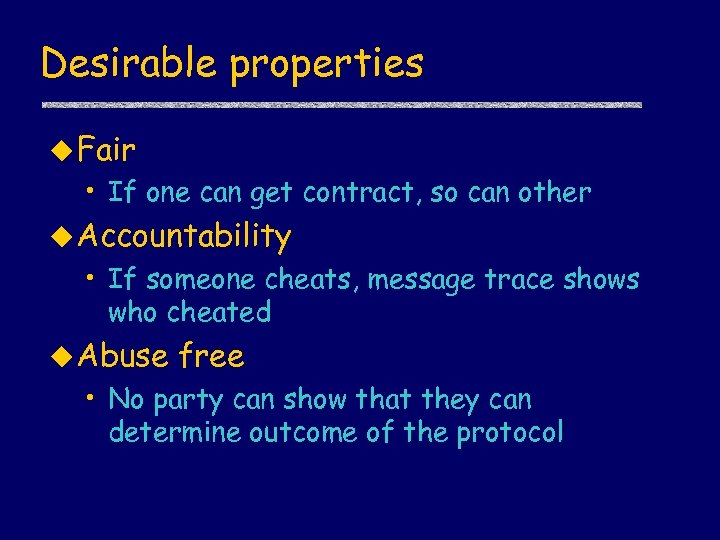

Desirable properties

- Fair
  - If one can get contract, so can other
- Accountability
  - If someone cheats, message trace shows who cheated
- Abuse free
  - No party can show that they can determine outcome of the protocol

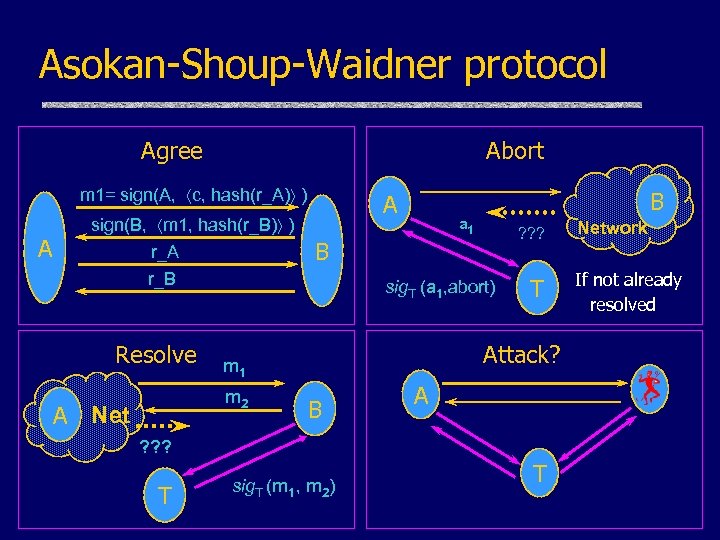

Asokan-Shoup-Waidner protocol

Diagram panels: Agree, Abort, Resolve, Attack?

- Agree (A and B): m1 = sign(A, c, hash(r_A)); sign(B, m1, hash(r_B)); r_A; r_B
- Abort (A and T, over the network): a1; sigT(a1, abort)
- Resolve (B and T, over the network): m1, m2; sigT(m1, m2)
- Annotation: If not already resolved

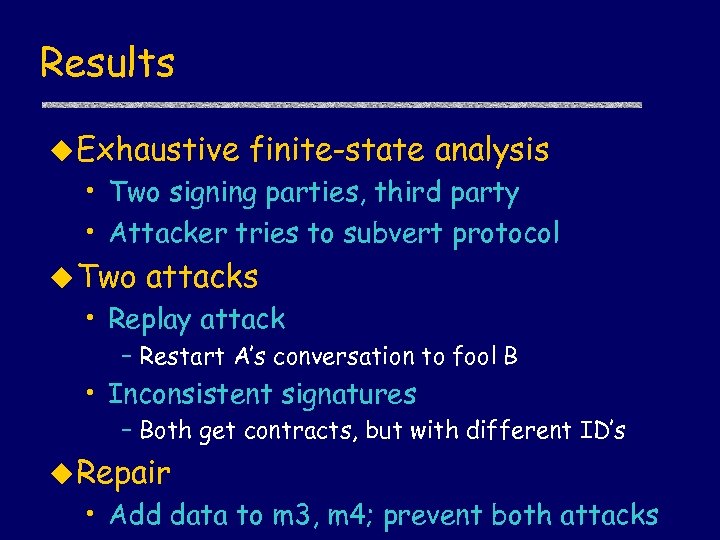

Results

- Exhaustive finite-state analysis
  - Two signing parties, third party
  - Attacker tries to subvert protocol
- Two attacks
  - Replay attack
    - Restart A's conversation to fool B
  - Inconsistent signatures
    - Both get contracts, but with different IDs
- Repair
  - Add data to m3, m4; prevent both attacks

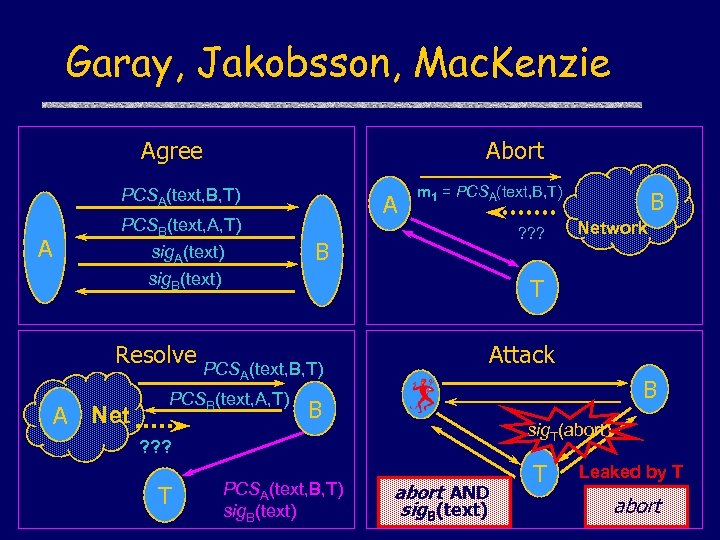

Related protocol

- Designed [Garay, Jakobsson, MacKenzie] to be "abuse free"
  - B cannot take msg from A and show to C
  - Uses special cryptographic primitive
  - T converts signatures, does not use own
- Finite-state analysis
  - Attack gives A both contract and abort
  - T colludes weakly, not shown accountable
  - Simple repair using same crypto primitive

Garay, Jakobsson, MacKenzie

Diagram panels: Agree, Abort, Resolve, Attack.

- Agree (A and B): PCS_A(text, B, T); PCS_B(text, A, T); sig_A(text); sig_B(text)
- Abort and Resolve (with T, over the network): m1 = PCS_A(text, B, T); PCS_B(text, A, T); sig_T(abort); sig_B(text)
- Attack: abort AND sig_B(text); abort leaked by T

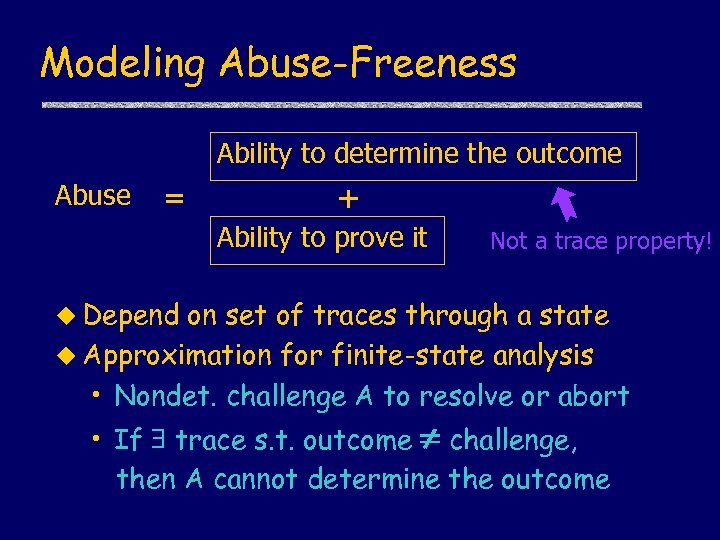

Modeling Abuse-Freeness

Abuse = ability to determine the outcome + ability to prove it

Not a trace property!

- Depends on set of traces through a state
- Approximation for finite-state analysis
  - Nondeterministically challenge A to resolve or abort
  - If ∃ trace s.t. outcome ≠ challenge, then A cannot determine the outcome

Conclusions

- Online contract signing is subtle
  - Fairness
  - Abuse-freeness
  - Accountability
- Several interdependent subprotocols
  - Many cases and interleavings
- Finite-state tool great for case analysis!
  - Find bugs in protocols proved correct

Multiset Rewriting and Security Protocol Analysis John Mitchell Stanford University I. Cervesato, N. Durgin, P. Lincoln, A. Scedrov


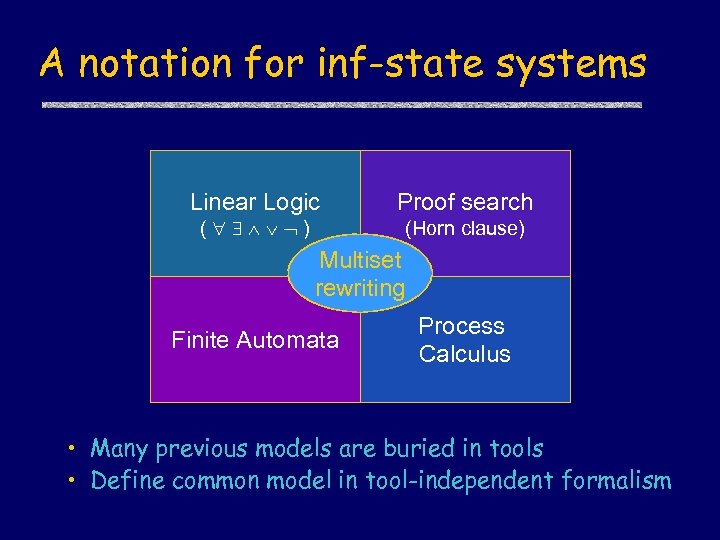

A notation for infinite-state systems

Diagram: multiset rewriting shown alongside linear logic, proof search (Horn clause), finite automata, and process calculus.

- Many previous models are buried in tools
- Define common model in tool-independent formalism

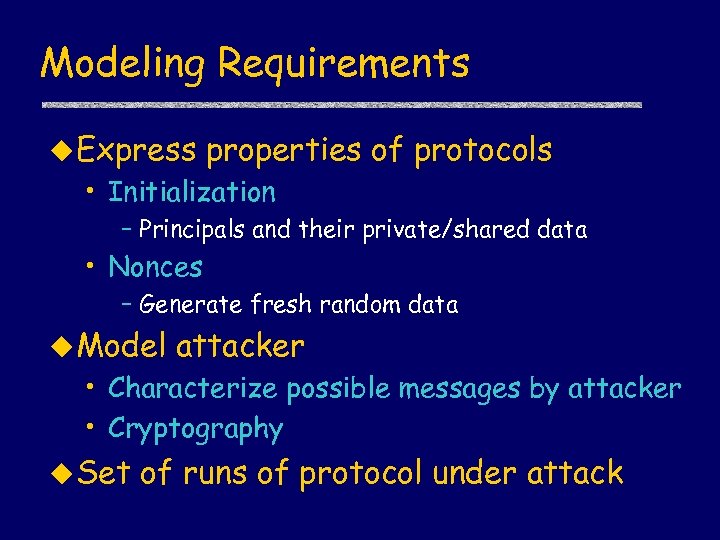

Modeling Requirements

- Express properties of protocols
  - Initialization
    - Principals and their private/shared data
  - Nonces
    - Generate fresh random data
- Model attacker
  - Characterize possible messages by attacker
  - Cryptography
- Set of runs of protocol under attack

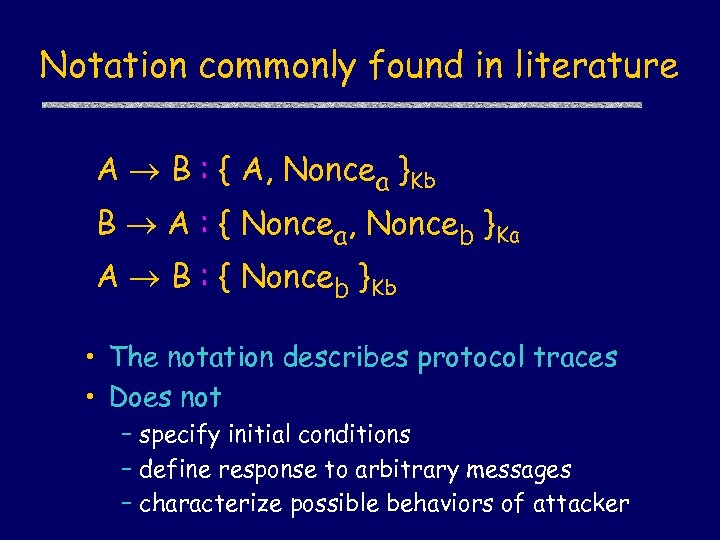

Notation commonly found in literature

    A → B : { A, Nonce_a }_Kb
    B → A : { Nonce_a, Nonce_b }_Ka
    A → B : { Nonce_b }_Kb

- The notation describes protocol traces
- Does not
  - specify initial conditions
  - define response to arbitrary messages
  - characterize possible behaviors of attacker

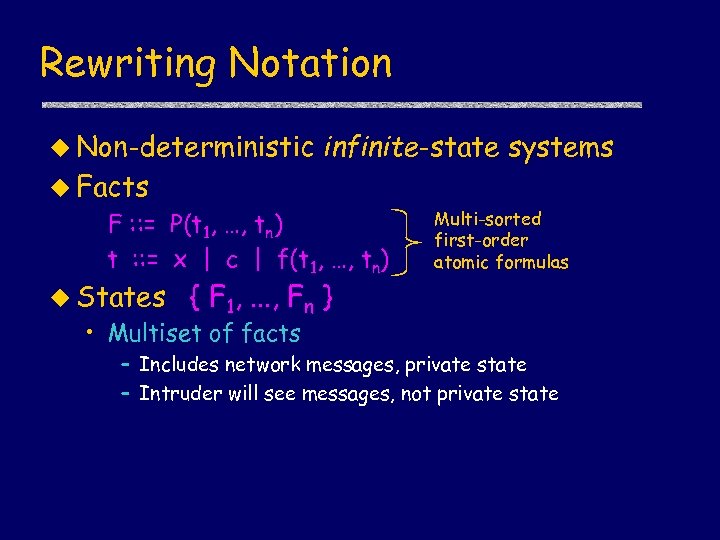

Rewriting Notation

- Non-deterministic infinite-state systems
- Facts: multi-sorted first-order atomic formulas

      F ::= P(t1, …, tn)
      t ::= x | c | f(t1, …, tn)

- States { F1, ..., Fn }
  - Multiset of facts
    - Includes network messages, private state
    - Intruder will see messages, not private state

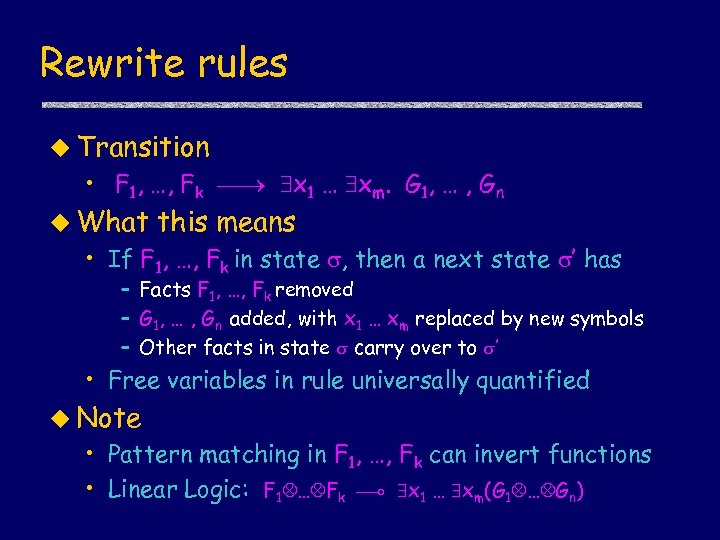

Rewrite rules

- Transition
  - F1, …, Fk → ∃x1 … xm. G1, …, Gn
- What this means
  - If F1, …, Fk in state σ, then a next state σ' has
    - Facts F1, …, Fk removed
    - G1, …, Gn added, with x1 … xm replaced by new symbols
    - Other facts in state σ carry over to σ'
  - Free variables in rule universally quantified
- Note
  - Pattern matching in F1, …, Fk can invert functions
  - Linear Logic: F1 ⊗ … ⊗ Fk ⊸ ∃x1 … xm (G1 ⊗ … ⊗ Gn)
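A single rewrite step can be sketched in Python with a `collections.Counter` as the multiset. This version handles only ground left-hand sides (no pattern matching on universally quantified variables); the tuple encoding of facts, the fresh-name scheme `n1, n2, …`, and the name `apply_rule` are assumptions for illustration.

```python
from collections import Counter
from itertools import count

_fresh = count(1)   # source of new symbols for existential variables


def apply_rule(state, lhs, rhs, exists=()):
    """Apply one ground rule  lhs -> (exists). rhs  to a multiset state.

    Facts are tuples (predicate, arg1, ...). Returns the next state,
    or None if the left-hand side is not contained in the state.
    """
    need = Counter(lhs)
    if any(state[f] < n for f, n in need.items()):
        return None
    # Replace each existential variable by a new symbol
    # (assumed not to clash with constants already in use).
    fresh = {x: f"n{next(_fresh)}" for x in exists}

    def inst(fact):
        pred, *args = fact
        return (pred, *[fresh.get(a, a) for a in args])

    nxt = state - need                    # facts on the left are removed
    nxt.update(inst(f) for f in rhs)      # facts on the right are added
    return nxt                            # all other facts carry over


state = Counter({("A1", "a"): 1, ("Other",): 1})
after = apply_rule(state, [("A1", "a")], [("N1", "a"), ("A2", "a")])
created = apply_rule(Counter(), [], [("A1", "x")], exists=("x",))
```

In `after`, the fact `A1(a)` is gone, `N1(a)` and `A2(a)` are present, and `Other` is untouched. In `created`, the variable `x` has been replaced by a freshly generated symbol.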

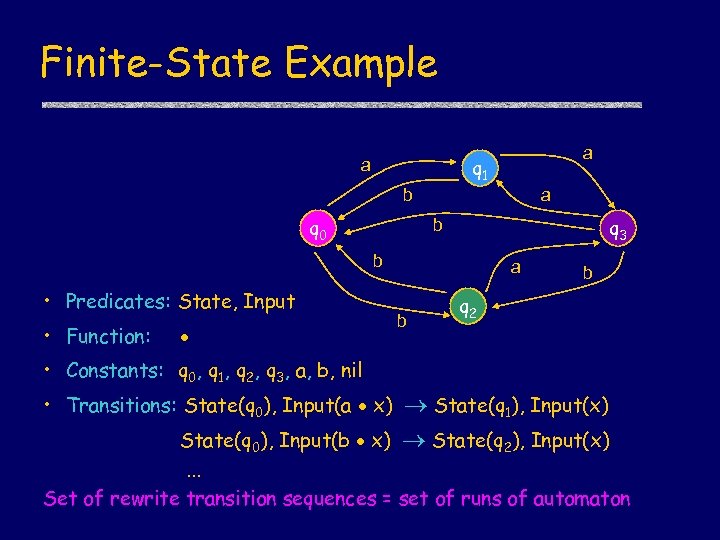

Finite-State Example

Diagram: automaton with states q0, q1, q2, q3 and transitions labeled a and b.

- Predicates: State, Input
- Function: · (infix, prepends a symbol to the remaining input)
- Constants: q0, q1, q2, q3, a, b, nil
- Transitions:

      State(q0), Input(a · x) → State(q1), Input(x)
      State(q0), Input(b · x) → State(q2), Input(x)
      ...

Set of rewrite transition sequences = set of runs of automaton

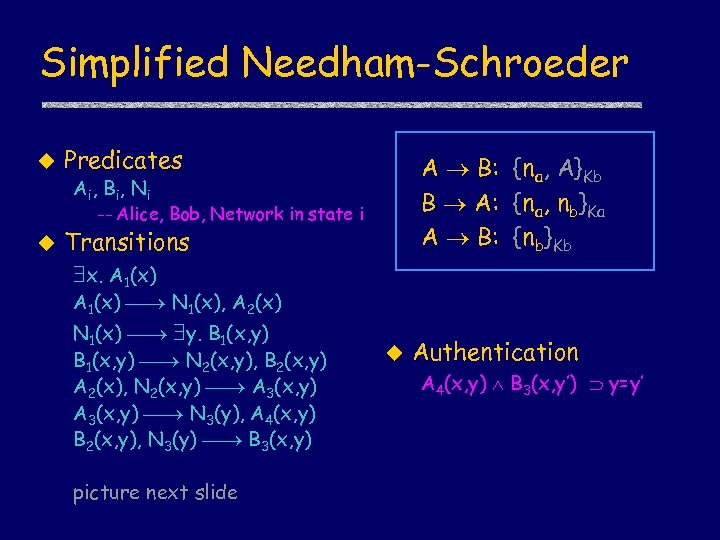

Simplified Needham-Schroeder u Predicates A B: {na, A}Kb B A: {na, nb}Ka A B: {nb}Kb Ai , B i , N i -- Alice, Bob, Network in state i u Transitions x. A 1(x) N 1(x), A 2(x) N 1(x) y. B 1(x, y) N 2(x, y), B 2(x, y) A 2(x), N 2(x, y) A 3(x, y) N 3(y), A 4(x, y) B 2(x, y), N 3(y) B 3(x, y) picture next slide u Authentication A 4(x, y) B 3(x, y’) y=y’

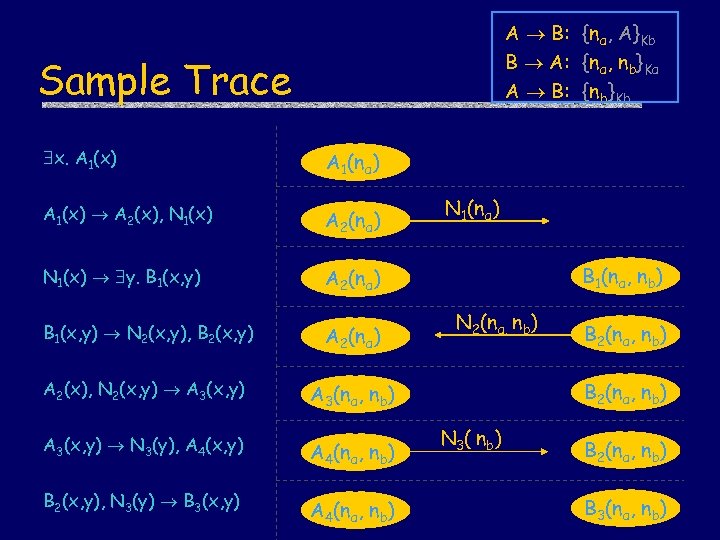

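Sample Trace

Protocol: $A \rightarrow B: \{n_a, A\}_{K_b}$; $B \rightarrow A: \{n_a, n_b\}_{K_a}$; $A \rightarrow B: \{n_b\}_{K_b}$

| Rule applied | Alice | Network | Bob |
|---|---|---|---|
| $\exists x.\; A_1(x)$ | $A_1(n_a)$ | | |
| $A_1(x) \rightarrow A_2(x), N_1(x)$ | $A_2(n_a)$ | $N_1(n_a)$ | |
| $N_1(x) \rightarrow \exists y.\; B_1(x, y)$ | $A_2(n_a)$ | | $B_1(n_a, n_b)$ |
| $B_1(x, y) \rightarrow N_2(x, y), B_2(x, y)$ | $A_2(n_a)$ | $N_2(n_a, n_b)$ | $B_2(n_a, n_b)$ |
| $A_2(x), N_2(x, y) \rightarrow A_3(x, y)$ | $A_3(n_a, n_b)$ | | $B_2(n_a, n_b)$ |
| $A_3(x, y) \rightarrow N_3(y), A_4(x, y)$ | $A_4(n_a, n_b)$ | $N_3(n_b)$ | $B_2(n_a, n_b)$ |
| $B_2(x, y), N_3(y) \rightarrow B_3(x, y)$ | $A_4(n_a, n_b)$ | | $B_3(n_a, n_b)$ |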

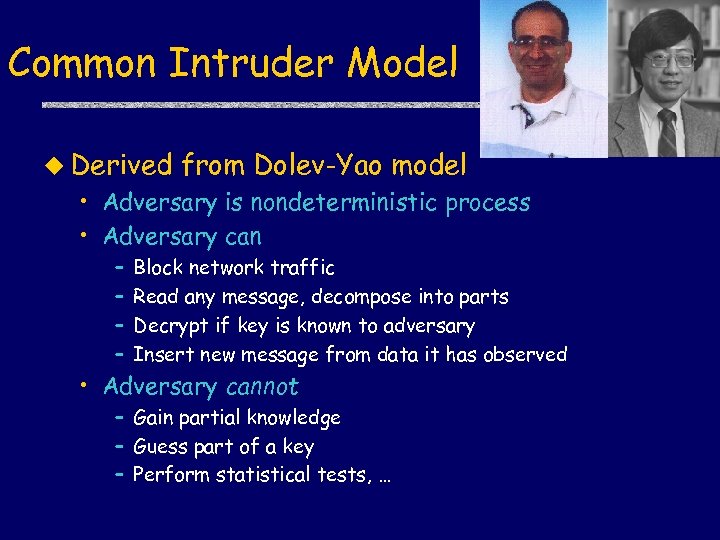
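The honest run of the simplified protocol can be replayed mechanically. The sketch below is one possible encoding, under stated assumptions: facts are tuples, only `x` and `y` are treated as rule variables, fresh nonces are named `n0`, `n1`, ..., and the helper names `match` and `apply_rule` are invented here. Rules are applied in the fixed order of the trace rather than searched nondeterministically.

```python
from collections import Counter
from itertools import count, permutations

fresh = count()

def is_var(t):
    return t in ("x", "y")      # assumption: x and y are the only rule variables

def match(pattern, fact, env):
    """Extend env so that pattern equals fact, or return None."""
    if pattern[0] != fact[0] or len(pattern) != len(fact):
        return None
    env = dict(env)
    for p, f in zip(pattern[1:], fact[1:]):
        if is_var(p):
            if env.setdefault(p, f) != f:
                return None
        elif p != f:
            return None
    return env

def apply_rule(state, rule):
    """Return the state after one application of rule, or None if not enabled."""
    lhs, new_vars, rhs = rule
    for chosen in permutations(list(state.elements()), len(lhs)):
        env = {}
        for pat, fact in zip(lhs, chosen):
            env = match(pat, fact, env)
            if env is None:
                break
        else:
            for v in new_vars:                       # existential: new symbol
                env[v] = f"n{next(fresh)}"
            out = [(g[0],) + tuple(env[t] if is_var(t) else t for t in g[1:])
                   for g in rhs]
            return state - Counter(chosen) + Counter(out)
    return None

RULES = [  # (left-hand side, existential variables, right-hand side)
    ([], ["x"], [("A1", "x")]),
    ([("A1", "x")], [], [("A2", "x"), ("N1", "x")]),
    ([("N1", "x")], ["y"], [("B1", "x", "y")]),
    ([("B1", "x", "y")], [], [("N2", "x", "y"), ("B2", "x", "y")]),
    ([("A2", "x"), ("N2", "x", "y")], [], [("A3", "x", "y")]),
    ([("A3", "x", "y")], [], [("N3", "y"), ("A4", "x", "y")]),
    ([("B2", "x", "y"), ("N3", "y")], [], [("B3", "x", "y")]),
]

state = Counter()
for rule in RULES:
    state = apply_rule(state, rule)
    print(sorted(state.elements()))
```

In the final state Alice's `A4` fact and Bob's `B3` fact carry the same pair of nonces, which is what the authentication property asks for in a run without an intruder.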

Common Intruder Model

- Derived from Dolev-Yao model
  - Adversary is nondeterministic process
  - Adversary can
    - Block network traffic
    - Read any message, decompose into parts
    - Decrypt if key is known to adversary
    - Insert new message from data it has observed
  - Adversary cannot
    - Gain partial knowledge
    - Guess part of a key
    - Perform statistical tests, …

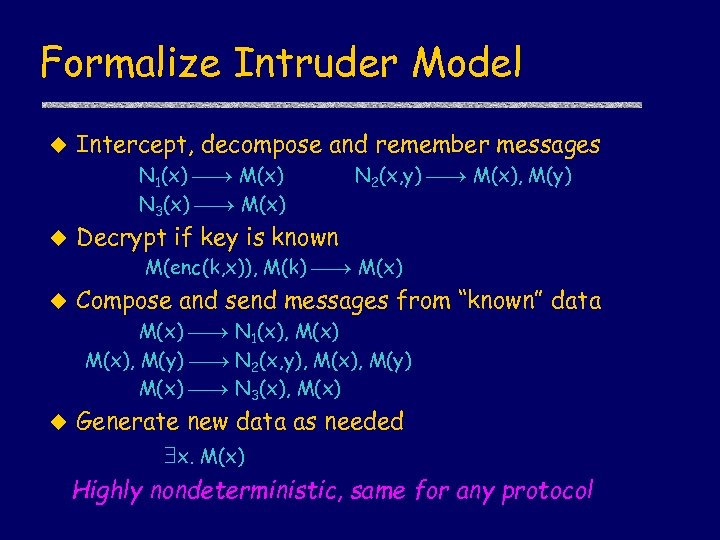

Formalize Intruder Model

- Intercept, decompose and remember messages
  - $N_1(x) \rightarrow M(x)$
  - $N_2(x, y) \rightarrow M(x), M(y)$
  - $N_3(x) \rightarrow M(x)$
- Decrypt if key is known
  - $M(\mathrm{enc}(k, x)), M(k) \rightarrow M(x)$
- Compose and send messages from "known" data
  - $M(x) \rightarrow N_1(x), M(x)$
  - $M(x), M(y) \rightarrow N_2(x, y), M(x), M(y)$
  - $M(x) \rightarrow N_3(x), M(x)$
- Generate new data as needed
  - $\exists x.\; M(x)$
- Highly nondeterministic, same for any protocol

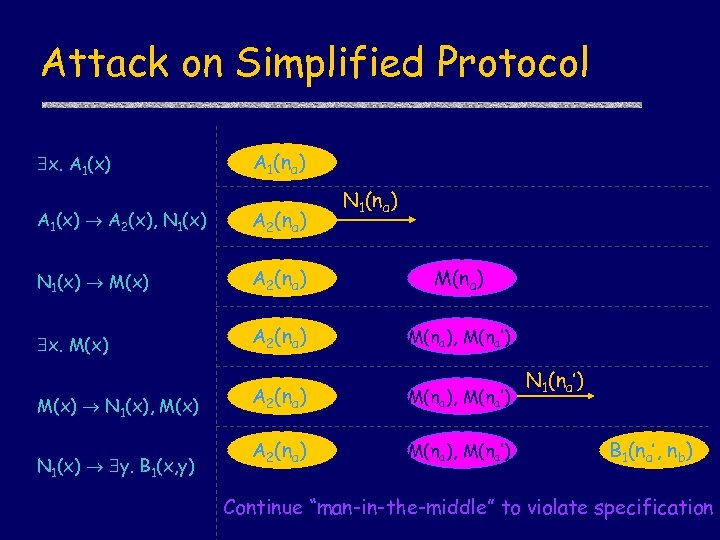
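The "decompose" and "decrypt if key is known" rules amount to closing the intruder's memory under two operations. A minimal sketch follows, assuming a term representation that is not in the slides: atoms are strings, `("pair", a, b)` stands for a two-part message, and `("enc", k, x)` for `enc(k, x)`. The function name `analyze` is also an assumption.

```python
def analyze(known):
    """Close a set of terms under pair projection and decryption with known keys."""
    known = set(known)
    changed = True
    while changed:
        changed = False
        for t in list(known):
            parts = []
            if isinstance(t, tuple) and t[0] == "pair":
                parts = [t[1], t[2]]                 # decompose into parts
            elif isinstance(t, tuple) and t[0] == "enc" and t[1] in known:
                parts = [t[2]]                       # M(enc(k, x)), M(k) -> M(x)
            for p in parts:
                if p not in known:
                    known.add(p)
                    changed = True
    return known

# The intruder holds the key k1 but not k2.
memory = analyze({("enc", "k1", ("pair", "na", "nb")), "k1", ("enc", "k2", "secret")})
print("na" in memory, "secret" in memory)
```

Consistent with the "adversary cannot" list, nothing is learned from a ciphertext whose key is unknown: there is no rule for partial knowledge or guessing.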

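Attack on Simplified Protocol

| Rule applied | Resulting state |
|---|---|
| $\exists x.\; A_1(x)$ | $A_1(n_a)$ |
| $A_1(x) \rightarrow A_2(x), N_1(x)$ | $A_2(n_a)$, $N_1(n_a)$ |
| $N_1(x) \rightarrow M(x)$ | $A_2(n_a)$, $M(n_a)$ |
| $\exists x.\; M(x)$ | $A_2(n_a)$, $M(n_a)$, $M(n_a')$ |
| $M(x) \rightarrow N_1(x), M(x)$ | $A_2(n_a)$, $M(n_a)$, $M(n_a')$, $N_1(n_a')$ |
| $N_1(x) \rightarrow \exists y.\; B_1(x, y)$ | $A_2(n_a)$, $M(n_a)$, $M(n_a')$, $B_1(n_a', n_b)$ |

Continue "man-in-the-middle" to violate specification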

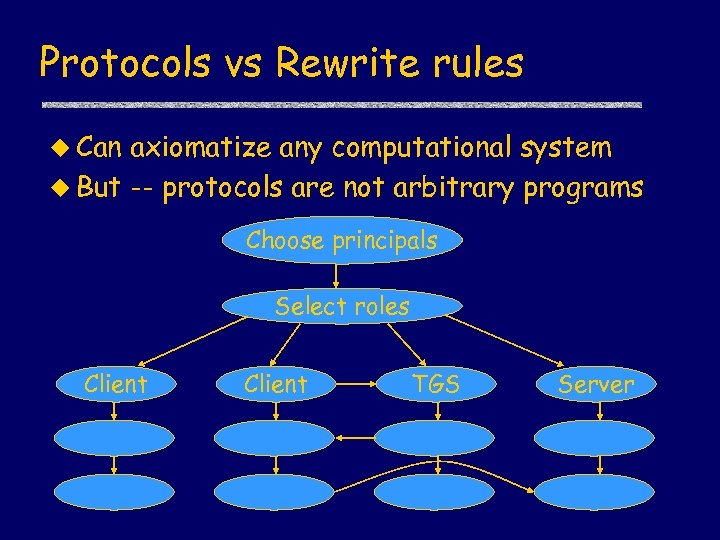

Protocols vs Rewrite rules

- Can axiomatize any computational system
- But -- protocols are not arbitrary programs

[Diagram: choose principals, select roles; roles shown: Client, TGS, Server]

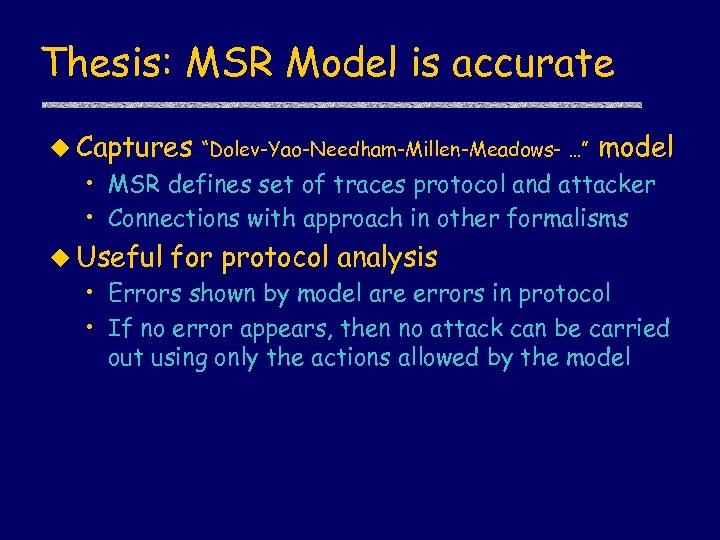

Thesis: MSR Model is accurate

- Captures "Dolev-Yao-Needham-Millen-Meadows-…" model
  - MSR (multiset rewriting) defines the set of traces of protocol and attacker
  - Connections with approach in other formalisms
- Useful for protocol analysis
  - Errors shown by model are errors in protocol
  - If no error appears, then no attack can be carried out using only the actions allowed by the model

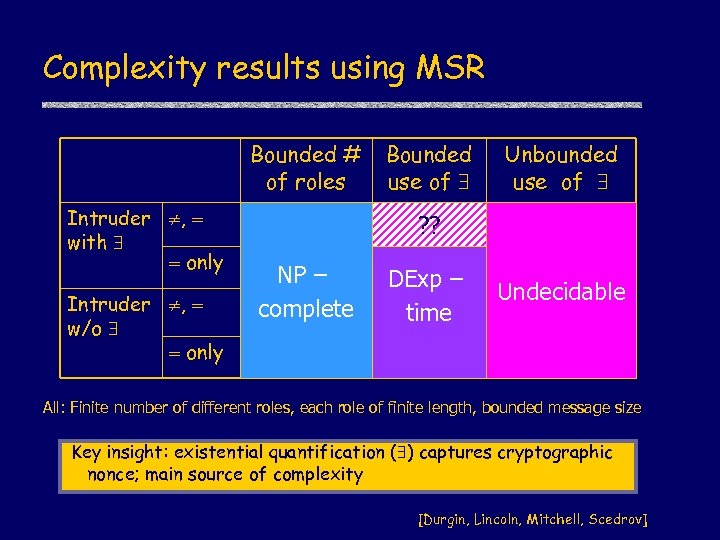

Complexity results using MSR

[Table: surviving labels are "Bounded # of roles", "Intruder …, with … only", "Intruder …, w/o … only", "Bounded use of $\exists$", "Unbounded use of $\exists$"; surviving entries are "??", "NP-complete", "DExp-time", "Undecidable"]

- All: finite number of different roles, each role of finite length, bounded message size
- Key insight: existential quantification ($\exists$) captures cryptographic nonce; main source of complexity

[Durgin, Lincoln, Mitchell, Scedrov]

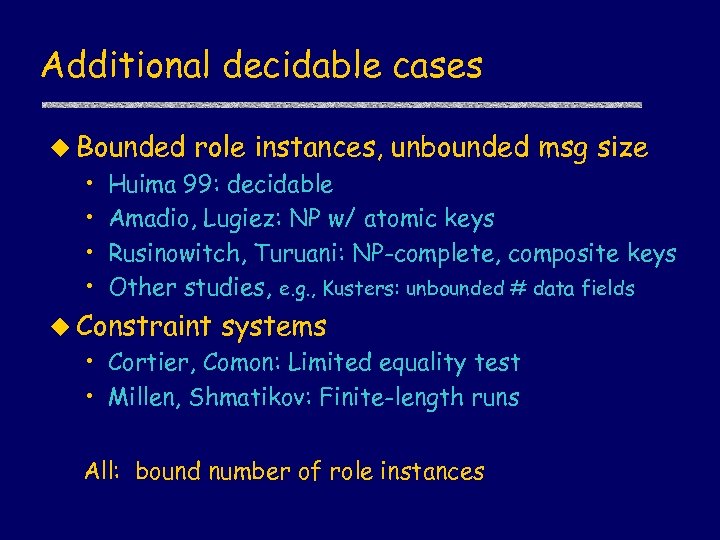

Additional decidable cases

- Bounded role instances, unbounded msg size
  - Huima 99: decidable
  - Amadio, Lugiez: NP w/ atomic keys
  - Rusinowitch, Turuani: NP-complete, composite keys
  - Other studies, e.g., Kusters: unbounded # data fields
- Constraint systems
  - Cortier, Comon: Limited equality test
  - Millen, Shmatikov: Finite-length runs
- All: bound number of role instances

Probabilistic Polynomial-Time Process Calculus for Security Protocol Analysis

J. Mitchell, A. Ramanathan, A. Scedrov, V. Teague

A. P. Lincoln, M. Mitchell

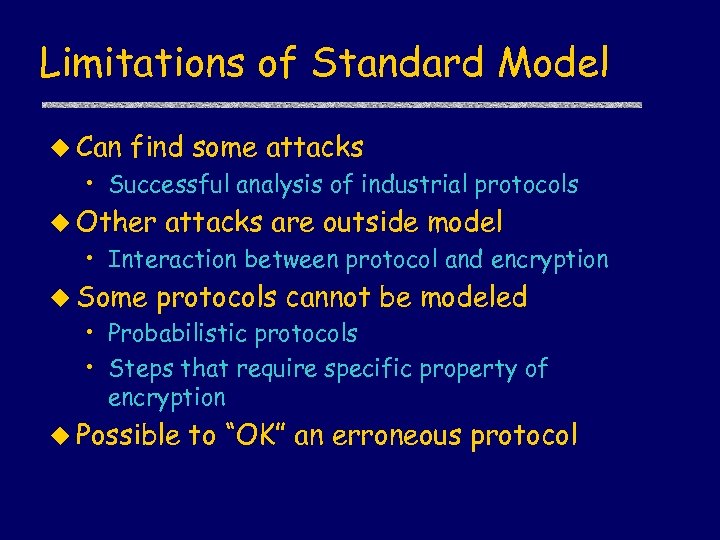

Limitations of Standard Model

- Can find some attacks
  - Successful analysis of industrial protocols
- Other attacks are outside model
  - Interaction between protocol and encryption
- Some protocols cannot be modeled
  - Probabilistic protocols
  - Steps that require specific property of encryption
- Possible to "OK" an erroneous protocol

Non-formal state of the art

- Turing-machine-based analysis
  - Canetti
  - Bellare, Rogaway
  - Bellare, Canetti, Krawczyk
  - others …
- Prove correctness of protocol transformations
  - Example: secure channel -> insecure channel

![Language Approach (slide image)](https://present5.com/presentation/072dce30bf46b53e86eb4962c2c30407/image-70.jpg)

Language Approach [Abadi, Gordon]

- Write protocol in process calculus
- Express security using observational equivalence
  - Standard relation from programming language theory: $P \approx Q$ iff for all contexts $C[\ ]$, same observations about $C[P]$ and $C[Q]$
  - Context (environment) represents adversary
- Use proof rules for $\approx$ to prove security
  - Protocol is secure if no adversary can distinguish it from some idealized version of the protocol

![Probabilistic Poly-time Analysis (slide image)](https://present5.com/presentation/072dce30bf46b53e86eb4962c2c30407/image-71.jpg)

Probabilistic Poly-time Analysis [Lincoln, Mitchell, Scedrov]

- Adopt spi-calculus approach, add probability
- Probabilistic polynomial-time process calculus
  - Protocols use probabilistic primitives
    - Key generation, nonce, probabilistic encryption, ...
  - Adversary may be probabilistic
  - Modal type system guarantees complexity bounds
- Express protocol and specification in calculus
- Study security using observational equivalence
  - Use probabilistic form of process equivalence

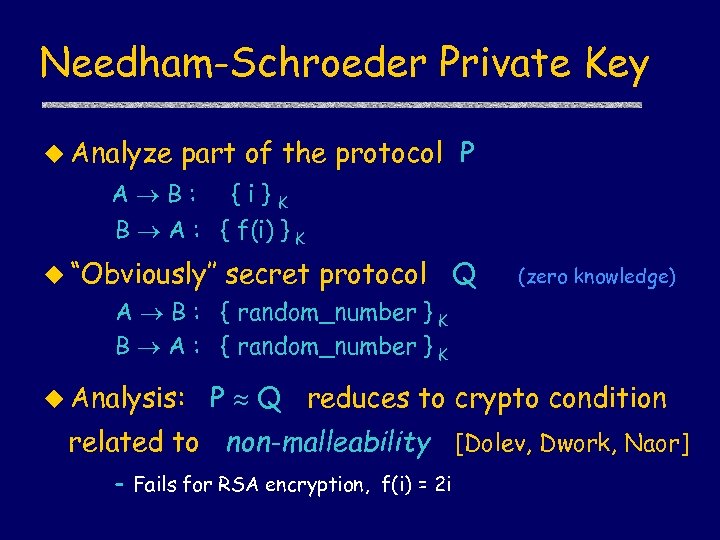

Needham-Schroeder Private Key

- Analyze part of the protocol, $P$
  - $A \rightarrow B: \{ i \}_K$
  - $B \rightarrow A: \{ f(i) \}_K$
- "Obviously" secret protocol $Q$ (zero knowledge)
  - $A \rightarrow B: \{ \mathit{random\_number} \}_K$
  - $B \rightarrow A: \{ \mathit{random\_number} \}_K$
- Analysis: $P \approx Q$ reduces to crypto condition related to non-malleability [Dolev, Dwork, Naor]
  - Fails for RSA encryption, $f(i) = 2i$

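One way to see why RSA with $f(i) = 2i$ is a problem: unpadded ("textbook") RSA is multiplicative, so anyone who sees the encryption of $i$ can produce the encryption of $2i$ without learning $i$. The toy computation below illustrates this under loud assumptions: tiny primes, no padding, and made-up helper names `enc` and `dec`. It is not a model of the protocol itself.

```python
# Toy textbook-RSA parameters (assumption: tiny primes, illustration only).
p, q, e = 61, 53, 17
N = p * q
d = pow(e, -1, (p - 1) * (q - 1))   # private exponent; needs Python 3.8+

def enc(m):
    return pow(m, e, N)

def dec(c):
    return pow(c, d, N)

i = 1234
c = enc(i)                    # what the observer sees on the wire
forged = (c * enc(2)) % N     # built from c and the public key only
print(dec(forged), 2 * i)
```

Because the response $\{ f(i) \}_K$ can be computed from the challenge $\{ i \}_K$ alone, an observer can tell $P$ apart from the random-number protocol $Q$, which is the kind of distinction observational equivalence is meant to rule out.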

Technical Challenges

- Language for prob. poly-time functions
  - Extend Hofmann language with rand
- Replace nondeterminism with probability
  - Otherwise adversary is too strong ...
- Define probabilistic equivalence
  - Related to poly-time statistical tests ...
- Develop specification by equivalence
  - Several examples carried out
- Proof systems for probabilistic equivalence
  - Work in progress

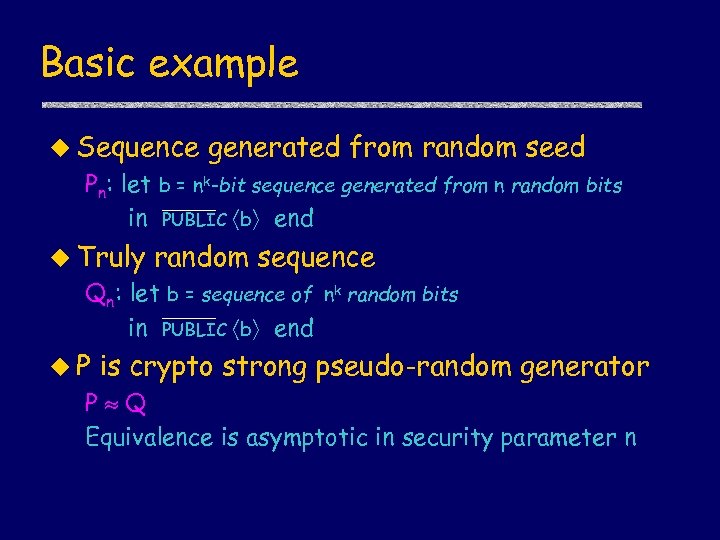

Basic example

- Sequence generated from random seed
  - $P_n$: let b = $n^k$-bit sequence generated from $n$ random bits in PUBLIC b end
- Truly random sequence
  - $Q_n$: let b = sequence of $n^k$ random bits in PUBLIC b end
- $P$ is crypto strong pseudo-random generator
  - $P \approx Q$
  - Equivalence is asymptotic in security parameter $n$

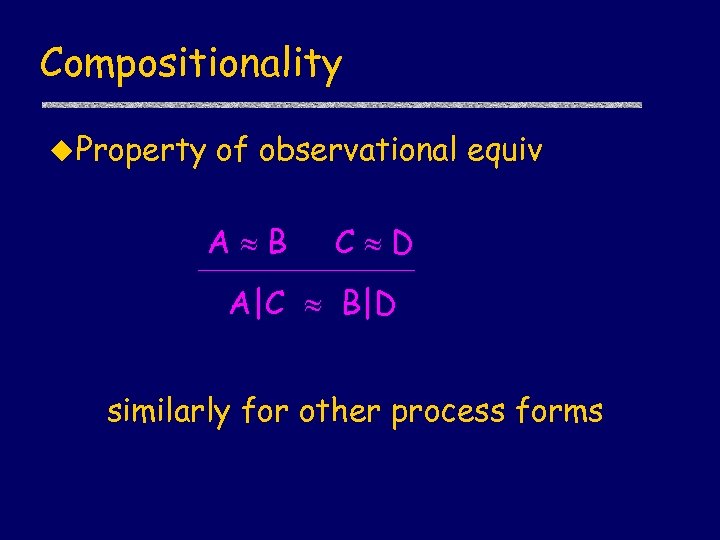

Compositionality

- Property of observational equiv

$$\frac{A \approx B \qquad C \approx D}{A \mid C \;\approx\; B \mid D}$$

- Similarly for other process forms

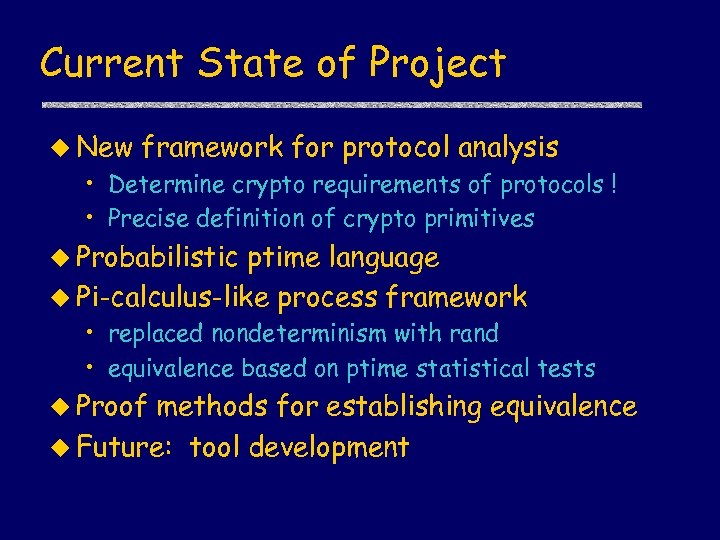

Current State of Project

- New framework for protocol analysis
  - Determine crypto requirements of protocols!
  - Precise definition of crypto primitives
- Probabilistic ptime language
- Pi-calculus-like process framework
  - replaced nondeterminism with rand
  - equivalence based on ptime statistical tests
- Proof methods for establishing equivalence
- Future: tool development

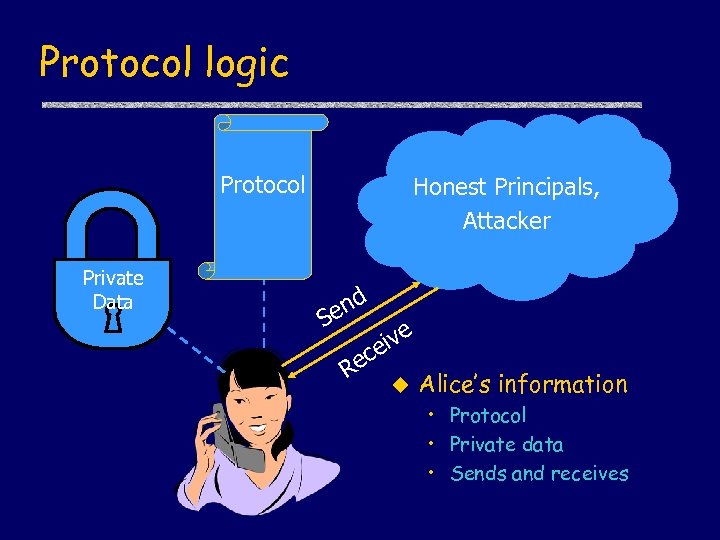

Protocol logic

[Diagram labels: Protocol, Private Data, Honest Principals, Attacker, Send, Receive]

- Alice's information
  - Protocol
  - Private data
  - Sends and receives

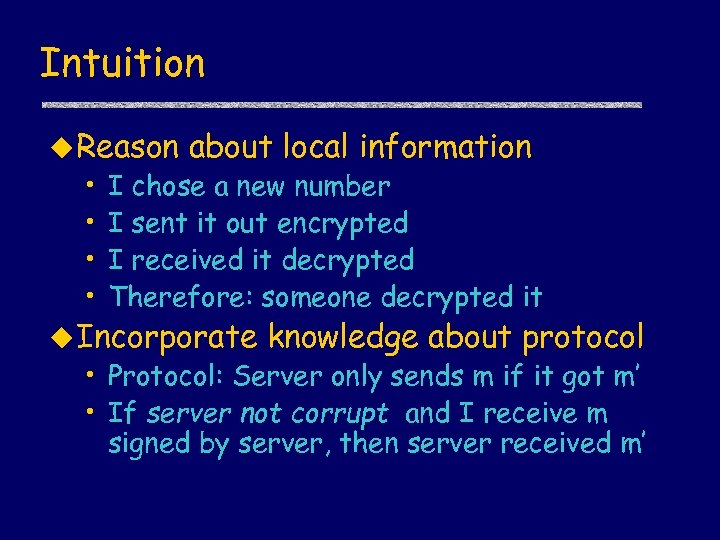

Intuition

- Reason about local information
  - I chose a new number
  - I sent it out encrypted
  - I received it decrypted
  - Therefore: someone decrypted it
- Incorporate knowledge about protocol
  - Protocol: Server only sends m if it got m'
  - If server not corrupt and I receive m signed by server, then server received m'

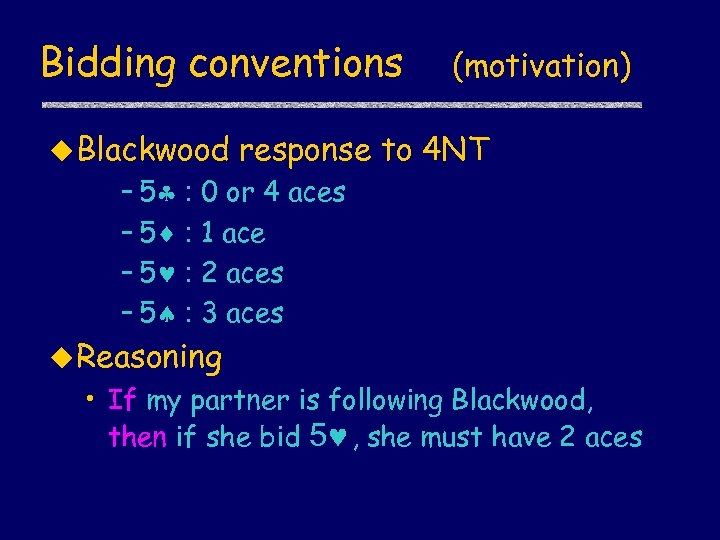

Bidding conventions

- Blackwood (motivation): response to 4NT
  - 5♣: 0 or 4 aces
  - 5♦: 1 ace
  - 5♥: 2 aces
  - 5♠: 3 aces
- Reasoning
  - If my partner is following Blackwood, then if she bid 5♥, she must have 2 aces

![Logical assertions (slide image)](https://present5.com/presentation/072dce30bf46b53e86eb4962c2c30407/image-80.jpg)

Logical assertions

- Modal operator
  - $[\,\text{actions}\,]_P\, \varphi$ - after actions, P reasons $\varphi$
- Predicates in $\varphi$
  - Sent(X, m) - principal X sent message m
  - Created(X, m) - X assembled m from parts
  - Decrypts(X, m) - X has m and key to decrypt m
  - Knows(X, m) - X created m or received msg containing m and has keys to extract m from msg
  - Source(m, X, S) - $Y \neq X$ can only learn m from set S
  - Honest(X) - X follows rules of protocol

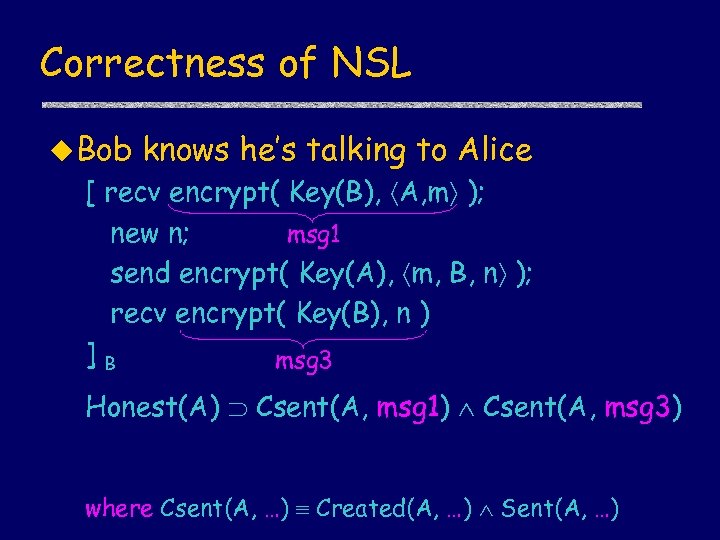

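Correctness of NSL

- Bob knows he's talking to Alice
  - Actions of B: `[ recv encrypt( Key(B), A, m ); new n; send encrypt( Key(A), m, B, n ); recv encrypt( Key(B), n ) ]_B`, where msg1 is the first message received and msg3 is the last
  - Conclusion: $\mathrm{Honest}(A) \supset \mathrm{Csent}(A, \mathit{msg1}) \wedge \mathrm{Csent}(A, \mathit{msg3})$
  - where $\mathrm{Csent}(A, \ldots) \equiv \mathrm{Created}(A, \ldots) \wedge \mathrm{Sent}(A, \ldots)$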

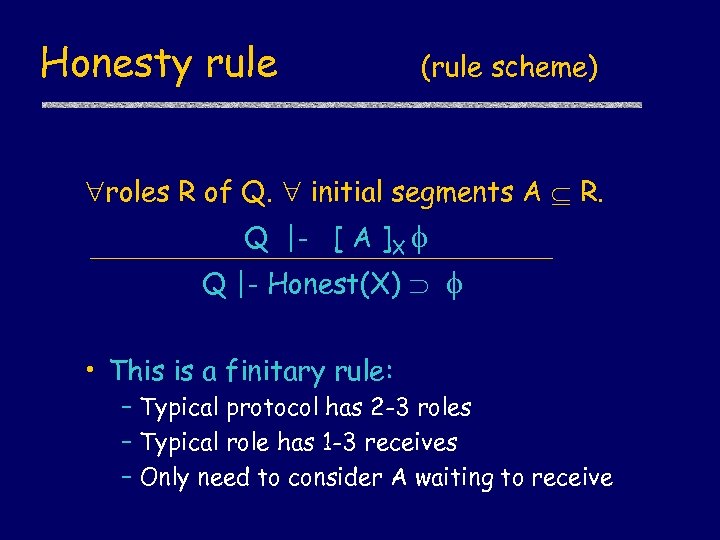

Honesty rule (rule scheme)

$$\frac{\forall \text{ roles } R \text{ of } Q.\ \ \forall \text{ initial segments } A \subseteq R.\ \ Q \vdash [A]_X\, \varphi}{Q \vdash \mathrm{Honest}(X) \supset \varphi}$$

- This is a finitary rule:
  - Typical protocol has 2-3 roles
  - Typical role has 1-3 receives
  - Only need to consider A waiting to receive

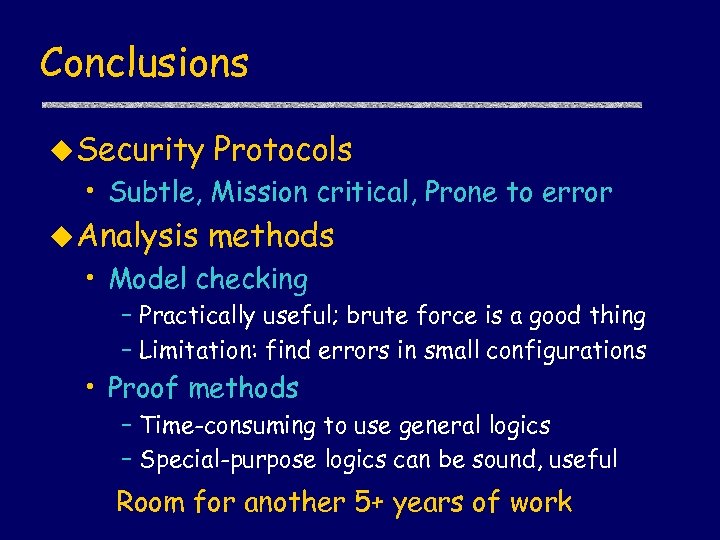

Conclusions

- Security Protocols
  - Subtle, Mission critical, Prone to error
- Analysis methods
  - Model checking
    - Practically useful; brute force is a good thing
    - Limitation: find errors in small configurations
  - Proof methods
    - Time-consuming to use general logics
    - Special-purpose logics can be sound, useful
- Room for another 5+ years of work

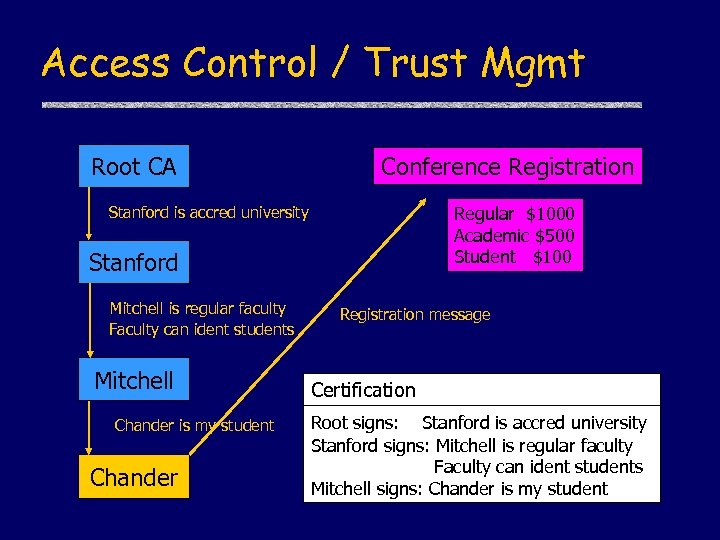

Access Control / Trust Mgmt

[Diagram: Root CA, Stanford, Mitchell, Chander; Chander sends a registration message to Conference Registration]

- Conference registration fees
  - Regular $1000
  - Academic $500
  - Student $100
- Certification
  - Root signs: Stanford is accred university
  - Stanford signs: Mitchell is regular faculty; Faculty can ident students
  - Mitchell signs: Chander is my student

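The certification chain can be read as a small policy evaluation. The following is a sketch under several assumptions that are not on the slide: statements are plain tuples with invented names, signature checking is omitted entirely, and faculty are assumed to pay the Academic rate. It is meant to show how each certificate is only trusted because of the one above it.

```python
# Signed statements from the slide, written as (issuer, statement) pairs.
# Assumption: signatures are taken as already verified.
CERTS = [
    ("Root", ("accredited_university", "Stanford")),
    ("Stanford", ("faculty", "Mitchell")),
    ("Stanford", ("faculty_can_identify_students",)),
    ("Mitchell", ("student", "Chander")),
]
FEES = {"Regular": 1000, "Academic": 500, "Student": 100}

def fee(person, certs=CERTS, root="Root"):
    """Return the registration fee that the certificate chain justifies."""
    universities = {s[1] for i, s in certs
                    if i == root and s[0] == "accredited_university"}
    faculty = {s[1]: i for i, s in certs
               if i in universities and s[0] == "faculty"}
    delegating = {i for i, s in certs
                  if i in universities and s[0] == "faculty_can_identify_students"}
    for issuer, s in certs:
        # A student claim counts only if a faculty member of a delegating,
        # accredited university issued it.
        if s == ("student", person) and faculty.get(issuer) in delegating:
            return FEES["Student"]
    if person in faculty:
        return FEES["Academic"]      # assumption: faculty get the Academic rate
    return FEES["Regular"]

print(fee("Chander"), fee("Mitchell"), fee("Eve"))
```

Dropping the Root certificate breaks the chain: `fee("Chander", certs=CERTS[1:])` falls back to the regular fee, since nothing then vouches for Stanford.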

[Chart: axes "Users * Importance" and "System complexity * Property complexity"; labels: "Formal methods", "Not feasible", "Not worth the effort", "Worthwhile"]

System-Property Tradeoff

[Chart: axes "Sophistication of attacks" (Low to High) and "Protocol complexity" (Low to High); methods plotted: Hand proofs, Poly-time calculus, Multiset rewriting with $\exists$, Spi-calculus, Athena, Paulson, NRL, Bolignano, BAN logic, Protocol logic, Model checking, FDR, Mur$\varphi$]

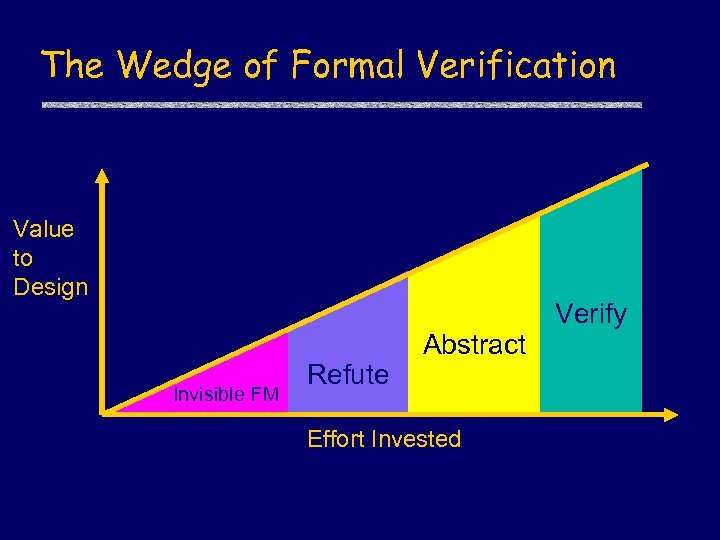

The Wedge of Formal Verification

[Chart: axes "Value to Design" and "Effort Invested"; labels: Invisible FM, Refute, Abstract, Verify]

Big Picture

- Biggest problem in CS
  - Produce good software efficiently
- Best tool
  - The computer
- Therefore
  - Future improvements in computer science/industry depend on our ability to automate software design, development, and quality control processes
