16b81041492c8cdbd06010ffe2b230a0.ppt

- Number of slides: 81

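For the Korean Institute of Information Scientists and Engineers (KIISE) Pattern Recognition Winter School: Introduction to Pattern Recognition. February 2011. Jin-Hyung Kim, Department of Computer Science, KAIST. http://ai.kaist.ac.kr/~jkim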

What is Pattern Recognition?
- A pattern is an object, process, or event that can be given a name.
- Pattern recognition: the assignment of a physical object or event to one of several prespecified categories (Duda & Hart).
- A subfield of artificial intelligence: human intelligence is based on pattern recognition.

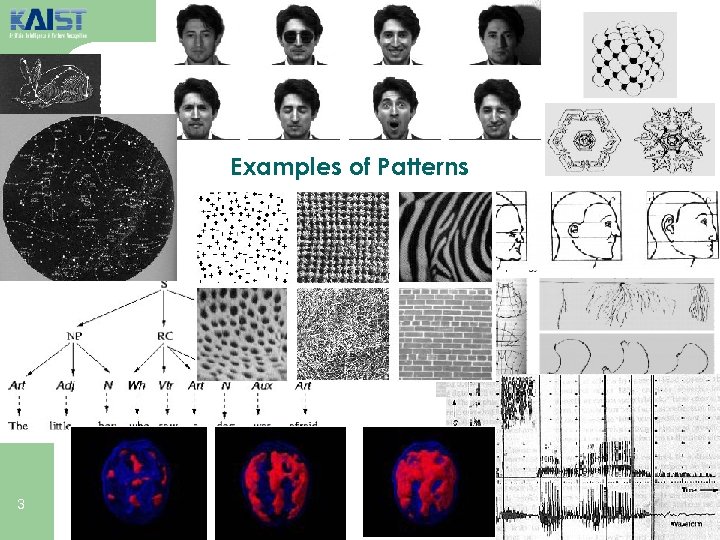

Examples of Patterns

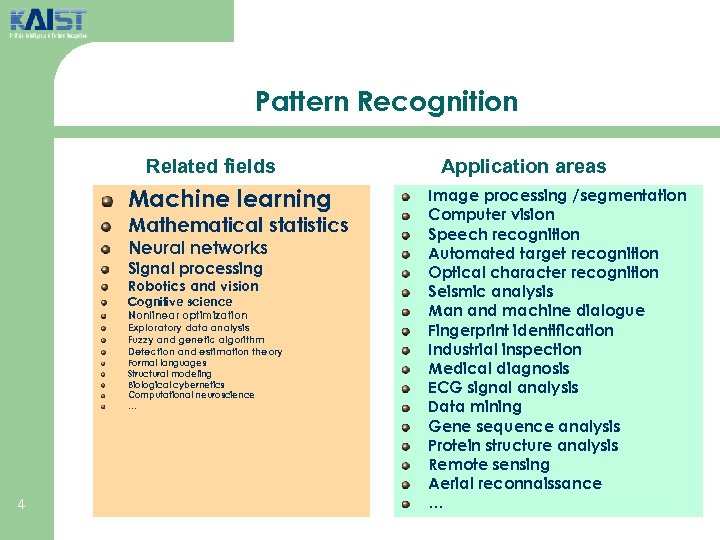

Pattern Recognition
- Related fields: machine learning, mathematical statistics, neural networks, signal processing, robotics and vision, cognitive science, nonlinear optimization, exploratory data analysis, fuzzy and genetic algorithms, detection and estimation theory, formal languages, structural modeling, biological cybernetics, computational neuroscience, …
- Application areas: image processing/segmentation, computer vision, speech recognition, automated target recognition, optical character recognition, seismic analysis, man-machine dialogue, fingerprint identification, industrial inspection, medical diagnosis, ECG signal analysis, data mining, gene sequence analysis, protein structure analysis, remote sensing, aerial reconnaissance, …

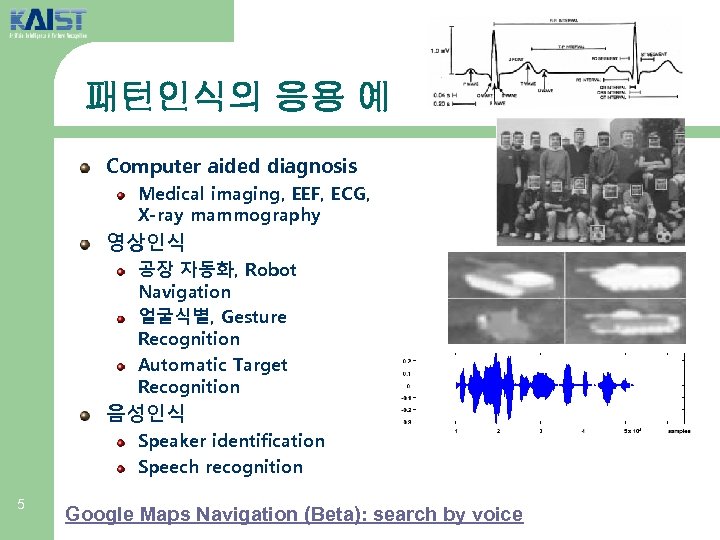

Examples of PR Applications
- Computer-aided diagnosis: medical imaging, EEG, ECG, X-ray mammography
- Image recognition: factory automation, robot navigation, face identification, gesture recognition, automatic target recognition
- Speech recognition: speaker identification, speech recognition (e.g., Google Maps Navigation (Beta): search by voice)

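Applications of PR: Biometric Recognition
- Identifying people by invariant biometric characteristics
- Static patterns: fingerprint, iris, face, palm print, …, DNA
- Dynamic patterns: signature, voiceprint, typing pattern
- Uses: access control, e-commerce authentication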

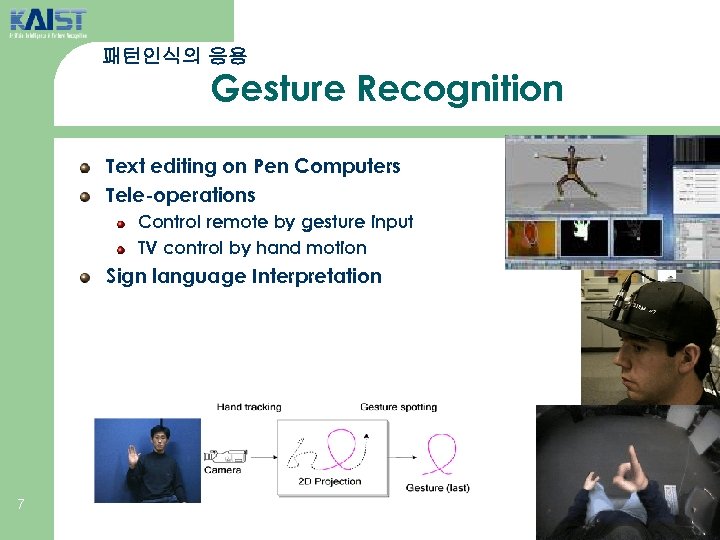

Applications of PR: Gesture Recognition
- Text editing on pen computers
- Tele-operation: remote control by gesture input, TV control by hand motion
- Sign language interpretation

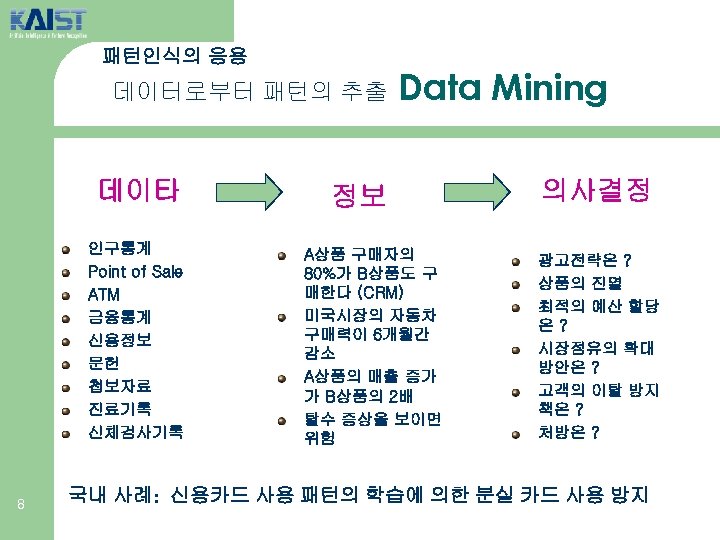

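Applications of PR: Data Mining (extracting patterns from data)
- Data: demographics, point-of-sale records, ATM records, financial statistics, credit information, documents, intelligence reports, medical records, physical examination records
- Information (examples): 80% of the buyers of product A also buy product B (CRM); car purchasing power in the US market declined over six months; sales growth of product A is twice that of product B; showing symptoms of dehydration indicates danger
- Decision making: Which advertising strategy? How should products be displayed? What is the optimal budget allocation? How can market share be expanded? How can customer churn be prevented? What treatment should be prescribed?
- Domestic (Korean) case: preventing the use of lost credit cards by learning card usage patterns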

Applications of PR: e-books, tablet PCs, iPad, smartphones

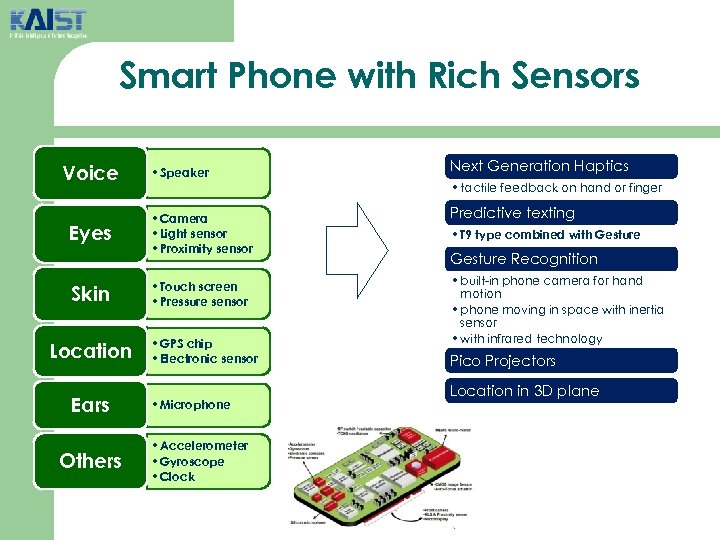

Smartphone with Rich Sensors
- Voice: speaker
- Eyes: camera, light sensor, proximity sensor
- Skin: touch screen, pressure sensor
- Location: GPS chip, electronic sensor
- Ears: microphone
- Others: accelerometer, gyroscope, clock
Next generation:
- Haptics: tactile feedback on the hand or finger
- Predictive texting: T9 type
- Combined with gesture recognition: built-in phone camera for hand motion; phone moving in space with an inertial sensor; infrared technology
- Pico projectors
- Location in 3D space

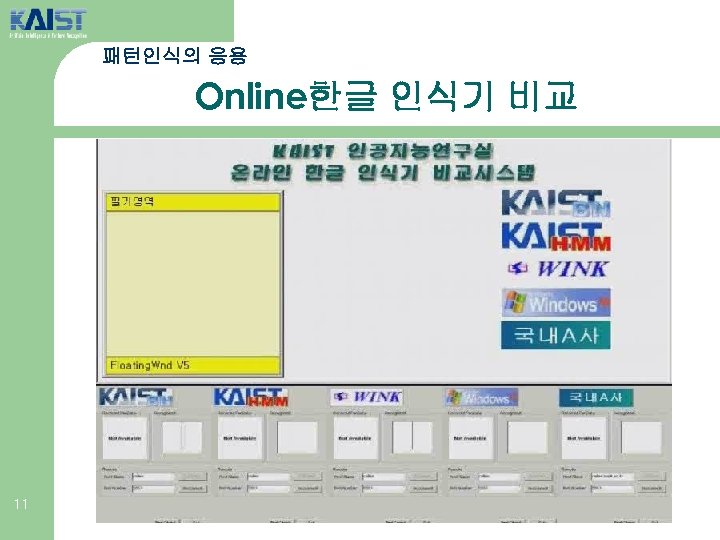

Applications of PR: comparison of online Hangul (Korean script) recognizers

Applications of PR: KAIST Math Expression Recognizer (demo)

Applications of PR: Math Tutor-SE (demo)

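Applications of PR: recognition of historical documents (古文書 認識), the Seungjeongwon Ilgi (承政院日記, Diaries of the Royal Secretariat)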

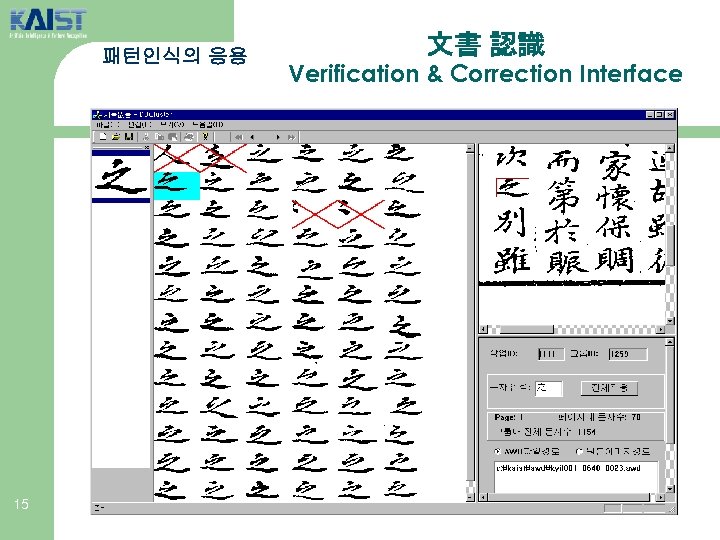

Applications of PR: document recognition (文書 認識) with a verification and correction interface

Applications of PR: mail sorter

Applications of PR: scene text recognition

Applications of PR: autonomous land vehicle (DARPA Grand Challenge contest) http://www.youtube.com/watch?v=yQ5U8suTUw0

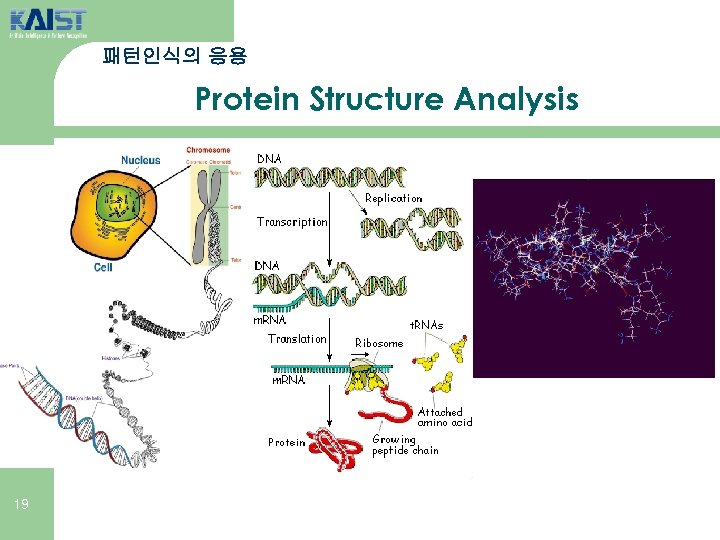

Applications of PR: protein structure analysis

Applications of PR: protein structure analysis (continued)

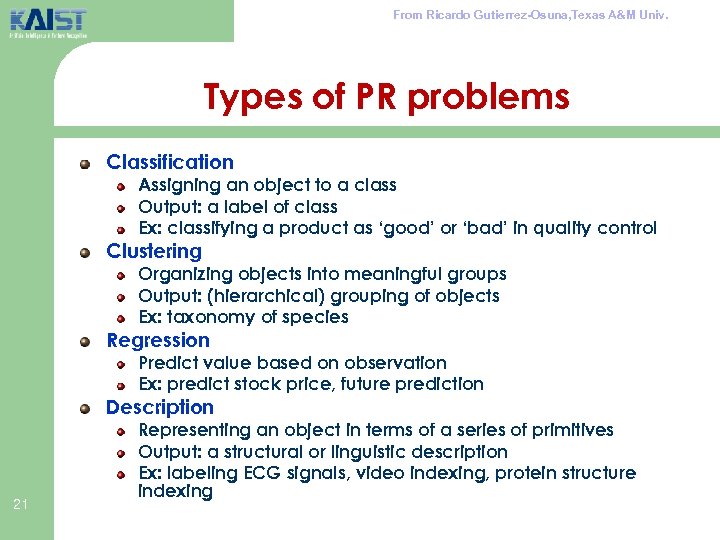

Types of PR Problems (from Ricardo Gutierrez-Osuna, Texas A&M Univ.)
- Classification: assigning an object to a class. Output: a class label. Ex: classifying a product as 'good' or 'bad' in quality control.
- Clustering: organizing objects into meaningful groups. Output: a (hierarchical) grouping of objects. Ex: a taxonomy of species.
- Regression: predicting a value based on observations. Ex: predicting stock prices, forecasting.
- Description: representing an object in terms of a series of primitives. Output: a structural or linguistic description. Ex: labeling ECG signals, video indexing, protein structure indexing.

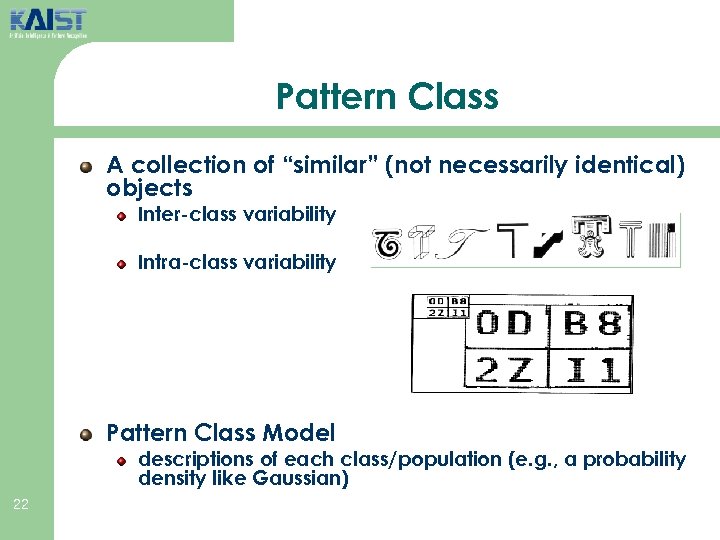

Pattern Class
- A collection of "similar" (not necessarily identical) objects
- Inter-class variability and intra-class variability
- Pattern class model: a description of each class/population (e.g., a probability density such as a Gaussian)

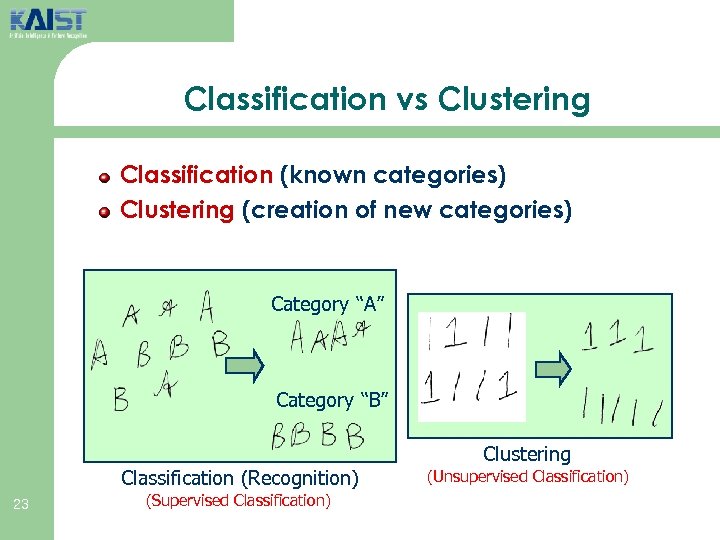

Classification vs. Clustering
- Classification (recognition, supervised classification): known categories, e.g., category "A" and category "B"
- Clustering (unsupervised classification): creation of new categories

Pattern Recognition: Key Objectives
- Process the sensed data to eliminate noise (data vs. noise).
- Hypothesize models that describe each class population; we may then recover the process that generated the patterns.
- Choose the best-fitting model for the given sensed data and assign the class label associated with that model.

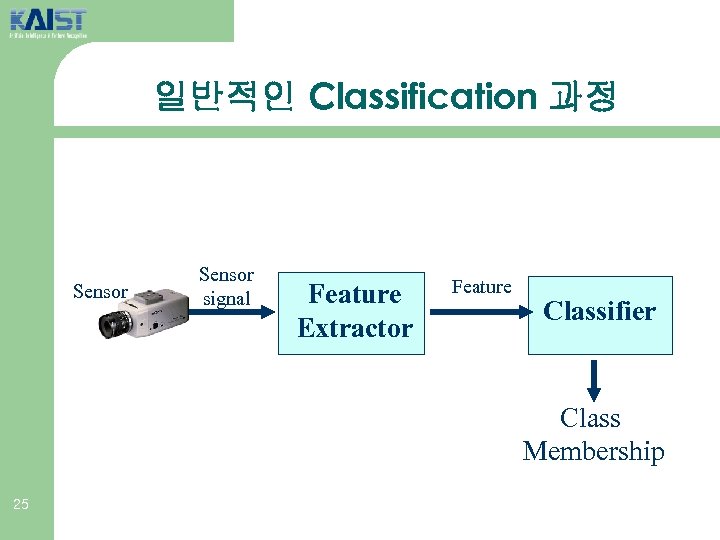

General Classification Process: sensor signal → feature extractor → feature → classifier → class membership

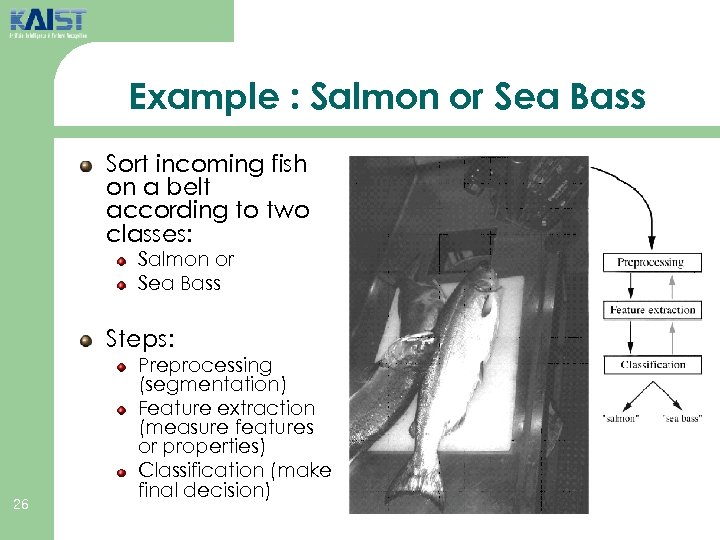
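The pipeline above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the course's implementation: `extract_features` (mean and standard deviation of a raw signal) and `NearestMeanClassifier` are hypothetical stand-ins for a real feature extractor and classifier, and the training signals are synthetic.

```python
import numpy as np


def extract_features(signal: np.ndarray) -> np.ndarray:
    """Hypothetical feature extractor: summarize a raw sensor signal
    by its mean and standard deviation."""
    return np.array([signal.mean(), signal.std()])


class NearestMeanClassifier:
    """Assign a feature vector to the class whose training mean is closest."""

    def fit(self, features: np.ndarray, labels: np.ndarray) -> "NearestMeanClassifier":
        self.classes_ = np.unique(labels)
        self.means_ = np.array([features[labels == c].mean(axis=0) for c in self.classes_])
        return self

    def predict(self, features: np.ndarray) -> np.ndarray:
        # Euclidean distance from every sample (rows) to every class mean (columns)
        dists = np.linalg.norm(features[:, None, :] - self.means_[None, :, :], axis=2)
        return self.classes_[dists.argmin(axis=1)]


def recognize(signals, classifier: NearestMeanClassifier) -> np.ndarray:
    """Sensor signal -> feature extractor -> feature -> classifier -> class membership."""
    features = np.array([extract_features(s) for s in signals])
    return classifier.predict(features)


# Synthetic training signals: class 0 hovers around 0, class 1 around 5
rng = np.random.default_rng(0)
train_signals = [rng.normal(0, 1, 50) for _ in range(10)] + [rng.normal(5, 1, 50) for _ in range(10)]
train_labels = np.array([0] * 10 + [1] * 10)
train_features = np.array([extract_features(s) for s in train_signals])
clf = NearestMeanClassifier().fit(train_features, train_labels)
predicted = recognize([np.full(50, 0.1), np.full(50, 4.9)], clf)
```

Keeping the feature extractor and the classifier as separate pieces mirrors the diagram: either stage can be swapped without touching the other.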

Example: Salmon or Sea Bass
Sort incoming fish on a conveyor belt into two classes: salmon or sea bass. Steps:
- Preprocessing (segmentation)
- Feature extraction (measure features or properties)
- Classification (make the final decision)

Sea Bass vs. Salmon (by image). Candidate features: length, lightness, width, number and shape of fins, position of the mouth, …

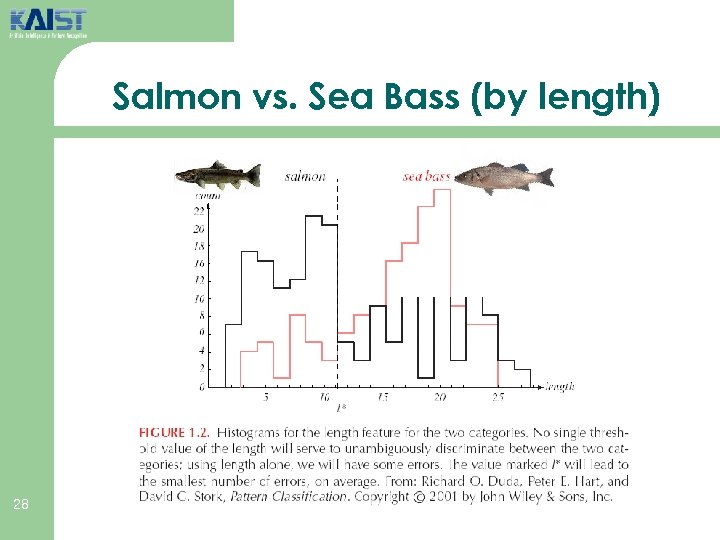

Salmon vs. Sea Bass (by length)

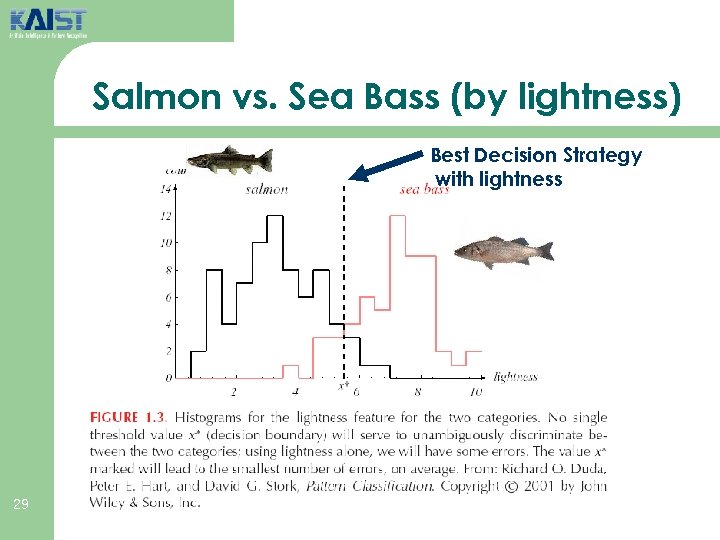

Salmon vs. Sea Bass (by lightness): best decision strategy with lightness

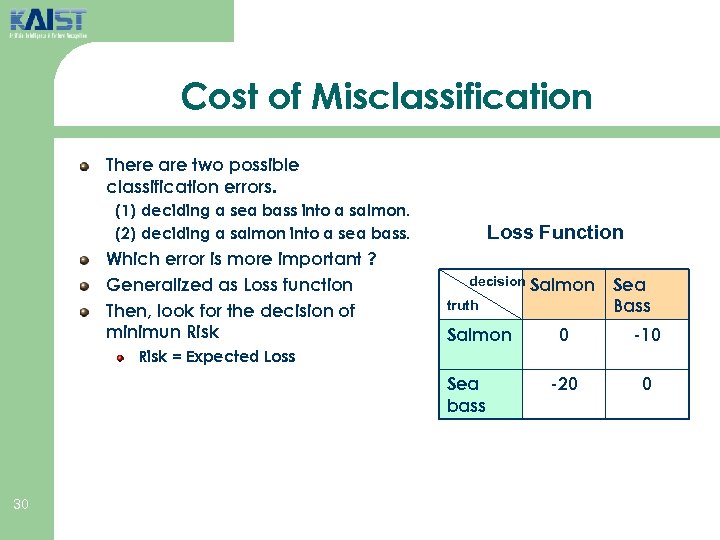
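Finding the "best decision strategy" on a single feature amounts to choosing a threshold. A minimal sketch, assuming the rule "decide sea bass if lightness > t" and minimizing the number of training errors by exhaustive search; the lightness values below are made up for illustration, not measurements.

```python
import numpy as np


def best_threshold(x: np.ndarray, y: np.ndarray) -> tuple[float, int]:
    """Exhaustively search a single threshold t on a 1-D feature.

    Decision rule: predict class 1 if x > t, else class 0.
    Returns the threshold with the fewest training errors and that error count.
    """
    values = np.unique(x)  # sorted distinct feature values
    # Candidates: below all values, midpoints between neighbours, above all values
    candidates = np.concatenate(([values[0] - 1.0], (values[:-1] + values[1:]) / 2, [values[-1] + 1.0]))
    errors = [int(np.sum((x > t).astype(int) != y)) for t in candidates]
    best = int(np.argmin(errors))  # first minimum wins on ties
    return float(candidates[best]), errors[best]


# Made-up lightness values; label 0 = salmon, 1 = sea bass (assumed lighter on average)
lightness = np.array([2.0, 3.0, 4.0, 5.0, 4.5, 6.0, 7.0, 8.0])
labels = np.array([0, 0, 0, 0, 1, 1, 1, 1])
threshold, n_errors = best_threshold(lightness, labels)
```

Because the two classes overlap, no threshold is error-free here; the search returns t = 4.25 with one training error. This counts every error equally, which the next slide questions.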

Cost of Misclassification
There are two possible classification errors: (1) deciding that a sea bass is a salmon, and (2) deciding that a salmon is a sea bass. Which error is more important? This is generalized as a loss function; we then look for the decision with minimum risk, where risk = expected loss.

Loss function (values as given on the slide):

| truth \ decision | Salmon | Sea Bass |
|---|---|---|
| Salmon | 0 | -10 |
| Sea Bass | -20 | 0 |

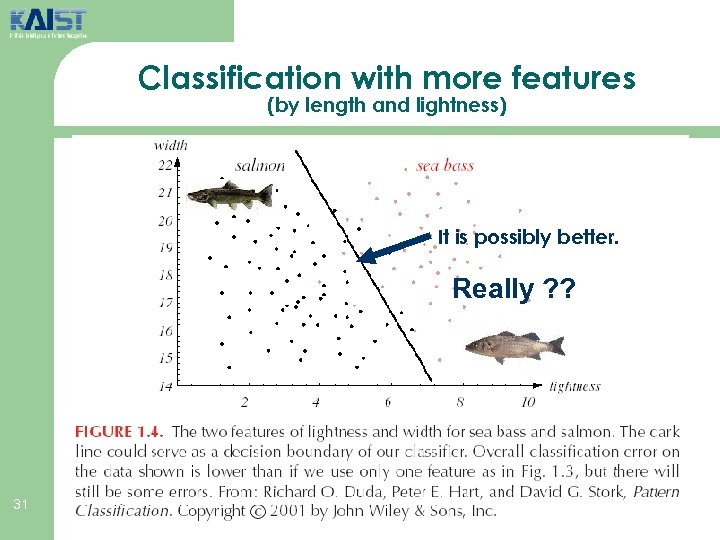
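The minimum-risk rule can be sketched as follows. The slide's table uses negative entries (0, -10, -20, 0); here losses are written as positive costs, and which error costs 10 and which costs 20 is an assumption chosen for illustration. The conditional risk of each decision is its loss row weighted by the posterior probabilities.

```python
import numpy as np

CLASSES = ["salmon", "sea bass"]

# LOSS[i, j] = cost of deciding CLASSES[i] when the true class is CLASSES[j].
# Hypothetical costs: calling a sea bass "salmon" is assumed twice as costly
# as the reverse error; correct decisions cost nothing.
LOSS = np.array([[0.0, 20.0],
                 [10.0, 0.0]])


def min_risk_decision(posteriors):
    """Return the decision with minimum conditional risk and all risks.

    R(decide i | x) = sum_j LOSS[i, j] * P(class j | x)
    """
    risks = LOSS @ np.asarray(posteriors, dtype=float)
    return CLASSES[int(np.argmin(risks))], risks


# Salmon is the more probable class, yet the costlier error tips the decision
decision, risks = min_risk_decision([0.6, 0.4])
```

With posteriors (0.6, 0.4) the risks are 8 for "salmon" and 6 for "sea bass", so the minimum-risk decision differs from the minimum-error one. With a 0/1 loss the two rules coincide.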

Classification with more features (by length and lightness): possibly better. Really??

How Many Features, and Which?
- The choice of features determines the success or failure of a classification task.
- For a given feature, we may compute the best decision strategy from the (training) data. This is called training, parameter adaptation, or learning (machine learning issues).

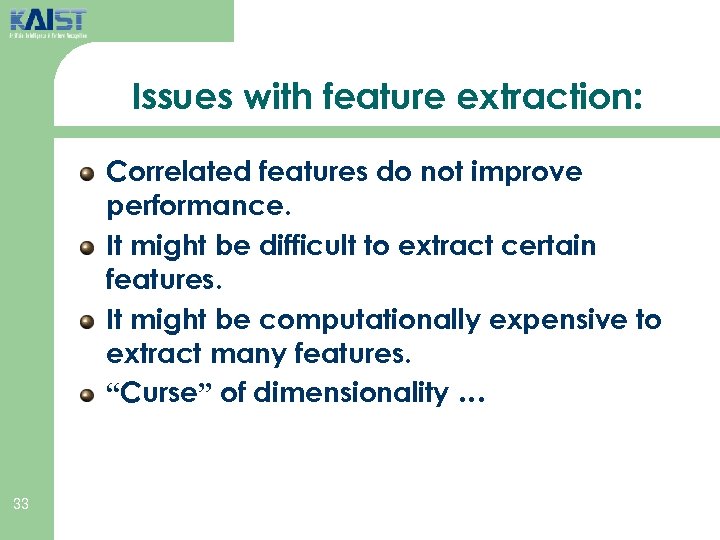

Issues with feature extraction:
- Correlated features do not improve performance.
- Some features may be difficult to extract.
- Extracting many features may be computationally expensive.
- The "curse" of dimensionality, …

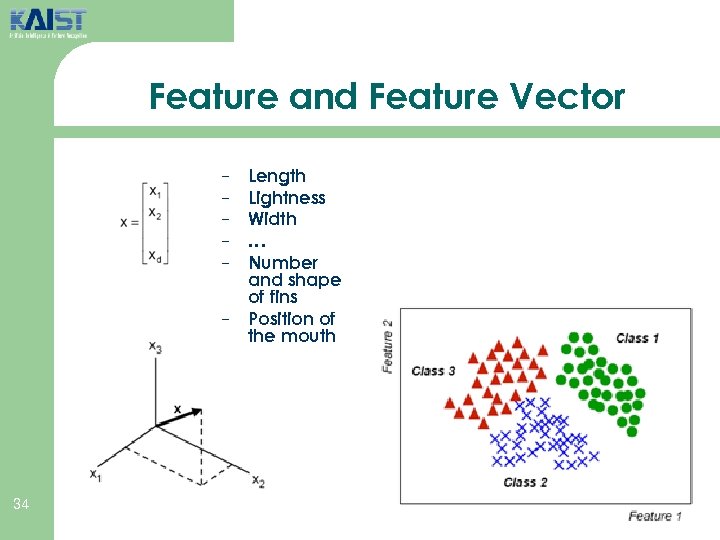

Feature and Feature Vector: length, lightness, width, …, number and shape of fins, position of the mouth

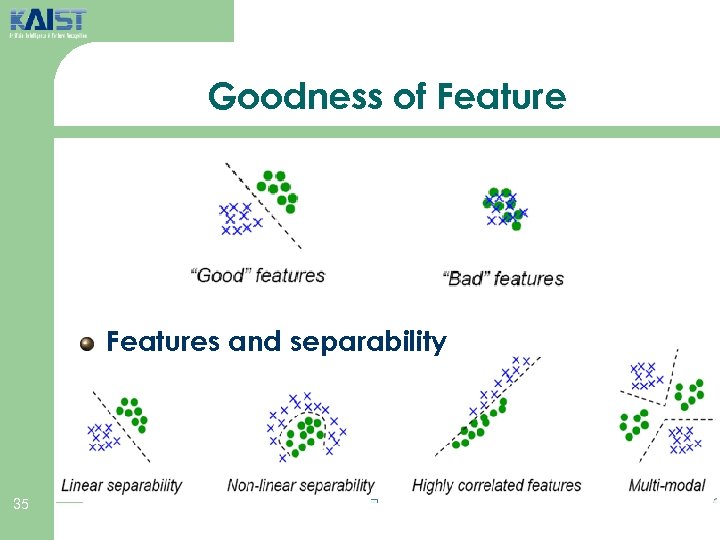

Goodness of Features and Separability

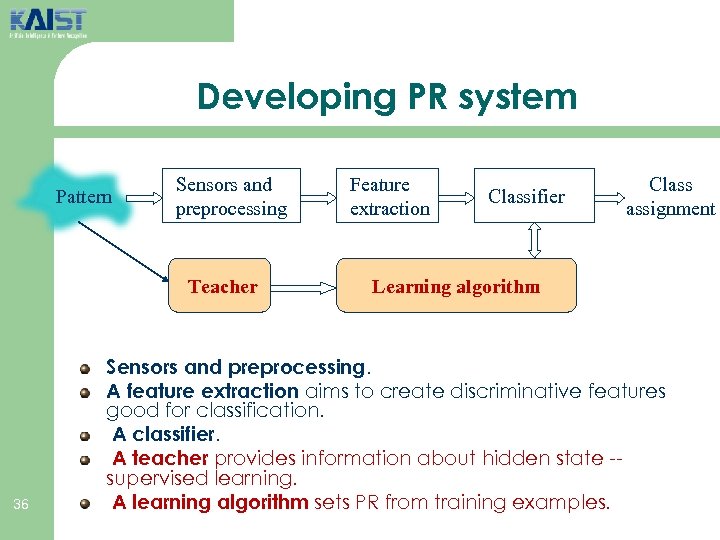

Developing a PR System
(Diagram components: pattern, sensors and preprocessing, feature extraction, classifier, class assignment, teacher, learning algorithm.)
- Sensors and preprocessing.
- Feature extraction aims to create discriminative features that are good for classification.
- A classifier.
- A teacher provides information about the hidden state (supervised learning).
- A learning algorithm sets up the PR system from training examples.

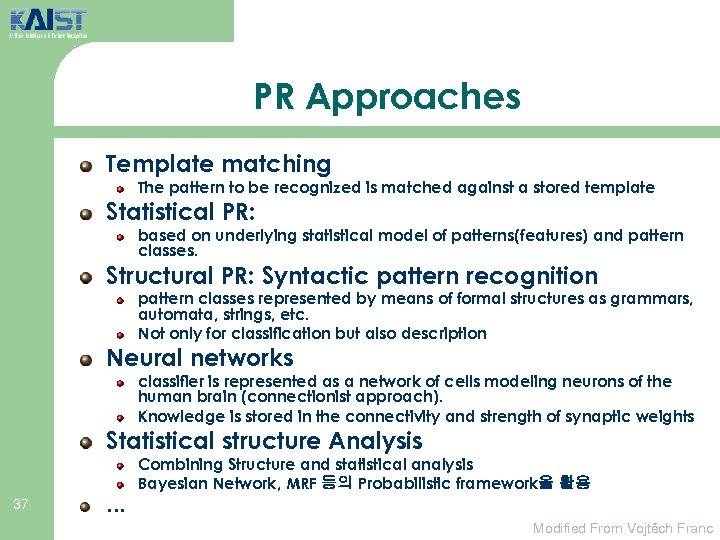

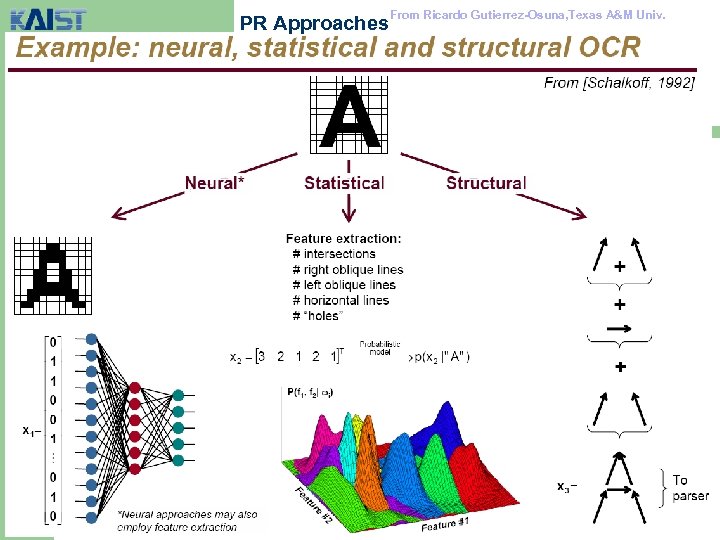

PR Approaches (modified from Vojtěch Franc)
- Template matching: the pattern to be recognized is matched against a stored template.
- Statistical PR: based on an underlying statistical model of patterns (features) and pattern classes.
- Structural PR (syntactic pattern recognition): pattern classes are represented by formal structures such as grammars, automata, and strings; used not only for classification but also for description.
- Neural networks: the classifier is represented as a network of cells modeling the neurons of the human brain (connectionist approach); knowledge is stored in the connectivity and strength of the synaptic weights.
- Statistical structure analysis: combining structural and statistical analysis using probabilistic frameworks such as Bayesian networks and MRFs (Markov random fields), …

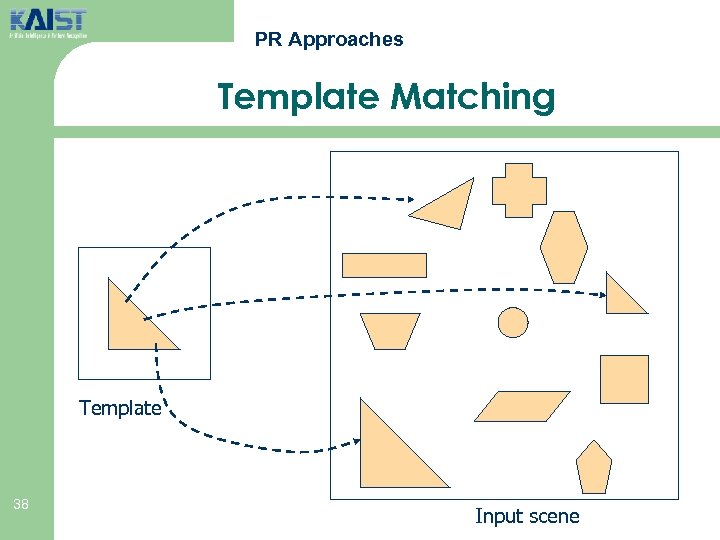

PR Approaches: Template Matching (template and input scene)

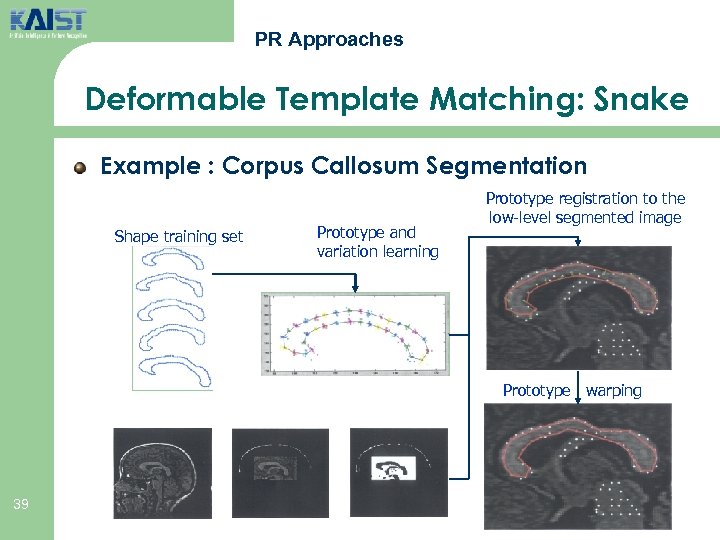
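A minimal sketch of template matching, assuming grayscale images as NumPy arrays and the sum of squared differences (SSD) as the match score; the slide does not specify a score, so SSD is one simple choice among several.

```python
import numpy as np


def match_template(scene: np.ndarray, template: np.ndarray) -> tuple[tuple[int, int], float]:
    """Slide the template over every position of the scene and return the
    top-left (row, col) with the smallest sum of squared differences (SSD)."""
    H, W = scene.shape
    h, w = template.shape
    best_pos, best_ssd = (0, 0), np.inf
    for r in range(H - h + 1):
        for c in range(W - w + 1):
            ssd = float(np.sum((scene[r:r + h, c:c + w] - template) ** 2))
            if ssd < best_ssd:
                best_pos, best_ssd = (r, c), ssd
    return best_pos, best_ssd


template = np.array([[1.0, 2.0],
                     [3.0, 4.0]])
scene = np.zeros((6, 6))
scene[3:5, 2:4] = template  # hide the template at row 3, column 2
position, score = match_template(scene, template)
```

SSD is sensitive to brightness and contrast changes; normalized cross-correlation is a common alternative when lighting varies. Rigid matching also fails when the object deforms, which motivates the deformable templates on the next slide.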

PR Approaches: Deformable Template Matching (Snake)
Example: corpus callosum segmentation
- Shape training set
- Prototype and variation learning
- Prototype registration to the low-level segmented image
- Prototype warping

PR Approaches (from Ricardo Gutierrez-Osuna, Texas A&M Univ.)

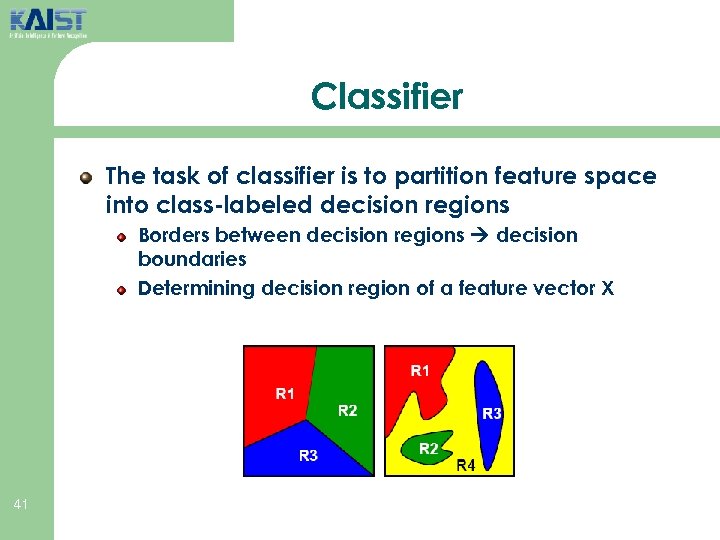

Classifier
- The task of a classifier is to partition the feature space into class-labeled decision regions.
- The borders between decision regions are the decision boundaries.
- Classification means determining the decision region of a feature vector X.

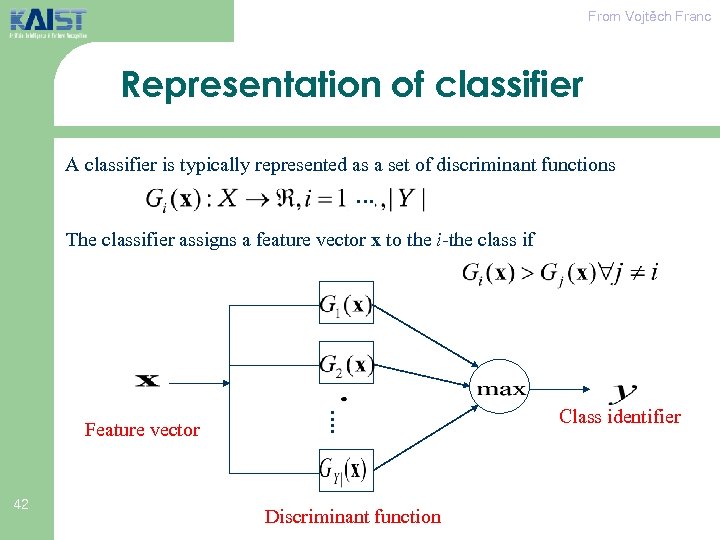

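Representation of a Classifier (from Vojtěch Franc)
A classifier is typically represented as a set of discriminant functions $g_i(\mathbf{x})$, $i = 1, \dots, c$. The classifier assigns a feature vector $\mathbf{x}$ to the $i$-th class if $g_i(\mathbf{x}) > g_j(\mathbf{x})$ for all $j \neq i$.
(Diagram labels: feature vector, discriminant functions, class identifier.)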

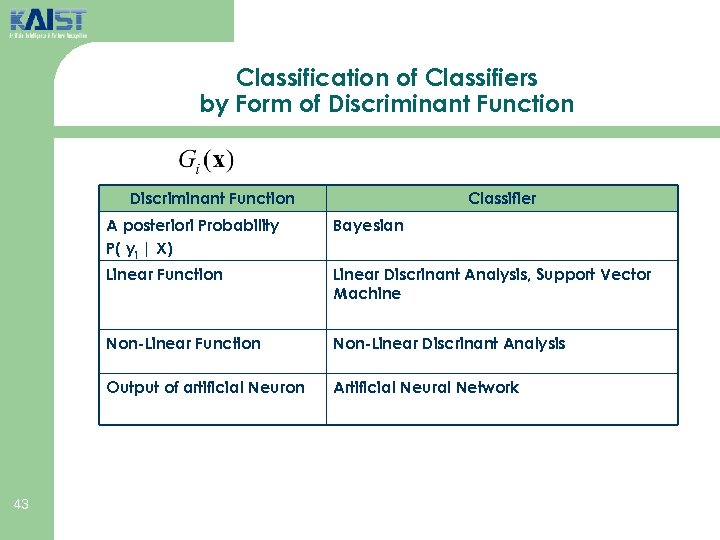
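The "largest discriminant wins" rule is a one-line argmax. A sketch assuming three hypothetical linear discriminant functions $g_i(\mathbf{x}) = \mathbf{w}_i \cdot \mathbf{x} + b_i$; the weights are invented for illustration, and classes are indexed from 0 in the code.

```python
import numpy as np

# Hypothetical linear discriminant functions g_i(x) = w_i . x + b_i for three classes
W = np.array([[1.0, 0.0],
              [0.0, 1.0],
              [-1.0, -1.0]])
b = np.zeros(3)


def discriminants(x: np.ndarray) -> np.ndarray:
    """Evaluate all discriminant functions g_0(x), ..., g_{c-1}(x) at once."""
    return W @ x + b


def classify(x) -> int:
    """Assign x to class i if g_i(x) > g_j(x) for all j != i
    (np.argmax breaks exact ties in favour of the lowest index)."""
    return int(np.argmax(discriminants(np.asarray(x, dtype=float))))
```

For example, `classify([2, 1])` gives 0 because the scores are (2, 1, -3). Any classifier on the following slides (Bayesian, linear, neural) fits this template; only the form of $g_i$ changes.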

Classification of Classifiers by the Form of the Discriminant Function
- A posteriori probability $P(y_i \mid X)$: Bayesian classifier
- Linear function: linear discriminant analysis, support vector machine
- Nonlinear function: nonlinear discriminant analysis
- Output of an artificial neuron: artificial neural network

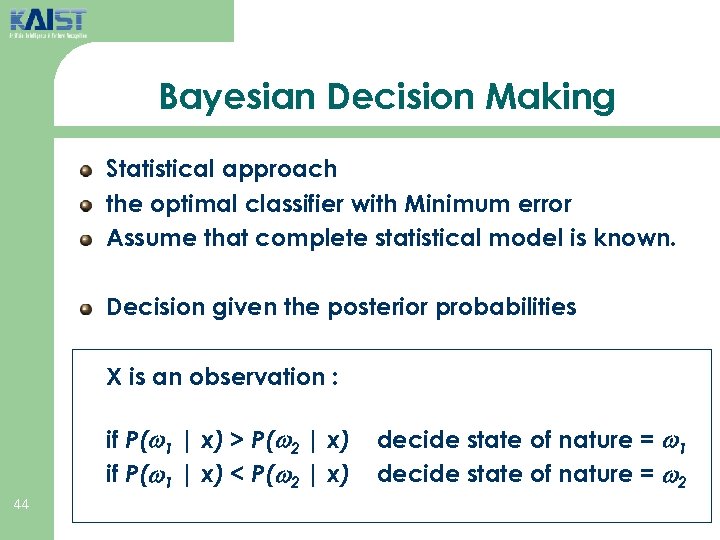

Bayesian Decision Making
- Statistical approach: the optimal classifier, with minimum error
- Assumes that the complete statistical model is known
- Decision given the posterior probabilities, where $x$ is an observation: if $P(\omega_1 \mid x) > P(\omega_2 \mid x)$, decide state of nature $\omega_1$; if $P(\omega_1 \mid x) < P(\omega_2 \mid x)$, decide state of nature $\omega_2$.

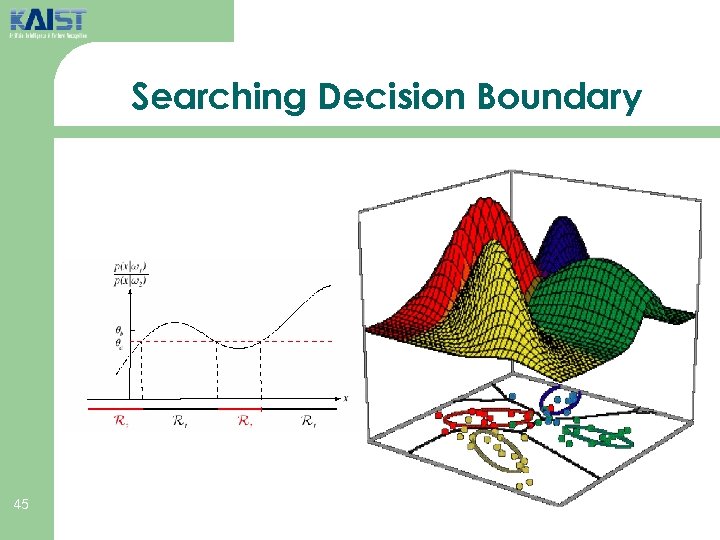

Searching for the Decision Boundary

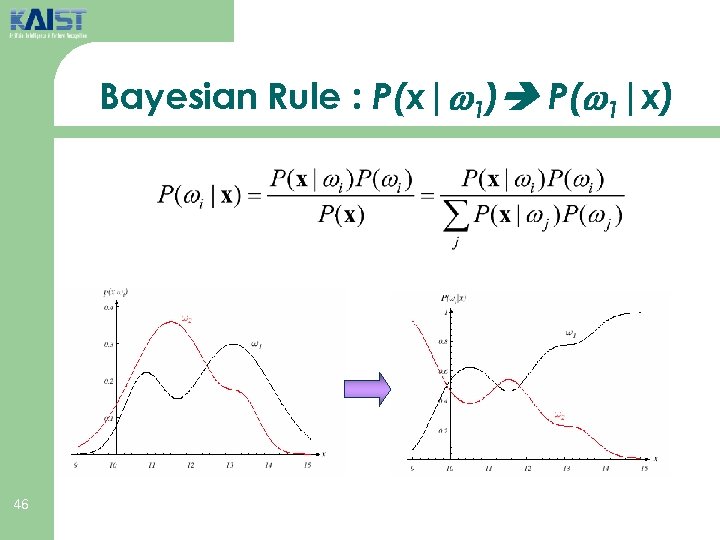

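Bayes Rule: $P(\omega_j \mid x) = \dfrac{p(x \mid \omega_j)\,P(\omega_j)}{p(x)}$, where $p(x) = \sum_k p(x \mid \omega_k)\,P(\omega_k)$ (figure: class-conditional density $p(x \mid \omega_1)$ and posterior $P(\omega_1 \mid x)$)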

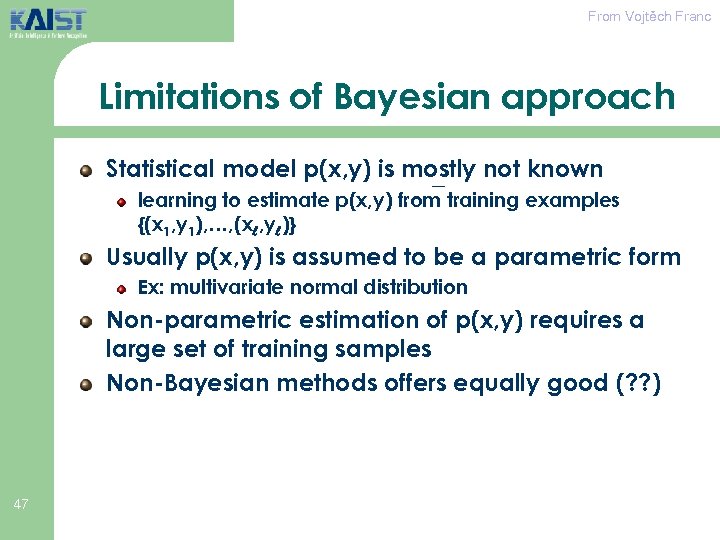
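A small sketch of Bayes rule plus the minimum-error decision, assuming (hypothetically) one-dimensional Gaussian class-conditional densities $p(x \mid \omega_1) = N(0, 1)$ and $p(x \mid \omega_2) = N(2, 1)$. The point is how priors and likelihoods combine into posteriors.

```python
import math


def gaussian_pdf(x: float, mean: float, std: float) -> float:
    """Univariate normal density, used here as the class-conditional p(x | w_j)."""
    z = (x - mean) / std
    return math.exp(-0.5 * z * z) / (std * math.sqrt(2.0 * math.pi))


def posteriors(x: float, priors, params):
    """Bayes rule: P(w_j | x) = p(x | w_j) P(w_j) / p(x),
    with the evidence p(x) = sum_k p(x | w_k) P(w_k)."""
    joint = [gaussian_pdf(x, m, s) * p for p, (m, s) in zip(priors, params)]
    evidence = sum(joint)
    return [j / evidence for j in joint]


def bayes_decide(x: float, priors, params) -> int:
    """Minimum-error decision: the class (1-based, as in w_1, w_2) with the largest posterior."""
    post = posteriors(x, priors, params)
    return max(range(len(post)), key=lambda j: post[j]) + 1


# Hypothetical model: p(x | w_1) = N(0, 1), p(x | w_2) = N(2, 1)
params = [(0.0, 1.0), (2.0, 1.0)]
```

With equal priors the decision boundary lies at $x = 1$, so `bayes_decide(1.2, [0.5, 0.5], params)` returns 2. Raising $P(\omega_1)$ to 0.9 shifts the boundary to $x = 1 + \ln 9 / 2 \approx 2.1$, and the same observation is then assigned to $\omega_1$.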

Limitations of the Bayesian Approach (from Vojtěch Franc)
- The statistical model $p(x, y)$ is mostly not known.
- Learning is needed to estimate $p(x, y)$ from training examples $\{(x_1, y_1), \dots, (x_\ell, y_\ell)\}$.
- Usually $p(x, y)$ is assumed to have a parametric form, e.g., a multivariate normal distribution.
- Non-parametric estimation of $p(x, y)$ requires a large set of training samples.
- Non-Bayesian methods may offer equally good performance (??).

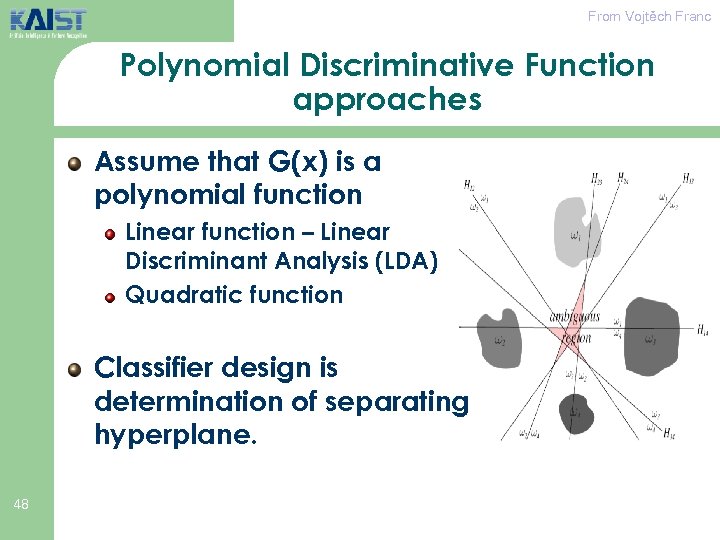
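When $p(x \mid y)$ is assumed to be multivariate normal, learning reduces to estimating a mean vector and a covariance matrix per class. A minimal sketch of the maximum-likelihood estimates; the four training samples are invented for illustration.

```python
import numpy as np


def fit_gaussian(X):
    """Maximum-likelihood estimates of a multivariate normal distribution:
    the sample mean and the covariance normalized by n (not n - 1)."""
    X = np.asarray(X, dtype=float)
    mu = X.mean(axis=0)
    centered = X - mu
    sigma = centered.T @ centered / X.shape[0]
    return mu, sigma


def log_density(x, mu: np.ndarray, sigma: np.ndarray) -> float:
    """log N(x; mu, sigma) for a d-dimensional feature vector x."""
    d = mu.shape[0]
    diff = np.asarray(x, dtype=float) - mu
    _, logdet = np.linalg.slogdet(sigma)
    mahalanobis_sq = diff @ np.linalg.solve(sigma, diff)
    return float(-0.5 * (d * np.log(2.0 * np.pi) + logdet + mahalanobis_sq))


# Four made-up training samples of one class
mu, sigma = fit_gaussian([[0, 0], [2, 0], [0, 2], [2, 2]])
```

The estimated mean is (1, 1) and the covariance is the identity. With $d$ features the covariance alone has $d(d+1)/2$ free parameters, one reason parametric estimates need many samples as the dimension grows.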

Polynomial Discriminant Function Approaches (from Vojtěch Franc)
- Assume that $G(x)$ is a polynomial function: a linear function gives linear discriminant analysis (LDA); a quadratic function is the next case.
- Classifier design is then the determination of a separating hyperplane.

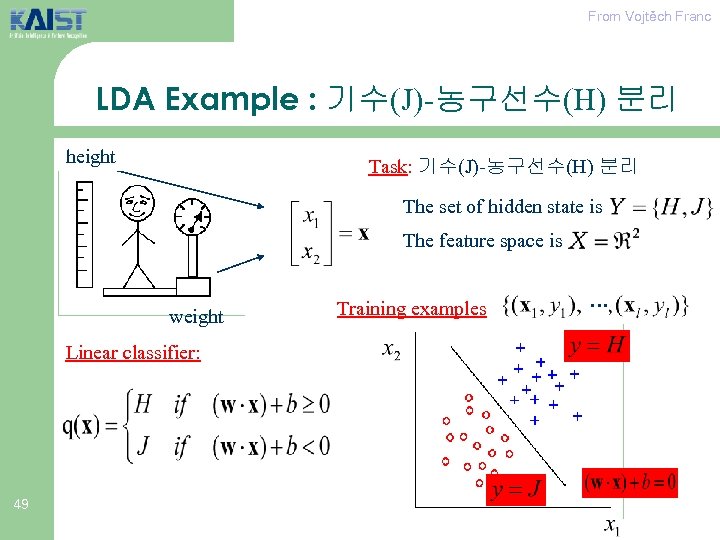
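The slide does not say how the separating hyperplane is found. One classic option, swapped in here for illustration, is the perceptron learning rule, which converges whenever the training data are linearly separable. The four training points are hypothetical.

```python
import numpy as np


def perceptron(X, y, epochs=100, lr=1.0):
    """Perceptron learning rule: find (w, b) with sign(w . x + b) == y on linearly
    separable data, labels y in {-1, +1}. Stops after an epoch with no mistakes."""
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float)
    w = np.zeros(X.shape[1])
    b = 0.0
    for _ in range(epochs):
        mistakes = 0
        for xi, yi in zip(X, y):
            if yi * (xi @ w + b) <= 0:  # misclassified, or exactly on the hyperplane
                w += lr * yi * xi
                b += lr * yi
                mistakes += 1
        if mistakes == 0:
            break
    return w, b


X = [[2, 2], [3, 3], [-1, -1], [-2, -1]]
y = [1, 1, -1, -1]
w, b = perceptron(X, y)
```

Here a single update yields the hyperplane $2x_1 + 2x_2 + 1 = 0$, which separates all four points. On non-separable data the rule never settles, so the `epochs` cap matters.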

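LDA Example: Separating Jockeys (J) from Basketball Players (H) (from Vojtěch Franc)
- Task: separate jockeys (J) from basketball players (H).
- The set of hidden states is $\{J, H\}$; the feature space is (height, weight).
- Linear classifier: $q(\mathbf{x}) = H$ if $\mathbf{w} \cdot \mathbf{x} + b \ge 0$, otherwise $J$, learned from training examples …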

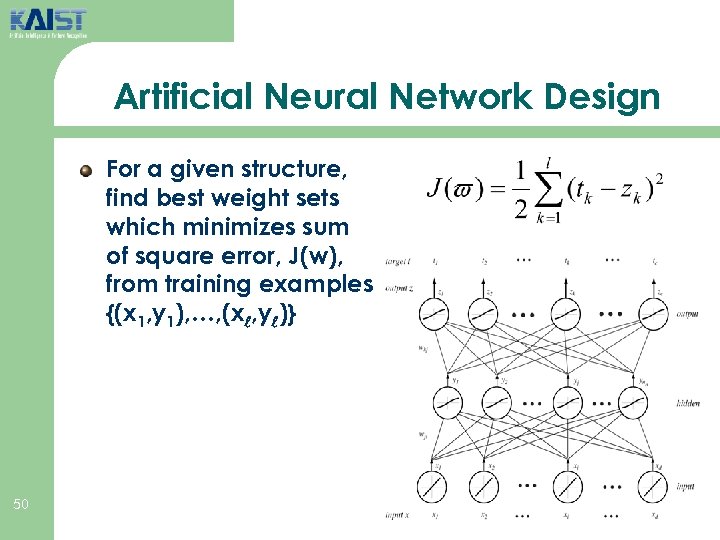
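A sketch of one way to learn $\mathbf{w}$ and $b$ for this task: Fisher's linear discriminant, with the threshold placed halfway between the projected class means. The (height, weight) values are made up for illustration; the slide's own training examples are not reproduced.

```python
import numpy as np

# Made-up (height cm, weight kg) training examples, for illustration only
jockeys = np.array([[155, 50], [158, 52], [160, 53], [157, 51]], dtype=float)
players = np.array([[195, 95], [200, 100], [205, 102], [198, 98]], dtype=float)


def fisher_lda(class_a: np.ndarray, class_b: np.ndarray):
    """Fisher's linear discriminant: w = S_W^{-1} (m_b - m_a), with the threshold
    placed halfway between the projected class means."""
    m_a, m_b = class_a.mean(axis=0), class_b.mean(axis=0)
    # Within-class scatter matrix S_W = sum over both classes of (x - m)(x - m)^T
    s_w = (class_a - m_a).T @ (class_a - m_a) + (class_b - m_b).T @ (class_b - m_b)
    w = np.linalg.solve(s_w, m_b - m_a)
    b = -0.5 * (w @ m_a + w @ m_b)
    return w, b


w, b = fisher_lda(jockeys, players)


def classify(x) -> str:
    """Linear classifier: H if w . x + b >= 0, otherwise J."""
    return "H" if w @ np.asarray(x, dtype=float) + b >= 0 else "J"
```

`classify([159, 52])` returns "J" and `classify([202, 99])` returns "H". With so few, strongly correlated toy samples, the individual weights can look counter-intuitive even though the training points are separated correctly.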

Artificial Neural Network Design: for a given structure, find the best set of weights $w$, i.e., the one that minimizes the sum of squared errors $J(w)$ over the training examples $\{(x_1, y_1), \dots, (x_\ell, y_\ell)\}$.

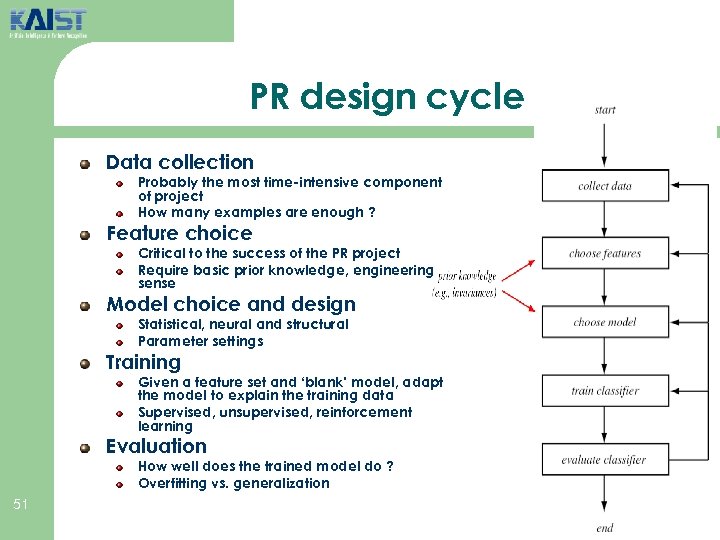
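The smallest case of this design problem is a single sigmoid neuron trained by batch gradient descent on the sum of squared errors $J(w)$. A sketch under that assumption, using logical AND as a hypothetical, linearly separable training set; the learning rate and epoch count are illustrative choices.

```python
import numpy as np


def sigmoid(a):
    return 1.0 / (1.0 + np.exp(-a))


def train_neuron(X, y, lr=1.0, epochs=20000):
    """Batch gradient descent on J(w) = sum_i (y_i - o_i)^2 for a single sigmoid
    unit o_i = sigmoid(w . [x_i, 1]); the trailing 1 acts as the bias input."""
    Xb = np.hstack([np.asarray(X, dtype=float), np.ones((len(X), 1))])
    y = np.asarray(y, dtype=float)
    w = np.zeros(Xb.shape[1])
    history = []
    for _ in range(epochs):
        o = sigmoid(Xb @ w)
        err = y - o
        history.append(float(np.sum(err ** 2)))
        # dJ/dw = -2 * sum_i (y_i - o_i) * o_i * (1 - o_i) * x_i
        grad = -2.0 * Xb.T @ (err * o * (1.0 - o))
        w -= lr * grad
    return w, history


# Logical AND as a tiny, linearly separable training set
X = [[0, 0], [0, 1], [1, 0], [1, 1]]
y = [0, 0, 0, 1]
w, history = train_neuron(X, y)
outputs = sigmoid(np.hstack([np.array(X, dtype=float), np.ones((4, 1))]) @ w)
```

`history` records $J(w)$ at each step. It starts at 1.0 (every output is 0.5) and decreases as the weights adapt; multilayer networks use the same gradient idea via backpropagation.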

PR Design Cycle
- Data collection: probably the most time-intensive component of a project. How many examples are enough?
- Feature choice: critical to the success of a PR project; requires basic prior knowledge and engineering sense.
- Model choice and design: statistical, neural, or structural; parameter settings.
- Training: given a feature set and a 'blank' model, adapt the model to explain the training data (supervised, unsupervised, or reinforcement learning).
- Evaluation: how well does the trained model do? Overfitting vs. generalization.

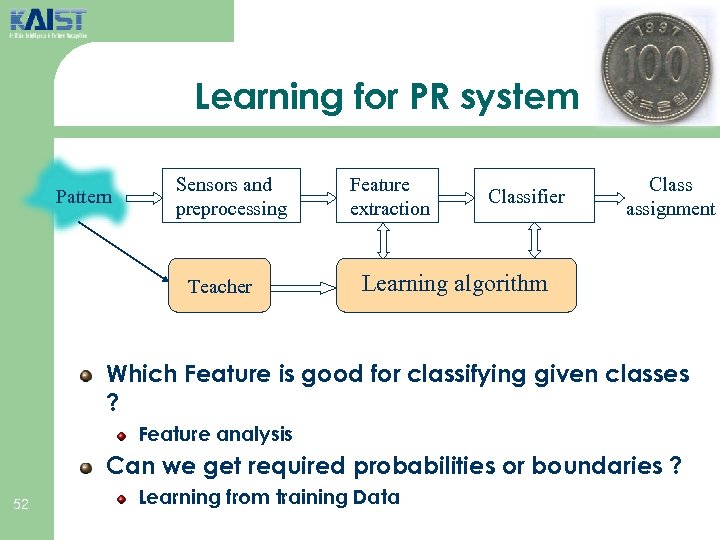

Learning for a PR System
(Diagram components: pattern, sensors and preprocessing, teacher, feature extraction, classifier, class assignment, learning algorithm.)
- Which features are good for classifying the given classes? Feature analysis.
- Can we obtain the required probabilities or boundaries? Learning from training data.

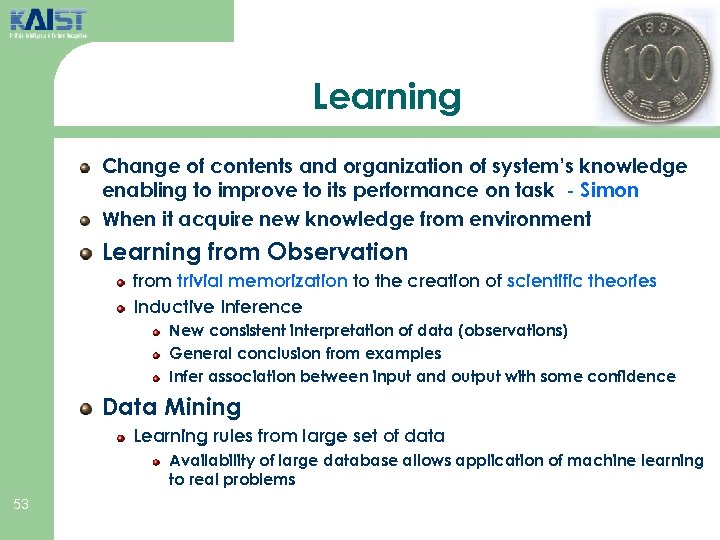

Learning
- Learning is a change in the contents and organization of a system's knowledge that enables it to improve its performance on a task (Simon); it happens when the system acquires new knowledge from the environment.
- Learning from observation: ranges from trivial memorization to the creation of scientific theories.
- Inductive inference: a new consistent interpretation of data (observations); general conclusions from examples; inferring the association between input and output with some confidence.
- Data mining: learning rules from large sets of data. The availability of large databases allows machine learning to be applied to real problems.

Learning Algorithm Categorization by Available Feedback
- Supervised learning: examples of correct input/output pairs are available (induction).
- Unsupervised learning: no hint at all about the correct outputs (clustering or consistent interpretation).
- Reinforcement learning: receives no examples, only rewards or punishments at the end.
- Semi-supervised learning: training with both labeled and unlabeled examples.

Issues in Learning Algorithms
- Prior knowledge can help learning, e.g., assumptions on parametric forms and ranges of values.
- Incremental learning: update old knowledge whenever a new example arrives.
- Batch learning: apply the learning algorithm to the entire set of examples.
- Analytic approach: find the optimal parameter values by analysis.
- Iterative adaptation: improve the parameter values starting from an initial guess.

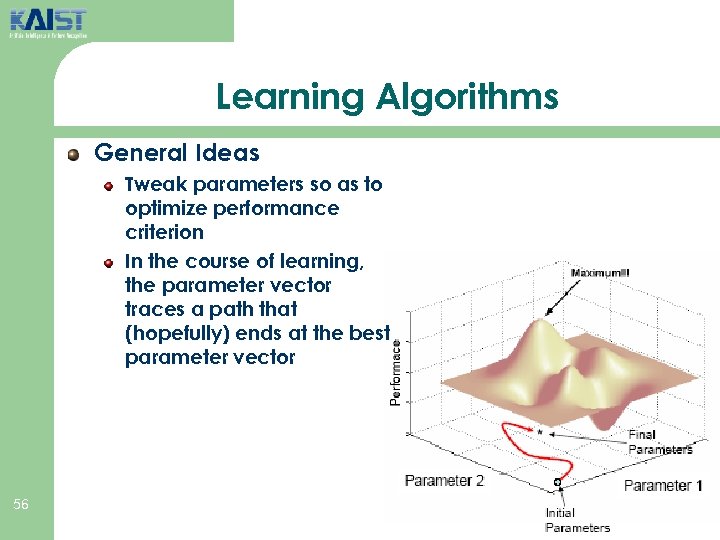
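The analytic/iterative and batch/incremental distinctions can be seen on a linear least-squares fit. A sketch with invented noise-free data $y = 1 + 2x$: the closed-form solution, batch gradient descent, and the incremental LMS rule (one update per example) all reach the same parameters. Learning rates and iteration counts are illustrative.

```python
import numpy as np


def fit_analytic(X, y):
    """Analytic approach: least-squares solution of X w = y in closed form."""
    w, *_ = np.linalg.lstsq(X, y, rcond=None)
    return w


def fit_batch_gd(X, y, lr=0.1, steps=5000):
    """Iterative adaptation, batch mode: gradient descent on the mean squared
    error over all examples, starting from the initial guess w = 0."""
    w = np.zeros(X.shape[1])
    for _ in range(steps):
        w -= lr * 2.0 * X.T @ (X @ w - y) / len(y)
    return w


def fit_incremental(X, y, lr=0.05, epochs=2000):
    """Iterative adaptation, incremental mode (LMS rule): update w after each example."""
    w = np.zeros(X.shape[1])
    for _ in range(epochs):
        for xi, yi in zip(X, y):
            w += lr * (yi - xi @ w) * xi
    return w


# Toy data generated by y = 1 + 2x; the first column of X is a constant bias input
X = np.array([[1.0, 0.0], [1.0, 1.0], [1.0, 2.0], [1.0, 3.0]])
y = np.array([1.0, 3.0, 5.0, 7.0])
```

The analytic route is exact but needs all data at once and a solvable form. The iterative routes need only gradients, and the incremental one can keep learning as new examples arrive.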

Learning Algorithms: General Ideas
- Tweak parameters so as to optimize a performance criterion.
- In the course of learning, the parameter vector traces a path that (hopefully) ends at the best parameter vector.

Inductive Learning
- Given training examples (correct input-output pairs), recover the unknown underlying function that generated the training data.
- Generalization to unseen data is required.
- Forms of the function: logical sentences, polynomials, sets of weights (neural networks), …
- Given the form of the function, adjust its parameters to minimize error.

Theory of Inductive Inference
- Concept $C \subseteq X$.
- Examples are given as $(x, y)$, where $x \in X$, and $y = 1$ if $x \in C$, $y = 0$ if $x \notin C$.
- Find $F$ such that $F(x) = 1$ if $x \in C$ and $F(x) = 0$ if $x \notin C$.
- Inductive bias (constraints on the hypothesis space; a table of all observations is not an option): restricted hypothesis space biases; preference biases, e.g., Occam's (Ockham's) razor: the simplest hypothesis is best.

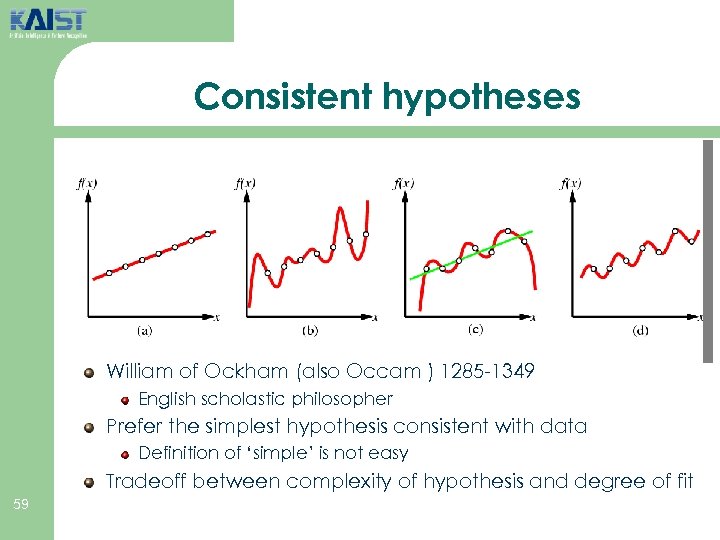

Consistent Hypotheses
- William of Ockham (also Occam), 1285-1349, English scholastic philosopher
- Prefer the simplest hypothesis consistent with the data.
- Defining "simple" is not easy.
- There is a tradeoff between the complexity of a hypothesis and its degree of fit.

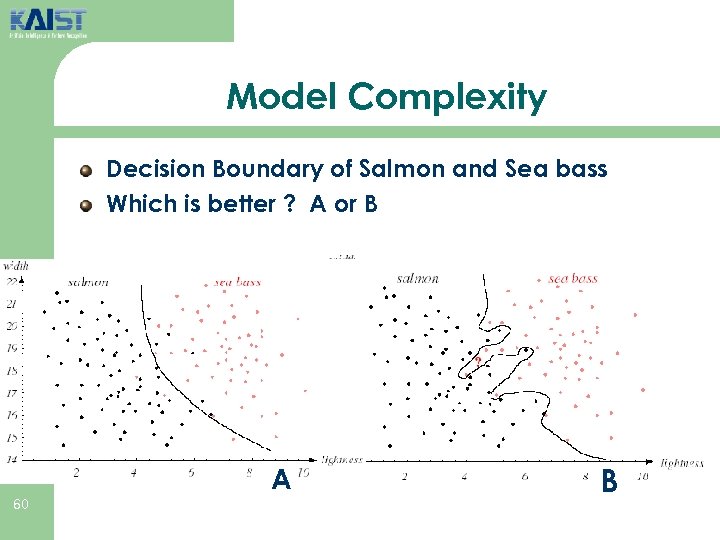

Model Complexity: decision boundaries between salmon and sea bass. Which is better, A or B?

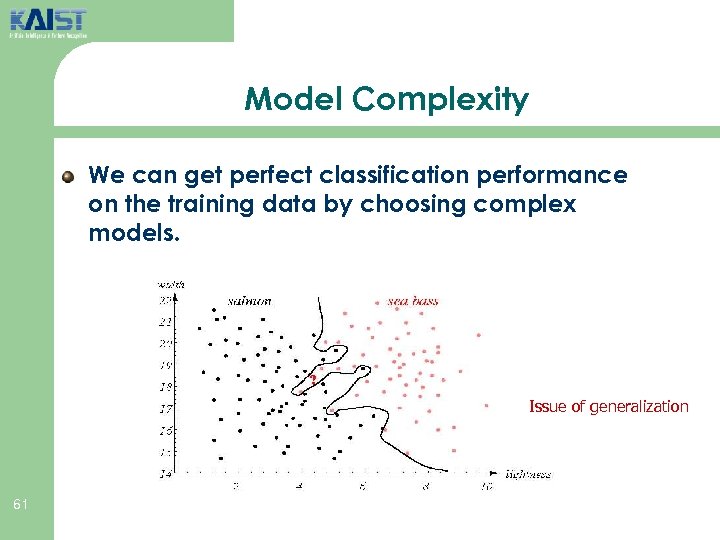

Model Complexity: we can get perfect classification performance on the training data by choosing complex models. This raises the issue of generalization.
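The same effect appears in curve fitting. A sketch, assuming noisy samples of $\sin(2\pi x)$ as a stand-in for the training data: as the polynomial degree grows, the training error can only go down, and a degree-9 polynomial through 10 points drives it to essentially zero by passing through every noisy sample.

```python
import numpy as np


def training_errors(degrees, n_points=10, noise=0.2, seed=0):
    """Fit polynomials of several degrees to the same noisy samples of sin(2*pi*x)
    and return the training mean squared error of each fit."""
    rng = np.random.default_rng(seed)
    x = np.linspace(0.0, 1.0, n_points)
    y = np.sin(2 * np.pi * x) + rng.normal(0.0, noise, n_points)
    errors = {}
    for degree in degrees:
        coef = np.polyfit(x, y, degree)
        errors[degree] = float(np.mean((np.polyval(coef, x) - y) ** 2))
    return errors


errors = training_errors([1, 3, 9])
```

A near-zero training error says nothing about unseen inputs; only error measured on held-out data can reveal that the degree-9 fit is chasing noise.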

Generalization
- The main goal of a pattern classification system is to suggest the class of objects not yet seen: generalization.
- Some complex decision boundaries do not generalize well; some simple boundaries do not either.
- The tradeoff between performance and simplicity is at the core of statistical pattern recognition.

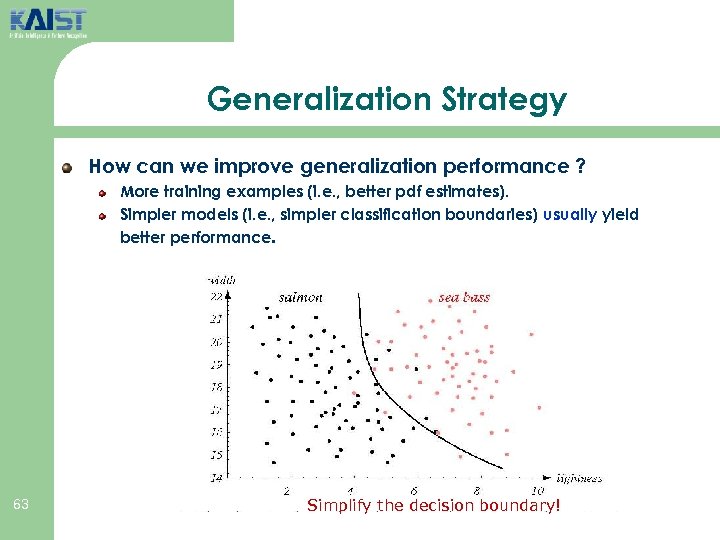

Generalization Strategy: how can we improve generalization performance?
- More training examples (i.e., better pdf estimates).
- Simpler models (i.e., simpler classification boundaries) usually yield better performance. Simplify the decision boundary!

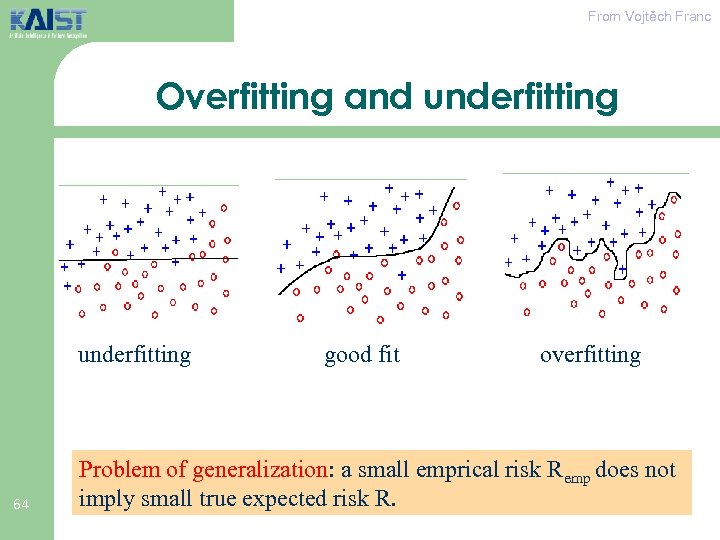

Overfitting and Underfitting (from Vojtěch Franc): good fit vs. overfitting. The problem of generalization: a small empirical risk $R_{\mathrm{emp}}$ does not imply a small true expected risk $R$.

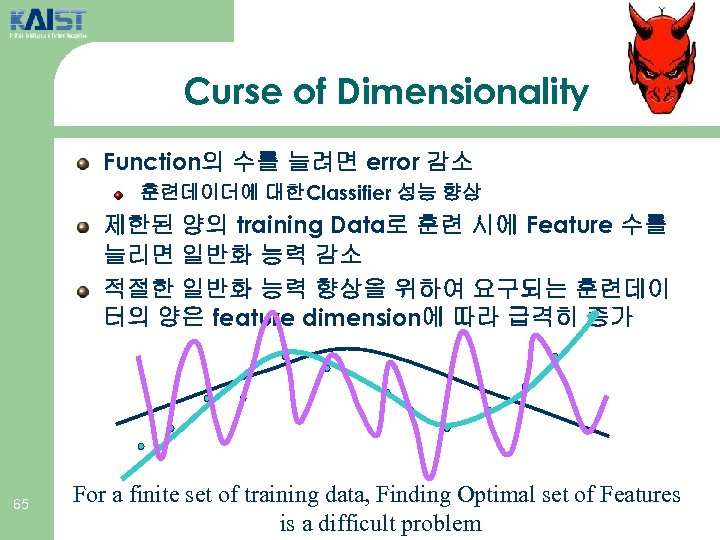

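Curse of Dimensionality
- Increasing the number of features reduces error, i.e., the classifier's performance on the training data improves.
- When training with a limited amount of data, however, adding features reduces generalization ability.
- The amount of training data needed for adequate generalization grows rapidly with the feature dimension.
- For a finite set of training data, finding the optimal set of features is a difficult problem.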
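A back-of-the-envelope sketch of why the required data grows so fast, assuming a simple histogram-style model that splits every feature axis into 10 intervals: the number of cells is $10^d$, so a fixed training set of 1000 samples (a hypothetical size) spreads ever more thinly.

```python
def grid_cells(n_samples: int, bins_per_feature: int, n_features: int) -> tuple[int, float]:
    """Number of cells when each feature axis is split into `bins_per_feature`
    intervals, and the average number of training samples per cell."""
    cells = bins_per_feature ** n_features
    return cells, n_samples / cells


for d in (1, 2, 3, 5, 10):
    cells, per_cell = grid_cells(1000, 10, d)
    print(f"{d:2d} features: {cells:,} cells, {per_cell:g} training samples per cell on average")
```

At three features there is one sample per cell on average; at ten, almost every cell is empty, so density estimates in most of the feature space rest on no data at all.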

Two Slot Machine Problem: maximize the total outcome from two slot machines with unknown return rates. How many coins should be spent to find the better machine?

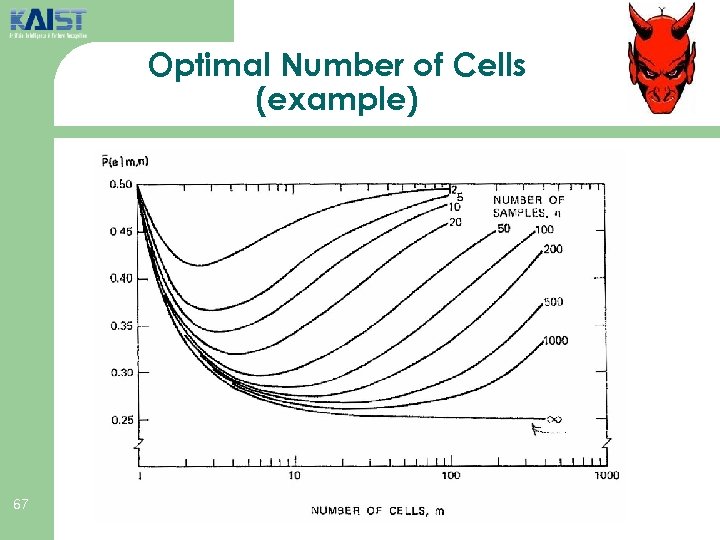
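The question is the exploration/exploitation tradeoff. The slide gives no algorithm; the sketch below swaps in ε-greedy, one simple heuristic: spend a fraction ε of the coins exploring at random and the rest on the machine that currently looks best. The win probabilities 0.3 and 0.6 and the budget of 2000 coins are hypothetical.

```python
import numpy as np


def epsilon_greedy(p_win, n_coins=2000, epsilon=0.1, seed=0):
    """Play slot machines with win probabilities p_win (unknown to the player).
    Returns how many coins went into each machine and the wins per machine."""
    rng = np.random.default_rng(seed)
    k = len(p_win)
    pulls = np.zeros(k, dtype=int)
    wins = np.zeros(k)
    for t in range(n_coins):
        if t < k:
            arm = t                                 # try every machine once
        elif rng.random() < epsilon:
            arm = int(rng.integers(k))              # explore: random machine
        else:
            arm = int(np.argmax(wins / pulls))      # exploit: best estimate so far
        pulls[arm] += 1
        wins[arm] += rng.random() < p_win[arm]
    return pulls, wins


pulls, wins = epsilon_greedy([0.3, 0.6])
```

A larger ε finds the better machine more reliably but wastes more coins on the worse one; the same tension appears when deciding how much data to spend evaluating features or models.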

Optimal Number of Cells (example)

Implications of the Curse of Dimensionality for PR System Design
- With finite training samples, be cautious about adding features.
- Use features with high discrimination power first; feature analysis is mandatory.
- Simple neural networks (small numbers of hidden nodes and links) are generally better.
- Tips for structure simplification: parameter tying; eliminating links during learning.

Cross-Validation
- Validate the learned model on a different set to assess generalization performance, guarding against overfitting.
- Partition the training set into an estimation subset for learning the parameters and a validation subset for cross-validation, used to select the best model and to determine when to stop training.
- Leave-one-out validation: use N-1 samples for training and 1 for validation, taking turns; this helps overcome a small training set.

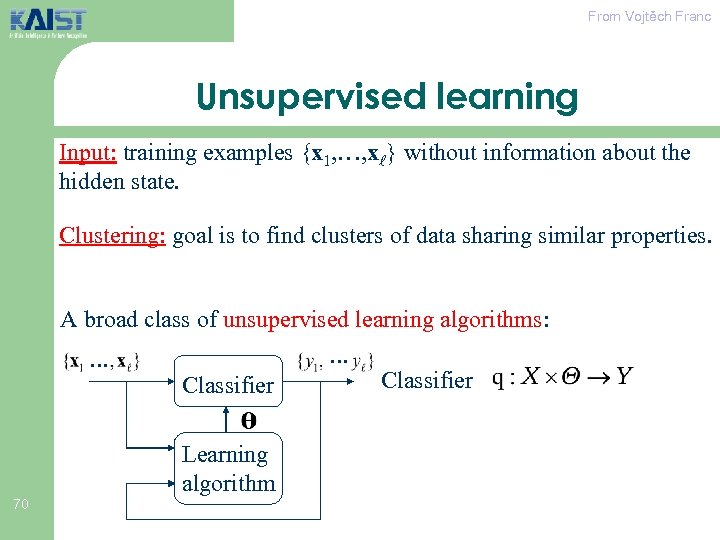
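A minimal leave-one-out sketch. The model being validated is a 1-nearest-neighbour classifier, chosen here only because it needs no training step; any classifier could take its place. The data are hypothetical one-dimensional points.

```python
import numpy as np


def nearest_neighbor_predict(X_train: np.ndarray, y_train: np.ndarray, x: np.ndarray):
    """1-nearest-neighbour rule: copy the label of the closest training sample."""
    distances = np.linalg.norm(X_train - x, axis=1)
    return y_train[np.argmin(distances)]


def leave_one_out_accuracy(X, y) -> float:
    """Train on N-1 samples, validate on the held-out one, rotating through all N."""
    X = np.asarray(X, dtype=float)
    y = np.asarray(y)
    correct = 0
    for i in range(len(X)):
        keep = np.arange(len(X)) != i  # every sample except the i-th
        correct += int(nearest_neighbor_predict(X[keep], y[keep], X[i]) == y[i])
    return correct / len(X)


X = [[0.0], [0.1], [0.2], [1.0], [1.1], [1.2]]
y = [0, 0, 0, 1, 1, 1]
accuracy = leave_one_out_accuracy(X, y)
```

Every sample is used for validation exactly once, which is what makes leave-one-out attractive for small sets; the price is N training runs.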

Unsupervised Learning (from Vojtěch Franc)
- Input: training examples $\{x_1, \dots, x_\ell\}$ without information about the hidden state.
- Clustering: the goal is to find clusters of data sharing similar properties.
- A broad class of unsupervised learning algorithms (diagram with a classifier and a learning algorithm).

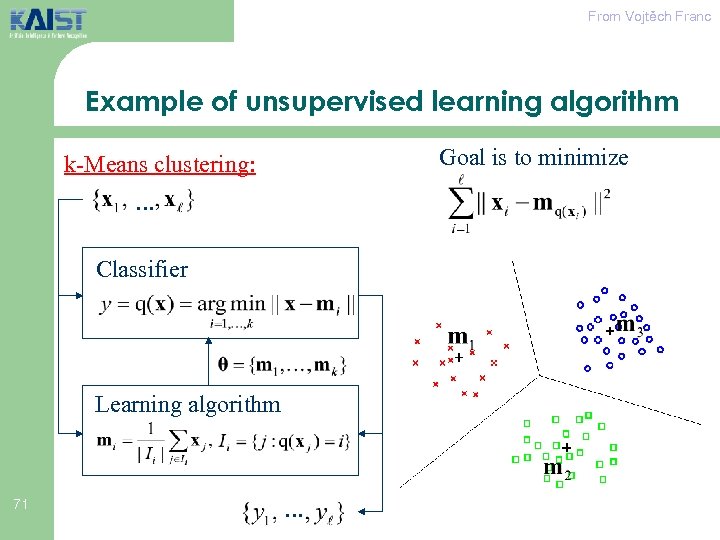

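Example of an Unsupervised Learning Algorithm (from Vojtěch Franc): k-means clustering. The goal is to minimize $J = \sum_{i=1}^{\ell} \lVert x_i - \mu_{y_i} \rVert^2$, where $y_i$ is the cluster assigned to $x_i$ and $\mu_k$ is the center of cluster $k$ (diagram: classifier and learning algorithm).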
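A sketch of k-means as Lloyd's algorithm: alternate between assigning each sample to its nearest center (the "classifier" step) and moving each center to the mean of its samples (the "learning" step). Initializing with k random samples is an assumption; other initializations exist.

```python
import numpy as np


def k_means(X, k, n_iter=100, seed=0):
    """Lloyd's algorithm for J = sum_i ||x_i - mu_{y_i}||^2: alternate between
    assigning points to the nearest center and moving centers to cluster means."""
    X = np.asarray(X, dtype=float)
    rng = np.random.default_rng(seed)
    centers = X[rng.choice(len(X), size=k, replace=False)]  # k distinct samples as initial centers

    def assign(c):
        # Index of the nearest center for every sample
        return np.argmin(np.linalg.norm(X[:, None, :] - c[None, :, :], axis=2), axis=1)

    for _ in range(n_iter):
        labels = assign(centers)
        # Move each center to the mean of its cluster (keep it if the cluster is empty)
        new_centers = np.array([X[labels == j].mean(axis=0) if np.any(labels == j) else centers[j]
                                for j in range(k)])
        if np.allclose(new_centers, centers):
            break
        centers = new_centers
    labels = assign(centers)
    objective = float(np.sum((X - centers[labels]) ** 2))
    return labels, centers, objective


points = [[0, 0], [0, 1], [1, 0], [10, 10], [10, 11], [11, 10]]
labels, centers, J = k_means(points, k=2)
```

Each step can only lower $J$, so the algorithm terminates, but it may stop in a local minimum; running it from several initializations and keeping the lowest $J$ is a common remedy.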

Other Issues in Pattern Recognition

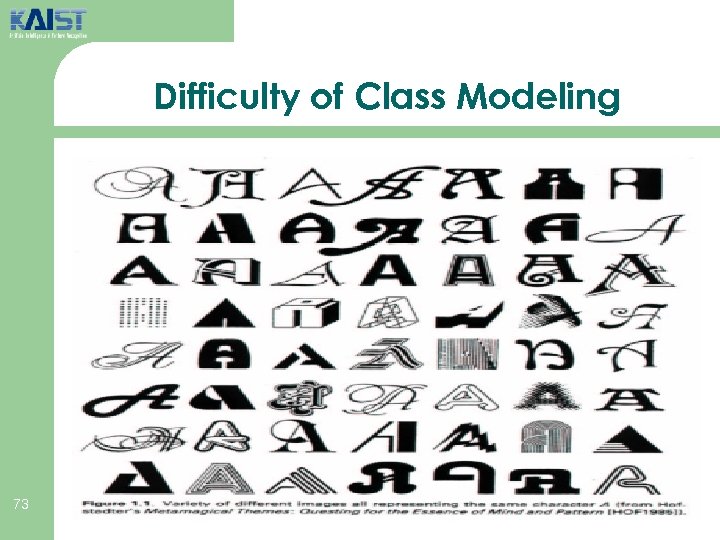

Difficulty of Class Modeling

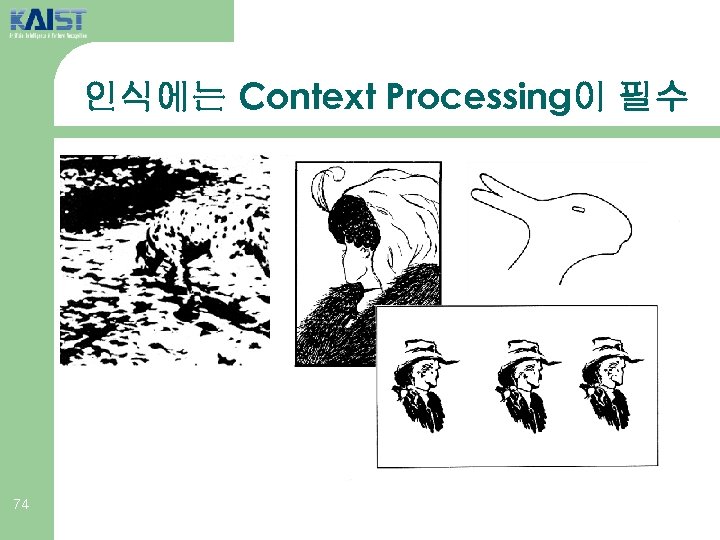

Context processing is essential for recognition

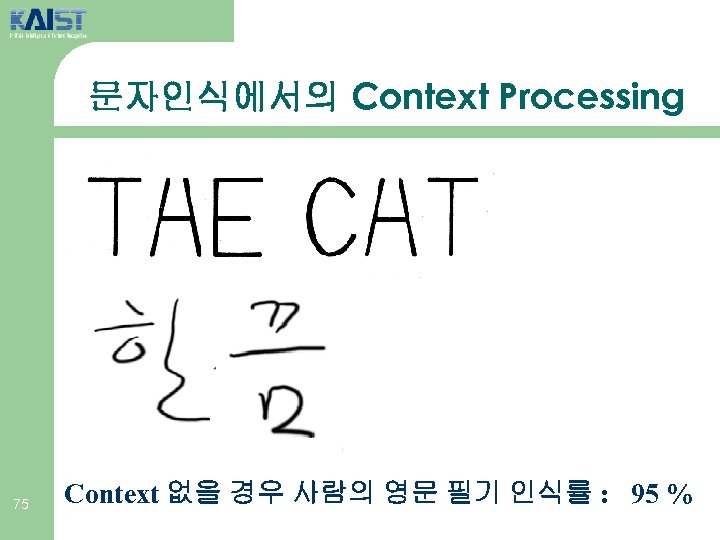

Context Processing in Character Recognition: without context, the human recognition rate for English handwriting is 95%.

Global Consistency: local decisions are not enough.

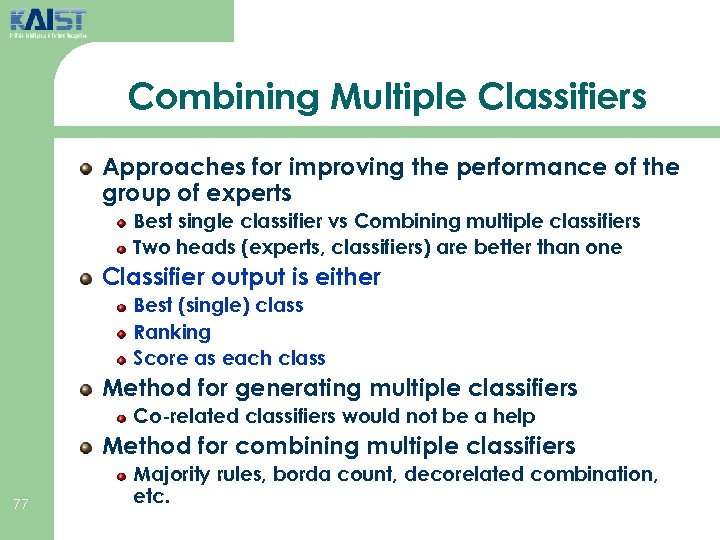

Combining Multiple Classifiers
- Approaches for improving the performance of a group of experts: best single classifier vs. a combination of multiple classifiers. Two heads (experts, classifiers) are better than one.
- A classifier's output is either the best (single) class, a ranking, or a score for each class.
- Method for generating multiple classifiers: correlated classifiers would not help.
- Method for combining multiple classifiers: majority rule, Borda count, decorrelated combination, etc.

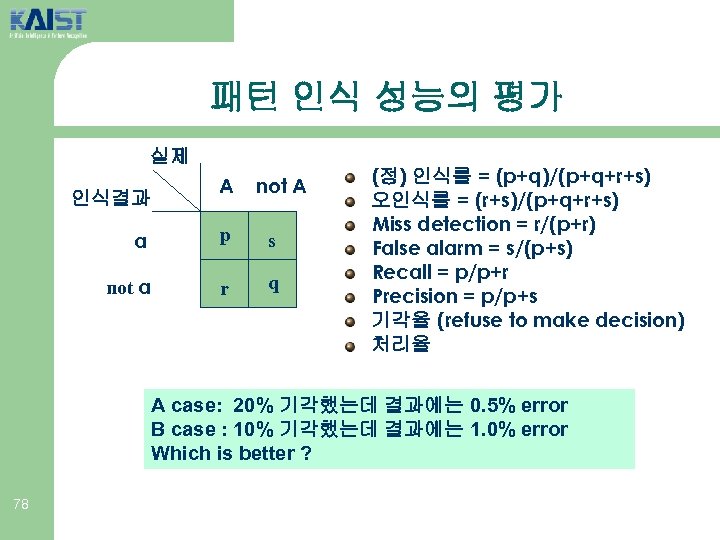
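Two of the combination rules named above, sketched for the two output types: majority vote over each classifier's single best class, and Borda count over full rankings. The four rankings below are invented to show that the two rules can disagree.

```python
from collections import Counter


def majority_vote(top_choices):
    """Each classifier outputs its single best class; the most frequent class wins
    (ties go to the class encountered first)."""
    return Counter(top_choices).most_common(1)[0][0]


def borda_count(rankings):
    """Each classifier outputs a full ranking, best first. A class in position r
    (0-based) of an n-class ranking earns n - 1 - r points; the highest total wins."""
    scores = Counter()
    for ranking in rankings:
        n = len(ranking)
        for r, label in enumerate(ranking):
            scores[label] += n - 1 - r
    return max(scores, key=scores.get), dict(scores)


rankings = [["A", "C", "B"], ["A", "C", "B"], ["B", "C", "A"], ["C", "B", "A"]]
vote_winner = majority_vote([r[0] for r in rankings])
borda_winner, borda_scores = borda_count(rankings)
```

Majority vote picks A (two first places), while Borda count picks C, which every classifier ranks first or second. Using rankings exploits more of each classifier's output than the top choice alone.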

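Evaluating Pattern Recognition Performance

| recognition result \ actual | A | not A |
|---|---|---|
| a | p | s |
| not a | r | q |

- (Correct) recognition rate = (p+q)/(p+q+r+s)
- Misrecognition (error) rate = (r+s)/(p+q+r+s)
- Miss detection = r/(p+r)
- False alarm = s/(p+s)
- Recall = p/(p+r)
- Precision = p/(p+s)
- Rejection rate (refusing to make a decision) and throughput
- Case A: 20% rejected, 0.5% error in the results. Case B: 10% rejected, 1.0% error in the results. Which is better?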

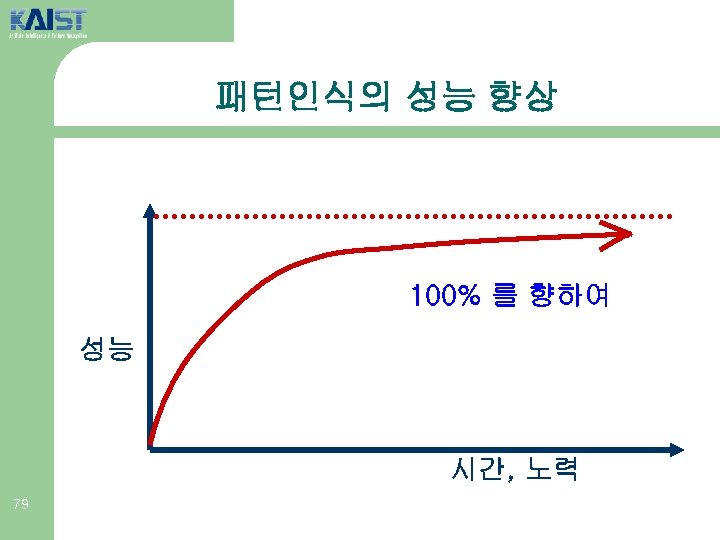

Improving PR Performance: toward 100% (figure: performance vs. time and effort)

Appendix

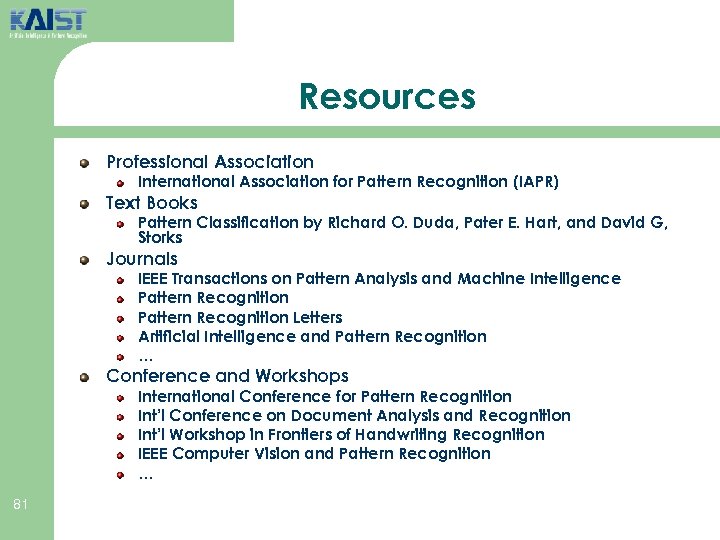

Resources
- Professional association: International Association for Pattern Recognition (IAPR)
- Textbooks: Pattern Classification by Richard O. Duda, Peter E. Hart, and David G. Stork
- Journals: IEEE Transactions on Pattern Analysis and Machine Intelligence; Pattern Recognition Letters; Artificial Intelligence and Pattern Recognition; …
- Conferences and workshops: International Conference on Pattern Recognition; International Conference on Document Analysis and Recognition; International Workshop on Frontiers in Handwriting Recognition; IEEE Conference on Computer Vision and Pattern Recognition; …
