- Number of slides: 26

Florida Tech Grid Cluster

P. Ford², X. Fave¹, M. Hohlmann¹

High Energy Physics Group
¹Department of Physics and Space Sciences
²Department of Electrical & Computer Engineering

History
- 2004: Original conception, with a FIT ACITC grant.
- 2007: Received over 30 more low-end systems from UF. Basic cluster software operational.
- 2008: Purchased high-end servers and designed a new cluster. Established the cluster on the Open Science Grid.
- 2009: Upgraded and added systems. Registered as a CMS Tier 3 site.

Current Status
- OS: Rocks V (CentOS 5.0)
- Job manager: Condor 7.2.0
- Grid middleware: OSG 1.2, Berkeley Storage Manager (BeStMan) 2.2.1.2.i7.p3, Physics Experiment Data Exports (PhEDEx) 3.2.0
- Contributed over 400,000 wall hours to the CMS experiment; over 1.3M wall hours total.
- Fully compliant on OSG Resource Service Validation (RSV) and CMS Site Availability Monitoring (SAM) tests.

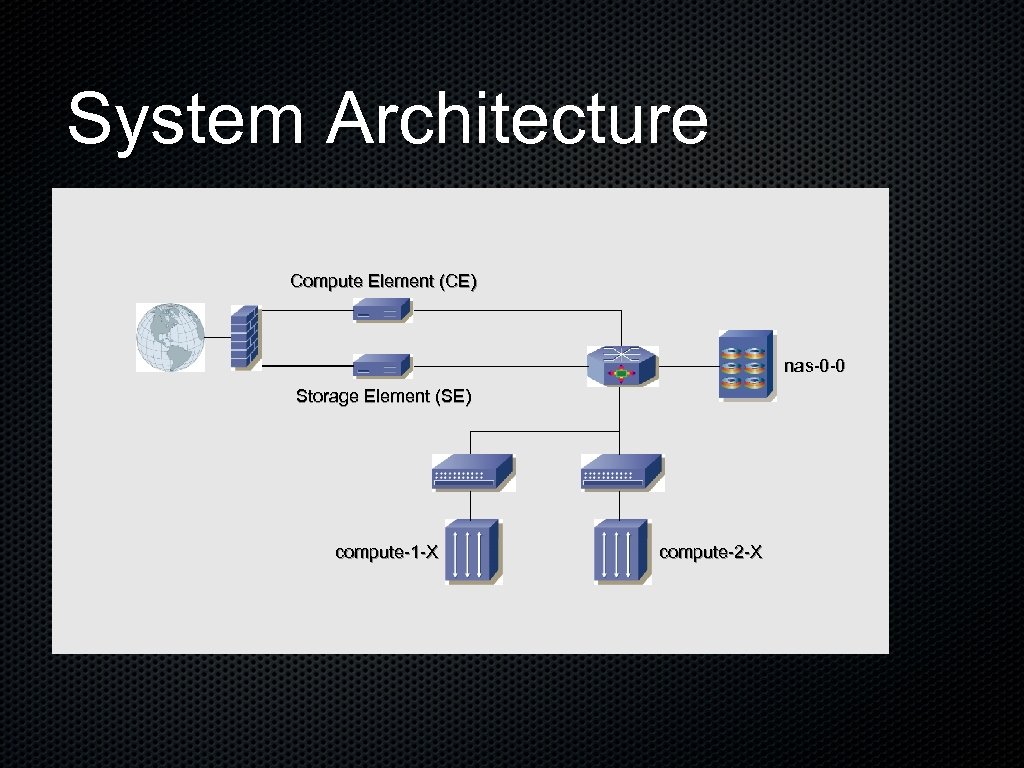

System Architecture (diagram): Compute Element (CE), NAS (nas-0-0), Storage Element (SE), and compute nodes compute-1-X and compute-2-X.

Hardware
- CE/Frontend: 8 Intel Xeon E5410, 16 GB RAM, RAID 5
- NAS 0: 4 CPUs, 8 GB RAM, 9.6 TB RAID 6 array
- SE: 8 CPUs, 64 GB RAM, 1 TB RAID 5
- 20 compute nodes: 8 CPUs & 16 GB RAM each; 160 total batch slots.
- Gigabit networking, Cisco Express at the core.
- 2 x 208 V 5 kVA UPS for nodes, 1 x 120 V 3 kVA UPS for critical systems.
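The slot count follows directly from the node specification. A minimal sketch of that arithmetic, assuming one Condor batch slot per CPU and memory split evenly across a node's slots (the class and function names are hypothetical):

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class NodeSpec:
    """CPU count and memory of one compute node, as listed on the hardware slide."""
    cpus: int
    ram_gb: int


def batch_slots(n_nodes: int, spec: NodeSpec, slots_per_cpu: int = 1) -> int:
    # Assumption: one batch slot per CPU, which reproduces 20 x 8 = 160.
    return n_nodes * spec.cpus * slots_per_cpu


def ram_per_slot_gb(spec: NodeSpec, slots_per_cpu: int = 1) -> float:
    # Assumption: a node's memory is divided evenly among its slots.
    return spec.ram_gb / (spec.cpus * slots_per_cpu)


compute_node = NodeSpec(cpus=8, ram_gb=16)
print(batch_slots(20, compute_node))   # 160
print(ram_per_slot_gb(compute_node))   # 2.0
```

With 16 GB per 8-CPU node, each slot gets about 2 GB, which is the figure to compare against a job's memory needs.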

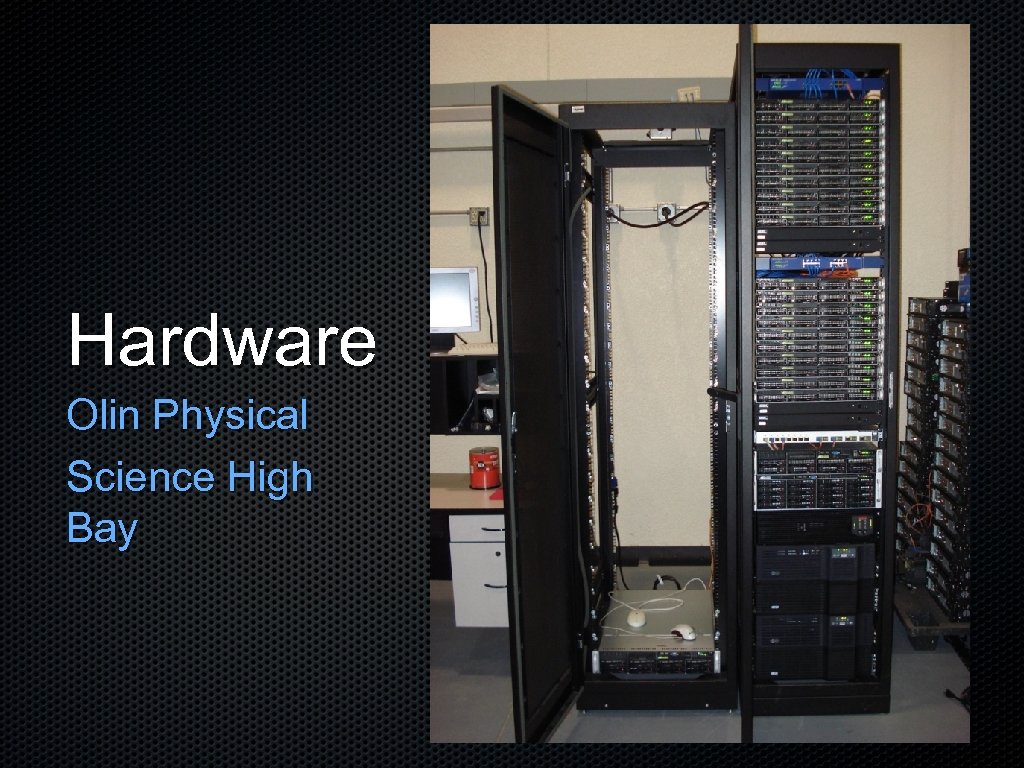

Hardware: Olin Physical Science High Bay

Rocks OS
- Large software package for clusters (e.g. 411, dev tools, Apache, autofs, Ganglia).
- Allows customization through “Rolls” and appliances.
- Configuration stored in MySQL.
- Customizable appliances auto-install nodes and run post-install scripts.

Storage
- Set up XFS on the NAS partition, mounted on all machines.
- The NAS stores all user and grid data and serves it over NFS.
- The Storage Element is the gateway for Grid storage on the NAS array.

Condor Batch Job Manager
- Batch job system that distributes workflow jobs to compute nodes.
- Distributed computing, NOT parallel.
- Users submit jobs to a queue and the system finds places to run them.
- Great for Grid computing; the most-used batch system in OSG/CMS.
- Supports “Universes”: Vanilla, Standard, Grid, ...
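From the user's side, "submit jobs to a queue" means writing a submit description file and passing it to `condor_submit`. A minimal sketch that generates one for a vanilla-universe cluster of jobs; `run_sim.sh` and its `--seed` option are hypothetical, and file-transfer settings are omitted on the assumption that the NFS-mounted NAS makes files visible on every node:

```python
def vanilla_submit_description(executable: str, arguments: str, n_jobs: int) -> str:
    """Return the text of a minimal Condor submit description file.

    $(Cluster) and $(Process) are expanded by condor_submit, so every queued
    job writes its own stdout/stderr file.
    """
    lines = [
        "universe   = vanilla",
        f"executable = {executable}",
        f"arguments  = {arguments}",
        "output     = job.$(Cluster).$(Process).out",
        "error      = job.$(Cluster).$(Process).err",
        "log        = job.$(Cluster).log",
        f"queue {n_jobs}",
    ]
    return "\n".join(lines) + "\n"


# Hypothetical script; $(Process) (0..9) gives each job a different seed.
print(vanilla_submit_description("run_sim.sh", "--seed $(Process)", 10))
```

Saved as e.g. `sim.sub`, the file would be queued with `condor_submit sim.sub`, and the negotiator then finds free slots for the ten jobs.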

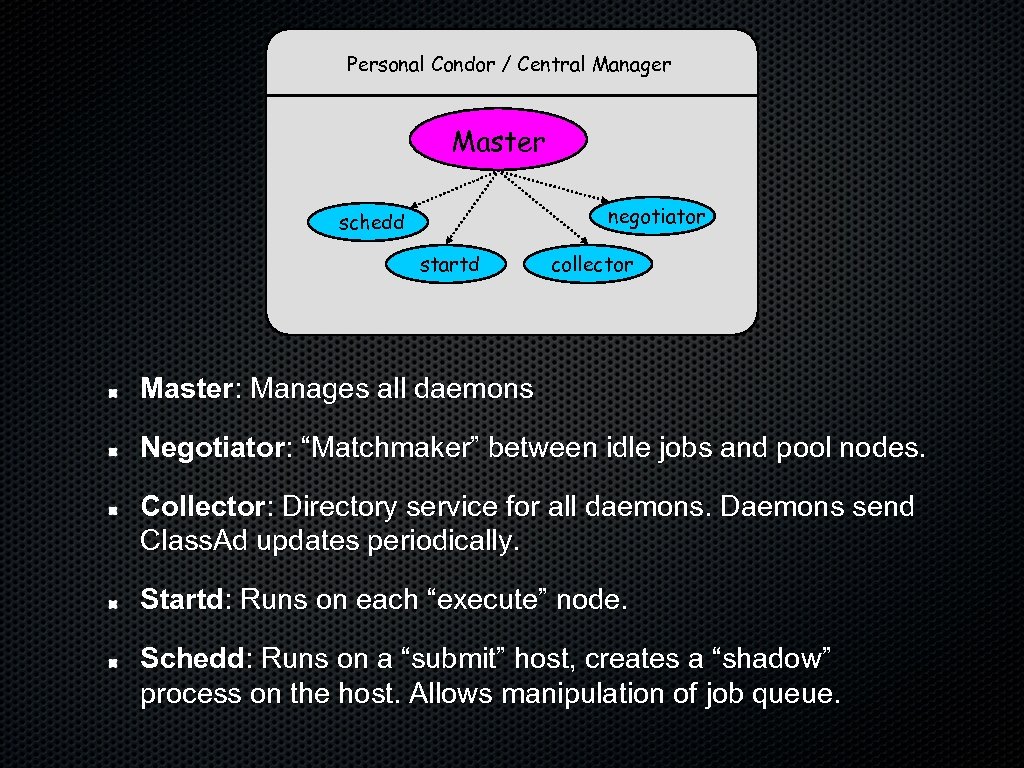

Personal Condor / Central Manager
(Diagram: one host running the master, negotiator, schedd, startd, and collector daemons.)
- Master: manages all daemons.
- Negotiator: “matchmaker” between idle jobs and pool nodes.
- Collector: directory service for all daemons. Daemons send ClassAd updates to it periodically.
- Startd: runs on each “execute” node.
- Schedd: runs on a “submit” host and creates a “shadow” process on that host for each running job. Allows manipulation of the job queue.
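The negotiator's matchmaking can be illustrated with a toy model. This is a simplified sketch, not Condor's implementation: ClassAds are plain dictionaries, Requirements and Rank are Python callables, and the machine and job names are hypothetical. A match needs both sides' requirements to hold, and the job's Rank picks among the candidates:

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class Machine:
    """Stand-in for a startd's machine ClassAd (hypothetical, simplified)."""
    name: str
    ad: Dict[str, object]
    # The machine's own requirements: which job ads it is willing to run.
    accepts: Callable[[Dict[str, object]], bool] = lambda job_ad: True


@dataclass
class Job:
    """Stand-in for an idle job's ClassAd held by a schedd (hypothetical, simplified)."""
    name: str
    ad: Dict[str, object]
    requirements: Callable[[Dict[str, object]], bool]
    rank: Callable[[Dict[str, object]], float] = lambda machine_ad: 0.0


def negotiate(idle_jobs: List[Job], machines: List[Machine]) -> Dict[str, str]:
    """One toy negotiation cycle: give each idle job the highest-Rank free
    machine on which both sides' requirements are satisfied."""
    free = list(machines)
    matches: Dict[str, str] = {}
    for job in idle_jobs:  # real Condor visits users in priority order
        candidates = [m for m in free if job.requirements(m.ad) and m.accepts(job.ad)]
        if not candidates:
            continue  # the job stays idle until the next cycle
        best = max(candidates, key=lambda m: job.rank(m.ad))
        matches[job.name] = best.name
        free.remove(best)  # a claimed slot is no longer offered
    return matches


machines = [
    Machine("compute-1-0", {"Memory": 2048, "Arch": "X86_64"}),
    Machine("compute-2-0", {"Memory": 4096, "Arch": "X86_64"}),
]
jobs = [
    Job("job-a", {"Owner": "alice"}, requirements=lambda m: m["Memory"] >= 3000),
    Job("job-b", {"Owner": "bob"}, requirements=lambda m: m["Arch"] == "X86_64",
        rank=lambda m: m["Memory"]),
]
print(negotiate(jobs, machines))  # {'job-a': 'compute-2-0', 'job-b': 'compute-1-0'}
```

job-b would prefer the larger machine by Rank, but job-a was considered first and only the 4096 MB node met its requirements.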

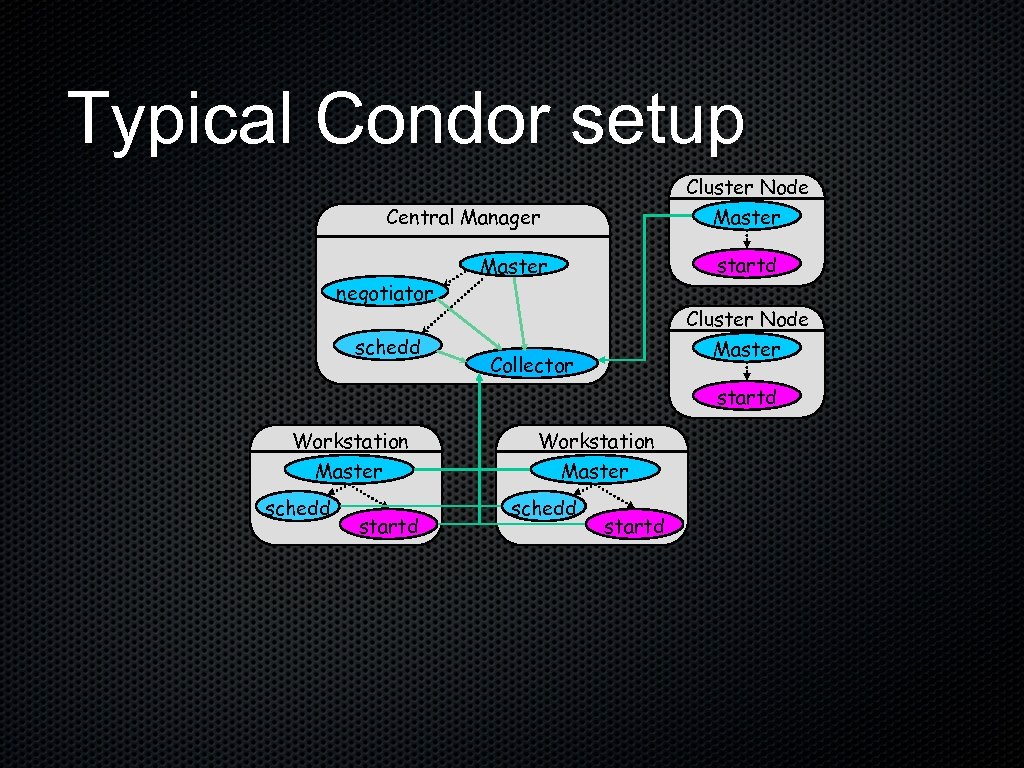

Typical Condor setup (diagram):
- Central Manager: master, collector, negotiator, schedd, startd
- Cluster nodes: master, startd
- Workstation: master, schedd, startd
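Which daemons a machine runs is controlled by the `DAEMON_LIST` setting in its Condor configuration. A small sketch that emits that line per role, assuming the role-to-daemon mapping read from the diagram above (the role names are hypothetical labels):

```python
# Hypothetical role names; daemon sets follow the typical-setup diagram.
ROLE_DAEMONS = {
    "central_manager": ["MASTER", "COLLECTOR", "NEGOTIATOR", "SCHEDD", "STARTD"],
    "cluster_node": ["MASTER", "STARTD"],            # execute only
    "workstation": ["MASTER", "SCHEDD", "STARTD"],   # can submit and execute
}


def daemon_list_line(role: str) -> str:
    """Return the condor_config DAEMON_LIST line for a machine role."""
    return "DAEMON_LIST = " + ", ".join(ROLE_DAEMONS[role])


for role in ROLE_DAEMONS:
    print(f"# {role}")
    print(daemon_list_line(role))
```

Only the central manager lists COLLECTOR and NEGOTIATOR; every other machine points at it rather than running its own.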

Condor Priority
- User priority is managed by a complex algorithm (half-life decay) with configurable parameters.
- The system does not kick off running jobs; the resource claim is freed as soon as the job finishes.
- This enforces fair use AND allows vanilla jobs to finish.
- Optimized for Grid computing.
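The half-life idea can be written down in a few lines. A sketch assuming the "real user priority" update described in the Condor manual, an exponentially decaying average of the slots a user holds, with the half-life corresponding to the `PRIORITY_HALFLIFE` setting (86400 s assumed here). Lower values mean better priority; the function name is hypothetical:

```python
def update_real_priority(prev: float, slots_in_use: float, dt: float,
                         half_life: float = 86400.0) -> float:
    """Advance a user's real priority by dt seconds.

    pi(t) = beta * pi(t - dt) + (1 - beta) * rho(t),  beta = 0.5 ** (dt / half_life)
    where rho(t) is the number of slots the user currently holds.
    """
    beta = 0.5 ** (dt / half_life)
    return beta * prev + (1.0 - beta) * slots_in_use


# A user whose priority reached 160 (all slots) and who then stops running jobs:
p = 160.0
for day in range(1, 4):
    p = update_real_priority(p, slots_in_use=0, dt=86400.0)
    print(day, p)  # 80.0, then 40.0, then 20.0
```

Because the value only decays over time, a heavy user regains good priority gradually, without any running job being kicked off.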

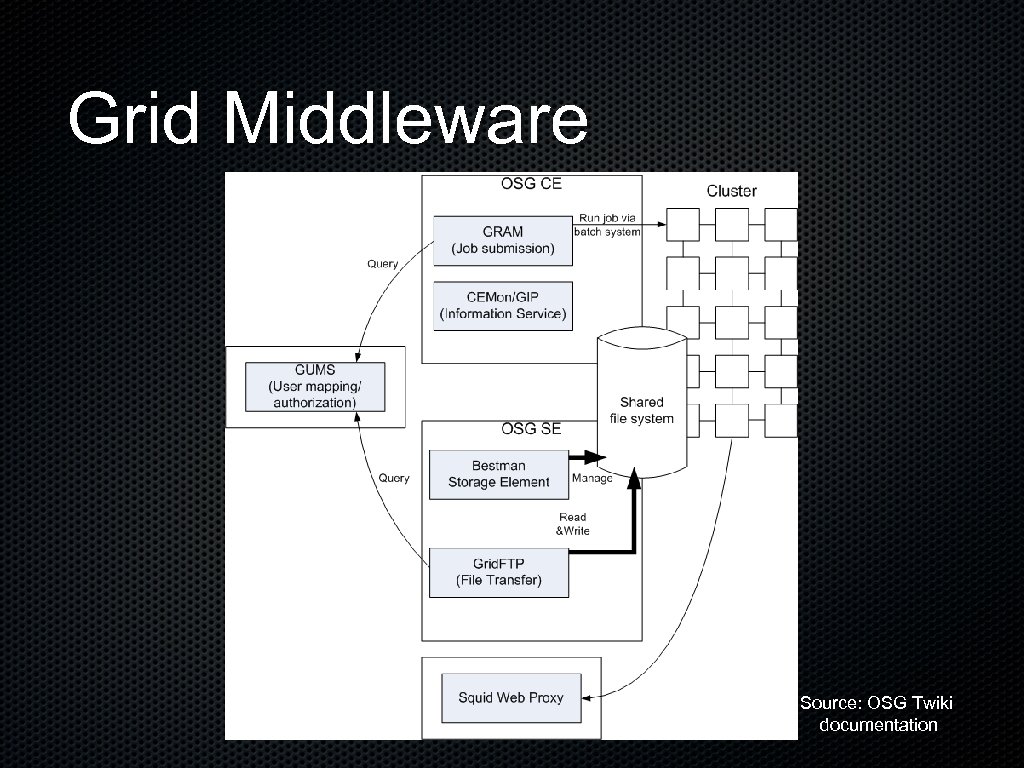

Grid Middleware (source: OSG Twiki documentation)

OSG Middleware
- OSG middleware is installed/updated with the Virtual Data Toolkit (VDT).
- Site configuration was complex before the 1.0 release; it is simpler now.
- Provides the Globus framework and security via a Certificate Authority.
- Low maintenance: Resource Service Validation (RSV) provides a snapshot of the site.
- The Grid User Management System (GUMS) handles mapping of grid certificates to local users.
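The core of certificate-to-user mapping is a lookup from a certificate's subject DN to a local account, the same idea the classic Globus grid-mapfile expresses as a flat file. A sketch of parsing grid-mapfile-style text; it does not use any GUMS interface, and the DNs and account names are hypothetical:

```python
import shlex
from typing import Dict


def parse_gridmap(text: str) -> Dict[str, str]:
    """Map certificate subject DNs to local accounts from grid-mapfile-style text.

    Each non-comment line is: "<subject DN>" <account>[,<account>...]
    The first listed account is used.
    """
    mapping: Dict[str, str] = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue
        fields = shlex.split(line)  # handles the quoted DN, which contains spaces
        if len(fields) < 2:
            continue  # malformed line: no account given
        dn, accounts = fields[0], fields[1]
        mapping[dn] = accounts.split(",")[0]
    return mapping


# Hypothetical DNs and accounts, for illustration only.
example = '''
# grid-mapfile
"/DC=org/DC=doegrids/OU=People/CN=Jane Doe 123456" uscms01
"/DC=org/DC=doegrids/OU=People/CN=John Roe 654321" osg01,osg02
'''
print(parse_gridmap(example))
```

A grid job arriving with Jane Doe's certificate would then run under the local `uscms01` account.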

BeStMan Storage
- Berkeley Storage Manager: the SE runs a basic gateway configuration. Short config, but hard to get working.
- Not nearly as difficult as dCache; BeStMan is a good replacement for small to medium sites.
- Allows grid users to transfer data to and from designated storage via LFN, e.g. srm://uscms1-se.fltech-grid3.fit.edu:8443/srm/v2/server?SFN=/bestman/BeStMan/cms...
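The example URL has a fixed shape: SRM host and port, the web-service endpoint `/srm/v2/server`, and the file path in the `SFN` query parameter. A sketch that builds and splits such URLs with Python's standard `urllib.parse`; the file path after `/bestman/BeStMan/cms` is hypothetical:

```python
from typing import Optional, Tuple
from urllib.parse import parse_qs, urlsplit

SRM_ENDPOINT = "/srm/v2/server"  # web-service path from the slide's example URL


def build_surl(host: str, port: int, sfn: str) -> str:
    """Compose an SRM URL addressing a file by its site file name (SFN)."""
    return f"srm://{host}:{port}{SRM_ENDPOINT}?SFN={sfn}"


def split_surl(surl: str) -> Tuple[Optional[str], Optional[int], str, str]:
    """Return (host, port, endpoint path, SFN) from an SRM URL."""
    parts = urlsplit(surl)
    sfn = parse_qs(parts.query)["SFN"][0]  # note: parse_qs would decode '+' as a space
    return parts.hostname, parts.port, parts.path, sfn


# Hypothetical file under the slide's /bestman/BeStMan/cms prefix.
url = build_surl("uscms1-se.fltech-grid3.fit.edu", 8443,
                 "/bestman/BeStMan/cms/example/test.root")
print(url)
print(split_surl(url))
```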

WLCG
- Large Hadron Collider: expected 15 PB/year. The Compact Muon Solenoid (CMS) detector will be a large part of this.
- The Worldwide LHC Computing Grid (WLCG) handles the data and interfaces with sites in OSG, EGEE (European), etc.
- Tier 0: CERN; Tier 1: Fermilab; closest Tier 2: UFlorida; Tier 3: us!
- Not officially part of the CMS computing group (i.e. no funding), but very important for dataset storage and analysis.
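To put 15 PB/year in perspective, it can be converted to an average sustained rate. A sketch assuming decimal units (1 PB = 10^15 bytes) and data spread evenly over a 365.25-day year; real LHC data taking is burstier than this:

```python
SECONDS_PER_YEAR = 365.25 * 24 * 3600


def average_rate_mb_per_s(petabytes_per_year: float) -> float:
    """Average sustained rate in MB/s for a yearly volume (1 PB = 1e15 bytes)."""
    return petabytes_per_year * 1e15 / SECONDS_PER_YEAR / 1e6


print(round(average_rate_mb_per_s(15)))  # 475
```

Roughly 475 MB/s around the clock, which is why the data is distributed across tiers rather than served from one site.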

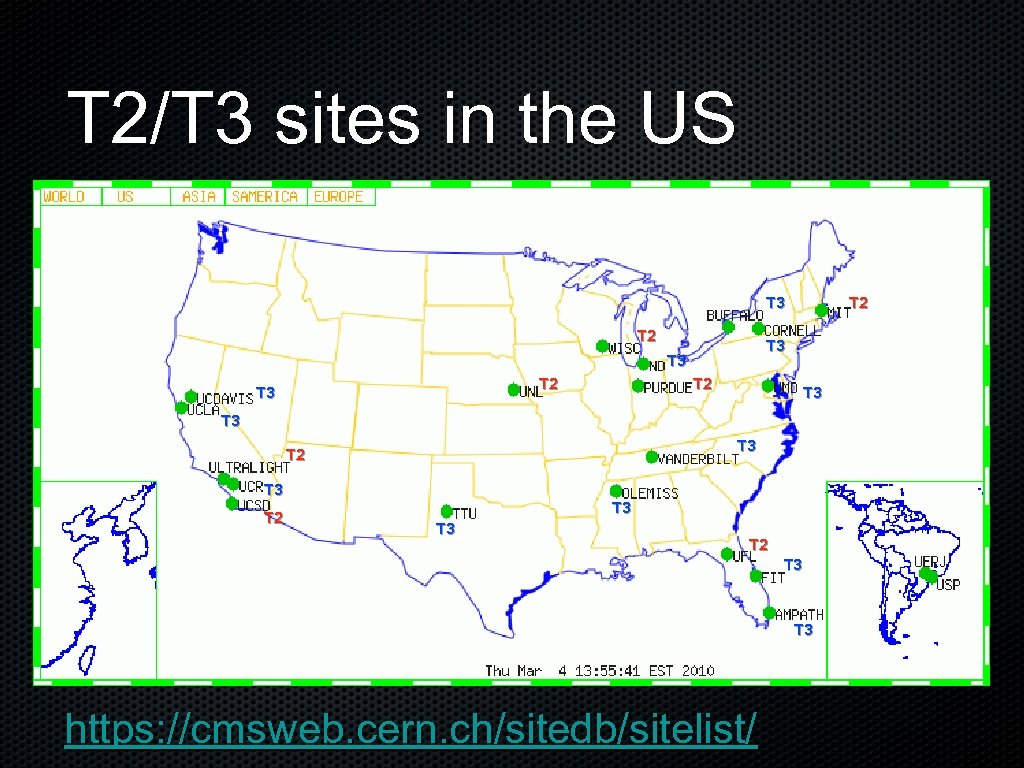

T2/T3 sites in the US (map of Tier 2 and Tier 3 sites; source: https://cmsweb.cern.ch/sitedb/sitelist/)

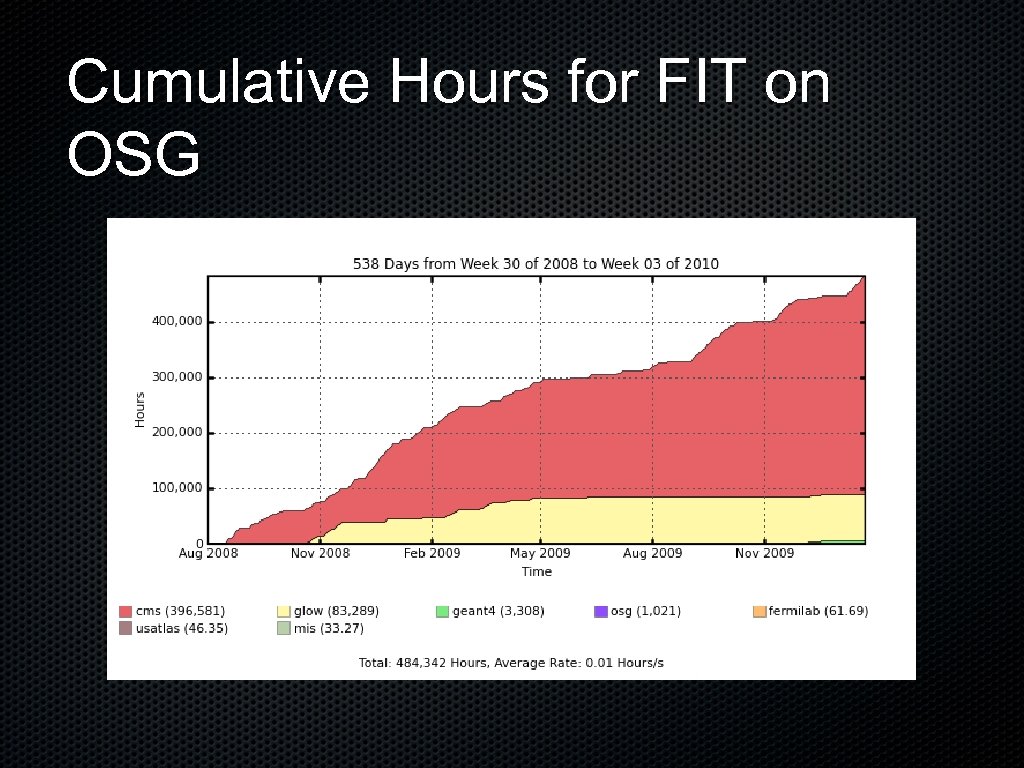

Cumulative Hours for FIT on OSG

Local Usage Trends
- Over 400,000 cumulative hours for CMS
- Over 900,000 cumulative hours by local users
- Total of 1.3 million CPU hours utilized

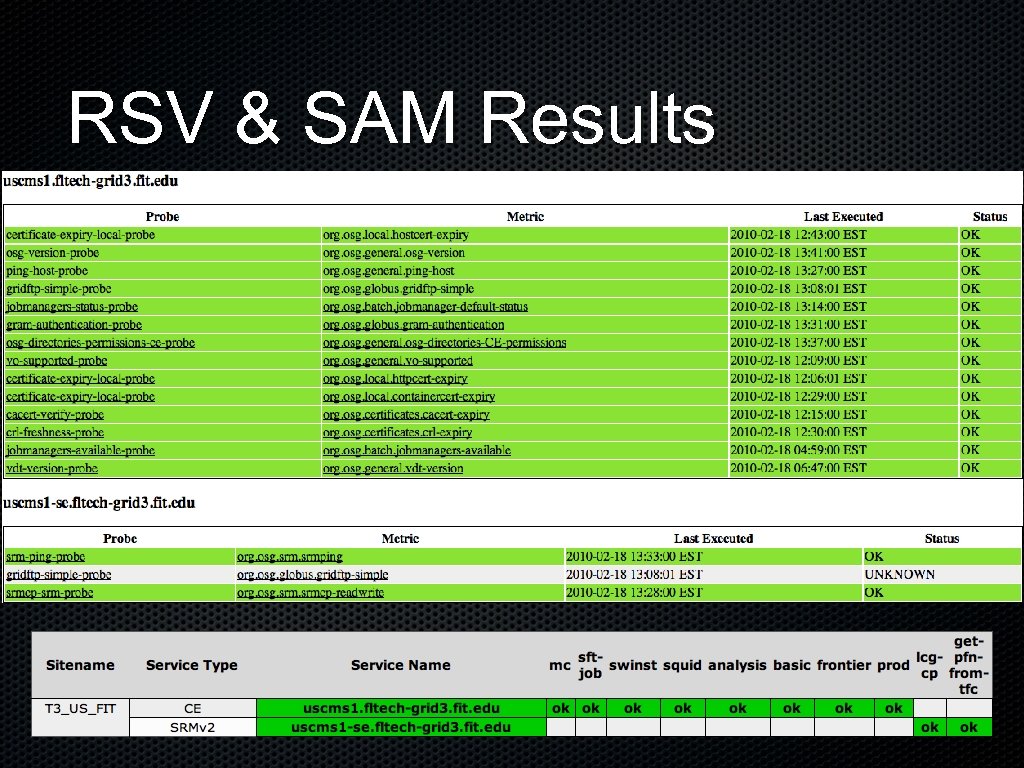

Tier-3 Sites
- Not yet completely defined.
- Consensus: T3 sites give scientists a framework for collaboration (via transfer of datasets) and also provide compute resources.
- Regular testing by RSV and Site Availability Monitoring (SAM) tests, and OSG site information published to CMS.
- FIT is one of the largest Tier 3 sites.

RSV & SAM Results

PhEDEx
- Physics Experiment Data Exports: the final milestone for our site.
- Physics datasets can be downloaded from other sites or exported to other sites.
- All relevant datasets are catalogued in the CMS Data Bookkeeping System (DBS), which keeps track of dataset locations on the grid.
- A central web interface handles dataset copy/deletion requests.
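The bookkeeping idea (each dataset maps to the set of sites holding a replica, and copy or deletion requests change that set) can be sketched as a small data structure. This is a hypothetical illustration, not the DBS or PhEDEx API; the dataset name is made up, and the site names follow the CMS Tier_Country_Name style only for readability:

```python
from collections import defaultdict
from typing import Dict, Set


class DatasetLocations:
    """Toy dataset -> hosting-sites catalogue with copy/deletion requests (hypothetical)."""

    def __init__(self) -> None:
        self._sites: Dict[str, Set[str]] = defaultdict(set)

    def register(self, dataset: str, site: str) -> None:
        self._sites[dataset].add(site)

    def sites_for(self, dataset: str) -> Set[str]:
        return set(self._sites.get(dataset, set()))

    def request_copy(self, dataset: str, dest: str) -> bool:
        # A copy needs at least one existing replica to act as the source.
        if not self._sites.get(dataset):
            return False
        self._sites[dataset].add(dest)  # models a completed transfer
        return True

    def request_deletion(self, dataset: str, site: str) -> None:
        self._sites.get(dataset, set()).discard(site)


catalog = DatasetLocations()
catalog.register("/Hypothetical/Dataset/RECO", "T2_US_Florida")
catalog.request_copy("/Hypothetical/Dataset/RECO", "T3_US_FIT")
print(sorted(catalog.sites_for("/Hypothetical/Dataset/RECO")))
```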

Demo
- http://myosg.grid.iu.edu
- http://uscms1.fltech-grid3.fit.edu/ganglia/
- https://cmsweb.cern.ch/dbs_discovery/aSearch?caseSensitive=on&userMode=user&sortOrder=desc&sortName=&grid=0&method=dbsapi&dbsInst=cms_dbs_ph_analysis_02&userInput=find+dataset+where+site+like+*FLTECH*+and+dataset.status+like+VALID*
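The third demo link is a Data Discovery search whose `userInput` parameter carries the query "find dataset where site like *FLTECH* and dataset.status like VALID*". A sketch that rebuilds that URL for any site pattern with the standard `urllib.parse.urlencode`; the function name is hypothetical, and the service itself may no longer be online:

```python
from urllib.parse import urlencode

DBS_DISCOVERY = "https://cmsweb.cern.ch/dbs_discovery/aSearch"


def dataset_query_url(site_pattern: str, dbs_instance: str = "cms_dbs_ph_analysis_02") -> str:
    """Rebuild the demo's Data Discovery search URL for a given site pattern."""
    query = f"find dataset where site like {site_pattern} and dataset.status like VALID*"
    params = {
        "caseSensitive": "on",
        "userMode": "user",
        "sortOrder": "desc",
        "sortName": "",
        "grid": "0",
        "method": "dbsapi",
        "dbsInst": dbs_instance,
        "userInput": query,
    }
    # urlencode uses quote_plus (spaces become '+'); safe="*" keeps the wildcards literal.
    return DBS_DISCOVERY + "?" + urlencode(params, safe="*")


print(dataset_query_url("*FLTECH*"))
```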

CMS Remote Analysis Builder (CRAB)
- Universal method for experimental data processing.
- Automates the analysis workflow, i.e. status tracking and resubmissions.
- Datasets can be exported to the Data Discovery Page.
- Used extensively locally in our muon tomography simulations.
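"Status tracking and resubmissions" boils down to a retry loop per job with a recorded final state. A minimal sketch of that pattern; it is not CRAB's interface, and `run_job` is a hypothetical callback standing in for a real submission plus status polling:

```python
from typing import Callable, Dict, Iterable


def run_with_resubmission(job_ids: Iterable[int],
                          run_job: Callable[[int, int], bool],
                          max_attempts: int = 3) -> Dict[int, str]:
    """Run each job, resubmitting failures, and record a final status per job.

    run_job(job_id, attempt) returns True on success (hypothetical interface).
    """
    status: Dict[int, str] = {}
    for job_id in job_ids:
        for attempt in range(1, max_attempts + 1):
            if run_job(job_id, attempt):
                status[job_id] = f"done (attempt {attempt})"
                break
        else:  # loop ended without break: every attempt failed
            status[job_id] = "failed"
    return status


# Deterministic stand-in: job N succeeds on attempt N, so job 4 exhausts its 3 attempts.
print(run_with_resubmission([1, 2, 4], lambda job, attempt: attempt >= job))
```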

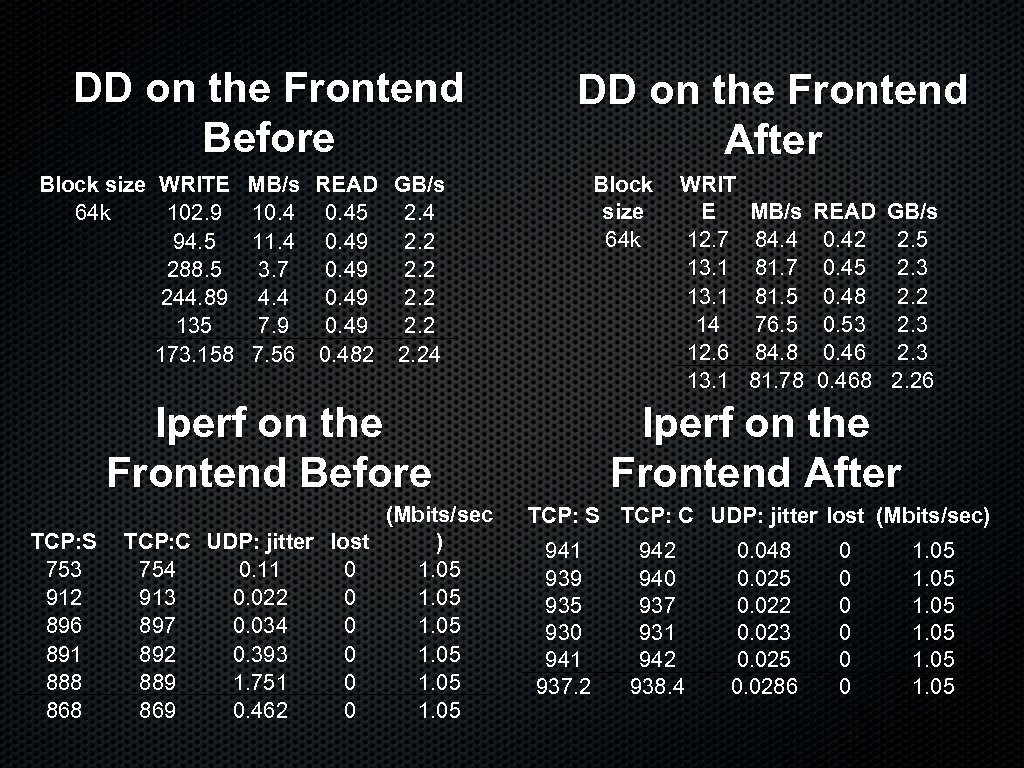

Network Performance
- Changed to a default 64 kB block size across NFS.
- Changed the RAID array settings to fix write caching.
- Increased the kernel memory allocation for TCP.
- Improvements in both network and grid transfer rates.
- dd copy tests across the network:
  - Reading: from 2.24 to 2.26 GB/s
  - Writing: from 7.56 to 81.78 MB/s
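A dd-style copy test writes a file in fixed-size blocks, times it, and reads it back. A rough Python approximation with 64 KiB blocks; it is not necessarily how the slide's numbers were produced, and the read figure may come from the page cache rather than the network:

```python
import os
import time


def write_throughput_mb_s(path: str, block_size: int = 64 * 1024, count: int = 1024) -> float:
    """Write count blocks of zeros to path (like dd bs=64k), fsync, and return MB/s."""
    block = bytes(block_size)
    start = time.perf_counter()
    with open(path, "wb") as f:
        for _ in range(count):
            f.write(block)
        f.flush()
        os.fsync(f.fileno())  # push data out of the client cache before stopping the clock
    elapsed = max(time.perf_counter() - start, 1e-9)
    return block_size * count / elapsed / 1e6


def read_throughput_mb_s(path: str, block_size: int = 64 * 1024) -> float:
    """Read path back in block_size chunks and return MB/s."""
    total = 0
    start = time.perf_counter()
    with open(path, "rb") as f:
        while True:
            chunk = f.read(block_size)
            if not chunk:
                break
            total += len(chunk)
    elapsed = max(time.perf_counter() - start, 1e-9)
    return total / elapsed / 1e6
```

Point `path` at a file on the NFS mount under test (for example a hypothetical `/mnt/nas/ddtest.bin`) and compare the numbers before and after a tuning change.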

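DD on the Frontend, before (block size 64 k)

| Run | Write time (s) | Write (MB/s) | Read time (s) | Read (GB/s) |
|---|---|---|---|---|
| 1 | 102.9 | 10.45 | | 2.4 |
| 2 | 94.5 | 11.4 | 0.49 | 2.2 |
| 3 | 288.5 | 3.7 | 0.49 | 2.2 |
| 4 | 244.89 | 4.4 | 0.49 | 2.2 |
| 5 | 135 | 7.9 | 0.49 | 2.2 |
| Average | 173.158 | 7.56 | 0.482 | 2.24 |

DD on the Frontend, after (block size 64 k)
- Write time (s): 12.7, 13.1, 14, 12.6, 13.1
- Write (MB/s): 84.4, 81.7, 81.5, 76.5, 84.8; average 81.78
- Read time (s): 0.42, 0.45, 0.48, 0.53; average 0.468
- Read (GB/s): 2.5, 2.3; average 2.26

Iperf on the Frontend, before

| Run | TCP server (Mbits/sec) | TCP client (Mbits/sec) | UDP jitter (ms) | UDP lost | UDP (Mbits/sec) |
|---|---|---|---|---|---|
| 1 | 753 | 754 | 0.11 | 0 | 1.05 |
| 2 | 912 | 913 | 0.022 | 0 | 1.05 |
| 3 | 896 | 897 | 0.034 | 0 | 1.05 |
| 4 | 891 | 892 | 0.393 | 0 | 1.05 |
| 5 | 888 | 889 | 1.751 | 0 | 1.05 |
| Average | 868 | 869 | 0.462 | 0 | 1.05 |

Iperf on the Frontend, after
- TCP server (Mbits/sec): 941, 939, 935, 930, 941; average 937.2
- TCP client (Mbits/sec): 940, 937, 931, 942; average 938.4
- UDP jitter (ms): 0.048, 0.025, 0.022, 0.023, 0.025; average 0.0286
- UDP lost: 0, 0, 0
- UDP (Mbits/sec): 1.05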
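The headline numbers are simple averages over five runs, so they can be rechecked from the per-run values on the slide. A short sketch using Python's `statistics.mean` on the iperf TCP server throughput and the dd write rates:

```python
from statistics import mean

# Per-run values from the frontend measurements (Mbits/sec for iperf, MB/s for dd).
iperf_tcp_server_before = [753, 912, 896, 891, 888]
iperf_tcp_server_after = [941, 939, 935, 930, 941]
dd_write_mb_s_before = [10.45, 11.4, 3.7, 4.4, 7.9]
dd_write_mb_s_after = [84.4, 81.7, 81.5, 76.5, 84.8]

print(mean(iperf_tcp_server_before), mean(iperf_tcp_server_after))  # 868 937.2
# Write speed-up factor after the NFS, RAID, and TCP changes:
print(round(mean(dd_write_mb_s_after) / mean(dd_write_mb_s_before), 1))  # 10.8
```

The write path improved by roughly an order of magnitude, while TCP throughput moved from about 87% to about 94% of gigabit line rate.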
