- Number of slides: 27

Finite State Parsing & Information Extraction
CMSC 35100 Intro to NLP
January 10, 2006

Roadmap
• Motivation
  – Limitations & Advantages
• Example: Fastus
  – Finite state cascades
• Other applications

Why NOT Finite State?
• Fundamental representational limitations
  – Finite-state systems can't handle unbounded recursion
  – Unsupported phenomena: center embedding, etc.
  – Regular (finite-state) languages are a strict subset of the context-free languages

Why Finite State?
• Significant computational advantages
  – FAST!!!!
    • 10 mins vs 36 hours for 100 sentences
  – Can compile rules, even CFGs, to transducers
    • Approximate CFGs, overgenerate in specific ways
  – Toolkits
• Minimal representational limitations
  – Most recursion is actually bounded
    • Human memory practically limits depth of recursion
    • Unroll finite number of recursions
• Sufficient, simple representation for many tasks
  – Information extraction, speech recognition
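To make "unroll finite number of recursions" concrete, here is a minimal Python sketch. It assumes the toy recursive rule S -> a S b | a b (not from the slides) and builds an ordinary regular expression that accepts its strings up to a fixed nesting depth. The names `unroll` and `accepts` are illustrative only.

```python
import re

def unroll(depth: int) -> str:
    """Regex for a^n b^n with 1 <= n <= depth, from unrolling S -> a S b | a b."""
    pattern = "ab"  # depth 1: the non-recursive alternative
    for _ in range(depth - 1):
        # One more level of nesting: the inner S is optional and already bounded.
        pattern = f"a(?:{pattern})?b"
    return pattern

# Bound the recursion at depth 3 (an arbitrary choice for the example).
BOUNDED = re.compile(unroll(3))

def accepts(s: str) -> bool:
    """True if s is a^n b^n with n between 1 and 3."""
    return BOUNDED.fullmatch(s) is not None

if __name__ == "__main__":
    for s in ["ab", "aabb", "aaabbb", "aaaabbbb", "aab"]:
        print(s, accepts(s))
```

The resulting pattern is purely finite-state: it rejects anything nested deeper than the chosen bound, which is the trade-off the slide describes.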

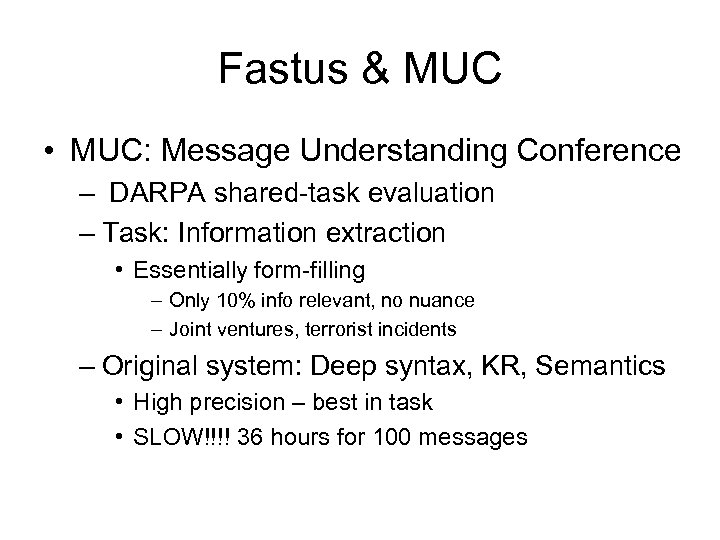

Fastus & MUC
• MUC: Message Understanding Conference
  – DARPA shared-task evaluation
  – Task: Information extraction
    • Essentially form-filling
  – Only 10% info relevant, no nuance
  – Joint ventures, terrorist incidents
  – Original system: Deep syntax, KR, Semantics
    • High precision, best in task
    • SLOW!!!! 36 hours for 100 messages

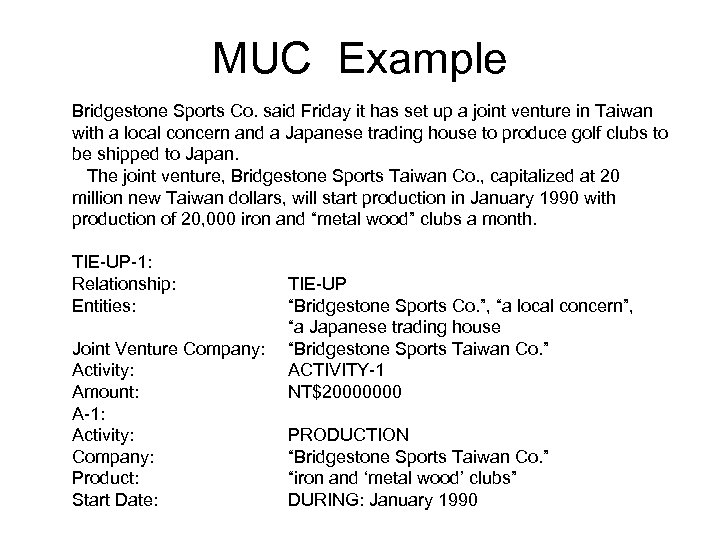

MUC Example
Bridgestone Sports Co. said Friday it has set up a joint venture in Taiwan with a local concern and a Japanese trading house to produce golf clubs to be shipped to Japan. The joint venture, Bridgestone Sports Taiwan Co., capitalized at 20 million new Taiwan dollars, will start production in January 1990 with production of 20,000 iron and “metal wood” clubs a month.

TIE-UP-1:
  Relationship:          TIE-UP
  Entities:              “Bridgestone Sports Co.”, “a local concern”, “a Japanese trading house”
  Joint Venture Company: “Bridgestone Sports Taiwan Co.”
  Activity:              ACTIVITY-1
  Amount:                NT$20000000

ACTIVITY-1:
  Activity:   PRODUCTION
  Company:    “Bridgestone Sports Taiwan Co.”
  Product:    “iron and ‘metal wood’ clubs”
  Start Date: DURING: January 1990

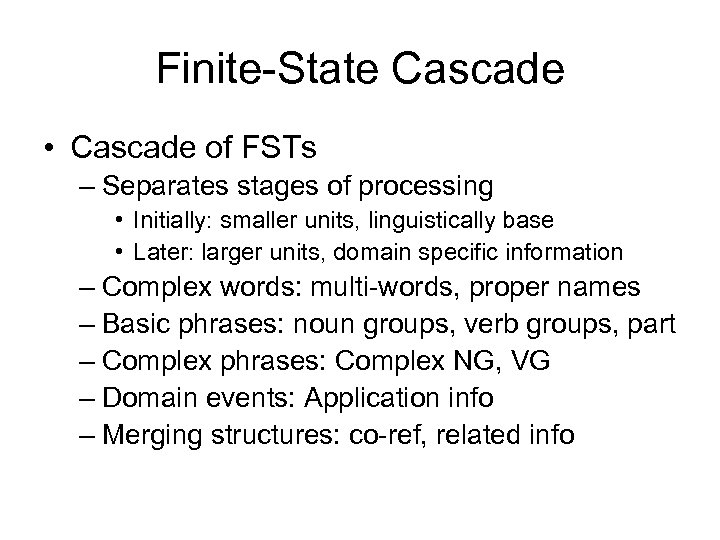

Finite-State Cascade
• Cascade of FSTs
  – Separates stages of processing
    • Initially: smaller units, linguistically based
    • Later: larger units, domain-specific information
  – Complex words: multi-words, proper names
  – Basic phrases: noun groups, verb groups, particles
  – Complex phrases: Complex NG, VG
  – Domain events: Application info
  – Merging structures: co-ref, related info
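A rough Python sketch of the first two stages of such a cascade follows. The lexicon, the multiword list, and the tag names are toy assumptions made up for this illustration; they are not FASTUS's actual resources, and a regular expression over the tag sequence stands in for a real transducer.

```python
import re
from typing import List, Tuple

Token = Tuple[str, str]  # (label, text)

# Toy resources: assumptions for illustration only.
MULTIWORDS = [("joint", "venture"), ("trading", "house"), ("set", "up")]
LEXICON = {"a": "Det", "local": "Adj", "Japanese": "Adj", "concern": "N",
           "joint venture": "N", "trading house": "N", "set up": "V",
           "with": "P", "and": "Conj", "has": "Aux", "it": "Pro"}

def complex_words(words: List[str]) -> List[str]:
    """Stage 1: merge known two-word expressions into single tokens."""
    out, i = [], 0
    while i < len(words):
        if i + 1 < len(words) and (words[i], words[i + 1]) in MULTIWORDS:
            out.append(words[i] + " " + words[i + 1])
            i += 2
        else:
            out.append(words[i])
            i += 1
    return out

def tag(words: List[str]) -> List[Token]:
    """Look up a tag for each token; unknown tokens get the tag 'X'."""
    return [(LEXICON.get(w, "X"), w) for w in words]

# Noun group: Det? Adj* N+ ; verb group: Aux? V ; anything else passes through.
PHRASES = re.compile(
    r"(?P<NG>(?:Det )?(?:Adj )*(?:N )+)|(?P<VG>(?:Aux )?V )|(?P<O>\S+ )")

def basic_phrases(tokens: List[Token]) -> List[Token]:
    """Stage 2: group tagged tokens into noun groups (NG) and verb groups (VG)."""
    tags = " ".join(t for t, _ in tokens) + " "
    out, i = [], 0
    for m in PHRASES.finditer(tags):
        n = len(m.group(0).split())  # number of tokens this match covers
        text = " ".join(w for _, w in tokens[i:i + n])
        label = m.lastgroup if m.lastgroup != "O" else tokens[i][0]
        out.append((label, text))
        i += n
    return out

def cascade(sentence: str) -> List[Token]:
    """Run the stages in order; each consumes the previous stage's output."""
    return basic_phrases(tag(complex_words(sentence.split())))

if __name__ == "__main__":
    for label, text in cascade("it has set up a joint venture with a local concern"):
        print(f"{label}: {text}")
```

Each stage only sees the output of the one before it, so later stages can work with larger units (here, whole noun and verb groups) without re-examining individual words.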

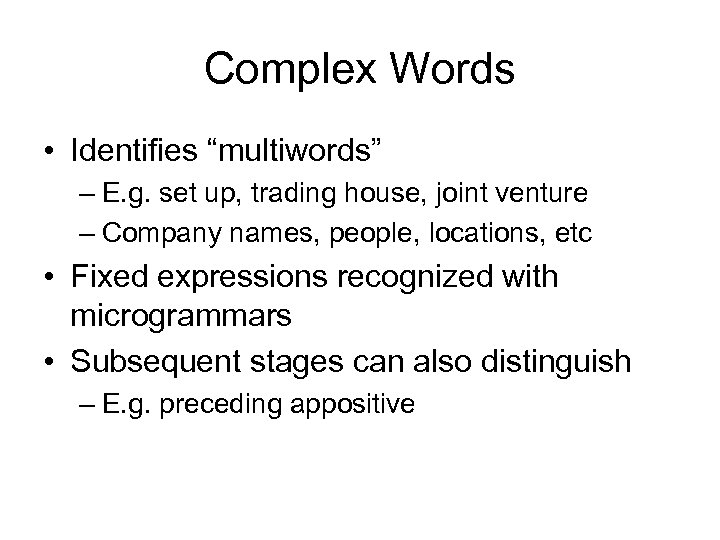

Complex Words
• Identifies “multiwords”
  – E.g. set up, trading house, joint venture
  – Company names, people, locations, etc.
• Fixed expressions recognized with microgrammars
• Subsequent stages can also distinguish
  – E.g. preceding appositive

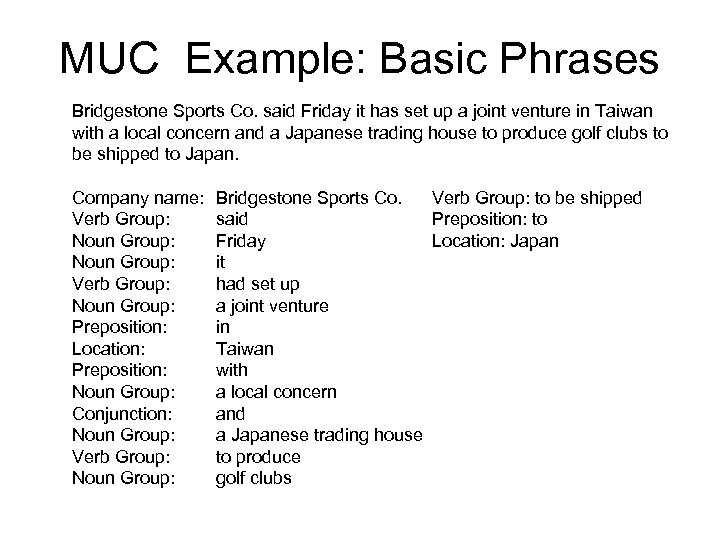

MUC Example: Basic Phrases
Bridgestone Sports Co. said Friday it has set up a joint venture in Taiwan with a local concern and a Japanese trading house to produce golf clubs to be shipped to Japan.

Company name: Bridgestone Sports Co.
Verb Group:   said
Noun Group:   Friday
Noun Group:   it
Verb Group:   has set up
Noun Group:   a joint venture
Preposition:  in
Location:     Taiwan
Preposition:  with
Noun Group:   a local concern
Conjunction:  and
Noun Group:   a Japanese trading house
Verb Group:   to produce
Noun Group:   golf clubs
Verb Group:   to be shipped
Preposition:  to
Location:     Japan

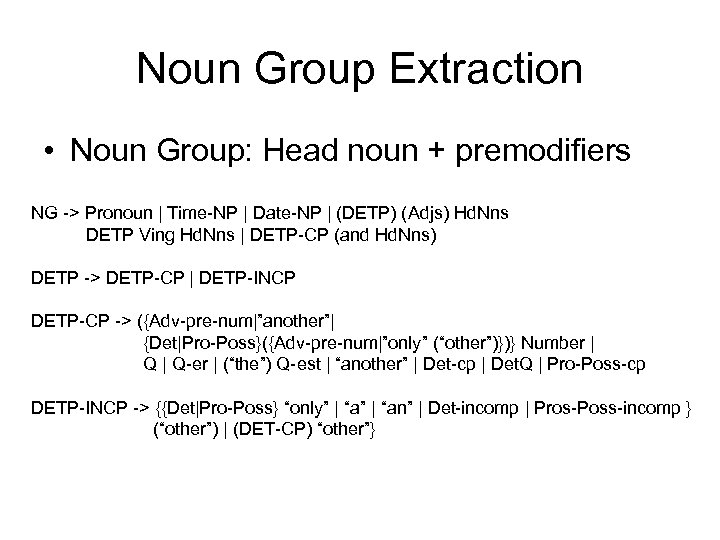

Noun Group Extraction
• Noun Group: Head noun + premodifiers
NG -> Pronoun | Time-NP | Date-NP
    | (DETP) (Adjs) HdNns
    | DETP Ving HdNns
    | DETP-CP ("and" HdNns)
DETP -> DETP-CP | DETP-INCP
DETP-CP -> ({Adv-pre-num | "another" | {Det | Pro-Poss} ({Adv-pre-num | "only" ("other")})}) Number
    | Q-er | ("the") Q-est | "another" | Det-cp | DetQ | Pro-Poss-cp
DETP-INCP -> {{Det | Pro-Poss} "only" | "an" | Det-incomp | Pro-Poss-incomp} ("other")
    | (DETP-CP) "other"

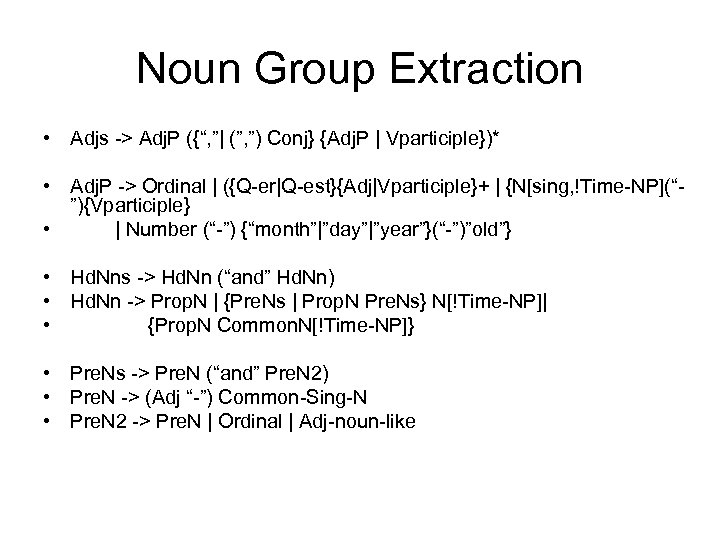

Noun Group Extraction
• Adjs -> AdjP ({"," | (",") Conj} {AdjP | Vparticiple})*
• AdjP -> Ordinal
    | ({Q-er | Q-est}) {Adj | Vparticiple}+
    | {N[sing, !Time-NP] ("-") Vparticiple}
    | Number ("-") {"month" | "day" | "year"} ("-") "old"
• HdNns -> HdNn ("and" HdNn)
• HdNn -> PropN | {PreNs | PropN PreNs} N[!Time-NP] | {PropN CommonN[!Time-NP]}
• PreNs -> PreN ("and" PreN2)
• PreN -> (Adj "-") Common-Sing-N
• PreN2 -> PreN | Ordinal | Adj-noun-like

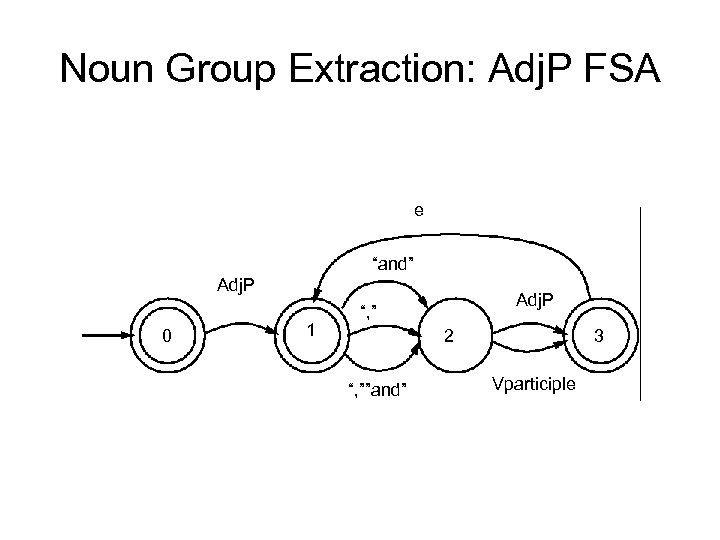

Noun Group Extraction: AdjP FSA
[Figure: finite-state automaton with states 0-3. Arc labels: AdjP, Vparticiple, ",", "and", and e (epsilon).]
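As an illustration of how a rule of the form Adjs -> AdjP ({"," | (",") Conj} {AdjP | Vparticiple})* becomes an automaton, the sketch below encodes it as a deterministic transition table in Python. The state numbering is an assumption made for this example and need not match the slide's figure, which uses epsilon arcs.

```python
from typing import Dict, Iterable, Tuple

# (state, input symbol) -> next state. State numbering is hypothetical.
TRANSITIONS: Dict[Tuple[int, str], int] = {
    (0, "AdjP"): 1,           # first adjective phrase
    (1, ","): 2,              # separator: comma ...
    (1, "Conj"): 3,           # ... or a bare conjunction
    (2, "Conj"): 3,           # comma followed by a conjunction
    (2, "AdjP"): 1,
    (2, "Vparticiple"): 1,
    (3, "AdjP"): 1,
    (3, "Vparticiple"): 1,
}
ACCEPTING = {1}

def accepts(symbols: Iterable[str]) -> bool:
    """Run the automaton over a sequence of category symbols."""
    state = 0
    for symbol in symbols:
        next_state = TRANSITIONS.get((state, symbol))
        if next_state is None:  # no arc for this symbol: reject
            return False
        state = next_state
    return state in ACCEPTING

if __name__ == "__main__":
    print(accepts(["AdjP", ",", "Conj", "Vparticiple"]))
    print(accepts(["AdjP", ","]))
```

The starred group in the rule turns into a loop back to state 1, so the automaton needs no stack and no self-reference.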

![Noun Group Extraction: Adj FSA](https://present5.com/presentation/1526a62d85b009580d5fbca8f6cc91ca/image-13.jpg)

Noun Group Extraction: Adj FSA
[Figure: finite-state automaton with states 0-9. Arc labels: Ordinal, Q-er, Q-est, Adj, Vparticiple, Nsing[!Time-NP], "-", "month" | "day" | "year", "old", and e (epsilon).]

Complex Phrases
• Build up from basic noun and verb groups
  – Attach appositives
  – Construct measure phrases
  – Attach prepositional phrases
  – Conjoin noun phrases
• Combine syntactic variants, modalities with common meaning
• Identify domain entities and events

Domain Events
• Ordered list of complex phrases
  – Drops out all other elements -> robustness
• Transitions driven by headword + phrase type
  – E.g. "company-NounGroup", "FormedPassiveVerbGroup"
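The idea of transitions keyed on headword plus phrase type can be sketched as follows. This is a simplified Python illustration, not the FASTUS implementation: the `Phrase` type, the `match_event` helper, and the hyphenated symbol "formed-PassiveVerbGroup" are all assumptions made for the example.

```python
from typing import List, NamedTuple, Optional

class Phrase(NamedTuple):
    head: str   # headword of the complex phrase
    kind: str   # phrase type, e.g. "NounGroup"
    text: str   # surface string

def symbol(p: Phrase) -> str:
    """Transition symbol: headword + phrase type."""
    return f"{p.head}-{p.kind}"

def match_event(pattern: List[str], phrases: List[Phrase]) -> Optional[List[Phrase]]:
    """Match the pattern symbols in order, skipping phrases that do not advance it.

    Skipping irrelevant phrases is what gives the robustness noted on the slide.
    """
    matched: List[Phrase] = []
    for p in phrases:
        if len(matched) < len(pattern) and symbol(p) == pattern[len(matched)]:
            matched.append(p)
    return matched if len(matched) == len(pattern) else None

if __name__ == "__main__":
    phrases = [
        Phrase("company", "NounGroup", "a joint venture company"),
        Phrase("yesterday", "Adverb", "yesterday"),
        Phrase("formed", "PassiveVerbGroup", "was formed"),
    ]
    print(match_event(["company-NounGroup", "formed-PassiveVerbGroup"], phrases))
```

A real system would attach slot-filling actions to each transition; here the function only reports which phrases drove the match.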

Multi-layer Cascades
• Finesse the recursion problem
  – Automata construction expands rules -> automata
  – AdjPs are duplicated, but no self-reference
  – AdjPs and NPs in conjunction independent
• One level identifies base, non-recursive NGs
• Next levels combine with
  – Measure phrases, prepositional phrases, conjunction
• Limits depth of possible “recursive” constructs

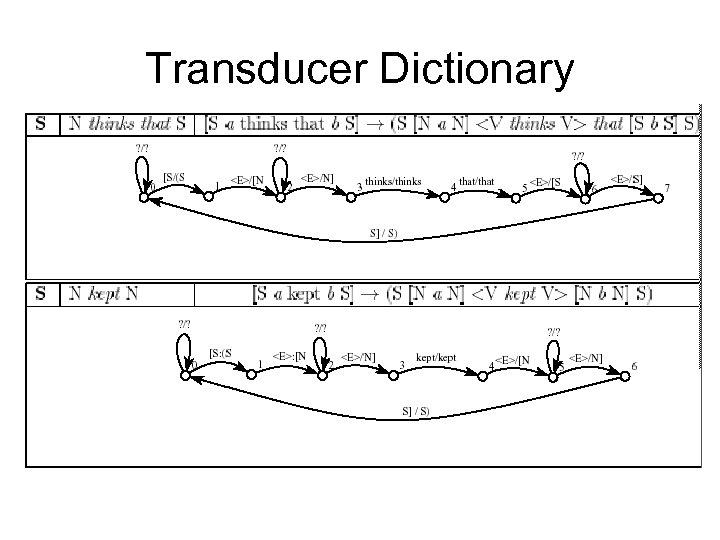

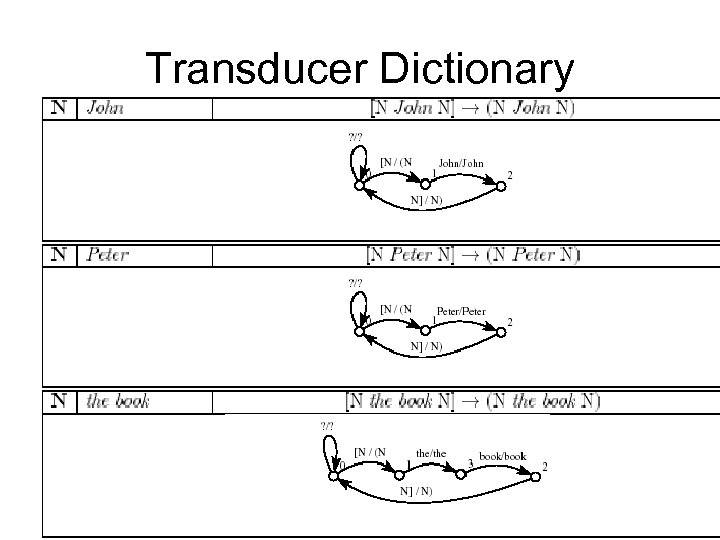

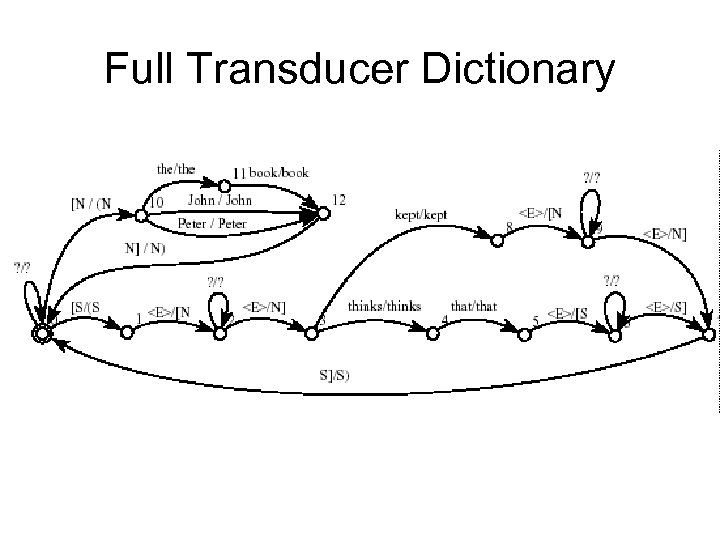

More Complete FST Parsing
• Roche 1996, 1997, etc.
• Construct syntactic dictionary
  – S | N thinks that S; S | N kept N
  – N | John; N | Peter; N | the book
• Convert entries to finite-state transducers
  – [S a thinks that B S] -> (S [N a N]

Transducer Dictionary

Transducer Dictionary

Full Transducer Dictionary

Transducers -> Parser
• Transducer dictionary = Union of transducers
  – T_dic = U T_i
• Parser = Repeated application of transducers
  – Repeat until output = input
    • Transduction causes no change
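The "repeat until output = input" loop can be sketched in Python as a fixed-point iteration. In this sketch, regular-expression rewrite rules stand in for the transducers, and they build brackets bottom-up; both are simplifying assumptions for illustration and are not Roche's actual construction. The rules cover only the dictionary entries listed earlier (John, Peter, the book, kept, thinks that).

```python
import re

# Hypothetical rewrite rules standing in for the transducer dictionary T_dic.
# Lookbehinds stop a rule from re-bracketing material it has already wrapped.
RULES = [
    (re.compile(r"(?<!\(N )\b(John|Peter|the book)\b"), r"(N \1 N)"),
    (re.compile(r"(?<!\(S )(\(N [^()]* N\)) kept (\(N [^()]* N\))"),
     r"(S \1 kept \2 S)"),
    (re.compile(r"(?<!\(S )(\(N [^()]* N\)) thinks that (\(S .* S\))"),
     r"(S \1 thinks that \2 S)"),
]

def t_dic(text: str) -> str:
    """One application of the whole dictionary: apply every rule once."""
    for pattern, replacement in RULES:
        text = pattern.sub(replacement, text)
    return text

def parse(text: str) -> str:
    """Apply the dictionary repeatedly until the output equals the input."""
    while True:
        output = t_dic(text)
        if output == text:  # transduction caused no change: fixed point
            return output
        text = output

if __name__ == "__main__":
    print(parse("John thinks that Peter kept the book"))
```

The termination test is exactly the slide's criterion: parsing stops at the first pass that leaves the string unchanged.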

Finite-State Extensions
• Finite-State Approaches to
  – Tree Adjoining Grammars
  – Machine translation
  – Multimodal analysis and interpretation

Probabilistic CFGs

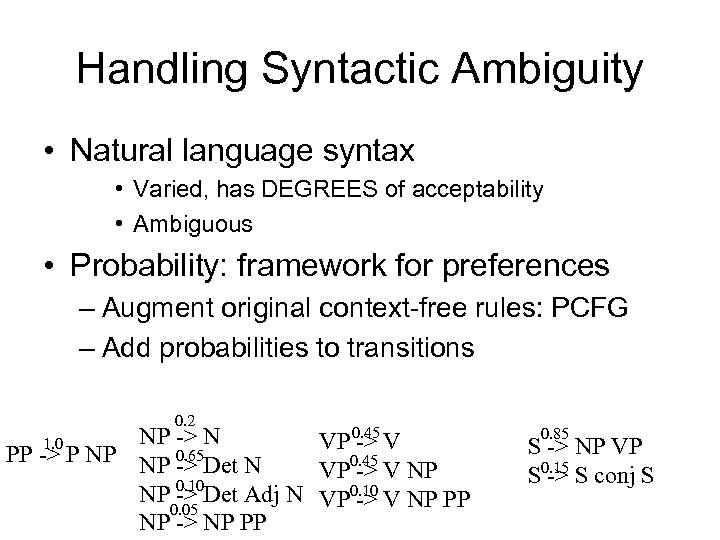

Handling Syntactic Ambiguity
• Natural language syntax
  • Varied, has DEGREES of acceptability
  • Ambiguous
• Probability: framework for preferences
  – Augment original context-free rules: PCFG
  – Add probabilities to transitions

S  -> NP VP       0.85
S  -> S conj S    0.15
NP -> N           0.20
NP -> Det N       0.65
NP -> Det Adj N   0.10
NP -> NP PP       0.05
VP -> V           0.45
VP -> V NP        0.45
VP -> V NP PP     0.10
PP -> P NP        1.0
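One way to hold such a grammar in code is a dictionary from rules to probabilities. The Python sketch below assumes the pairing of probabilities with rules given in the code (NP -> N 0.20, NP -> Det N 0.65, and so on), which is a reading of the flattened slide layout, and checks the basic PCFG constraint that the expansions of each left-hand side sum to 1.

```python
import math
from collections import defaultdict
from typing import Dict, Tuple

Rule = Tuple[str, Tuple[str, ...]]  # (left-hand side, right-hand side)

# Rule probabilities as read from the slide (the pairing is an assumption).
PCFG: Dict[Rule, float] = {
    ("S", ("NP", "VP")): 0.85,
    ("S", ("S", "conj", "S")): 0.15,
    ("NP", ("N",)): 0.20,
    ("NP", ("Det", "N")): 0.65,
    ("NP", ("Det", "Adj", "N")): 0.10,
    ("NP", ("NP", "PP")): 0.05,
    ("VP", ("V",)): 0.45,
    ("VP", ("V", "NP")): 0.45,
    ("VP", ("V", "NP", "PP")): 0.10,
    ("PP", ("P", "NP")): 1.0,
}

def lhs_totals(grammar: Dict[Rule, float]) -> Dict[str, float]:
    """Sum the probabilities of all expansions of each nonterminal."""
    totals: Dict[str, float] = defaultdict(float)
    for (lhs, _rhs), p in grammar.items():
        totals[lhs] += p
    return dict(totals)

if __name__ == "__main__":
    for lhs, total in lhs_totals(PCFG).items():
        print(lhs, round(total, 6), math.isclose(total, 1.0))
```

Under this reading every nonterminal's expansions sum to 1, which is some evidence that the pairing is the intended one.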

PCFGs
• Learning probabilities
  – Strategy 1: Write (manual) CFG
    • Use treebank (collection of parse trees) to find probabilities
• Parsing with PCFGs
  – Rank parse trees based on probability
  – Provides graceful degradation
    • Can get some parse even for unusual constructions, with low probability
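Finding probabilities from a treebank is commonly done by relative-frequency estimation: P(A -> beta) = count(A -> beta) / count(A). The Python sketch below assumes that estimator (the slide does not name one) and a toy two-tree treebank invented for the example; trees are nested tuples of the form (label, child, ...), and lexical rules such as N -> "man" are skipped.

```python
from collections import Counter
from typing import Dict, Iterator, List, Tuple

Rule = Tuple[str, Tuple[str, ...]]

def rules(tree: tuple) -> Iterator[Rule]:
    """Yield every non-lexical rule used in a tree of nested tuples."""
    label, *children = tree
    if all(isinstance(c, str) for c in children):
        return  # preterminal over a word: skip lexical rules in this sketch
    yield label, tuple(c[0] for c in children)
    for child in children:
        yield from rules(child)

def estimate(treebank: List[tuple]) -> Dict[Rule, float]:
    """Relative-frequency estimate: count(A -> beta) / count(A)."""
    rule_counts = Counter(r for tree in treebank for r in rules(tree))
    lhs_counts: Counter = Counter()
    for (lhs, _rhs), count in rule_counts.items():
        lhs_counts[lhs] += count
    return {r: count / lhs_counts[r[0]] for r, count in rule_counts.items()}

# Toy treebank (invented for illustration).
TREEBANK = [
    ("S", ("NP", ("N", "I")), ("VP", ("V", "slept"))),
    ("S", ("NP", ("Det", "the"), ("N", "man")),
          ("VP", ("V", "saw"), ("NP", ("N", "ducks")))),
]

if __name__ == "__main__":
    for (lhs, rhs), p in sorted(estimate(TREEBANK).items()):
        print(f"{lhs} -> {' '.join(rhs)}: {p:.3f}")
```

With real treebanks the counts are much larger, and smoothing is usually needed for rules that never occur; that is outside the scope of this sketch.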

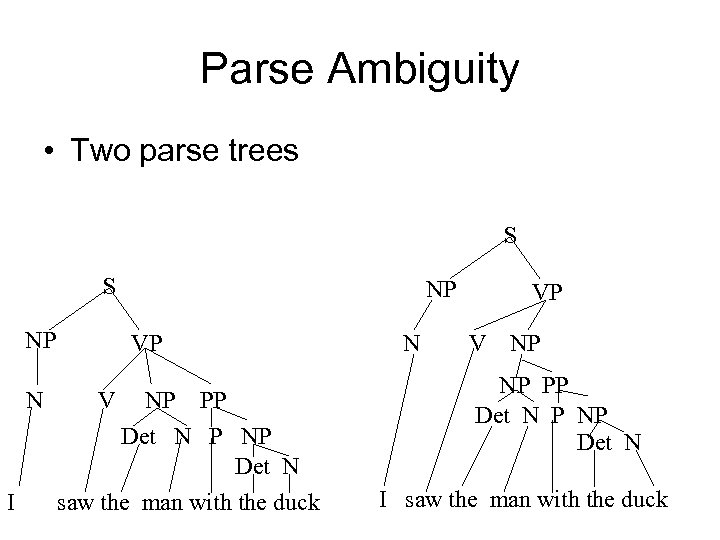

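Parse Ambiguity
• Two parse trees for “I saw the man with the duck”
  – T1 (PP attached to the VP):
    [S [NP [N I]] [VP [V saw] [NP [Det the] [N man]] [PP [P with] [NP [Det the] [N duck]]]]]
  – T2 (PP attached to the NP):
    [S [NP [N I]] [VP [V saw] [NP [NP [Det the] [N man]] [PP [P with] [NP [Det the] [N duck]]]]]]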

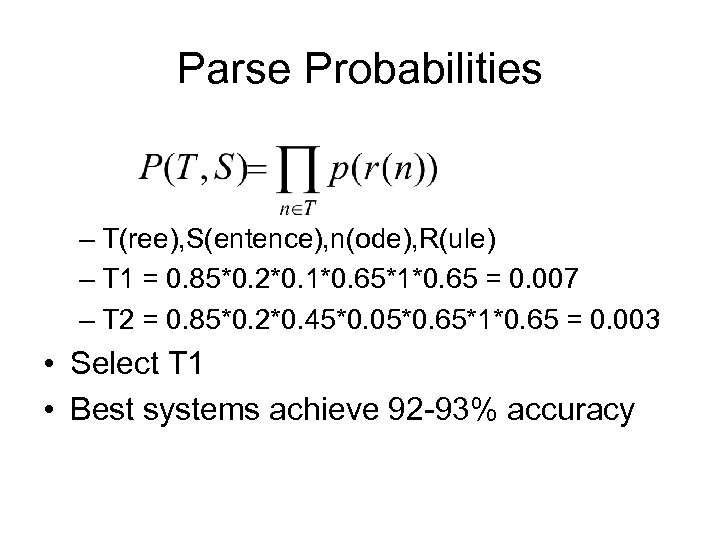

Parse Probabilities
– P(T, S) = ∏ over nodes n in T of p(R(n)), with T(ree), S(entence), n(ode), R(ule)
– T1 = 0.85*0.2*0.1*0.65*1*0.65 = 0.007
– T2 = 0.85*0.2*0.45*0.05*0.65*1*0.65 = 0.002
  • Select T1
• Best systems achieve 92-93% accuracy
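The two products can be checked mechanically. The Python sketch below multiplies the rule probabilities used in each tree; the rule lists assume that T1 attaches the PP to the VP (VP -> V NP PP) and T2 attaches it to the object NP (NP -> NP PP), which is what the factors in the two products suggest. Lexical probabilities are ignored, as on the slide.

```python
from math import prod
from typing import Dict, List, Tuple

Rule = Tuple[str, Tuple[str, ...]]

# Only the rules needed for the two trees; values taken from the products above.
P: Dict[Rule, float] = {
    ("S", ("NP", "VP")): 0.85,
    ("NP", ("N",)): 0.20,
    ("NP", ("Det", "N")): 0.65,
    ("NP", ("NP", "PP")): 0.05,
    ("VP", ("V", "NP")): 0.45,
    ("VP", ("V", "NP", "PP")): 0.10,
    ("PP", ("P", "NP")): 1.0,
}

# Rules used at each internal node (assumed tree shapes, see lead-in).
T1: List[Rule] = [
    ("S", ("NP", "VP")), ("NP", ("N",)), ("VP", ("V", "NP", "PP")),
    ("NP", ("Det", "N")), ("PP", ("P", "NP")), ("NP", ("Det", "N")),
]
T2: List[Rule] = [
    ("S", ("NP", "VP")), ("NP", ("N",)), ("VP", ("V", "NP")),
    ("NP", ("NP", "PP")), ("NP", ("Det", "N")), ("PP", ("P", "NP")),
    ("NP", ("Det", "N")),
]

def tree_probability(tree_rules: List[Rule]) -> float:
    """Probability of a tree: product of the probabilities of its rules."""
    return prod(P[r] for r in tree_rules)

if __name__ == "__main__":
    p1, p2 = tree_probability(T1), tree_probability(T2)
    print(f"T1 = {p1:.5f}, T2 = {p2:.5f}")
    print("Select", "T1" if p1 > p2 else "T2")
```

Evaluating the products gives roughly 0.0072 for T1 and 0.0016 for T2, so T1 is preferred by a factor of about four.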
