67b1e2226dd7842daa2eea729de771e4.ppt

- Number of slides: 48

Fine-Grained Soft Semantic Constraints
Yuval Marton, University of Maryland
http://umiacs.umd.edu/~ymarton/pub/ibm/HybridKnowledge-CorpusBasedSem-IBM_090728.ppt

Why Care?
• Tell ’em apart, in spite of similar contexts
• These, too, in spite of the same form
Yuval Marton, IBM talk

Road map
• Brief overview of doctoral work
• Hybrid knowledge/corpus-based semantic similarity methods
  – Pure and hybrid methods
  – Hard and soft constraints
  – Fine-grained
  – Named entities

Dissertation Theme
• Hybrid Knowledge/Corpus-Based Statistical NLP Models Using Fine-Grained Soft Syntactic and Semantic Constraints
  – Soft constraints
  – Fine-grained
  – Syntactic (parsing)
  – Semantic (“concepts”, paraphrases)
• Evaluated in
  – Word-pair semantic similarity ranking
  – Statistical Machine Translation (SMT)

Soft Constraints
• Hard constraints
  – {0, 1}: in/out
  – Decrease the search space
  – “Structural zeroes”
  – Theory-driven
  – Faster, slimmer
• Soft constraints
  – [0, 1]: fuzzy
  – Only bias the model
  – Data-driven: let patterns emerge
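
A minimal Python sketch of the contrast, assuming a toy dictionary of candidate scores and a hypothetical predicate `satisfies` standing in for the constraint (the names, scores, and bonus weight are illustrative, not from the talk): the hard version removes violators from the search space, while the soft version only adds a weighted bonus, so a violator with a strong enough base score can still win.

```python
from typing import Callable, Dict, List, Tuple

Ranked = List[Tuple[str, float]]


def hard_filter(scores: Dict[str, float], satisfies: Callable[[str], bool]) -> Ranked:
    """Hard constraint: violating candidates are removed (a "structural zero")."""
    kept = [(c, s) for c, s in scores.items() if satisfies(c)]
    return sorted(kept, key=lambda cs: cs[1], reverse=True)


def soft_bias(scores: Dict[str, float], satisfies: Callable[[str], bool],
              weight: float) -> Ranked:
    """Soft constraint: satisfying candidates get a weighted bonus; none is removed."""
    biased = [(c, s + (weight if satisfies(c) else 0.0)) for c, s in scores.items()]
    return sorted(biased, key=lambda cs: cs[1], reverse=True)


def ok(cand: str) -> bool:
    """Hypothetical constraint: only "b" and "c" satisfy it."""
    return cand in {"b", "c"}


base = {"a": 2.0, "b": 1.0, "c": 0.2}  # toy base scores
print(hard_filter(base, ok))     # "a" is gone, whatever its score
print(soft_bias(base, ok, 0.5))  # "a" still ranks first: 2.0 > 1.0 + 0.5
```

The soft version leaves the final decision to the data: raising `weight` high enough would let the constraint dominate, which is the sense in which soft constraints "only bias" the model.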

Fine-grained
• Granularity is a big deal
  – Soft syntactic constraints in SMT
    • Chiang 2005 vs. Marton and Resnik 2008
    • Negative results → positive results
  – Soft semantic constraints in word-pair similarity ranking
    • Mohammad and Hirst 2006 vs. Marton, Mohammad and Resnik 2009
    • Positive results → better results

Soft Syntactic Constraints
• X → X₁ speech ||| X₁ espiche
  – What should be the span of X₁?
• Chiang’s 2005 constituency feature
  – Reward the rule’s score if the rule’s source side matches a constituent span
  – Constituency-incompatible emergent patterns can still ‘win’ (in spite of no reward)
  – Good idea, but a negative result
• But what if…

Soft Syntactic Constraints • X X 1 speech ||| X 1 espiche – What should be the span of X 1? • Chiang’s 2005 constituency feature – Reward rule’s score if rule’s source -side matches a constituent span – Constituency-incompatible emergent patterns can still ‘win’ (in spite of no reward) – Good idea -- Neg-result • But what if… Yuval Marton, IBM talk 7

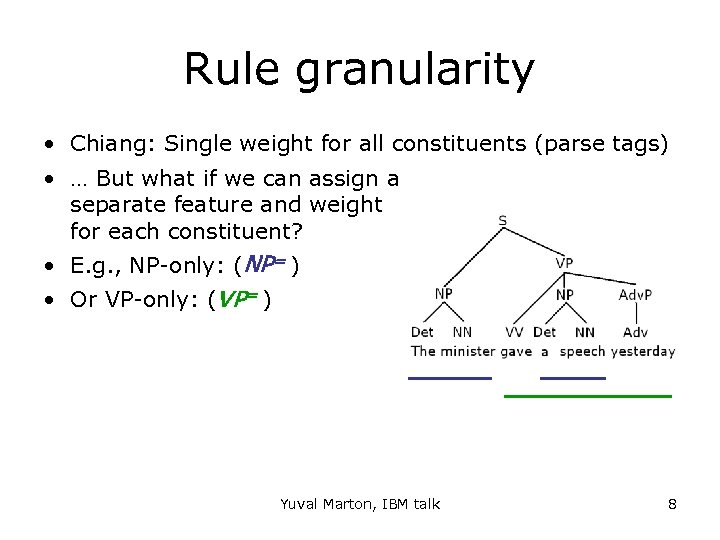

Rule granularity
• Chiang: a single weight for all constituents (parse tags)
• … But what if we assign a separate feature and weight to each constituent type?
• E.g., NP-only: (NP=)
• Or VP-only: (VP=)
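
A small sketch of the difference in granularity, assuming the source parse is given as a hypothetical map from token spans to constituent labels (`Parse`, `coarse_feature`, `fine_features`, and the weights are illustrative, not the Hiero implementation): the Chiang-style version has one feature, so one tuned weight covers every label, while the fine-grained version emits one feature per label, such as `NP=` or `VP=`, each with its own weight.

```python
from typing import Dict, Set, Tuple

Span = Tuple[int, int]        # [start, end) token offsets in the source sentence
Parse = Dict[Span, Set[str]]  # span -> labels of constituents covering exactly that span


def coarse_feature(span: Span, parse: Parse) -> Dict[str, float]:
    """Chiang-style: one feature that fires if the span matches any constituent."""
    return {"constituent": 1.0} if span in parse else {}


def fine_features(span: Span, parse: Parse) -> Dict[str, float]:
    """Fine-grained: one feature per label (e.g. 'NP=', 'VP='), each with its own weight."""
    return {f"{label}=": 1.0 for label in parse.get(span, set())}


def feature_score(features: Dict[str, float], weights: Dict[str, float]) -> float:
    """Weighted sum these features contribute to a rule's log-linear score."""
    return sum(weights.get(name, 0.0) * value for name, value in features.items())


# Hypothetical parse of "the president gave a speech" (token offsets 0..5).
parse = {(0, 2): {"NP"}, (2, 5): {"VP"}, (3, 5): {"NP"}, (0, 5): {"S"}}
weights = {"NP=": 0.4, "VP=": -0.1}  # made-up tuned weights
for span in [(3, 5), (2, 5), (1, 3)]:
    print(span, coarse_feature(span, parse), feature_score(fine_features(span, parse), weights))
```

With separately tuned weights, a match against an NP can be rewarded while a match against a VP is mildly penalized, which a single shared weight cannot express.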

Word-pair similarity ranking
• Give each word pair a similarity score
  – rooster vs. voyage
  – coast vs. shore
• Noun-noun pairs (Rubenstein & Goodenough, 1965)
• Verb-verb pairs (Resnik & Diab, 2000)
• Result: a list of pairs ordered by similarity
• Compared with the human ranking via Spearman rank correlation
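
A self-contained sketch of the evaluation metric: Spearman’s rho computed as the Pearson correlation of the two rank vectors, with tied values given their average rank (one common convention). The function names are illustrative.

```python
from typing import List, Sequence


def ranks(values: Sequence[float]) -> List[float]:
    """1-based ranks; tied values share the average of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1  # average of 1-based positions i+1 .. j+1
        for k in range(i, j + 1):
            result[order[k]] = avg
        i = j + 1
    return result


def spearman(x: Sequence[float], y: Sequence[float]) -> float:
    """Spearman's rho: Pearson correlation of the rank vectors of x and y."""
    rx, ry = ranks(x), ranks(y)
    n = len(rx)
    mx, my = sum(rx) / n, sum(ry) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    vx = sum((a - mx) ** 2 for a in rx)
    vy = sum((b - my) ** 2 for b in ry)
    return cov / (vx * vy) ** 0.5
```

For example, with made-up human ratings `[0.1, 3.6, 2.0]` and system scores `[0.05, 0.9, 0.4]`, `spearman` returns 1.0: only the ordering of the pairs matters, not the scale of the scores.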

Similarity measures
• Distributional profiles (DPs)
  – Which words did I occur next to?
• Context vectors
• Similar vectors → similar meaning
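
A minimal sketch of building word-based DPs as raw co-occurrence counts, assuming whitespace-tokenized text and a symmetric window (the default of 6 echoes the ±6-token window used in the paraphrasing part; the function name is hypothetical). A real system would then reweight the counts with a strength-of-association measure.

```python
from collections import Counter, defaultdict
from typing import Dict, List


def distributional_profiles(tokens: List[str], window: int = 6) -> Dict[str, Counter]:
    """Map each word type to a Counter of the words seen within +/- `window` tokens."""
    profiles: Dict[str, Counter] = defaultdict(Counter)
    for i, target in enumerate(tokens):
        lo, hi = max(0, i - window), min(len(tokens), i + window + 1)
        for j in range(lo, hi):
            if j != i:  # a token is not its own context
                profiles[target][tokens[j]] += 1
    return dict(profiles)


dps = distributional_profiles("the bank teller counted the money".split(), window=1)
print(dps["teller"])
```

Because profiles are keyed by word type, both occurrences of “the” feed the same profile, which is exactly the sense-smearing that the hybrid methods later try to undo.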

Bank (pure word-based)

Bank (pure word-based) Bank Yuval Marton, IBM talk 12

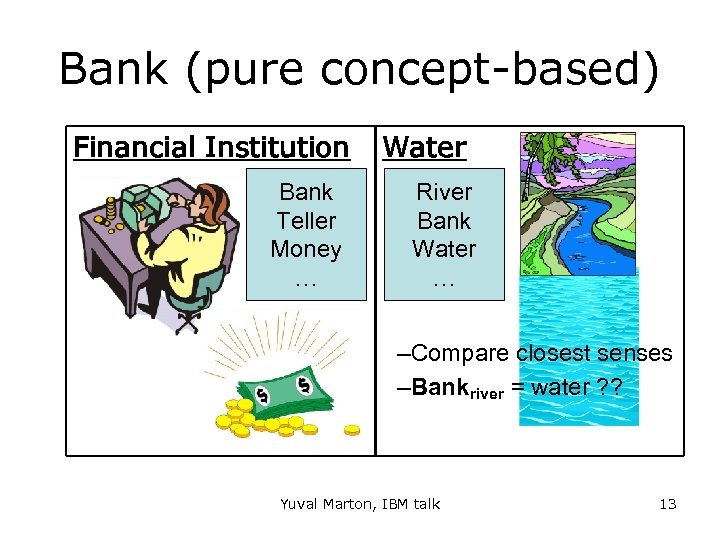

Bank (pure concept-based)
[Figure: concept Financial Institution (bank, teller, money, …) and concept Water (river, bank, water, …)]
– Compare the closest senses
– bank_river = water??

Bank (pure concept-based) Financial Institution Bank Teller Money … Water River Bank Water … –Compare closest senses –Bankriver = water ? ? Yuval Marton, IBM talk 13

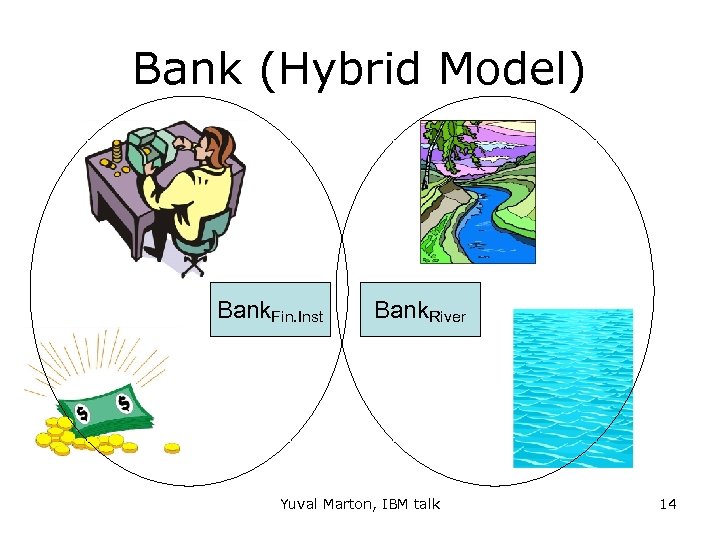

Bank (Hybrid Model)
[Figure: separate profiles for bank_Fin.Inst and bank_River]

Unified Model
• Soft constraints in a log-linear model
  – Syntactic
  – Semantic
  – …
• $\sum_i \lambda_i h_i(x)$
• Constraints = add more terms to the sum
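
Spelled out in the usual log-linear notation (a sketch: the weights $\lambda_i$, feature functions $h_i$, and normalizer are the standard ingredients, and $h_{k+1}$ stands for a hypothetical new constraint feature), adding a soft constraint means adding one more weighted term:

```latex
\text{score}(x) = \sum_{i=1}^{k} \lambda_i h_i(x)
\quad\Longrightarrow\quad
\text{score}'(x) = \sum_{i=1}^{k} \lambda_i h_i(x) + \lambda_{k+1} h_{k+1}(x),
\qquad
p(x) = \frac{\exp\big(\text{score}(x)\big)}{\sum_{x'} \exp\big(\text{score}(x')\big)}
```

A hard constraint, by contrast, would give every violating $x$ a score of $-\infty$, i.e., probability zero, removing it from the search space.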

Road map • Brief overview of doctoral work • Hybrid knowledge / corpus-based semantic similarity methods – Pure and hybrid methods – Hard and soft constraints – Fine-grained – Named-entities Yuval Marton, IBM talk 17

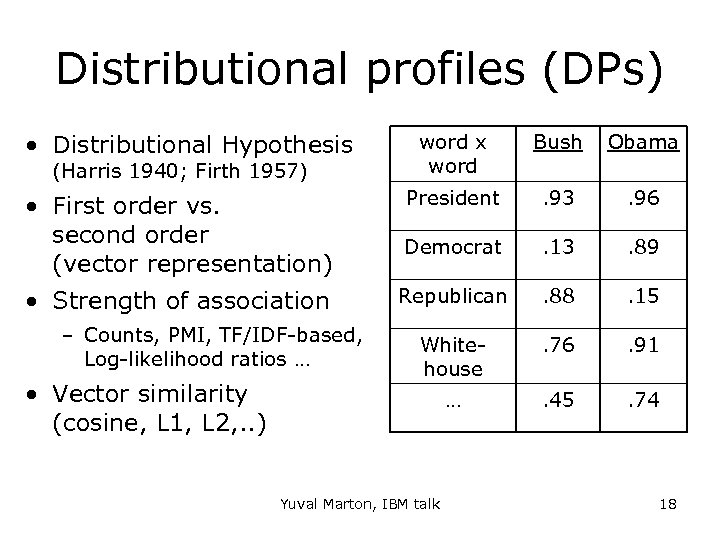

Distributional profiles (DPs)
• Distributional Hypothesis (Harris 1940; Firth 1957)
• First order vs. second order (vector representation)
• Strength of association
  – Counts, PMI, TF/IDF-based, log-likelihood ratios, …
• Vector similarity (cosine, L1, L2, …)

| word × word | Bush | Obama |
|---|---|---|
| President | .93 | .96 |
| Democrat | .13 | .89 |
| Republican | .88 | .15 |
| Whitehouse | .76 | .91 |
| … | .45 | .74 |

Distributional profiles (DPs) • Distributional Hypothesis • First order vs. second order (vector representation) • Strength of association – Counts, PMI, TF/IDF-based, Log-likelihood ratios … • Vector similarity (cosine, L 1, L 2, . . ) Bush Obama President . 93 . 96 Democrat . 13 . 89 Republican . 88 . 15 Whitehouse . 76 . 91 … (Harris 1940; Firth 1957) word x word . 45 . 74 Yuval Marton, IBM talk 18

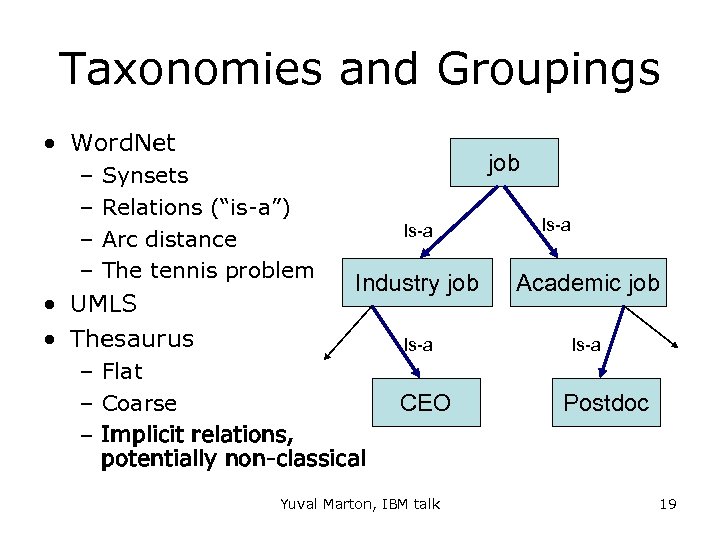

Taxonomies and Groupings
• WordNet
  – Synsets
  – Relations (“is-a”)
  – Arc distance
  – The tennis problem
• UMLS
• Thesaurus
  – Flat
  – Coarse
  – Implicit relations, potentially non-classical
[Figure: is-a tree: CEO is-a industry job, postdoc is-a academic job, and both are-a job]

Hybrid measures
• WordNet-based
  – Resnik’s method (information content)
  – Lin, and others
• Thesaurus concept-based
  – Mohammad and Hirst (coarse-grained)
  – Distance between the most similar senses
  – Pro: semantic relatedness (non-classical relations); resource-poor languages and domains
  – Con: small thesaurus → low applicability
  – bank_river = water??
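
A sketch of the “most similar senses” idea, assuming each word comes with a list of sense-level profiles stored as sparse dictionaries (all names and numbers are illustrative): the similarity of a word pair is the highest cosine over all pairings of their senses. The toy profiles also show the bank_river = water problem: the river sense alone makes “bank” and “water” look similar.

```python
import math
from itertools import product
from typing import Dict, List

Profile = Dict[str, float]  # collocate -> strength of association


def cosine(u: Profile, v: Profile) -> float:
    """Cosine similarity of two sparse vectors; 0.0 if either is empty."""
    dot = sum(w * v.get(k, 0.0) for k, w in u.items())
    norm_u = math.sqrt(sum(w * w for w in u.values()))
    norm_v = math.sqrt(sum(w * w for w in v.values()))
    return dot / (norm_u * norm_v) if norm_u and norm_v else 0.0


def closest_senses_similarity(senses1: List[Profile], senses2: List[Profile]) -> float:
    """Word-pair similarity = similarity of the two words' most similar senses."""
    return max(cosine(a, b) for a, b in product(senses1, senses2))


# Toy sense profiles: "bank" has two senses, "water" one.
bank = [{"teller": 0.9, "money": 0.9}, {"water": 0.9, "shore": 0.8}]
water = [{"water": 0.5, "shore": 0.9}]
print(closest_senses_similarity(bank, water))  # driven entirely by the river sense
```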

Hybrid measures • Word. Net – Resnik’s method (info content) – Lin and others • Thesaurus Concept-based – Mohammad and Hirst (coarse-grained) – Distance b/w most similar senses – Pro: Semantic relatedness (non-classical relations) Resource-poor languages and domains – Con: Small thesaurus low applicability Bankriver = water ? ? Yuval Marton, IBM talk 20

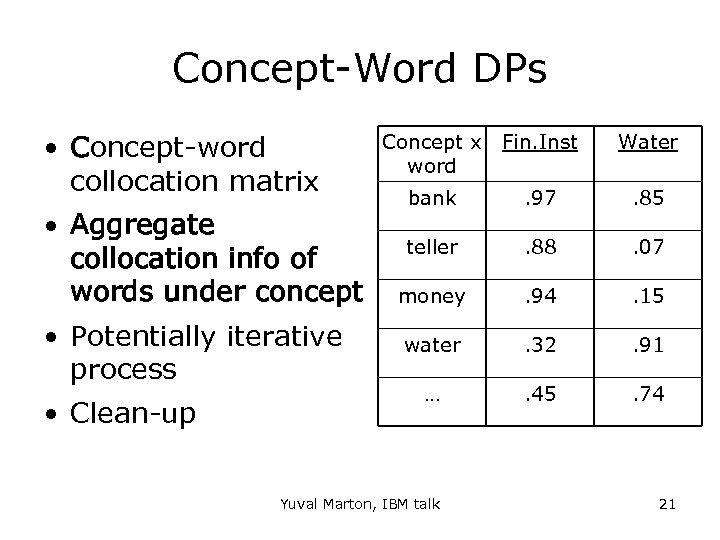

Concept-Word DPs
• Concept-word collocation matrix
• Aggregate the collocation info of the words under each concept
• Potentially an iterative process
• Clean-up

| concept × word | Fin.Inst | Water |
|---|---|---|
| bank | .97 | .85 |
| teller | .88 | .07 |
| money | .94 | .15 |
| water | .32 | .91 |
| … | .45 | .74 |
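
A simplified sketch of the aggregation step, assuming word DPs are raw co-occurrence Counters and a hypothetical thesaurus maps each concept to its member words: each concept’s DP is the sum of its members’ counts. It skips the iteration and clean-up listed above, which is why an ambiguous member such as “bank” leaks its counts into both concepts here.

```python
from collections import Counter
from typing import Dict, List


def concept_profiles(word_profiles: Dict[str, Counter],
                     thesaurus: Dict[str, List[str]]) -> Dict[str, Counter]:
    """Concept DP = summed co-occurrence counts of all words listed under the concept."""
    result: Dict[str, Counter] = {}
    for concept, members in thesaurus.items():
        total: Counter = Counter()
        for word in members:
            total.update(word_profiles.get(word, Counter()))  # adds counts
        result[concept] = total
    return result


# Hypothetical word DPs and thesaurus categories.
word_dps = {"bank": Counter({"money": 2, "water": 1}),
            "teller": Counter({"money": 3}),
            "river": Counter({"water": 4})}
thesaurus = {"FIN_INST": ["bank", "teller"], "WATER": ["bank", "river"]}
print(concept_profiles(word_dps, thesaurus))
```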

Concept-Word DPs • Concept-word collocation matrix • Aggregate collocation info of words under concept • Potentially iterative process • Clean-up Concept x Fin. Inst word Water bank . 97 . 85 teller . 88 . 07 money . 94 . 15 water . 32 . 91 … . 45 . 74 Yuval Marton, IBM talk 21

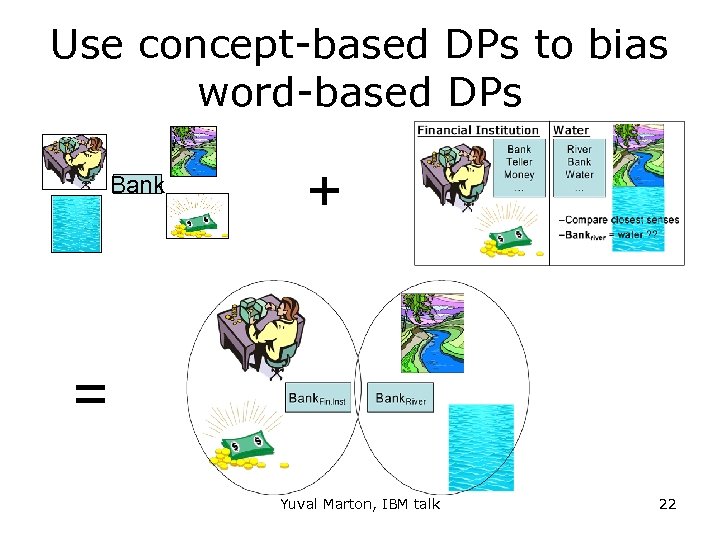

Use concept-based DPs to bias word-based DPs (illustrated with “bank”)

Fine-grained soft constraints
• DPWS: distributional profile of word senses
• Use concept-based DPs to bias word-based DPs
  – Hybrid-filtered
  – Hybrid-proportional

Fine-grained soft constraints • DPWS: distributional profile of word senses • Use concept-based DPs to bias word-based DPs – Hybrid-filtered – Hybrid-proportional Yuval Marton, IBM talk 23

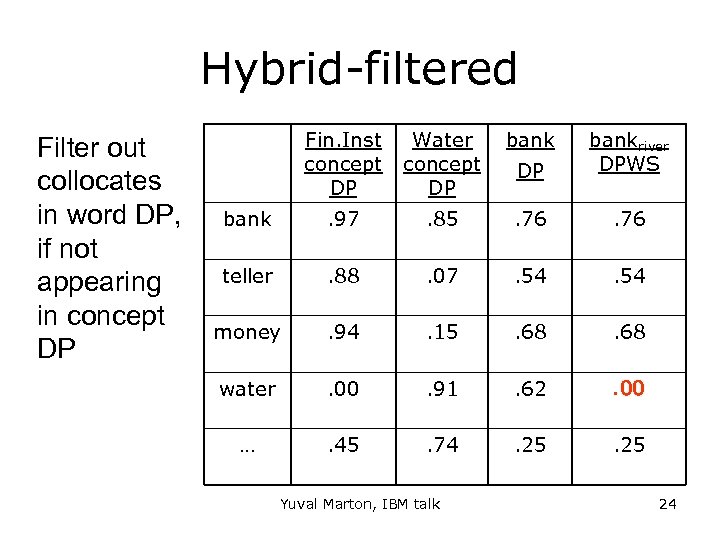

Hybrid-filtered
• Filter out a collocate in the word DP if it does not appear in the concept DP
Fin.Inst concept DP | Water concept DP | bank_river DPWS
bank .97 .85 .76; teller .88 .07 .54; money .94 .15 .68; water .00 .91 .62 .00; … .45 .74 .25
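
A minimal sketch of hybrid filtering over sparse profiles (dictionaries from collocate to strength of association; names and values are illustrative): collocates absent from the chosen concept’s DP are zeroed out, and the rest keep their word-DP values unchanged.

```python
from typing import Dict

Profile = Dict[str, float]  # collocate -> strength of association


def hybrid_filtered(word_dp: Profile, concept_dp: Profile) -> Profile:
    """Zero out word-DP collocates that are absent from (or zero in) the concept DP."""
    return {c: (v if concept_dp.get(c, 0.0) > 0.0 else 0.0) for c, v in word_dp.items()}


# Illustrative values: "water" never co-occurs with the financial-institution concept.
bank_word_dp = {"teller": 0.5, "money": 0.7, "water": 0.6}
fin_inst_dp = {"teller": 0.9, "money": 0.9}
print(hybrid_filtered(bank_word_dp, fin_inst_dp))  # water -> 0.0; teller, money unchanged
```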

Hybrid-filtered Filter out collocates in word DP, if not appearing in concept DP Fin. Inst concept DP Water concept DP bankriver DPWS bank . 97 . 85 . 76 teller . 88 . 07 . 54 money . 94 . 15 . 68 water . 00 . 91 . 62 . 00 … . 45 . 74 . 25 Yuval Marton, IBM talk 24

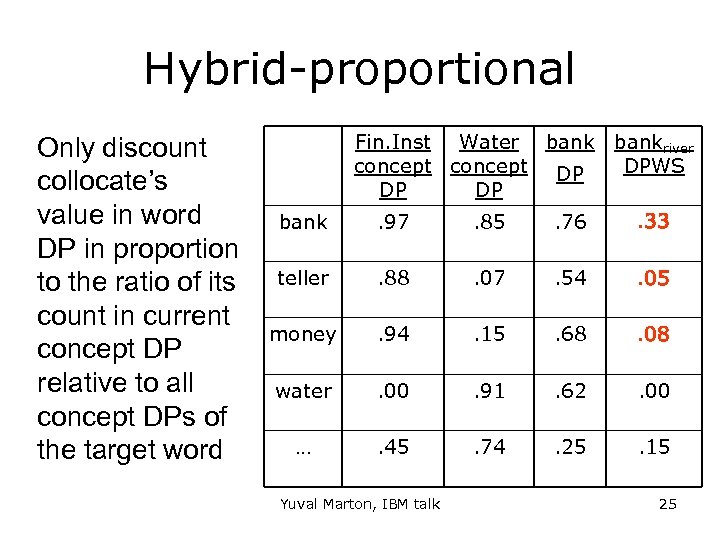

Hybrid-proportional
• Only discount a collocate’s value in the word DP, in proportion to the ratio of its count in the current concept DP to its count across all concept DPs of the target word
Fin.Inst concept DP | Water concept DP | bank DP | bank_river DPWS
bank .97 .85 .76 .33; teller .88 .07 .54 .05; money .94 .15 .68 .08; water .00 .91 .62 .00; … .45 .74 .25 .15
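
The hybrid-proportional rule can be sketched as follows, assuming the concept DPs passed in are exactly those of the target word’s senses (names and numbers are illustrative). For a collocate $w$ of target $t$ and sense $c$, the DPWS value is $DP_t(w) \cdot count_c(w) / \sum_{c'} count_{c'}(w)$; as an added assumption, a collocate found in none of the concept DPs is set to zero.

```python
from typing import Dict

Profile = Dict[str, float]  # collocate -> count (or strength of association)


def hybrid_proportional(word_dp: Profile, sense: str,
                        concept_dps: Dict[str, Profile]) -> Profile:
    """Scale each collocate's word-DP value by the share of its concept-DP count
    that falls under `sense`, out of the concept DPs of all the target's senses."""
    out: Profile = {}
    for collocate, value in word_dp.items():
        total = sum(dp.get(collocate, 0.0) for dp in concept_dps.values())
        # Assumption: a collocate seen in none of the concept DPs gets zero weight.
        share = concept_dps[sense].get(collocate, 0.0) / total if total > 0 else 0.0
        out[collocate] = value * share
    return out


# Hypothetical counts for two senses of "bank".
senses = {"FIN_INST": {"money": 3.0, "water": 1.0},
          "RIVER": {"money": 1.0, "water": 3.0}}
print(hybrid_proportional({"money": 0.8, "water": 0.4}, "RIVER", senses))
```

Unlike filtering, nothing is zeroed unless the sense has no evidence at all; “money” survives in the river sense, just discounted to a quarter of its word-DP value.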

WSD with DPWS
• Each sense of each word has a unique profile
  – bank_fin.inst ≠ bank_river ≠ water!
• Pros:
  – Not aggregated, unlike concept DPs
  – No (or less) smearing, unlike word DPs, which smear all senses into a single profile

WSD with DPWS • Each sense of each word has a unique profile – Bankfin. inst ≠ Bankriver ≠ water ! • Pro: – Not aggregated: unlike concept DPs – Non/less smearing: unlike word DPs that smear all senses in a single profile Yuval Marton, IBM talk 26

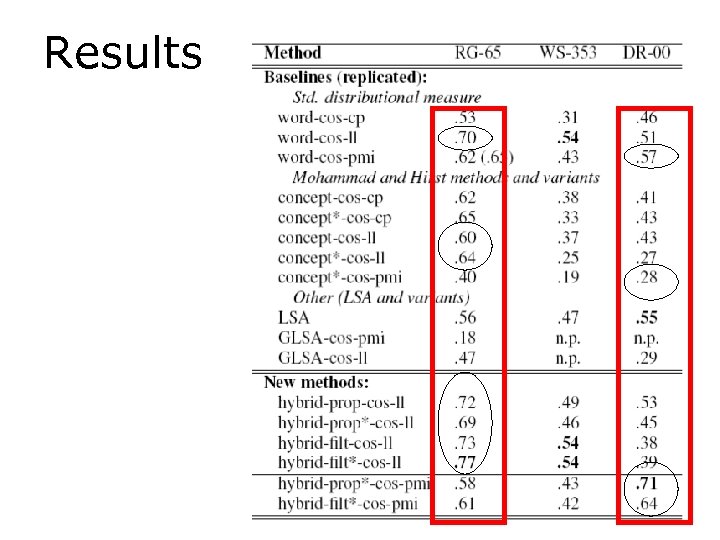

Results

Evaluation
• Word-pair similarity ranking
  – Spearman rank correlation
• Paraphrasing in SMT
  – BLEU, TER, METEOR, …

Comparison
• WordNet results
• LSA results

Challenges
• Antonyms (black – white)
• “Hyperonyms” (vehicle – car)
• Co-hypernyms / co-taxonyms

Conclusion
• Hybrid Knowledge/Corpus-Based Statistical NLP Models Using Fine-Grained Soft Constraints
  – Fine-grained
  – Semantic (“concepts”)
  – Semantic relatedness, resource-poor settings, special domains

Thank you! Questions?
ymarton@umiacs.umd.edu
Advisors: Philip Resnik & Amy Weinberg
Department of Linguistics and CLIP Lab

Thank you! Questions? ymarton@umiacs. umd. edu Advisors: Philip Resnik & Amy Weinberg Department of Linguistics and CLIP Lab Yuval Marton, IBM talk 32

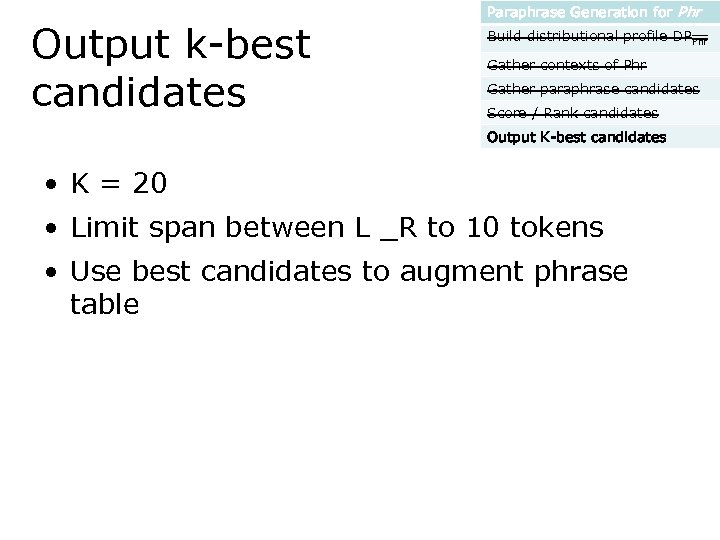

Paraphrase Generation
For some target OOV phrase Phr:
• Build a distributional profile DP_Phr
• Gather the contexts of Phr
• Gather paraphrase candidates
• Score / rank candidates
• Output the K-best candidates

Paraphrase generation Paraphrase Generation for Phr Build distributional profile DPPhr Gather contexts of Phr Gather paraphrase candidates Score / Rank candidates Output K-best candidates For some target OOV phrase Phr: • Build distributional profile DPPhr • Gather contexts of Phr • Gather paraphrase candidates • Score / Rank candidates • Output K-best candidates

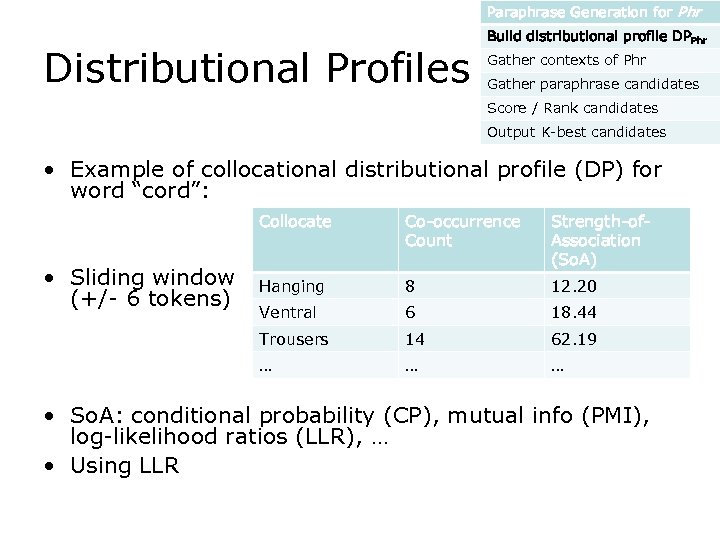

Paraphrase Generation for Phr: Distributional Profiles
• Example of a collocational distributional profile (DP) for the word “cord”:

| Collocate | Co-occurrence count | Strength of association (SoA) |
|---|---|---|
| hanging | 8 | 12.20 |
| ventral | 6 | 18.44 |
| trousers | 14 | 62.19 |
| … | … | … |

• Sliding window (±6 tokens)
• SoA: conditional probability (CP), pointwise mutual information (PMI), log-likelihood ratio (LLR), …
• Here: LLR
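
A sketch of the LLR used as the strength of association, implementing Dunning’s G² statistic on a 2×2 contingency table. How the four cells are counted (tokens vs. windows, corpus totals) is an assumption here, so the sketch will not reproduce the exact SoA values in the table above.

```python
import math


def llr(k11: int, k12: int, k21: int, k22: int) -> float:
    """Dunning's log-likelihood ratio (G^2) for a 2x2 contingency table.

    k11: target and collocate together     k12: target without collocate
    k21: collocate without target          k22: neither
    """
    n = k11 + k12 + k21 + k22
    rows = (k11 + k12, k21 + k22)
    cols = (k11 + k21, k12 + k22)
    observed = ((k11, k12), (k21, k22))
    g2 = 0.0
    for i in range(2):
        for j in range(2):
            o = observed[i][j]
            if o > 0:  # empty cells contribute 0 (0 * log 0 is taken as 0)
                expected = rows[i] * cols[j] / n
                g2 += o * math.log(o / expected)
    return 2.0 * g2


print(llr(10, 10, 10, 10))    # observed == expected everywhere, so 0.0
print(llr(8, 92, 40, 99860))  # made-up counts with a strong association
```

LLR is a common choice for sparse co-occurrence data because, unlike PMI, it does not blow up for rare pairs seen only once or twice.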

Paraphrase Generation for Phr Distributional Profiles Build distributional profile DPPhr Gather contexts of Phr Gather paraphrase candidates Score / Rank candidates Output K-best candidates • Example of collocational distributional profile (DP) for word “cord”: Collocate Strength-of. Association (So. A) Hanging 8 12. 20 Ventral 6 18. 44 Trousers 14 62. 19 … • Sliding window (+/- 6 tokens) Co-occurrence Count … … • So. A: conditional probability (CP), mutual info (PMI), log-likelihood ratios (LLR), … • Using LLR

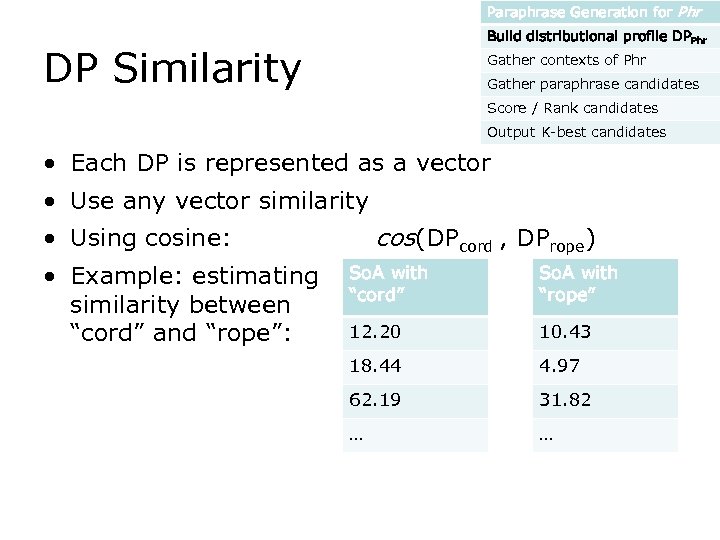

Paraphrase Generation for Phr: DP Similarity
• Each DP is represented as a vector
• Any vector similarity can be used; here, cosine: cos(DP_cord, DP_rope)
• Example: estimating the similarity between “cord” and “rope”:

| SoA with “cord” | SoA with “rope” |
|---|---|
| 12.20 | 10.43 |
| 18.44 | 4.97 |
| 62.19 | 31.82 |
| … | … |
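
A worked version of the example using only the three rows shown (their “cord” values match the hanging, ventral, and trousers rows of the “cord” profile). On these three dimensions alone the cosine comes out at about 0.98, higher than the .83 reported for the full profiles, so the truncated table is only illustrative.

```python
import math
from typing import Sequence


def cosine(u: Sequence[float], v: Sequence[float]) -> float:
    """Cosine similarity of two equal-length dense vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


# SoA values for the collocates hanging, ventral, trousers.
dp_cord = [12.20, 18.44, 62.19]
dp_rope = [10.43, 4.97, 31.82]
print(round(cosine(dp_cord, dp_rope), 2))
```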

Paraphrase Generation for Phr Build distributional profile DPPhr DP Similarity Gather contexts of Phr Gather paraphrase candidates Score / Rank candidates Output K-best candidates • Each DP is represented as a vector • Use any vector similarity cos(DPcord , DPrope) • Using cosine: • Example: estimating similarity between “cord” and “rope”: So. A with “cord” So. A with “rope” 12. 20 10. 43 18. 44 4. 97 62. 19 31. 82 … …

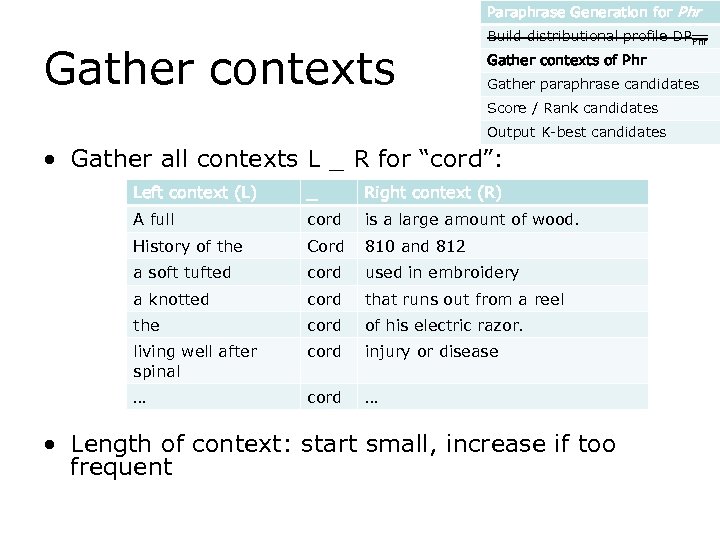

Paraphrase Generation for Phr: Gather contexts
• Gather all contexts L _ R for “cord”:

| Left context (L) | _ | Right context (R) |
|---|---|---|
| A full | cord | is a large amount of wood. |
| History of the | Cord | 810 and 812 |
| a soft tufted | cord | used in embroidery |
| a knotted | cord | that runs out from a reel |
| the | cord | of his electric razor. |
| living well after spinal | cord | injury or disease |
| … | cord | … |

• Length of context: start small; increase it if the context is too frequent
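
A sketch of context gathering over tokenized sentences with a fixed context length `n` on each side. This is a simplification: the adaptive lengthening of too-frequent contexts is not implemented, and occurrences too close to a sentence edge to have `n` tokens on both sides are skipped (both are assumptions of this sketch).

```python
from typing import List, Tuple

Context = Tuple[Tuple[str, ...], Tuple[str, ...]]  # (left words, right words)


def gather_contexts(sentences: List[List[str]], phrase: List[str], n: int = 2) -> List[Context]:
    """Collect the n-token left and right contexts around each occurrence of `phrase`."""
    contexts: List[Context] = []
    m = len(phrase)
    for sent in sentences:
        for i in range(len(sent) - m + 1):
            # Need a full n tokens on both sides of the match.
            if sent[i:i + m] == phrase and i >= n and i + m + n <= len(sent):
                contexts.append((tuple(sent[i - n:i]), tuple(sent[i + m:i + m + n])))
    return contexts


sents = [["a", "knotted", "cord", "that", "runs"], ["the", "cord", "of", "his"]]
print(gather_contexts(sents, ["cord"], n=1))
```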

Paraphrase Generation for Phr Gather contexts Build distributional profile DPPhr Gather contexts of Phr Gather paraphrase candidates Score / Rank candidates Output K-best candidates • Gather all contexts L _ R for “cord”: Left context (L) _ Right context (R) A full cord is a large amount of wood. History of the Cord 810 and 812 a soft tufted cord used in embroidery a knotted cord that runs out from a reel the cord of his electric razor. living well after spinal cord injury or disease … cord … • Length of context: start small, increase if too frequent

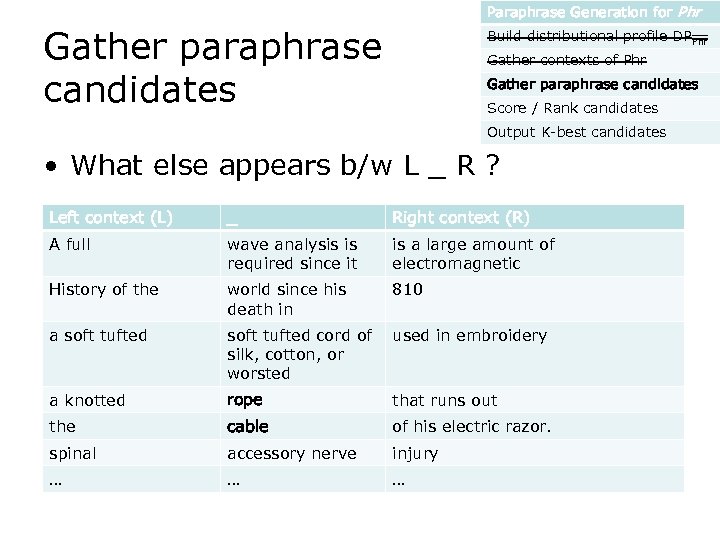

Paraphrase Generation for Phr: Gather paraphrase candidates
• What else appears between L _ R?

| Left context (L) | _ | Right context (R) |
|---|---|---|
| A full | wave analysis is required since it | is a large amount of electromagnetic |
| History of the | world since his death in | 810 |
| a soft tufted | cord of silk, cotton, or worsted | used in embroidery |
| a knotted | rope | that runs out |
| the | cable | of his electric razor. |
| spinal | accessory nerve | injury |
| … | … | … |
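
A sketch of candidate gathering, assuming contexts come as (left, right) token tuples: for each context it counts every gap of 1 to `max_span` tokens found between L and R (default 10, matching the span limit on the K-best slide), excluding the original phrase itself. It is a naive scan meant for illustration, not for a huge monolingual corpus.

```python
from collections import Counter
from typing import List, Sequence, Tuple


def gather_candidates(sentences: List[List[str]],
                      contexts: Sequence[Tuple[Tuple[str, ...], Tuple[str, ...]]],
                      phrase: Tuple[str, ...],
                      max_span: int = 10) -> Counter:
    """Count every 1..max_span-token gap G found in some sentence as L G R."""
    found: Counter = Counter()
    for left, right in contexts:
        nl, nr = len(left), len(right)
        for sent in sentences:
            for i in range(len(sent) - nl + 1):
                if tuple(sent[i:i + nl]) != left:
                    continue
                start = i + nl
                for span in range(1, max_span + 1):
                    end = start + span
                    if end + nr > len(sent):
                        break  # no room left for the right context
                    if tuple(sent[end:end + nr]) == right:
                        gap = tuple(sent[start:end])
                        if gap != phrase:
                            found[gap] += 1
    return found


sents = [["a", "knotted", "rope", "that", "runs"],
         ["the", "cable", "of", "his"],
         ["the", "cord", "of", "his"]]
ctx = [(("knotted",), ("that",)), (("the",), ("of",))]
print(gather_candidates(sents, ctx, ("cord",)))
```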

Paraphrase Generation for Phr Gather paraphrase candidates Build distributional profile DPPhr Gather contexts of Phr Gather paraphrase candidates Score / Rank candidates Output K-best candidates • What else appears b/w L _ R ? Left context (L) _ Right context (R) A full wave analysis is required since it is a large amount of electromagnetic History of the world since his death in 810 a soft tufted cord of silk, cotton, or worsted used in embroidery a knotted rope that runs out the cable of his electric razor. spinal accessory nerve injury … … …

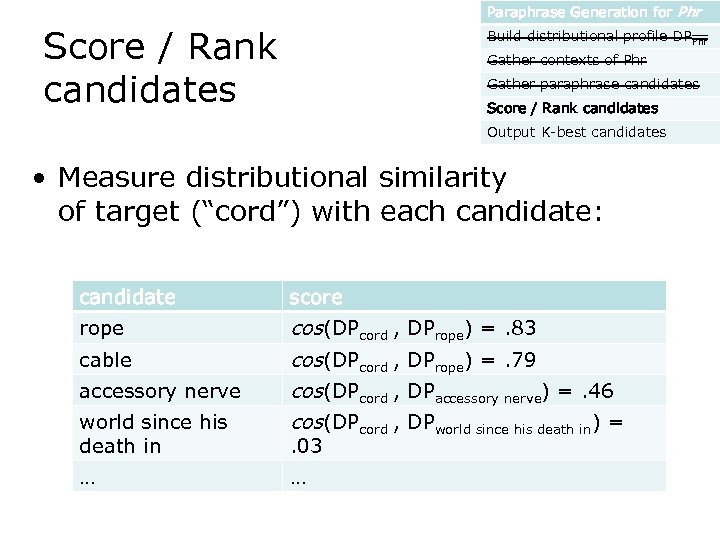

Paraphrase Generation for Phr: Score / rank candidates
• Measure the distributional similarity of the target (“cord”) with each candidate:

| Candidate | Score |
|---|---|
| rope | cos(DP_cord, DP_rope) = .83 |
| cable | cos(DP_cord, DP_cable) = .79 |
| accessory nerve | cos(DP_cord, DP_accessory nerve) = .46 |
| world since his death in | cos(DP_cord, DP_world since his death in) = .03 |
| … | … |

Paraphrase Generation for Phr: Output K-best candidates
• K = 20
• Limit the span between L and R to 10 tokens
• Use the best candidates to augment the phrase table
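
A sketch of the last two steps together, assuming every candidate already has a sparse DP (dictionaries from collocate to SoA; names and values are hypothetical): candidates are scored by cosine with the target’s DP and the K highest are kept, with K = 20 as the default. `heapq.nlargest` avoids fully sorting a long candidate list.

```python
import heapq
import math
from typing import Dict, List, Tuple

Profile = Dict[str, float]  # collocate -> strength of association


def cosine(u: Profile, v: Profile) -> float:
    """Cosine similarity of two sparse vectors; 0.0 if either is empty."""
    dot = sum(w * v.get(k, 0.0) for k, w in u.items())
    norm_u = math.sqrt(sum(w * w for w in u.values()))
    norm_v = math.sqrt(sum(w * w for w in v.values()))
    return dot / (norm_u * norm_v) if norm_u and norm_v else 0.0


def k_best(target_dp: Profile, candidate_dps: Dict[str, Profile],
           k: int = 20) -> List[Tuple[str, float]]:
    """Score every candidate by cosine with the target's DP and keep the k highest."""
    scored = ((cand, cosine(target_dp, dp)) for cand, dp in candidate_dps.items())
    return heapq.nlargest(k, scored, key=lambda cs: cs[1])


target = {"hanging": 1.0, "trousers": 2.0}
candidates = {"rope": {"hanging": 1.0, "trousers": 1.5},
              "cable": {"trousers": 1.0},
              "world since his death in": {"history": 3.0}}
print(k_best(target, candidates, k=2))
```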

Output k-best candidates Paraphrase Generation for Phr Build distributional profile DPPhr Gather contexts of Phr Gather paraphrase candidates Score / Rank candidates Output K-best candidates • K = 20 • Limit span between L _R to 10 tokens • Use best candidates to augment phrase table

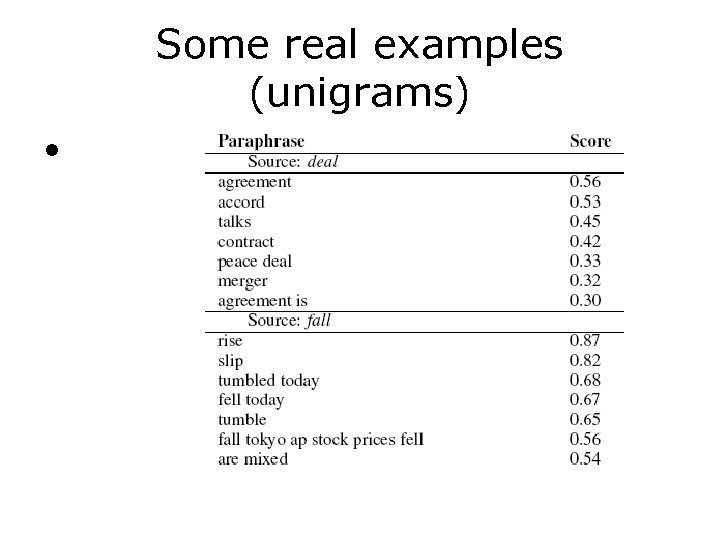

Some real examples (unigrams)

Some real examples (unigrams) •

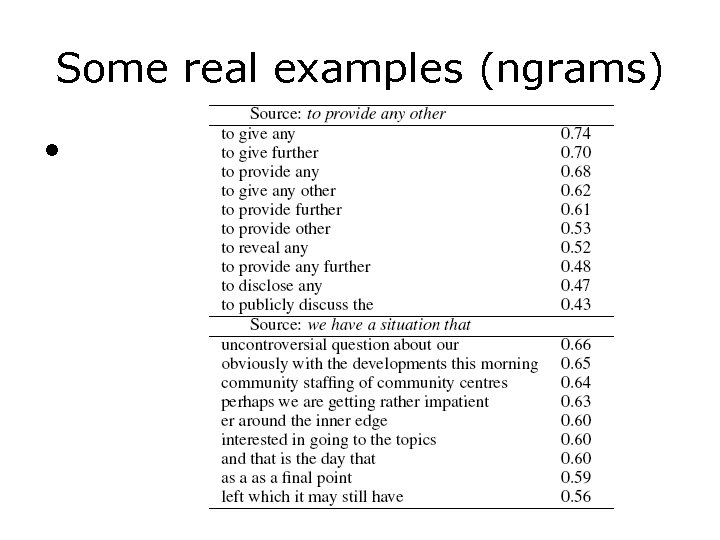

Some real examples (n-grams)

Some real examples (ngrams) •

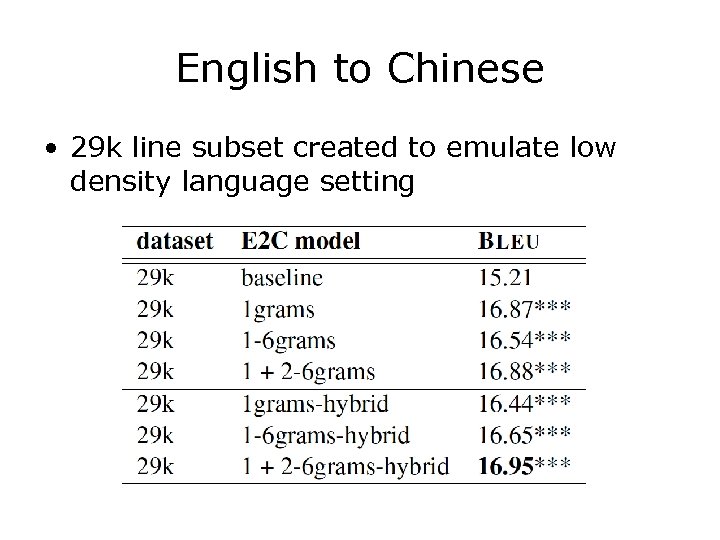

English to Chinese
• A 29k-line subset, created to emulate a low-density language setting

English to Chinese • 29 k line subset created to emulate low density language setting

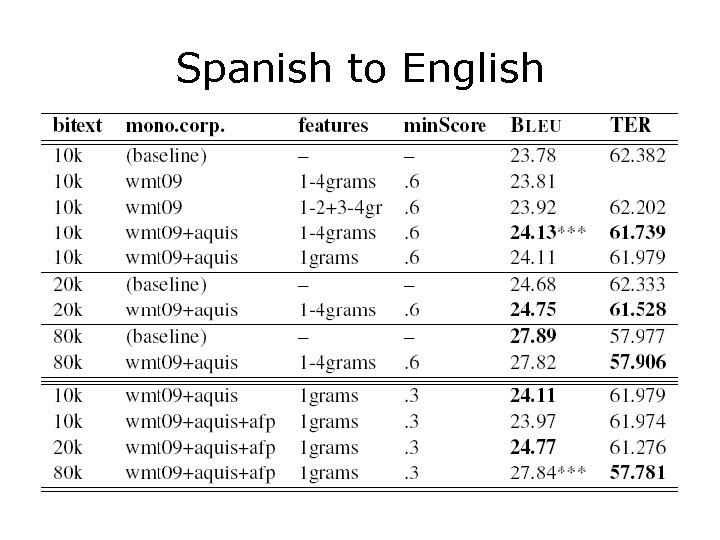

Spanish to English

Spanish to English •

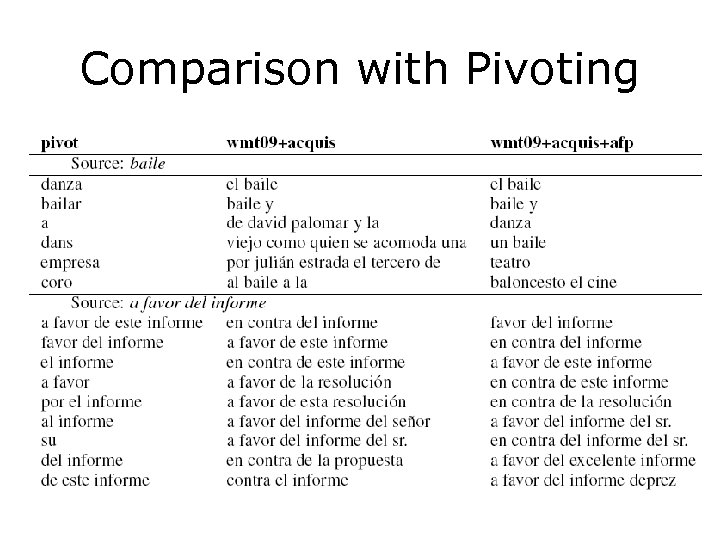

Comparison with Pivoting

Comparison with Pivoting •

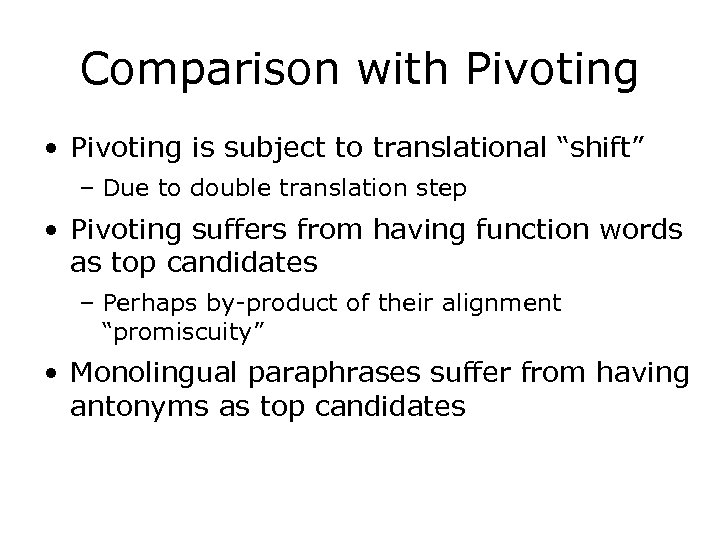

Comparison with Pivoting
• Pivoting is subject to translational “shift”
  – Due to the double translation step
• Pivoting suffers from having function words as top candidates
  – Perhaps a by-product of their alignment “promiscuity”
• Monolingual paraphrases suffer from having antonyms as top candidates

Monolingually-Derived Paraphrases: Advantages
• Significant gains in SMT results for small training sets
• Good for resource-poor languages
• Does not rely on bitexts (a limited resource)
• A larger monolingual paraphrase training set yields better paraphrases
• General: any similarity measure can be plugged in

Challenges
• Quality: distributional paraphrases suffer from high-ranking antonyms and co-hypernyms
• Smaller gains than the pivoting technique (Callison-Burch et al., 2006), but the approach can scale up
• How to benefit from POS and syntactic info, e.g., Callison-Burch (2008)
• How to benefit from semantic info / WSD, e.g., Marton, Mohammad & Resnik (2009); Erk & Pado (2008)
• Scaling: need to explore whether gains hold on bigger SMT sets before exhausting the capacity to handle a huge monolingual set

Thank you! • Questions
