95dcdb7d8aab7374864c21befc5968c1.ppt

- Number of slides: 30

Financial Noise And Overconfidence

Ubiquitous News and Advice § CNBC § WSJ § Jim Cramer § Assorted talking heads § How good can it be?

Thought Experiment § Listen to CNBC every day § Choose ten stocks from recommendations § Buy stocks § How good can this strategy be?

Thought Experiment § 10 stocks × 260 business days = 2,600 bets § Assume the bets are independent § How good does the information have to be for the probability of losing money to be 1 in 10,000?

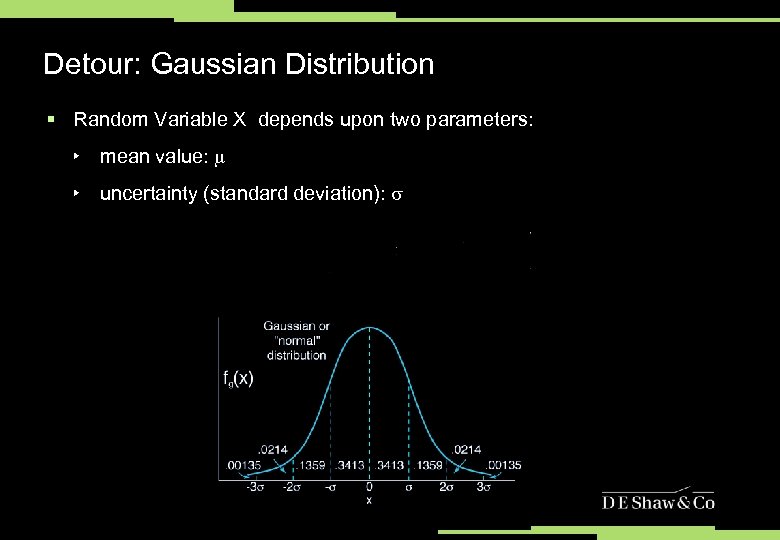

Detour: Gaussian Distribution § Random variable X depends on two parameters: ‣ mean value: $\mu$ ‣ uncertainty (standard deviation): $\sigma$

Detour: Gaussian Distribution § Universal “Bell Curve” Distribution § Prob(X < 0) depends only on the ratio of the mean to the standard deviation, $\mu / \sigma$

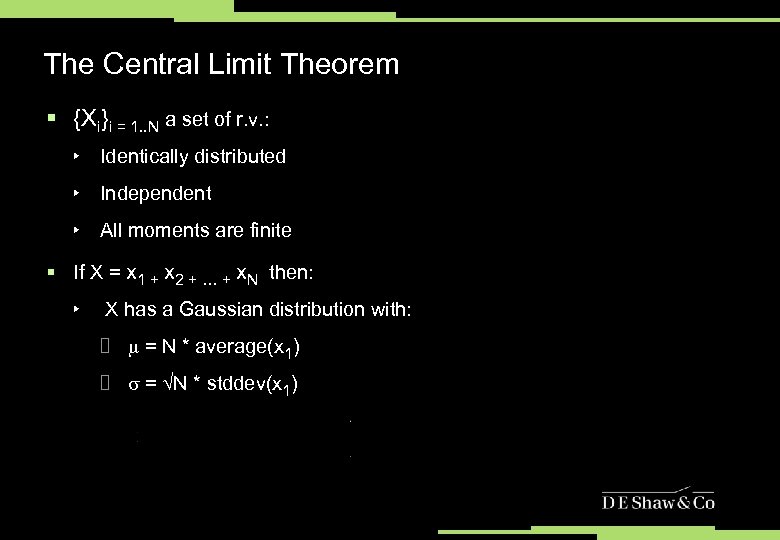

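The Central Limit Theorem § $\{x_i\}_{i = 1, \dots, N}$ is a set of random variables (r.v.) that are: ‣ Identically distributed ‣ Independent ‣ All moments are finite § If $X = x_1 + x_2 + \dots + x_N$ then, for large N: ‣ X has an (approximately) Gaussian distribution with: $\mu = N \cdot \mathrm{average}(x_1)$ and $\sigma = \sqrt{N} \cdot \mathrm{stddev}(x_1)$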

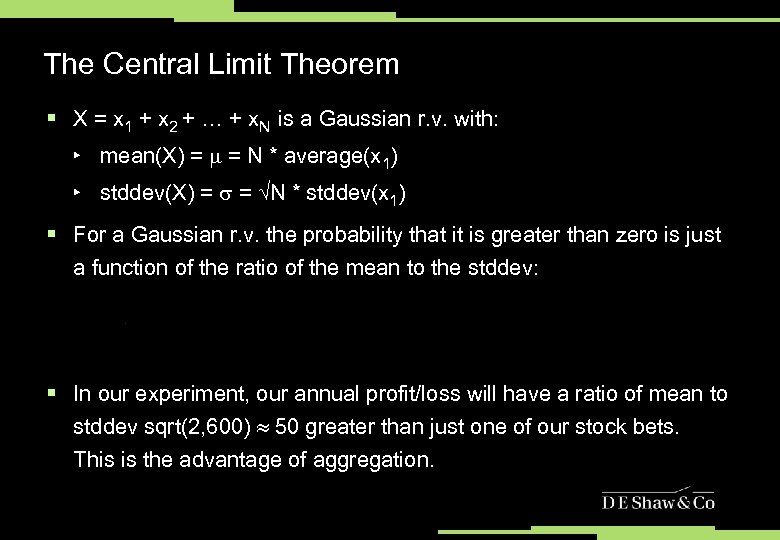

The Central Limit Theorem § $X = x_1 + x_2 + \dots + x_N$ is (approximately) a Gaussian r.v. with: ‣ $\mathrm{mean}(X) = \mu = N \cdot \mathrm{average}(x_1)$ ‣ $\mathrm{stddev}(X) = \sigma = \sqrt{N} \cdot \mathrm{stddev}(x_1)$ § For a Gaussian r.v., the probability that it is greater than zero is just a function of the ratio of the mean to the stddev: $\mathrm{Prob}(X > 0) = \Phi(\mu / \sigma)$, where $\Phi$ is the standard normal cumulative distribution function § In our experiment, the annual profit/loss has a ratio of mean to stddev that is $\sqrt{2{,}600} \approx 50$ times greater than that of a single stock bet. This is the advantage of aggregation.
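The aggregation advantage follows in one step from the two scaling rules on this slide. A short worked version, using the slide's own `average` and `stddev` notation:

$$
\frac{\mathrm{mean}(X)}{\mathrm{stddev}(X)}
= \frac{N \cdot \mathrm{average}(x_1)}{\sqrt{N} \cdot \mathrm{stddev}(x_1)}
= \sqrt{N} \cdot \frac{\mathrm{average}(x_1)}{\mathrm{stddev}(x_1)},
\qquad
\sqrt{2{,}600} \approx 51 .
$$

The mean grows like $N$ while the standard deviation grows only like $\sqrt{N}$, so the ratio improves by $\sqrt{N}$. The slides round this factor to 50.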

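How Good is the Information? § Assume $\mathrm{Prob}(X_{\mathrm{annual}} < 0) \approx 1 / 10{,}000$ § $\mathrm{mean}(X_{\mathrm{annual}}) \approx 3.7 \cdot \mathrm{stddev}(X_{\mathrm{annual}})$ § $\mathrm{mean}(X_{\mathrm{ind}}) \approx (3.7 / 50) \cdot \mathrm{stddev}(X_{\mathrm{ind}}) \approx 0.07 \cdot \mathrm{stddev}(X_{\mathrm{ind}})$ § $\mathrm{Prob}(X_{\mathrm{ind}} < 0) \approx 47\%$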
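The chain of estimates on this slide can be checked numerically. The following is a minimal sketch using Python's standard-library `statistics.NormalDist`. The variable names are illustrative, and the calculation assumes, as the thought experiment does, that the bets are independent and their sum is Gaussian.

```python
from math import sqrt
from statistics import NormalDist

std_normal = NormalDist()  # standard Gaussian: mean 0, stddev 1

n_bets = 10 * 260                # 2,600 bets per year
target_loss_prob = 1 / 10_000    # allowed probability of a losing year

# Annual mean/stddev ratio needed so that Prob(X_annual < 0) hits the target.
annual_ratio = -std_normal.inv_cdf(target_loss_prob)

# Aggregating n independent bets multiplies the ratio by sqrt(n),
# so a single bet needs a ratio that is smaller by that factor.
per_bet_ratio = annual_ratio / sqrt(n_bets)

# Probability that one individual bet loses money.
per_bet_loss_prob = std_normal.cdf(-per_bet_ratio)

print(f"annual ratio  : {annual_ratio:.2f}")
print(f"per-bet ratio : {per_bet_ratio:.3f}")
print(f"per-bet loss  : {per_bet_loss_prob:.1%}")
```

The three printed values should land close to the slide's rounded figures of 3.7, 0.07 and 47%.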

Probability That Advice Is Right? § Any given piece of advice can’t be much more than 53% reliable, compared to 50% for a random flip of a coin § Otherwise, we could make a strategy that almost never loses money by just aggregating all the different pieces of advice § Contrast “my price target for this stock is 102 by mid-November” with “I think it’s 53–47% that this goes up vs. down” § Precision here greatly exceeds possible accuracy § Overconfidence § No error bars

Probability that Advice is Right? § If we want $\mathrm{Prob}(X_{\mathrm{annual}} < 0) \approx 1 / 1{,}000{,}000$ then: ‣ $\mathrm{Prob}(X_{\mathrm{ind}} < 0) \approx 46\%$, instead of 47% § A computer algorithm (or team of analysts) predicting something every day for all 2,000 U.S. stocks, instead of just 10 stocks, would need only $\mathrm{Prob}(X_{\mathrm{ind}} < 0) \approx 49.75\%$ to get $\mathrm{Prob}(X_{\mathrm{annual}} < 0)$ to 1 in 1,000,000! § The existence of such a strategy seems unlikely § Statements expressed with certainty about the market need to be viewed with skepticism § Low signal-to-noise ratio
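How the required per-bet edge depends on the number of bets and on the annual target can be tabulated directly. This is a sketch under the same independence and Gaussian assumptions as the thought experiment. The function name `required_loss_prob` and the set of targets shown are illustrative choices, not part of the original slides.

```python
from math import sqrt
from statistics import NormalDist


def required_loss_prob(n_bets: int, annual_loss_target: float) -> float:
    """Per-bet loss probability that meets the annual loss target,
    assuming independent, identically distributed Gaussian bets."""
    std_normal = NormalDist()
    annual_ratio = -std_normal.inv_cdf(annual_loss_target)
    return std_normal.cdf(-annual_ratio / sqrt(n_bets))


scenarios = {
    "10 stocks": 10 * 260,          # 2,600 bets per year
    "2,000 stocks": 2_000 * 260,    # 520,000 bets per year
}
for label, n_bets in scenarios.items():
    for target in (1e-3, 1e-4, 1e-6):
        p = required_loss_prob(n_bets, target)
        print(f"{label:>12}  target {target:.0e}  per-bet loss {p:.2%}")
```

A tighter annual target pushes the allowed per-bet loss probability further below 50%, while a larger number of bets pulls it back toward 50%.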

Where Are Our Assumptions Wrong? § Transaction costs matter § Individual stock bets are almost certainly not independent § By placing bets on linear combinations of individual stocks one can partially overcome correlations between bets, but doing so reduces the effective number of bets. § These aren’t just technical details. They can make the difference between making and losing money.
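To get a feel for how quickly correlation erodes the benefit of aggregation, here is a small sketch. It assumes a deliberately simple model that is not in the slides: every pair of bets has the same correlation `rho`. Under that assumption the variance of the sum is $N\sigma^2(1 + (N-1)\rho)$, so the bets behave like `N / (1 + (N - 1) * rho)` independent ones.

```python
from math import sqrt


def effective_bets(n_bets: int, rho: float) -> float:
    """Effective number of independent bets when every pair of bets
    shares the same correlation rho (an illustrative assumption)."""
    return n_bets / (1 + (n_bets - 1) * rho)


n_bets = 2_600
for rho in (0.0, 0.01, 0.05, 0.2):
    n_eff = effective_bets(n_bets, rho)
    # sqrt(n_eff) is the factor by which aggregation improves mean/stddev.
    print(f"rho={rho:<5} effective bets={n_eff:8.1f} "
          f"ratio boost={sqrt(n_eff):5.1f}x")
```

Even a pairwise correlation of 1% cuts 2,600 nominal bets to fewer than 100 effective ones in this toy model.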

Honesty Is The Best Policy § Despite caveats, the big picture is correct ‣ Only small effects exist ‣ The data is very noisy ‣ One can find statistical significance only by aggregating lots of data § As a result there is great value in: ‣ Clean data ‣ Quantitative sophistication ‣ Discipline ‣ Honesty

Overconfidence and Overfitting § Humans are good at learning patterns when: ‣ Patterns are persistent ‣ Signal-to-noise ratio isn’t too bad § Examples include: language acquisition, human motor control, physics, chemistry, biology, etc. § Humans are bad at finding weak patterns that come and go as markets evolve § They tend to overestimate their abilities to find patterns

Further Consequences of Aggregation § If profit/loss distribution is Gaussian then only the mean and the standard deviation matter § Consider 3 strategies: ‣ Invest all your money in the S&P 500 ‣ Invest all your money in the Nasdaq ‣ 50% in the Nasdaq and 50% in short-term U.S. Government Bonds § Over the past 40 years, these strategies returned approximately (mean +/- standard deviation, in the same order): ‣ 7% +/- 20% ‣ 8% +/- 35% ‣ 5.5% +/- 17.5%

Diversifying Risk § Imagine we can lend or borrow at some risk-free rate r § Suppose we have an amount of capital c, which we distribute: ‣ X in S&P 500 ‣ Y in Nasdaq ‣ Z in “cash” (where c = X + Y + Z) § Our investment returns are: ‣ $r_P = X \cdot r_{SP} + Y \cdot r_{ND} + Z \cdot r$ ‣ $r_P = X \cdot (r_{SP} - r) + Y \cdot (r_{ND} - r) + c \cdot r$ ‣ $E(r_P) = X \cdot E(r_{SP} - r) + Y \cdot E(r_{ND} - r) + c \cdot r$
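The step from the first line to the second is just the substitution $Z = c - X - Y$. Written out, together with the standard expression for the risk of the same portfolio (the symbols $\sigma_{SP}$, $\sigma_{ND}$ for the two volatilities and $\rho$ for their correlation are notation introduced here, not taken from the slide):

$$
\begin{aligned}
r_P &= X\, r_{SP} + Y\, r_{ND} + (c - X - Y)\, r \\
    &= X\,(r_{SP} - r) + Y\,(r_{ND} - r) + c\, r, \\[4pt]
\mathrm{stddev}(r_P)^2 &= X^2 \sigma_{SP}^2 + Y^2 \sigma_{ND}^2 + 2\,\rho\, X\, Y\, \sigma_{SP}\, \sigma_{ND}.
\end{aligned}
$$

The cash position contributes the constant $c\,r$ to the return and nothing to the variance, since the risk-free rate is treated as fixed.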

Consequences of Diversification § As long as $E(r_{SP})$ and/or $E(r_{ND})$ exceeds the risk-free rate, r, we can target any desired return § The price for high return is high volatility § One measure of a strategy is the ratio of the return above the risk-free rate divided by the volatility of the strategy § Investing everything in Nasdaq gave the best return of the three strategies in the original list § Assuming the correlation between ND and SP is 0.85, the optimal mixture of investments gives:

Consequences of Diversification § For our example, we want $Y \approx -0.2 \cdot X$, but we get to choose the value of X. We can choose the level of risk! § If we choose $X \approx 2.4$ and $Y \approx -0.5$ (as multiples of our capital), we get the same risk as investing everything in the Nasdaq but our return is 10.1% rather than 8% § Returns are meaningless if not quoted with: ‣ Volatility ‣ Correlations § Why does everyone just quote absolute returns?
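The weights quoted here can be reproduced, to rounding, from the 40-year figures. The sketch below solves the two-asset problem by hand. The risk-free rate of 3% is an assumption inferred from the 50/50 Nasdaq/bond strategy (0.5 × 8% + 0.5 × r = 5.5%), and the method shown (weights proportional to the inverse covariance matrix times the excess returns) is the textbook mean-variance solution, not necessarily the calculation used for the slides.

```python
from math import sqrt

# Figures quoted on the slides (annual, as fractions).
mu_sp, sigma_sp = 0.07, 0.20   # S&P 500
mu_nd, sigma_nd = 0.08, 0.35   # Nasdaq
rho = 0.85                     # correlation between the two
# Assumption: risk-free rate implied by the 50/50 Nasdaq/bond mix.
r = 0.03

e_sp, e_nd = mu_sp - r, mu_nd - r        # excess returns
cov = rho * sigma_sp * sigma_nd          # covariance of S&P and Nasdaq
var_sp, var_nd = sigma_sp**2, sigma_nd**2

# Best return-per-unit-risk direction: inverse(covariance) @ excess returns,
# written out for the 2x2 case. The overall scale is fixed further down.
det = var_sp * var_nd - cov**2
x_dir = (var_nd * e_sp - cov * e_nd) / det
y_dir = (var_sp * e_nd - cov * e_sp) / det


def volatility(x: float, y: float) -> float:
    """Standard deviation of a portfolio with weights x (S&P) and y (Nasdaq)."""
    return sqrt(x * x * var_sp + y * y * var_nd + 2 * x * y * cov)


# Scale the weights so the portfolio is exactly as risky as 100% Nasdaq.
scale = sigma_nd / volatility(x_dir, y_dir)
X, Y = x_dir * scale, y_dir * scale
portfolio_return = X * e_sp + Y * e_nd + r   # capital c = 1

print(f"Y / X  = {Y / X:.2f}")
print(f"X = {X:.2f}, Y = {Y:.2f}")
print(f"return = {portfolio_return:.1%} at volatility {volatility(X, Y):.0%}")
```

With unrounded weights the return comes out slightly above the slide's 10.1%, which is consistent with the slide rounding Y to -0.5.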

Past Results Do Not Guarantee Future Results § If we only look back over the past 20 years, the numbers change: ‣ 3.5% +/- 20% for S&P ‣ 7% +/- 35% for Nasdaq ‣ 5.0% +/- 17.5% for 50% Nasdaq and 50% Risk-free § The same optimal weighting calculation gives $X \approx -2.6$ and $Y \approx 1.9$, which has the same risk as Nasdaq but a return of 9.3%! § 40 years of data suggests that an investor should go long S&P and short Nasdaq, but 20 years of data suggests the opposite. If we used the 40-year weights on the last 20 years we’d end up making 2%.
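The cross-check on this slide is simple arithmetic and can be sketched as follows, using the rounded weights quoted in the slides. The 3% risk-free rate is again an assumption inferred from the 50/50 Nasdaq/risk-free figures, and the helper `evaluate` is a hypothetical name introduced for illustration.

```python
from math import sqrt

sigma_sp, sigma_nd, rho = 0.20, 0.35, 0.85
r = 0.03  # assumption: risk-free rate implied by the 50/50 figures


def evaluate(x: float, y: float, mu_sp: float, mu_nd: float):
    """Expected return and volatility for weights x (S&P) and y (Nasdaq),
    with capital c = 1 and the remainder held at the risk-free rate."""
    ret = x * (mu_sp - r) + y * (mu_nd - r) + r
    var = (x * sigma_sp) ** 2 + (y * sigma_nd) ** 2 \
        + 2 * rho * x * y * sigma_sp * sigma_nd
    return ret, sqrt(var)


periods = {"40 years": (0.07, 0.08), "20 years": (0.035, 0.07)}
weights = {"40-year weights": (2.4, -0.5), "20-year weights": (-2.6, 1.9)}

for w_label, (x, y) in weights.items():
    for p_label, (mu_sp, mu_nd) in periods.items():
        ret, vol = evaluate(x, y, mu_sp, mu_nd)
        print(f"{w_label} on {p_label}: return {ret:.1%}, volatility {vol:.0%}")
```

Each set of weights looks good on the period it was fitted to and poor on the other, which is the overfitting risk the slide is pointing at.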

Application to the Credit Crisis § Securitization ‣ Bundles of loans meant to behave independently ‣ CDOs are slices of securitized bundles ‣ Rating agencies suggested 1 in 10,000 chance of investments losing money ‣ All the loans defaulted together, rather than independently!

Credit Models Were Not Robust § Even if mortgages were independent, the process of securitization can be very unstable. § Thought Experiment: ‣ Imagine a mortgage pays out $1 unless it defaults, in which case it pays $0. ‣ All mortgages are independent and have a default probability of 5%. ‣ What happens to default probabilities when one bundles mortgages?

Mortgage Bundling Primer: Tranches § Combine the payout of 100 mortgages to make 100 new instruments called “Tranches.” § Tranche 1 pays $1 if no mortgage defaults. § Tranche 2 pays $1 if at most one mortgage defaults. § Tranche i pays $1 if the number of defaulted mortgages < i. § So far all we have done is transform the cash flow. § What are the default rates for the new Tranches?

Mortgage Bundling Primer: Tranches § If each mortgage has default rate p = 0.05 then the ith Tranche has default rate: $p_T(i) = \sum_{j=i}^{100} \binom{100}{j} p^j (1 - p)^{100 - j}$ § Where $p_T(i)$ is the default probability for the ith Tranche, i.e., the probability that at least i of the 100 mortgages default. § For the first Tranche $p_T(1) = 99.4\%$, i.e., it’s very likely to default. But for the 10th Tranche $p_T(10) = 2.8\%$. § By the 10th Tranche we have created an instrument that is safer than the original mortgages.
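The tranche default rates follow from a binomial tail: Tranche i fails to pay exactly when at least i of the 100 independent mortgages default. A minimal sketch, where the function name `tranche_default_prob` is illustrative:

```python
from math import comb


def tranche_default_prob(i: int, p: float, n: int = 100) -> float:
    """Probability that at least i of n independent mortgages default,
    i.e. that Tranche i fails to pay its $1."""
    return sum(comb(n, j) * p**j * (1 - p) ** (n - j) for j in range(i, n + 1))


p = 0.05  # default probability of a single mortgage
for i in (1, 5, 10, 15):
    print(f"Tranche {i:>2}: default probability {tranche_default_prob(i, p):.2%}")
```

Tranches 1 and 10 should come out near the 99.4% and 2.8% quoted on the slide.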

Securitizing Tranches: CDOs § That was fun, let’s do it again! § Take 100 type-10 Tranches and bundle them up together using the same method. § The default rate for the kth type-10 Tranche is then: $p_{CDO}(k) = \sum_{j=k}^{100} \binom{100}{j} \, p_T(10)^j \, (1 - p_T(10))^{100 - j}$ § The default probability on the 10th type-10 Tranche is then $p_{CDO}(10) = 0.05\%$. Only 1/100 the default probability of the original mortgages!

Why Did We Do This: Risk Averse Investors § After making these manipulations we still only have the same cash flow from 10,000 mortgages, so why did we do it? § Some investors will pay a premium for very low risk investments. § They may even be required by their charter to only invest in instruments rated very low risk (AAA) by rating agencies. § They do not have direct access to the mortgage market, so they can’t mimic securitization themselves.

Are They Really So Safe? § These results are very sensitive to the assumptions. § If the underlying mortgages actually have a default probability of 6% (a 20% increase) then the 10th Tranches have a default probability of 7.8% (about 2.75 times the original 2.8%). § Worse, the 10th type-10 Tranches will have a default probability of 25%, about 500 times the original 0.05%! § These models are not robust to errors in the assumptions!
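The sensitivity can be seen by sweeping the mortgage default probability through both layers of bundling. The sketch below assumes independent defaults at each layer, as the thought experiment does. The helper `at_least` and the extra 4% and 7% rows are illustrative additions.

```python
from math import comb


def at_least(k: int, p: float, n: int = 100) -> float:
    """Probability that at least k of n independent instruments default."""
    below_k = sum(comb(n, j) * p**j * (1 - p) ** (n - j) for j in range(k))
    return 1.0 - below_k


for p_mortgage in (0.04, 0.05, 0.06, 0.07):
    p_tranche = at_least(10, p_mortgage)   # 10th Tranche of 100 mortgages
    p_cdo = at_least(10, p_tranche)        # 10th Tranche of 100 type-10 Tranches
    print(f"mortgage {p_mortgage:.0%}  ->  tranche {p_tranche:.2%}"
          f"  ->  CDO {p_cdo:.3%}")
```

A one-point change in the input moves the final figure by orders of magnitude, because each bundling step sits on the steep part of the binomial tail.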

Application to the Credit Crisis § Connections with thought experiment: ‣ Overconfidence (bad estimate of error bars) ‣ Insufficient independence between loans (bad use of Central Limit Theorem) ‣ Illusory diversification (just one big bet that houses wouldn’t lose value)
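The effect of insufficient independence can be illustrated with a toy model. Everything about the model below is a hypothetical assumption made for illustration, not something stated in the slides: the economy is in a "good" state 90% of the time (3% mortgage default rate) and a "bad" state 10% of the time (23% default rate), so the average default rate is still 5%, but all mortgages share the same state.

```python
from math import comb


def at_least(k: int, p: float, n: int = 100) -> float:
    """Probability that at least k of n independent instruments default."""
    below_k = sum(comb(n, j) * p**j * (1 - p) ** (n - j) for j in range(k))
    return 1.0 - below_k


# Independent mortgages, each with a 5% default probability.
independent = at_least(10, 0.05)

# Hypothetical two-state economy with the same 5% average default rate.
p_good, p_bad, prob_bad = 0.03, 0.23, 0.10
average_rate = (1 - prob_bad) * p_good + prob_bad * p_bad

# Defaults are independent only once the state of the economy is known.
correlated = ((1 - prob_bad) * at_least(10, p_good)
              + prob_bad * at_least(10, p_bad))

print(f"average mortgage default rate : {average_rate:.1%}")
print(f"10th Tranche, independent     : {independent:.1%}")
print(f"10th Tranche, shared state    : {correlated:.1%}")
```

With the same average default rate, the shared state makes the 10th Tranche several times riskier than the independent calculation suggests, because it defaults almost surely whenever the bad state occurs.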

About the D. E. Shaw Group § Founded in 1988 § Quantitative and qualitative investment strategies § Offices in North America, Europe, and Asia § 1,500 employees worldwide § Managing approximately $22 billion (as of April 1, 2010)

About What I Do § Quantitative Analyst (“Quant”) § Most Quants have a background in math, physics, EE, CS, or statistics ‣ Many hold a Ph.D. in one of these subjects § We work on: ‣ Forecasting future prices ‣ Reducing transaction costs ‣ Modeling/managing risk
