8e8a3d93521bd71b2575f78c15689dd9.ppt

- Number of slides: 1

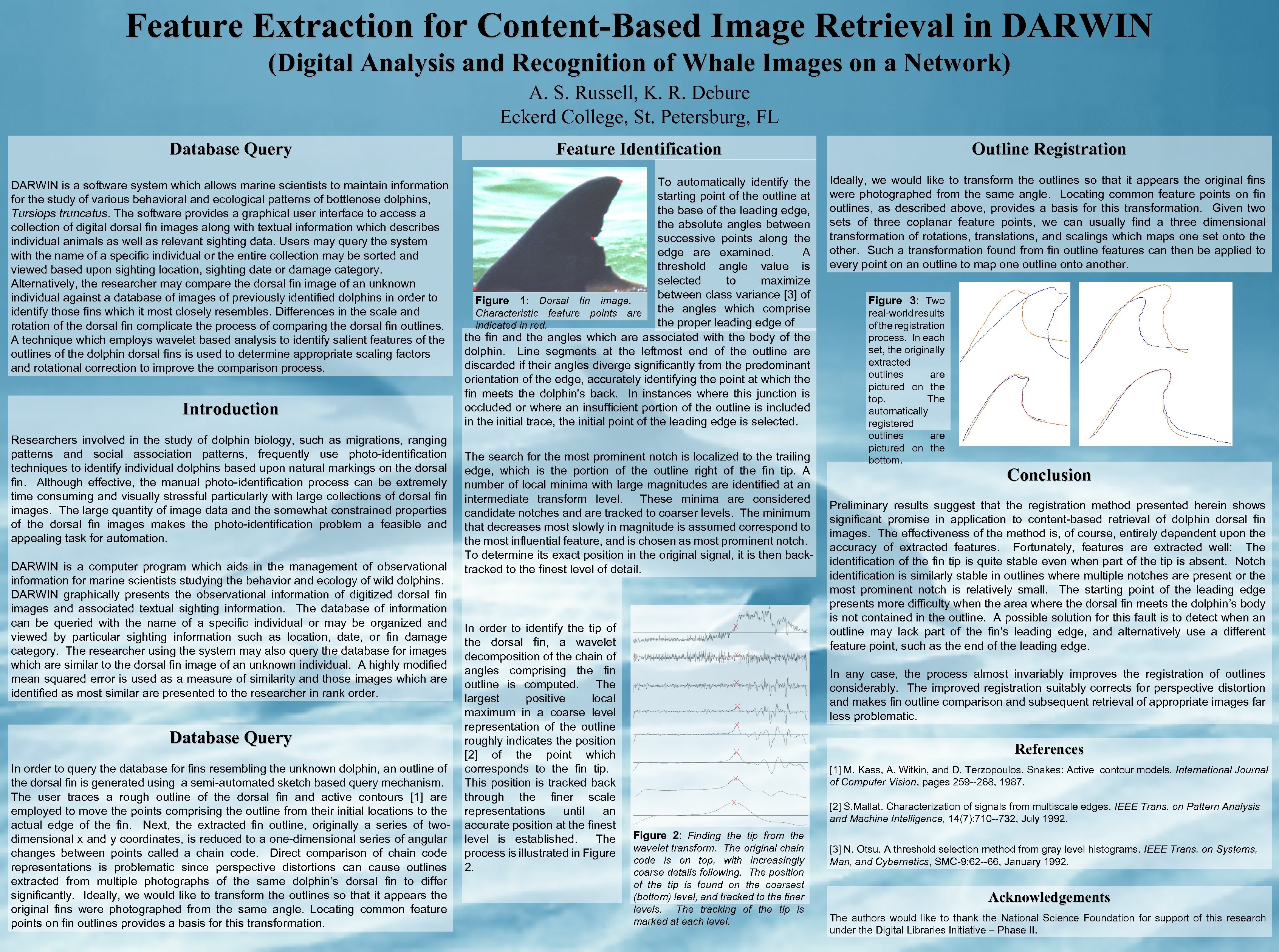

Feature Extraction for Content-Based Image Retrieval in DARWIN (Digital Analysis and Recognition of Whale Images on a Network)

A. S. Russell, K. R. Debure
Eckerd College, St. Petersburg, FL

Poster panels: Database Query, Feature Identification, Outline Registration

DARWIN is a software system that allows marine scientists to maintain information for the study of various behavioral and ecological patterns of bottlenose dolphins, Tursiops truncatus. The software provides a graphical user interface to access a collection of digital dorsal fin images along with textual information which describes individual animals as well as relevant sighting data. Users may query the system with the name of a specific individual, or the entire collection may be sorted and viewed based upon sighting location, sighting date or damage category. Alternatively, the researcher may compare the dorsal fin image of an unknown individual against a database of images of previously identified dolphins in order to identify those fins which it most closely resembles. Differences in the scale and rotation of the dorsal fin complicate the process of comparing the dorsal fin outlines. A technique which employs wavelet-based analysis to identify salient features of the outlines of the dolphin dorsal fins is used to determine appropriate scaling factors and rotational correction to improve the comparison process.

Figure 1: Dorsal fin image. Characteristic feature points are indicated in red.

To automatically identify the starting point of the outline at the base of the leading edge, the absolute angles between successive points along the edge are examined. A threshold angle value is selected to maximize the between-class variance [3] of the angles which comprise the proper leading edge of the fin and the angles which are associated with the body of the dolphin.
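The threshold selection described above can be sketched in Python. This is a minimal illustration rather than DARWIN's own code: the function names `segment_angles` and `otsu_threshold` are hypothetical, angles are assumed to be segment orientations in degrees, and the between-class variance criterion of [3] is applied directly to the list of angles instead of to a histogram.

```python
import math


def segment_angles(points):
    """Orientation, in degrees, of each segment between successive outline points."""
    return [math.degrees(math.atan2(y1 - y0, x1 - x0))
            for (x0, y0), (x1, y1) in zip(points, points[1:])]


def otsu_threshold(values):
    """Return the cut that maximizes between-class variance, or None if no cut exists.

    Assumption: every gap between distinct sorted values is tried as a cut,
    and the cut is reported as the midpoint of that gap.
    """
    ordered = sorted(values)
    n = len(ordered)
    total = sum(ordered)
    best_cut, best_score = None, -1.0
    running = 0.0
    for k in range(1, n):
        running += ordered[k - 1]
        if ordered[k] == ordered[k - 1]:
            continue  # no gap between equal values, so no cut here
        w0, w1 = k / n, (n - k) / n                  # class weights
        mu0 = running / k                            # mean of the lower class
        mu1 = (total - running) / (n - k)            # mean of the upper class
        score = w0 * w1 * (mu0 - mu1) ** 2           # between-class variance
        if score > best_score:
            best_cut = (ordered[k - 1] + ordered[k]) / 2
            best_score = score
    return best_cut
```

Angles on one side of the returned cut would then be attributed to the body of the dolphin and those on the other side to the leading edge; which side is which depends on the image coordinate convention, which the poster does not state.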
Line segments at the leftmost end of the outline are discarded if their angles diverge significantly from the predominant orientation of the leading edge, which accurately identifies the point at which the fin meets the dolphin's back. In instances where this junction is occluded or where an insufficient portion of the outline is included in the initial trace, the initial point of the traced leading edge is selected instead.

Ideally, we would like to transform the outlines so that they appear as if the original fins were photographed from the same angle. Locating common feature points on fin outlines, as described above, provides a basis for this transformation. Given two sets of three coplanar feature points, we can usually find a three-dimensional transformation of rotations, translations, and scalings which maps one set onto the other. Such a transformation, found from fin outline features, can then be applied to every point on an outline to map one outline onto another.

Introduction

Researchers involved in the study of dolphin biology, such as migrations, ranging patterns and social association patterns, frequently use photo-identification techniques to identify individual dolphins based upon natural markings on the dorsal fin. Although effective, the manual photo-identification process can be extremely time-consuming and visually stressful, particularly with large collections of dorsal fin images. The large quantity of image data and the somewhat constrained properties of the dorsal fin images make the photo-identification problem a feasible and appealing task for automation.

DARWIN is a computer program which aids in the management of observational information for marine scientists studying the behavior and ecology of wild dolphins. DARWIN graphically presents the observational information of digitized dorsal fin images and associated textual sighting information.
The database of information can be queried with the name of a specific individual or may be organized and viewed by particular sighting information such as location, date, or fin damage category. The researcher using the system may also query the database for images which are similar to the dorsal fin image of an unknown individual. A highly modified mean squared error is used as a measure of similarity, and those images which are identified as most similar are presented to the researcher in rank order.

Database Query

In order to query the database for fins resembling the unknown dolphin, an outline of the dorsal fin is generated using a semi-automated, sketch-based query mechanism. The user traces a rough outline of the dorsal fin, and active contours [1] are employed to move the points comprising the outline from their initial locations to the actual edge of the fin. Next, the extracted fin outline, originally a series of two-dimensional x and y coordinates, is reduced to a one-dimensional series of angular changes between points called a chain code.

Direct comparison of chain code representations is problematic, since perspective distortions can cause outlines extracted from multiple photographs of the same dolphin's dorsal fin to differ significantly. Ideally, we would like to transform the outlines so that they appear as if the original fins were photographed from the same angle. Locating common feature points on fin outlines provides a basis for this transformation.

The search for the most prominent notch is localized to the trailing edge, which is the portion of the outline to the right of the fin tip. A number of local minima with large magnitudes are identified at an intermediate transform level. These minima are considered candidate notches and are tracked to coarser levels. The minimum that decreases most slowly in magnitude is assumed to correspond to the most influential feature, and is chosen as the most prominent notch.
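The notch selection rule above can be illustrated with a simplified sketch. It is not the poster's implementation: moving averages of growing width stand in for the wavelet decomposition, every name and parameter (`half_widths`, `start_level`, `tip_index`) is hypothetical, and "large magnitude" is reduced to "any negative local minimum". The final loop also moves the chosen notch back down to the finest level.

```python
def smooth(signal, half_width):
    """Moving average over 2*half_width+1 samples, clamping indices at both ends."""
    n = len(signal)
    if half_width == 0:
        return list(signal)
    out = []
    for i in range(n):
        window = [signal[min(max(j, 0), n - 1)]
                  for j in range(i - half_width, i + half_width + 1)]
        out.append(sum(window) / len(window))
    return out


def local_minima(values, start):
    """Indices right of `start` that dip below the left neighbour and not above the right."""
    return [i for i in range(max(start + 1, 1), len(values) - 1)
            if values[i] < values[i - 1] and values[i] <= values[i + 1]]


def track(values, position, radius):
    """Move to the smallest value within `radius` samples of `position`."""
    lo = max(0, position - radius)
    hi = min(len(values), position + radius + 1)
    return min(range(lo, hi), key=lambda i: values[i])


def most_prominent_notch(chain_code, tip_index, half_widths=(0, 1, 2, 4), start_level=1):
    """Pick the trailing-edge minimum whose magnitude decays most slowly when coarsened."""
    levels = [smooth(chain_code, w) for w in half_widths]
    best_candidate, best_ratio = None, -1.0
    for candidate in local_minima(levels[start_level], tip_index):
        start_value = levels[start_level][candidate]
        if start_value >= 0:
            continue  # only negative dips count as candidate notches
        position = candidate
        for level in range(start_level + 1, len(levels)):
            position = track(levels[level], position, half_widths[level])
        # Fraction of the original magnitude that survives at the coarsest level.
        ratio = abs(levels[-1][position]) / abs(start_value)
        if ratio > best_ratio:
            best_candidate, best_ratio = candidate, ratio
    if best_candidate is None:
        return None
    position = best_candidate
    for level in range(start_level - 1, -1, -1):  # back down to the finest level
        position = track(levels[level], position, max(1, half_widths[level]))
    return position
```

With this stand-in, a broad dip outlasts a narrow spike of greater depth as the smoothing window grows, which mirrors the stated preference for the minimum that decreases most slowly in magnitude.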
To determine the notch's exact position in the original signal, the selected minimum is then backtracked to the finest level of detail.

In order to identify the tip of the dorsal fin, a wavelet decomposition of the chain of angles comprising the fin outline is computed. The largest positive local maximum in a coarse-level representation of the outline roughly indicates the position [2] of the point which corresponds to the fin tip. This position is tracked back through the finer-scale representations until an accurate position at the finest level is established. The process is illustrated in Figure 2.

Figure 2: Finding the tip from the wavelet transform. The original chain code is on top, with increasingly coarse details following. The position of the tip is found on the coarsest (bottom) level, and tracked to the finer levels. The tracking of the tip is marked at each level.

Figure 3: Two real-world results of the registration process. In each set, the originally extracted outlines are pictured on the top. The automatically registered outlines are pictured on the bottom.

Conclusion

Preliminary results suggest that the registration method presented herein shows significant promise in application to content-based retrieval of dolphin dorsal fin images. The effectiveness of the method is, of course, entirely dependent upon the accuracy of the extracted features. Fortunately, features are extracted well: the identification of the fin tip is quite stable even when part of the tip is absent. Notch identification is similarly stable in outlines where multiple notches are present or the most prominent notch is relatively small. The starting point of the leading edge presents more difficulty when the area where the dorsal fin meets the dolphin's body is not contained in the outline. A possible solution for this fault is to detect when an outline may lack part of the fin's leading edge, and to use a different feature point instead, such as the end of the leading edge. In any case, the process almost invariably improves the registration of outlines considerably. The improved registration suitably corrects for perspective distortion and makes fin outline comparison and subsequent retrieval of appropriate images far less problematic.

References

[1] M. Kass, A. Witkin, and D. Terzopoulos. Snakes: Active contour models. International Journal of Computer Vision, pages 259-268, 1987.

[2] S. Mallat. Characterization of signals from multiscale edges. IEEE Trans. on Pattern Analysis and Machine Intelligence, 14(7): 710-732, July 1992.

[3] N. Otsu. A threshold selection method from gray-level histograms. IEEE Trans. on Systems, Man, and Cybernetics, SMC-9: 62-66, January 1979.

Acknowledgements

The authors would like to thank the National Science Foundation for support of this research under the Digital Libraries Initiative – Phase II.
