
- Number of slides: 72

Fall 2004, CIS, Temple University
CIS 527: Data Warehousing, Filtering, and Mining
Lecture 11
• Mining Complex Types of Data: Information Retrieval
Lecture slides taken/modified from:
  – Raymond J. Mooney (http://www.cs.utexas.edu/users/mooney/ir-course/)
Reading Material:
  – Download a sample chapter on Text Analysis from “Modeling the Internet and the Web”, by Pierre Baldi, Paolo Frasconi, Padhraic Smyth (http://ibook.ics.uci.edu/)


Information Retrieval (IR)
• The indexing and retrieval of textual documents.
• Searching for pages on the World Wide Web is the most recent “killer app.”
• Concerned firstly with retrieving documents relevant to a query.
• Concerned secondly with retrieving efficiently from large sets of documents.


Typical IR Task
• Given:
  – A corpus of textual natural-language documents.
  – A user query in the form of a textual string.
• Find:
  – A ranked set of documents that are relevant to the query.


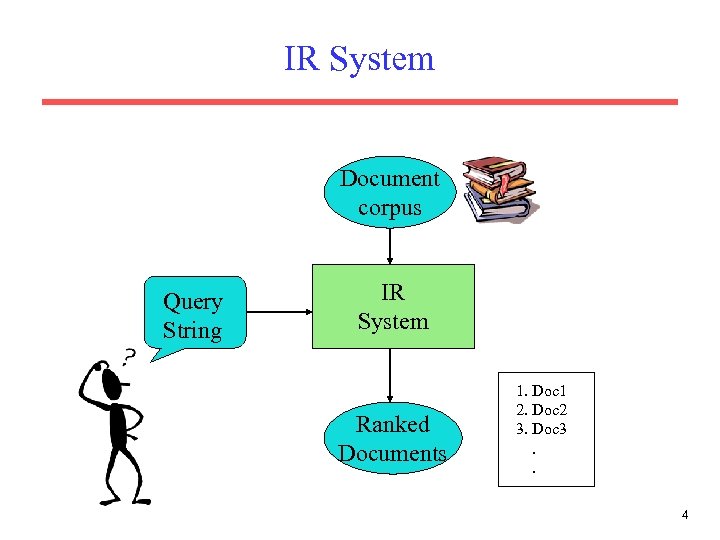

IR System (diagram): a document corpus and a query string are fed into the IR system, which outputs ranked documents (1. Doc 1, 2. Doc 2, 3. Doc 3, …).


Relevance
• Relevance is a subjective judgment and may include:
  – Being on the proper subject.
  – Being timely (recent information).
  – Being authoritative (from a trusted source).
  – Satisfying the goals of the user and his/her intended use of the information (information need).


Keyword Search
• The simplest notion of relevance is that the query string appears verbatim in the document.
• A slightly less strict notion is that the words in the query appear frequently in the document, in any order (bag of words).


Intelligent IR
• Taking into account the meaning of the words used.
• Taking into account the order of words in the query.
• Adapting to the user based on direct or indirect feedback.
• Taking into account the authority of the source.


IR System Components
• Text Operations form index words (tokens).
  – Stopword removal
  – Stemming
• Indexing constructs an inverted index of word-to-document pointers.
• Searching retrieves documents that contain a given query token from the inverted index.
• Ranking scores all retrieved documents according to a relevance metric.


IR System Components (continued)
• User Interface manages interaction with the user:
  – Query input and document output.
  – Relevance feedback.
  – Visualization of results.
• Query Operations transform the query to improve retrieval:
  – Query expansion using a thesaurus.
  – Query transformation using relevance feedback.


Web Search
• Application of IR to HTML documents on the World Wide Web.
• Differences:
  – Must assemble document corpus by spidering the web.
  – Can exploit the structural layout information in HTML (XML).
  – Documents change uncontrollably.
  – Can exploit the link structure of the web.


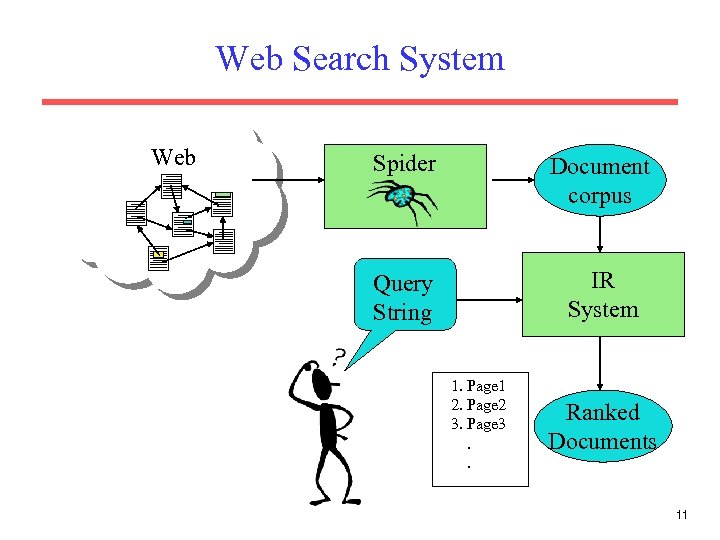

Web Search System (diagram): a web spider assembles the document corpus; the corpus and a query string are fed into the IR system, which outputs ranked documents (1. Page 1, 2. Page 2, 3. Page 3, …).


Other IR-Related Tasks
• Automated document categorization
• Information filtering (spam filtering)
• Information routing
• Automated document clustering
• Recommending information or products
• Information extraction
• Information integration
• Question answering


Related Areas
• Database Management
• Library and Information Science
• Artificial Intelligence
• Natural Language Processing
• Machine Learning


Retrieval Models
• A retrieval model specifies the details of:
  – Document representation
  – Query representation
  – Retrieval function
• Determines a notion of relevance.
• Notion of relevance can be binary or continuous (i.e. ranked retrieval).


Classes of Retrieval Models
• Boolean models (set theoretic)
  – Extended Boolean
• Vector space models (statistical/algebraic)
  – Generalized VS
  – Latent Semantic Indexing
• Probabilistic models


Retrieval Tasks
• Ad hoc retrieval: Fixed document corpus, varied queries.
• Filtering: Fixed query, continuous document stream.
  – User Profile: A model of relatively static preferences.
  – Binary decision of relevant/not-relevant.
• Routing: Same as filtering, but continuously supplies ranked lists rather than binary filtering.


Boolean Model
• A document is represented as a set of keywords.
• Queries are Boolean expressions of keywords, connected by AND, OR, and NOT, including the use of brackets to indicate scope.
  – [[Rio & Brazil] | [Hilo & Hawaii]] & hotel & !Hilton
• Output: Document is relevant or not. No partial matches or ranking.

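The Boolean query from the slide can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the four-document corpus and the helper name `matches` are hypothetical, and keywords are assumed to be lower-cased.

```python
# Toy corpus (hypothetical data): each document is a set of keywords.
docs = {
    "d1": {"rio", "brazil", "hotel"},
    "d2": {"hilo", "hawaii", "hotel", "hilton"},
    "d3": {"hilo", "hawaii", "hotel"},
    "d4": {"rio", "brazil", "beach"},
}


def matches(keywords):
    """Evaluate [[Rio & Brazil] | [Hilo & Hawaii]] & hotel & !Hilton on one document."""
    def has(term):
        return term in keywords

    place = (has("rio") and has("brazil")) or (has("hilo") and has("hawaii"))
    return place and has("hotel") and not has("hilton")


# The output is an unranked set: a document either satisfies the query or it does not.
result = sorted(doc_id for doc_id, keywords in docs.items() if matches(keywords))
print(result)  # ['d1', 'd3']
```

Note that `d2` and `d4` are rejected outright, with no notion of a partial match, which is the rigidity the Boolean model is criticized for.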

Boolean Retrieval Model
• Popular retrieval model because:
  – Easy to understand for simple queries.
  – Clean formalism.
• Boolean models can be extended to include ranking.
• Reasonably efficient implementations possible for normal queries.


Boolean Models Problems
• Very rigid: AND means all; OR means any.
• Difficult to express complex user requests.
• Difficult to control the number of documents retrieved.
  – All matched documents will be returned.
• Difficult to rank output.
  – All matched documents logically satisfy the query.
• Difficult to perform relevance feedback.
  – If a document is identified by the user as relevant or irrelevant, how should the query be modified?


Statistical Retrieval
• Retrieval based on similarity between query and documents.
• Output documents are ranked according to similarity to query.
• Similarity based on occurrence frequencies of keywords in query and document.
• Automatic relevance feedback can be supported:
  – Relevant documents “added” to query.
  – Irrelevant documents “subtracted” from query.


The Vector-Space Model
• A document is typically represented by a bag of words (unordered words with frequencies).
• Assume a vocabulary of t distinct terms.
• Each term, i, in a document or query, j, is given a real-valued weight, $w_{ij}$.
• Both documents and queries are expressed as t-dimensional vectors: $d_j = (w_{1j}, w_{2j}, \ldots, w_{tj})$


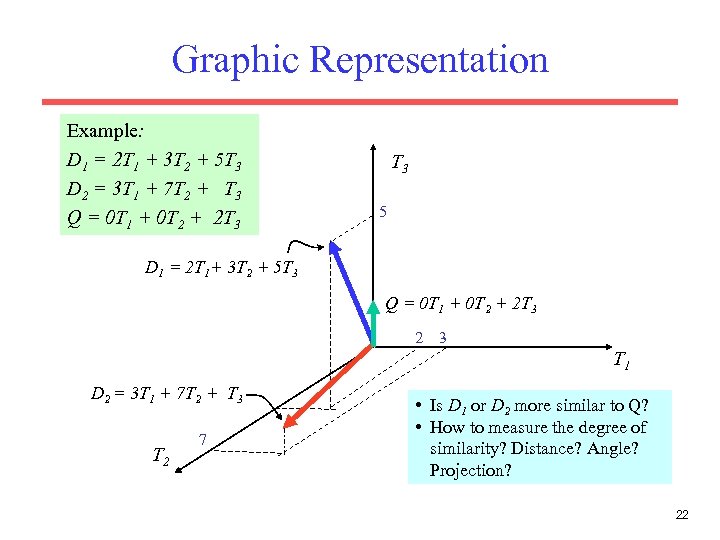

Graphic Representation
Example:
  $D_1 = 2T_1 + 3T_2 + 5T_3$
  $D_2 = 3T_1 + 7T_2 + T_3$
  $Q = 0T_1 + 0T_2 + 2T_3$
(Figure: $D_1$, $D_2$, and $Q$ drawn as vectors in the three-dimensional term space with axes $T_1$, $T_2$, $T_3$.)
• Is $D_1$ or $D_2$ more similar to $Q$?
• How to measure the degree of similarity? Distance? Angle? Projection?


Document Collection
• A collection of n documents can be represented in the vector space model by a term-document matrix.
• An entry in the matrix corresponds to the “weight” of a term in the document; zero means the term has no significance in the document or it simply doesn’t exist in the document.

$$
\begin{array}{c|cccc}
 & T_1 & T_2 & \cdots & T_t \\ \hline
D_1 & w_{11} & w_{21} & \cdots & w_{t1} \\
D_2 & w_{12} & w_{22} & \cdots & w_{t2} \\
\vdots & \vdots & \vdots & & \vdots \\
D_n & w_{1n} & w_{2n} & \cdots & w_{tn}
\end{array}
$$


Term Weights: Term Frequency
• More frequent terms in a document are more important, i.e. more indicative of the topic.
  $f_{ij}$ = frequency of term i in document j
• May want to normalize term frequency (tf) so that it is comparable across the entire corpus, dividing by the frequency of the most frequent term in document j:
  $tf_{ij} = f_{ij} / \max_i\{f_{ij}\}$


Term Weights: Inverse Document Frequency
• Terms that appear in many different documents are less indicative of overall topic.
  $df_i$ = document frequency of term i = number of documents containing term i
  $idf_i$ = inverse document frequency of term i = $\log_2(N / df_i)$ (N: total number of documents)
• An indication of a term’s discrimination power.
• Log used to dampen the effect relative to tf.


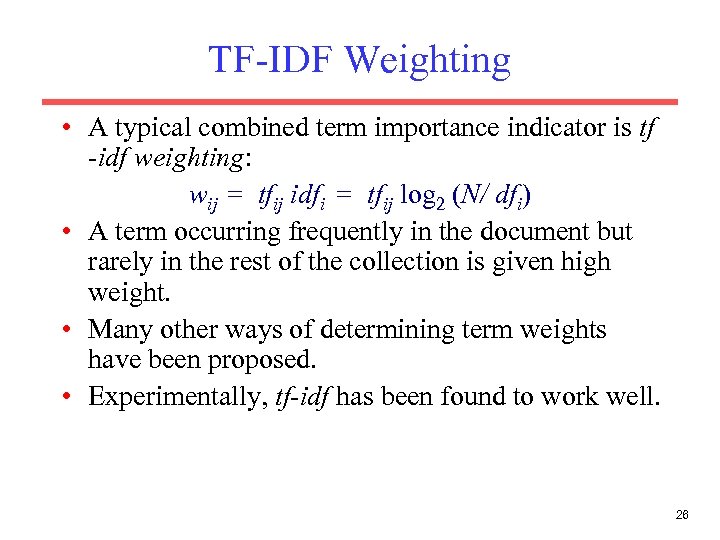

TF-IDF Weighting
• A typical combined term importance indicator is tf-idf weighting:
  $w_{ij} = tf_{ij} \cdot idf_i = tf_{ij} \log_2(N / df_i)$
• A term occurring frequently in the document but rarely in the rest of the collection is given high weight.
• Many other ways of determining term weights have been proposed.
• Experimentally, tf-idf has been found to work well.


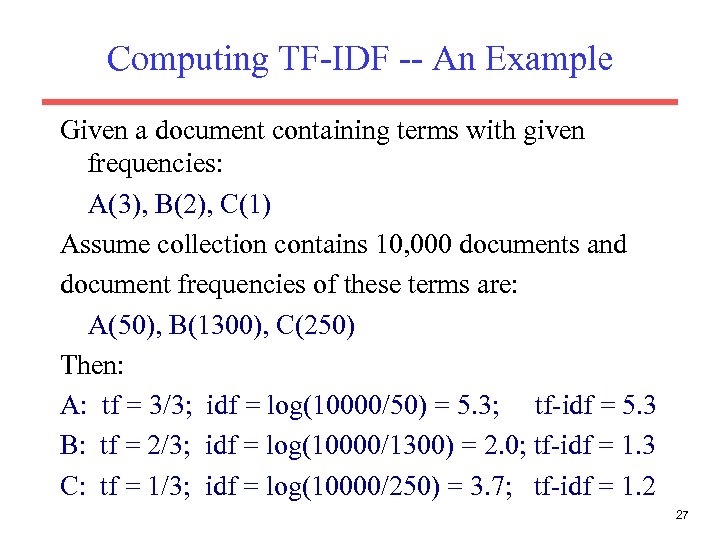

Computing TF-IDF: An Example
Given a document containing terms with given frequencies: A(3), B(2), C(1)
Assume collection contains 10,000 documents and document frequencies of these terms are: A(50), B(1300), C(250)
Then (the idf values here are computed with the natural logarithm rather than $\log_2$):
  A: tf = 3/3; idf = log(10000/50) = 5.3; tf-idf = 5.3
  B: tf = 2/3; idf = log(10000/1300) = 2.0; tf-idf = 1.3
  C: tf = 1/3; idf = log(10000/250) = 3.7; tf-idf = 1.2

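The arithmetic of this example can be reproduced with a short Python sketch. The function name `tf_idf` and its parameters are illustrative assumptions; tf is taken as the term count divided by the largest count in the document, and the logarithm is left as a parameter because the slide's numbers match the natural log while the idf definition uses base 2.

```python
import math


def tf_idf(term_counts, doc_freqs, n_docs, log=math.log):
    """Return {term: tf * idf} with tf = f / max f and idf = log(N / df)."""
    max_f = max(term_counts.values())
    return {
        term: (f / max_f) * log(n_docs / doc_freqs[term])
        for term, f in term_counts.items()
    }


counts = {"A": 3, "B": 2, "C": 1}             # term frequencies in the document
doc_freqs = {"A": 50, "B": 1300, "C": 250}    # document frequencies in the collection

weights = tf_idf(counts, doc_freqs, 10_000)                      # natural log
weights_log2 = tf_idf(counts, doc_freqs, 10_000, log=math.log2)  # base 2

for term in counts:
    print(term, round(weights[term], 2), round(weights_log2[term], 2))
```

With the natural log the weights come out at roughly 5.30, 1.36, and 1.23. The slide's 1.3 for B results from rounding idf to 2.0 before multiplying by 2/3. With base 2 every weight is larger by the same constant factor, so the ranking of the terms is unchanged.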

Query Vector
• Query vector is typically treated as a document and also tf-idf weighted.
• Alternative is for the user to supply weights for the given query terms.


Similarity Measure
• A similarity measure is a function that computes the degree of similarity between two vectors.
• Using a similarity measure between the query and each document:
  – It is possible to rank the retrieved documents in the order of presumed relevance.
  – It is possible to enforce a certain threshold so that the size of the retrieved set can be controlled.


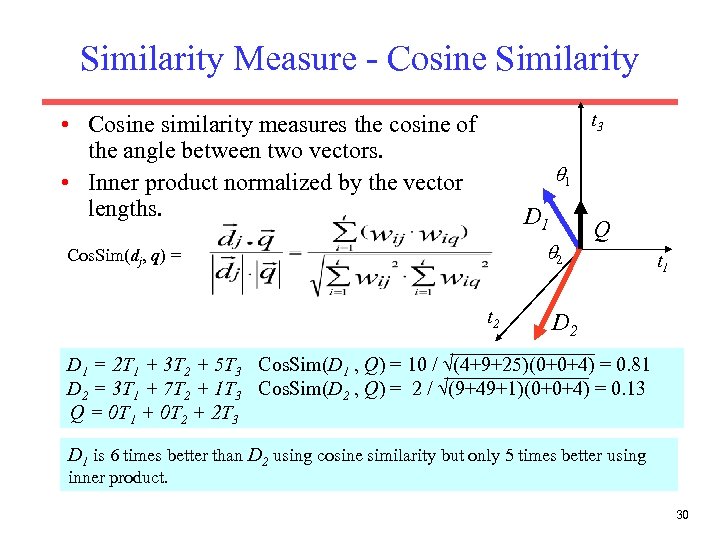

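Similarity Measure - Cosine Similarity
• Cosine similarity measures the cosine of the angle between two vectors.
• Inner product normalized by the vector lengths:

$$\mathrm{CosSim}(d_j, q) = \frac{d_j \cdot q}{|d_j|\,|q|} = \frac{\sum_{i=1}^{t} w_{ij}\, w_{iq}}{\sqrt{\sum_{i=1}^{t} w_{ij}^2}\;\sqrt{\sum_{i=1}^{t} w_{iq}^2}}$$

(Figure: $D_1$, $D_2$, and $Q$ drawn as vectors in the term space with axes $t_1$, $t_2$, $t_3$.)

  $D_1 = 2T_1 + 3T_2 + 5T_3$: $\mathrm{CosSim}(D_1, Q) = 10 / \sqrt{(4+9+25)(0+0+4)} = 0.81$
  $D_2 = 3T_1 + 7T_2 + 1T_3$: $\mathrm{CosSim}(D_2, Q) = 2 / \sqrt{(9+49+1)(0+0+4)} = 0.13$
  $Q = 0T_1 + 0T_2 + 2T_3$

$D_1$ is 6 times better than $D_2$ using cosine similarity but only 5 times better using inner product.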

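A minimal Python sketch of cosine similarity, the inner product divided by the product of the two vector lengths, applied to the D1, D2, and Q vectors of the example. The function name `cos_sim` and the choice to return 0.0 for a zero-length vector are assumptions made for illustration.

```python
import math


def cos_sim(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0  # assumption: treat a zero vector as dissimilar


D1 = (2, 3, 5)
D2 = (3, 7, 1)
Q = (0, 0, 2)

print(round(cos_sim(D1, Q), 2))  # 0.81
print(round(cos_sim(D2, Q), 2))  # 0.13
```

The ratio of the two scores is about 6.2, whereas the raw inner products (10 and 2) differ by a factor of 5, because the normalization penalizes the longer vector D2.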

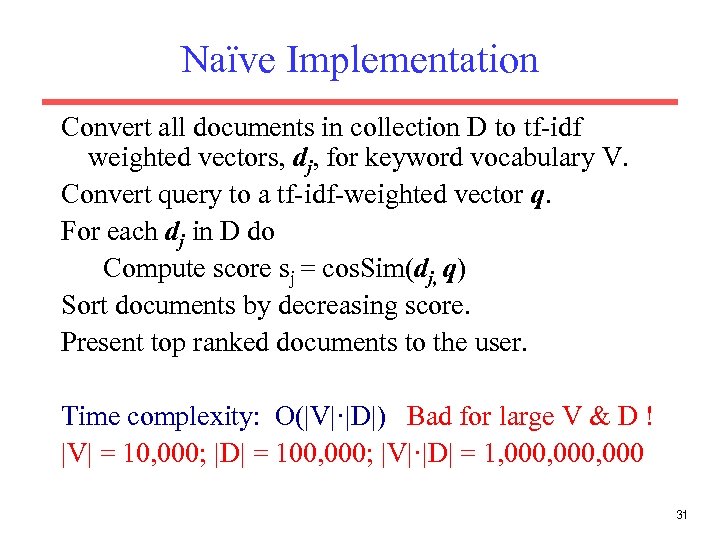

Naïve Implementation
Convert all documents in collection D to tf-idf weighted vectors, $d_j$, for keyword vocabulary V.
Convert query to a tf-idf-weighted vector q.
For each $d_j$ in D do
    Compute score $s_j$ = cosSim($d_j$, q)
Sort documents by decreasing score.
Present top ranked documents to the user.
Time complexity: O(|V|·|D|). Bad for large V & D!
|V| = 10,000; |D| = 100,000; |V|·|D| = 1,000,000,000

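The naïve procedure can be sketched as follows, assuming the documents and the query have already been converted to dense weight vectors over the same vocabulary. The names `naive_rank` and `top_k` are hypothetical; the point is that every document vector is scanned in full for every query, which is where the O(|V|·|D|) cost comes from.

```python
import math


def cos_sim(a, b):
    """Inner product normalized by the vector lengths."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def naive_rank(doc_vectors, query_vector, top_k=10):
    """Score every document against the query, then sort by decreasing score."""
    scored = [
        (cos_sim(vector, query_vector), doc_id)  # one full pass over |V| entries
        for doc_id, vector in doc_vectors.items()
    ]
    scored.sort(key=lambda pair: (-pair[0], pair[1]))
    return [(doc_id, score) for score, doc_id in scored[:top_k]]


ranking = naive_rank({"D1": [2, 3, 5], "D2": [3, 7, 1]}, [0, 0, 2])
print([doc_id for doc_id, _ in ranking])  # ['D1', 'D2']
```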

Text Preprocessing: Simple Tokenizing
• Analyze text into a sequence of discrete tokens (words).
• Sometimes punctuation (e-mail), numbers (1999), and case (Republican vs. republican) can be a meaningful part of a token.
• However, frequently they are not.
• Simplest approach is to ignore all numbers and punctuation and use only case-insensitive unbroken strings of alphabetic characters as tokens.

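The simplest approach described above can be sketched with one regular expression. The function name `tokenize` is illustrative, and the sketch assumes ASCII letters only, which is an additional simplification for English text.

```python
import re


def tokenize(text):
    """Lower-case the text and return maximal runs of ASCII letters."""
    return re.findall(r"[a-z]+", text.lower())


tokens = tokenize("E-mail the Republican in 1999!")
print(tokens)  # ['e', 'mail', 'the', 'republican', 'in']
```

The output shows the cost of the simplification: "E-mail" is split in two, the year is dropped, and "Republican" is no longer distinguishable from "republican".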

Text Preprocessing: Stopwords
• It is typical to exclude high-frequency words (e.g. function words: “a”, “the”, “in”, “to”; pronouns: “I”, “he”, “she”, “it”).
• Stopwords are language dependent. A standard set for English consists of about 500 words.

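A minimal sketch of stopword removal in Python. The stopword set below contains only the eight words quoted on the slide and stands in for a full list of roughly 500 entries; the names `STOPWORDS` and `remove_stopwords` are illustrative.

```python
# Only the examples quoted on the slide; a real list would be far longer.
STOPWORDS = {"a", "the", "in", "to", "i", "he", "she", "it"}


def remove_stopwords(tokens, stopwords=STOPWORDS):
    """Keep the tokens that are not in the stopword set (tokens assumed lower-cased)."""
    return [token for token in tokens if token not in stopwords]


kept = remove_stopwords(["she", "put", "the", "index", "in", "a", "file"])
print(kept)  # ['put', 'index', 'file']
```

Using a set keeps each membership test at constant expected time, which matters when every token of a large corpus is checked.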

Text Preprocessing: Stemming
• Reduce tokens to “root” form of words to recognize morphological variation.
  – “computer”, “computational”, “computation” all reduced to same token “compute”
• Correct morphological analysis is language specific and can be complex.
• Stemming “blindly” strips off known affixes (prefixes and suffixes) in an iterative fashion.

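To illustrate what "blindly" stripping affixes in an iterative fashion means, here is a toy suffix stripper in Python. It is not the Porter algorithm or any published stemmer: the suffix list, the minimum stem length, and the name `toy_stem` are all hypothetical, and prefixes are not handled.

```python
# Hypothetical suffix list, tried in this order on every pass.
SUFFIXES = ("ational", "ation", "er", "al", "ion", "e", "s")


def toy_stem(word, min_stem=3):
    """Repeatedly strip the first matching suffix while enough of the word remains."""
    changed = True
    while changed:
        changed = False
        for suffix in SUFFIXES:
            if word.endswith(suffix) and len(word) - len(suffix) >= min_stem:
                word = word[: -len(suffix)]
                changed = True
                break  # restart the scan on the shortened word
    return word


stems = [toy_stem(w) for w in ("computer", "computational", "computation", "compute")]
print(stems)  # ['comput', 'comput', 'comput', 'comput']
```

Because no dictionary is consulted, the resulting stem "comput" is not an English word, and unrelated words that happen to share a stem after stripping would be conflated.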

Text Preprocessing: Porter Stemmer
• Simple procedure for removing known affixes in English without using a dictionary.
• Can produce unusual stems that are not English words:
  – “computer”, “computational”, “computation” all reduced to same token “comput”
• May conflate (reduce to the same token) words that are actually distinct.
• May not recognize all morphological derivations.


Text Preprocessing: Porter Stemmer Errors
• Errors of “commission” (distinct words conflated to one stem):
  – organization, organ → organ
  – police, policy → polic
  – arm, army → arm
• Errors of “omission” (related words not conflated):
  – cylinder, cylindrical
  – create, creation
  – Europe, European


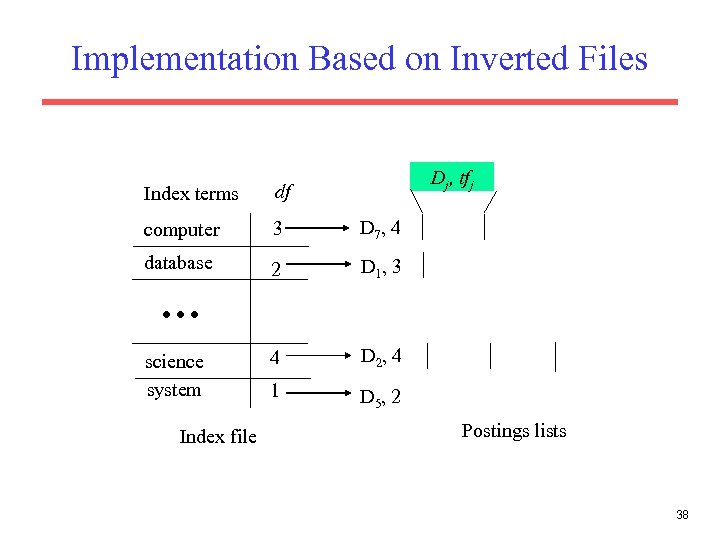

Implementation Issues: Sparse Vectors
• Vocabulary and therefore dimensionality of vectors can be very large, $\sim 10^4$.
• However, most documents and queries do not contain most words, so vectors are sparse (i.e. most entries are 0).
• Need efficient methods for storing and computing with sparse vectors.
• In practice, document vectors are not stored directly; an inverted organization provides much better efficiency.


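Implementation Based on Inverted Files
(Figure: an index file lists each index term with its document frequency df and a pointer to its postings list of (Dj, tfj) entries; only the first posting of each list is recoverable here.)
• computer: df = 3; postings: (D7, 4), …
• database: df = 2; postings: (D1, 3), …
• science: df = 4; postings: (D2, 4), …
• system: df = 1; postings: (D5, 2)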

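One way to picture the inverted file is as a dictionary from each index term to its postings list, with df equal to the length of that list. The sketch below builds such a structure in Python from a toy tokenized corpus; the corpus and the name `build_inverted_index` are hypothetical and unrelated to the documents in the figure.

```python
from collections import Counter, defaultdict


def build_inverted_index(docs):
    """Map each term to a postings list of (doc_id, tf) pairs."""
    index = defaultdict(list)
    for doc_id, tokens in docs.items():
        for term, tf in sorted(Counter(tokens).items()):
            index[term].append((doc_id, tf))
    return dict(index)


# Hypothetical, already tokenized documents.
docs = {
    "D1": ["database", "system", "database"],
    "D2": ["computer", "science", "computer"],
    "D3": ["science", "database"],
}

index = build_inverted_index(docs)
df = {term: len(postings) for term, postings in index.items()}

print(index["database"])  # [('D1', 2), ('D3', 1)]
print(df["database"])     # 2
```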

Retrieval with an Inverted Index
• Tokens that are not in both the query and the document do not affect cosine similarity.
  – Product of token weights is zero and does not contribute to the dot product.
• Usually the query is fairly short, and therefore its vector is extremely sparse.
• Use inverted index to find the limited set of documents that contain at least one of the query words.


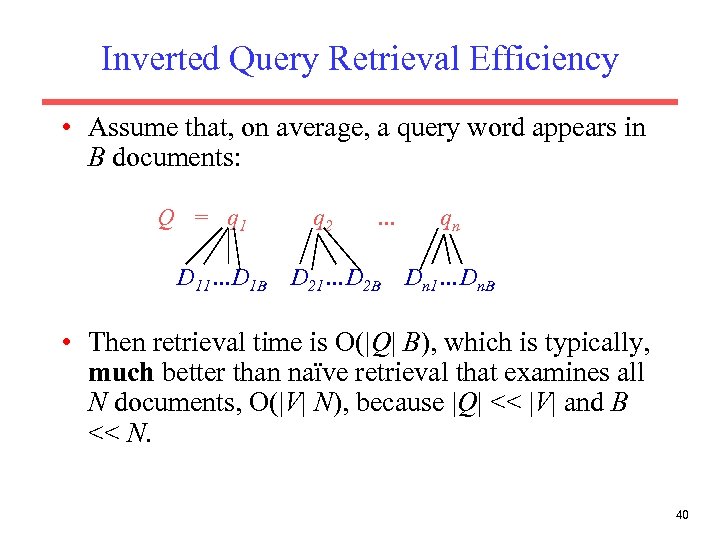

Inverted Query Retrieval Efficiency
• Assume that, on average, a query word appears in B documents:
  Q = q1, q2, …, qn, where each query word qi has a postings list Di1 … DiB.
• Then retrieval time is O(|Q| B), which is typically much better than naïve retrieval that examines all N documents, O(|V| N), because |Q| << |V| and B << N.

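The O(|Q| B) behaviour can be seen in a short Python sketch that walks only the postings lists of the query terms and accumulates partial dot products per document. The index contents, the weights, and the name `retrieve` are hypothetical. For brevity the scores are plain dot products; a cosine score would additionally divide by document vector lengths, which are assumed to be precomputed elsewhere.

```python
from collections import defaultdict


def retrieve(index, query_weights):
    """Accumulate dot-product scores using only the postings of the query terms.

    index: term -> list of (doc_id, weight); query_weights: term -> weight.
    """
    scores = defaultdict(float)
    for term, q_weight in query_weights.items():        # |Q| iterations
        for doc_id, d_weight in index.get(term, []):    # about B postings each
            scores[doc_id] += q_weight * d_weight
    return sorted(scores.items(), key=lambda item: (-item[1], item[0]))


# Hypothetical weighted postings lists.
index = {
    "computer": [("D2", 2.0), ("D7", 4.0)],
    "database": [("D1", 3.0), ("D2", 1.0)],
    "science": [("D5", 2.0)],
}

ranking = retrieve(index, {"computer": 1.0, "database": 0.5})
print(ranking)  # [('D7', 4.0), ('D2', 2.5), ('D1', 1.5)]
```

Document D5 is never touched because it shares no term with the query, which is exactly the saving over scoring every document.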

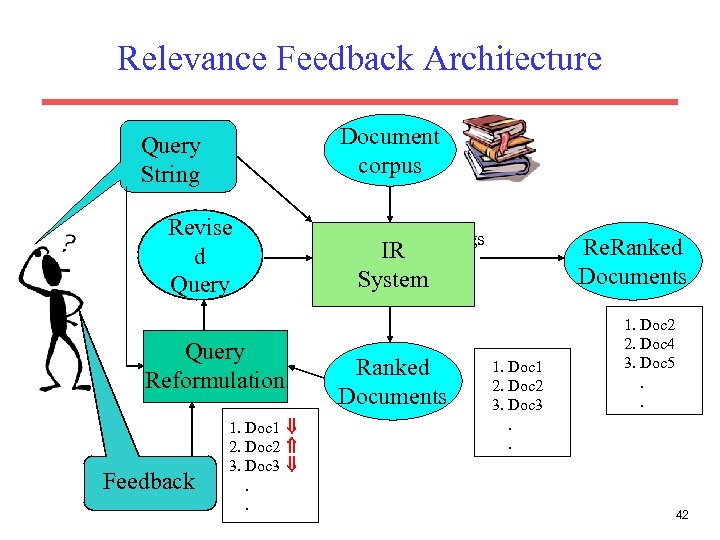

Relevance Feedback
• After initial retrieval results are presented, allow the user to provide feedback on the relevance of one or more of the retrieved documents.
• Use this feedback information to reformulate the query.
• Produce new results based on reformulated query.
• Allows more interactive, multi-pass process.


Relevance Feedback Architecture (diagram): the query string and the document corpus go into the IR system, which returns ranked documents (1. Doc 1, 2. Doc 2, 3. Doc 3, …); the user's feedback on these rankings drives query reformulation, and the revised query produces re-ranked documents (1. Doc 2, 2. Doc 4, 3. Doc 5, …).


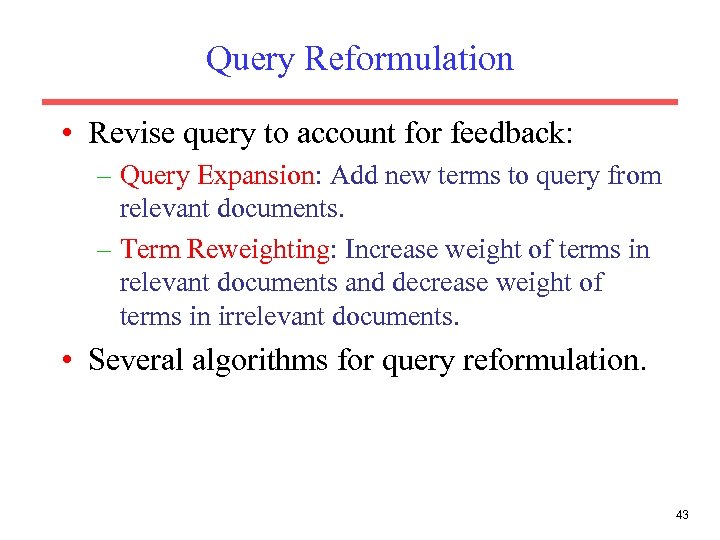

Query Reformulation
• Revise query to account for feedback:
  – Query Expansion: Add new terms to query from relevant documents.
  – Term Reweighting: Increase weight of terms in relevant documents and decrease weight of terms in irrelevant documents.
• Several algorithms for query reformulation.


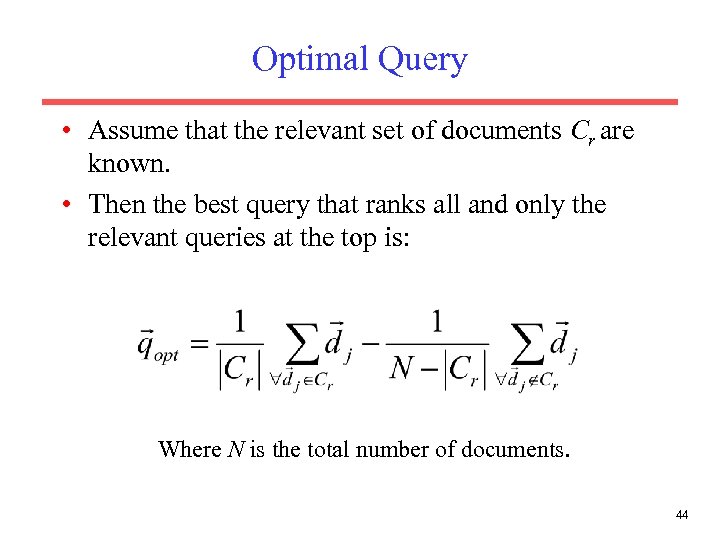

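Optimal Query
• Assume that the relevant set of documents $C_r$ is known.
• Then the best query that ranks all and only the relevant documents at the top is:

$$q_{opt} = \frac{1}{|C_r|} \sum_{d_j \in C_r} d_j \;-\; \frac{1}{N - |C_r|} \sum_{d_j \notin C_r} d_j$$

where N is the total number of documents.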


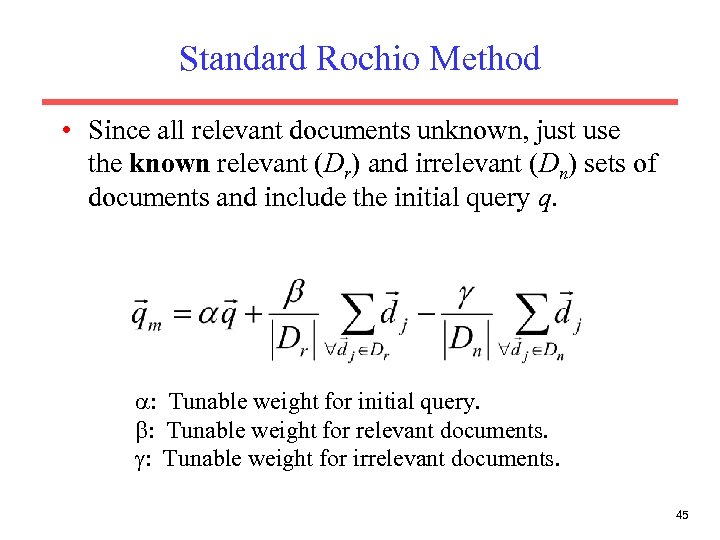

Standard Rocchio Method
• Since the full set of relevant documents is unknown, just use the known relevant ($D_r$) and irrelevant ($D_n$) sets of documents and include the initial query q:

$$q_m = \alpha\, q + \frac{\beta}{|D_r|} \sum_{d_j \in D_r} d_j \;-\; \frac{\gamma}{|D_n|} \sum_{d_j \in D_n} d_j$$

  α: Tunable weight for initial query.
  β: Tunable weight for relevant documents.
  γ: Tunable weight for irrelevant documents.

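A minimal Python sketch of a Rocchio-style update, assuming the usual form in which the new query is α times the initial query, plus β times the mean of the known relevant vectors, minus γ times the mean of the known irrelevant vectors. The function names and the weight values in the usage line are illustrative choices, not recommendations from the slides.

```python
def centroid(vectors, dim):
    """Component-wise mean of a list of vectors (zero vector if the list is empty)."""
    if not vectors:
        return [0.0] * dim
    return [sum(v[i] for v in vectors) / len(vectors) for i in range(dim)]


def rocchio(query, relevant, irrelevant, alpha, beta, gamma):
    """Return alpha*q + beta*mean(relevant) - gamma*mean(irrelevant)."""
    dim = len(query)
    rel = centroid(relevant, dim)
    irr = centroid(irrelevant, dim)
    return [alpha * query[i] + beta * rel[i] - gamma * irr[i] for i in range(dim)]


# Q = (0, 0, 2); the user marks D1 = (2, 3, 5) relevant and D2 = (3, 7, 1) irrelevant.
new_query = rocchio(
    [0, 0, 2], relevant=[[2, 3, 5]], irrelevant=[[3, 7, 1]],
    alpha=1.0, beta=0.5, gamma=0.25,
)
print(new_query)  # [0.25, -0.25, 4.25]
```

The revised query gains weight on terms that appear in the relevant document. Negative weights such as the second component are often clipped to zero in practice, which this sketch deliberately does not do so that it stays close to the formula.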

Why Is Feedback Not Widely Used?
• Users are sometimes reluctant to provide explicit feedback.
• Results in long queries that require more computation to retrieve, and search engines process lots of queries and allow little time for each one.
• Makes it harder to understand why a particular document was retrieved.


Improving IR with Thesaurus
• A thesaurus provides information on synonyms and semantically related words and phrases.
• Example: physician
  syn: ||croaker, doctor, MD, medical, mediciner, medico, ||sawbones
  rel: medic, general practitioner, surgeon


Thesaurus-based Query Expansion
• For each term, t, in a query, expand the query with synonyms and related words of t from thesaurus.
• May weight added terms less than original query terms.
• Generally increases recall.
• May significantly decrease precision, particularly with ambiguous terms.
  – “interest rate” → “interest rate fascinate evaluate”

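The expansion step can be sketched in Python with a tiny hand-written thesaurus. The dictionary below is hypothetical and holds only the two entries needed to reproduce the “interest rate” example; the down-weighting factor of 0.5 for added terms is likewise an arbitrary illustrative choice.

```python
# Hypothetical toy thesaurus: term -> related words.
THESAURUS = {
    "interest": ["fascinate"],
    "rate": ["evaluate"],
}


def expand_query(terms, thesaurus=THESAURUS, added_weight=0.5):
    """Return {term: weight}; original terms get 1.0, added terms get added_weight."""
    weights = {term: 1.0 for term in terms}
    for term in terms:
        for related in thesaurus.get(term, []):
            # setdefault never lowers the weight of an original query term.
            weights.setdefault(related, added_weight)
    return weights


expanded = expand_query(["interest", "rate"])
print(expanded)
# {'interest': 1.0, 'rate': 1.0, 'fascinate': 0.5, 'evaluate': 0.5}
```

The example also shows the precision problem: both added words belong to senses of "interest" and "rate" that the financial query did not intend.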

Example of Thesaurus: WordNet
• A more detailed database of semantic relationships between English words.
• Developed by famous cognitive psychologist George Miller and a team at Princeton University.
• About 144,000 English words.
• Nouns, adjectives, verbs, and adverbs grouped into about 109,000 synonym sets called synsets.


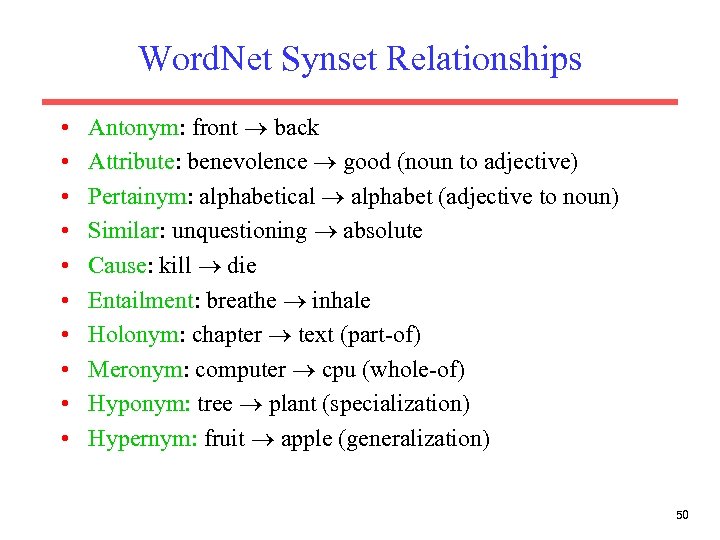

WordNet Synset Relationships
• Antonym: front → back
• Attribute: benevolence → good (noun to adjective)
• Pertainym: alphabetical → alphabet (adjective to noun)
• Similar: unquestioning → absolute
• Cause: kill → die
• Entailment: breathe → inhale
• Holonym: chapter → text (part-of)
• Meronym: computer → cpu (whole-of)
• Hyponym: tree → plant (specialization)
• Hypernym: fruit → apple (generalization)


Statistical Thesaurus
• Existing human-developed thesauri are not easily available in all languages.
• Human thesauri are limited in the type and range of synonymy and semantic relations they represent.
• Semantically related terms can be discovered from statistical analysis of corpora.


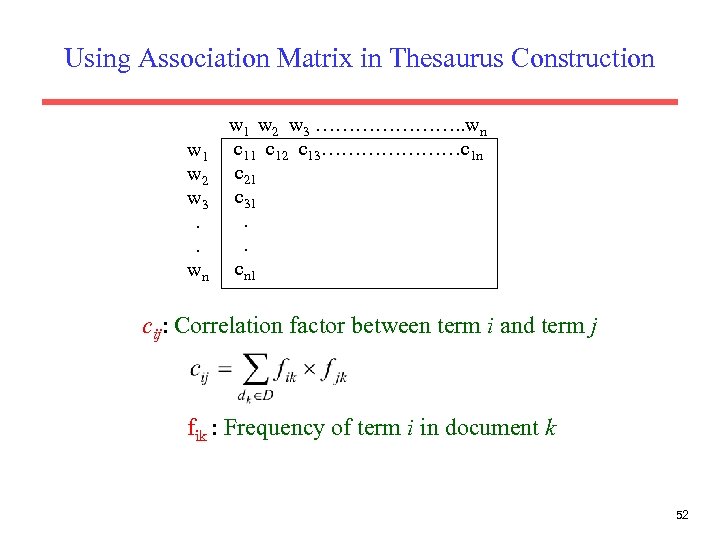

Using Association Matrix in Thesaurus Construction

$$
\begin{array}{c|cccc}
 & w_1 & w_2 & \cdots & w_n \\ \hline
w_1 & c_{11} & c_{12} & \cdots & c_{1n} \\
w_2 & c_{21} & & & \\
\vdots & \vdots & & & \\
w_n & c_{n1} & & &
\end{array}
$$

$c_{ij}$: Correlation factor between term i and term j
$f_{ik}$: Frequency of term i in document k

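The formula for the correlation factor did not survive in the slide text. A common unnormalized choice, assumed here, is to sum the products of the two terms' frequencies over all documents, $c_{ij} = \sum_k f_{ik} f_{jk}$. The Python sketch below computes the matrix under that assumption; the frequency table and the name `association_matrix` are hypothetical.

```python
def association_matrix(freqs):
    """freqs[i][k] is the frequency of term i in document k.

    Returns c with c[i][j] = sum over k of freqs[i][k] * freqs[j][k] (assumed form).
    """
    n = len(freqs)
    return [
        [sum(a * b for a, b in zip(freqs[i], freqs[j])) for j in range(n)]
        for i in range(n)
    ]


# Hypothetical frequencies: rows are terms w1..w3, columns are documents d1..d3.
freqs = [
    [2, 0, 1],
    [1, 1, 0],
    [0, 3, 1],
]

c = association_matrix(freqs)
for row in c:
    print(row)
# [5, 2, 1]
# [2, 2, 3]
# [1, 3, 10]
```

The matrix is symmetric, and a large off-diagonal entry such as c[1][2] = 3 suggests that the two terms tend to occur in the same documents, which is the signal a statistical thesaurus uses to propose related terms.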

IR Performance Evaluation
• There are many retrieval models/algorithms/systems; which one is the best?
• What is the best component for:
  – Ranking function (dot-product, cosine, …)
  – Term selection (stopword removal, stemming, …)
  – Term weighting (TF, TF-IDF, …)
• How far down the ranked list will a user need to look to find some/all relevant documents?

Difficulties in Evaluating IR Systems
• Effectiveness is related to the relevancy of retrieved items.
• Relevancy is not typically binary but continuous.
• Even if relevancy is binary, it can be a difficult judgment to make.
• Relevancy, from a human standpoint, is:
  – Subjective: Depends upon a specific user’s judgment.
  – Situational: Relates to user’s current needs.
  – Cognitive: Depends on human perception and behavior.
  – Dynamic: Changes over time.

Human Labeled Corpora (Gold Standard)
• Start with a corpus of documents.
• Collect a set of queries for this corpus.
• Have one or more human experts exhaustively label the relevant documents for each query.
• Typically assumes binary relevance judgments.
• Requires considerable human effort for large document/query corpora.

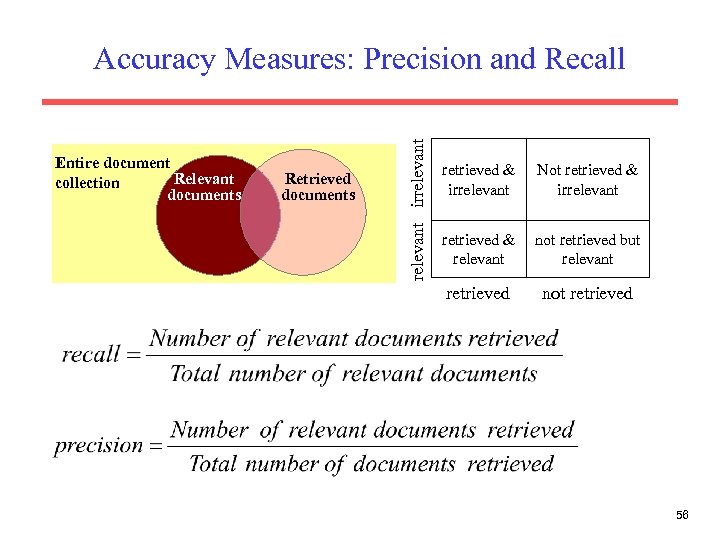

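Accuracy Measures: Precision and Recall

Figure: the entire document collection, partitioned by relevant documents and retrieved documents.

|               | Relevant                   | Irrelevant                 |
|---------------|----------------------------|----------------------------|
| Retrieved     | retrieved & relevant       | retrieved & irrelevant     |
| Not retrieved | not retrieved but relevant | not retrieved & irrelevant |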

Precision and Recall
• Precision
  – The ability to retrieve top-ranked documents that are mostly relevant.
• Recall
  – The ability of the search to find all of the relevant items in the corpus.
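These two measures are usually computed as set ratios: precision is the fraction of retrieved documents that are relevant, and recall is the fraction of relevant documents that were retrieved. A minimal sketch under that standard definition; the function name and document IDs are hypothetical.

```python
def precision_recall(retrieved, relevant):
    """Return (precision, recall) for one query, treating both inputs as sets."""
    retrieved, relevant = set(retrieved), set(relevant)
    hits = len(retrieved & relevant)  # retrieved & relevant
    precision = hits / len(retrieved) if retrieved else 0.0
    recall = hits / len(relevant) if relevant else 0.0
    return precision, recall

# Hypothetical query: 4 documents retrieved, 3 documents judged relevant.
example_precision, example_recall = precision_recall(
    retrieved=["d1", "d2", "d3", "d4"],
    relevant=["d1", "d3", "d5"],
)
```

Here two of the four retrieved documents are relevant (precision 0.5), and two of the three relevant documents were found (recall about 0.67).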

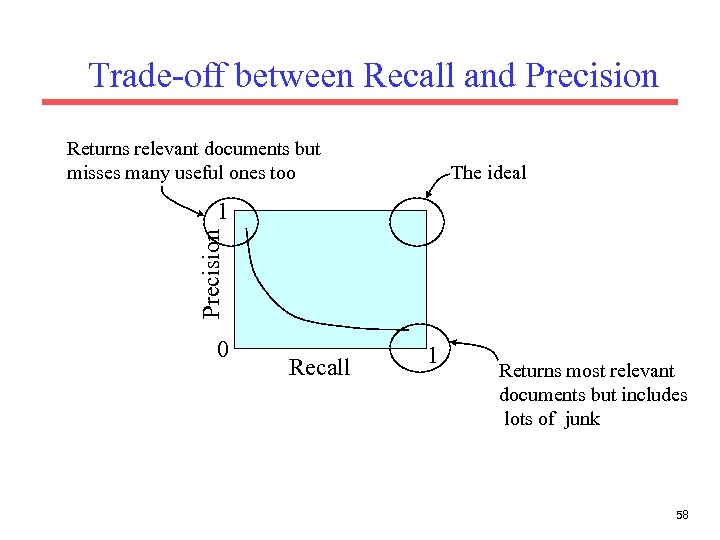

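Trade-off between Recall and Precision

Figure: precision (0 to 1, vertical axis) plotted against recall (0 to 1, horizontal axis).
• High precision, low recall: returns relevant documents but misses many useful ones too.
• High recall, low precision: returns most relevant documents but includes lots of junk.
• The ideal: both precision and recall equal to 1 (upper right-hand corner).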

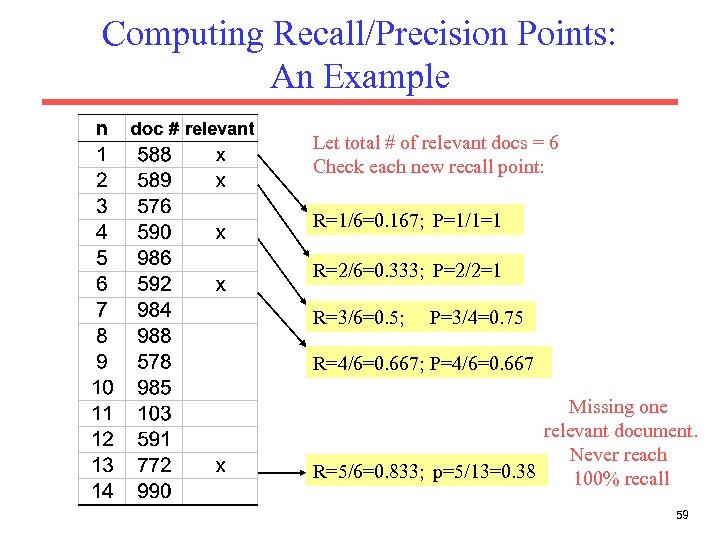

Computing Recall/Precision Points: An Example
Let total # of relevant docs = 6. Check each new recall point:
• R = 1/6 = 0.167; P = 1/1 = 1
• R = 2/6 = 0.333; P = 2/2 = 1
• R = 3/6 = 0.5; P = 3/4 = 0.75
• R = 4/6 = 0.667; P = 4/6 = 0.667
• R = 5/6 = 0.833; P = 5/13 = 0.38
Missing one relevant document. Never reach 100% recall.
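The points above can be reproduced by walking down the ranked list and recording recall and precision each time a relevant document appears. This is a sketch, not the slide's own code: the ranked list was an image, so the relevant ranks (1, 2, 4, 6, 13) are inferred from the precision denominators, and the list is assumed to stop at rank 13.

```python
def recall_precision_points(ranked_relevance, total_relevant):
    """Return a (recall, precision) pair at every rank holding a relevant document.

    ranked_relevance: list of booleans, True where the document at that rank is relevant.
    total_relevant: number of relevant documents in the whole corpus.
    """
    points = []
    hits = 0
    for rank, is_relevant in enumerate(ranked_relevance, start=1):
        if is_relevant:
            hits += 1
            points.append((hits / total_relevant, hits / rank))
    return points

# Ranks inferred from the worked example (assumption, see above).
relevant_ranks = {1, 2, 4, 6, 13}
ranking = [rank in relevant_ranks for rank in range(1, 14)]
points = recall_precision_points(ranking, total_relevant=6)
```

Because only five of the six relevant documents appear in the ranking, the last recall value is 5/6 and the curve never reaches a recall of 1.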

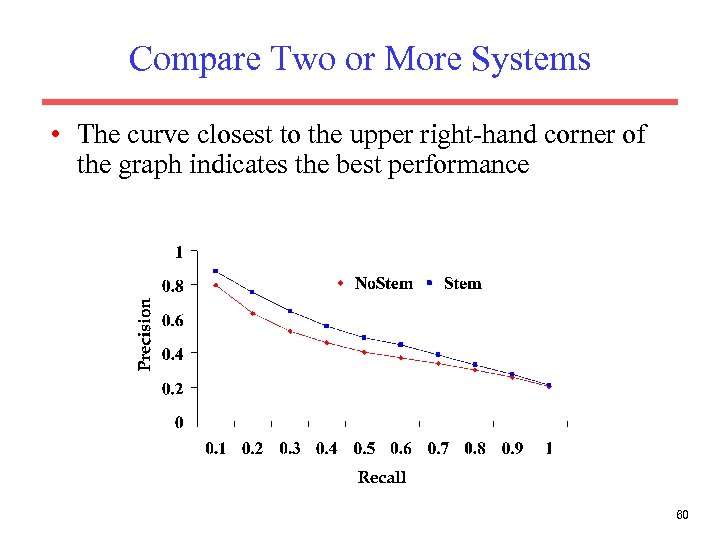

Compare Two or More Systems
• The curve closest to the upper right-hand corner of the graph indicates the best performance.

Other IR Performance Factors to Consider
• User effort: Work required from the user in formulating queries, conducting the search, and screening the output.
• Response time: Time interval between receipt of a user query and the presentation of system responses.
• Form of presentation: Influence of search output format on the user’s ability to utilize the retrieved materials.
• Collection coverage: Extent to which any/all relevant items are included in the document corpus.

Statistical Properties of Text
• How is the frequency of different words distributed?
• How fast does vocabulary size grow with the size of a corpus?
• Such factors affect the performance of information retrieval and can be used to select appropriate term weights and other aspects of an IR system.

About Word Frequency
• A few words are very common.
  – The 2 most frequent words (e.g. “the”, “of”) can account for about 10% of word occurrences.
• Most words are very rare.
  – Half the distinct words in a corpus appear only once; these are called hapax legomena (Greek for “read only once”).
• This is called a “heavy-tailed” distribution, since most of the probability mass is in the “tail”.
• Zipf’s law: $f \propto 1/r$, where $f$ is the word frequency and $r$ is the word rank.
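A rank-frequency table makes these claims easy to check on any corpus: under Zipf's law the product of rank and frequency stays roughly constant. A minimal sketch, assuming whitespace tokenization; the function name and the toy sentence are illustrative, and a corpus this small cannot show the law itself.

```python
from collections import Counter

def rank_frequency(tokens):
    """Return (rank, word, frequency) triples, most frequent word first."""
    counts = Counter(tokens)
    return [
        (rank, word, freq)
        for rank, (word, freq) in enumerate(counts.most_common(), start=1)
    ]

toy_tokens = "the cat and the dog and the bird".split()
toy_table = rank_frequency(toy_tokens)

# Hapax legomena: words that occur exactly once.
toy_hapax = [word for _, word, freq in toy_table if freq == 1]
```

On a real corpus, printing `rank * freq` for each row (or plotting log frequency against log rank) gives a quick visual test of how closely the data follows the law.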

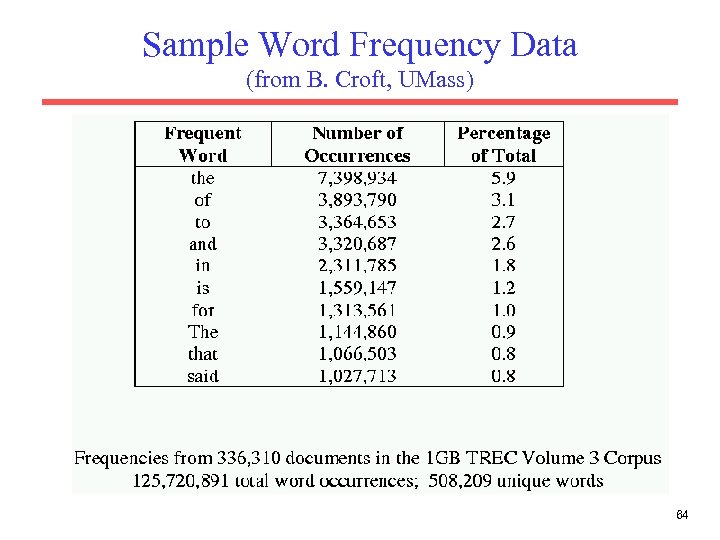

Sample Word Frequency Data (from B. Croft, UMass)

Zipf’s Law Impact on IR
• Good news: Stopwords account for a large fraction of text, so eliminating them greatly reduces inverted-index storage costs.
• Bad news: Most words are extremely rare, so gathering sufficient data for meaningful statistical analysis (e.g. correlation analysis for query expansion) is difficult.

Vocabulary Growth
• How does the size of the overall vocabulary (number of unique words) grow with the size of the corpus?
• This determines how the size of the inverted index will scale with the size of the corpus.
• The vocabulary is not really upper-bounded, due to proper names, typos, etc.
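One way to answer the first question empirically is to count unique words as the corpus is read token by token. The sketch below assumes whitespace tokenization and a hypothetical sampling interval `step`; it only measures the growth curve and does not fit any particular model to it.

```python
def vocabulary_growth(tokens, step):
    """Return (tokens_read, vocabulary_size) pairs sampled every `step` tokens."""
    seen = set()
    curve = []
    for position, token in enumerate(tokens, start=1):
        seen.add(token)
        if position % step == 0:
            curve.append((position, len(seen)))
    return curve

toy_stream = "a b a c b d a e".split()
toy_curve = vocabulary_growth(toy_stream, step=2)
```

On real text the curve keeps rising but flattens, since new words (proper names, typos) keep arriving at a decreasing rate.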

Enhancing IR with Metadata
• Information about a document that may not be a part of the document itself (data about data).
• Descriptive metadata is external to the meaning of the document:
  – Author
  – Title
  – Source (book, magazine, newspaper, journal)
  – Date
  – ISBN
  – Publisher
  – Length

Enhancing IR with Metadata (cont)
• Semantic metadata concerns the content:
  – Abstract
  – Keywords
  – Subject Codes
    • Library of Congress
    • Dewey Decimal
    • UMLS (Unified Medical Language System)
• Subject terms may come from specific ontologies (hierarchical taxonomies of standardized semantic terms).

Enhancing IR with Metadata: RDF Format
• Resource Description Framework.
• XML-compatible metadata format.
• New standard for web metadata.
  – Content description
  – Collection description
  – Privacy information
  – Intellectual property rights (e.g. copyright)
  – Content ratings
  – Digital signatures for authority
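To make the format concrete, the sketch below serializes descriptive metadata as RDF/XML using Python's standard `xml.etree.ElementTree`. The slides do not name a vocabulary, so the Dublin Core element set (`dc:title`, `dc:creator`, `dc:date`) is an assumption here, and the `describe` helper and the `example.org` URL are hypothetical.

```python
import xml.etree.ElementTree as ET

RDF_NS = "http://www.w3.org/1999/02/22-rdf-syntax-ns#"
DC_NS = "http://purl.org/dc/elements/1.1/"  # Dublin Core: assumed vocabulary
ET.register_namespace("rdf", RDF_NS)
ET.register_namespace("dc", DC_NS)

def describe(resource_url, metadata):
    """Return an RDF/XML string describing one resource.

    metadata maps Dublin Core element names (e.g. "title") to string values.
    """
    root = ET.Element(f"{{{RDF_NS}}}RDF")
    description = ET.SubElement(
        root, f"{{{RDF_NS}}}Description", {f"{{{RDF_NS}}}about": resource_url}
    )
    for field, value in metadata.items():
        ET.SubElement(description, f"{{{DC_NS}}}{field}").text = value
    return ET.tostring(root, encoding="unicode")

example_rdf = describe(
    "http://example.org/doc1",  # hypothetical resource
    {"title": "Information Retrieval", "creator": "A. Author", "date": "2004"},
)
```

An IR system could index such fields separately from the body text, so that a query can be restricted to, say, a given author or date range.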

Beyond Queries: Text Categorization
• Given:
  – A set of training documents $D = \{d_1, d_2, \ldots, d_n\}$, each assigned to one category from a set of categories $C = \{c_1, c_2, \ldots, c_k\}$.
• Learn how to determine:
  – The category of a new document $d$.
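The slide does not prescribe a learning method. As one possible instance of this setup, the sketch below trains a multinomial Naive Bayes classifier with add-one smoothing; the choice of algorithm, the whitespace tokenization, the function names, and the toy spam/ham data are all assumptions made for illustration.

```python
import math
from collections import Counter, defaultdict

def train(labeled_docs):
    """labeled_docs: iterable of (text, category) pairs. Returns the model tuple."""
    class_doc_counts = Counter()
    term_counts = defaultdict(Counter)
    vocabulary = set()
    for text, category in labeled_docs:
        tokens = text.lower().split()
        class_doc_counts[category] += 1
        term_counts[category].update(tokens)
        vocabulary.update(tokens)
    return class_doc_counts, term_counts, vocabulary

def classify(text, model):
    """Return the category with the highest log posterior for `text`."""
    class_doc_counts, term_counts, vocabulary = model
    total_docs = sum(class_doc_counts.values())
    best_category, best_score = None, -math.inf
    for category, doc_count in class_doc_counts.items():
        score = math.log(doc_count / total_docs)  # log prior
        total_terms = sum(term_counts[category].values())
        for token in text.lower().split():
            if token not in vocabulary:
                continue  # ignore words never seen in training
            count = term_counts[category][token]
            # Add-one (Laplace) smoothing avoids log(0) for unseen pairs.
            score += math.log((count + 1) / (total_terms + len(vocabulary)))
        if score > best_score:
            best_category, best_score = category, score
    return best_category

toy_training = [
    ("cheap pills buy now", "spam"),
    ("win money now", "spam"),
    ("meeting agenda for monday", "ham"),
    ("project meeting notes", "ham"),
]
toy_model = train(toy_training)
toy_label_a = classify("buy cheap money", toy_model)
toy_label_b = classify("monday meeting", toy_model)
```

Here $D$ is `toy_training`, $C$ is {spam, ham}, and `classify` plays the role of the learned function that assigns a category to a new document $d$.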

Beyond Queries: Text Categorization
• Assigning documents to a fixed set of categories.
• Applications:
  – Web pages
    • Recommending
    • Yahoo-like classification
  – Newsgroup messages
    • Recommending
    • Spam filtering
  – News articles
    • Personalized newspaper
  – Email messages
    • Routing
    • Prioritizing
    • Spam filtering

Beyond Queries: Text Clustering
• Hierarchical and K-Means clustering have been applied to text in a straightforward way.
• Typically use normalized, TF/IDF-weighted vectors and cosine similarity.
• Optimize computations for sparse vectors.
• Applications:
  – During retrieval, add other documents in the same cluster as the initial retrieved documents to improve recall.
  – Clustering of results of retrieval to present more organized results to the user.
  – Automated production of hierarchical taxonomies of documents for browsing purposes (e.g. Yahoo).
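The similarity computation underlying both clustering methods can be sketched with sparse vectors stored as dictionaries. The weighting used here (raw term frequency times log(N/df), then length normalization) is one common TF-IDF variant and an assumption, as are the function names and toy documents; the clustering loop itself is left out.

```python
import math
from collections import Counter

def tfidf_vectors(docs):
    """Return one length-normalized sparse TF-IDF vector (a dict) per document."""
    tokenized = [doc.lower().split() for doc in docs]
    n_docs = len(tokenized)
    # Document frequency: number of documents containing each term.
    doc_freq = Counter(term for tokens in tokenized for term in set(tokens))
    vectors = []
    for tokens in tokenized:
        tf = Counter(tokens)
        weights = {t: f * math.log(n_docs / doc_freq[t]) for t, f in tf.items()}
        norm = math.sqrt(sum(w * w for w in weights.values()))
        if norm == 0:
            vectors.append({})
        else:
            # Zero-weight terms are dropped to keep the vector sparse.
            vectors.append({t: w / norm for t, w in weights.items() if w > 0})
    return vectors

def cosine(u, v):
    """Cosine similarity of two unit-length sparse vectors (their dot product)."""
    if len(u) > len(v):
        u, v = v, u  # iterate over the shorter vector only
    return sum(w * v[t] for t, w in u.items() if t in v)

toy_docs = ["apple banana fruit", "banana fruit salad", "car engine wheel"]
toy_vectors = tfidf_vectors(toy_docs)
sim_related = cosine(toy_vectors[0], toy_vectors[1])
sim_unrelated = cosine(toy_vectors[0], toy_vectors[2])
```

Because the vectors are normalized once up front, each similarity is a single sparse dot product that touches only the terms of the shorter document, which is the kind of optimization the slide refers to.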
