3f2e037294f0acfca453150e8eb01af1.ppt

- Number of slides: 20

Extracting Knowledge-Bases from Machine-Readable Dictionaries: Have We Wasted Our Time?

Nancy Ide and Jean Veronis, Proc. KB&KB’93 Workshop, 1993, pp. 257-266
http://www.cs.vassar.edu/faculty/ide/pubs.html

As (mis-)interpreted by Peter Clark

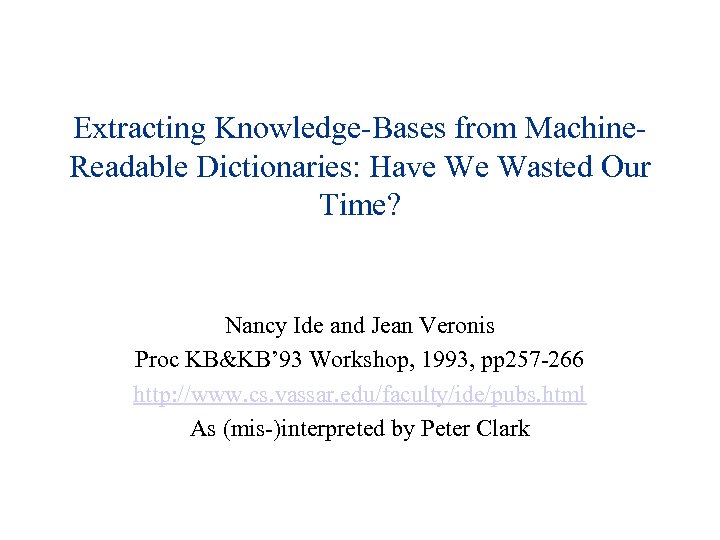

The Postulates of MRD Work

- P1: MRDs contain information that is useful for NLP, e.g.:

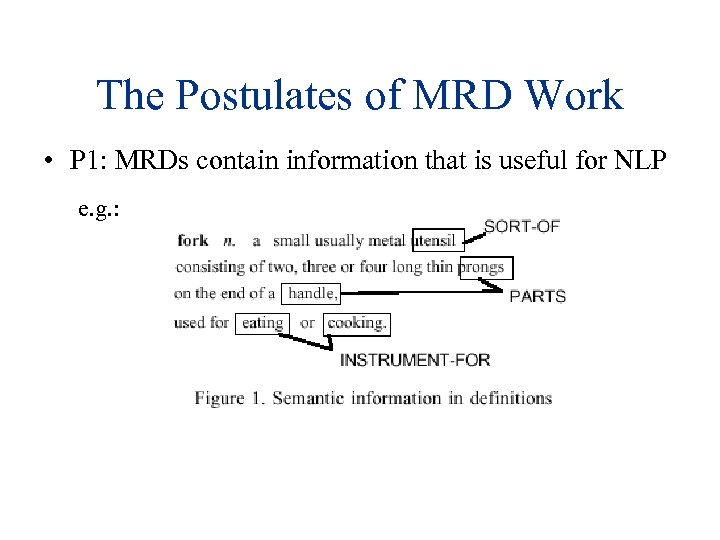

The Postulates of MRD Work

- P1: MRDs contain information that is useful for NLP
- P2: This info is relatively easy to extract from MRDs, e.g., extraction of hypernyms (generalizations):
    - Dipper isa Ladle isa Spoon isa Utensil
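The hypernym chain above can be sketched with a naive "genus term" heuristic: take the head of the first noun phrase in each definition as the hypernym. The definitions, the stop-word list, and the function names below are invented for illustration; they are not taken from any real dictionary or from the paper.

```python
import re

# Hypothetical toy definitions, invented for illustration only.
TOY_DEFINITIONS = {
    "dipper": "a ladle used for dipping water",
    "ladle": "a large spoon with a long handle",
    "spoon": "a utensil with a shallow bowl on a handle",
}

DETERMINERS = {"a", "an", "the"}
# Words assumed to mark the end of the genus phrase (far from exhaustive).
STOP_WORDS = {"with", "used", "for", "of", "that", "which", "in", "on"}


def extract_hypernym(definition):
    """Return the last word before the first stop word (a crude NP head)."""
    phrase = []
    for word in re.findall(r"[a-z]+", definition.lower()):
        if word in STOP_WORDS:
            break
        if word in DETERMINERS and not phrase:
            continue  # skip a leading determiner
        phrase.append(word)
    return phrase[-1] if phrase else None


def hypernym_chain(word, definitions):
    """Follow extracted hypernyms upward until no definition is available."""
    chain = [word]
    seen = {word}
    while chain[-1] in definitions:
        parent = extract_hypernym(definitions[chain[-1]])
        if parent is None or parent in seen:
            break  # nothing extracted, or a circular definition
        chain.append(parent)
        seen.add(parent)
    return chain


print(" isa ".join(hypernym_chain("dipper", TOY_DEFINITIONS)))
```

A heuristic this simple only works because the toy definitions are regular; the complaints on the following slides are about what happens when they are not.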

But…

- Not much to show for it so far (1993)
    - handful of limited and imperfect taxonomies
    - few studies on the quality of knowledge in MRDs
    - few studies on extracting more complex info

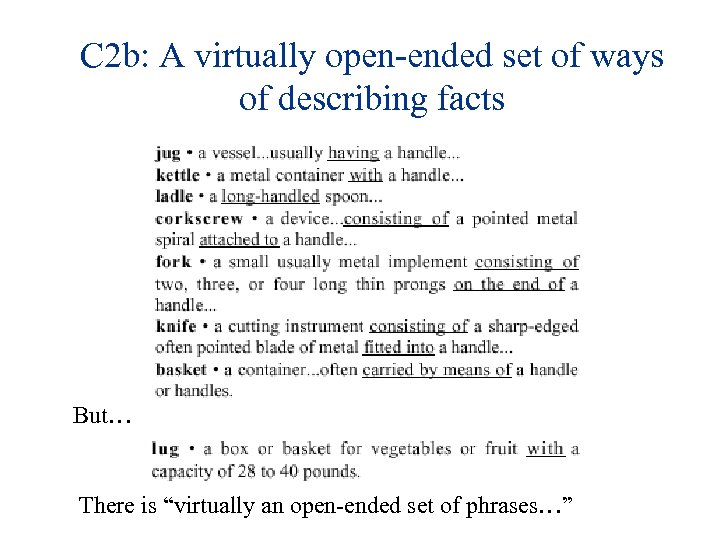

Complaints…

- P1: useful info in MRDs:
    - C1a: 50%-70% of info in dictionaries is “garbled”
    - C1b: sense definitions ≠ concept usage (“real concepts”)
    - C1c: some types of knowledge simply not there
- P2: Info can be easily extracted
    - Most successes have been for hypernyms only
    - C2a: MRD formats are a nightmare to deal with
    - C2b: A virtually open-ended set of ways of describing facts
    - C2c: Bootstrapping: Need a KB to build a KB from an MRD

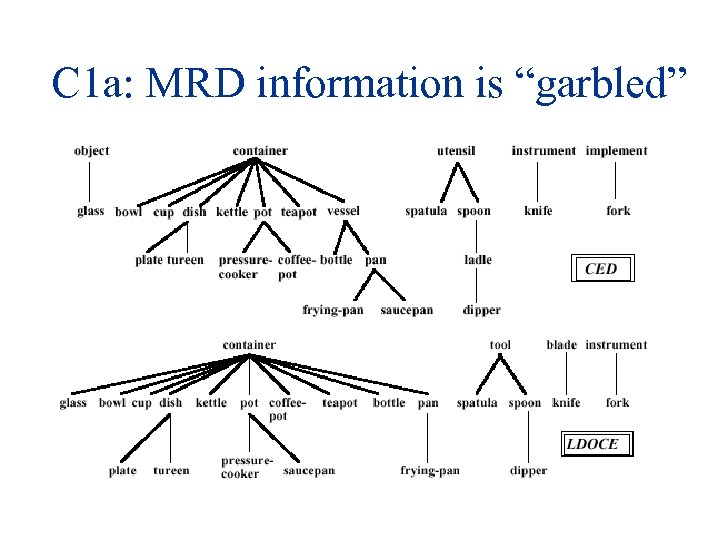

C1a: MRD information is “garbled”

- Multiple people, multiple years effort
- Space restrictions, syntactic restrictions
- Particular problem 1:
    - Attachment of terms too high (21%-34%)
        - e.g., “pan” and “bottle” are “vessels”, but “cup” and “bowl” are simply “containers”
        - occurs fairly randomly
    - Categories less clear at top levels
        - “fork” and “spoon” is ok, but “implement” and “utensil” = ?
        - Sometimes no word there to refer to a concept
            - leads to circular definitions

C1a: MRD information is “garbled”

- Particular problem 2:
    - Categories less clear at top levels
        - “fork” and “spoon” is ok, but “implement” and “utensil” = ?
        - Leads to disjuncts, e.g. “implement or utensil”
        - Sometimes no word there to refer to a concept
            - leads to circular definitions
            - leads to “covert categories”, e.g., INSTRUMENTAL-OBJECT (a hypernym for “tool”, “utensil”, “instrument”, and “implement”)
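Circular definitions of this kind can be spotted mechanically once hypernym links have been extracted. A minimal sketch, assuming one hypernym per word; the links below are hypothetical and only mimic the “implement”/“utensil” situation described above.

```python
# Hypothetical extracted links (word -> hypernym), invented for illustration.
TOY_HYPERNYMS = {
    "fork": "implement",
    "spoon": "utensil",
    "implement": "utensil",
    "utensil": "implement",  # circular: each word is defined via the other
    "dipper": "ladle",
}


def find_cycle(start, hypernym_of):
    """Walk upward from `start`; return the words forming a cycle, or None."""
    path = []
    position = {}  # word -> index in path
    word = start
    while word in hypernym_of:
        if word in position:
            return path[position[word]:]
        position[word] = len(path)
        path.append(word)
        word = hypernym_of[word]
    return None  # reached a word with no recorded hypernym


print(find_cycle("fork", TOY_HYPERNYMS))    # the implement/utensil loop
print(find_cycle("dipper", TOY_HYPERNYMS))  # no loop on this path
```

Detecting the loop is the easy part; deciding which covert category (such as INSTRUMENTAL-OBJECT) should sit above it still needs human judgment.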

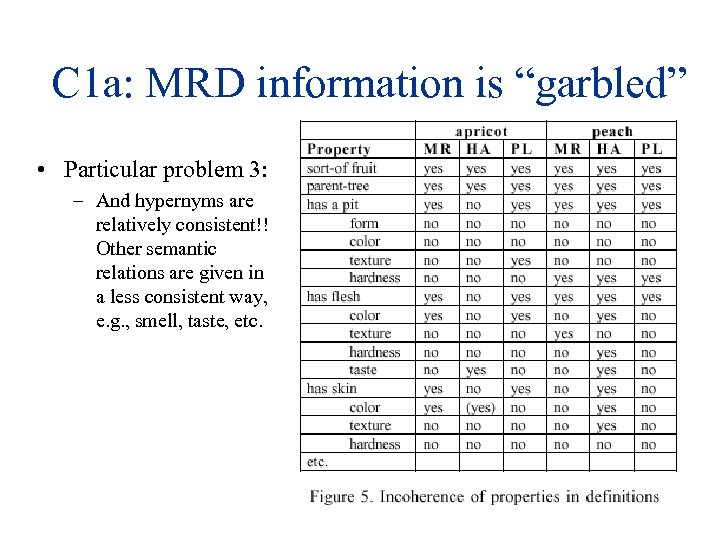

C1a: MRD information is “garbled”

- Particular problem 3:
    - And hypernyms are relatively consistent!! Other semantic relations are given in a less consistent way, e.g., smell, taste, etc.

C1b: sense definitions ≠ concept usage (“real concepts”)

- Ambiguity of word senses, e.g.,
    - 87% of words in a sample fit > 1 word sense
- Word senses don’t reflect actual use
- Word sense distinctions differ between MRDs
    - level of detail
    - way lines are drawn between senses
    - no definitive set of distinctions

C1c: some types of knowledge simply not there

- no broad contextual or world knowledge, e.g.,
    - no connection between “lawn” and “house”, or between “ash” and “tobacco”
    - “restaurant, eating house, eating place -- (a building where people go to eat)” [WordNet]
        - No mention that it’s a commercial business, e.g., for “the waitress collected the check.”

C2a: MRD formats are a nightmare to deal with

- Ambiguities / inconsistencies in typesetter format
- Complex grammars for entries
- Conventions are inconsistent, e.g. bracketing for
    - “Canopic jar, urn, or vase” vs.
    - “Junggar Pendi, Dzungaria, or Zungaria”
- Need a lot of hand pre-processing
    - not much general value to this
    - is a vast task in itself
    - not many processed dictionaries available
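The two headword lists above look identical in form, so a parser cannot tell from the punctuation alone how to bracket them. The sketch below generates both candidate readings for such a list. It assumes (this is an interpretation, not stated on the slide) that the first list is meant to distribute its modifier (“Canopic urn”, “Canopic vase”) while the second lists independent names; the function name is hypothetical.

```python
def candidate_readings(headword_field):
    """Return both bracketings of a headword list like 'A b, c, or d'."""
    # Normalise the final ", or " so every item is comma-separated.
    parts = [p.strip() for p in headword_field.replace(", or ", ", ").split(",")]
    parts = [p for p in parts if p]

    independent = list(parts)  # reading 1: each item stands alone

    tokens = parts[0].split()
    if len(tokens) < 2:
        return [independent]  # no modifier to distribute

    modifier = " ".join(tokens[:-1])
    # reading 2: the leading modifier applies to every later item
    distributed = [parts[0]] + [f"{modifier} {p}" for p in parts[1:]]
    return [independent, distributed]


for field in ("Canopic jar, urn, or vase", "Junggar Pendi, Dzungaria, or Zungaria"):
    for reading in candidate_readings(field):
        print(reading)
```

Both readings are always produced; choosing between them needs knowledge that is not in the typesetting, which is the point of the complaint.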

C2b: A virtually open-ended set of ways of describing facts

But… There is “virtually an open-ended set of phrases…”

C2c: Bootstrapping: Need a KB to build a KB

- Need knowledge to do NLP on MRDs!
    - e.g. “carry by means of a handle” vs. “carry by means of a wagon”
- But undisambiguated hierarchy is unusable, e.g.,
    - “saucepan” isa “leaf”
- So you need to build your KB before you even start on the MRD
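A small sketch of how an undisambiguated hierarchy can produce a link like “saucepan” isa “leaf”. The sense inventory is hypothetical: it assumes, purely for illustration, that “pan” has a second sense whose hypernym is “leaf”. The slide does not say which senses are involved.

```python
# Hypothetical links keyed by (word, sense number); hypernyms are plain words,
# i.e. the dictionary does not say WHICH sense of the hypernym is meant.
UNTAGGED_LINKS = {
    ("saucepan", 1): "pan",
    ("pan", 1): "container",
    ("pan", 2): "leaf",  # assumed second sense, for illustration only
}

# The same links after (manual) sense disambiguation of each hypernym.
TAGGED_LINKS = {
    ("saucepan", 1): ("pan", 1),
    ("pan", 1): ("container", 1),
    ("pan", 2): ("leaf", 1),
}


def naive_ancestors(word, links):
    """Chain hypernyms by word form only, mixing all senses together."""
    found = set()
    frontier = [word]
    while frontier:
        current = frontier.pop()
        for (w, _sense), parent in links.items():
            if w == current and parent not in found:
                found.add(parent)
                frontier.append(parent)
    return found


def tagged_ancestors(sense, links):
    """Chain hypernyms sense by sense; returns the hypernym words in order."""
    chain = []
    seen = {sense}
    while sense in links and links[sense] not in seen:
        sense = links[sense]
        seen.add(sense)
        chain.append(sense[0])
    return chain


print(sorted(naive_ancestors("saucepan", UNTAGGED_LINKS)))
print(tagged_ancestors(("saucepan", 1), TAGGED_LINKS))
```

The sense-tagged table is exactly the knowledge that has to exist before the chaining is safe, which is the bootstrapping problem.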

Synthesis

- Underlying postulate of P1 and P2:
    - P0: Large KBs cannot be built by hand
- Counterexamples:
    - Cyc
    - Dictionaries themselves!
- And besides…
    - KBs are too hard to extract from MRDs
    - MRDs don’t contain all the knowledge needed
- But: MRD contributions:
    - understanding the structure of dictionaries
    - convergence of NLP, lexicography, and electronic publishing interests

Ways forward…

- Combining Knowledge Sources:
    - One dictionary has 55%-70% of “problematic cases” [of incompleteness], but 5 dictionaries reduced this to 5%
- Also should combine knowledge from corpora as a means of “filling out” KBs
- Prediction:
    - KBs built by people, using corpora and text extraction technology tools, and combined together by hand (Schubert-style; Code4; Ikarus)
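The idea of combining sources can be sketched as a merge over per-dictionary hypernym tables, filling each gap from whichever dictionary has an answer and taking a majority vote where they disagree. The data and function names are hypothetical, and the toy rates printed here say nothing about the percentages reported above.

```python
from collections import Counter

# Hypothetical hypernym tables from two dictionaries, invented for illustration.
WORDS = ["cup", "bowl", "pan", "bottle"]
DICT_A = {"cup": "container", "pan": "vessel"}
DICT_B = {"bowl": "container", "pan": "vessel", "bottle": "vessel"}


def merge_hypernyms(dictionaries):
    """Majority vote per word over the dictionaries that cover it."""
    merged = {}
    all_words = set().union(*(d.keys() for d in dictionaries))
    for word in all_words:
        votes = Counter(d[word] for d in dictionaries if d.get(word))
        if votes:
            merged[word] = votes.most_common(1)[0][0]
    return merged


def missing_rate(words, hypernyms):
    """Fraction of `words` with no hypernym recorded."""
    return sum(1 for w in words if w not in hypernyms) / len(words)


merged = merge_hypernyms([DICT_A, DICT_B])
print(missing_rate(WORDS, DICT_A), missing_rate(WORDS, merged))
```

Majority voting is only one possible policy; ties fall back on dictionary order here, and a real merge would also have to align senses across dictionaries first.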

Ways forward…

- MRDs will become encoded more consistently
- Better analysis needed of the types of knowledge needed for NLP
    - perhaps don’t need the kind of precision in a KB
- Exploitation of associational information
    - Very useful for sense disambiguation (e.g., Harabagiu)

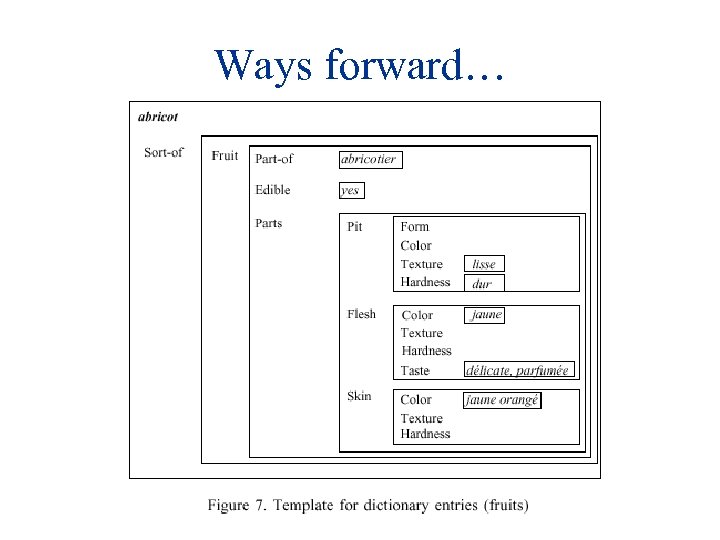

Ways forward…

- Lexicographers increasingly interested in using lexical databases for their work
- Could create an NLP-like KB directly
    - Create explicit semantic links between word entries
    - Ensure consistency of content (e.g., using templates/frames ensures all the important information is provided)
    - Ensure consistency of “metatext” (i.e., be consistent about how semantic relations are stated)
    - Ensure consistency of sense division
        - e.g., “cup” and “bowl” have two senses (literal and metonymic) but “glass” only has one (literal): a lexical database could spot this inconsistency
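The sense-division check in the last bullet can be sketched as a comparison across sibling entries: any sense type that some sibling has but a given word lacks is flagged for a lexicographer to review. The table encodes only the cup/bowl/glass example above; the data structure and function name are assumptions.

```python
# Sense types per word, taken from the cup/bowl/glass example above.
CONTAINER_SENSES = {
    "cup": {"literal", "metonymic"},
    "bowl": {"literal", "metonymic"},
    "glass": {"literal"},
}


def find_sense_gaps(sense_types):
    """Map each word to the sense types its siblings have but it lacks."""
    expected = set().union(*sense_types.values())
    return {
        word: expected - types
        for word, types in sense_types.items()
        if expected - types
    }


print(find_sense_gaps(CONTAINER_SENSES))
```

A flagged gap is a prompt for review rather than an error in itself, since some sibling words may legitimately lack a sense.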
