- Number of slides: 14

Experiments and Tools for DDoS Attacks
Roman Chertov, Sonia Fahmy, Rupak Sanjel, Ness Shroff
Center for Education and Research in Information Assurance and Security (CERIAS), Purdue University
October 25th, 2004

Objectives
- Design, integrate, and deploy a methodology and tools for performing realistic and reproducible DDoS experiments:
  - Tools to configure traffic and attacks
  - Tools for automation of experiments, measurements, and visualization of results
  - Integration of multiple third-party software components
- Understand the testing requirements of different types of third-party detection and defense mechanisms
- Gain insight into the phenomenology of attacks, including their first-order and second-order effects and their impact on defenses

Accomplishments
- Designed and implemented experimental tools:
  - Scriptable event system to control and synchronize events at multiple nodes
  - Automated measurement tools, log processing tools, and plotting tools
  - Automated configuration of interactive and replayed background traffic, routing, attack parameters, and measurements
- Generated requirements for DETER to easily support the testing of third-party products (e.g., ManHunt, Sentivist)

Accomplishments (cont’d)
- Analytical characterization, simulations, and experiments for low-rate TCP-targeted DDoS attacks
- Preliminary analysis of BGP behavior during DDoS, and of the impact of BGP on DDoS

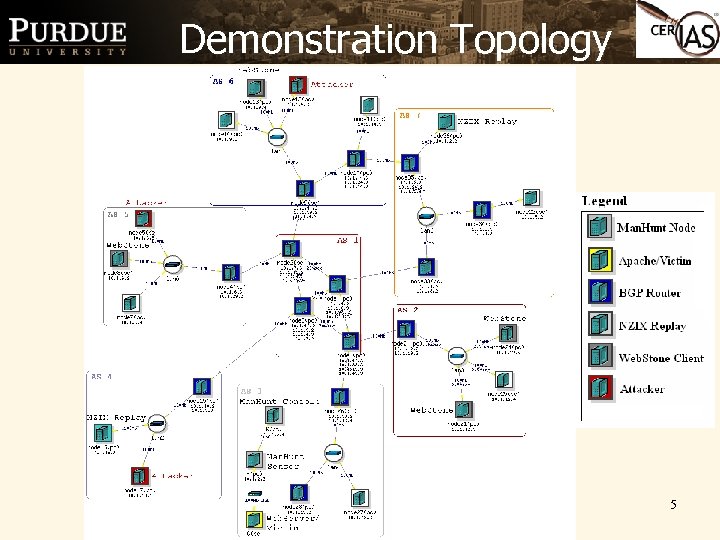

Demonstration Topology

Scriptable Event System
- Managing more than a few computers quickly and reliably is a real challenge.
- A central mechanism is needed to delegate arbitrary tasks to experimental nodes.
- Event completion notification is required to trigger further events in the experiment.
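
The slides do not show how the event system is implemented. As a minimal sketch, assuming Python and a local thread pool standing in for remote execution on the experimental nodes, the code below illustrates the two requirements above: one controller delegates arbitrary tasks, and each completion notification releases the events that were waiting on it. The `Event` class, `run_events` and `command` functions, node names, and commands are all hypothetical; a real deployment would replace the local callables with remote commands (for example over SSH).

```python
from concurrent.futures import FIRST_COMPLETED, ThreadPoolExecutor, wait
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class Event:
    """One task to run on one experimental node (hypothetical structure)."""
    name: str
    node: str
    action: Callable[[str], str]            # stands in for a remote command
    after: List[str] = field(default_factory=list)  # prerequisites by name


def run_events(events: List[Event], max_workers: int = 8) -> List[str]:
    """Dispatch events from one controller. Each completion notification may
    release further events whose prerequisites have all finished.
    Returns event names in completion order."""
    pending: Dict[str, Event] = {e.name: e for e in events}
    running = {}                              # future -> event name
    done: List[str] = []
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        while pending or running:
            # Launch every pending event whose prerequisites are complete.
            ready = [e for e in pending.values() if all(d in done for d in e.after)]
            for e in ready:
                del pending[e.name]
                running[pool.submit(e.action, e.node)] = e.name
            if not running:
                raise ValueError(f"unsatisfiable dependencies: {sorted(pending)}")
            # Block until at least one event reports completion.
            finished, _ = wait(running, return_when=FIRST_COMPLETED)
            for fut in finished:
                fut.result()                  # re-raise if the task failed
                done.append(running.pop(fut))
    return done


# Example: restart routers, then start background traffic, then the attack.
log: List[str] = []


def command(cmd: str) -> Callable[[str], str]:
    def action(node: str) -> str:
        log.append(f"{node}: {cmd}")          # a real system would run cmd remotely
        return node
    return action


order = run_events([
    Event("restart_routers", "router1", command("restart quagga")),
    Event("start_background", "client1", command("start traffic"),
          after=["restart_routers"]),
    Event("start_attack", "attacker1", command("start flood"),
          after=["start_background"]),
])
print(order)
```

Because each event lists only its prerequisites, independent tasks on different nodes can run in parallel, while ordered phases (router restart, background traffic, attack) stay synchronized.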

Routing
- DETERLab experiments can use static or OSPF routing; however, there is no built-in support for BGP, RIP, IS-IS, etc.
- eBGP and iBGP routing can be accomplished with Quagga routing daemons.
- Initialization scripts coupled with the central control make it easy to restart all of the routers in an experiment to get a clean starting point.
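
With BGP handled by Quagga rather than by DETERLab, each router in a BGP experiment needs its own `bgpd` configuration. The generator below is a hypothetical helper, not part of Quagga or DETER, and the hostname, AS numbers, and addresses in the example are illustrative. It relies on the standard BGP rule that a neighbor in the same AS is an iBGP peer and one in a different AS is an eBGP peer, and it emits only basic `bgpd` commands (`router bgp`, `bgp router-id`, `network`, `neighbor ... remote-as`, plus `description` and `next-hop-self`).

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Neighbor:
    address: str
    asn: int


def bgpd_conf(hostname: str, asn: int, router_id: str,
              networks: List[str], neighbors: List[Neighbor]) -> str:
    """Render a minimal Quagga bgpd.conf for one router (hypothetical helper)."""
    lines = [
        f"hostname {hostname}",
        "password zebra",                      # placeholder vty password
        f"router bgp {asn}",
        f" bgp router-id {router_id}",
    ]
    lines += [f" network {prefix}" for prefix in networks]
    for n in neighbors:
        # Same AS -> iBGP session; different AS -> eBGP session.
        kind = "iBGP" if n.asn == asn else "eBGP"
        lines.append(f" neighbor {n.address} remote-as {n.asn}")
        lines.append(f" neighbor {n.address} description {kind}-peer")
        if kind == "iBGP":
            # Advertise this router as next hop so internal peers do not
            # need a route to the external link.
            lines.append(f" neighbor {n.address} next-hop-self")
    lines.append("!")
    return "\n".join(lines) + "\n"


# Example: a border router in AS 65001 with one eBGP and one iBGP peer.
conf = bgpd_conf("r1", 65001, "10.0.0.1", ["10.1.0.0/16"],
                 [Neighbor("10.0.0.2", 65002), Neighbor("10.0.1.2", 65001)])
print(conf)
```

Generating one file per router from a single description of the topology, and then restarting `zebra` and `bgpd` through the central control, is one way to get the clean starting point described above.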

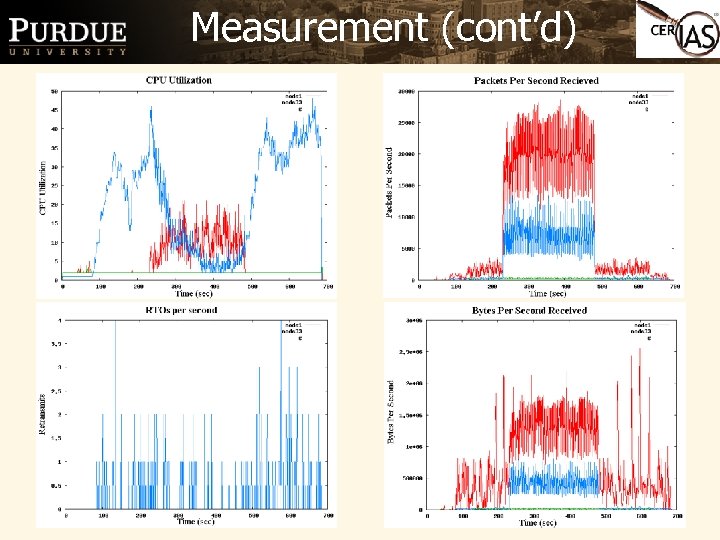

Measurement
- Measuring system statistics at different points in the network can reveal what events are occurring across the entire network.
- A tool driven by a 1-second timer records CPU usage, PPSin, PPSout, BPSin, BPSout, RTO, and memory usage. The collected logs can be aggregated and plotted by a collection of scripts.
- Future scripts will be able to correlate events between system measurements and routing log files.
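
A minimal sketch of the packet and byte rate part of such a 1-second sampler, assuming a Linux node where per-interface counters can be read from `/proc/net/dev`. The function names are hypothetical, BPS is taken here to mean bytes per second (multiply by 8 for bits), counter wraparound is ignored, and the CPU, memory, and RTO measurements are not shown.

```python
import time
from typing import Dict, Iterator, Tuple

# Per-interface counters: (rx_bytes, rx_packets, tx_bytes, tx_packets)
Counters = Dict[str, Tuple[int, int, int, int]]


def parse_net_dev(text: str) -> Counters:
    """Parse the contents of Linux /proc/net/dev."""
    counters: Counters = {}
    for line in text.splitlines()[2:]:         # skip the two header lines
        if ":" not in line:
            continue
        iface, data = line.split(":", 1)
        f = [int(x) for x in data.split()]
        # 8 receive columns (bytes, packets, ...) precede the transmit columns.
        counters[iface.strip()] = (f[0], f[1], f[8], f[9])
    return counters


def rates(prev: Counters, curr: Counters, elapsed: float) -> Dict[str, Dict[str, float]]:
    """Turn two counter snapshots into per-second rates (BPS = bytes/s here)."""
    out: Dict[str, Dict[str, float]] = {}
    for iface, (rb, rp, tb, tp) in curr.items():
        if iface not in prev:
            continue                           # interface appeared mid-run
        prb, prp, ptb, ptp = prev[iface]
        out[iface] = {"BPSin": (rb - prb) / elapsed, "PPSin": (rp - prp) / elapsed,
                      "BPSout": (tb - ptb) / elapsed, "PPSout": (tp - ptp) / elapsed}
    return out


def sample(path: str = "/proc/net/dev", interval: float = 1.0) -> Iterator[Tuple[float, Dict]]:
    """Yield (wall-clock timestamp, rates) roughly once per interval (Linux only)."""
    with open(path) as fh:
        prev, t_prev = parse_net_dev(fh.read()), time.monotonic()
    while True:
        time.sleep(interval)
        with open(path) as fh:
            curr, t_curr = parse_net_dev(fh.read()), time.monotonic()
        # Divide by the measured gap, since sleep() can overshoot.
        yield time.time(), rates(prev, curr, t_curr - t_prev)
        prev, t_prev = curr, t_curr


# Example with two hand-written snapshots taken one second apart.
HEADER = ("Inter-|   Receive                                                |  Transmit\n"
          " face |bytes    packets errs drop fifo frame compressed multicast"
          "|bytes    packets errs drop fifo colls carrier compressed\n")
T0 = HEADER + ("    lo: 1000 10 0 0 0 0 0 0 1000 10 0 0 0 0 0 0\n"
               "  eth0: 5000 50 0 0 0 0 0 0 3000 30 0 0 0 0 0 0\n")
T1 = HEADER + ("    lo: 1000 10 0 0 0 0 0 0 1000 10 0 0 0 0 0 0\n"
               "  eth0: 8000 80 0 0 0 0 0 0 4500 45 0 0 0 0 0 0\n")
r = rates(parse_net_dev(T0), parse_net_dev(T1), 1.0)
print(r["eth0"])
```

Dividing by the measured elapsed time rather than the nominal 1 second keeps the rates honest when the timer fires late on a heavily loaded node, which is exactly the situation during an attack.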

Measurement (cont’d)
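
Aggregating the per-node measurement logs mainly means putting them on a common time axis before plotting. The sketch below assumes a hypothetical CSV log format (a header row, then one row per second with a Unix `time` column and one column per metric); the function names and node names are illustrative, not those of the original scripts.

```python
import csv
import io
import math
from collections import defaultdict
from typing import Dict, List, Tuple

Row = Dict[str, float]


def load_log(text: str) -> List[Row]:
    """Parse one node's CSV log (header row, then one row per sample)."""
    return [{k: float(v) for k, v in row.items()}
            for row in csv.DictReader(io.StringIO(text))]


def align(logs: Dict[str, List[Row]], metric: str) -> Tuple[List[int], Dict[str, List[float]]]:
    """Put one metric from several nodes on a shared axis of whole seconds since
    the earliest sample in any log. Seconds a node did not report become NaN so
    plots show a gap rather than a false zero; if two samples fall in the same
    second, the later one wins."""
    start = min(row["time"] for rows in logs.values() for row in rows)
    by_node: Dict[str, Dict[int, float]] = defaultdict(dict)
    for node, rows in logs.items():
        for row in rows:
            by_node[node][int(row["time"] - start)] = row[metric]
    end = max(t for series in by_node.values() for t in series)
    axis = list(range(end + 1))
    return axis, {node: [series.get(t, math.nan) for t in axis]
                  for node, series in by_node.items()}


# Example: the victim's logger started one second after the router's.
router_log = "time,CPU,PPSin\n100,5,200\n101,7,900\n102,6,950\n"
victim_log = "time,CPU,PPSin\n101,40,15000\n102,85,16000\n"
axis, series = align({"router1": load_log(router_log),
                      "victim": load_log(victim_log)}, "PPSin")
print(axis, series)
```

Using NaN for missing seconds keeps a node that started logging late from looking idle; a shared axis like this would also be a natural place to overlay events taken from routing log files.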

Challenges in Testing Third-Party Mechanisms
- The ManHunt license is specific to an IP/MAC address, which requires control over machine selection in DETER.
- Administration software: some products run on Windows XP only, e.g., Sentivist. Luckily, a command-line interface is provided in this case.
- Some mechanisms require their own hardware (sensors/authentication) to be installed.
- Certain features of mechanisms, such as traceback/pushback, depend on interaction with network devices (routers/switches).

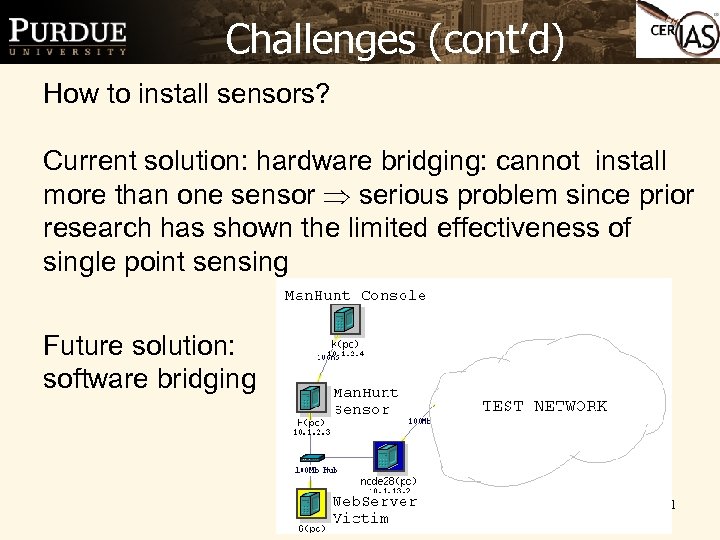

Challenges (cont’d)
- How to install sensors?
  - Current solution: hardware bridging, which allows only one sensor to be installed. This is a serious problem, since prior research has shown the limited effectiveness of single-point sensing.
  - Future solution: software bridging

Challenges (cont’d)
- Sentivist Sensor is distributed as a bootable CD-ROM.
  - Is it possible to “boot” a machine from an ISO image?
  - Perhaps by using a FreeBSD network install (Sentivist Sensor is built on FreeBSD), but administrative privileges to do so are not available.
  - Otherwise, someone needs to insert the CD-ROM into the drive.
- Sentivist Sensor installation requires interaction:
  - A serial console connection to the machine must be established on COM1 or COM2, but the DETER IBM machines have no COM1.
  - Otherwise, someone needs to use a monitor and keyboard.

Plans
- Continue development of experiment automation and instrumentation/plotting tools and documentation
- Design increasingly high-fidelity experimental suites
- Continue investigating TCP-targeted DDoS attacks in more depth, and compare analytical and simulation results with DETER testbed results to identify artifacts

Plans (cont’d)
- Investigate routing problems/attacks, and compare with DETER testbed results
- Continue to collaborate with the routing team and the McAfee team to identify experimental scenarios and build tools for routing experiments