beff1aa4267989306fbc75d392b090a2.ppt

- Number of slides: 119

Experiment Basics: Variables

Psych 231: Research Methods in Psychology

Class Experiment

- Class experiment
  - Turn in your data sheets
    - I will analyze the data and the results will be discussed in labs
  - Turn in your consent forms
- Labs
  - Exercise swap: going to assign the Variables exercise on LM pg 25 (instead of the one on pg 35); this will be assigned in labs this week and is due in labs next week
- Quiz 5 is due Friday

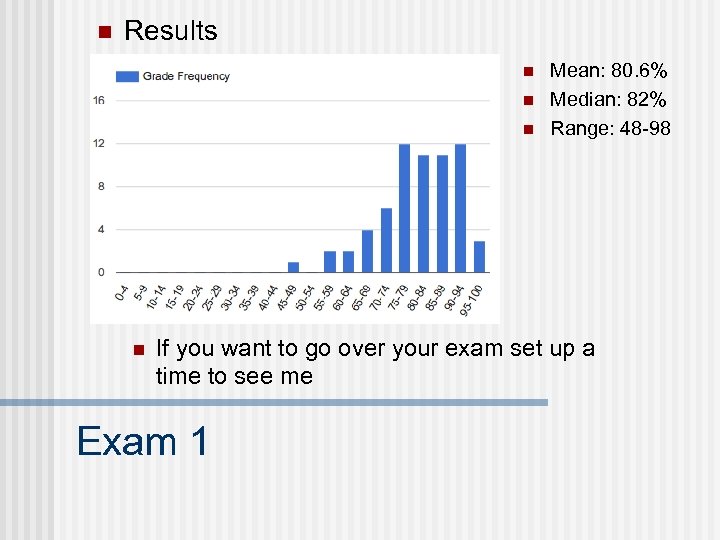

Exam 1

- Results
  - Mean: 80.6%
  - Median: 82%
  - Range: 48-98
- If you want to go over your exam, set up a time to see me

Exam 1

- Common errors:
  - Four canons of the scientific method (see pg 69 of the textbook)

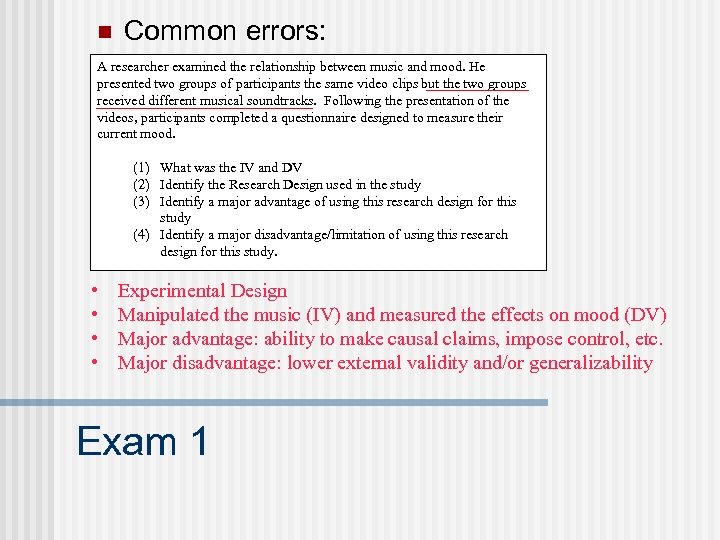

Exam 1

- Common errors: "A researcher examined the relationship between music and mood. He presented two groups of participants the same video clips, but the two groups received different musical soundtracks. Following the presentation of the videos, participants completed a questionnaire designed to measure their current mood. (1) What were the IV and DV? (2) Identify the research design used in the study. (3) Identify a major advantage of using this research design for this study. (4) Identify a major disadvantage/limitation of using this research design for this study."
  - Experimental design
  - Manipulated the music (IV) and measured the effects on mood (DV)
  - Major advantage: ability to make causal claims, impose control, etc.
  - Major disadvantage: lower external validity and/or generalizability

So you want to do an experiment?

- You’ve got your theory.
  - What behavior you want to examine
  - Identified what things (variables) you think affect that behavior

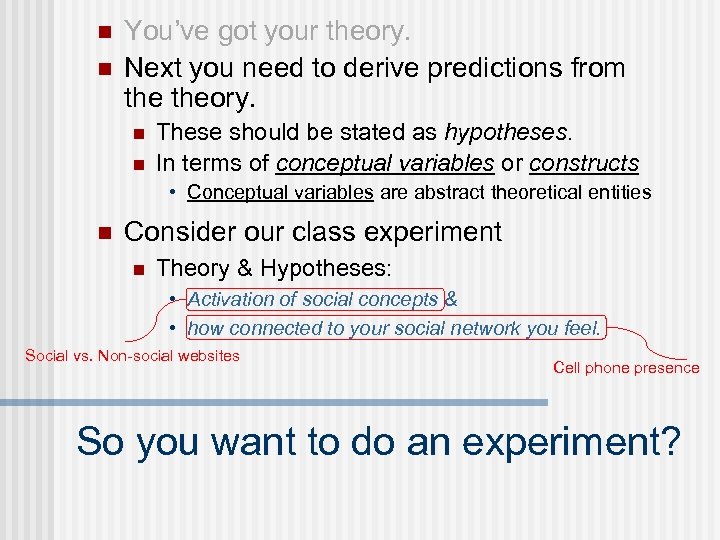

So you want to do an experiment?

- You’ve got your theory.
- Next you need to derive predictions from the theory.
  - These should be stated as hypotheses.
  - In terms of conceptual variables or constructs
    - Conceptual variables are abstract theoretical entities
  - Consider our class experiment
    - Theory & hypotheses: activation of social concepts and how connected to your social network you feel
    - Social vs. non-social websites
    - Cell phone presence

So you want to do an experiment?

- You’ve got your theory.
- Next you need to derive predictions from the theory.
- Now you need to design the experiment.
  - You need to operationalize your variables in terms of how they will be:
    - Manipulated
    - Measured
    - Controlled
  - Be aware of the underlying assumptions connecting your constructs to your operational variables
    - Be prepared to justify all of your choices

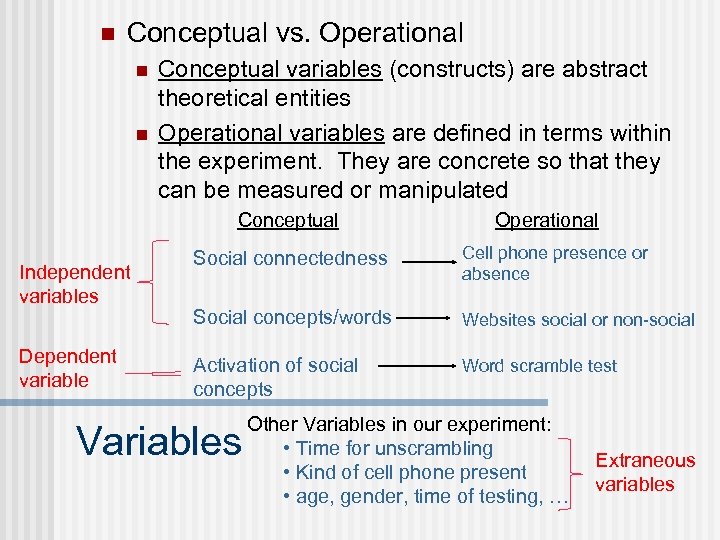

Variables

- Conceptual vs. operational
  - Conceptual variables (constructs) are abstract theoretical entities
  - Operational variables are defined in concrete terms within the experiment, so that they can be measured or manipulated

| | Conceptual | Operational |
|---|---|---|
| Independent variable | Social connectedness | Cell phone presence or absence |
| Independent variable | Social concepts/words | Websites: social or non-social |
| Dependent variable | Activation of social concepts | Word scramble test |

- Other variables in our experiment (extraneous variables):
  - Time for unscrambling
  - Kind of cell phone present
  - Age, gender, time of testing, …

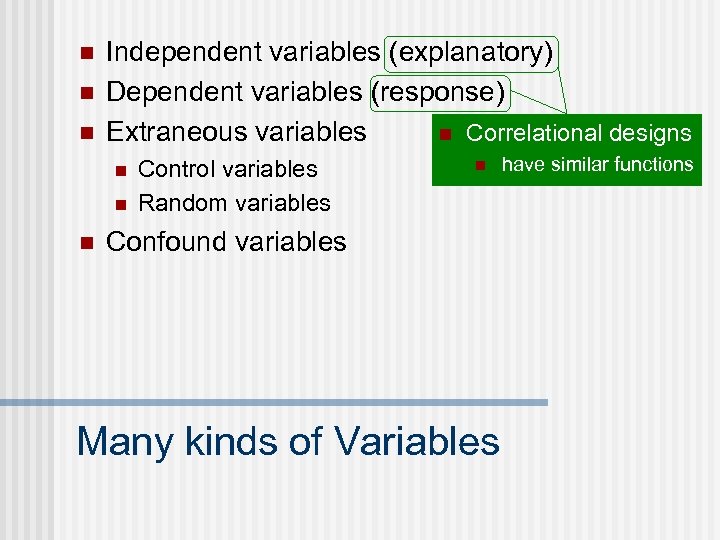

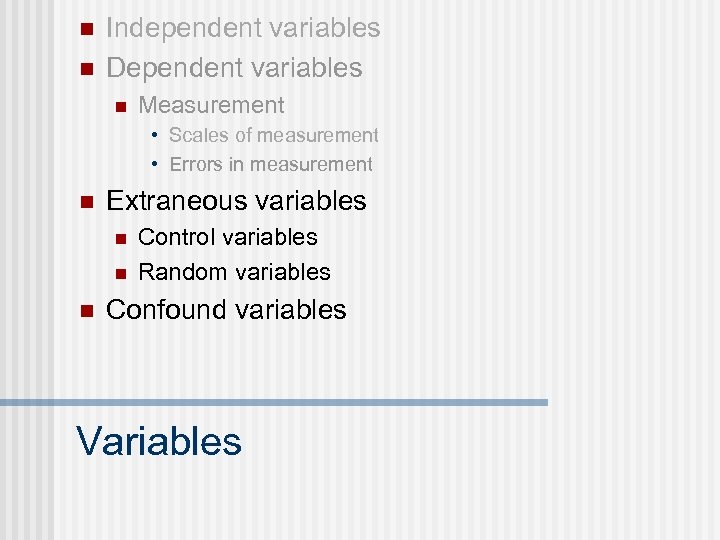

Many kinds of Variables

- Independent variables (explanatory)
- Dependent variables (response)
  - Correlational designs have similar functions
- Extraneous variables
  - Control variables
  - Random variables
  - Confound variables

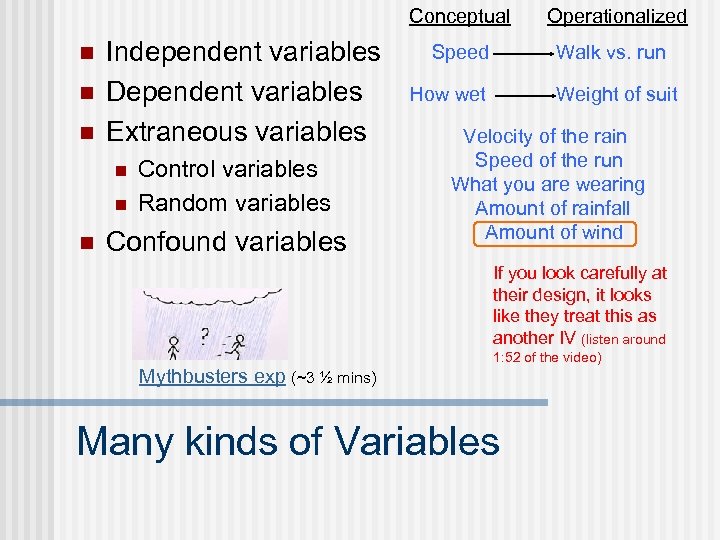

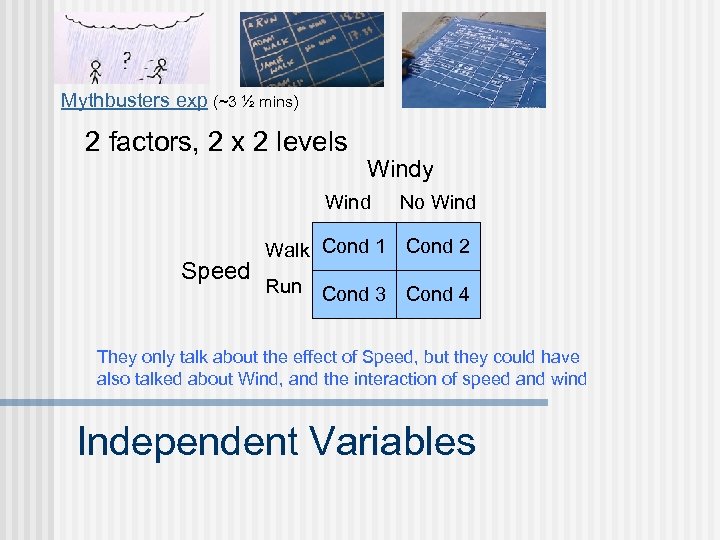

Many kinds of Variables

Mythbusters exp (~3 ½ mins)

| | Conceptual | Operationalized |
|---|---|---|
| Independent variables | Speed | Walk vs. run |
| Dependent variables | How wet | Weight of suit |

- Extraneous variables (control, random, and confound variables):
  - Velocity of the rain
  - Speed of the run
  - What you are wearing
  - Amount of rainfall
  - Amount of wind: if you look carefully at their design, it looks like they treat this as another IV (listen around 1:52 of the video)

Many kinds of Variables

- Independent variables (explanatory)
- Dependent variables (response)
- Extraneous variables
  - Control variables
  - Random variables
  - Confound variables

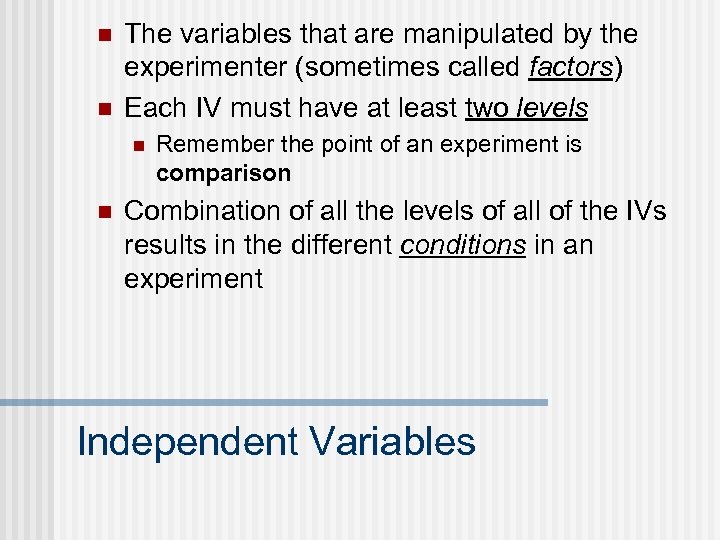

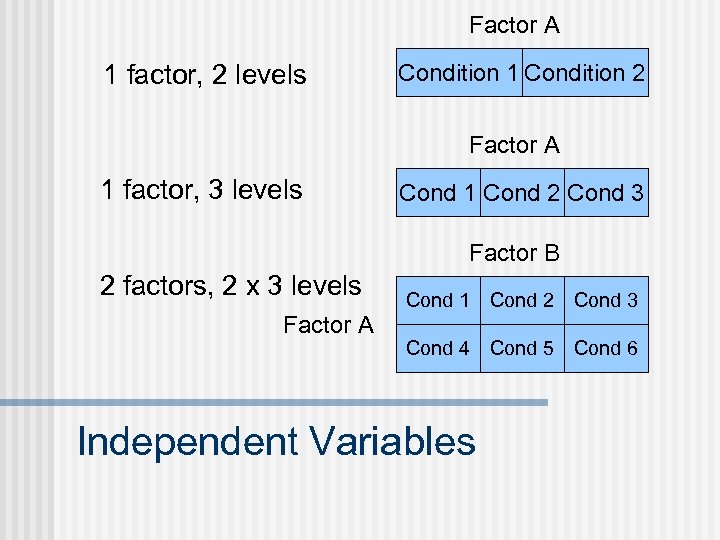

Independent Variables

- The variables that are manipulated by the experimenter (sometimes called factors)
- Each IV must have at least two levels
  - Remember, the point of an experiment is comparison
- The combination of all the levels of all of the IVs results in the different conditions in an experiment

Independent Variables

- 1 factor, 2 levels (Factor A): Condition 1, Condition 2
- 1 factor, 3 levels (Factor A): Cond 1, Cond 2, Cond 3
- 2 factors, 2 x 3 levels (Factor A x Factor B): Cond 1, Cond 2, Cond 3, Cond 4, Cond 5, Cond 6

Independent Variables

Mythbusters exp (~3 ½ mins): 2 factors, 2 x 2 levels

| Speed \ Wind | Windy | No Wind |
|---|---|---|
| Walk | Cond 1 | Cond 2 |
| Run | Cond 3 | Cond 4 |

They only talk about the effect of Speed, but they could have also talked about Wind, and the interaction of Speed and Wind.
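
The idea that conditions come from combining all the levels of all the IVs can be sketched in a few lines of Python. This is only an illustration: the factor names and levels come from the Mythbusters slide, while the `factors` dictionary and the `build_conditions` helper are assumptions made up for this sketch.

```python
from itertools import product

# Factors and levels from the Mythbusters example; the dict layout is an assumption.
factors = {
    "speed": ["walk", "run"],
    "wind": ["windy", "no wind"],
}


def build_conditions(factors):
    """Return one dict per condition: every combination of factor levels."""
    names = list(factors)
    level_lists = [factors[name] for name in names]
    return [dict(zip(names, combo)) for combo in product(*level_lists)]


conditions = build_conditions(factors)
for number, condition in enumerate(conditions, start=1):
    print(f"Cond {number}: {condition}")
```

The number of conditions is the product of the number of levels per factor, so a 2 x 2 design gives 4 conditions and a 2 x 3 design gives 6.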

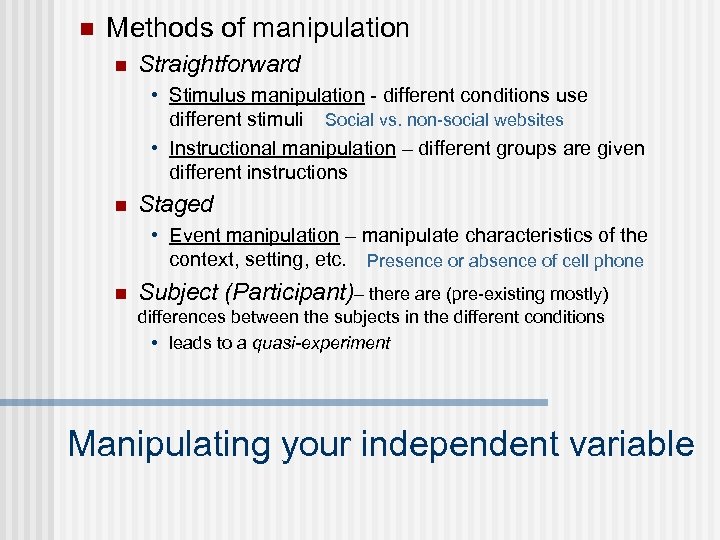

Manipulating your independent variable

- Methods of manipulation
  - Straightforward
    - Stimulus manipulation: different conditions use different stimuli (e.g., social vs. non-social websites)
    - Instructional manipulation: different groups are given different instructions
  - Staged
    - Event manipulation: manipulate characteristics of the context, setting, etc. (e.g., presence or absence of cell phone)
  - Subject (participant): there are (mostly pre-existing) differences between the subjects in the different conditions
    - Leads to a quasi-experiment

Choosing your independent variable

- Choosing the right levels of your independent variable
  - Review the literature
  - Do a pilot experiment
  - Consider the costs, your resources, your limitations
  - Be realistic
    - Pick levels found in the “real world”
  - Pay attention to the range of the levels
    - Pick a large enough range to show the effect
    - Aim for the middle of the range

Identifying potential problems

- These are things that you want to try to avoid by careful selection of the levels of your IV (they may be issues for your DV as well).
  - Demand characteristics
  - Experimenter bias
  - Reactivity
  - Floor and ceiling effects (range effects)

Demand characteristics

- Characteristics of the study that may give away the purpose of the experiment
- May influence how the participants behave in the study
  - Examples:
    - Experiment title: The effects of horror movies on mood
    - Obvious manipulation: having participants see lists of words and pictures and then later testing to see if pictures or words are remembered better
    - Biased or leading questions: Don’t you think it’s bad to murder unborn children?

Experimenter Bias

- Experimenter bias (expectancy effects)
  - The experimenter may influence the results (intentionally and unintentionally)
    - E.g., Clever Hans
  - One solution is to keep the experimenter (as well as the participants) “blind” as to what conditions are being tested

Reactivity

- Knowing that you are being measured
  - Just being in an experimental setting, people don’t always respond the way that they “normally” would.
    - Cooperative
    - Defensive
    - Non-cooperative

Range effects

- Floor: a value below which a response cannot be made
  - As a result, the effects of your IV (if there are indeed any) can’t be seen.
  - Imagine a task that is so difficult that none of your participants can do it.
- Ceiling: when the dependent variable reaches a level that cannot be exceeded
  - So while there may be an effect of the IV, that effect can’t be seen because everybody has “maxed out”
  - Imagine a task that is so easy that everybody scores 100%
- To avoid floor and ceiling effects, you want to pick levels of your IV that result in middle-level performance in your DV
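
A small sketch can make the ceiling effect concrete. It assumes a test scored from 0 to 100 and a treatment that truly adds 10 points; the baseline scores and all function names are hypothetical and chosen only for illustration.

```python
def observed_score(true_score, min_score=0, max_score=100):
    """Clamp a score to the range the measure can actually record."""
    return max(min_score, min(max_score, true_score))


def mean(values):
    return sum(values) / len(values)


def observed_effect(baseline_scores, true_gain):
    """Mean difference the experiment would see once the scale clips scores."""
    control = [observed_score(s) for s in baseline_scores]
    treated = [observed_score(s + true_gain) for s in baseline_scores]
    return mean(treated) - mean(control)


# Hypothetical baselines: an easy task (near the ceiling) vs. a mid-range task.
easy_task = [96, 98, 99, 100]
mid_range_task = [50, 55, 60, 65]

print(observed_effect(easy_task, true_gain=10))       # most of the gain is hidden
print(observed_effect(mid_range_task, true_gain=10))  # the full gain is visible
```

The same true gain looks much smaller when everybody starts near the top of the scale, which is why middle-level performance is the safer target.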

Variables

- Independent variables (explanatory)
- Dependent variables (response)
- Extraneous variables
  - Control variables
  - Random variables
  - Confound variables

Dependent Variables

- The variables that are measured by the experimenter
- They are “dependent” on the independent variables (if there is a relationship between the IV and DV, as the hypothesis predicts).

Choosing your dependent variable

- How to measure your construct:
  - Can the participant provide self-report?
    - Introspection: specially trained observers of their own thought processes; the method fell out of favor in the early 1900s
    - Rating scales: strongly agree, undecided, disagree, strongly disagree
  - Is the dependent variable directly observable?
    - Choice/decision
  - Is the dependent variable indirectly observable?
    - Physiological measures (e.g., GSR, heart rate)
    - Behavioral measures (e.g., speed, accuracy)

Measuring your dependent variables

- Scales of measurement
- Errors in measurement

Measuring your dependent variables

- Scales of measurement: the correspondence between the numbers and the properties that we’re measuring
  - The scale that you use will (partially) determine what kinds of statistical analyses you can perform

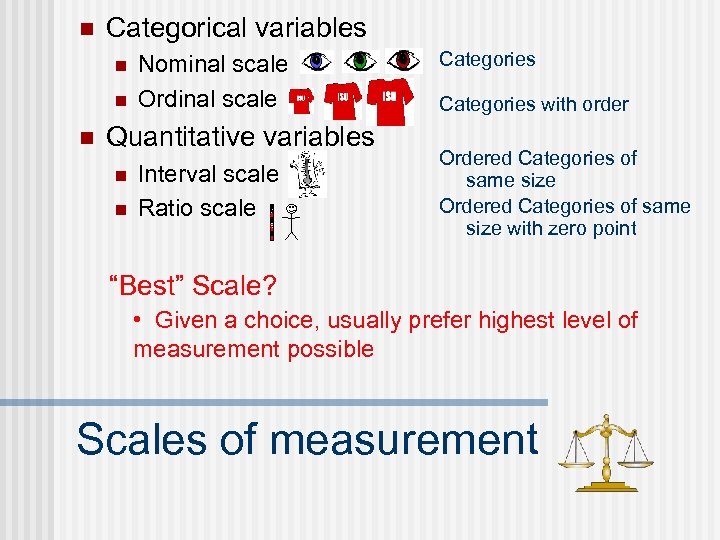

Scales of measurement

- Categorical variables (qualitative)
  - Nominal scale
  - Ordinal scale
- Quantitative variables
  - Interval scale
  - Ratio scale

Scales of measurement

- Nominal scale: consists of a set of categories that have different names.
  - Label and categorize observations
  - Do not make any quantitative distinctions between observations
  - Example:
    - Eye color: blue, green, brown, hazel

Scales of measurement

- Categorical variables (qualitative)
  - Nominal scale: categories
  - Ordinal scale
- Quantitative variables
  - Interval scale
  - Ratio scale

Scales of measurement

- Ordinal scale: consists of a set of categories that are organized in an ordered sequence.
  - Rank observations in terms of size or magnitude.
  - Example:
    - T-shirt size: Small, Med, Lrg, XL, XXL

Scales of measurement

- Categorical variables
  - Nominal scale
  - Ordinal scale: categories with order
- Quantitative variables
  - Interval scale
  - Ratio scale

Scales of measurement

- Interval scale: consists of ordered categories where all of the categories are intervals of exactly the same size.
  - Example: Fahrenheit temperature scale
  - With an interval scale, equal differences between numbers on the scale reflect equal differences in magnitude.
    - 40º to 60º is a 20º increase, and 60º to 80º is a 20º increase: the amount of temperature increase is the same
  - However, ratios of magnitudes are not meaningful.
    - 40º is “not twice as hot” as 20º
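
The Fahrenheit example can be checked with a few lines of arithmetic. The sketch below converts to Kelvin, which has an absolute zero and so behaves as a ratio scale; the function name is an assumption made for this illustration.

```python
def fahrenheit_to_kelvin(degrees_f):
    """Convert Fahrenheit to Kelvin, a temperature scale with a true zero."""
    return (degrees_f - 32) * 5 / 9 + 273.15


# Equal differences are meaningful on an interval scale.
first_increase = 60 - 40
second_increase = 80 - 60

# Ratios of the raw Fahrenheit numbers are not meaningful.
ratio_in_fahrenheit = 40 / 20
ratio_in_kelvin = fahrenheit_to_kelvin(40) / fahrenheit_to_kelvin(20)

print(first_increase, second_increase)
print(ratio_in_fahrenheit, round(ratio_in_kelvin, 3))
```

The raw numbers suggest that 40º is "twice" 20º, but on a scale with a true zero the ratio is only slightly above 1.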

Scales of measurement

- Categorical variables
  - Nominal scale
  - Ordinal scale: categories with order
- Quantitative variables
  - Interval scale: ordered categories of same size
  - Ratio scale

Scales of measurement

- Ratio scale: an interval scale with the additional feature of an absolute zero point.
  - Ratios of numbers DO reflect ratios of magnitude.
  - It is easy to get ratio and interval scales confused
    - Example: measuring your height with playing cards

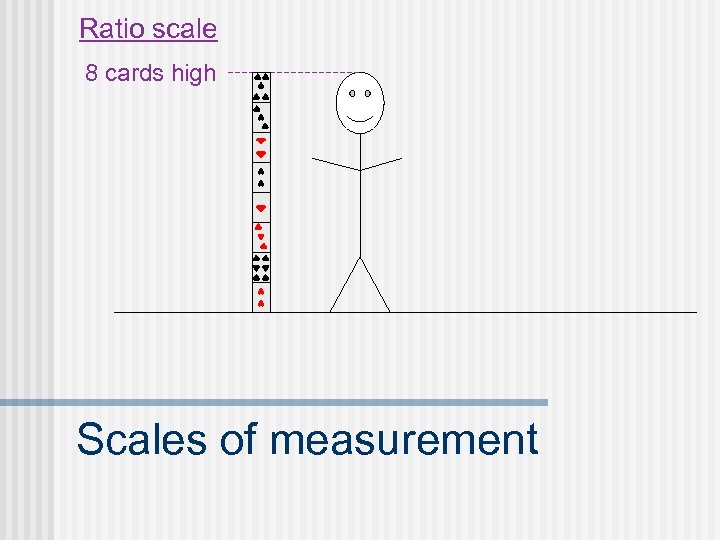

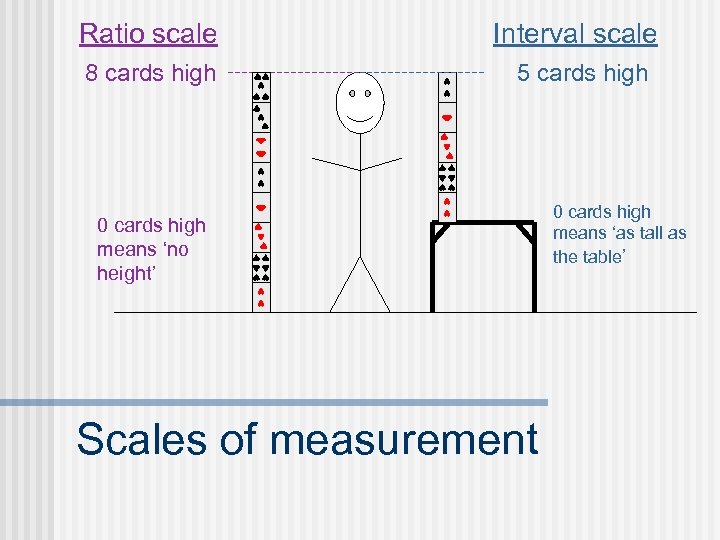

Scales of measurement

- Ratio scale: 8 cards high

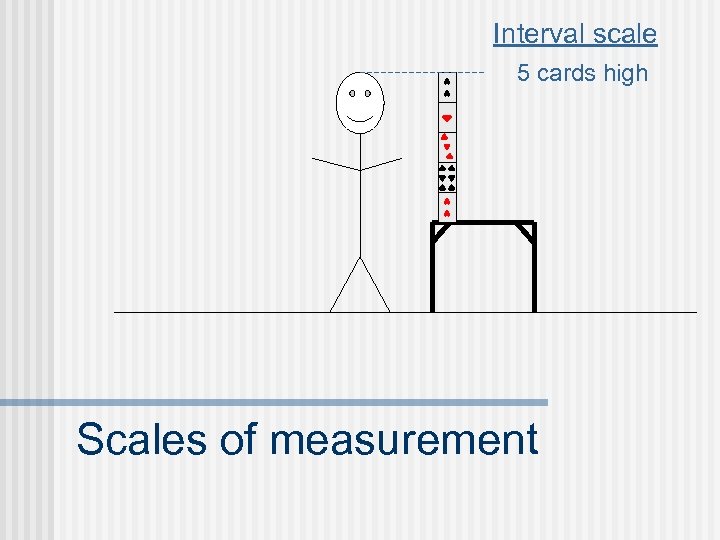

Scales of measurement

- Interval scale: 5 cards high

Scales of measurement

- Ratio scale: 8 cards high; 0 cards high means ‘no height’
- Interval scale: 5 cards high; 0 cards high means ‘as tall as the table’

Scales of measurement

- Categorical variables
  - Nominal scale
  - Ordinal scale: categories with order
- Quantitative variables
  - Interval scale
  - Ratio scale: ordered categories of same size with zero point
- “Best” scale?
  - Given a choice, usually prefer the highest level of measurement possible

Measuring your dependent variables

- Scales of measurement
- Errors in measurement
  - Reliability & validity
  - Sampling error

Measuring the true score

- Example: measuring intelligence?
  - How do we measure the construct?
  - How good is our measure?
  - How does it compare to other measures of the construct?
  - Is it a self-consistent measure?
- Internet IQ tests: Are they valid? (The Guardian, Nov. 2013)

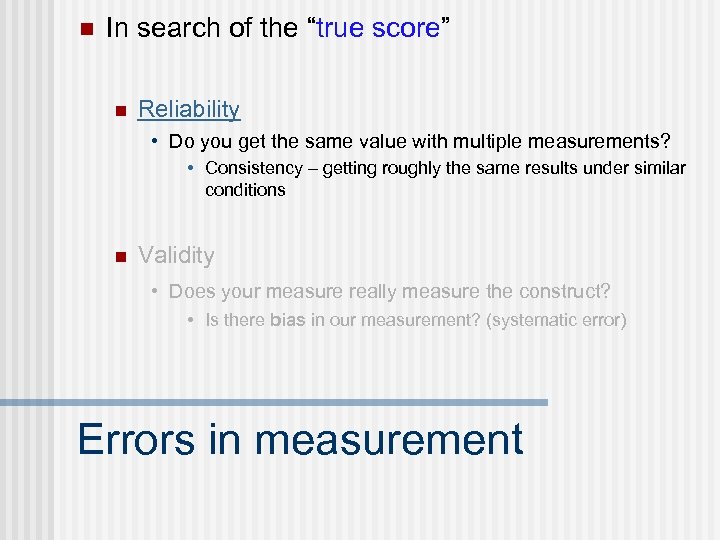

Errors in measurement

- In search of the “true score”
  - Reliability
    - Do you get the same value with multiple measurements?
    - Consistency: getting roughly the same results under similar conditions
  - Validity
    - Does your measure really measure the construct?
    - Is there bias in our measurement? (systematic error)

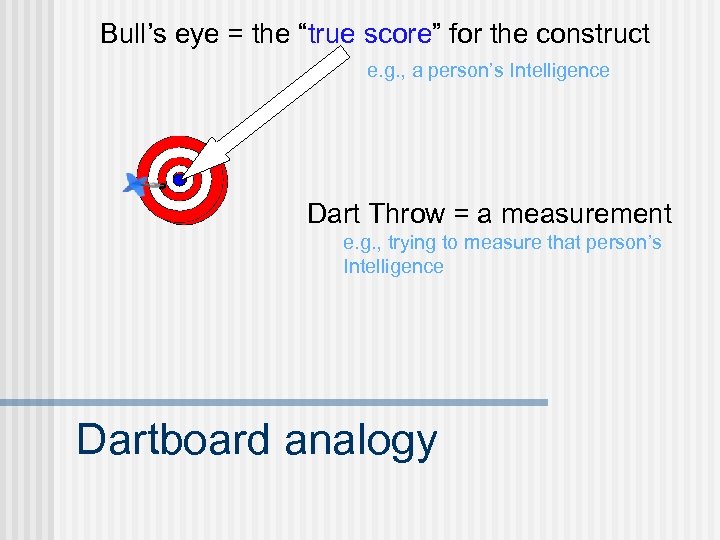

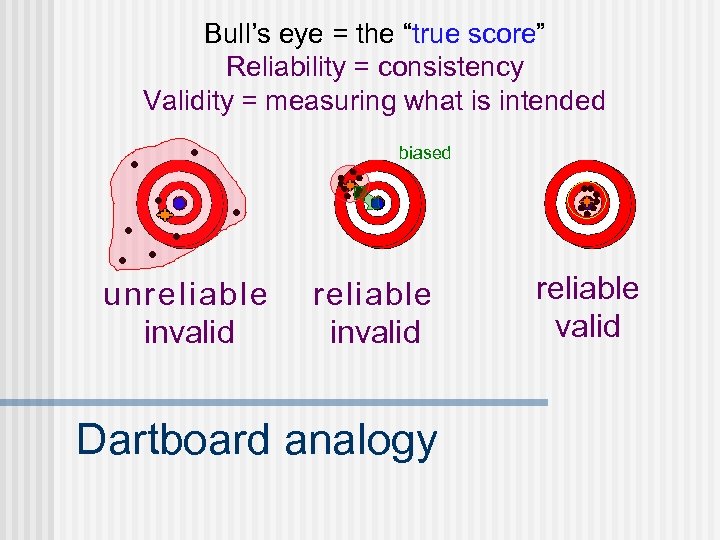

Dartboard analogy

- Bull’s eye = the “true score” for the construct (e.g., a person’s intelligence)
- Dart throw = a measurement (e.g., trying to measure that person’s intelligence)

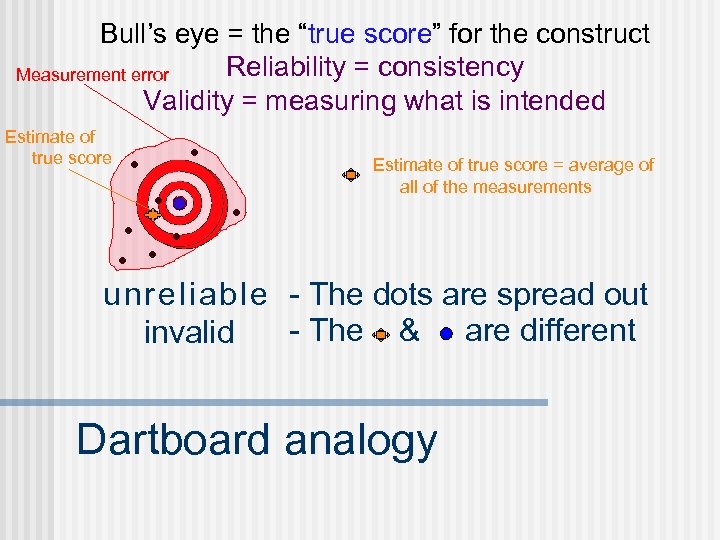

Dartboard analogy

- Bull’s eye = the “true score” for the construct
- Reliability = consistency
- Validity = measuring what is intended
- Measurement error: the distance between a measurement and the bull’s eye
- Estimate of true score = average of all of the measurements
- Unreliable: the dots are spread out
- Invalid: the bull’s eye and the estimate of the true score are different

Dartboard analogy

- Bull’s eye = the “true score”
- Reliability = consistency
- Validity = measuring what is intended
- Board labels: reliable and valid; biased; unreliable and invalid

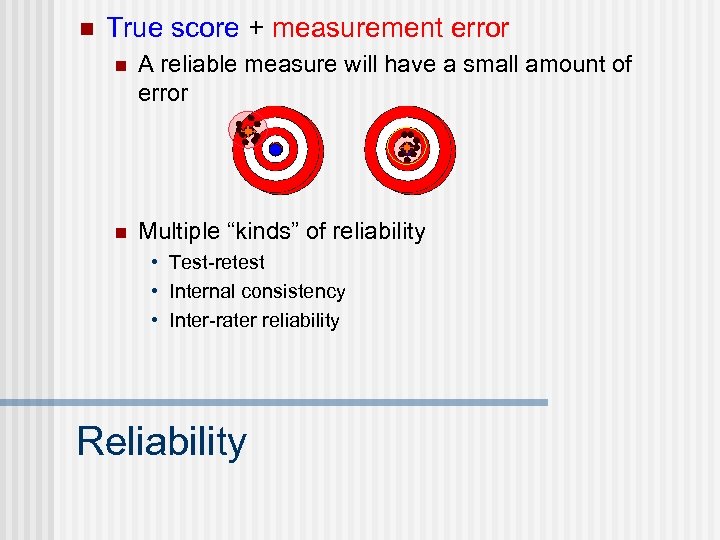

Reliability

- True score + measurement error
  - A reliable measure will have a small amount of error
  - Multiple “kinds” of reliability
    - Test-retest
    - Internal consistency
    - Inter-rater reliability

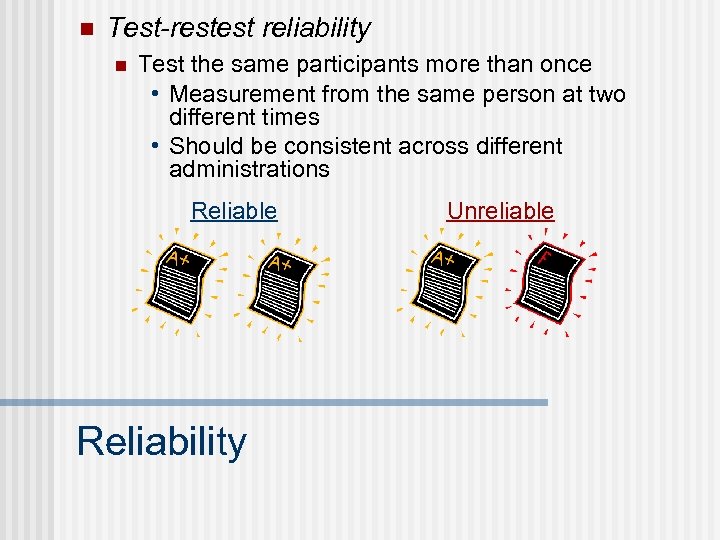

Reliability

- Test-retest reliability
  - Test the same participants more than once
    - Measurement from the same person at two different times
    - Should be consistent across different administrations
  - Figure labels: reliable vs. unreliable
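
One common way to put a number on test-retest consistency is to correlate the scores from the two administrations. The slides do not name a statistic, so using Pearson's r here is an assumption, and the scores are invented for illustration.

```python
from math import sqrt


def pearson_r(x, y):
    """Pearson correlation between two equal-length lists of scores."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    covariation = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    ss_x = sum((a - mean_x) ** 2 for a in x)
    ss_y = sum((b - mean_y) ** 2 for b in y)
    return covariation / sqrt(ss_x * ss_y)


# Hypothetical scores for the same five participants at two times.
time_1 = [10, 12, 15, 18, 20]
time_2 = [11, 12, 14, 19, 21]

print(round(pearson_r(time_1, time_2), 3))
```

A value near 1 would suggest that participants keep roughly the same relative standing across administrations; a value near 0 would suggest an unreliable measure.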

Reliability

- Internal consistency reliability
  - Multiple items testing the same construct
  - Extent to which scores on the items of a measure correlate with each other
    - Cronbach’s alpha (α)
    - Split-half reliability
      - Correlation of score on one half of the measure with the other half (randomly determined)
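
As a rough sketch of how Cronbach's alpha is computed, the function below uses the standard formula: α = k/(k-1) × (1 - sum of item variances / variance of total scores), where k is the number of items. The item scores are hypothetical and the function name is an assumption.

```python
from statistics import variance


def cronbach_alpha(item_scores):
    """item_scores: one list per item, each holding one score per participant."""
    k = len(item_scores)
    # Total score per participant, summing across items.
    totals = [sum(scores) for scores in zip(*item_scores)]
    item_variance_sum = sum(variance(item) for item in item_scores)
    return (k / (k - 1)) * (1 - item_variance_sum / variance(totals))


# Hypothetical ratings: 3 items answered by 5 participants.
items = [
    [4, 3, 5, 2, 4],
    [5, 3, 4, 2, 4],
    [4, 2, 5, 3, 5],
]

print(round(cronbach_alpha(items), 3))
```

When items rise and fall together across participants, the variance of the totals is large relative to the item variances and alpha approaches 1.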

Reliability

- Inter-rater reliability
  - At least 2 raters observe behavior
  - Extent to which raters agree in their observations
    - Are the raters consistent?
  - Requires some training in judgment
  - Example: “Funny” vs. “Not very funny” (4:56, 5:00)
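
Agreement between two raters can be quantified in several ways. The sketch below shows simple percent agreement and Cohen's kappa, which corrects for agreement expected by chance. Kappa is not mentioned in the slides and is added here as one common option; the ratings are hypothetical.

```python
from collections import Counter


def percent_agreement(rater_1, rater_2):
    """Proportion of observations on which the two raters gave the same code."""
    return sum(a == b for a, b in zip(rater_1, rater_2)) / len(rater_1)


def cohens_kappa(rater_1, rater_2):
    """Agreement corrected for chance: (observed - expected) / (1 - expected)."""
    n = len(rater_1)
    observed = percent_agreement(rater_1, rater_2)
    counts_1, counts_2 = Counter(rater_1), Counter(rater_2)
    categories = set(rater_1) | set(rater_2)
    expected = sum(counts_1[c] * counts_2[c] for c in categories) / (n * n)
    return (observed - expected) / (1 - expected)


# Hypothetical codes from two raters watching the same four clips.
rater_a = ["funny", "funny", "not funny", "not funny"]
rater_b = ["funny", "not funny", "not funny", "not funny"]

print(percent_agreement(rater_a, rater_b))
print(cohens_kappa(rater_a, rater_b))
```

Note that kappa is undefined when the expected agreement equals 1 (for example, when both raters use a single category for everything), so this sketch assumes at least some variation in the codes.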

Validity

- Does your measure really measure what it is supposed to measure (the construct)?
  - There are many “kinds” of validity

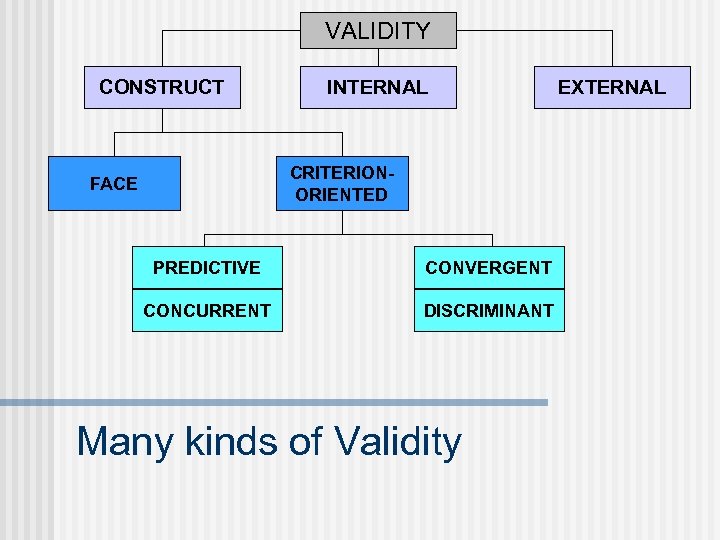

Many kinds of Validity

- Construct
- Internal
- External
- Face
- Criterion-oriented
- Predictive
- Convergent
- Concurrent
- Discriminant

Face Validity

- At the surface level, does it look as if the measure is testing the construct?
  - “This guy seems smart to me, and he got a high score on my IQ measure.”

Construct Validity

- Usually requires multiple studies: a large body of evidence that supports the claim that the measure really tests the construct

Internal Validity

- The precision of the results
  - Did the change in the DV result from the changes in the IV, or does it come from something else?

Threats to internal validity

- Experimenter bias & reactivity
- History: an event happens during the experiment
- Maturation: participants get older (and other changes)
- Selection: nonrandom selection may lead to biases
- Mortality (attrition): participants drop out or can’t continue
- Regression toward the mean: extreme performance is often followed by performance closer to the mean
  - The SI cover jinx | Madden Curse

External Validity

- Are experiments “real life” behavioral situations, or does the process of control put too much limitation on the “way things really work?”
  - Example: measuring driving while distracted

External Validity

- Variable representativeness
  - Relevant variables for the behavior studied along which the sample may vary
- Subject representativeness
  - Characteristics of sample and target population along these relevant variables
- Setting representativeness
  - Ecological validity: are the properties of the research setting similar to those outside the lab?

Measuring your dependent variables

- Scales of measurement
- Errors in measurement
  - Reliability & validity
  - Sampling error

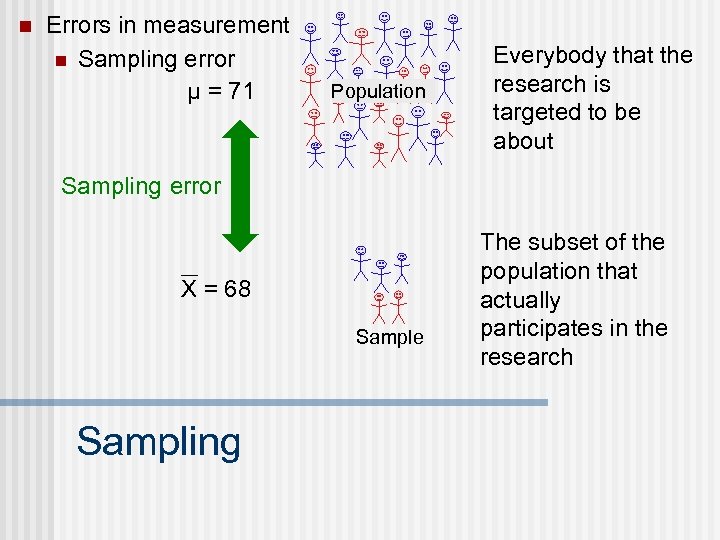

- Errors in measurement
  - Sampling error
- Population (μ = 71): everybody that the research is targeted to be about
- Sample (X̄ = 68): the subset of the population that actually participates in the research
- Sampling error: the difference between the sample value and the population value
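
The slide's numbers give a sampling error of 68 - 71 = -3. A short sketch shows the same calculation on a made-up population; the population values, seed, and function name are assumptions chosen so that the population mean is 71.

```python
import random
from statistics import mean


def sampling_error(sample, population):
    """Sample mean minus population mean."""
    return mean(sample) - mean(population)


# Hypothetical population: scores 51 through 91, which average to 71.
population = list(range(51, 92))

rng = random.Random(231)  # fixed seed so the sketch is repeatable
sample = rng.sample(population, 10)

print(mean(population), mean(sample), sampling_error(sample, population))
```

Drawing a different random sample would give a different sample mean, and so a different sampling error, which is what inferential statistics try to quantify.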

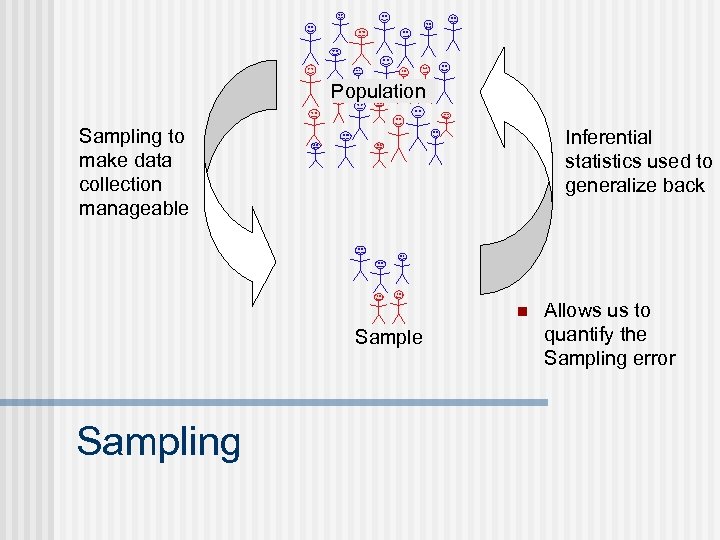

- Population
  - Sampling (to make data collection manageable) gives us the sample
- Sample
  - Inferential statistics are used to generalize back to the population
  - Allows us to quantify the sampling error

Sampling

- Goals of “good” sampling:
  - Maximize representativeness: to what extent do the characteristics of those in the sample reflect those in the population?
  - Reduce bias: a systematic difference between those in the sample and those in the population
- Key tool: random selection

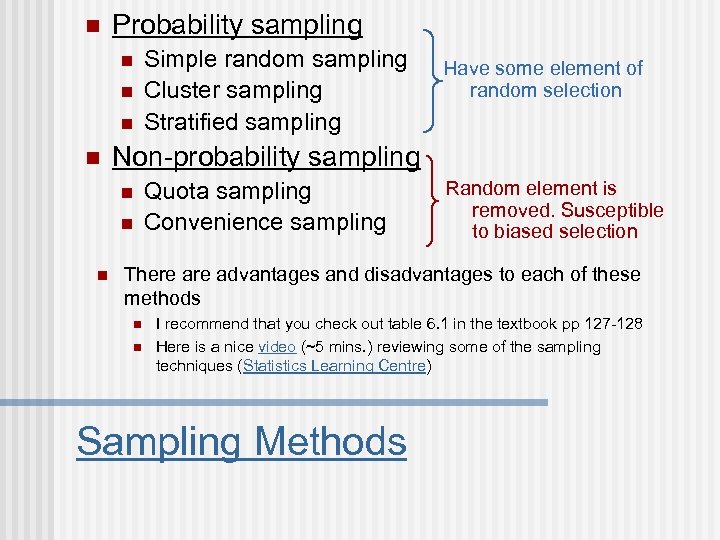

Sampling Methods

- Probability sampling: has some element of random selection
  - Simple random sampling
  - Cluster sampling
  - Stratified sampling
- Non-probability sampling: the random element is removed; susceptible to biased selection
  - Quota sampling
  - Convenience sampling
- There are advantages and disadvantages to each of these methods
  - I recommend that you check out Table 6.1 in the textbook, pp. 127-128
  - Here is a nice video (~5 mins.) reviewing some of the sampling techniques (Statistics Learning Centre)

Simple random sampling

- Every individual has an equal and independent chance of being selected from the population

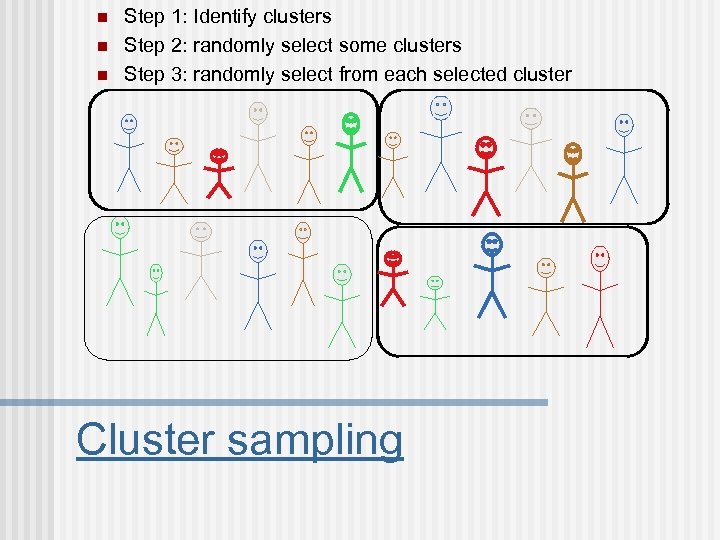

Cluster sampling

- Step 1: Identify clusters
- Step 2: Randomly select some clusters
- Step 3: Randomly select from each selected cluster

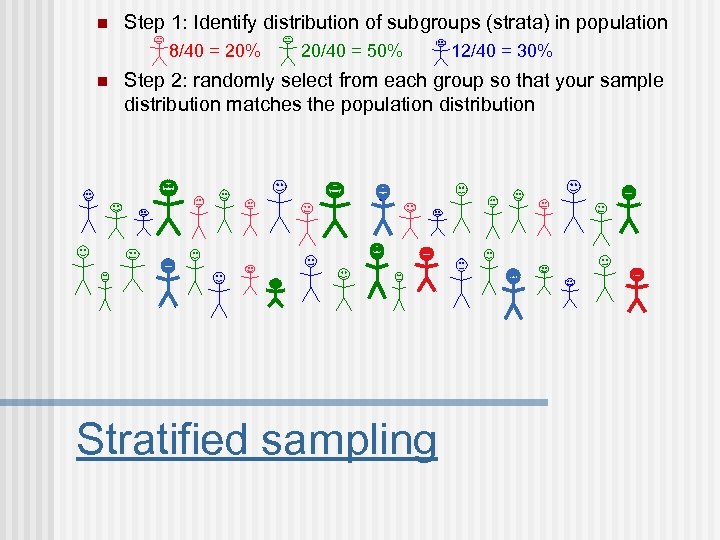

Stratified sampling

- Step 1: Identify the distribution of subgroups (strata) in the population
  - 8/40 = 20%
  - 20/40 = 50%
  - 12/40 = 30%
- Step 2: Randomly select from each group so that your sample distribution matches the population distribution
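
The two steps can be sketched as follows, using the slide's strata sizes (8, 20, and 12 out of 40). The stratum names, member IDs, and the `stratified_sample` helper are hypothetical. The quota for each stratum is its population share times the sample size, rounded.

```python
import random


def stratified_sample(strata, sample_size, seed=None):
    """Randomly select from each stratum in proportion to its population share."""
    rng = random.Random(seed)
    population_size = sum(len(members) for members in strata.values())
    sample = {}
    for name, members in strata.items():
        quota = round(sample_size * len(members) / population_size)
        sample[name] = rng.sample(members, quota)
    return sample


# Hypothetical population of 40 split into strata of 8, 20, and 12 members.
strata = {
    "A": [f"A{i}" for i in range(8)],
    "B": [f"B{i}" for i in range(20)],
    "C": [f"C{i}" for i in range(12)],
}

sample = stratified_sample(strata, sample_size=10, seed=231)
for name, members in sample.items():
    print(name, len(members), members)
```

With a sample of 10 the quotas come out to 2, 5, and 3, matching the 20%, 50%, and 30% shares. For sample sizes that do not divide evenly, simple rounding may leave the total slightly off, so a real study would need a rule for handling the remainder.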

Quota sampling

- Step 1: Identify the specific subgroups (strata)
- Step 2: Take from each group until you have the desired number of individuals (not using random selection)

Convenience sampling

- Use the participants who are easy to get (e.g., volunteer sign-up sheets, using a group that you already have access to, etc.)

Convenience sampling

- Use the participants who are easy to get (e.g., volunteer sign-up sheets, using a group that you already have access to, etc.)
  - College student bias (World of Psychology Blog)
  - Western culture bias
    - “Who are the people studied in behavioral science research? A recent analysis of the top journals in six sub-disciplines of psychology from 2003 to 2007 revealed that 68% of subjects came from the United States, and a full 96% of subjects were from Western industrialized countries, specifically those in North America and Europe, as well as Australia and Israel (Arnett 2008). The make-up of these samples appears to largely reflect the country of residence of the authors, as 73% of first authors were at American universities, and 99% were at universities in Western countries. This means that 96% of psychological samples come from countries with only 12% of the world's population.”
    - Henrich, J., Heine, S. J., & Norenzayan, A. (2010). The weirdest people in the world? Behavioral and Brain Sciences, 33(2-3), 61-83.

Variables

- Independent variables
- Dependent variables
  - Measurement
    - Scales of measurement
    - Errors in measurement
- Extraneous variables
  - Control variables
  - Random variables
  - Confound variables

Extraneous Variables

- Control variables
  - Holding things constant: controls for excessive random variability
- Random variables: may freely vary, to spread variability equally across all experimental conditions
  - Randomization
    - A procedure that assures that each level of an extraneous variable has an equal chance of occurring in all conditions of observation.
- Confound variables
  - Variables that haven’t been accounted for (manipulated, measured, randomized, controlled) that can impact changes in the dependent variable(s)
  - Co-vary with both the dependent AND an independent variable

Colors and words

- Divide into two groups:
  - men
  - women
- Instructions: Read aloud the COLOR that the words are presented in. When done, raise your hand.
- Women first. Men, please close your eyes.
- Okay, ready?

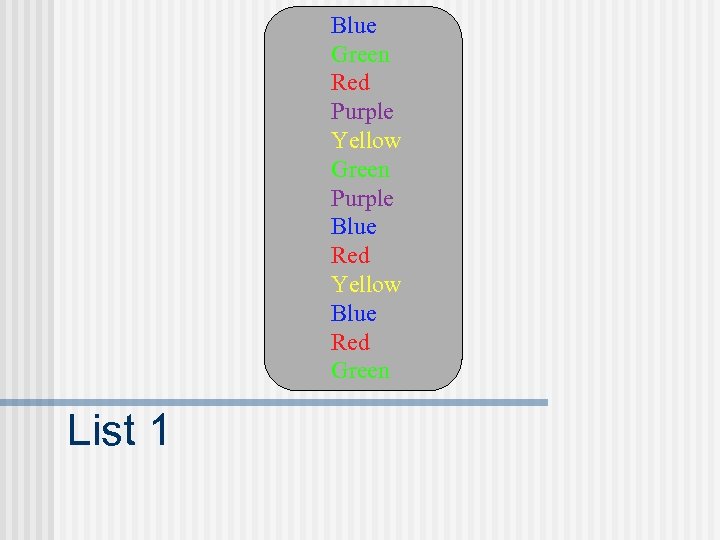

Blue Green Red Purple Yellow Green Purple Blue Red Yellow Blue Red Green List 1

- Okay, now it is the men’s turn.
- Remember the instructions: Read aloud the COLOR that the words are presented in. When done, raise your hand.
- Okay, ready?

Blue Green Red Purple Yellow Green Purple Blue Red Yellow Blue Red Green List 2

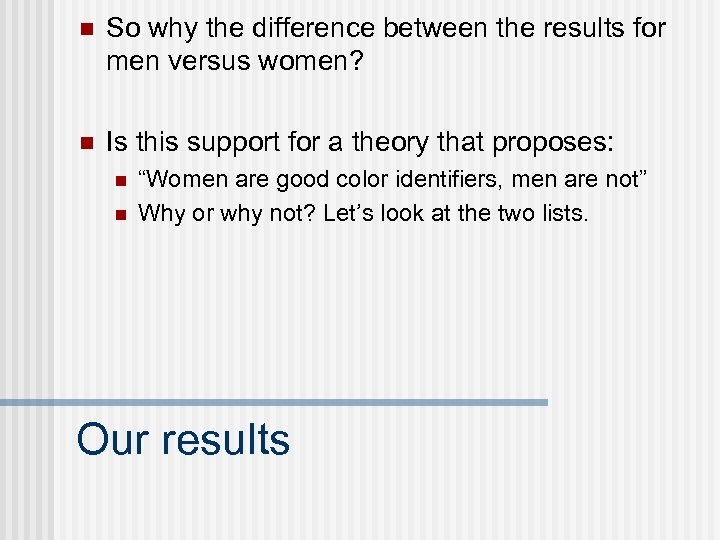

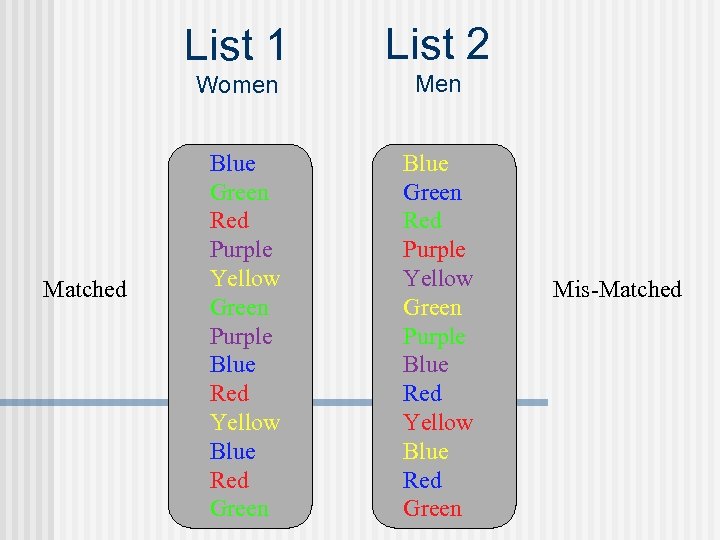

Our results

- So why the difference between the results for men versus women?
- Is this support for a theory that proposes:
  - “Women are good color identifiers, men are not”
  - Why or why not? Let’s look at the two lists.

- List 1 (women): matched
- List 2 (men): mis-matched
- Words on both lists: Blue Green Red Purple Yellow Green Purple Blue Red Yellow Blue Red Green

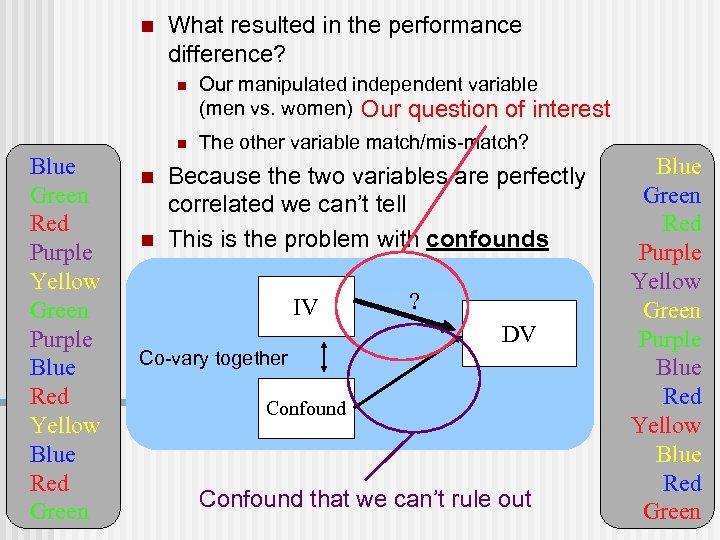

- What resulted in the performance difference?
  - Our manipulated independent variable (men vs. women)? This is our question of interest.
  - The other variable (match/mis-match)?
- Because the two variables are perfectly correlated, we can’t tell
- This is the problem with confounds: the confound and the IV co-vary together, so we can’t rule out the confound as the source of the change in the DV

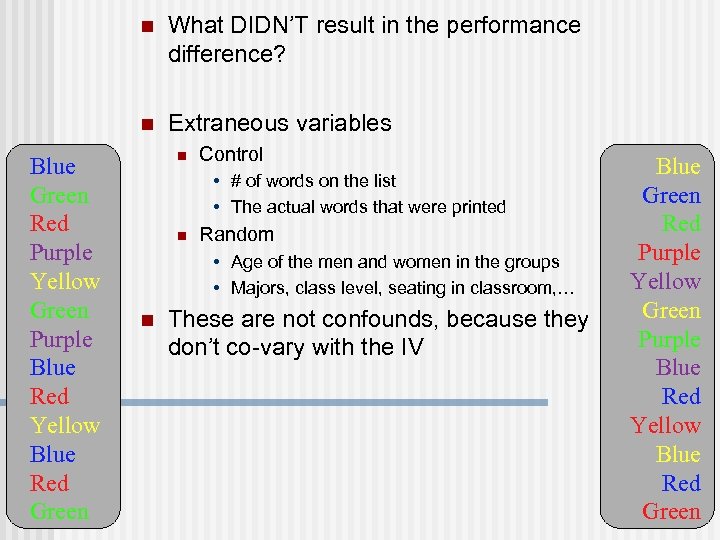

- What DIDN’T result in the performance difference?
  - Extraneous variables
    - Control
      - # of words on the list
      - The actual words that were printed
    - Random
      - Age of the men and women in the groups
      - Majors, class level, seating in classroom, …
  - These are not confounds, because they don’t co-vary with the IV

Experimental Control

- Our goal:
  - To test the possibility of a systematic relationship between the variability in our IV and how that affects the variability of our DV.
- Control is used to:
  - Minimize excessive variability
  - Reduce the potential of confounds (systematic variability not part of the research design)

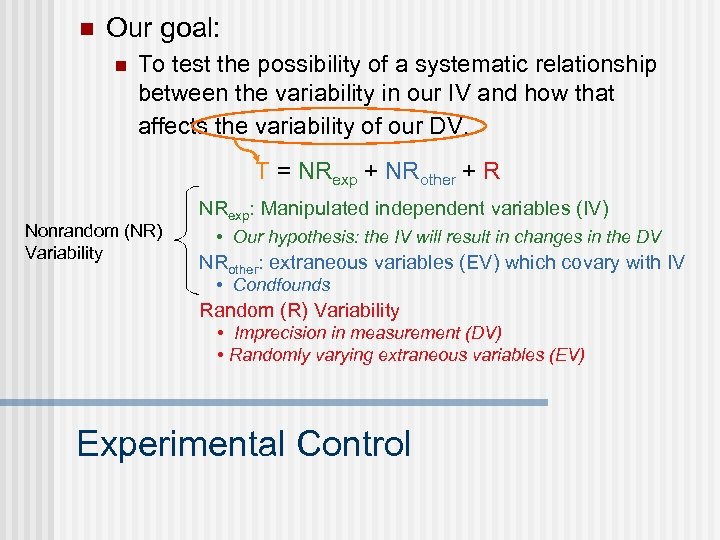

Experimental Control

- Our goal:
  - To test the possibility of a systematic relationship between the variability in our IV and how that affects the variability of our DV.
- T = NRexp + NRother + R
  - Nonrandom (NR) variability
    - NRexp: manipulated independent variables (IV)
      - Our hypothesis: the IV will result in changes in the DV
    - NRother: extraneous variables (EV) which covary with the IV
      - Confounds
  - Random (R) variability
    - Imprecision in measurement (DV)
    - Randomly varying extraneous variables (EV)

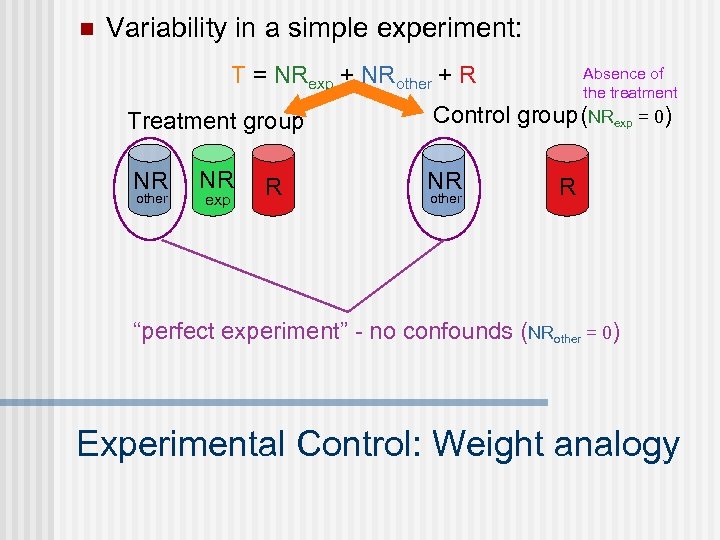

Experimental Control: Weight analogy

- Variability in a simple experiment: T = NRexp + NRother + R
  - Treatment group: NRexp + NRother + R
  - Control group (absence of the treatment, NRexp = 0): NRother + R
  - “Perfect experiment”: no confounds (NRother = 0)

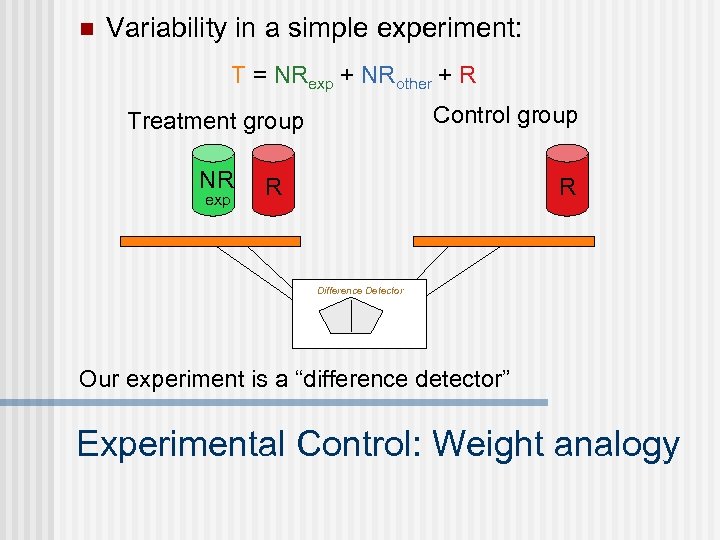

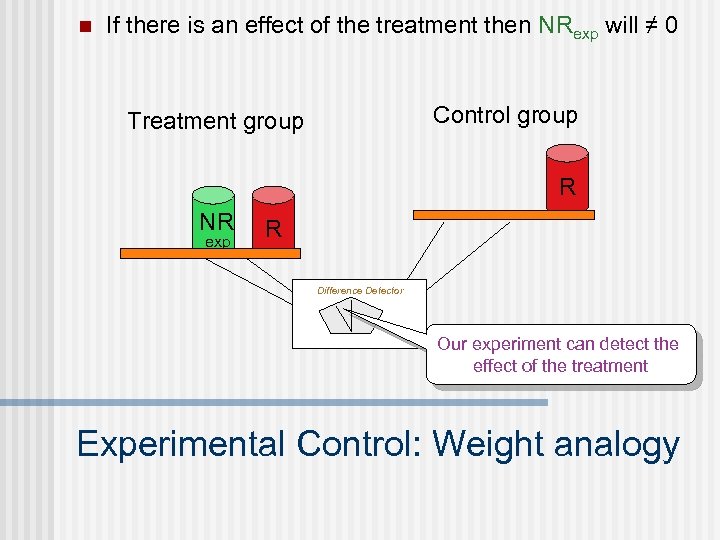

Experimental Control: Weight analogy

- Variability in a simple experiment: T = NRexp + NRother + R
  - Control group: R
  - Treatment group: NRexp + R
  - Our experiment is a “difference detector”

Experimental Control: Weight analogy

- If there is an effect of the treatment, then NRexp ≠ 0
  - Control group: R
  - Treatment group: NRexp + R
  - Our experiment can detect the effect of the treatment

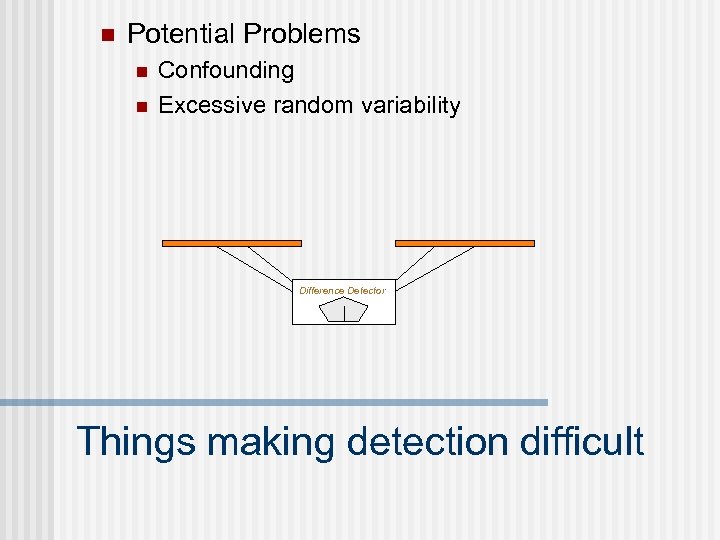

Things making detection difficult

- Potential problems
  - Confounding
  - Excessive random variability

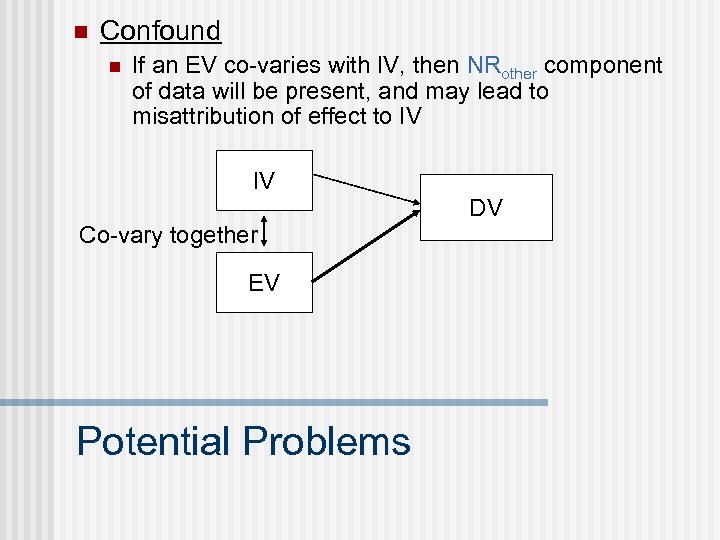

Potential Problems

- Confound
  - If an EV co-varies with the IV, then the NRother component of the data will be present, and may lead to misattribution of the effect to the IV
  - Diagram: the IV and the EV co-vary together, and both point to the DV

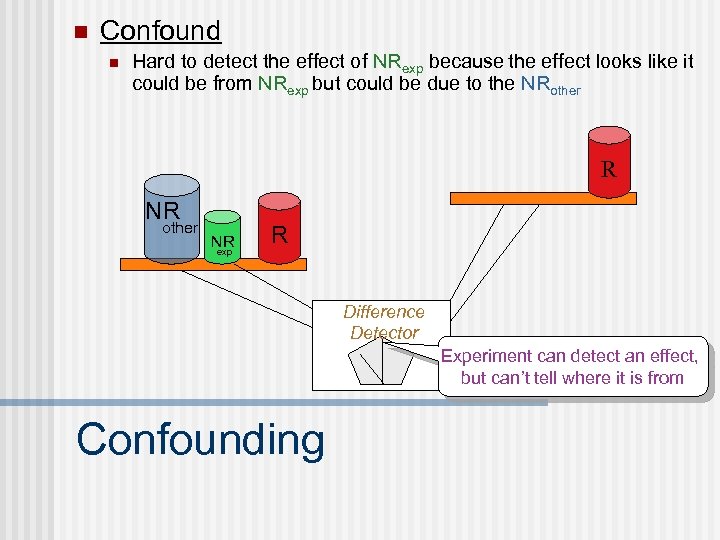

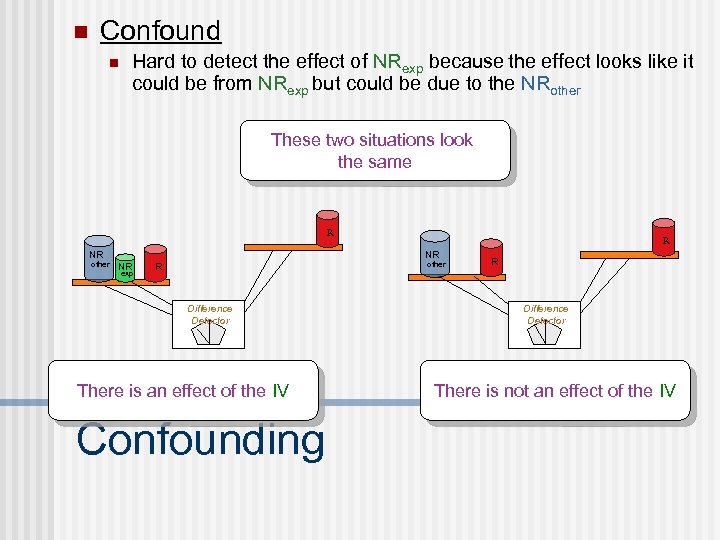

Confounding

- Confound
  - It is hard to detect the effect of NRexp because the effect looks like it could be from NRexp but could be due to NRother
  - Control group: R; treatment group: NRexp + NRother + R
  - The experiment can detect an effect, but can’t tell where it is from

Confounding

- Confound
  - It is hard to detect the effect of NRexp because the effect looks like it could be from NRexp but could be due to NRother
  - These two situations look the same to the difference detector:
    - There is an effect of the IV: the treatment group has NRexp + NRother + R
    - There is not an effect of the IV: the treatment group has only NRother + R
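
A tiny numeric sketch of T = NRexp + NRother + R shows why these two situations cannot be told apart. All of the numbers and the function names are made up for illustration; the point is only that the detector sees the total, not the separate components.

```python
def group_total(nr_exp, nr_other, r):
    """T = NRexp + NRother + R for one group."""
    return nr_exp + nr_other + r


def detected_difference(treatment, control):
    """What the 'difference detector' sees: treatment total minus control total."""
    return group_total(**treatment) - group_total(**control)


# Hypothetical components; only random variability in the control group.
control = {"nr_exp": 0, "nr_other": 0, "r": 4}

# Situation 1: a real IV effect plus a confound.
effect_plus_confound = {"nr_exp": 3, "nr_other": 2, "r": 4}
# Situation 2: no IV effect at all, only the confound.
confound_only = {"nr_exp": 0, "nr_other": 5, "r": 4}

print(detected_difference(effect_plus_confound, control))
print(detected_difference(confound_only, control))
```

Both situations produce the same detected difference, so the data alone cannot say whether the IV had any effect.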

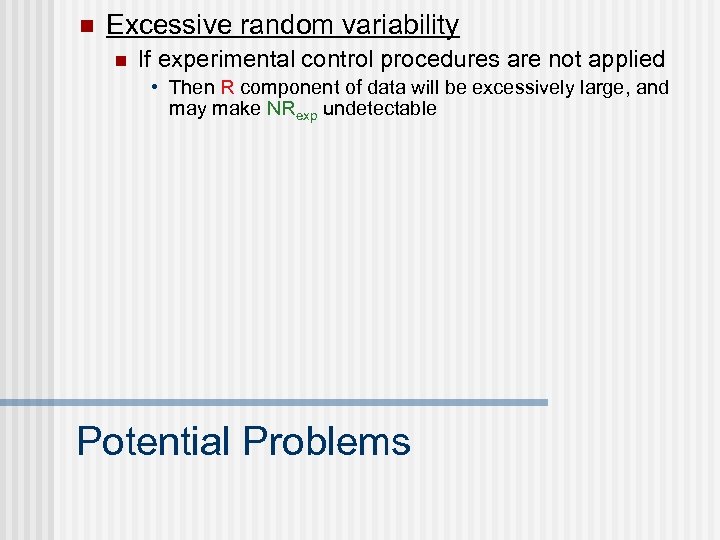

Potential Problems

- Excessive random variability
  - If experimental control procedures are not applied
    - Then the R component of the data will be excessively large, and may make NRexp undetectable

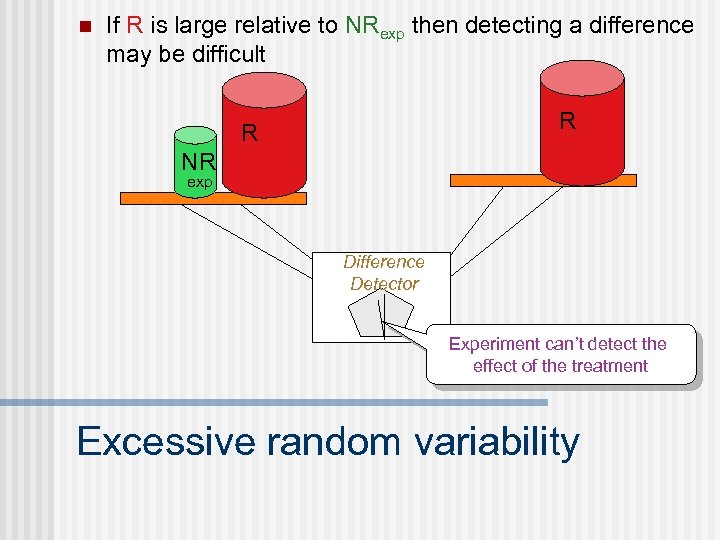

Excessive random variability

- If R is large relative to NRexp, then detecting a difference may be difficult
  - Control group: R; treatment group: NRexp + R
  - The experiment can’t detect the effect of the treatment

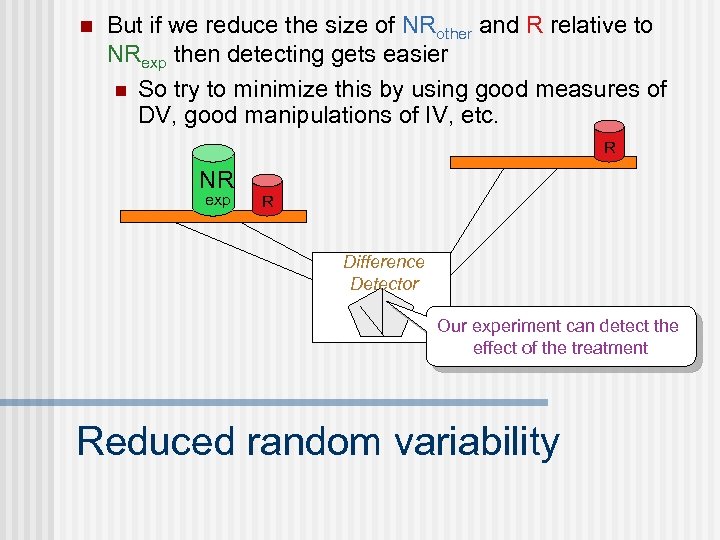

Reduced random variability

- But if we reduce the size of NRother and R relative to NRexp, then detecting the effect gets easier
  - So try to minimize them by using good measures of the DV, good manipulations of the IV, etc.
  - Control group: R; treatment group: NRexp + R
  - Our experiment can detect the effect of the treatment

Controlling Variability

- How do we introduce control?
  - Methods of experimental control
    - Constancy/Randomization
    - Comparison
    - Production

Methods of Controlling Variability

- Constancy/Randomization
  - If there is a variable that may be related to the DV that you can’t (or don’t want to) manipulate
    - Control variable: hold it constant
    - Random variable: let it vary randomly across all of the experimental conditions

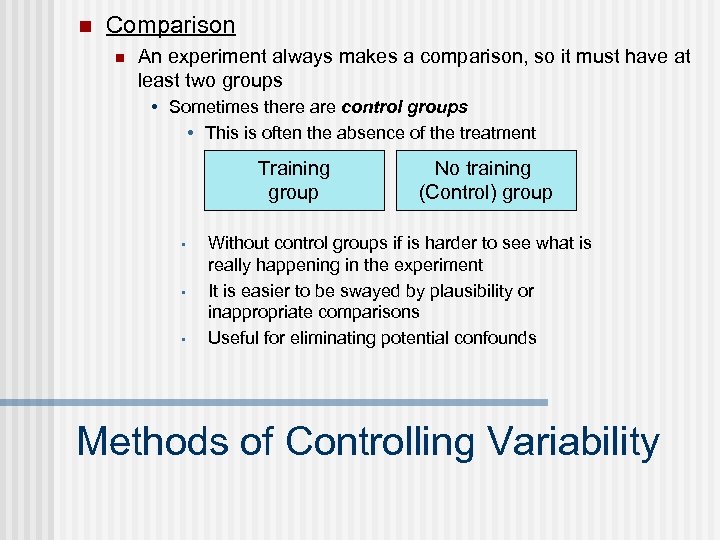

Methods of Controlling Variability

- Comparison
  - An experiment always makes a comparison, so it must have at least two groups
    - Sometimes there are control groups
    - This is often the absence of the treatment (e.g., training group vs. no training (control) group)
  - Without control groups, it is harder to see what is really happening in the experiment
  - It is easier to be swayed by plausibility or inappropriate comparisons
  - Useful for eliminating potential confounds

Methods of Controlling Variability

- Comparison
  - An experiment always makes a comparison, so it must have at least two groups
    - Sometimes there are control groups
    - This is often the absence of the treatment
    - Sometimes there is a range of values of the IV (e.g., 1 week of training group, 2 weeks of training group, 3 weeks of training group)

Methods of Controlling Variability

- Production
  - The experimenter selects the specific values of the independent variables (e.g., 1 week of training group, 2 weeks of training group, 3 weeks of training group)
    - Need to do this carefully
    - Suppose that you don’t find a difference in the DV across your different groups
      - Is this because the IV and DV aren’t related?
      - Or is it because your levels of the IV weren’t different enough?

Experimental designs

- So far we’ve covered a lot of the details about experiments generally
- Now let’s consider some specific experimental designs.
  - Some bad (but common) designs
  - Some good designs
    - 1 factor, two levels
    - 1 factor, multi-levels
    - Between & within factors
    - Factorial (more than 1 factor)

Poorly designed experiments

- Bad design example 1: Does standing close to somebody cause them to move?
  - “hmm… that’s an empirical question. Let’s see what happens if …”
  - So you stand close to people and see how long it takes before they move
  - Problem: no control group to establish the comparison (this design is sometimes called a “one-shot case study design”)

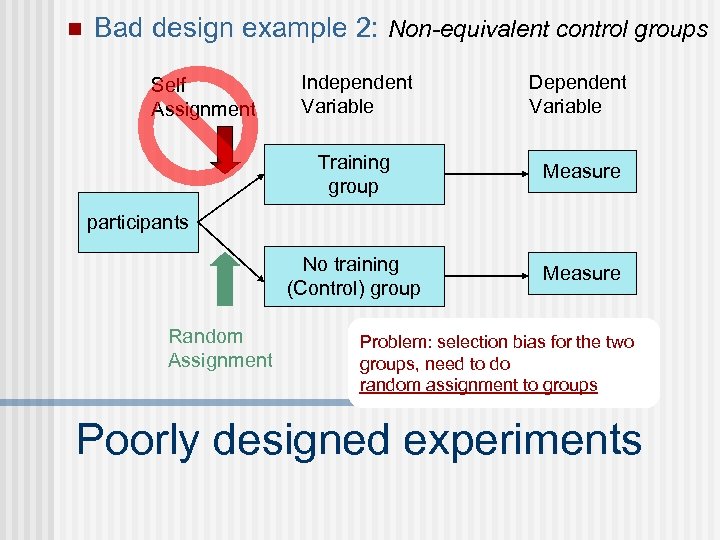

Poorly designed experiments

- Bad design example 2:
  - Testing the effectiveness of a stop-smoking relaxation program
  - The participants choose which group (relaxation or no program) to be in

Poorly designed experiments

- Bad design example 2: non-equivalent control groups
  - Design: participants, self assignment to the training group or the no training (control) group (independent variable), then measure (dependent variable)
  - Problem: selection bias for the two groups; need to do random assignment to groups instead of self assignment
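
Random assignment itself is simple to carry out. The sketch below shuffles a participant list and deals participants out to the conditions in turn; the participant IDs, condition names, seed, and helper name are all hypothetical.

```python
import random


def randomly_assign(participants, conditions, seed=None):
    """Shuffle participants, then deal them out to conditions in turn."""
    rng = random.Random(seed)
    shuffled = list(participants)
    rng.shuffle(shuffled)
    groups = {condition: [] for condition in conditions}
    for index, participant in enumerate(shuffled):
        groups[conditions[index % len(conditions)]].append(participant)
    return groups


# Hypothetical participant IDs P01 through P20.
participants = [f"P{i:02d}" for i in range(1, 21)]
groups = randomly_assign(participants, ["training", "no training"], seed=231)

for condition, members in groups.items():
    print(condition, members)
```

Because chance, not the participants, decides who ends up in which group, pre-existing differences should be spread across the groups rather than piling up in one of them.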

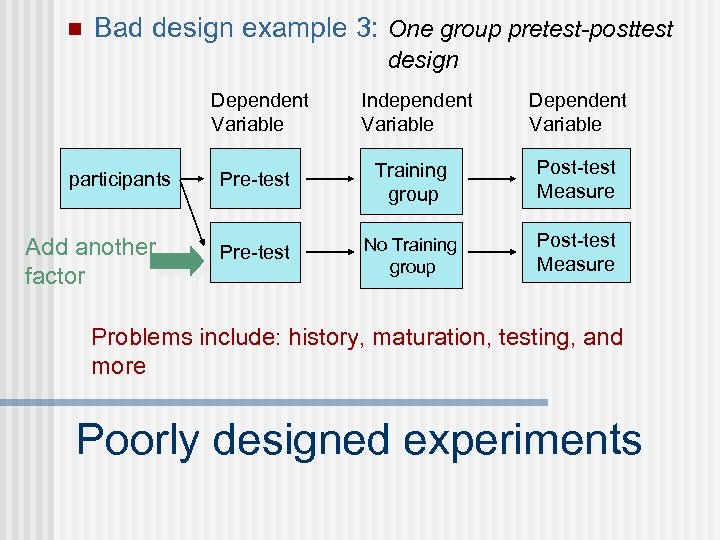

Poorly designed experiments

- Bad design example 3: Does a relaxation program decrease the urge to smoke?
  - Pretest desire level, give relaxation program, posttest desire to smoke

Poorly designed experiments

- Bad design example 3: one-group pretest-posttest design
  - Design: participants, pre-test measure (dependent variable), training group (independent variable), post-test measure (dependent variable)
  - Problems include: history, maturation, testing, and more
  - Add another factor: a no training group that also gets the pre-test and post-test measures

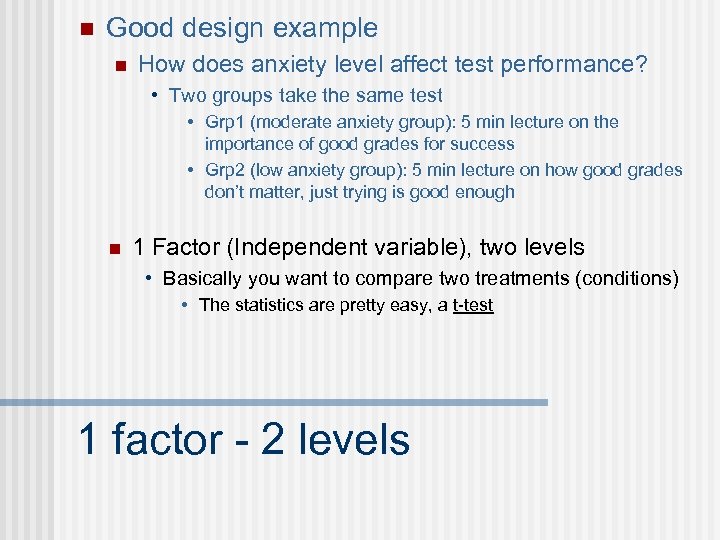

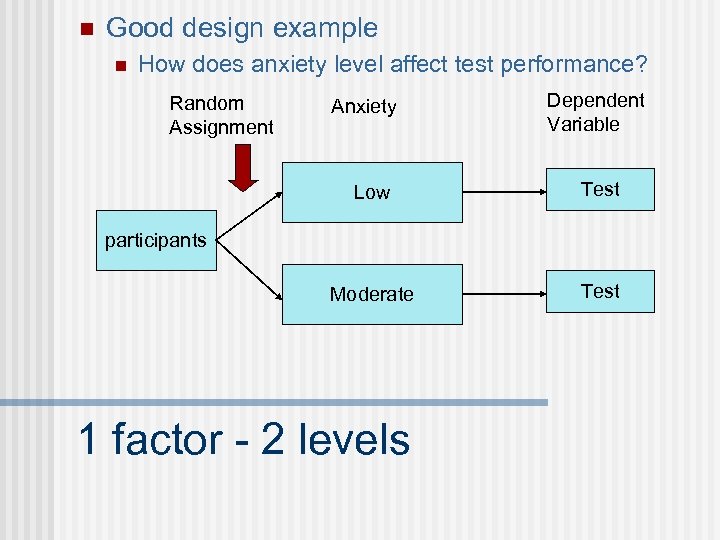

1 factor - 2 levels

- Good design example
  - How does anxiety level affect test performance?
    - Two groups take the same test
      - Grp 1 (moderate anxiety group): 5 min lecture on the importance of good grades for success
      - Grp 2 (low anxiety group): 5 min lecture on how good grades don’t matter, just trying is good enough
  - 1 factor (independent variable), two levels
    - Basically you want to compare two treatments (conditions)
    - The statistics are pretty easy: a t-test

1 factor - 2 levels

- Good design example
  - How does anxiety level affect test performance?
  - Design: participants, random assignment to anxiety level (low or moderate), then test (dependent variable)

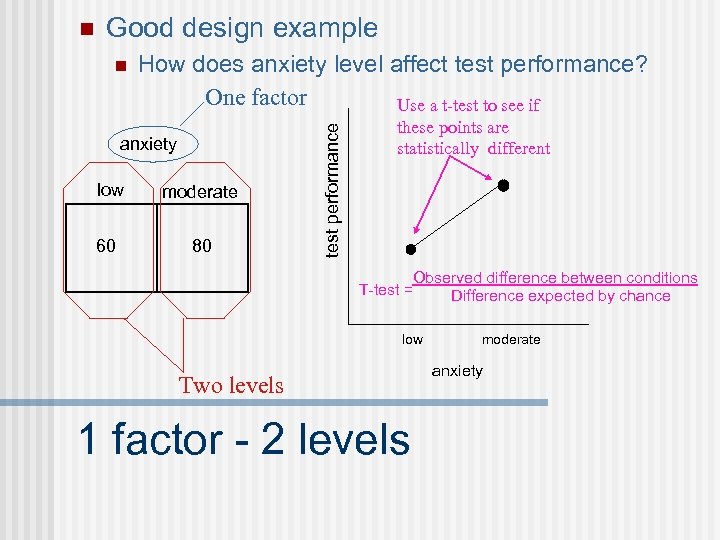

1 factor - 2 levels

- Good design example
  - How does anxiety level affect test performance?
    - One factor (anxiety), two levels (low, moderate)
    - Test performance: low = 60, moderate = 80
    - Use a t-test to see if these points are statistically different
    - T-test = observed difference between conditions / difference expected by chance
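
The ratio "observed difference / difference expected by chance" can be sketched as an independent-samples t statistic. The slides do not say which version of the t-test is used, so the pooled-variance (Student's) form is an assumption here, and the individual scores are invented so that the group means match the 60 and 80 in the example.

```python
from math import sqrt
from statistics import mean, variance


def independent_t(group_a, group_b):
    """Student's t: difference in means divided by its pooled standard error."""
    n_a, n_b = len(group_a), len(group_b)
    observed_difference = mean(group_a) - mean(group_b)
    pooled_variance = (
        (n_a - 1) * variance(group_a) + (n_b - 1) * variance(group_b)
    ) / (n_a + n_b - 2)
    # Standard error: the size of difference expected by chance alone.
    chance_difference = sqrt(pooled_variance * (1 / n_a + 1 / n_b))
    return observed_difference / chance_difference


# Hypothetical test scores with means of 60 (low) and 80 (moderate).
low_anxiety = [55, 60, 65]
moderate_anxiety = [75, 80, 85]

t = independent_t(moderate_anxiety, low_anxiety)
print(round(t, 3))
```

The larger the observed difference is relative to the difference expected by chance, the larger t becomes; deciding whether it is statistically significant additionally requires the degrees of freedom (here n_a + n_b - 2) and a chosen alpha level.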

1 factor - 2 levels

- Advantages:
  - Simple, relatively easy to interpret the results
  - Is the independent variable worth studying?
    - If no effect, then usually don’t bother with a more complex design
  - Sometimes two levels is all you need
    - One theory predicts one pattern and another predicts a different pattern

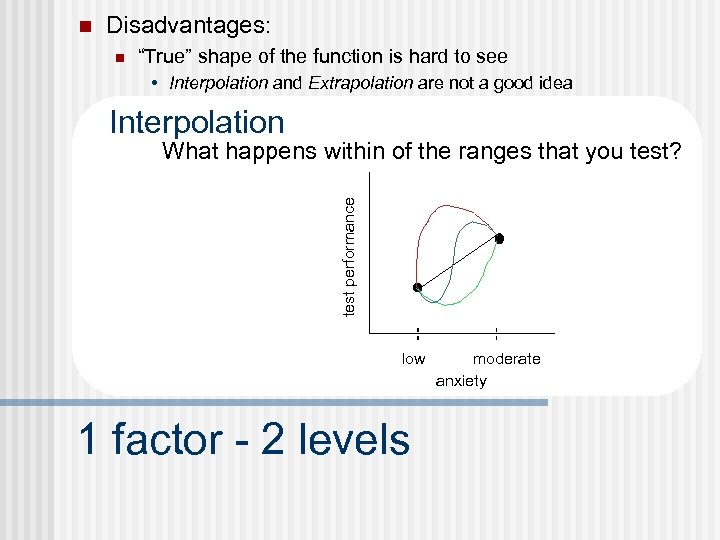

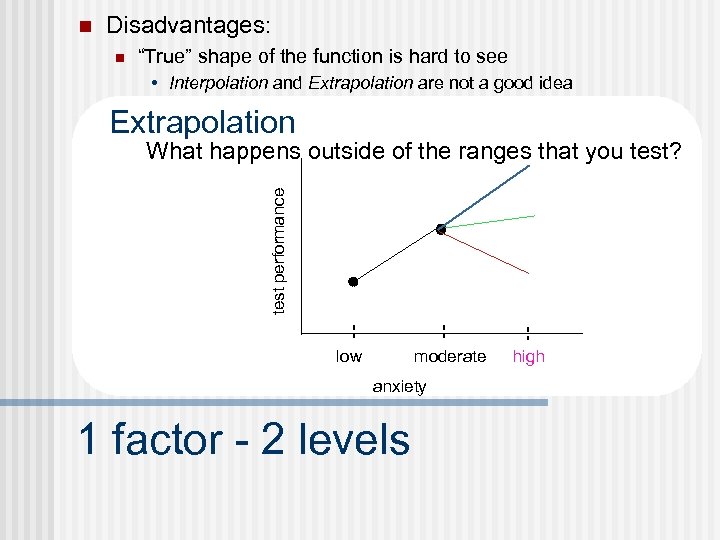

1 factor - 2 levels

- Disadvantages:
  - “True” shape of the function is hard to see
    - Interpolation and extrapolation are not a good idea
  - Interpolation: what happens within the ranges that you test?
  - Figure: test performance as a function of anxiety (low, moderate)

1 factor - 2 levels

- Disadvantages:
  - “True” shape of the function is hard to see
    - Interpolation and extrapolation are not a good idea
  - Extrapolation: what happens outside of the ranges that you test?
  - Figure: test performance as a function of anxiety (low, moderate, high)

1 Factor - multilevel experiments

- For more complex theories you will typically need more complex designs (more than two levels of one IV)
- 1 factor, more than two levels
  - Basically you want to compare more than two conditions
  - The statistics are a little more difficult: an ANOVA (Analysis of Variance)

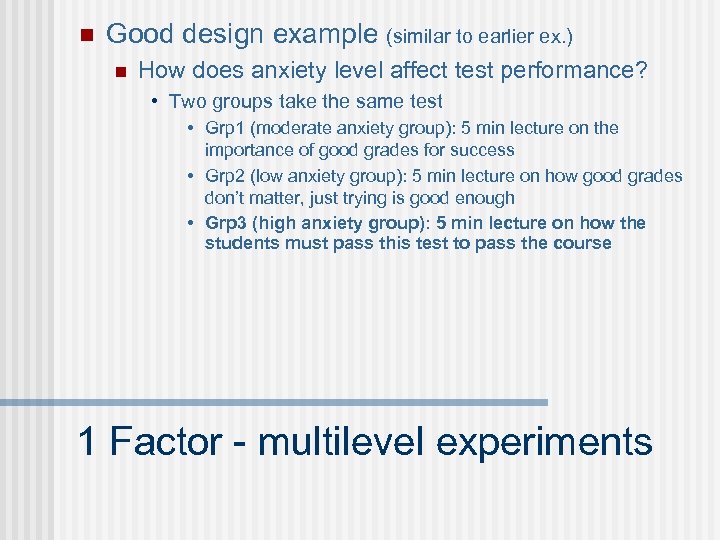

1 Factor - multilevel experiments

- Good design example (similar to earlier ex.)
  - How does anxiety level affect test performance?
    - Three groups take the same test
      - Grp 1 (moderate anxiety group): 5 min lecture on the importance of good grades for success
      - Grp 2 (low anxiety group): 5 min lecture on how good grades don’t matter, just trying is good enough
      - Grp 3 (high anxiety group): 5 min lecture on how the students must pass this test to pass the course

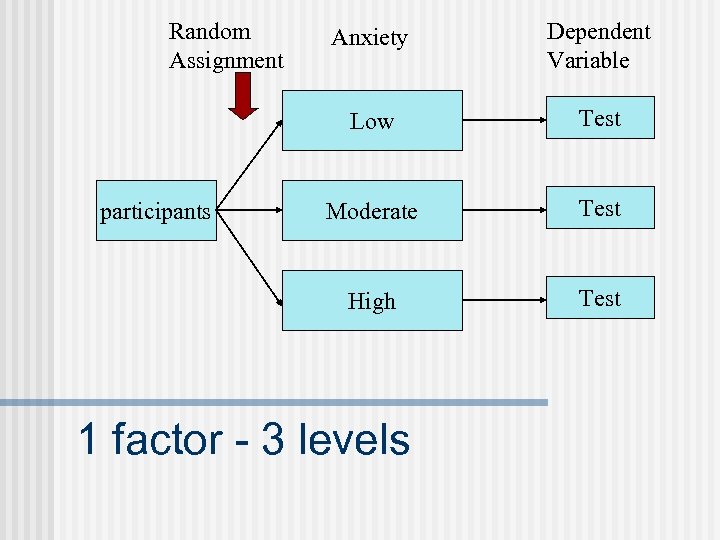

1 factor - 3 levels

- Design: participants, random assignment to anxiety level (low, moderate, or high), then test (dependent variable)

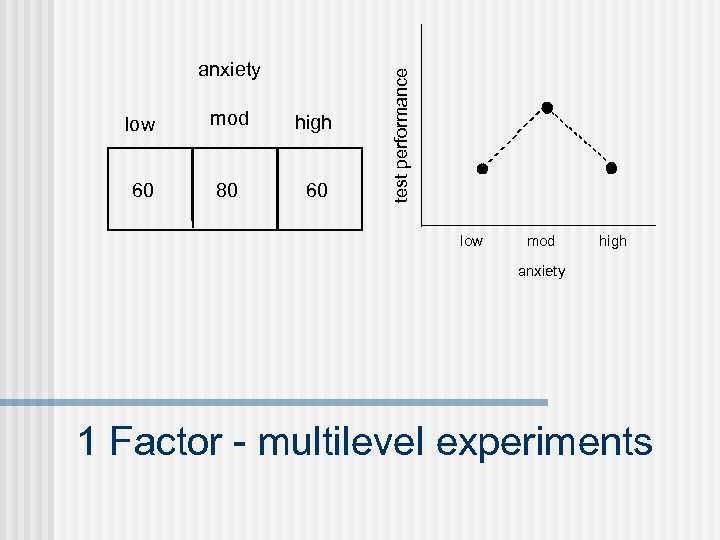

1 Factor - multilevel experiments

- Figure: test performance as a function of anxiety
  - low = 60, mod = 80, high = 60

1 Factor - multilevel experiments

- Advantages
  - Gives a better picture of the relationship (function)
  - Generally, the more levels you have, the less you have to worry about your range of the independent variable

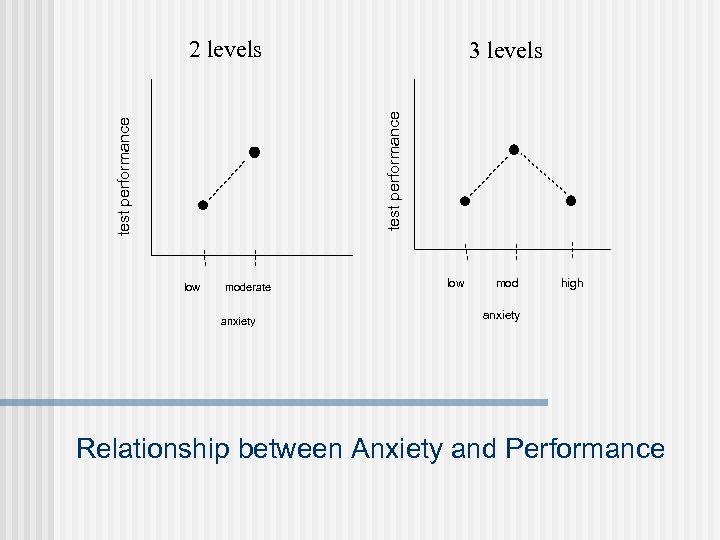

Relationship between Anxiety and Performance

- Figure: test performance as a function of anxiety, plotted with 2 levels (low, moderate) and with 3 levels (low, mod, high)

1 Factor - multilevel experiments

- Disadvantages
  - Needs more resources (participants and/or stimuli)
  - Requires more complex statistical analysis (analysis of variance and pair-wise comparisons)

Pair-wise comparisons

- The ANOVA just tells you that not all of the groups are equal.
  - If this is your conclusion (you get a “significant ANOVA”), then you should do further tests to see where the differences are
    - High vs. Low
    - High vs. Moderate
    - Low vs. Moderate
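
Listing the pair-wise comparisons is a matter of taking every pair of groups. The sketch below uses the group means from the anxiety example (low = 60, moderate = 80, high = 60); the dictionary layout is an assumption, and the code only enumerates the pairs and their mean differences rather than running the follow-up tests themselves.

```python
from itertools import combinations

# Group means from the anxiety example; the dict layout is an assumption.
group_means = {"low": 60, "moderate": 80, "high": 60}

# Every unordered pair of groups, with the difference between their means.
pairwise_differences = {
    (first, second): group_means[first] - group_means[second]
    for first, second in combinations(group_means, 2)
}

for (first, second), difference in pairwise_differences.items():
    print(f"{first} vs. {second}: mean difference = {difference}")
```

With k groups there are k(k - 1)/2 pairs, so three groups give three comparisons. Each pair would then be tested on its own (for example with a t-test) to see where the differences are.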
