54c4edc6c55fd2ff61617dcd272dd0e2.ppt

- Number of slides: 128

Evolutionary Computation
Byoung-Tak Zhang, School of Computer Science and Engineering, Seoul National University
E-mail: btzhang@cse.snu.ac.kr
http://scai.snu.ac.kr./~btzhang/
This material is also available online at http://scai.snu.ac.kr/
(c) 2000 Byoung-Tak Zhang, SNU CSE

Outline
1. Basic Concepts
2. Theoretical Backgrounds
3. Applications
4. Current Issues
5. References and URLs

1. Basic Concepts

Charles Darwin (1859): “Owing to this struggle for life, any variation, however slight and from whatever cause proceeding, if it be in any degree profitable to an individual of any species, in its infinitely complex relations to other organic beings and to external nature, will tend to the preservation of that individual, and will generally be inherited by its offspring.”

Evolutionary Algorithms
- A Computational Model Inspired by Natural Evolution and Genetics
- Proved Useful for Search, Machine Learning and Optimization
- Population-Based Search (vs. Point-Based Search)
- Probabilistic Search (vs. Deterministic Search)
- Collective Learning (vs. Individual Learning)
- Balance of Exploration (Global Search) and Exploitation (Local Search)

Biological Terminology
- Gene
  - Functional entity that codes for a specific feature, e.g. eye color
  - Set of possible alleles
- Allele
  - Value of a gene, e.g. blue, green, brown
  - Codes for a specific variation of the gene/feature
- Locus
  - Position of a gene on the chromosome
- Genome
  - Set of all genes that define a species
  - The genome of a specific individual is called genotype
  - The genome of a living organism is composed of several chromosomes
- Population
  - Set of competing genomes/individuals

Analogy to Evolutionary Biology
- Individual (Chromosome) = Possible Solution
- Population = A Collection of Possible Solutions
- Fitness = Goodness of Solutions
- Selection (Reproduction) = Survival of the Fittest
- Crossover = Recombination of Partial Solutions
- Mutation = Alteration of an Existing Solution

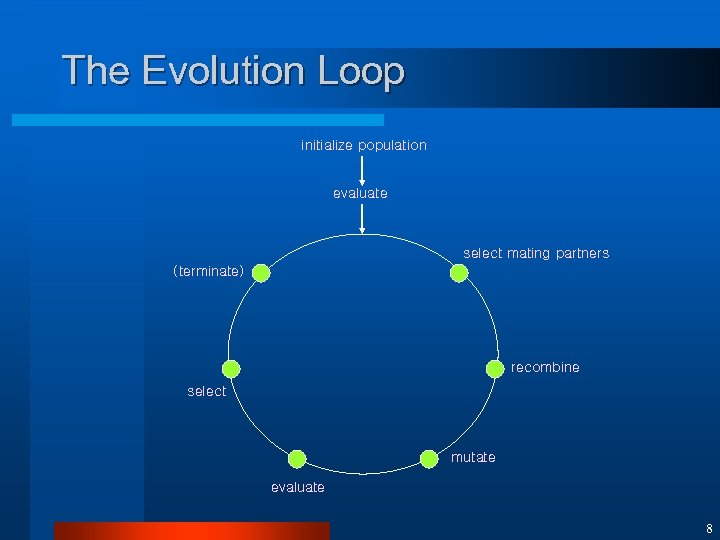

The Evolution Loop: initialize population, evaluate, then repeat until termination: select mating partners, recombine, mutate, evaluate, select.

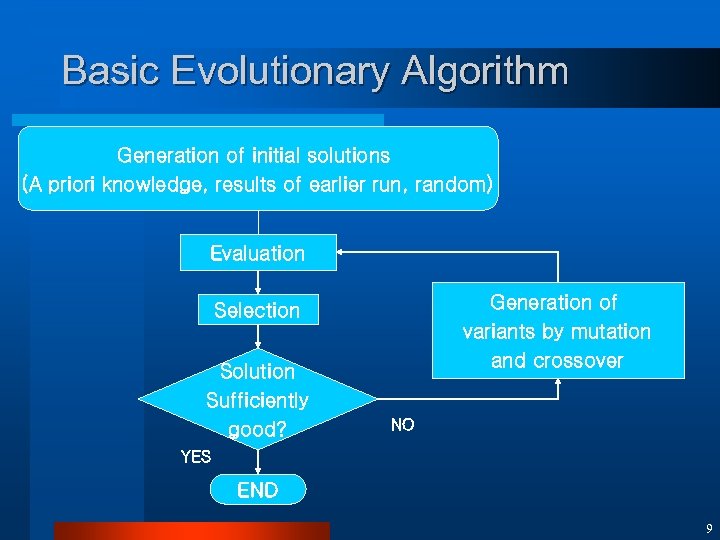

Basic Evolutionary Algorithm (flowchart)
- Generation of initial solutions (a priori knowledge, results of earlier run, random)
- Evaluation
- Generation of variants by mutation and crossover
- Selection
- Solution sufficiently good? YES: END. NO: continue the loop.

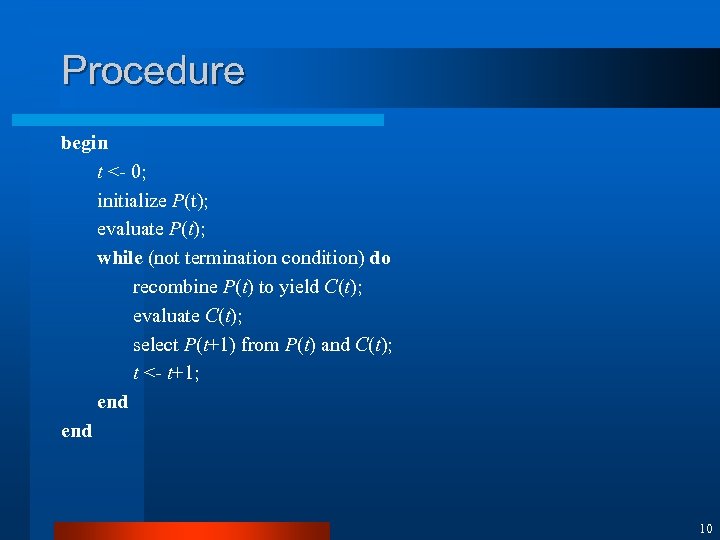

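Procedure
begin
&nbsp;&nbsp;t <- 0;
&nbsp;&nbsp;initialize P(t);
&nbsp;&nbsp;evaluate P(t);
&nbsp;&nbsp;while (not termination condition) do
&nbsp;&nbsp;&nbsp;&nbsp;recombine P(t) to yield C(t);
&nbsp;&nbsp;&nbsp;&nbsp;evaluate C(t);
&nbsp;&nbsp;&nbsp;&nbsp;select P(t+1) from P(t) and C(t);
&nbsp;&nbsp;&nbsp;&nbsp;t <- t+1;
end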
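
The procedure can be sketched in Python as follows. This is only an illustrative sketch, not the author's implementation: the bitstring encoding, the one-point crossover, the per-bit mutation rate of 1/length, the elitist "keep the best of parents and children" selection, and every name and default value (`evolve`, `pop_size`, `generations`, `seed`) are assumptions chosen for the example. Fitness is simply the number of ones in the string.

```python
import random

def evolve(fitness, length=20, pop_size=30, generations=60, seed=0):
    """Minimal evolutionary loop over bitstrings (hypothetical parameters)."""
    rng = random.Random(seed)
    # initialize P(0) with random bitstrings
    pop = [[rng.randint(0, 1) for _ in range(length)] for _ in range(pop_size)]
    for _ in range(generations):
        children = []
        for _ in range(pop_size):
            # recombine P(t) to yield C(t): one-point crossover of two parents
            a, b = rng.sample(pop, 2)
            cut = rng.randrange(1, length)
            child = a[:cut] + b[cut:]
            # mutation (an assumption; the procedure above only names recombination)
            child = [bit ^ 1 if rng.random() < 1.0 / length else bit for bit in child]
            children.append(child)
        # select P(t+1) from P(t) and C(t): keep the pop_size fittest
        pop = sorted(pop + children, key=fitness, reverse=True)[:pop_size]
    return pop[0]

best = evolve(fitness=sum)  # fitness = number of ones
print(best, sum(best))
```

Because selection keeps the best of parents and children, the best fitness in the population never decreases from one generation to the next.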

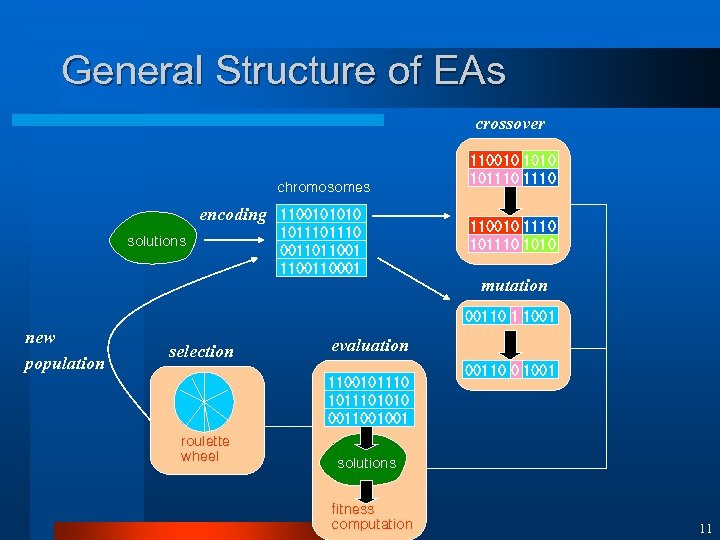

General Structure of EAs (diagram): solutions are encoded as chromosomes (bitstrings such as 1100101010); crossover and mutation produce new chromosomes; these are decoded into solutions and scored by fitness computation (evaluation); roulette wheel selection forms the new population.

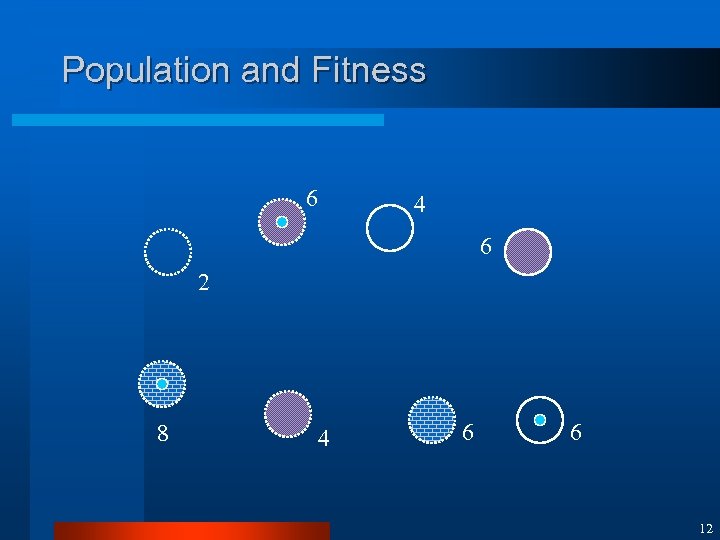

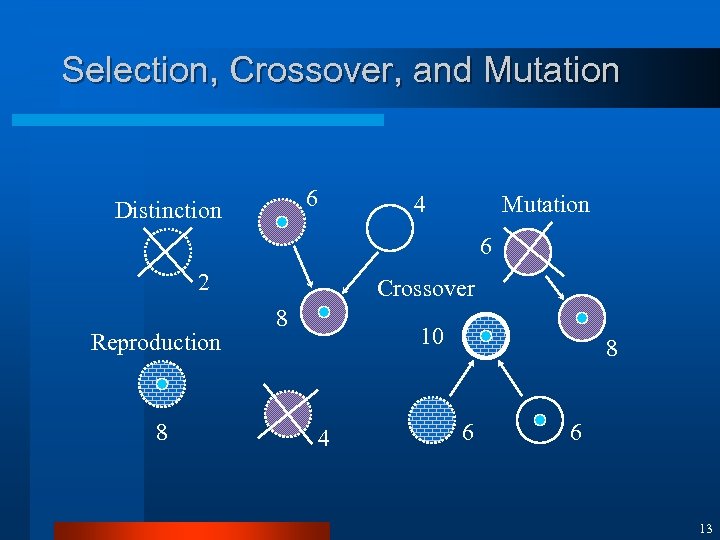

Population and Fitness (figure: individuals labeled with numeric fitness values)

Selection, Crossover, and Mutation (figure labels: Distinction, Mutation, Reproduction, Crossover)

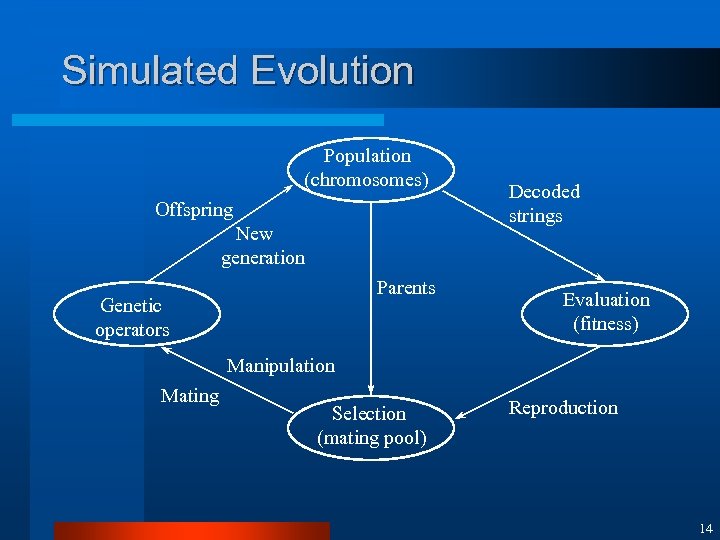

Simulated Evolution (diagram labels: Population (chromosomes), Selection (mating pool), Parents, Mating, Genetic operators, Manipulation, Offspring, Reproduction, New generation, Decoded strings, Evaluation (fitness))

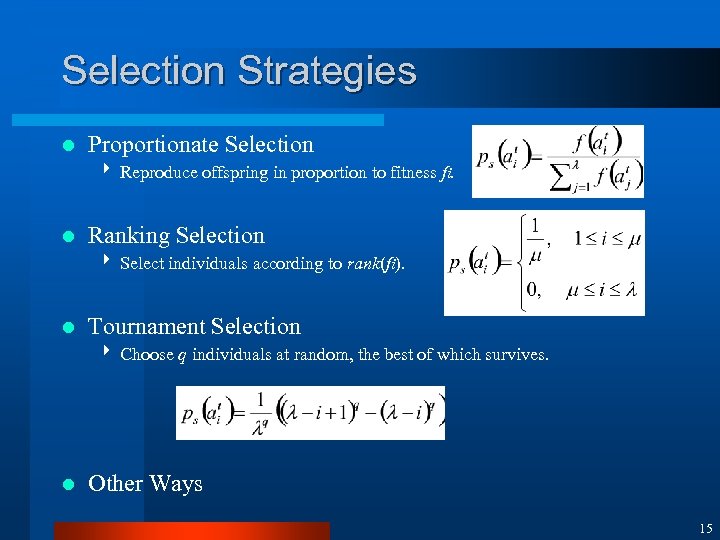

Selection Strategies
- Proportionate Selection
  - Reproduce offspring in proportion to fitness f_i.
- Ranking Selection
  - Select individuals according to rank(f_i).
- Tournament Selection
  - Choose q individuals at random, the best of which survives.
- Other Ways
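
Tournament selection as described above fits in a few lines. The sketch below is illustrative only; the function name `tournament_select` and the choice to sample without replacement are assumptions.

```python
import random

def tournament_select(population, fitness, q, rng):
    """Choose q individuals at random and return the fittest of them."""
    contestants = rng.sample(population, q)  # sampling without replacement (assumption)
    return max(contestants, key=fitness)

rng = random.Random(1)
population = [3, 1, 4, 1, 5, 9, 2, 6]
winner = tournament_select(population, fitness=lambda x: x, q=3, rng=rng)
print(winner)
```

Larger q increases selection pressure: with q equal to the population size, the best individual always wins.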

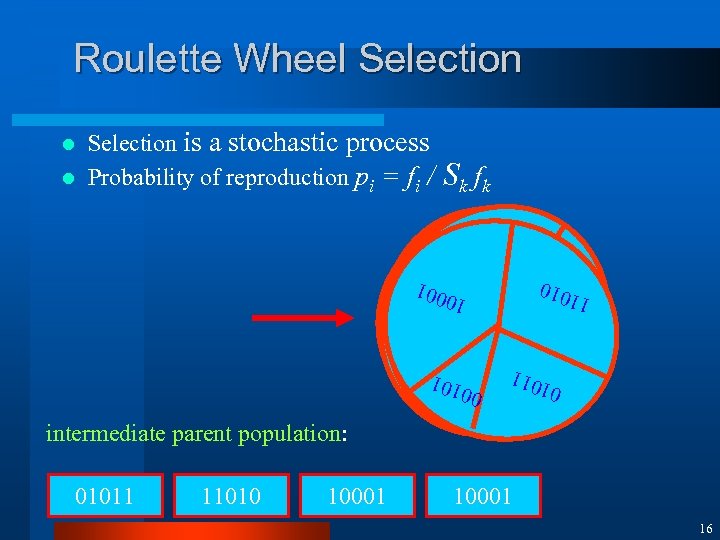

Roulette Wheel
- Selection is a stochastic process
- Probability of reproduction: $p_i = f_i / \sum_k f_k$
- (Figure: a roulette wheel over the bitstring population and the resulting intermediate parent population)
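
A minimal sketch of roulette wheel selection, assuming non-negative fitness values with a positive sum. The function names are hypothetical; the probability formula is the one on the slide, p_i = f_i / sum_k f_k.

```python
import random
from itertools import accumulate

def selection_probabilities(fitnesses):
    """p_i = f_i / sum_k f_k (requires a positive total fitness)."""
    total = sum(fitnesses)
    return [f / total for f in fitnesses]

def roulette_select(population, fitnesses, rng):
    """Spin the wheel once: each individual owns a slice proportional to its fitness."""
    cumulative = list(accumulate(fitnesses))
    r = rng.random() * cumulative[-1]
    for individual, bound in zip(population, cumulative):
        if r < bound:
            return individual
    return population[-1]  # guard against floating-point edge cases

rng = random.Random(0)
population = ["10001", "11010", "01011", "00101"]
fitnesses = [1.0, 1.0, 2.0, 4.0]
print(selection_probabilities(fitnesses))  # [0.125, 0.125, 0.25, 0.5]
print([roulette_select(population, fitnesses, rng) for _ in range(4)])
```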

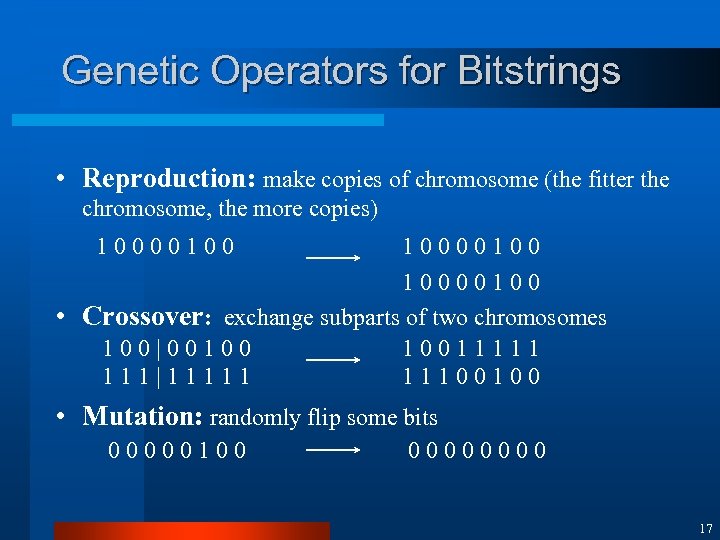

Genetic Operators for Bitstrings
- Reproduction: make copies of chromosome (the fitter the chromosome, the more copies), e.g. 10000100
- Crossover: exchange subparts of two chromosomes, e.g. 100|00100 and 111|11111 yield 10011111 and 11100100
- Mutation: randomly flip some bits, e.g. in 00000100

Mutation
- For a binary string, just randomly “flip” a bit
- For a more complex structure, randomly select a site, delete the structure associated with this site, and randomly create a new sub-structure
- Some EAs just use mutation (no crossover)
- Normally, however, mutation is used to search in the “local search space”, by allowing small changes in the genotype (and therefore hopefully in the phenotype)

Recombination (Crossover)
- Crossover is used to swap (fit) parts of individuals, in a similar way to sexual reproduction
- Parents are selected based on fitness
- Crossover sites are selected (randomly, although other mechanisms exist), with some probability
- Parts of the parents are exchanged to produce children
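
The crossover example on this slide (100|00100 and 111|11111) can be reproduced with a short sketch. Strings stand in for chromosomes; the function names and the per-bit mutation `rate` parameter are assumptions made for illustration.

```python
import random

def one_point_crossover(a, b, cut):
    """Exchange the tails of two equal-length bitstrings after position `cut`."""
    return a[:cut] + b[cut:], b[:cut] + a[cut:]

def flip_bits(bits, rate, rng):
    """Flip each bit independently with probability `rate`."""
    return "".join(
        ("1" if c == "0" else "0") if rng.random() < rate else c
        for c in bits
    )

print(one_point_crossover("10000100", "11111111", 3))  # ('10011111', '11100100')
print(flip_bits("00000100", 0.125, random.Random(0)))
```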

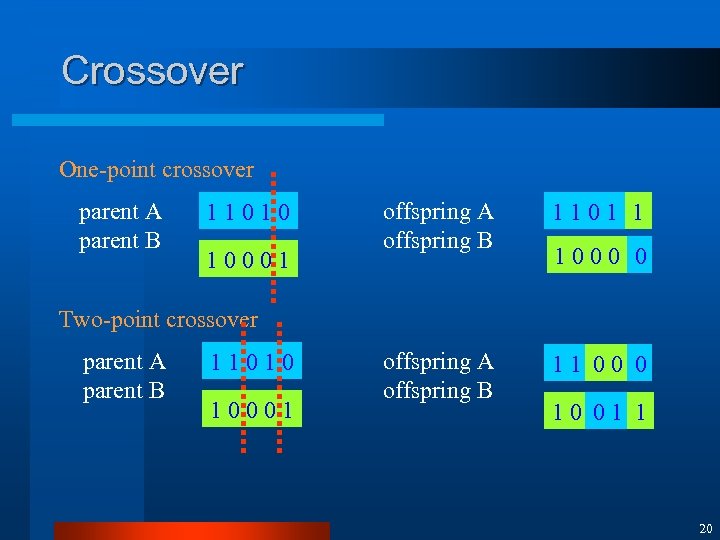

Crossover
- One-point crossover: parent A 11010, parent B 10001; offspring A 1101|1, offspring B 1000|0
- Two-point crossover: parent A 11010, parent B 10001; offspring A 11|00|0, offspring B 10|01|1

2. Theoretical Backgrounds
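
Two-point crossover exchanges only the middle segment between two cut positions. The sketch below reproduces the slide's example with parents 11010 and 10001; the function name and the explicit cut arguments `i` and `j` are assumptions.

```python
def two_point_crossover(a, b, i, j):
    """Swap the segment [i, j) between two equal-length strings."""
    return a[:i] + b[i:j] + a[j:], b[:i] + a[i:j] + b[j:]

print(two_point_crossover("11010", "10001", 2, 4))  # ('11000', '10011')
```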

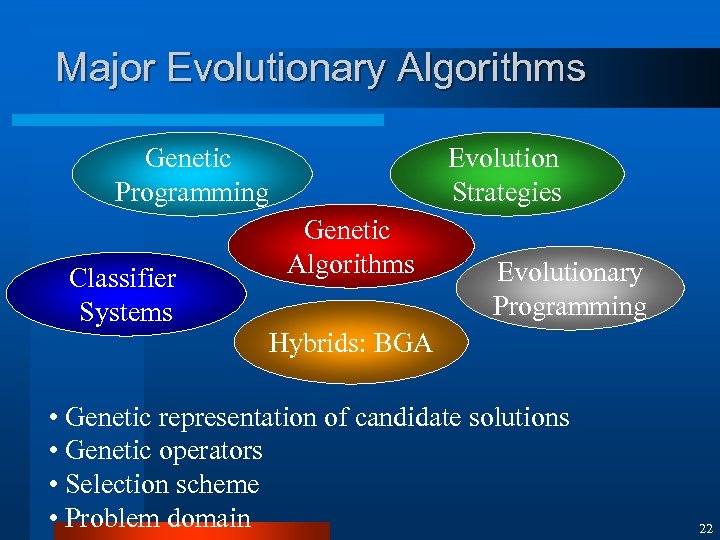

Major Evolutionary Algorithms
- Genetic Algorithms, Evolution Strategies, Evolutionary Programming, Genetic Programming, Classifier Systems, Hybrids: BGA
- Genetic representation of candidate solutions
- Genetic operators
- Selection scheme
- Problem domain

Variants of Evolutionary Algorithms
- Genetic Algorithm (Holland et al., 1960’s)
  - Bitstrings, mainly crossover, proportionate selection
- Evolution Strategy (Rechenberg et al., 1960’s)
  - Real values, mainly mutation, truncation selection
- Evolutionary Programming (Fogel et al., 1960’s)
  - FSMs, mutation only, tournament selection
- Genetic Programming (Koza, 1990)
  - Trees, mainly crossover, proportionate selection
- Hybrids: BGA (Muehlenbein et al., 1993), BGP (Zhang et al., 1995), and others

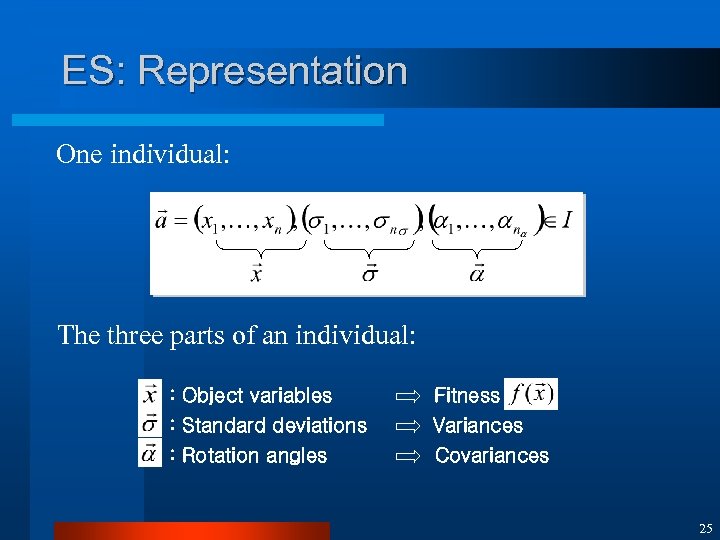

Evolution Strategy (ES)
- Problem of real-valued optimization: find extremum (minimum) of function $F(X): R^n \to R$
- Operate directly on real-valued vector X
- Generate new solutions through Gaussian mutation of all components
- Selection mechanism for determining new parents
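
As a rough illustration of these points, here is a minimal (1+1)-ES sketch: one parent, one Gaussian-mutated offspring per step, and the better of the two survives. The fixed step size `sigma`, the step count, and the sphere test function are assumptions for the example; practical evolution strategies adapt the step size.

```python
import random

def sphere(x):
    """Simple test objective: sum of squares, minimum 0 at the origin."""
    return sum(xi * xi for xi in x)

def one_plus_one_es(f, x, sigma=0.3, steps=500, seed=0):
    """(1+1)-ES with a fixed mutation step size (a simplification)."""
    rng = random.Random(seed)
    fx = f(x)
    for _ in range(steps):
        # Gaussian mutation of all components
        y = [xi + sigma * rng.gauss(0.0, 1.0) for xi in x]
        fy = f(y)
        # selection: the offspring replaces the parent only if it is not worse
        if fy <= fx:
            x, fx = y, fy
    return x, fx

start = [2.0, -1.5, 1.0]
x, fx = one_plus_one_es(sphere, start)
print(sphere(start), fx)
```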

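ES: Representation
- One individual: $\vec{a} = (\vec{x}, \vec{\sigma}, \vec{\alpha})$
- The three parts of an individual:
  - $\vec{x}$: Object variables (Fitness)
  - $\vec{\sigma}$: Standard deviations (Variances)
  - $\vec{\alpha}$: Rotation angles (Covariances)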

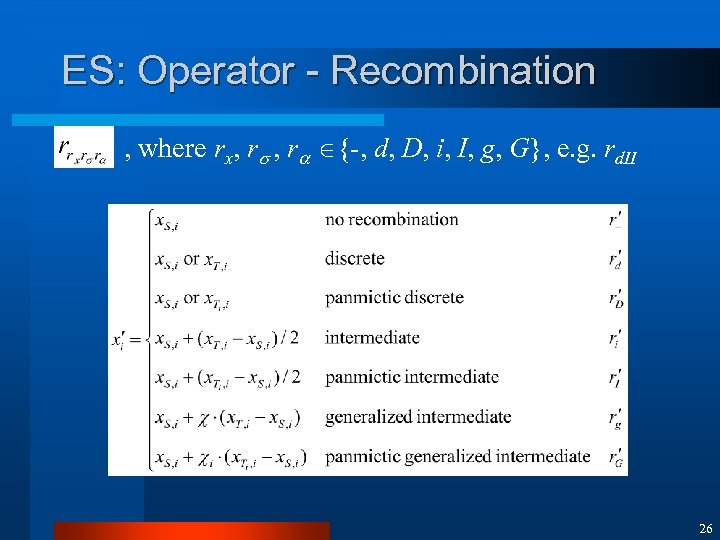

ES: Operator - Recombination: $r_{r_x r_\sigma r_\alpha}$, where $r_x, r_\sigma, r_\alpha \in \{-, d, D, i, I, g, G\}$, e.g. $r_{dII}$

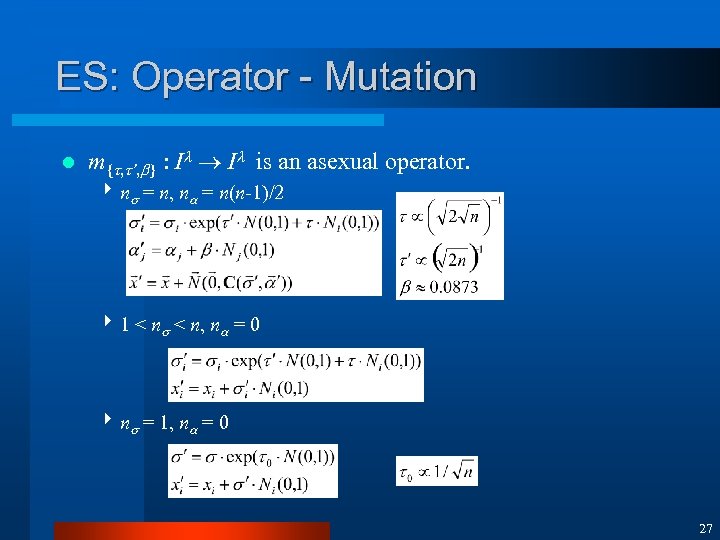

ES: Operator - Mutation
- $m_{\{\tau, \tau', \beta\}}: I \to I$ is an asexual operator.
  - $n_\sigma = n$, $n_\alpha = n(n-1)/2$
  - $1 < n_\sigma$, $n_\alpha = 0$
  - $n_\sigma = 1$, $n_\alpha = 0$

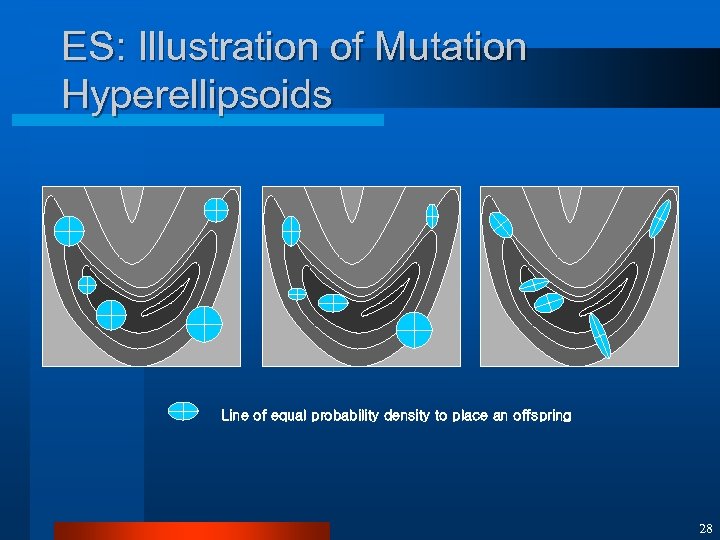

ES: Illustration of Mutation Hyperellipsoids (figure: lines of equal probability density to place an offspring)

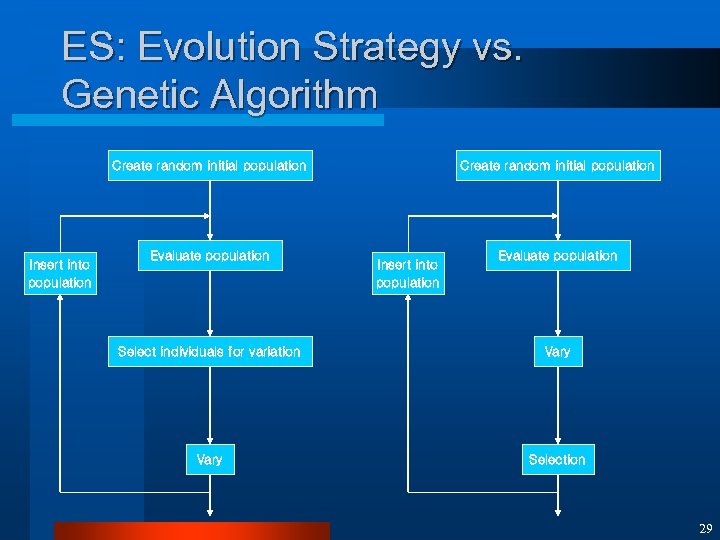

ES: Evolution Strategy vs. Genetic Algorithm (flowchart labels: Create random initial population, Evaluate population, Select individuals for variation, Vary, Selection, Insert into population)

Evolutionary Programming (EP)
- Original form (L. J. Fogel)
  - Uniform random mutations
  - Discrete alphabets
  - $(\mu+\mu)$ selection
- Extended Evolutionary Programming (D. B. Fogel)
  - Continuous parameter optimization
  - Similarities with ES
    - Normally distributed mutation
    - Self-adaptation of mutation parameters

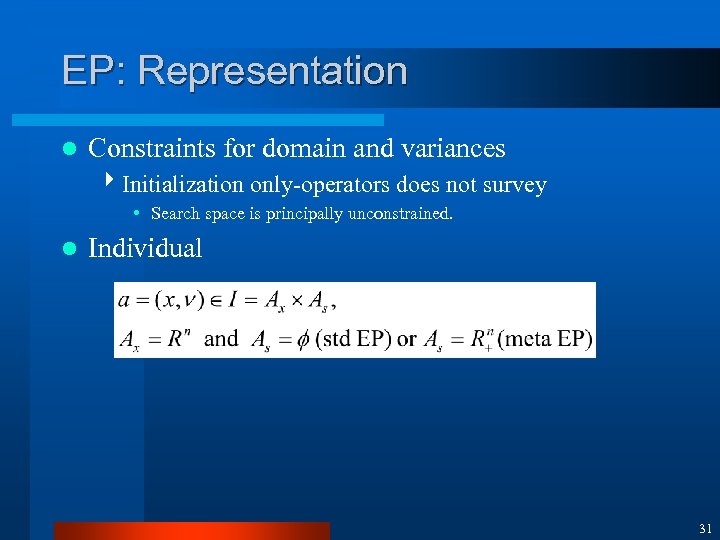

EP: Representation
- Constraints for domain and variances
  - Apply to initialization only; the operators do not observe them
    - Search space is principally unconstrained.
- Individual (formula shown in the figure)

EP: Operator - Recombination
- No recombination
  - Gaussian mutation does better (Fogel and Atmar).
    - Not in all situations
  - Evolutionary biology
    - The role of crossover is often overemphasized.
  - Mutation: enhancing evolutionary optimization
  - Crossover: segregating defects
  - The main point of view from researchers in the field of Genetic Algorithms

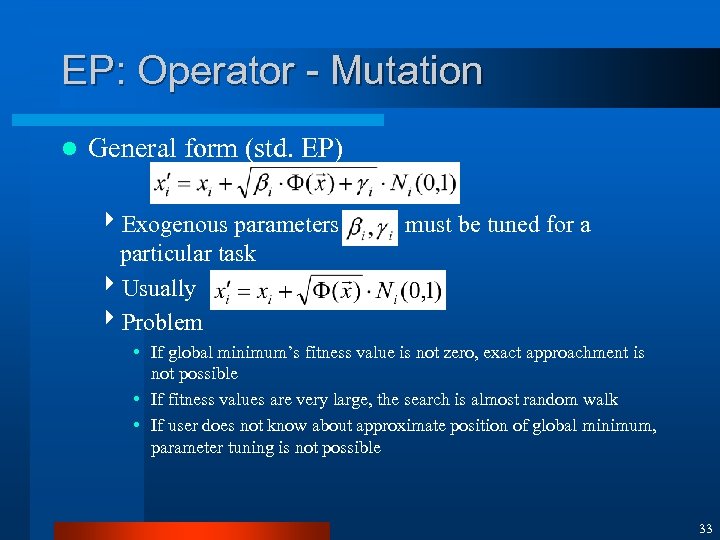

EP: Operator - Mutation
- General form (std. EP) (formula shown in the figure)
  - Exogenous parameters must be tuned for a particular task
  - Usual parameter values are given in the figure
  - Problem:
    - If the global minimum’s fitness value is not zero, an exact approach to it is not possible
    - If fitness values are very large, the search is almost a random walk
    - If the user does not know the approximate position of the global minimum, parameter tuning is not possible

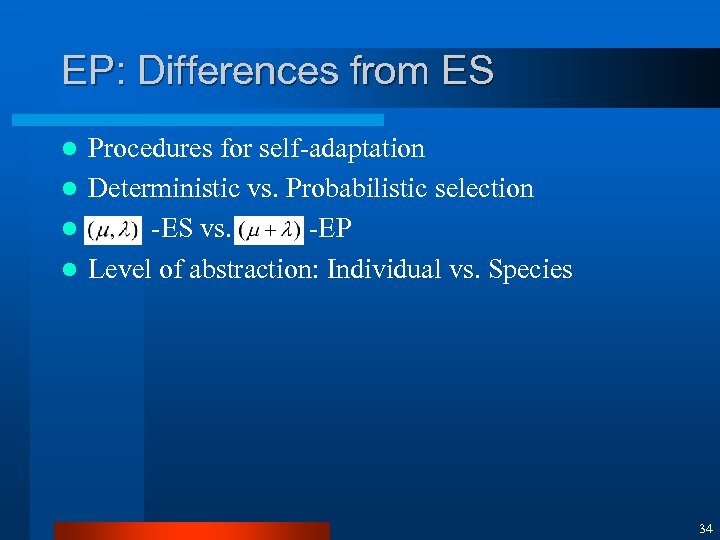

EP: Differences from ES
- Procedures for self-adaptation
- Deterministic vs. probabilistic selection
- $(\mu,\lambda)$-ES vs. $(\mu+\mu)$-EP
- Level of abstraction: Individual vs. Species

Genetic Programming
- Applies principles of natural selection to computer search and optimization problems; has advantages over other procedures for “badly behaved” solution spaces [Koza, 1992]
- Genetic programming uses variable-size tree representations rather than fixed-length strings of binary values.
- Program tree = S-expression = LISP parse tree
- Tree = Functions (Nonterminals) + Terminals

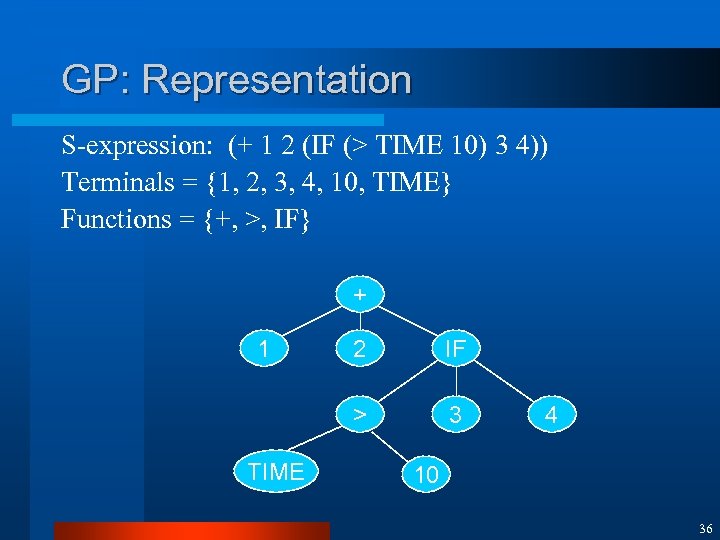

GP: Representation
- S-expression: (+ 1 2 (IF (> TIME 10) 3 4))
- Terminals = {1, 2, 3, 4, 10, TIME}
- Functions = {+, >, IF}
- (Figure: the corresponding parse tree)
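
To make the tree representation concrete, the S-expression above can be written as nested Python tuples and evaluated recursively. This is an illustrative sketch: the tuple encoding, the `evaluate` function, and the `env` dictionary holding the value of TIME are assumptions, and only the three functions on the slide (+, >, IF) are supported.

```python
def evaluate(node, env):
    """Evaluate a program tree given as nested tuples (function, arg1, arg2, ...)."""
    if isinstance(node, tuple):
        op, *args = node
        if op == "IF":
            cond, then_branch, else_branch = args
            chosen = then_branch if evaluate(cond, env) else else_branch
            return evaluate(chosen, env)
        values = [evaluate(arg, env) for arg in args]
        if op == "+":
            return sum(values)
        if op == ">":
            return values[0] > values[1]
        raise ValueError(f"unknown function: {op}")
    if isinstance(node, str):   # terminal: variable
        return env[node]
    return node                 # terminal: constant

# (+ 1 2 (IF (> TIME 10) 3 4))
program = ("+", 1, 2, ("IF", (">", "TIME", 10), 3, 4))
print(evaluate(program, {"TIME": 12}))  # 6
print(evaluate(program, {"TIME": 5}))   # 7
```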

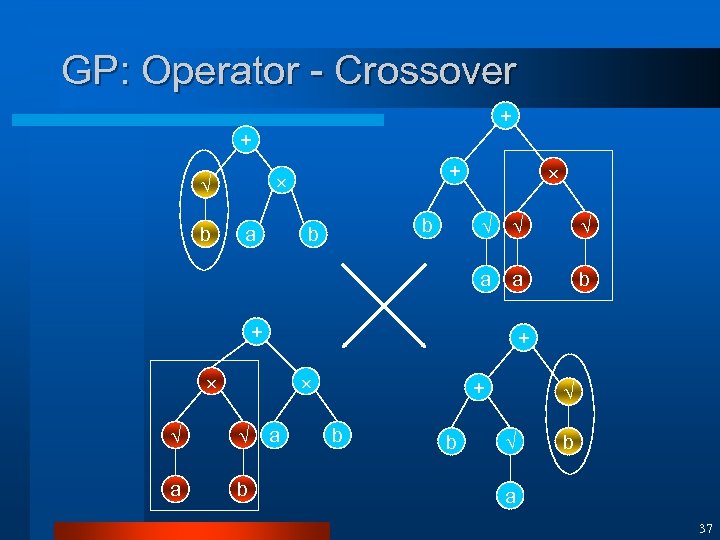

GP: Operator - Crossover (figure: two parent trees over the function + and terminals a, b exchange subtrees to form two offspring trees)

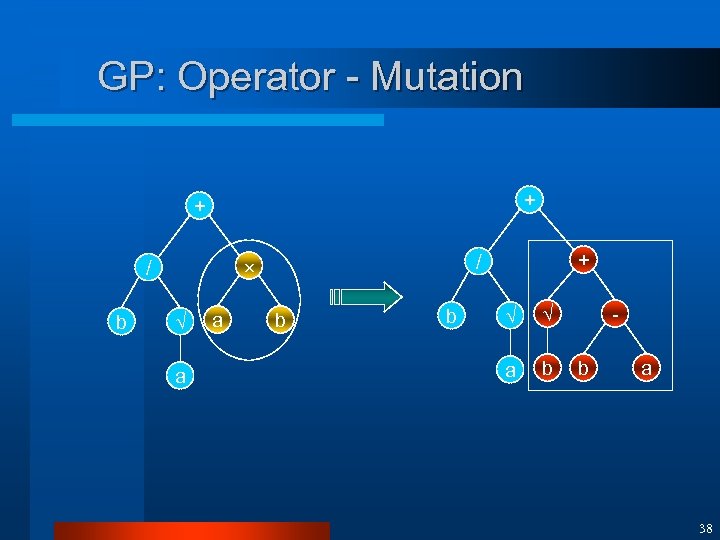

GP: Operator - Mutation (figure: a subtree of a tree over +, /, a, b is replaced by a new subtree)

![Breeder GP (BGP)](https://present5.com/presentation/54c4edc6c55fd2ff61617dcd272dd0e2/image-39.jpg)

Breeder GP (BGP) [Zhang and Muehlenbein, 1993, 1995]
- ES (real-vector), GA (bitstring), GP (tree)
- Muehlenbein et al. (1993): Breeder GA (BGA) (real-vector + bitstring)
- Zhang et al. (1993): Breeder GP (BGP) (tree + real-vector + bitstring)

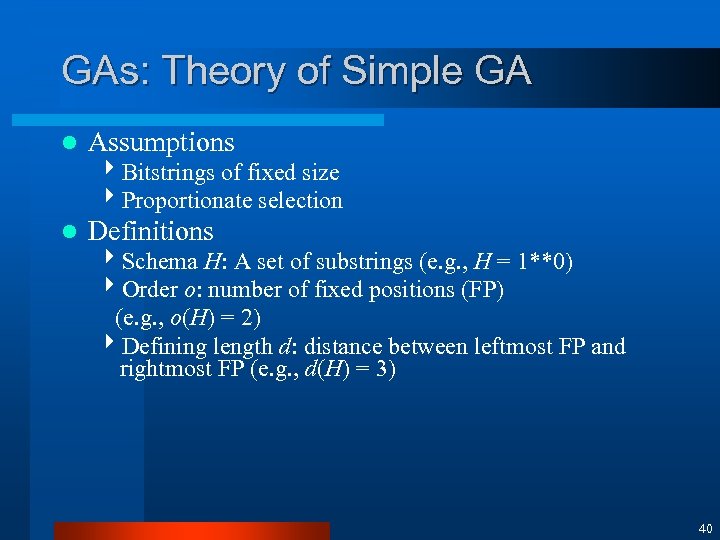

GAs: Theory of Simple GA
- Assumptions
  - Bitstrings of fixed size
  - Proportionate selection
- Definitions
  - Schema H: A set of substrings (e.g., H = 1**0)
  - Order o: number of fixed positions (FP) (e.g., o(H) = 2)
  - Defining length d: distance between leftmost FP and rightmost FP (e.g., d(H) = 3)
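
The two schema measures defined above are easy to compute. The helper names below (`order`, `defining_length`, `matches`) are hypothetical; `*` is the wildcard, as in H = 1**0.

```python
def order(schema):
    """o(H): number of fixed (non-wildcard) positions."""
    return sum(c != "*" for c in schema)

def defining_length(schema):
    """d(H): distance between the leftmost and rightmost fixed positions."""
    fixed = [i for i, c in enumerate(schema) if c != "*"]
    return fixed[-1] - fixed[0] if fixed else 0

def matches(schema, bits):
    """True if the bitstring is a member of the schema."""
    return len(schema) == len(bits) and all(
        s == "*" or s == b for s, b in zip(schema, bits)
    )

H = "1**0"
print(order(H), defining_length(H))            # 2 3
print(matches(H, "1010"), matches(H, "0010"))  # True False
```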

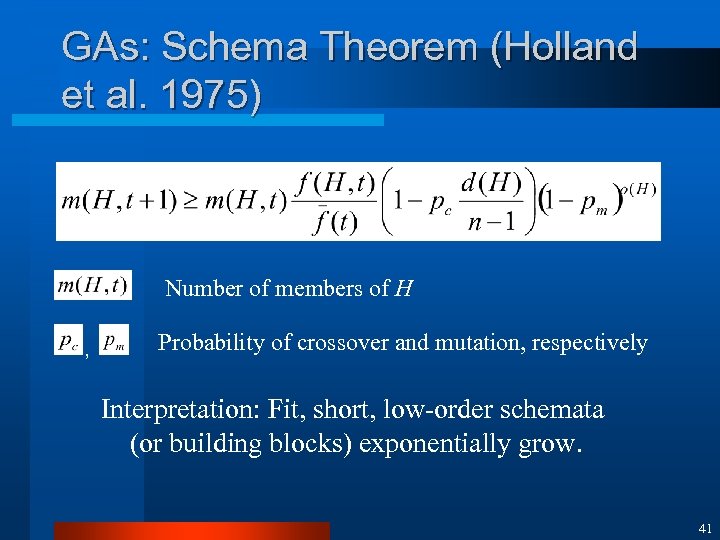

GAs: Schema Theorem (Holland et al. 1975)
- The theorem (formula shown in the figure) is stated in terms of the number of members of H and the probabilities of crossover and mutation, respectively.
- Interpretation: Fit, short, low-order schemata (or building blocks) grow exponentially.
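
For reference, the schema theorem in its commonly cited form is given below. The formula on the original slide did not survive extraction, so the symbol names here are assumptions: $m(H,t)$ is the number of members of $H$ at generation $t$, $f(H,t)$ their mean fitness, $\bar{f}(t)$ the population mean fitness, $l$ the string length, $p_c$ and $p_m$ the crossover and mutation probabilities, and $o(H)$, $d(H)$ the order and defining length as defined for the simple GA.

```latex
E\big[m(H, t+1)\big] \;\ge\; m(H, t)\,\frac{f(H, t)}{\bar{f}(t)}
\left[\, 1 - p_c\,\frac{d(H)}{l - 1} - o(H)\,p_m \right]
```

The bracketed factor is close to 1 when $d(H)$ and $o(H)$ are small, which is why fit, short, low-order schemata receive an increasing number of trials.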

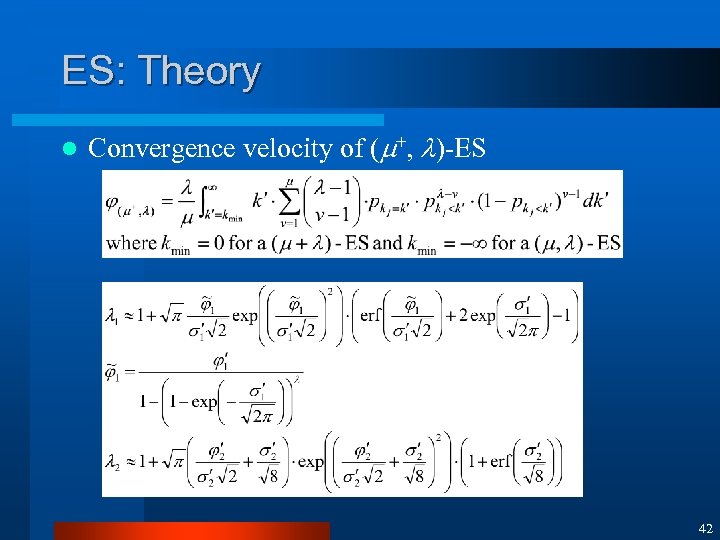

ES: Theory
- Convergence velocity of $(\mu \overset{+}{,} \lambda)$-ES

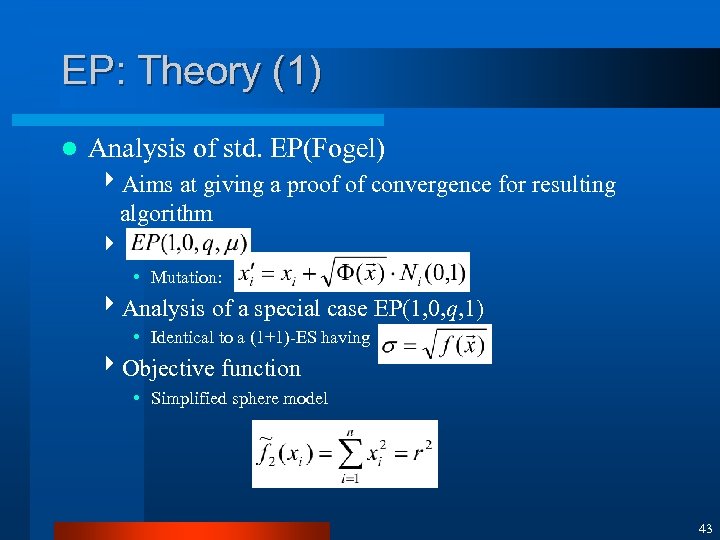

EP: Theory (1)
- Analysis of std. EP (Fogel)
  - Aims at giving a proof of convergence for the resulting algorithm
  - Mutation: (formula shown in the figure)
- Analysis of a special case EP(1, 0, q, 1)
  - Identical to a (1+1)-ES with the parameters shown in the figure
- Objective function
  - Simplified sphere model

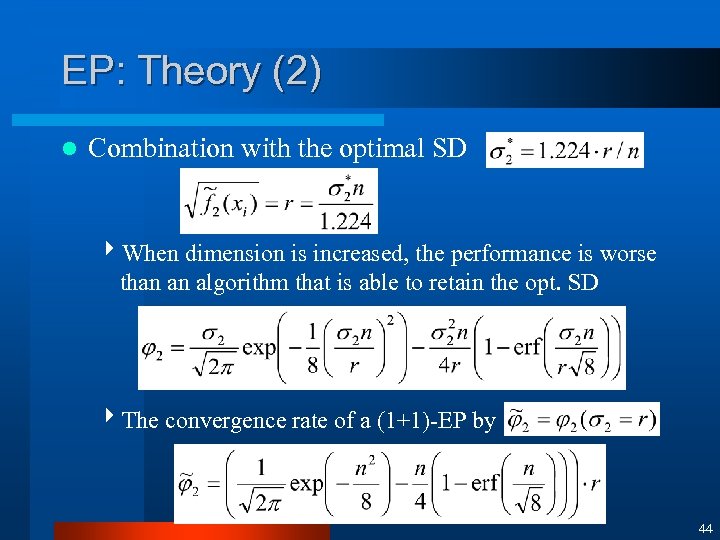

EP: Theory (2)
- Combination with the optimal SD
  - When the dimension is increased, the performance is worse than that of an algorithm that is able to retain the optimal SD
  - The convergence rate of a (1+1)-EP is given by the formula shown in the figure

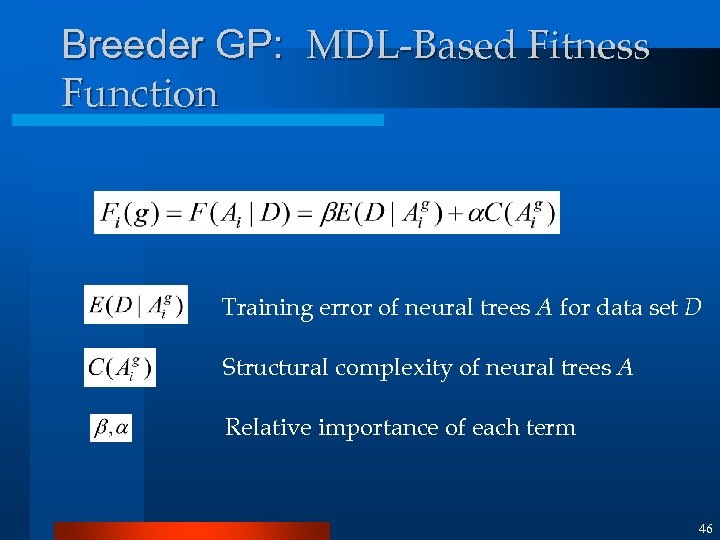

Breeder GP: Motivation for GP Theory
- In GP, parse trees of Lisp-like programs are used as chromosomes.
- Performance of programs is evaluated by training error, and the program size tends to grow as training error decreases.
- The eventual goal of learning is to get a small generalization error, and the generalization error tends to increase as program size grows.
- How to control the program growth?

Breeder GP: MDL-Based Fitness Function (formula shown in the figure; its terms are:)
- Training error of neural trees A for data set D
- Structural complexity of neural trees A
- Relative importance of each term

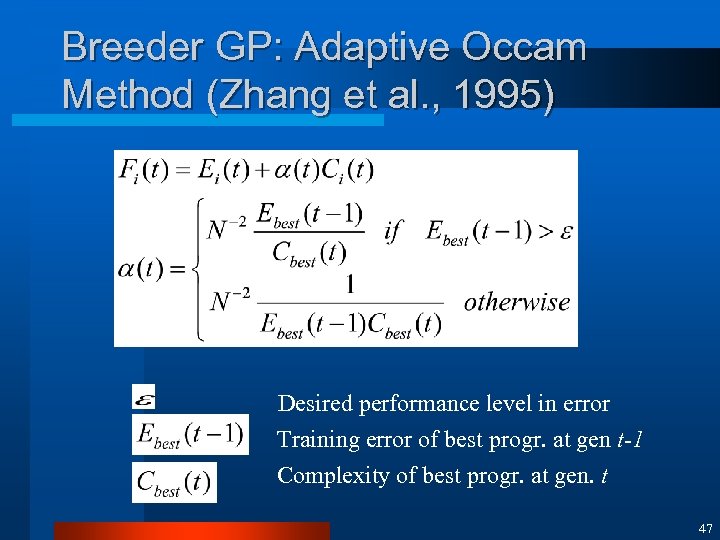

Breeder GP: Adaptive Occam Method (Zhang et al., 1995) (formula shown in the figure; its terms are:)
- Desired performance level in error
- Training error of best program at generation t-1
- Complexity of best program at generation t

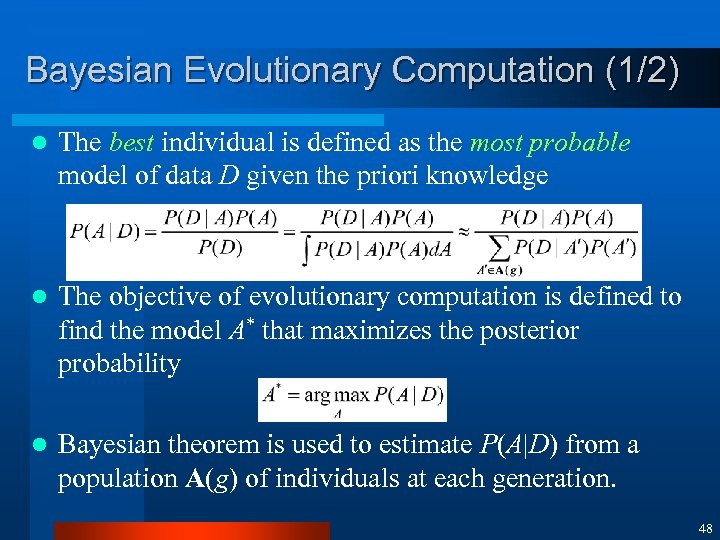

Bayesian Evolutionary Computation (1/2)
- The best individual is defined as the most probable model of data D given the prior knowledge.
- The objective of evolutionary computation is defined to find the model A* that maximizes the posterior probability.
- Bayes' theorem is used to estimate P(A|D) from a population A(g) of individuals at each generation.
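
Written out, the two statements above correspond to Bayes' theorem and a maximum a posteriori choice of model. The slide's own formulas were images and are not reproduced here; the block below is the standard textbook form using the slide's symbols A, D, and A*.

```latex
P(A \mid D) = \frac{P(D \mid A)\,P(A)}{P(D)},
\qquad
A^{*} = \arg\max_{A} P(A \mid D) = \arg\max_{A} P(D \mid A)\,P(A)
```

The denominator $P(D)$ does not depend on $A$, so it can be dropped when comparing individuals.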

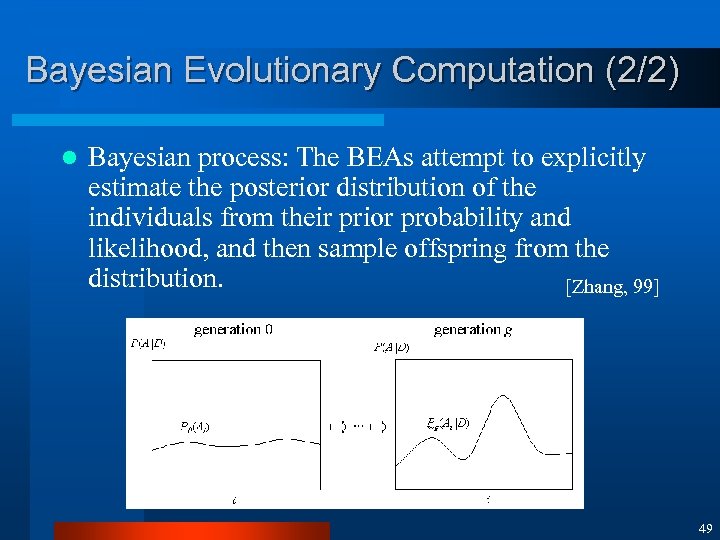

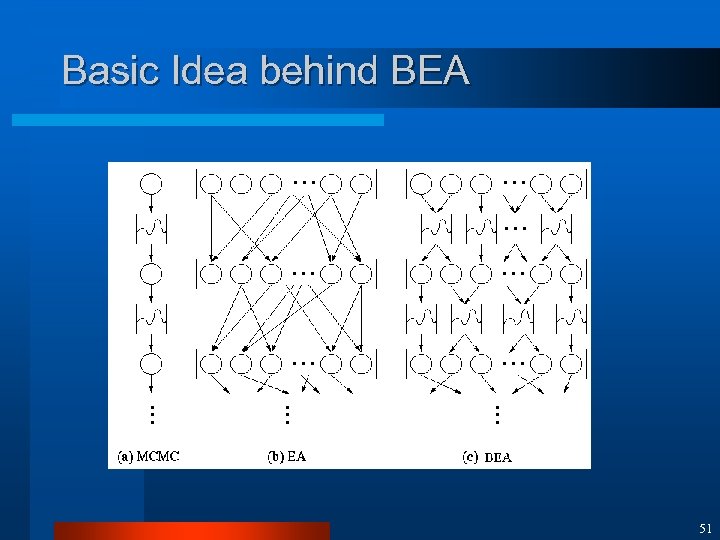

Bayesian Evolutionary Computation (2/2)
- Bayesian process: The BEAs attempt to explicitly estimate the posterior distribution of the individuals from their prior probability and likelihood, and then sample offspring from the distribution. [Zhang, 99]

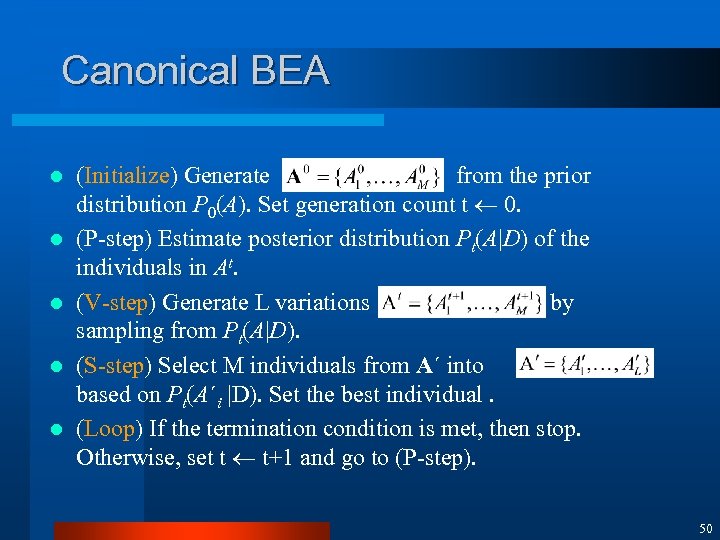

Canonical BEA
- (Initialize) Generate the initial population $A^0$ from the prior distribution $P_0(A)$. Set generation count t <- 0.
- (P-step) Estimate posterior distribution $P_t(A|D)$ of the individuals in $A^t$.
- (V-step) Generate L variations $A'$ by sampling from $P_t(A|D)$.
- (S-step) Select M individuals from $A'$ into $A^{t+1}$ based on $P_t(A'_i|D)$. Set the best individual.
- (Loop) If the termination condition is met, then stop. Otherwise, set t <- t+1 and go to (P-step).

Basic Idea behind BEA

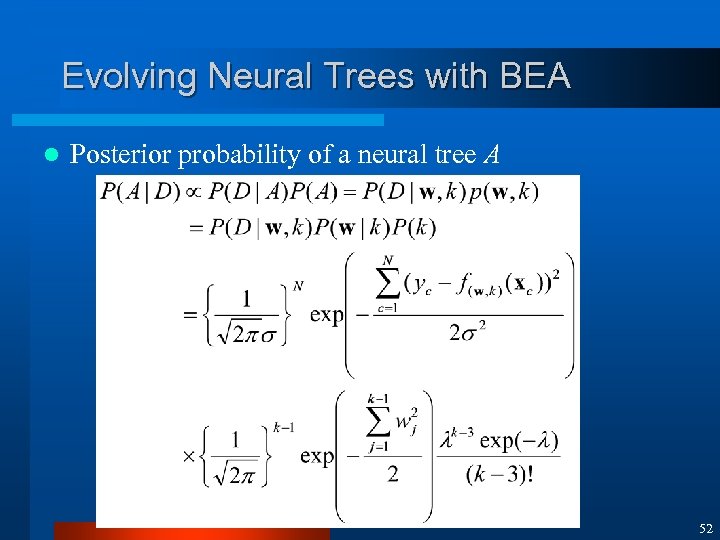

Evolving Neural Trees with BEA
- Posterior probability of a neural tree A (formula shown in the figure)

Features of EAs
- Evolutionary techniques are good for problems that are ill-defined or difficult
- Many different forms of representation
- Many different types of EA
- Leads to many different types of crossover, mutation, etc.
- Some problems with convergence, efficiency
- However, they are able to solve a diverse range of problems

Advantages of EAs
- Efficient investigation of large search spaces
  - Quickly investigate a problem with a large number of possible solutions
- Problem independence
  - Can be applied to many different problems
- Best suited to difficult combinatorial problems

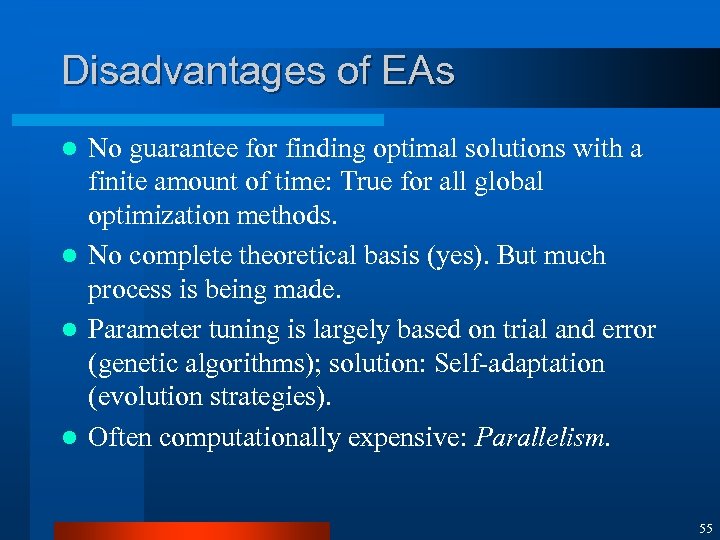

Disadvantages of EAs
- No guarantee for finding optimal solutions within a finite amount of time: true for all global optimization methods.
- No complete theoretical basis (yet), but much progress is being made.
- Parameter tuning is largely based on trial and error (genetic algorithms); solution: self-adaptation (evolution strategies).
- Often computationally expensive: parallelism.

3. Applications

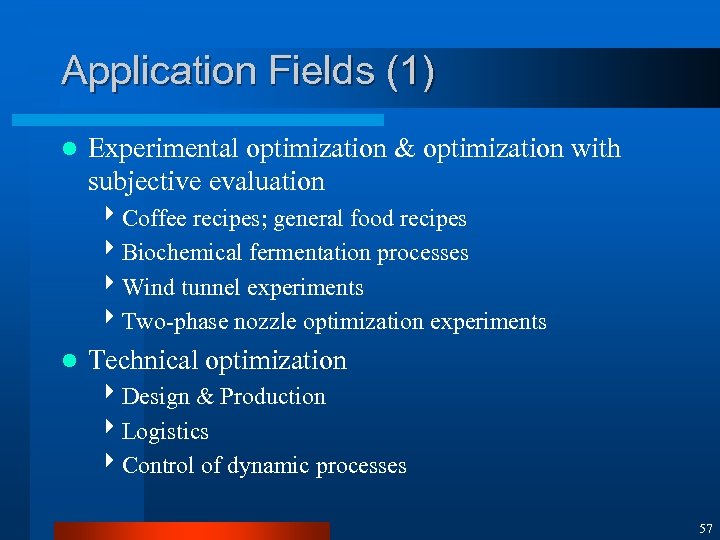

Application Fields (1)
- Experimental optimization & optimization with subjective evaluation
  - Coffee recipes; general food recipes
  - Biochemical fermentation processes
  - Wind tunnel experiments
  - Two-phase nozzle optimization experiments
- Technical optimization
  - Design & Production
  - Logistics
  - Control of dynamic processes

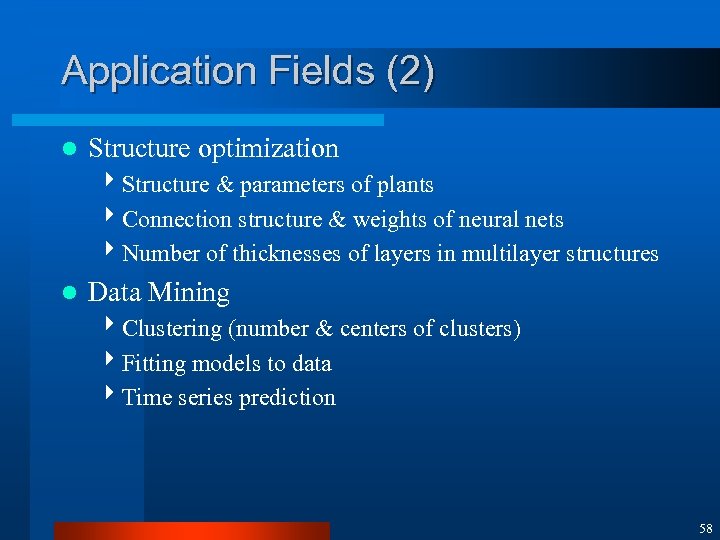

Application Fields (2)
- Structure optimization
  - Structure & parameters of plants
  - Connection structure & weights of neural nets
  - Number and thicknesses of layers in multilayer structures
- Data Mining
  - Clustering (number & centers of clusters)
  - Fitting models to data
  - Time series prediction

Application Fields (3)
- Path Planning
  - Traveling Salesman Problem
- Robot Control
  - Evolutionary Robotics
  - Evolvable Hardware

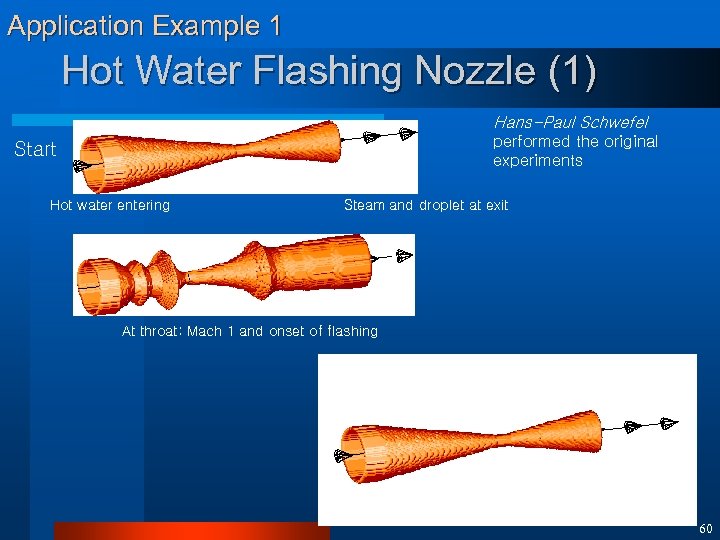

Application Example 1 Hot Water Flashing Nozzle (1)
- Hans-Paul Schwefel performed the original experiments
- (Figure labels: Start; hot water entering; steam and droplets at exit; at throat: Mach 1 and onset of flashing)

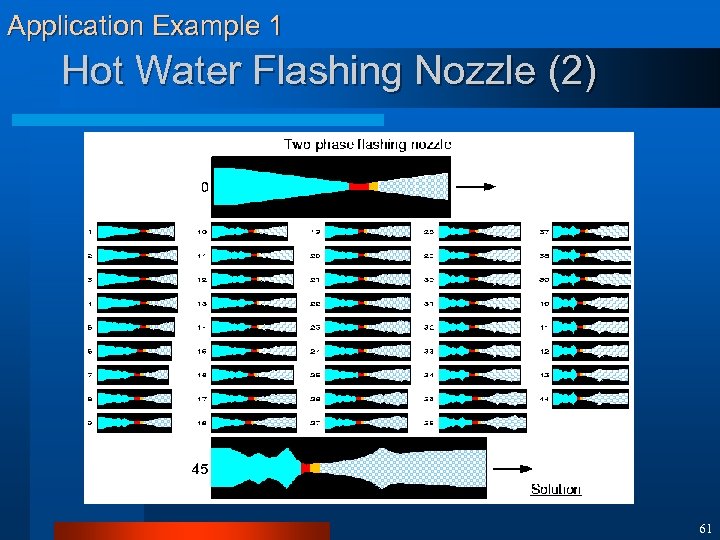

Application Example 1 Hot Water Flashing Nozzle (2)

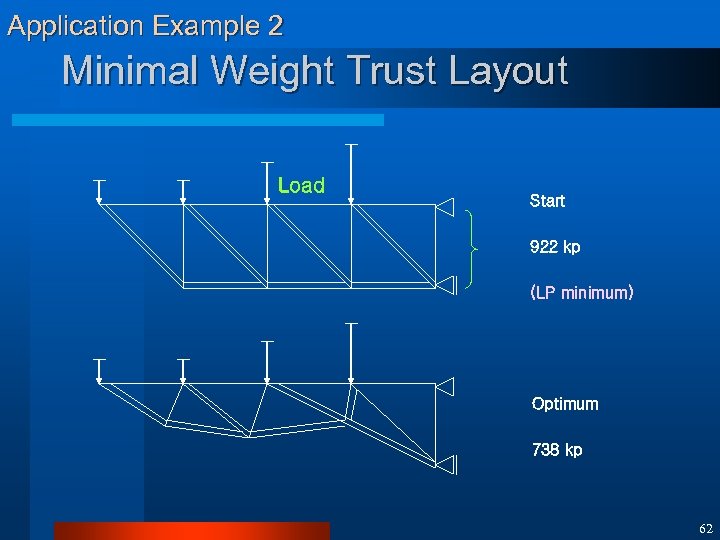

Application Example 2 Minimal Weight Truss Layout
- Start: 922 kp (LP minimum)
- Optimum: 738 kp
- (Figure label: Load)

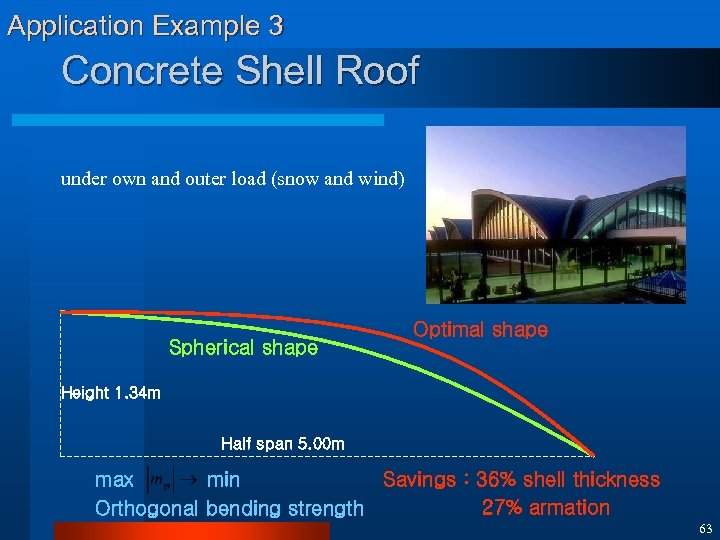

Application Example 3 Concrete Shell Roof
- Under own and outer load (snow and wind)
- Spherical shape vs. optimal shape
- Height 1.34 m, half span 5.00 m
- Savings: 36% shell thickness, 27% reinforcement
- (Figure labels: orthogonal bending strength, max, min)

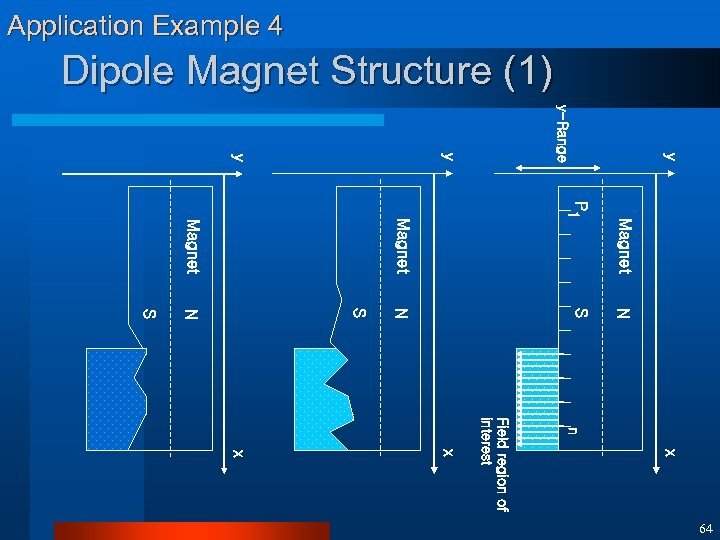

Application Example 4 Dipole Magnet Structure (1) (figure: magnets with N and S poles at positions within a y-range along the x axis, around the field region of interest)

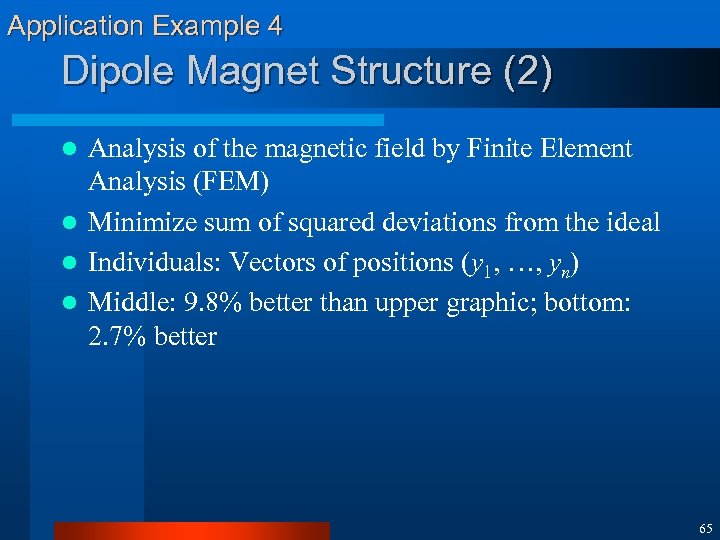

Application Example 4 Dipole Magnet Structure (2)
- Analysis of the magnetic field by the Finite Element Method (FEM)
- Minimize sum of squared deviations from the ideal
- Individuals: vectors of positions (y1, …, yn)
- Middle: 9.8% better than upper graphic; bottom: 2.7% better

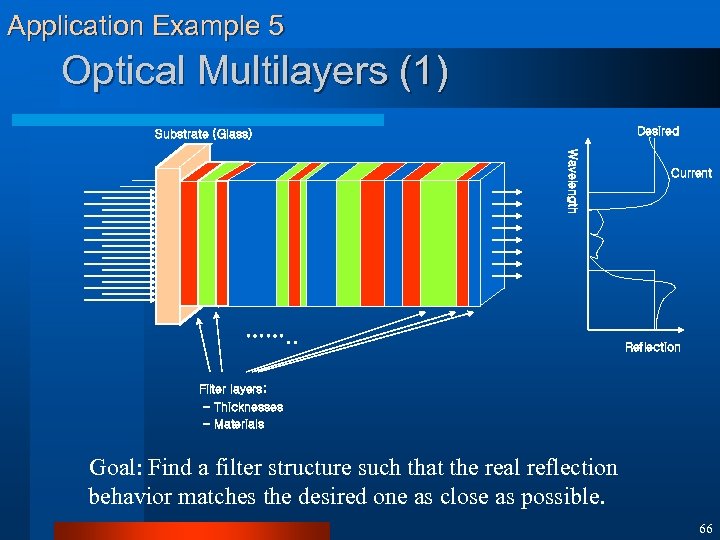

Application Example 5 Optical Multilayers (1)
- Filter layers on a substrate (glass), described by thicknesses and materials
- (Figure: desired vs. current reflection as a function of wavelength)
- Goal: Find a filter structure such that the real reflection behavior matches the desired one as closely as possible.

Application Example 5 Optical Multilayers (2)
- Problem parameters:
  - Thickness
  - Layer materials
  - Number of layers n (integer values)
- Mixed-integer, variable-dimensional problem.

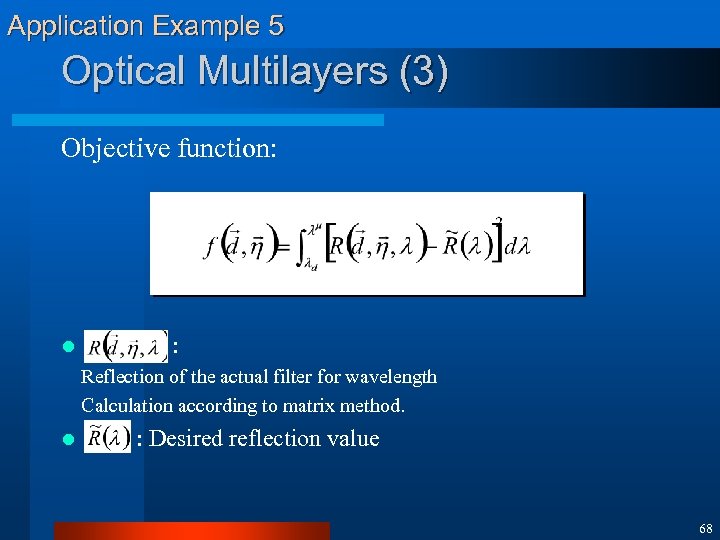

Application Example 5 Optical Multilayers (3)
- Objective function (formula shown in the figure), with terms:
  - Reflection of the actual filter for a given wavelength, calculated according to the matrix method
  - Desired reflection value

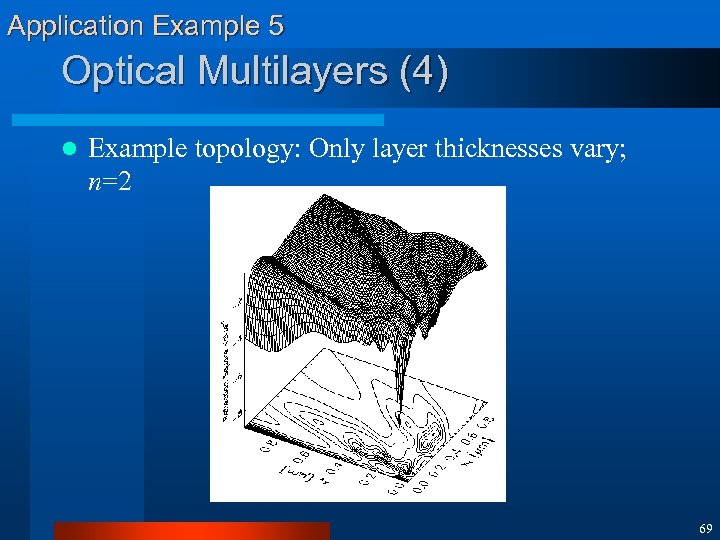

Application Example 5 Optical Multilayers (4)
- Example topology: only layer thicknesses vary; n = 2

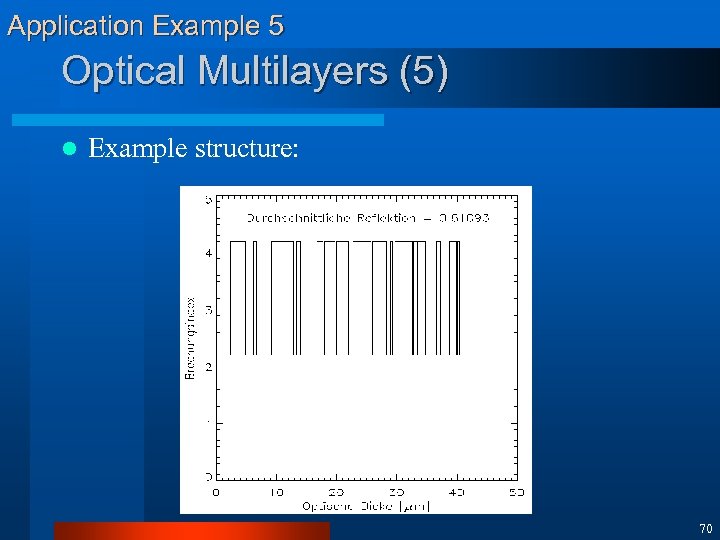

Application Example 5 Optical Multilayers (5)
- Example structure (shown in the figure)

Application Example 5 Optical Multilayers (6)
- Parallel evolutionary algorithm
  - Per node: EA for mixed-integer representation
  - Isolation and migration of best individuals
  - Mutation of discrete variables: fixed p_m per population

Application Example 6 Circuit Design (1)
Difficulty of automated circuit design:
- A very hard problem
  - Exponential in the number of components
  - More than 10^300 circuits with a mere 20 components
- An important problem
  - Too few analog designers
  - There is an “egg-shell” of analog circuitry around almost all digital circuits
  - Analog circuits must be redesigned with each new generation of process technology
- No existing automated techniques
  - In contrast with digital
  - Existing analog techniques do only sizing of components, but do not create the topology

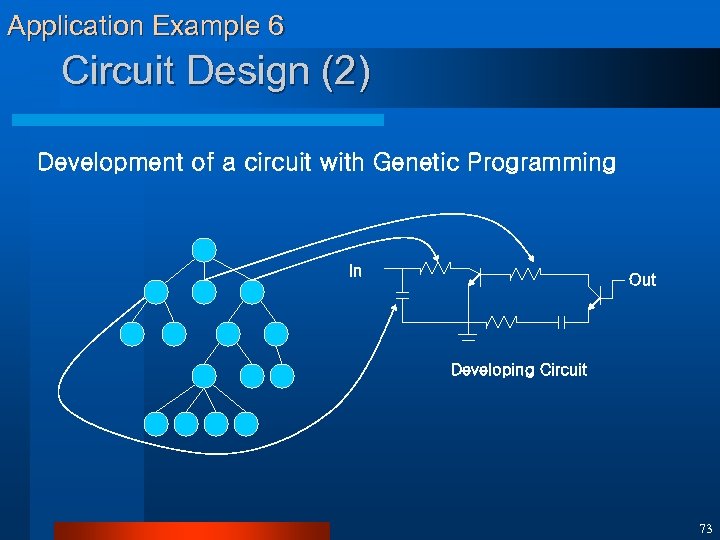

Application Example 6 Circuit Design (2)
- Development of a circuit with Genetic Programming (figure labels: In, Out, Developing Circuit)

Application Example 6 Circuit Design (3)
- Each function in the circuit-constructing tree acts on a part of the circuit and changes it in some way (e.g. creates a capacitor, creates a parallel structure, adds a connection to ground, etc.)
- A “writing head” points from each function to the part of the circuit that the function will act on.
- Each function inherits writing heads from its parent in the tree.

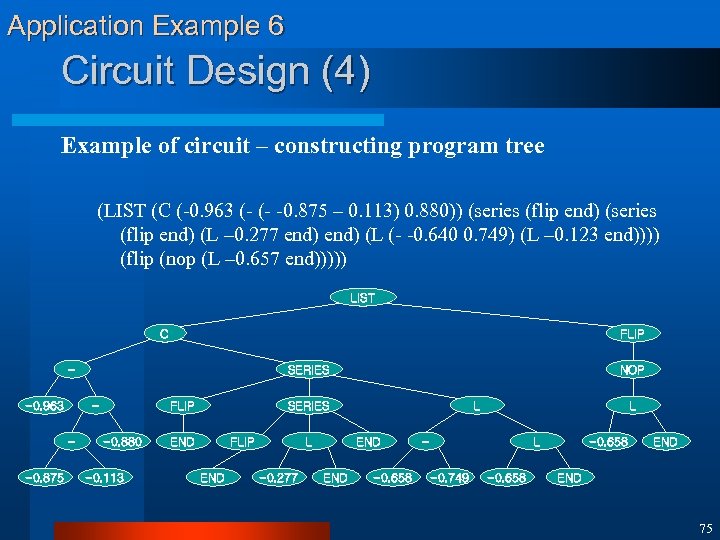

Application Example 6 Circuit Design (4)
- Example of circuit-constructing program tree:
  (LIST (C (-0.963 (- (- -0.875 -0.113) 0.880)) (series (flip end) (L -0.277 end) (L (- -0.640 0.749) (L -0.123 end)))) (flip (nop (L -0.657 end)))))
- (Figure: the same program drawn as a tree with nodes LIST, C, FLIP, SERIES, NOP, L, END and numeric constants)

Application Example 7 Neural Network Design (1)
Introduction: EC for NNs
- Preprocessing of Training Data
  - Feature selection
  - Training set optimization
- Training of Network Weights
  - Non-gradient search
- Optimization of Network Architecture
  - Topology adaptation
  - Pruning unnecessary connections/units

Application Example 7 Neural Network Design (2)
Encoding Schemes for NNs
- Bit-strings
  - Properties of network structure are encoded as bitstrings.
- Rules
  - Network configuration is specified by a graph-generation grammar.
- Trees
  - Network is represented as “neural trees”.

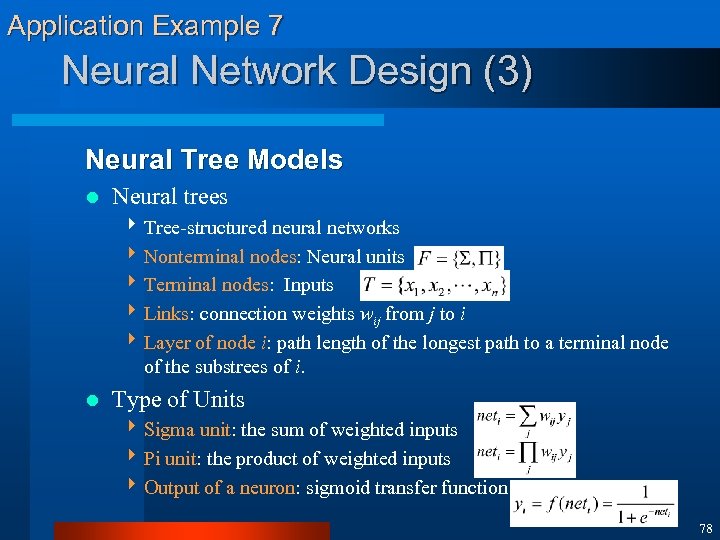

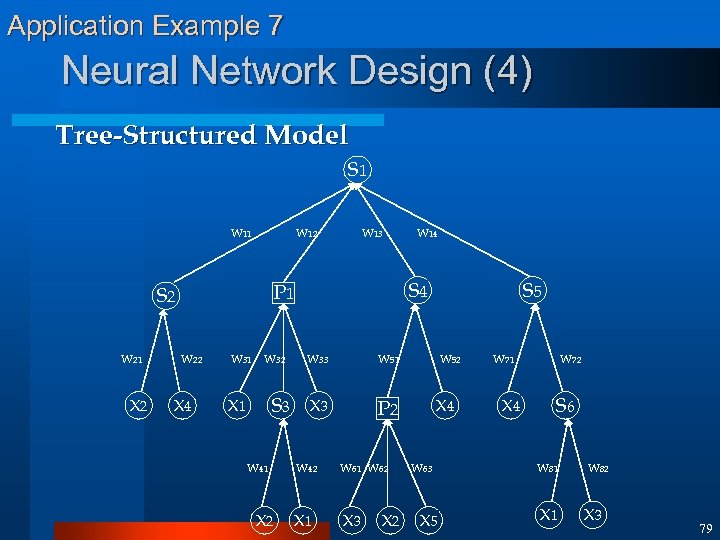

Application Example 7 Neural Network Design (3)
Neural Tree Models
- Neural trees
  - Tree-structured neural networks
  - Nonterminal nodes: neural units
  - Terminal nodes: inputs
  - Links: connection weights w_ij from j to i
  - Layer of node i: length of the longest path to a terminal node in the subtrees of i
- Type of Units
  - Sigma unit: the sum of weighted inputs
  - Pi unit: the product of weighted inputs
  - Output of a neuron: sigmoid transfer function
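
The two unit types can be sketched as follows. This is an illustration under assumptions: the logistic function is used as the sigmoid, no bias term is included, and the function names are hypothetical.

```python
import math

def sigmoid(z):
    """Logistic transfer function (one common choice of sigmoid)."""
    return 1.0 / (1.0 + math.exp(-z))

def sigma_unit(weights, inputs):
    """Sigma unit: sigmoid of the sum of weighted inputs."""
    return sigmoid(sum(w * x for w, x in zip(weights, inputs)))

def pi_unit(weights, inputs):
    """Pi unit: sigmoid of the product of weighted inputs."""
    return sigmoid(math.prod(w * x for w, x in zip(weights, inputs)))

print(sigma_unit([1.0, -1.0], [2.0, 2.0]))  # 0.5, since the net input is 0
print(pi_unit([0.5, 2.0], [1.0, 1.0]))      # sigmoid(1.0)
```

A neural tree is then evaluated bottom-up: terminal nodes supply the inputs, and each nonterminal applies one of these units to the outputs of its children.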

Application Example 7 Neural Network Design (4)
Tree-Structured Model (figure: a neural tree with sigma units S1 to S6 and pi units P1, P2 as nonterminal nodes, inputs X1 to X5 as terminal nodes, and connection weights W on the links)

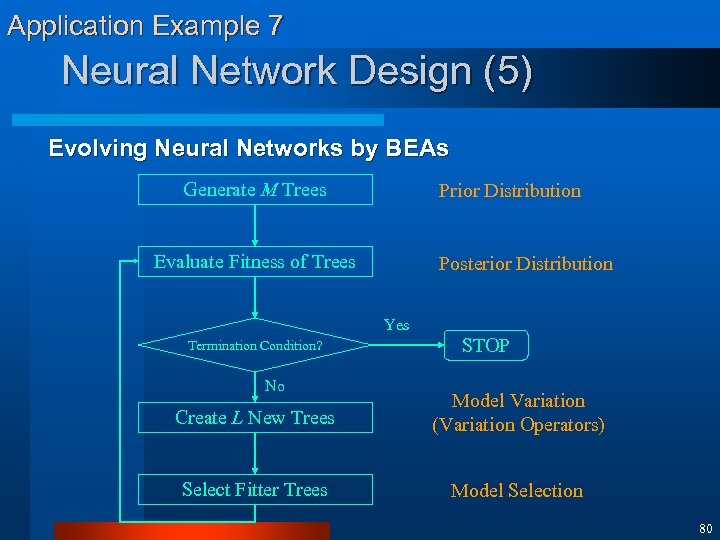

Application Example 7 Neural Network Design (5)
Evolving Neural Networks by BEAs (flowchart)
- Generate M trees (prior distribution)
- Evaluate fitness of trees (posterior distribution)
- Termination condition? Yes: STOP. No: continue.
- Create L new trees (model variation, variation operators)
- Select fitter trees (model selection)

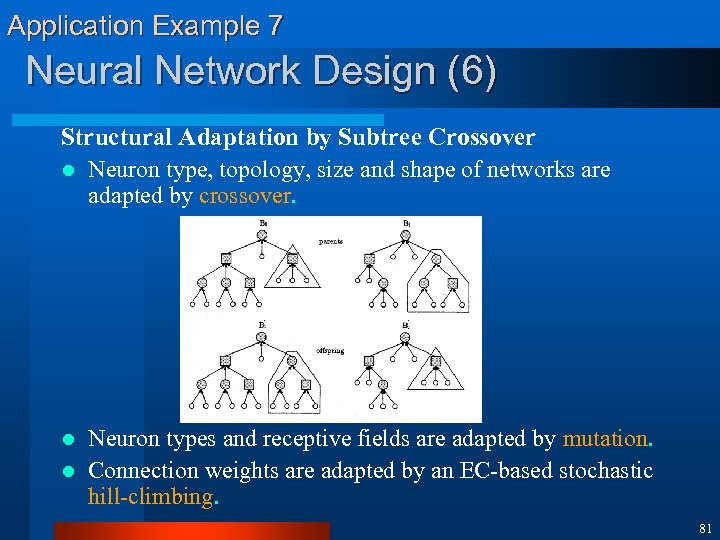

Application Example 7 Neural Network Design (6)
Structural Adaptation by Subtree Crossover
- Neuron type, topology, size and shape of networks are adapted by crossover.
- Neuron types and receptive fields are adapted by mutation.
- Connection weights are adapted by an EC-based stochastic hill-climbing.

Application Example 8 Fuzzy System Design (1)
- Fuzzy system comprises
  - Fuzzy membership functions (MF)
  - Rules
- Task is to tailor the MF and rules to get best performance
- Every change to MF affects the rules
- Every change to the rules affects the MF

Application Example 8 Fuzzy System Design (2)
- Solution is to design MF and rules simultaneously
- Encode in chromosomes
  - Parameters of the MF
  - Associations and Certainty Factors in rules
- Fitness is measured by performance of the Fuzzy System

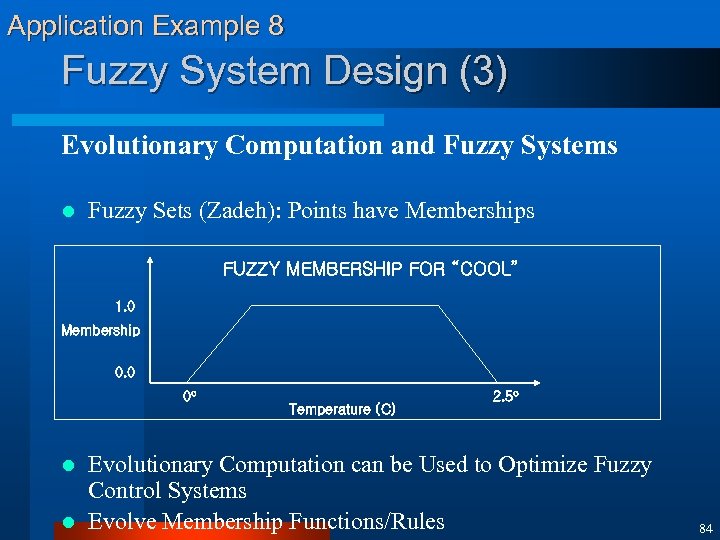

Application Example 8 Fuzzy System Design (3)
Evolutionary Computation and Fuzzy Systems
- Fuzzy Sets (Zadeh): Points have Memberships
- (Figure: fuzzy membership for “COOL”, membership from 0.0 to 1.0 plotted against temperature in °C)
- Evolutionary Computation can be Used to Optimize Fuzzy Control Systems
- Evolve Membership Functions/Rules

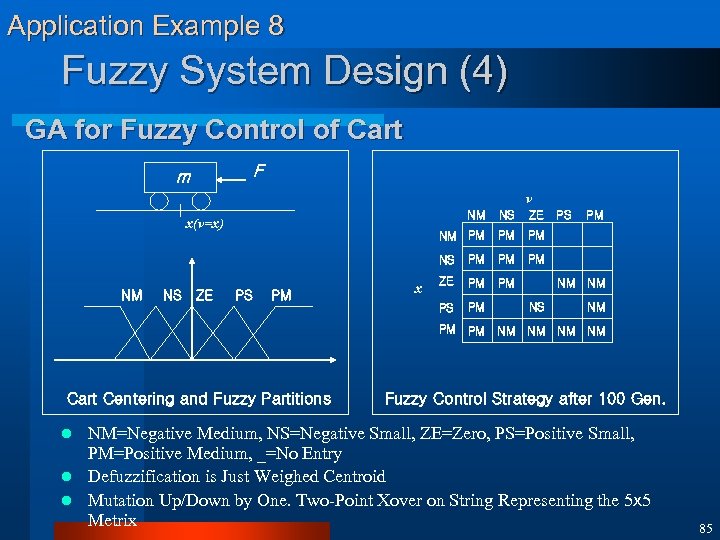

Application Example 8 Fuzzy System Design (4)
GA for Fuzzy Control of Cart
- (Figure: cart centering with force F, mass m, position x and velocity v; fuzzy partitions; 5 x 5 table of the fuzzy control strategy after 100 generations)
- NM = Negative Medium, NS = Negative Small, ZE = Zero, PS = Positive Small, PM = Positive Medium, _ = No Entry
- Defuzzification is just weighted centroid
- Mutation up/down by one
- Two-point crossover on the string representing the 5 x 5 matrix

Application Example 9 Data Mining (1)
- Data Mining Task
  - Types of problem to be solved:
  - Classification
  - Clustering
  - Dependence Modeling
  - etc.

Application Example 9 Data Mining (2)
- Basic Ideas of GAs in Data Mining
  - Candidate rules are represented as individuals of a population
  - Rule quality is computed by a fitness function
  - Using task-specific knowledge

Application Example 9 Data Mining (3)
- Classification with Genetic Algorithms
  - Each individual represents a rule set
    - i.e. an independent candidate solution
  - Each individual represents a single rule
    - A set of individuals (or entire population) represents a candidate solution (rule set)

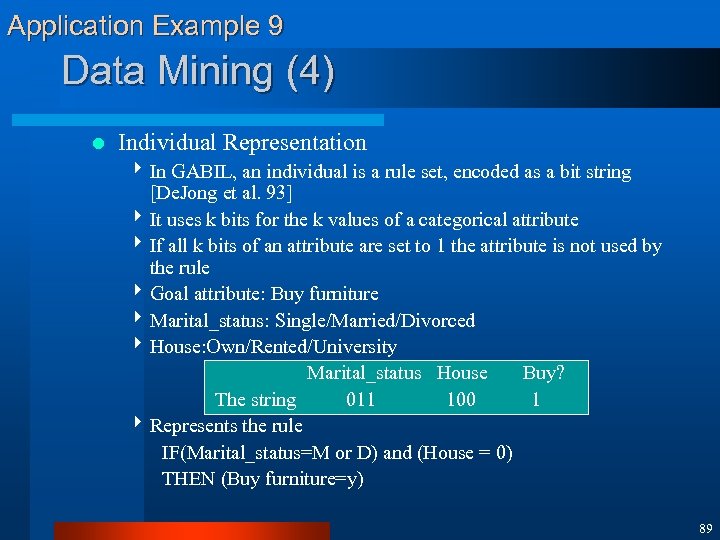

Application Example 9 Data Mining (4)
- Individual Representation
  - In GABIL, an individual is a rule set, encoded as a bit string [DeJong et al. 93]
  - It uses k bits for the k values of a categorical attribute
  - If all k bits of an attribute are set to 1, the attribute is not used by the rule
  - Goal attribute: Buy furniture
  - Marital_status: Single/Married/Divorced
  - House: Own/Rented/University
  - The string 011 100 1 (Marital_status, House, Buy?) represents the rule IF (Marital_status = M or D) and (House = O) THEN (Buy furniture = y)

Application Example 9 Data Mining (5)
- An individual is a variable-length string representing a set of fixed-length rules, e.g. rule 1: 011 100 1, rule 2: 101 110 0
- Mutation: traditional bit inversion
- Crossover: corresponding crossover points in the two parents must semantically match
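
The bit-string encoding on this slide can be decoded mechanically. The sketch below is not GABIL itself; it only illustrates the encoding rules stated above for the slide's two attributes. The `ATTRIBUTES` table, the function name `decode_rule`, and the output format are assumptions.

```python
# Attribute names and value order as listed on the slide.
ATTRIBUTES = [
    ("Marital_status", ["Single", "Married", "Divorced"]),
    ("House", ["Own", "Rented", "University"]),
]

def decode_rule(bits):
    """Turn a string such as '011 100 1' into a readable IF-THEN rule."""
    parts = bits.split()
    conditions = []
    for (name, values), mask in zip(ATTRIBUTES, parts):
        if set(mask) == {"1"}:
            continue  # all k bits set: the attribute is not used by the rule
        allowed = [v for v, bit in zip(values, mask) if bit == "1"]
        conditions.append(f"{name} in {allowed}")
    decision = "yes" if parts[-1] == "1" else "no"
    return "IF " + " AND ".join(conditions or ["TRUE"]) + f" THEN Buy_furniture = {decision}"

print(decode_rule("011 100 1"))
# IF Marital_status in ['Married', 'Divorced'] AND House in ['Own'] THEN Buy_furniture = yes
```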

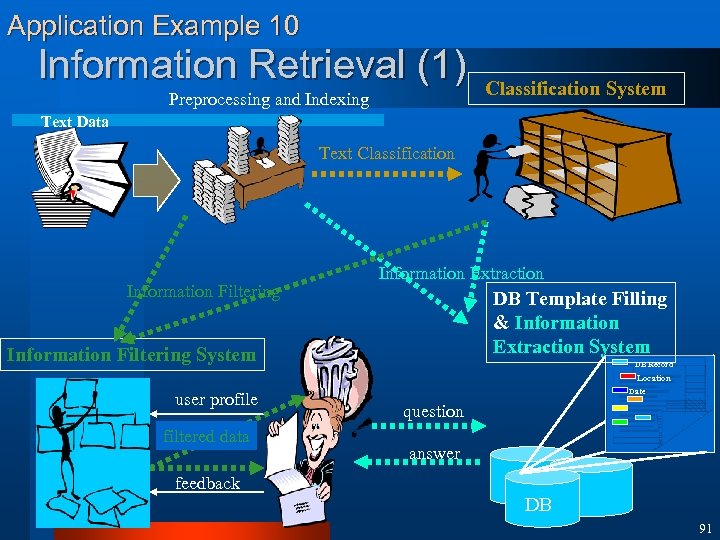

Application Example 10 Information Retrieval (1) (diagram: text data passes through preprocessing and indexing into three systems: a text classification system, an information filtering system driven by a user profile and feedback and returning filtered data, and an information extraction system performing template filling into database records such as Location and Date, supporting question answering)

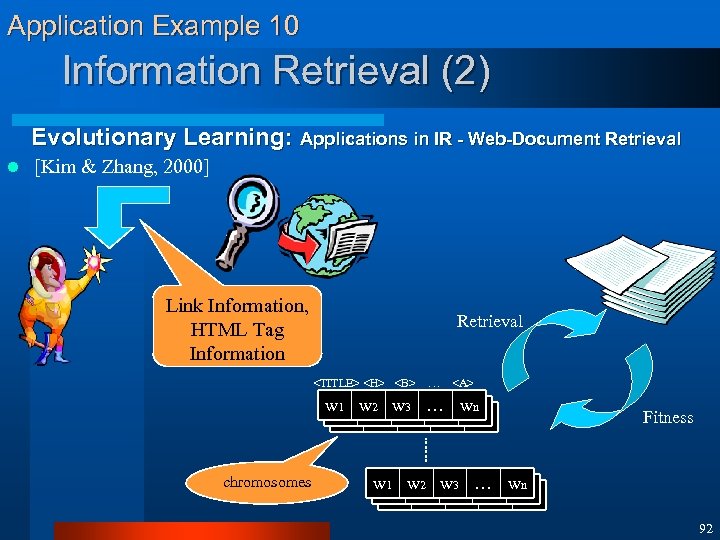

Application Example 10 Information Retrieval (2)
Evolutionary Learning: Applications in IR - Web-Document Retrieval
- [Kim & Zhang, 2000]
- Link information and HTML tag information (`<TITLE>`, `<H>`, `<B>`, …, `<A>`) are used for retrieval
- Chromosomes are weight vectors (w1, w2, w3, …, wn), each evaluated by a fitness value

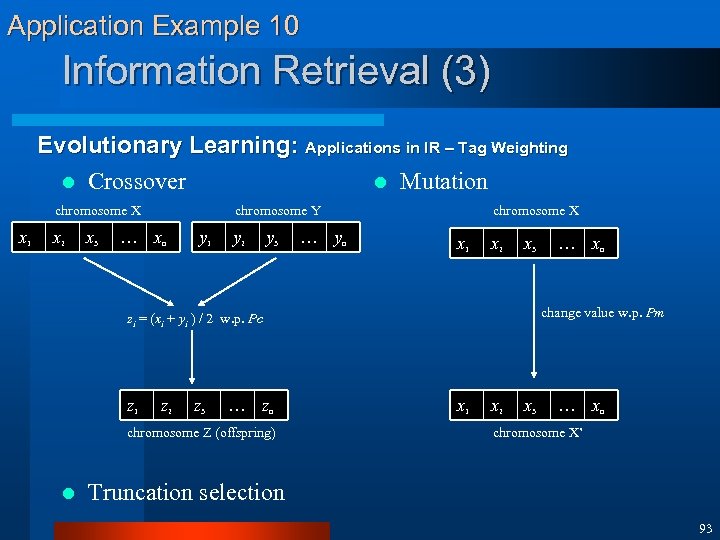

Application Example 10 Information Retrieval (3)
Evolutionary Learning: Applications in IR - Tag Weighting
- Crossover: chromosomes X = (x1, x2, x3, …, xn) and Y = (y1, y2, y3, …, yn) produce offspring Z = (z1, z2, z3, …, zn) with zi = (xi + yi) / 2, with probability Pc
- Mutation: chromosome X = (x1, x2, x3, …, xn) becomes X’ by changing a value with probability Pm
- Truncation selection
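
A minimal sketch of these three operators on real-valued weight vectors. The averaging rule zi = (xi + yi) / 2 is from the slide; everything else is an assumption made for illustration: the function names, drawing a mutated value uniformly from [low, high], and applying Pm per component.

```python
import random

def average_crossover(x, y):
    """Offspring z with z_i = (x_i + y_i) / 2."""
    return [(xi + yi) / 2 for xi, yi in zip(x, y)]

def mutate(x, pm, rng, low=0.0, high=1.0):
    """Replace each component by a fresh random value with probability pm."""
    return [rng.uniform(low, high) if rng.random() < pm else xi for xi in x]

def truncation_select(population, fitness, k):
    """Keep the k fittest individuals."""
    return sorted(population, key=fitness, reverse=True)[:k]

rng = random.Random(0)
z = average_crossover([1.0, 2.0, 0.0], [3.0, 6.0, 1.0])
print(z)                   # [2.0, 4.0, 0.5]
print(mutate(z, 0.5, rng))
```

In a full loop, crossover would be applied to a selected pair with probability Pc, and the pair copied unchanged otherwise.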

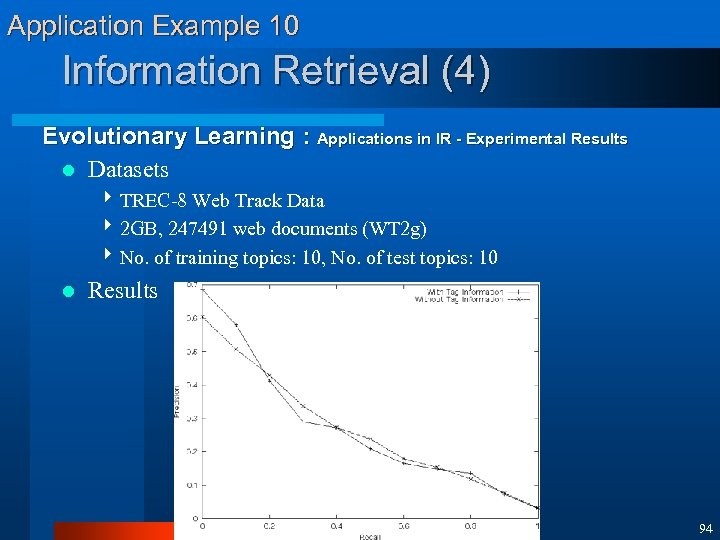

Application Example 10 Information Retrieval (4)
Evolutionary Learning: Applications in IR - Experimental Results
- Datasets
  - TREC-8 Web Track Data
  - 2 GB, 247491 web documents (WT2g)
  - No. of training topics: 10, No. of test topics: 10
- Results (shown in the figure)

![Application Example 11 Time Series Prediction (1)](https://present5.com/presentation/54c4edc6c55fd2ff61617dcd272dd0e2/image-95.jpg)

Application Example 11 Time Series Prediction (1)
- [Zhang & Joung, 2000]
- Autonomous model discovery: raw time series, preprocessing, Evolutionary Neural Trees (ENTs), combining neural trees (combine neural tree outputs), prediction

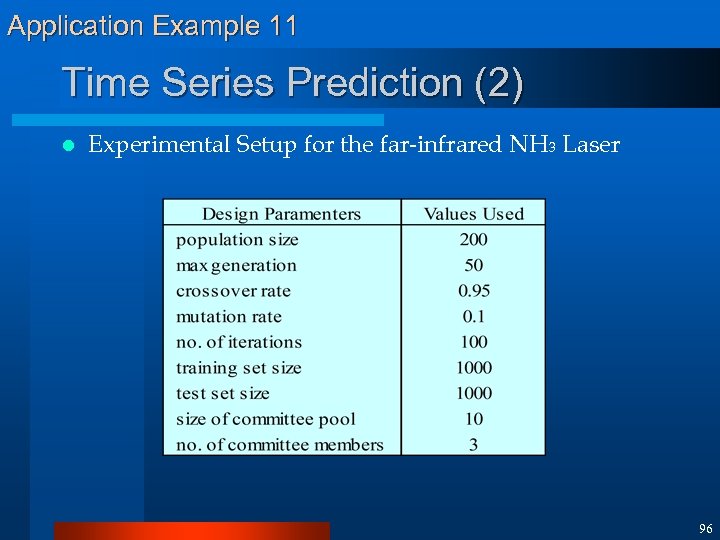

Application Example 11 Time Series Prediction (2)
- Experimental setup for the far-infrared NH3 laser

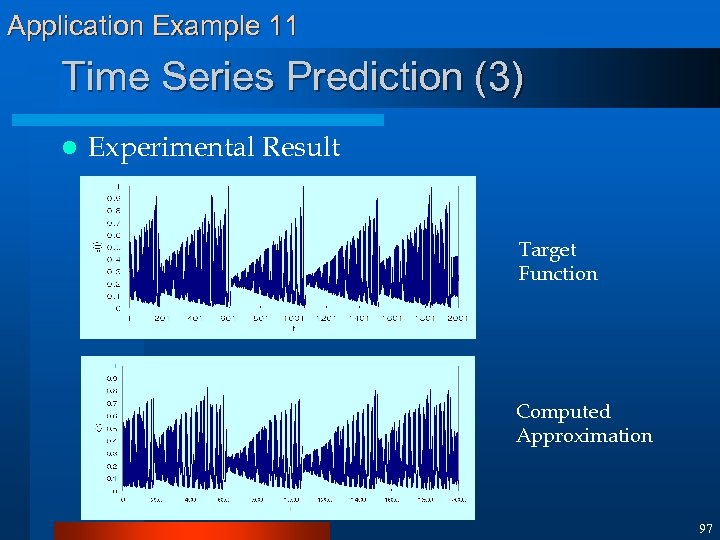

Application Example 11 Time Series Prediction (3)
- Experimental result (figure: target function vs. computed approximation)

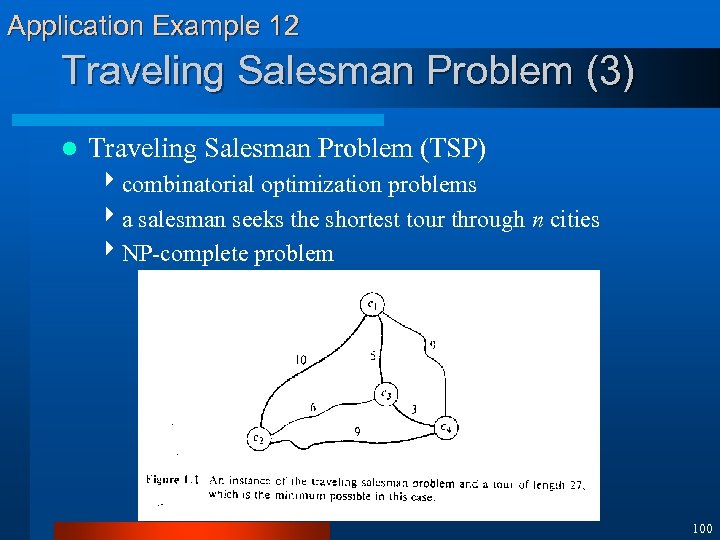

Application Example 12 Traveling Salesman Problem (1) This is a minimization problem l Given n cities, what is the shortest route to each city, visiting each city exactly once l Want to minimize total distance traveled l Also must obey the “Visit Once” constraint l 98

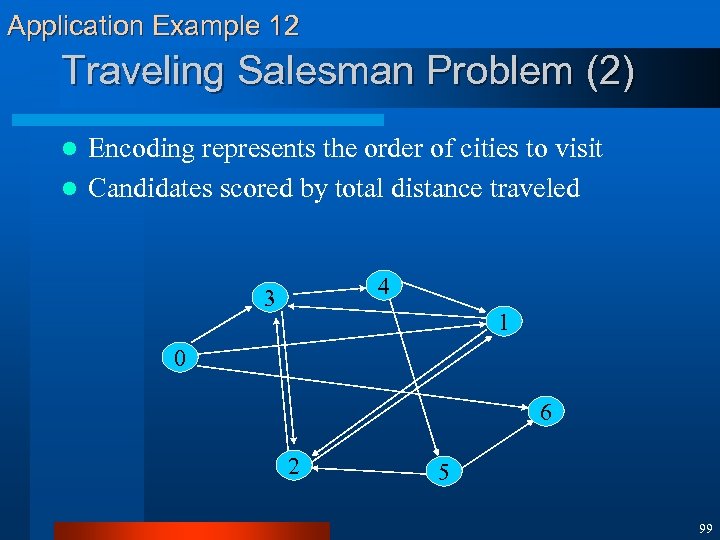

Application Example 12 Traveling Salesman Problem (2) Encoding represents the order of cities to visit l Candidates scored by total distance traveled l 4 3 1 0 6 2 5 99

Application Example 12 Traveling Salesman Problem (3) l Traveling Salesman Problem (TSP) 4 combinatorial optimization problems 4 a salesman seeks the shortest tour through n cities 4 NP-complete problem 100

Application Example 12 Traveling Salesman Problem (4)
Simple Example with the TSP
- The “house-call problem”
  - Problem: Doctor must visit patients once and only once and return home by the shortest possible path
- Difficulty: The number of possible routes increases faster than exponentially
  - 10 patients = 181,440 possible routes (9!/2)
  - 22 patients = about 2.6 x 10^19 possible routes (21!/2)
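
The growth in the number of routes can be checked directly. The sketch assumes the usual count for a symmetric TSP: with n stops on a closed tour, fixing the start and ignoring direction leaves (n-1)!/2 distinct tours. Whether home counts as one of the stops is not stated on the slide, so the function takes the total number of stops; `closed_tours` is a hypothetical name.

```python
import math

def closed_tours(stops):
    """Distinct closed tours through `stops` points, ignoring start and direction."""
    return math.factorial(stops - 1) // 2

for n in (5, 10, 22):
    print(n, closed_tours(n))
# 5 12
# 10 181440
# 22 25545471085854720000
```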

Application Example 12 Traveling Salesman Problem (5)
- Representation
  - Permutation Representation
    - Cities are listed in the order in which they are visited: [3 2 5 4 7 1 6 9 0 8] means the tour 3-2-5-4-7-1-6-9-0-8
    - Also called path representation or order representation
    - Leads to illegal tours if the traditional one-point crossover operator is used
  - Random Keys Representation
    - Encodes a solution with random numbers from (0, 1): [0.23 0.82 0.45 0.74 0.87 0.11 0.56 0.69 0.78]
    - Position i in the list represents city i
    - The random number in position i determines the visiting order of city i in a TSP tour
    - Sort random keys in ascending order to get the tour 6-1-3-7-8-4-9-2-5
    - Eliminates the infeasibility of the offspring
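
Decoding a random-keys chromosome amounts to an argsort. The sketch below uses the key vector from the slide with cities numbered from 1; the function name is hypothetical.

```python
def decode_random_keys(keys):
    """Return the tour obtained by visiting cities in ascending order of their keys."""
    order = sorted(range(len(keys)), key=keys.__getitem__)
    return [i + 1 for i in order]  # cities are numbered from 1

keys = [0.23, 0.82, 0.45, 0.74, 0.87, 0.11, 0.56, 0.69, 0.78]
print(decode_random_keys(keys))  # [6, 1, 3, 7, 8, 4, 9, 2, 5]
```

Any vector of keys decodes to a valid permutation, so standard crossover on the keys cannot produce an illegal tour.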

Application Example 13 Cooperating Robots (1)
- What is Evolutionary Robotics?
  - An attempt to create robots which evolve using various evolutionary computational methods
  - Evolve behaviors or competence modules implemented in various manners: several languages, relay, neuro chips, FPGAs, etc.
  - “Intelligence is emergent”
  - Presently limited mostly to evolution of the robot’s control software. However, some attempts to evolve hardware have begun.
  - GA and its variants are used. Most current attempts center around {population size = 50 ~ 500, generations = 50 ~ 500}

Application Example 13 Cooperating Robots (2) (figure contrasting Industrial Robots and Autonomous Mobile Robots; labels: OPEN !!, CLOSED)

Application Example 13 Cooperating Robots (3)
Cooperating Autonomous Robots

Application Example 13 Cooperating Robots (4)
- Why Build Cooperating Robots?
  - Increased scope for missions inherently distributed in:
    - Space
    - Time
    - Functionality
  - Increased reliability, robustness (through redundancy)
  - Decreased task completion time (through parallelism)
  - Decreased cost (through simpler individual robot design)

Application Example 13 Cooperating Robots (5)
- Cooperating Autonomous Robots: Application domain
  - Mining
  - Construction
  - Planetary exploration
  - Automated manufacturing
  - Search and rescue missions
  - Cleanup of hazardous waste
  - Industrial/household maintenance
  - Nuclear power plant decommissioning
  - Security, surveillance, and reconnaissance

Application Example 14 Co-evolving Soccer Softbots (1)
Co-evolving Soccer Softbots With Genetic Programming
- At RoboCup there are two "leagues": the "real" robot league and the "virtual" softbot league
- How do you do this with GP?
  - GP breeding strategies: homogeneous and heterogeneous
  - Decision of the basic set of functions with which to evolve players
  - Creation of an evaluation environment for our GP individuals

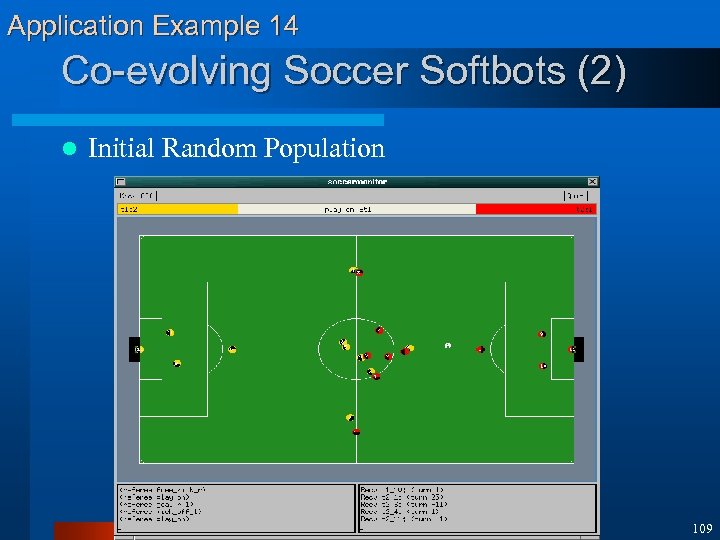

Application Example 14 Co-evolving Soccer Softbots (2)
- Initial Random Population

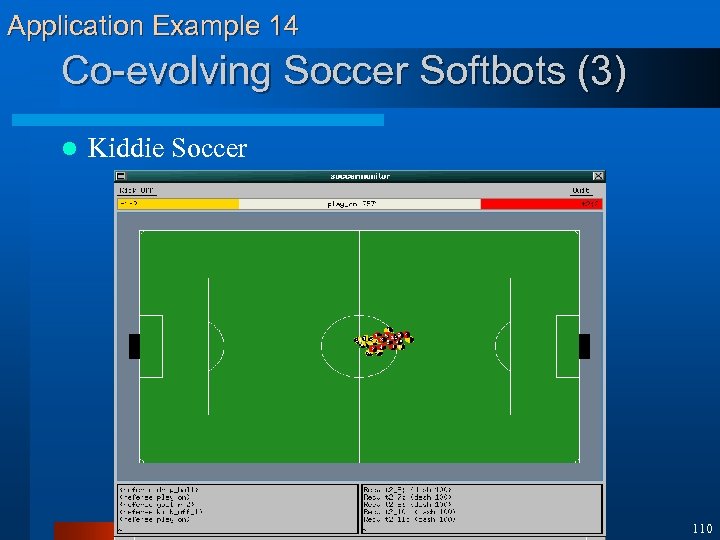

Application Example 14 Co-evolving Soccer Softbots (3)
- Kiddie Soccer

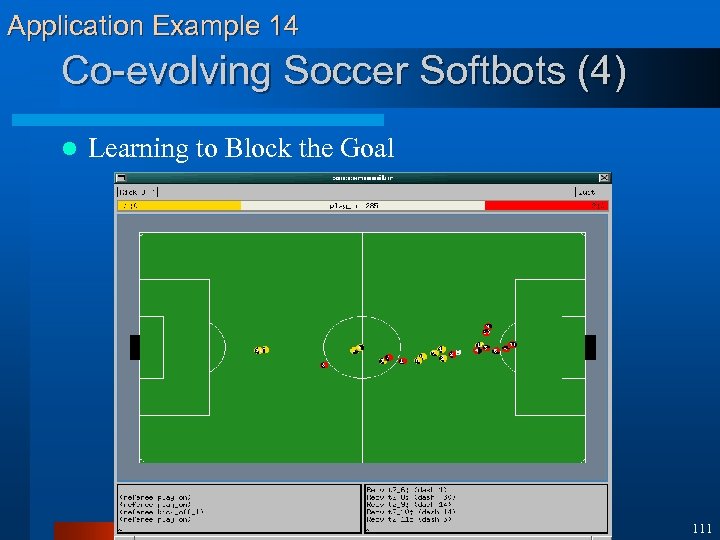

Application Example 14 Co-evolving Soccer Softbots (4)
- Learning to Block the Goal

Application Example 14 Co-evolving Soccer Softbots (5)
- Becoming Territorial

Application Example 15 Evolvable Hardware (1)
- Evolvable hardware vs. implementation of evolvable software (i.e. GP)
- Reconfigurable logic is too slow to make it worthwhile

Application Example 15 Evolvable Hardware (2)
- FPGAs
  - Bad, because they are designed for conventional electronic design
  - Good, because they can be abused and allow the exploitation of the physics
- What we need: FPMAs
  - Field Programmable Matter Arrays

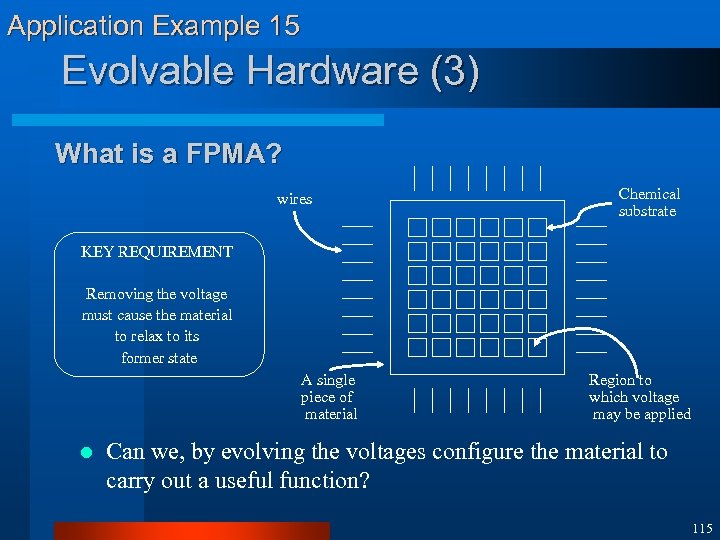

Application Example 15 Evolvable Hardware (3)
What is a FPMA?
- A single piece of material (chemical substrate) with wires
- Region to which voltage may be applied
- Key requirement: removing the voltage must cause the material to relax to its former state
- Can we, by evolving the voltages, configure the material to carry out a useful function?

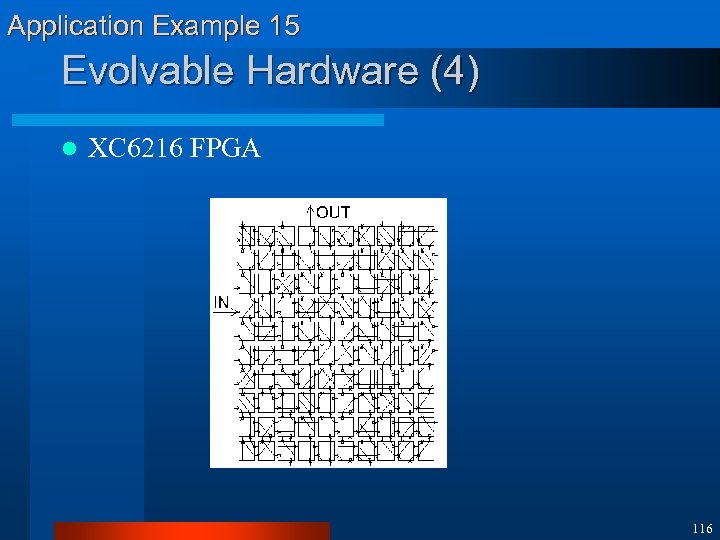

Application Example 15 Evolvable Hardware (4)
- XC6216 FPGA

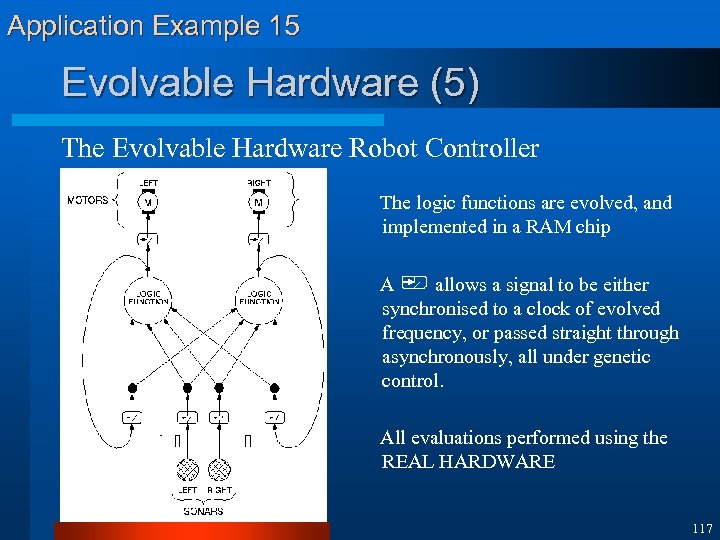

Application Example 15 Evolvable Hardware (5)
The Evolvable Hardware Robot Controller
- The logic functions are evolved, and implemented in a RAM chip
- A […] allows a signal to be either synchronised to a clock of evolved frequency, or passed straight through asynchronously, all under genetic control.
- All evaluations performed using the REAL HARDWARE

Application Example 15 Evolvable Hardware (6)

4. Current Issues

Innovative Techniques for EC
- Effective Operators
- Novel Representation
- Exploration/Exploitation
- Population Sizing
- Niching Methods
- Dynamic Fitness Evaluation
- Multi-objective Optimization
- Co-evolution
- Self-Adaptation
- EA/NN/Fuzzy Hybrids
- Distribution Estimation Algorithms
- Parallel Evolutionary Algorithms
- Molecular Evolutionary Computation

1000-Pentium Beowulf-Style Cluster Computer for Parallel GP

Molecular Evolutionary Computing (figure: bitstring 01101010001 and DNA sequence ATGCTCGAAGCT)

Applications of EAs
- Optimization
- Machine learning
- Data mining
- Intelligent Agents
- Bioinformatics
- Engineering Design
- Telecommunications
- Evolvable Hardware
- Evolutionary Robotics

5. References and URLs

Journals & Conferences
- Journals:
  - Evolutionary Computation (MIT Press)
  - Trans. on Evolutionary Computation (IEEE)
  - Genetic Programming & Evolvable Hardware (Kluwer)
  - Evolutionary Optimization
- Conferences:
  - Congress on Evolutionary Computation (CEC)
  - Genetic and Evolutionary Computation Conference (GECCO)
  - Parallel Problem Solving from Nature (PPSN)

WWW Resources
- Hitch-Hiker’s Guide to Evolutionary Computation: http://alife.santafe.edu/~joke/encore/www (FAQ for comp.ai.genetic)
- Genetic Algorithms Archive: http://www.aic.nrl.navy.mil/galist (repository for GA related information, conferences, etc.)
- EVONET, European Network of Excellence on Evolutionary Comp.: http://www.dcs.napier.ac.uk/evonet
- Genetic Programming Notebook: http://www.geneticprogramming.com (software, people, papers, tutorial, FAQs)

Books
- Bäck, Th., Evolutionary Algorithms in Theory and Practice, Oxford University Press, New York, 1996.
- Mitchell, M., An Introduction to Genetic Algorithms, MIT Press, Cambridge, MA, 1996.
- Fogel, D., Evolutionary Computation: Toward a New Philosophy of Machine Intelligence, IEEE Press, NJ, 1995.
- Schwefel, H-P., Evolution and Optimum Seeking, Wiley, New York, 1995.
- Koza, J., Genetic Programming, MIT Press, Cambridge, MA, 1992.
- Goldberg, D., Genetic Algorithms in Search and Optimization, Addison-Wesley, Reading, MA, 1989.
- Holland, J., Adaptation in Natural and Artificial Systems, University of Michigan Press, Ann Arbor, 1975.
- Rechenberg, I., Evolutionsstrategie: Optimierung technischer Systeme nach Prinzipien der biologischen Evolution, Frommann-Holzboog Verlag, Stuttgart, 1973.
- Fogel, L. J., Owens, A. J., and Walsh, M. J., Artificial Intelligence through Simulated Evolution, John Wiley, NY, 1966.

For more information: http://scai.snu.ac.kr/