- Number of slides: 22

Evaluation Use in Program & Policy Change

Michael Coplen, Michael Zuschlag, Joyce Ranney, Michael Harnar

This research was funded by the Federal Railroad Administration Office of Research and Development.

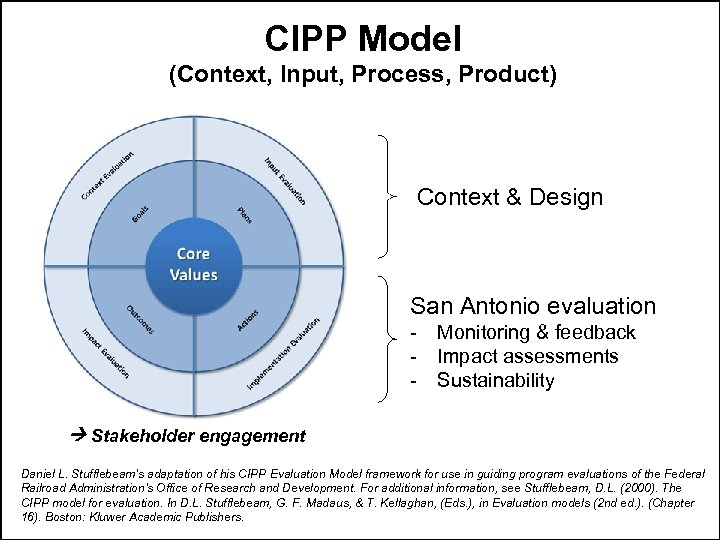

CIPP Model (Context, Input, Process, Product)

- Context & Design
- San Antonio evaluation
  - Monitoring & feedback
  - Impact assessments
  - Sustainability
- Stakeholder engagement

Daniel L. Stufflebeam's adaptation of his CIPP Evaluation Model framework for use in guiding program evaluations of the Federal Railroad Administration's Office of Research and Development. For additional information, see Stufflebeam, D. L. (2000). The CIPP model for evaluation. In D. L. Stufflebeam, G. F. Madaus, & T. Kellaghan (Eds.), Evaluation models (2nd ed., Chapter 16). Boston: Kluwer Academic Publishers.

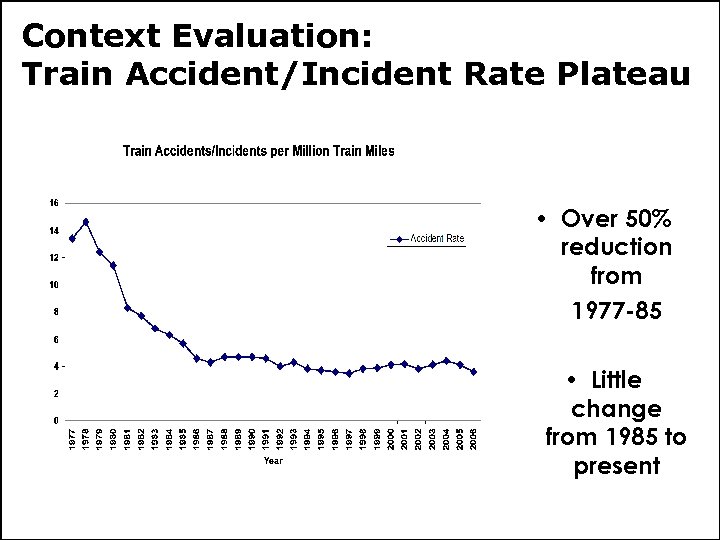

Context Evaluation: Train Accident/Incident Rate Plateau

- Over 50% reduction from 1977 to 1985
- Little change from 1985 to present

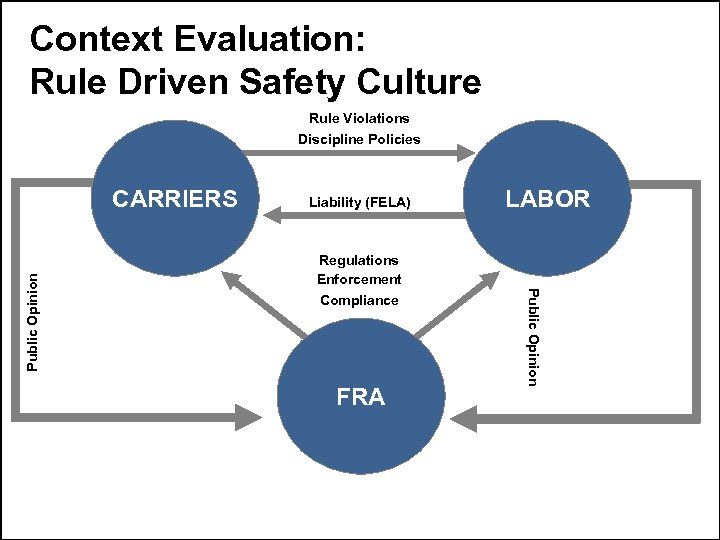

Context Evaluation: Rule-Driven Safety Culture

Diagram labels: Rule Violations; Discipline Policies; Liability (FELA); Regulations; Enforcement; Compliance; FRA; Labor; Public Opinion; Carriers

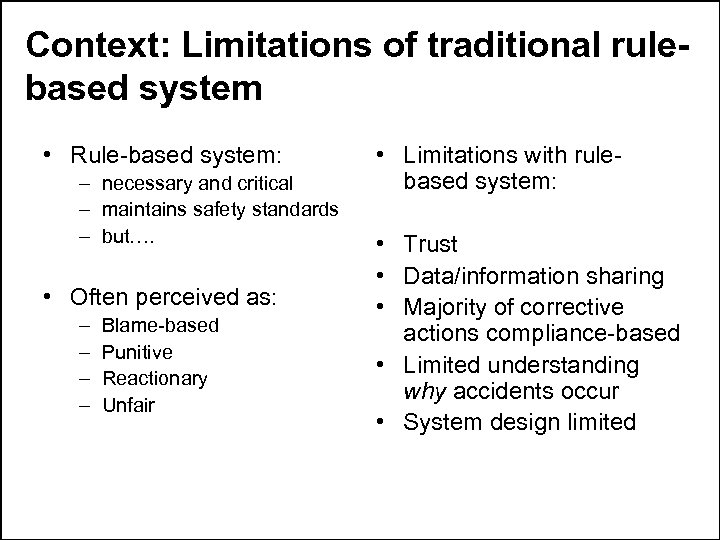

Context: Limitations of traditional rule-based system

- Rule-based system:
  - necessary and critical
  - maintains safety standards
  - but…
- Often perceived as:
  - Blame-based
  - Punitive
  - Reactionary
  - Unfair
- Limitations with rule-based system:
  - Trust
  - Data/information sharing
  - Majority of corrective actions compliance-based
  - Limited understanding why accidents occur
  - System design limited

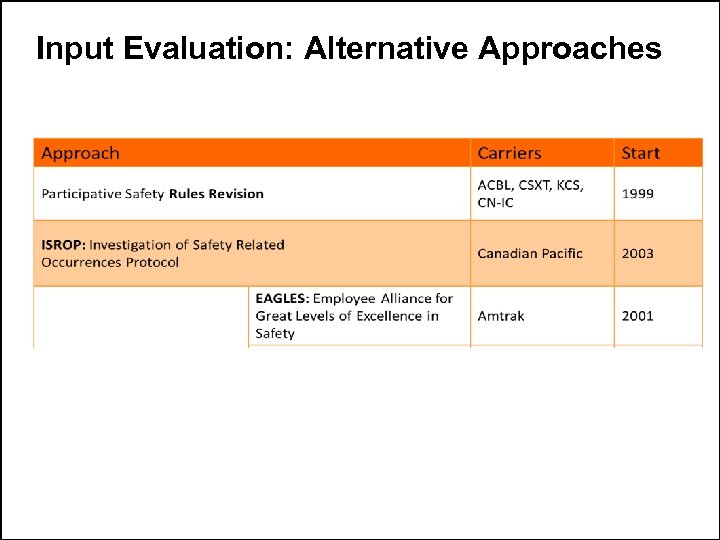

Input Evaluation: Alternative Approaches

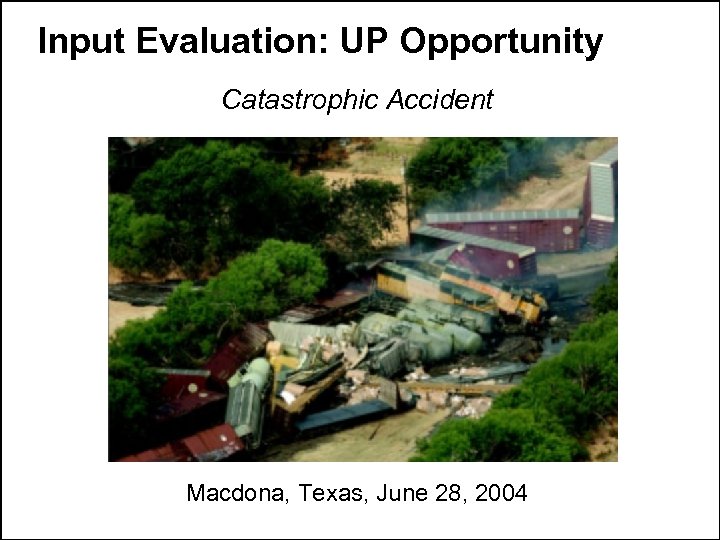

Input Evaluation: UP Opportunity

Catastrophic Accident: Macdona, Texas, June 28, 2004

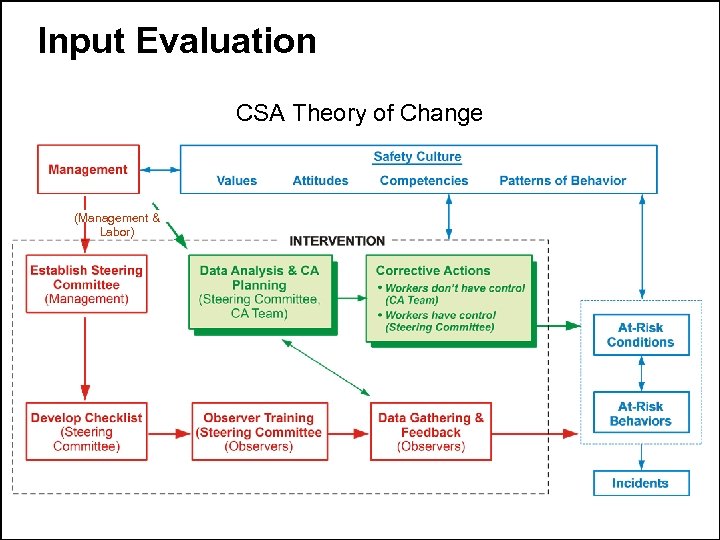

Input Evaluation: CSA Theory of Change (Management & Labor)

What is Clear Signal for Action?

Diagram labels: Data; Peer-to-peer Feedback; Safer Behavior; Continuous Improvement; Safer Culture; Safer Conditions; Safety Leadership

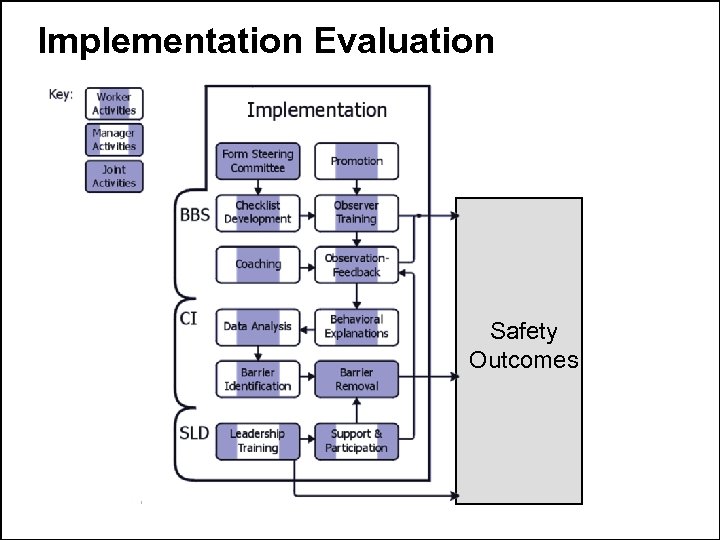

Implementation Evaluation: Safety Outcomes

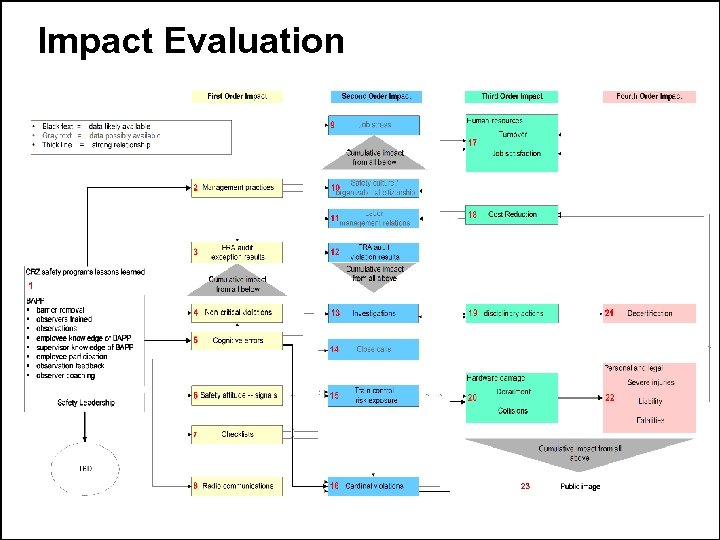

Impact Evaluation

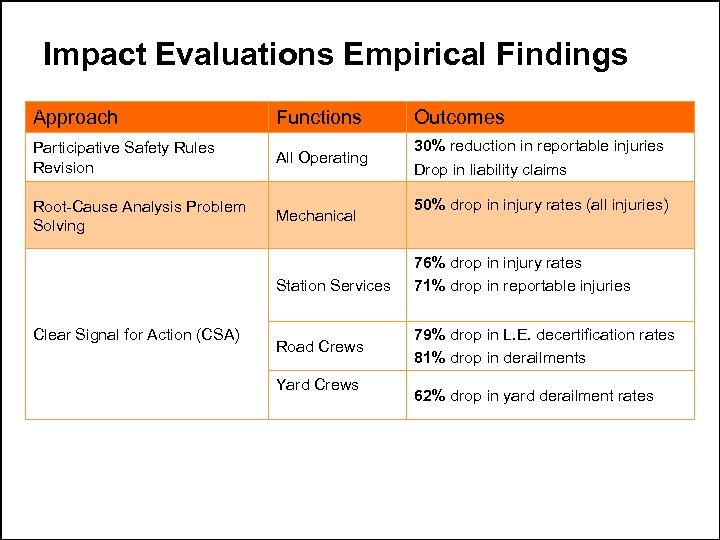

Impact Evaluations: Empirical Findings

Approaches: Participative Safety Rules Revision; Root-Cause Analysis Problem Solving; Clear Signal for Action (CSA)

Functions: All Operating; Mechanical; Station Services; Road Crews; Yard Crews

Outcomes:

- 30% reduction in reportable injuries
- Drop in liability claims
- 50% drop in injury rates (all injuries)
- 76% drop in injury rates
- 71% drop in reportable injuries
- 79% drop in L.E. decertification rates
- 81% drop in derailments
- 62% drop in yard derailment rates
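
The percentage drops listed above compare a rate before and after an intervention. A minimal Python sketch of that arithmetic follows. The function names, the default normalization of 200,000 exposure hours, and all counts are hypothetical illustrations, not data or methods taken from the evaluations.

```python
def rate_per_exposure(events: int, exposure_hours: float, per: float = 200_000.0) -> float:
    """Events normalized to a fixed amount of exposure (assumed default: per 200,000 hours)."""
    if exposure_hours <= 0:
        raise ValueError("exposure_hours must be positive")
    return events * per / exposure_hours


def percent_drop(baseline_rate: float, followup_rate: float) -> float:
    """Percent reduction from baseline; a negative result means the rate rose."""
    if baseline_rate <= 0:
        raise ValueError("baseline_rate must be positive")
    return 100.0 * (baseline_rate - followup_rate) / baseline_rate


# Hypothetical counts, for illustration only.
baseline = rate_per_exposure(events=20, exposure_hours=1_000_000)
followup = rate_per_exposure(events=5, exposure_hours=1_000_000)
drop = percent_drop(baseline, followup)
print(f"baseline={baseline}, followup={followup}, drop={drop}%")
```

Normalizing by exposure before comparing keeps a change in workforce size or hours worked from being mistaken for a change in safety.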

CSA Industry Impacts: CSA-like programs

- Union Pacific Railroad – System Wide Total Safety Culture Program
- Toronto Transit – System Wide Safety Culture Program
- Federal Railroad Administration – Risk Reduction Program
- Congress – HR 2095: Rail Safety Improvement Act of 2008
- Amtrak – Safe-2-Safer Program
- BNSF – Safety Leadership Development

Stakeholder Engagement Strategies

Engagement, buy-in, and commitment strategies from all key stakeholders

Clusters

- External Review Teams
- Steering Committees
- Feedback meetings
- Interviews
- Focus groups
- Surveys

Also see: A Practical Guide for Engaging Stakeholders in Developing Evaluation Questions, Robert Wood Johnson Foundation Evaluation Series, Hallie Preskill and Nathalie Jones, 2009 (www.rwjf.org)

Evaluation Use: Lessons Learned

- Instrumental Use – Findings are a basis for action and directly inform decisions and policy making
  - Rail Safety Improvement Act of 2008
- Process Use – Changes in thinking, attitudes, and behavior, and organizational change
  - Labor/management communication and organizational policies (UP)
- Conceptual Use – Delayed influence on conceptual thinking about a program or policy
  - Amtrak Safe-2-Safer Program
  - Joe Boardman: FRA Administrator, then Amtrak CEO

Detail slides

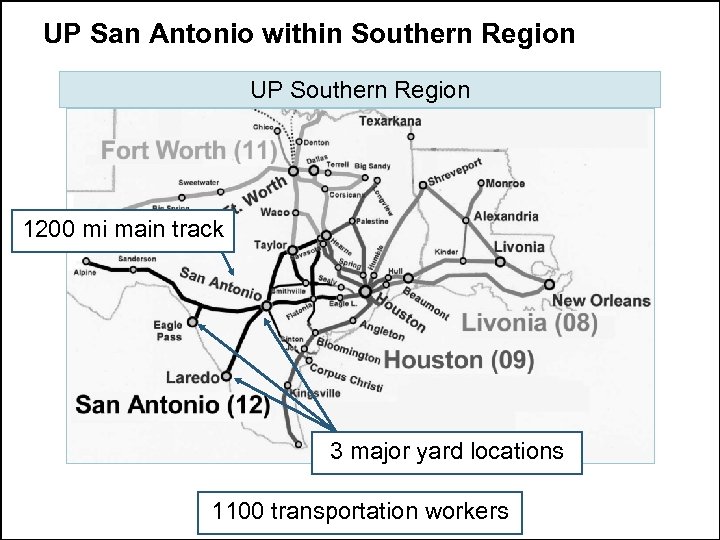

UP San Antonio within Southern Region

UP Southern Region

- 1200 mi main track
- 3 major yard locations
- 1100 transportation workers

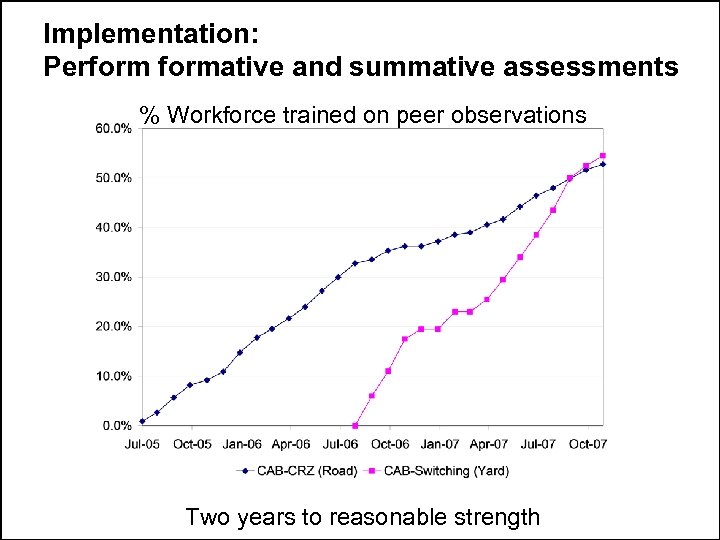

Implementation: Performative and summative assessments

- % Workforce trained on peer observations
- Two years to reasonable strength

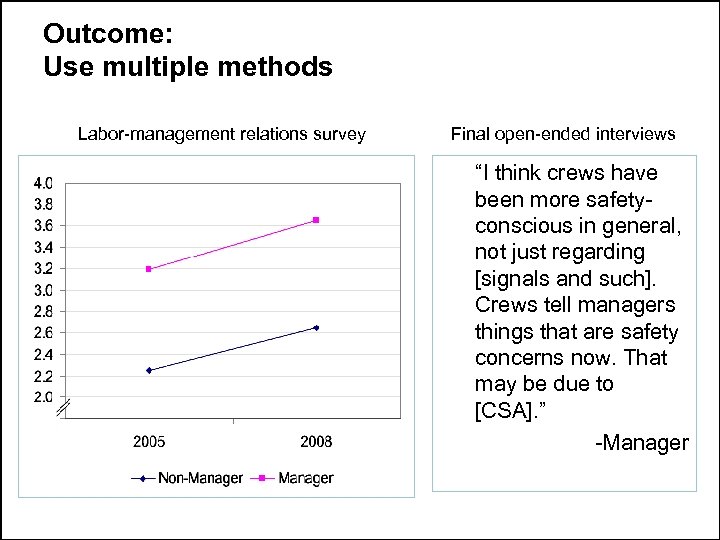

Outcome: Use multiple methods

- Labor-management relations survey
- Final open-ended interviews

“I think crews have been more safety-conscious in general, not just regarding [signals and such]. Crews tell managers things that are safety concerns now. That may be due to [CSA].” -Manager

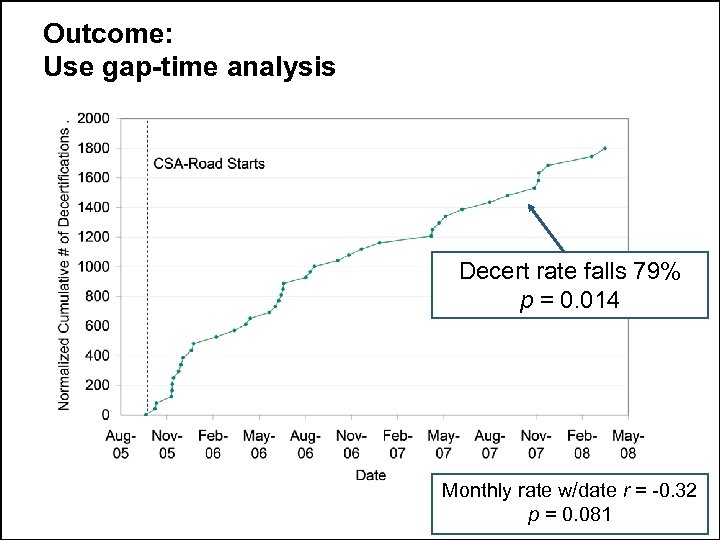

Outcome: Use gap-time analysis

- Decert rate falls 79% (p = 0.014)
- Monthly rate w/date: r = -0.32, p = 0.081

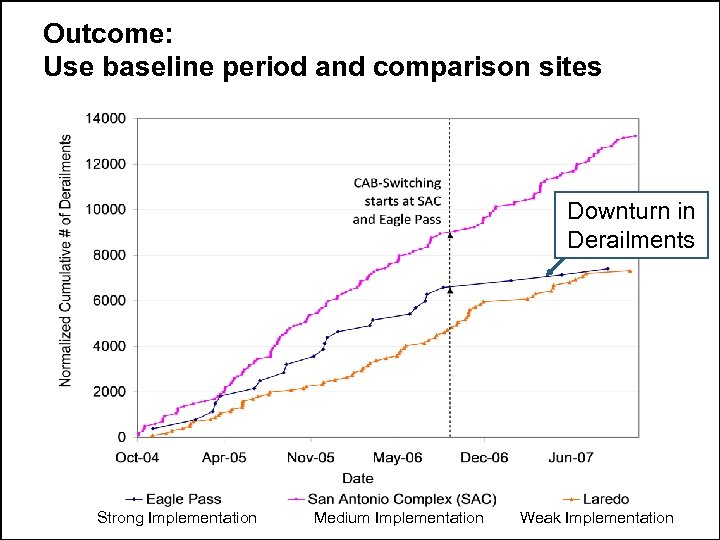
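
As a rough illustration of the two statistics on the gap-time slide, the Python sketch below assumes that gap-time analysis here means examining the intervals between successive events (such as decertifications), and that the r value is a Pearson correlation between monthly rate and date. The function names and all dates and rates are hypothetical; the sketch does not reproduce the slide's figures or its significance tests.

```python
from datetime import date
from math import sqrt


def gap_times(event_dates):
    """Days between consecutive events, after sorting the dates."""
    ordered = sorted(event_dates)
    return [(later - earlier).days for earlier, later in zip(ordered, ordered[1:])]


def pearson_r(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mx = sum(xs) / len(xs)
    my = sum(ys) / len(ys)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / sqrt(sxx * syy)


# Hypothetical event dates before and after a program start.
before = [date(2004, 1, 1), date(2004, 1, 21), date(2004, 2, 10)]
after = [date(2005, 1, 1), date(2005, 4, 11), date(2005, 7, 20)]
gaps_before = gap_times(before)
gaps_after = gap_times(after)

# Hypothetical monthly rates, indexed by month number.
months = [1, 2, 3, 4, 5, 6]
monthly_rate = [5.0, 4.0, 4.0, 3.0, 2.0, 2.0]
r = pearson_r(months, monthly_rate)
print(gaps_before, gaps_after, round(r, 2))
```

Longer gaps between events after the program start correspond to a lower event rate, and a negative r indicates that the monthly rate tends to fall as time passes.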

Outcome: Use baseline period and comparison sites

Downturn in Derailments

Chart panels: Strong Implementation; Medium Implementation; Weak Implementation
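
A baseline period and comparison sites can be combined in a simple difference-in-differences calculation: the change at the program site minus the change at a comparison site over the same periods. The Python sketch below uses hypothetical names and rates. It shows one common way to combine the two, not necessarily the analysis used in this evaluation.

```python
from dataclasses import dataclass


@dataclass
class SiteRates:
    """Event rates for one site in the baseline and program periods."""
    baseline: float
    program: float

    def change(self) -> float:
        """Program-period rate minus baseline rate (negative means a decline)."""
        return self.program - self.baseline


def difference_in_differences(treated: SiteRates, comparison: SiteRates) -> float:
    """Change at the treated site beyond the change seen at the comparison site."""
    return treated.change() - comparison.change()


# Hypothetical derailment rates, for illustration only.
program_site = SiteRates(baseline=8.0, program=3.0)
comparison_site = SiteRates(baseline=8.0, program=7.0)
effect = difference_in_differences(program_site, comparison_site)
print(effect)
```

Subtracting the comparison site's change removes trends that affected both sites, so the remainder is a better estimate of what the program itself contributed.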

Employee Realities

- Distrust of management
- Perceived blame for accidents/injuries
- Negative safety communications
- Poor labor-management relations
- Safety communications vacuum at employee level means lack of understanding of causal factors in accidents/injuries
