
- Number of slides: 37

Evaluation of Crawling Policies for a Web-Repository Crawler
Frank McCown & Michael L. Nelson
Old Dominion University, Norfolk, Virginia, USA
HT'06, Odense, Denmark, August 23, 2006

Alternate Models of Preservation

- Lazy Preservation: let Google, IA et al. preserve your website
- Just-In-Time Preservation: find a “good enough” replacement web page
- Shared Infrastructure Preservation: push your content to sites that might preserve it
- Web Server Enhanced Preservation: use Apache modules to create archival-ready resources

Image from: http://www.proex.ufes.br/arsm/knots_interlaced.htm

Outline

- Web page threats
- Web Infrastructure
- Warrick
  - architectural description
  - crawling policies
  - future work

- Black hat: http://img.webpronews.com/securitypronews/110705blackhat.jpg
- Virus image: http://polarboing.com/images/topics/misc/story.computer.virus_1137794805.jpg
- Hard drive: http://www.datarecoveryspecialist.com/images/head-crash-2.jpg

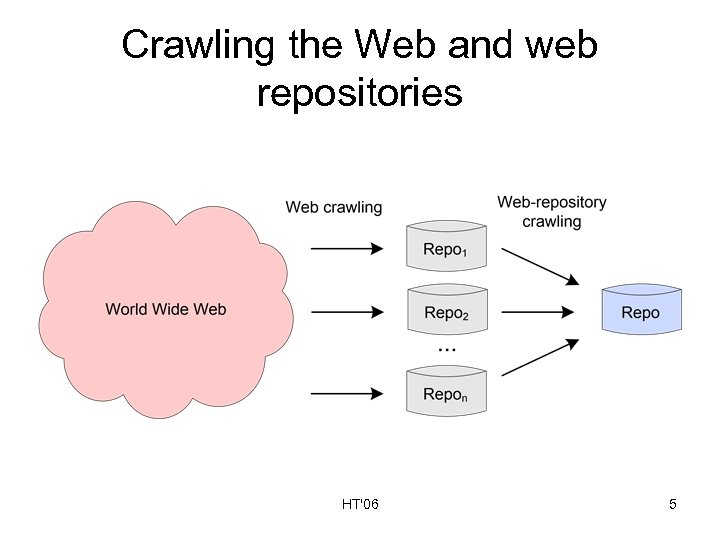

Crawling the Web and web repositories

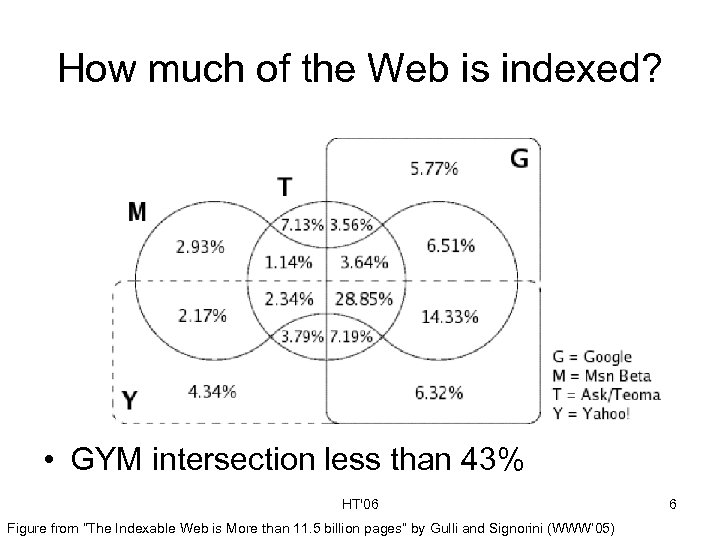

How much of the Web is indexed?

- GYM (Google, Yahoo, MSN) intersection is less than 43%

Figure from “The Indexable Web is More than 11.5 billion pages” by Gulli and Signorini (WWW’05)

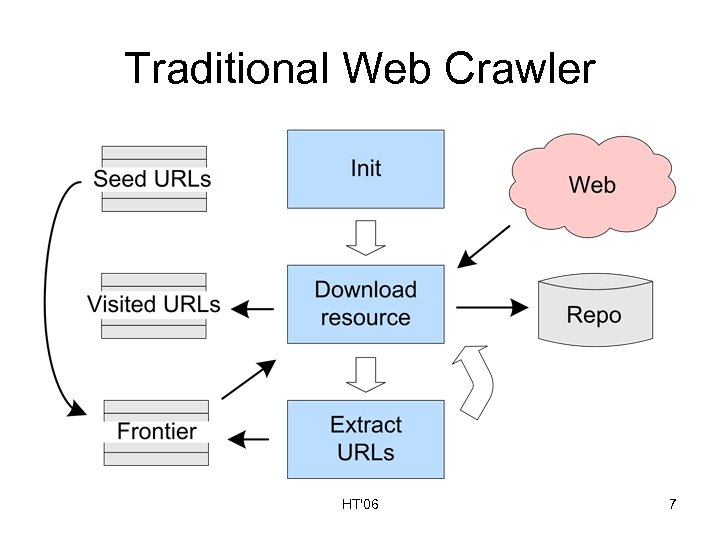

Traditional Web Crawler

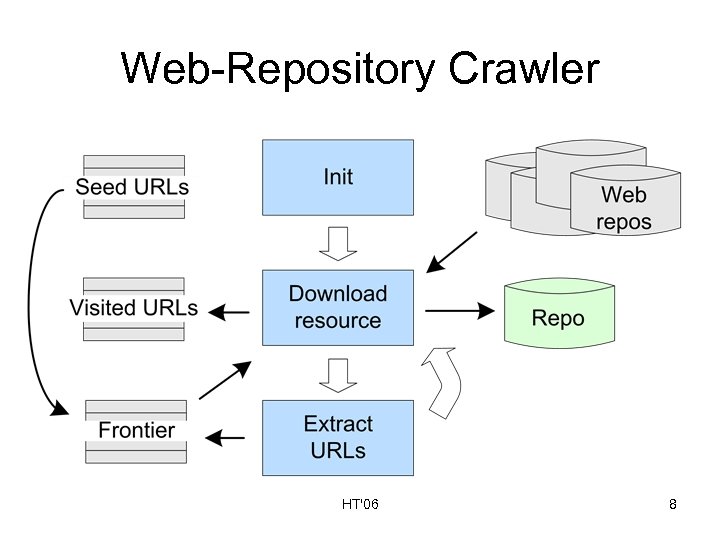

Web-Repository Crawler

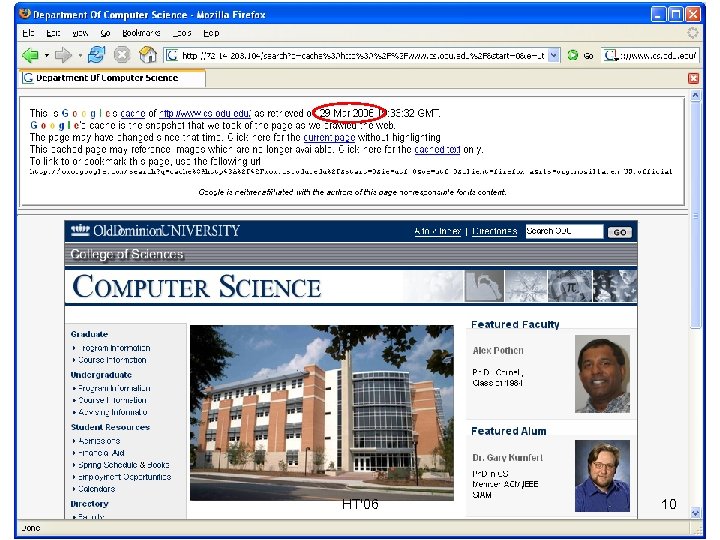

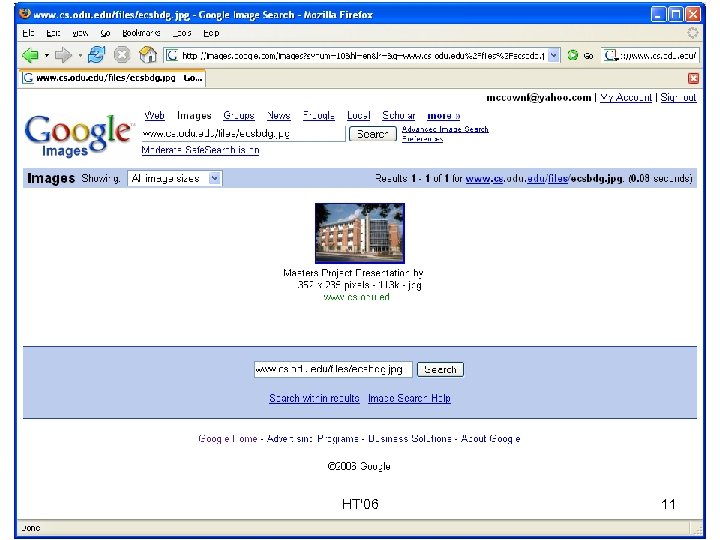

Cached Image

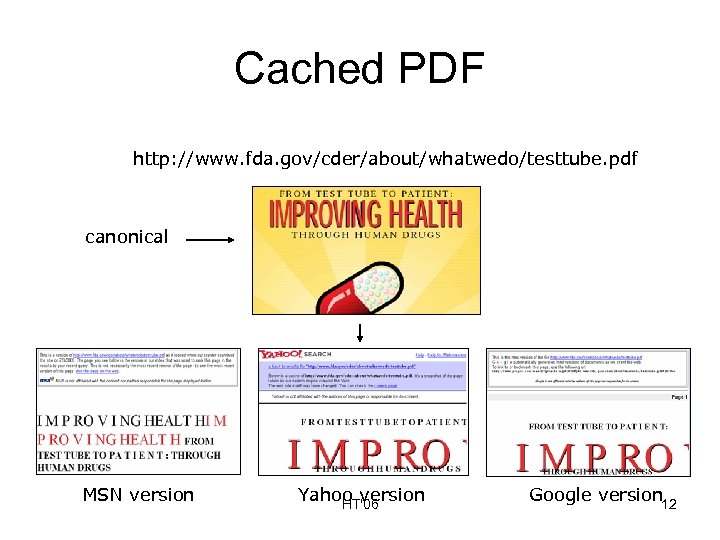

Cached PDF: http://www.fda.gov/cder/about/whatwedo/testtube.pdf

Panels: canonical version, MSN version, Yahoo version, Google version

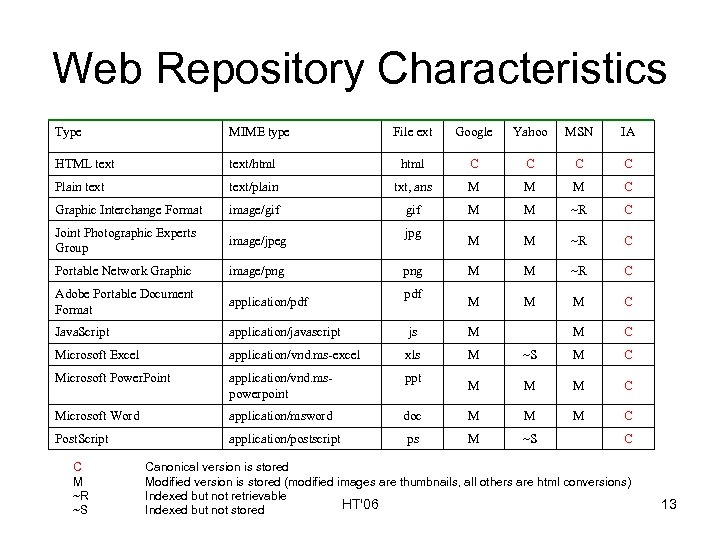

Web Repository Characteristics

| Type | MIME type | File ext | Google | Yahoo | MSN | IA |
|---|---|---|---|---|---|---|
| HTML | text/html | html | C | C | C | C |
| Plain text | text/plain | txt, ans | M | M | M | C |
| Graphics Interchange Format | image/gif | gif | M | M | ~R | C |
| Joint Photographic Experts Group | image/jpeg | jpg | M | M | ~R | C |
| Portable Network Graphics | image/png | png | M | M | ~R | C |
| Adobe Portable Document Format | application/pdf | pdf | M | M | M | C |
| JavaScript | application/javascript | js | M | M | | C |
| Microsoft Excel | application/vnd.ms-excel | xls | M | ~S | M | C |
| Microsoft PowerPoint | application/vnd.ms-powerpoint | ppt | M | M | M | C |
| Microsoft Word | application/msword | doc | M | M | M | C |
| PostScript | application/postscript | ps | M | ~S | | C |

- C: canonical version is stored
- M: modified version is stored (modified images are thumbnails, all others are HTML conversions)
- ~R: indexed but not retrievable
- ~S: indexed but not stored

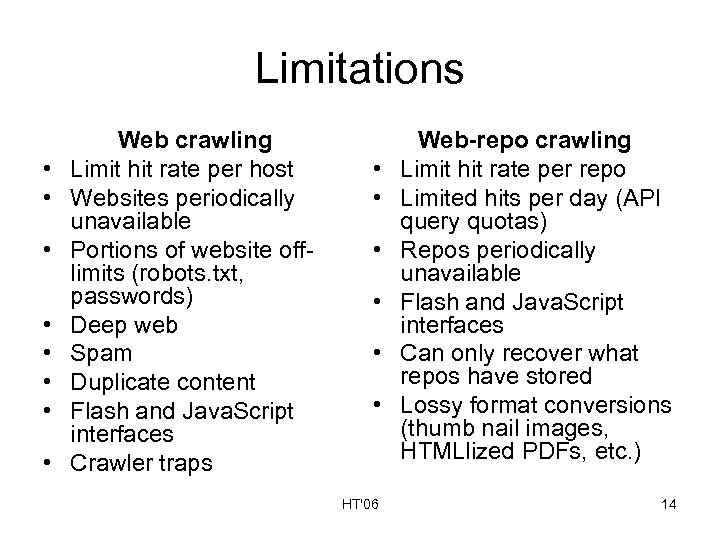

Limitations

Web crawling:

- Limit hit rate per host
- Websites periodically unavailable
- Portions of website off-limits (robots.txt, passwords)
- Deep web
- Spam
- Duplicate content
- Flash and JavaScript interfaces
- Crawler traps

Web-repo crawling:

- Limit hit rate per repo
- Limited hits per day (API query quotas)
- Repos periodically unavailable
- Flash and JavaScript interfaces
- Can only recover what repos have stored
- Lossy format conversions (thumbnail images, HTMLized PDFs, etc.)

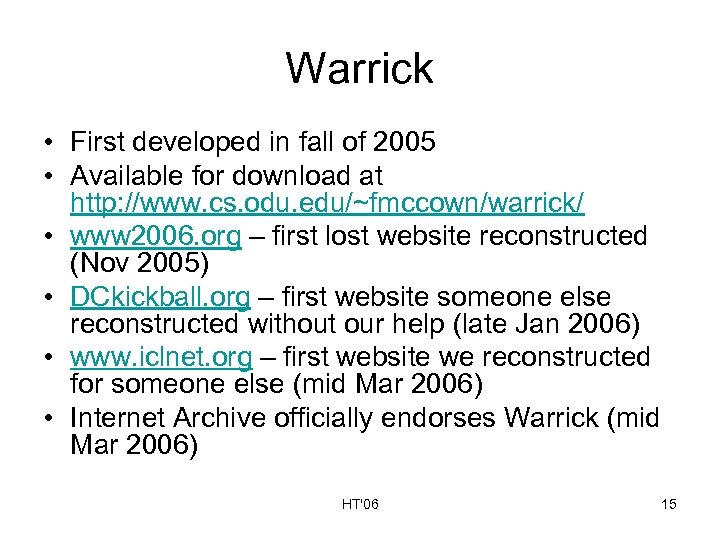

Warrick

- First developed in fall of 2005
- Available for download at http://www.cs.odu.edu/~fmccown/warrick/
- www2006.org: first lost website reconstructed (Nov 2005)
- DCkickball.org: first website someone else reconstructed without our help (late Jan 2006)
- www.iclnet.org: first website we reconstructed for someone else (mid Mar 2006)
- Internet Archive officially endorses Warrick (mid Mar 2006)

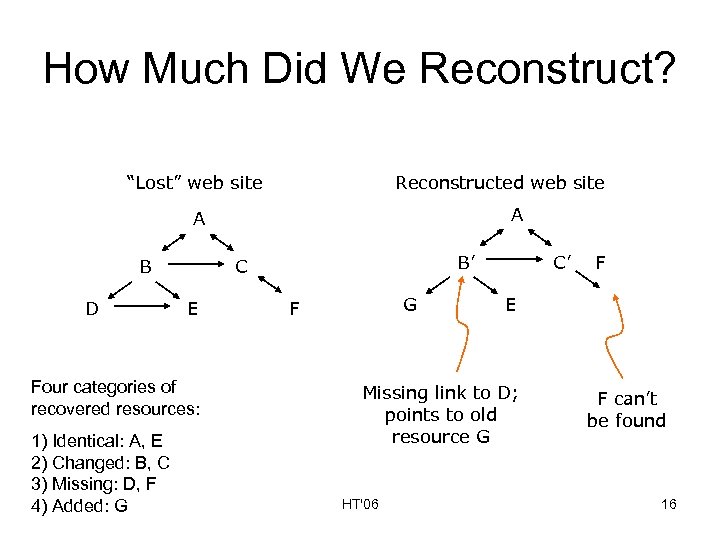

How Much Did We Reconstruct?

Figure: a “lost” web site (resources A through F) next to the reconstructed web site (A, B’, C’, E, G). The reconstructed site is missing its link to D and points to old resource G; F can’t be found.

Four categories of recovered resources:

1. Identical: A, E
2. Changed: B, C
3. Missing: D, F
4. Added: G
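The four categories can be computed by comparing the lost site with its reconstruction, URL by URL. The following is a minimal sketch, not Warrick's code: the function names and the dictionary representation are illustrative, and the denominators used for the three fractions (original size for changed and missing, reconstructed size for added) are an assumption that the slides do not spell out.

```python
def classify(original, reconstructed):
    """Sort resources into identical, changed, missing, and added sets.

    Both arguments map a URL to its content (or a content hash).
    """
    identical = {u for u in original
                 if u in reconstructed and original[u] == reconstructed[u]}
    changed = {u for u in original
               if u in reconstructed and original[u] != reconstructed[u]}
    missing = {u for u in original if u not in reconstructed}
    added = {u for u in reconstructed if u not in original}
    return identical, changed, missing, added


def reconstruction_fractions(original, reconstructed):
    """Return (changed, missing, added) as fractions.

    Assumption: changed and missing are relative to the original site,
    added is relative to the reconstructed site.
    """
    _, changed, missing, added = classify(original, reconstructed)
    return (len(changed) / len(original),
            len(missing) / len(original),
            len(added) / len(reconstructed))


# The example from the slide: B and C come back altered, D and F are lost,
# and G is an old resource that the lost site no longer had.
original = {"A": "a", "B": "b", "C": "c", "D": "d", "E": "e", "F": "f"}
reconstructed = {"A": "a", "B": "b'", "C": "c'", "E": "e", "G": "g"}
identical, changed, missing, added = classify(original, reconstructed)
```

For this example the sets come out as Identical {A, E}, Changed {B, C}, Missing {D, F}, and Added {G}, matching the list above.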

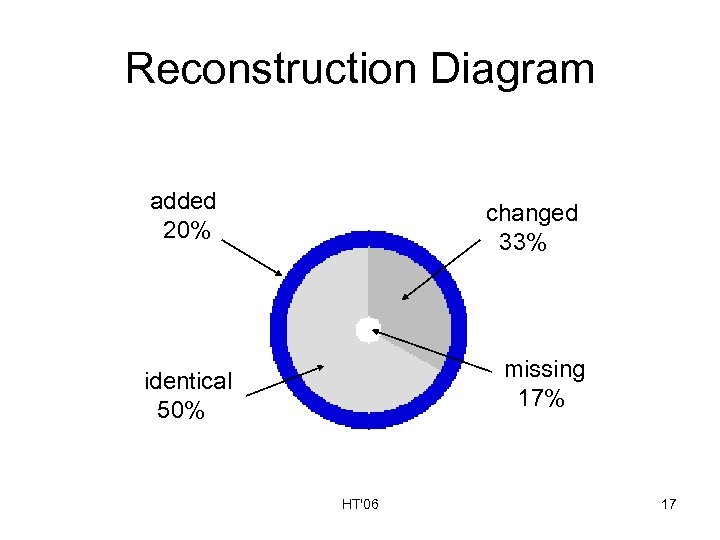

Reconstruction Diagram

- identical: 50%
- changed: 33%
- missing: 17%
- added: 20%

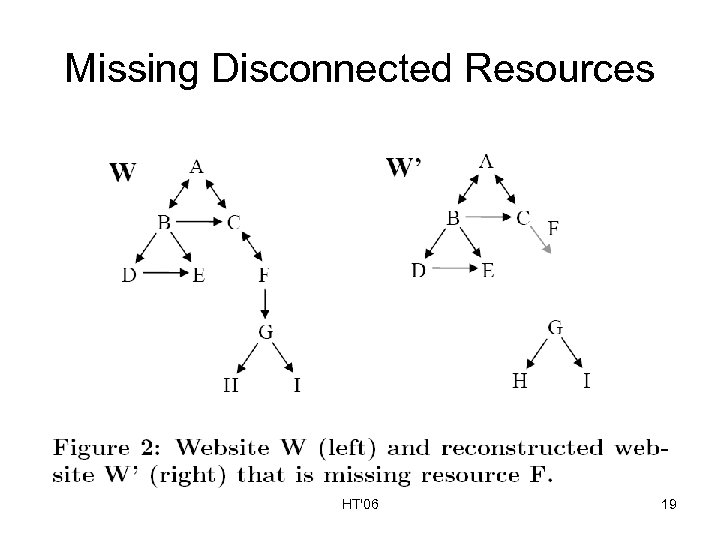

Initial Experiment - April 2005

- Crawl and reconstruct 24 sites in 3 categories:
  1. small (1-150 resources)
  2. medium (150-499 resources)
  3. large (500+ resources)
- Calculate reconstruction vector for each site
- Results: mostly successful at recovering HTML
- Observation: many wasted queries; disconnected portions of websites are unrecoverable
- See:
  - McCown et al. Reconstructing websites for the lazy webmaster. Tech Report, 2005. http://arxiv.org/abs/cs.IR/0512069
  - Smith et al. Observed web robot behavior on decaying web subsites. D-Lib Magazine, 12(2), Feb 2006.

Missing Disconnected Resources

Lister Queries

- Problem with initial version of Warrick: wasted queries
  - Internet Archive: Do you have X? No
  - Google: Do you have X? No
  - Yahoo: Do you have X? Yes
  - MSN: Do you have X? No
- What if we first ask each web repository “What resources do you have?” We call these “lister queries”.
- How many repository requests will this save?
- How many more resources will this discover?
- What other problems will this help solve?

Lister Queries cont.

- Search engines
  - site:www.foo.org
  - Limited to first 1000 results or fewer
- Internet Archive
  - http://web.archive.org/web/*/http://www.foo.org/*
  - Not all URLs reported are actually accessible
- Results are given in groups of 100 or fewer
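The two lister-query forms, and the number of requests needed to page through their results, can be sketched as follows. The function names are hypothetical. The page size of 100 and the cap of 1000 results are taken from the slide, and applying the cap to every repository is a simplification (the slide states it only for search engines).

```python
import math


def search_engine_lister_query(host):
    """Query string that asks a search engine for every page it holds for a host."""
    return f"site:{host}"


def internet_archive_lister_url(host):
    """Wildcard URL that asks the Internet Archive for every stored URL under a host."""
    return f"http://web.archive.org/web/*/http://{host}/*"


def lister_requests_needed(total_results, page_size=100, result_cap=1000):
    """Requests needed to page through a lister query's results.

    Results beyond result_cap cannot be reached, so they cost no requests.
    """
    reachable = min(total_results, result_cap)
    return math.ceil(reachable / page_size)
```

Under these assumptions a repository reporting 250 URLs needs 3 requests, while one reporting 2500 URLs needs 10 requests and still exposes only the first 1000.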

URL Canonicalization

- How do we know if URL X is pointing to the same resource as URL Y?
- Web crawlers use several strategies that we may borrow:
  - Convert to lowercase
  - Remove www prefix
  - Remove session IDs
  - etc.
- All web repositories have different canonicalization policies, which lister queries can uncover
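The three strategies named above might be combined as in the sketch below. It uses only Python's standard `urllib.parse`. The set of session parameter names is an assumption made for illustration, and lowercasing the path is optional because it is only safe for servers that ignore case.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Assumed session parameter names; real sites use many others.
SESSION_PARAMS = {"sid", "sessionid", "phpsessid", "jsessionid"}


def canonicalize(url, ignore_case=True, strip_www=True):
    """Return a canonical form of url for comparing repository results."""
    parts = urlsplit(url)
    host = parts.netloc.lower()            # host names are never case sensitive
    if strip_www and host.startswith("www."):
        host = host[4:]
    # Lowercasing the path merges URLs that a case-sensitive server keeps distinct.
    path = parts.path.lower() if ignore_case else parts.path
    kept = [(k, v) for k, v in parse_qsl(parts.query, keep_blank_values=True)
            if k.lower() not in SESSION_PARAMS]
    # The fragment is dropped because it never reaches the server.
    return urlunsplit((parts.scheme.lower(), host, path or "/", urlencode(kept), ""))
```

For example, `canonicalize("http://WWW.Foo.org/BAR.html")` gives `http://foo.org/bar.html`, while passing `ignore_case=False` keeps the path as `/BAR.html`. Session IDs embedded in the path rather than the query string are not handled by this sketch.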

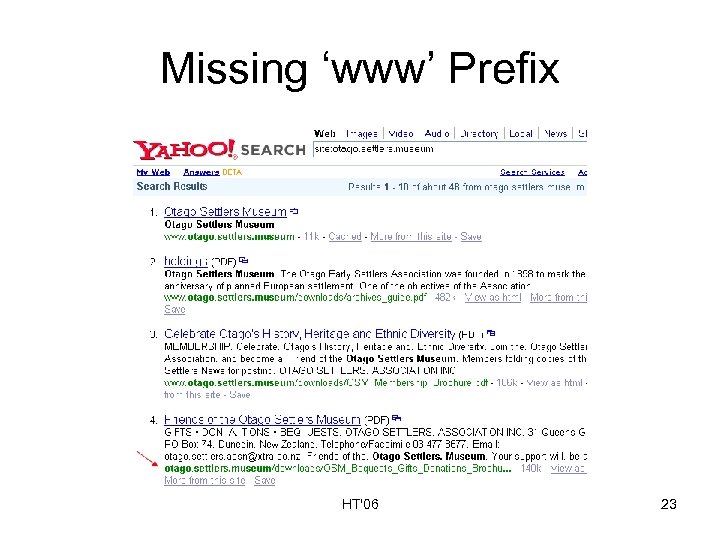

Missing ‘www’ Prefix

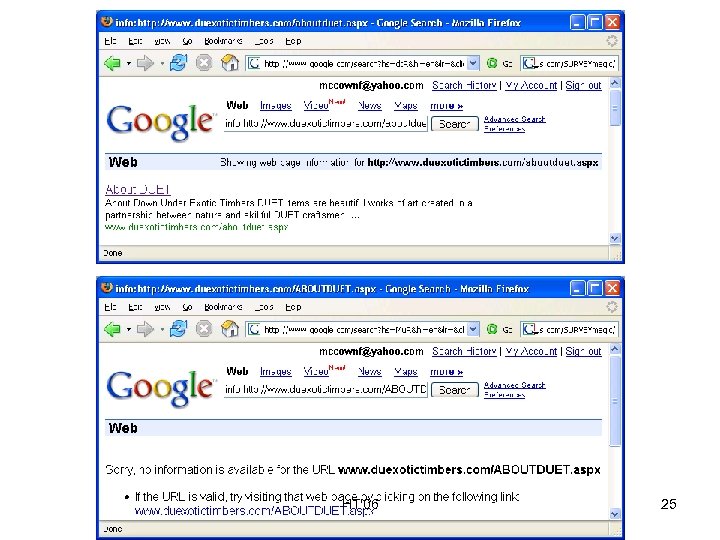

Case Sensitivity

- Some web servers run on case-insensitive file systems (e.g., IIS on Windows)
- On such servers, http://foo.org/bar.html is equivalent to http://foo.org/BAR.html
- MSN always ignores case; Google and Yahoo do not

Crawling Policies

1. Naïve Policy: do not issue lister queries; only recover links that are found in recovered pages.
2. Knowledgeable Policy: issue lister queries, but only recover links that are found in recovered pages.
3. Exhaustive Policy: issue lister queries and recover all resources found in all repositories (a repository dump).
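The difference between the three policies can be illustrated with a toy simulation. This is a sketch under stated assumptions, not Warrick's implementation: repositories are modeled as in-memory dictionaries, every "Do you have X?" and every lister query costs exactly one request, result paging is ignored, and the repository contents are invented.

```python
from collections import deque


def reconstruct(repos, seed, policy):
    """Simulate one reconstruction; return (recovered URLs, repository requests).

    repos maps a repository name to {url: [links found in the stored copy]}.
    policy is "naive", "knowledgeable", or "exhaustive".
    """
    requests = 0
    listed = {}
    if policy != "naive":
        for name, store in repos.items():
            requests += 1                  # one lister query per repository
            listed[name] = set(store)

    frontier = deque([seed])
    if policy == "exhaustive":
        # Repository dump: queue everything any repository reported.
        frontier.extend(sorted(set().union(*listed.values())))

    seen, recovered = set(), set()
    while frontier:
        url = frontier.popleft()
        if url in seen:
            continue
        seen.add(url)
        for name, store in repos.items():
            if policy != "naive" and url not in listed[name]:
                continue                   # lister query already said this repo lacks it
            requests += 1                  # "Do you have X?"
            if url in store:
                recovered.add(url)
                frontier.extend(store[url])
    return recovered, requests


# Invented data: "/c" is linked but stored nowhere; "/orphan" is stored but unlinked.
repos = {
    "IA": {"/": ["/a", "/b"], "/a": []},
    "Google": {"/": ["/a", "/b"], "/b": ["/c"], "/orphan": []},
}
results = {p: reconstruct(repos, "/", p)
           for p in ("naive", "knowledgeable", "exhaustive")}
```

On this toy data the naïve policy recovers 3 resources with 8 requests, the knowledgeable policy recovers the same 3 with 6 requests, and the exhaustive policy recovers 4 (including the unlinked `/orphan`) with 7 requests. The numbers only illustrate the mechanics and say nothing about real repositories.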

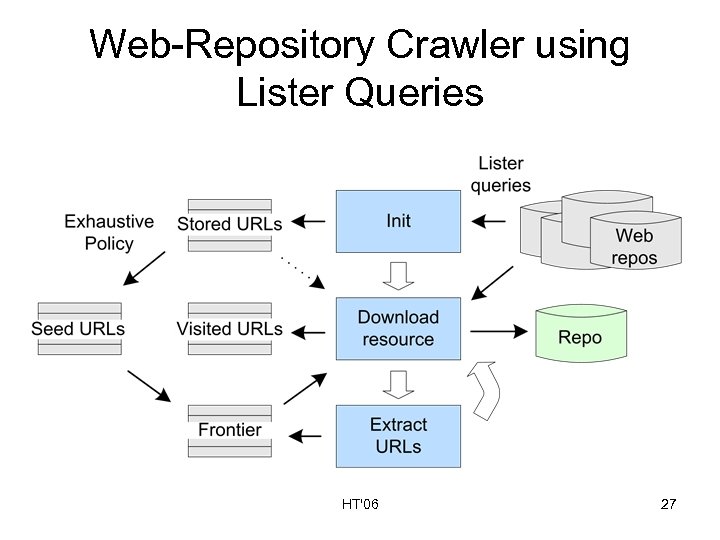

Web-Repository Crawler using Lister Queries

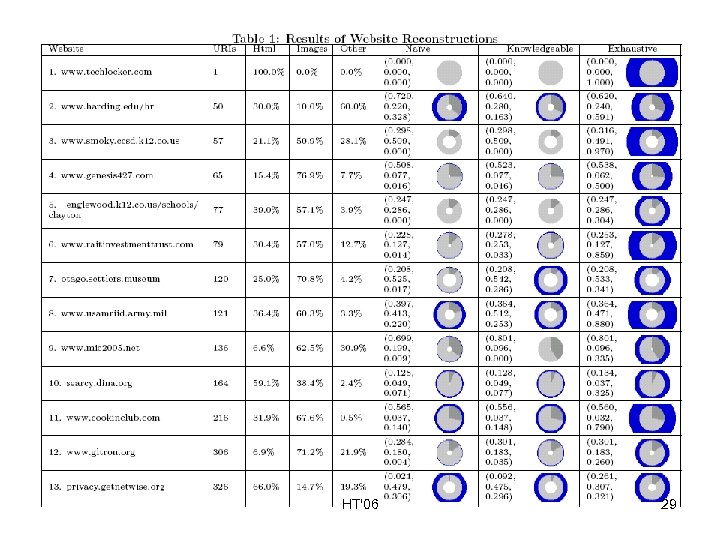

Experiment

- Download all 24 websites (from first experiment)
- Perform 3 reconstructions for each site using the 3 crawling policies
- Compute reconstruction vectors for each reconstruction

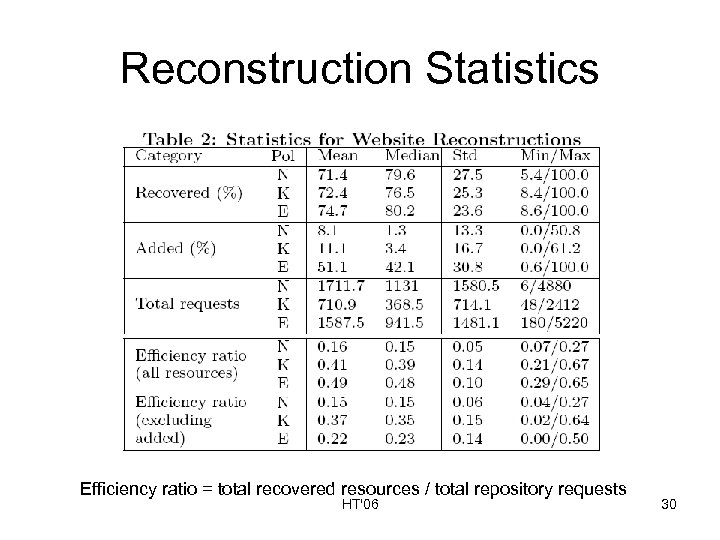

Reconstruction Statistics

Efficiency ratio = total recovered resources / total repository requests
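As a small worked example of the efficiency ratio, the sketch below computes it for made-up counts. The function name is hypothetical, and returning 0.0 when no requests were issued is an assumption, since the slides do not define that case.

```python
def efficiency_ratio(recovered_resources, repository_requests):
    """Recovered resources per repository request."""
    if repository_requests == 0:
        return 0.0                         # assumption: undefined case treated as zero
    return recovered_resources / repository_requests


# Hypothetical reconstruction: 50 resources recovered using 200 requests.
example_ratio = efficiency_ratio(50, 200)
```

Here `example_ratio` is 0.25, meaning four requests were spent per recovered resource on average.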

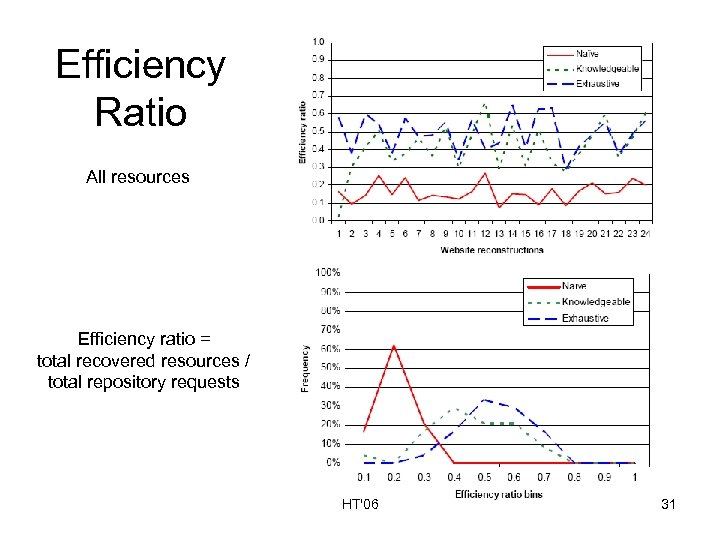

Efficiency Ratio: all resources

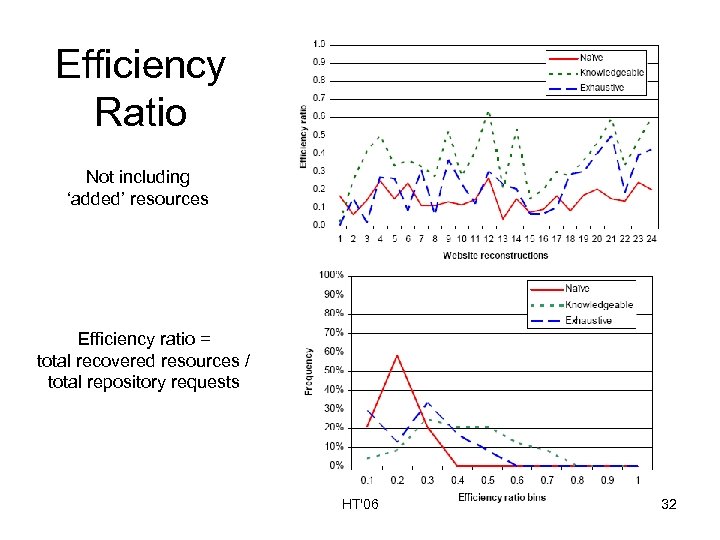

Efficiency Ratio: not including ‘added’ resources

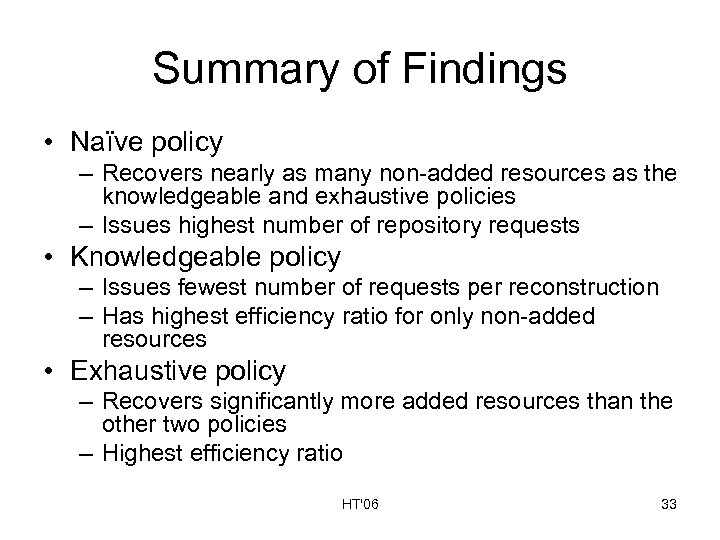

Summary of Findings

- Naïve policy
  - Recovers nearly as many non-added resources as the knowledgeable and exhaustive policies
  - Issues the highest number of repository requests
- Knowledgeable policy
  - Issues the fewest requests per reconstruction
  - Has the highest efficiency ratio when only non-added resources are counted
- Exhaustive policy
  - Recovers significantly more added resources than the other two policies
  - Has the highest efficiency ratio when all resources are counted

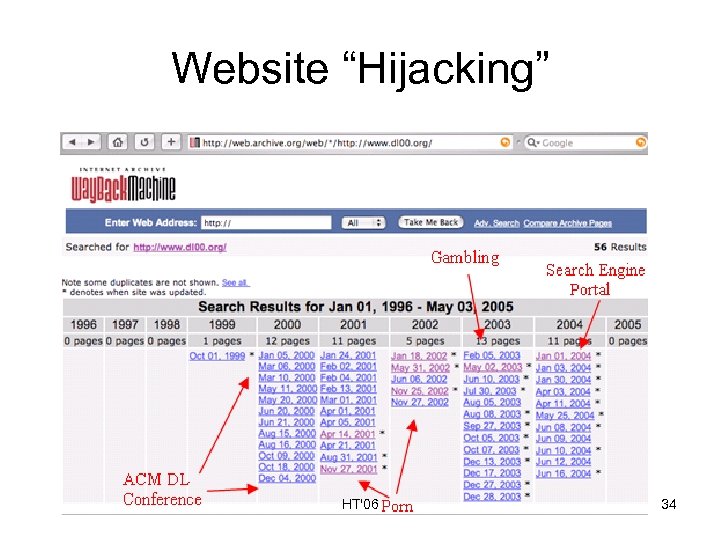

Website “Hijacking”

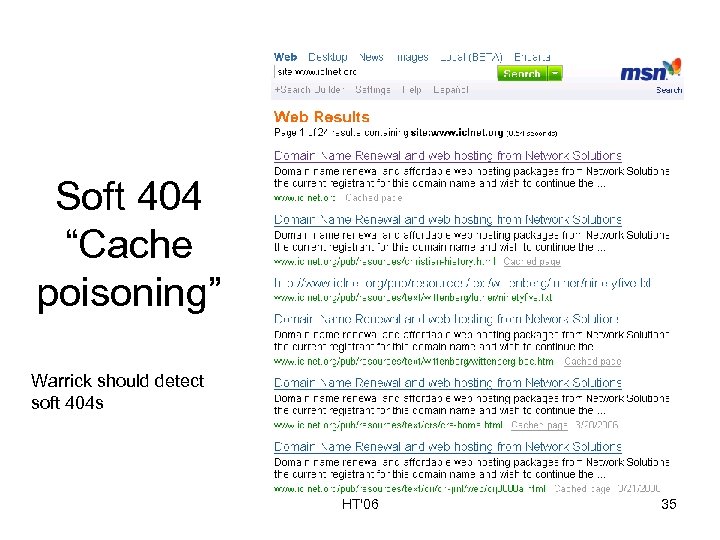

Soft 404 “Cache poisoning”

Warrick should detect soft 404s
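One common heuristic for spotting soft 404s, shown here only as a sketch and not as anything Warrick is stated to do, is to request a URL that almost certainly does not exist on the same host and check whether the server answers it with status 200 and a page that closely resembles the candidate. The function name, the `fetch` callable, and the 0.9 similarity threshold are all assumptions.

```python
import difflib
import uuid
from urllib.parse import urljoin


def looks_like_soft_404(url, fetch, threshold=0.9):
    """Guess whether url returns an error page disguised as a normal page.

    fetch(url) must return (status_code, body_text); the caller supplies it.
    """
    status, body = fetch(url)
    if status != 200:
        return False                       # a real error status is not a soft 404
    # Probe a random sibling URL that is very unlikely to exist.
    probe = urljoin(url, uuid.uuid4().hex + ".html")
    probe_status, probe_body = fetch(probe)
    if probe_status != 200:
        return False                       # server reports missing pages honestly
    similarity = difflib.SequenceMatcher(None, body, probe_body).ratio()
    return similarity >= threshold


# Stand-in for a server that answers every unknown URL with 200 and an error page.
pages = {"http://foo.org/real.html": "<html>Real content about kickball schedules</html>"}


def fake_fetch(url):
    return 200, pages.get(url, "<html>Sorry, page not found</html>")
```

With `fake_fetch`, the missing page `http://foo.org/gone.html` is flagged and `http://foo.org/real.html` is not. Passing the fetch function in keeps the sketch runnable without network access and leaves the choice of HTTP client open.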

Other Web Obstacles

- Effective “do not preserve” tags:
  - Flash
  - AJAX
  - HTTP POST
  - session IDs, cookies, etc.
  - cloaking
  - URLs that change based on traversal patterns
    - Lutkenhouse, Nelson, Bollen, Distributed, Real-Time Computation of Community Preferences, Proceedings of ACM Hypertext 05. http://doi.acm.org/10.1145/1083356.1083374

Conclusion

- Web sites can be reconstructed by accessing the caches of the Web Infrastructure
  - Some URL canonicalization issues can be tackled using lister queries
  - Multiple policies are available depending on reconstruction requirements
- Much work to be done
  - capturing server-side information
  - moving from a descriptive model to a prescriptive & predictive model
