8810ceab36f595c3ab4fbe8b163158f1.ppt

- Number of slides: 36

Evaluating the Performance of Pub/Sub Platforms for Tactical Information Management

Jeff Parsons (j.parsons@vanderbilt.edu), Ming Xiong (xiongm@isis.vanderbilt.edu), Dr. Douglas C. Schmidt (d.schmidt@vanderbilt.edu), James Edmondson (jedmondson@gmail.com), Hieu Nguyen (hieu.t.nguyen@vanderbilt.edu), Olabode Ajiboye (olabode.ajiboye@vanderbilt.edu)

July 11, 2006

Research Sponsored by AFRL/IF, NSF, & Vanderbilt University

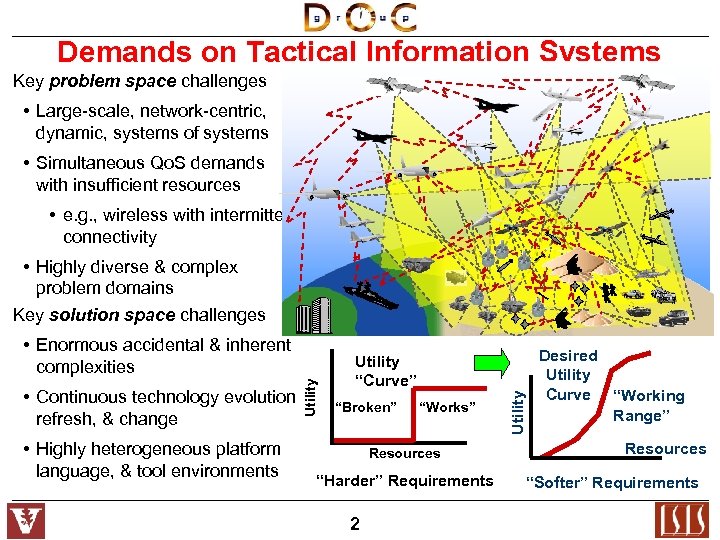

Demands on Tactical Information Systems

Key problem space challenges:

- Large-scale, network-centric, dynamic systems of systems
- Simultaneous QoS demands with insufficient resources
  - e.g., wireless with intermittent connectivity
- Highly diverse & complex problem domains

Key solution space challenges:

- Highly heterogeneous platform, language, & tool environments
- Continuous technology evolution, refresh, & change
- Enormous accidental & inherent complexities

[Figure: utility vs. resources. For "harder" requirements, the utility "curve" is a step from "broken" to "works"; for "softer" requirements, the desired utility curve spans a "working range".]

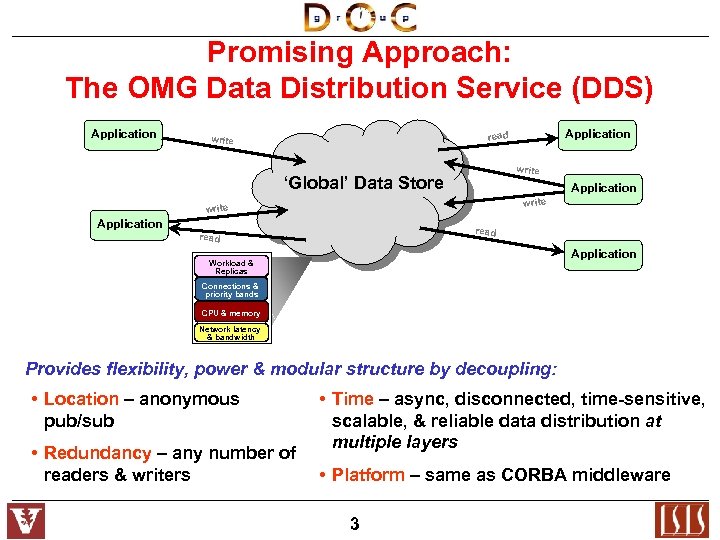

Promising Approach: The OMG Data Distribution Service (DDS)

[Figure: applications reading from & writing to a "global" data store; labels: workload & replicas, connections & priority bands, CPU & memory, network latency & bandwidth.]

Provides flexibility, power, & modular structure by decoupling:

- Location – anonymous pub/sub
- Redundancy – any number of readers & writers
- Time – async, disconnected, time-sensitive, scalable, & reliable data distribution at multiple layers
- Platform – same as CORBA middleware
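
A small in-process sketch can illustrate the location and redundancy decoupling described above. It assumes hypothetical names (`GlobalDataSpace`, `subscribe`, `publish`) that are not the DDS API. Real DDS uses DomainParticipant, Publisher/DataWriter, Subscriber/DataReader, and Topic entities. The point is only that writers and readers share nothing but a topic name.

```cpp
#include <cassert>
#include <cstddef>
#include <functional>
#include <map>
#include <string>
#include <utility>
#include <vector>

// Hypothetical, in-process stand-in for the DDS "global" data store.
// Writers and readers share only a topic name, never a reference to each
// other (location decoupling), and any number of either may use a topic
// (redundancy decoupling). Not thread-safe; illustration only.
class GlobalDataSpace {
 public:
  using Reader = std::function<void(const std::string& sample)>;

  // Attach a reader to a topic; returns the topic's reader count.
  std::size_t subscribe(const std::string& topic, Reader reader) {
    assert(!topic.empty());
    auto& readers = readers_[topic];
    readers.push_back(std::move(reader));
    return readers.size();
  }

  // Deliver a sample to every current reader of the topic. The writer
  // learns only how many readers received it, not who they are.
  std::size_t publish(const std::string& topic, const std::string& sample) const {
    auto it = readers_.find(topic);
    if (it == readers_.end()) return 0;  // no readers yet: sample is dropped
    for (const auto& reader : it->second) reader(sample);
    return it->second.size();
  }

 private:
  std::map<std::string, std::vector<Reader>> readers_;
};
```

Dropping samples when no reader exists is a simplification. In DDS, the DURABILITY policy can instead retain data for late-joining readers.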

Overview of the Data Distribution Service (DDS)

- A highly efficient OMG pub/sub standard
- Fewer layers, less overhead
- RTPS over UDP will recognize QoS

[Figure: DDS pub/sub using the proposed Real-Time Publish Subscribe (RTPS) protocol. A publisher's data writer and a subscriber's data reader share a topic, delivering RT info to cockpit & track processing over a tactical network & RTOS.]

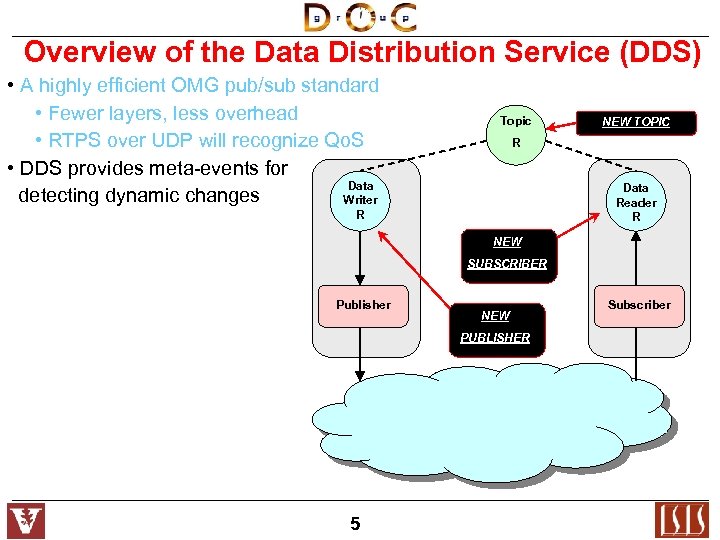

Overview of the Data Distribution Service (DDS)

- A highly efficient OMG pub/sub standard
- Fewer layers, less overhead
- RTPS over UDP will recognize QoS
- DDS provides meta-events for detecting dynamic changes

[Figure: meta-events signaling a NEW TOPIC, a NEW PUBLISHER, and a NEW SUBSCRIBER.]
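
One way to picture these meta-events is as callbacks fired the first time an entity is seen. The DDS specification exposes discovery information through built-in topics (e.g., DCPSParticipant, DCPSTopic, DCPSPublication, DCPSSubscription) and through listener callbacks. The `DiscoveryMonitor` class below is a hypothetical simplification, not that API.

```cpp
#include <cassert>
#include <functional>
#include <set>
#include <string>
#include <utility>
#include <vector>

enum class EntityKind { Topic, Publisher, Subscriber };

// Hypothetical discovery monitor: fires every registered callback the first
// time a given (kind, name) pair is announced, e.g. a NEW TOPIC, NEW
// PUBLISHER, or NEW SUBSCRIBER. Repeated announcements are ignored.
class DiscoveryMonitor {
 public:
  using Callback = std::function<void(EntityKind kind, const std::string& name)>;

  void on_discovery(Callback cb) { callbacks_.push_back(std::move(cb)); }

  // Returns true only if the entity was new (and callbacks fired).
  bool announce(EntityKind kind, const std::string& name) {
    assert(!name.empty());
    const bool is_new = known_.emplace(kind, name).second;
    if (is_new) {
      for (const auto& cb : callbacks_) cb(kind, name);
    }
    return is_new;
  }

 private:
  std::set<std::pair<EntityKind, std::string>> known_;
  std::vector<Callback> callbacks_;
};
```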

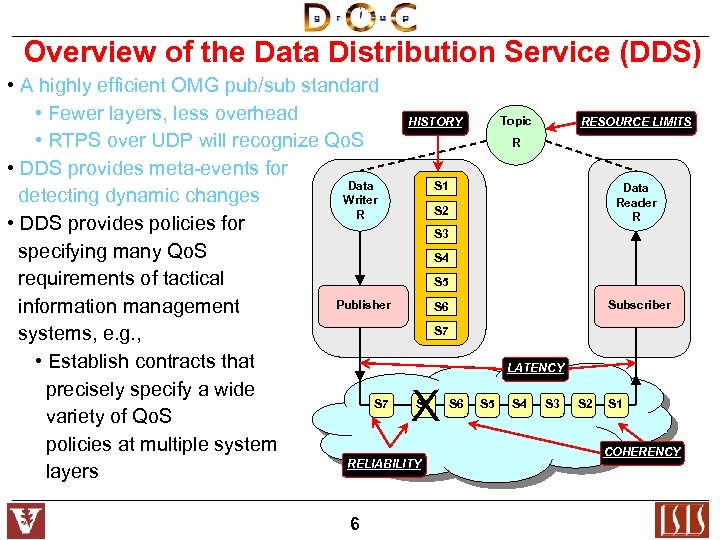

Overview of the Data Distribution Service (DDS)

- A highly efficient OMG pub/sub standard
- Fewer layers, less overhead
- RTPS over UDP will recognize QoS
- DDS provides meta-events for detecting dynamic changes
- DDS provides policies for specifying many QoS requirements of tactical information management systems, e.g.,
  - Establish contracts that precisely specify a wide variety of QoS policies at multiple system layers

[Figure: samples S1–S7 flowing from a publisher's data writer through a topic to a subscriber's data reader, annotated with the HISTORY, RELIABILITY, RESOURCE LIMITS, LATENCY, & COHERENCY policies.]
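
The HISTORY policy in the figure can be modeled as a per-reader sample cache. The later tests use it as KEEP_LAST with depth = 1, or as KEEP_ALL. The sketch below is a simplified, hypothetical model, not a vendor API. KEEP_LAST keeps only the newest `depth` samples. KEEP_ALL keeps everything (in real DDS, bounded by RESOURCE_LIMITS).

```cpp
#include <cassert>
#include <cstddef>
#include <deque>
#include <optional>

// Hypothetical model of the DDS HISTORY policy; names are illustrative.
enum class HistoryKind { KeepLast, KeepAll };

template <typename Sample>
class HistoryCache {
 public:
  // `depth` is ignored for KeepAll.
  HistoryCache(HistoryKind kind, std::size_t depth) : kind_(kind), depth_(depth) {
    assert(kind != HistoryKind::KeepLast || depth > 0);
  }

  void write(const Sample& s) {
    samples_.push_back(s);
    if (kind_ == HistoryKind::KeepLast && samples_.size() > depth_) {
      samples_.pop_front();  // KEEP_LAST: the oldest sample is replaced
    }
  }

  // Remove and return the oldest retained sample, if any.
  std::optional<Sample> take() {
    if (samples_.empty()) return std::nullopt;
    Sample s = samples_.front();
    samples_.pop_front();
    return s;
  }

  std::size_t size() const { return samples_.size(); }

 private:
  HistoryKind kind_;
  std::size_t depth_;
  std::deque<Sample> samples_;
};
```

Under this model, a KEEP_LAST(1) reader that falls behind silently loses intermediate samples and sees only the newest one. A KEEP_ALL reader keeps every sample but needs memory proportional to its backlog.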

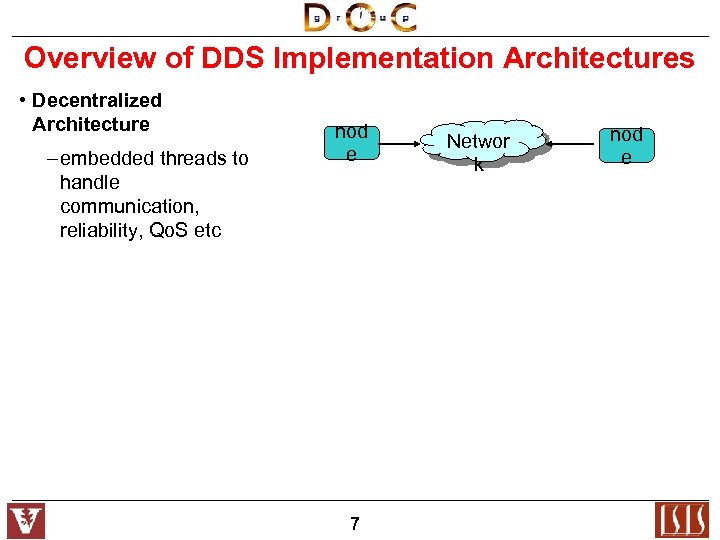

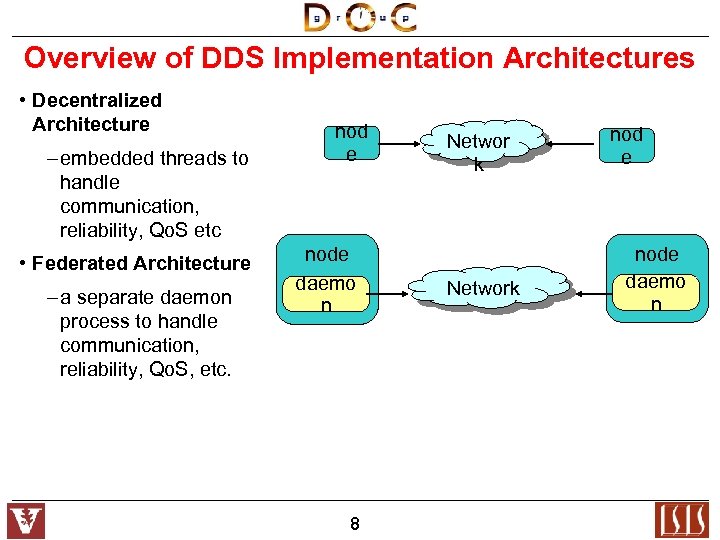

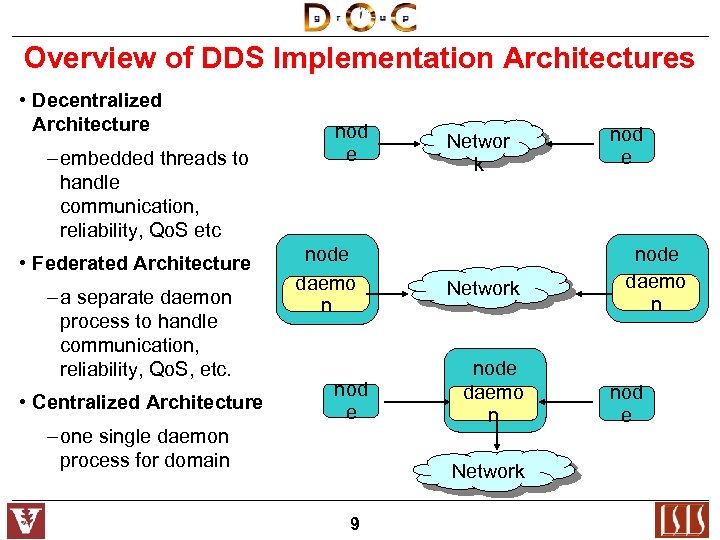

Overview of DDS Implementation Architectures

- Decentralized Architecture
  - embedded threads to handle communication, reliability, QoS, etc.
- Federated Architecture
  - a separate daemon process to handle communication, reliability, QoS, etc.
- Centralized Architecture
  - one single daemon process for the domain

[Figure: network diagrams of nodes, with a daemon per node (federated) or a single daemon for all nodes (centralized).]

DDS 1 (Decentralized Architecture)

[Figure: on each node (computer), the participant's comm/aux threads run inside the user process and communicate directly over the network.]

- Pros: Self-contained communication end-points; needs no extra daemons
- Cons: User process more complex, e.g., must handle config details (efficient discovery, m…

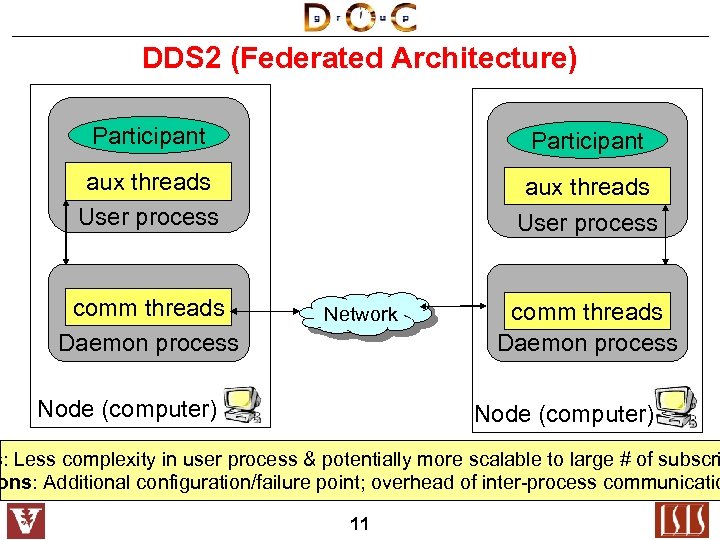

DDS 2 (Federated Architecture)

[Figure: on each node (computer), the participant's aux threads run in the user process, while comm threads run in a separate daemon process connected to the network.]

- Pros: Less complexity in user process & potentially more scalable to large # of subscribers
- Cons: Additional configuration/failure point; overhead of inter-process communication

DDS 3 (Centralized Architecture)

[Figure: user processes with comm threads on each node, exchanging data and control over the network with a single daemon process (aux + comm threads) on one node.]

- Pros: Easy daemon setup
- Cons: Single point of failure; scalability problems

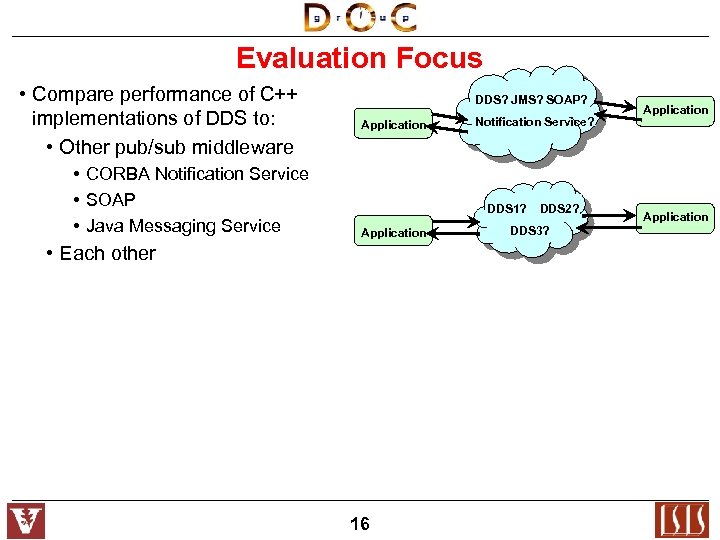

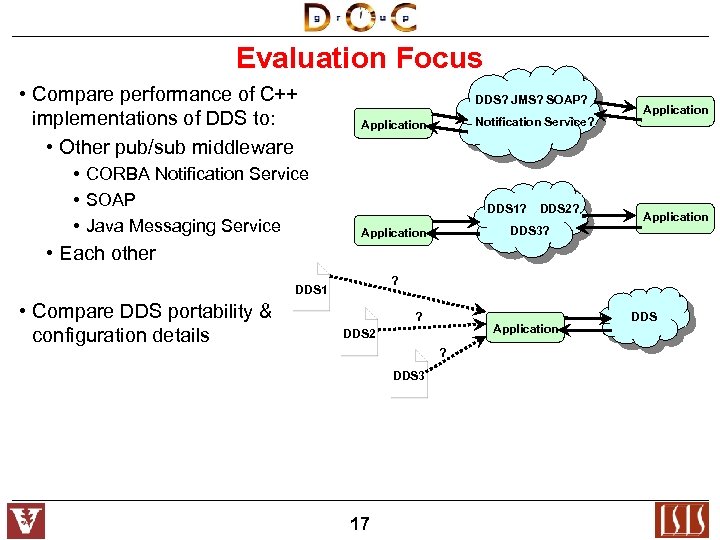

Evaluation Focus

- Compare performance of C++ implementations of DDS to:
  - Other pub/sub middleware
    - CORBA Notification Service
    - SOAP
    - Java Messaging Service
  - Each other (DDS 1, DDS 2, DDS 3)
- Compare DDS portability & configuration details
- Compare performance of subscriber notification mechanisms
  - Listener vs. wait-set

[Figure: an application choosing among DDS, JMS, SOAP, & the Notification Service; among DDS 1, DDS 2, & DDS 3; and, inside the subscriber, between a wait-set and a listener.]
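
The two subscriber notification mechanisms differ mainly in which thread runs the application's code. The hypothetical `Reader` class below contrasts two styles. A listener callback runs in the delivering thread. In the wait-set style, the application thread blocks on a condition variable until data arrives. This is not a DDS API; real DDS uses DataReaderListener and WaitSet/ReadCondition.

```cpp
#include <cassert>
#include <chrono>
#include <condition_variable>
#include <deque>
#include <functional>
#include <mutex>
#include <optional>
#include <utility>

// Hypothetical reader illustrating listener vs. wait-set notification.
// If a listener is installed, samples go to it; otherwise they are queued.
class Reader {
 public:
  using Listener = std::function<void(int sample)>;

  void set_listener(Listener l) {
    std::lock_guard<std::mutex> lock(m_);
    listener_ = std::move(l);
  }

  // Called by the "middleware" (e.g., a receive thread) when a sample arrives.
  void deliver(int sample) {
    Listener l;
    {
      std::lock_guard<std::mutex> lock(m_);
      if (!listener_) {
        queue_.push_back(sample);  // wait-set style: enqueue and signal
        cv_.notify_one();
        return;
      }
      l = listener_;
    }
    l(sample);  // listener style: runs in the delivering thread, outside the lock
  }

  // Wait-set style: the application thread blocks until data or timeout.
  std::optional<int> wait_and_take(std::chrono::milliseconds timeout) {
    std::unique_lock<std::mutex> lock(m_);
    if (!cv_.wait_for(lock, timeout, [this] { return !queue_.empty(); })) {
      return std::nullopt;
    }
    int s = queue_.front();
    queue_.pop_front();
    return s;
  }

 private:
  std::mutex m_;
  std::condition_variable cv_;
  std::deque<int> queue_;
  Listener listener_;
};
```

The listener avoids a thread handoff, but the application's work then runs on the middleware's thread. The wait-set adds a wakeup but keeps processing in an application-controlled thread.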

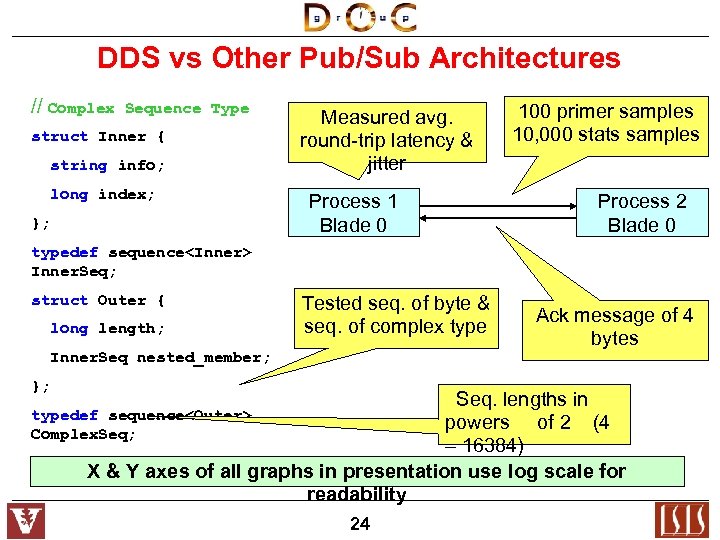

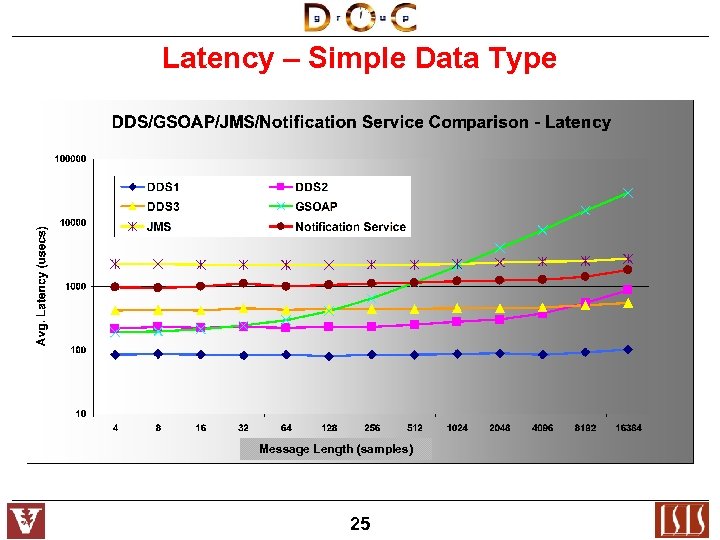

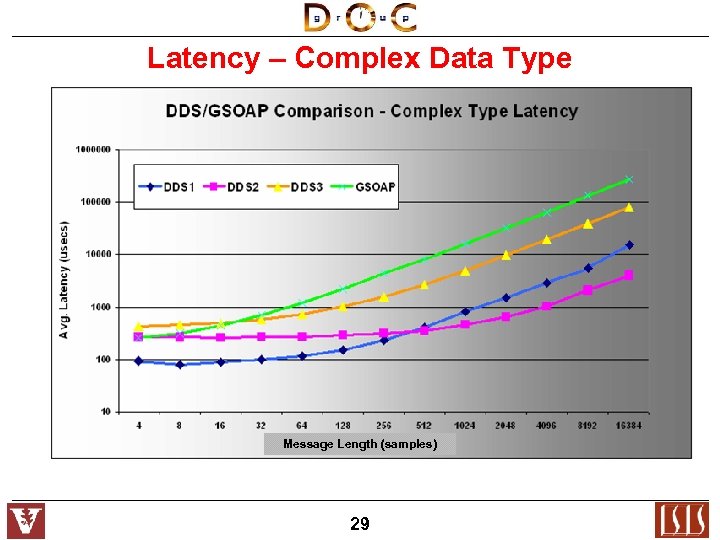

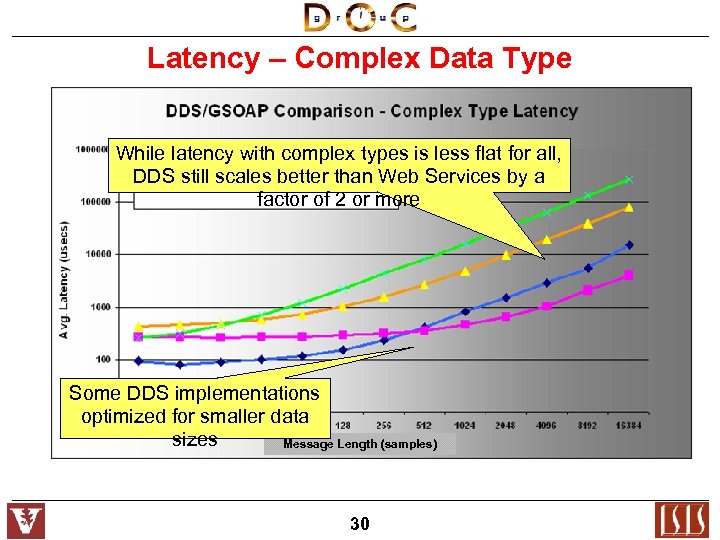

DDS vs Other Pub/Sub Architectures

- Measured avg. round-trip latency & jitter
- Process 1 and Process 2 both on Blade 0
- 100 primer samples, 10,000 stats samples
- Tested seq. of byte & seq. of complex type
- Ack message of 4 bytes
- Seq. lengths in powers of 2 (4 – 16384)
- X & Y axes of all graphs in presentation use log scale for readability

```idl
// Complex Sequence Type
struct Inner {
  string info;
  long index;
};
typedef sequence<Inner> InnerSeq;

struct Outer {
  long length;
  InnerSeq nested_member;
};
typedef sequence<Outer> ComplexSeq;
```
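
The round-trip setup can be summarized with helpers like the ones below. Under that setup, 100 primer samples are discarded, 10,000 samples are measured, and sequence lengths run in powers of 2 from 4 to 16384. The deck does not define jitter. This sketch assumes it is the population standard deviation of the measured round-trip times. The function and struct names are hypothetical.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

struct LatencyStats {
  double mean_us;
  double jitter_us;  // assumption: jitter = population standard deviation
};

// Drop the first `primer` (warm-up) samples, then summarize the rest.
LatencyStats summarize(const std::vector<double>& rtt_us, std::size_t primer) {
  assert(rtt_us.size() > primer);
  double sum = 0.0;
  for (std::size_t i = primer; i < rtt_us.size(); ++i) sum += rtt_us[i];
  const double n = static_cast<double>(rtt_us.size() - primer);
  const double mean = sum / n;
  double sq = 0.0;
  for (std::size_t i = primer; i < rtt_us.size(); ++i) {
    const double d = rtt_us[i] - mean;
    sq += d * d;
  }
  return {mean, std::sqrt(sq / n)};
}

// Sequence lengths tested: powers of 2 from 4 to 16384 (13 sizes).
std::vector<std::size_t> payload_lengths() {
  std::vector<std::size_t> lengths;
  for (std::size_t len = 4; len <= 16384; len *= 2) lengths.push_back(len);
  return lengths;
}
```

Discarding the primer samples keeps one-time costs, such as connection setup and cache warm-up, out of the reported averages.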

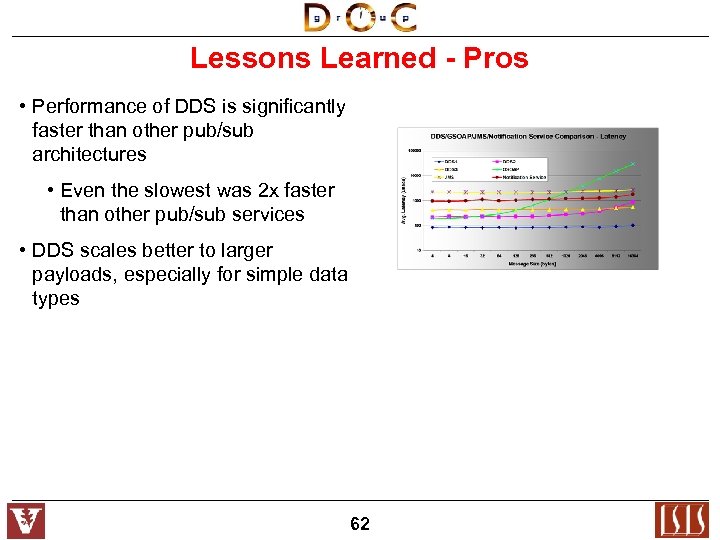

Latency – Simple Data Type

[Figure: latency vs. message length (samples).]

With conventional pub/sub mechanisms, the delay before the application learns critical information is very high! In contrast, DDS latency is low across the board.

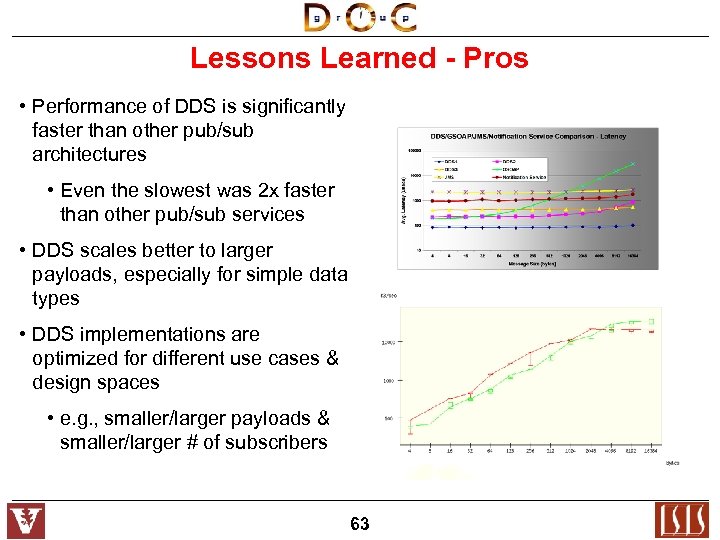

Latency – Complex Data Type

[Figure: latency vs. message length (samples).]

- While latency with complex types is less flat for all, DDS still scales better than Web Services by a factor of 2 or more
- Some DDS implementations are optimized for smaller data sizes

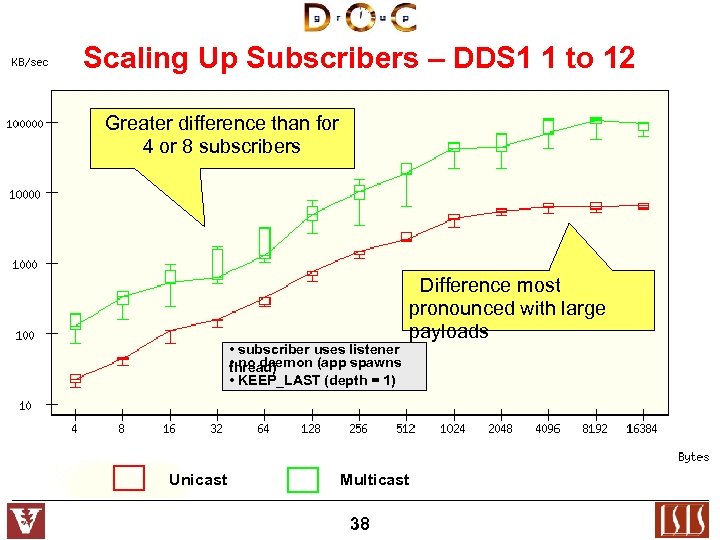

Scaling Up DDS Subscribers

- The past 8 slides showed latency/jitter results for 1-to-1 tests
- We now show throughput results for 1-to-N tests
  - 4, 8, & 12 subscribers, each on a different blade
  - Publisher oversends to ensure sufficient received samples
  - Byte sequences
  - Seq. lengths in powers of 2 (4 – 16384)
  - 100 primer samples, 10,000 stats samples
- All following graphs plot median + "box-n-whiskers"

[Figure: publisher and subscribers spread across Blades 0, 1, 2, …, N.]
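
The median + box-and-whisker summaries used in the following graphs can be computed as below. The deck does not state its quartile or whisker conventions. This sketch assumes linearly interpolated quartiles and Tukey-style whiskers that reach the most extreme samples within 1.5 × IQR of the box. The names are hypothetical.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

struct BoxSummary {
  double q1, median, q3;
  double whisker_lo, whisker_hi;  // most extreme samples within 1.5 * IQR
};

// Quantile by linear interpolation between closest ranks (input must be sorted).
double quantile(const std::vector<double>& sorted, double p) {
  assert(!sorted.empty() && p >= 0.0 && p <= 1.0);
  const double pos = p * static_cast<double>(sorted.size() - 1);
  const std::size_t lo = static_cast<std::size_t>(std::floor(pos));
  const std::size_t hi = std::min(lo + 1, sorted.size() - 1);
  const double frac = pos - static_cast<double>(lo);
  return sorted[lo] + frac * (sorted[hi] - sorted[lo]);
}

BoxSummary box_summary(std::vector<double> samples) {
  std::sort(samples.begin(), samples.end());
  BoxSummary b{};
  b.q1 = quantile(samples, 0.25);
  b.median = quantile(samples, 0.5);
  b.q3 = quantile(samples, 0.75);
  const double iqr = b.q3 - b.q1;
  const double lo_fence = b.q1 - 1.5 * iqr;
  const double hi_fence = b.q3 + 1.5 * iqr;
  // Both searches always succeed: some sample lies at or above q1 (>= lo_fence)
  // and some sample lies at or below q3 (<= hi_fence).
  b.whisker_lo = *std::find_if(samples.begin(), samples.end(),
                               [&](double x) { return x >= lo_fence; });
  b.whisker_hi = *std::find_if(samples.rbegin(), samples.rend(),
                               [&](double x) { return x <= hi_fence; });
  return b;
}
```

The median is robust to the occasional very slow sample, which can distort a mean-based throughput figure when subscribers share a network.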

Scaling Up Subscribers – DDS 1 (1 to 12)

[Figure: DDS 1 throughput, unicast vs. multicast.]

- Subscriber uses listener
- No daemon (app spawns thread)
- KEEP_LAST (depth = 1)
- Greater difference than for 4 or 8 subscribers
- Difference most pronounced with large payloads

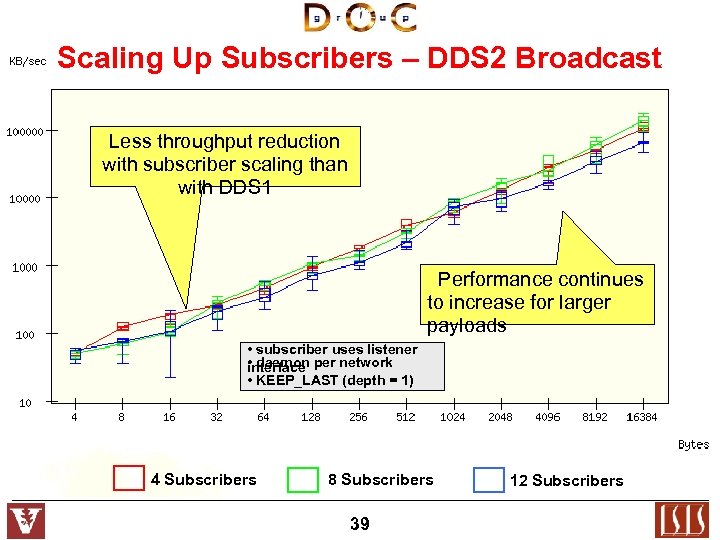

Scaling Up Subscribers – DDS 2 Broadcast

[Figure: throughput with 4, 8, & 12 subscribers.]

- Subscriber uses listener
- Daemon per network interface
- KEEP_LAST (depth = 1)
- Less throughput reduction with subscriber scaling than with DDS 1
- Performance continues to increase for larger payloads

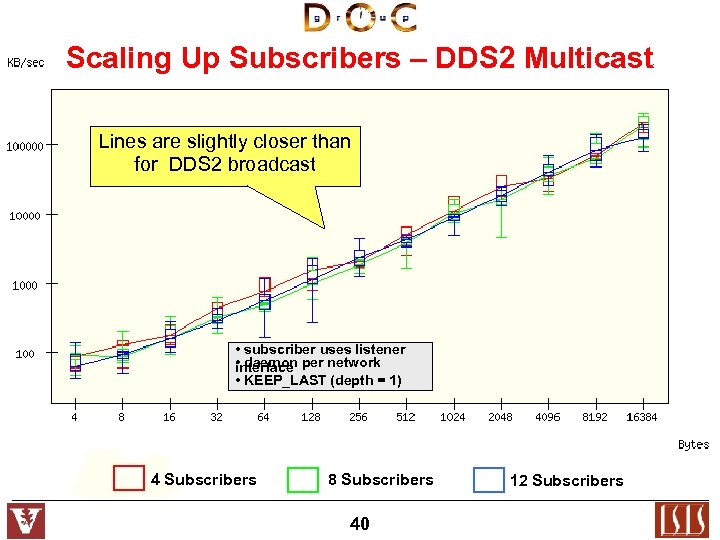

Scaling Up Subscribers – DDS 2 Multicast

[Figure: throughput with 4, 8, & 12 subscribers.]

- Subscriber uses listener
- Daemon per network interface
- KEEP_LAST (depth = 1)
- Lines are slightly closer than for DDS 2 broadcast

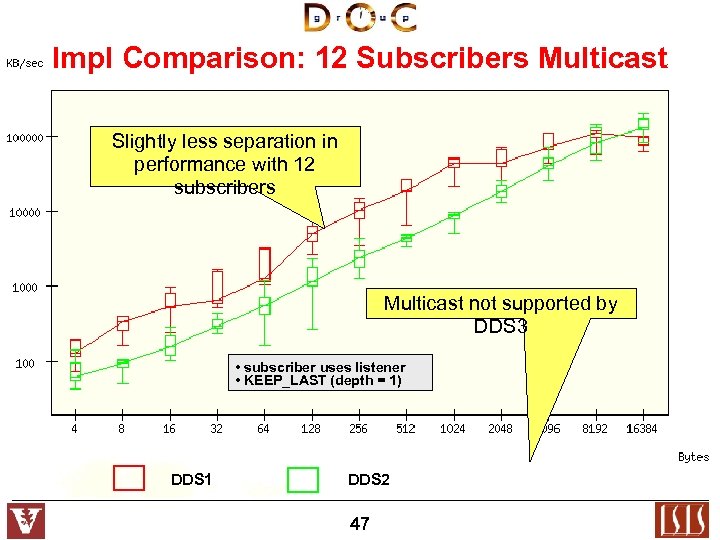

Impl Comparison: 12 Subscribers, Multicast

[Figure: DDS 1 vs. DDS 2 throughput.]

- Subscriber uses listener
- KEEP_LAST (depth = 1)
- Slightly less separation in performance with 12 subscribers
- Multicast not supported by DDS 3

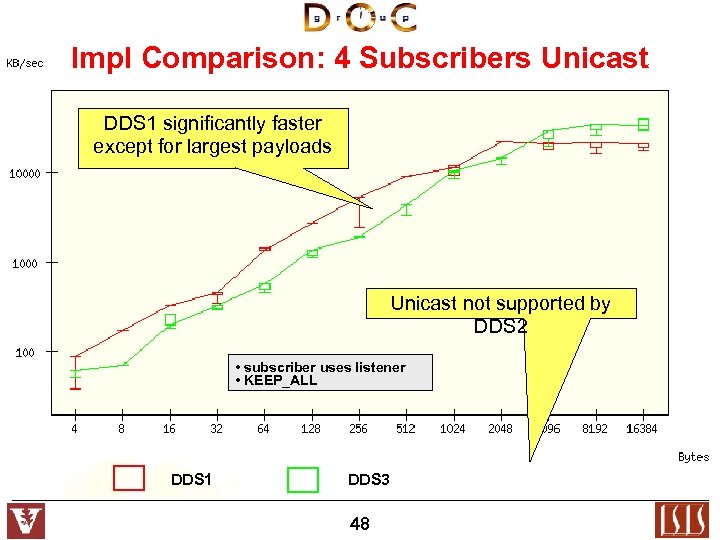

Impl Comparison: 4 Subscribers, Unicast

[Figure: DDS 1 vs. DDS 3 throughput.]

- Subscriber uses listener
- KEEP_ALL
- DDS 1 significantly faster except for largest payloads
- Unicast not supported by DDS 2

Lessons Learned - Pros

- DDS is significantly faster than other pub/sub architectures
  - Even the slowest DDS implementation was 2x faster than the other pub/sub services
- DDS scales better to larger payloads, especially for simple data types
- DDS implementations are optimized for different use cases & design spaces
  - e.g., smaller/larger payloads & smaller/larger # of subscribers

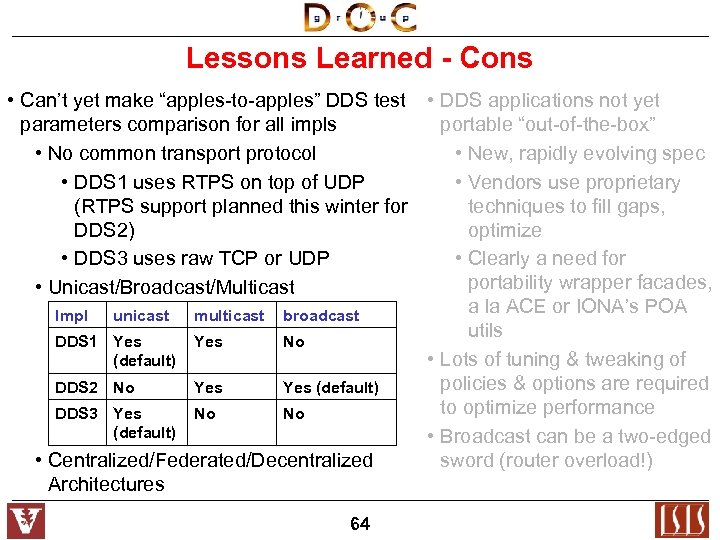

Lessons Learned - Cons

- Can't yet make "apples-to-apples" DDS test parameters comparison for all impls
  - No common transport protocol
    - DDS 1 uses RTPS on top of UDP (RTPS support planned this winter for DDS 2)
    - DDS 3 uses raw TCP or UDP
  - Unicast/Broadcast/Multicast support differs (see table)
  - Centralized/Federated/Decentralized Architectures
- DDS applications not yet portable "out-of-the-box"
  - New, rapidly evolving spec
  - Vendors use proprietary techniques to fill gaps, optimize
  - Clearly a need for portability wrapper facades, a la ACE or IONA's POA utils
- Lots of tuning & tweaking of policies & options is required to optimize performance
- Broadcast can be a two-edged sword (router overload!)

| Impl | Unicast | Multicast | Broadcast |
|------|---------|-----------|-----------|
| DDS 1 | Yes (default) | Yes | No |
| DDS 2 | No | Yes | Yes (default) |
| DDS 3 | Yes (default) | No | No |
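
A portability wrapper facade of the kind suggested above would let benchmark code target one interface, while per-vendor adapters hide API and configuration differences. `PubSubFacade`, `Dds2Adapter`, and `Transport` are hypothetical names. The adapter's transport support reflects the deck's results for DDS 2 (multicast and broadcast, no unicast). A real adapter would also wrap that vendor's DDS entities.

```cpp
#include <cassert>
#include <memory>
#include <optional>
#include <set>
#include <string>

enum class Transport { Unicast, Multicast, Broadcast };

// Hypothetical wrapper facade: benchmark drivers use only this interface;
// each vendor adapter maps it onto that vendor's own API and configuration.
class PubSubFacade {
 public:
  virtual ~PubSubFacade() = default;
  virtual std::string name() const = 0;
  virtual std::set<Transport> supported_transports() const = 0;

  // Shared check so a test driver fails fast on an unsupported setting
  // instead of silently running with some other configuration.
  bool configure(Transport t) {
    if (supported_transports().count(t) == 0) return false;
    transport_ = t;
    return true;
  }
  std::optional<Transport> transport() const { return transport_; }

 private:
  std::optional<Transport> transport_;
};

// Placeholder adapter for "DDS 2": multicast and broadcast, no unicast.
class Dds2Adapter : public PubSubFacade {
 public:
  std::string name() const override { return "DDS 2"; }
  std::set<Transport> supported_transports() const override {
    return {Transport::Multicast, Transport::Broadcast};
  }
};
```

Keeping the capability check in the facade makes "apples-to-apples" mismatches explicit at configuration time rather than buried in vendor defaults.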

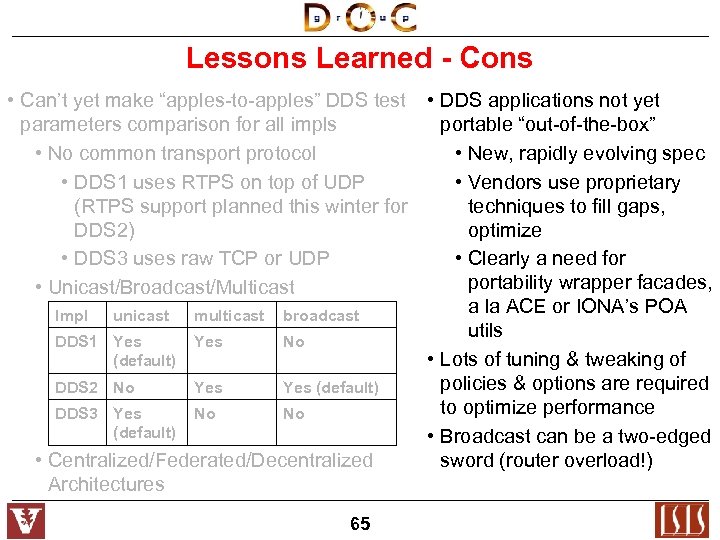

Future Work - Pub/Sub Metrics

- Tailor benchmarks to explore key classes of tactical applications
  - e.g., command & control, targeting, route planning
- Devise generators that can emulate various workloads & use cases
- Include wider range of QoS & configuration, e.g.:
  - Durability
  - Reliable vs best effort
  - Interaction of durability, reliability, and history depth
- Measure migrating processing to source
- Measure discovery time for various entities
  - e.g., subscribers, publishers, & topics
- Find scenarios that distinguish performance of QoS policies & features, e.g.:
  - Listener vs waitset
  - Collocated applications
  - Very large # of subscribers & payload sizes
  - Map to classes of tactical applications

Future Work - Benchmarking Framework

- Larger, more complex automated tests
  - More nodes
  - More publishers, subscribers per test, per node
  - Variety of data sizes, types
  - Multiple topics per test
  - Dynamic tests
    - Late-joining subscribers
    - Changing QoS values
- Alternate throughput measurement strategies
  - Fixed # of samples – measure elapsed time
  - Fixed time window – measure # of samples
  - Controlled publish rate
- Generic testing framework
  - Common test code
  - Wrapper facades to factor out portability issues
- Include other pub/sub platforms
  - WS Notification
  - ICE pub/sub
  - Java impls of DDS

The benchmarking framework is open-source & available on request.
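
The first two throughput strategies can be sketched as generic timing loops. `receive` is a hypothetical callable, assumed to block until one sample arrives. The function names are also hypothetical. With a blocking receive, the fixed-window loop can overrun its window by up to one receive call.

```cpp
#include <cassert>
#include <chrono>
#include <cstddef>

using Clock = std::chrono::steady_clock;

// Strategy 1: fixed # of samples – receive `n` samples, measure elapsed time.
// Returns samples per second.
template <typename Receive>
double fixed_count_throughput(Receive receive, std::size_t n) {
  assert(n > 0);
  const auto start = Clock::now();
  for (std::size_t i = 0; i < n; ++i) receive();
  const double secs = std::chrono::duration<double>(Clock::now() - start).count();
  return static_cast<double>(n) / secs;
}

// Strategy 2: fixed time window – count samples received before it closes.
// Returns samples per second over the nominal window length.
template <typename Receive>
double fixed_window_throughput(Receive receive, Clock::duration window) {
  assert(window > Clock::duration::zero());
  std::size_t count = 0;
  const auto start = Clock::now();
  while (Clock::now() - start < window) {
    receive();
    ++count;
  }
  return static_cast<double>(count) / std::chrono::duration<double>(window).count();
}
```

A fixed count gives every run the same sample size but an unbounded duration if a subscriber stalls. A fixed window bounds test time but yields a variable number of samples per run.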

Concluding Remarks

- Next-generation QoS-enabled information management for tactical applications requires innovations & advances in tools & platforms
- Emerging COTS standards address some, but not all, hard issues!
- These benchmarks are a snapshot of an ongoing process
- Keep track of our benchmarking work at www.dre.vanderbilt.edu/DDS
- Latest version of these slides at DDS_RTWS06.pdf in the above directory

Thanks to OCI, PrismTech, & RTI for providing their DDS implementations & for helping with the benchmark process.
