- Number of slides: 68

Evaluating Stormwater Technology Performance, Module II

Guidance for the Technology Acceptance Reciprocity Partnership (TARP) Stormwater Protocol: Stormwater Best Management Practice Demonstrations

October 2004

Prepared by Eric Winkler, Ph.D., and Nicholas Bouthilette, Center for Energy Efficiency and Renewable Energy, University of Massachusetts – Amherst

University of Massachusetts, Amherst, © 2004

Meet the Instructors

- Nancy Baker, Massachusetts Dept. of Environmental Protection, 1 Winter Street, Boston, MA 02108; (617) 654-6524 (voice), (617) 292-5850 (fax); Nancy.Baker@state.ma.us
- Eric Winkler, Ph.D., University of Massachusetts, 160 Governors Drive, Amherst, MA 01003; (413) 545-2853 (voice), (413) 545-1027 (fax); winkler@ceere.org

Meet the Sponsors

TARP Stormwater Work Group (TARP member states):

- California
- Maryland
- Massachusetts
- New Jersey
- Pennsylvania
- Virginia

The State of Washington, Illinois, New York, and ETV are collaborating with TARP.

Goals of TARP Stormwater Work Group

- Use protocol to test new BMPs
- Approve effective new stormwater BMPs
- Get credible data on BMP effectiveness
- Share information and data
- Increase expertise on new BMPs
- Use protocol for appropriate state initiatives

Evaluating Stormwater Technology Performance

Learning Objectives

- Use the TARP Stormwater Demonstration Protocol to review a test plan and a technology evaluation
- Recognize data gaps and deficiencies
- Develop, implement, and review a test plan
- Understand, evaluate, and use statistical methods

Logistical Reminders

- Phone audience
  - Keep phone on mute
  - *6 to mute your phone and again to un-mute
  - Do NOT put call on hold
- Simulcast audience
  - Use the icon at the top of each slide to submit questions
- Course time = 2 hours
- 3 question & answer periods
- Links to additional resources
- Your feedback

Project Notice

Prepared by The Center for Energy Efficiency and Renewable Energy, University of Massachusetts Amherst, for submission under Agreement with the Environmental Council of States. The preparation of this training material was financed in part by funds provided by the Environmental Council of States (ECOS). This product may be duplicated for personal and government use and is protected under copyright laws for the purpose of author attribution.

“Publication of this document shall not be construed as endorsement of the views expressed therein by the Environmental Council of States/ITRC or any federal agency.”

DISCLAIMER

The contents and views expressed are those of the authors and do not necessarily reflect the views and policies of the Commonwealth of Massachusetts, its agencies, or the University of Massachusetts. The contents of this training are offered as guidance. The University of Massachusetts and all technical sources referenced herein do not (a) make any warranty or representation, expressed or implied, with respect to accuracy, completeness, or usefulness of the information contained in this training, or that the use of any information, apparatus, method, or process disclosed in this report may not infringe on privately owned rights; (b) assume any liabilities with respect to the use of, or for damages resulting from the use of, any information, apparatus, method, or process disclosed in this report. Mention or images of trade names or commercial products do not constitute endorsement or recommendation of use.

Module I: Planning for a Stormwater BMP Demonstration

1. Factors Affecting Stormwater Sampling
2. Data Quality Objectives and the Test QA Plan

Module II: Collecting and Analyzing Stormwater BMP Data

3. Sampling Design
4. Statistical Analyses
5. Data Adequacy: Case Study

3. Sampling Design

- Stormwater data collection guidance
- Locating samples and stations
- Selecting water quality parameters
- QA/QC
- Sample handling and record keeping
- Field measures

Sampling Plan Elements

TARP Data Collection Criteria (Section 3.3, TARP Protocol)

- Any relevant historic data
- Monthly mean rainfall and snowfall data (12 months over the period of record)
- Rainfall intensity over 15-minute increments

Protocol Minimum Criteria

Identifying Qualifying Storm Event (Section 3.3.1.2 and Section 3.3.1.3, TARP Protocol)

- Minimum rainfall event depth is 0.1 inch
- Minimum inter-event duration of 6 hours (duration beginning at cessation of flow to the unit)
- Base flow should not be sampled
  - Identification of a qualifying event needs to verify flow to the unit and rainfall concurrently
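
The two numeric criteria above (0.1 inch minimum depth, 6 hour minimum inter-event duration) can be applied to a rainfall record as in the following sketch. It is an illustration only: the function names and the sample record are hypothetical, and it separates events using the rainfall record alone, whereas the protocol measures the inter-event duration from cessation of flow to the unit and asks for flow and rainfall to be verified together.

```python
from datetime import datetime, timedelta

MIN_DEPTH_IN = 0.1                  # minimum rainfall event depth (inches)
MIN_DRY_GAP = timedelta(hours=6)    # minimum inter-event duration


def split_events(records):
    """Group (timestamp, rainfall_inches) records into events separated by
    at least MIN_DRY_GAP without rain. Records must be sorted by time.
    Assumption: rainfall alone is used as a stand-in for flow to the unit."""
    events, current, last_wet = [], [], None
    for ts, depth in records:
        if depth <= 0:
            continue  # dry interval: contributes nothing to an event
        if last_wet is not None and ts - last_wet >= MIN_DRY_GAP:
            events.append(current)
            current = []
        current.append((ts, depth))
        last_wet = ts
    if current:
        events.append(current)
    return events


def qualifying_events(records):
    """Return (start, end, total_depth) for events meeting the depth minimum."""
    result = []
    for event in split_events(records):
        total = sum(depth for _, depth in event)
        if total >= MIN_DEPTH_IN:
            result.append((event[0][0], event[-1][0], round(total, 3)))
    return result


# Hypothetical 15-minute rainfall record (inches per interval).
t0 = datetime(2004, 10, 1, 0, 0)
records = [
    (t0, 0.05),
    (t0 + timedelta(minutes=15), 0.08),   # same event
    (t0 + timedelta(hours=3), 0.02),      # gap under 6 h, still the same event
    (t0 + timedelta(hours=12), 0.04),     # new event, below the depth minimum
    (t0 + timedelta(hours=24), 0.30),     # new event, qualifies
]
events = qualifying_events(records)
for start, end, depth in events:
    print(start, end, depth)
```

In a real test plan the same grouping would be driven by the flow record at the unit, with the rain gauge used to confirm that the flow is storm runoff rather than base flow.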

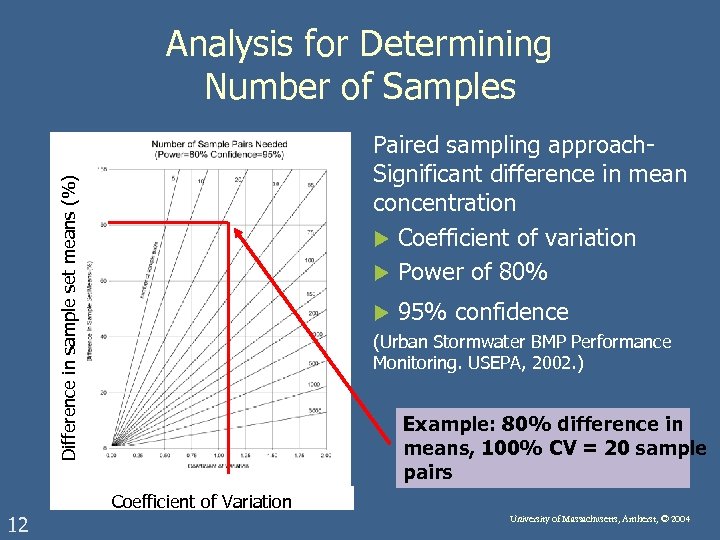

Analysis for Determining Number of Samples

Paired sampling approach (Urban Stormwater BMP Performance Monitoring. USEPA, 2002.), based on:

- Significant difference in mean concentration
- Coefficient of variation
- Power of 80%
- 95% confidence

Example: 80% difference in means, 100% CV = 20 sample pairs

[Figure axes: difference in sample set means (%) and coefficient of variation]
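
As a rough check on the example above, the sketch below uses the common normal-approximation formula for comparing two means, n = 2[(z_alpha + z_beta) / (difference / CV)]^2, with a one-sided 95% confidence level and 80% power. Whether this is exactly the calculation behind the USEPA (2002) chart is an assumption, and the function name is hypothetical; the formula does reproduce the 20 sample pairs quoted on the slide.

```python
from math import ceil
from statistics import NormalDist


def sample_pairs(diff_in_means, cv, confidence=0.95, power=0.80):
    """Approximate number of sample pairs needed to detect a relative
    difference in means, given the coefficient of variation.

    diff_in_means and cv are fractions (0.80 means 80%).
    Assumption: one-sided test, normal approximation.
    """
    z_alpha = NormalDist().inv_cdf(confidence)  # about 1.645
    z_beta = NormalDist().inv_cdf(power)        # about 0.842
    effect = diff_in_means / cv                 # difference in units of CV
    return ceil(2 * ((z_alpha + z_beta) / effect) ** 2)


n = sample_pairs(0.80, 1.00)   # slide example: 80% difference, 100% CV
print(n)
```

Halving the detectable difference roughly quadruples the required number of pairs, which is why highly variable stormwater data call for many monitored storms.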

Qualifying Event Sample

TARP Protocol Criteria

- 10 water quality samples per event
  - 10 influent and 10 effluent
  - If composite: 2 composites, 5 sub-samples
- Data for flow rate and flow volume
- At least 50% of the total annual rainfall
  - CA – monitor 80-90% of rainfall

Qualifying Event Sample (continued)

- Preferably 20 storms, 15 minimum
- Sampling over the course of a full year to account for seasonal variation
- Flow-weighted composite samples should cover at least 70% of storm flow (and as much of the first 20% as possible)
- Examples of variation within TARP community
  - PA – Temporary BMPs sized using 2-year event
  - NJ – Water Quality design based on volume from a 1.25 inch event
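
The numeric criteria on the two Qualifying Event Sample slides can be screened with a short script like the one below. This is a minimal sketch: the `StormRecord` fields, the check names, and the example data are hypothetical, and the per-event sample count ignores the composite alternative (2 composites of 5 sub-samples), so a real review would need to handle that case separately.

```python
from dataclasses import dataclass


@dataclass
class StormRecord:
    rainfall_in: float             # event rainfall depth (inches)
    influent_samples: int          # discrete influent samples collected
    effluent_samples: int          # discrete effluent samples collected
    flow_fraction_sampled: float   # share of storm flow covered by sampling


def check_adequacy(storms, annual_rainfall_in):
    """Screen a monitoring data set against the numeric criteria on the slides."""
    monitored = sum(s.rainfall_in for s in storms)
    return {
        "min_storms": len(storms) >= 15,
        "preferred_storms": len(storms) >= 20,
        "rainfall_coverage": monitored >= 0.50 * annual_rainfall_in,
        "samples_per_event": all(
            s.influent_samples >= 10 and s.effluent_samples >= 10 for s in storms
        ),
        "flow_coverage": all(s.flow_fraction_sampled >= 0.70 for s in storms),
    }


# Hypothetical data set: 16 storms of 1.5 inches each in a 44-inch year.
storms = [StormRecord(1.5, 10, 10, 0.75) for _ in range(16)]
report = check_adequacy(storms, annual_rainfall_in=44.0)
for name, passed in report.items():
    print(f"{name}: {'ok' if passed else 'not met'}")
```

State-specific variations, such as California's 80-90% rainfall coverage, could be handled by passing the thresholds in as parameters instead of hard-coding them.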

Sampling Locations

- Location in close proximity to BMP technology inlet and outlet
- Consider field personnel safety during equipment access
- Secure equipment to avoid vandalism
- Provide a scaled plan view of site that indicates
  - All buildings
  - Land uses
  - Storm drain inlets
  - Other control devices

Automated Sampling Equipment

- Access to the equipment should take into consideration confined space safety
- Water surface elevation should be less than 15 feet below the elevation of the pump in the sampler
- Flow measurement equipment should be located to avoid measuring turbulent flow

Adequacy of Sampling and Flow Monitoring Procedures

- Primary and secondary flow measurement devices are required
- Programmable automatic flow samplers with continuous flow measurements are recommended
- Time-weighted composite samples are not acceptable unless flow is monitored and the event mean concentration can be calculated

Monitoring Location Recommendations

- Monitoring location design should consider whether the upgradient catchment system is served by a separate storm drain system
- Pay attention to potential combined sewer systems or illicit connections, which may contaminate the stormwater system
- The storm drain system should be well understood to allow reliable delineation and description of the catchment area
- Flow-measuring monitoring stations in open channels should have suitable hydraulic control and, where possible, the ability to install primary flow measurement devices

Monitoring Location Recommendations (continued)

- Avoid steep slopes, pipe diameter changes, junctions, and irregular channel shapes (due to breaks, roots, debris)
- Avoid locations likely to be affected by backwater and tidal conditions
- Pipe, culvert, or tunnel stations should be located to avoid surcharging (pressure flow) over the normal range of precipitation
- Use of reference watershed and remote rainfall data is discouraged

Selecting Applicable Water Quality Parameters

- Designated uses of the receiving water – consider stormwater discharge constituents
- Overall program objectives and resources – adjust parameter list according to resources (test method capability, personnel, funds, and time)
- Use of “Keystone” pollutant may vary from state to state

Resources for Standardized Test Methods (Section 3.1, TARP Protocol)

- EPA Test Methods – pollutant analysis, www.epa.gov/epahome/Standards.html
- ASME Standards and Practices – pressure flow measurements
- ASCE Standards – hydraulic flow estimation
- ASTM Standards – precision open-channel flow measurements for water constituent analysis
- National Field Manual for Collection of Water Quality Data, Wilde et al., USGS, water.usgs.gov/owq/FieldManual/
- “Guidance Manual: Stormwater Monitoring Protocols” – Caltrans, http://www.dot.ca.gov/hq/env/stormwater/special/newsetup/index.htm#monitoring

Examples of Analytical Laboratory Methods

- Total Phosphorus – SM 4500-P E
- Nitrate and Nitrite – EPA 353.1
- Ammonia – EPA 350.1
- Total Kjeldahl Nitrogen – EPA 351.2
- TSS – SM 2540 D
- SSC – ASTM D3977-97
- Enterococci – SM 9230 C
- Fecal Coliform – SM 9222 D
- Chronic Microtox Toxicity Test – Azur Environmental

Reference: ASTM, EPA, American Public Health Association (other non-standard methods or tests approved through an acceptable regulatory process, EPA or state authority)

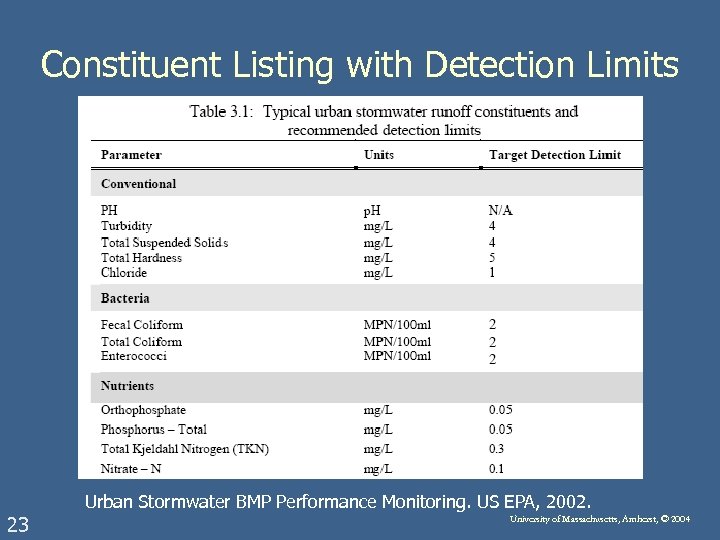

Constituent Listing with Detection Limits

Source: Urban Stormwater BMP Performance Monitoring. US EPA, 2002.

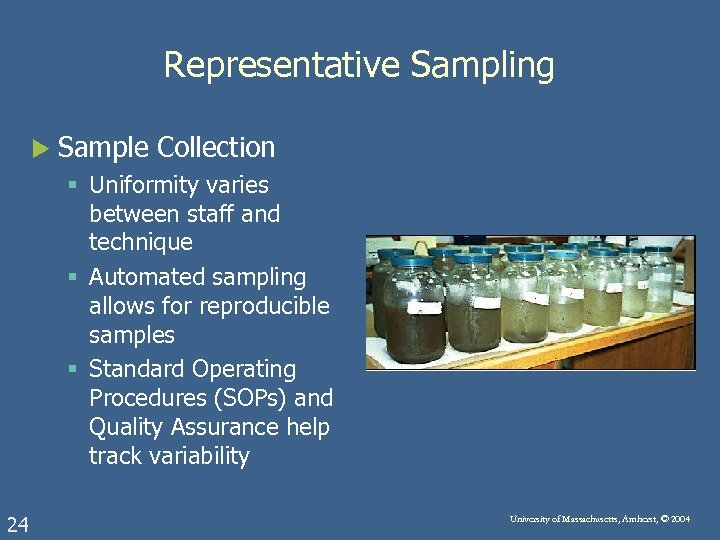

Representative Sampling

- Sample Collection
  - Uniformity varies between staff and technique
  - Automated sampling allows for reproducible samples
  - Standard Operating Procedures (SOPs) and Quality Assurance help track variability

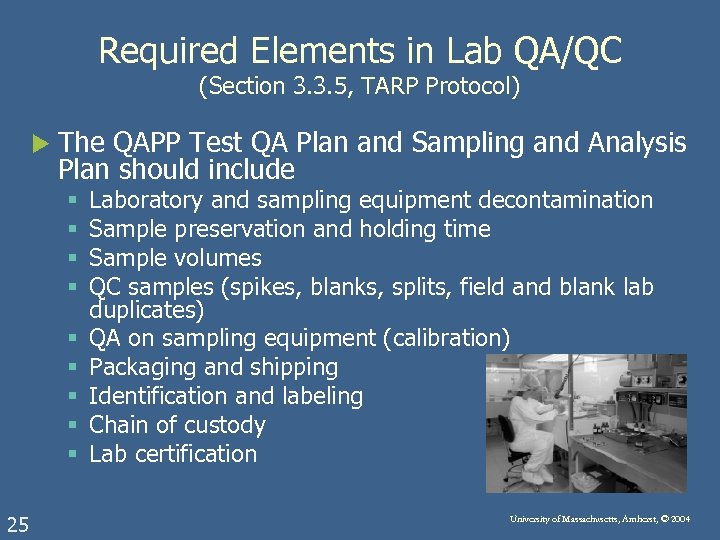

Required Elements in Lab QA/QC (Section 3.3.5, TARP Protocol)

- The QAPP Test QA Plan and Sampling and Analysis Plan should include
  - Laboratory and sampling equipment decontamination
  - Sample preservation and holding time
  - Sample volumes
  - QC samples (spikes, blanks, splits, field and blank lab duplicates)
  - QA on sampling equipment (calibration)
  - Packaging and shipping
  - Identification and labeling
  - Chain of custody
  - Lab certification

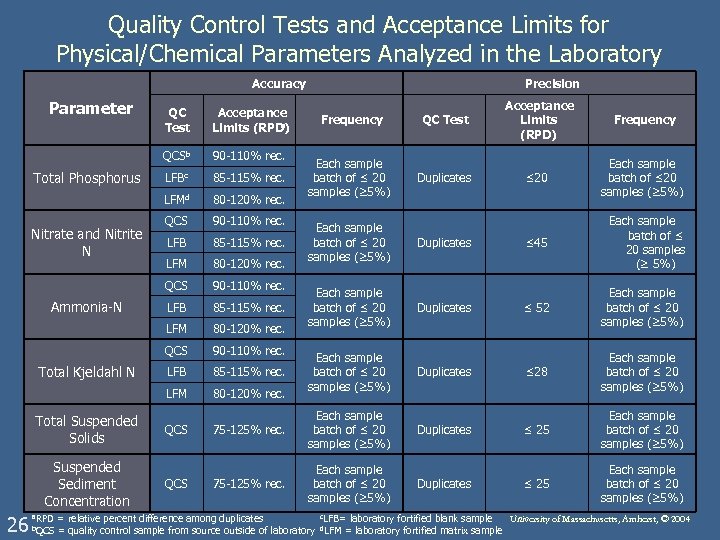

Quality Control Tests and Acceptance Limits for Physical/Chemical Parameters Analyzed in the Laboratory

[Table: the row-by-row assignment of values did not survive extraction; the recoverable content is listed below.]

- Columns: Parameter; Accuracy (QC test, acceptance limits, frequency); Precision (QC test, acceptance limits as RPD, frequency)
- Parameters: Total Phosphorus, Nitrate and Nitrite N, Ammonia-N, Total Kjeldahl N, Total Suspended Solids, Suspended Sediment Concentration
- Accuracy QC tests: QCS, LFB, LFM; acceptance limits listed: 85-115%, 80-120%, 90-110%, and 75-125% recovery
- Precision QC test: duplicates; acceptance limits listed (RPD): ≤ 20, ≤ 45, ≤ 52, ≤ 28, ≤ 25
- Frequency (accuracy and precision): each sample batch of ≤ 20 samples (≥ 5%)

Notes: RPD = relative percent difference among duplicates; QCS = quality control sample from a source outside of the laboratory; LFB = laboratory fortified blank sample; LFM = laboratory fortified matrix sample.
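
The accuracy and precision statistics named in this table are simple to compute. The sketch below uses the usual definitions: percent recovery for a QCS or fortified blank, spike recovery for a fortified matrix sample, and relative percent difference (RPD) for duplicates. The function names, example results, and the limits passed to the checks are illustrative assumptions and are not tied to any specific parameter row of the table.

```python
def percent_recovery(measured, true_value):
    """Recovery of a QCS or laboratory fortified blank, in percent."""
    return measured / true_value * 100.0


def spike_recovery(spiked_result, unspiked_result, spike_added):
    """Recovery of a laboratory fortified matrix (matrix spike), in percent."""
    return (spiked_result - unspiked_result) / spike_added * 100.0


def relative_percent_difference(first, second):
    """RPD between duplicate results, in percent of their mean."""
    mean = (first + second) / 2.0
    return abs(first - second) / mean * 100.0


def recovery_ok(recovery, low, high):
    """True if a recovery falls inside the acceptance window (percent)."""
    return low <= recovery <= high


def precision_ok(rpd, limit):
    """True if duplicate RPD is at or below the acceptance limit (percent)."""
    return rpd <= limit


# Hypothetical results in mg/L; limits chosen only for illustration.
qcs = percent_recovery(measured=0.95, true_value=1.00)
lfm = spike_recovery(spiked_result=1.40, unspiked_result=0.45, spike_added=1.00)
rpd = relative_percent_difference(0.42, 0.46)

print(f"QCS recovery {qcs:.1f}%  ok={recovery_ok(qcs, 90, 110)}")
print(f"LFM recovery {lfm:.1f}%  ok={recovery_ok(lfm, 80, 120)}")
print(f"Duplicate RPD {rpd:.1f}%  ok={precision_ok(rpd, 20)}")
```

Each check would be run once per sample batch of 20 or fewer samples, matching the frequency given in the table.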

Laboratory Records

- Sample data
  - Number of samples, holding times, location, deviation from SOPs, time of day, and date
  - Corrective procedures for samples inconsistent with the protocol
- Management of records
  - Consider electronic filing
  - Document data validation, calculations and analysis, and data presentation
  - Review data reports for completeness, including requested analyses and all required QA

Field Sample QC

- Field matrix spike
  - A sample prepared at the sampling point by adding a known mass of the target analyte to a specified amount of sample
  - Used to determine the effect of sample preservation, shipment, storage, and preparation on analyte recovery efficiency
- Field split sample
  - The split of a sample into two representative portions to be sent off to different laboratories
  - Estimates inter-laboratory precision

Quality Control

- Field blanks
  - A clean sample carried to the sampling site, exposed to sampling conditions, and returned to the laboratory
  - Provides useful information about pollutants and error that may be introduced during the sampling process
- Field duplicates
  - Identical samples taken from the same sampling location and time (not split)
  - Identical sampling and analytical procedures to assess variance of sampling and analysis

Quality Assurance / Quality Control Documentation and Records

- Documentation
  - Include all QC data
  - Define critical records and information, as well as the data reporting format and document control procedures
- Reporting
  - Field operation records
  - Laboratory records
  - Data handling records

Field Operation Records

- Sample collection records
  - Show that the proper sampling protocol was used in the field by indicating personnel names, sample numbers, collection points, maps, equipment/methods, climatic conditions, and unusual observations
- Chain of custody records
  - Document the progression of samples as they travel from sampling location to the lab to disposal area
- QC sample records
  - Document QC samples such as field blanks, trip blanks, and duplicate samples
  - Provide information on frequency, conditions, level of standards, and instrument calibration history

Field Operation Records (continued)

- General field procedures
  - Record field procedures and areas of difficulty in gathering samples
- Corrective action reports
  - Where deviations from standard operating procedures occur, report the methods used and/or details of the procedure, and a plan to resolve noncompliance issues

Questions and Answers (1 of 3)

Module I: Planning for a Stormwater BMP Demonstration

1. Factors Affecting Stormwater Sampling
2. Data Quality Objectives and the Test QA Plan

Module II: Collecting and Analyzing Stormwater BMP Data

3. Sampling Design
4. Statistical Analyses
5. Data Adequacy: Case Study

4. Statistical Analyses

- Data reporting and presentation
- Statistical method review
- Appropriate forms of analyses
- Results interpretation

Presenting Statistical Data and Applicability

Efficiency Calculation Implications

Determine the category of BMP:

- BMPs with well-defined inlets and outlets whose primary treatment depends upon extended detention storage of stormwater
- BMPs with well-defined inlets and outlets that do not depend upon significant storage of water
- BMPs that do not have a well-defined inlet and/or outlet
- Widely distributed BMPs that use reference watersheds to evaluate effectiveness

Analysis to Address Data Quality Objectives

- What degree of pollution control does the BMP provide under typical operating conditions?
- How does efficiency vary from pollutant to pollutant?
- How does efficiency vary with input concentrations?
- How does efficiency vary with storm characteristics?
- How do design variables affect performance?
- How does efficiency vary with different operational and/or maintenance approaches?

Analysis to Address Data Quality Objectives (Continued)

- Does efficiency improve, decay, or remain stable over time?
- How do efficiency, performance, and effectiveness compare to other BMPs?
- Does the BMP reduce toxicity?
- Does the BMP improve or protect downstream biotic communities?
- Does the BMP have potential downstream negative impacts?

Recommended Statistical Methods for Performance Evaluation

- Efficiency ratio
- Summation of loads
- Regression of loads
- Mean concentration
- Efficiency of individual storm loads
- Lines of Comparative Performance Method

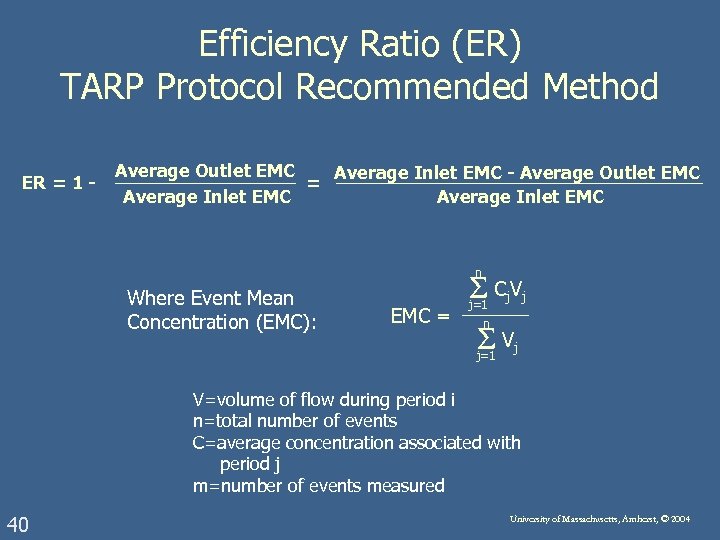

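Efficiency Ratio (ER): TARP Protocol Recommended Method

$$\mathrm{ER} = 1 - \frac{\text{Average Outlet EMC}}{\text{Average Inlet EMC}} = \frac{\text{Average Inlet EMC} - \text{Average Outlet EMC}}{\text{Average Inlet EMC}}$$

Where the Event Mean Concentration (EMC) is:

$$\mathrm{EMC} = \frac{\sum_{i=1}^{n} C_i V_i}{\sum_{i=1}^{n} V_i}$$

- $V_i$ = volume of flow during period $i$
- $C_i$ = average concentration associated with period $i$
- $n$ = total number of measurement periods during the event
- $m$ = number of events measured (the count used when averaging EMCs across events)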
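A minimal sketch of the efficiency ratio calculation, assuming each event's samples are available as (concentration, volume) pairs. The function names and all sample numbers are illustrative assumptions, not part of the TARP protocol.

```python
def event_mean_concentration(samples):
    """Flow-weighted EMC for one event from (concentration, volume) pairs."""
    total_volume = sum(volume for _, volume in samples)
    if total_volume <= 0:
        raise ValueError("event has no recorded flow volume")
    return sum(conc * volume for conc, volume in samples) / total_volume


def efficiency_ratio(inlet_emcs, outlet_emcs):
    """ER = 1 - (average outlet EMC / average inlet EMC)."""
    mean_in = sum(inlet_emcs) / len(inlet_emcs)
    mean_out = sum(outlet_emcs) / len(outlet_emcs)
    return 1.0 - mean_out / mean_in


# Hypothetical event: three sampling periods (mg/L, ft3).
inlet_event = [(120.0, 100.0), (80.0, 300.0), (40.0, 100.0)]
emc = event_mean_concentration(inlet_event)  # (12000 + 24000 + 4000) / 500 = 80.0

# Hypothetical EMCs for three monitored events (mg/L).
er = efficiency_ratio([80.0, 100.0, 60.0], [20.0, 30.0, 10.0])  # 1 - 20/80 = 0.75
```

Because the ratio is taken between averages, each event's EMC contributes equally regardless of how much water the event delivered.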

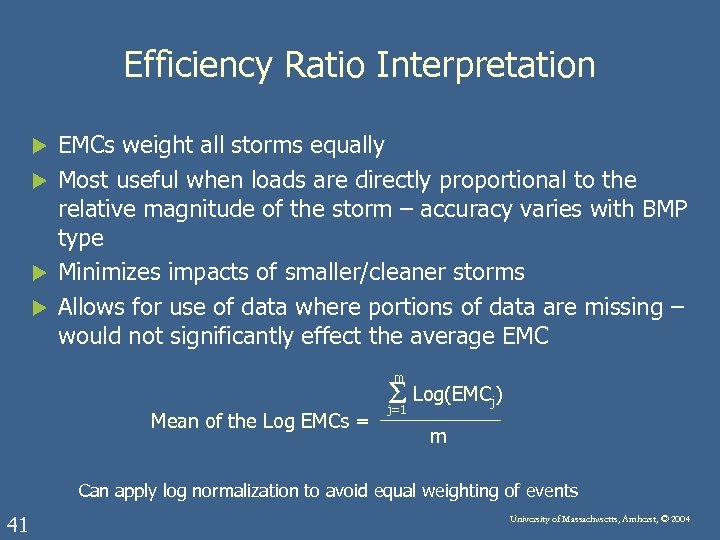

Efficiency Ratio Interpretation

- EMCs weight all storms equally
- Most useful when loads are directly proportional to the relative magnitude of the storm; accuracy varies with BMP type
- Minimizes impacts of smaller/cleaner storms
- Allows for use of data where portions of data are missing; this would not significantly affect the average EMC
- Can apply log normalization to avoid equal weighting of events:

$$\text{Mean of the Log EMCs} = \frac{\sum_{j=1}^{m} \log(\mathrm{EMC}_j)}{m}$$
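The log-normalized mean can be sketched as follows. The slide does not state the logarithm base, so the natural log is an assumption here, and the EMC values are hypothetical.

```python
import math


def mean_log_emc(emcs):
    """Mean of the log-transformed EMCs (natural log assumed; EMCs must be > 0)."""
    return sum(math.log(e) for e in emcs) / len(emcs)


emcs = [10.0, 100.0, 1000.0]                    # hypothetical EMCs in mg/L
arithmetic_mean = sum(emcs) / len(emcs)         # 370.0
geometric_mean = math.exp(mean_log_emc(emcs))   # back-transformed, about 100.0
```

The back-transformed value is the geometric mean, which is pulled far less by a single very high EMC than the arithmetic mean is.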

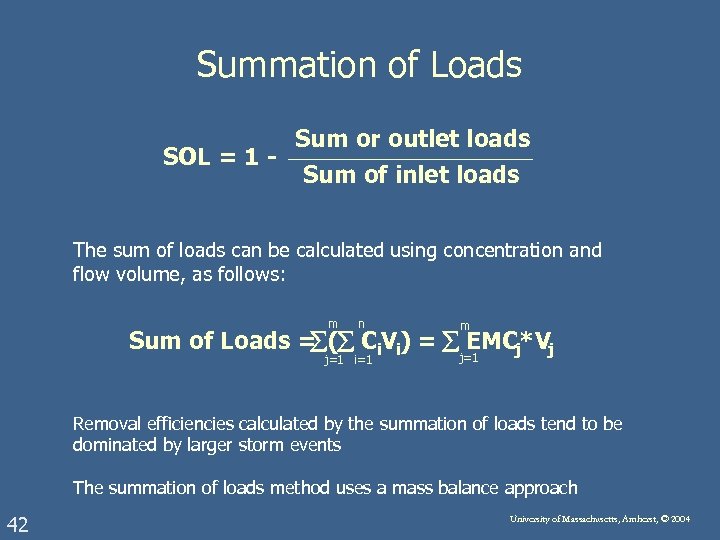

Summation of Loads

$$\mathrm{SOL} = 1 - \frac{\text{Sum of outlet loads}}{\text{Sum of inlet loads}}$$

The sum of loads can be calculated using concentration and flow volume, as follows:

$$\text{Sum of Loads} = \sum_{j=1}^{m}\left(\sum_{i=1}^{n} C_i V_i\right) = \sum_{j=1}^{m} \mathrm{EMC}_j \, V_j$$

- Removal efficiencies calculated by the summation of loads tend to be dominated by larger storm events
- The summation of loads method uses a mass balance approach
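A short sketch of the summation of loads, assuming each event is summarized as an (EMC, event volume) pair. The names and numbers are hypothetical, chosen so that one large storm dominates.

```python
def summation_of_loads(inlet, outlet):
    """SOL = 1 - (sum of outlet loads / sum of inlet loads).

    inlet, outlet: lists of (EMC, event volume) pairs in consistent units.
    """
    load_in = sum(emc * volume for emc, volume in inlet)
    load_out = sum(emc * volume for emc, volume in outlet)
    return 1.0 - load_out / load_in


# Hypothetical data: one large storm with 40% removal, two small storms with 90%.
inlet = [(100.0, 10000.0), (200.0, 500.0), (200.0, 500.0)]
outlet = [(60.0, 10000.0), (20.0, 500.0), (20.0, 500.0)]
sol = summation_of_loads(inlet, outlet)  # 1 - 620000/1200000, about 0.48
```

The result sits close to the large storm's 40% removal even though two of the three events achieved 90%, which is the dominance effect noted on the slide.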

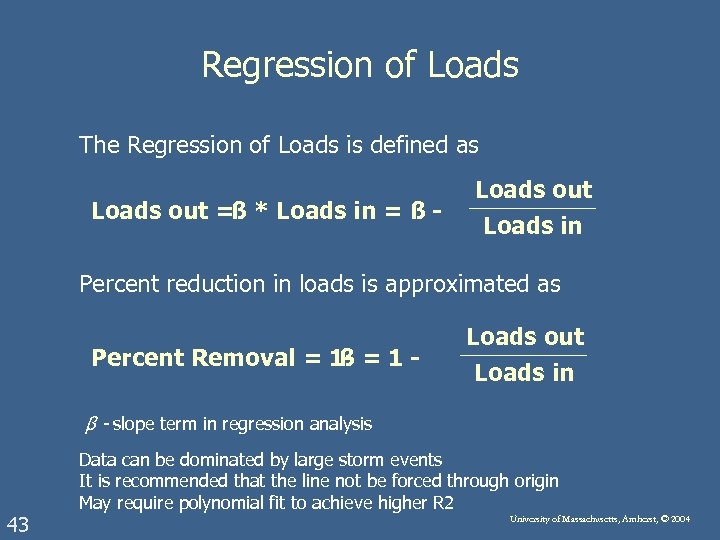

Regression of Loads

The Regression of Loads is defined as:

$$\text{Loads}_{out} = \beta \cdot \text{Loads}_{in}$$

Percent reduction in loads is approximated as:

$$\text{Percent Removal} = 1 - \beta = 1 - \frac{\text{Loads}_{out}}{\text{Loads}_{in}}$$

where $\beta$ is the slope term in the regression analysis.

- Data can be dominated by large storm events
- It is recommended that the line not be forced through the origin
- May require polynomial fit to achieve higher $R^2$
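A sketch of the regression of loads using ordinary least squares written out by hand. The `through_origin` flag is an assumption added for comparison: the default fits an intercept, in line with the recommendation not to force the line through the origin. The load values are hypothetical.

```python
def regression_of_loads(loads_in, loads_out, through_origin=False):
    """Least-squares fit of outlet load on inlet load.

    Returns (beta, intercept); percent removal is approximated as 1 - beta.
    """
    if through_origin:
        beta = (sum(x * y for x, y in zip(loads_in, loads_out))
                / sum(x * x for x in loads_in))
        return beta, 0.0
    n = len(loads_in)
    mean_x = sum(loads_in) / n
    mean_y = sum(loads_out) / n
    sxx = sum((x - mean_x) ** 2 for x in loads_in)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(loads_in, loads_out))
    beta = sxy / sxx
    return beta, mean_y - beta * mean_x


loads_in = [10.0, 20.0, 30.0, 40.0]   # hypothetical inlet loads
loads_out = [5.0, 9.0, 13.0, 17.0]    # constructed as 0.4 * inlet + 1
beta, intercept = regression_of_loads(loads_in, loads_out)
removal = 1.0 - beta                  # 0.6
beta_forced, _ = regression_of_loads(loads_in, loads_out, through_origin=True)
```

Forcing the fit through the origin gives a different slope (1300/3000, about 0.43) for the same data, so the reported removal depends on that choice.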

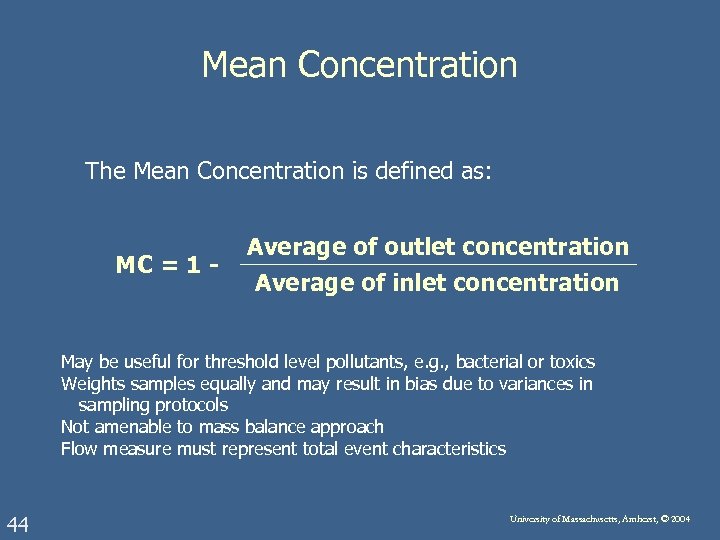

Mean Concentration

The Mean Concentration is defined as:

$$\mathrm{MC} = 1 - \frac{\text{Average of outlet concentration}}{\text{Average of inlet concentration}}$$

- May be useful for threshold-level pollutants, e.g., bacteria or toxics
- Weights samples equally and may result in bias due to variances in sampling protocols
- Not amenable to mass balance approach
- Flow measure must represent total event characteristics
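For comparison with the flow-weighted methods, a minimal sketch of the mean concentration calculation, in which every sample counts equally. The function name and the bacteria counts are hypothetical.

```python
def mean_concentration_removal(inlet_concs, outlet_concs):
    """MC = 1 - (average outlet concentration / average inlet concentration)."""
    mean_in = sum(inlet_concs) / len(inlet_concs)
    mean_out = sum(outlet_concs) / len(outlet_concs)
    return 1.0 - mean_out / mean_in


# Hypothetical bacteria counts (CFU/100 mL); no flow volumes are involved.
mc = mean_concentration_removal([400.0, 800.0, 600.0], [100.0, 200.0, 150.0])
# 1 - 150/600 = 0.75
```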

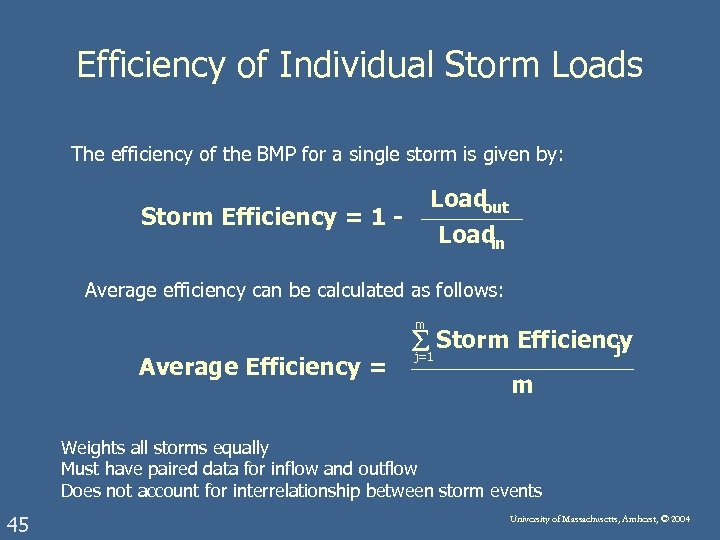

Efficiency of Individual Storm Loads

The efficiency of the BMP for a single storm is given by:

$$\text{Storm Efficiency} = 1 - \frac{\text{Load}_{out}}{\text{Load}_{in}}$$

Average efficiency can be calculated as follows:

$$\text{Average Efficiency} = \frac{\sum_{j=1}^{m} \text{Storm Efficiency}_j}{m}$$

- Weights all storms equally
- Must have paired data for inflow and outflow
- Does not account for interrelationship between storm events
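A brief sketch of the per-storm calculation, assuming paired (inlet load, outlet load) values for each storm. The names and loads are hypothetical.

```python
def storm_efficiency(load_in, load_out):
    """Efficiency for a single storm: 1 - (load out / load in)."""
    return 1.0 - load_out / load_in


def average_storm_efficiency(paired_loads):
    """Unweighted mean of the per-storm efficiencies."""
    efficiencies = [storm_efficiency(li, lo) for li, lo in paired_loads]
    return sum(efficiencies) / len(efficiencies)


# Hypothetical paired loads: one large storm (40% removal), two small (90%).
pairs = [(1000000.0, 600000.0), (100000.0, 10000.0), (100000.0, 10000.0)]
avg = average_storm_efficiency(pairs)  # (0.4 + 0.9 + 0.9) / 3, about 0.73
```

With these loads the unweighted average is about 73%, while a summation of the same loads gives about 48%, because here the small storms count as much as the large one.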

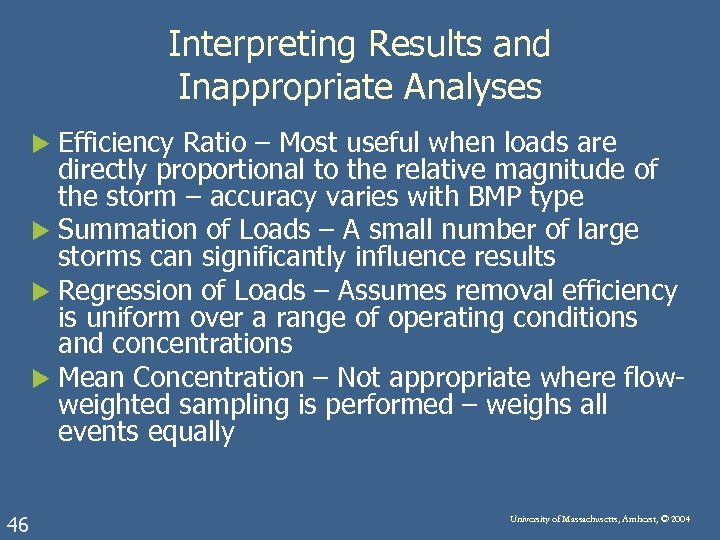

Interpreting Results and Inappropriate Analyses

- Efficiency Ratio: most useful when loads are directly proportional to the relative magnitude of the storm; accuracy varies with BMP type
- Summation of Loads: a small number of large storms can significantly influence results
- Regression of Loads: assumes removal efficiency is uniform over a range of operating conditions and concentrations
- Mean Concentration: not appropriate where flow-weighted sampling is performed; weights all events equally

Using Statistics Inappropriately

- Each method is likely to produce a different efficiency
- Method should be chosen by its relevance and applicability to each scenario, not by the efficiency value it produces
- Be aware of statistics being misused to support claims
- Reporting of ranges may be more appropriate under certain test conditions, e.g., determination that data is qualitative versus quantitative

Questions and Answers (2 of 3)

Module I: Planning for A Stormwater BMP Demonstration
1. Factors Affecting Stormwater Sampling
2. Data Quality Objectives and the Test QA Plan

Module II: Collecting and Analyzing Stormwater BMP Data
3. Sampling Design
4. Statistical Analyses
5. Data Adequacy: Case Study

5. Case Study I: Test Plan and Data Adequacy

- Review of data
  - Test QA Plan and Data Quality Assurance Project Plan
  - Field and lab data adequacy
  - Data reporting and depiction of performance claims
- Potential problems
  - Field and lab violations
  - Data reduction issues

Background

- Study of structural BMP
- Performance claim made: 80% TSS removal
- Study commenced prior to TARP Protocol as well as other published, public domain stormwater BMP monitoring protocols
- Resources for conducting study borne by single private entity
- Study design included limited information on site, sampling equipment, sampler programming, calibration, sample collection and analysis
- TSS/SSC primary water quality parameter of interest
- Flow measurement and rain gauge equipment to be installed
- Analytical testing to be performed by outside laboratory
- Sample collection by technology developer

Sample Plan Adequacy: Issues Identified

- Provide complete engineering drawings of system including
  - Entire drainage area connected to system
  - Pipe sizing and inlet locations
  - Description of pervious and impervious surfaces
  - Design calculations used to size unit
  - Climatic data used to design structures
  - Any additional structures or site details that may have bearing on system
- Provide use characteristics of site including
  - Vehicle and industrial usage
  - Maintenance of site relative to sweeping, gutter maintenance, spill containment, and snow stockpiling/disposal
- Indicate condition and maintenance of system prior to commencing test, e.g., was system cleaned out during or before initiation of this phase of the study?

Sample Plan Adequacy: Test Equipment and Sampling Issues

- Provide a description and/or reference to manufacturer's documentation showing how flow meter and sampling equipment are calibrated and their location in the system (cross-section and plan view)
- Detailed description of sampling program by design and storm event
- Indicate when and how much sample to be taken
- Provide explanation and methodology for discrete sampling. Indicate who, when, and where in the system these samples were taken
- Identify equipment used and calibrations used for sampling
- Provide discussion/explanation for sampling without detention delay in the system between inlet and outlet

Sample Plan Adequacy: Lab and Data Analysis Issues

- Identify laboratory and controls for sample handling, QA/QC on sample data
- Identify methodology used for PSD, including sieve sizes and sample sizes
- Analytical method for TSS stated in protocol: EPA 160.2
- Need explanation and equations used to calculate EMC, including calculation worksheets

Field Test Issues

- Flow meters not calibrated for the first 10 events
- Truncated sampling protocol
- No documented instrument calibration
- Once flow measurement error was identified, an adjusted flow factor was applied to the first 10 events, based on the outcome of the second 10 events
- Measured and calculated storm volumes vary by up to a factor of 10, resulting in adjustment to flow volume

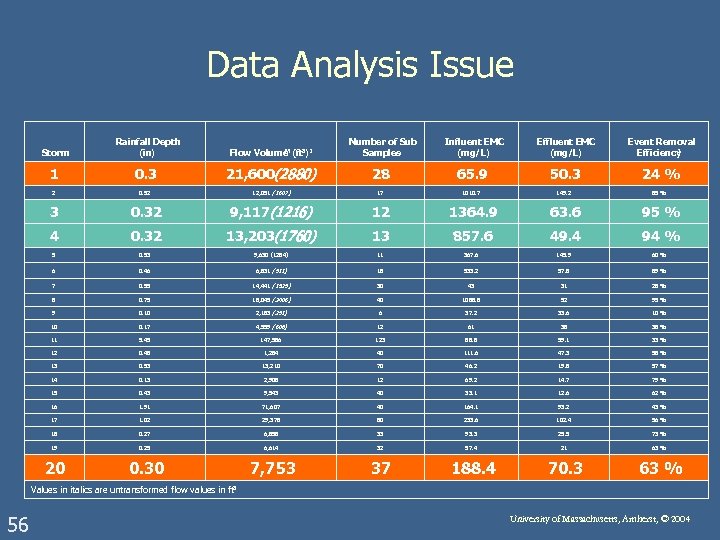

Data Analysis Issue

| Storm | Rainfall Depth (in) | Flow Volume (ft³)¹ | Number of Sub Samples | Influent EMC (mg/L) | Effluent EMC (mg/L) | Event Removal Efficiency |
|---|---|---|---|---|---|---|
| 1 | 0.3 | 21,600 (2880) | 28 | 65.9 | 50.3 | 24% |
| 2 | 0.52 | 12,051 (1607) | 17 | 1010.7 | 149.2 | 85% |
| 3 | 0.32 | 9,117 (1216) | 12 | 1364.9 | 63.6 | 95% |
| 4 | 0.32 | 13,203 (1760) | 13 | 857.6 | 49.4 | 94% |
| 5 | 0.53 | 9,630 (1284) | 11 | 367.6 | 145.9 | 60% |
| 6 | 0.46 | 6,831 (911) | 18 | 533.2 | 57.8 | 89% |
| 7 | 0.55 | 14,441 (1925) | 30 | 43 | 31 | 28% |
| 8 | 0.75 | 18,045 (2406) | 40 | 1088.8 | 52 | 95% |
| 9 | 0.10 | 2,183 (291) | 6 | 37.2 | 33.6 | 10% |
| 10 | 0.17 | 4,559 (608) | 12 | 61 | 38 | 38% |
| 11 | 5.45 | 147,586 | 123 | 88.8 | 59.1 | 33% |
| 12 | 0.48 | 1,284 | 40 | 111.6 | 47.3 | 58% |
| 13 | 0.53 | 13,210 | 70 | 46.2 | 19.8 | 57% |
| 14 | 0.13 | 2,908 | 12 | 69.2 | 14.7 | 79% |
| 15 | 0.43 | 9,543 | 40 | 33.1 | 12.6 | 62% |
| 16 | 1.91 | 71,607 | 40 | 164.1 | 93.2 | 43% |
| 17 | 1.02 | 29,378 | 80 | 233.6 | 102.4 | 56% |
| 18 | 0.27 | 6,858 | 33 | 93.3 | 25.5 | 73% |
| 19 | 0.25 | 6,614 | 32 | 57.4 | 21 | 63% |
| 20 | 0.30 | 7,753 | 37 | 188.4 | 70.3 | 63% |

¹ Values in parentheses (italic on the original slide) are untransformed flow values in ft³.
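The paired flow values for storms 1 to 10 show the size of the flow adjustment that was applied. The quick check below uses only the numbers in the table; the variable names are illustrative, and treating the parenthesized value as the untransformed reading is an assumption based on the footnote.

```python
# (adjusted flow ft3, untransformed flow ft3) for storms 1-10, copied from the table.
flows = [
    (21600, 2880), (12051, 1607), (9117, 1216), (13203, 1760), (9630, 1284),
    (6831, 911), (14441, 1925), (18045, 2406), (2183, 291), (4559, 608),
]

# Ratio of adjusted to untransformed flow for each storm.
factors = [adjusted / raw for adjusted, raw in flows]
lowest, highest = min(factors), max(factors)
print(f"adjustment factor ranges from {lowest:.3f} to {highest:.3f}")
```

Every ratio falls within 0.01 of 7.5, so a single multiplier appears to have been applied to all ten early events.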

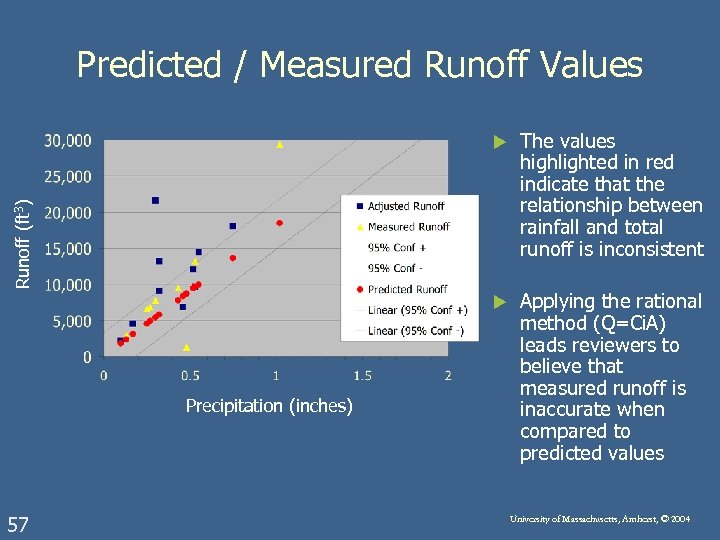

Predicted / Measured Runoff Values

- The values highlighted in red indicate that the relationship between rainfall and total runoff is inconsistent
- Applying the rational method (Q = CiA) leads reviewers to believe that measured runoff is inaccurate when compared to predicted values

Plot axes: Runoff (ft³) versus Precipitation (inches)
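One way to screen for this kind of inconsistency is sketched below. It assumes a volume form of the rational relationship (runoff volume roughly proportional to rainfall depth for a fixed site), so runoff per inch of rain should be similar from storm to storm. The 0.5x and 2x thresholds are arbitrary assumptions, and the storms flagged here are not necessarily the ones highlighted on the slide.

```python
import statistics

# (storm, rainfall depth in inches, flow volume in ft3) for storms 11-20,
# copied from the data table slide.
storms = [
    (11, 5.45, 147586), (12, 0.48, 1284), (13, 0.53, 13210), (14, 0.13, 2908),
    (15, 0.43, 9543), (16, 1.91, 71607), (17, 1.02, 29378), (18, 0.27, 6858),
    (19, 0.25, 6614), (20, 0.30, 7753),
]


def flag_inconsistent(storms, low=0.5, high=2.0):
    """Return storms whose runoff per inch of rain is far from the median."""
    median = statistics.median(volume / depth for _, depth, volume in storms)
    return [storm for storm, depth, volume in storms
            if not low * median <= volume / depth <= high * median]


flagged = flag_inconsistent(storms)
```

With these thresholds only storm 12 is flagged: it recorded roughly 2,700 ft³ per inch of rain against a median near 25,600 ft³ per inch.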

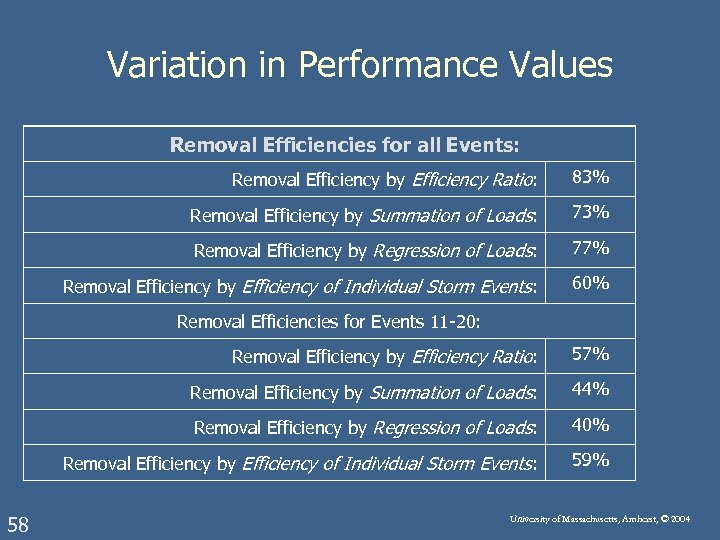

Variation in Performance Values

Removal Efficiencies for all Events:

- Removal Efficiency by Efficiency Ratio: 83%
- Removal Efficiency by Summation of Loads: 73%
- Removal Efficiency by Regression of Loads: 77%
- Removal Efficiency by Efficiency of Individual Storm Events: 60%

Removal Efficiencies for Events 11-20:

- Removal Efficiency by Efficiency Ratio: 57%
- Removal Efficiency by Summation of Loads: 44%
- Removal Efficiency by Regression of Loads: 40%
- Removal Efficiency by Efficiency of Individual Storm Events: 59%
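Three of these figures can be recomputed directly from the data table slide. The sketch below assumes the adjusted flow volumes were used for storms 1 to 10 and that inlet and outlet volumes are equal for each event; the function names are illustrative. The regression of loads is left out because the fitting details are not given.

```python
# (influent EMC mg/L, effluent EMC mg/L, flow volume ft3) for storms 1-20,
# copied from the data table slide (adjusted volumes for storms 1-10).
storms = [
    (65.9, 50.3, 21600), (1010.7, 149.2, 12051), (1364.9, 63.6, 9117),
    (857.6, 49.4, 13203), (367.6, 145.9, 9630), (533.2, 57.8, 6831),
    (43.0, 31.0, 14441), (1088.8, 52.0, 18045), (37.2, 33.6, 2183),
    (61.0, 38.0, 4559), (88.8, 59.1, 147586), (111.6, 47.3, 1284),
    (46.2, 19.8, 13210), (69.2, 14.7, 2908), (33.1, 12.6, 9543),
    (164.1, 93.2, 71607), (233.6, 102.4, 29378), (93.3, 25.5, 6858),
    (57.4, 21.0, 6614), (188.4, 70.3, 7753),
]


def efficiency_ratio(rows):
    # Ratio of average EMCs; the event counts cancel, so sums are sufficient.
    return 1 - sum(out for _, out, _ in rows) / sum(inl for inl, _, _ in rows)


def summation_of_loads(rows):
    load_in = sum(inl * vol for inl, _, vol in rows)
    load_out = sum(out * vol for _, out, vol in rows)
    return 1 - load_out / load_in


def mean_storm_efficiency(rows):
    # With equal inlet and outlet volumes, the load ratio equals the EMC ratio.
    return sum(1 - out / inl for inl, out, _ in rows) / len(rows)


for label, rows in (("all events", storms), ("events 11-20", storms[10:])):
    print(label,
          f"ER={efficiency_ratio(rows):.0%}",
          f"SOL={summation_of_loads(rows):.0%}",
          f"individual={mean_storm_efficiency(rows):.0%}")
```

Under these assumptions the output is 83%, 73%, and 60% for all events and 57%, 44%, and 59% for events 11-20, matching the slide. Dropping the ten flow-adjusted events lowers the efficiency ratio by about 26 percentage points.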

Data Analysis and Presentation Issues

- Impact of adjusted flow on average net TSS/SSC removal resulted in positive bias in performance efficiency
- Missing raw data including documented deviations from sampling plan, lab analysis and data management
- Missing laboratory and data management QA/QC
- No independent validation of calibrations, sampling and analysis
- Missing statistical analysis including relative percent differences (precision) and percent recovery (accuracy) as well as number of samples (n), standard deviations, means (specify arithmetic or geometric) and standard errors
- Missing documentation of chain of custody protocol
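The missing statistics named above are simple to compute. The sketch below uses the commonly applied definitions of relative percent difference and matrix spike percent recovery; the function names and all numbers are hypothetical, and a study's QA plan may define these quantities differently.

```python
import math
import statistics


def relative_percent_difference(result, duplicate):
    """Precision: |x1 - x2| divided by the mean of the pair, as a percent."""
    return abs(result - duplicate) / ((result + duplicate) / 2) * 100


def percent_recovery(spiked_result, unspiked_result, spike_added):
    """Accuracy: share of a known spike that the analysis recovered, as a percent."""
    return (spiked_result - unspiked_result) / spike_added * 100


rpd = relative_percent_difference(110.0, 90.0)   # 20 / 100 * 100 = 20.0
recovery = percent_recovery(145.0, 50.0, 100.0)  # 95 / 100 * 100 = 95.0

# Summary statistics for a hypothetical set of effluent EMCs (mg/L).
emcs = [20.0, 40.0, 80.0]
n = len(emcs)
arithmetic_mean = statistics.mean(emcs)
geometric_mean = statistics.geometric_mean(emcs)  # cube root of 64000 = 40
std_dev = statistics.stdev(emcs)                  # sample standard deviation
std_error = std_dev / math.sqrt(n)
```

Reporting which mean was used matters: for these three values the arithmetic mean is about 46.7 mg/L while the geometric mean is 40 mg/L.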

Ensuring Adequate Data

- Provide QC activities including blanks, duplicates, matrix spikes, lab control samples, surrogates, or second column confirmation
- State the frequency of analysis and the spike compound sources and levels
- State required control limits for each QC activity and specify corrective actions and their effectiveness if limits are exceeded

Summary of Data Quality Assessment: 5 Steps

1. Review the DQOs and sampling design
2. Conduct a preliminary data review
3. Select the statistical test
4. Verify the assumptions of the statistical test
5. Draw conclusions from the data

Summary: Sampling Design

- Use of TARP Protocol data collection criteria
- Consideration of issues relating to sampling locations
- Sampling water quality parameters
- Lab analysis of water quality parameters

Summary: Statistical Analysis

- Protocol recommends the efficiency ratio
- Summation of loads, regression of loads, and mean concentration may be applicable
- Each method gives somewhat different results
- Check statistics to be sure they support claims

Summary: Case Study

- Learn to recognize limitations in test plans, equipment data and documentation deficiencies, and problems with the statistical analysis
- Making decisions on the usability of the data
- Sharing the data among TARP states and others

Module II: Retrospective

- Sampling design
  - Planning, contingency planning, and flexibility
- Statistical analysis
  - Methods show different results; use caution interpreting results
- Data adequacy: case study
  - Learning to deal with sampling and data deficiencies

What Have You Accomplished?

- Guidance for using the TARP protocol
- Exposure to key issues in a technology demonstration field test
- Knowledge of TARP stormwater work group and others evaluating technologies

Questions and Answers (3 of 3)

Evaluating Stormwater Technology Performance: Links to Additional Resources

TARP: http://www.dep.state.pa.us/deputate/pollprev/techservices/tarp/
