
- Number of slides: 31

Evaluating Comprehensive Equity Projects: An Overview
Milbrey McLaughlin, Stanford University, November 17, 2008

What is the “state of the art” of indicators and tools to evaluate a complementary learning system?

Presentation outline
- What are the elements of a “good measure” for a comprehensive equity project?
- The theory of change that guides a comprehensive equity initiative
- Existing indicators: state of the art
- Issues & challenges for a comprehensive equity initiative
- Moving to an integrated data system

What is a “Good Measure”? Indicators for a complementary learning initiative
- Meaningful and face valid to multiple stakeholders
- Useful to policy makers and practitioners: actionable
- Comparable over time & contexts
- Reliable: can’t be manipulated
- Practical to collect & analyze

Elements of a good measurement system for a complementary learning initiative
- Looks across domains; collects positive as well as negative evidence
- Attends to contextual considerations & factors outside policy maker/practitioner control
- Asks questions stakeholders consider legitimate

A good measurement system for a complementary learning initiative also…
- Incorporates indicators that are accessible & relevant across agencies and levels
- Secures buy-in of the multiple stakeholders involved; develops consensus about both measurement & use
- Is parsimonious & efficient
- Assesses the underlying theory of change

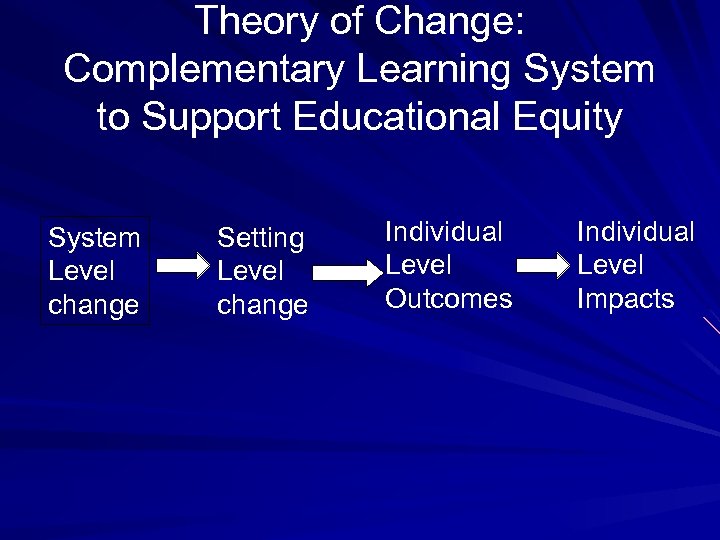

Theory of Change: Complementary Learning System to Support Educational Equity
- System-level change
- Setting-level change
- Individual-level outcomes
- Individual-level impacts

Indicators at each level include attitudinal, behavioral, knowledge and status data

Individual-level indicators measure the “so what”: benefits to youth

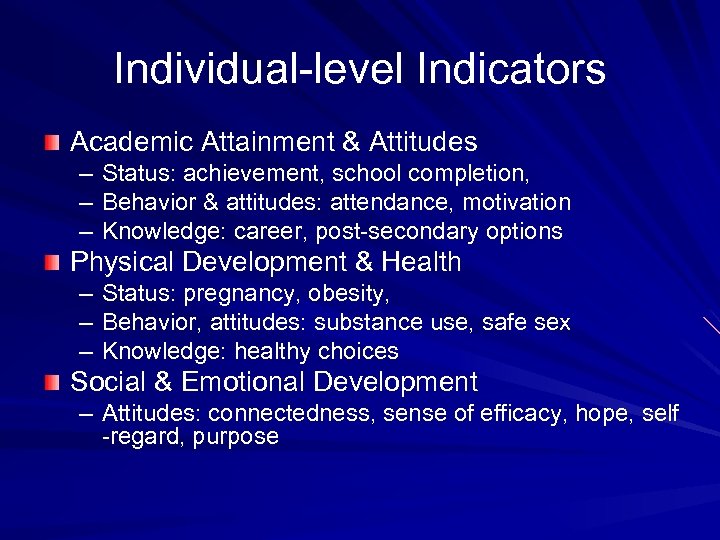

Individual-level Indicators
- Academic Attainment & Attitudes
  - Status: achievement, school completion
  - Behavior & attitudes: attendance, motivation
  - Knowledge: career, post-secondary options
- Physical Development & Health
  - Status: pregnancy, obesity
  - Behavior, attitudes: substance use, safe sex
  - Knowledge: healthy choices
- Social & Emotional Development
  - Attitudes: connectedness, sense of efficacy, hope, self-regard, purpose

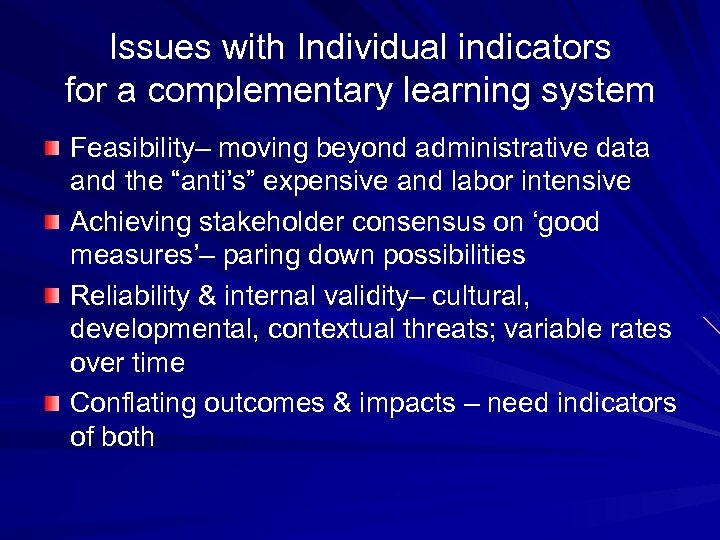

Issues with individual-level indicators for a complementary learning system
- Feasibility: moving beyond administrative data and the “anti’s” is expensive and labor intensive
- Achieving stakeholder consensus on ‘good measures’: paring down possibilities
- Reliability & internal validity: cultural, developmental, contextual threats; variable rates over time
- Conflating outcomes & impacts: need indicators of both

Setting-level indicators measure elements of a program thought to affect individual outcomes & impacts

Setting Level Indicators
- Participation: youth & parents
- Professional capacity & staff support
- Youth relationships with adults
- Youth leadership/voice
- Menu of opportunities/quality
- Partnerships

Issues with setting-level indicators: Practical
- Feasibility: existing indicators are typically part of costly program evaluations [surveys, observations, focus groups]; not replicable in an indicators system
- Limited local capacity to collect & analyze data, especially among CBOs
- Data politics: worries about revealing shortfalls, jeopardizing funding

Issues with setting-level indicators: Technical
- Generalizability: what is the “treatment,” given situated practice? “Whatever it takes…”
- Churn in participants, staff and providers
- Qualitative considerations: interpretation more than enumeration
- Unexamined context considerations & environmental shifts: misattribution

System-level indicators measure elements of the relevant policy system that affect the setting-level indicators important to youth outcomes

System Level Indicators
- Committed, stable resources: financial & technical
- Cross-agency/sector collaboration
- Dedicated infrastructure to support new institutional relationships
- Capacity to provide support, use indicators
- Political backing for the initiative & broad stakeholder support
- Policy accommodation, e.g., waivers, incentives

Issues with System Level Indicators
- Few models or indicators exist, especially at state level: needs development
- No great “felt need”: focus on outcomes
- Political challenges: looking across agencies and budgets for evidence of collaboration/data integration

Without cross-agency, cross-level indicators…
- Cannot assess a complementary learning system’s theory of change
- Difficult to monitor progress toward educational equity in terms of “inputs”
- Difficult to track outcomes and progress across levels, identify implementation issues, and make necessary adjustments

Needed: An Integrated Data System
- Local report cards: Philadelphia, Baltimore
- South Carolina: across public agencies
- Chapin Hall: Integrated Data Base on Child and Family Programs in IL
- The Youth Data Archive, John W. Gardner Center, Stanford

What is the Youth Data Archive?
- Links existing data from multiple agencies
- Community resource to answer questions about youth in the larger environment
- Supports inter-agency collaboration to improve service delivery and youth outcomes

YDA differs from existing data integration efforts
- Not a data “warehouse”: a partnership with existing communities and agencies; specific attention to stakeholder buy-in
- Includes CBOs, non-profits & qualitative data
- Includes data from various system levels
- Matches data at the individual level
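Matching data at the individual level can be pictured with a minimal sketch. It assumes every agency's records already carry a shared identifier; the `youth_id` key, the agency names, and the sample records are all hypothetical and are not taken from the Youth Data Archive. Real linkage often has no common ID and has to match on names, birth dates, and similar fields.

```python
from collections import defaultdict


def link_records(sources):
    """Group records from several agencies by a shared individual ID.

    `sources` maps an agency name to a list of dict records. Every record
    is assumed to carry a common "youth_id" key (a simplifying assumption).
    If one agency has several records for the same youth, the last one wins.
    """
    linked = defaultdict(dict)
    for agency, records in sources.items():
        for record in records:
            youth_id = record["youth_id"]
            # Keep each agency's fields under its own name to avoid collisions.
            linked[youth_id][agency] = {
                key: value for key, value in record.items() if key != "youth_id"
            }
    return dict(linked)


# Made-up records, for illustration only.
sources = {
    "school": [
        {"youth_id": 1, "attendance_rate": 0.93},
        {"youth_id": 2, "attendance_rate": 0.81},
    ],
    "after_school": [
        {"youth_id": 2, "sessions_attended": 14},
    ],
}
linked = link_records(sources)
```

Here `linked[2]` holds both the school and the after-school fields for the same youth, while `linked[1]` holds only school data.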

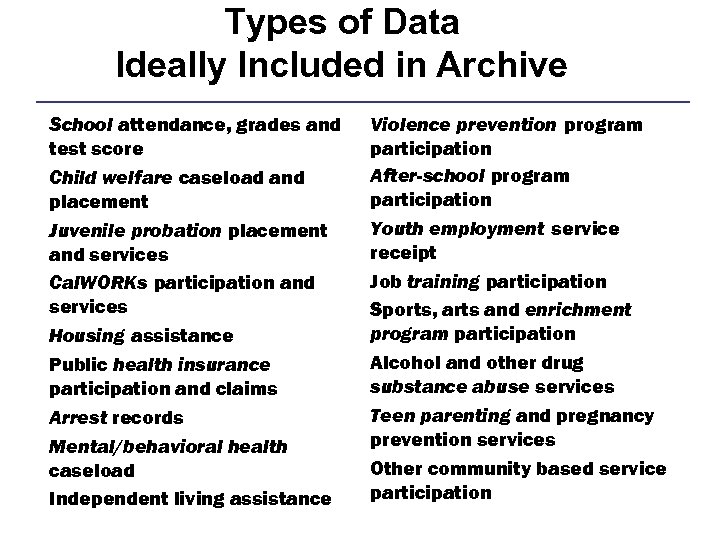

Types of Data Ideally Included in Archive
- School attendance, grades and test scores
- Child welfare caseload and placement
- Juvenile probation placement and services
- CalWORKs participation and services
- Housing assistance
- Public health insurance participation and claims
- Arrest records
- Mental/behavioral health caseload
- Independent living assistance
- Violence prevention program participation
- After-school program participation
- Youth employment service receipt
- Job training participation
- Sports, arts and enrichment program participation
- Alcohol and other drug substance abuse services
- Teen parenting and pregnancy prevention services
- Other community-based service participation

Some types of analyses…
- Event histories: considering ‘value added’; pathways
- Comparative contributions of similar resources
- Conditional ‘treatment’ effects; effects on subpopulations
- Cross-context comparisons [demographic, SES, ‘treatment’, pathways]; create a natural experiment
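A subpopulation comparison of the kind listed above might look like the following sketch. It is purely descriptive: it computes, within each subgroup, the mean outcome of youth who received a service minus the mean outcome of those who did not, with no adjustment for selection or other confounders. The field names (`subgroup`, `served`, `score_change`) and all values are hypothetical and do not come from the presentation.

```python
from collections import defaultdict
from statistics import mean


def subgroup_differences(rows):
    """Per subgroup: mean outcome of served youth minus mean of unserved youth."""
    groups = defaultdict(lambda: {True: [], False: []})
    for row in rows:
        groups[row["subgroup"]][row["served"]].append(row["score_change"])

    differences = {}
    for name, by_status in groups.items():
        # Skip subgroups that lack either a served or an unserved group.
        if by_status[True] and by_status[False]:
            differences[name] = mean(by_status[True]) - mean(by_status[False])
    return differences


# Made-up rows, for illustration only.
rows = [
    {"subgroup": "A", "served": True, "score_change": 4},
    {"subgroup": "A", "served": True, "score_change": 6},
    {"subgroup": "A", "served": False, "score_change": 1},
    {"subgroup": "A", "served": False, "score_change": 3},
    {"subgroup": "B", "served": True, "score_change": 2},
    {"subgroup": "B", "served": False, "score_change": 2},
    {"subgroup": "B", "served": False, "score_change": 4},
]
differences = subgroup_differences(rows)
```

A raw difference like this is only a starting point; whether it says anything about the effect of a service depends on the comparison design and on significance testing.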

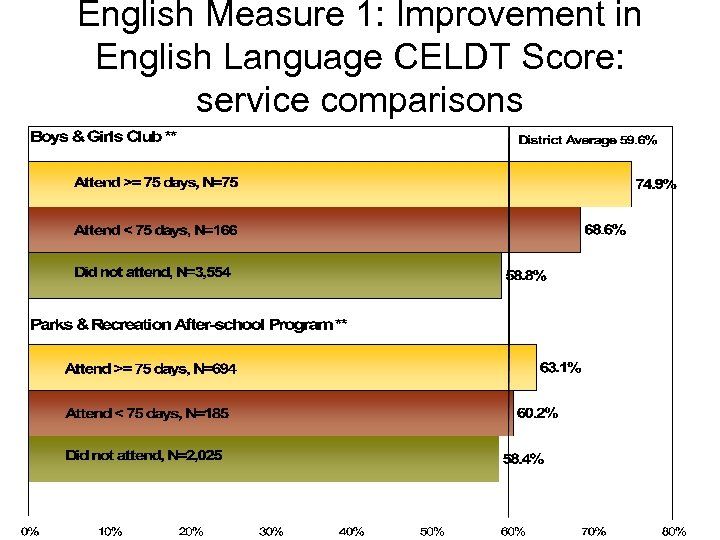

English Measure 1: Improvement in English Language CELDT Score: service comparisons
*Significant difference at 90% level
**Significant difference at 95% level

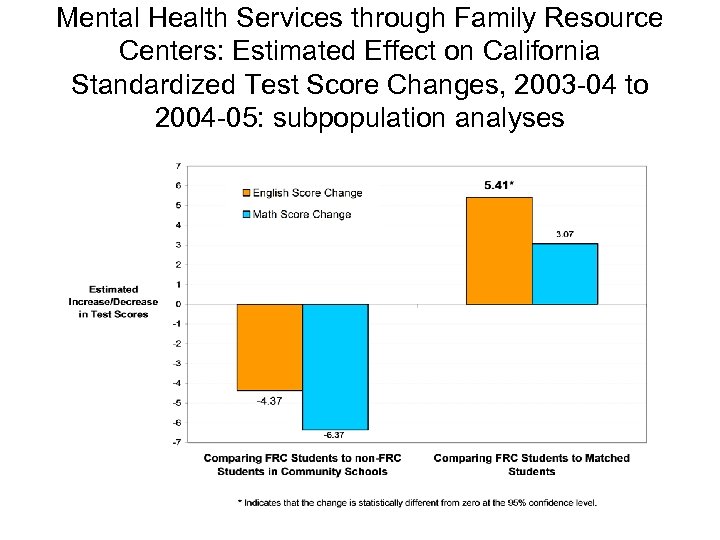

Mental Health Services through Family Resource Centers: Estimated Effect on California Standardized Test Score Changes, 2003-04 to 2004-05: subpopulation analyses

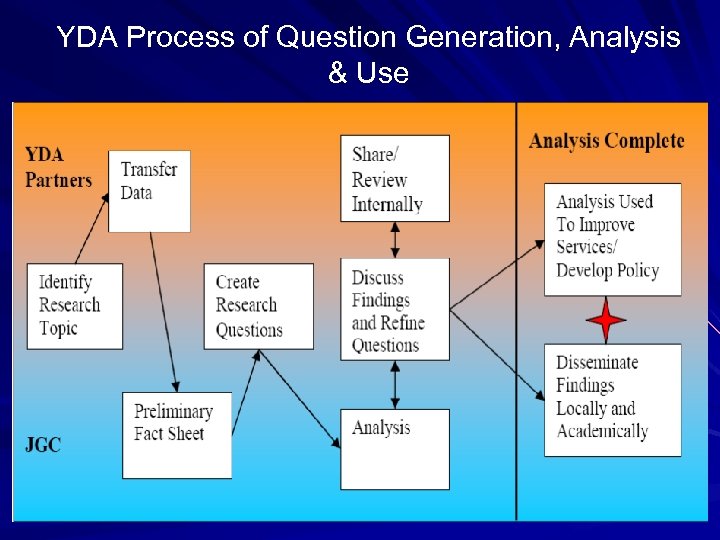

YDA Process of Question Generation, Analysis & Use

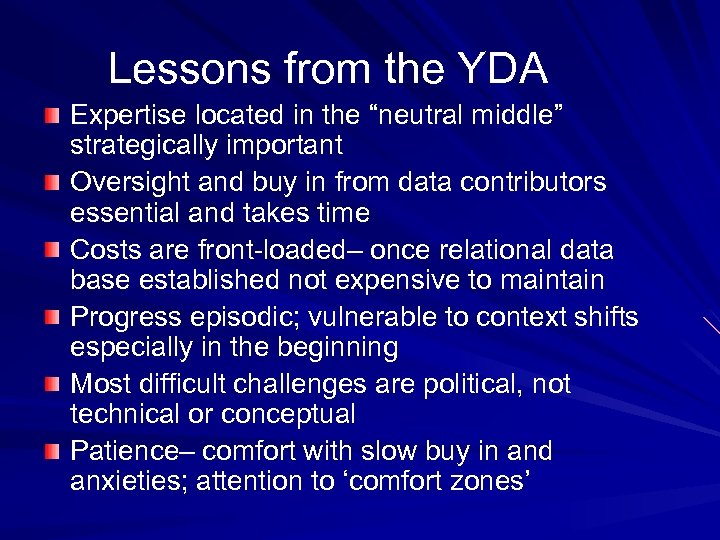

Lessons from the YDA
- Expertise located in the “neutral middle” is strategically important
- Oversight and buy-in from data contributors are essential and take time
- Costs are front-loaded: once the relational database is established, it is not expensive to maintain
- Progress is episodic; vulnerable to context shifts, especially in the beginning
- Most difficult challenges are political, not technical or conceptual
- Patience: comfort with slow buy-in and anxieties; attention to ‘comfort zones’

Summing up…
- Cross-domain indicators exist at the individual level, but are expensive to collect beyond the status information in administrative data sets
- Public administrative data feature ‘deficit’ indicators: the anti’s
- At both individual & setting levels, the challenge is to develop and fund a parsimonious, reliable menu of indicators
- System-level indicators need development, which requires specifying a theory of change
- Process matters: stakeholders need to agree on the legitimacy and relevance of an indicator; no shortcuts

Biggest challenge facing evaluation of a comprehensive educational equity agenda
- Securing political and other supports for an integrated data system to monitor, assess and inform a complementary learning system

