- Number of slides: 15

Evaluating agricultural value chain programs: How we mix our methods
Marieke de Ruyter de Wildt
AEA Conference, San Antonio, 10-13 November 2010

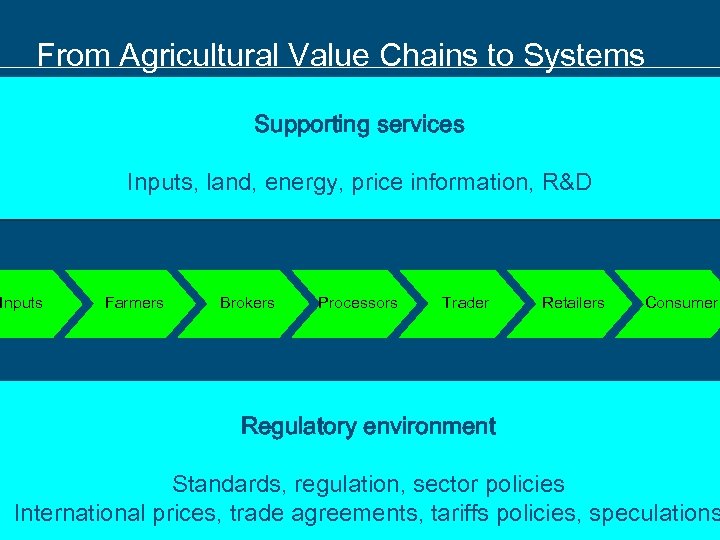

From Agricultural Value Chains to Systems

- Value chain: inputs → farmers → brokers → processors → traders → retailers → consumers
- Supporting services: inputs, land, energy, price information, R&D
- Regulatory environment: standards, regulation, sector policies
- International prices, trade agreements, tariffs, policies, speculation

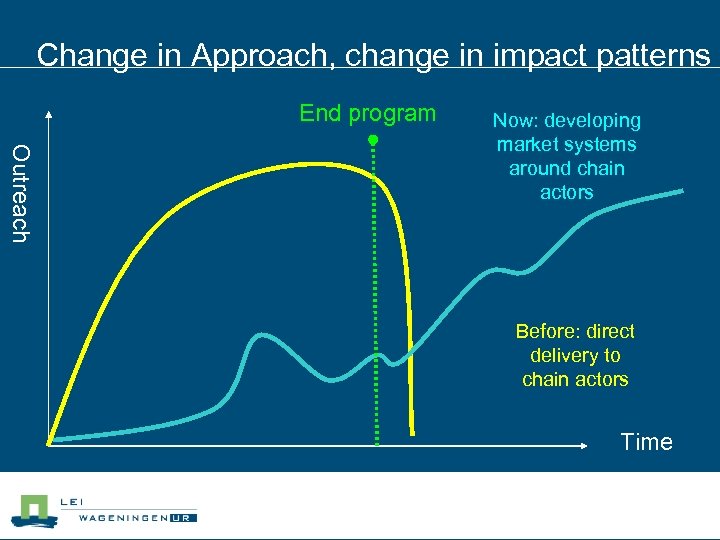

Change in Approach, change in impact patterns

[Figure: outreach (vertical axis) over time (horizontal axis), with the end of the program marked. Before: direct delivery to chain actors. Now: developing market systems around chain actors.]

Guideline 1: Critical Ingredients

Any evaluation should at least have:
- A logic model (beliefs, activities and results)
- Methods that can face scrutiny
- Insights that allow replication

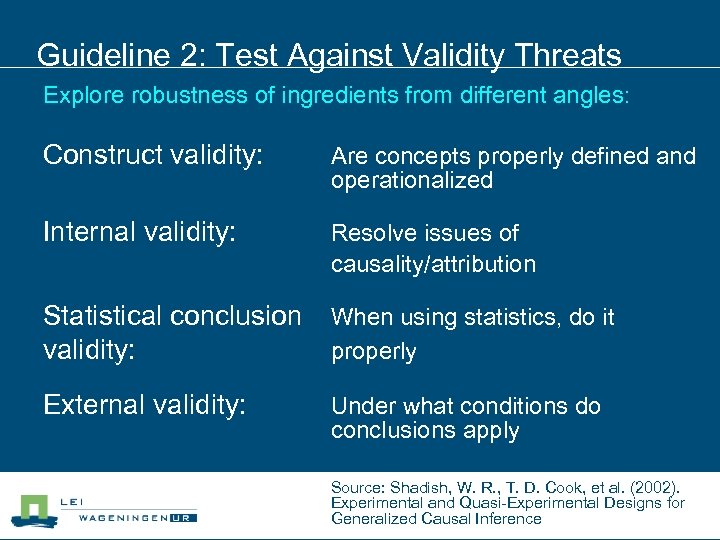

Guideline 2: Test Against Validity Threats

Explore the robustness of the ingredients from different angles:
- Construct validity: are concepts properly defined and operationalized?
- Internal validity: can issues of causality/attribution be resolved?
- Statistical conclusion validity: when statistics are used, are they used properly?
- External validity: under what conditions do the conclusions apply?

Source: Shadish, W. R., T. D. Cook, et al. (2002). Experimental and Quasi-Experimental Designs for Generalized Causal Inference.

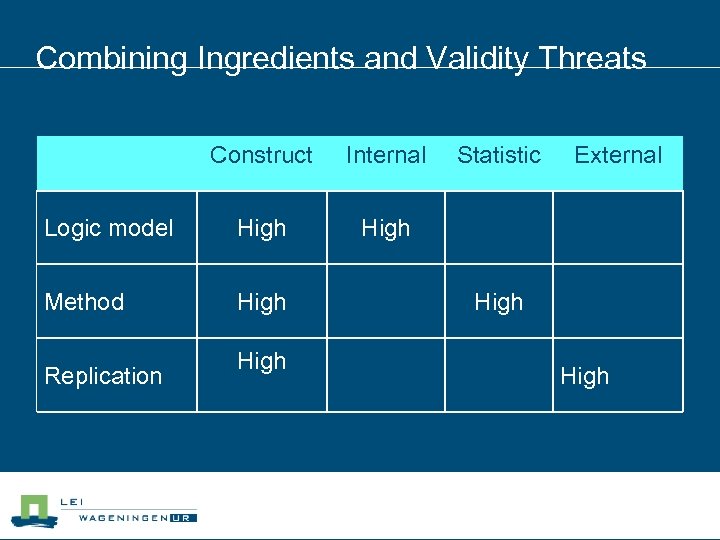

Combining Ingredients and Validity Threats

| Ingredient | Construct | Internal | Statistical | External |
|---|---|---|---|---|
| Logic model | High | High | | |
| Method | High | | High | |
| Replication | High | | | High |

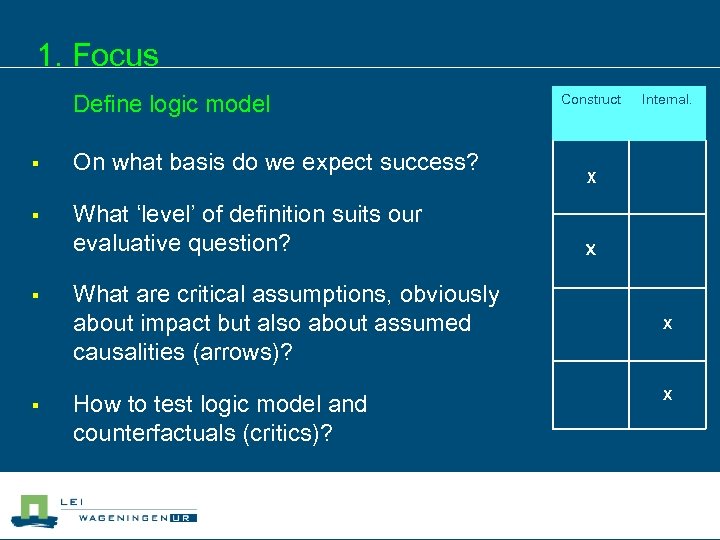

1. Focus: define the logic model

- On what basis do we expect success?
- What 'level' of definition suits our evaluative question?
- What are the critical assumptions, not only about impact but also about the assumed causal links (the arrows)?
- How do we test the logic model and counterfactuals (critics)?

Validity threats addressed: construct, internal

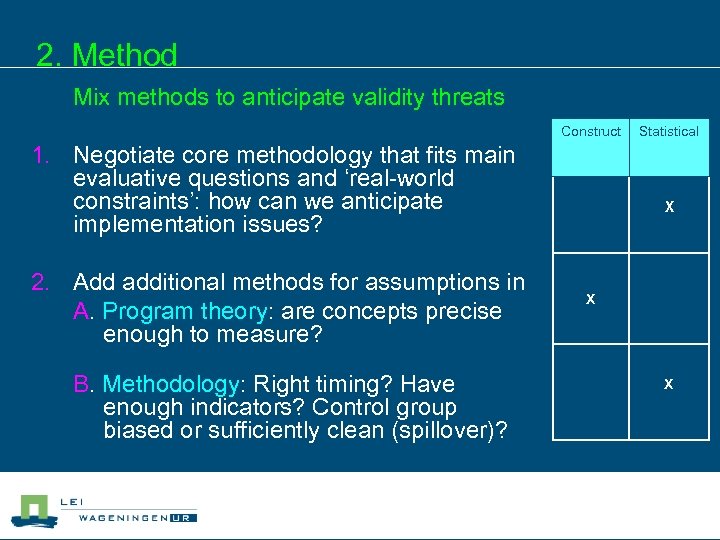

2. Method: mix methods to anticipate validity threats

1. Negotiate a core methodology that fits the main evaluative questions and 'real-world constraints': how can we anticipate implementation issues?
2. Add methods to test assumptions in:
   - A. Program theory: are concepts precise enough to measure?
   - B. Methodology: is the timing right? Do we have enough indicators? Is the control group biased, or sufficiently clean (no spillover)?

Validity threats addressed: construct, statistical
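
Statistical conclusion validity depends partly on having enough observations to detect the effect of interest; the coffee example below lists a power analysis among its methods. As a minimal sketch, assuming a simple comparison of mean outcomes between a treatment and a control group of equal size, the standard normal-approximation sample-size formula can be computed as follows. The function name, the default significance level and power, and the optional cluster adjustment (`cluster_size`, `icc`, applying the design effect 1 + (m - 1) * ICC for farmers sampled in clusters such as villages) are illustrative assumptions, not part of the original slides.

```python
import math
from statistics import NormalDist


def sample_size_per_arm(effect, sd, alpha=0.05, power=0.8,
                        cluster_size=1, icc=0.0):
    """Approximate number of units per group (treatment or control) needed
    to detect a difference in means of `effect`, using the normal
    approximation for a two-sided, two-sample test with equal group sizes.

    cluster_size and icc (intra-cluster correlation) are hypothetical
    parameters for clustered sampling; they inflate n by the design
    effect 1 + (cluster_size - 1) * icc.
    """
    if effect <= 0 or sd <= 0:
        raise ValueError("effect and sd must be positive")
    z = NormalDist()                      # standard normal distribution
    z_alpha = z.inv_cdf(1 - alpha / 2)    # critical value, two-sided test
    z_beta = z.inv_cdf(power)             # quantile for the desired power
    n = 2 * ((z_alpha + z_beta) * sd / effect) ** 2
    design_effect = 1 + (cluster_size - 1) * icc
    return math.ceil(n * design_effect)


# Illustrative call: detect a 0.2 standard-deviation difference.
print(sample_size_per_arm(0.2, 1.0))  # prints 393
```

Clustering raises the required sample (for example, `cluster_size=20, icc=0.05` nearly doubles it), and an exact t-test calculation gives slightly larger numbers than this normal approximation for small samples.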

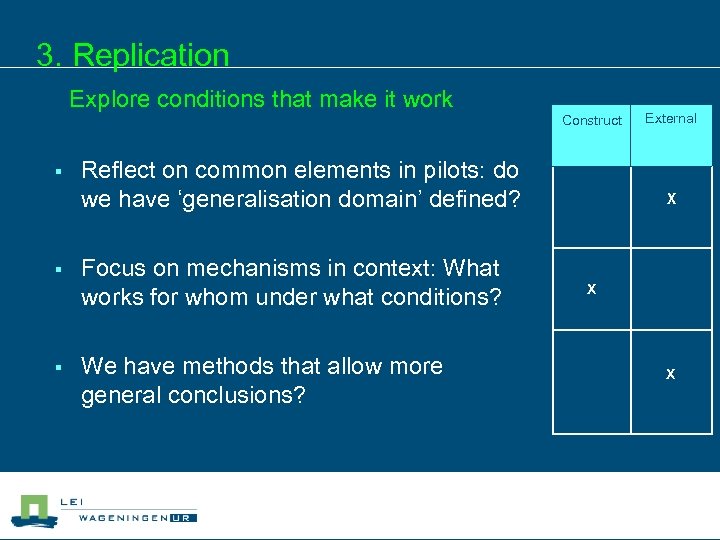

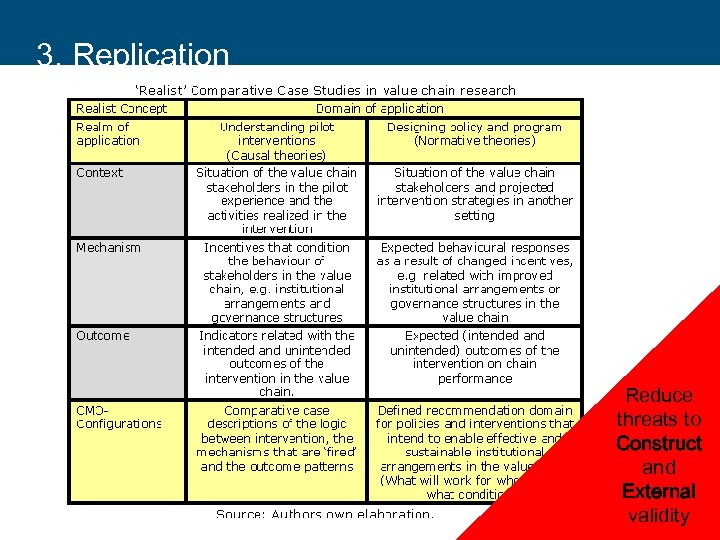

3. Replication: explore the conditions that make it work

- Reflect on common elements in pilots: have we defined a 'generalisation domain'?
- Focus on mechanisms in context: what works for whom, under what conditions?
- Do we have methods that allow more general conclusions?

Validity threats addressed: construct, external

Example: training coffee farmers in Vietnam

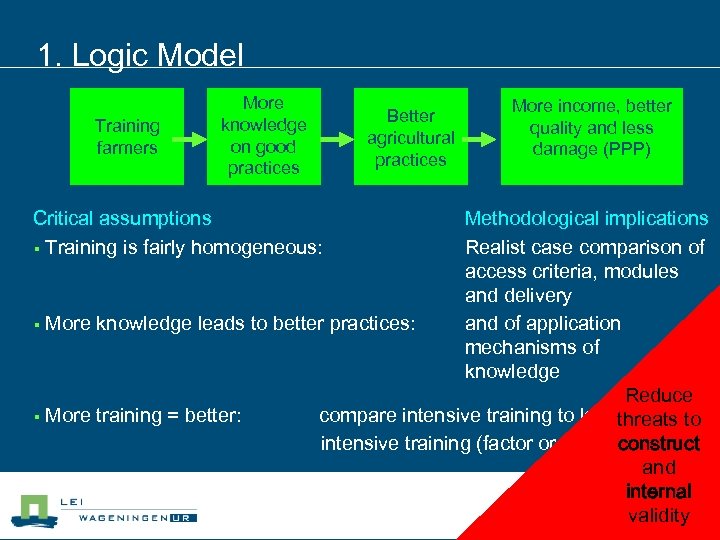

1. Logic Model

Training farmers → more knowledge of good practices → better agricultural practices → more income, better quality and less damage (PPP)

Critical assumptions and their methodological implications:
- Training is fairly homogeneous: realist case comparison of access criteria, modules and delivery
- More knowledge leads to better practices: realist case comparison of the mechanisms by which knowledge is applied
- More training = better: compare intensive training to less intensive training (factor or cluster analysis)

Reduces threats to construct and internal validity

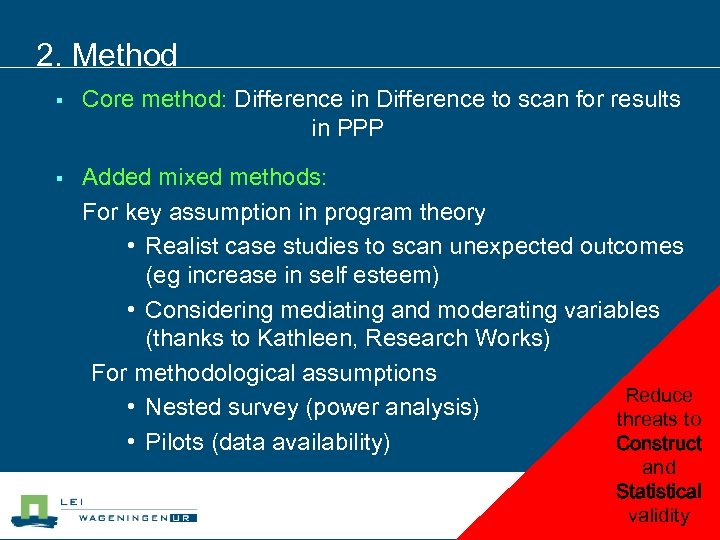

2. Method

- Core method: difference-in-differences, to scan for results in PPP
- Added mixed methods:
  - For key assumptions in the program theory:
    - Realist case studies to scan for unexpected outcomes (e.g. an increase in self-esteem)
    - Considering mediating and moderating variables (thanks to Kathleen, Research Works)
  - For methodological assumptions:
    - Nested survey (power analysis)
    - Pilots (data availability)

Reduces threats to construct and statistical validity
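
The slides name difference-in-differences (DiD) as the core method without giving estimation details. As a minimal sketch, the basic two-group, two-period estimator compares the change in the mean outcome of trained farmers with the change in a comparison group; under the parallel-trends assumption, the comparison group's change stands in for what trained farmers would have experienced without training. The function name and the numbers in the example are hypothetical and purely illustrative, not results from the Vietnam program.

```python
from statistics import mean


def diff_in_diff(treat_pre, treat_post, control_pre, control_post):
    """Basic 2x2 difference-in-differences estimate:
    (change in the treated group's mean) - (change in the comparison group's mean).
    Each argument is a list of outcome values (e.g. income) for one group and period.
    """
    treated_change = mean(treat_post) - mean(treat_pre)
    control_change = mean(control_post) - mean(control_pre)
    return treated_change - control_change


# Hypothetical outcome values (arbitrary units), for illustration only.
trained_baseline = [100, 110, 90]
trained_endline = [130, 140, 120]
comparison_baseline = [95, 105, 100]
comparison_endline = [110, 115, 105]

estimate = diff_in_diff(trained_baseline, trained_endline,
                        comparison_baseline, comparison_endline)
print(estimate)  # prints 20
```

Here trained farmers improve by 30 units and the comparison group by 10, so the DiD estimate is 20. In practice the same estimate is usually obtained from a regression with a treatment × period interaction, which makes it easier to add covariates and clustered standard errors; estimating it separately for intensively and less intensively trained farmers is one way to probe the 'more training = better' assumption.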

3. Replication

Reduces threats to construct and external validity

Conclusions

- One-method research may be good enough for publication in top journals, but it rarely generates convincing evidence for the agents involved
- Evaluation design needs to be:
  - Theory-based (to clarify the evaluative questions)
  - Mixed-method (to minimize validity threats)
  - Policy-relevant (to make sense of diversity)
- Considering validity threats up front helps to find a more robust mix of methods

Marieke.ruyter@wur.nl
