a4bf5318184c9af09297bf83833765f8.ppt

- Number of slides: 15

EU DataGrid Project: TestBed Status and Plans

Bob Jones, EU DataGrid Technical Coordinator, CERN

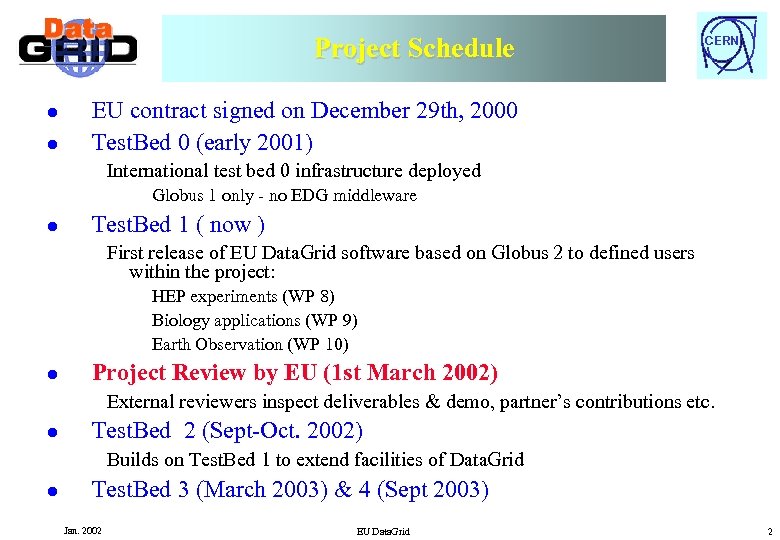

Project Schedule

- EU contract signed on December 29th, 2000
- TestBed 0 (early 2001)
  - International test bed 0 infrastructure deployed
  - Globus 1 only, no EDG middleware
- TestBed 1 (now)
  - First release of EU DataGrid software, based on Globus 2, to defined users within the project:
    - HEP experiments (WP 8)
    - Biology applications (WP 9)
    - Earth Observation (WP 10)
- Project Review by EU (1st March 2002)
  - External reviewers inspect deliverables & demo, partners' contributions etc.
- TestBed 2 (Sept-Oct. 2002)
  - Builds on TestBed 1 to extend facilities of DataGrid
- TestBed 3 (March 2003) & 4 (Sept 2003)

CERN, Jan. 2002, EU DataGrid

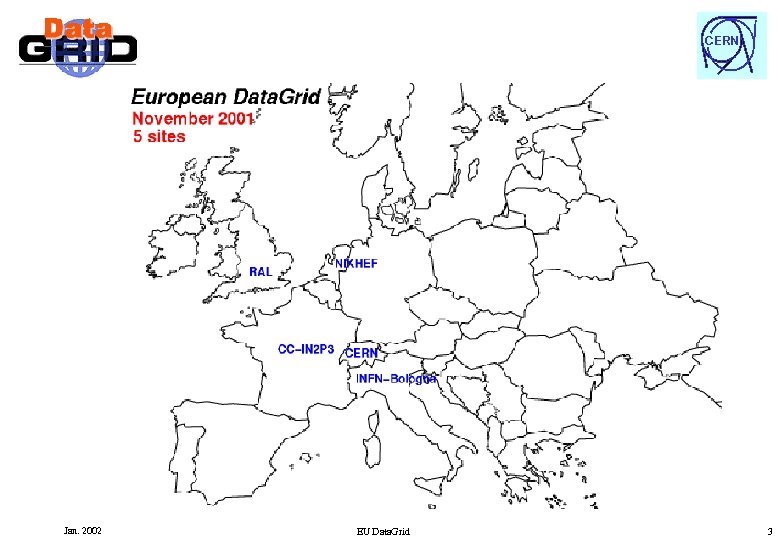


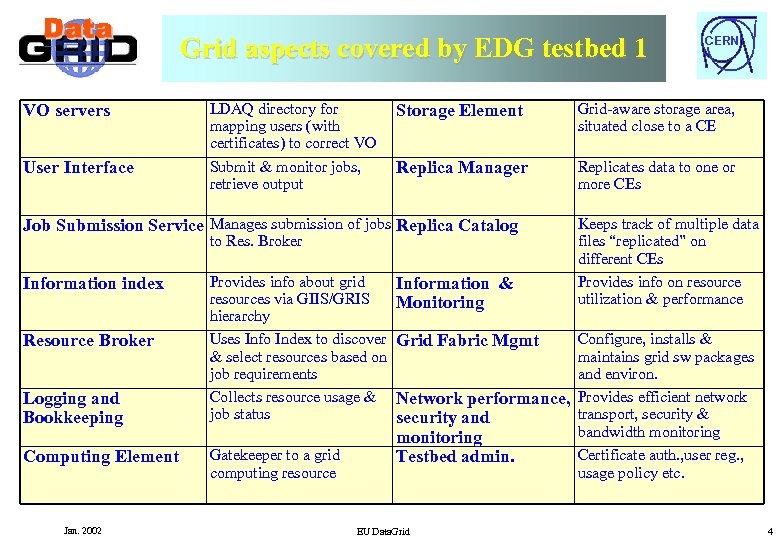

Grid aspects covered by EDG testbed 1

- VO servers: LDAP directory for mapping users (with certificates) to correct VO
- User Interface: Submit & monitor jobs, retrieve output
- Storage Element: Grid-aware storage area, situated close to a CE
- Replica Manager: Replicates data to one or more CEs
- Job Submission Service: Manages submission of jobs to Res. Broker
- Replica Catalog: Keeps track of multiple data files "replicated" on different CEs
- Information index: Provides info about grid resources via GIIS/GRIS hierarchy
- Resource Broker: Uses Info Index to discover & select resources based on job requirements
- Logging and Bookkeeping: Collects resource usage & job status
- Computing Element: Gatekeeper to a grid computing resource
- Information & Monitoring: Provides info on resource utilization & performance
- Grid Fabric Mgmt: Configures, installs & maintains grid sw packages and environ.
- Network performance, security and monitoring: Provides efficient network transport, security & bandwidth monitoring
- Testbed admin.: Certificate auth., user reg., usage policy etc.
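The Resource Broker's role (discover and select resources from the information index based on job requirements) can be illustrated with a minimal sketch. This is not the EDG implementation: the `ComputingElement` fields, the host names and the "most free CPUs" ranking rule are all assumptions chosen for illustration.

```python
from dataclasses import dataclass


@dataclass
class ComputingElement:
    """One illustrative record of the kind an information index might publish."""
    name: str
    free_cpus: int
    max_wall_minutes: int
    close_storage_elements: tuple


def select_resource(index, min_cpus, wall_minutes, needed_se=None):
    """Return the best matching CE, or None if no CE satisfies the job."""
    candidates = [
        ce for ce in index
        if ce.free_cpus >= min_cpus
        and ce.max_wall_minutes >= wall_minutes
        and (needed_se is None or needed_se in ce.close_storage_elements)
    ]
    # Hypothetical ranking: prefer the CE with the most free CPUs.
    return max(candidates, key=lambda ce: ce.free_cpus, default=None)


# Hypothetical index contents (not real testbed hosts).
index = [
    ComputingElement("ce-a.example.org", 4, 720, ("se-a.example.org",)),
    ComputingElement("ce-b.example.org", 16, 120, ("se-b.example.org",)),
    ComputingElement("ce-c.example.org", 8, 1440, ("se-a.example.org",)),
]

chosen = select_resource(index, min_cpus=2, wall_minutes=600,
                         needed_se="se-a.example.org")
print(chosen.name if chosen else "no match")
```

The filter step models "discover", the ranking step models "select"; the requirement on a close Storage Element reflects the slide's note that an SE is situated close to a CE.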

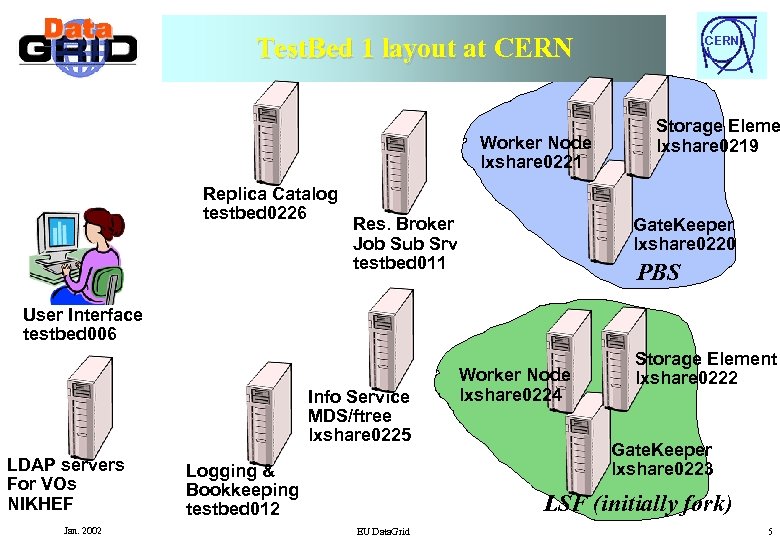

TestBed 1 layout at CERN

- User Interface: testbed006
- Res. Broker / Job Sub Srv: testbed011
- Logging & Bookkeeping: testbed012
- Replica Catalog: testbed0226
- Info Service (MDS/ftree): lxshare0225
- LDAP servers for VOs: NIKHEF
- GateKeeper: lxshare0220 (PBS)
- Worker Node: lxshare0221
- Storage Element: lxshare0219
- GateKeeper: lxshare0223 (LSF, initially fork)
- Worker Node: lxshare0224
- Storage Element: lxshare0222

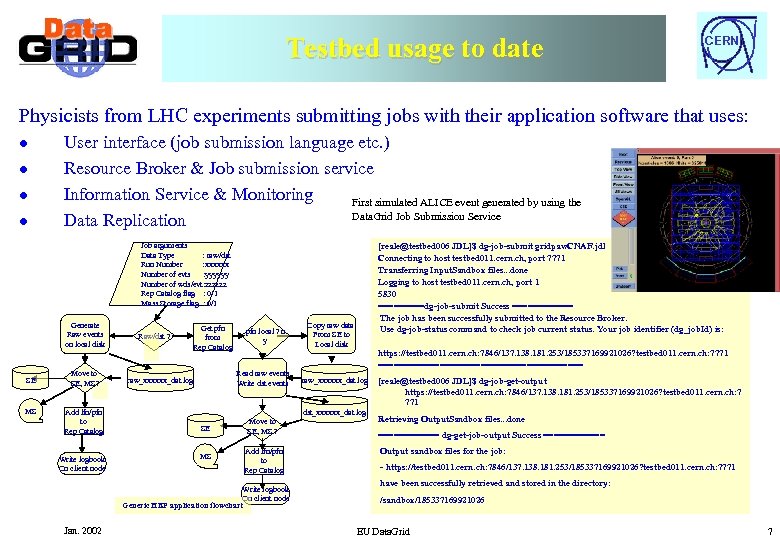

Testbed usage to date

Physicists from LHC experiments are submitting jobs with their application software that uses:

- User interface (job submission language etc.)
- Resource Broker & Job submission service
- Information Service & Monitoring
- Data Replication

First simulated ALICE event generated by using the DataGrid Job Submission Service.

Generic HEP application flowchart

- Job arguments
  - Data Type: raw/dst
  - Run Number: xxxxxx
  - Number of evts: yyyyyy
  - Number of wds/evt: zzzzzz
  - Rep Catalog flag: 0/1
  - Mass Storage flag: 0/1
- Raw/dst?
  - raw: Generate raw events on local disk
  - dst: Get pfn from Rep Catalog. pfn local? If not, copy raw data from SE to local disk. Read raw events, write dst events
- In both branches: Move to SE, MS? Add lfn/pfn to Rep Catalog. Write logbook on client node
- Files named in the diagram: raw_xxxxxx_dat.log, dst_xxxxxx_dat.log

Job submission session

    [reale@testbed006 JDL]$ dg-job-submit gridpaw.CNAF.jdl
    Connecting to host testbed011.cern.ch, port 7771
    Transferring InputSandbox files...done
    Logging to host testbed011.cern.ch, port 15830
    ===== dg-job-submit Success ======
    The job has been successfully submitted to the Resource Broker.
    Use dg-job-status command to check job current status.
    Your job identifier (dg_jobId) is:
    https://testbed011.cern.ch:7846/137.138.181.253/185337169921026?testbed011.cern.ch:7771
    ====================

    [reale@testbed006 JDL]$ dg-job-get-output https://testbed011.cern.ch:7846/137.138.181.253/185337169921026?testbed011.cern.ch:7771
    Retrieving OutputSandbox files...done
    ====== dg-get-job-output Success ======
    Output sandbox files for the job:
    - https://testbed011.cern.ch:7846/137.138.181.253/185337169921026?testbed011.cern.ch:7771
    have been successfully retrieved and stored in the directory:
    /sandbox/185337169921026
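The generic HEP application flowchart on this slide can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function `run_job`, the in-memory dictionary standing in for the Replica Catalog, the `se://` naming of physical files, and the reading of "Move to SE, MS?" as "always the SE, plus mass storage when the flag is set" are all hypothetical and not taken from the project software.

```python
def run_job(data_type, run_number, replica_catalog, local_files,
            use_catalog=True, use_mass_storage=False):
    """Walk the raw/dst branches of the flowchart; return the steps taken."""
    steps = []
    lfn = f"{data_type}_{run_number}"  # logical file name of this job's output

    if data_type == "raw":
        steps.append("generate raw events on local disk")
    elif data_type == "dst":
        # Look up the physical file name (pfn) of the raw input.
        pfn = replica_catalog[f"raw_{run_number}"]
        if pfn not in local_files:
            steps.append("copy raw data from SE to local disk")
            local_files.add(pfn)
        steps.append("read raw events")
        steps.append("write dst events")
    else:
        raise ValueError(f"unknown data type: {data_type!r}")

    # Steps shared by both branches.
    targets = ["SE"] + (["MS"] if use_mass_storage else [])
    steps.append("move output to " + ", ".join(targets))
    if use_catalog:
        replica_catalog[lfn] = f"se://{lfn}"  # hypothetical pfn scheme
        steps.append("add lfn/pfn to Rep Catalog")
    steps.append("write logbook on client node")
    return steps


catalog = {}          # stands in for the Replica Catalog
local_disk = set()    # pfns already present on the local disk

raw_steps = run_job("raw", "000001", catalog, local_disk)
dst_steps = run_job("dst", "000001", catalog, local_disk)
for step in raw_steps + dst_steps:
    print(step)
```

Running the dst job a second time with the same `local_disk` skips the copy step, which is the "pfn local? y" path of the diagram.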

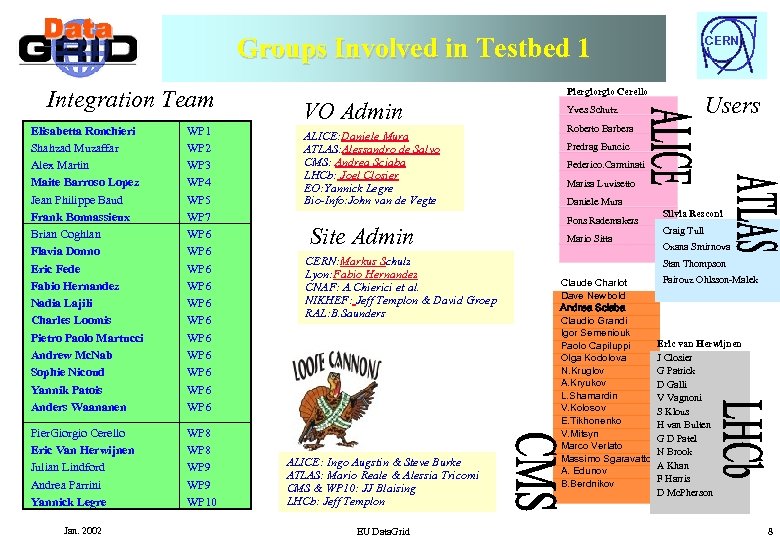

Groups Involved in Testbed 1

Integration Team

- WP 1 to WP 7: Elisabetta Ronchieri, Shahzad Muzaffar, Alex Martin, Maite Barroso Lopez, Jean Philippe Baud, Frank Bonnassieux, Brian Coghlan, Flavia Donno, Eric Fede, Fabio Hernandez, Nadia Lajili, Charles Loomis, Pietro Paolo Martucci, Andrew McNab, Sophie Nicoud, Yannik Patois, Anders Waananen
- WP 8 to WP 10: PierGiorgio Cerello, Eric Van Herwijnen, Julian Lindford, Andrea Parrini, Yannick Legre

VO Admin

- ALICE: Daniele Mura
- ATLAS: Alessandro de Salvo
- CMS: Andrea Sciaba
- LHCb: Joel Closier
- EO: Yannick Legre
- Bio-Info: John van de Vegte

Site Admin

- CERN: Markus Schulz
- Lyon: Fabio Hernandez
- CNAF: A. Chierici et al.
- NIKHEF: Jeff Templon & David Groep
- RAL: B. Saunders

Per-experiment assignments (group heading not recoverable from the slide)

- ALICE: Ingo Augstin & Steve Burke
- ATLAS: Mario Reale & Alessia Tricomi
- CMS & WP 10: JJ Blaising
- LHCb: Jeff Templon
- Piergio Cerello (assignment not recoverable)

Users

Yves Schutz, Roberto Barbera, Predrag Buncic, Federico Carminati, Marisa Luvisetto, Daniele Mura, Fons Rademakers, Mario Sitta, Silvia Resconi, Craig Tull, Oxana Smirnova, Stan Thompson, Claude Charlot, Dave Newbold, Andrea Sciaba, Claudio Grandi, Igor Semeniouk, Paolo Capiluppi, Olga Kodolova, N. Kruglov, A. Kryukov, L. Shamardin, V. Kolosov, E. Tikhonenko, V. Mitsyn, Marco Verlato, Massimo Sgaravatto, A. Edunov, B. Berdnikov, Fairouz Ohlsson-Malek, Eric van Herwijnen, J Closier, G Patrick, D Galli, V Vagnoni, S Klous, H van Bulten, G D Patel, N Brook, A Khan, F Harris, D McPherson

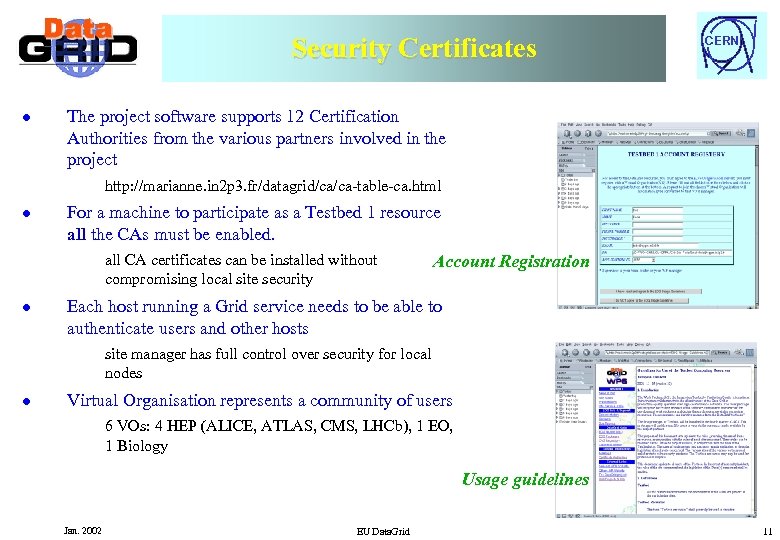

Security

- Certificates
  - The project software supports 12 Certification Authorities from the various partners involved in the project: http://marianne.in2p3.fr/datagrid/ca/ca-table-ca.html
  - For a machine to participate as a Testbed 1 resource, all the CAs must be enabled
  - All CA certificates can be installed without compromising local site security
- Account Registration
  - Each host running a Grid service needs to be able to authenticate users and other hosts
  - Site manager has full control over security for local nodes
- Virtual Organisation
  - Represents a community of users
  - 6 VOs: 4 HEP (ALICE, ATLAS, CMS, LHCb), 1 EO, 1 Biology
- Usage guidelines
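Two of the rules on this slide lend themselves to a small sketch: a node qualifies as a Testbed 1 resource only if all 12 CAs are enabled, and each user belongs to a Virtual Organisation. The code below is illustrative only. The CA names are placeholders (the slide does not list them), the certificate subjects are invented, and `missing_cas` and `vo_for` are hypothetical helpers, not part of any EDG or Globus tool.

```python
# Placeholder names for the 12 supported CAs; the real list is on the
# project's CA table page and is not reproduced here.
REQUIRED_CAS = frozenset(f"CA-{i:02d}" for i in range(1, 13))

# Hypothetical VO membership, keyed by VO name, holding certificate subjects.
VO_MEMBERS = {
    "ALICE": {"/O=Example/CN=Alice User"},
    "ATLAS": {"/O=Example/CN=Atlas User"},
}


def missing_cas(enabled_cas):
    """Return the CAs a node still has to enable, in sorted order."""
    return sorted(REQUIRED_CAS - set(enabled_cas))


def vo_for(subject):
    """Return the VO a certificate subject belongs to, or None."""
    for vo, members in VO_MEMBERS.items():
        if subject in members:
            return vo
    return None


node_cas = ["CA-01", "CA-02", "CA-03"]
print(len(missing_cas(node_cas)), "CAs still to enable")
print(vo_for("/O=Example/CN=Alice User"))
```

A site manager keeps control in this picture because the mapping from VO member to local account stays a local decision; the sketch stops at identifying the VO.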

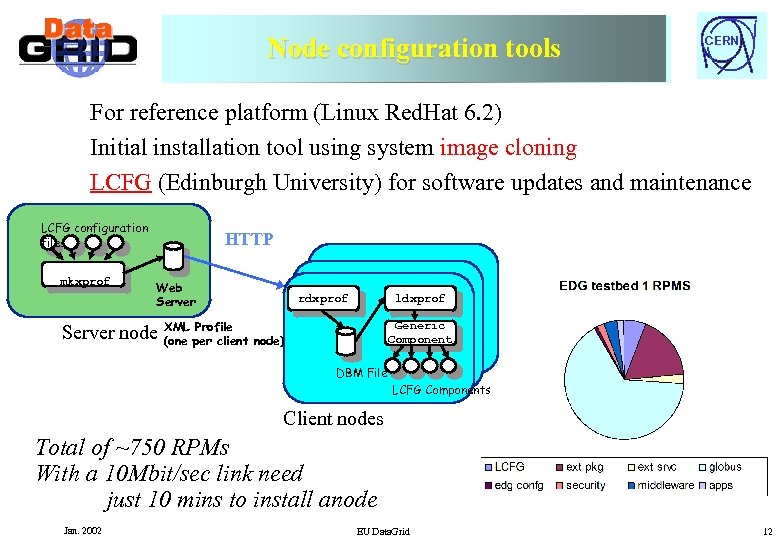

Node configuration tools

Node configuration and installation tools

- For reference platform (Linux RedHat 6.2)
- Initial installation tool using system image cloning
- LCFG (Edinburgh University) for software updates and maintenance
  - Server node: LCFG configuration files, mkxprof, XML Profile (one per client node), HTTP Web Server
  - Client nodes: rdxprof, DBM File, ldxprof, Generic Component, LCFG Components
- Total of ~750 RPMs
- With a 10 Mbit/sec link, need just 10 mins to install a node
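As a rough consistency check on the last two figures, the arithmetic below estimates how much data a 10 Mbit/sec link can carry in 10 minutes. It assumes the link is fully used for the whole install, decimal units (1 Mbit = 10^6 bits), and that download time dominates; the result is therefore an upper bound on the installed payload, not a measurement.

```python
LINK_MBIT_PER_S = 10   # link speed quoted on the slide
INSTALL_MINUTES = 10   # install time quoted on the slide
RPM_COUNT = 750        # approximate number of RPMs quoted on the slide

total_bits = LINK_MBIT_PER_S * 1_000_000 * INSTALL_MINUTES * 60
total_mbytes = total_bits / 8 / 1_000_000

print(f"max payload: {total_mbytes:.0f} MB")
print(f"average per RPM: {total_mbytes / RPM_COUNT:.1f} MB")
```

Under these assumptions the link carries at most 750 MB, which works out to about 1 MB per RPM on average.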

Lessons learnt from testbed 1

- The raw ingredients exist; we just need to be sure of the recipe
  - Sufficient expertise exists in the different institutes to cover all aspects of the project
  - Expertise and enthusiasm need to be channeled using an agreed framework
  - CERN central role underestimated and under-resourced
- Integration and deployment is a labour-intensive task
  - More planning is needed, and WP 6 (sw integration) needs reinforcement (especially at CERN)
  - Better done in small steps using iterative releases
  - Support by and relationship with Globus developers is very important
- International aspects
  - Already an international testbed; need to agree plans with similar US activities
  - Underestimated the administrative effort involved in running an international testbed
- Need more emphasis on testing
  - More unit & integration testing
  - Middleware WPs need to develop a test plan (also WP 6 for external packages & integration tests) and involve applications from an early stage

Iterative Releases

- Planned intermediate release schedule
  - TestBed 1: October 2001
  - Release 1.1: January 2002
  - Release 1.2: March 2002
  - Release 1.3: May 2002
  - Release 1.4: July 2002
  - TestBed 2: September 2002
- Each release includes
  - feedback from use of previous release by application groups
  - agreed high-priority improvements/extensions
  - use of software infrastructure
  - feeds into architecture group
- Similar schedule will be organised for 2003

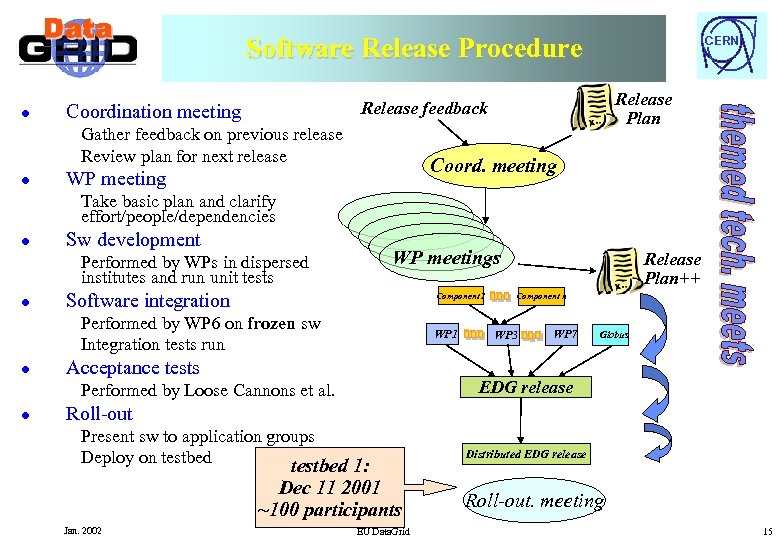

Software Release Procedure

- Coordination meeting
  - Gather feedback on previous release
  - Review plan for next release
- WP meetings
  - Take basic plan and clarify effort/people/dependencies
- Sw development
  - Performed by WPs in dispersed institutes, which also run unit tests
- Software integration
  - Performed by WP 6 on frozen sw
  - Integration tests run
- Acceptance tests
  - Performed by Loose Cannons et al.
- Roll-out
  - Present sw to application groups
  - Deploy on testbed
  - TestBed 1 roll-out meeting: Dec 11 2001, ~100 participants

Diagram labels: Release feedback, Coord. meeting, Release Plan, WP meetings, Release Plan++, Component 1 to Component n (WP 1, WP 3, WP 7, Globus), EDG release, Distributed EDG release, Roll-out meeting

Development & Production testbeds

- Development
  - Initial set of 5 sites will keep small cluster of PCs for development purposes, to test new versions of the software, configurations etc.
- Production
  - More stable environment for use by application groups
    - more sites
    - more nodes per site (grow to meaningful size at major centres)
    - more users per VO
  - Usage already foreseen in Data Challenge schedules for LHC experiments
    - harmonize release schedules
- Participating in InterGrid discussions on testbed organisation
  - Antonia Ghiselli, Bob Jones, Francesco Prelz
  - Analysis of interface with US testbeds to be performed by end of April (GriPhyN/PPDG meeting)

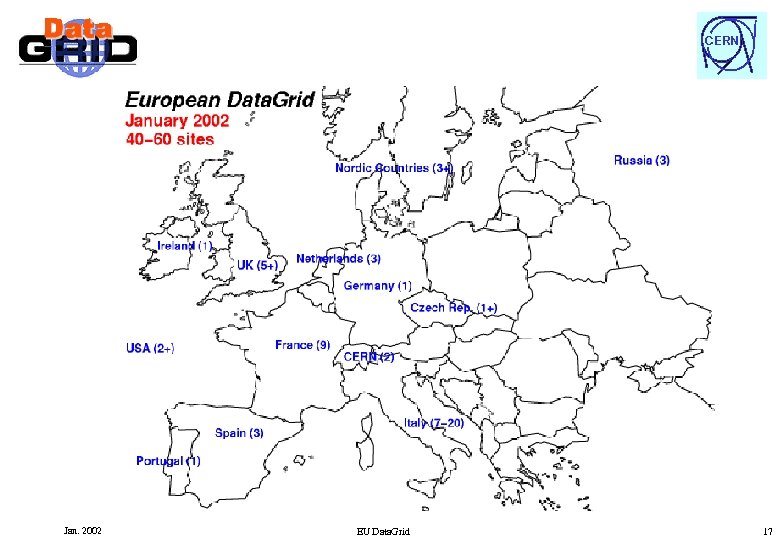


Future Plans

- Prepare for first project EU review, 1st March 2002 ("managed panic")
- Expand testbed
  - More nodes per site, more sites (including US), more users
- Evolve architecture and software on the basis of TestBed usage and feedback from users
  - Closer integration of the software components
  - Improve software infrastructure toolset and test suites
  - Look for convergence with PPDG/GriPhyN architecture
- Enhance synergy with US via DataTAG-iVDGL and InterGrid
- Address shortcomings in plan by collaborating with other EU projects (DataTAG, GridSTART, CrossGrid)
- Promote early standards adoption with participation in GGF WGs
- Final software release by end of 2003
