129216a83cca80dc443022a1504d5397.ppt

- Number of slides: 46

Ethics and Technology
What You Need to Know About eDiscovery: Trends and TAR
NYCLA 2016

Agenda: eDiscovery Technology Assisted Review
– eDiscovery Evolution: Case Law on TAR
– State of Information Technology: Where is the data (ESI)?
– eDiscovery Software Evolution: How do you collect and load the data?
– eDiscovery TAR and its value add: How do you efficiently and effectively search and produce the data?
NYCLA 3/18/2018 2

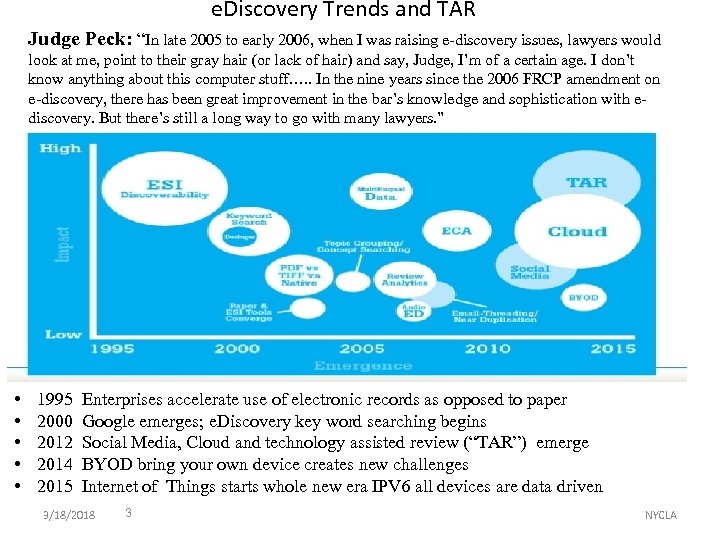

Judge Peck: “In late 2005 to early 2006, when I was raising e-discovery issues, lawyers would look at me, point to their gray hair (or lack of hair) and say, ‘Judge, I’m of a certain age. I don’t know anything about this computer stuff.’ … In the nine years since the 2006 FRCP amendment on e-discovery, there has been great improvement in the bar’s knowledge and sophistication with e-discovery. But there’s still a long way to go with many lawyers.”
• 1995: Enterprises accelerate use of electronic records as opposed to paper
• 2000: Google emerges; eDiscovery keyword searching begins
• 2012: Social media, cloud and technology assisted review (“TAR”) emerge
• 2014: BYOD (bring your own device) creates new challenges
• 2015: Internet of Things starts a whole new era; with IPv6, all devices are data-driven

2012: eDiscovery Evolution: Case Law on TAR

Da Silva Moore, et al. v. Publicis Groupe, No. 11 Civ. 1279 (ALC)(AJP), 2012 WL 607412 (S.D.N.Y. Feb. 24, 2012)
Judge: U.S. Magistrate Judge Andrew J. Peck
Holding: The court formally approved the use of TAR to locate responsive documents. The court also held that Federal Rule of Evidence 702 and the Daubert standard for the admissibility of expert testimony do not apply to discovery search methods.
Significance: This is the first judicial opinion approving the use of TAR in e-discovery. “What the Bar should take away from this Opinion is that computer-assisted review is an available tool and should be seriously considered for use in large-data-volume cases where it may save the producing party (or both parties) significant amounts of legal fees in document review. Counsel no longer have to worry about being the ‘first’ or ‘guinea pig’ for judicial acceptance of computer-assisted review.”

Global Aerospace, Inc. v. Landow Aviation, L.P., No. CL 61040 (Va. Cir. Ct. Apr. 23, 2012)
Judge: Circuit Judge James H. Chamblin
Holding: Over plaintiffs’ objection, the court ordered that defendants may use predictive coding to process and produce ESI, without prejudice to plaintiffs later raising issues as to the completeness of the production or the ongoing use of predictive coding.
Significance: First state court case expressly approving the use of TAR.

Da Silva Moore, et al. v. Publicis Groupe, 2012 WL 1446534 (S.D.N.Y. Apr. 26, 2012)
Judge: U.S. District Judge Andrew L. Carter Jr.
Holding: The court affirmed Magistrate Judge Peck’s order approving the use of TAR.
Significance: Judge Carter’s affirmance further cemented the significance of Judge Peck’s approval of TAR.

Nat’l Day Laborer Org. Network v. U.S. Immigration & Customs Enforcement Agency, No. 10 Civ. 2488 (SAS), 2012 WL 2878130 (S.D.N.Y. July 13, 2012)
Judge: U.S. District Judge Shira Scheindlin
Holding: In an action under the federal Freedom of Information Act, the court held that the federal government’s searches for responsive documents were inadequate because they failed to properly employ modern search technologies.
Significance: Judge Scheindlin urged the government to “learn to use twenty-first century technologies,” and she discussed predictive coding as representative of “emerging best practices” in compensating for the shortcomings of simple keyword search.

2013: eDiscovery Evolution: Case Law on TAR

Gabriel Techs. Corp. v. Qualcomm Inc., No. 08 CV 1992 AJB (MDD), 2013 WL 410103 (S.D. Cal. Feb. 1, 2013)
Judge: U.S. District Judge Anthony J. Battaglia
Holding: Following entry of judgment in their favor in a patent infringement case, defendants filed a motion seeking attorneys’ fees, including $2.8 million “attributable to computer-assisted, algorithm-driven document review.” The court found that amount reasonable and approved it.
Significance: The costs of TAR could be recovered as part of the costs and attorneys’ fees awarded to the prevailing party in patent litigation. The court found the decision to undertake a more efficient and less time-consuming method of document review reasonable under the circumstances: “In this case, the nature of the Plaintiffs’ claims resulted in significant discovery and document production, and seemingly reduced the overall fees and attorney hours required by performing electronic document review at the outset. Thus, the Court finds the requested amount of $2,829,349.10 to be reasonable.”

Gordon v. Kaleida Health, No. 08-CV-378S(F), 2013 WL 2250579 (W.D.N.Y. May 21, 2013)
Judge: U.S. Magistrate Judge Leslie G. Foschio
Holding: Impatient with the parties’ year-long attempts to agree on how to achieve a cost-effective review of some 200,000-300,000 emails, the magistrate judge suggested they try predictive coding. That led to a dispute over the extent to which the parties should meet and confer to agree on a TAR protocol. Because the parties ultimately agreed to meet, the judge never decided any substantive TAR issue.
Significance: It was the judge, not the litigants, who suggested the use of predictive coding. The court expressed dissatisfaction with the parties’ lack of progress toward completing the review and production of Defendants’ e-mails using the keyword search method, and pointed to the availability of predictive coding, a computer-assisted ESI review and production method, directing the parties’ attention to Magistrate Judge Peck’s recent decision in Moore v. Publicis Groupe & MSL Group, 287 F.R.D. 182 (S.D.N.Y. 2012), approving the use of predictive coding in a case involving over 3 million e-mails.

In re Biomet M2a Magnum Hip Implant Prods. Liab. Litig., 2013 U.S. Dist. LEXIS 172570 (N.D. Ind. Aug. 21, 2013)
Judge: U.S. District Judge Robert L. Miller
Holding: The court ruled that defendants need not identify which of the documents they had already produced were used to train their TAR algorithm.
Significance: Because defendants had already complied with their obligation under the FRCP to produce relevant documents, the court held that it had no authority to compel them to identify the specific documents they had used as seeds. Even so, the court said that it was troubled by the defendants’ lack of cooperation. “The Steering Committee knows of the existence and location of each discoverable document Biomet used in the seed set: those documents have been disclosed to the Steering Committee. The Steering Committee wants to know, not whether a document exists or where it is, but rather how Biomet used certain documents before disclosing them. Rule 26(b)(1) doesn’t make such information disclosable.”
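
Biomet turned on how a producing party used its seed set to train a TAR algorithm. To make "training on a seed set" concrete, here is a minimal sketch in Python. It assumes a deliberately simple term-weighting scorer; it is not any vendor's algorithm and not the method Biomet used, and the function names are hypothetical.

```python
from collections import Counter


def tokenize(text: str) -> set[str]:
    """Lower-case a document and return its distinct words (a naive tokenizer)."""
    return set(text.lower().split())


def train(seed_set: list[tuple[str, bool]]) -> Counter:
    """Learn one weight per term from reviewer-coded seed documents.

    Terms seen in relevant seeds gain weight; terms seen in
    non-relevant seeds lose weight.
    """
    weights: Counter = Counter()
    for text, is_relevant in seed_set:
        for term in tokenize(text):
            weights[term] += 1 if is_relevant else -1
    return weights


def score(weights: Counter, text: str) -> int:
    """Sum the learned weights of a document's terms (unseen terms count 0)."""
    return sum(weights[term] for term in tokenize(text))


def rank(weights: Counter, docs: list[str]) -> list[str]:
    """Order unreviewed documents from most to least likely relevant."""
    return sorted(docs, key=lambda d: score(weights, d), reverse=True)


# Hypothetical seed set coded by a reviewer: (document text, relevant?)
seed = [
    ("hip implant failure report", True),
    ("cafeteria lunch menu", False),
]
unreviewed = ["lunch menu for friday", "implant failure analysis"]
print(rank(train(seed), unreviewed))
```

Commercial TAR tools typically use more sophisticated models, but the principle is similar: the reviewer's coding of the seed documents determines how the remaining documents are ranked, which is why requesting parties care about what went into the seed set.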

2014: eDiscovery Evolution: Case Law on TAR

FDIC v. Bowden, No. CV413-245, 2014 WL 2548137 (S.D. Ga. June 6, 2014)
Judge: U.S. Magistrate Judge G.R. Smith
Holding: In a case involving some 2.01 terabytes of data, or 153.6 million pages of documents, the court suggested that the parties consider using TAR.
Significance: The court recognized that TAR is more accurate than human review or keyword searching: “Predictive coding has emerged as a far more accurate means of producing responsive ESI in discovery. Studies show it is far more accurate than human review or keyword searches which have their own limitations.” (Quoting Progressive Cas. Ins. Co. v. Delaney, 2014 WL 2112927, at *8 (D. Nev. May 20, 2014)).

Bridgestone Americas, Inc. v. Int’l Bus. Machs. Corp., No. 3:13-1196 (M.D. Tenn. July 22, 2014)
Judge: U.S. Magistrate Judge Joe B. Brown
Holding: The court approved the plaintiff’s request to use predictive coding to review over 2 million documents, over defendant’s objections that the request was an unwarranted change to the original case management order and that it would be unfair to use predictive coding after an initial screening had been done with search terms.
Significance: The opinion suggests that e-discovery should be a fluid and transparent process and that principles of efficiency and proportionality may justify a party’s decision to “switch horses in midstream,” as the magistrate judge wrote. “In the final analysis, the use of predictive coding is a judgment call, hopefully keeping in mind the exhortation of Rule 26 that discovery be tailored by the court to be as efficient and cost-effective as possible. In this case, we are talking about millions of documents to be reviewed with costs likewise in the millions. There is no single, simple, correct solution possible under these circumstances.”
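
Taken together, the two FDIC v. Bowden figures imply a rough page density, a useful sanity check when estimating review volumes. A back-of-the-envelope sketch in Python, assuming decimal units (1 TB = 1,000 GB = 10^12 bytes), matching the unit definitions used later in this deck; the ratios are derived here and were not stated by the court.

```python
# Figures quoted from FDIC v. Bowden (S.D. Ga. 2014).
terabytes = 2.01
pages = 153.6e6

# Assumption: decimal units, 1 TB = 1,000 GB = 10**12 bytes.
gigabytes = terabytes * 1_000
pages_per_gb = pages / gigabytes
bytes_per_page = terabytes * 10**12 / pages

print(f"{pages_per_gb:,.0f} pages per GB")
print(f"{bytes_per_page:,.0f} bytes per page")
```

Page counts per gigabyte vary widely with file type (email, spreadsheets, images), so a ratio derived from one case is only a starting point for another.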

2015: eDiscovery Evolution: Case Law on TAR

Rio Tinto PLC v. Vale S.A., ___ F.R.D. ___, 2015 WL 872294 (S.D.N.Y. Mar. 2, 2015)
Judge: U.S. Magistrate Judge Andrew J. Peck
Taking up technology-assisted review (“TAR”), Judge Peck declares that “it is now black letter law that where the producing party wants to utilize TAR for document review, courts will permit it.” Open issues nonetheless remain, including, as Judge Peck noted, “how transparent and cooperative the parties need to be with respect to the seed or training set(s).” The opinion did not resolve that question, because the parties had agreed to “a protocol that discloses all non-privileged documents in the control sets,” but it offers notable commentary on it. Recognizing “the interest within the e-discovery community about TAR cases and protocols,” Judge Peck discusses “seed set transparency” and whether TAR should be held to a higher standard than keywords or manual review.
• Seed set transparency: after surveying cases that have addressed the use of TAR, Judge Peck observed that “[i]f the TAR methodology uses ‘continuous active learning’ (CAL) (as opposed to simple passive learning (SPL) or simple active learning (SAL)), the contents of the seed set is much less significant.”
• Having determined that he need not rule on seed set transparency in this case, Judge Peck added that “[i]n any event, while I generally believe in cooperation, requesting parties can insure that training and review was done appropriately by other means, such as statistical estimation of recall at the conclusion of the review as well as by whether there are gaps in the production, and quality control review of samples from the documents categorized as non-responsive.”
• “…it is inappropriate to hold TAR to a higher standard than keywords or manual review. Doing so discourages parties from using TAR for fear of spending more in motion practice than the savings from using TAR for review.”
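
Judge Peck mentions “statistical estimation of recall at the conclusion of the review” and “quality control review of samples from the documents categorized as non-responsive.” One common way to combine the two is an elusion test: sample the documents the review set aside, count how many turn out to be responsive, and project that rate onto the whole discard pile. A minimal sketch in Python, assuming a simple random sample and returning only a point estimate; the function name and the numbers in the example are hypothetical.

```python
import random


def estimate_recall(produced_responsive: int,
                    null_set: list[bool],
                    sample_size: int,
                    seed: int = 0) -> float:
    """Point estimate of recall via an elusion sample.

    produced_responsive: responsive documents found and produced by the review.
    null_set: documents coded non-responsive; each entry is what a QC reviewer
              would code it on second look (True = actually responsive).
    sample_size: how many null-set documents the QC reviewer examines.
    """
    rng = random.Random(seed)                    # reproducible sample
    sample = rng.sample(null_set, sample_size)   # simple random sample, no replacement
    elusion_rate = sum(sample) / sample_size     # share of responsive docs missed
    estimated_missed = elusion_rate * len(null_set)
    return produced_responsive / (produced_responsive + estimated_missed)


# Hypothetical review: 9,000 responsive documents produced; 100,000 set aside,
# of which 1,000 (1%) are in fact responsive, so true recall is 90%.
null_set = [True] * 1_000 + [False] * 99_000
print(f"Estimated recall: {estimate_recall(9_000, null_set, 1_500):.1%}")
```

A real protocol would usually fix the sample size in advance and report a confidence interval around the estimate, not just the point value computed here.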

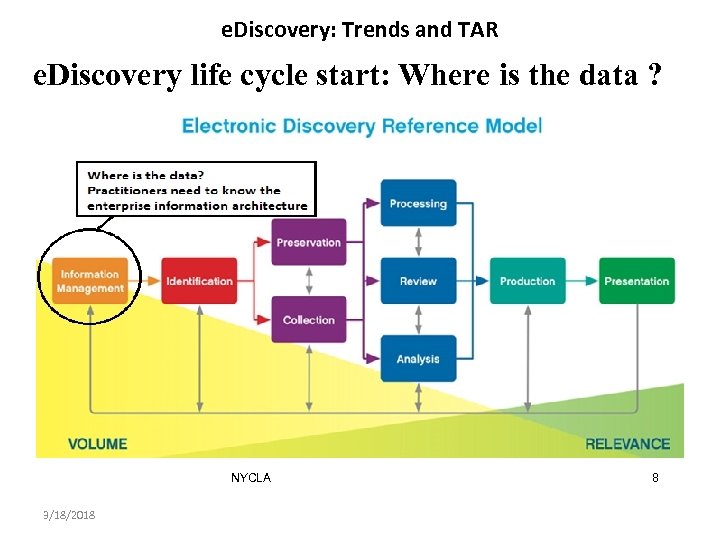

eDiscovery life cycle start: Where is the data?

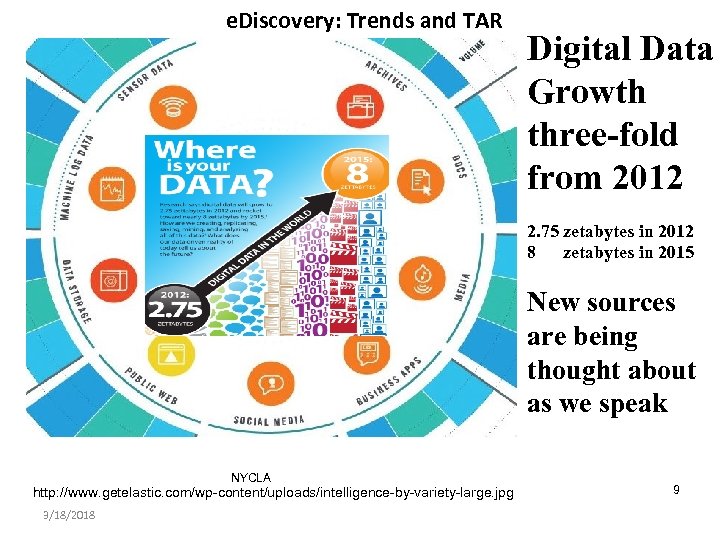

Digital Data Growth: nearly three-fold since 2012
• 2.75 zettabytes in 2012
• 8 zettabytes in 2015
• New sources are being thought about as we speak
http://www.getelastic.com/wp-content/uploads/intelligence-by-variety-large.jpg
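
The two data points on this slide imply an annual growth rate. Treating growth as compounding evenly over the three years from 2012 to 2015 (an assumption; the slide gives only the endpoints), the rate r satisfies:

```latex
\[
  r = \left(\frac{8\ \text{ZB}}{2.75\ \text{ZB}}\right)^{1/3} - 1
    \approx 2.909^{1/3} - 1
    \approx 0.428
\]
```

That is roughly 43% growth per year, or about a 2.9-fold increase overall.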

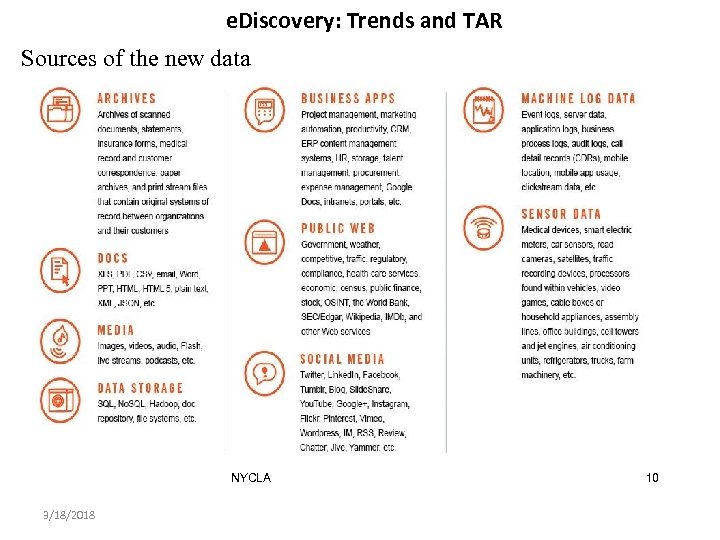

Sources of the new data

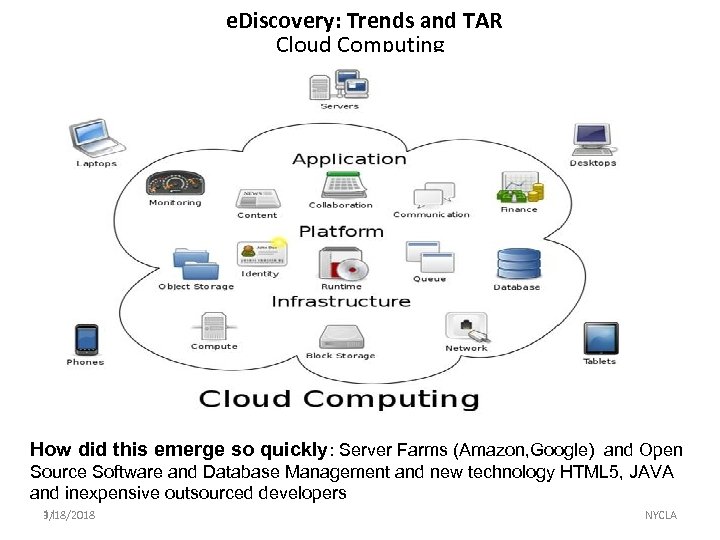

Cloud Computing
How did this emerge so quickly?
• Server farms (Amazon, Google)
• Open source software and database management
• New technology: HTML5, Java
• Inexpensive outsourced developers

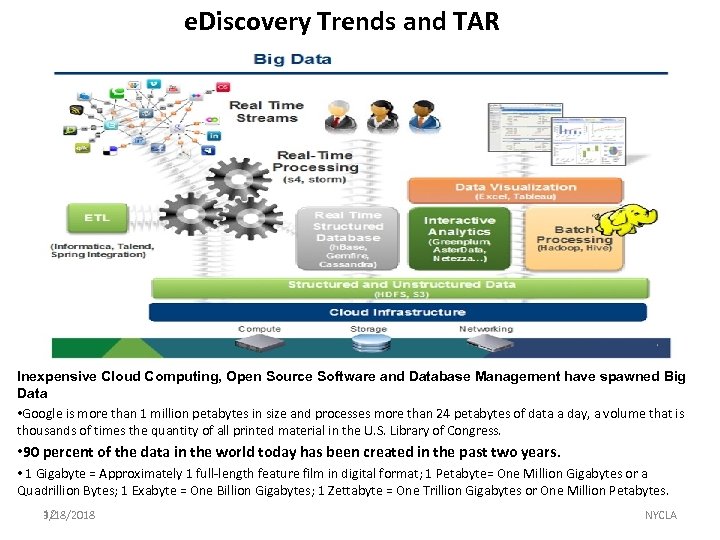

Big Data: Google, Marketers, Government
Inexpensive cloud computing, open source software and database management have spawned Big Data.
• Google is more than 1 million petabytes in size and processes more than 24 petabytes of data a day, a volume that is thousands of times the quantity of all printed material in the U.S. Library of Congress.
• 90 percent of the data in the world today has been created in the past two years.
• 1 gigabyte = approximately 1 full-length feature film in digital format; 1 petabyte = one million gigabytes, or a quadrillion bytes; 1 exabyte = one billion gigabytes; 1 zettabyte = one trillion gigabytes, or one million petabytes.
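
The unit definitions above are all powers of ten, so converting between them is a single ratio. A minimal Python sketch, assuming decimal (SI) units as the slide uses; operating systems and some tools report binary units instead (1 GiB = 2^30 bytes), which give somewhat different numbers. The dictionary and function names are hypothetical.

```python
# Decimal (SI) storage units, matching the definitions on this slide.
UNITS = {
    "byte": 1,
    "gigabyte": 10**9,
    "terabyte": 10**12,
    "petabyte": 10**15,
    "exabyte": 10**18,
    "zettabyte": 10**21,
}


def convert(amount: float, from_unit: str, to_unit: str) -> float:
    """Convert a quantity between decimal storage units."""
    return amount * UNITS[from_unit] / UNITS[to_unit]


print(convert(1, "petabyte", "gigabyte"))   # one million gigabytes
print(convert(1, "zettabyte", "petabyte"))  # one million petabytes
print(convert(24, "petabyte", "terabyte"))  # Google's daily volume, per the slide
```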

Mobile Devices
• A mobile device is a hand-sized computing device with a display screen and touch input or a miniature keyboard. iPhone, Android, iPad and BlackBerry are common mobile devices.
• Smartphones offer advanced capabilities beyond those of an average cell phone, often with PC-like functionality. A mobile device (phone, tablet) runs a complete operating system that provides a standardized interface and platform for application developers, with an array of input and output options including keyboard, touch screen, voice recognition and camera/scanner.
• With this array of capabilities and their portability, these devices are in some ways more capable than a laptop or desktop computer.
MOBILE DEVICE FUNCTIONALITY
• Wireless Internet
• Audio/Video
• Location-Based Services
• User Interface
• Keypad
• Touch Screen
• Bluetooth
• Computer and SQLite database
• Camera
• GPS location finder & supporting apps
• Media Player
• Voice Communication

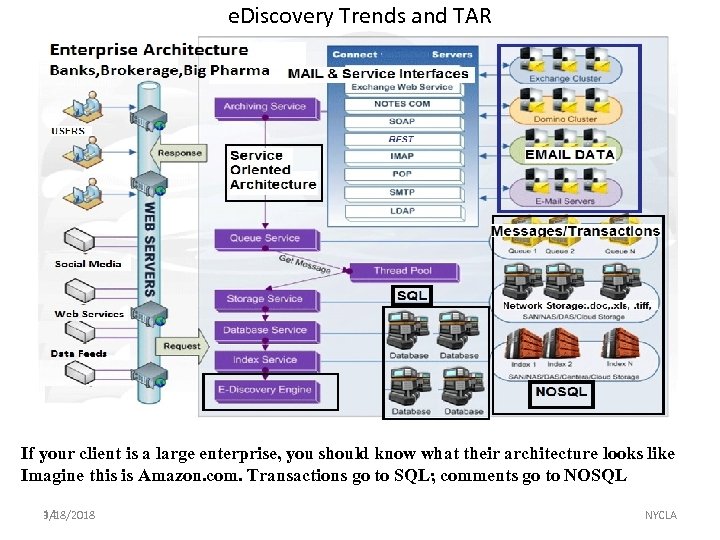

If your client is a large enterprise, you should know what its architecture looks like.
Imagine this is Amazon.com: transactions go to SQL; comments go to NoSQL.
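
To illustrate the split this slide describes, here is a minimal Python sketch that writes transactions to a relational table and comments to a schema-less store. Assumptions: an in-memory SQLite database stands in for the enterprise SQL server, and a plain dictionary of JSON strings stands in for a NoSQL document store; all table, field and function names are hypothetical.

```python
import json
import sqlite3

# Relational store for transactions: fixed columns, one row per order.
sql = sqlite3.connect(":memory:")
sql.execute(
    "CREATE TABLE orders (order_id INTEGER PRIMARY KEY, customer TEXT,"
    " product TEXT, amount REAL, order_date TEXT)"
)

# Stand-in for a NoSQL document store (hypothetical): schema-less JSON
# documents keyed by id.
documents: dict[str, str] = {}


def record_order(order_id, customer, product, amount, order_date):
    """Transactions go to SQL."""
    sql.execute("INSERT INTO orders VALUES (?, ?, ?, ?, ?)",
                (order_id, customer, product, amount, order_date))


def record_comment(comment_id, **fields):
    """Comments go to the document store; each may carry different fields."""
    documents[comment_id] = json.dumps(fields)


record_order(1, "alice", "kettle", 29.99, "2015-06-01")
record_comment("c1", customer="alice", product="kettle", text="Leaks!", stars=1)
record_comment("c2", customer="bob", text="Fast shipping", photos=["img1.jpg"])

print(sql.execute("SELECT COUNT(*) FROM orders").fetchone()[0])
print(sorted(json.loads(documents["c2"]).keys()))
```

The eDiscovery point: a single customer dispute can leave evidence in both stores, in different structures, so a collection plan has to address each one.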

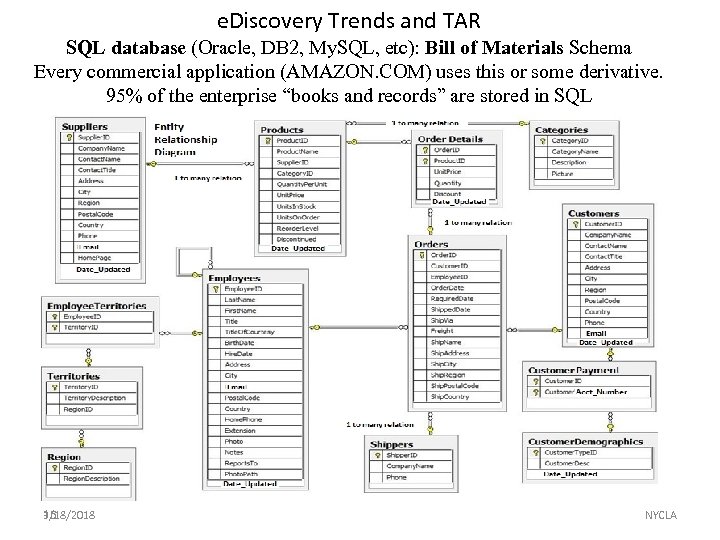

SQL databases (Oracle, DB2, MySQL, etc.): Bill of Materials Schema
• Every commercial application (e.g., Amazon.com) uses this or some derivative.
• 95% of an enterprise’s “books and records” are stored in SQL.

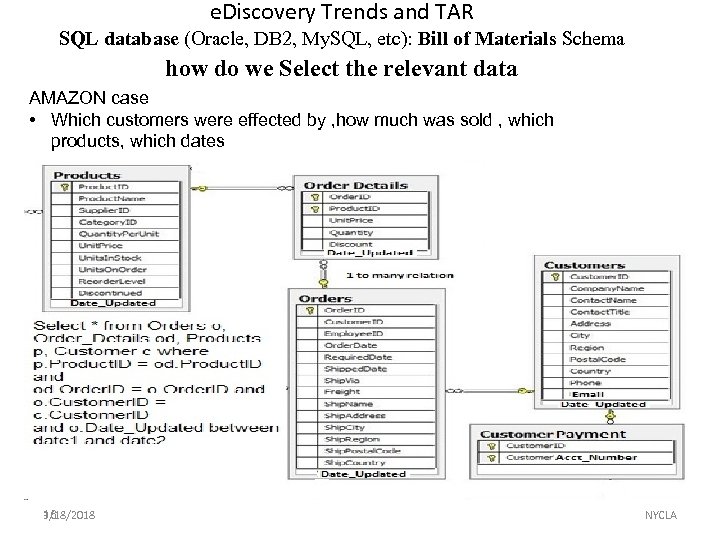

SQL databases (Oracle, DB2, MySQL, etc.): Bill of Materials Schema
How do we SELECT the relevant data? Amazon example:
• Which customers were affected, how much was sold, which products, which dates
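
The question on this slide maps directly onto a SQL SELECT. A minimal sketch using Python's built-in sqlite3 module, assuming a hypothetical, heavily simplified orders schema (real bill-of-materials and order schemas are far larger); the table names, column names and data are invented for illustration.

```python
import sqlite3

# Hypothetical, simplified order schema in the spirit of the slide.
db = sqlite3.connect(":memory:")
db.executescript("""
CREATE TABLE customers (customer_id INTEGER PRIMARY KEY, name TEXT);
CREATE TABLE products  (product_id  INTEGER PRIMARY KEY, name TEXT);
CREATE TABLE orders    (order_id    INTEGER PRIMARY KEY,
                        customer_id INTEGER REFERENCES customers,
                        order_date  TEXT);
CREATE TABLE order_lines (order_id   INTEGER REFERENCES orders,
                          product_id INTEGER REFERENCES products,
                          quantity   INTEGER, unit_price REAL);
INSERT INTO customers VALUES (1, 'Alice'), (2, 'Bob');
INSERT INTO products  VALUES (10, 'Kettle'), (11, 'Toaster');
INSERT INTO orders    VALUES (100, 1, '2015-03-02'), (101, 2, '2015-05-20'),
                             (102, 2, '2014-12-30');
INSERT INTO order_lines VALUES (100, 10, 2, 25.0), (101, 10, 1, 25.0),
                               (101, 11, 1, 40.0), (102, 10, 5, 25.0);
""")

# Which customers bought the product at issue, how much, and on which dates,
# limited to the relevant period. ISO-8601 date strings compare correctly as text.
query = """
SELECT c.name, p.name, o.order_date, l.quantity * l.unit_price AS sales
FROM order_lines AS l
JOIN orders    AS o ON o.order_id    = l.order_id
JOIN customers AS c ON c.customer_id = o.customer_id
JOIN products  AS p ON p.product_id  = l.product_id
WHERE p.name = ? AND o.order_date BETWEEN ? AND ?
ORDER BY o.order_date
"""
for row in db.execute(query, ("Kettle", "2015-01-01", "2015-12-31")):
    print(row)
```

Keeping the query text with the results documents exactly how the produced data set was defined (which product, which date range).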

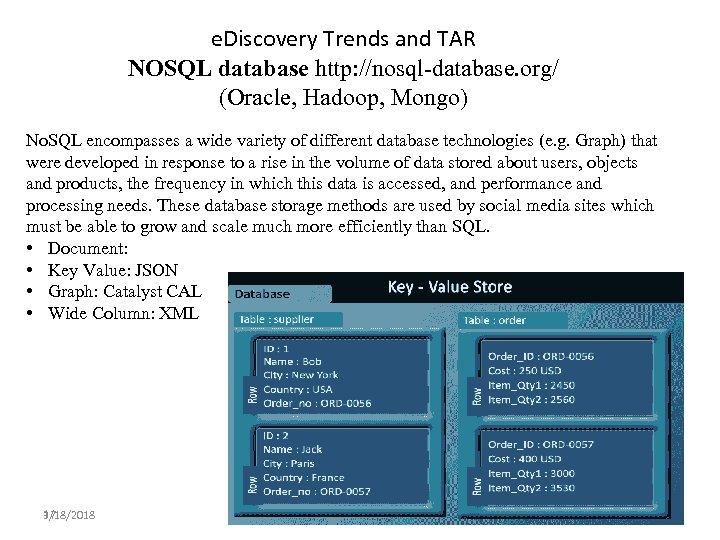

NoSQL databases (http://nosql-database.org/): Oracle, Hadoop, MongoDB
NoSQL encompasses a wide variety of database technologies (e.g., graph databases) that were developed in response to growth in the volume of data stored about users, objects and products, the frequency with which this data is accessed, and performance and processing needs. Social media sites use these storage methods because they must grow and scale much more efficiently than SQL databases allow.
• Document
• Key-value: JSON
• Graph: Catalyst CAL
• Wide column: XML
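
Collecting from a NoSQL source rarely looks like a single SELECT, because documents in the same collection can carry different fields. A minimal Python sketch, assuming the collection has been exported as JSON Lines (one JSON document per line); the field names, data and helper function are hypothetical, and this is plain Python, not any database's query API.

```python
import json

# Hypothetical JSON Lines export of a document-store collection.
# Fields vary from document to document.
export = """\
{"_id": "p1", "user": "alice", "text": "Kettle recall?", "tags": ["kettle"]}
{"_id": "p2", "user": "bob", "text": "Great toaster"}
{"_id": "p3", "user": "carol", "reply_to": "p1", "text": "Mine leaked too"}
"""

docs = [json.loads(line) for line in export.splitlines() if line.strip()]


def mentions(doc: dict, term: str) -> bool:
    """Case-insensitive search across every string field (no fixed schema)."""
    for value in doc.values():
        values = value if isinstance(value, list) else [value]
        if any(isinstance(v, str) and term in v.lower() for v in values):
            return True
    return False


# Posts that mention kettles, plus direct replies to them (a graph-like hop).
hits = {d["_id"] for d in docs if mentions(d, "kettle")}
hits |= {d["_id"] for d in docs if d.get("reply_to") in hits}
print(sorted(hits))
```

The reply in this example never uses the search term; it is captured only by following the relationship, which is one reason graph-shaped data matters in collection.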

Process starts with the Litigation Hold
• A litigation hold is a written request or reminder to preserve electronic evidence. Zubulake v. UBS Warburg LLC, 220 F.R.D. 212, 216-18 (S.D.N.Y. 2003) (Zubulake IV) contains an extensive discussion of the litigation hold.
• Large enterprises (banks, insurers, media, government) have policies governing document/file retention and the destruction of data after a set period of time.
• Litigation hold letters are issued to suspend the company’s normal document retention policy in order to preserve potentially relevant documents.
• The litigation hold process must be designed to cover all types of electronic documents, and it must be implemented at the proper time to preserve, unchanged, as much of the relevant data as possible.
An effective litigation hold should contemplate cloud computing, social media, personal devices, sensor data and anything else that creates an “electronic footprint.”
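
A litigation hold works by overriding the normal retention schedule. The Python sketch below shows that logic for an automated deletion job, assuming a hypothetical three-year retention period and a hold scoped only by custodian; real holds are usually also scoped by subject matter, date range and data source. The class, constant and function names are hypothetical.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass
class Record:
    custodian: str
    created: date
    path: str


RETENTION = timedelta(days=365 * 3)  # hypothetical 3-year retention policy


def eligible_for_deletion(records: list[Record], today: date,
                          custodians_on_hold: set[str]) -> list[Record]:
    """Return records past retention, skipping anything under a litigation hold.

    The hold overrides the retention schedule: a held custodian's records
    are preserved regardless of age.
    """
    return [
        r for r in records
        if today - r.created > RETENTION and r.custodian not in custodians_on_hold
    ]


records = [
    Record("jsmith", date(2011, 1, 5), "mail/2011/q1.pst"),
    Record("mjones", date(2011, 2, 9), "share/budget.xlsx"),
    Record("mjones", date(2015, 8, 1), "share/plan.docx"),
]
on_hold = {"jsmith"}  # custodians named in the hold notice
for r in eligible_for_deletion(records, date(2016, 3, 1), on_hold):
    print("delete:", r.path)
```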

Rule 26(f) conference: just the parties, no judge
Rule 26(f)(1) requires the parties to confer as soon as practicable, and in any event at least 21 days before a scheduling conference is to be held or a Rule 16(b) scheduling order is due. Rule 26(f)(2) lists the required topics for the conference, including settlement, preservation and the discovery plan, and requires the parties to “discuss any issues about preserving discoverable information.” The preservation discussion should cover a number of specific points:
• Status of the litigation hold: When was it issued, who received it, what subjects and data sources does it cover, and what procedures are in place for auditing compliance?
• Time-sensitive sources: Has provision been made to preserve time-sensitive data sources such as smartphones, social media and other website data, and third-party hosted data?
• Automated deletion/archiving: Have auto-delete and auto-archive functions been turned off for the data sources covered by the hold?
Narrowing the scope of preservation reduces the significant time and cost burden of compliance. An effective conference should contemplate cloud computing, social media, personal devices, sensor data and anything else that creates an “electronic footprint”.

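
The 21-day deadline is simple date arithmetic. This Python sketch computes the latest date for the Rule 26(f) conference from the scheduling-conference (or Rule 16(b) order) date. It assumes Rule 6(a)-style backward counting, in which a landing day on a weekend keeps rolling back. It does not handle legal holidays or local rules, and the function and checklist names are hypothetical.

```python
from datetime import date, timedelta

RULE_26F_LEAD_DAYS = 21  # "at least 21 days before" the scheduling conference / Rule 16(b) order


def latest_26f_conference(scheduling_deadline: date) -> date:
    """Latest date for the Rule 26(f) conference, counting backward 21 days.

    Assumption: if the computed day falls on a weekend, keep counting backward.
    Legal holidays and local rules are NOT handled in this sketch.
    """
    day = scheduling_deadline - timedelta(days=RULE_26F_LEAD_DAYS)
    while day.weekday() >= 5:  # 5 = Saturday, 6 = Sunday
        day -= timedelta(days=1)
    return day


# Preservation topics from the slide, as a simple checklist.
PRESERVATION_TOPICS = (
    "litigation hold status",
    "time-sensitive sources",
    "auto-delete/auto-archive suspended",
)


def open_topics(resolved: set) -> list:
    """Topics not yet resolved at the conference, in checklist order."""
    return [t for t in PRESERVATION_TOPICS if t not in resolved]
```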

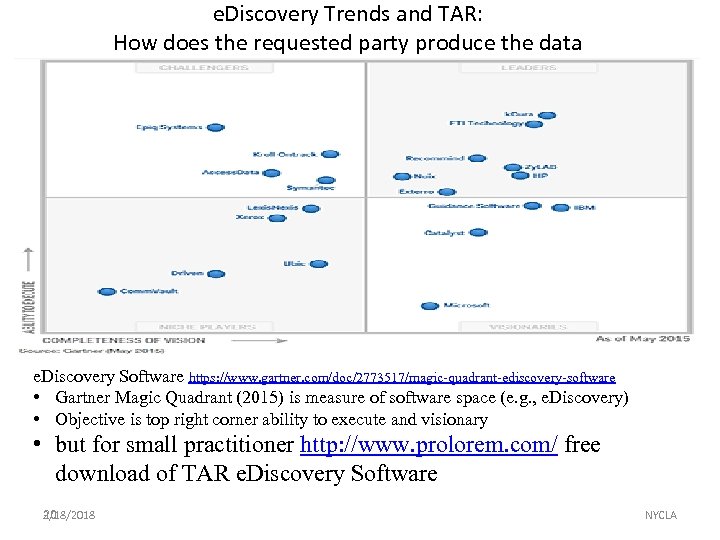

How Does the Responding Party Produce the Data? eDiscovery Software
https://www.gartner.com/doc/2773517/magic-quadrant-ediscovery-software
• The Gartner Magic Quadrant (2015) is a measure of vendors in a software space (e.g., eDiscovery).
• The objective is the top right corner, which combines ability to execute with completeness of vision.
• For the small practitioner, http://www.prolorem.com/ offers a free download of TAR eDiscovery software.


FRCP Rule 34(b)(2)(E): Form of Production
1) “[A] party must produce documents as they are kept in the usual course of business or must organize and label them to correspond to the categories in the request.”
2) “[I]f a request does not specify a form for producing electronically stored information, a party must produce it in a form or forms in which it is ordinarily maintained or in a reasonably usable form or forms. A party need not produce the same electronically stored information in more than one form.”
Structure the request to include ESI from all possible sources: contemplate cloud computing, social media, personal devices, sensor data and anything else that creates an “electronic footprint,” and how it can be linked together to facilitate the .


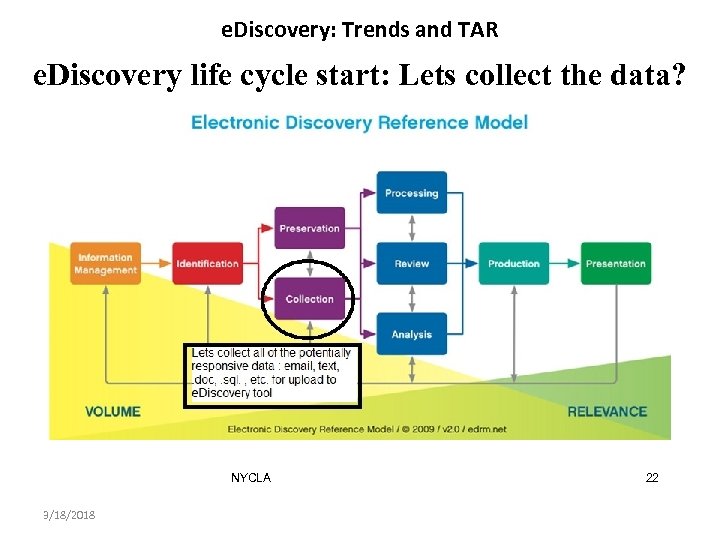

eDiscovery life cycle start: Let’s collect the data?


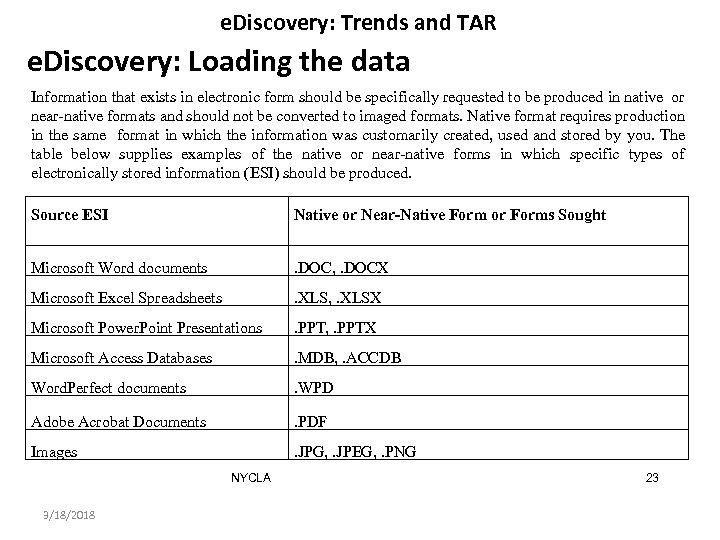

eDiscovery: Loading the Data
Information that exists in electronic form should be specifically requested in native or near-native formats and should not be converted to imaged formats. Native format means production in the same format in which the information was customarily created, used and stored by you. The table below gives examples of the native or near-native forms in which specific types of electronically stored information (ESI) should be produced.

| Source ESI | Native or Near-Native Form or Forms Sought |
|---|---|
| Microsoft Word documents | .DOC, .DOCX |
| Microsoft Excel spreadsheets | .XLS, .XLSX |
| Microsoft PowerPoint presentations | .PPT, .PPTX |
| Microsoft Access databases | .MDB, .ACCDB |
| WordPerfect documents | .WPD |
| Adobe Acrobat documents | .PDF |
| Images | .JPG, .JPEG, .PNG |

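
One practical use of the native-format list is a quick check of what was actually produced. The Python sketch below, with hypothetical function and variable names, maps the file extensions listed on this slide to their ESI types and flags everything else (for example, TIFF images of converted documents) for follow-up. Extension matching is only a first pass; it does not verify that a file's contents really are native.

```python
from pathlib import PurePath

# Native or near-native forms listed on the slide (extensions lower-cased).
NATIVE_FORMS = {
    "Microsoft Word documents": {".doc", ".docx"},
    "Microsoft Excel spreadsheets": {".xls", ".xlsx"},
    "Microsoft PowerPoint presentations": {".ppt", ".pptx"},
    "Microsoft Access databases": {".mdb", ".accdb"},
    "WordPerfect documents": {".wpd"},
    "Adobe Acrobat documents": {".pdf"},
    "Images": {".jpg", ".jpeg", ".png"},
}

# Reverse lookup: extension -> ESI type.
EXTENSION_TO_TYPE = {ext: esi for esi, exts in NATIVE_FORMS.items() for ext in exts}


def check_production(filenames):
    """Split produced files into (native/near-native keyed by type, everything else to question)."""
    native, other = {}, []
    for name in filenames:
        ext = PurePath(name).suffix.lower()
        if ext in EXTENSION_TO_TYPE:
            native[name] = EXTENSION_TO_TYPE[ext]
        else:
            other.append(name)
    return native, other
```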

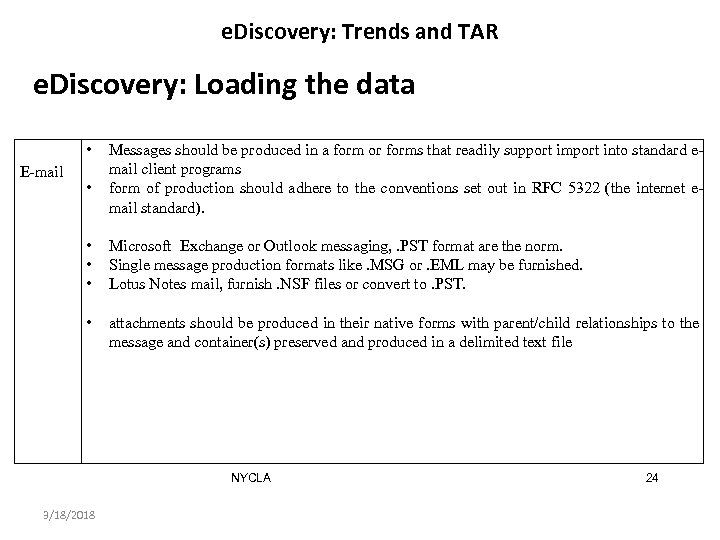

eDiscovery: Loading the Data (E-mail)
• Messages should be produced in a form or forms that readily support import into standard email client programs; the form of production should adhere to the conventions set out in RFC 5322 (the internet email standard).
• For Microsoft Exchange or Outlook messaging, .PST format is the norm; single-message production formats such as .MSG or .EML may be furnished.
• For Lotus Notes mail, furnish .NSF files or convert them to .PST.
• Attachments should be produced in their native forms, with their parent/child relationships to the message and container(s) preserved and produced in a delimited text file.

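
The parent/child relationship can be carried in the delimited text file by giving each attachment its own control number plus a pointer to its parent message. This Python sketch uses the standard-library `email` package to parse RFC 5322 messages and writes a pipe-delimited load file. The field names (`DocID`, `ParentID` and so on) and the control-number scheme are assumptions for illustration, not a required load-file format.

```python
import csv
import io
from email import policy
from email.parser import BytesParser


def build_load_file(raw_messages: dict) -> str:
    """Return a pipe-delimited load file listing each message and its attachments.

    raw_messages maps a hypothetical control number (e.g. "MSG0001") to raw RFC 5322 bytes.
    """
    out = io.StringIO()
    writer = csv.writer(out, delimiter="|", lineterminator="\n")
    writer.writerow(["DocID", "ParentID", "From", "Subject", "Filename"])
    for doc_id, raw in raw_messages.items():
        msg = BytesParser(policy=policy.default).parsebytes(raw)
        # Parent row: the message itself has no parent.
        writer.writerow([doc_id, "", msg["From"], msg["Subject"], ""])
        # Child rows: each attachment gets its own ID and points back to the parent message.
        for n, part in enumerate(msg.iter_attachments(), start=1):
            writer.writerow([f"{doc_id}.{n:04d}", doc_id, "", "", part.get_filename()])
    return out.getvalue()
```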

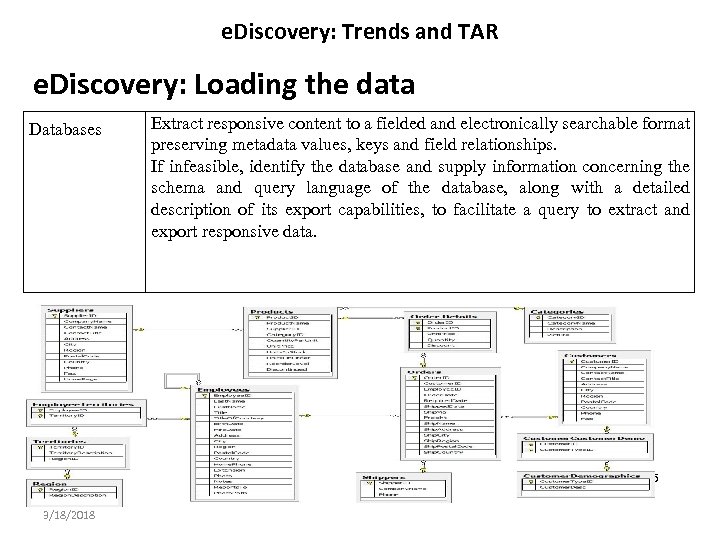

eDiscovery: Loading the Data (Databases)
Extract responsive content into a fielded, electronically searchable format that preserves metadata values, keys and field relationships. If that is infeasible, identify the database and supply information about its schema and query language, along with a detailed description of its export capabilities, so that a query can be written to extract and export the responsive data.

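
For a relational source, “fielded and electronically searchable” can be as simple as a query that exports responsive rows with their keys intact. The Python sketch below uses the standard-library `sqlite3` module against a hypothetical `customers`/`orders` schema. Keeping `customer_id` in the export lets the receiving party rejoin the rows, which is one way to preserve field relationships.

```python
import csv
import io
import sqlite3

# Hypothetical schema: customers(customer_id, name), orders(order_id, customer_id, order_date, amount).
# Every column is aliased so the exported field names are well defined.
RESPONSIVE_QUERY = """
    SELECT o.order_id    AS order_id,
           o.customer_id AS customer_id,
           c.name        AS customer_name,
           o.order_date  AS order_date,
           o.amount      AS amount
    FROM orders AS o
    JOIN customers AS c ON c.customer_id = o.customer_id
    WHERE o.order_date >= ?
    ORDER BY o.order_id
"""


def export_responsive(conn: sqlite3.Connection, since: str) -> str:
    """Export responsive rows as CSV, keeping primary/foreign keys so relationships survive."""
    cur = conn.execute(RESPONSIVE_QUERY, (since,))
    out = io.StringIO()
    writer = csv.writer(out, lineterminator="\n")
    writer.writerow([col[0] for col in cur.description])  # header row: field names
    writer.writerows(cur)
    return out.getvalue()
```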

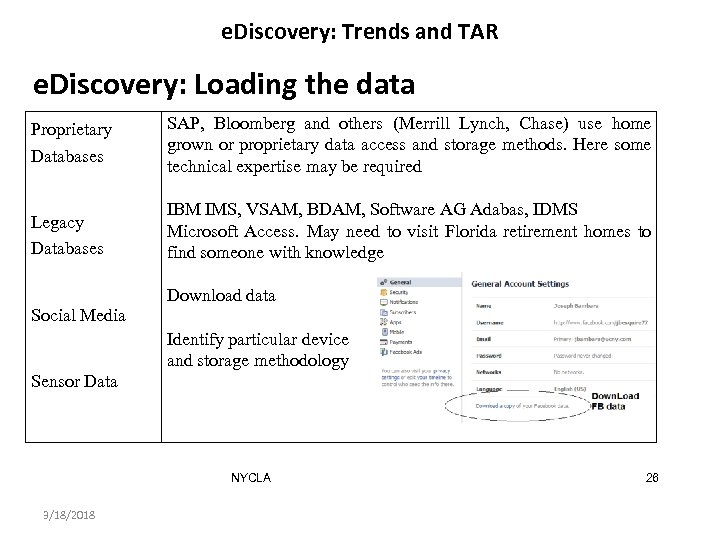

eDiscovery: Loading the Data (Proprietary, Legacy, Social Media and Sensor Sources)
• Proprietary databases: SAP, Bloomberg and others (e.g., Merrill Lynch, Chase) use home-grown or proprietary data access and storage methods, so some technical expertise may be required.
• Legacy databases: IBM IMS, VSAM, BDAM, Software AG Adabas, IDMS, Microsoft Access. You may need to visit Florida retirement homes to find someone with knowledge of them.
• Social media: download the data.
• Sensor data: identify the particular device and its storage methodology.


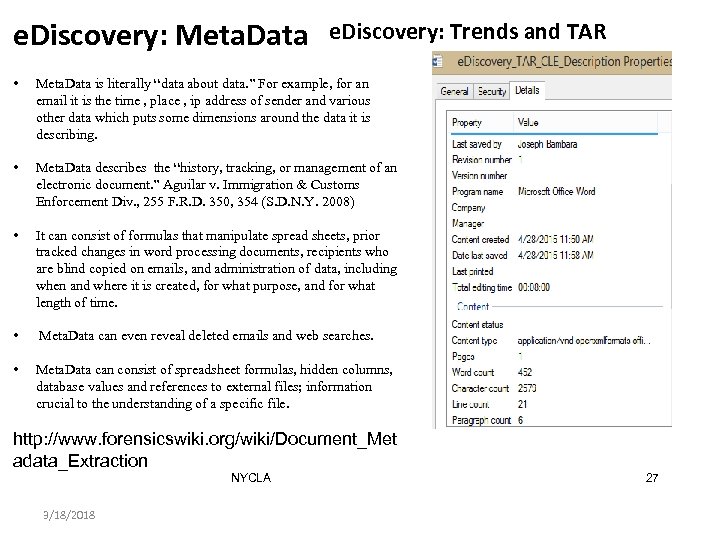

eDiscovery: Metadata
• Metadata is literally “data about data.” For an email, for example, it includes the time and place of sending, the sender’s IP address and various other data that put some dimensions around the data being described.
• Metadata describes the “history, tracking, or management of an electronic document.” Aguilar v. Immigration & Customs Enforcement Div., 255 F.R.D. 350, 354 (S.D.N.Y. 2008).
• It can consist of formulas that manipulate spreadsheets, prior tracked changes in word-processing documents, recipients who are blind-copied on emails, and information about the administration of data, including when and where it was created, for what purpose, and for how long.
• Metadata can even reveal deleted emails and web searches.
• Metadata can consist of spreadsheet formulas, hidden columns, database values and references to external files: information crucial to understanding a specific file. http://www.forensicswiki.org/wiki/Document_Metadata_Extraction

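
Much of a Word document’s metadata lives in plain XML inside the file. A .DOCX file is a ZIP package, and its core properties (author, last modified by, created and modified times, revision) are stored in `docProps/core.xml`. The Python sketch below reads those fields with the standard library. It is a minimal illustration that ignores other metadata locations such as tracked changes, comments and custom properties, and `docx_core_metadata` is a hypothetical helper name.

```python
import io
import zipfile
import xml.etree.ElementTree as ET

# XML namespaces used by the OOXML core-properties part.
NS = {
    "cp": "http://schemas.openxmlformats.org/package/2006/metadata/core-properties",
    "dc": "http://purl.org/dc/elements/1.1/",
    "dcterms": "http://purl.org/dc/terms/",
}

# Friendly field name -> element path inside docProps/core.xml.
FIELDS = {
    "author": "dc:creator",
    "last_modified_by": "cp:lastModifiedBy",
    "created": "dcterms:created",
    "modified": "dcterms:modified",
    "revision": "cp:revision",
}


def docx_core_metadata(data: bytes) -> dict:
    """Read core properties from the bytes of a .DOCX file; missing fields map to None."""
    with zipfile.ZipFile(io.BytesIO(data)) as zf:
        root = ET.fromstring(zf.read("docProps/core.xml"))
    result = {}
    for key, path in FIELDS.items():
        element = root.find(path, NS)
        result[key] = element.text if element is not None else None
    return result
```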

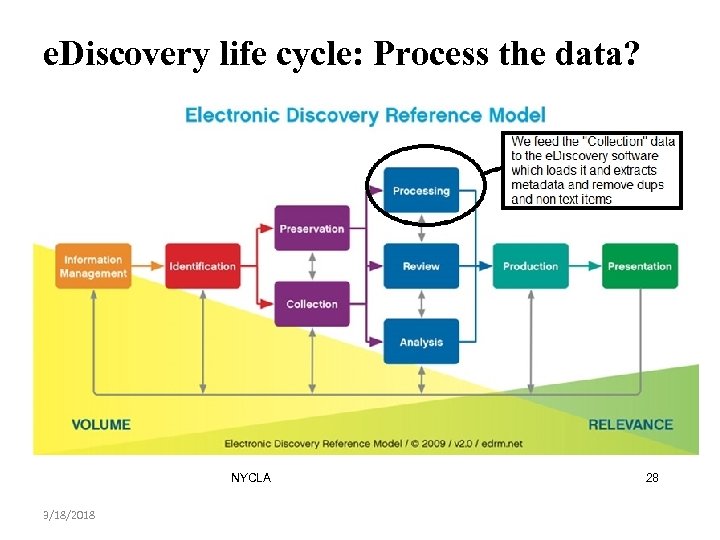

eDiscovery life cycle: Process the data?


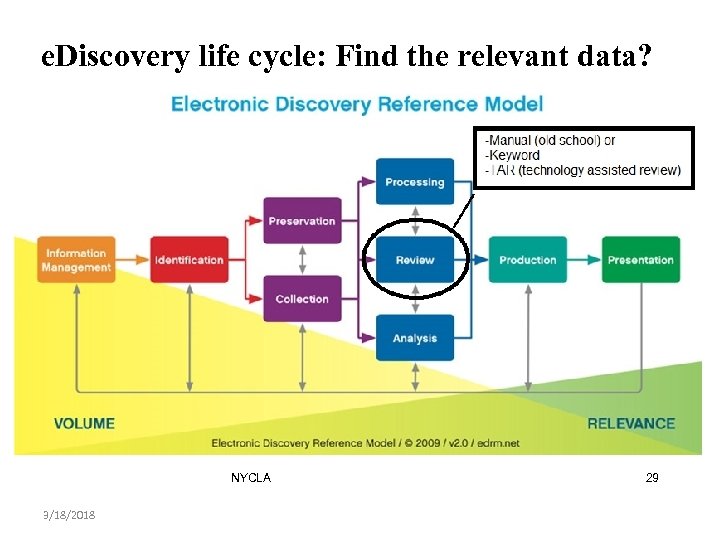

eDiscovery life cycle: Find the relevant data?


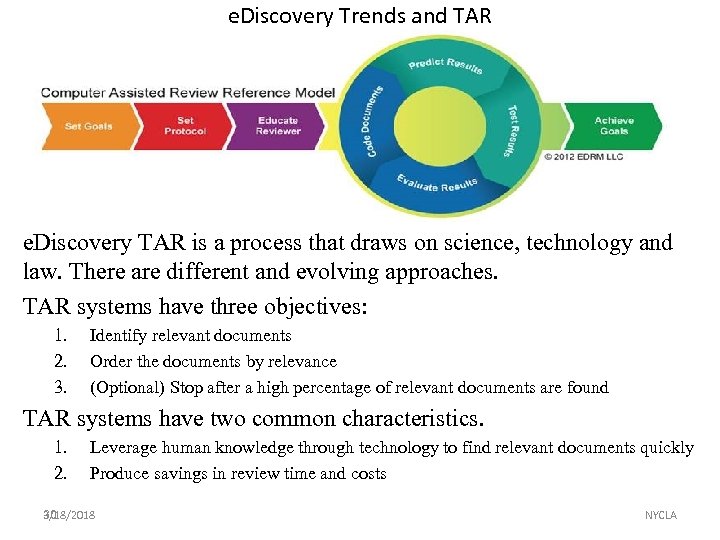

eDiscovery TAR is a process that draws on science, technology and law, and the approaches to it are varied and still evolving.
TAR systems have three objectives:
1. Identify relevant documents.
2. Order the documents by relevance.
3. (Optional) Stop after a high percentage of the relevant documents have been found.
TAR systems share two characteristics:
1. They leverage human knowledge through technology to find relevant documents quickly.
2. They produce savings in review time and cost.


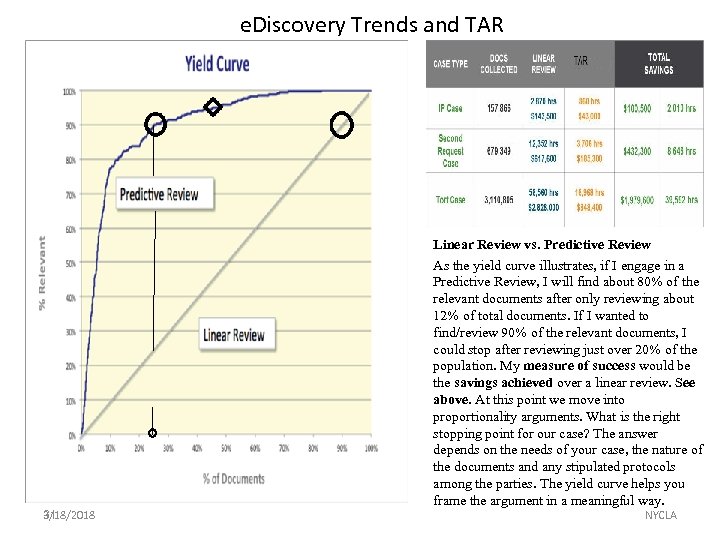

Linear Review vs. Predictive Review
As the yield curve illustrates, if I engage in a predictive review, I will find about 80% of the relevant documents after reviewing only about 12% of the total documents. If I want to find 90% of the relevant documents, I can stop after reviewing just over 20% of the population. My measure of success is the savings achieved over a linear review (see the yield curve above).
At this point we move into proportionality arguments: what is the right stopping point for our case? The answer depends on the needs of the case, the nature of the documents and any protocols stipulated among the parties. The yield curve helps frame that argument in a meaningful way.

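
A yield curve is computed from a ranked list of reviewed documents: recall (the share of relevant documents found) plotted against the share of the collection reviewed. The Python sketch below assumes you already have the ranking with relevance labels (for example, from a validation sample). The function names are hypothetical, and the numbers in the test are toy data, not the figures on the slide.

```python
def yield_curve(ranked_labels):
    """(fraction of collection reviewed, recall) after each document, in ranked order."""
    total_relevant = sum(ranked_labels)
    if total_relevant == 0:
        raise ValueError("no relevant documents in the ranking")
    n = len(ranked_labels)
    found = 0
    curve = []
    for i, is_relevant in enumerate(ranked_labels, start=1):
        found += is_relevant  # True counts as 1
        curve.append((i / n, found / total_relevant))
    return curve


def review_depth(ranked_labels, target_recall):
    """Smallest fraction of the ranked collection that must be reviewed to reach target_recall."""
    for reviewed, recall in yield_curve(ranked_labels):
        if recall >= target_recall:
            return reviewed
    return 1.0
```

If the chosen recall target is reached at depth `d`, the review stops after a fraction `d` of the collection, so the saving over a full linear review is roughly `1 - d` of the reviewing effort.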

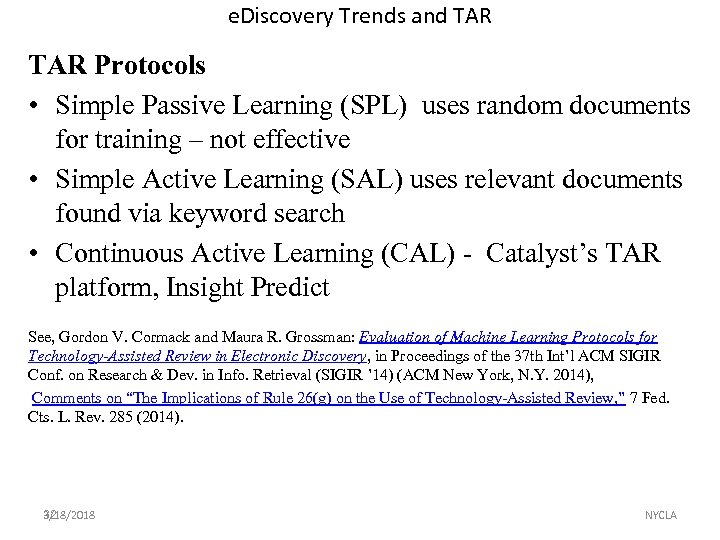

TAR Protocols
• Simple Passive Learning (SPL) uses randomly selected documents for training; it is not effective.
• Simple Active Learning (SAL) starts from relevant documents found via keyword search, then trains on the documents the classifier is least certain about.
• Continuous Active Learning (CAL) keeps training on the top-ranked documents as they are reviewed (e.g., Catalyst’s TAR platform, Insight Predict).
See Gordon V. Cormack & Maura R. Grossman, Evaluation of Machine Learning Protocols for Technology-Assisted Review in Electronic Discovery, in Proceedings of the 37th Int’l ACM SIGIR Conf. on Research & Dev. in Info. Retrieval (SIGIR ’14) (ACM, New York, N.Y. 2014); Comments on “The Implications of Rule 26(g) on the Use of Technology-Assisted Review,” 7 Fed. Cts. L. Rev. 285 (2014).


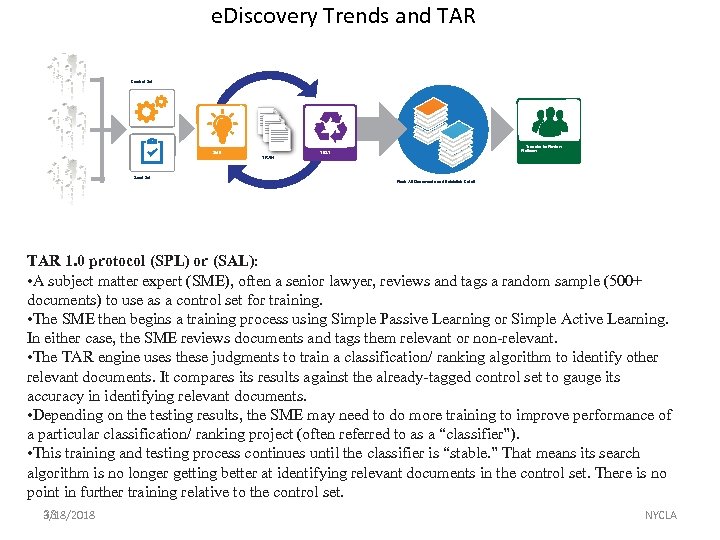

[Diagram: Control Set, SME, Seed Set, Train, Test, Rank All Documents and Establish Cutoff, Transfer to Review Platform]
TAR 1.0 protocol (SPL or SAL):
• A subject matter expert (SME), often a senior lawyer, reviews and tags a random sample (500+ documents) to serve as a control set for measuring training progress.
• The SME then begins training using Simple Passive Learning or Simple Active Learning. In either case, the SME reviews documents and tags them relevant or non-relevant.
• The TAR engine uses these judgments to train a classification/ranking algorithm to identify other relevant documents. It compares its results against the already-tagged control set to gauge its accuracy.
• Depending on the test results, the SME may need to do more training to improve the performance of a particular classification/ranking model (often called a “classifier”).
• This training and testing cycle continues until the classifier is “stable,” meaning it is no longer getting better at identifying the relevant documents in the control set. At that point further training against the control set is pointless.

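
The “stable” test can be expressed as a comparison of control-set scores across training rounds. In the Python sketch below, the choice of F1 as the score and the `window`/`tolerance` stopping rule are assumptions made for illustration; commercial TAR 1.0 tools use their own metrics and thresholds.

```python
def f1_on_control_set(predicted, truth):
    """F1 of the classifier's relevant/non-relevant calls against the SME-tagged control set."""
    tp = sum(1 for p, t in zip(predicted, truth) if p and t)
    fp = sum(1 for p, t in zip(predicted, truth) if p and not t)
    fn = sum(1 for p, t in zip(predicted, truth) if t and not p)
    if tp == 0:
        return 0.0
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


def is_stable(history, window=3, tolerance=0.01):
    """Hypothetical stability rule: the best score in the last `window` training rounds
    beats the best earlier score by less than `tolerance`."""
    if len(history) <= window:
        return False
    return max(history[-window:]) - max(history[:-window]) < tolerance
```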

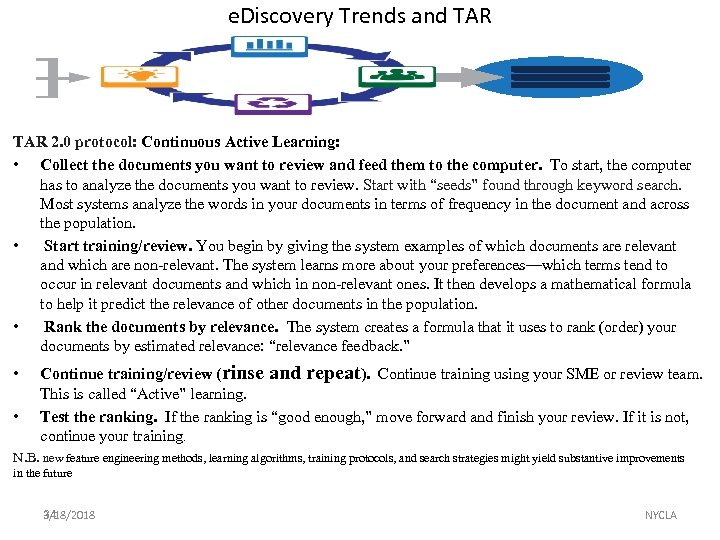

TAR 2.0 protocol: Continuous Active Learning
• Collect the documents you want to review and feed them to the computer. To start, the computer has to analyze the documents. Start with “seeds” found through keyword search. Most systems analyze the words in your documents in terms of their frequency within each document and across the population.
• Start training/review. Begin by giving the system examples of relevant and non-relevant documents. The system learns your preferences (which terms tend to occur in relevant documents and which in non-relevant ones) and develops a mathematical formula to predict the relevance of other documents in the population.
• Rank the documents by relevance. The system uses that formula to rank (order) your documents by estimated relevance (“relevance feedback”).
• Continue training/review (rinse and repeat). Continue training using your SME or review team. This is called “active” learning.
• Test the ranking. If the ranking is “good enough,” move forward and finish your review. If it is not, continue training.
N.B. New feature engineering methods, learning algorithms, training protocols and search strategies may yield substantial improvements in the future.

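
The CAL loop (rank, review the top documents, retrain, repeat) fits in a few lines. This Python sketch is a toy: a small multinomial naive Bayes scorer stands in for a real TAR engine’s learner, the `review` callable stands in for the SME or review team, and the stopping rule (quit when a whole batch comes back non-relevant) is a hypothetical placeholder for a proper “good enough” test.

```python
import math
from collections import Counter


def tokenize(text):
    return text.lower().split()


class NaiveBayesScorer:
    """Tiny multinomial naive Bayes; a hypothetical stand-in for a TAR engine's learner."""

    def fit(self, texts, labels):
        self.counts = {True: Counter(), False: Counter()}
        self.n_docs = Counter(labels)
        for text, label in zip(texts, labels):
            self.counts[label].update(tokenize(text))
        self.vocab_size = len(set(self.counts[True]) | set(self.counts[False]))
        self.totals = {c: sum(self.counts[c].values()) for c in (True, False)}
        return self

    def score(self, text):
        """Log-odds of relevance, with add-one (Laplace) smoothing."""
        s = math.log((self.n_docs[True] + 1) / (self.n_docs[False] + 1))
        for tok in tokenize(text):
            p_rel = (self.counts[True][tok] + 1) / (self.totals[True] + self.vocab_size)
            p_non = (self.counts[False][tok] + 1) / (self.totals[False] + self.vocab_size)
            s += math.log(p_rel / p_non)
        return s


def continuous_active_learning(collection, seeds, review, batch_size=2, max_rounds=50):
    """CAL sketch: retrain on every judgment so far, review the top-ranked batch, repeat.

    collection: doc_id -> text; seeds: doc_id -> bool (e.g. from a keyword search);
    review: callable doc_id -> bool standing in for the SME or review team.
    """
    judged = dict(seeds)
    for _ in range(max_rounds):
        unreviewed = [d for d in collection if d not in judged]
        if not unreviewed:
            break
        ids = list(judged)
        model = NaiveBayesScorer().fit([collection[d] for d in ids], [judged[d] for d in ids])
        ranked = sorted(unreviewed, key=lambda d: model.score(collection[d]), reverse=True)
        batch = {d: review(d) for d in ranked[:batch_size]}
        judged.update(batch)
        # Hypothetical stopping rule: quit once a whole batch comes back non-relevant.
        if not any(batch.values()):
            break
    return judged
```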

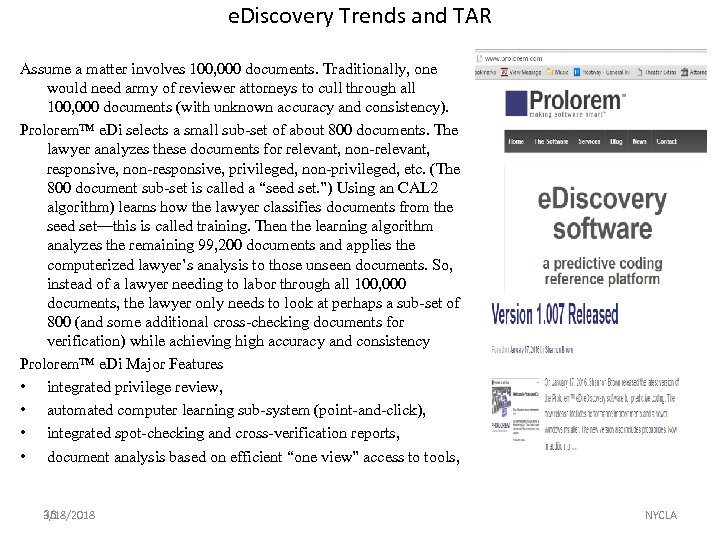

Assume a matter involves 100,000 documents. Traditionally, an army of reviewing attorneys would need to cull through all 100,000 documents (with unknown accuracy and consistency).
Prolorem™ eDi selects a small subset of about 800 documents. The lawyer analyzes these documents as relevant/non-relevant, responsive/non-responsive, privileged/non-privileged, etc. (The 800-document subset is called a “seed set.”) Using a CAL 2 algorithm, the system learns how the lawyer classifies documents in the seed set; this is called training. The learning algorithm then analyzes the remaining 99,200 documents and applies the computerized version of the lawyer’s analysis to those unseen documents. So instead of laboring through all 100,000 documents, the lawyer may need to look at only the 800-document subset (plus some additional documents for cross-checking and verification) while achieving high accuracy and consistency.
Prolorem™ eDi Major Features
• integrated privilege review
• automated computer learning subsystem (point-and-click)
• integrated spot-checking and cross-verification reports
• document analysis based on efficient “one view” access to tools


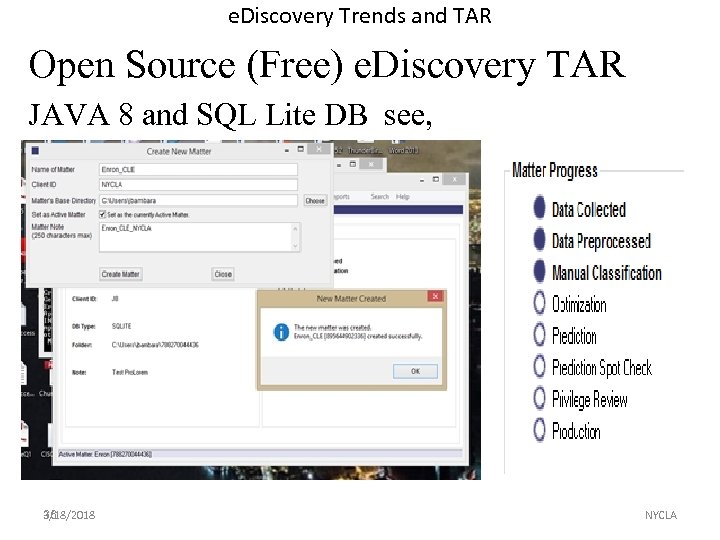

Open Source (Free) eDiscovery TAR: Java 8 and SQLite DB; see www.prolorem.com


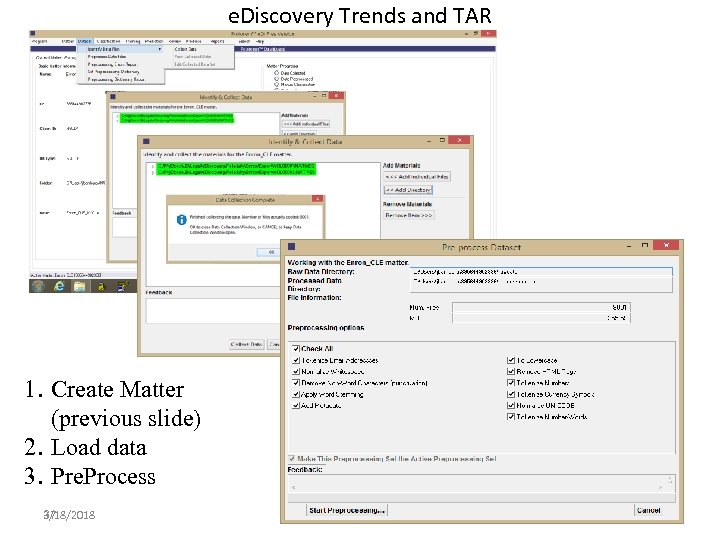

1. Create Matter (previous slide)
2. Load data
3. PreProcess


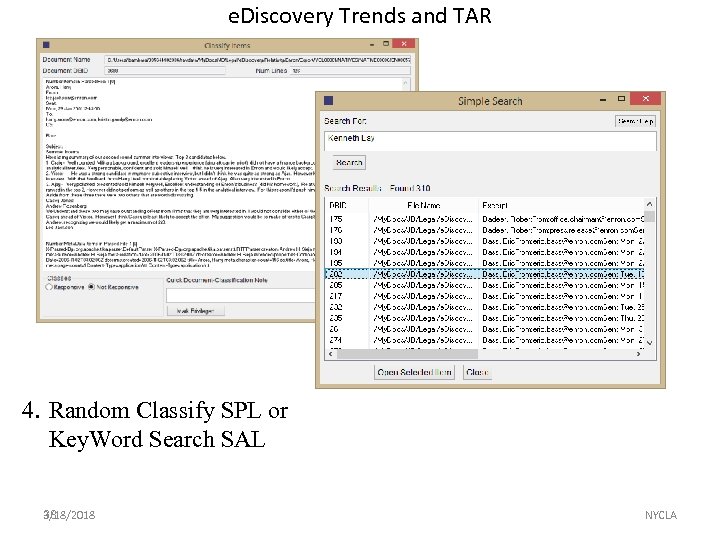

4. Random Classify (SPL) or KeyWord Search (SAL)


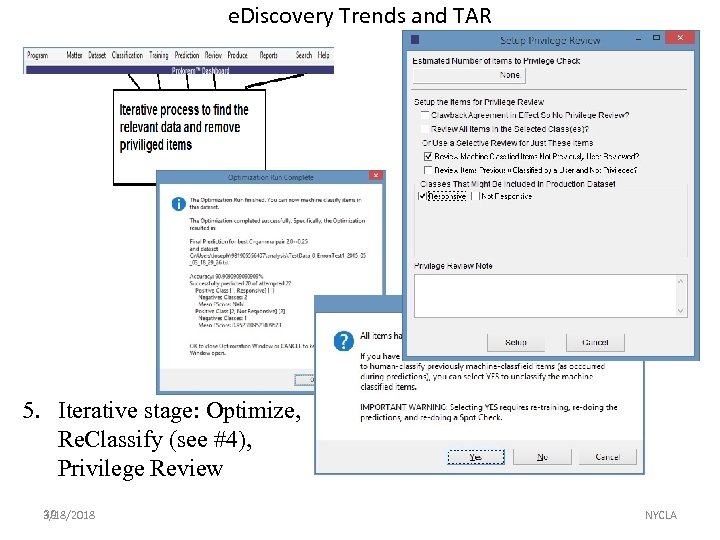

5. Iterative stage: Optimize, ReClassify (see step 4), Privilege Review


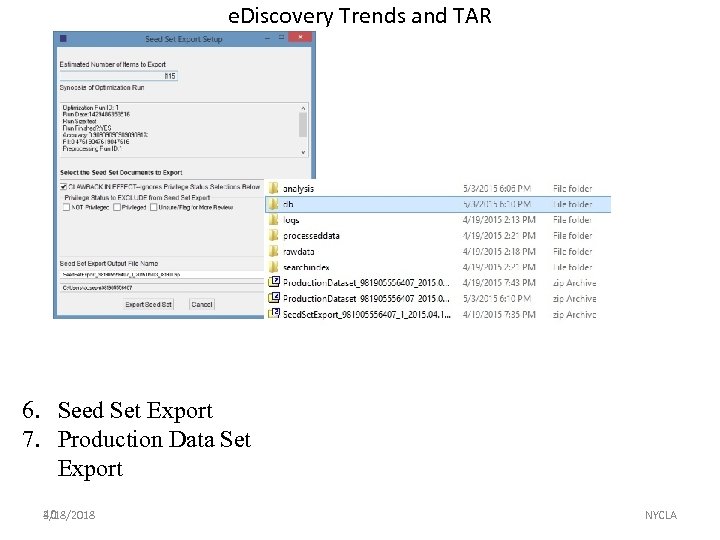

6. Seed Set Export
7. Production Data Set Export


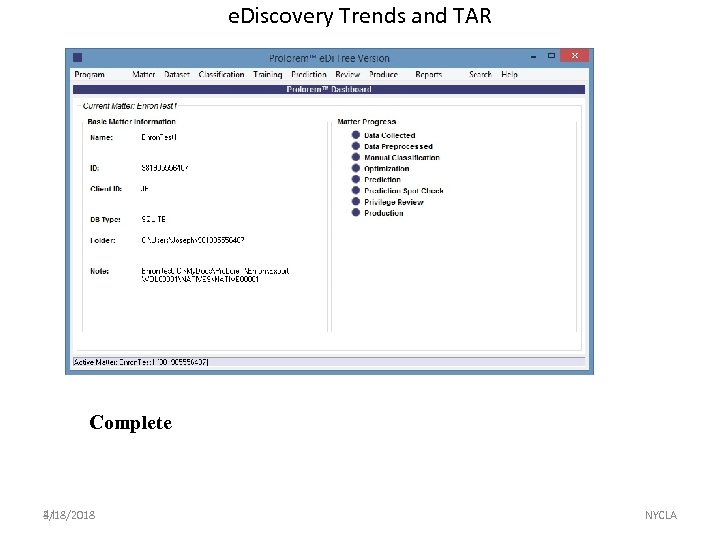

Complete


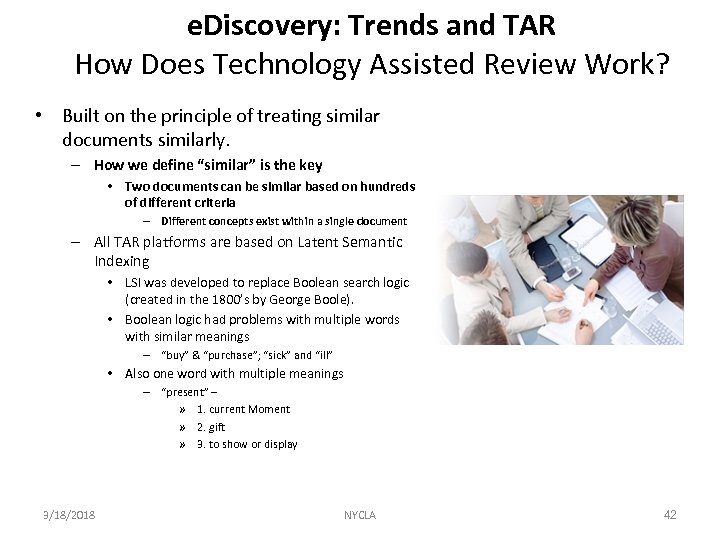

How Does Technology Assisted Review Work?
• TAR is built on the principle of treating similar documents similarly.
  – How we define “similar” is the key.
• Two documents can be similar based on hundreds of different criteria.
  – Different concepts exist within a single document.
  – Many TAR platforms build on Latent Semantic Indexing (LSI).
• LSI was developed to overcome the limitations of Boolean search logic (created in the 1800s by George Boole).
• Boolean logic has problems with multiple words that have similar meanings: “buy” and “purchase”; “sick” and “ill”.
• It also has problems with one word that has multiple meanings, e.g., “present”:
  » 1. the current moment
  » 2. a gift
  » 3. to show or display


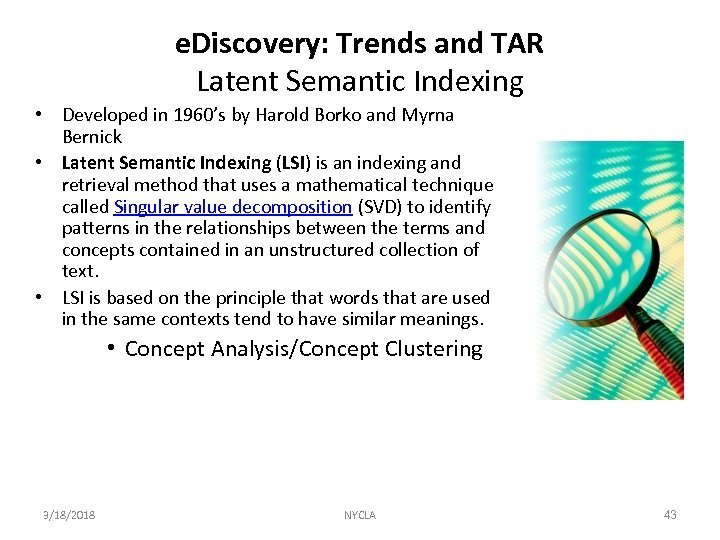

Latent Semantic Indexing
• Roots in 1960s work by Harold Borko and Myrna Bernick; LSI itself was introduced by Deerwester, Dumais and colleagues around 1990.
• Latent Semantic Indexing (LSI) is an indexing and retrieval method that uses a mathematical technique called singular value decomposition (SVD) to identify patterns in the relationships between the terms and concepts contained in an unstructured collection of text.
• LSI is based on the principle that words used in the same contexts tend to have similar meanings.
• Concept analysis/concept clustering

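
A toy example shows how SVD lets LSI connect synonyms that a Boolean search keeps apart. The Python sketch below (using NumPy) builds a term-by-document matrix for five hypothetical documents, keeps the top k = 2 singular values, and folds the one-word query “buy” into the resulting concept space. The document “purchase car” never contains “buy,” so a Boolean search misses it, but it scores high under LSI because “buy” and “purchase” co-occur with “car.” Real TAR engines use far larger vocabularies, term weighting and many more dimensions.

```python
import numpy as np

# Toy vocabulary and documents (hypothetical) illustrating synonymy: "buy" vs "purchase".
TERMS = ["buy", "purchase", "car", "cat", "pet"]
DOCS = ["buy car", "purchase car", "buy purchase car", "cat pet", "cat"]


def term_doc_matrix(docs, terms):
    """Raw term counts: rows are terms, columns are documents."""
    index = {t: i for i, t in enumerate(terms)}
    A = np.zeros((len(terms), len(docs)))
    for j, doc in enumerate(docs):
        for tok in doc.split():
            if tok in index:
                A[index[tok], j] += 1
    return A


def lsi(A, k):
    """Rank-k truncated SVD, A ~ U_k diag(s_k) Vt_k; column j of Vt_k is document j in concept space."""
    U, s, Vt = np.linalg.svd(A, full_matrices=False)
    return U[:, :k], s[:k], Vt[:k, :]


def fold_in(q, U_k, s_k):
    """Map a term vector into the same concept space: diag(s_k)^-1 U_k^T q."""
    return (U_k.T @ q) / s_k


def cosine(a, b):
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))


A = term_doc_matrix(DOCS, TERMS)
U_k, s_k, Vt_k = lsi(A, k=2)
query = fold_in(term_doc_matrix(["buy"], TERMS)[:, 0], U_k, s_k)
lsi_scores = [cosine(query, Vt_k[:, j]) for j in range(len(DOCS))]
boolean_hits = [j for j, doc in enumerate(DOCS) if "buy" in doc.split()]
```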

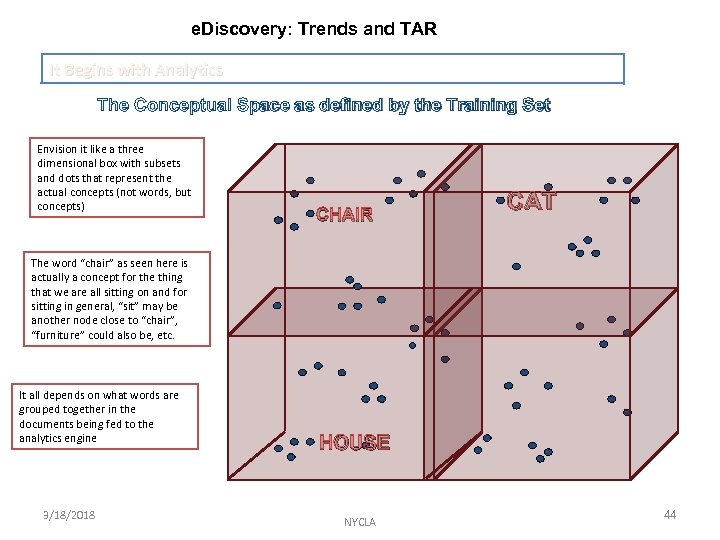

It Begins with Analytics
The conceptual space is defined by the training set. Envision it as a three-dimensional box containing subsets and dots that represent the actual concepts (not words, but concepts).
[Diagram: concept nodes CHAIR, CAT, HOUSE]
The word “chair” as shown here is actually a concept: the thing we all sit on, and sitting in general. “Sit” may be another node close to “chair,” and so could “furniture,” and so on. It all depends on which words are grouped together in the documents fed to the analytics engine.


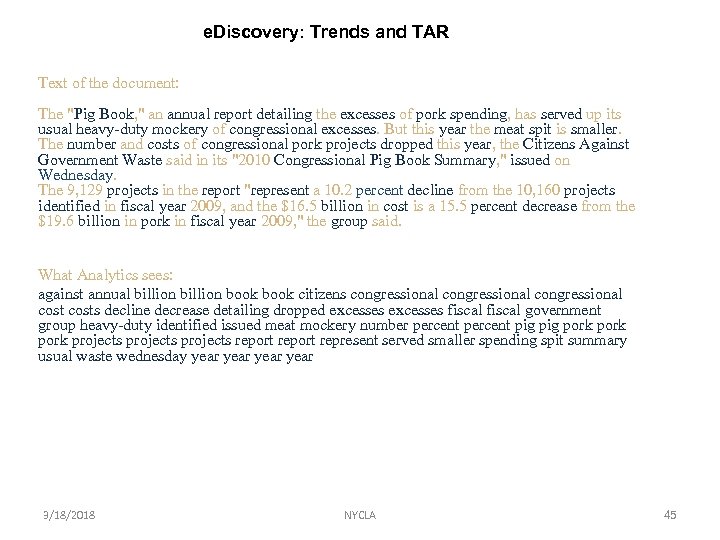

Text of the document:
The "Pig Book," an annual report detailing the excesses of pork spending, has served up its usual heavy-duty mockery of congressional excesses. But this year the meat spit is smaller. The number and costs of congressional pork projects dropped this year, the Citizens Against Government Waste said in its "2010 Congressional Pig Book Summary," issued on Wednesday. The 9,129 projects in the report "represent a 10.2 percent decline from the 10,160 projects identified in fiscal year 2009, and the $16.5 billion in cost is a 15.5 percent decrease from the $19.6 billion in pork in fiscal year 2009," the group said.
What Analytics sees:
against annual billion book citizens congressional costs decline decrease detailing dropped excesses fiscal government group heavy-duty identified issued meat mockery number percent pig pork projects report represent served smaller spending spit summary usual waste wednesday year

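
The “What Analytics sees” list is essentially a bag of words: lower-cased, de-duplicated terms with numbers and common stop words removed. This Python sketch produces that kind of list using a hypothetical stop-word list. Real analytics engines use their own tokenizers and stop lists, so the output will not match the slide word for word.

```python
import re

# Hypothetical stop-word list for this sketch; analytics engines ship their own, larger lists.
STOP_WORDS = {"a", "an", "and", "but", "from", "has", "in", "is", "it", "its",
              "of", "on", "said", "the", "this", "to", "up"}

# Runs of letters, allowing internal hyphens ("heavy-duty"); digits and "$" never match.
WORD = re.compile(r"[a-z]+(?:-[a-z]+)*")


def what_analytics_sees(text):
    """Sorted set of distinct index terms: lower-cased, numbers and stop words dropped."""
    return sorted({tok for tok in WORD.findall(text.lower()) if tok not in STOP_WORDS})
```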

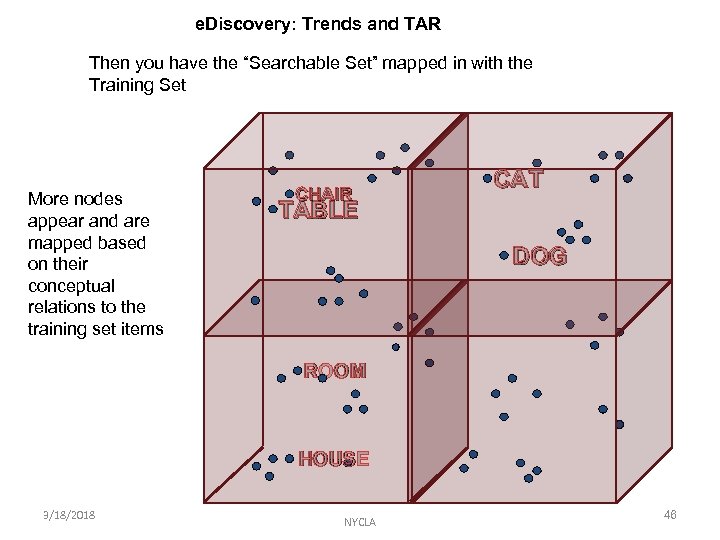

Then the “Searchable Set” is mapped in with the training set. More nodes appear, placed according to their conceptual relations to the training-set items.
[Diagram: concept nodes CHAIR, CAT, TABLE, DOG, ROOM, HOUSE]
