- Number of slides: 23

ESnet Update
ESnet/Internet2 Joint Techs
Madison, Wisconsin
July 17, 2007

Joe Burrescia
ESnet General Manager
Lawrence Berkeley National Laboratory

Outline
• ESnet’s Role in DOE’s Office of Science
• ESnet’s Continuing Evolutionary Dimensions
  o Capacity
  o Reach
  o Reliability
  o Guaranteed Services

DOE Office of Science and ESnet
• “The Office of Science is the single largest supporter of basic research in the physical sciences in the United States, … providing more than 40 percent of total funding … for the Nation’s research programs in high-energy physics, nuclear physics, and fusion energy sciences.” (http://www.science.doe.gov)
• The large-scale science that is the mission of the Office of Science depends on networks for
  o Sharing of massive amounts of data
  o Supporting thousands of collaborators world-wide
  o Distributed data processing
  o Distributed simulation, visualization, and computational steering
  o Distributed data management
• ESnet’s mission is to enable those aspects of science that depend on networking and on certain types of large-scale collaboration

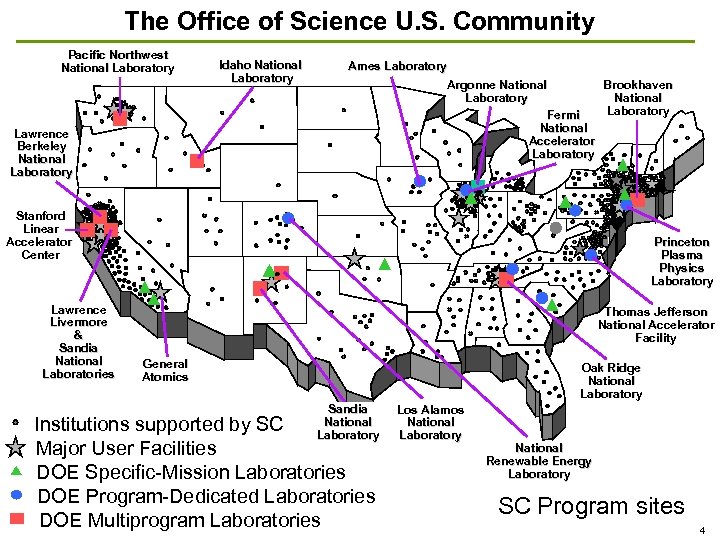

The Office of Science U.S. Community
Map labels:
• Pacific Northwest National Laboratory
• Idaho National Laboratory
• Ames Laboratory
• Argonne National Laboratory
• Brookhaven National Laboratory
• Fermi National Accelerator Laboratory
• Lawrence Berkeley National Laboratory
• Stanford Linear Accelerator Center
• Lawrence Livermore & Sandia National Laboratories
• Princeton Plasma Physics Laboratory
• Thomas Jefferson National Accelerator Facility
• General Atomics
• Oak Ridge National Laboratory
• Sandia National Laboratory
• Los Alamos National Laboratory
• National Renewable Energy Laboratory
Legend: Institutions supported by SC; Major User Facilities; DOE Specific-Mission Laboratories; DOE Program-Dedicated Laboratories; DOE Multiprogram Laboratories; SC Program sites

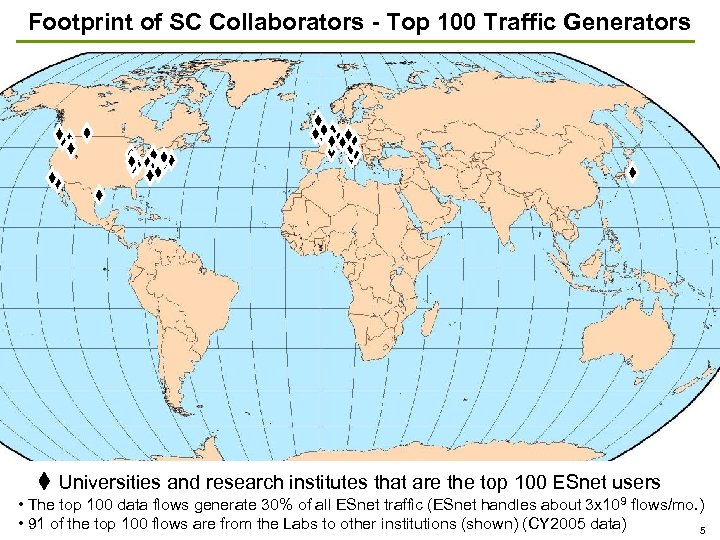

Footprint of SC Collaborators - Top 100 Traffic Generators
Universities and research institutes that are the top 100 ESnet users
• The top 100 data flows generate 30% of all ESnet traffic (ESnet handles about 3 x 10^9 flows/mo.)
• 91 of the top 100 flows are from the Labs to other institutions (shown) (CY 2005 data)

Changing Science Environment: New Demands on Network
• Increased capacity
  o Needed to accommodate a large and steadily increasing amount of data that must traverse the network
• High-speed, highly reliable connectivity between Labs and US and international R&E institutions
  o To support the inherently collaborative, global nature of large-scale science
• High network reliability
  o For interconnecting components of distributed large-scale science
• New network services to provide bandwidth guarantees
  o For data transfer deadlines for remote data analysis, real-time interaction with instruments, coupled computational simulations, etc.

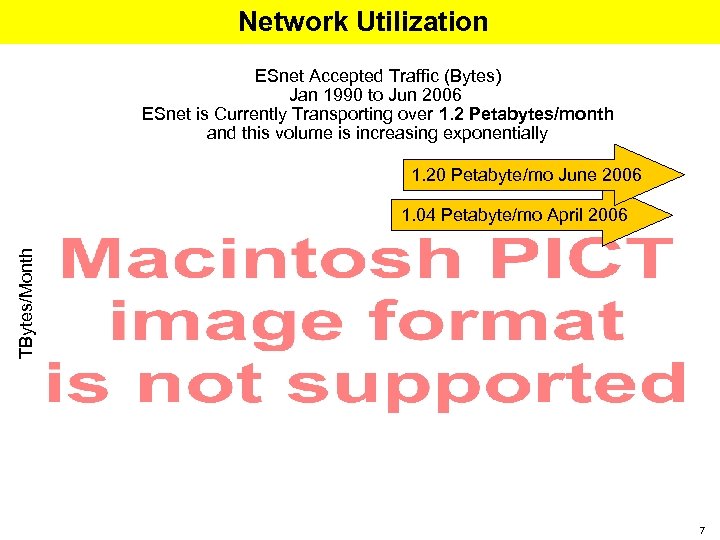

Network Utilization
ESnet Accepted Traffic (Bytes), Jan 1990 to Jun 2006
ESnet is currently transporting over 1.2 Petabytes/month, and this volume is increasing exponentially
Chart (y-axis: TBytes/Month), annotated points:
• 1.04 Petabyte/mo, April 2006
• 1.20 Petabyte/mo, June 2006
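To put the monthly volumes in link-speed terms, the following is a minimal sketch that converts a monthly byte count into an average bit rate. It assumes decimal units (1 Petabyte = 10^15 bytes) and 30-day months for both April and June; the function name is illustrative and not from the slides.

```python
def average_rate_gbps(bytes_per_month: float, days_in_month: int) -> float:
    """Average bit rate in Gb/s implied by a monthly byte count."""
    seconds = days_in_month * 24 * 60 * 60
    return bytes_per_month * 8 / seconds / 1e9


# Monthly volumes quoted on the slide (assumed decimal petabytes).
june_2006 = average_rate_gbps(1.20e15, 30)
april_2006 = average_rate_gbps(1.04e15, 30)

print(f"April 2006: {april_2006:.2f} Gb/s average")
print(f"June 2006:  {june_2006:.2f} Gb/s average")
```

Under these assumptions, 1.2 Petabytes/month works out to roughly 3.7 Gb/s averaged over the whole month; peak rates on individual links are a separate question that this arithmetic does not address.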

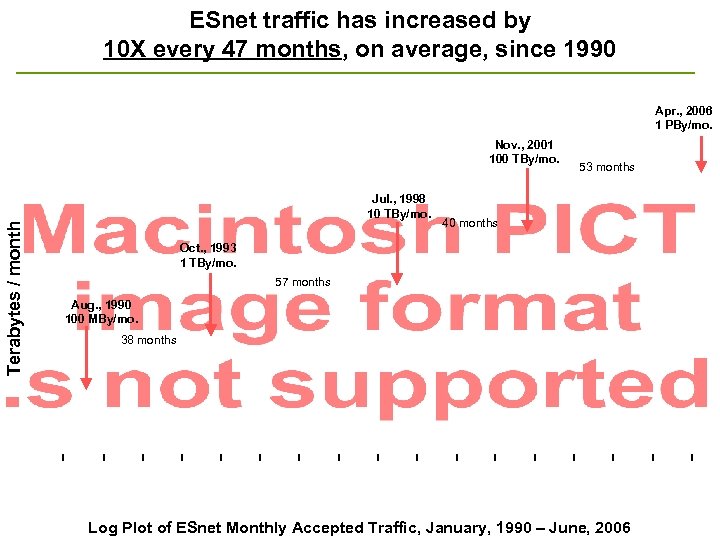

ESnet traffic has increased by 10X every 47 months, on average, since 1990
Log Plot of ESnet Monthly Accepted Traffic, January 1990 to June 2006 (y-axis: Terabytes / month)
• Aug. 1990: 100 MBy/mo.
• Oct. 1993: 1 TBy/mo. (38 months later)
• Jul. 1998: 10 TBy/mo. (57 months later)
• Nov. 2001: 100 TBy/mo. (40 months later)
• Apr. 2006: 1 PBy/mo. (53 months later)
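The 47-month figure is the mean of the four intervals marked on the plot. A short sketch of that arithmetic follows, together with two derived quantities (the implied yearly growth factor and doubling time) that are not stated on the slide and assume steady exponential growth.

```python
import math

# Months between successive 10X milestones, as marked on the plot.
intervals_months = [38, 57, 40, 53]

mean_interval = sum(intervals_months) / len(intervals_months)

# Derived under the assumption of steady exponential growth:
annual_factor = 10 ** (12 / mean_interval)        # growth factor per year
doubling_months = mean_interval * math.log10(2)   # months to double

print(f"mean months per 10X: {mean_interval}")
print(f"implied annual growth factor: {annual_factor:.2f}")
print(f"implied doubling time: {doubling_months:.1f} months")
```

On these assumptions traffic grows by about 1.8X per year, or doubles roughly every 14 months.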

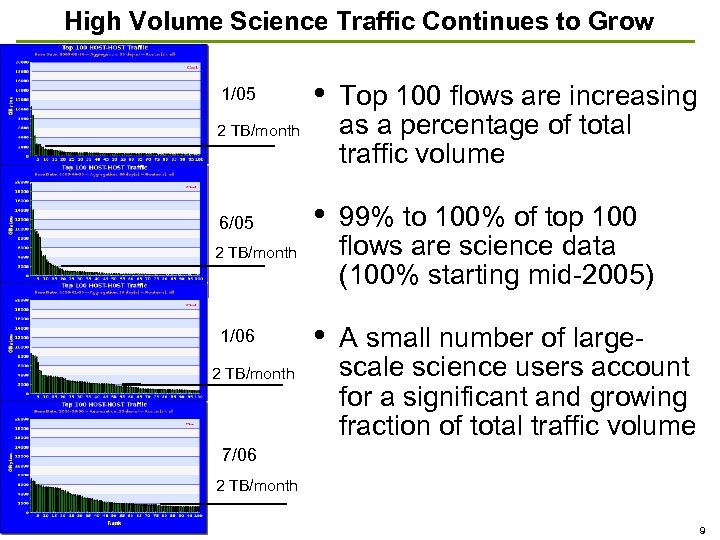

High Volume Science Traffic Continues to Grow
• Top 100 flows are increasing as a percentage of total traffic volume
• 99% to 100% of top 100 flows are science data (100% starting mid-2005)
• A small number of large-scale science users account for a significant and growing fraction of total traffic volume
Chart panels: 1/05, 6/05, 1/06, 7/06 (each labeled 2 TB/month)

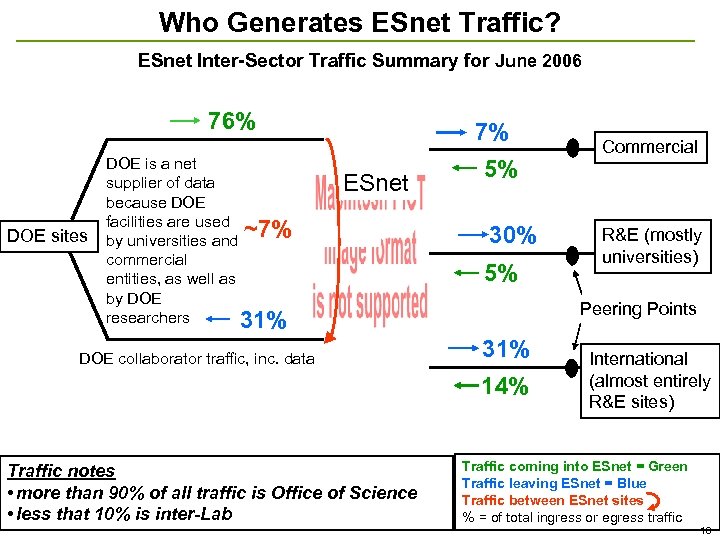

Who Generates ESnet Traffic?
ESnet Inter-Sector Traffic Summary for June 2006
DOE is a net supplier of data because DOE facilities are used by universities and commercial entities, as well as by DOE researchers
Traffic notes
• more than 90% of all traffic is Office of Science
• less than 10% is inter-Lab
Diagram sectors: DOE sites; ESnet; R&E (mostly universities); Peering Points; Commercial; International (almost entirely R&E sites); DOE collaborator traffic, inc. data
Diagram percentage labels (in extraction order): 76%, ~7%, 31%, 7%, 5%, 30%, 5%, 31%, 14%
Legend: Traffic coming into ESnet = Green; Traffic leaving ESnet = Blue; Traffic between ESnet sites; % = of total ingress or egress traffic

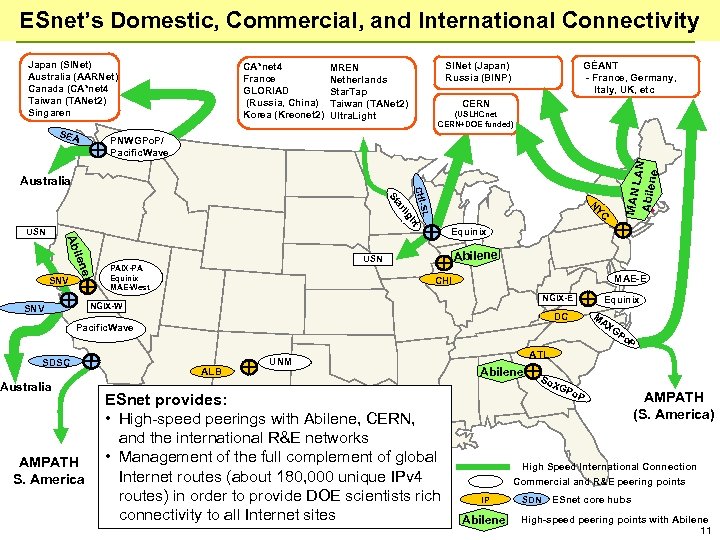

ESnet’s Domestic, Commercial, and International Connectivity
ESnet provides:
• High-speed peerings with Abilene, CERN, and the international R&E networks
• Management of the full complement of global Internet routes (about 180,000 unique IPv4 routes) in order to provide DOE scientists rich connectivity to all Internet sites
Map labels, international and R&E networks: Japan (SINet); Australia (AARNet); Canada (CA*net4); Taiwan (TANet2); Singaren; France; GLORIAD (Russia, China); Korea (Kreonet2); GÉANT (France, Germany, Italy, UK, etc.); Russia (BINP); MREN; Netherlands; StarTap; UltraLight; CERN (USLHCnet, CERN+DOE funded); AMPATH (S. America); Abilene; USN
Map labels, hubs and peering points: SEA; SNV; CHI; NYC; DC; ALB; ATL; PNWGPoP/PacificWave; MAN LAN; CHI-SL; Starlight; Equinix; PAIX-PA; MAE-West; MAE-E; NGIX-E; NGIX-W; MAXGPoP; SoXGPoP; SDSC; UNM
Legend: High Speed International Connection; Commercial and R&E peering points; ESnet core hubs (IP, SDN); High-speed peering points with Abilene

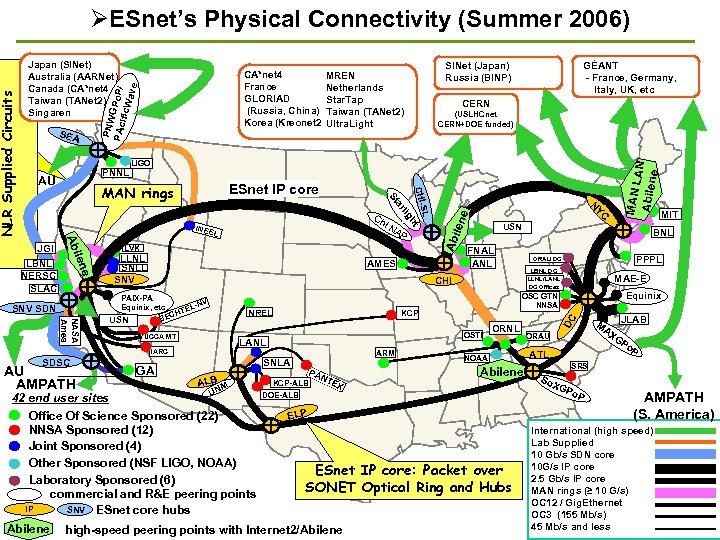

ESnet’s Physical Connectivity (Summer 2006)
ESnet IP core: Packet over SONET Optical Ring and Hubs
42 end user sites:
• Office of Science Sponsored (22)
• NNSA Sponsored (12)
• Joint Sponsored (4)
• Other Sponsored (NSF LIGO, NOAA)
• Laboratory Sponsored (6)
Map labels, sites: PNNL; LIGO; INEEL; JGI; LBNL; NERSC; SLAC; LLNL; SNLL; LVK; NASA Ames; SDSC; GA; YUCCA MT; Bechtel-NV; NREL; LANL; SNLA; DOE-ALB; KCP; Pantex; ARM; AMES; FNAL; ANL; IARC; ORNL; OSTI; ORAU; NOAA; SRS; JLAB; PPPL; BNL; MIT; OSC; GTN; NNSA; DC Offices
Map labels, international and R&E networks: Japan (SINet); Australia (AARNet); Canada (CA*net4); Taiwan (TANet2); Singaren; France; GLORIAD (Russia, China); Korea (Kreonet2); GÉANT (France, Germany, Italy, UK, etc.); Russia (BINP); MREN; Netherlands; StarTap; UltraLight; CERN (USLHCnet, CERN+DOE funded); AMPATH (S. America); Abilene; USN
Legend, nodes: commercial and R&E peering points; ESnet core hubs (IP, SDN); high-speed peering points with Internet2/Abilene
Legend, links: International (high speed); 10 Gb/s SDN core; 10 Gb/s IP core; 2.5 Gb/s IP core; MAN rings (≥ 10 Gb/s); Lab supplied links; NLR supplied circuits; OC12 / GigEthernet; OC3 (155 Mb/s); 45 Mb/s and less

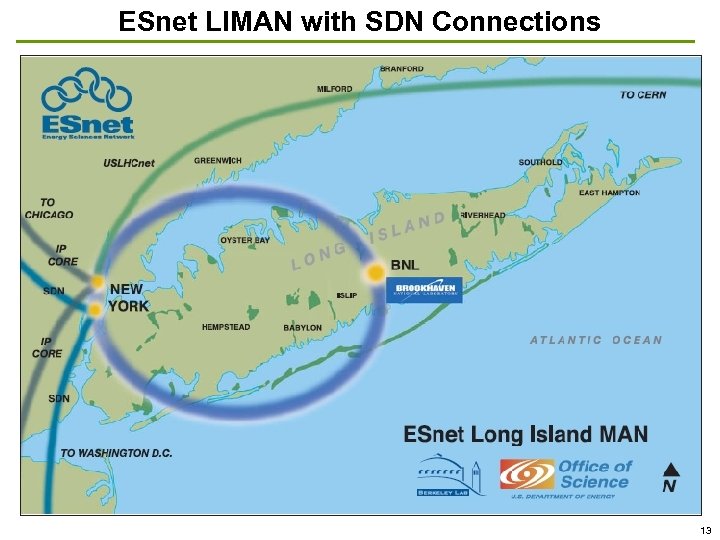

ESnet LIMAN with SDN Connections

LIMAN and BNL
• ATLAS (A Toroidal LHC ApparatuS) is one of four detectors at the Large Hadron Collider (LHC) at CERN
• BNL is the largest of the ATLAS Tier 1 centers and the only one in the U.S., and so is responsible for archiving and processing approximately 20 percent of the ATLAS raw data
• During a recent multi-week exercise, BNL was able to sustain an average transfer rate from CERN to their disk arrays of 191 MB/s (~1.5 Gb/s) compared to a target rate of 200 MB/s
  o This was in addition to “normal” BNL site traffic
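The unit conversion behind the quoted BNL figures can be checked with a few lines. This is a minimal sketch assuming decimal units (1 MB = 10^6 bytes, 1 Gb = 10^9 bits); the helper name is illustrative.

```python
def mbytes_per_s_to_gbits_per_s(mb_per_s: float) -> float:
    """Convert megabytes per second to gigabits per second (decimal units)."""
    return mb_per_s * 8 / 1000


achieved_mb_s = 191  # sustained average rate from the slide
target_mb_s = 200    # target rate from the slide

achieved_gbps = mbytes_per_s_to_gbits_per_s(achieved_mb_s)
target_gbps = mbytes_per_s_to_gbits_per_s(target_mb_s)
fraction_of_target = achieved_mb_s / target_mb_s

print(f"achieved: {achieved_gbps:.2f} Gb/s, target: {target_gbps:.2f} Gb/s")
print(f"fraction of target: {fraction_of_target:.1%}")
```

With these units, 191 MB/s is about 1.5 Gb/s, and the exercise reached a little over 95% of the 200 MB/s target.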

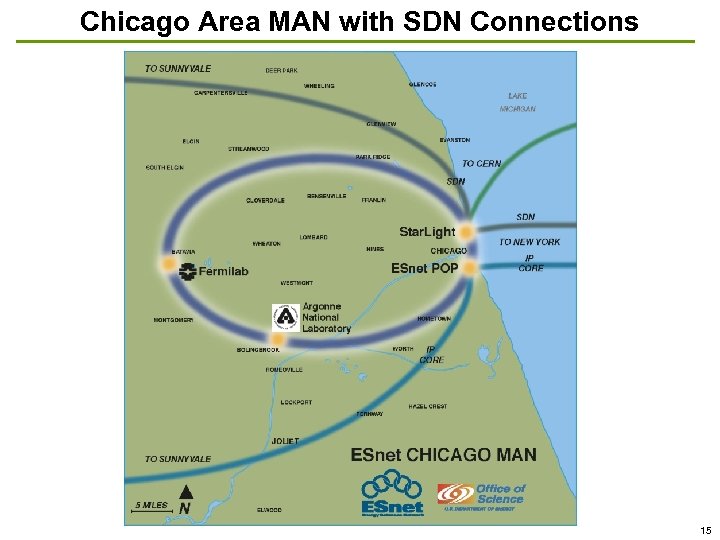

Chicago Area MAN with SDN Connections

CHIMAN: FNAL and ANL
• Fermi National Accelerator Laboratory (FNAL) is the only US Tier 1 center for the Compact Muon Solenoid (CMS) experiment at LHC
• Argonne National Laboratory (ANL) will house a 5-teraflop IBM BlueGene computer, part of the National Leadership Computing Facility
• Together with ESnet, FNAL and ANL will build the Chicago MAN (CHIMAN) to accommodate the vast amounts of data these facilities will generate and receive
  o Five 10 GE circuits will go into FNAL
  o Three 10 GE circuits will go into ANL
  o Ring connectivity to StarLight and to the Chicago ESnet POP

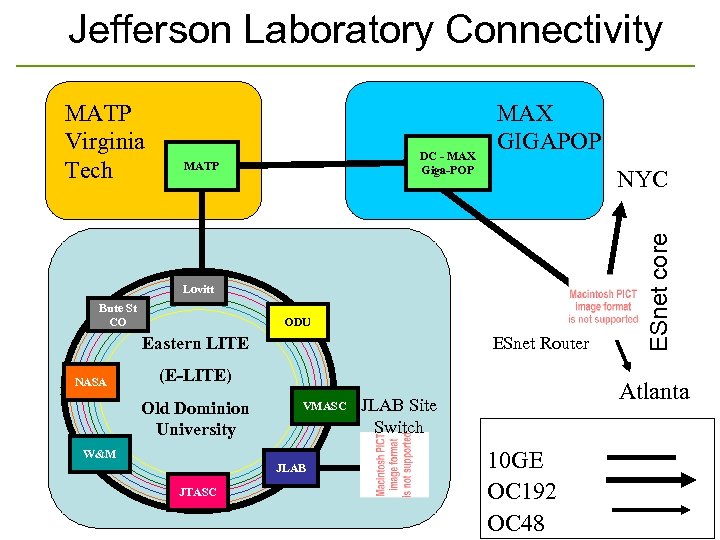

Jefferson Laboratory Connectivity
Diagram labels: DC - MAX Giga-POP; MATP; NYC; Atlanta; ESnet core; ESnet Router; Eastern LITE (E-LITE); Lovitt; Bute St CO; Old Dominion University (ODU); VMASC; W&M; NASA; JTASC; Virginia Tech; JLAB; JLAB Site Switch
Link legend: 10 GE; OC192; OC48

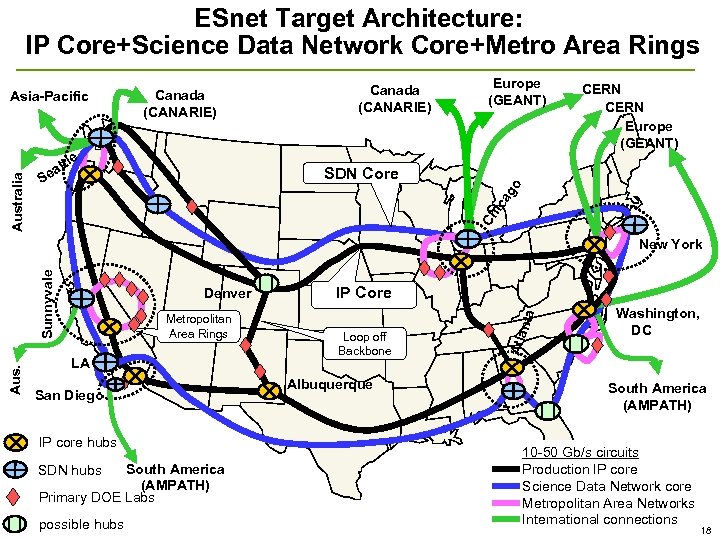

ESnet Target Architecture: IP Core + Science Data Network Core + Metro Area Rings
Map labels, hubs: Seattle; Sunnyvale; LA; San Diego; Denver; Albuquerque; Chicago; Atlanta; New York; Washington, DC
Map labels, international: Asia-Pacific; Australia; Canada (CANARIE); Europe (GEANT); CERN; South America (AMPATH)
Map labels, other: SDN Core; IP Core; Metropolitan Area Rings; Loop off Backbone
Legend: IP core hubs; SDN hubs; possible hubs; Primary DOE Labs; 10-50 Gb/s circuits; Production IP core; Science Data Network core; Metropolitan Area Networks; International connections

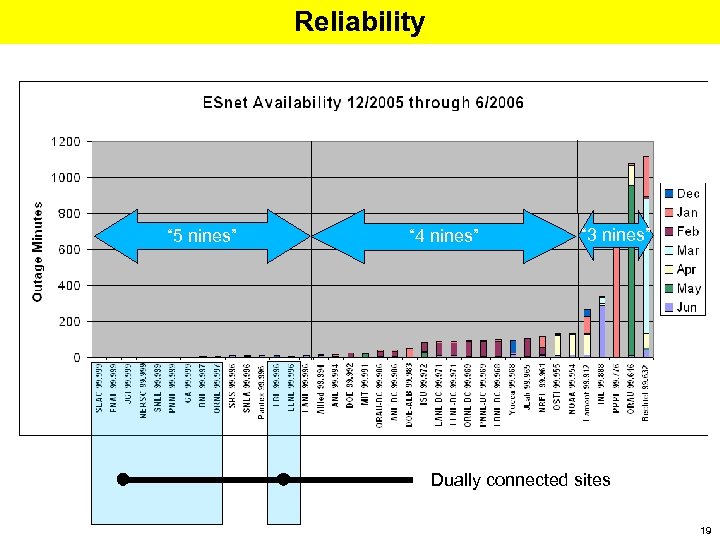

Reliability
Chart labels: “5 nines”; “4 nines”; “3 nines”; Dually connected sites
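The “nines” labels are the usual shorthand for availability (3 nines = 99.9%, 4 nines = 99.99%, 5 nines = 99.999%). As a rough sketch of what each level allows, assuming a 365-day year and treating all unavailability as downtime (the function name is illustrative):

```python
MINUTES_PER_YEAR = 365 * 24 * 60  # assumes a non-leap year


def downtime_minutes_per_year(nines: int) -> float:
    """Maximum yearly downtime for an availability of `nines` nines."""
    unavailability = 10 ** (-nines)  # e.g. 3 nines -> 0.001
    return unavailability * MINUTES_PER_YEAR


for n in (3, 4, 5):
    print(f"{n} nines: {downtime_minutes_per_year(n):.2f} minutes/year")
```

Under these assumptions, 3 nines allows about 8.8 hours of downtime per year, 4 nines about 53 minutes, and 5 nines a little over 5 minutes.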

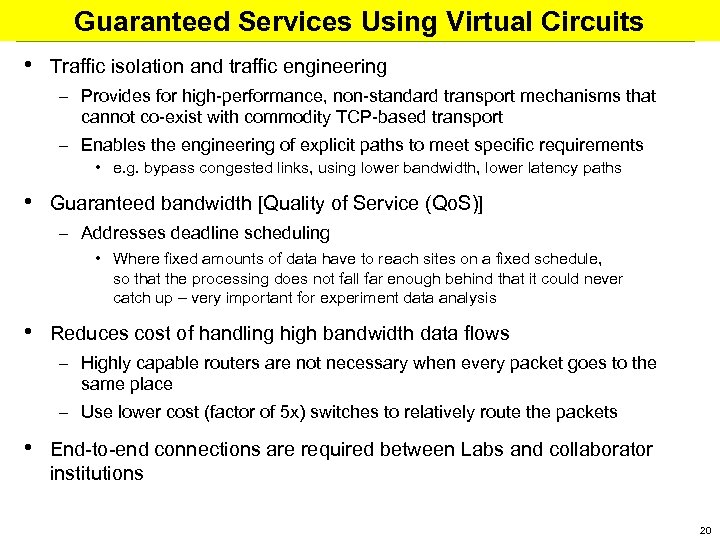

Guaranteed Services Using Virtual Circuits
• Traffic isolation and traffic engineering
  – Provides for high-performance, non-standard transport mechanisms that cannot co-exist with commodity TCP-based transport
  – Enables the engineering of explicit paths to meet specific requirements
    • e.g. bypass congested links, using lower bandwidth, lower latency paths
• Guaranteed bandwidth [Quality of Service (QoS)]
  – Addresses deadline scheduling
    • Where fixed amounts of data have to reach sites on a fixed schedule, so that the processing does not fall so far behind that it could never catch up; very important for experiment data analysis
• Reduces cost of handling high bandwidth data flows
  – Highly capable routers are not necessary when every packet goes to the same place
  – Use lower-cost (by a factor of 5x) switches to route the packets
• End-to-end connections are required between Labs and collaborator institutions
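The deadline-scheduling point can be made concrete with a small calculation. The sketch below is illustrative only: the data volumes and rates are hypothetical, decimal units are assumed (1 TB = 10^12 bytes), and the function names are not from the slides.

```python
def required_gbps(data_terabytes: float, deadline_hours: float) -> float:
    """Sustained rate in Gb/s needed to move a fixed volume by a deadline."""
    bits = data_terabytes * 1e12 * 8
    return bits / (deadline_hours * 3600) / 1e9


def backlog_tb(days: int, produced_tb_per_day: float,
               transferred_tb_per_day: float) -> float:
    """Untransferred data after `days` when production outpaces transfer."""
    return max(0.0, (produced_tb_per_day - transferred_tb_per_day) * days)


# Hypothetical example: 10 TB must arrive within 24 hours.
print(f"required rate: {required_gbps(10, 24):.3f} Gb/s")

# Hypothetical example: an instrument produces 10 TB/day but only
# 8 TB/day gets through, so the backlog grows by 2 TB every day.
print(f"backlog after a week: {backlog_tb(7, 10, 8)} TB")
```

The second function shows why a bandwidth guarantee matters here: if the achieved rate stays below the production rate, the backlog grows without bound, which is the "never catch up" case the slide describes.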

OSCARS: Guaranteed Bandwidth VC Service For SC Science
• ESnet On-demand Secured Circuits and Advanced Reservation System (OSCARS)
• To ensure compatibility, the design and implementation is done in collaboration with the other major science R&E networks and end sites
  o Internet2: Bandwidth Reservation for User Work (BRUW) - Development of common code base
  o GEANT: Bandwidth on Demand (GN2-JRA3), Performance and Allocated Capacity for End-users (SA3-PACE) and Advance Multi-domain Provisioning System (AMPS) - Extends to NRENs
  o BNL: TeraPaths - A QoS Enabled Collaborative Data Sharing Infrastructure for Petascale Computing Research
  o GA: Network Quality of Service for Magnetic Fusion Research
  o SLAC: Internet End-to-end Performance Monitoring (IEPM)
  o USN: Experimental Ultra-Scale Network Testbed for Large-Scale Science
• In its current phase this effort is being funded as a research project by the Office of Science, Mathematical, Information, and Computational Sciences (MICS) Network R&D Program
• A prototype service has been deployed as a proof of concept
  o To date more than 20 accounts have been created for beta users, collaborators, and developers
  o More than 100 reservation requests have been processed
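The slides do not describe how OSCARS decides whether to accept a reservation. As a generic illustration of what an advance bandwidth reservation check involves, here is a hypothetical sketch for a single link: a request is admitted only if, at every instant of its time window, already reserved bandwidth plus the request fits within the link capacity. The class, function names, time units, and numbers are all assumptions and are not the OSCARS API or algorithm.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Reservation:
    start: int  # start time in minutes from an arbitrary origin (assumed unit)
    end: int    # end time in minutes, exclusive
    mbps: int   # reserved bandwidth in Mb/s


def peak_committed(existing: List[Reservation], start: int, end: int) -> int:
    """Highest total reserved bandwidth at any instant in [start, end)."""
    # Reserved load only rises at reservation start times, so it is enough
    # to sample the window start and every start time inside the window.
    points = {start} | {r.start for r in existing if start <= r.start < end}
    return max(
        sum(r.mbps for r in existing if r.start <= t < r.end) for t in points
    )


def admit(existing: List[Reservation], request: Reservation,
          capacity_mbps: int) -> bool:
    """Accept the request only if it never pushes the link over capacity."""
    peak = peak_committed(existing, request.start, request.end)
    return peak + request.mbps <= capacity_mbps


# Hypothetical 10 Gb/s link with two reservations already in place.
booked = [Reservation(0, 60, 4000), Reservation(30, 90, 5000)]

print(admit(booked, Reservation(45, 120, 2000), 10_000))  # overlaps both
print(admit(booked, Reservation(60, 120, 2000), 10_000))  # overlaps one
```

A real multi-domain service would also have to pick a path, authenticate the requester, and coordinate with neighboring networks; this sketch covers only the per-link capacity check.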

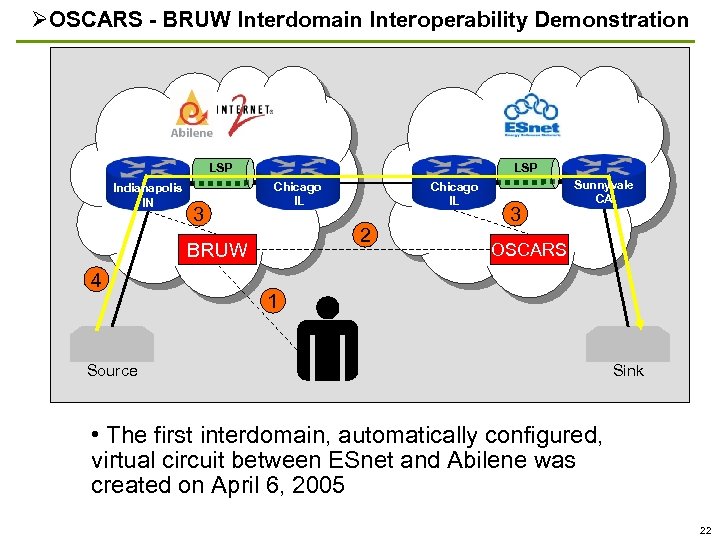

OSCARS - BRUW Interdomain Interoperability Demonstration
• The first interdomain, automatically configured virtual circuit between ESnet and Abilene was created on April 6, 2005
Diagram labels: Source; Sink; OSCARS; BRUW; Sunnyvale CA; Chicago IL; Indianapolis IN; LSP (two segments); numbered markers 1 to 4

A Few URLs
• ESnet Home Page
  o http://www.es.net
• National Labs and User Facilities
  o http://www.sc.doe.gov/sub/organization.htm
• ESnet Availability Reports
  o http://calendar.es.net/
• OSCARS Documentation
  o http://www.es.net/oscars/index.html
