be8eb50793c33d6d016a9547eb54d673.ppt

- Number of slides: 46

End-to-end Bandwidth Estimation in the Wide Internet

Daniele Croce, Ph.D. dissertation, April 16, 2010

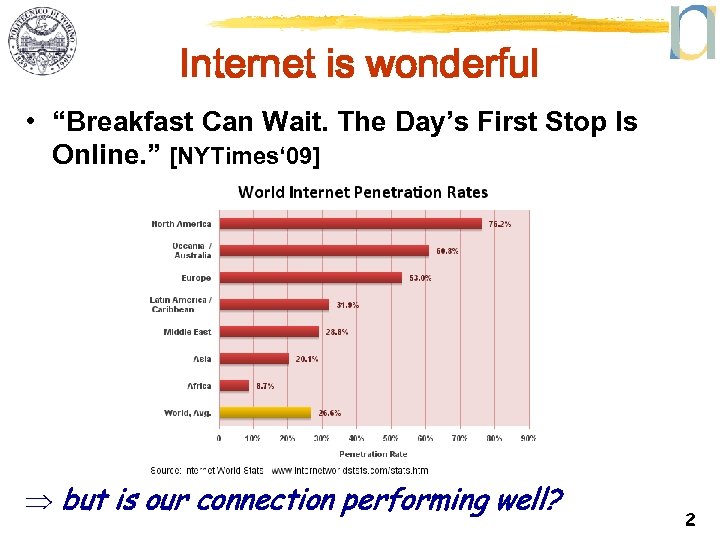

Internet is wonderful

- “Breakfast Can Wait. The Day’s First Stop Is Online.” [NYTimes ’09]

⇒ But is our connection performing well?

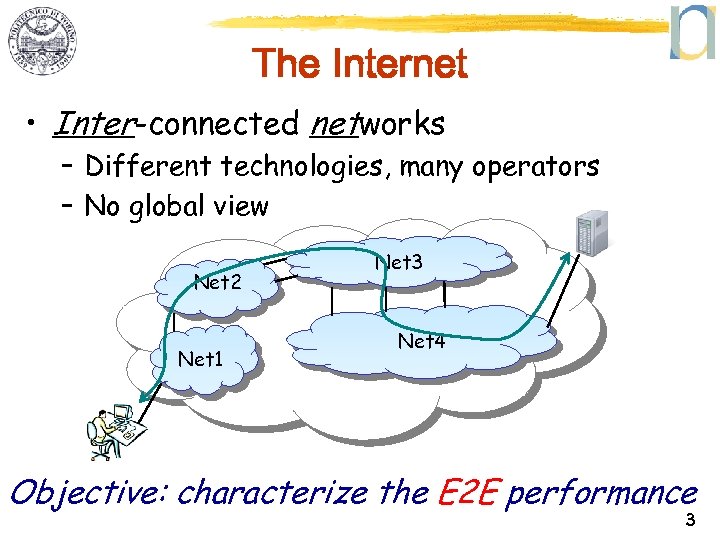

The Internet

- Inter-connected networks
  - Different technologies, many operators
  - No global view

(Diagram: four interconnected networks, Net 1 to Net 4)

Objective: characterize the end-to-end (E2E) performance

Performance metrics

- Simple metrics
  - Packet loss
  - Delay (one-way, RTT), jitter
  - (TCP) throughput
- Advanced metrics
  - End-to-end capacity: $C = \min_i C_i$
  - End-to-end available bandwidth (AB), i.e., the unused capacity: $A = \min_i A_i$

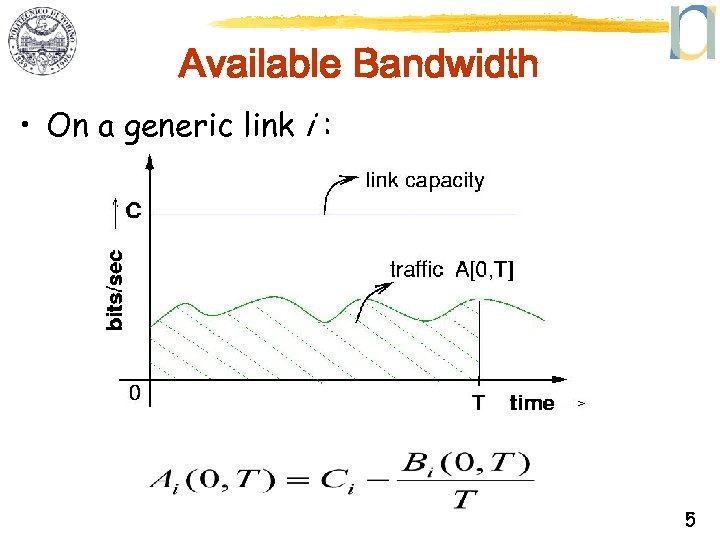

Available Bandwidth

- On a generic link $i$:

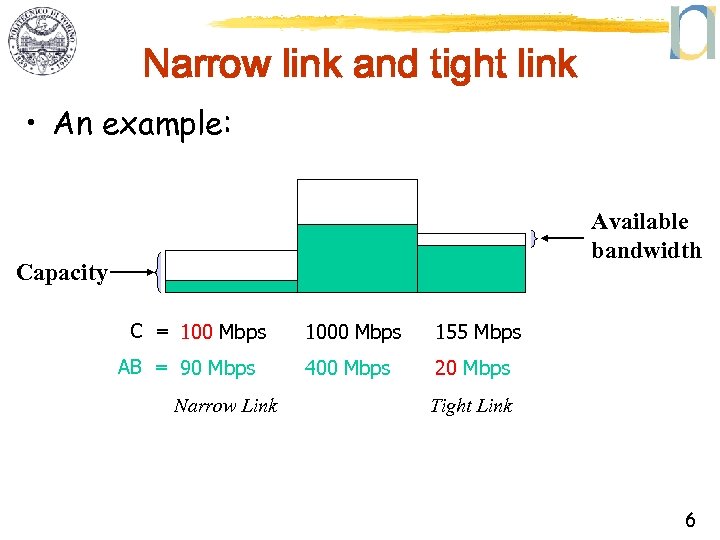
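
The formula for this slide is only available in the image. As a reminder, the definition below is the one commonly used in the bandwidth-estimation literature; the symbol $u_i$ (average utilization of link $i$ over the measurement interval) is an assumption and is not taken from the slides.

$$
A_i = C_i \,(1 - u_i), \qquad 0 \le u_i \le 1,
\qquad\text{so that}\qquad
A = \min_i A_i = \min_i \, C_i \,(1 - u_i).
$$

Under this reading, a fully idle link ($u_i = 0$) offers its whole capacity, while a saturated link ($u_i = 1$) offers none.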

Narrow link and tight link

- An example (figure legend: capacity, available bandwidth; figure labels: C = 100 Mbps, AB = 90 Mbps, narrow link, 1000 Mbps, 155 Mbps, 400 Mbps, 20 Mbps, tight link)

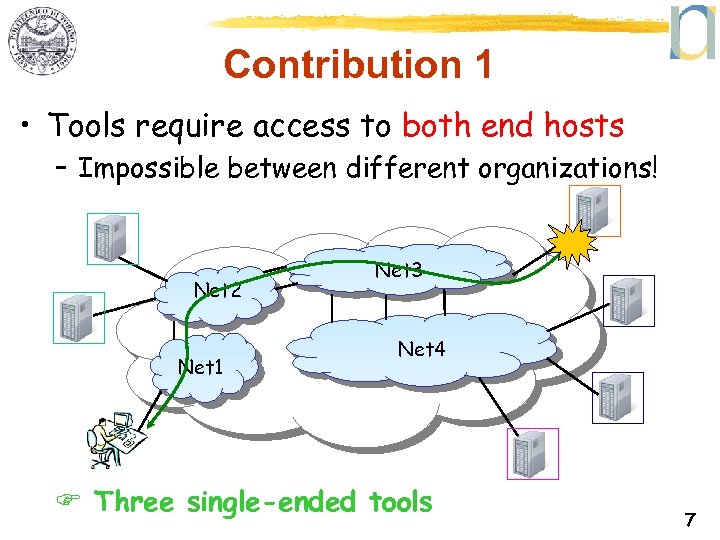
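
The difference between the narrow link (smallest capacity) and the tight link (smallest available bandwidth) can be sketched in a few lines of Python. The `Link` class, the hop names, and the pairing of capacities with available bandwidths are assumptions made for illustration; they are loosely based on the figure labels and may not match the figure exactly.

```python
from dataclasses import dataclass


@dataclass
class Link:
    name: str
    capacity_mbps: float
    available_mbps: float


def narrow_link(path):
    """Return the link with the smallest capacity (it sets C = min(C_i))."""
    return min(path, key=lambda link: link.capacity_mbps)


def tight_link(path):
    """Return the link with the smallest available bandwidth (it sets A = min(A_i))."""
    return min(path, key=lambda link: link.available_mbps)


# Hypothetical three-hop path; the capacity/AB pairing is an assumption.
path = [
    Link("hop-1", capacity_mbps=100.0, available_mbps=90.0),
    Link("hop-2", capacity_mbps=1000.0, available_mbps=400.0),
    Link("hop-3", capacity_mbps=155.0, available_mbps=20.0),
]

narrow = narrow_link(path)
tight = tight_link(path)
print(f"narrow link: {narrow.name}, C = {narrow.capacity_mbps} Mbps")
print(f"tight link:  {tight.name}, A = {tight.available_mbps} Mbps")
```

In this made-up path the two links differ: the end-to-end capacity is set by one hop, the end-to-end available bandwidth by another.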

Contribution 1

- Tools require access to both end hosts
  - Impossible between different organizations!

(Diagram: networks Net 1 to Net 4)

☞ Three single-ended tools

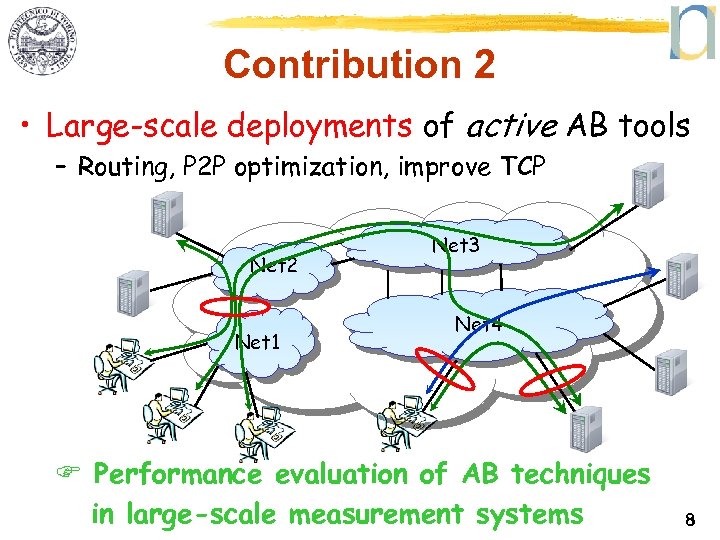

Contribution 2

- Large-scale deployments of active AB tools
  - Routing, P2P optimization, TCP improvements

(Diagram: networks Net 1 to Net 4)

☞ Performance evaluation of AB techniques in large-scale measurement systems

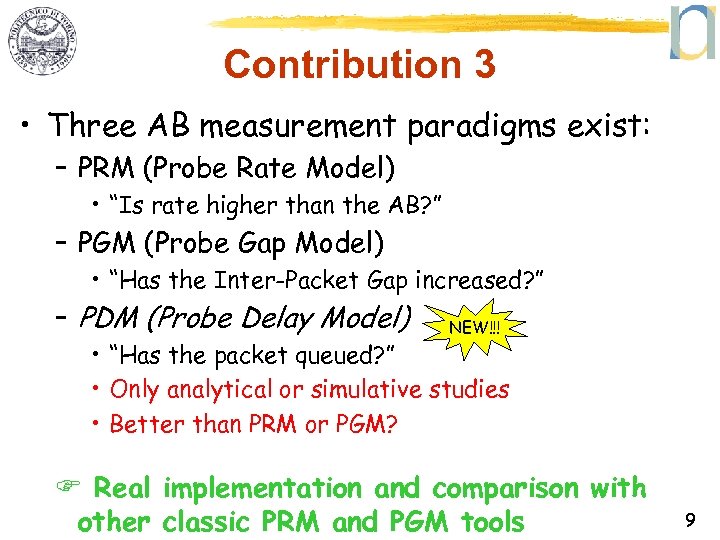

Contribution 3

- Three AB measurement paradigms exist:
  - PRM (Probe Rate Model)
    - “Is the rate higher than the AB?”
  - PGM (Probe Gap Model)
    - “Has the inter-packet gap increased?”
  - PDM (Probe Delay Model), NEW!
    - “Has the packet queued?”
    - Only analytical or simulation-based studies
    - Better than PRM or PGM?

☞ Real implementation and comparison with other classic PRM and PGM tools

SINGLE-ENDED TECHNIQUES

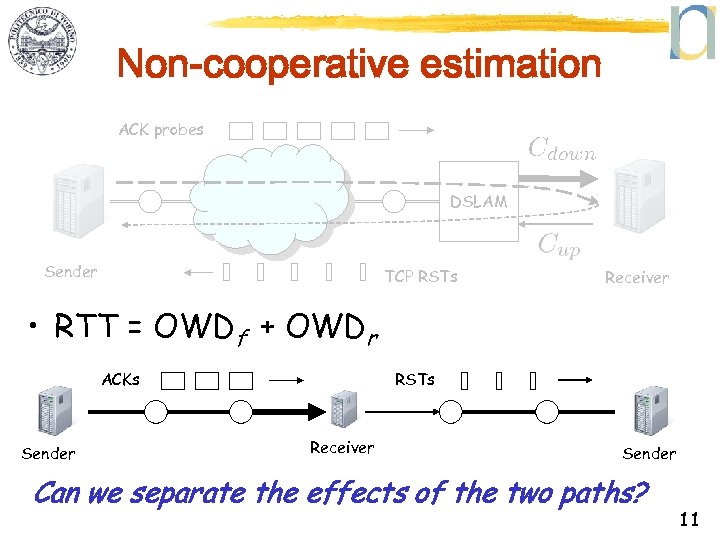

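Non-cooperative estimation

(Diagram: the sender sends TCP ACK probes through the DSLAM to the receiver, which answers with TCP RSTs)

- RTT = OWD_f + OWD_r (the forward path carries the ACKs, the reverse path carries the RSTs)

Can we separate the effects of the two paths?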

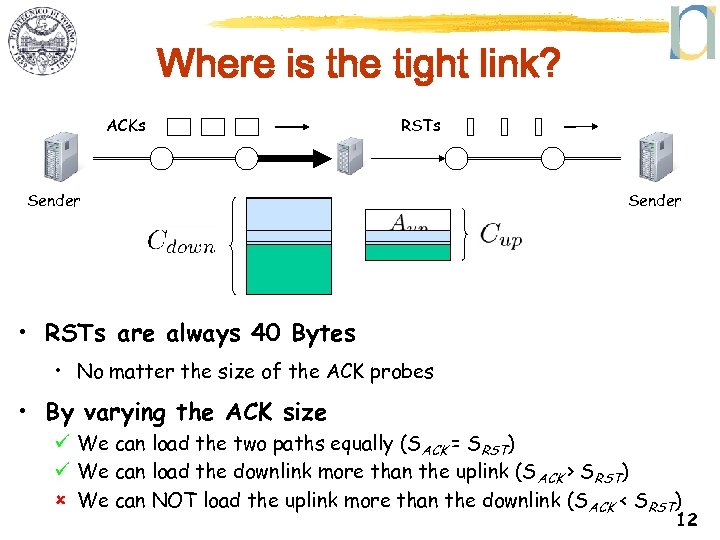

Where is the tight link?

(Diagram: ACKs from the sender, RSTs back to the sender)

- RSTs are always 40 bytes, regardless of the size of the ACK probes
- By varying the ACK size:
  - ✓ We can load the two paths equally (S_ACK = S_RST)
  - ✓ We can load the downlink more than the uplink (S_ACK > S_RST)
  - ✗ We can NOT load the uplink more than the downlink (S_ACK < S_RST)

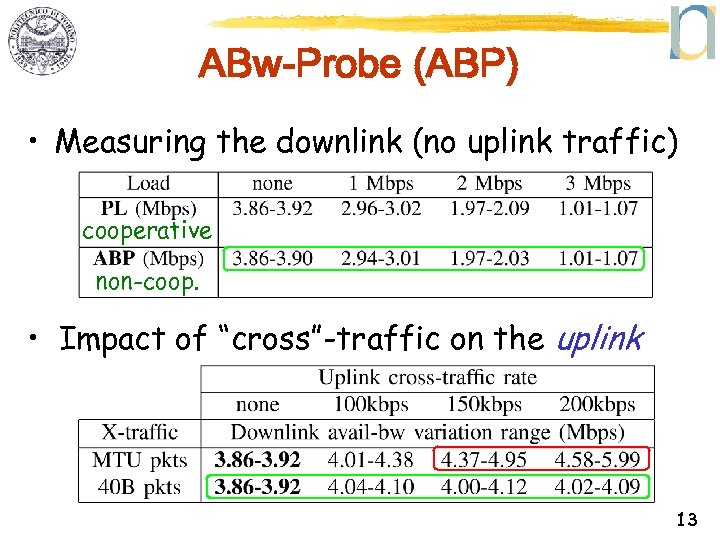
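
The asymmetry above can be made concrete with a small calculation. This is a minimal sketch, assuming that every ACK probe triggers exactly one 40-byte RST and that an ACK cannot be smaller than 40 bytes (IP plus TCP headers without options); the function name and parameters are hypothetical.

```python
RST_SIZE_BYTES = 40  # from the slide: RSTs are always 40 bytes


def path_loads_bps(ack_size_bytes, probes_per_second):
    """Return (forward_load, reverse_load) in bit/s for a stream of ACK probes.

    Assumes one RST comes back for every ACK probe sent.
    """
    if ack_size_bytes < RST_SIZE_BYTES:
        # An ACK carries at least the IP and TCP headers, so it cannot be
        # smaller than the RST it triggers.
        raise ValueError("ACK probes cannot be smaller than 40 bytes")
    forward = ack_size_bytes * 8 * probes_per_second
    reverse = RST_SIZE_BYTES * 8 * probes_per_second
    return forward, reverse


# Equal load on both paths (S_ACK = S_RST).
print(path_loads_bps(40, 100))
# Downlink loaded far more than the uplink (S_ACK > S_RST).
print(path_loads_bps(1500, 100))
```

Because the reverse load depends only on the probe rate, the sender can raise the forward load independently by growing the ACKs, but never the other way around.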

ABw-Probe (ABP)

- Measuring the downlink (no uplink traffic); plot legend: cooperative, non-cooperative
- Impact of “cross”-traffic on the uplink

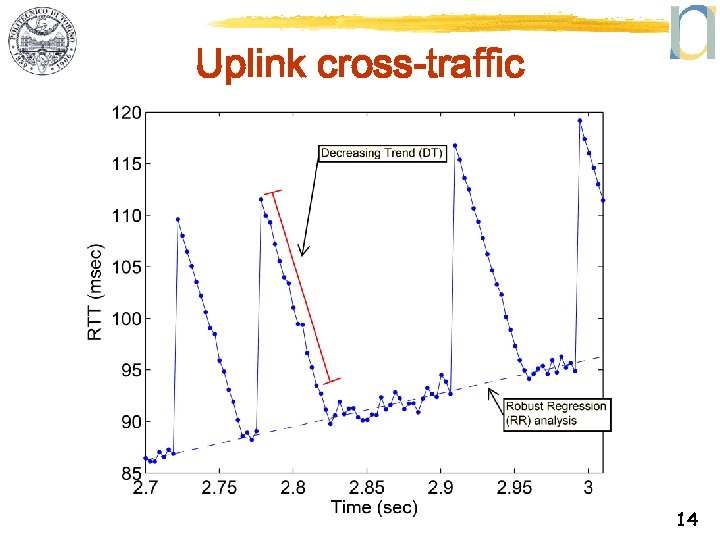

Uplink cross-traffic

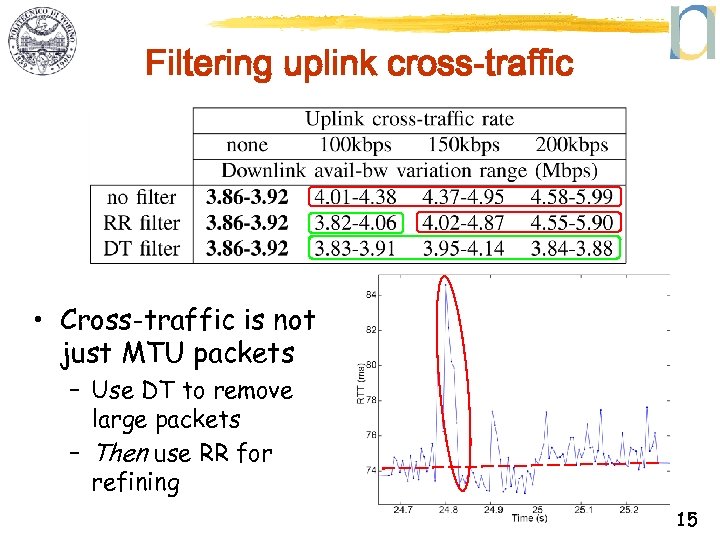

Filtering uplink cross-traffic

- Cross-traffic is not just MTU packets
  - Use DT to remove large packets
  - Then use RR for refining

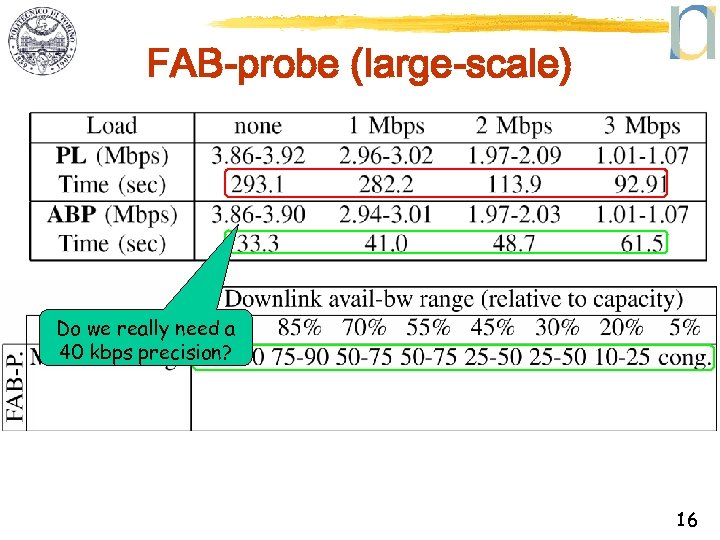

FAB-probe (large-scale)

Do we really need a 40 kbps precision?

Real-world experience

- Tested on 1244 ADSL hosts, 10 different ISPs
  - Participating in the Kademlia DHT (eMule)
    - Used the KAD crawler (ACM IMC 2007)
    - Selected ADSL hosts using MaxMind

1. Capacity of the ADSL link
2. A snapshot of the available bandwidth
3. Average AB over 10 days
   - 82 hosts online for over one month
   - Static IP address
   - Measured every 5 minutes
     - On average 6 seconds per measurement

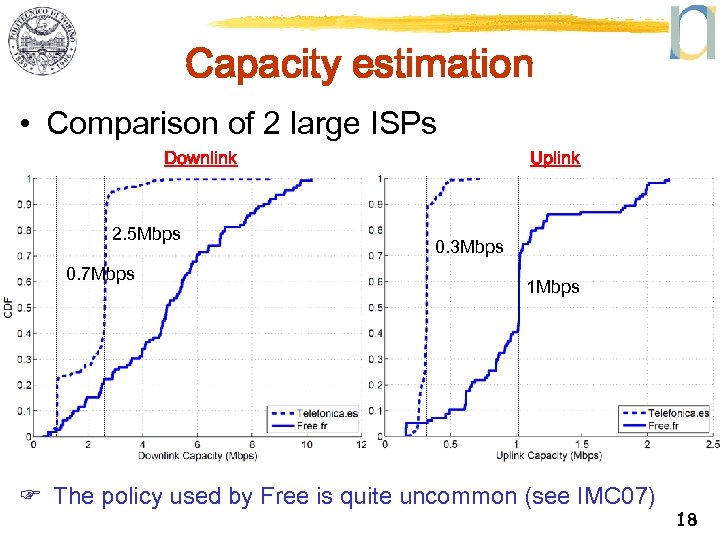

Capacity estimation

- Comparison of 2 large ISPs (plot annotations: downlink 2.5 Mbps and 0.7 Mbps; uplink 0.3 Mbps and 1 Mbps)

☞ The policy used by Free is quite uncommon (see IMC 07)

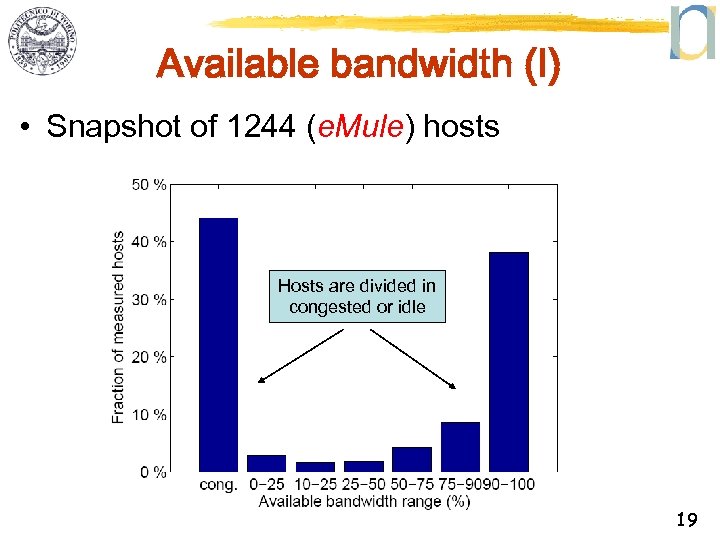

Available bandwidth (I)

- Snapshot of 1244 (eMule) hosts

Hosts are divided into congested and idle

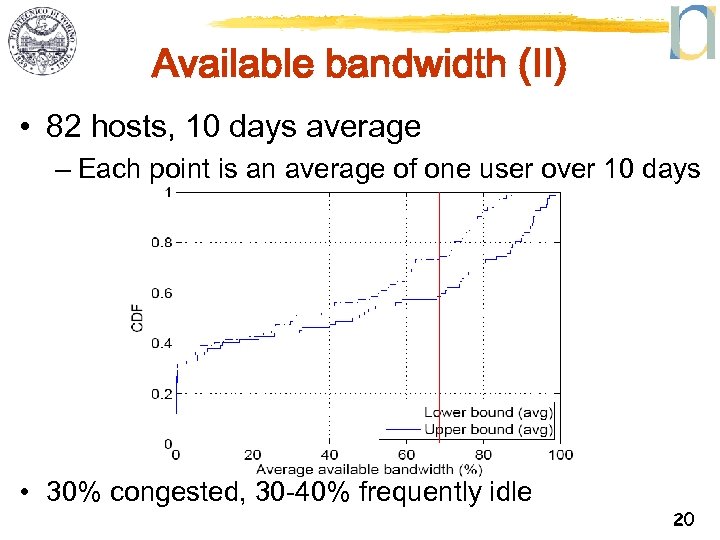

Available bandwidth (II)

- 82 hosts, 10-day average
  - Each point is the average of one user over 10 days
- 30% congested, 30-40% frequently idle

ANALYSIS OF LARGE-SCALE AB MEASUREMENT SYSTEMS

Motivation

- We have a dream: measure AB everywhere
  - Route selection, server selection
  - Overlay performance optimization
  - Improve TCP
  - ...
- Naïve approach:
  - Pick one of the existing techniques!
- BUT what if we all do the same simultaneously?

⇒ Interference between measurements

In brief

- Existing techniques
  - Pathload, Spruce, pathChirp
- Experimental testbed
  - All tools suffer from mutual interference
    - But not in the same way!
  - High intrusiveness and overhead
- Analytical models
  - Probability of interference
  - Measurement bias
- What can we do?

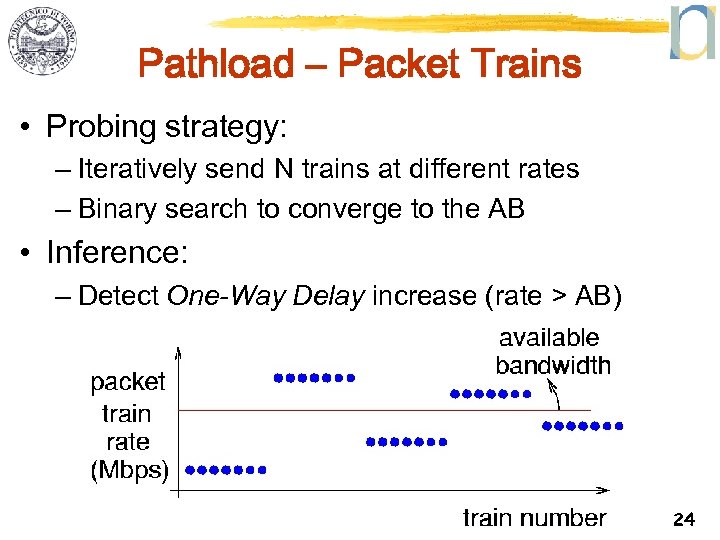

Pathload – Packet Trains

- Probing strategy:
  - Iteratively send N trains at different rates
  - Binary search to converge to the AB
- Inference:
  - Detect a one-way delay increase (rate > AB)

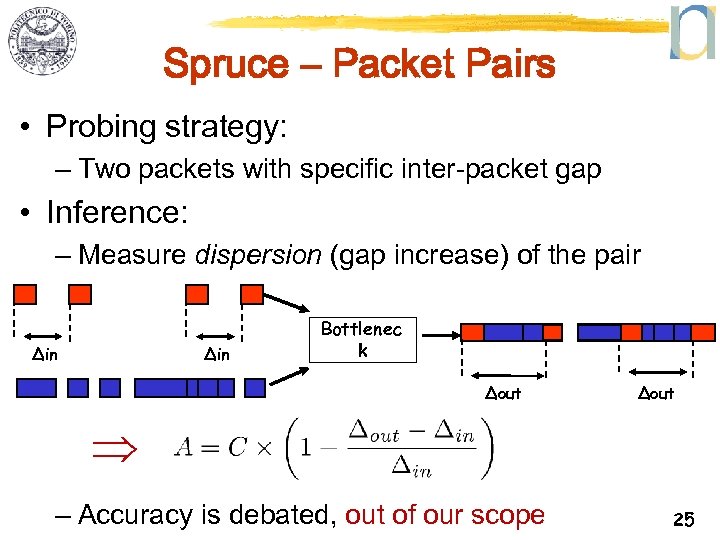
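
The binary search over probing rates can be sketched as follows. This is a simplified illustration, not Pathload's actual code: the callback `rate_exceeds_ab` is a hypothetical stand-in for "send a train at this rate and check whether the one-way delays show an increasing trend", and the resolution parameter is likewise an assumption.

```python
def binary_search_ab(rate_exceeds_ab, low_mbps, high_mbps, resolution_mbps=0.1):
    """Narrow down the available bandwidth to an interval [low, high].

    rate_exceeds_ab(rate) must return True when probing at `rate` causes
    one-way delays to grow, i.e. when rate > AB.
    """
    while high_mbps - low_mbps > resolution_mbps:
        rate = (low_mbps + high_mbps) / 2
        if rate_exceeds_ab(rate):
            high_mbps = rate  # delays grew: the AB is below this rate
        else:
            low_mbps = rate   # no queueing trend: the AB is at least this rate
    return low_mbps, high_mbps


# Toy oracle for a path whose true AB is 6 Mbps (hypothetical value).
low, high = binary_search_ab(lambda rate: rate > 6.0, 0.0, 10.0)
print(f"AB estimated between {low:.3f} and {high:.3f} Mbps")
```

Each iteration costs at least one train, which is one reason the search can be slow and intrusive when many hosts measure at once.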

Spruce – Packet Pairs

- Probing strategy:
  - Two packets with a specific inter-packet gap
- Inference:
  - Measure the dispersion (gap increase) of the pair
  - Accuracy is debated, out of our scope

(Diagram: a pair enters the bottleneck with gap Δin and leaves with gap Δout)

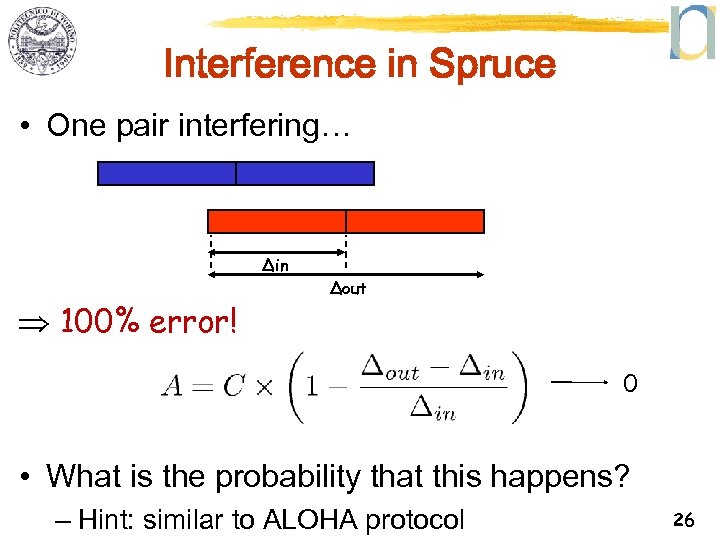
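
A gap-model estimate can be sketched as below. The formula A = C · (1 − (Δout − Δin) / Δin) is the one usually attributed to Spruce in the literature; it is not printed on the slide, so treat it as an assumption here. The function names and the sample values are hypothetical, and the bottleneck capacity C is assumed to be known in advance.

```python
def spruce_sample(capacity_mbps, gap_in_s, gap_out_s):
    """One gap-model sample: the relative gap increase is read as utilization."""
    return capacity_mbps * (1.0 - (gap_out_s - gap_in_s) / gap_in_s)


def spruce_estimate(capacity_mbps, gap_in_s, gaps_out_s):
    """Average the samples of several pairs sent with the same input gap."""
    samples = [spruce_sample(capacity_mbps, gap_in_s, g) for g in gaps_out_s]
    return sum(samples) / len(samples)


# Input gap: transmission time of a 1500-byte packet on a 10 Mbps bottleneck.
gap_in = 1500 * 8 / 10e6  # 1.2 ms
print(spruce_sample(10.0, gap_in, gap_in))        # gap unchanged: full capacity free
print(spruce_sample(10.0, gap_in, 1.5 * gap_in))  # gap grew by 50%: half is free
print(spruce_estimate(10.0, gap_in, [gap_in, 1.5 * gap_in, 2.0 * gap_in]))
```

Note that a single sample is very sensitive to anything that lands between the two packets, which is why such tools average over many pairs.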

Interference in Spruce

- One pair interfering… ⇒ 100% error! (diagram labels: Δin, Δout, 0)
- What is the probability that this happens?
  - Hint: similar to the ALOHA protocol

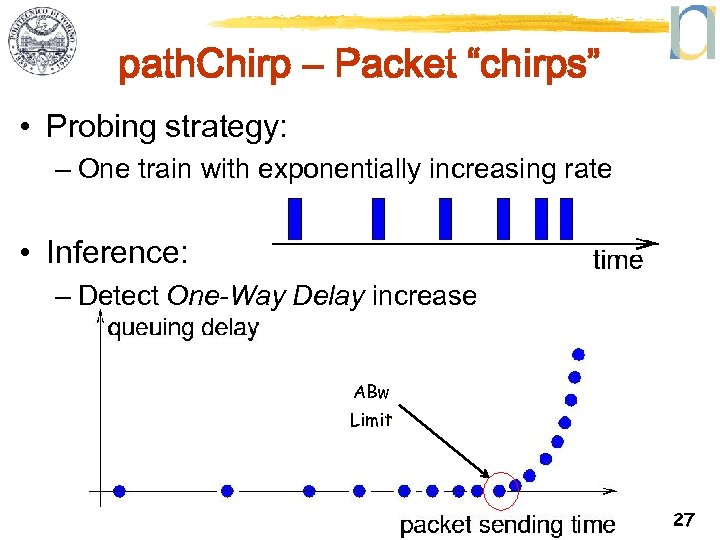
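
The ALOHA hint suggests a collision-style model. The sketch below is not the model derived in the dissertation; it is a simplified illustration that assumes probe pairs from the other hosts arrive as a Poisson process and that a pair is disturbed whenever at least one foreign pair arrives inside a vulnerable window. The function name, the window length, and the example rates are all hypothetical.

```python
import math


def interference_probability(n_other_hosts, pairs_per_second, vulnerable_window_s):
    """P(at least one foreign pair arrives within the vulnerable window).

    Assumes independent Poisson arrivals with an aggregate rate of
    n_other_hosts * pairs_per_second.
    """
    aggregate_rate = n_other_hosts * pairs_per_second
    return 1.0 - math.exp(-aggregate_rate * vulnerable_window_s)


# Hypothetical scenario: each host sends 10 pairs/s, 1 ms vulnerable window.
for hosts in (1, 10, 30):
    p = interference_probability(hosts, 10.0, 0.001)
    print(f"{hosts:2d} other hosts -> P(interference) = {p:.3f}")
```

As in ALOHA, the probability grows quickly with the aggregate sending rate, so even modest per-host probing rates add up.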

pathChirp – Packet “chirps”

- Probing strategy:
  - One train with exponentially increasing rate
- Inference:
  - Detect a one-way delay increase

(Plot label: ABw limit)

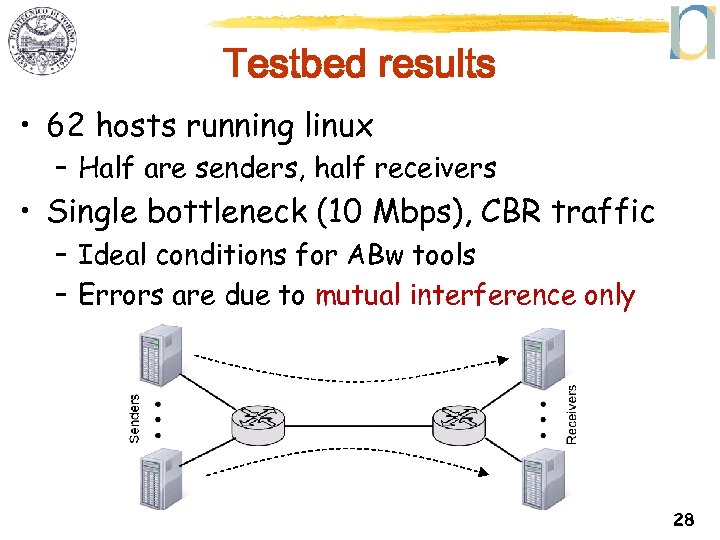
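
An exponentially increasing rate is obtained by shrinking the inter-packet gap by a constant factor. The sketch below only illustrates this spacing; the function names, the spread factor value, and the packet size are assumptions, not pathChirp's defaults.

```python
def chirp_gaps(first_gap_s, spread_factor, n_packets):
    """Inter-packet gaps of one chirp: each gap is the previous one divided
    by spread_factor, so n_packets packets produce n_packets - 1 gaps."""
    if spread_factor <= 1.0:
        raise ValueError("spread_factor must be greater than 1")
    return [first_gap_s / spread_factor ** k for k in range(n_packets - 1)]


def chirp_rates_mbps(gaps_s, packet_size_bytes):
    """Instantaneous probing rate associated with each gap."""
    return [packet_size_bytes * 8 / gap / 1e6 for gap in gaps_s]


# Hypothetical chirp: 1000-byte packets, first gap 8 ms, gaps halve each time.
gaps = chirp_gaps(0.008, 2.0, 6)
for gap, rate in zip(gaps, chirp_rates_mbps(gaps, 1000)):
    print(f"gap = {gap * 1e3:6.3f} ms -> rate = {rate:5.1f} Mbps")
```

A single chirp therefore sweeps a wide range of rates with few packets; the AB is inferred from the point in the chirp where delays start to grow.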

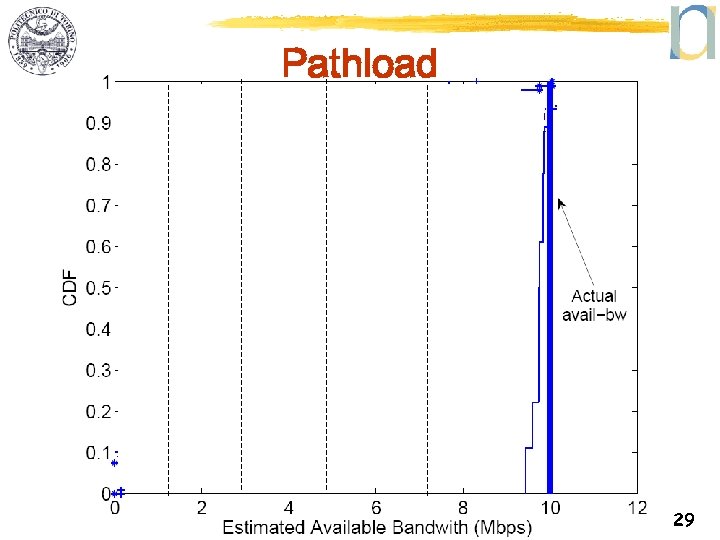

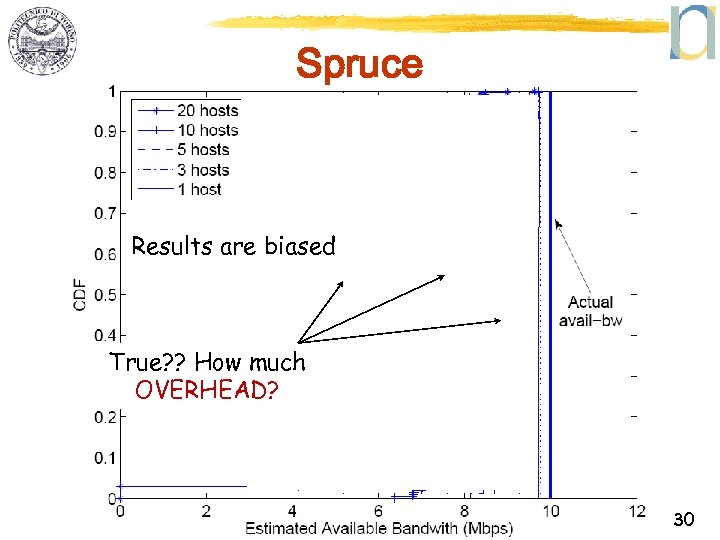

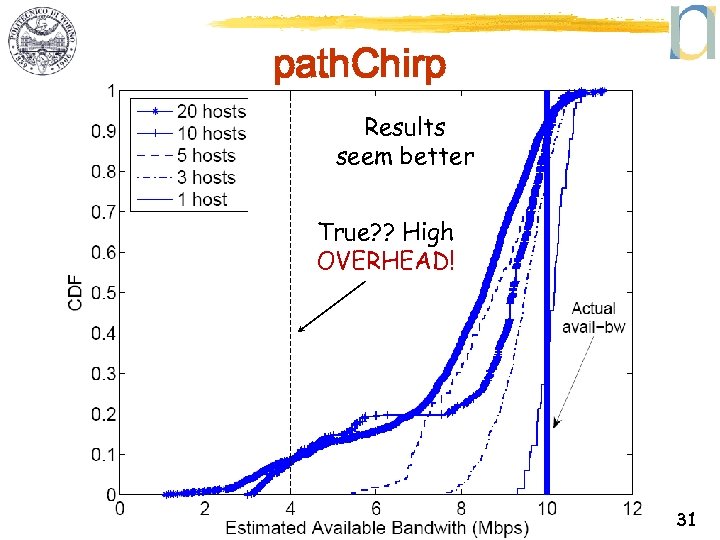

Testbed results

- 62 hosts running Linux
  - Half are senders, half receivers
- Single bottleneck (10 Mbps), CBR traffic
  - Ideal conditions for ABw tools
  - Errors are due to mutual interference only

Pathload

Spruce

- Results are biased. True??
- How much OVERHEAD?

pathChirp

- Results seem better. True??
- High OVERHEAD!

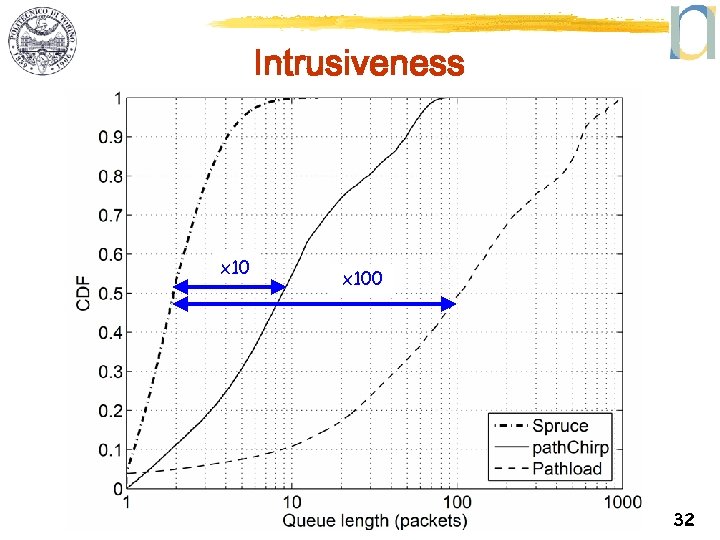

Intrusiveness

(Plot annotation: ×100)

Possible Solutions

- Mutual interference
  - Direct probing is more promising
    - Simple, Spruce-like algorithms. No binary search
  - Identify interference (and correct it)??
- Overhead
  - “In-band” measurements (piggy-backing)
    - Best, no overhead at all
    - Complex! (SIGCOMM 09) + delay constraints
  - “Out-of-band” measurements
    - At least, make the overhead scale with the ABw!
    - Let's help each other! Network tomography

Conclusions

- Non-cooperative estimation
  - Three highly optimized tools
  - No need to install software or buy new equipment
    - An Italian ISP is already interested!
- Analysis of large-scale AB measurements
  - Tools cannot be used off-the-shelf
    - Mutual interference, intrusiveness, overhead
  - Interference can be predicted and modeled
  - Discussed possible solutions
- Future work includes
  - Technologies other than ADSL (cable, FTTH)
  - New, lightweight techniques (passive?), tomography

BACKUP

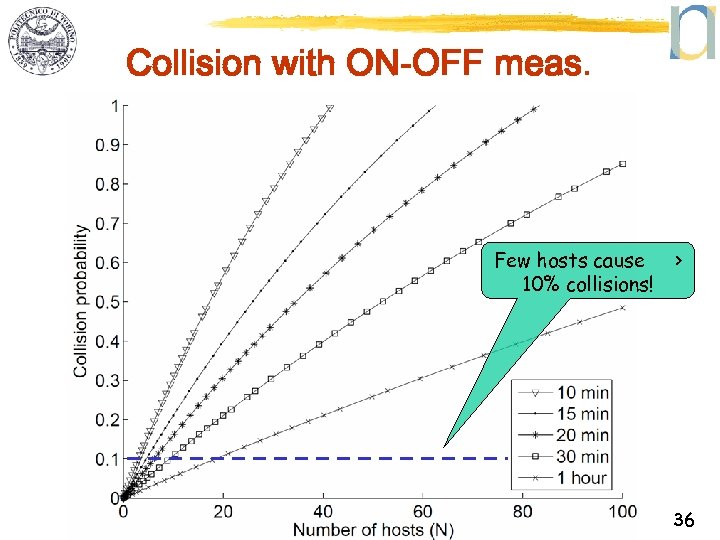

Collisions with ON-OFF measurements

Few hosts cause 10% collisions!

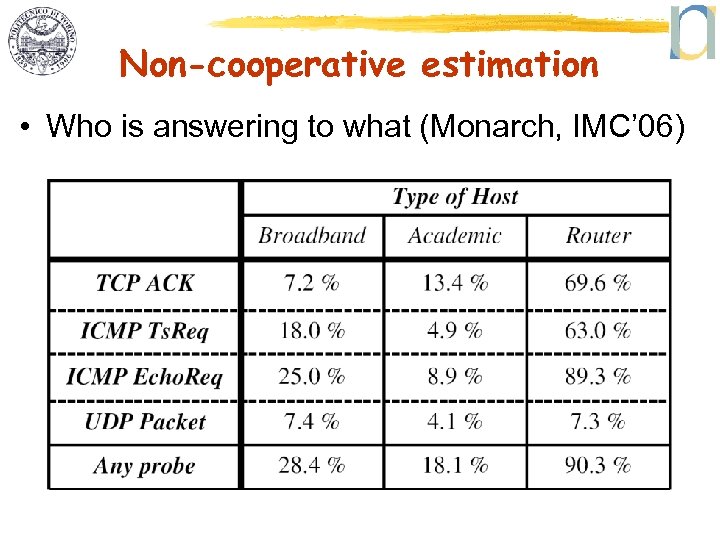

Non-cooperative estimation

- Who answers to what (Monarch, IMC ’06)

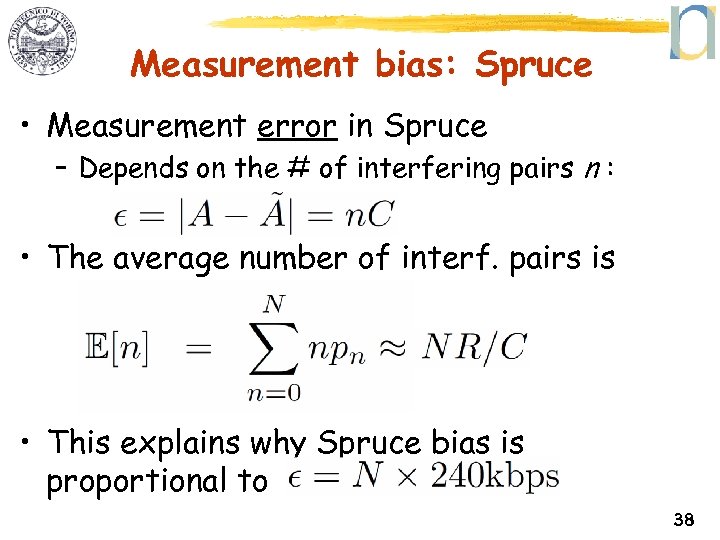

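Measurement bias: Spruce

- Measurement error in Spruce
  - Depends on the number of interfering pairs n (formula in the figure)
- The average number of interfering pairs is given by the formula in the figure
- This explains the proportionality of the Spruce bias (proportionality term in the figure)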

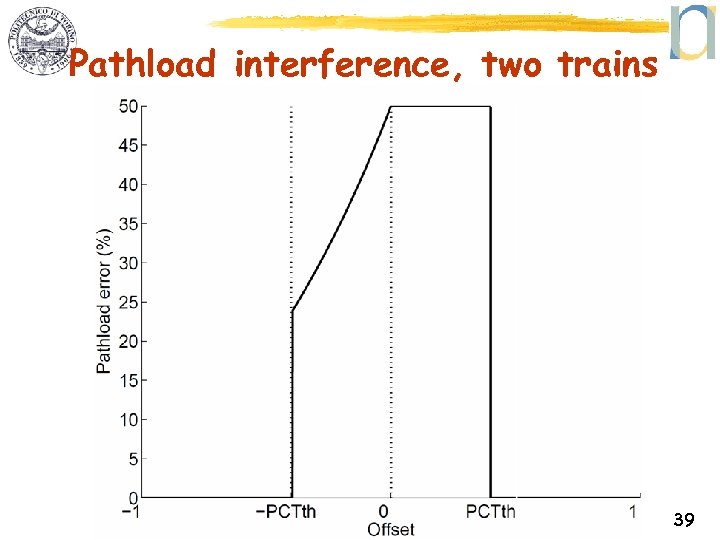

Pathload interference, two trains

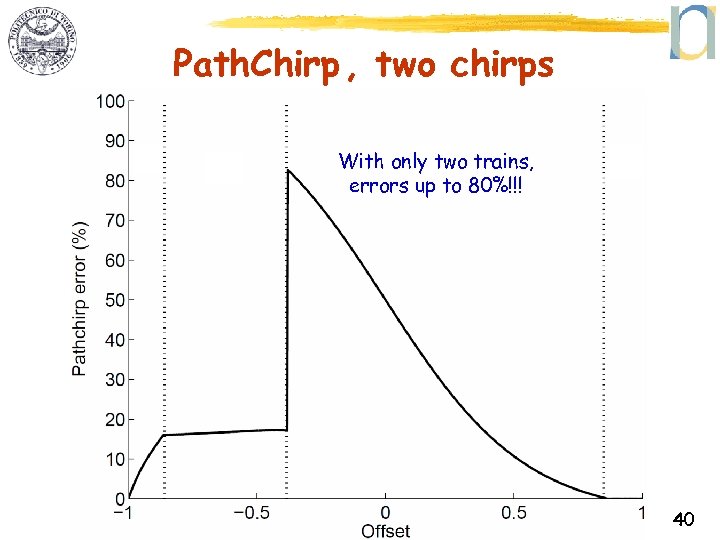

pathChirp, two chirps

With only two trains, errors up to 80%!

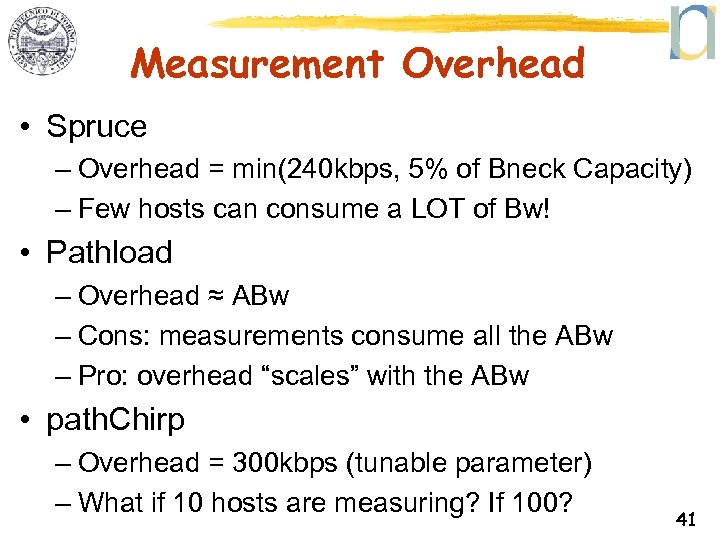

Measurement Overhead

- Spruce
  - Overhead = min(240 kbps, 5% of the bottleneck capacity)
  - A few hosts can consume a LOT of bandwidth!
- Pathload
  - Overhead ≈ ABw
  - Con: measurements consume all the ABw
  - Pro: overhead “scales” with the ABw
- pathChirp
  - Overhead = 300 kbps (tunable parameter)
  - What if 10 hosts are measuring? What if 100?

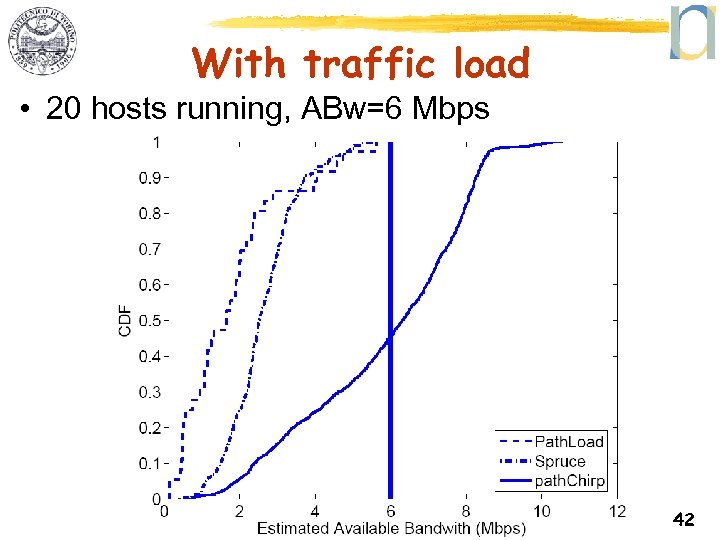
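
The closing question (10 hosts, 100 hosts) can be answered with simple arithmetic. The sketch below uses the per-host figures from the slide; the 10 Mbps bottleneck is the testbed value, while the function names and the assumption that per-host overheads simply add up are illustrative.

```python
PATHCHIRP_OVERHEAD_KBPS = 300.0  # from the slide (tunable parameter)


def spruce_overhead_kbps(bottleneck_capacity_kbps):
    """Per-host Spruce overhead: min(240 kbps, 5% of the bottleneck capacity)."""
    return min(240.0, 0.05 * bottleneck_capacity_kbps)


def aggregate_overhead_kbps(per_host_kbps, n_hosts):
    """Total probing load, assuming per-host overheads add up linearly."""
    return per_host_kbps * n_hosts


capacity_kbps = 10_000.0  # 10 Mbps bottleneck, as in the testbed
for n_hosts in (1, 10, 100):
    spruce = aggregate_overhead_kbps(spruce_overhead_kbps(capacity_kbps), n_hosts)
    chirp = aggregate_overhead_kbps(PATHCHIRP_OVERHEAD_KBPS, n_hosts)
    print(f"{n_hosts:3d} hosts: Spruce {spruce / 1000:5.1f} Mbps, "
          f"pathChirp {chirp / 1000:5.1f} Mbps "
          f"(bottleneck {capacity_kbps / 1000:.0f} Mbps)")
```

With these figures, 10 hosts already take roughly a quarter to a third of a 10 Mbps bottleneck, and 100 hosts would offer more probing traffic than the link can carry.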

With traffic load

- 20 hosts running, ABw = 6 Mbps

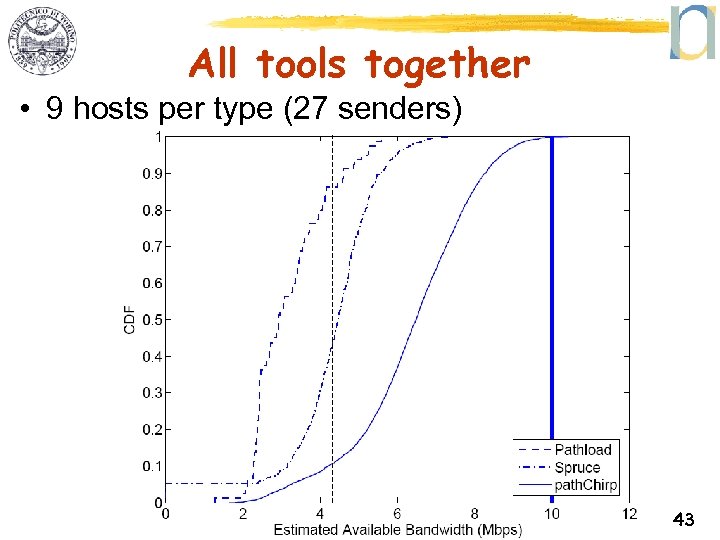

All tools together

- 9 hosts per type (27 senders)

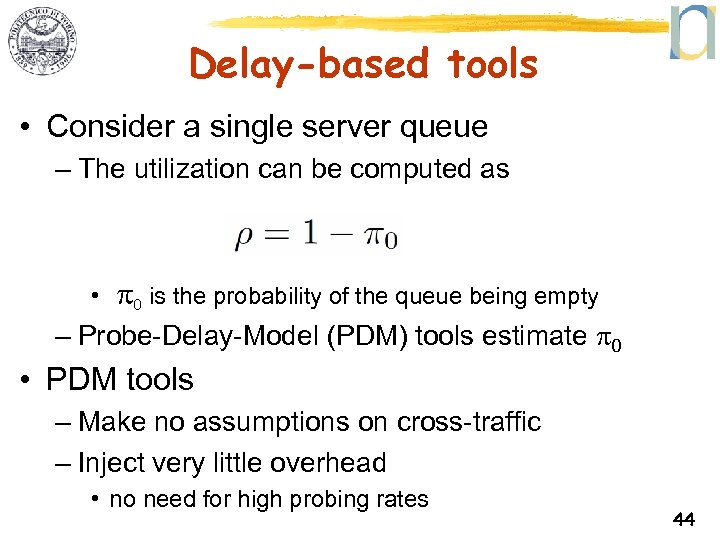

Delay-based tools

- Consider a single-server queue
  - The utilization can be computed as $u = 1 - p_0$
    - $p_0$ is the probability of the queue being empty
  - Probe Delay Model (PDM) tools estimate $p_0$
- PDM tools
  - Make no assumptions on cross-traffic
  - Inject very little overhead
    - No need for high probing rates

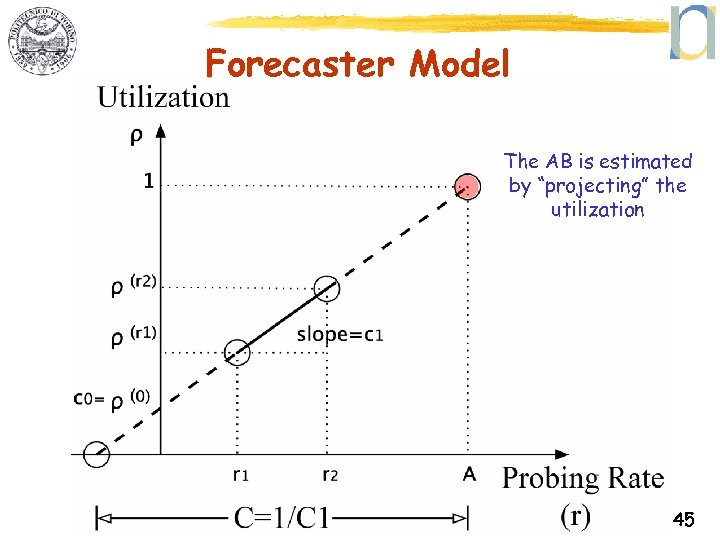
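
A delay-based estimate of p0 can be sketched as follows. This is a minimal illustration, not the implementation evaluated in the dissertation: it assumes that a probe found the queue empty when its delay is within a tolerance of the smallest delay observed, that utilization equals 1 − p0 for a single-server queue, and that the link capacity is known. The function names are hypothetical; the 100 µs default mirrors the tolerance mentioned on the “Threshold problem” backup slide.

```python
def estimate_p0(delays_s, threshold_s=100e-6):
    """Fraction of probes that saw (almost) no queueing delay.

    The minimum observed delay is taken as the no-queue baseline.
    """
    if not delays_s:
        raise ValueError("need at least one delay sample")
    baseline = min(delays_s)
    unqueued = sum(1 for d in delays_s if d - baseline <= threshold_s)
    return unqueued / len(delays_s)


def available_bandwidth_mbps(capacity_mbps, p0):
    """AB = C * (1 - u) with u = 1 - p0, i.e. AB = C * p0."""
    return capacity_mbps * p0


# Hypothetical one-way delays (seconds) of four sparse probes.
delays = [0.010, 0.01005, 0.012, 0.015]
p0 = estimate_p0(delays)
print(f"p0 = {p0:.2f}, AB = {available_bandwidth_mbps(10.0, p0):.1f} Mbps")
```

The choice of threshold matters: too small and timing noise makes every probe look queued, too large and short queues go unnoticed.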

Forecaster Model

The AB is estimated by “projecting” the utilization

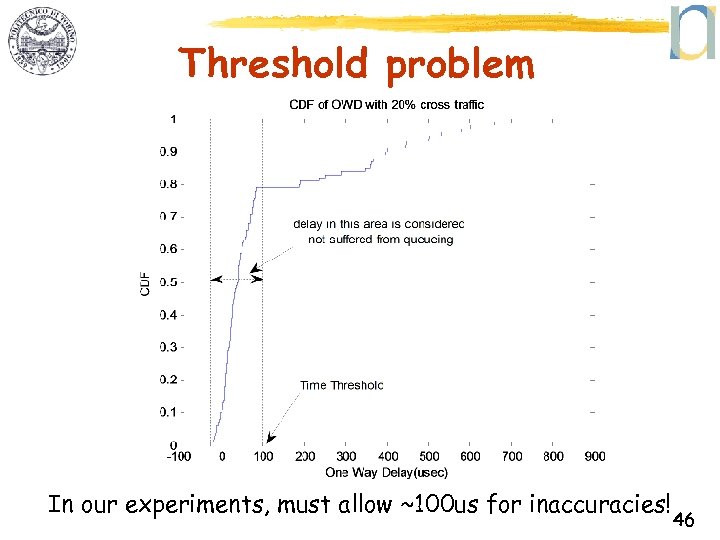
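
One way to read “projecting” is a linear extrapolation: measure the utilization at two probing rates and extend the line until the utilization reaches 1; the probing rate at that point is taken as the AB. The sketch below follows this reading, which is an assumption about the model in the figure; the function name and the example numbers are hypothetical.

```python
def project_available_bandwidth(rate1_mbps, util1, rate2_mbps, util2):
    """Extrapolate the line through (rate1, util1) and (rate2, util2) to the
    probing rate at which the utilization would reach 1."""
    if rate1_mbps == rate2_mbps:
        raise ValueError("the two probing rates must differ")
    slope = (util2 - util1) / (rate2_mbps - rate1_mbps)
    if slope <= 0:
        raise ValueError("utilization must grow with the probing rate")
    return rate1_mbps + (1.0 - util1) / slope


# Hypothetical link: C = 10 Mbps, 40% utilized by cross-traffic, so the
# utilization seen while probing at rate r is 0.4 + r / 10.
ab = project_available_bandwidth(1.0, 0.5, 2.0, 0.6)
print(f"projected AB = {ab:.2f} Mbps")
```

The appeal of this approach is that both probing rates can stay well below the AB, so the path is never saturated by the measurement itself.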

Threshold problem

In our experiments, we must allow ~100 µs for inaccuracies!
