4974802e71879e4a1c81449a7659072d.ppt

- Number of slides: 48

Enabling Worm and Malware Investigation Using Virtualization
(Demo and poster this afternoon)

Dongyan Xu, Xuxian Jiang
CERIAS and Department of Computer Science, Purdue University

The Team
- Lab FRIENDS
  - Xuxian Jiang (Ph.D. student)
  - Paul Ruth (Ph.D. student)
  - Dongyan Xu (faculty)
- CERIAS
  - Eugene H. Spafford
- External collaboration
  - Microsoft Research

Our Goal
- In-depth understanding of increasingly sophisticated worm/malware behavior

Outline
- Motivation
- An integrated approach
  - Front-end: Collapsar (Part I)
  - Back-end: vGround (Part II)
  - Bringing them together
- On-going work

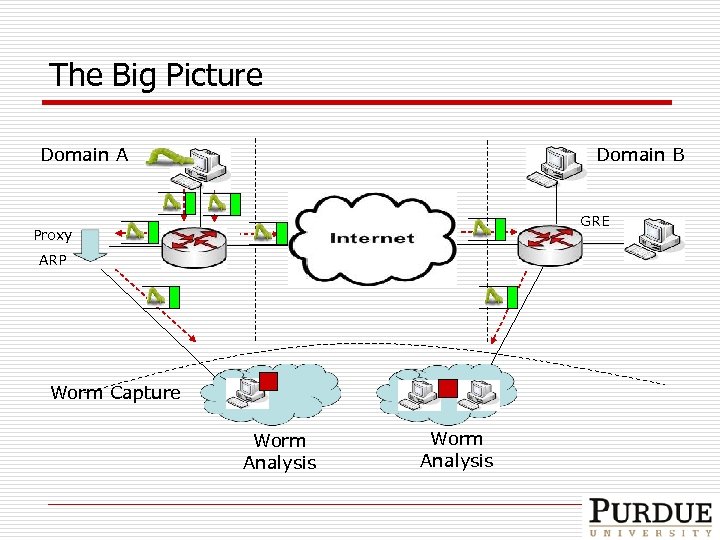

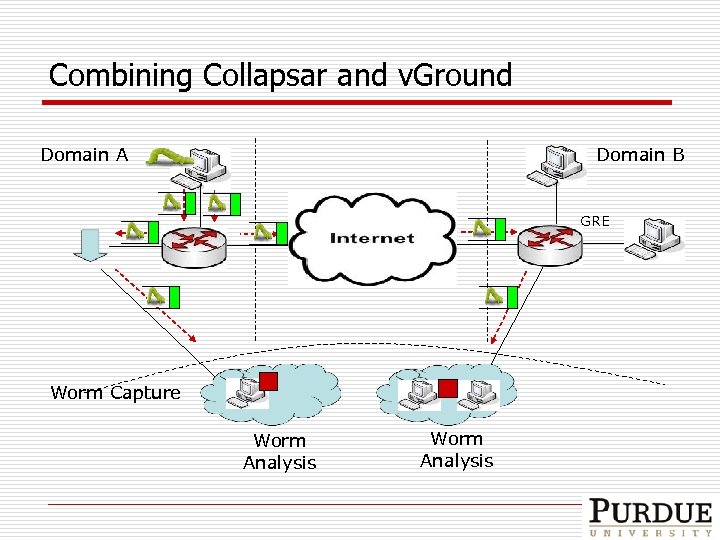

The Big Picture

[Figure: Domain A and Domain B; GRE; Proxy ARP; Worm Capture; Worm Analysis]

Part I: Front-End (Collapsar): Enabling Worm/Malware Capture

* X. Jiang, D. Xu, "Collapsar: A VM-Based Architecture for Network Attack Detention Center," 13th USENIX Security Symposium (Security '04), 2004.

General Approach
- Promise of honeypots
  - Providing insights into intruders' motivations, tactics, and tools
    - Highly concentrated datasets with low noise
    - Low false-positive and false-negative rates
  - Discovering unknown vulnerabilities/exploitations
    - Example: CERT advisory CA-2002-01 (Solaris CDE Subprocess Control Daemon, dtspcd)

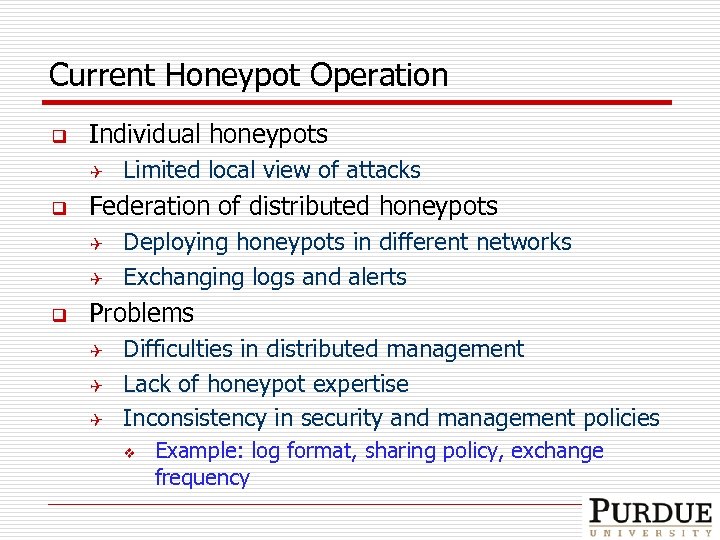

Current Honeypot Operation
- Individual honeypots
  - Limited local view of attacks
- Federation of distributed honeypots
  - Deploying honeypots in different networks
  - Exchanging logs and alerts
- Problems
  - Difficulties in distributed management
  - Lack of honeypot expertise
  - Inconsistency in security and management policies
    - Example: log format, sharing policy, exchange frequency

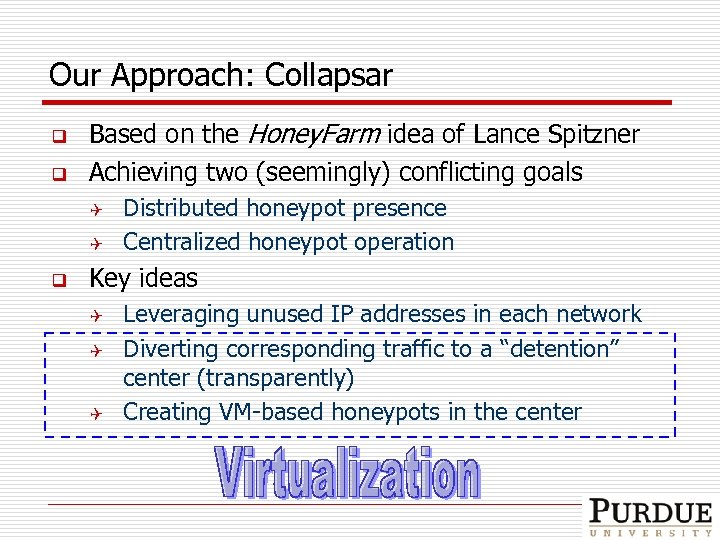

Our Approach: Collapsar
- Based on the HoneyFarm idea of Lance Spitzner
- Achieving two (seemingly) conflicting goals
  - Distributed honeypot presence
  - Centralized honeypot operation
- Key ideas
  - Leveraging unused IP addresses in each network
  - Diverting the corresponding traffic to a "detention" center (transparently)
  - Creating VM-based honeypots in the center
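The core redirection decision can be illustrated with a minimal sketch. It assumes a redirector that knows the unused addresses of its own network and forwards any packet addressed to one of them toward the center; all addresses below are hypothetical documentation addresses, and the GRE tunnel and Proxy ARP mechanics shown in the big-picture figure are not modeled, only the forwarding choice.

```python
import ipaddress

# Hypothetical unused ("dark") addresses in one production network.
# As in Collapsar, they are scattered rather than one contiguous block.
UNUSED = {
    ipaddress.ip_address("198.51.100.17"),
    ipaddress.ip_address("198.51.100.93"),
    ipaddress.ip_address("203.0.113.4"),
}

# Hypothetical address of the Collapsar center front-end.
CENTER = ipaddress.ip_address("192.0.2.1")


def route(dst: str) -> str:
    """Decide where a packet addressed to dst should go.

    Traffic to an unused address is diverted to the detention center;
    everything else is delivered on the production network as usual.
    """
    if ipaddress.ip_address(dst) in UNUSED:
        return f"redirect to {CENTER}"
    return "deliver locally"


if __name__ == "__main__":
    for dst in ["198.51.100.17", "198.51.100.18"]:
        print(dst, "->", route(dst))
```

Because the decision is a per-address set lookup rather than a prefix match, it works equally well for addresses scattered across a network, which is the property that distinguishes this design from sinkholing a contiguous block.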

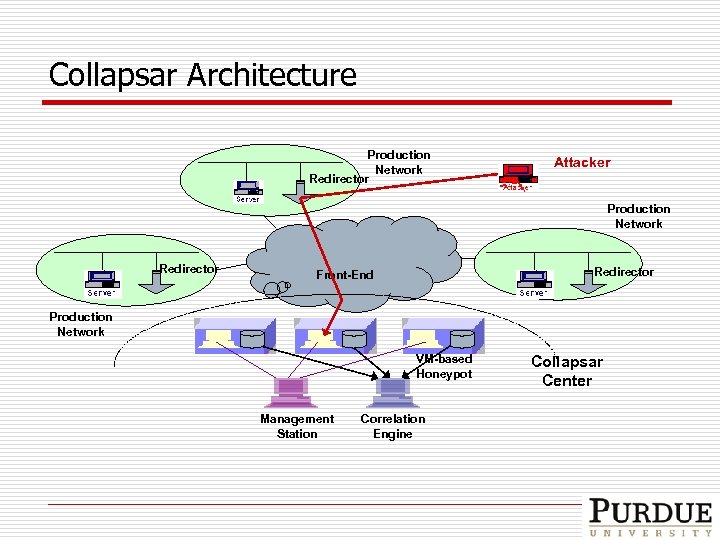

Collapsar Architecture

[Figure: attacker; production networks, each with a redirector; Collapsar Center containing the front-end, VM-based honeypots, management station, and correlation engine]

Comparison with Current Approaches
- Overlay-based approach (e.g., NetBait, Domino overlay)
  - Honeypots deployed in different sites
  - Logs aggregated from distributed honeypots
  - Data mining performed on aggregated log information
- Key difference: where the attacks take place (on-site vs. off-site)

Comparison with Current Approaches
- Sinkhole networking approach (e.g., iSink)
  - "Dark" space used to monitor Internet abnormality and commotion (e.g., MSBlaster worms)
  - Limited interaction for better scalability
- Key difference: contiguous large address blocks (vs. scattered addresses)

Comparison with Current Approaches
- Low-interaction approach (e.g., honeyd, iSink)
  - Highly scalable deployment
  - Low security risks
- Key difference: emulated services (vs. real ones)
  - Less effective at revealing unknown vulnerabilities
  - Less effective at capturing 0-day worms

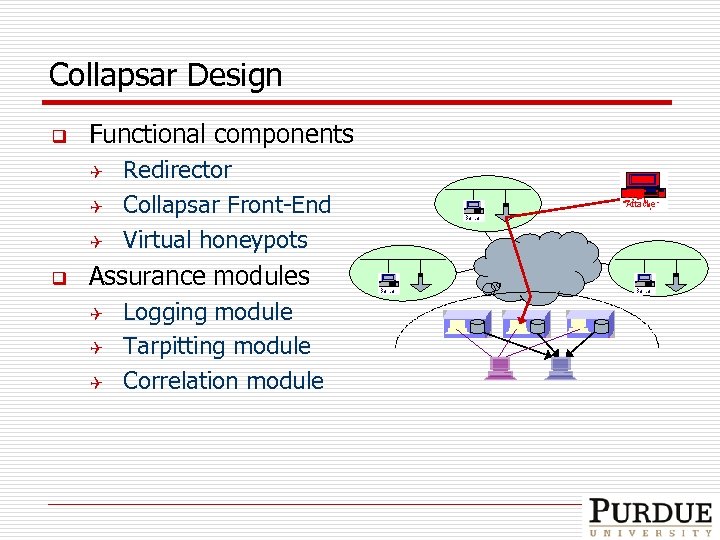

Collapsar Design
- Functional components
  - Redirector
  - Collapsar front-end
  - Virtual honeypots
- Assurance modules
  - Logging module
  - Tarpitting module
  - Correlation module

Collapsar Deployment
- Deployed in a local environment for a two-month period in 2003
- Traffic redirected from five networks
  - Three wired LANs
  - One wireless LAN
  - One DSL network
- ~50 honeypots analyzed so far
  - Internet worms (MSBlaster, Enbiei, Nachi)
  - Interactive intrusions (Apache, Samba)
  - OS: Windows, Linux, Solaris, FreeBSD

Incident: Apache Honeypot/VMware
- Vulnerabilities
  - Vul 1: Apache (CERT® CA-2002-17)
  - Vul 2: Ptrace (CERT® VU-6288429)
- Time-line
  - Deployed: 23:44:03, 11/24/03
  - Compromised: 09:33:55, 11/25/03
- Attack monitoring
  - Detailed log: http://www.cs.purdue.edu/homes/jiangx/collapsar

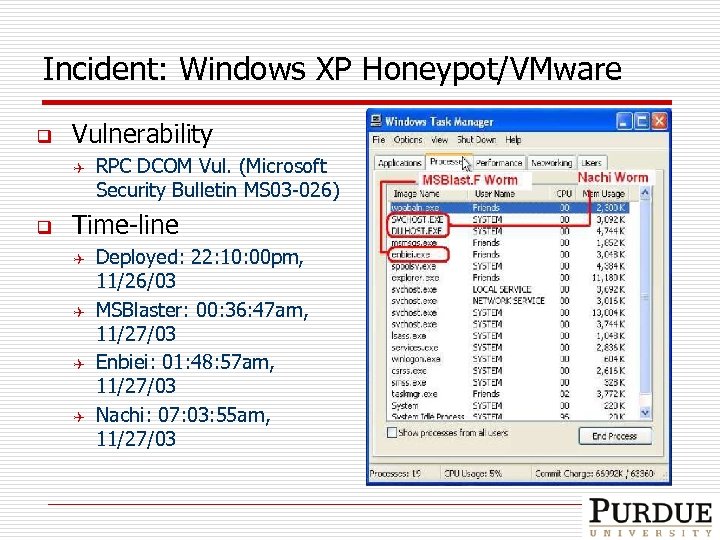

Incident: Windows XP Honeypot/VMware
- Vulnerability
  - RPC DCOM vulnerability (Microsoft Security Bulletin MS03-026)
- Time-line
  - Deployed: 22:10:00, 11/26/03
  - MSBlaster: 00:36:47, 11/27/03
  - Enbiei: 01:48:57, 11/27/03
  - Nachi: 07:03:55, 11/27/03
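The incident time-lines are easier to compare as elapsed times. This sketch reads the slide timestamps as 24-hour clock times (an interpretation of the original "23:44:03 pm"-style entries) and computes how long each honeypot lasted; the helper name `elapsed` is illustrative only.

```python
from datetime import datetime

# Slide timestamps, interpreted as 24-hour clock times.
FMT = "%m/%d/%y %H:%M:%S"


def elapsed(start: str, end: str):
    """Time between two slide timestamps, as a datetime.timedelta."""
    return datetime.strptime(end, FMT) - datetime.strptime(start, FMT)


# Apache honeypot: deployment to compromise.
apache = elapsed("11/24/03 23:44:03", "11/25/03 09:33:55")

# Windows XP honeypot: deployment to the time listed for each worm.
XP_DEPLOYED = "11/26/03 22:10:00"
xp = {
    "MSBlaster": elapsed(XP_DEPLOYED, "11/27/03 00:36:47"),
    "Enbiei": elapsed(XP_DEPLOYED, "11/27/03 01:48:57"),
    "Nachi": elapsed(XP_DEPLOYED, "11/27/03 07:03:55"),
}

if __name__ == "__main__":
    print("Apache compromised after", apache)  # 9:49:52
    for worm, delay in xp.items():
        print(f"{worm} arrived after {delay}")
```

Under this reading, the Apache honeypot survived just under ten hours, and MSBlaster reached the Windows XP honeypot about two and a half hours after deployment (2:26:47), followed by Enbiei (3:38:57) and Nachi (8:53:55).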

Summary (Front-End)
- A novel front-end for worm/malware capture
  - Distributed presence and centralized operation of honeypots
  - Good potential for attack correlation and log mining
- Unique features
  - Aggregation of scattered unused (dark) IP addresses
  - Off-site (relative to participating networks) attack occurrence and monitoring
  - Real services for revealing unknown vulnerabilities

Part II: Back-End (vGround): Enabling Worm/Malware Analysis

* X. Jiang, D. Xu, H. J. Wang, E. H. Spafford, "Virtual Playgrounds for Worm Behavior Investigation," 8th International Symposium on Recent Advances in Intrusion Detection (RAID '05), 2005.

Basic Approach
- A dedicated testbed
  - Internet-in-a-box (IBM), Blended Threat Lab (Symantec)
  - DETER
- Goal: understanding worm behavior
  - Static analysis/execution trace
    - Reverse engineering (IDA Pro, GDB, ...)
  - Worm experiments within a limited scale
  - Result: only relatively static analysis within a small scale is possible

The Reality: Worm Threats
- Speed, virulence, and sophistication of worms
  - Flash/Warhol worms
  - Polymorphic/metamorphic appearances
  - Zombie networks (DDoS attacks, spam)
- What we also need
  - A high-fidelity, large-scale, live but safe worm playground

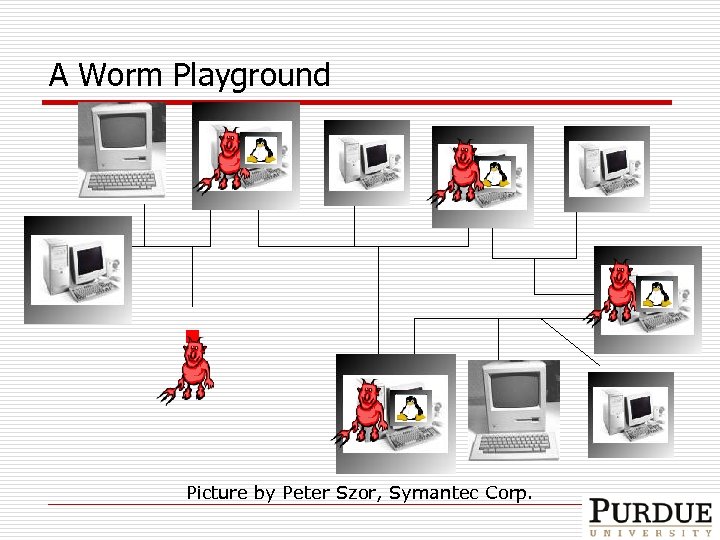

A Worm Playground

[Picture by Peter Szor, Symantec Corp.]

Requirements
- Cost and scalability
  - How about a topology with 2000+ nodes?
- Confinement
  - In-house private use?
- Management and user convenience
  - Diverse environment requirements
  - Recovery from damage caused by a worm experiment
    - Re-installation, re-configuration, and reboot ...

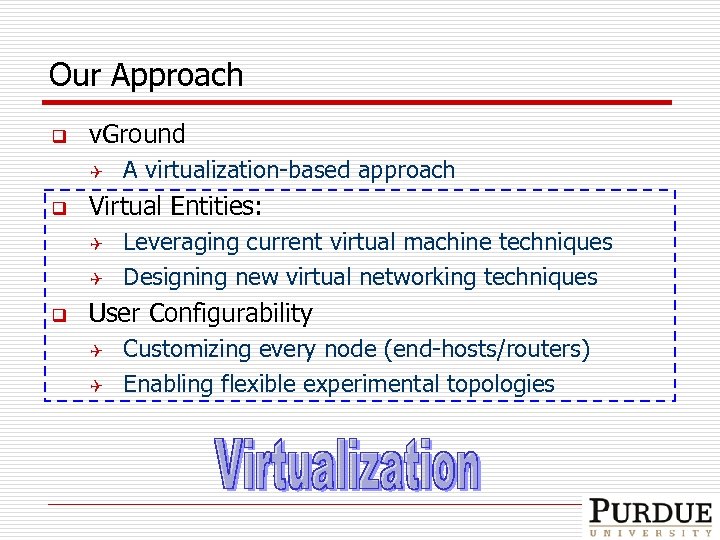

Our Approach
- vGround
  - A virtualization-based approach
- Virtual entities
  - Leveraging current virtual machine techniques
  - Designing new virtual networking techniques
- User configurability
  - Customizing every node (end hosts/routers)
  - Enabling flexible experimental topologies

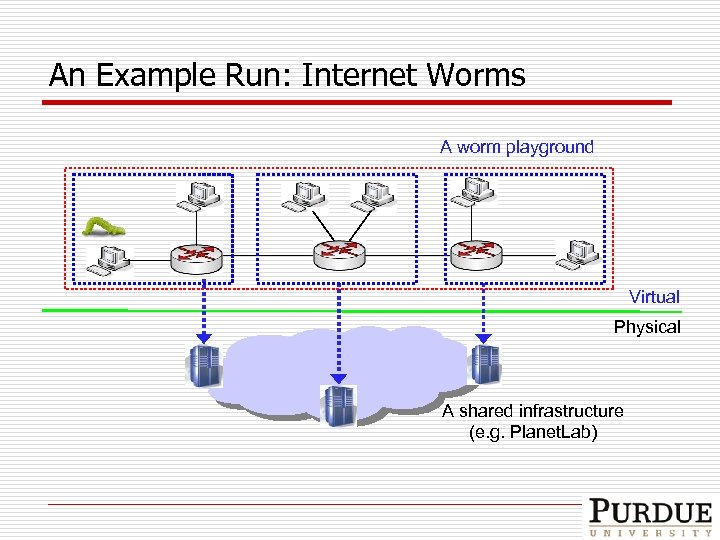

An Example Run: Internet Worms

[Figure: a virtual worm playground mapped onto a physical, shared infrastructure (e.g., PlanetLab)]

Key Virtualization Techniques
- Full-system virtualization
- Network virtualization

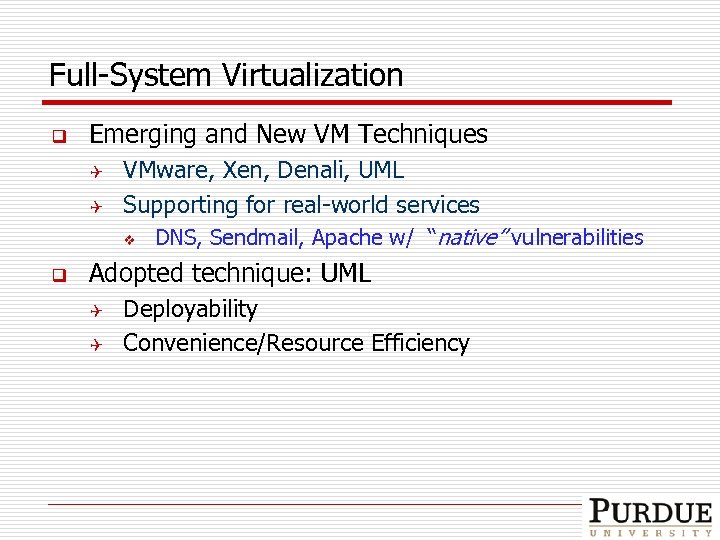

Full-System Virtualization
- Emerging and new VM techniques
  - VMware, Xen, Denali, UML
  - Support for real-world services
    - DNS, Sendmail, Apache with "native" vulnerabilities
- Adopted technique: UML
  - Deployability
  - Convenience/resource efficiency

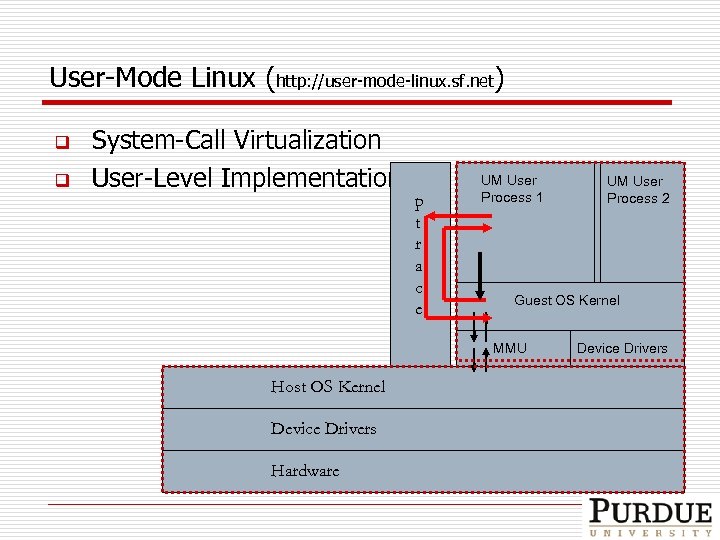

User-Mode Linux (http://user-mode-linux.sf.net)
- System-call virtualization
- User-level implementation

[Figure: UM user processes 1 and 2; guest OS kernel (MMU, device drivers); ptrace; host OS kernel; device drivers; hardware]

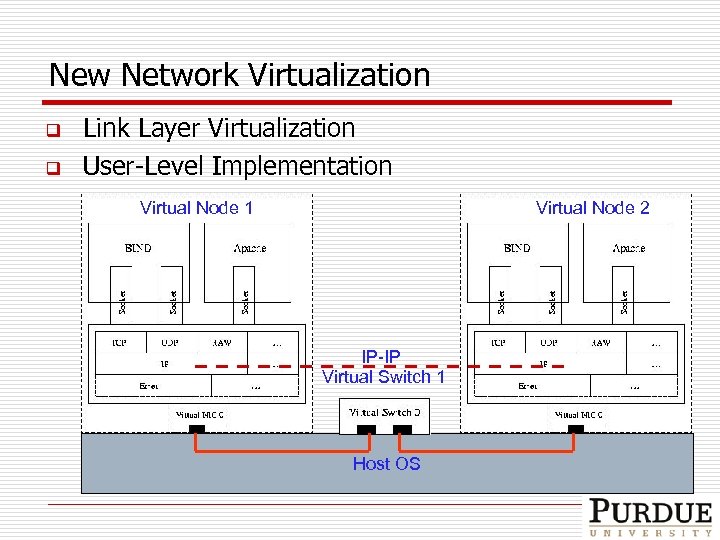

New Network Virtualization
- Link-layer virtualization
- User-level implementation

[Figure: Virtual Node 1 and Virtual Node 2 connected through Virtual Switch 1 (IP-IP) on the host OS]
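The slide describes a user-level, link-layer virtual switch but not its internals. As an illustration only, the sketch below assumes such a switch behaves like a conventional MAC-learning Ethernet switch; the class name and the socket-free, in-memory ports are hypothetical simplifications (the real transport, e.g. the `unix_sock`/`udp_sock` switches in the vGround specification, is omitted).

```python
from dataclasses import dataclass, field


@dataclass
class VirtualSwitch:
    """Toy user-level Ethernet switch that learns which port each MAC is on."""
    ports: int
    table: dict = field(default_factory=dict)  # MAC address -> port number

    def forward(self, in_port: int, src: str, dst: str) -> list:
        """Return the list of ports a frame from src to dst is sent out of."""
        # Learn (or refresh) where the sender lives.
        self.table[src] = in_port
        # Known destination: send only out of its port (drop if it is the input port).
        if dst in self.table:
            out = self.table[dst]
            return [] if out == in_port else [out]
        # Unknown or broadcast destination: flood to every other port.
        return [p for p in range(self.ports) if p != in_port]


# Example: two virtual nodes on a three-port switch.
sw = VirtualSwitch(ports=3)
first = sw.forward(0, "02:00:00:00:00:01", "02:00:00:00:00:02")  # dst unknown: flood
reply = sw.forward(1, "02:00:00:00:00:02", "02:00:00:00:00:01")  # dst learned on port 0
```

Here `first` is `[1, 2]` because the destination has not been seen yet, and `reply` is `[0]` because the switch learned the first node's location from its frame. Keeping this logic in user space is what lets many such switches run unprivileged on shared hosts.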

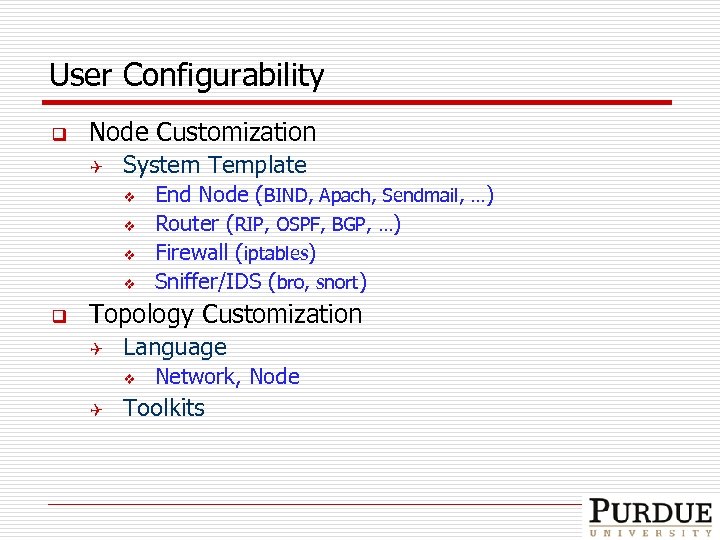

User Configurability
- Node customization
  - System templates
    - End node (BIND, Apache, Sendmail, ...)
    - Router (RIP, OSPF, BGP, ...)
    - Firewall (iptables)
    - Sniffer/IDS (bro, snort)
- Topology customization
  - Language
    - Network, Node
  - Toolkits

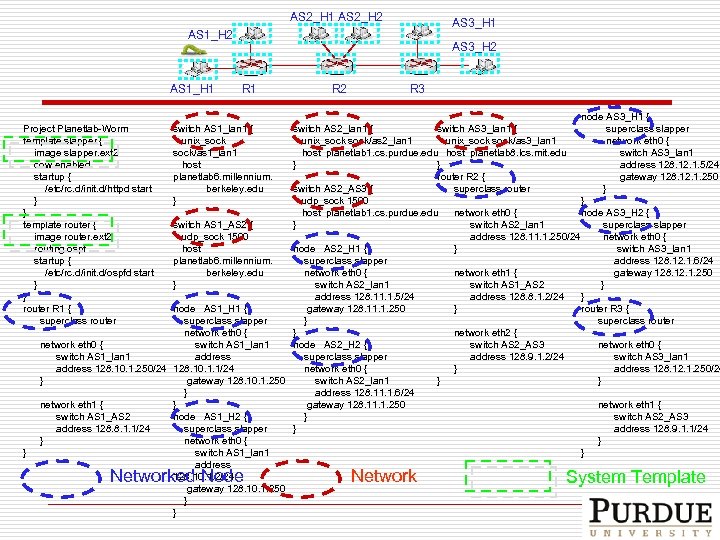

[Figure: example topology with hosts AS1_H1, AS1_H2, AS2_H1, AS2_H2, AS3_H1, AS3_H2 and routers R1, R2, R3; callouts mark the System Template, Network, and Networked Node parts of the specification]

    Project Planetlab-Worm

    template slapper {
        image slapper.ext2
        cow enabled
        startup {
            /etc/rc.d/init.d/httpd start
        }
    }

    template router {
        image router.ext2
        routing ospf
        startup {
            /etc/rc.d/init.d/ospfd start
        }
    }

    switch AS1_lan1 {
        unix_sock/as1_lan1
        host planetlab6.millennium.berkeley.edu
    }
    switch AS2_lan1 {
        unix_sock/as2_lan1
        host planetlab1.cs.purdue.edu
    }
    switch AS3_lan1 {
        unix_sock/as3_lan1
        host planetlab8.lcs.mit.edu
    }
    switch AS1_AS2 {
        udp_sock 1500
        host planetlab6.millennium.berkeley.edu
    }
    switch AS2_AS3 {
        udp_sock 1500
        host planetlab1.cs.purdue.edu
    }

    router R1 {
        superclass router
        network eth0 { switch AS1_lan1  address 128.10.1.250/24 }
        network eth1 { switch AS1_AS2   address 128.8.1.1/24 }
    }
    router R2 {
        superclass router
        network eth0 { switch AS2_lan1  address 128.11.1.250/24 }
        network eth1 { switch AS1_AS2   address 128.8.1.2/24 }
        network eth2 { switch AS2_AS3   address 128.9.1.1/24 }
    }
    router R3 {
        superclass router
        network eth0 { switch AS3_lan1  address 128.12.1.250/24 }
        network eth1 { switch AS2_AS3   address 128.9.1.2/24 }
    }

    node AS1_H1 {
        superclass slapper
        network eth0 { switch AS1_lan1  address 128.10.1.1/24  gateway 128.10.1.250 }
    }
    node AS1_H2 {
        superclass slapper
        network eth0 { switch AS1_lan1  address 128.10.1.2/24  gateway 128.10.1.250 }
    }
    node AS2_H1 {
        superclass slapper
        network eth0 { switch AS2_lan1  address 128.11.1.5/24  gateway 128.11.1.250 }
    }
    node AS2_H2 {
        superclass slapper
        network eth0 { switch AS2_lan1  address 128.11.1.6/24  gateway 128.11.1.250 }
    }
    node AS3_H1 {
        superclass slapper
        network eth0 { switch AS3_lan1  address 128.12.1.5/24  gateway 128.12.1.250 }
    }
    node AS3_H2 {
        superclass slapper
        network eth0 { switch AS3_lan1  address 128.12.1.6/24  gateway 128.12.1.250 }
    }
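A topology written in such a language can be sanity-checked before any virtual node is generated. The sketch below is a hypothetical consistency check, not part of the vGround toolkit: it transcribes the host and router addresses of the example specification (three ASes on 128.10.1.0/24, 128.11.1.0/24, and 128.12.1.0/24, linked by 128.8.1.0/24 and 128.9.1.0/24) and verifies that every host's gateway is a router interface on the same switch and subnet.

```python
import ipaddress

# Router interfaces: (router, switch, interface address), from the example spec.
ROUTER_IFS = [
    ("R1", "AS1_lan1", "128.10.1.250/24"),
    ("R1", "AS1_AS2", "128.8.1.1/24"),
    ("R2", "AS2_lan1", "128.11.1.250/24"),
    ("R2", "AS1_AS2", "128.8.1.2/24"),
    ("R2", "AS2_AS3", "128.9.1.1/24"),
    ("R3", "AS3_lan1", "128.12.1.250/24"),
    ("R3", "AS2_AS3", "128.9.1.2/24"),
]

# Hosts: name -> (switch, interface address, default gateway).
HOSTS = {
    "AS1_H1": ("AS1_lan1", "128.10.1.1/24", "128.10.1.250"),
    "AS1_H2": ("AS1_lan1", "128.10.1.2/24", "128.10.1.250"),
    "AS2_H1": ("AS2_lan1", "128.11.1.5/24", "128.11.1.250"),
    "AS2_H2": ("AS2_lan1", "128.11.1.6/24", "128.11.1.250"),
    "AS3_H1": ("AS3_lan1", "128.12.1.5/24", "128.12.1.250"),
    "AS3_H2": ("AS3_lan1", "128.12.1.6/24", "128.12.1.250"),
}


def check_topology(hosts, router_ifs):
    """Return a list of human-readable problems (empty if consistent)."""
    problems = []
    ifs_by_switch = {}
    for _router, switch, addr in router_ifs:
        ifs_by_switch.setdefault(switch, []).append(ipaddress.ip_interface(addr))

    # All router interfaces attached to one switch should share one subnet.
    for switch, ifaces in ifs_by_switch.items():
        if len({i.network for i in ifaces}) > 1:
            problems.append(f"{switch}: router interfaces span several subnets")

    for name, (switch, addr, gw) in hosts.items():
        iface = ipaddress.ip_interface(addr)
        gateway = ipaddress.ip_address(gw)
        if gateway not in iface.network:
            problems.append(f"{name}: gateway {gw} is outside {iface.network}")
        if gateway not in {i.ip for i in ifs_by_switch.get(switch, [])}:
            problems.append(f"{name}: no router interface on {switch} owns {gw}")
    return problems


if __name__ == "__main__":
    print(check_topology(HOSTS, ROUTER_IFS) or "topology is consistent")
```

A host with a mistyped gateway, such as 128.10.2.250 on AS1_lan1, would be reported twice: once for the subnet mismatch and once because no router on that switch owns the address.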

Features
- Scalability
  - 3000 virtual hosts in 10 physical nodes
- Iterative experiment convenience
  - Virtual node generation time: 60 seconds
  - Bootstrap time: 90 seconds
  - Tear-down time: 10 seconds
- Strict confinement
- High fidelity
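The feature numbers translate into a per-node density and a turnaround cost per experiment iteration. The worked arithmetic below assumes the three timings apply to the whole playground and run one after another; $T_{\text{cycle}}$ is a label introduced here and excludes the time the worm experiment itself runs.

```latex
\begin{align*}
\frac{3000 \text{ virtual hosts}}{10 \text{ physical nodes}} &= 300 \text{ virtual hosts per physical node} \\
T_{\text{cycle}} &= \underbrace{60\,\text{s}}_{\text{generation}} + \underbrace{90\,\text{s}}_{\text{bootstrap}} + \underbrace{10\,\text{s}}_{\text{tear-down}} = 160\,\text{s}
\end{align*}
```

Under these assumptions, resetting the playground between runs costs under three minutes, compared with the re-installation, re-configuration, and reboot listed under Requirements.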

Evaluation
- Current focus
  - Worm behavior reproduction
- Experiments
  - Probing, exploitation, payloads, and propagation
- Further potential (on-going work)
  - Routing worms/stealthy worms
  - Infrastructure security (BGP)

Experiment Setup
- Two real-world worms
  - Lion, Slapper, and their variants
- A vGround topology
  - 10 virtual networks
  - 1500 virtual nodes
  - 10 physical machines in an ITaP cluster

Evaluation
- Target host distribution
- Detailed exploitation steps
- Malicious payloads
- Propagation pattern

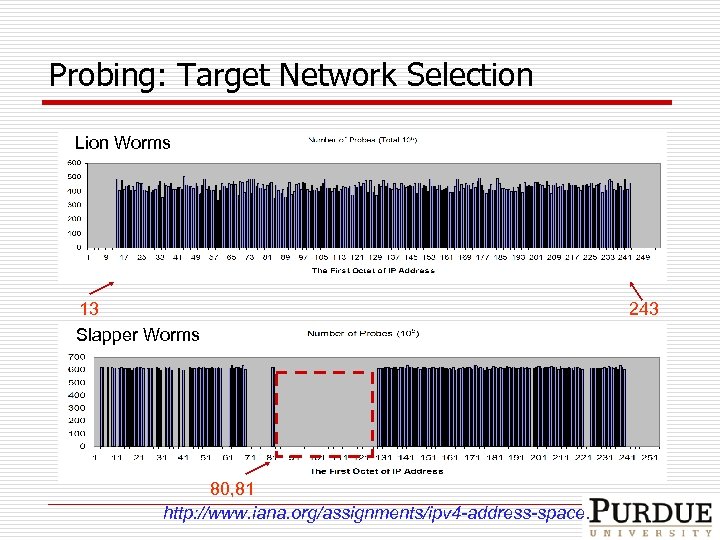

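Probing: Target Network Selection

[Figure: target networks selected by Lion worms (label: 13) and Slapper worms (labels: 80, 81); reference: IANA IPv4 address space registry, http://www.iana.org/assignments/ipv4-address-space (label: 243)]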

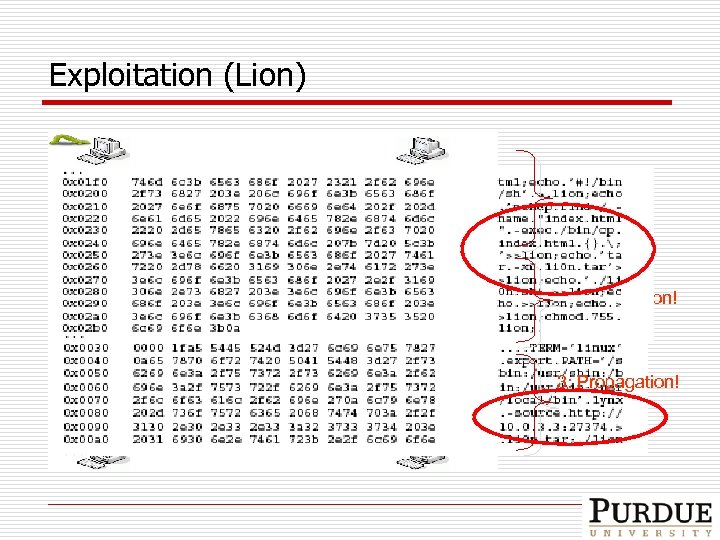

Exploitation (Lion)
1. Probing
2. Exploitation!
3. Propagation!

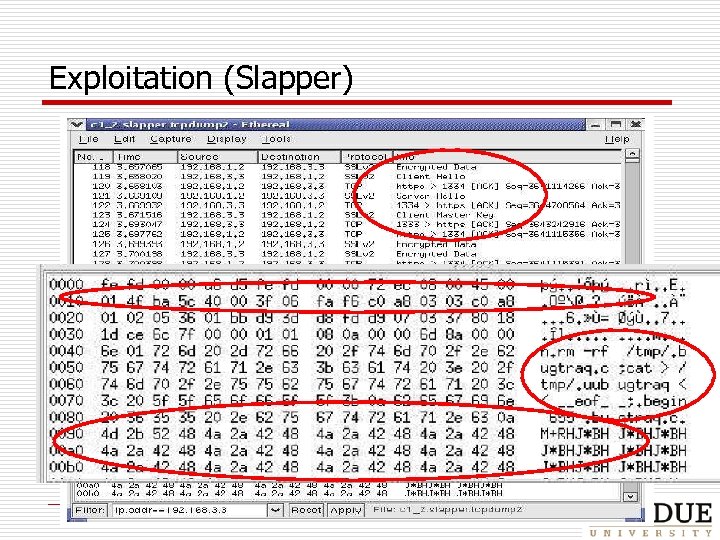

Exploitation (Slapper)
1. Probing
2. Exploitation!
3. Propagation!

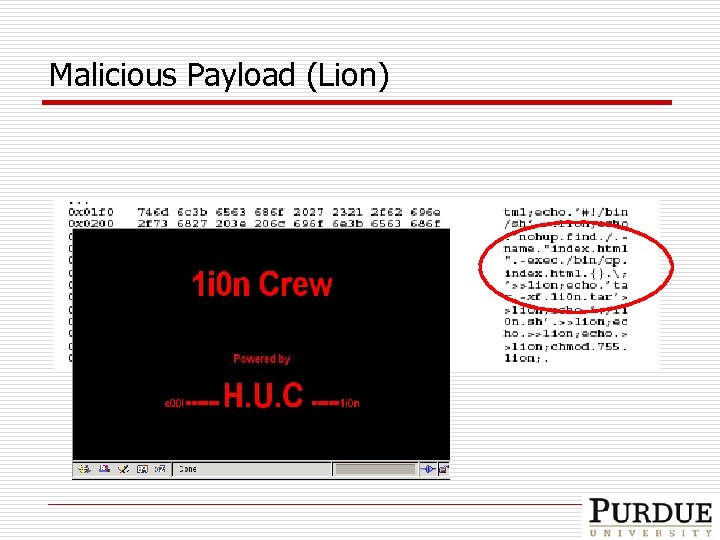

Malicious Payload (Lion)

Propagation Pattern and Strategy
- Address-sweeping
  - Randomly choose a class B address (a.b.0.0)
  - Sequentially scan hosts a.b.0.0 through a.b.255.255
- Island-hopping
  - Local subnet preference
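The two strategies differ mainly in how the next target is chosen. The sketch below models them under stated assumptions: address-sweeping follows the slide directly (random class B prefix, then a sequential scan), while the island-hopping probabilities `p_same16` and `p_same8` are hypothetical values chosen for illustration, not parameters extracted from Lion or Slapper. Skipping first octets 0 and 224 and above is also a simplification.

```python
import random
from itertools import islice
from typing import Iterator


def address_sweep(rng: random.Random) -> Iterator[str]:
    """Address-sweeping: choose a random class B prefix a.b.0.0, then
    yield every host address a.b.0.0 ... a.b.255.255 in order."""
    a = rng.randrange(1, 224)  # simplification: skip 0.x and multicast/reserved octets
    b = rng.randrange(256)
    for c in range(256):
        for d in range(256):
            yield f"{a}.{b}.{c}.{d}"


def island_hop(own_ip: str, rng: random.Random,
               p_same16: float = 0.5, p_same8: float = 0.25) -> str:
    """Island-hopping: pick one target, preferring the infected host's own
    neighborhood. The probabilities are hypothetical."""
    a, b, _, _ = (int(x) for x in own_ip.split("."))
    r = rng.random()
    if r < p_same16:                   # same /16 as the infected host
        prefix = [a, b]
    elif r < p_same16 + p_same8:       # same /8
        prefix = [a, rng.randrange(256)]
    else:                              # anywhere (same simplification as above)
        prefix = [rng.randrange(1, 224), rng.randrange(256)]
    return ".".join(str(x) for x in prefix + [rng.randrange(256), rng.randrange(256)])


# Example: the first three probes of one sweep share a.b and count upward.
first_three = list(islice(address_sweep(random.Random(42)), 3))
```

The sweep visits all 65,536 addresses of one class B network before moving on, which produces the dense, block-by-block infection pattern on the following slide; island-hopping instead concentrates new infections around already-infected hosts.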

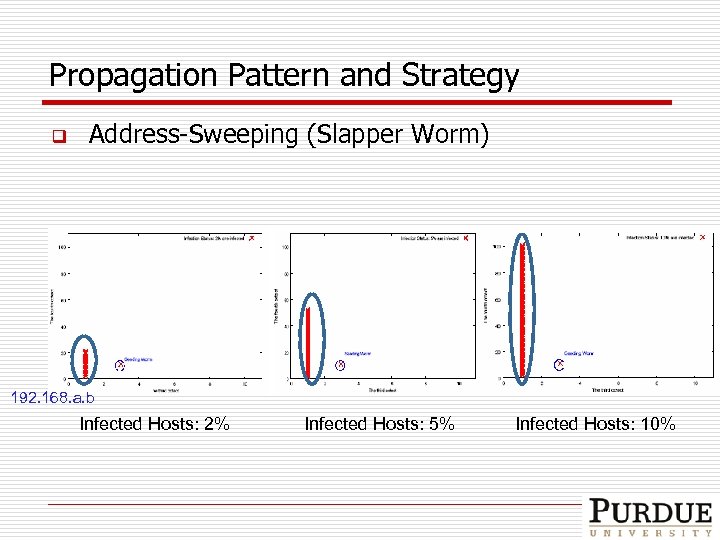

Propagation Pattern and Strategy
- Address-sweeping (Slapper worm)

[Figure: infection spread over address space 192.168.a.b at 2%, 5%, and 10% infected hosts]

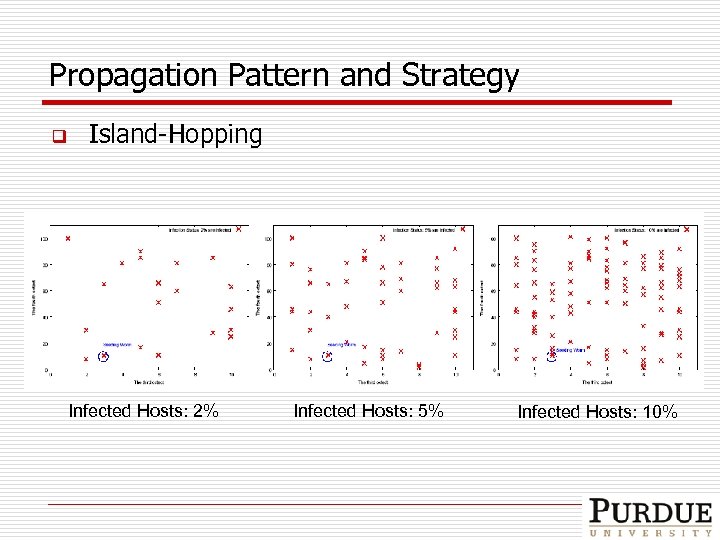

Propagation Pattern and Strategy
- Island-hopping

[Figure: infection spread at 2%, 5%, and 10% infected hosts]

Summary (Back-End)
- vGround, the back-end
  - A virtualization-based worm playground
  - Properties:
    - High fidelity
    - Strict confinement
    - Good scalability: 3000 virtual hosts in 10 physical nodes
    - High resource efficiency
    - Flexible and efficient worm experiment control

Combining Collapsar and vGround

[Figure: Domain A and Domain B; GRE; Worm Capture; Worm Analysis]

Conclusions
- An integrated virtualization-based platform for worm and malware investigation
  - Front-end: Collapsar
  - Back-end: vGround
- Great potential for automatic
  - Characterization of unknown service vulnerabilities
  - Generation of 0-day worm signatures
  - Tracking of worm contaminations

On-going Work
- More real-world evaluation
  - Stealthy worms
  - Polymorphic worms
- Additional capabilities
  - Collapsar center federation
  - On-demand honeypot customization
  - Worm/malware contamination tracking
  - Automated signature generation

Thank you. Stop by our poster and demo this afternoon!

For more information:
- Email: dxu@cs.purdue.edu
- URL: http://www.cs.purdue.edu/~dxu
- Google: "Purdue Collapsar Friends"
