af92b2593b9619799ce12be04fa882d3.ppt

- Number of slides: 30

Enabling Grids for E-sciencE

The gLite Software Development Process

Alberto Di Meglio, CERN, 4 October 2005

www.eu-egee.org

INFSO-RI-508833

Overview

- Software configuration management and tools
- Release process
- System configuration
- ETICS

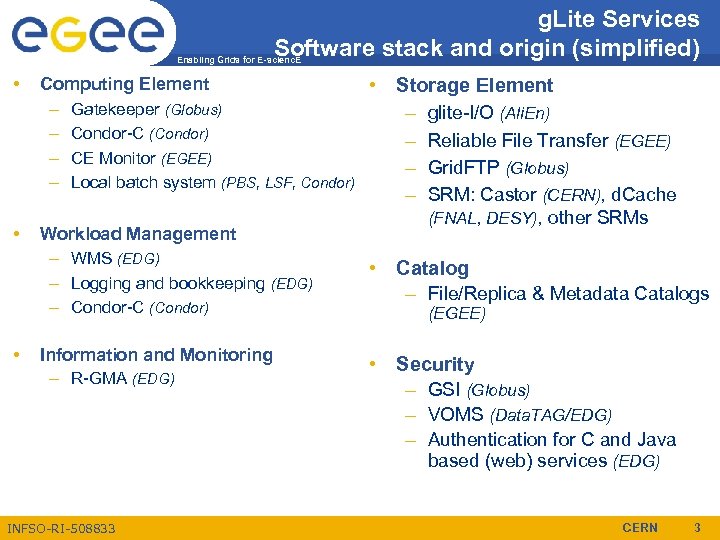

gLite Services: Software stack and origin (simplified)

- Computing Element
  - Gatekeeper (Globus)
  - Condor-C (Condor)
  - CE Monitor (EGEE)
  - Local batch system (PBS, LSF, Condor)
- Workload Management
  - WMS (EDG)
  - Logging and bookkeeping (EDG)
  - Condor-C (Condor)
- Information and Monitoring
  - R-GMA (EDG)
- Storage Element
  - glite-I/O (AliEn)
  - Reliable File Transfer (EGEE)
  - GridFTP (Globus)
  - SRM: Castor (CERN), dCache (FNAL, DESY), other SRMs
- Catalog
  - File/Replica & Metadata Catalogs (EGEE)
- Security
  - GSI (Globus)
  - VOMS (DataTAG/EDG)
  - Authentication for C and Java based (web) services (EDG)

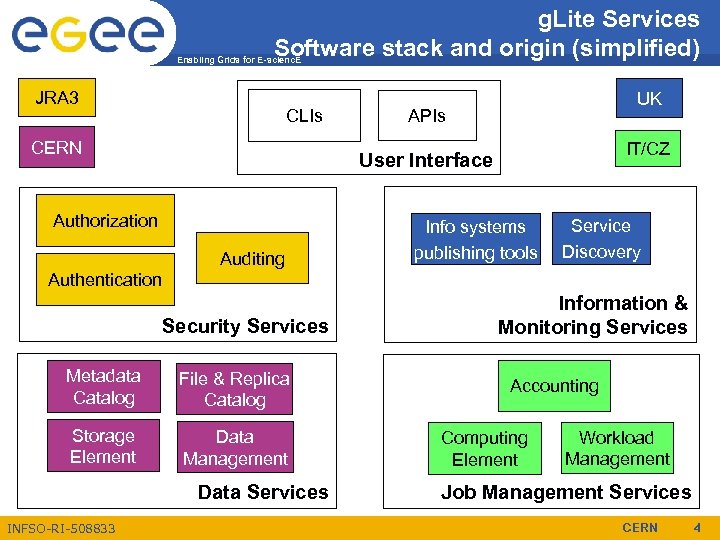

gLite Services: Software stack and origin (simplified)

Figure: gLite service groups. Security Services (Authentication, Authorization, Auditing); Information & Monitoring Services; Data Services (Storage Element, File & Replica Catalog, Metadata Catalog, Data Management); Job Management Services (Computing Element, Workload Management, Accounting); User Interface with CLIs and APIs. Also shown: Service Discovery, information-system publishing tools, and the responsible clusters (JRA3, CERN, UK, IT/CZ).

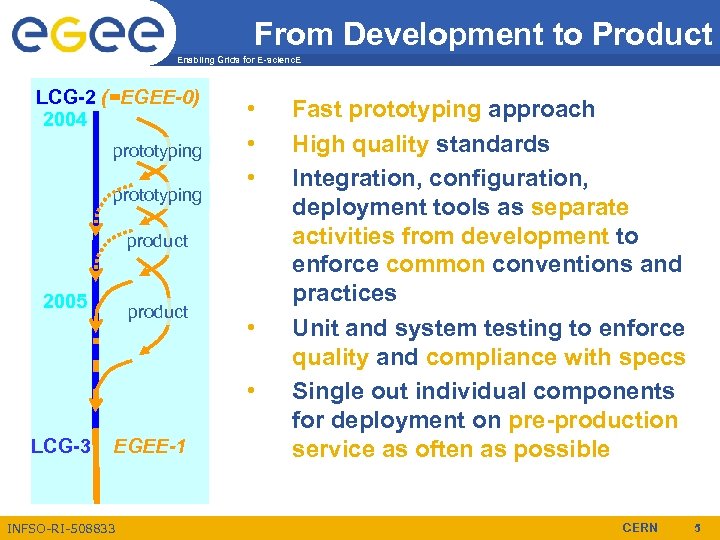

From Development to Product

Figure: from LCG-2 (= EGEE-0), the 2004 product, through prototyping to the 2005 product (LCG-3 / EGEE-1).

- Fast prototyping approach
- High quality standards
- Integration, configuration and deployment tools run as activities separate from development, to enforce common conventions and practices
- Unit and system testing to enforce quality and compliance with the specifications
- Single out individual components for deployment on the pre-production service as often as possible

Software Process

- The JRA1 software process is an iterative method loosely based on RUP and some XP practices
- It comprises two main 12-month development cycles, divided into shorter development-integration-test-release cycles lasting from 2 to 6 weeks
- Both main cycles start with full Architecture and Design phases, but the architecture and design are periodically reviewed and verified
- The process is fully documented in a number of standard documents:
  - Software Configuration Management (SCM) Plan
  - Test Plan
  - Quality Assurance Plan
  - Developer's Guide

SCM

- The SCM Plan is the core document of the software process
- It describes the processes and procedures to be applied to the six SCM activity areas:
  - Software configuration and versioning, tagging and branching conventions
  - Build tools and systems
  - Bug tracking
  - Change control and the Change Control Board (CCB)
  - Release process
  - Process auditing and QA metrics
- It is based on a number of standard methods and frameworks, including:
  - ISO 10007:2003, Quality management systems: Guidelines for configuration management, ISO, 2003
  - IEEE Software Engineering Guidelines (http://standards.ieee.org/reading/ieee/std/se)
  - The Rational Unified Process (http://www-306.ibm.com/software/awdtools/rup/)
- In addition, it adopts best-practice solutions [1] to guarantee the highest possible quality in a very distributed and heterogeneous collaboration

[1] S. P. Berczuk, Software Configuration Management Patterns, Software Patterns Series, Addison-Wesley, 2002; A. Di Meglio et al., A Pattern-based Continuous Integration Framework for Distributed EGEE Grid Middleware Development, Proc. CHEP 2004.

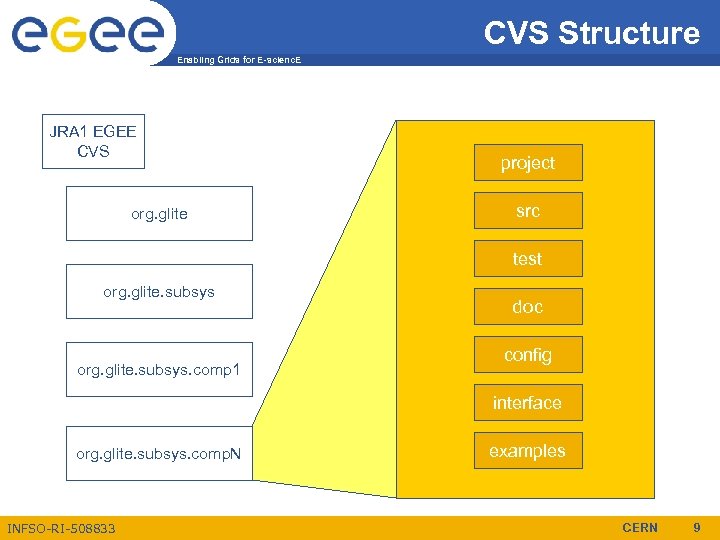

Version and Configuration Control

- Based on CVS, using the CERN Central IT CVS service
- Fixed directory structure for each module
- Rules for tagging and branching (e.g. bug-fix branches)
- Common naming conventions for baseline and release tags
- Configuration files to automate the creation of workspaces
  - Used to enforce build reproducibility
  - Used to create private workspaces for developers
- The rules also apply to external dependencies: all third-party packages and versions are controlled by the build systems, not by the developers

CVS Structure

Figure: layout of the JRA1 EGEE CVS repository. Top-level module org.glite; component modules org.glite.subsys.comp1 to org.glite.subsys.compN; module directories shown: project, src, test, doc, config, interface, examples.
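To make the fixed module layout concrete, here is a minimal Python sketch of a layout check that a build step or commit hook could run. It assumes that every component module must contain all seven directories named in the figure and that module names follow an `org.glite.<subsystem>.<component>` pattern; the function, the constant names and the regular expression are illustrative, not part of the gLite tooling.

```python
import re
from pathlib import Path

# Per-module directories listed in the CVS Structure figure.
# Assumption: every component module is expected to contain all of them.
STANDARD_DIRS = ("project", "src", "test", "doc", "config", "interface", "examples")

# Hypothetical naming rule: org.glite.<subsystem>.<component>
MODULE_NAME = re.compile(r"^org\.glite\.[A-Za-z0-9_-]+\.[A-Za-z0-9_.-]+$")


def check_module(module_dir: Path) -> list[str]:
    """Return human-readable problems found in one checked-out module."""
    problems = []
    if not MODULE_NAME.match(module_dir.name):
        problems.append(f"unexpected module name: {module_dir.name}")
    for name in STANDARD_DIRS:
        if not (module_dir / name).is_dir():
            problems.append(f"missing directory: {name}")
    return problems
```

An empty list means the module conforms; a hook would typically refuse the import or fail the build when the list is non-empty.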

Workspaces

- A workspace is one of the basic SCM patterns
- It is a private area where a build can be produced under configuration control
- It includes all internal and external dependencies, but nothing is actually installed on the computer: everything exists within the workspace sandbox
- Several independent workspaces can coexist on the same computer for parallel development
- The workspace is created using a Configuration Specification File (CSF)
- A CSF contains all the information needed to prepare the workspace by extracting modules and other dependencies from CVS or the central software repository
- There is one CSF for the overall org.glite middleware suite and one CSF for each subsystem
- CSFs are stored in the corresponding modules in CVS and versioned, so that any version of the middleware suite and all its subsystems can be reproduced at any time
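The workspace mechanism can be sketched as a tree of CSFs (one for the suite, one per subsystem) that is flattened into a single pinned set of CVS modules and external packages. The `CSF` class, its fields, and the tag and version strings in the usage part are hypothetical, since the real CSF file format is not described here. The sketch refuses to build a workspace in which two CSFs pin the same module or package to different versions, because that would break reproducibility.

```python
from dataclasses import dataclass, field


@dataclass
class CSF:
    """Hypothetical in-memory model of a Configuration Specification File."""
    name: str
    modules: dict[str, str] = field(default_factory=dict)    # CVS module -> tag
    externals: dict[str, str] = field(default_factory=dict)  # package -> version
    includes: list["CSF"] = field(default_factory=list)      # subsystem CSFs


def resolve(csf: CSF) -> tuple[dict[str, str], dict[str, str]]:
    """Flatten a CSF tree into the modules and externals to put in a workspace.

    Raises ValueError when two CSFs pin the same item to different versions."""
    modules: dict[str, str] = {}
    externals: dict[str, str] = {}

    def merge(target: dict[str, str], source: dict[str, str], kind: str) -> None:
        for key, version in source.items():
            existing = target.setdefault(key, version)
            if existing != version:
                raise ValueError(f"conflicting {kind} {key}: {existing} vs {version}")

    def walk(node: CSF) -> None:
        merge(modules, node.modules, "module")
        merge(externals, node.externals, "external")
        for child in node.includes:
            walk(child)

    walk(csf)
    return modules, externals


if __name__ == "__main__":
    # Hypothetical suite CSF including one subsystem CSF.
    data = CSF("org.glite.data",
               modules={"org.glite.data.catalog": "R_1_2_0"},
               externals={"log4cpp": "0.3.4b"})
    suite = CSF("org.glite", modules={"org.glite": "R_1_4_0"}, includes=[data])
    print(resolve(suite))
```

Because every CSF is itself versioned in CVS, checking out an old suite CSF and resolving it again reproduces the same module and package list.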

Build Tools

- Ant: used for general build management and for all Java modules
- Make + GNU Autotools: for C/C++, Perl and other languages as necessary. Some effort has gone into porting the Makefiles to Windows or using Cygwin, but with very limited success
- CruiseControl: used to automate the nightly and integration builds on the central servers
- An abstraction layer has been built on top of these tools to provide a common interface to all of them, independently of the language, platform and tool used
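The idea of an abstraction layer over Ant and Make can be illustrated by a function that maps one common target name onto the native command of whichever tool a module uses. This is a minimal sketch under stated assumptions: the target names, the rule of detecting the tool from `build.xml` or `configure.ac`/`Makefile.am`, and the target mapping are all hypothetical, and the function only builds the command line without running it.

```python
from pathlib import Path

# Hypothetical common targets exposed by the abstraction layer.
COMMON_TARGETS = {"compile", "test", "clean", "dist"}

# Mapping onto standard Automake goals; 'clean' and 'dist' keep their names.
AUTOMAKE_GOALS = {"compile": "all", "test": "check"}


def build_command(module_dir: Path, target: str) -> list[str]:
    """Translate a common target into the native command for the module's tool.

    Assumptions (illustrative only): a module with build.xml is built with Ant
    and its Ant targets use the common names; a module with configure.ac or
    Makefile.am is built with make after ./configure has already been run."""
    if target not in COMMON_TARGETS:
        raise ValueError(f"unknown target: {target}")
    if (module_dir / "build.xml").exists():
        return ["ant", "-f", str(module_dir / "build.xml"), target]
    if (module_dir / "configure.ac").exists() or (module_dir / "Makefile.am").exists():
        return ["make", "-C", str(module_dir), AUTOMAKE_GOALS.get(target, target)]
    raise FileNotFoundError(f"no known build file in {module_dir}")
```

A caller such as a nightly build script would pass the returned list to `subprocess.run`, so that CruiseControl or a developer only ever deals with the common target names.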

Build Systems

- Common Build System
  - Allows all developers, integrators and testers to manage private builds on their own computers with the same tools and settings (compilers, flags, configurations, etc.)
  - Based on the concept of private workspaces: more than one independent workspace can be created on the same computer
  - Uses a set of common build and properties files to define targets and properties for all project modules
  - External dependencies are stored and versioned either in CVS or in a central repository. All modules use the same versions of the external dependencies across the project
- Central Build System
  - Located at CERN and maintained by the JRA1 Integration Team
  - Based on the Common Build System, but automated by means of CruiseControl and Maven to provide a continuous, automated integration process and to generate the overall project web site
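The rule that all modules use the same versions of external dependencies can be enforced mechanically. The sketch below assumes each module's external dependencies are available as a `{package: version}` mapping (how they are read from the build files is not shown) and reports every package requested in more than one version; the module names and versions in the example are hypothetical.

```python
from collections import defaultdict


def find_version_conflicts(module_deps: dict[str, dict[str, str]]) -> dict[str, set[str]]:
    """Return external packages requested with more than one version.

    module_deps maps a module name to {external package: version}. Any package
    that appears with several versions violates the project-wide rule."""
    versions: dict[str, set[str]] = defaultdict(set)
    for deps in module_deps.values():
        for package, version in deps.items():
            versions[package].add(version)
    return {pkg: vs for pkg, vs in versions.items() if len(vs) > 1}


if __name__ == "__main__":
    deps = {  # hypothetical modules and versions
        "org.glite.a": {"xerces-c": "2.6.0", "log4j": "1.2.8"},
        "org.glite.b": {"xerces-c": "2.6.0"},
        "org.glite.c": {"log4j": "1.2.9"},
    }
    print(find_version_conflicts(deps))  # log4j is requested in two versions
```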

Build Infrastructure

- Two nightly build servers on RH Linux 3.0 (ia32)
  - Clean builds of all components out of HEAD and v.1.x every night
  - Results are published to the gLite web site
  - Tagged every night and fully reproducible
- One continuous build server on RH Linux 3.0 (ia32)
  - Incremental builds out of v.1.x every 60 minutes
  - Results published to the CruiseControl web site
  - Automated build error notifications to developers and the Integration Team
- One nightly build server on RH Linux 3.0 (ia64)
  - Clean builds of all components every night
- One nightly build server on Windows XP
  - Clean builds every night of all components currently ported to Windows
- Platforms supported by the build system:
  - Red Hat Linux 3.0 and binary-compatible platforms (SLC 3, CentOS, etc.), 32- and 64-bit (gcc)
  - Windows XP/2003

Defect Tracking System

- Based on the Savannah project portal at CERN
- Also used for change requests (for example API changes, changes to external library versions, etc.). In this case, the requests are assigned to the Change Control Board for further evaluation (explained later)
- Heavily customized to provide several additional bug states on top of the default Open and Closed (!)
- Each gLite subsystem is tracked as a separate category, and the related bugs are assigned to the responsible clusters

Defect Tracking Configuration

- Two conditions: Open, Closed
- Ten main states: None, Accepted, In Progress, Integration Candidate, Ready for Integration, Ready for Test, Ready for Review, Fixed, Duplicate, Invalid
- Transitions between two states are in principle subject to specific checks (Is it a defect? Can it be fixed? Test passed? Review passed?)
- Not all transitions are allowed, and changing the bug status can trigger additional automated changes (condition, assignment, etc.)
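The configuration above is essentially a state machine. In the Python sketch below, only the ten state names and the two conditions come from the slide; the transition table is an assumption that simply follows the order in which the states are listed, and the rule that Fixed, Duplicate and Invalid close a bug is likewise an illustrative guess at one of the automated side effects.

```python
from enum import Enum


class State(Enum):
    NONE = "None"
    ACCEPTED = "Accepted"
    IN_PROGRESS = "In Progress"
    INTEGRATION_CANDIDATE = "Integration Candidate"
    READY_FOR_INTEGRATION = "Ready for Integration"
    READY_FOR_TEST = "Ready for Test"
    READY_FOR_REVIEW = "Ready for Review"
    FIXED = "Fixed"
    DUPLICATE = "Duplicate"
    INVALID = "Invalid"


S = State
# Hypothetical transition table; the real allowed transitions are not listed.
ALLOWED = {
    S.NONE: {S.ACCEPTED, S.DUPLICATE, S.INVALID},           # "Is it a defect?"
    S.ACCEPTED: {S.IN_PROGRESS},                            # "Can it be fixed?"
    S.IN_PROGRESS: {S.INTEGRATION_CANDIDATE},
    S.INTEGRATION_CANDIDATE: {S.READY_FOR_INTEGRATION, S.IN_PROGRESS},
    S.READY_FOR_INTEGRATION: {S.READY_FOR_TEST},
    S.READY_FOR_TEST: {S.READY_FOR_REVIEW, S.IN_PROGRESS},  # "Test passed?"
    S.READY_FOR_REVIEW: {S.FIXED, S.IN_PROGRESS},           # "Review passed?"
}
CLOSING_STATES = {S.FIXED, S.DUPLICATE, S.INVALID}  # assumption


def transition(current: State, new: State) -> tuple[State, str]:
    """Apply a status change and return (new state, derived condition)."""
    if new not in ALLOWED.get(current, set()):
        raise ValueError(f"transition {current.value} -> {new.value} not allowed")
    # Automated side effect: the Open/Closed condition follows from the state.
    condition = "Closed" if new in CLOSING_STATES else "Open"
    return new, condition
```

Keeping the table as data rather than scattered `if` statements makes it easy to compare against the tracker's actual configuration and to add other automated effects, such as reassignment, in one place.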

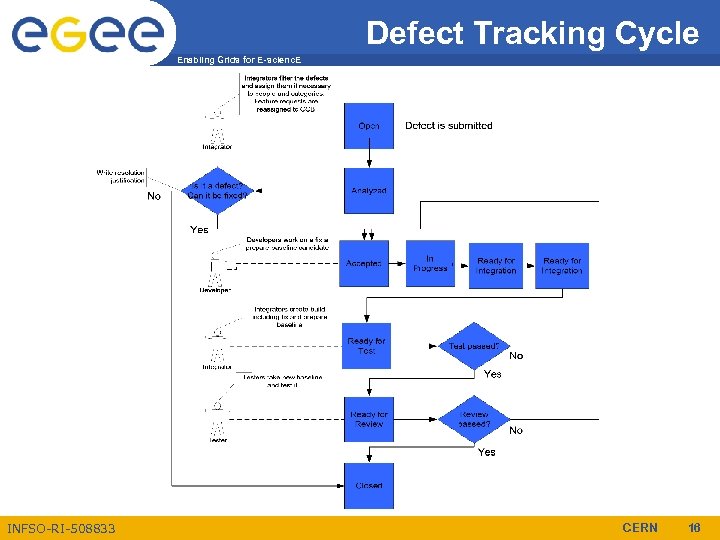

Defect Tracking Cycle

Change Control Board

- All public changes must go through a formal approval process
- The CCB is tasked with collecting and examining the change requests
- Changes are tracked and handled as quickly as possible
- The CCB is not a physical team but a role, assumed by more than one team or group depending on the type of change (interface changes, bug fixes, software configuration changes, etc.)

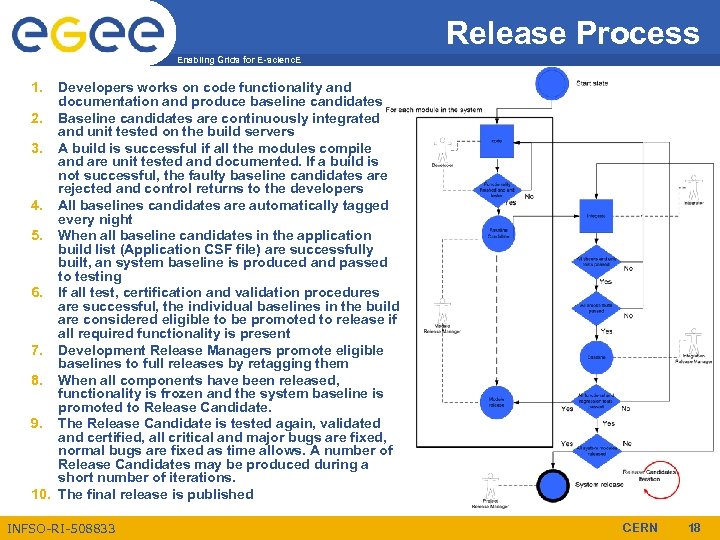

Release Process

1. Developers work on code functionality and documentation and produce baseline candidates.
2. Baseline candidates are continuously integrated and unit tested on the build servers.
3. A build is successful if all the modules compile and are unit tested and documented. If a build is not successful, the faulty baseline candidates are rejected and control returns to the developers.
4. All baseline candidates are automatically tagged every night.
5. When all baseline candidates in the application build list (the application CSF) are successfully built, a system baseline is produced and passed to testing.
6. If all test, certification and validation procedures are successful, the individual baselines in the build become eligible for promotion to release, provided all required functionality is present.
7. Development Release Managers promote eligible baselines to full releases by retagging them.
8. When all components have been released, functionality is frozen and the system baseline is promoted to Release Candidate.
9. The Release Candidate is tested, validated and certified again; all critical and major bugs are fixed, and normal bugs are fixed as time allows. Several Release Candidates may be produced over a small number of iterations.
10. The final release is published.
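Steps 3, 5 and 7 of the release process can be sketched as three small functions: accept or reject each baseline candidate, assemble a system baseline once every module in the application CSF has an accepted candidate, and promote by retagging. The class, the function names and the `_BL_` to `_R_` tag naming rule are hypothetical; the actual gLite tag conventions are not given here.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class BaselineCandidate:
    module: str        # CVS module name
    tag: str           # baseline tag applied by the nightly build
    compiled: bool
    unit_tested: bool
    documented: bool


def build_ok(c: BaselineCandidate) -> bool:
    # Step 3: a candidate passes only if it compiles, is unit tested and documented.
    return c.compiled and c.unit_tested and c.documented


def rejected(candidates: list[BaselineCandidate]) -> list[str]:
    # Step 3: faulty candidates go back to their developers.
    return [c.module for c in candidates if not build_ok(c)]


def system_baseline(candidates: list[BaselineCandidate],
                    csf_modules: list[str]) -> Optional[dict[str, str]]:
    """Step 5: once every module in the application CSF has an accepted
    candidate, return the system baseline as {module: tag}; otherwise None."""
    accepted = {c.module: c.tag for c in candidates if build_ok(c)}
    if all(m in accepted for m in csf_modules):
        return {m: accepted[m] for m in csf_modules}
    return None


def promote(baseline: dict[str, str]) -> dict[str, str]:
    """Step 7: promotion is a retag. The _BL_ -> _R_ rule is hypothetical."""
    return {m: tag.replace("_BL_", "_R_") for m, tag in baseline.items()}
```

Promotion only renames tags and never rebuilds, so a release corresponds to exactly the sources that passed testing.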

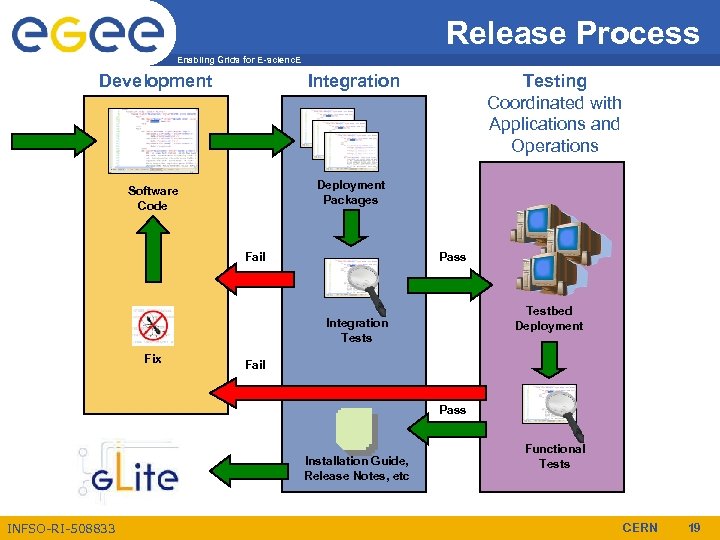

Release Process

Figure: release flow. Development delivers software code to Integration, which produces deployment packages; the packages go through Testing (integration tests, testbed deployment, functional tests), coordinated with Applications and Operations. Failures go back to Development for fixes; a pass produces the release together with the installation guide, release notes, etc.

Integration and Configuration

- During the Integration phase, individual development modules from the different JRA1 clusters are assembled, and uniform deployment packages are produced and tested
- The deployment packages are completed with installation and configuration scripts, documentation and release notes
- The configuration information is encoded using an XML schema. The advantage is that XML allows structure and inheritance, can be validated, and can easily be transformed into other formats as required by external configuration systems. The disadvantage is that it is more difficult to read than key-value pairs if one wants to edit the files directly (but several tools make this easy)
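Inheritance and transformation are the two XML properties the slide highlights. The Python sketch below uses the standard `xml.etree.ElementTree` module with a hypothetical schema: a node configuration names a parent through an `inherits` attribute, inherits its parameters and overrides some of them, and the result is flattened into key-value pairs for tools that need that format. The element names, attribute names and parameter values are assumptions, not the real gLite schema, and schema validation is not shown.

```python
import xml.etree.ElementTree as ET

# Hypothetical configuration files, keyed by name.
CONFIGS = {
    "common": """<config>
        <param name="glite.location" value="/opt/glite"/>
        <param name="log.level" value="INFO"/>
    </config>""",
    "wms-node": """<config inherits="common">
        <param name="log.level" value="DEBUG"/>
        <param name="wms.port" value="9999"/>
    </config>""",
}


def load(name: str) -> dict[str, str]:
    """Return the effective parameters of a config: parent first, then overrides.

    No cycle detection: a config that (indirectly) inherits itself recurses forever."""
    root = ET.fromstring(CONFIGS[name])
    parent = root.get("inherits")
    params = load(parent) if parent else {}
    for p in root.iter("param"):
        params[p.get("name")] = p.get("value")  # child value overrides parent
    return params


def to_key_value(params: dict[str, str]) -> str:
    # Transform into flat key=value lines for tools that cannot read XML.
    return "\n".join(f"{k}={v}" for k, v in sorted(params.items()))


if __name__ == "__main__":
    print(to_key_value(load("wms-node")))
```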

Integration and Configuration

At the moment, three scenarios are in use:

- Simple web-based configuration: the XML-encoded configuration files are stored on a web server, and the configuration scripts access them using a standard web-enabled parser. Special features, such as XInclude to keep all common parameters in centralized files, or node-based access, are easily provided
- Quattor: an installation and configuration system developed at CERN for managing large computing centres. The gLite Quattor templates are created automatically during the build process, and the gLite XML configuration files can easily be transformed into the internal XML format used by Quattor; vice versa, gLite XML files can be generated from Quattor. This is currently used to manage the internal JRA1 "Prototype" infrastructure
- Configuration web service: a web service like most of the gLite services, with which it shares the security infrastructure based on VOMS certificates. It can use multiple back-ends (files, RDBMS), and both administrators and services can access it to read and write configuration information dynamically. A prototype has been developed as a demonstrator
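The XInclude feature of the web-based scenario can be tried with the standard library: `xml.etree.ElementInclude.include` expands `xi:include` elements and accepts a custom loader. In this sketch the loader serves documents from an in-memory dictionary instead of fetching them over HTTP; the URLs, parameter names and values are hypothetical.

```python
import xml.etree.ElementTree as ET
from xml.etree import ElementInclude

# In-memory stand-ins for files on the configuration web server (hypothetical).
DOCUMENTS = {
    "http://config.example.org/common.xml":
        '<param name="glite.location" value="/opt/glite"/>',
    "http://config.example.org/ce-node.xml":
        '<config xmlns:xi="http://www.w3.org/2001/XInclude">'
        '<xi:include href="http://config.example.org/common.xml"/>'
        '<param name="ce.batch.system" value="pbs"/>'
        '</config>',
}


def loader(href, parse, encoding=None):
    """Replacement for ElementInclude.default_loader that reads DOCUMENTS
    instead of the file system (a real setup would fetch the URL)."""
    text = DOCUMENTS[href]
    return ET.fromstring(text) if parse == "xml" else text


def node_params(url: str) -> dict[str, str]:
    root = ET.fromstring(DOCUMENTS[url])
    ElementInclude.include(root, loader=loader)  # expands xi:include in place
    return {p.get("name"): p.get("value") for p in root.iter("param")}


if __name__ == "__main__":
    print(node_params("http://config.example.org/ce-node.xml"))
```

Changing a value in the shared file then changes it for every node configuration that includes it, which is the point of centralizing the common parameters.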

Packaging

- Installation packages for the gLite middleware are produced by the Integration Team
- Supported packaging formats:
  - Source tarballs with build scripts
  - Binary tarballs for the supported platforms
  - Native packages for the supported platforms (e.g. RPMs for Red Hat Enterprise Linux 3.0, MSIs for Windows XP, etc.)
- Packages are created by a packager program integrated into the build system. SPEC files for RPMs are generated automatically (but developers may provide their own if they wish). Extension to the creation of Windows MSIs is foreseen
- Installation and configuration are handled by configuration scripts developed by the Integration Team for each high-level middleware service (e.g. Computing Element, Data Catalog, R-GMA information system, etc.)
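Automatic SPEC generation amounts to filling a template from metadata the build system already has. The Python sketch below renders a minimal SPEC file for files already built into a staging directory; the function, its parameters, the field set and the "copy from a staging directory" approach are assumptions for illustration, not the gLite packager's actual template.

```python
def generate_spec(name: str, version: str, release: str, summary: str,
                  license_: str, description: str, stage_dir: str,
                  files: list[str]) -> str:
    """Render a minimal RPM SPEC file for an already-built staging tree.

    Sketch only: a real packager would also emit dependencies (Requires),
    changelog entries and, for source packages, %prep/%build sections."""
    lines = [
        f"Name: {name}",
        f"Version: {version}",
        f"Release: {release}",
        f"Summary: {summary}",
        f"License: {license_}",
        "",
        "%description",
        description,
        "",
        "%install",
        "mkdir -p %{buildroot}",
        # Copy the staged install tree into the RPM build root.
        f"cp -a {stage_dir}/. %{{buildroot}}/",
        "",
        "%files",
        *files,
    ]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    print(generate_spec("glite-example", "1.0.0", "1", "Example package",
                        "Apache-2.0", "Hypothetical example package.",
                        "/tmp/stage", ["/opt/glite/bin/example"]))
```

Generating the file keeps version and release numbers in one place (the build configuration) instead of being duplicated by hand in every SPEC file.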

Software Quality Assurance

- Software metrics are collected as part of the build process
- Failure to pass a quality check fails the build
- Additional checks (coding style, documentation tags) are implemented in the version control system
- Software defect and QA metrics are collected from the defect tracking system
- Reports and graphs are published on the project web site (this is still limited and must be improved)
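"Failure to pass a quality check fails the build" is typically implemented as a gate step that compares collected metrics with thresholds and exits non-zero on any violation. The metric names and limits below are hypothetical, since the slide does not say which metrics are checked; treating an unmeasured metric as a failure is also a design assumption.

```python
import sys

# Hypothetical thresholds: (kind, limit).
THRESHOLDS = {
    "unit_test_pass_rate": ("min", 1.0),
    "doc_coverage": ("min", 0.8),
    "style_violations": ("max", 0),
}


def failed_checks(metrics: dict[str, float]) -> list[str]:
    """Return a description of every metric that misses its threshold."""
    failures = []
    for name, (kind, limit) in THRESHOLDS.items():
        value = metrics.get(name)
        if value is None:
            failures.append(f"{name}: not measured")
        elif kind == "min" and value < limit:
            failures.append(f"{name}: {value} < {limit}")
        elif kind == "max" and value > limit:
            failures.append(f"{name}: {value} > {limit}")
    return failures


def quality_gate(metrics: dict[str, float]) -> int:
    """Report failures and return an exit status; non-zero fails the build step."""
    failures = failed_checks(metrics)
    for failure in failures:
        print("QA check failed:", failure, file=sys.stderr)
    return 1 if failures else 0
```

A build script would call `sys.exit(quality_gate(metrics))` after the metrics step, so the build tool sees the failure like any other failed target.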

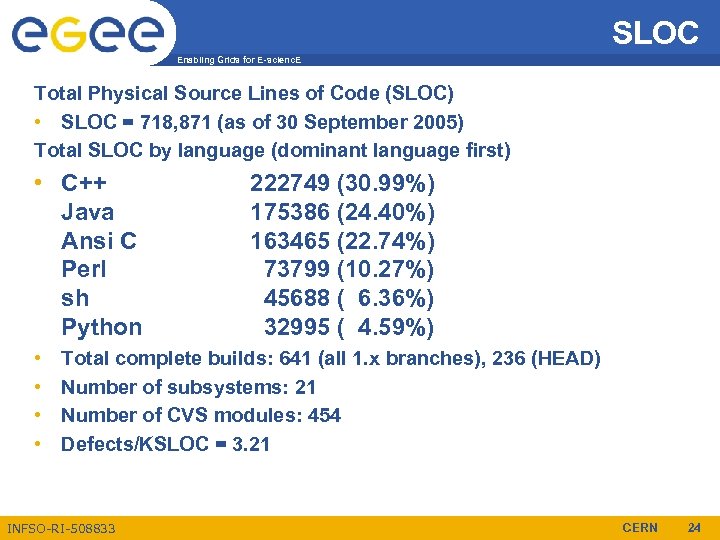

SLOC

Total physical source lines of code (SLOC): 718,871 (as of 30 September 2005)

Total SLOC by language (dominant language first):

| Language | SLOC | Share |
|---|---|---|
| C++ | 222,749 | 30.99% |
| Java | 175,386 | 24.40% |
| ANSI C | 163,465 | 22.74% |
| Perl | 73,799 | 10.27% |
| sh | 45,688 | 6.36% |
| Python | 32,995 | 4.59% |

- Total complete builds: 641 (all 1.x branches), 236 (HEAD)
- Number of subsystems: 21
- Number of CVS modules: 454
- Defects/KSLOC = 3.21
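The percentages and the defect density are simple ratios, and recomputing them is a useful consistency check. The figures below are copied from the slide; the functions are a sketch. One thing the recomputation shows: the six listed languages add up to 714,082 lines, about 99.33% of the total, so roughly 0.7% of the code is in languages not listed. A density of 3.21 defects/KSLOC over 718,871 SLOC corresponds to roughly 2,300 recorded defects.

```python
SLOC_BY_LANGUAGE = {  # figures from the SLOC slide (30 September 2005)
    "C++": 222_749, "Java": 175_386, "ANSI C": 163_465,
    "Perl": 73_799, "sh": 45_688, "Python": 32_995,
}
TOTAL_SLOC = 718_871


def share(sloc: int, total: int = TOTAL_SLOC) -> float:
    """Percentage of the total, rounded to two decimals as on the slide."""
    return round(100 * sloc / total, 2)


def defects_per_ksloc(defects: int, sloc: int = TOTAL_SLOC) -> float:
    """Defect density: defects per thousand source lines of code."""
    return defects / (sloc / 1000)


listed = sum(SLOC_BY_LANGUAGE.values())
for lang, n in SLOC_BY_LANGUAGE.items():
    print(f"{lang:7} {n:>8,} {share(n):6.2f}%")
print(f"listed languages: {listed:,} ({share(listed)}%)")
```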

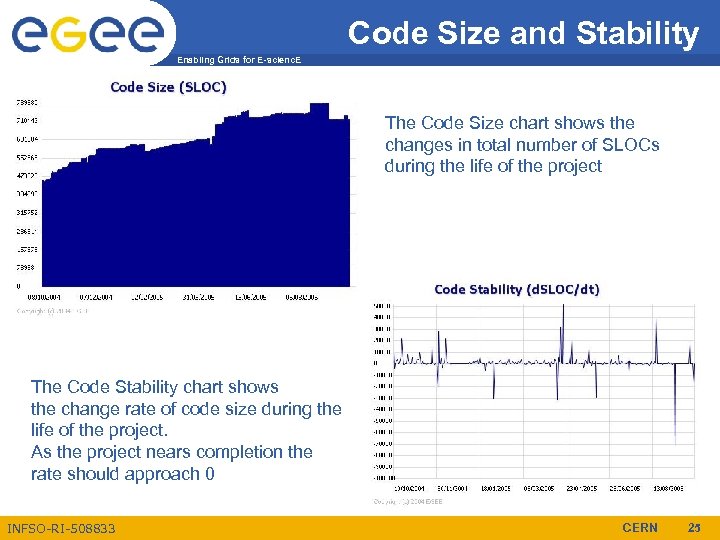

Code Size and Stability

The Code Size chart shows the change in the total number of SLOC over the life of the project. The Code Stability chart shows the rate of change of the code size over the life of the project; as the project nears completion, this rate should approach 0.
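A code stability curve can be derived from periodic SLOC snapshots. The sketch below assumes stability is measured as the relative change in total SLOC between consecutive snapshots (the slide does not define the exact formula); the dates and sizes used in the example are hypothetical.

```python
def change_rates(snapshots: list[tuple[str, int]]) -> list[tuple[str, float]]:
    """Relative change in total SLOC between consecutive (date, sloc) snapshots.

    A value near 0 means the code base has stopped growing or shrinking."""
    rates = []
    for (_, prev), (date, curr) in zip(snapshots, snapshots[1:]):
        rates.append((date, (curr - prev) / prev))
    return rates


if __name__ == "__main__":
    history = [("2005-07", 600_000), ("2005-08", 690_000), ("2005-09", 700_350)]
    for date, rate in change_rates(history):  # hypothetical snapshots
        print(date, f"{rate:+.1%}")
```

Net size change can hide churn: lines rewritten without changing the total count do not show up, so a flat curve is necessary but not sufficient evidence of stability.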

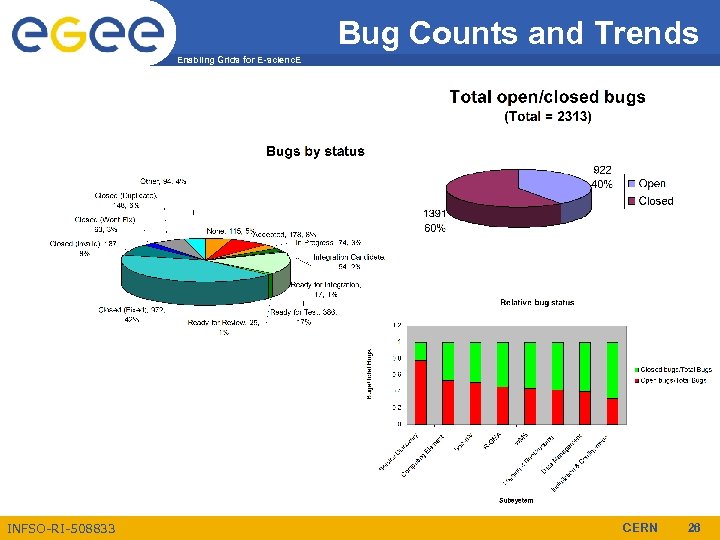

Bug Counts and Trends

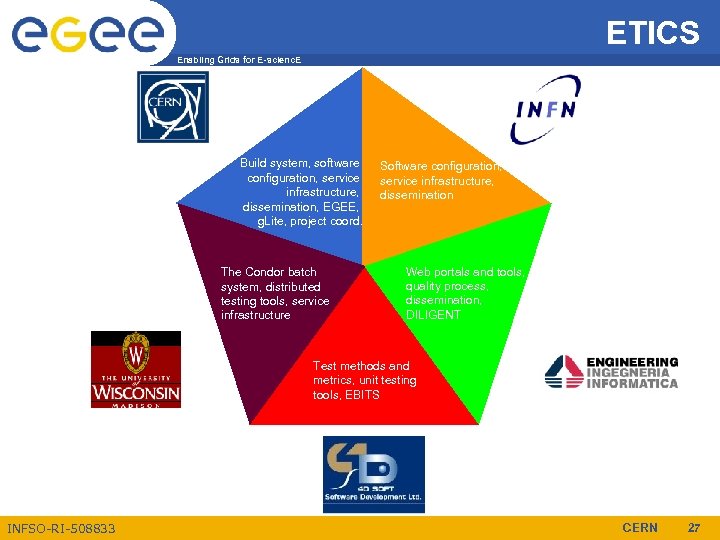

ETICS

- Build system, software configuration, service infrastructure, dissemination, EGEE, gLite, project coordination
- The Condor batch system, distributed testing tools, service infrastructure
- Software configuration, service infrastructure, dissemination
- Web portals and tools, quality process, dissemination, DILIGENT
- Test methods and metrics, unit testing tools, EBITS

What is ETICS?

- ETICS stands for E-Infrastructure for Testing, Integration and Configuration of Software
- The major goals of the project are:
  - To set up an international, well-managed distributed service for software building, integration and testing that international collaborative software development projects can use as a commodity
  - To organize and disseminate a database of software configuration and interoperability information that projects can use to validate and benchmark their products against existing middleware and applications
  - To investigate and promote the establishment of an international software quality certification process, with the ultimate goal of improving the overall quality of distributed software projects

The ETICS Service

- The distributed build and test service is the core of ETICS
- The goal is to free open-source, academic and commercial projects from the need to set up their own distributed infrastructure, so that they can use a managed service instead
- The service will initially run in three resource centres (CERN, INFN and UoW) and will expand to more locations
- The service will provide all the tools and information needed to build and test distributed software on many different platforms, producing reports and notifications
- It will have a web portal to access information and to register projects to be compiled and tested

More information

- http://www.glite.org
- http://cern.ch/egee-jra1
- http://www.eu-etics.org
