- Number of slides: 32

Electronic Systems Center
Integrity - Service - Excellence

Integrated Developmental Test & Evaluation (IDT&E) Process with Quality Center (QC) Overview
AFOTEC Pre-Core Meeting
Mr. Mike Phillips, ESC/ENIT
28 Mar 11

Overview

- Assumptions
- Defense Business System (DBS) focused; Gunter perspective based on 30+ years of experience
- Process and concepts can be tailored to meet new acquisition and sustainment programs
- Does NOT address specific environments or infrastructure
- Engrained in current SEP Process / current best practices
- In use by several major Acquisition Programs where ESC/ENIT (Gunter) is the appointed RTO (AF-IPPS, DEAMS, FIRST)
- DOT&E and DDT&E recognized and accepted processes

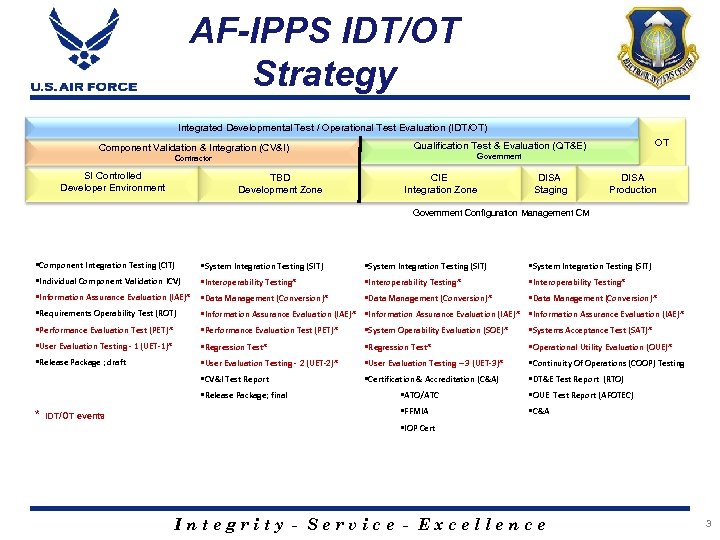

AF-IPPS IDT/OT Strategy

Integrated Developmental Test / Operational Test Evaluation (IDT/OT)

- Phases: Component Validation & Integration (CV&I); Qualification Test & Evaluation (QT&E); OT
- Government; Contractor
- Environments: SI Controlled Developer Environment (TBD); Development Zone; CIE Integration Zone; DISA Staging; DISA Production
- Government Configuration Management (CM)

Test events and products, in slide order (* marks IDT/OT events):

- Component Integration Testing (CIT)
- System Integration Testing (SIT)
- Individual Component Validation (ICV)
- Interoperability Testing*
- Data Management (Conversion)*
- Information Assurance Evaluation (IAE)*
- Data Management (Conversion)*
- Requirements Operability Test (ROT)
- Information Assurance Evaluation (IAE)*
- Performance Evaluation Test (PET)*
- System Operability Evaluation (SOE)*
- Systems Acceptance Test (SAT)*
- User Evaluation Testing - 1 (UET-1)*
- Regression Test*
- Operational Utility Evaluation (OUE)*
- Release Package; draft
- User Evaluation Testing - 2 (UET-2)*
- User Evaluation Testing - 3 (UET-3)*
- Continuity Of Operations (COOP) Testing
- CV&I Test Report
- Certification & Accreditation (C&A)
- DT&E Test Report (RTO)
- ATO/ATC
- OUE Test Report (AFOTEC)
- FFMIA
- Release Package; final
- C&A
- IOP Cert

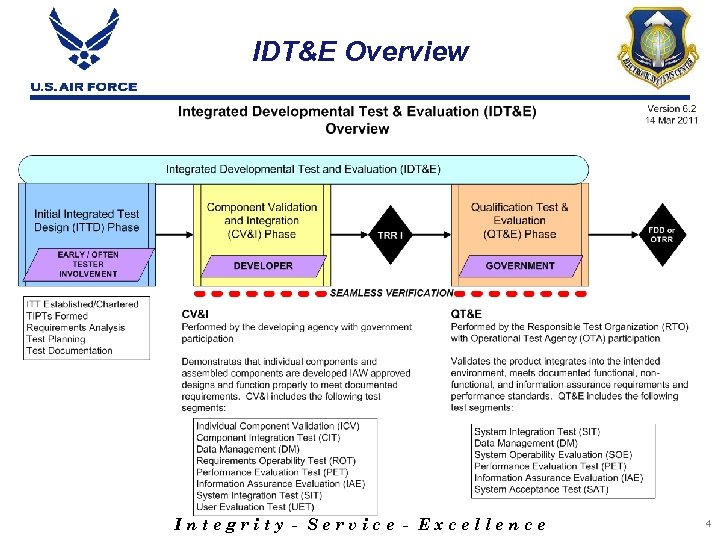

IDT&E Overview

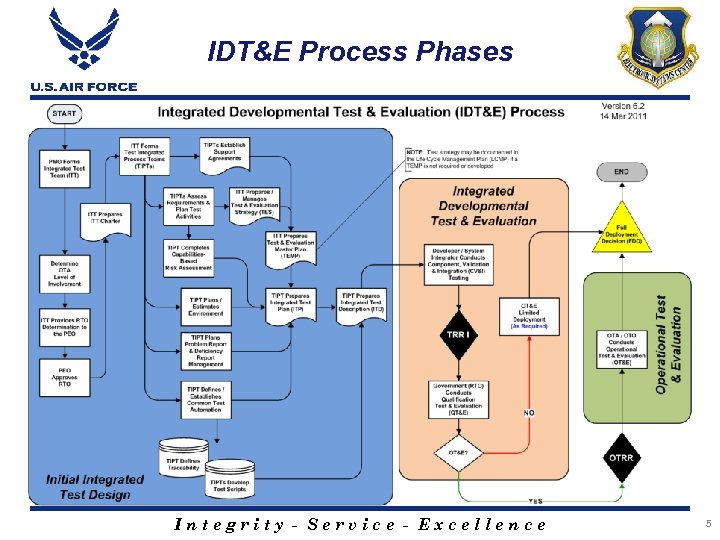

IDT&E Process Phases

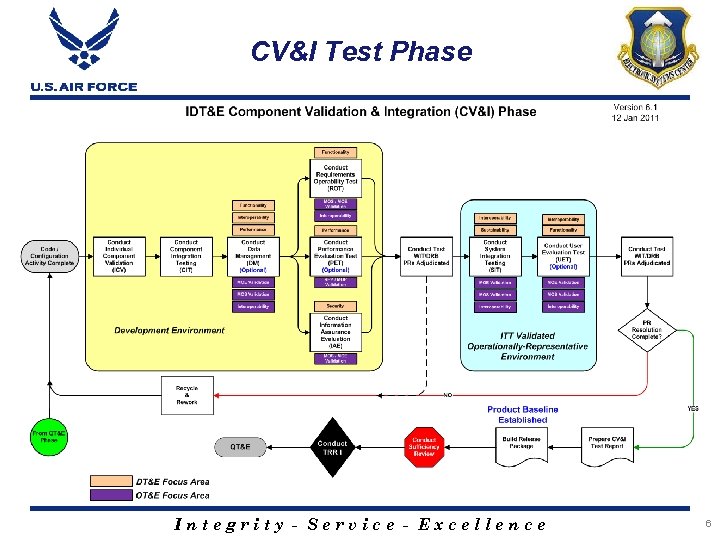

CV&I Test Phase

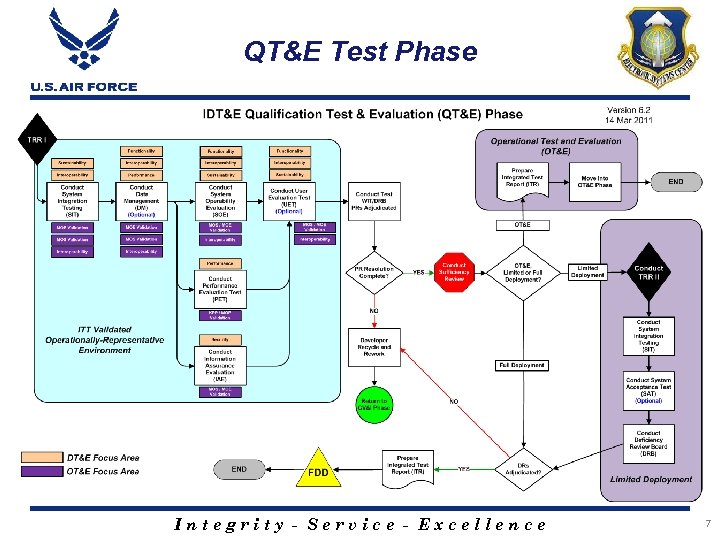

QT&E Test Phase

Open Discussion

Quality Center (QC) 9.2

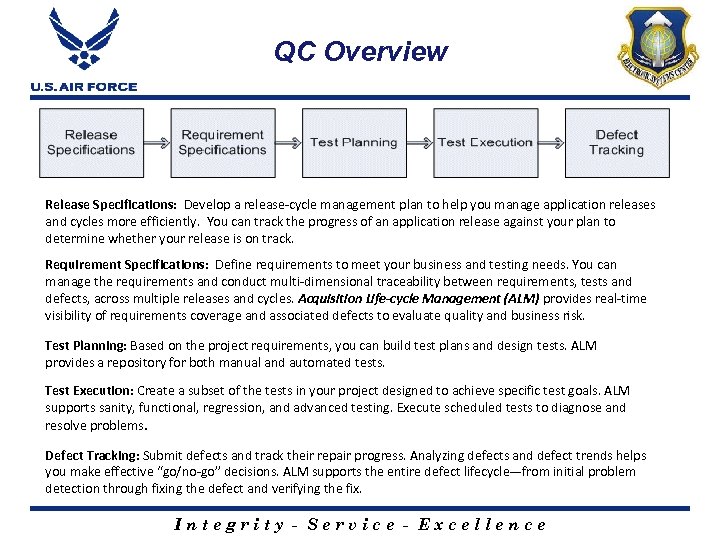

QC Overview

- Release Specifications: Develop a release-cycle management plan to help you manage application releases and cycles more efficiently. You can track the progress of an application release against your plan to determine whether your release is on track.
- Requirement Specifications: Define requirements to meet your business and testing needs. You can manage the requirements and conduct multi-dimensional traceability between requirements, tests, and defects, across multiple releases and cycles. Application Lifecycle Management (ALM) provides real-time visibility of requirements coverage and associated defects to evaluate quality and business risk.
- Test Planning: Based on the project requirements, you can build test plans and design tests. ALM provides a repository for both manual and automated tests.
- Test Execution: Create a subset of the tests in your project designed to achieve specific test goals. ALM supports sanity, functional, regression, and advanced testing. Execute scheduled tests to diagnose and resolve problems.
- Defect Tracking: Submit defects and track their repair progress. Analyzing defects and defect trends helps you make effective “go/no-go” decisions. ALM supports the entire defect lifecycle, from initial problem detection through fixing the defect and verifying the fix.
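
The traceability between requirements, tests, and defects described in the QC Overview can be illustrated with a small sketch. This is not the Quality Center data model or API; the `Requirement` class, the `coverage_summary` function, and all identifiers (`REQ-1`, `T-1`, `PR-7`) are hypothetical names chosen only to show how coverage and linked-defect counts might be derived.

```python
from dataclasses import dataclass, field


@dataclass
class Requirement:
    """Hypothetical requirement record with links to tests and defects."""
    req_id: str
    test_ids: list = field(default_factory=list)    # tests covering this requirement
    defect_ids: list = field(default_factory=list)  # defects linked to this requirement


def coverage_summary(requirements, passed_tests):
    """Return (covered, verified, with_defects) counts for a list of requirements.

    covered:      requirements with at least one linked test
    verified:     covered requirements whose linked tests have all passed
    with_defects: requirements with at least one linked defect
    """
    covered = [r for r in requirements if r.test_ids]
    verified = [r for r in covered if all(t in passed_tests for t in r.test_ids)]
    with_defects = [r for r in requirements if r.defect_ids]
    return len(covered), len(verified), len(with_defects)


reqs = [
    Requirement("REQ-1", test_ids=["T-1", "T-2"]),
    Requirement("REQ-2", test_ids=["T-3"], defect_ids=["PR-7"]),
    Requirement("REQ-3"),  # no linked tests yet: a coverage gap
]
summary = coverage_summary(reqs, passed_tests={"T-1", "T-2"})
print(summary)  # (2, 1, 1)
```

In this example two of three requirements have tests, one is fully verified, and one has a linked defect, which is the kind of roll-up a go/no-go discussion would draw on.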

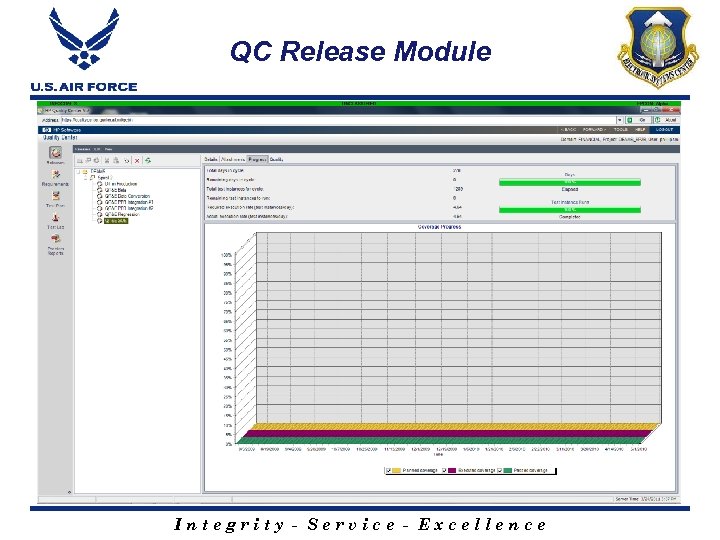

QC Release Module

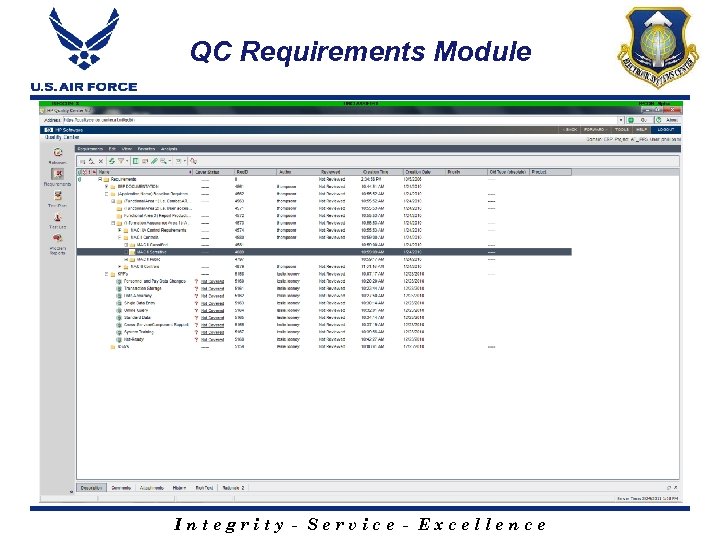

QC Requirements Module

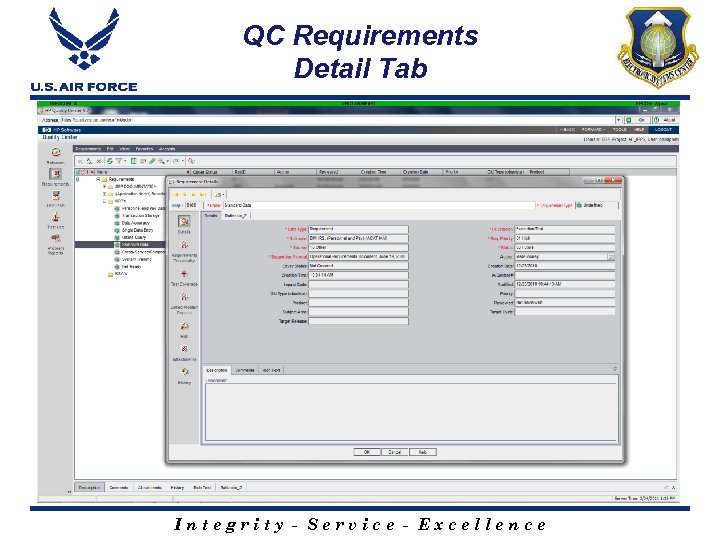

QC Requirements Detail Tab

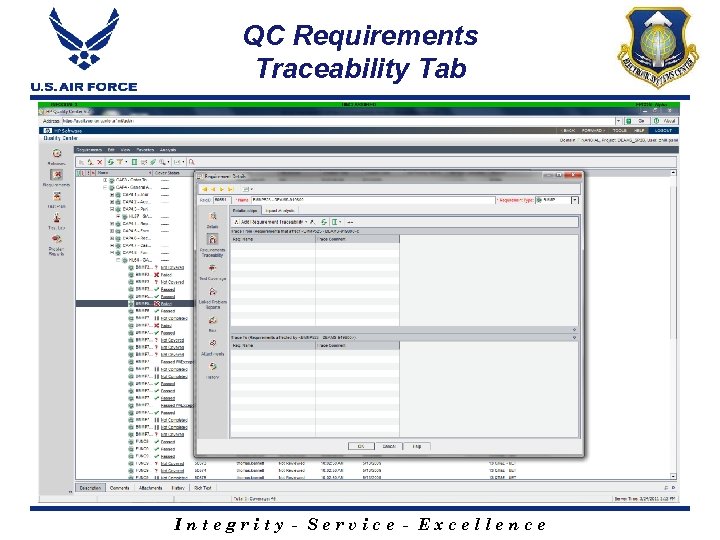

QC Requirements Traceability Tab

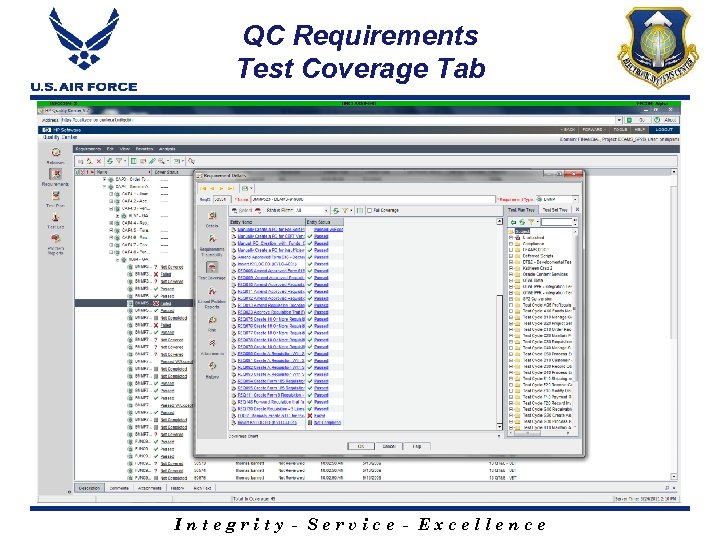

QC Requirements Test Coverage Tab

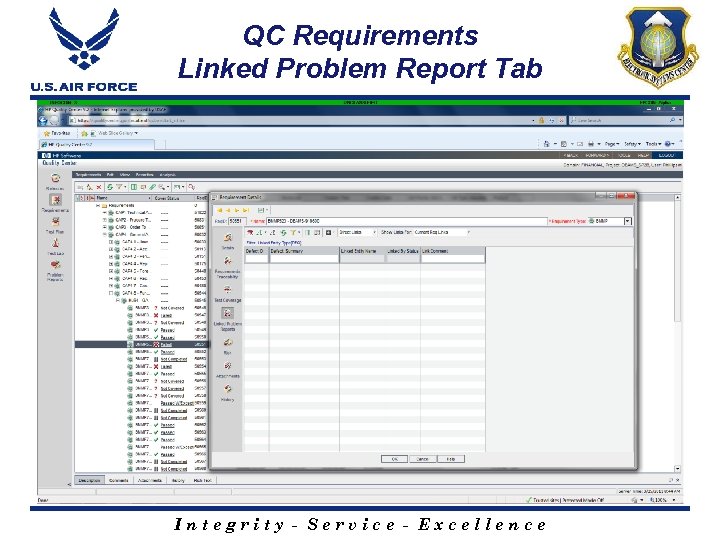

QC Requirements Linked Problem Report Tab

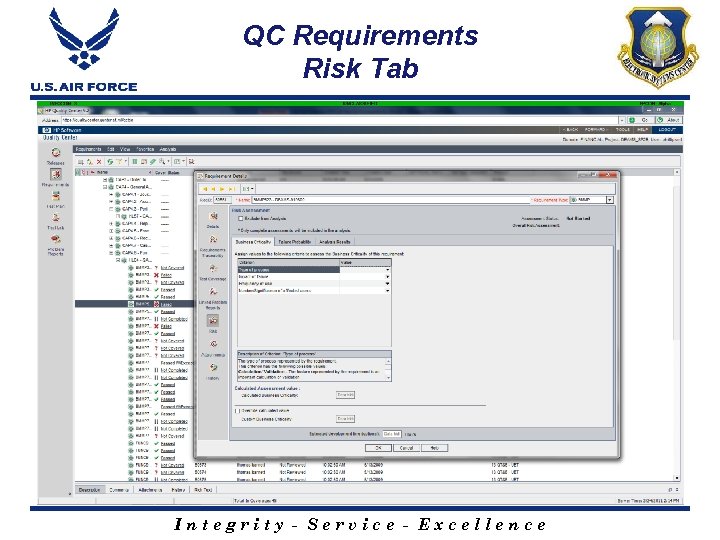

QC Requirements Risk Tab

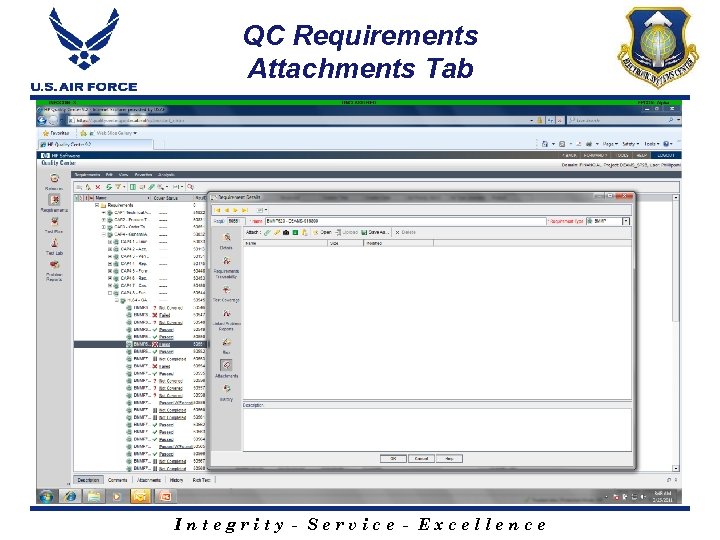

QC Requirements Attachments Tab

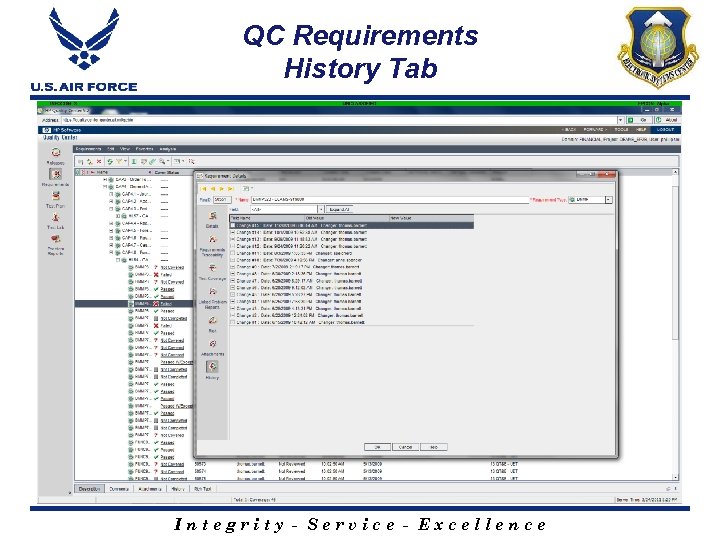

QC Requirements History Tab

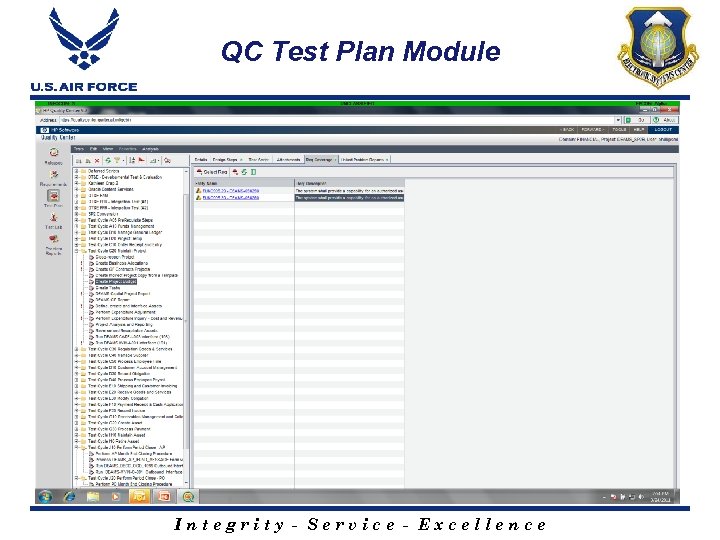

QC Test Plan Module

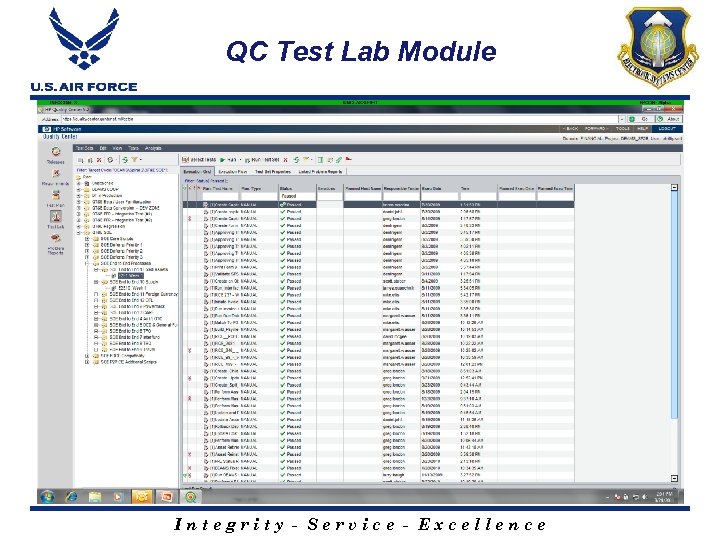

QC Test Lab Module

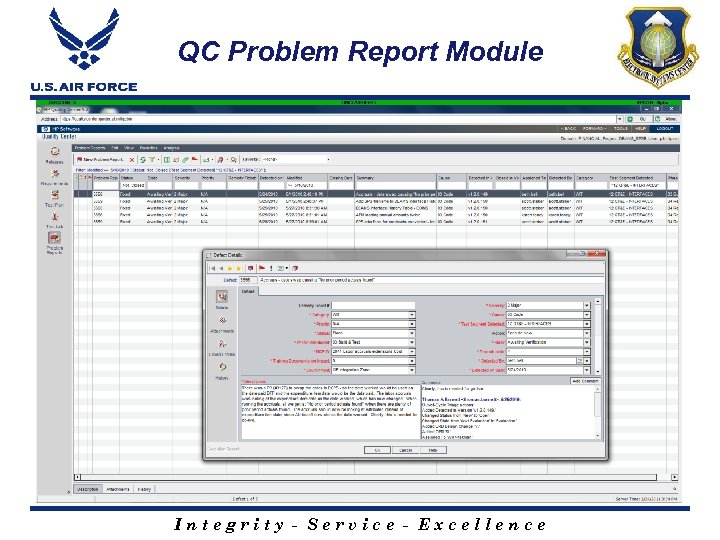

QC Problem Report Module

Questions?

Back-Up

Terms & Definitions

- Component Integration Test (CIT): CIT, conducted during the CV&I Test Phase, validates completed components can be integrated into a complete system in accordance with specified requirements and approved designs.
- Component Validation and Integration (CV&I): CV&I, performed by the developing agency or system integrator and observed by the government RTO, demonstrates that each individual component and the assembled components are developed in accordance with the approved design and function properly to meet specified requirements. CV&I may be conducted in iterations as components are completed and integrated.
- Data Management (DM): DM, performed during both the CV&I and QT&E Test Phases, is the process to Extract, Transform, and Load (ETL) data from one system for use in another, usually for the purpose of application interoperability or system modernization. DM may consist of Data Migration, Data Conversion, and/or Data Validation.
- Deficiency Report (DR): The generic term used within the USAF to record, submit, and transmit deficiency data, which may include, but is not limited to, a Deficiency Report involving quality, materiel, software, warranty, or informational deficiency data.
- Full Deployment Decision (FDD): FDD is the final decision made by the MDA authorizing an increment of the program to deploy software for full operational use.
- Functionality: The capacity of a system to provide useful function and the capability of the system to serve the purpose for which it was designed (meets defined requirements).
- Individual Component Validation (ICV): ICV, conducted during the CV&I Test Phase, validates each individual component is developed and operates in accordance with specified requirements and approved designs.
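
The Extract, Transform, and Load steps named in the Data Management (DM) definition above, together with Data Validation, can be sketched as follows. This is a minimal illustration under assumed conditions, not a procedure from the briefing: the legacy field names (`ID`, `LNAME`, `GRADE`), the target schema, and the validation rule are all hypothetical.

```python
def extract(legacy_rows):
    """Extract: read records from the source system (here, an in-memory list)."""
    return list(legacy_rows)


def transform(row):
    """Transform: map hypothetical legacy field names and formats to a target schema."""
    return {
        "member_id": row["ID"].strip(),
        "last_name": row["LNAME"].strip().title(),
        "grade": row["GRADE"].upper(),
    }


def validate(row):
    """Data validation: reject records that lack an ID or a name."""
    return bool(row["member_id"]) and bool(row["last_name"])


def load(rows, target):
    """Load: write converted records into the target store, keyed by member ID."""
    for row in rows:
        target[row["member_id"]] = row


legacy = [
    {"ID": " 001 ", "LNAME": "smith", "GRADE": "e5"},
    {"ID": "", "LNAME": "jones", "GRADE": "o3"},  # fails validation: no ID
]
converted = [transform(r) for r in extract(legacy)]
valid = [r for r in converted if validate(r)]
rejected = len(converted) - len(valid)

target = {}
load(valid, target)
print(len(target), rejected)  # 1 1
```

Counting rejected records separately from loaded ones gives a simple conversion statistic that a tester could compare against expected totals.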

- Information Assurance Evaluation (IAE): IAE, conducted during both the CV&I and QT&E Test Phases, evaluates information-related risks to a system. It consists of assessments of: (1) network security policies; (2) identification and authentication; (3) access controls; (4) auditing; and (5) the confidentiality, integrity, and availability of data and their delivery systems. IAE may include application of Security Technical Implementation Guides (STIGs), Security Readiness Review (SRR) Scans, Information Assurance Control Validation, and Application Software Assurance Center of Excellence (ASACOE) application code scans.
- Initial Integrated Test Design (IITD): IITD is the process of planning for the execution of disciplined IDT&E for a program. It includes activities such as identifying/appointing the RTO, forming the Integrated Test Team, preparing test strategies and planning documentation, and establishing a common T&E database.
- Integrated Developmental Test and Evaluation (IDT&E): IDT&E is conducted to evaluate design, quality, performance, functionality, security, interoperability, supportability, suitability, usability, and maturity of a system or capability in an operationally relevant environment. IDT&E includes contractor and government testing and is conducted over the life cycle of a system. IDT&E supports the acquisition of new systems as well as the enhancement/sustainment of capabilities within existing systems prior to fielding decisions.
- Integrated Test Description (ITD): The ITD establishes the scope of the Integrated Developmental Test and Evaluation (IDT&E) activities of the program for a SPECIFIC release. It documents the specific activities to be conducted for all IDT&E test phases and test segments and: (1) identifies the test participants and test locations for each IDT&E test segment; (2) identifies the test objectives for each IDT&E test segment; (3) defines the test environments; (4) defines the test schedule; and (5) defines the exit criteria for each test phase. It integrates developer/system integrator and government test approaches and activities into a single overarching set of descriptions. See Integrated Test Plan (ITP).

- Integrated Test Plan (ITP): The ITP establishes the scope of the Integrated Developmental Test and Evaluation (IDT&E) activities of the program/family of systems. This plan should integrate developer and government test strategies into a single overarching plan covering all IDT&E events. ITPs are written at the major release level and reviewed and updated as required. See Integrated Test Description (ITD).
- Integrated Test Report (ITR): The ITR, prepared by the RTO at the conclusion of all IDT&E test segments, documents the results of actual test execution against planned test events. The ITR: (1) reports the rating given to each test objective at the completion of testing; (2) documents requirements verification and coverage statistics for each IDT&E test segment; (3) documents the problem reports and appropriate resolution actions for all PRs documented in each IDT&E test segment; and (4) provides an overall RTO evaluation and risk assessment of the success of IDT&E activities.
- Integrated Test Team (ITT): The ITT is a cross-functional chartered team of empowered representatives/organizations that assists the acquisition community with all aspects of T&E planning and execution. The ITT will ensure development of the TES, TEMP, Integrated Test Plan (ITP), and other T&E related documentation.
- Interoperability: The ability of systems to provide services to and accept services from other systems and to use the services so exchanged to enable them to operate effectively together. The condition achieved among communications-electronics systems or items of communications-electronics equipment when information or services can be exchanged directly and satisfactorily between them or their users (interfaces).
- Key Performance Parameter (KPP): Those attributes or characteristics of a system that are considered critical or essential to the development of an effective military capability. A KPP normally has a threshold, representing the required value, and an objective, representing the desired value.
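
The threshold/objective distinction in the KPP definition above can be made concrete with a short sketch. The rating labels, the `rate_kpp` function, and the example values are assumptions for illustration only; the briefing does not prescribe a rating scheme.

```python
def rate_kpp(measured, threshold, objective):
    """Rate a measured value against a KPP's threshold (required) and objective (desired).

    Works whether higher or lower values are better: the direction is
    inferred from which side of the threshold the objective lies on.
    """
    higher_is_better = objective >= threshold
    if higher_is_better:
        meets_threshold = measured >= threshold
        meets_objective = measured >= objective
    else:
        meets_threshold = measured <= threshold
        meets_objective = measured <= objective

    if meets_objective:
        return "meets objective"
    if meets_threshold:
        return "meets threshold"
    return "below threshold"


# Hypothetical KPP: availability in percent (higher is better).
print(rate_kpp(99.0, threshold=98.0, objective=99.5))  # meets threshold

# Hypothetical KPP: response time in seconds (lower is better).
print(rate_kpp(1.5, threshold=3.0, objective=2.0))     # meets objective
```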

- Limited Deployment: Limited Deployment begins when the Functional Sponsor and the MDA approve fielding the capability into an operational environment for: (1) IOT&E of the implementation and use of a major release at one or more selected operational sites; or (2) a QT&E test event extended into the production environment, allowing a limited user base to use the system under actual operating conditions. It provides the opportunity to observe the initial implementation and use of the system under actual operating conditions prior to the Full Deployment Decision (FDD).
- Measure of Effectiveness (MOE): A measure of the overall degree of mission accomplishment of a system used by representative personnel in the context of the organization, doctrine, tactics, threat (including countermeasures and nuclear threats), and environment in the planned or operational employment of the system.
- Measure of Suitability (MOS): A measure of the degree to which a system can be placed satisfactorily in field use with consideration given to availability, compatibility, transportability, interoperability, reliability, wartime usage rates, maintainability, safety, human factors, manpower supportability, logistics supportability, natural environmental effects and impacts, documentation, and training requirements.
- Measure of Performance (MOP): Similar to a Key Performance Parameter, a MOP is a measure of a system's performance expressed as speed, frequency, or other distinctly quantifiable performance features (number of concurrent users, response times, availability rates, etc.).
- Operational Test Agency (OTA): An independent agency reporting directly to the Service Chief that plans and conducts operational tests, reports results, and provides evaluations of overall operational capability of systems as determined by effectiveness, suitability, and other operational considerations.

- Operational Test and Evaluation (OT&E): OT&E evaluates a prospective system's operational effectiveness, suitability, and operational capabilities. In addition, OT&E provides information on organization, personnel requirements, doctrine, and tactics. It may also provide data to support or verify material in operating instructions, publications, and handbooks.
- Operational Test Readiness Review (OTRR): OTRR is a multi-disciplined product and process assessment to ensure that the production configuration system can proceed into Initial Operational Test and Evaluation (IOT&E) with a high probability of success.
- Performance Evaluation Test (PET): PET evaluates the performance of the integrated system by employing techniques which may include bandwidth analysis, load testing, and stress testing to ensure the system performs in accordance with specified requirements and approved designs.
- Problem Report (PR): PRs are used to describe an error, flaw, mistake, failure, or fault in a computer program or system that produces an incorrect or unexpected result, or causes it to behave in unintended ways. See Watch Item (WIT).
- Qualification Test and Evaluation (QT&E): QT&E, performed by government test representatives, validates that the product integrates into its intended environment, meets specified requirements in accordance with the approved design, and meets performance standards, and that the information assurance controls employed by the system meet DoD standards and policies. QT&E is performed in a government-provided and managed operationally-relevant environment.
- Regression Testing: Conducted throughout the IDT&E process, regression testing validates that existing capabilities and functionality are not diminished or damaged by changes or enhancements introduced to a system. Regression testing also includes “break-fix” testing, which verifies that implemented corrections function to meet specified requirements.

- Requirements Operability Test (ROT): ROT, conducted during the CV&I Test Phase, validates the integrated system functions properly and meets specified requirements and approved designs.
- Responsible Test Organization (RTO): The lead government developmental test organization on the ITT that is qualified to conduct and responsible for overseeing DT&E.
- Seamless Verification: Seamless verification promotes using integrated testing procedures coupled with tester collaboration in early requirements definition and system development activities. It shifts T&E away from the traditional "pass-fail" model to one of providing continuous feedback and objective evaluations of system capabilities and limitations throughout system development and fielding.
- Sufficiency Review: Sufficiency Reviews are conducted as: (1) a CV&I assessment prior to TRR I to determine the sufficiency of CV&I test activities, provide an engineering go/no-go recommendation, and determine readiness to conduct TRR I; and (2) a QT&E mid-point assessment of IDT&E effectiveness used to determine the readiness for movement into Limited Deployment to conduct System Acceptance Test (SAT).
- Sustainability: The ability to maintain the necessary level and duration of operational activity to achieve military objectives. Sustainability is a function of providing for and maintaining those levels of ready forces, materiel, and consumables necessary to support military effort.
- System Acceptance Test (SAT): SAT, conducted during the QT&E Test Phase as part of Limited Deployment, obtains confirmation that a system meets mutually agreed-upon requirements. The end users or subject matter experts provide such confirmation after they conduct a period of trial or acceptance testing.

Terms & Definitions
System Integration Test (SIT): SIT, conducted during both the CV&I and QT&E Phases, validates the integration of a system into an operationally relevant environment (installation, removal, and back-up and recovery procedures).
System Operability Evaluation (SOE): SOE, conducted during the QT&E Test Phase and managed by the RTO, is traceable, scenario- and/or script-driven end-to-end qualification testing that validates that the integrated system operates in accordance with specified requirements and approved designs.
Test and Evaluation Master Plan (TEMP): The TEMP documents the overall structure and objectives of the T&E program. It provides a framework within which to generate detailed T&E plans, and it documents schedule and resource implications associated with the T&E program. The TEMP identifies the necessary developmental and operational test activities. It relates program schedule, test management strategy and structure, and required resources to: (1) critical operational issues; (2) critical technical parameters; (3) objectives and thresholds documented in the requirements document; and (4) milestone decision points. If a TEMP is not prepared, document your test strategy in the Life Cycle Management Plan (LCMP).
Test and Evaluation Strategy (TES): The TES is the initial integrated T&E strategy for the program that describes how operational capability requirements will be tested and evaluated in support of the acquisition strategy. The TES is the initial version of the Test and Evaluation Master Plan (TEMP).
Test Integrated Process Teams (TIPTs): Any temporary group, formed by the ITT, consisting of test and other subject matter experts who are focused on specific planning, issues, or problems.


Terms & Definitions
Test Readiness Review I (TRR I): TRR I is the formal review, conducted by the PM, that signifies the CV&I portion of IDT&E is complete and determines whether the system moves into the QT&E portion of IDT&E testing. The results of TRR I will demonstrate that each individual component and the assembled components are developed or configured in accordance with the approved design and function properly to meet specified requirements.
Triage: Periodic meetings held during IDT&E execution to determine the priority of the problem reports generated during test and evaluation activities, based on the severity of their condition. Triage meetings typically determine the priority and order in which problem reports are analyzed and addressed.
User Evaluation Test (UET): UET, normally conducted during the CV&I Test Phase, is typically ad-hoc testing conducted by end-users of the system. UET is conducted to offer an early look at the maturity of the system and to evaluate the feasibility of the system to meet mission requirements.
Validation: Confirms that the performance of the system, in the intended environment, fulfills its intended purpose (as defined by end-users). Validation answers the question: "Did you build the right thing?"
Verification: Confirms the performance of system components/elements against specified requirements. Verification answers the question: "Did you build it right?"
Watch Item: Watch items are unique to test and evaluation and are used as a method to observe identified conditions which do not fully satisfy deficiency report submission criteria. If used, WITs complement, but do not replace, the official USAF deficiency reporting process. See Problem Report (PR).
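
The Triage definition above, ordering problem reports by severity, can be illustrated with a minimal Python sketch. Everything in it is an assumption made for illustration: the ProblemReport fields, the numeric severity scale (1 = most severe), the tie-break on report ID, and the sample data are hypothetical. It does not represent Quality Center's data model or the USAF deficiency reporting process.

```python
from dataclasses import dataclass


@dataclass
class ProblemReport:
    pr_id: str      # hypothetical identifier format
    severity: int   # assumed scale: 1 = most severe, larger = less severe
    summary: str


def triage(reports):
    """Return problem reports in the order they should be analyzed.

    Most severe first; ties are broken by report ID so the order is stable.
    """
    return sorted(reports, key=lambda pr: (pr.severity, pr.pr_id))


# Hypothetical problem reports raised during a test event.
reports = [
    ProblemReport("PR-003", 3, "Label misspelled on report screen"),
    ProblemReport("PR-004", 1, "Interface rejects valid transactions"),
    ProblemReport("PR-001", 1, "Data conversion drops records"),
    ProblemReport("PR-002", 2, "Slow response on query screen"),
]

for rank, pr in enumerate(triage(reports), start=1):
    print(rank, pr.pr_id, f"severity={pr.severity}", pr.summary)
```

In practice a triage board would weigh more than a single severity number (mission impact, workarounds, schedule), so the sort key here is only a stand-in for that judgment.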
