Efficient Production of Synthetic Skies for the Dark Energy Survey
Raminder Singh
Science Gateways Group, Indiana University, Bloomington
ramifnu@iu.edu

Background & Explanation
• DES is the first project to combine four different statistical methods for probing dark matter and dark energy:
  § Baryon acoustic oscillations in the matter power spectrum
  § Abundance and spatial distribution of galaxy groups and clusters
  § Weak gravitational lensing by large-scale structure
  § Type Ia supernovae
• These four methods are quasi-independent.
• 5000-square-degree survey of cosmic structure traced by galaxies
• The Simulation Working Group is generating simulations for galaxy yields in various cosmologies.
• Analysis of these simulated catalogs offers a quality assurance capability for cosmological and astrophysical analysis of upcoming DES telescope data.

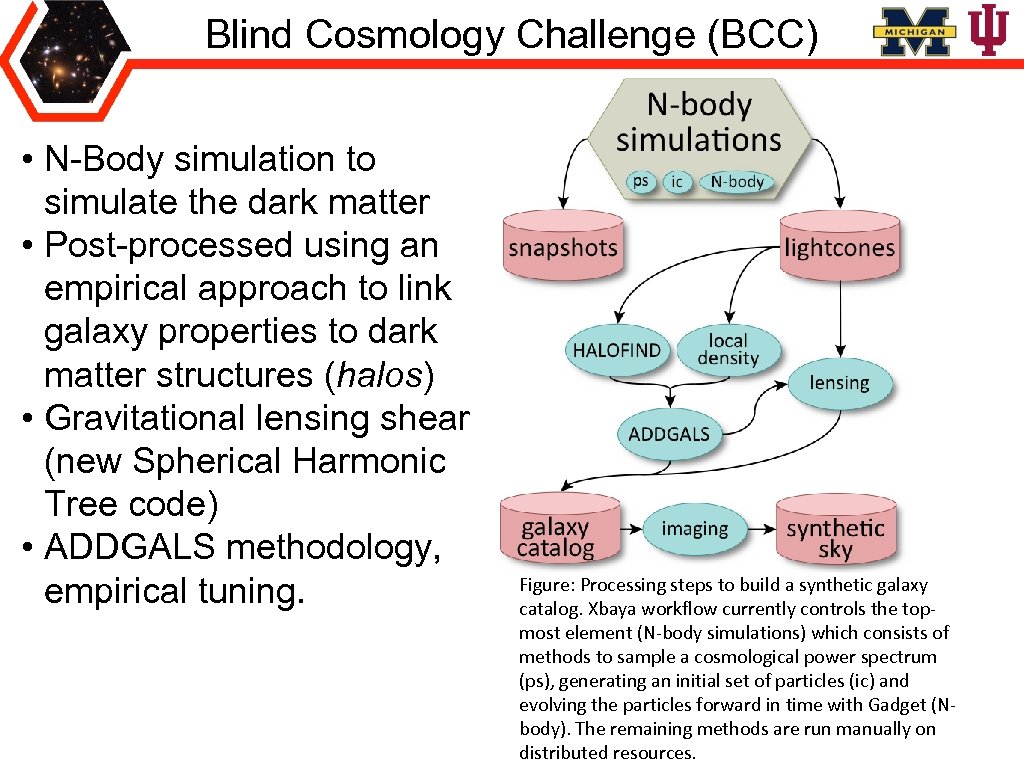

Blind Cosmology Challenge (BCC)
• N-body simulation to model the dark matter
• Post-processed using an empirical approach to link galaxy properties to dark matter structures (halos)
• Gravitational lensing shear (new Spherical Harmonic Tree code)
• ADDGALS methodology, empirical tuning

Figure: Processing steps to build a synthetic galaxy catalog. The XBaya workflow currently controls the topmost element (N-body simulations), which consists of methods to sample a cosmological power spectrum (ps), generate an initial set of particles (ic), and evolve the particles forward in time with Gadget (Nbody). The remaining methods are run manually on distributed resources.

Project Plan
• The project requested ECSS support to automate and optimize processing on XSEDE resources.
• ECSS staff member Dora Cai worked with the group on the work plan.
• The work plan was discussed and a timeline was given to each task.
• The work plan called for porting all codes to TACC Ranger.

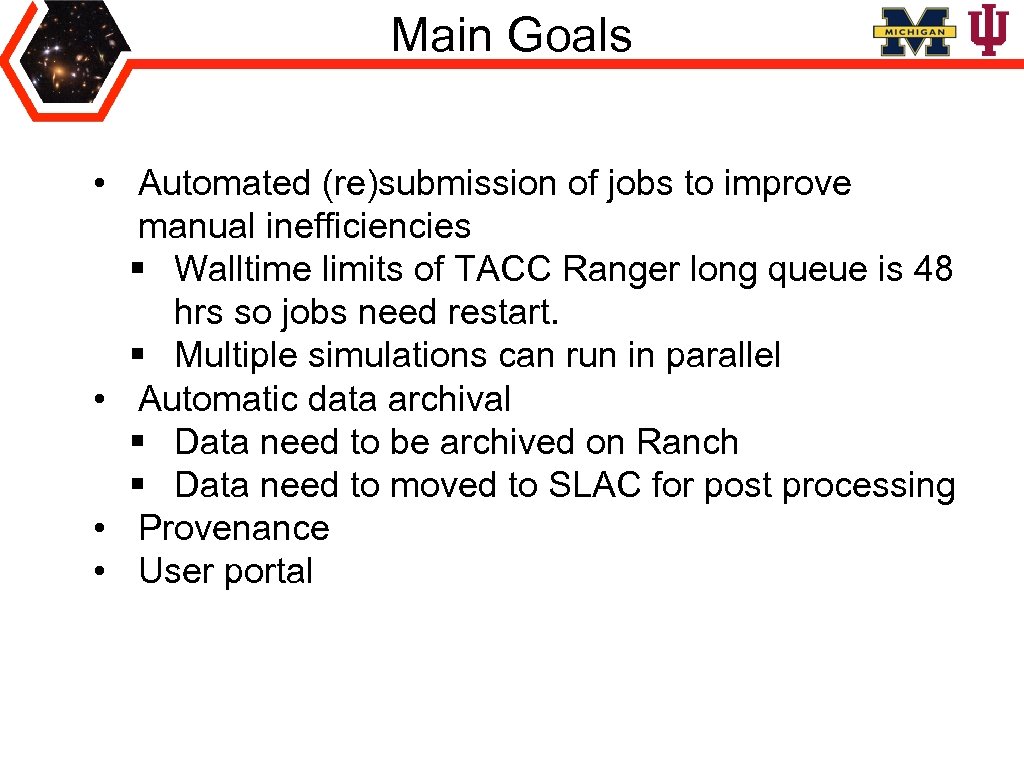

Main Goals
• Automated (re)submission of jobs to remove the inefficiencies of manual submission
  § The walltime limit of the TACC Ranger long queue is 48 hrs, so jobs need to be restarted.
  § Multiple simulations can run in parallel.
• Automatic data archival
  § Data need to be archived on Ranch.
  § Data need to be moved to SLAC for post-processing.
• Provenance
• User portal
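The resubmission goal can be illustrated with a minimal sketch, assuming a run that needs more hours than one 48-hour queue slot allows. The callback `submit_segment` is a hypothetical stand-in for a real scheduler or workflow call; it is not part of Airavata or any XSEDE tool.

```python
import math

WALLTIME_LIMIT_HOURS = 48  # Ranger long-queue limit quoted on this slide


def submissions_needed(total_hours, limit_hours=WALLTIME_LIMIT_HOURS):
    """Number of queue submissions needed to cover total_hours of run time."""
    return max(1, math.ceil(total_hours / limit_hours))


def run_with_restarts(total_hours, submit_segment, limit_hours=WALLTIME_LIMIT_HOURS):
    """Keep resubmitting until the requested run time is covered.

    submit_segment(start_hour, hours) is a hypothetical callback that submits
    one job restarting from start_hour and returns the hours it completed.
    """
    done = 0
    segments = []
    while True:  # do-while shape: always submit at least once
        request = min(limit_hours, total_hours - done)
        completed = submit_segment(done, request)
        if completed <= 0:
            raise RuntimeError("segment made no progress; stopping resubmission")
        segments.append((done, completed))
        done += completed
        if done >= total_hours:
            break
    return segments


if __name__ == "__main__":
    # Stand-in scheduler: every segment runs for the full time it requested.
    for start, hours in run_with_restarts(120, lambda start, hours: hours):
        print(f"segment starting at hour {start} ran for {hours} hours")
```

The loop always submits once before testing the exit condition, which is the same shape as a Do-While construct in a workflow engine.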

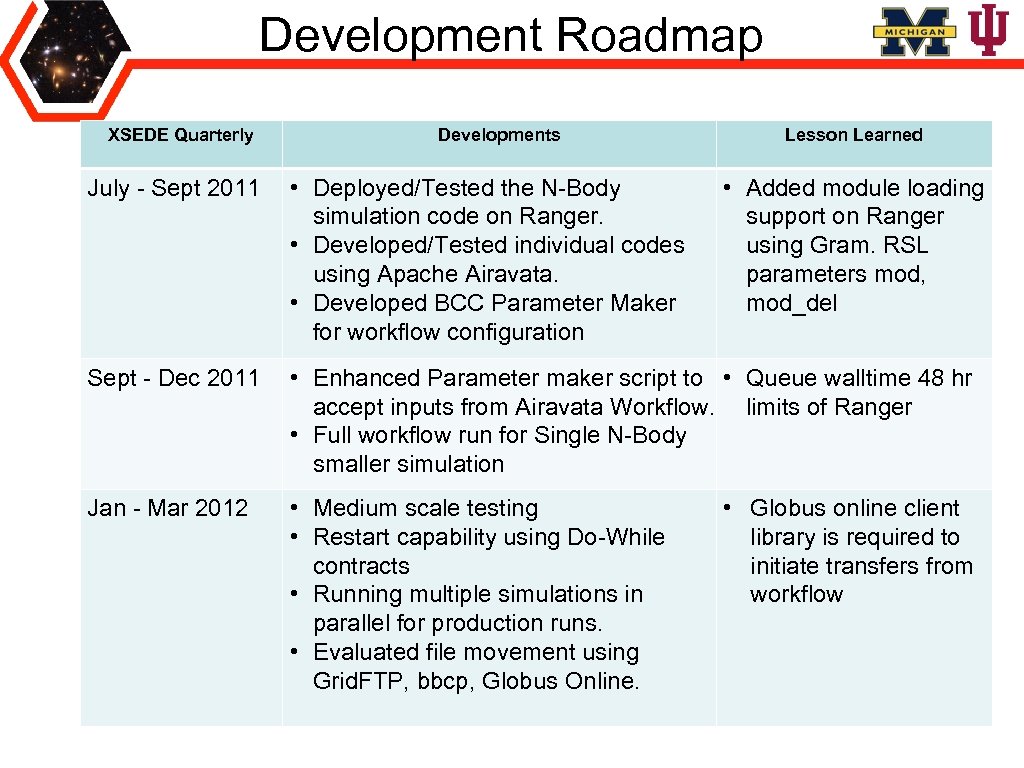

Development Roadmap
XSEDE Quarterly Developments and Lessons Learned

July - Sept 2011
• Deployed/tested the N-body simulation code on Ranger.
• Developed/tested individual codes using Apache Airavata.
• Developed the BCC Parameter Maker for workflow configuration.
• Added module loading support on Ranger using GRAM RSL parameters mod, mod_del.

Sept - Dec 2011
• Enhanced the Parameter Maker script to accept inputs from the Airavata workflow.
• Full workflow run for a single smaller N-body simulation.
• Lesson learned: queue walltime limit of 48 hr on Ranger.

Jan - Mar 2012
• Medium-scale testing.
• Restart capability using Do-While constructs.
• Running multiple simulations in parallel for production runs.
• Evaluated file movement using GridFTP, bbcp, and Globus Online.
• Lesson learned: the Globus Online client library is required to initiate transfers from the workflow.

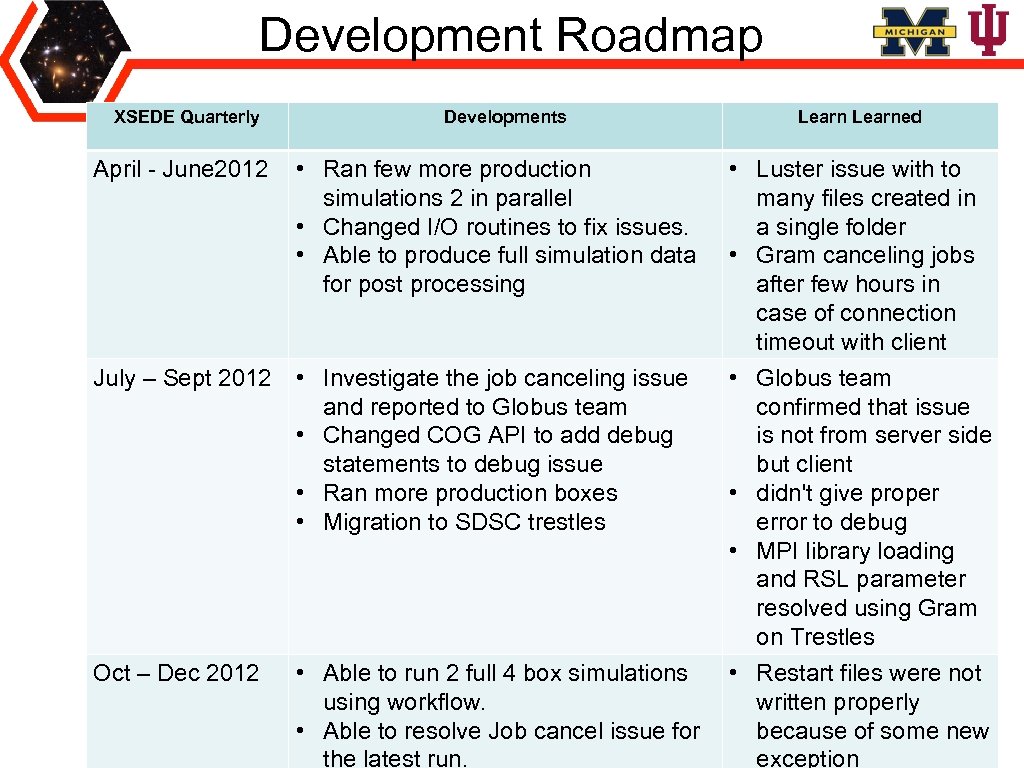

Development Roadmap
XSEDE Quarterly Developments

April - June 2012
• Ran a few more production simulations, 2 in parallel.
• Changed I/O routines to fix issues.
• Able to produce full simulation data for post-processing.

July - Sept 2012
• Investigated the job canceling issue and reported it to the Globus team.
• Changed the CoG API to add debug statements to debug the issue.
• Ran more production boxes.
• Migration to SDSC Trestles.

Oct - Dec 2012
• Able to run 2 full 4-box simulations using the workflow.
• Able to resolve the job cancel issue for the latest run.

Lessons Learned
• Lustre issue with too many files created in a single folder.
• GRAM canceled jobs after a few hours in case of a connection timeout with the client.
• The Globus team confirmed that the issue is not on the server side but on the client, which didn't give a proper error to debug.
• MPI library loading and RSL parameters resolved using GRAM on Trestles.
• Restart files were not written properly because of some new exception.
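The Lustre lesson about too many files in a single folder can be illustrated with one common mitigation: spreading output files across numbered subdirectories. This is a sketch only. The slides do not say how the I/O routines were changed, and the function name, directory naming scheme, and the default of 1000 files per directory are all assumptions.

```python
from pathlib import PurePosixPath


def sharded_path(root, filename, file_index, files_per_dir=1000):
    """Return a path that places file number file_index in a numbered subdirectory.

    Files 0..files_per_dir-1 go to dir_0000, the next batch to dir_0001, and
    so on, so no single directory holds more than files_per_dir entries.
    """
    if file_index < 0:
        raise ValueError("file_index must be non-negative")
    if files_per_dir < 1:
        raise ValueError("files_per_dir must be at least 1")
    bucket = file_index // files_per_dir
    return PurePosixPath(root) / f"dir_{bucket:04d}" / filename


if __name__ == "__main__":
    # Hypothetical scratch location and file name, for illustration only.
    print(sharded_path("/scratch/run", "snapshot_000.1500", 1500))
```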

XBaya Workflow
• Three large-volume realizations with 2048³ particles using 1024 processors
• One smaller volume of 1400³ particles using 512 processors
• These 4 boxes need about 300,000 SUs
• Each cosmology simulation entails roughly 56 TB of data
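To give a sense of scale, the particle counts implied by these box sizes can be worked out directly. The sketch below also computes the processor-hours consumed by one full 48-hour submission at each processor count; how processor-hours map to SUs on a given machine is not stated in the slides, so no SU conversion is attempted.

```python
LARGE_BOXES = 3          # large-volume realizations
LARGE_SIDE = 2048        # particles per dimension, large boxes
SMALL_SIDE = 1400        # particles per dimension, smaller box
LARGE_PROCS = 1024
SMALL_PROCS = 512
WALLTIME_HOURS = 48      # Ranger long-queue limit


def particles(side):
    """Total particles in a box with `side` particles per dimension."""
    return side ** 3


def processor_hours(procs, hours=WALLTIME_HOURS):
    """Processor-hours used by one job that runs for the full walltime."""
    return procs * hours


large = particles(LARGE_SIDE)
small = particles(SMALL_SIDE)
total = LARGE_BOXES * large + small

if __name__ == "__main__":
    print(f"large box: {large:,} particles")
    print(f"small box: {small:,} particles")
    print(f"all 4 boxes: {total:,} particles")
    print(f"one 48 h job on {LARGE_PROCS} procs: {processor_hours(LARGE_PROCS):,} processor-hours")
    print(f"one 48 h job on {SMALL_PROCS} procs: {processor_hours(SMALL_PROCS):,} processor-hours")
```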

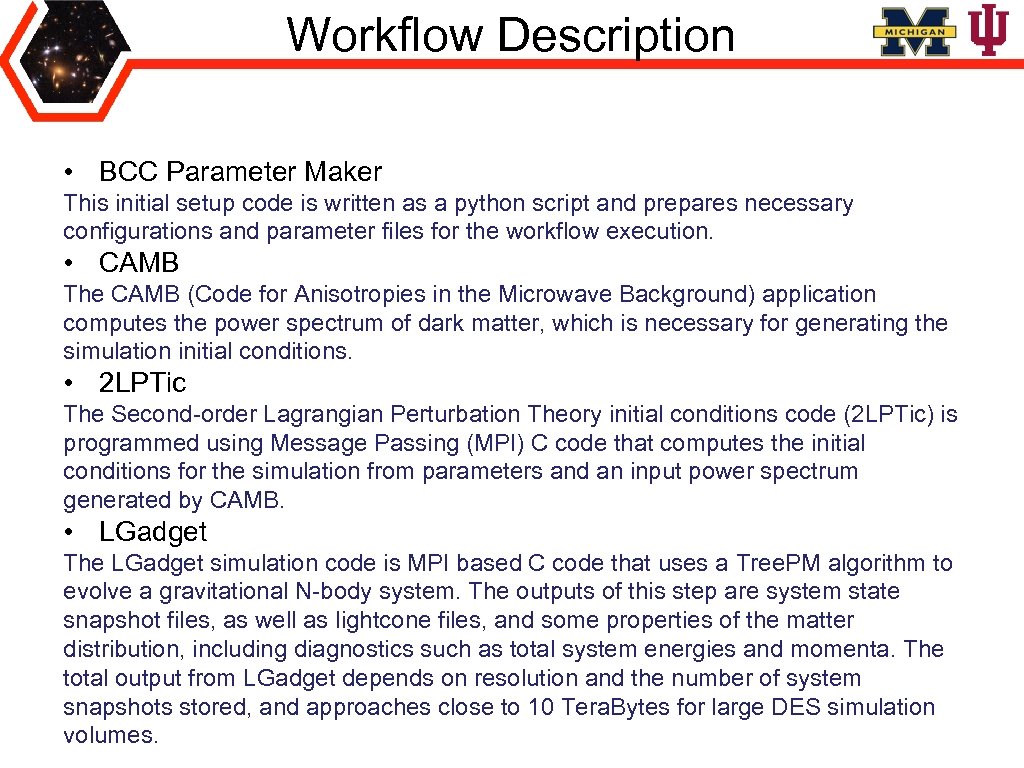

Workflow Description
• BCC Parameter Maker: This initial setup code is written as a Python script and prepares the necessary configurations and parameter files for the workflow execution.
• CAMB: The CAMB (Code for Anisotropies in the Microwave Background) application computes the power spectrum of dark matter, which is necessary for generating the simulation initial conditions.
• 2LPTic: The Second-order Lagrangian Perturbation Theory initial conditions code (2LPTic) is MPI-based C code that computes the initial conditions for the simulation from parameters and an input power spectrum generated by CAMB.
• LGadget: The LGadget simulation code is MPI-based C code that uses a TreePM algorithm to evolve a gravitational N-body system. The outputs of this step are system state snapshot files, as well as lightcone files, and some properties of the matter distribution, including diagnostics such as total system energies and momenta. The total output from LGadget depends on resolution and the number of system snapshots stored, and approaches 10 terabytes for large DES simulation volumes.
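The dependency chain between these four steps can be sketched as a small ordering check. This is not Airavata or XBaya code; the product names ("parameter files", "power spectrum", and so on) are illustrative labels taken from the descriptions above, and the assumption that every later step reads the parameter files is mine.

```python
# Each stage: (name, products it needs, products it makes).
STAGES = [
    ("BCC Parameter Maker", [], ["parameter files"]),
    ("CAMB", ["parameter files"], ["power spectrum"]),
    ("2LPTic", ["parameter files", "power spectrum"], ["initial conditions"]),
    ("LGadget", ["parameter files", "initial conditions"], ["snapshots", "lightcones"]),
]


def check_order(stages):
    """Walk the stages in order and verify each one's inputs already exist.

    Returns the list of stage names in run order, or raises ValueError naming
    the first stage whose inputs have not been produced yet.
    """
    available = set()
    order = []
    for name, needs, makes in stages:
        missing = [item for item in needs if item not in available]
        if missing:
            raise ValueError(f"{name} is missing inputs: {', '.join(missing)}")
        available.update(makes)
        order.append(name)
    return order


if __name__ == "__main__":
    print(" -> ".join(check_order(STAGES)))
```

Running the stages in any other order fails the check, which mirrors why the workflow runs them strictly in sequence.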

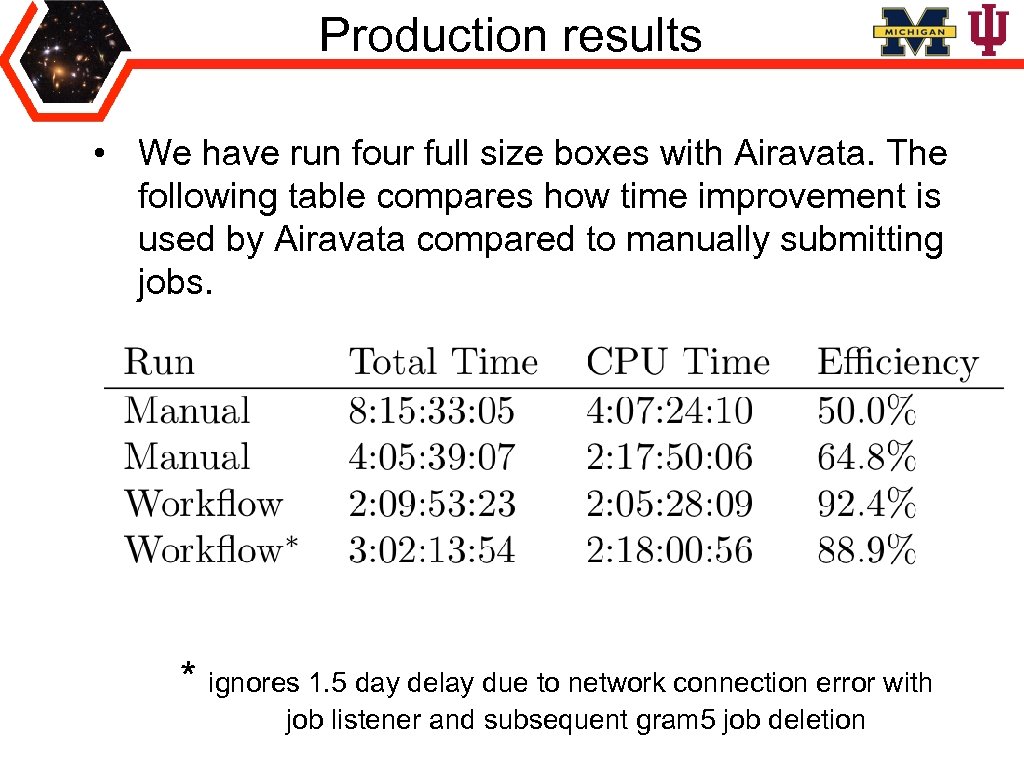

Production results
• We have run four full-size boxes with Airavata. The following table compares the time taken using Airavata with the time taken when submitting jobs manually.
* Ignores a 1.5-day delay due to a network connection error with the job listener and the subsequent GRAM5 job deletion.

Lessons learned
• MPI libraries and job scripts can differ between resources. Users need to experiment to learn.
• User scratch policies can differ between machines, so it can take some time to migrate between resources.
• Migration of working codes from one machine to another can take weeks to months.
• Grid services and client libraries need first-class support.
• The XSEDE ticketing system is your best friend!

Future Goals
• Migrate to SDSC Trestles for the next production run.
• The group is planning to work with Apache Airavata for future extensions.
• Implement intermediate start of the workflow in case of failure, based on provenance information.
• Post-processing.
• Plan for TACC Stampede migration.
• Currently we use the Globus Online GUI for file transfer but would like to integrate it with the workflow using APIs.
• Migrate post-processing and quality assurance code to XSEDE and develop a post-processing workflow.
• Try to integrate post-processing steps on SLAC.
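The goal of restarting a workflow from an intermediate step can be sketched as a lookup over recorded step statuses. The provenance format here (a dictionary mapping step name to a status string such as "COMPLETED") is a hypothetical simplification, not the Airavata provenance schema.

```python
STAGE_ORDER = ["BCC Parameter Maker", "CAMB", "2LPTic", "LGadget"]


def restart_point(stage_order, provenance):
    """Return the first stage not recorded as COMPLETED, or None if all are done.

    provenance is a hypothetical dict of stage name -> status string; a stage
    with no record at all is treated as not yet run.
    """
    for stage in stage_order:
        if provenance.get(stage) != "COMPLETED":
            return stage
    return None


if __name__ == "__main__":
    # Example record: the first two steps finished, 2LPTic failed.
    record = {
        "BCC Parameter Maker": "COMPLETED",
        "CAMB": "COMPLETED",
        "2LPTic": "FAILED",
    }
    print("resume from:", restart_point(STAGE_ORDER, record))
```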

Team & Publication

DES Simulation Working Group
August E. Evrard, Departments of Physics and Astronomy (Michigan)
Brandon Erickson, grad student (Michigan)
Risa Wechsler, asst. professor (Stanford/SLAC)
Michael Busha, postdoc (Zurich)
Matt Becker, grad student (Chicago)
Andrey V. Kravtsov, Department of Astronomy and Astrophysics (Chicago)

ECSS
Suresh Marru, Science Gateways Group (Indiana)
Marlon Pierce, Science Gateways Group (Indiana)
Lars Koesterke, TACC (Texas)
Dora Cai, NCSA (Illinois)

Publication (XSEDE '12):
Brandon M. S. Erickson, Raminderjeet Singh, August E. Evrard, Matthew R. Becker, Michael T. Busha, Andrey V. Kravtsov, Suresh Marru, Marlon Pierce, and Risa H. Wechsler. 2012. A high throughput workflow environment for cosmological simulations. In Proceedings of the 1st Conference of the Extreme Science and Engineering Discovery Environment: Bridging from the eXtreme to the campus and beyond (XSEDE '12). ACM, New York, NY, USA, Article 34, 8 pages. DOI=10.1145/2335755.2335830 http://doi.acm.org/10.1145/2335755.2335830
