c0d32d2f49b7fdc54a8bebb9cbe7cd3b.ppt

- Количество слайдов: 26

Efficient, hot & automatic oracle database cloning “Discover how to clone your production database without disruption, in a totally automated & efficient way” Josep Vidal Canet Universtitat de València

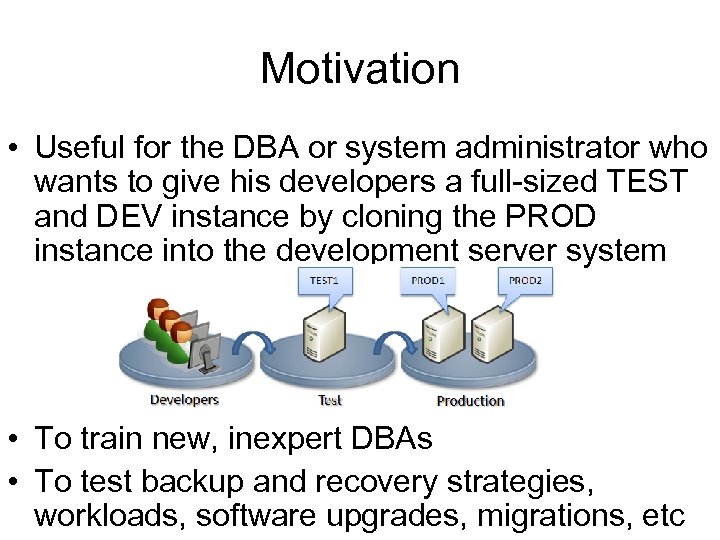

Motivation • Useful for the DBA or system administrator who wants to give his developers a full-sized TEST and DEV instance by cloning the PROD instance into the development server system • To train new, inexpert DBAs • To test backup and recovery strategies, workloads, software upgrades, migrations, etc

Universitat de Valencia • Spain’s third-largest university – 45. 000 students – 3. 500 teachers – 2. 000 workers • Different RDBMS: – DB 2 / ZOS mainly OLTP – The biggest amount of data is stored in Oracle / Unix / Linux • Both OLTP & OLAP – Postgres

What’s a database clone? • A database clone is a complete and separate copy of a database system that includes the business data, applications and the DBMS software (wikipedia) • The cloned DB is both fully functional and separate in its own right

DB cloning strategies • Physical copy -> same server architecture & oracle version • Export / import : different architectures or oracle versions

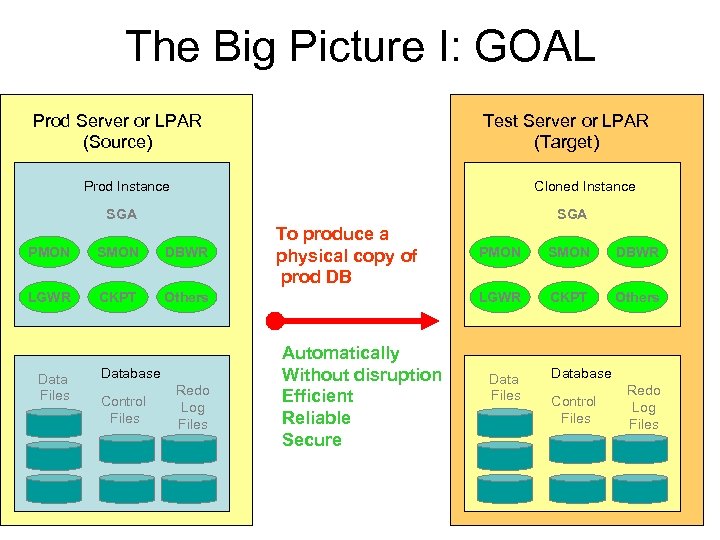

The Big Picture I: GOAL Prod Server or LPAR (Source) Test Server or LPAR (Target) Prod Instance Cloned Instance SGA PMON SMON DBWR LGWR CKPT To produce a physical copy of prod DB Others Data Files Database Control Files Redo Log Files SMON DBWR LGWR Automatically Without disruption Efficient Reliable Secure PMON CKPT Others Data Files Database Control Files Redo Log Files

pclone. sh • A script that automates the whole process: – Without disrupting production systems • Remote Hotbackup • If the OS allows it (AIX, HP-UX, Solaris) WLM controls are imposed to guarantee Qo. S – Efficient – Easy to schedule as a crontab job – Only differences (modified blocks) from prod to test database are copied – Parallel programming techniques are applied to reduce the amount of time required • The whole process is triggered by target system, which queries source database catalog and launches a remote hotbackup • Once completed, it is recovered in target system

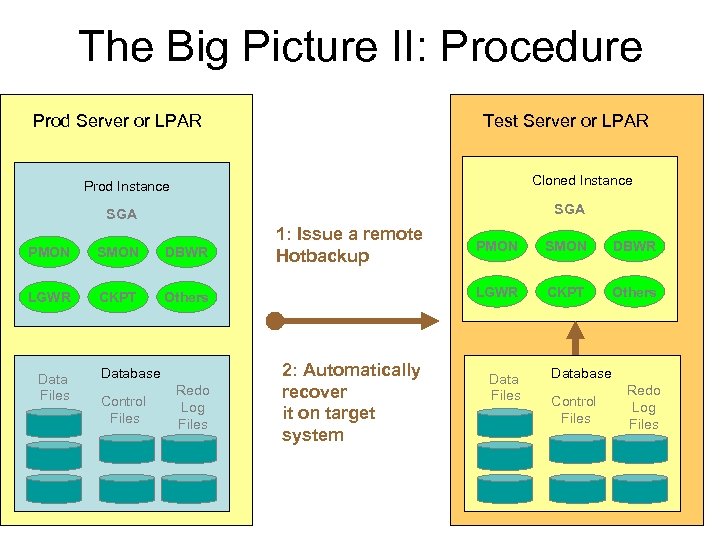

The Big Picture II: Procedure Prod Server or LPAR Test Server or LPAR Cloned Instance Prod Instance SGA PMON SMON DBWR LGWR CKPT 1: Issue a remote Hotbackup Data Files Database Control Files Redo Log Files 2: Automatically recover it on target system SMON DBWR LGWR Others PMON CKPT Others Data Files Database Control Files Redo Log Files

pclone. sh • No special hardware or software required • Data is moved using a SSL encrypted connection • Used at UV to clone/update test instances from production systems in a wide range of platforms (Linux/x 86, Solaris/SPARC, AIX/Power). • Easy to use: pclone. sh DB user/password • The tool can be downloaded from: – http: //www. uv. es/vijo/pclone. sh • Paper: – http: //www. uv. es/vijo/cloning_oracle_database. pdf

Requirements • Same ORACLE & OS versions & system architecture • ssh & rsync tools, configured so you can login without password • Time synchronization in both servers – NTP (Network Time protocol). • Archive log mode • Prod instance reachable from test system with tnsping utility

Main steps • • Automated using bash scripting Executed in the target system: 1. Queries source database catalog to obtain physical database objects 2. Copies each database object from source to target system ensuring database consistency 3. Starts the source database in the target system (DB recovery)

Step 1: Database physical layout • Source DB discovery – Find server & $ORACLE_HOME with tnsping & /etc/oratab – Test source DB status • Query target DB to determine physical layout – tablespaces, datafiles, redo logs, archive logs, init. ora, etc …

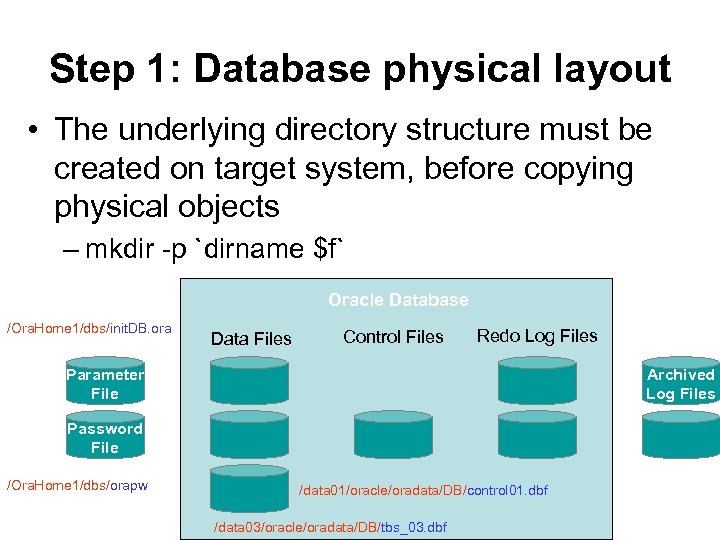

Step 1: Database physical layout • The underlying directory structure must be created on target system, before copying physical objects – mkdir -p `dirname $f` Oracle Database /Ora. Home 1/dbs/init. DB. ora Data Files Control Files Redo Log Files Parameter File Archived Log Files Password File /Ora. Home 1/dbs/orapw /data 01/oracle/oradata/DB/control 01. dbf /data 03/oracle/oradata/DB/tbs_03. dbf

Step 2: Copying physical database objects between systems • Goal: To copy each type of database object from source to target ensuring database consistency • Data transfer utility: rsync – Capable of copying files between remote computers by transferring only file differences

rsync • Utility: To copy files between two systems • Performs a block level comparison of two files • Transfers only modified blocks – huge benefit if you are transferring large files like dafafiles over a network link • Speeds up file transfers when the destination file already exists • rsync remote-update protocol – allows rsync to transfer just the differences between two sets of files across the network link, using an efficient checksum search

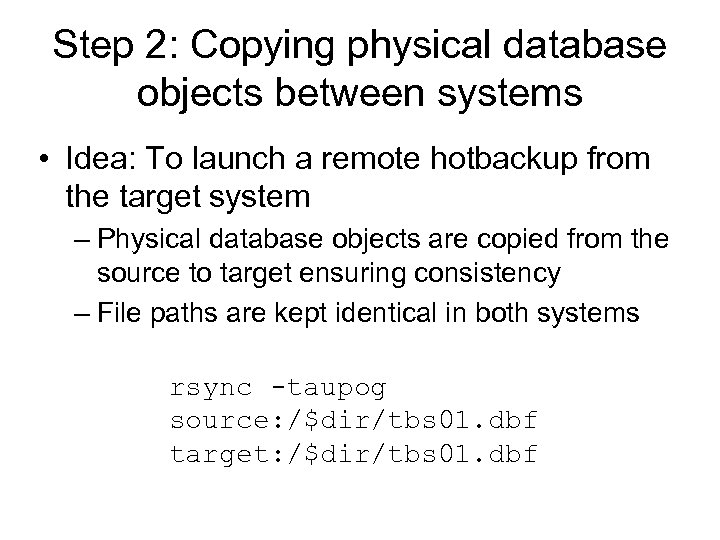

Step 2: Copying physical database objects between systems • Idea: To launch a remote hotbackup from the target system – Physical database objects are copied from the source to target ensuring consistency – File paths are kept identical in both systems rsync -taupog source: /$dir/tbs 01. dbf target: /$dir/tbs 01. dbf

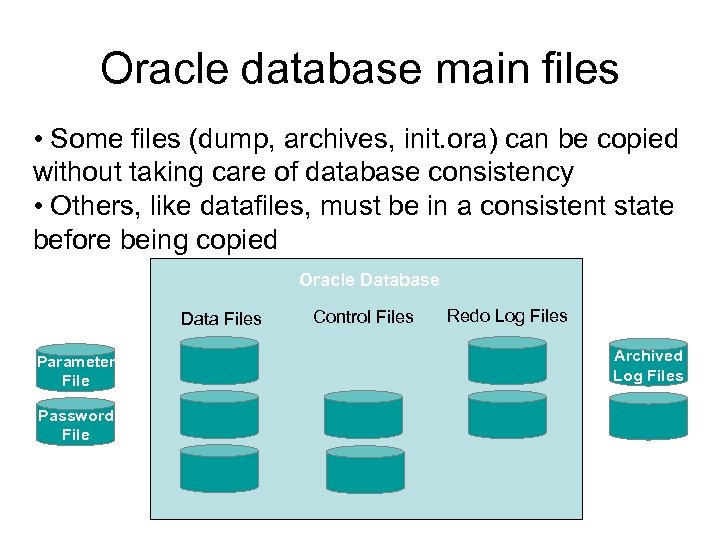

Oracle database main files • Some files (dump, archives, init. ora) can be copied without taking care of database consistency • Others, like datafiles, must be in a consistent state before being copied Oracle Database Data Files Parameter File Password File Control Files Redo Log Files Archived Log Files

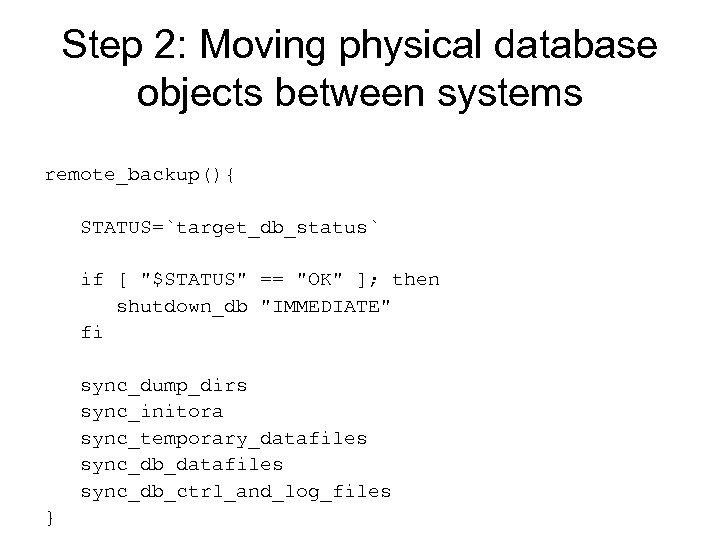

Step 2: Moving physical database objects between systems remote_backup(){ STATUS=`target_db_status` if [ "$STATUS" == "OK" ]; then shutdown_db "IMMEDIATE" fi sync_dump_dirs sync_initora sync_temporary_datafiles sync_db_ctrl_and_log_files }

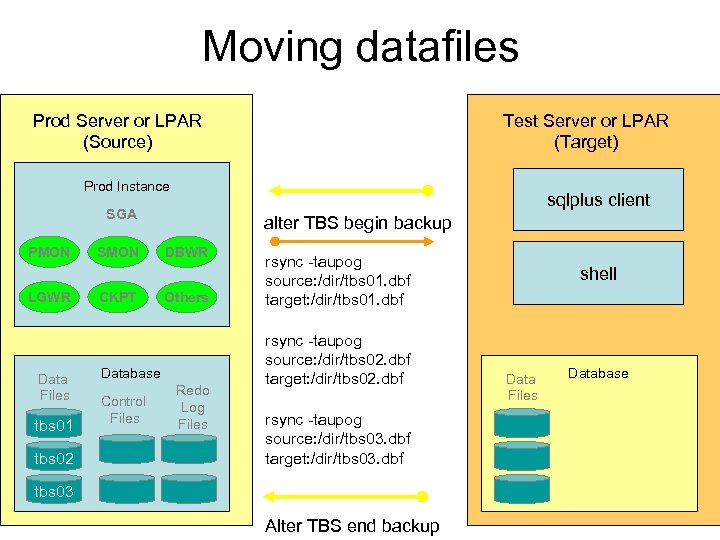

Moving datafiles Test Server or LPAR (Target) Prod Server or LPAR (Source) Prod Instance sqlplus client SGA PMON LGWR Data Files tbs 01 tbs 02 SMON CKPT alter TBS begin backup DBWR Others Database Control Files Redo Log Files rsync -taupog source: /dir/tbs 01. dbf target: /dir/tbs 01. dbf rsync -taupog source: /dir/tbs 02. dbf target: /dir/tbs 02. dbf rsync -taupog source: /dir/tbs 03. dbf target: /dir/tbs 03. dbf tbs 03 Alter TBS end backup shell Data Files Database

Moving datafiles • The algorithm can be summarized as: – for each Tablespace in the source database do: • Set tablespace in backup mode • Copy (using rsync) each datafile from source to target system using parallel techniques • End tablespace backup mode • A similar approach is used to move both control and redo log files

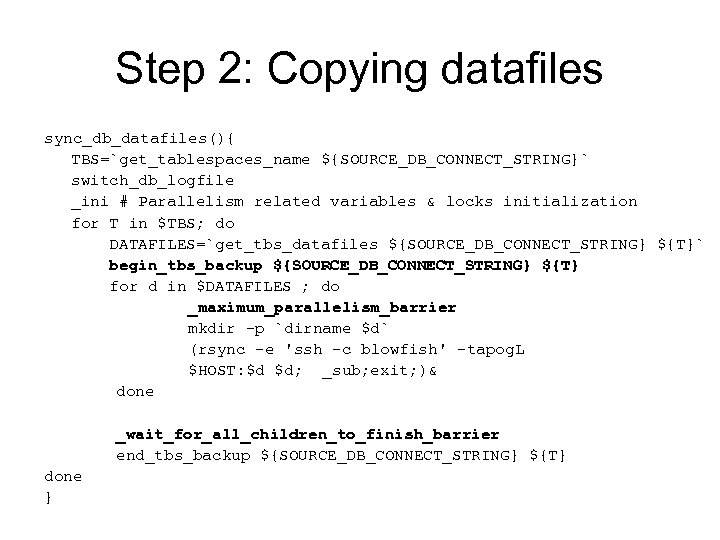

Step 2: Copying datafiles sync_db_datafiles(){ TBS=`get_tablespaces_name ${SOURCE_DB_CONNECT_STRING}` switch_db_logfile _ini # Parallelism related variables & locks initialization for T in $TBS; do DATAFILES=`get_tbs_datafiles ${SOURCE_DB_CONNECT_STRING} ${T}` begin_tbs_backup ${SOURCE_DB_CONNECT_STRING} ${T} for d in $DATAFILES ; do _maximum_parallelism_barrier mkdir -p `dirname $d` (rsync -e 'ssh -c blowfish' -tapog. L $HOST: $d $d; _sub; exit; )& done _wait_for_all_children_to_finish_barrier end_tbs_backup ${SOURCE_DB_CONNECT_STRING} ${T} done }

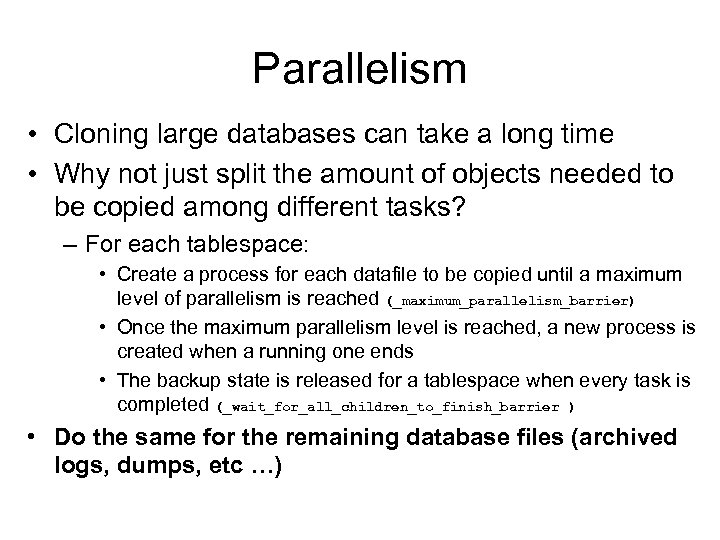

Parallelism • Cloning large databases can take a long time • Why not just split the amount of objects needed to be copied among different tasks? – For each tablespace: • Create a process for each datafile to be copied until a maximum level of parallelism is reached (_maximum_parallelism_barrier) • Once the maximum parallelism level is reached, a new process is created when a running one ends • The backup state is released for a tablespace when every task is completed (_wait_for_all_children_to_finish_barrier ) • Do the same for the remaining database files (archived logs, dumps, etc …)

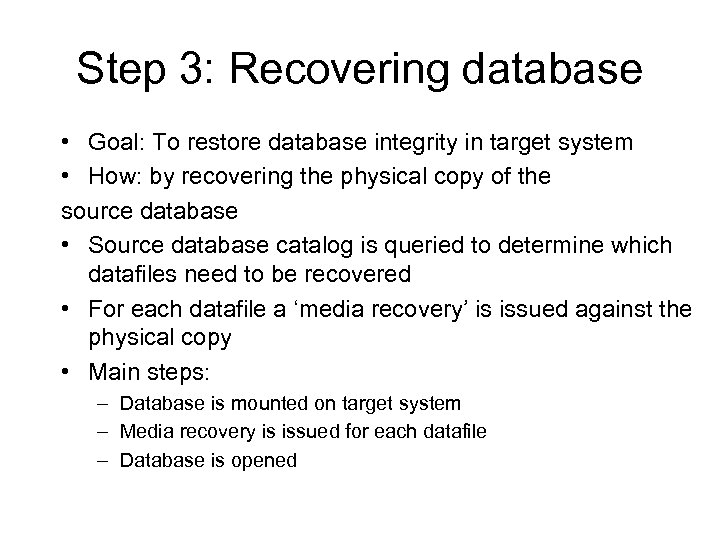

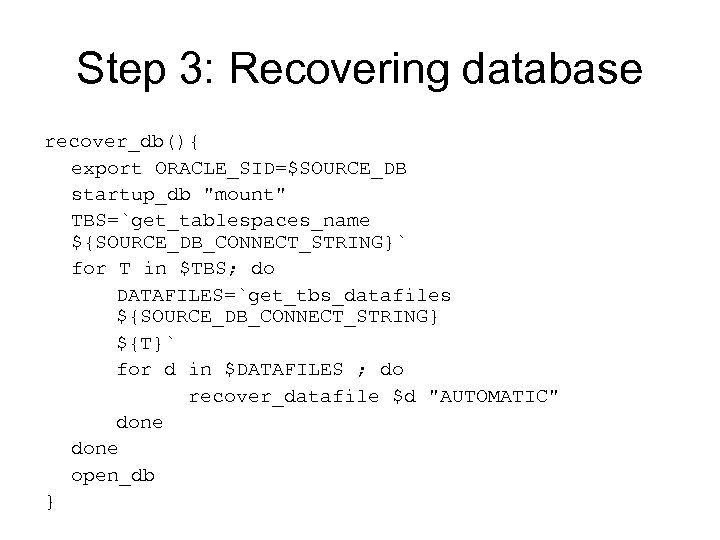

Step 3: Recovering database • Goal: To restore database integrity in target system • How: by recovering the physical copy of the source database • Source database catalog is queried to determine which datafiles need to be recovered • For each datafile a ‘media recovery’ is issued against the physical copy • Main steps: – Database is mounted on target system – Media recovery is issued for each datafile – Database is opened

Step 3: Recovering database recover_db(){ export ORACLE_SID=$SOURCE_DB startup_db "mount" TBS=`get_tablespaces_name ${SOURCE_DB_CONNECT_STRING}` for T in $TBS; do DATAFILES=`get_tbs_datafiles ${SOURCE_DB_CONNECT_STRING} ${T}` for d in $DATAFILES ; do recover_datafile $d "AUTOMATIC" done open_db }

Conclusions • Database cloning can be used for different purposes like testing, developing, database maintenance tests or DBA training • pclone. sh automates the whole process without disrupting production systems in an efficient manner • The whole process, major steps and the necessary code to implement it, has been discussed

Conclusions II • Performance – The time needed to complete the whole process depends on: • database size & available computational resources (CPUs & disks) • database update rate as well as cloning interval – Example: • 150 GB accounting production database, running in a p 570 server uncapped LPAR (6 power 5 CPU’s) • After an initial cloning, no additional synchronization was performed for 6 months • Afterwards, the test database was synchronized from the prod one • The whole process took less than an hour

c0d32d2f49b7fdc54a8bebb9cbe7cd3b.ppt