- Number of slides: 42

Educational data mining in a computer tutor that listens
Joseph E. Beck
Acknowledgements: NSF, Heinz

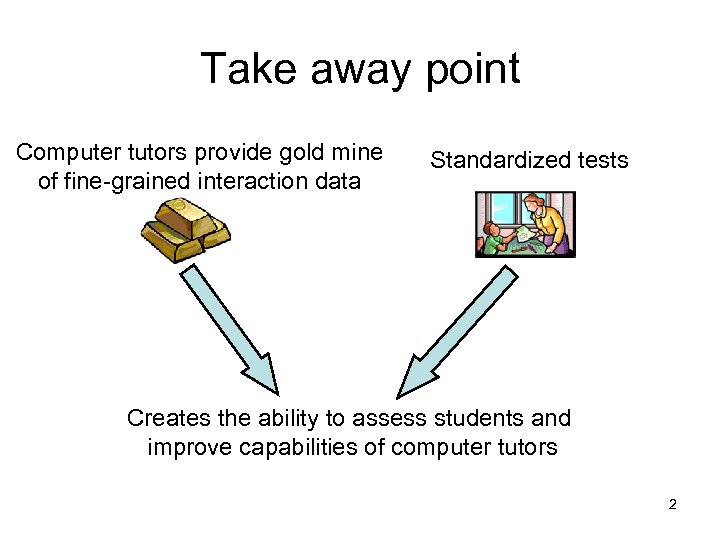

Take-away point
Computer tutors provide gold mine of fine-grained interaction data
Standardized tests
Creates the ability to assess students and improve capabilities of computer tutors

What is educational data mining?
• Using data to learn about students and instruction
  – E.g. predict student behavior, assess students, evaluate the tutor’s teaching, etc.
• Motivation: computer tutors provide large samples of fine-grained, longitudinal data that are a powerful (unique?) source of knowledge to improve educational outcomes

Difference between educational and standard data mining
• Data collected with purpose in mind
  – Have control over schema
• Describe more interactive phenomena
• Generally smaller datasets

Project LISTEN’s Reading Tutor

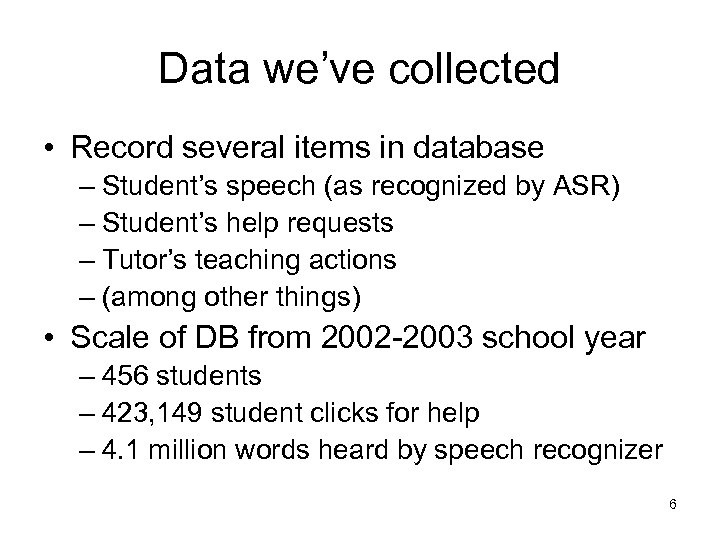

Data we’ve collected
• Record several items in database
  – Student’s speech (as recognized by ASR)
  – Student’s help requests
  – Tutor’s teaching actions
  – (among other things)
• Scale of DB from 2002-2003 school year
  – 456 students
  – 423,149 student clicks for help
  – 4.1 million words heard by speech recognizer

Outline
Predicting student behavior
• E.g. will the student click for help on this word?
Inferring student’s skills
• E.g. does the student know “ch” can make a K sound (e.g. “chaos”)?
Future work

Predicting student help requests

Why predict help requests?
• Goal is to understand the student
  – In two senses
    • A good model of the student should be able to predict future actions (e.g. outcome measure)
    • Help requests provide window into student’s reading proficiency (e.g. source of knowledge)
• Non-speech events are less noisy
• Applications of help requests
  – Avoid overly complex material
  – Provide help ahead of time

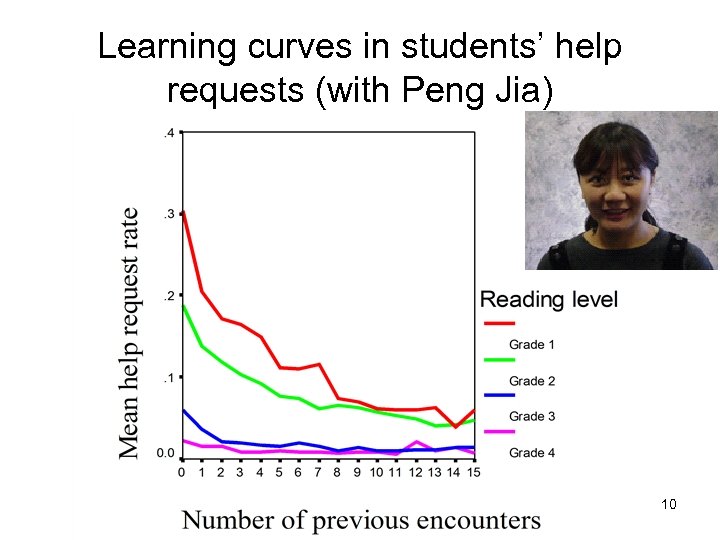

Learning curves in students’ help requests (with Peng Jia)

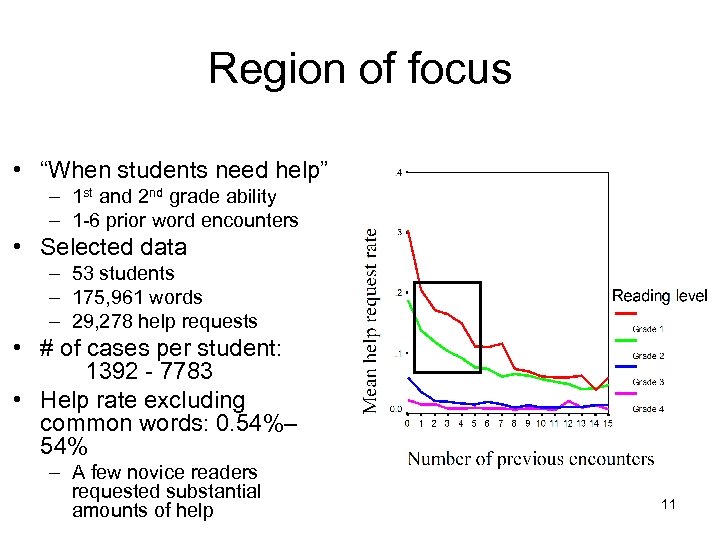

Region of focus
• “When students need help”
  – 1st and 2nd grade ability
  – 1-6 prior word encounters
• Selected data
  – 53 students
  – 175,961 words
  – 29,278 help requests
• # of cases per student: 1392-7783
• Help rate excluding common words: 0.54%–54%
  – A few novice readers requested substantial amounts of help

How to predict help requests
• Approach: treat as classifier learning problem
  – Inputs: features about the word and the student
  – Output: whether the student will ask for help
• Need to decide:
  – Features describing word and student
  – What data to use to train model

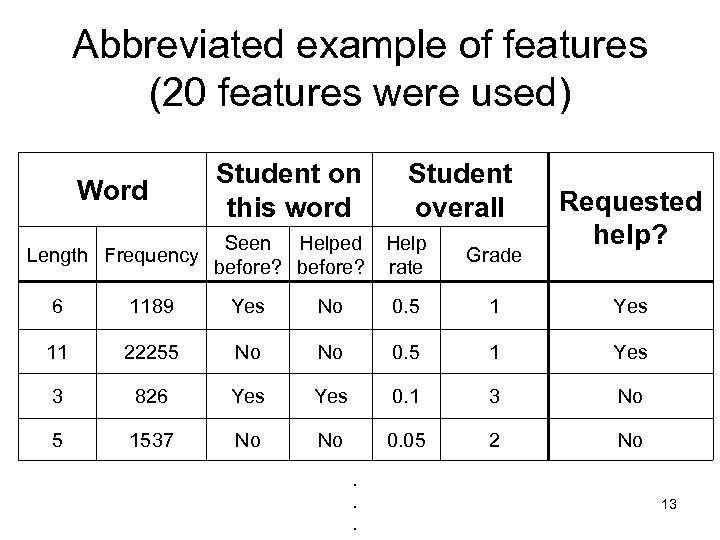

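Abbreviated example of features (20 features were used)

| Word: length | Word: frequency | Student on this word: seen | Student on this word: helped before? | Student overall: help rate | Student overall: grade | Requested help? |
|---|---|---|---|---|---|---|
| 6 | 1189 | Yes | No | 0.5 | 1 | Yes |
| 11 | 22255 | No | No | 0.5 | 1 | Yes |
| 3 | 826 | Yes | | 0.1 | 3 | No |
| 5 | 1537 | No | No | 0.05 | 2 | No |
| ... | | | | | | |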

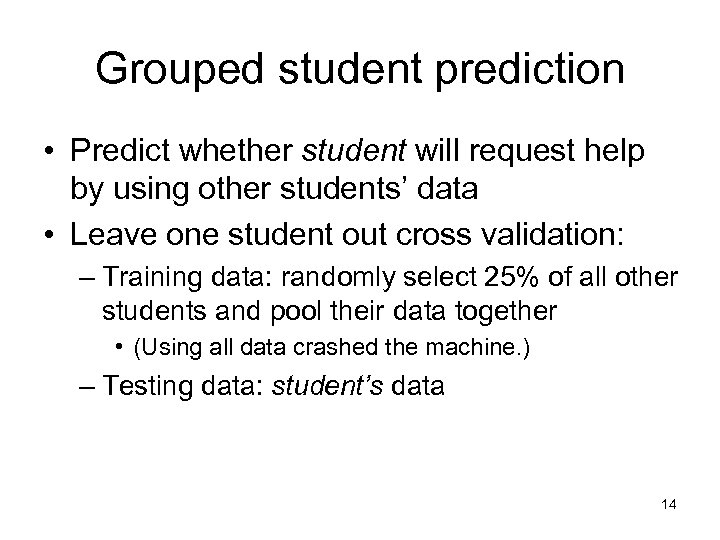

Grouped student prediction
• Predict whether student will request help by using other students’ data
• Leave-one-student-out cross-validation:
  – Training data: randomly select 25% of all other students and pool their data together
    • (Using all data crashed the machine.)
  – Testing data: student’s data

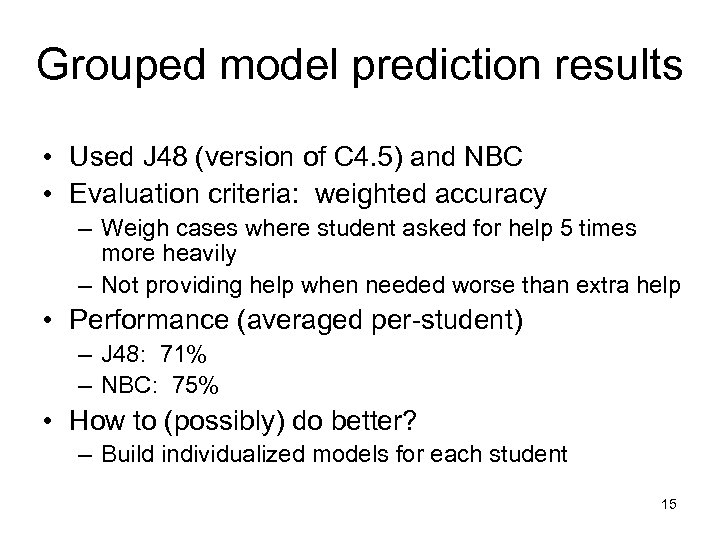
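
The leave-one-student-out procedure on this slide can be sketched roughly as follows. This is only an illustration, not the code used in the talk: the record layout (a dict with a `student` key), the function name `leave_one_student_out`, and the fixed random seed are all assumptions made for the example.

```python
import random


def leave_one_student_out(records, sample_fraction=0.25, seed=0):
    """Yield (held_out_student, train_rows, test_rows) once per student.

    records: list of dicts, each with a 'student' key (hypothetical schema).
    """
    rng = random.Random(seed)
    students = sorted({r["student"] for r in records})
    for held_out in students:
        others = [s for s in students if s != held_out]
        # Keep a random fraction of the other students (at least one if any exist).
        k = min(len(others), max(1, round(sample_fraction * len(others))))
        chosen = set(rng.sample(others, k))
        # Pool the chosen students' rows for training; test on the held-out student.
        train = [r for r in records if r["student"] in chosen]
        test = [r for r in records if r["student"] == held_out]
        yield held_out, train, test
```

Sampling students rather than individual rows keeps each training set free of the held-out student's data while bounding its size, which is the stated reason for the 25% subsample.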

Grouped model prediction results
• Used J48 (version of C4.5) and NBC
• Evaluation criteria: weighted accuracy
  – Weigh cases where student asked for help 5 times more heavily
  – Not providing help when needed worse than extra help
• Performance (averaged per-student)
  – J48: 71%
  – NBC: 75%
• How to (possibly) do better?
  – Build individualized models for each student

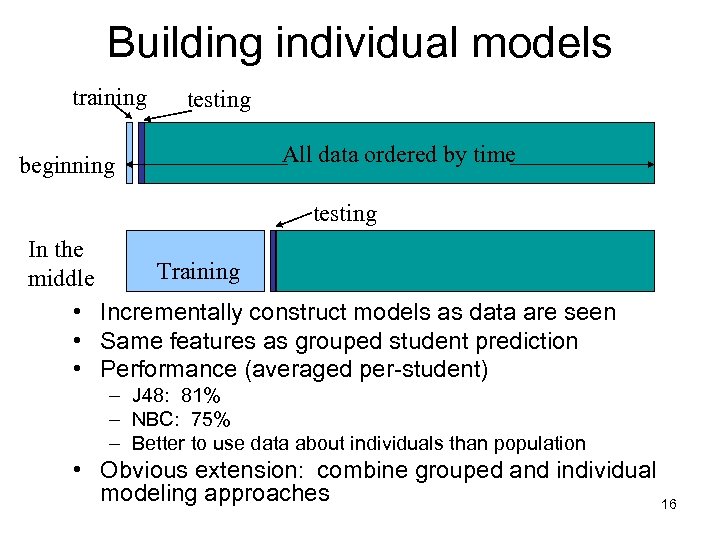
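
One plausible reading of the weighted-accuracy criterion is sketched below. The slide gives only the 5x weight, so the exact formula, the function name `weighted_accuracy`, and the boolean encoding of help requests are assumptions.

```python
def weighted_accuracy(actual, predicted, help_weight=5.0):
    """Accuracy where cases in which the student really asked for help count more.

    actual, predicted: sequences of booleans (True = help requested).
    """
    total = 0.0
    correct = 0.0
    for a, p in zip(actual, predicted):
        weight = help_weight if a else 1.0
        total += weight
        if a == p:
            correct += weight
    return correct / total if total else 0.0


# A model that never predicts help is right on 4 of 5 cases (80% plain
# accuracy), but the one missed help request carries weight 5.
score = weighted_accuracy([True, False, False, False, False], [False] * 5)
```

Under this reading the always-"no help" model scores 4/9 instead of 4/5, reflecting the view that withholding needed help is worse than offering extra help.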

Building individual models
[Diagram: all data ordered by time; training/testing splits shown at the beginning and in the middle]
• Incrementally construct models as data are seen
• Same features as grouped student prediction
• Performance (averaged per-student)
  – J48: 81%
  – NBC: 75%
  – Better to use data about individuals than population
• Obvious extension: combine grouped and individual modeling approaches

Using subword properties to help predict help requests (with June Sison)
• If student is predicted to need help on “chord,” he would probably need help on “chords” as well
  – Word roots?
  – But what about “chaos?” “chemical?”
    • CH→/K/ is common across items
• Model letter→sound mappings in words
  – Called grapheme→phoneme (g→p) mappings

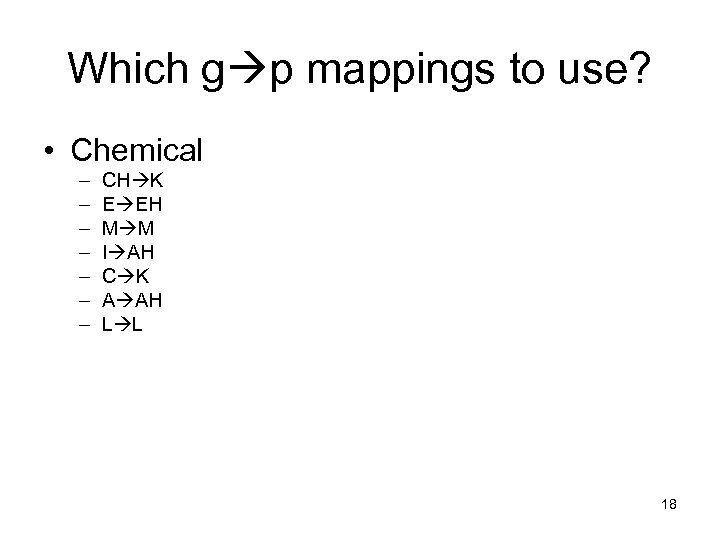

Which g→p mappings to use?
• Chemical
  – CH→K
  – E→EH
  – M→M
  – I→AH
  – C→K
  – A→AH
  – L→L

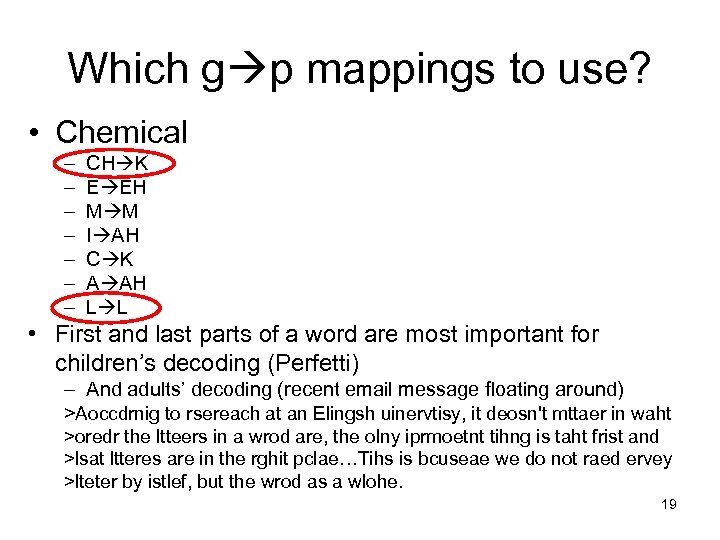

Which g→p mappings to use?
• Chemical
  – CH→K
  – E→EH
  – M→M
  – I→AH
  – C→K
  – A→AH
  – L→L
• First and last parts of a word are most important for children’s decoding (Perfetti)
  – And adults’ decoding (recent email message floating around)

>Aoccdrnig to rsereach at an Elingsh uinervtisy, it deosn't mttaer in waht
>oredr the ltteers in a wrod are, the olny iprmoetnt tihng is taht frist and
>lsat ltteres are in the rghit pclae…Tihs is bcuseae we do not raed ervey
>lteter by istlef, but the wrod as a wlohe.

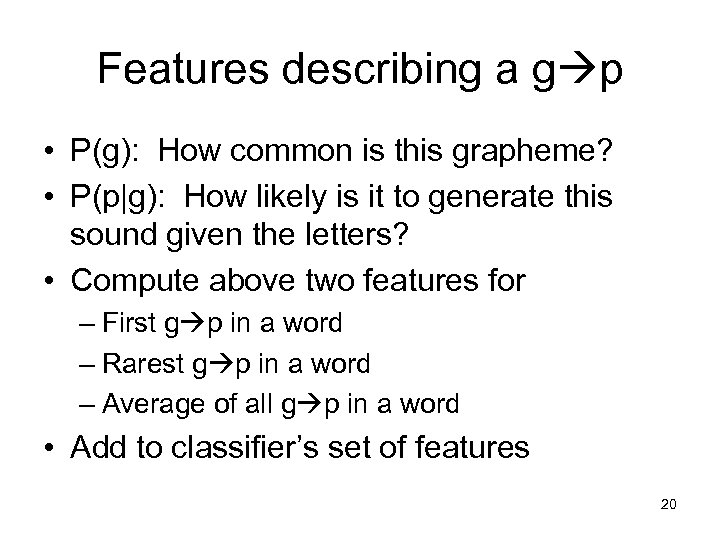

Features describing a g→p
• P(g): How common is this grapheme?
• P(p|g): How likely is it to generate this sound given the letters?
• Compute above two features for
  – First g→p in a word
  – Rarest g→p in a word
  – Average of all g→p in a word
• Add to classifier’s set of features
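
The six features listed above could be computed along these lines. This is a sketch under assumptions: the toy corpus and its phoneme labels are invented for illustration, counts are taken per occurrence, and "rarest" is taken to mean the mapping with the smallest joint probability P(g)·P(p|g), which the slide does not specify. The names `gp_statistics` and `word_features` are hypothetical.

```python
from collections import Counter


def gp_statistics(corpus):
    """Estimate P(g) and P(p|g) from words given as lists of (grapheme, phoneme) pairs."""
    g_counts = Counter()
    gp_counts = Counter()
    for word in corpus:
        for g, p in word:
            g_counts[g] += 1
            gp_counts[(g, p)] += 1
    total = sum(g_counts.values())
    p_g = {g: n / total for g, n in g_counts.items()}
    p_p_given_g = {(g, p): n / g_counts[g] for (g, p), n in gp_counts.items()}
    return p_g, p_p_given_g


def word_features(word, p_g, p_p_given_g):
    """Return P(g) and P(p|g) for the first, rarest, and average mapping of a word."""
    pairs = [(p_g[g], p_p_given_g[(g, p)]) for g, p in word]
    first = pairs[0]
    # Assumption: "rarest" = smallest joint probability P(g) * P(p|g).
    rarest = min(pairs, key=lambda pair: pair[0] * pair[1])
    n = len(pairs)
    return {
        "first_p_g": first[0],
        "first_p_p_given_g": first[1],
        "rarest_p_g": rarest[0],
        "rarest_p_p_given_g": rarest[1],
        "mean_p_g": sum(a for a, _ in pairs) / n,
        "mean_p_p_given_g": sum(b for _, b in pairs) / n,
    }


# Toy corpus (illustrative only): "chaos", "chip", "chat".
corpus = [
    [("ch", "K"), ("a", "EY"), ("o", "AA"), ("s", "S")],
    [("ch", "CH"), ("i", "IH"), ("p", "P")],
    [("ch", "CH"), ("a", "AE"), ("t", "T")],
]
p_g, p_p_given_g = gp_statistics(corpus)
features = word_features(corpus[1], p_g, p_p_given_g)
```

Because these values depend only on the word, they are the same for every student, which is the "static" limitation raised on the next slide.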

Results
• Used individual models with J48
• Improved accuracy by 0.7% absolute (P=0.013) over not using g→p features
  – However, already using many features about student
  – Suggests students are sensitive to g→p properties
• Can we do better?
  – These g→p properties are static
  – Only describe words, not students
  – Perhaps modeling a student’s skills would work better?
    • Infer what is in student’s head rather than just predict actions

Outline
Predicting student behavior
• E.g. will the student click for help on this word?
Inferring student’s skills
• E.g. does the student know “ch” can make a K sound (e.g. “chaos”)?
Future work

Automated assessment (with Peng Jia and June Sison)
• We gather lots of data; use it to assess students
  – “Knowing What Students Know” provides metaphor
• Why perform automated assessment?
  – Drawbacks of paper tests:
    • Expensive
    • Lack of ongoing results
    • Costly to report to teachers and computer tutors
• Problem: our data are (literally) noisy
  – But we have a lot of it: students attempt over 300 words per day

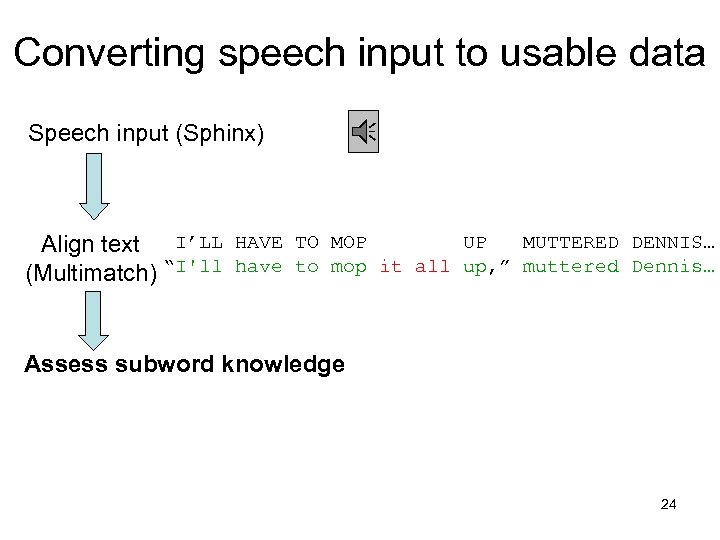

Converting speech input to usable data
• Speech input (Sphinx): I’LL HAVE TO MOP UP MUTTERED DENNIS…
• Align text (Multimatch): “I'll have to mop it all up,” muttered Dennis…
• Assess subword knowledge

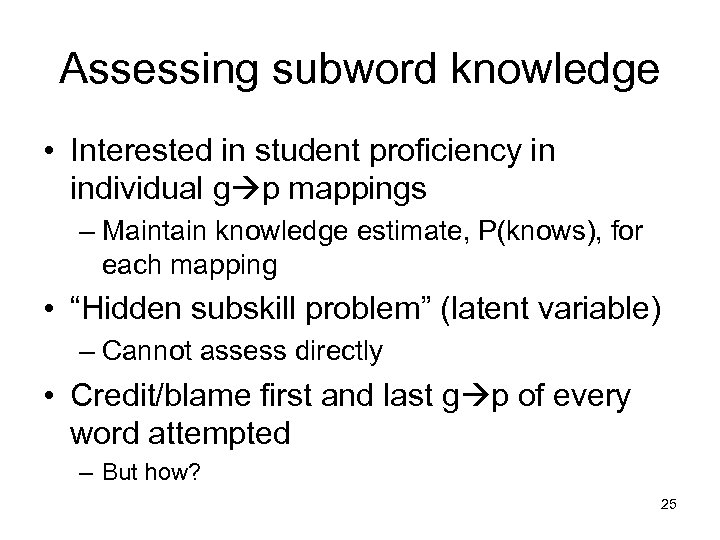

Assessing subword knowledge
• Interested in student proficiency in individual g→p mappings
  – Maintain knowledge estimate, P(knows), for each mapping
• “Hidden subskill problem” (latent variable)
  – Cannot assess directly
• Credit/blame first and last g→p of every word attempted
  – But how?

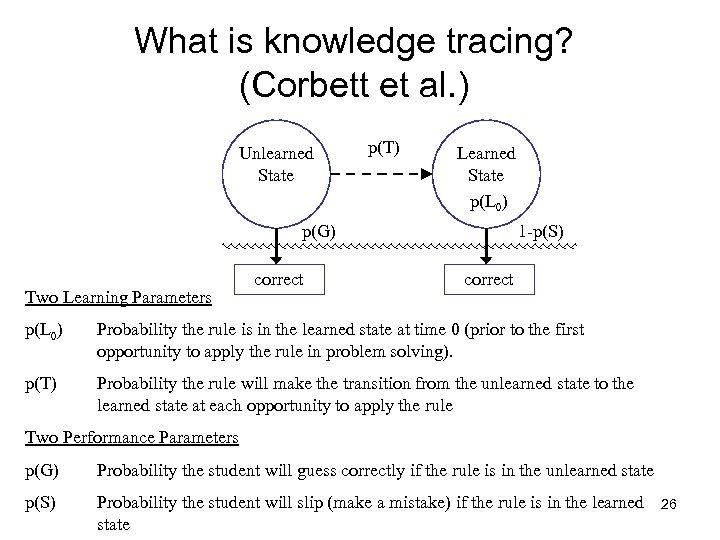

What is knowledge tracing? (Corbett et al.)
[Diagram: two states, Unlearned and Learned. p(L0) is the initial probability of the Learned state; p(T) is the transition from Unlearned to Learned; a correct response occurs with probability p(G) from the Unlearned state and 1 - p(S) from the Learned state.]
Two learning parameters
• p(L0): Probability the rule is in the learned state at time 0 (prior to the first opportunity to apply the rule in problem solving)
• p(T): Probability the rule will make the transition from the unlearned state to the learned state at each opportunity to apply the rule
Two performance parameters
• p(G): Probability the student will guess correctly if the rule is in the unlearned state
• p(S): Probability the student will slip (make a mistake) if the rule is in the learned state

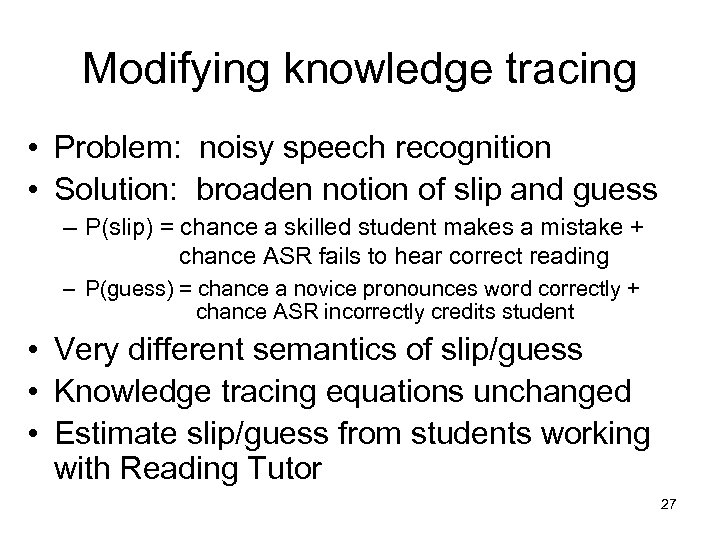
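
For reference, the standard knowledge tracing update built from these four parameters can be written as below. The slide shows only the state diagram, so the notation here is an assumption: P(L_n) denotes the probability that the rule is in the learned state after the n-th opportunity, with P(L_0) = p(L0).

```latex
\begin{aligned}
P(L_{n-1} \mid \text{correct}) &=
  \frac{P(L_{n-1})\,\bigl(1 - p(S)\bigr)}
       {P(L_{n-1})\,\bigl(1 - p(S)\bigr) + \bigl(1 - P(L_{n-1})\bigr)\,p(G)} \\[4pt]
P(L_{n-1} \mid \text{incorrect}) &=
  \frac{P(L_{n-1})\,p(S)}
       {P(L_{n-1})\,p(S) + \bigl(1 - P(L_{n-1})\bigr)\,\bigl(1 - p(G)\bigr)} \\[4pt]
P(L_n) &= P(L_{n-1} \mid \text{evidence})
  + \bigl(1 - P(L_{n-1} \mid \text{evidence})\bigr)\,p(T)
\end{aligned}
```

The first two lines are Bayes' rule applied to the observed response; the third adds the chance of learning at this opportunity.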

Modifying knowledge tracing
• Problem: noisy speech recognition
• Solution: broaden notion of slip and guess
  – P(slip) = chance a skilled student makes a mistake + chance ASR fails to hear correct reading
  – P(guess) = chance a novice pronounces word correctly + chance ASR incorrectly credits student
• Very different semantics of slip/guess
• Knowledge tracing equations unchanged
• Estimate slip/guess from students working with Reading Tutor

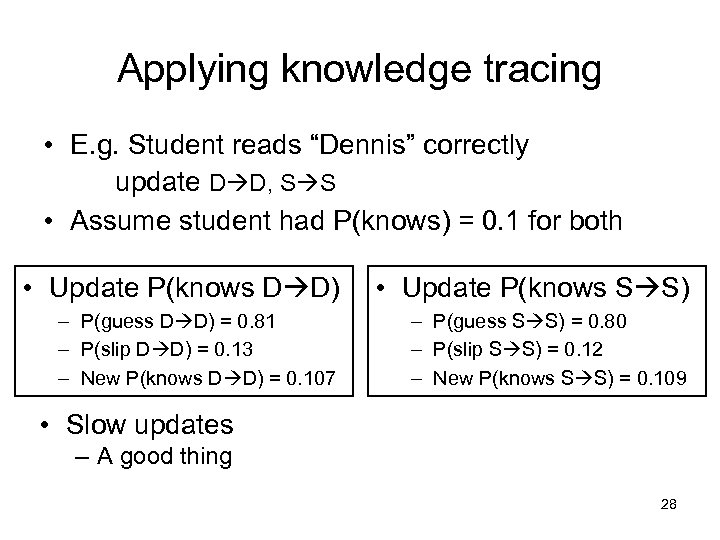

Applying knowledge tracing
• E.g. student reads “Dennis” correctly: update D→D, S→S
• Assume student had P(knows) = 0.1 for both
• Update P(knows D→D)
  – P(guess D→D) = 0.81
  – P(slip D→D) = 0.13
  – New P(knows D→D) = 0.107
• Update P(knows S→S)
  – P(guess S→S) = 0.80
  – P(slip S→S) = 0.12
  – New P(knows S→S) = 0.109
• Slow updates
  – A good thing

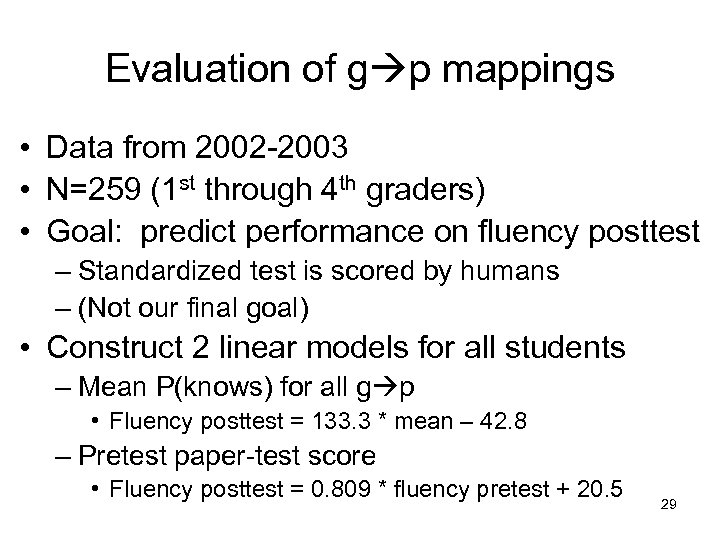
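
The numbers in the “Dennis” example can be reproduced with a Bayesian update on the observed response, sketched below. The function name `posterior_knows` is an assumption. The slide's figures match the evidence update alone, so this sketch leaves out the learning-transition step p(T).

```python
def posterior_knows(p_knows, p_guess, p_slip, correct):
    """Bayes update of P(knows) for one mapping after one observed reading."""
    if correct:
        # Correct reading: either known and no slip, or unknown and a guess.
        numerator = p_knows * (1 - p_slip)
        denominator = numerator + (1 - p_knows) * p_guess
    else:
        # Incorrect reading: either known and a slip, or unknown and no guess.
        numerator = p_knows * p_slip
        denominator = numerator + (1 - p_knows) * (1 - p_guess)
    return numerator / denominator


new_d = posterior_knows(0.1, p_guess=0.81, p_slip=0.13, correct=True)
new_s = posterior_knows(0.1, p_guess=0.80, p_slip=0.12, correct=True)
print(round(new_d, 3), round(new_s, 3))  # 0.107 0.109
```

With guess rates this high, a single correct reading is weak evidence of knowledge, which is why the estimates move so slowly.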

Evaluation of g→p mappings
• Data from 2002-2003
• N=259 (1st through 4th graders)
• Goal: predict performance on fluency posttest
  – Standardized test is scored by humans
  – (Not our final goal)
• Construct 2 linear models for all students
  – Mean P(knows) for all g→p
    • Fluency posttest = 133.3 * mean - 42.8
  – Pretest paper-test score
    • Fluency posttest = 0.809 * fluency pretest + 20.5

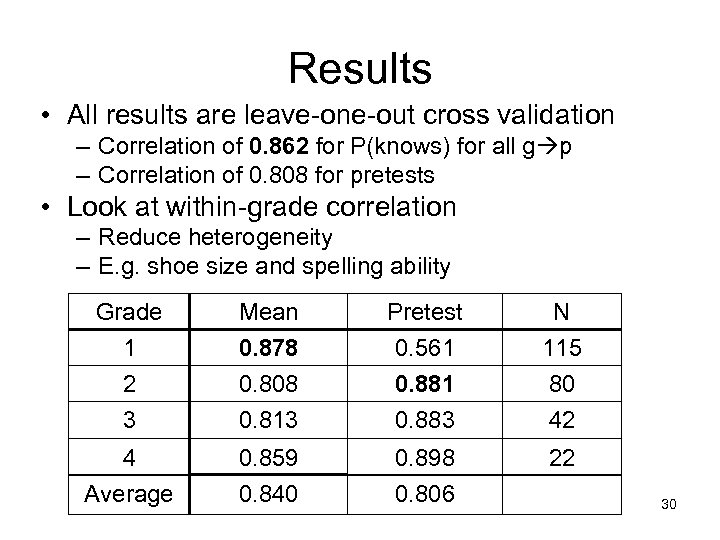

Results
• All results are leave-one-out cross-validation
  – Correlation of 0.862 for P(knows) for all g→p
  – Correlation of 0.808 for pretests
• Look at within-grade correlation
  – Reduce heterogeneity
  – E.g. shoe size and spelling ability

| Grade | 1 | 2 | 3 | 4 | Average |
|---|---|---|---|---|---|
| Mean P(knows) | 0.878 | 0.808 | 0.813 | 0.859 | 0.840 |
| Pretest | 0.561 | 0.883 | | 0.898 | 0.806 |
| N | 115 | 80 | 42 | 22 | |

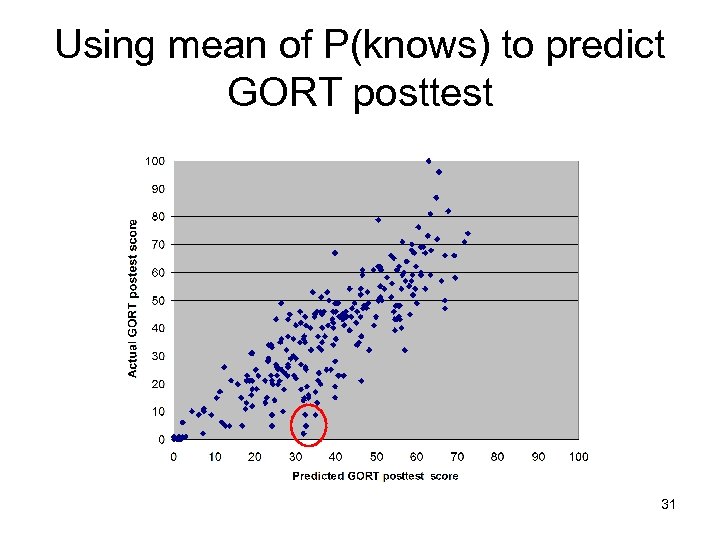
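
A leave-one-out evaluation of a one-predictor linear model, as reported on this slide, might look like the following. This is a pure-Python sketch with hypothetical function names (`fit_line`, `pearson`, `loo_correlation`). It assumes the reported correlation is between held-out predictions and actual posttest scores, which the slide does not state outright.

```python
from statistics import mean


def fit_line(xs, ys):
    """Ordinary least squares fit of y = slope * x + intercept."""
    mx, my = mean(xs), mean(ys)
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, my - slope * mx


def pearson(a, b):
    """Pearson correlation coefficient between two equal-length sequences."""
    ma, mb = mean(a), mean(b)
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    var_a = sum((x - ma) ** 2 for x in a)
    var_b = sum((y - mb) ** 2 for y in b)
    return cov / (var_a * var_b) ** 0.5


def loo_correlation(xs, ys):
    """Predict each student from a model fit on all the others, then correlate."""
    predictions = []
    for i in range(len(xs)):
        slope, intercept = fit_line(xs[:i] + xs[i + 1:], ys[:i] + ys[i + 1:])
        predictions.append(slope * xs[i] + intercept)
    return pearson(predictions, ys)
```

Here `xs` would hold each student's mean P(knows) (or pretest score) and `ys` the fluency posttest scores, both as plain lists.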

Using mean of P(knows) to predict GORT posttest

Outline
Predicting student behavior
• E.g. will the student click for help on this word?
Inferring student’s skills
• E.g. does the student know “ch” can make a K sound (e.g. “chaos”)?
Future work

Near-term goals
• Construct more general tools
  – Crosstabs
  – View a student’s growth in reading
• Automated assessment
  – Validate g→p mappings
  – Model latent variables
  – Improve ASR

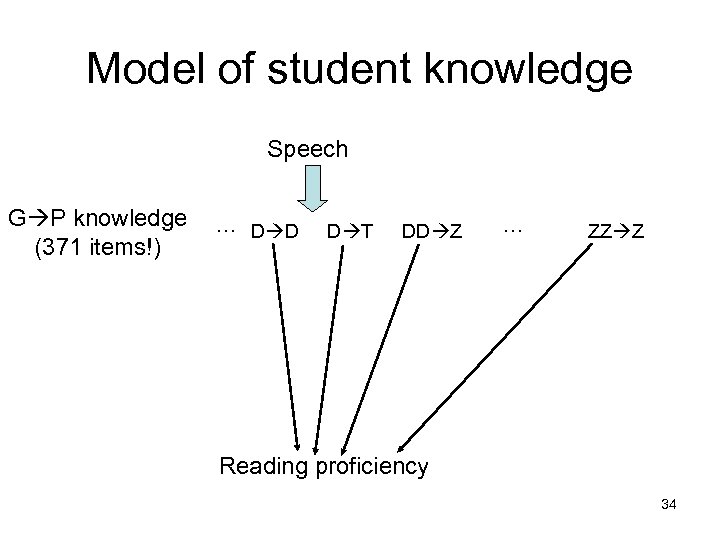

Model of student knowledge
[Diagram labels: Speech; G→P knowledge (371 items!): … D D D T DD Z … ZZ Z; Reading proficiency]

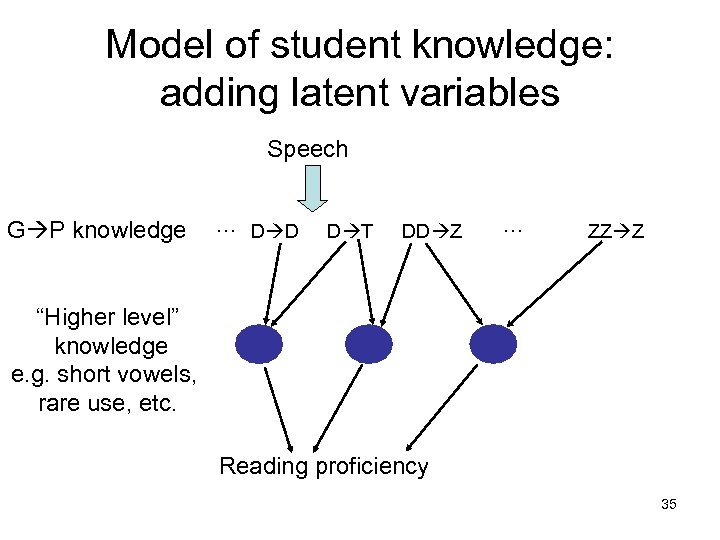

Model of student knowledge: adding latent variables
[Diagram labels: Speech; G→P knowledge: … D D D T DD Z … ZZ Z; “Higher level” knowledge, e.g. short vowels, rare use, etc.; Reading proficiency]

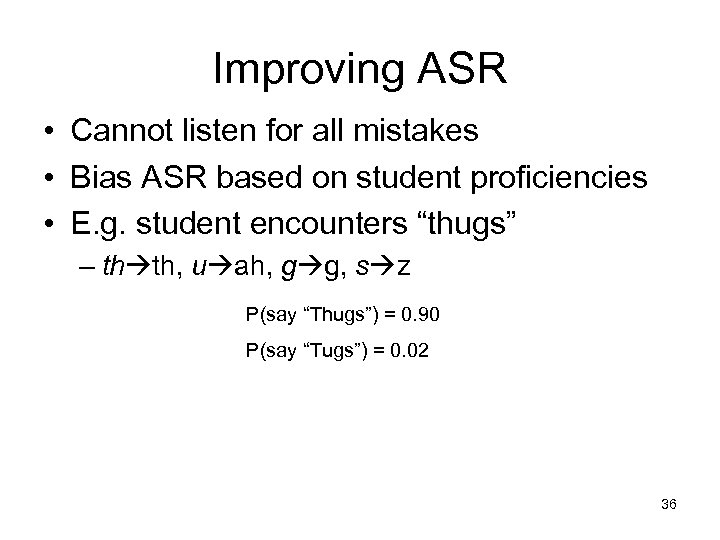

Improving ASR
• Cannot listen for all mistakes
• Bias ASR based on student proficiencies
• E.g. student encounters “thugs”
  – th→th, u→ah, g→g, s→z
P(say “Thugs”) = 0.90
P(say “Tugs”) = 0.02

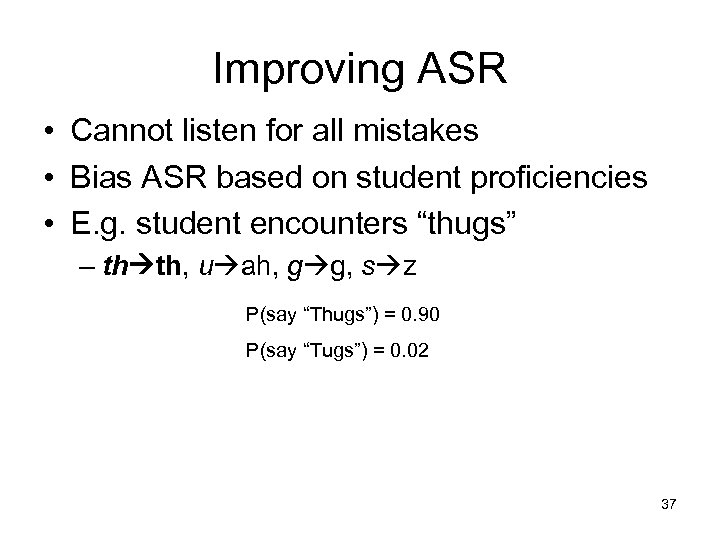

Improving ASR
• Cannot listen for all mistakes
• Bias ASR based on student proficiencies
• E.g. student encounters “thugs”
  – th→th, u→ah, g→g, s→z
P(say “Thugs”) = 0.90
P(say “Tugs”) = 0.02

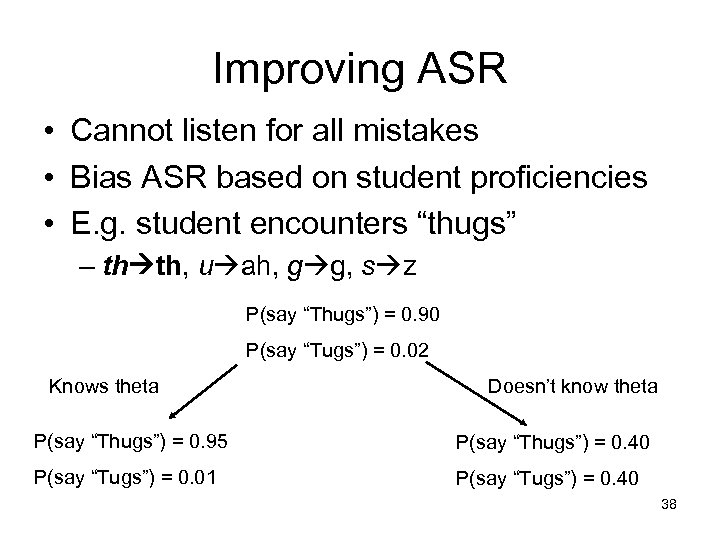

Improving ASR
• Cannot listen for all mistakes
• Bias ASR based on student proficiencies
• E.g. student encounters “thugs”
  – th→th, u→ah, g→g, s→z
P(say “Thugs”) = 0.90
P(say “Tugs”) = 0.02
Knows theta:
  P(say “Thugs”) = 0.95
  P(say “Tugs”) = 0.01
Doesn’t know theta:
  P(say “Thugs”) = 0.40
  P(say “Tugs”) = 0.40
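
One way the two conditional tables might be combined into a per-student bias is to marginalize over the student's current P(knows) for the th mapping, as sketched here. The slide does not say how the conditional probabilities are combined, so this mixture rule and the function name `biased_pronunciation_probs` are assumptions.

```python
def biased_pronunciation_probs(p_knows, if_known, if_unknown):
    """Mix two conditional pronunciation tables by the student's P(knows)."""
    return {
        word: p_knows * if_known[word] + (1 - p_knows) * if_unknown[word]
        for word in if_known
    }


# Conditional probabilities taken from the slide.
if_known = {"thugs": 0.95, "tugs": 0.01}
if_unknown = {"thugs": 0.40, "tugs": 0.40}

# A student with a low estimate for the mapping makes "tugs" far more likely.
weak_reader = biased_pronunciation_probs(0.2, if_known, if_unknown)
strong_reader = biased_pronunciation_probs(0.9, if_known, if_unknown)
```

Under this assumption the recognizer would expect “tugs” about a third of the time from the weaker reader, and only about 5% of the time from the stronger one.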

Longer-term goals
• Improving ASR good goal due to ability to evaluate changes offline
• However, would like to improve educational outcomes
  – Problem: harder to evaluate learning since human trials are expensive
  – Solution: construct a simulation of the student and tutor and use reinforcement learning (RL)
  – Approach used in my dissertation work (at UMass) on ADVISOR in the AnimalWatch system

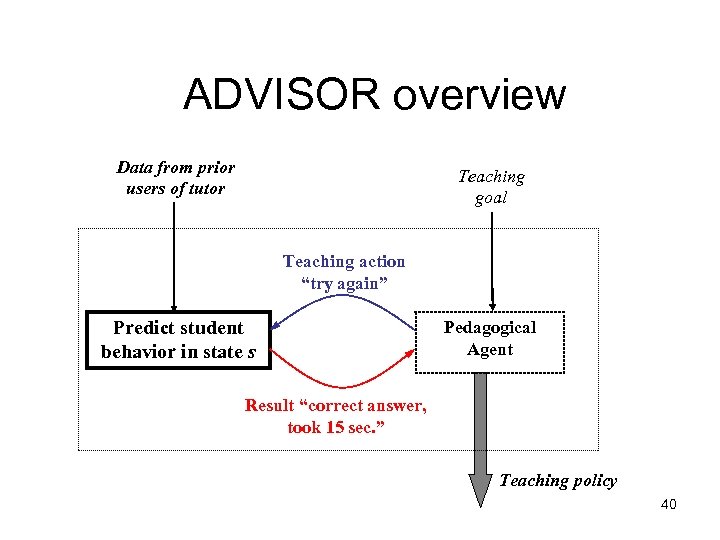

ADVISOR overview
[Diagram labels: Data from prior users of tutor; Teaching goal; Teaching action (“try again”); Predict student behavior in state s; Pedagogical Agent; Result (“correct answer, took 15 sec.”); Teaching policy]

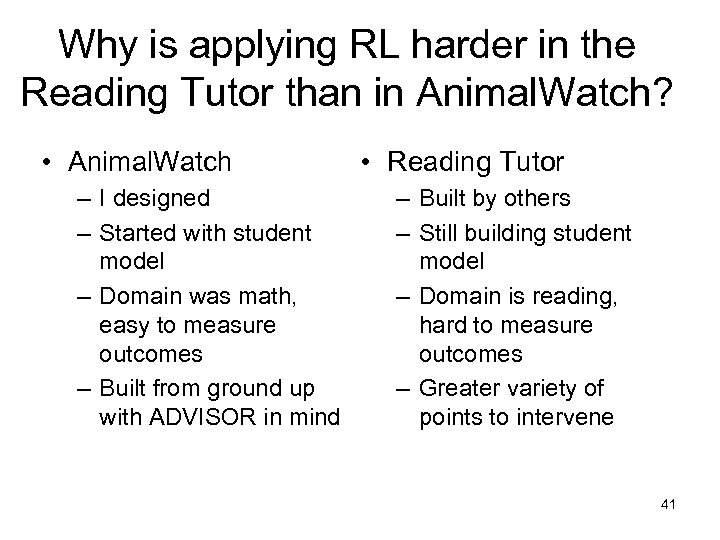

Why is applying RL harder in the Reading Tutor than in AnimalWatch?
• AnimalWatch
  – I designed
  – Started with student model
  – Domain was math, easy to measure outcomes
  – Built from ground up with ADVISOR in mind
• Reading Tutor
  – Built by others
  – Still building student model
  – Domain is reading, hard to measure outcomes
  – Greater variety of points to intervene

Conclusions
• Can assess students despite noisy data
• For predicting student behavior, data are plentiful
  – Can examine models and features
• For predicting student test scores, data are scarce
  – Restricted to simple models and need good features
• Educational data mining offers many opportunities to improve efficacy of teaching
• Big data is a “secret weapon” but…
  – We still don’t have enough to do everything we want
