99f665f230885d877a6ee3ca079e82a9.ppt

- Number of slides: 63

Early Systems Costing
Prof. Ricardo Valerdi
Systems & Industrial Engineering
rvalerdi@arizona.edu
Dec 15, 2011
INCOSE Brazil Systems Engineering Week
INPE/ITA, São José dos Campos, Brazil

Take-Aways
1. Cost ≈ f(Effort) ≈ f(Size) ≈ f(Complexity)
2. Requirements understanding and “ilities” are the most influential on cost
3. Systems engineering yields high ROI when done early and well

Two case studies:
• SE estimate with limited information
• Selection of process improvement initiative

Cost Commitment on Projects
Blanchard, B., Fabrycky, W., Systems Engineering & Analysis, Prentice Hall, 2010.

Cone of Uncertainty
[Figure: relative size range (4x, 2x, x, 0.5x, 0.25x) plotted against phases and milestones. Phases: Feasibility, Plans/Rqts., Design, Develop and Test. Milestones: Operational Concept, Life Cycle Objectives, Life Cycle Architecture, Initial Operating Capability.]
Boehm, B. W., Software Engineering Economics, Prentice Hall, 1981.

The Delphic Sibyl
Michelangelo Buonarroti
Cappella Sistina, Il Vaticano (1508-1512)

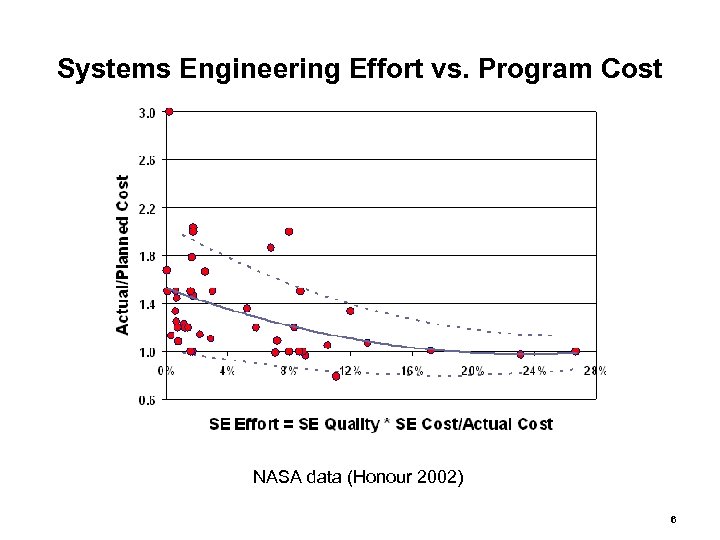

Systems Engineering Effort vs. Program Cost
NASA data (Honour 2002)

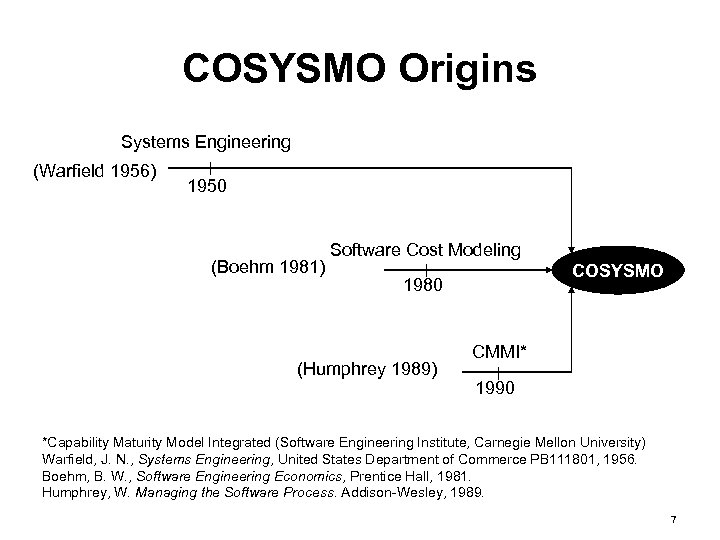

COSYSMO Origins
[Timeline figure: Systems Engineering (Warfield 1956), 1950; Software Cost Modeling (Boehm 1981), 1980; CMMI* (Humphrey 1989), 1990; COSYSMO]
*Capability Maturity Model Integrated (Software Engineering Institute, Carnegie Mellon University)
Warfield, J. N., Systems Engineering, United States Department of Commerce PB 111801, 1956.
Boehm, B. W., Software Engineering Economics, Prentice Hall, 1981.
Humphrey, W., Managing the Software Process, Addison-Wesley, 1989.

How is Systems Engineering Defined?
• Acquisition and Supply
  – Supply Process
  – Acquisition Process
• Technical Management
  – Planning Process
  – Assessment Process
  – Control Process
• System Design
  – Requirements Definition Process
  – Solution Definition Process
• Product Realization
  – Implementation Process
  – Transition to Use Process
• Technical Evaluation
  – Systems Analysis Process
  – Requirements Validation Process
  – System Verification Process
  – End Products Validation Process
EIA/ANSI 632, Processes for Engineering a System, 1999.

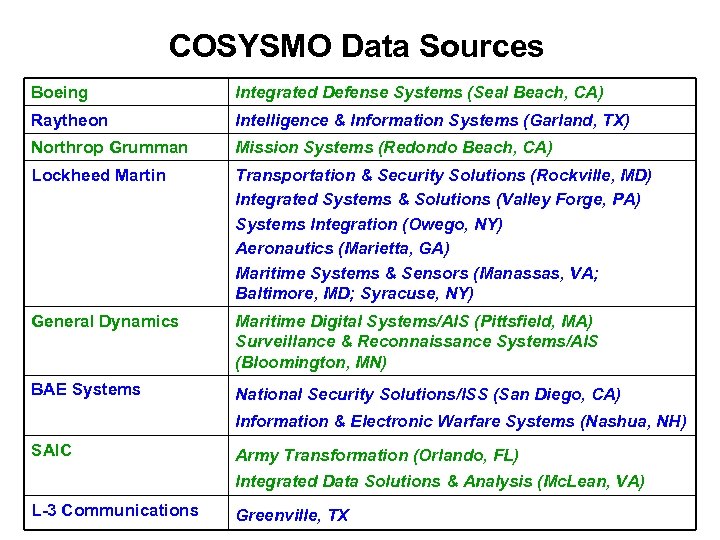

COSYSMO Data Sources
• Boeing
  – Integrated Defense Systems (Seal Beach, CA)
• Raytheon
  – Intelligence & Information Systems (Garland, TX)
• Northrop Grumman
  – Mission Systems (Redondo Beach, CA)
• Lockheed Martin
  – Transportation & Security Solutions (Rockville, MD)
  – Integrated Systems & Solutions (Valley Forge, PA)
  – Systems Integration (Owego, NY)
  – Aeronautics (Marietta, GA)
  – Maritime Systems & Sensors (Manassas, VA; Baltimore, MD; Syracuse, NY)
• General Dynamics
  – Maritime Digital Systems/AIS (Pittsfield, MA)
  – Surveillance & Reconnaissance Systems/AIS (Bloomington, MN)
• BAE Systems
  – National Security Solutions/ISS (San Diego, CA)
  – Information & Electronic Warfare Systems (Nashua, NH)
• SAIC
  – Army Transformation (Orlando, FL)
  – Integrated Data Solutions & Analysis (McLean, VA)
• L-3 Communications
  – Greenville, TX

Modeling Methodology

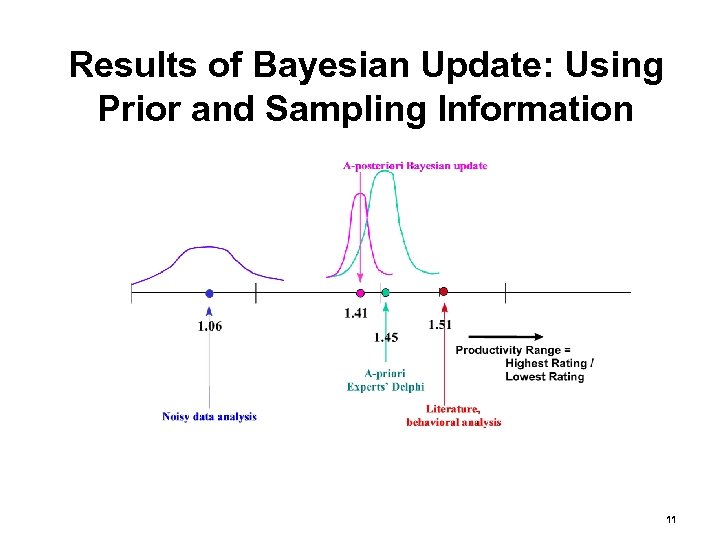

Results of Bayesian Update: Using Prior and Sampling Information

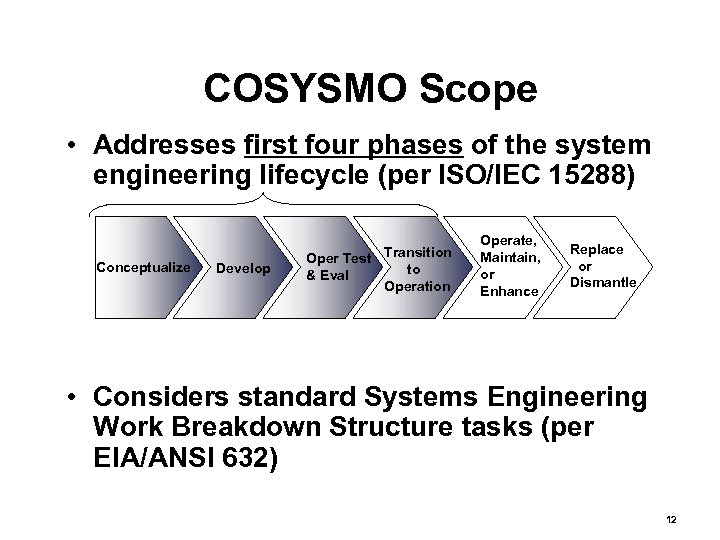

COSYSMO Scope
• Addresses first four phases of the system engineering lifecycle (per ISO/IEC 15288)
  – Life cycle phases: Conceptualize; Develop; Operational Test & Evaluation; Transition to Operation; Operate, Maintain, or Enhance; Replace or Dismantle
• Considers standard Systems Engineering Work Breakdown Structure tasks (per EIA/ANSI 632)

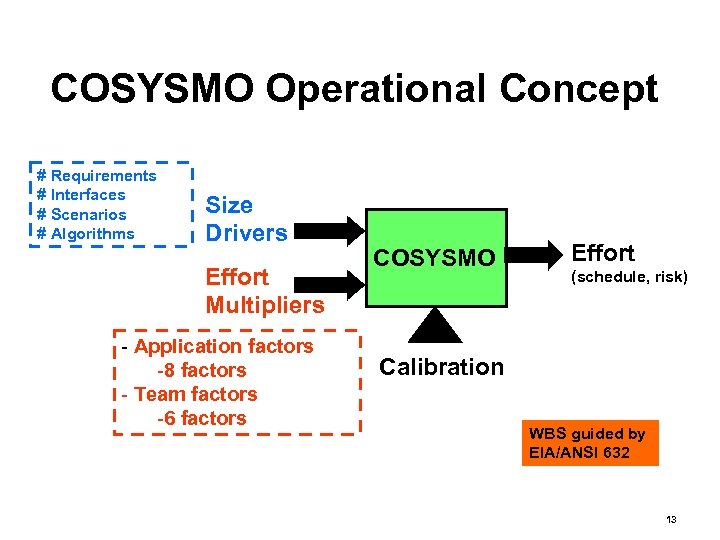

COSYSMO Operational Concept
[Diagram: inputs to COSYSMO are Size Drivers (# Requirements, # Interfaces, # Scenarios, # Algorithms), Effort Multipliers (Application factors: 8 factors; Team factors: 6 factors), and Calibration; the output is Effort (schedule, risk). WBS guided by EIA/ANSI 632.]

Software Cost Estimating Relationship
• MM = man-months
• a = calibration constant
• S = size driver
• E = scale factor
• c = cost driver(s)
• KDSI = thousands of delivered source instructions
Boehm, B. W., Software Engineering Economics, Prentice Hall, 1981.

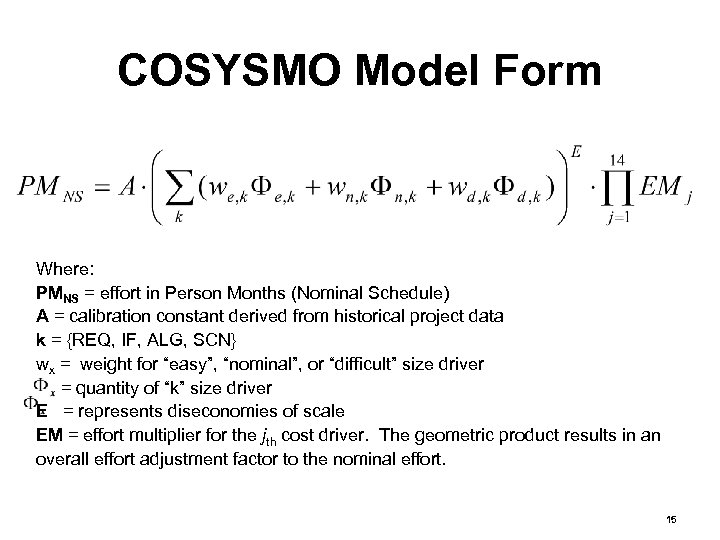
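
The relationship itself appears on the slide only as an image. Based on the symbol list (MM, a, S, E, c) and the form Boehm (1981) uses for COCOMO, it is presumably of the following shape. The product index i is an assumption added here for readability.

```latex
\[
  MM = a \cdot S^{E} \cdot \prod_{i} c_i ,
  \qquad \text{with } S \text{ measured in KDSI}
\]
```

Read this as: size raised to a scale factor gives nominal effort, and the cost drivers then scale that nominal effort up or down.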

COSYSMO Model Form
Where:
• PM_NS = effort in Person Months (Nominal Schedule)
• A = calibration constant derived from historical project data
• k = {REQ, IF, ALG, SCN}
• w_x = weight for “easy”, “nominal”, or “difficult” size driver
• Φ_x = quantity of “k” size driver
• E = represents diseconomies of scale
• EM_j = effort multiplier for the jth cost driver. The geometric product results in an overall effort adjustment factor to the nominal effort.

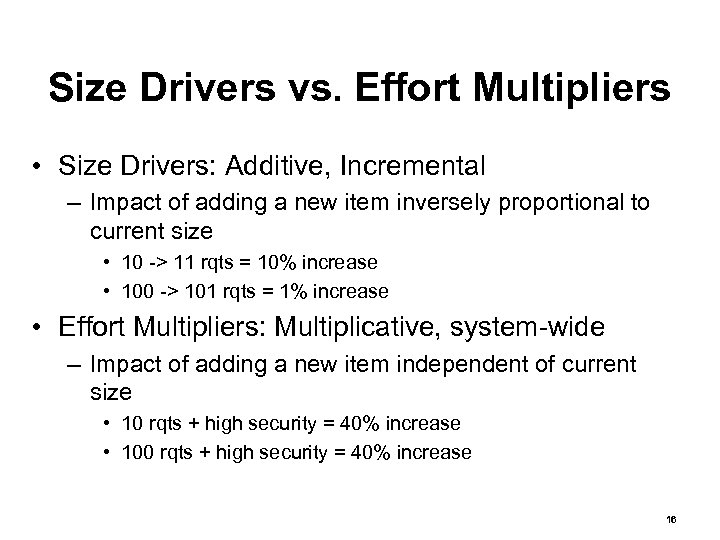
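
The model equation is carried by the slide image rather than the text. A reconstruction consistent with the symbol definitions above, and with the 14 effort multipliers (8 application factors plus 6 team factors) mentioned on the operational concept slide, would be the following. The symbol Φ for the size-driver quantity and the subscripts e, n, d (easy, nominal, difficult) are assumptions about notation.

```latex
\[
  PM_{NS} = A \cdot
  \left( \sum_{k} \left( w_{e,k}\,\Phi_{e,k} + w_{n,k}\,\Phi_{n,k} + w_{d,k}\,\Phi_{d,k} \right) \right)^{E}
  \cdot \prod_{j=1}^{14} EM_{j},
  \qquad k \in \{REQ, IF, ALG, SCN\}
\]
```

The inner sum is the weighted system size, the exponent E > 1 expresses diseconomies of scale, and the product of effort multipliers adjusts the nominal effort.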

Size Drivers vs. Effort Multipliers
• Size Drivers: additive, incremental
  – Impact of adding a new item is inversely proportional to current size
    • 10 -> 11 rqts = 10% increase
    • 100 -> 101 rqts = 1% increase
• Effort Multipliers: multiplicative, system-wide
  – Impact of adding a new item is independent of current size
    • 10 rqts + high security = 40% increase
    • 100 rqts + high security = 40% increase

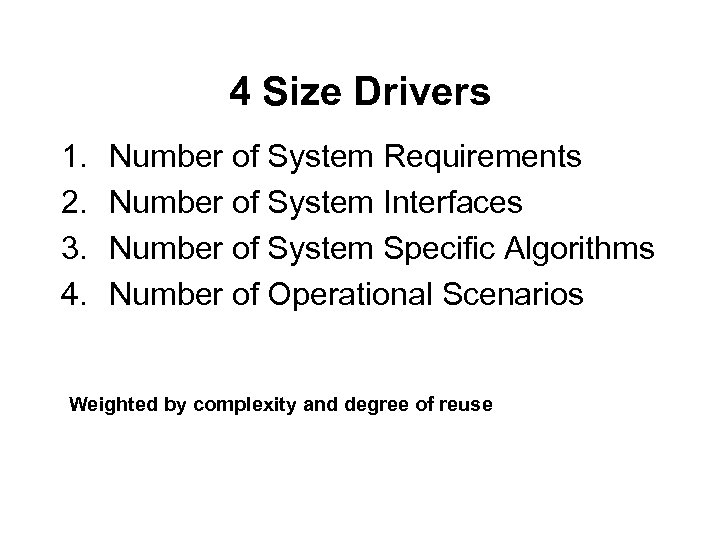
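
The contrast on this slide can be checked with a few lines of arithmetic. The sketch below is a toy model, not COSYSMO itself: it assumes effort is linear in size (scale exponent of 1) and uses a single hypothetical multiplier of 1.40 to stand in for the slide's "high security" rating.

```python
def relative_increase(before: float, after: float) -> float:
    """Fractional change when going from `before` to `after`."""
    return (after - before) / before


def effort(size: float, multiplier: float = 1.0) -> float:
    """Toy model (assumption): effort is size times one effort multiplier."""
    return size * multiplier


HIGH_SECURITY = 1.40  # stands in for the 40% penalty quoted on the slide

for n in (10, 100):
    # Additive: one more requirement matters less as the system grows.
    size_effect = relative_increase(effort(n), effort(n + 1))
    # Multiplicative: the rating change scales the whole system.
    multiplier_effect = relative_increase(effort(n), effort(n, HIGH_SECURITY))
    print(f"{n} rqts: +1 rqt = {size_effect:.0%}, "
          f"high security = {multiplier_effect:.0%}")
```

With a scale exponent above 1 the size percentages would shift slightly, but the qualitative point (size effects shrink with scale, multiplier effects do not) stays the same.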

4 Size Drivers
1. Number of System Requirements
2. Number of System Interfaces
3. Number of System Specific Algorithms
4. Number of Operational Scenarios

Weighted by complexity and degree of reuse

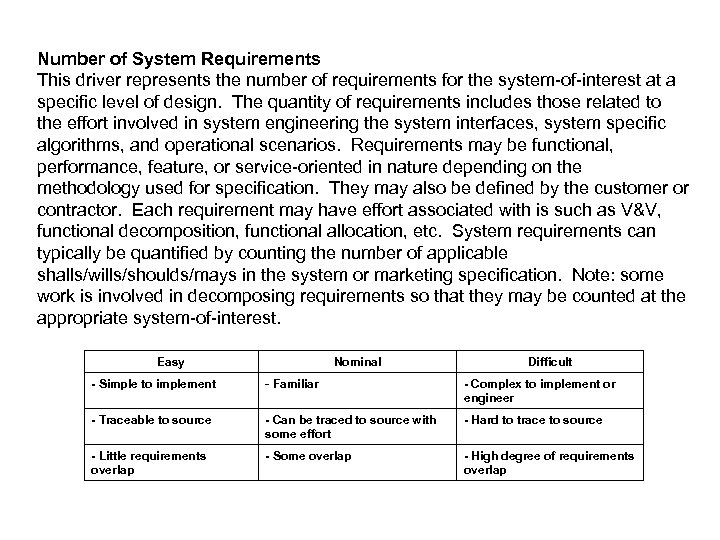

Number of System Requirements
This driver represents the number of requirements for the system-of-interest at a specific level of design. The quantity of requirements includes those related to the effort involved in system engineering the system interfaces, system specific algorithms, and operational scenarios. Requirements may be functional, performance, feature, or service-oriented in nature depending on the methodology used for specification. They may also be defined by the customer or contractor. Each requirement may have effort associated with it, such as V&V, functional decomposition, functional allocation, etc. System requirements can typically be quantified by counting the number of applicable shalls/wills/shoulds/mays in the system or marketing specification. Note: some work is involved in decomposing requirements so that they may be counted at the appropriate system-of-interest.

| Easy | Nominal | Difficult |
|---|---|---|
| Simple to implement | Familiar | Complex to implement or engineer |
| Traceable to source | Can be traced to source with some effort | Hard to trace to source |
| Little requirements overlap | Some overlap | High degree of requirements overlap |

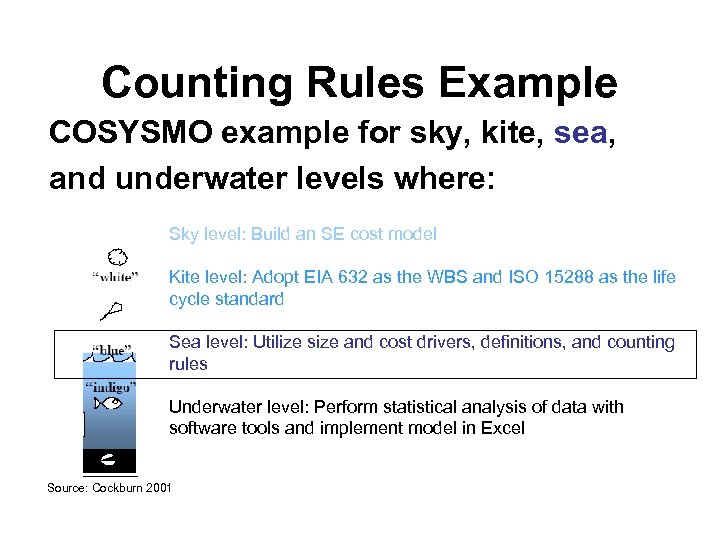
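
The counting rule (tally the shalls/wills/shoulds/mays in the specification) lends itself to a first-pass script. The sketch below is only that: the sample specification text and the function name are hypothetical, and a raw keyword count still needs manual review, since the slide asks for *applicable* statements counted at the right system-of-interest level.

```python
import re
from collections import Counter

REQUIREMENT_KEYWORDS = ("shall", "will", "should", "may")
# Whole-word, case-insensitive match on any of the keywords.
_PATTERN = re.compile(
    r"\b(" + "|".join(REQUIREMENT_KEYWORDS) + r")\b", re.IGNORECASE
)


def count_requirement_keywords(spec_text: str) -> Counter:
    """Tally requirement keywords as a rough requirements count."""
    return Counter(m.group(1).lower() for m in _PATTERN.finditer(spec_text))


# Hypothetical specification excerpt, for illustration only.
sample_spec = """
The system shall log every operator command.
The display should refresh within one second.
Operators may export logs; the export shall be encrypted.
"""

counts = count_requirement_keywords(sample_spec)
print(dict(counts), "total:", sum(counts.values()))
```

Each counted statement would then be rated easy, nominal, or difficult using the table above before it enters the size calculation.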

Counting Rules Example
COSYSMO example for sky, kite, sea, and underwater levels, where:
• Sky level: Build an SE cost model
• Kite level: Adopt EIA 632 as the WBS and ISO 15288 as the life cycle standard
• Sea level: Utilize size and cost drivers, definitions, and counting rules
• Underwater level: Perform statistical analysis of data with software tools and implement model in Excel
Source: Cockburn 2001

Size Driver Weights

| Size driver | Easy | Nominal | Difficult |
|---|---|---|---|
| # of System Requirements | 0.5 | 1.00 | 5.0 |
| # of Interfaces | 1.7 | 4.3 | 9.8 |
| # of Critical Algorithms | 3.4 | 6.5 | 18.2 |
| # of Operational Scenarios | 9.8 | 22.8 | 47.4 |

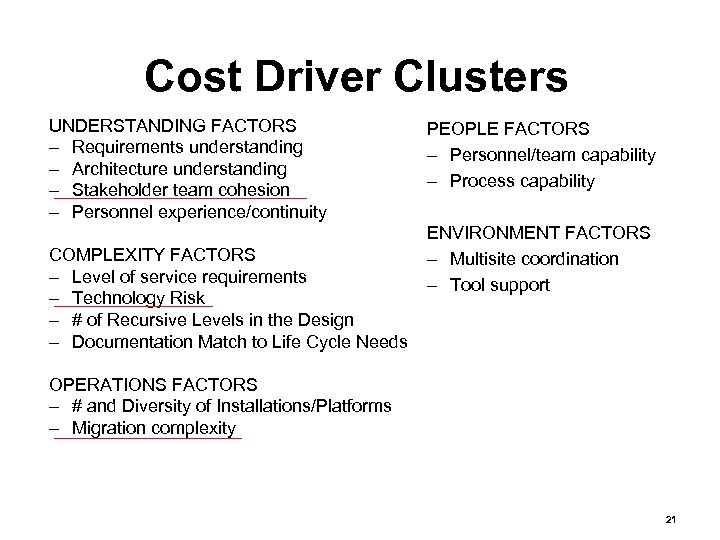
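
To show how these weights turn raw counts into a single size figure, here is a small sketch. The weights are the ones listed on this slide; the dictionary layout, the function name, and the example counts (200 easy, 200 nominal, and 100 difficult requirements) are illustrative assumptions.

```python
# Weights from the Size Driver Weights slide, keyed by driver and difficulty.
SIZE_WEIGHTS = {
    "requirements": {"easy": 0.5, "nominal": 1.00, "difficult": 5.0},
    "interfaces": {"easy": 1.7, "nominal": 4.3, "difficult": 9.8},
    "algorithms": {"easy": 3.4, "nominal": 6.5, "difficult": 18.2},
    "scenarios": {"easy": 9.8, "nominal": 22.8, "difficult": 47.4},
}


def weighted_size(counts: dict) -> float:
    """Sum weight * quantity over every driver and difficulty level."""
    total = 0.0
    for driver, by_level in counts.items():
        for level, quantity in by_level.items():
            total += SIZE_WEIGHTS[driver][level] * quantity
    return total


# Illustrative counts: requirements only, no interfaces/algorithms/scenarios.
example_counts = {
    "requirements": {"easy": 200, "nominal": 200, "difficult": 100},
}
print(weighted_size(example_counts))  # 800.0
```

Because every driver is expressed relative to a nominal requirement (weight 1.00), the result can be read as "equivalent nominal requirements".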

Cost Driver Clusters
• UNDERSTANDING FACTORS
  – Requirements understanding
  – Architecture understanding
  – Stakeholder team cohesion
  – Personnel experience/continuity
• COMPLEXITY FACTORS
  – Level of service requirements
  – Technology Risk
  – # of Recursive Levels in the Design
  – Documentation Match to Life Cycle Needs
• PEOPLE FACTORS
  – Personnel/team capability
  – Process capability
• ENVIRONMENT FACTORS
  – Multisite coordination
  – Tool support
• OPERATIONS FACTORS
  – # and Diversity of Installations/Platforms
  – Migration complexity

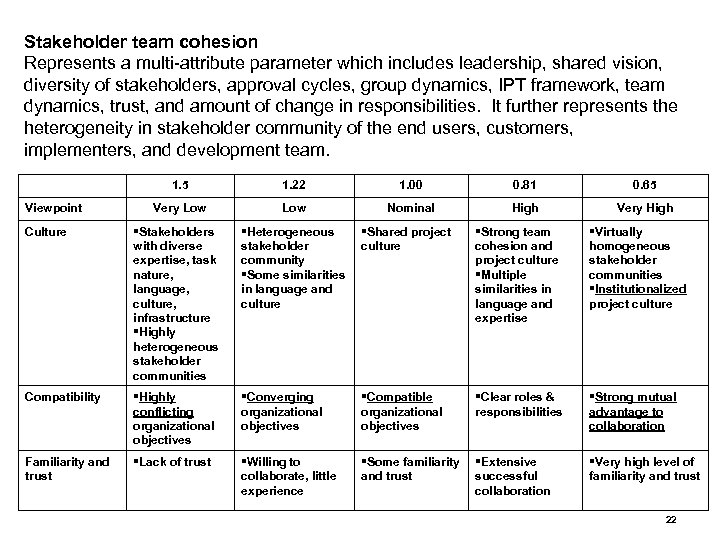

Stakeholder team cohesion
Represents a multi-attribute parameter which includes leadership, shared vision, diversity of stakeholders, approval cycles, group dynamics, IPT framework, team dynamics, trust, and amount of change in responsibilities. It further represents the heterogeneity in stakeholder community of the end users, customers, implementers, and development team.

| Viewpoint | Very Low | Low | Nominal | High | Very High |
|---|---|---|---|---|---|
| Effort multiplier | 1.50 | 1.22 | 1.00 | 0.81 | 0.65 |
| Culture | Stakeholders with diverse expertise, task nature, language, culture, infrastructure; highly heterogeneous stakeholder communities | Heterogeneous stakeholder community; some similarities in language and culture | Shared project culture | Strong team cohesion and project culture; multiple similarities in language and expertise | Virtually homogeneous stakeholder communities; institutionalized project culture |
| Compatibility | Highly conflicting organizational objectives | Converging organizational objectives | Compatible organizational objectives | Clear roles & responsibilities | Strong mutual advantage to collaboration |
| Familiarity and trust | Lack of trust | Willing to collaborate, little experience | Some familiarity and trust | Extensive successful collaboration | Very high level of familiarity and trust |

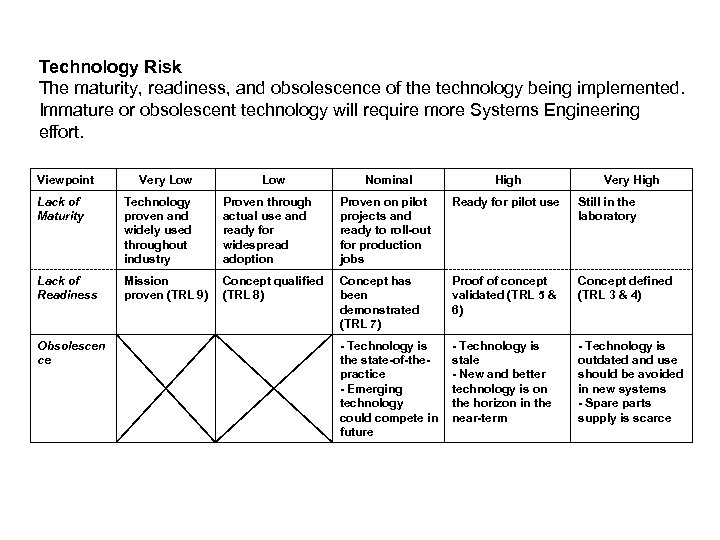

Technology Risk
The maturity, readiness, and obsolescence of the technology being implemented. Immature or obsolescent technology will require more Systems Engineering effort.

| Viewpoint | Very Low | Low | Nominal | High | Very High |
|---|---|---|---|---|---|
| Lack of Maturity | Technology proven and widely used throughout industry | Proven through actual use and ready for widespread adoption | Proven on pilot projects and ready to roll-out for production jobs | Ready for pilot use | Still in the laboratory |
| Lack of Readiness | Mission proven (TRL 9) | Concept qualified (TRL 8) | Concept has been demonstrated (TRL 7) | Proof of concept validated (TRL 5 & 6) | Concept defined (TRL 3 & 4) |
| Obsolescence | | | Technology is the state-of-the-practice; emerging technology could compete in future | Technology is stale; new and better technology is on the horizon in the near-term | Technology is outdated and use should be avoided in new systems; spare parts supply is scarce |

Migration complexity
This cost driver rates the extent to which the legacy system affects the migration complexity, if any. Legacy system components, databases, workflows, environments, etc., may affect the new system implementation due to new technology introductions, planned upgrades, increased performance, business process reengineering, etc.

| Viewpoint | Nominal | High | Very High | Extra High |
|---|---|---|---|---|
| Legacy contractor | Self; legacy system is well documented. Original team largely available | Self; original development team not available; most documentation available | Different contractor; limited documentation | Original contractor out of business; no documentation available |
| Effect of legacy system on new system | Everything is new; legacy system is completely replaced or non-existent | Migration is restricted to integration only | Migration is related to integration and development | Migration is related to integration, development, architecture and design |

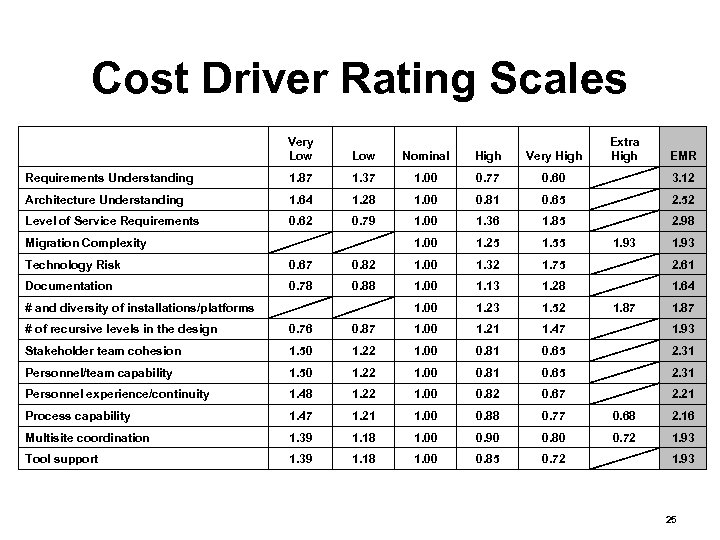

Cost Driver Rating Scales

| Cost driver | Very Low | Low | Nominal | High | Very High | Extra High | EMR |
|---|---|---|---|---|---|---|---|
| Requirements Understanding | 1.87 | 1.37 | 1.00 | 0.77 | 0.60 | | 3.12 |
| Architecture Understanding | 1.64 | 1.28 | 1.00 | 0.81 | 0.65 | | 2.52 |
| Level of Service Requirements | 0.62 | 0.79 | 1.00 | 1.36 | 1.85 | | 2.98 |
| Migration Complexity | | | 1.00 | 1.25 | 1.55 | 1.93 | 1.93 |
| Technology Risk | 0.67 | 0.82 | 1.00 | 1.32 | 1.75 | | 2.61 |
| Documentation | 0.78 | 0.88 | 1.00 | 1.13 | 1.28 | | 1.64 |
| # and diversity of installations/platforms | | | 1.00 | 1.23 | 1.52 | 1.87 | 1.87 |
| # of recursive levels in the design | 0.76 | 0.87 | 1.00 | 1.21 | 1.47 | | 1.93 |
| Stakeholder team cohesion | 1.50 | 1.22 | 1.00 | 0.81 | 0.65 | | 2.31 |
| Personnel/team capability | 1.50 | 1.22 | 1.00 | 0.81 | 0.65 | | 2.31 |
| Personnel experience/continuity | 1.48 | 1.22 | 1.00 | 0.82 | 0.67 | | 2.21 |
| Process capability | 1.47 | 1.21 | 1.00 | 0.88 | 0.77 | 0.68 | 2.16 |
| Multisite coordination | 1.39 | 1.18 | 1.00 | 0.90 | 0.80 | 0.72 | 1.93 |
| Tool support | 1.39 | 1.18 | 1.00 | 0.85 | 0.72 | | 1.93 |

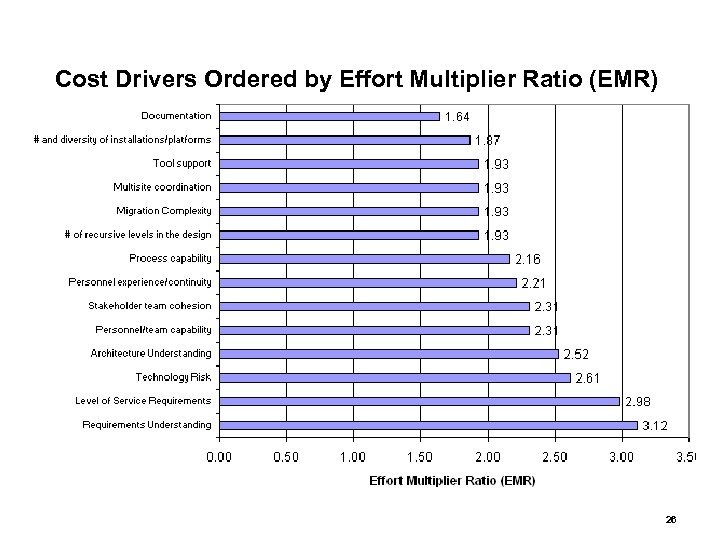
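
The EMR column can be reproduced from the rating values: for each driver it matches the largest multiplier divided by the smallest. The sketch below checks this for a handful of drivers and ranks them; the subset of drivers chosen and the helper name are arbitrary choices made for illustration.

```python
# Rating-scale multipliers copied from the slide for a few drivers.
RATING_SCALES = {
    "Requirements Understanding": [1.87, 1.37, 1.00, 0.77, 0.60],
    "Architecture Understanding": [1.64, 1.28, 1.00, 0.81, 0.65],
    "Technology Risk": [0.67, 0.82, 1.00, 1.32, 1.75],
    "Documentation": [0.78, 0.88, 1.00, 1.13, 1.28],
    "Process capability": [1.47, 1.21, 1.00, 0.88, 0.77, 0.68],
}


def effort_multiplier_ratio(multipliers: list) -> float:
    """EMR: ratio of the largest to the smallest multiplier on the scale."""
    return max(multipliers) / min(multipliers)


# Rank drivers from most to least influential on effort.
ranked = sorted(
    RATING_SCALES,
    key=lambda name: effort_multiplier_ratio(RATING_SCALES[name]),
    reverse=True,
)
for name in ranked:
    print(f"{name}: EMR = {effort_multiplier_ratio(RATING_SCALES[name]):.2f}")
```

A driver with a large EMR swings the estimate the most between its best and worst rating, which is why requirements understanding tops the ordering.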

Cost Drivers Ordered by Effort Multiplier Ratio (EMR)

Effort Profiling
[Figure: effort profile of the ANSI/EIA 632 process groups (Acquisition & Supply, Technical Management, System Design, Product Realization, Technical Evaluation) across the ISO/IEC 15288 phases (Conceptualize, Develop, Operational Test & Evaluation, Transition to Operation)]

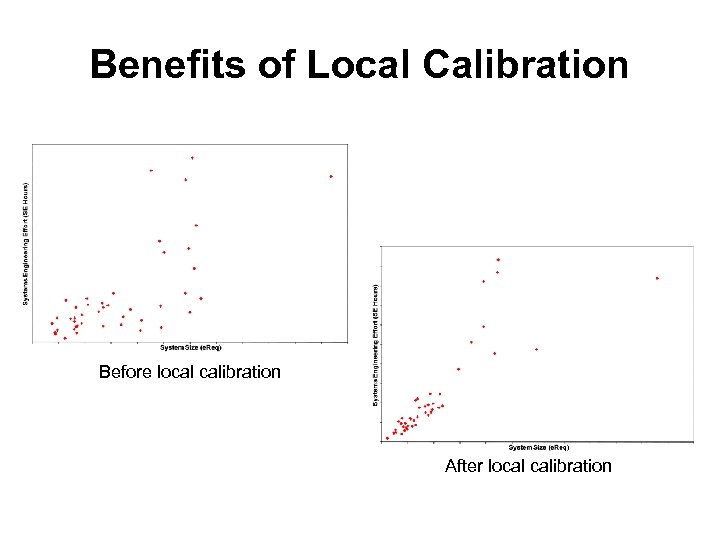

Benefits of Local Calibration
[Figure panels: Before local calibration; After local calibration]

Prediction Accuracy
[Figure labels: PRED(30), PRED(25), PRED(20)]
• PRED(30) = 100%
• PRED(25) = 57%

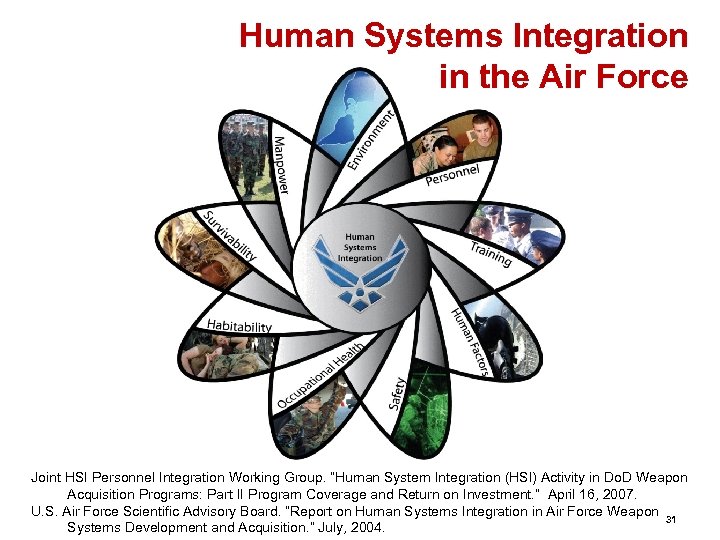
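
PRED(L) is conventionally the share of projects whose estimate lands within L% of the actual value. The sketch below computes it under that definition; the project data are hypothetical and are not the COSYSMO calibration set behind the percentages on the slide.

```python
def pred(actuals: list, estimates: list, level_percent: float) -> float:
    """Fraction of projects whose relative error is within level_percent."""
    if len(actuals) != len(estimates) or not actuals:
        raise ValueError("need two non-empty lists of equal length")
    threshold = level_percent / 100.0
    hits = sum(
        1
        for actual, estimate in zip(actuals, estimates)
        if abs(actual - estimate) / actual <= threshold
    )
    return hits / len(actuals)


# Hypothetical person-month data for four completed projects.
actual_pm = [100.0, 250.0, 80.0, 400.0]
estimated_pm = [110.0, 180.0, 95.0, 410.0]

for level in (30, 25, 20):
    print(f"PRED({level}) = {pred(actual_pm, estimated_pm, level):.0%}")
```

On this made-up data every estimate is within 30% of its actual, while one project (28% error) falls outside the 25% and 20% bands.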

Human Systems Integration in the Air Force
Joint HSI Personnel Integration Working Group. “Human System Integration (HSI) Activity in DoD Weapon Acquisition Programs: Part II Program Coverage and Return on Investment.” April 16, 2007.
U.S. Air Force Scientific Advisory Board. “Report on Human Systems Integration in Air Force Weapon Systems Development and Acquisition.” July 2004.

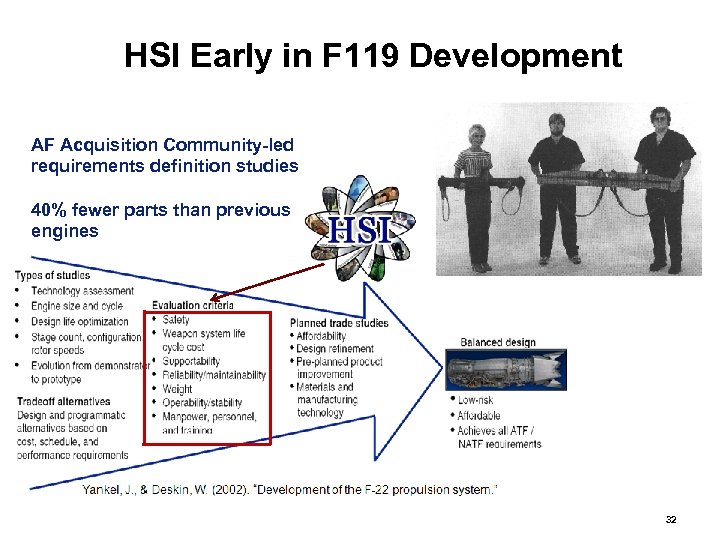

HSI Early in F119 Development
• AF Acquisition Community-led requirements definition studies
• 40% fewer parts than previous engines

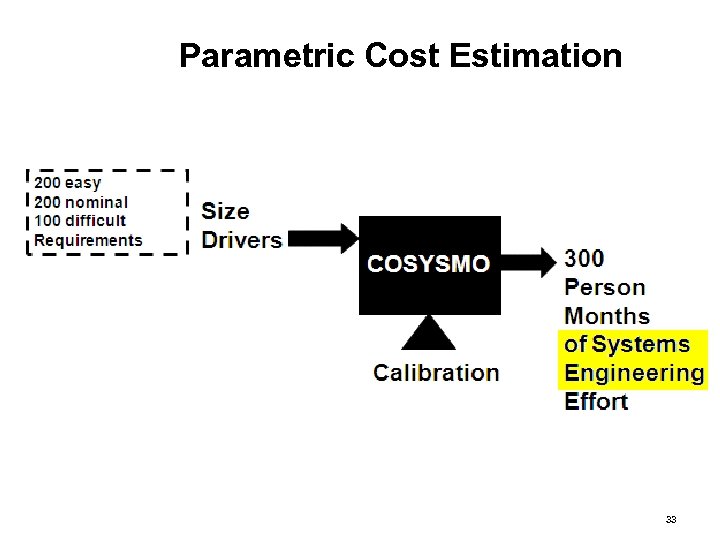

Parametric Cost Estimation

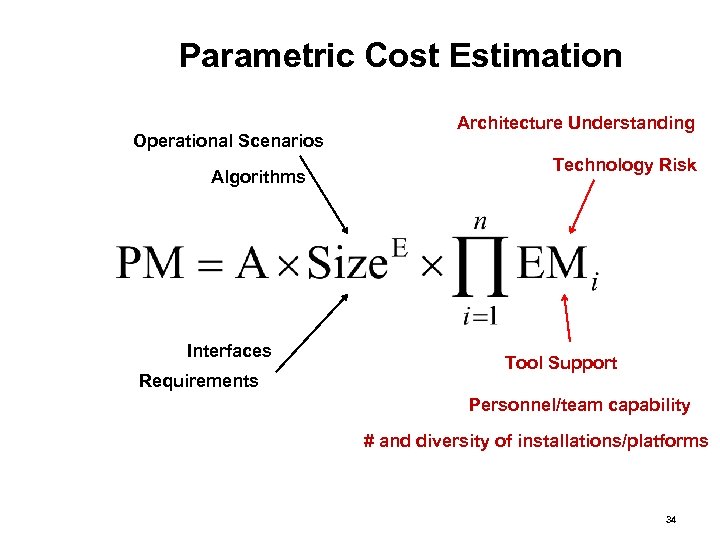

Parametric Cost Estimation
[Figure labels: Operational Scenarios, Algorithms, Interfaces, Requirements; Architecture Understanding, Technology Risk, Tool Support, Personnel/team capability, # and diversity of installations/platforms]

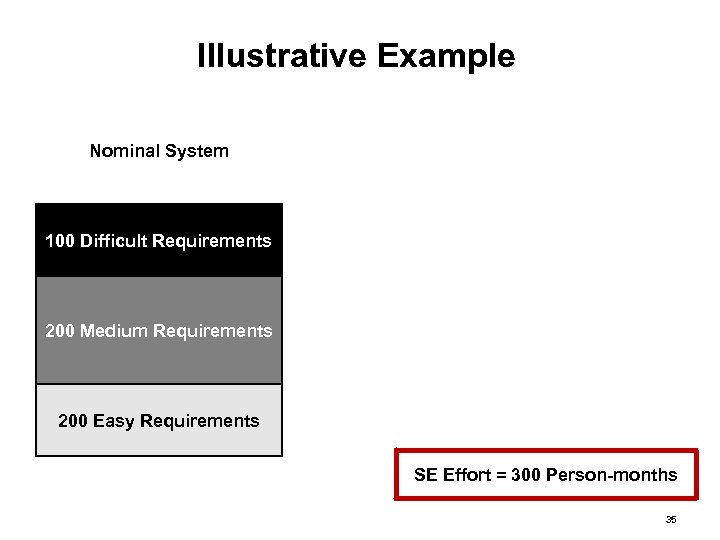

Illustrative Example
Nominal System
• 100 Difficult Requirements
• 200 Medium Requirements
• 200 Easy Requirements
SE Effort = 300 Person-months

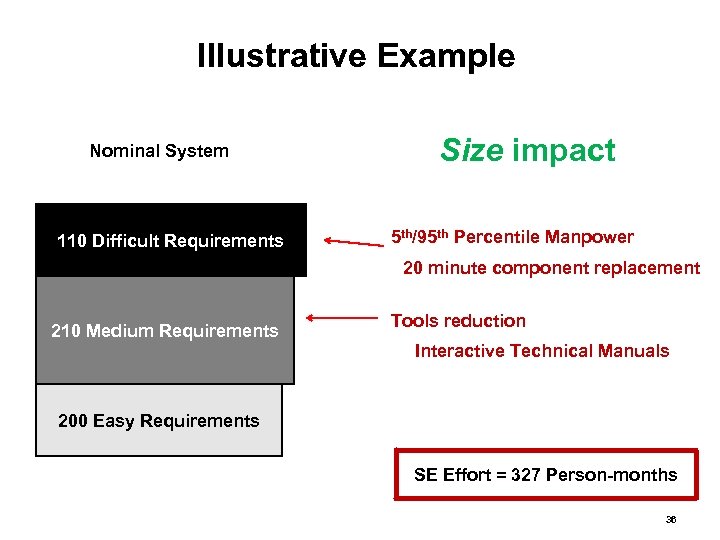

Illustrative Example
Nominal System
• 110 Difficult Requirements
• 210 Medium Requirements
• 200 Easy Requirements
Size impact: 5th/95th Percentile Manpower; 20 minute component replacement; Tools reduction; Interactive Technical Manuals
SE Effort = 327 Person-months

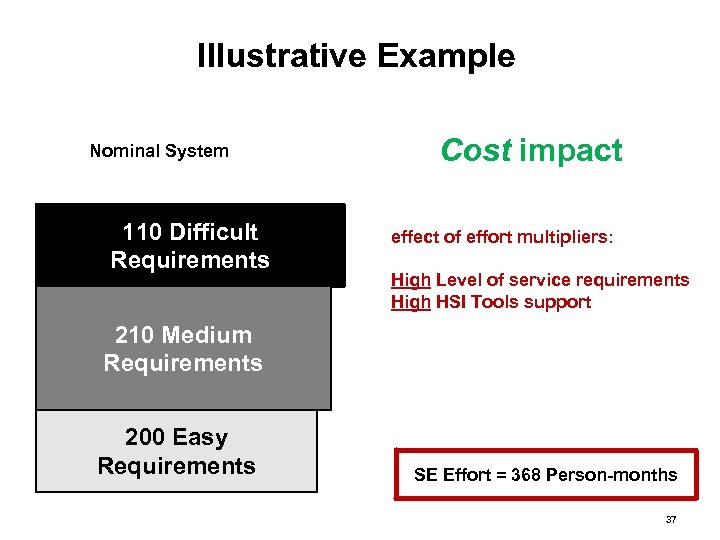

Illustrative Example
Nominal System
• 110 Difficult Requirements
• 210 Medium Requirements
• 200 Easy Requirements
Cost impact (effect of effort multipliers):
• High Level of service requirements
• High HSI Tools support
SE Effort = 368 Person-months

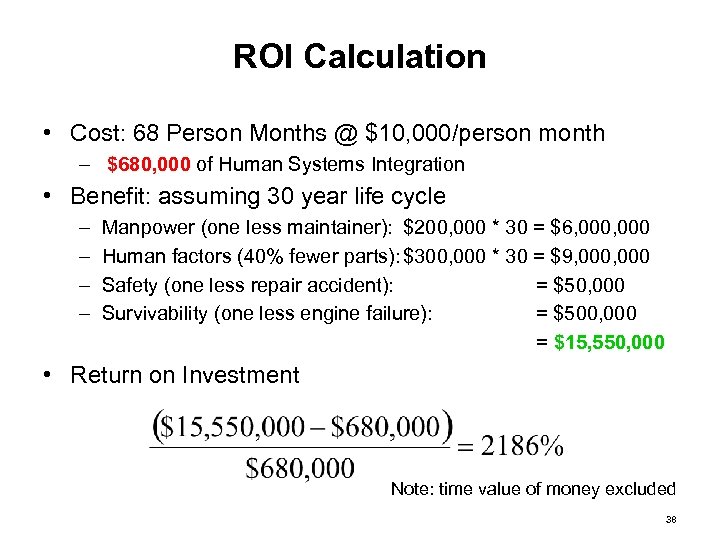

ROI Calculation
• Cost: 68 Person Months @ $10,000/person month
  – $680,000 of Human Systems Integration
• Benefit: assuming 30 year life cycle
  – Manpower (one less maintainer): $200,000 * 30 = $6,000,000
  – Human factors (40% fewer parts): $300,000 * 30 = $9,000,000
  – Safety (one less repair accident): = $50,000
  – Survivability (one less engine failure): = $500,000
  – Total = $15,550,000
• Return on Investment
Note: time value of money excluded

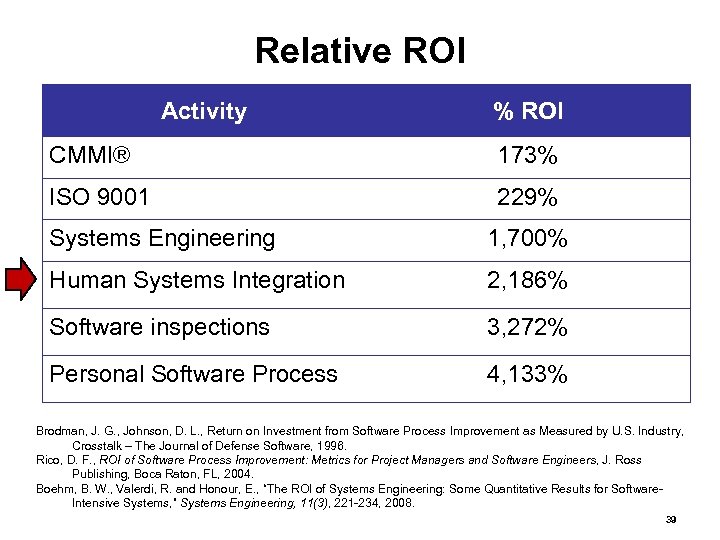
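
The arithmetic on this slide can be replayed in a few lines. The cost and benefit figures are taken from the slide; the ROI formula used here, (benefit − cost) / cost, is an assumption, though it is consistent with the 2,186% quoted for Human Systems Integration on the Relative ROI slide.

```python
COST_PER_PERSON_MONTH = 10_000
LIFE_CYCLE_YEARS = 30

# 68 additional person-months of Human Systems Integration effort.
hsi_cost = 68 * COST_PER_PERSON_MONTH

benefits = {
    "manpower (one less maintainer)": 200_000 * LIFE_CYCLE_YEARS,
    "human factors (40% fewer parts)": 300_000 * LIFE_CYCLE_YEARS,
    "safety (one less repair accident)": 50_000,
    "survivability (one less engine failure)": 500_000,
}
total_benefit = sum(benefits.values())


def roi(benefit: float, cost: float) -> float:
    """Net return per dollar invested (assumed formula; no discounting)."""
    return (benefit - cost) / cost


hsi_roi = roi(total_benefit, hsi_cost)
print(f"cost = ${hsi_cost:,}, benefit = ${total_benefit:,}, ROI = {hsi_roi:.1%}")
```

As the slide notes, the time value of money is excluded; discounting 30 years of benefits would lower the figure.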

Relative ROI

| Activity | % ROI |
|---|---|
| CMMI® | 173% |
| ISO 9001 | 229% |
| Systems Engineering | 1,700% |
| Human Systems Integration | 2,186% |
| Software inspections | 3,272% |
| Personal Software Process | 4,133% |

Brodman, J. G., Johnson, D. L., Return on Investment from Software Process Improvement as Measured by U.S. Industry, Crosstalk – The Journal of Defense Software, 1996.
Rico, D. F., ROI of Software Process Improvement: Metrics for Project Managers and Software Engineers, J. Ross Publishing, Boca Raton, FL, 2004.
Boehm, B. W., Valerdi, R. and Honour, E., “The ROI of Systems Engineering: Some Quantitative Results for Software-Intensive Systems,” Systems Engineering, 11(3), 221-234, 2008.

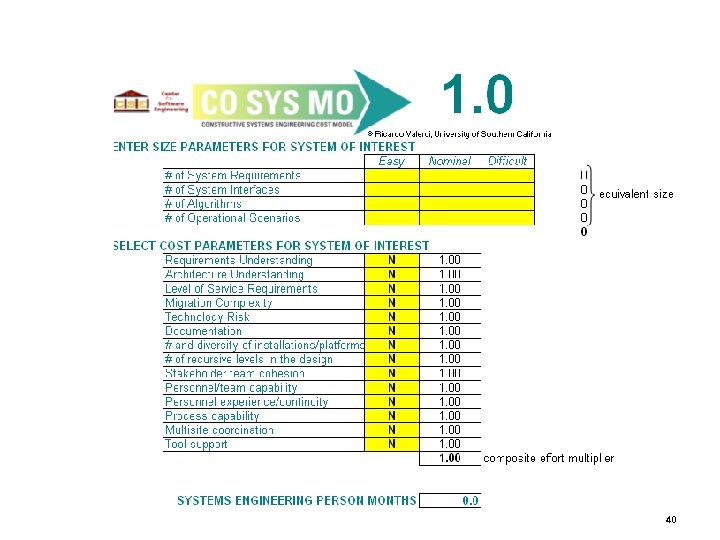


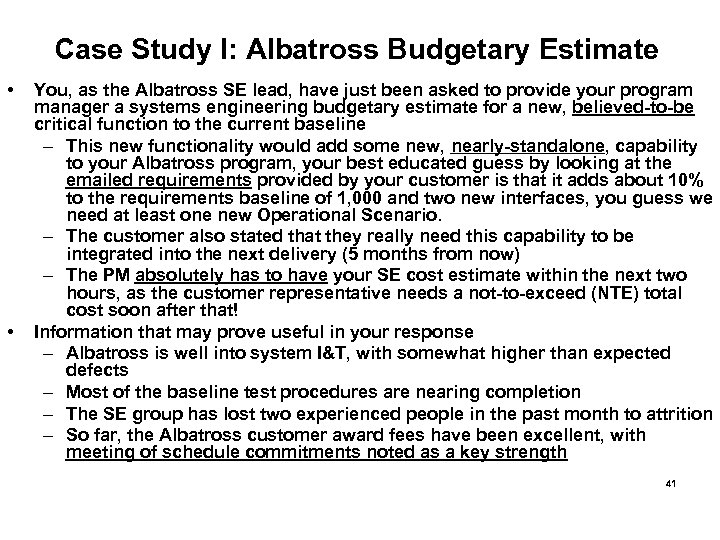

Case Study I: Albatross Budgetary Estimate
• You, as the Albatross SE lead, have just been asked to provide your program manager a systems engineering budgetary estimate for adding a new, believed-to-be critical function to the current baseline
  – This new functionality would add a new, nearly standalone capability to your Albatross program. Your best educated guess, from the emailed requirements provided by your customer, is that it adds about 10% to the requirements baseline of 1,000, plus two new interfaces. You also guess that at least one new Operational Scenario is needed.
  – The customer also stated that they really need this capability to be integrated into the next delivery (5 months from now)
  – The PM absolutely has to have your SE cost estimate within the next two hours, as the customer representative needs a not-to-exceed (NTE) total cost soon after that!
• Information that may prove useful in your response
  – Albatross is well into system I&T, with somewhat higher than expected defects
  – Most of the baseline test procedures are nearing completion
  – The SE group has lost two experienced people in the past month to attrition
  – So far, the Albatross customer award fees have been excellent, with meeting of schedule commitments noted as a key strength

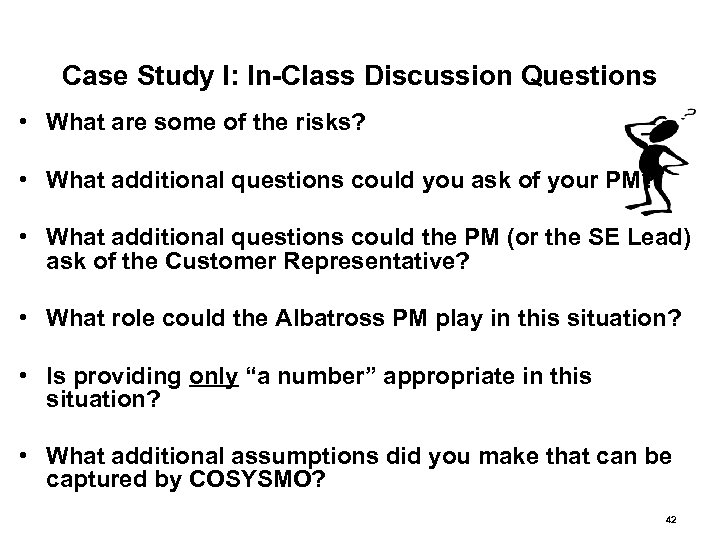

Case Study I: In-Class Discussion Questions • What are some of the risks? • What additional questions could you ask of your PM? • What additional questions could the PM (or the SE Lead) ask of the Customer Representative? • What role could the Albatross PM play in this situation? • Is providing only “a number” appropriate in this situation? • What additional assumptions did you make that can be captured by COSYSMO? 42

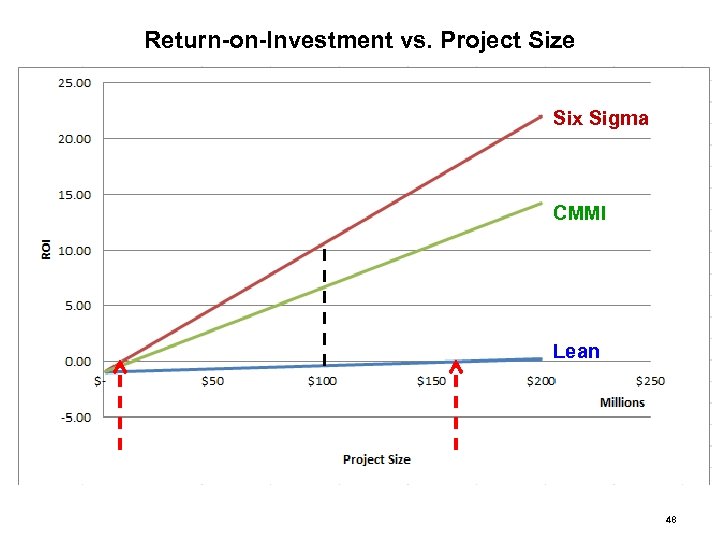

Case Study II: Selection of Process Improvement Initiative

• Your supervisor asks you to recommend which process improvement initiative should be pursued to help your project, a 5-year $100,000 systems engineering study. The options and their implementation costs are:
  – Lean: $1,000
  – Six Sigma: $600,000
  – CMMI: $500,000
• Which one would you choose?

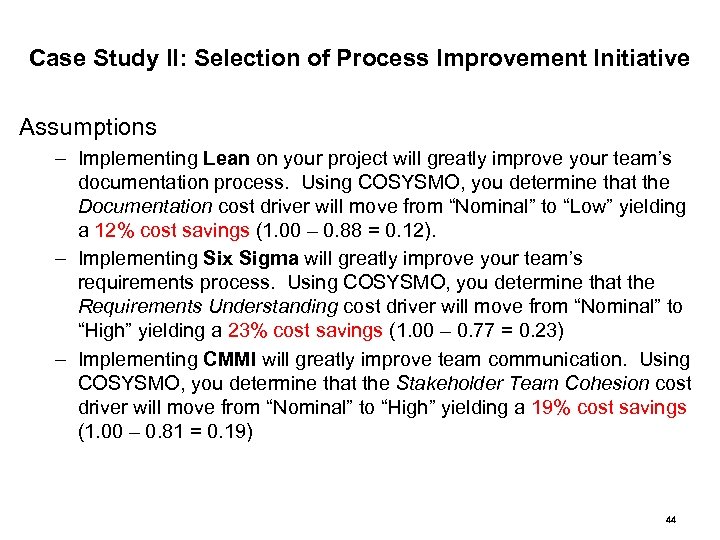

Case Study II: Selection of Process Improvement Initiative

Assumptions
– Implementing Lean on your project will greatly improve your team’s documentation process. Using COSYSMO, you determine that the Documentation cost driver will move from “Nominal” to “Low”, yielding a 12% cost savings (1.00 - 0.88 = 0.12).
– Implementing Six Sigma will greatly improve your team’s requirements process. Using COSYSMO, you determine that the Requirements Understanding cost driver will move from “Nominal” to “High”, yielding a 23% cost savings (1.00 - 0.77 = 0.23).
– Implementing CMMI will greatly improve team communication. Using COSYSMO, you determine that the Stakeholder Team Cohesion cost driver will move from “Nominal” to “High”, yielding a 19% cost savings (1.00 - 0.81 = 0.19).

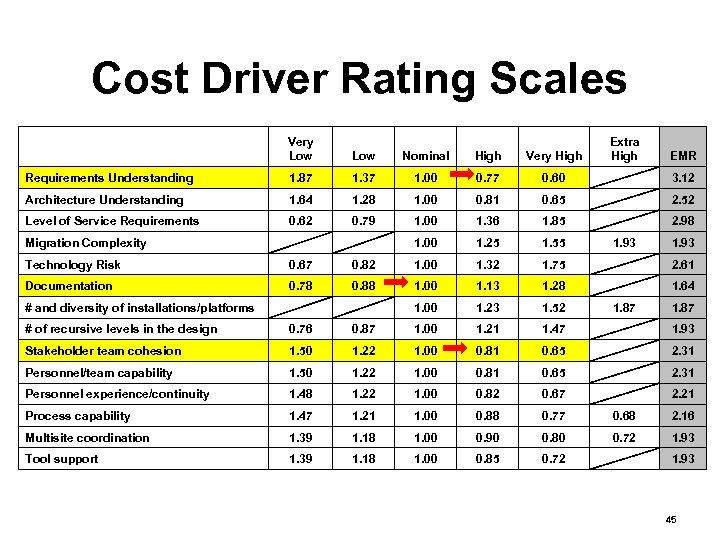

Cost Driver Rating Scales

| Cost driver | Very Low | Low | Nominal | High | Very High | Extra High | EMR |
|---|---|---|---|---|---|---|---|
| Requirements Understanding | 1.87 | 1.37 | 1.00 | 0.77 | 0.60 | | 3.12 |
| Architecture Understanding | 1.64 | 1.28 | 1.00 | 0.81 | 0.65 | | 2.52 |
| Level of Service Requirements | 0.62 | 0.79 | 1.00 | 1.36 | 1.85 | | 2.98 |
| Migration Complexity | | | 1.00 | 1.25 | 1.55 | 1.93 | |
| Technology Risk | 0.67 | 0.82 | 1.00 | 1.32 | 1.75 | | 2.61 |
| Documentation | 0.78 | 0.88 | 1.00 | 1.13 | 1.28 | | 1.64 |
| # and diversity of installations/platforms | | | 1.00 | 1.23 | 1.52 | 1.87 | |
| # of recursive levels in the design | 0.76 | 0.87 | 1.00 | 1.21 | 1.47 | | 1.93 |
| Stakeholder team cohesion | 1.50 | 1.22 | 1.00 | 0.81 | 0.65 | | 2.31 |
| Personnel/team capability | 1.50 | 1.22 | 1.00 | 0.81 | 0.65 | | 2.31 |
| Personnel experience/continuity | 1.48 | 1.22 | 1.00 | 0.82 | 0.67 | | 2.21 |
| Process capability | 1.47 | 1.21 | 1.00 | 0.88 | 0.77 | 0.68 | 2.16 |
| Multisite coordination | 1.39 | 1.18 | 1.00 | 0.90 | 0.80 | 0.72 | 1.93 |
| Tool support | 1.39 | 1.18 | 1.00 | 0.85 | 0.72 | | 1.93 |
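
The EMR column appears to be the ratio between the largest and the smallest multiplier in each row (EMR is commonly expanded as Effort Multiplier Ratio, which the slide itself does not spell out). A minimal Python sketch, using a few rows copied from the rating scales, checks that reading. The names `RATING_SCALES` and `effort_multiplier_ratio` are made up for this illustration.

```python
# Multipliers copied from the Cost Driver Rating Scales slide, ordered from
# the lowest rating level to the highest one each driver defines.
RATING_SCALES = {
    "Requirements Understanding": [1.87, 1.37, 1.00, 0.77, 0.60],
    "Level of Service Requirements": [0.62, 0.79, 1.00, 1.36, 1.85],
    "Documentation": [0.78, 0.88, 1.00, 1.13, 1.28],
    "Stakeholder team cohesion": [1.50, 1.22, 1.00, 0.81, 0.65],
    "Process capability": [1.47, 1.21, 1.00, 0.88, 0.77, 0.68],
}


def effort_multiplier_ratio(multipliers):
    """Assumed definition: largest multiplier divided by the smallest."""
    return max(multipliers) / min(multipliers)


for driver, multipliers in RATING_SCALES.items():
    print(f"{driver}: EMR = {effort_multiplier_ratio(multipliers):.2f}")
```

Under this reading, a larger EMR means the driver can swing the estimate further between its best and worst rating, which fits the take-away that requirements understanding is among the most influential drivers.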

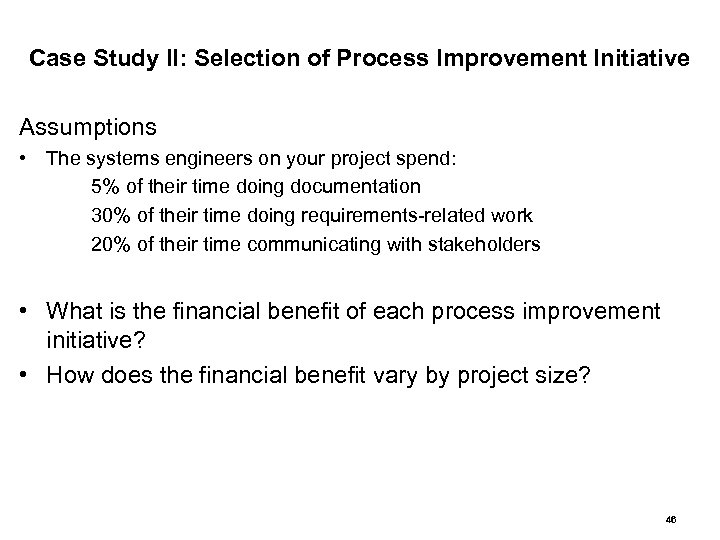

Case Study II: Selection of Process Improvement Initiative

Assumptions
• The systems engineers on your project spend:
  – 5% of their time doing documentation
  – 30% of their time doing requirements-related work
  – 20% of their time communicating with stakeholders
• What is the financial benefit of each process improvement initiative?
• How does the financial benefit vary by project size?
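
One way to approach the two questions is sketched below in Python. It assumes, and this is an interpretation rather than something the slides state, that each initiative's saving applies only to the share of SE time spent on the affected activity. The time shares and multipliers come from the case study; the names `INITIATIVES`, `benefit_fraction`, and `benefit` are hypothetical.

```python
# Inputs copied from the Case Study II assumption slides.
# time_share: fraction of SE time spent on the affected activity.
# multiplier: COSYSMO cost-driver multiplier after the improvement
#             (the Nominal multiplier is 1.00).
INITIATIVES = {
    "Lean": {"time_share": 0.05, "multiplier": 0.88},
    "Six Sigma": {"time_share": 0.30, "multiplier": 0.77},
    "CMMI": {"time_share": 0.20, "multiplier": 0.81},
}


def benefit_fraction(time_share, multiplier):
    """Share of total SE cost saved, ASSUMING the saving applies only to
    the time spent on the affected activity."""
    return time_share * (1.00 - multiplier)


def benefit(project_cost, time_share, multiplier):
    """Financial benefit, in the same currency unit as project_cost."""
    return project_cost * benefit_fraction(time_share, multiplier)


for name, params in INITIATIVES.items():
    print(f"{name}: saves {benefit_fraction(**params):.1%} of project cost")
```

Under this assumption the saved share is roughly 0.6% for Lean, 6.9% for Six Sigma, and 3.8% for CMMI, and the financial benefit grows linearly with project size.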

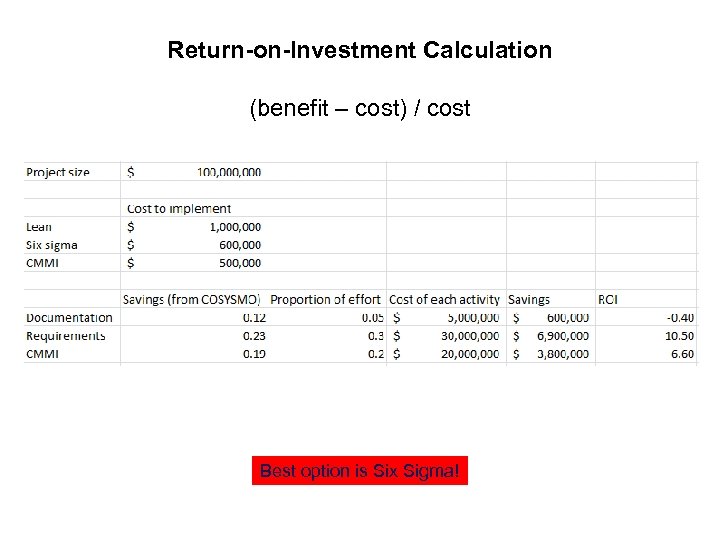

Return-on-Investment Calculation

ROI = (benefit - cost) / cost

Best option is Six Sigma!
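
Written out with symbols, the calculation could look as follows. The symbols are introduced here for illustration and do not appear on the slide: $P$ is the total project cost, $t$ the share of SE time spent on the affected activity, $m$ the cost-driver multiplier after the improvement, and $C$ the implementation cost. The benefit model $B = P\,t\,(1 - m)$ is an assumption about how the case study inputs combine.

$$
B = P \, t \, (1 - m), \qquad
\mathrm{ROI} = \frac{B - C}{C} = \frac{P \, t \, (1 - m)}{C} - 1
$$

For a fixed project cost, this favors the initiative with the largest ratio $t\,(1 - m)/C$.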

Return-on-Investment vs. Project Size

[Chart: ROI versus project size, with one curve each for Six Sigma, CMMI, and Lean]
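
The dependence on project size can be explored with a short Python sketch. It assumes the benefit equals project cost times the time share of the affected activity times the saving implied by the multiplier, which is an interpretation of the case study inputs. The function names and the project sizes in the loop are hypothetical; the Six Sigma inputs are the ones given in the case study.

```python
def roi(project_cost, time_share, multiplier, implementation_cost):
    """ROI = (benefit - cost) / cost, with the ASSUMED benefit model
    benefit = project_cost * time_share * (1 - multiplier)."""
    benefit = project_cost * time_share * (1.0 - multiplier)
    return (benefit - implementation_cost) / implementation_cost


def break_even_project_cost(time_share, multiplier, implementation_cost):
    """Project cost at which benefit equals implementation cost (ROI = 0)."""
    return implementation_cost / (time_share * (1.0 - multiplier))


# Six Sigma inputs from the case study: 30% of SE time on requirements work,
# Requirements Understanding multiplier 0.77, implementation cost $600,000.
SIX_SIGMA = dict(time_share=0.30, multiplier=0.77, implementation_cost=600_000)

# Hypothetical project sizes, chosen only to show the trend.
for project_cost in (5_000_000, 10_000_000, 20_000_000):
    print(f"${project_cost:>12,}: ROI = {roi(project_cost, **SIX_SIGMA):+.2f}")

print(f"Break-even size: ${break_even_project_cost(**SIX_SIGMA):,.0f}")
```

Because the benefit scales with project size while the implementation cost is fixed, ROI under this model rises linearly with project size and turns positive only above the break-even size.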

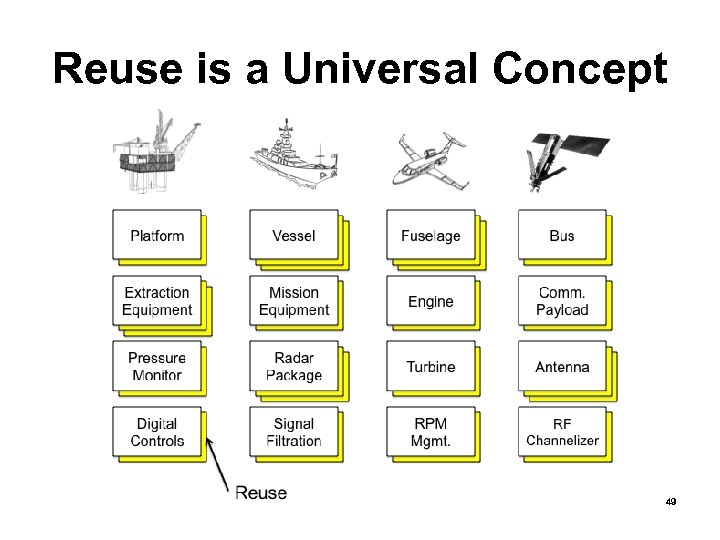

Reuse is a Universal Concept

Reusable Artifacts

• System Requirements
• System Architectures
• System Description/Design Documents
• Interface Specifications for Legacy Systems
• Configuration management plan
• Systems engineering management plan
• Well-Established Test Procedures
• User Guides and Operation Manuals
• Etc.

Reuse Principles

1. The economic benefits of reuse can be described either in terms of improvement (quality, risk identification) or reduction (defects, cost/effort, time to market).
2. Reuse is not free; upfront investment is required (i.e., there is a cost associated with design for reusability).
3. Reuse needs to be planned from the conceptualization phase of a program.

Reuse Principles (cont.)

4. Products, processes, and knowledge are all reusable artifacts.
5. Reuse is as much of a technical issue as it is an organizational one.
6. Reuse is knowledge that must be deliberately captured in order to be beneficial.

Reuse Principles (cont.)

7. The relationship between project size/complexity and amount of reuse is non-linear.
8. The benefits of reuse are limited to closely related domains.
9. Reuse is more successful when level of service requirements are equivalent across applications.

Reuse Principles (cont.)

10. Higher reuse opportunities exist when there is a match between the diversity and volatility of a product line and its associated supply chain.
11. Bottom-up (individual elements where make or buy decisions are made) and top-down (where product line reuse is made) reuse require fundamentally different strategies.
12. Reuse applicability is often time dependent; rapidly evolving domains offer fewer reuse opportunities than static domains.

Reuse Success Factors

• Platform
  – Appropriate product or technology, primed for reuse
• People
  – Adequate knowledge and understanding of both the heritage and new products
• Processes
  – Sufficient documentation to acquire and capture knowledge applicable to reuse, as well as the capability to actually deliver a system incorporating or enabling reuse

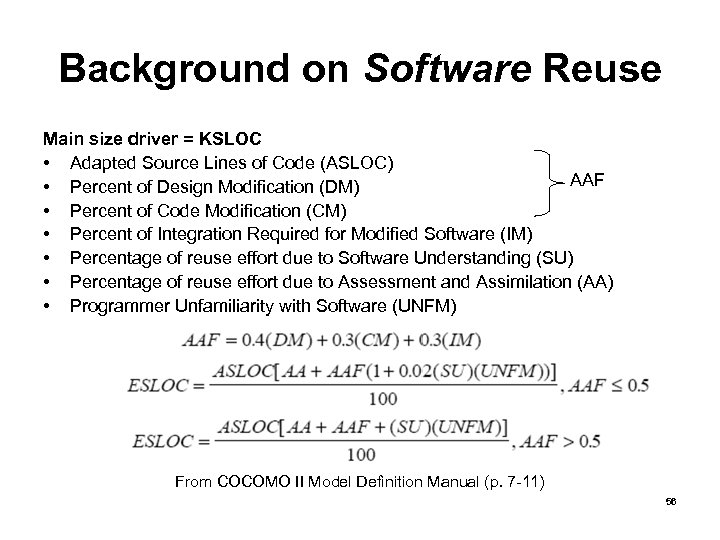

Background on Software Reuse

Main size driver = KSLOC
• Adapted Source Lines of Code (ASLOC)
• AAF, computed from:
  – Percent of Design Modification (DM)
  – Percent of Code Modification (CM)
  – Percent of Integration Required for Modified Software (IM)
• Percentage of reuse effort due to Software Understanding (SU)
• Percentage of reuse effort due to Assessment and Assimilation (AA)
• Programmer Unfamiliarity with Software (UNFM)

From COCOMO II Model Definition Manual (p. 7-11)
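
These parameters combine in the COCOMO II reuse model roughly as sketched below in Python. The coefficients are quoted from memory of the COCOMO II Model Definition Manual and should be checked against it. The function names are made up for this illustration, and the manual's adjustment for automatically translated code is left out for brevity.

```python
def adaptation_adjustment_factor(dm, cm, im):
    """AAF from percent design, code, and integration modification (0-100)."""
    return 0.4 * dm + 0.3 * cm + 0.3 * im


def adaptation_adjustment_modifier(dm, cm, im, su, aa, unfm):
    """AAM: fraction of new-code effort needed for the adapted code.

    su:   Software Understanding increment, in percent
    aa:   Assessment and Assimilation increment, in percent
    unfm: Programmer Unfamiliarity, from 0.0 (familiar) to 1.0 (unfamiliar)
    """
    aaf = adaptation_adjustment_factor(dm, cm, im)
    if aaf <= 50:
        return (aa + aaf * (1 + 0.02 * su * unfm)) / 100
    return (aa + aaf + su * unfm) / 100


def equivalent_ksloc(adapted_ksloc, dm, cm, im, su, aa, unfm):
    """Adapted KSLOC expressed as an equivalent amount of new KSLOC."""
    return adapted_ksloc * adaptation_adjustment_modifier(dm, cm, im, su, aa, unfm)


# Hypothetical inputs, invented for illustration only.
print(equivalent_ksloc(10.0, dm=10, cm=20, im=30, su=30, aa=4, unfm=0.4))
```

The two branches agree at AAF = 50, so the modifier is continuous across the threshold.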

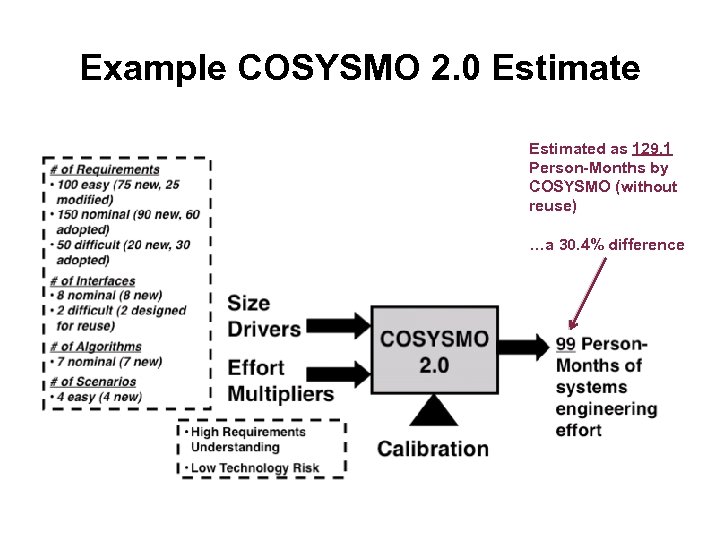

Example COSYSMO 2.0

Estimated as 129.1 Person-Months by COSYSMO (without reuse)
…a 30.4% difference

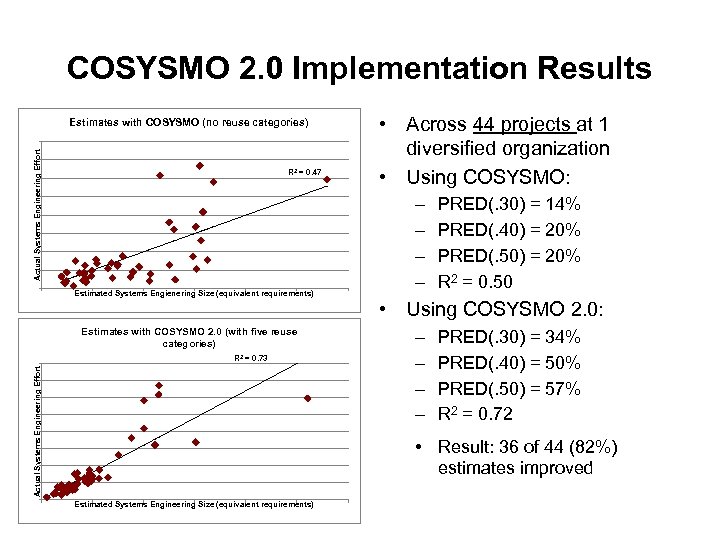

COSYSMO 2.0 Implementation Results

• Across 44 projects at 1 diversified organization
• Using COSYSMO:
  – PRED(.30) = 14%
  – PRED(.40) = 20%
  – PRED(.50) = 20%
  – R² = 0.50
• Using COSYSMO 2.0:
  – PRED(.30) = 34%
  – PRED(.40) = 50%
  – PRED(.50) = 57%
  – R² = 0.72
• Result: 36 of 44 (82%) estimates improved

[Charts: Actual Systems Engineering Effort versus Estimated Systems Engineering Size (equivalent requirements). Estimates with COSYSMO (no reuse categories): R² = 0.47. Estimates with COSYSMO 2.0 (with five reuse categories): R² = 0.73.]

September 10, 2009
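
PRED(L) is conventionally the fraction of estimates that fall within a relative error of L of the actual value. A minimal Python sketch of that metric follows; the function name `pred` and the sample data are hypothetical and are not taken from the 44-project data set.

```python
def pred(level, estimates, actuals):
    """PRED(level): fraction of estimates whose relative error
    |estimate - actual| / actual is at most `level`."""
    if len(estimates) != len(actuals) or not actuals:
        raise ValueError("need two non-empty sequences of equal length")
    within = sum(
        1
        for est, act in zip(estimates, actuals)
        if abs(est - act) / act <= level
    )
    return within / len(actuals)


# Hypothetical effort data in person-months, invented for illustration only.
actuals = [100.0, 200.0, 50.0, 400.0]
estimates = [120.0, 150.0, 90.0, 390.0]

for level in (0.10, 0.30, 0.50):
    print(f"PRED({level:.2f}) = {pred(level, estimates, actuals):.0%}")
```

Read this way, PRED(.30) = 34% means about a third of the estimates landed within 30% of the actual effort.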

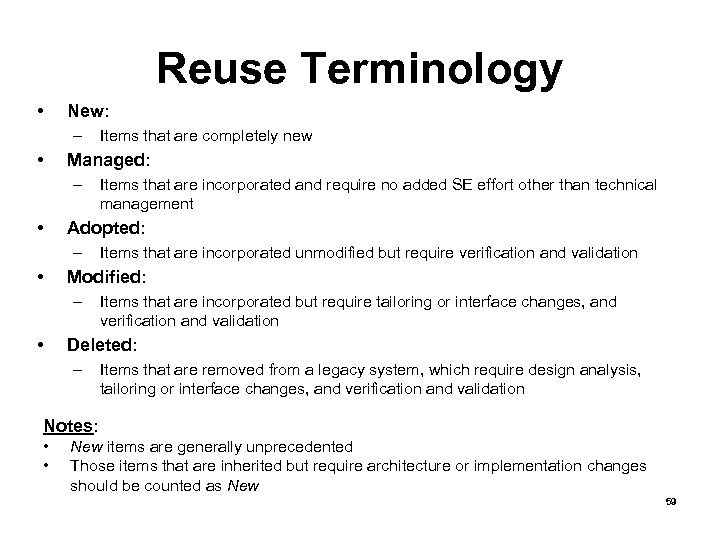

Reuse Terminology

• New: Items that are completely new
• Managed: Items that are incorporated and require no added SE effort other than technical management
• Adopted: Items that are incorporated unmodified but require verification and validation
• Modified: Items that are incorporated but require tailoring or interface changes, and verification and validation
• Deleted: Items that are removed from a legacy system, which require design analysis, tailoring or interface changes, and verification and validation

Notes:
• New items are generally unprecedented
• Those items that are inherited but require architecture or implementation changes should be counted as New

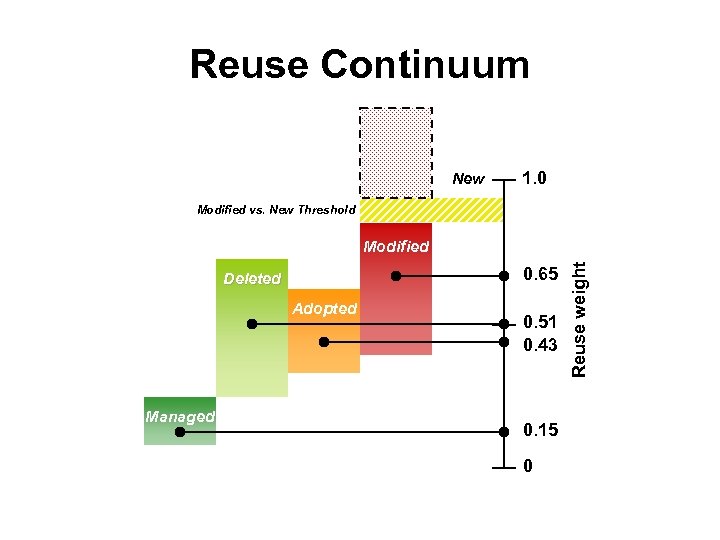

Reuse Continuum

[Figure: reuse weight axis running from 1.0 down to 0, with a “Modified vs. New Threshold” marker]

• New: 1.0
• Modified: 0.65
• Deleted: 0.51
• Adopted: 0.43
• Managed: 0.15
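
A rough Python sketch of how such weights could discount a size count is shown below. It assumes each item's contribution is simply scaled by the weight of its reuse category and leaves out any difficulty weighting of the size drivers. The names `REUSE_WEIGHTS` and `reuse_adjusted_size` and the sample breakdown are hypothetical.

```python
# Reuse weights as read from the Reuse Continuum slide.
REUSE_WEIGHTS = {
    "new": 1.0,
    "modified": 0.65,
    "deleted": 0.51,
    "adopted": 0.43,
    "managed": 0.15,
}


def reuse_adjusted_size(counts):
    """Weighted count of size-driver items by reuse category.

    `counts` maps a category name to the number of items in that category.
    """
    unknown = set(counts) - set(REUSE_WEIGHTS)
    if unknown:
        raise KeyError(f"unknown reuse categories: {sorted(unknown)}")
    return sum(REUSE_WEIGHTS[category] * n for category, n in counts.items())


# Hypothetical breakdown of 100 requirements, invented for illustration.
example = {"new": 40, "modified": 20, "adopted": 30, "managed": 10}
print(f"{reuse_adjusted_size(example):.1f} equivalent requirements")
```

With this breakdown, 100 raw requirements count as about 67 equivalent requirements, which is the kind of size reduction a reuse-aware estimate would reflect.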

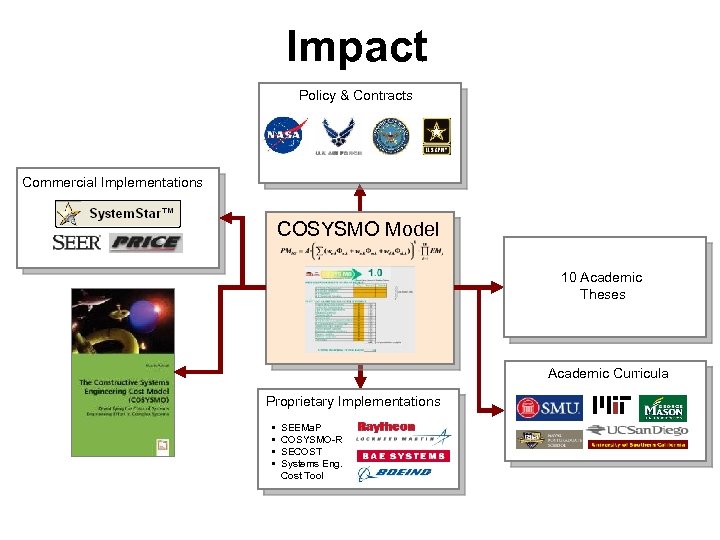

Impact

[Diagram: the COSYSMO Model and the areas it has influenced]

• Policy & Contracts
• Commercial Implementations
• 10 Academic Theses
• Academic Curricula
• Proprietary Implementations
  – SEEMaP
  – COSYSMO-R
  – SECOST
  – Systems Eng. Cost Tool

Take-Aways

1. Cost ≈ f(Effort) ≈ f(Size) ≈ f(Complexity)
2. Requirements understanding and “ilities” are the most influential on cost
3. Early systems engineering yields high ROI when done early and well

Two case studies:
• SE estimate with limited information
• Selection of process improvement initiative
