- Number of slides: 63

Document Preprocessing and Indexing
SI 650: Information Retrieval, Winter 2010
School of Information, University of Michigan
2010 © University of Michigan


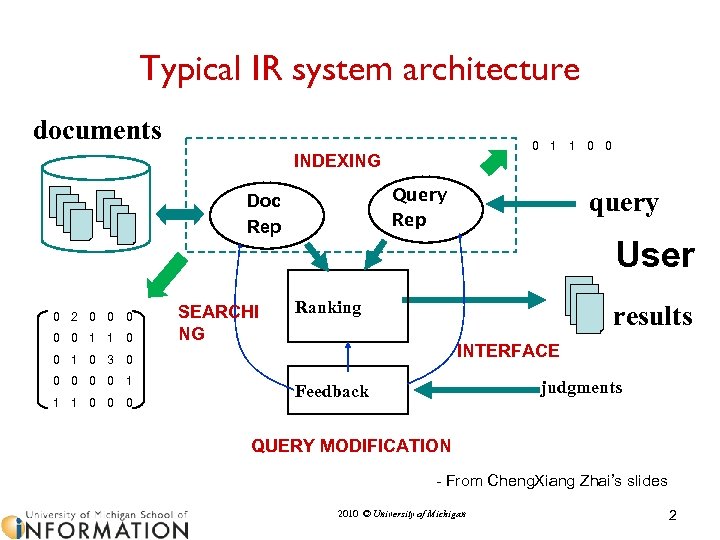

Typical IR system architecture (from ChengXiang Zhai's slides)
[figure: documents go through INDEXING into a document representation (Doc Rep); the user's query goes through the INTERFACE into a query representation (Query Rep); SEARCHING matches the two and returns ranking results; user judgments provide feedback for QUERY MODIFICATION]


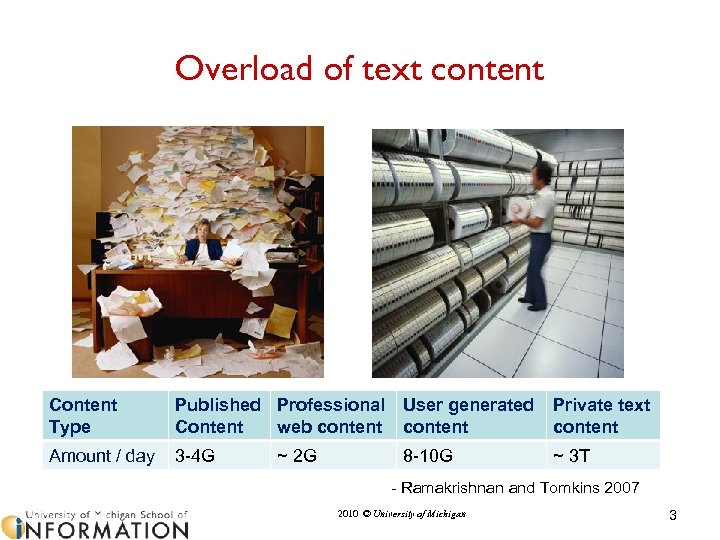

Overload of text content (Ramakrishnan and Tomkins 2007)
| Content type | Amount / day |
|---|---|
| Published content | 3-4 G |
| Professional web content | ~2 G |
| User-generated content | 8-10 G |
| Private text content | ~3 T |


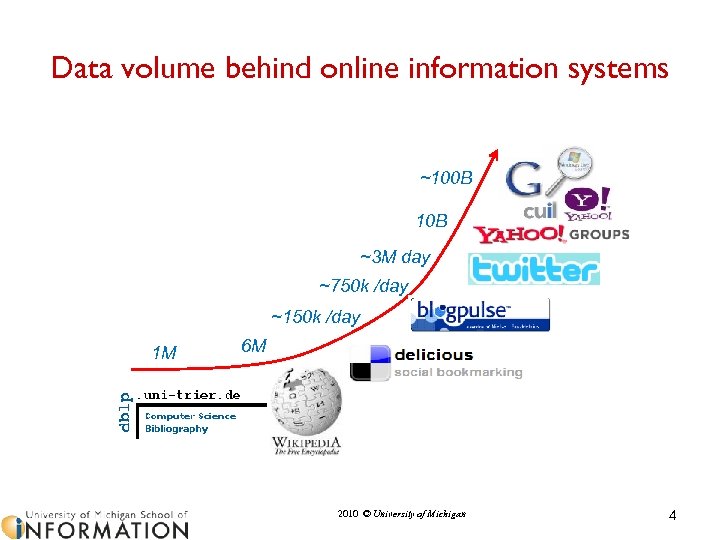

Data volume behind online information systems
[figure: data volumes of several online services; values shown: ~100 B, 10 B, ~3 M/day, ~750 k/day, ~150 k/day, 1 M, 6 M]


IR Winter 2010 … Automated indexing/labeling. Storing, indexing and searching text. Inverted indexes. …


Sec. 1.1 Handling large collections
• Life is good when every document is mapped into a vector of words, but …
• Consider N = 1 million documents, each with about 1000 words.
• At an average of 6 bytes/word (including spaces and punctuation), that is 6 GB of data in the documents.
• Say there are M = 500 K distinct terms among these.


Sec. 1.1 Storage issue
• A 500 K × 1 M term-document matrix has half a trillion elements.
  – At 4 bytes per integer: 500 K × 1 M × 4 B = 2 TB (your laptop would fail)
  – For 100 G documents: 500 K × 100 G × 4 B = $2 \times 10^5$ TB (challenging even for Google)
• But it has no more than one billion non-zero entries (1 M documents × at most 1000 words each).
  – The matrix is extremely sparse.
  – 1000 × 1 M × 4 B = 4 GB
• What's a better representation?

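The storage arithmetic above can be checked in a few lines. This is a sketch assuming decimal units (1 GB = 10^9 bytes, 1 TB = 10^12 bytes) and 4-byte integers; like the slide, the sparse estimate counts only the stored values and ignores the cost of the term and document identifiers. The function names are hypothetical.

```python
def dense_matrix_bytes(num_terms: int, num_docs: int, bytes_per_cell: int = 4) -> int:
    """Bytes for a full term-document matrix with one integer per cell."""
    return num_terms * num_docs * bytes_per_cell


def sparse_entry_bytes(num_docs: int, terms_per_doc: int, bytes_per_entry: int = 4) -> int:
    """Bytes for storing only the non-zero cells (at most terms_per_doc per document)."""
    return num_docs * terms_per_doc * bytes_per_entry


TERA = 10**12
GIGA = 10**9
print(dense_matrix_bytes(500_000, 1_000_000) / TERA)    # 2.0 (TB)
print(dense_matrix_bytes(500_000, 100 * GIGA) / TERA)   # 200000.0 (TB)
print(sparse_entry_bytes(1_000_000, 1000) / GIGA)       # 4.0 (GB)
```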

Indexing
• Indexing = converting documents into data structures that enable fast search
• The inverted index is the dominant indexing method (used by all search engines)
• Other indexes (e.g., a document index) may be needed for feedback


Inverted index
• Instead of an incidence vector, use a posting table:
  – CLEVELAND: D1, D2, D6
  – OHIO: D1, D5, D6, D7
• Use linked lists so that new document postings can be inserted in order and existing postings can be removed.
• More efficient than scanning the documents (why?)

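A minimal sketch of building such a posting table. The five toy documents are hypothetical, chosen only so that the CLEVELAND and OHIO postings come out as listed above; a Python list stands in for the linked list, since postings are appended in docID order.

```python
from collections import defaultdict


def build_inverted_index(docs: dict[int, str]) -> dict[str, list[int]]:
    """Map each (upper-cased) term to the sorted list of docIDs containing it."""
    index: dict[str, list[int]] = defaultdict(list)
    for doc_id in sorted(docs):                          # visit documents in docID order
        for term in set(docs[doc_id].upper().split()):   # record each term once per document
            index[term].append(doc_id)                   # appending keeps each list sorted
    return dict(index)


# Hypothetical toy collection (docID -> text)
docs = {1: "Cleveland Ohio", 2: "Cleveland", 5: "Ohio", 6: "Ohio Cleveland", 7: "Ohio"}
index = build_inverted_index(docs)
print(index["CLEVELAND"])  # [1, 2, 6]
print(index["OHIO"])       # [1, 5, 6, 7]
```

A query only touches the posting lists of its own terms, instead of reading every document.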

Inverted index
• Fast access to all documents containing a given term (along with frequency and position information)
• For each term, we get a list of tuples (docID, freq, pos).
• Given a query, we fetch the lists for all query terms and work only on the documents involved.
  – Boolean query: set operations
  – Natural language query: summing term weights
• Keep everything sorted! Sorted lists allow binary search and linear-time merging; for example, finding a term among M sorted dictionary entries takes O(log M) comparisons instead of O(M).

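A sketch of both points under simple assumptions: the vocabulary is a sorted Python list searched with `bisect` (binary search), and a natural language query is scored by summing raw term frequencies per document. The postings are hypothetical, and raw tf is only a stand-in for a real term weight.

```python
from bisect import bisect_left
from collections import defaultdict

# Hypothetical index: sorted vocabulary and postings of (docID, freq, positions)
VOCAB = ["document", "is", "sample", "this"]
POSTINGS = {
    "document": [(1, 1, [5]), (2, 1, [5])],
    "is": [(1, 1, [2]), (2, 1, [2])],
    "sample": [(1, 2, [4, 8]), (2, 1, [4])],
    "this": [(1, 1, [1]), (2, 1, [1])],
}


def lookup(term: str) -> list[tuple[int, int, list[int]]]:
    """Binary-search the sorted vocabulary; return the term's postings, or []."""
    i = bisect_left(VOCAB, term)
    if i < len(VOCAB) and VOCAB[i] == term:
        return POSTINGS[term]
    return []


def score(query_terms: list[str]) -> list[tuple[int, int]]:
    """Sum term frequencies per document; return (docID, score) pairs, best first."""
    acc: dict[int, int] = defaultdict(int)
    for term in query_terms:
        for doc_id, freq, _positions in lookup(term):
            acc[doc_id] += freq
    return sorted(acc.items(), key=lambda item: (-item[1], item[0]))


print(score(["sample", "document"]))  # [(1, 3), (2, 2)]
```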

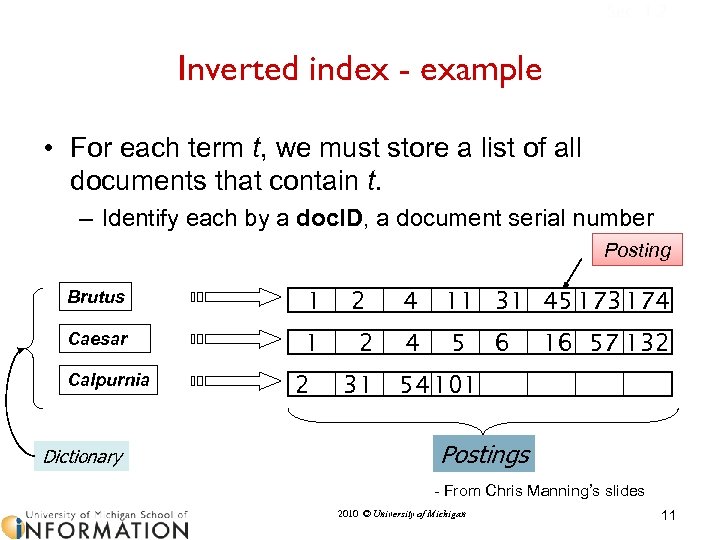

Sec. 1.2 Inverted index: example (from Chris Manning's slides)
• For each term t, we must store a list of all documents that contain t.
  – Identify each by a docID, a document serial number.
• Dictionary → postings (each docID in a list is one posting):
  – Brutus → 1, 2, 4, 11, 31, 45, 173, 174
  – Caesar → 1, 2, 4, 5, 6, 16, 57, 132
  – Calpurnia → 2, 31, 54, 101


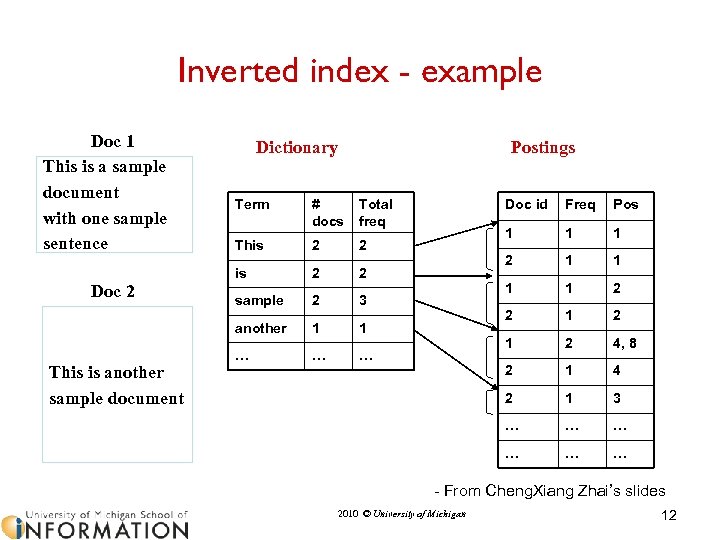

Inverted index: example (from ChengXiang Zhai's slides)
• Doc 1: "This is a sample document with one sample sentence"
• Doc 2: "This is another sample document"

| Term | # docs | Total freq | Postings (docID, freq, positions) |
|---|---|---|---|
| This | 2 | 2 | (1, 1, 1), (2, 1, 1) |
| is | 2 | 2 | (1, 1, 2), (2, 1, 2) |
| sample | 2 | 3 | (1, 2, {4, 8}), (2, 1, 4) |
| another | 1 | 1 | (2, 1, 3) |
| … | … | … | … |

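The table can be reproduced with a short sketch that builds both the dictionary (# docs, total freq) and the positional postings. It assumes plain whitespace tokenization with no case folding or stemming, and 1-based positions as in the example; the function name is hypothetical.

```python
from collections import defaultdict


def build_positional_index(docs: dict[int, str]):
    """Return (dictionary, postings) for whitespace-tokenized documents.

    dictionary: term -> (# docs, total freq)
    postings:   term -> list of (docID, freq, positions), positions 1-based
    """
    postings: dict[str, list] = defaultdict(list)
    for doc_id in sorted(docs):
        positions: dict[str, list[int]] = defaultdict(list)
        for pos, term in enumerate(docs[doc_id].split(), start=1):
            positions[term].append(pos)
        for term, pos_list in positions.items():
            postings[term].append((doc_id, len(pos_list), pos_list))
    dictionary = {t: (len(p), sum(freq for _, freq, _ in p)) for t, p in postings.items()}
    return dictionary, dict(postings)


docs = {
    1: "This is a sample document with one sample sentence",
    2: "This is another sample document",
}
dictionary, postings = build_positional_index(docs)
print(dictionary["sample"], postings["sample"])  # (2, 3) [(1, 2, [4, 8]), (2, 1, [4])]
```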

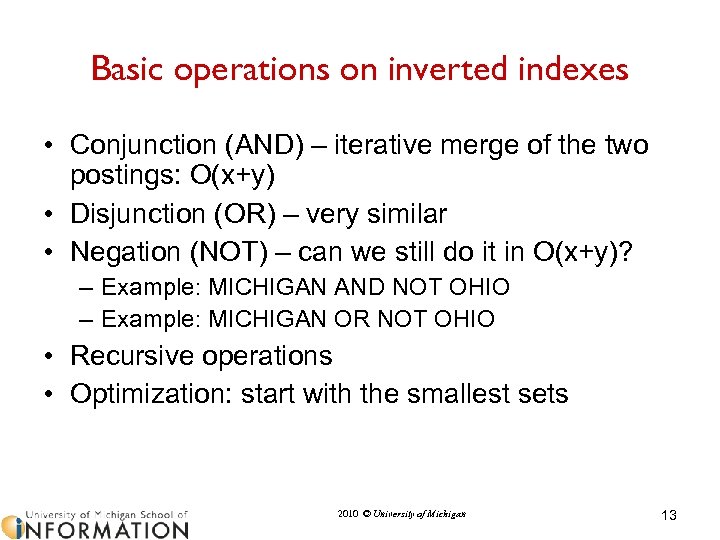

Basic operations on inverted indexes
• Conjunction (AND): iterative merge of the two postings lists, O(x+y) for lists of lengths x and y
• Disjunction (OR): very similar
• Negation (NOT): can we still do it in O(x+y)?
  – Example: MICHIGAN AND NOT OHIO
  – Example: MICHIGAN OR NOT OHIO
• Recursive operations
• Optimization: start with the smallest sets

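A sketch of these merges on sorted docID lists. AND, OR and AND NOT each make a single two-pointer pass, so they run in O(x+y); OR NOT, by contrast, needs the complement over the whole collection, so this sketch assumes docIDs 1..N and takes O(N). The MICHIGAN/OHIO postings in the usage line are hypothetical.

```python
def intersect(p1: list[int], p2: list[int]) -> list[int]:
    """AND: one pass over both sorted lists."""
    i = j = 0
    out = []
    while i < len(p1) and j < len(p2):
        if p1[i] == p2[j]:
            out.append(p1[i])
            i += 1
            j += 1
        elif p1[i] < p2[j]:
            i += 1
        else:
            j += 1
    return out


def union(p1: list[int], p2: list[int]) -> list[int]:
    """OR: same walk, keeping every docID once."""
    i = j = 0
    out = []
    while i < len(p1) and j < len(p2):
        if p1[i] == p2[j]:
            out.append(p1[i])
            i += 1
            j += 1
        elif p1[i] < p2[j]:
            out.append(p1[i])
            i += 1
        else:
            out.append(p2[j])
            j += 1
    return out + p1[i:] + p2[j:]


def and_not(p1: list[int], p2: list[int]) -> list[int]:
    """p1 AND NOT p2: still a single O(x + y) pass."""
    i = j = 0
    out = []
    while i < len(p1):
        if j < len(p2) and p2[j] < p1[i]:
            j += 1
        elif j < len(p2) and p2[j] == p1[i]:
            i += 1
            j += 1
        else:
            out.append(p1[i])
            i += 1
    return out


def or_not(p1: list[int], p2: list[int], num_docs: int) -> list[int]:
    """p1 OR NOT p2: needs every docID 1..num_docs, so O(N), not O(x + y)."""
    excluded = set(p2) - set(p1)
    return [d for d in range(1, num_docs + 1) if d not in excluded]


def intersect_many(lists: list[list[int]]) -> list[int]:
    """AND over several (at least one) terms, starting with the shortest list."""
    ordered = sorted(lists, key=len)
    result = ordered[0]
    for p in ordered[1:]:
        result = intersect(result, p)
    return result


michigan, ohio = [1, 3, 5, 7], [1, 5, 6, 7]   # hypothetical postings
print(intersect(michigan, ohio), and_not(michigan, ohio))  # [1, 5, 7] [3]
```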

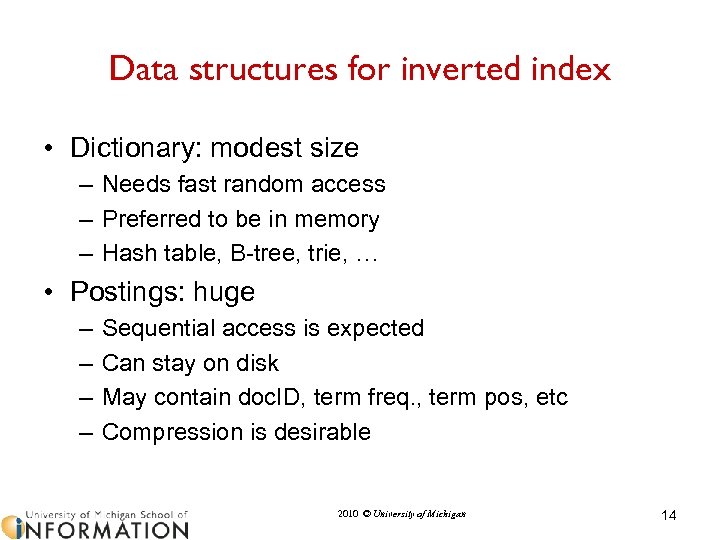

Data structures for inverted index
• Dictionary: modest size
  – Needs fast random access
  – Preferably kept in memory
  – Hash table, B-tree, trie, …
• Postings: huge
  – Sequential access is expected
  – Can stay on disk
  – May contain docID, term freq., term pos., etc.
  – Compression is desirable


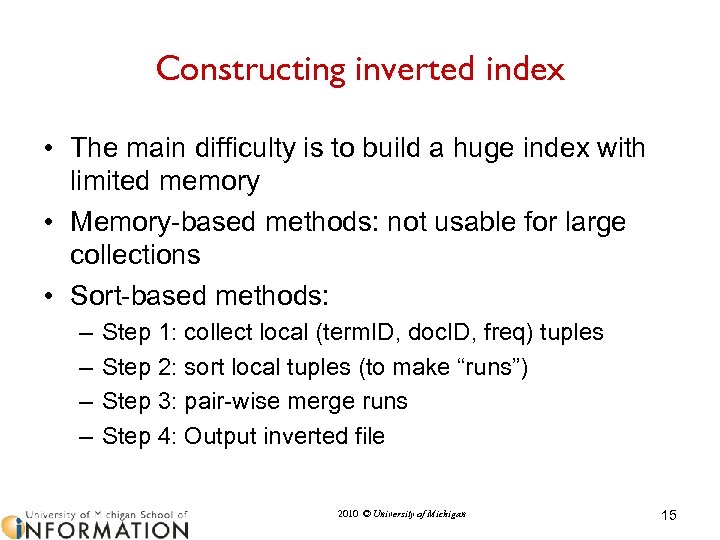

Constructing inverted index
• The main difficulty is building a huge index with limited memory
• Memory-based methods: not usable for large collections
• Sort-based methods:
  – Step 1: collect local (termID, docID, freq) tuples
  – Step 2: sort local tuples (to make "runs")
  – Step 3: pair-wise merge runs
  – Step 4: output inverted file


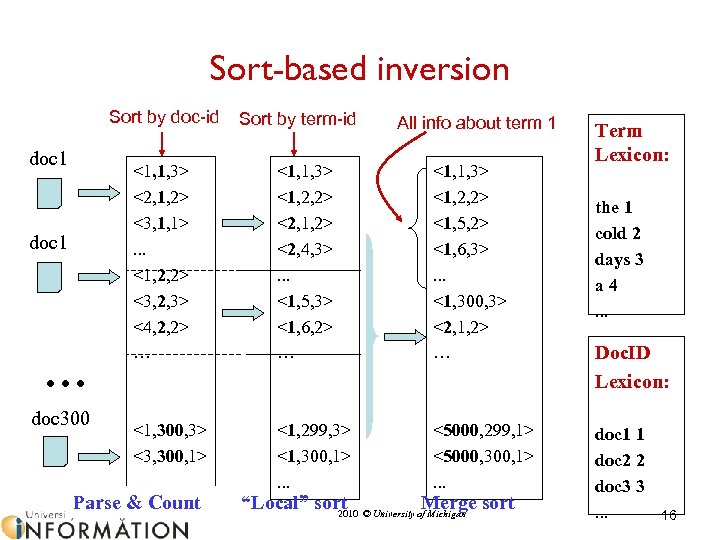

Sort-based inversion (figure; tuples are <termID, docID, freq>)
• Parse & count: read doc1 … doc300 in docID order, emitting tuples, e.g., <1, 1, 3> <2, 1, 2> <3, 1, 1> … <1, 2, 2> <3, 2, 3> <4, 2, 2> …
• "Local" sort: sort each in-memory run by termID, e.g., <1, 1, 3> <1, 2, 2> <2, 1, 2> <2, 4, 3> … <1, 5, 3> <1, 6, 2> …
• Merge sort: merge the runs so that all information about term 1 comes together, e.g., <1, 1, 3> <1, 2, 2> <1, 5, 2> <1, 6, 3> … <1, 300, 3> <2, 1, 2> … <5000, 299, 1> <5000, 300, 1> …
• Term lexicon: the 1, cold 2, days 3, a 4, …
• DocID lexicon: doc1 1, doc2 2, doc3 3, …

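A small in-memory sketch of the four steps. Assumptions: the runs stay in Python lists rather than on disk, `run_size` stands in for the memory limit, and the runs are combined with a k-way merge (`heapq.merge`) instead of the slide's pair-wise merges, which yields the same sorted order. The input tuples are the first two documents' tuples from the figure.

```python
import heapq
from itertools import groupby


def make_runs(tuples: list[tuple[int, int, int]], run_size: int):
    """Step 2: sort chunks of at most run_size tuples, producing sorted runs."""
    return [sorted(tuples[i:i + run_size]) for i in range(0, len(tuples), run_size)]


def merge_runs(runs) -> dict[int, list[tuple[int, int]]]:
    """Steps 3-4: merge the sorted runs and group them into termID -> [(docID, freq), ...]."""
    merged = heapq.merge(*runs)  # k-way merge of already-sorted lists
    return {
        term_id: [(doc_id, freq) for _, doc_id, freq in group]
        for term_id, group in groupby(merged, key=lambda t: t[0])
    }


# Step 1 output: (termID, docID, freq) tuples in the order documents are parsed
tuples = [(1, 1, 3), (2, 1, 2), (3, 1, 1), (1, 2, 2), (3, 2, 3), (4, 2, 2)]
index = merge_runs(make_runs(tuples, run_size=3))
print(index[1])  # [(1, 3), (2, 2)]
```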

IR Winter 2010 … Document preprocessing. Tokenization. Stemming. The Porter algorithm. …


Can we make it even better?
• Index term selection/normalization
  – Reduce the size of the vocabulary
• Index compression
  – Reduce the storage space


Should we index every term?
• How big is English?
  – Dictionary
  – Marketing
  – Education (testing of vocabulary size)
  – Psychology
  – Statistics
  – Linguistics
• Two very different answers
  – Chomsky: language is infinite
  – Shannon: 1.25 bits per character
• Should we care about a term if nobody uses it in a query?


What is a good indexing term?
• Specific (phrases) or general (single words)?
• Luhn found that words with middle frequency are most useful
  – Not too specific (low utility, but still useful!)
  – Not too general (lack of discrimination, stop words)
  – Stop word removal is common, but rare words are kept
• All words or a (controlled) subset? When term weighting is used, it is a matter of weighting, not selecting, indexing terms (more later)


Term selection for indexing
• Manual: e.g., Library of Congress subject headings, MeSH
• Automatic: e.g., TF*IDF based


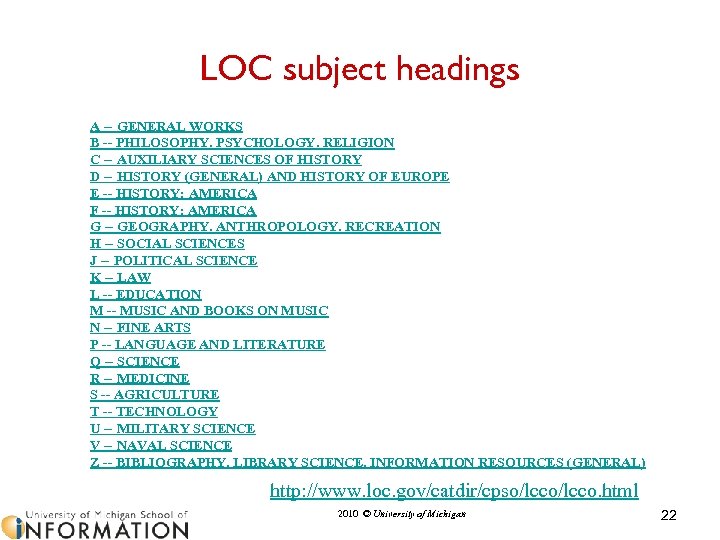

LOC subject headings
A -- GENERAL WORKS
B -- PHILOSOPHY. PSYCHOLOGY. RELIGION
C -- AUXILIARY SCIENCES OF HISTORY
D -- HISTORY (GENERAL) AND HISTORY OF EUROPE
E -- HISTORY: AMERICA
F -- HISTORY: AMERICA
G -- GEOGRAPHY. ANTHROPOLOGY. RECREATION
H -- SOCIAL SCIENCES
J -- POLITICAL SCIENCE
K -- LAW
L -- EDUCATION
M -- MUSIC AND BOOKS ON MUSIC
N -- FINE ARTS
P -- LANGUAGE AND LITERATURE
Q -- SCIENCE
R -- MEDICINE
S -- AGRICULTURE
T -- TECHNOLOGY
U -- MILITARY SCIENCE
V -- NAVAL SCIENCE
Z -- BIBLIOGRAPHY. LIBRARY SCIENCE. INFORMATION RESOURCES (GENERAL)
http://www.loc.gov/catdir/cpso/lcco.html


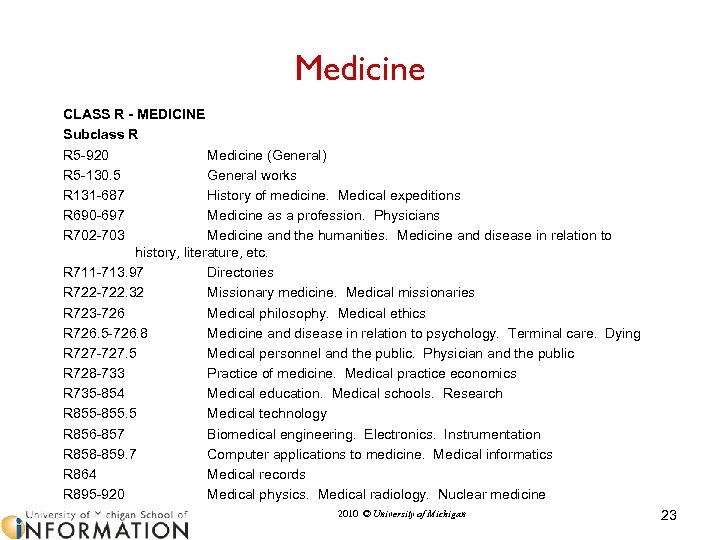

Medicine
CLASS R - MEDICINE
Subclass R
R5-920 Medicine (General)
R5-130.5 General works
R131-687 History of medicine. Medical expeditions
R690-697 Medicine as a profession. Physicians
R702-703 Medicine and the humanities. Medicine and disease in relation to history, literature, etc.
R711-713.97 Directories
R722-722.32 Missionary medicine. Medical missionaries
R723-726 Medical philosophy. Medical ethics
R726.5-726.8 Medicine and disease in relation to psychology. Terminal care. Dying
R727-727.5 Medical personnel and the public. Physician and the public
R728-733 Practice of medicine. Medical practice economics
R735-854 Medical education. Medical schools. Research
R855-855.5 Medical technology
R856-857 Biomedical engineering. Electronics. Instrumentation
R858-859.7 Computer applications to medicine. Medical informatics
R864 Medical records
R895-920 Medical physics. Medical radiology. Nuclear medicine


Automatic term selection methods
• TF*IDF: pick terms with the highest TF*IDF scores
• Centroid-based: pick terms that appear in the centroid with high scores
• The maximal marginal relevance (MMR) principle
• Related to summarization and snippet generation

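A sketch of TF*IDF-based selection, assuming one common variant of the weight: raw term frequency times log(N / df), where N is the number of documents and df the number of documents containing the term. Other TF*IDF variants would rank terms somewhat differently. The toy documents and the function name are hypothetical.

```python
import math
from collections import Counter


def top_tfidf_terms(docs: list[list[str]], doc_index: int, k: int) -> list[str]:
    """Pick the k terms of docs[doc_index] with the highest tf * log(N / df)."""
    n = len(docs)
    df = Counter()
    for tokens in docs:
        df.update(set(tokens))       # document frequency: count each document once
    tf = Counter(docs[doc_index])    # raw term frequency in the chosen document
    scores = {t: tf[t] * math.log(n / df[t]) for t in tf}
    return sorted(scores, key=lambda t: (-scores[t], t))[:k]


docs = [["the", "cat", "sat", "cat"], ["the", "dog", "sat"], ["the", "bird"]]
print(top_tfidf_terms(docs, 0, 2))  # ['cat', 'sat']
```

Note how "the", which occurs in every document, gets weight 0 and is never selected.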

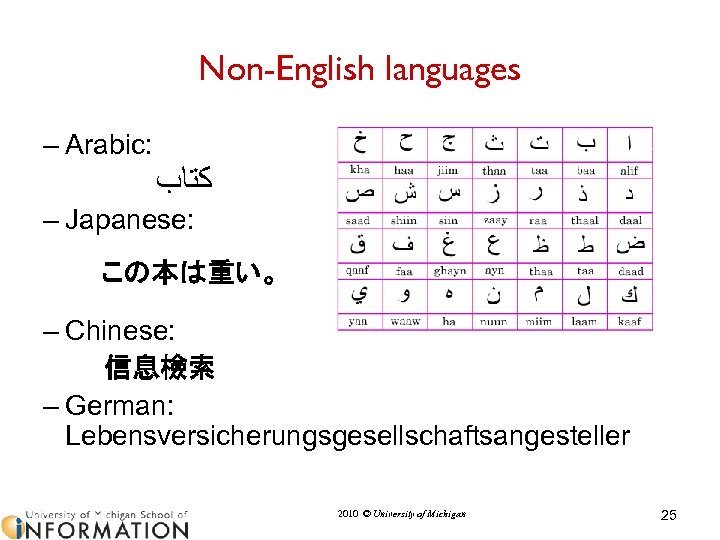

Non-English languages
– Arabic: كتاب
– Japanese: この本は重い。
– Chinese: 信息檢索
– German: Lebensversicherungsgesellschaftsangestellter


Document preprocessing
• What should we use to index?
• Dealing with formatting and encoding issues
• Hyphenation, accents, stemming, capitalization
• Tokenization:
  – Paul’s, Willow Dr., Dr. Willow, 555-1212, New York, ad hoc, can’t
  – Example: “The New York-Los Angeles flight”


Document preprocessing
• Normalization:
  – Casing (cat vs. CAT)
  – Stemming (computer, computation)
  – String matching: labeled/labelled, extraterrestrial/extra-terrestrial/extra terrestrial, Qaddafi/Kadhafi/Ghadaffi
• Index reduction
  – Dropping stop words (“and”, “of”, “to”)
  – Problematic for “to be or not to be”

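A sketch of casing normalization, a simple regex tokenizer, and stop word removal. The token pattern (letters/digits joined by internal ASCII apostrophes or hyphens) and the tiny stop list are hypothetical choices made for illustration. They keep "can't" and "555-1212" whole, but they also produce the questionable token "york-los", and they reduce "to be or not to be" to nothing, which illustrates both problems above.

```python
import re

# Hypothetical, deliberately tiny stop list
STOP_WORDS = {"a", "and", "be", "not", "of", "or", "the", "to"}

# Runs of letters/digits, optionally joined by internal ASCII apostrophes or hyphens
TOKEN_RE = re.compile(r"[a-z0-9]+(?:['-][a-z0-9]+)*")


def tokenize(text: str) -> list[str]:
    """Case-fold, then extract tokens with TOKEN_RE."""
    return TOKEN_RE.findall(text.lower())


def remove_stop_words(tokens: list[str]) -> list[str]:
    return [t for t in tokens if t not in STOP_WORDS]


print(tokenize("The New York-Los Angeles flight"))
# ['the', 'new', 'york-los', 'angeles', 'flight']
print(remove_stop_words(tokenize("To be or not to be")))  # []
```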

Tokenization
• Normalize lexical units: words with similar meanings should be mapped to the same indexing term
• Stemming: mapping the inflectional (and often derivational) forms of a word to the same root form, e.g.,
  – computer -> compute
  – computation -> compute
  – computing -> compute (but king -> k?)
• Porter’s stemmer is popular for English


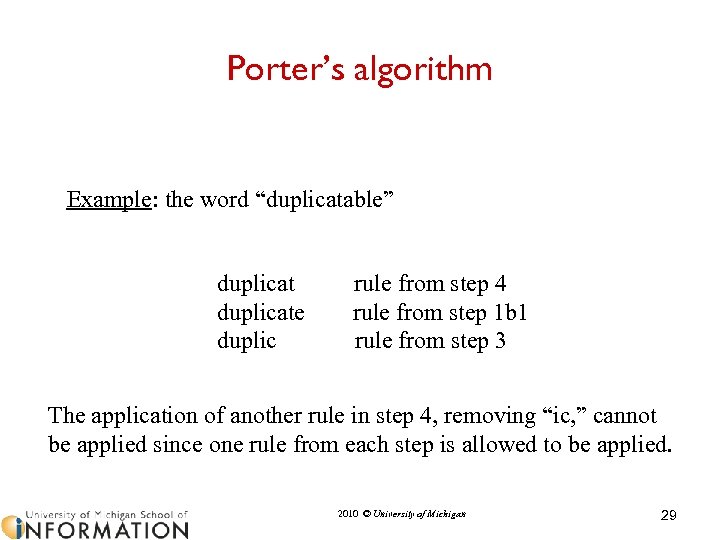

Porter’s algorithm
Example: the word “duplicatable” is reduced via “duplicate” to “duplic”, using a rule from step 4, a rule from step 1b1, and a rule from step 3. Another rule in step 4, removing “ic”, cannot then be applied, since at most one rule from each step may be applied.

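The full stemmer is too long to reproduce here. As a sketch, this computes only the "measure" m from Porter's definition, which step conditions such as (m>1) test: a word has the form [C](VC)^m[V], where C and V are runs of consonants and vowels, and "y" counts as a vowel when it follows a consonant. This is just one building block, not the complete algorithm, and the function names are hypothetical.

```python
def is_consonant(word: str, i: int) -> bool:
    """Porter's rule: not a/e/i/o/u, and 'y' is a vowel when it follows a consonant."""
    ch = word[i]
    if ch in "aeiou":
        return False
    if ch == "y":
        return i == 0 or not is_consonant(word, i - 1)
    return True


def measure(stem: str) -> int:
    """Porter's m: the number of VC sequences in the form [C](VC)^m[V]."""
    m = 0
    prev_is_vowel = False
    for i in range(len(stem)):
        cons = is_consonant(stem, i)
        if cons and prev_is_vowel:
            m += 1  # a vowel run followed by a consonant completes one VC pair
        prev_is_vowel = not cons
    return m


print([measure(w) for w in ("tree", "trouble", "private")])  # [0, 1, 2]
```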

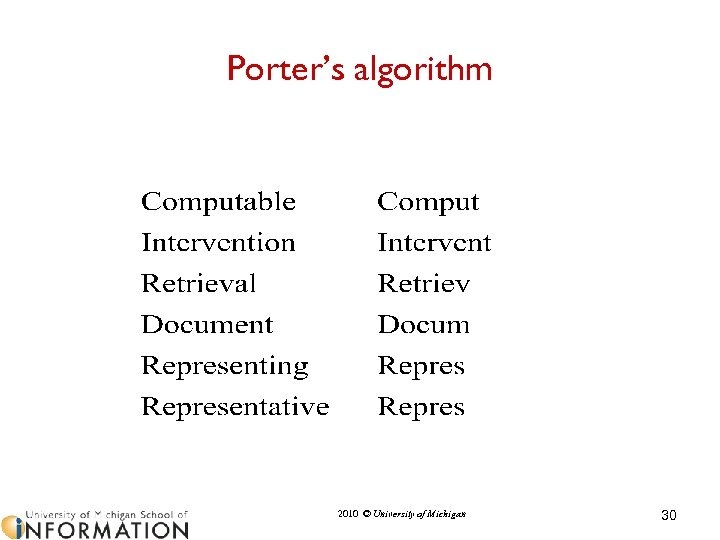

Porter’s algorithm (figure)


Links
• http://maya.cs.depaul.edu/~classes/ds575/porter.html
• http://www.tartarus.org/~martin/PorterStemmer/def.txt


IR Winter 2010 … Approximate string matching …


Approximate string matching
• The Soundex algorithm (Odell and Russell)
• Uses:
  – spelling correction
  – hash function
  – non-recoverable (the original name cannot be reconstructed from its code)


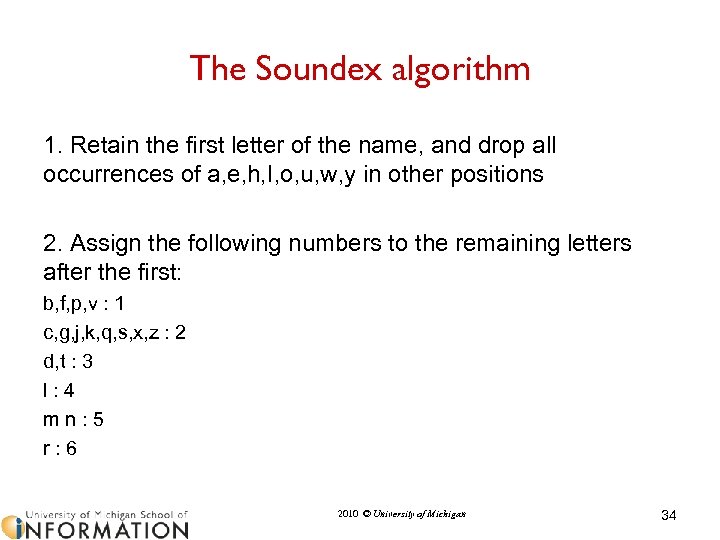

The Soundex algorithm
1. Retain the first letter of the name, and drop all occurrences of a, e, h, i, o, u, w, y in other positions.
2. Assign the following numbers to the remaining letters after the first:
   – b, f, p, v: 1
   – c, g, j, k, q, s, x, z: 2
   – d, t: 3
   – l: 4
   – m, n: 5
   – r: 6


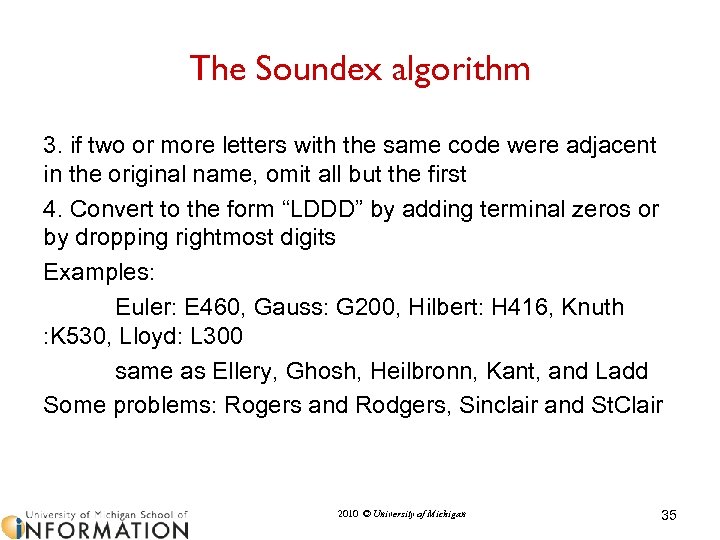

The Soundex algorithm
3. If two or more letters with the same code were adjacent in the original name, omit all but the first.
4. Convert to the form “LDDD” by adding terminal zeros or by dropping rightmost digits.
Examples: Euler: E460, Gauss: G200, Hilbert: H416, Knuth: K530, Lloyd: L300; these are the same codes as for Ellery, Ghosh, Heilbronn, Kant, and Ladd.
Some problems (similar-sounding names that get different codes): Rogers and Rodgers, Sinclair and St. Clair

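A sketch that follows the four steps exactly as stated on these slides. In this variant, step 3 compares letters adjacent in the original name, so vowels and also h, w, y separate repeated codes, and the rule applies to the first letter too (Lloyd: L300). Other published Soundex variants treat h and w differently. Non-letters such as "." and spaces are assumed to be ignored.

```python
# Step 2 code table from the slides
CODES: dict[str, str] = {}
for letters, digit in (("bfpv", "1"), ("cgjkqsxz", "2"), ("dt", "3"),
                       ("l", "4"), ("mn", "5"), ("r", "6")):
    for ch in letters:
        CODES[ch] = digit


def soundex(name: str) -> str:
    letters = [ch for ch in name.lower() if ch.isalpha()]
    if not letters:
        raise ValueError("name must contain at least one letter")
    digits = []
    prev_code = CODES.get(letters[0])   # step 3 also compares against the first letter
    for ch in letters[1:]:
        code = CODES.get(ch)            # None for a, e, h, i, o, u, w, y (dropped in step 1)
        if code is not None and code != prev_code:
            digits.append(code)         # keep only the first of adjacent equal codes
        prev_code = code
    # Step 4: first letter plus three digits, zero-padded or truncated
    return (letters[0].upper() + "".join(digits) + "000")[:4]


print(soundex("Euler"), soundex("Ellery"))  # E460 E460
```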

Levenshtein edit distance
• Examples:
  – Theatre -> theater
  – Ghaddafi -> Qadafi
  – Computer -> counter
• Edit distance (inserts, deletes, substitutions)
  – Edit transcript
• Computed by dynamic programming


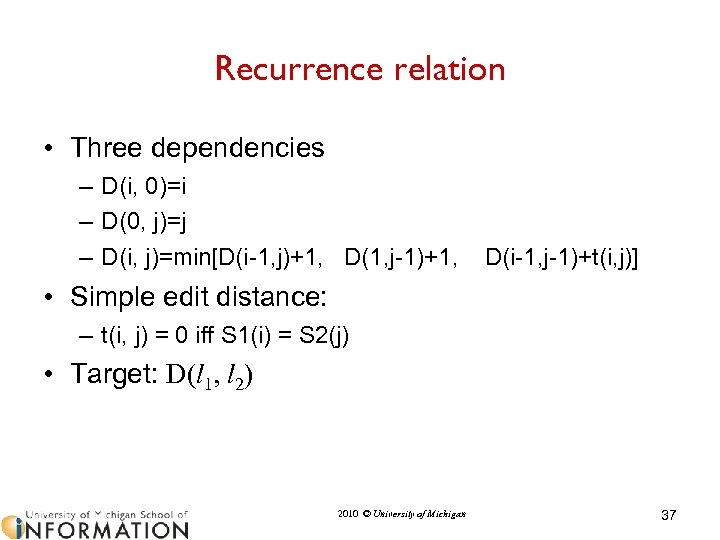

Recurrence relation
• Three dependencies:
  – $D(i, 0) = i$
  – $D(0, j) = j$
  – $D(i, j) = \min[D(i-1, j) + 1,\ D(i, j-1) + 1,\ D(i-1, j-1) + t(i, j)]$
• Simple edit distance:
  – $t(i, j) = 0$ iff $S_1(i) = S_2(j)$, and $t(i, j) = 1$ otherwise
• Target: $D(l_1, l_2)$, where $l_1$ and $l_2$ are the lengths of $S_1$ and $S_2$

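A direct sketch of the recurrence, filling the (l1+1) × (l2+1) table row by row. Python strings are 0-indexed, so $S_1(i)$ corresponds to `s1[i - 1]`. This uses O(l1 · l2) time and space; keeping only two rows would reduce the space if the transcript is not needed.

```python
def edit_distance(s1: str, s2: str) -> int:
    """Levenshtein distance via the D(i, j) recurrence."""
    l1, l2 = len(s1), len(s2)
    d = [[0] * (l2 + 1) for _ in range(l1 + 1)]
    for i in range(l1 + 1):
        d[i][0] = i                                  # D(i, 0) = i
    for j in range(l2 + 1):
        d[0][j] = j                                  # D(0, j) = j
    for i in range(1, l1 + 1):
        for j in range(1, l2 + 1):
            t = 0 if s1[i - 1] == s2[j - 1] else 1   # t(i, j)
            d[i][j] = min(d[i - 1][j] + 1,           # delete from s1
                          d[i][j - 1] + 1,           # insert into s1
                          d[i - 1][j - 1] + t)       # match or substitute
    return d[l1][l2]


print(edit_distance("vintner", "writers"))  # 5
print(edit_distance("theatre", "theater"))  # 2
```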

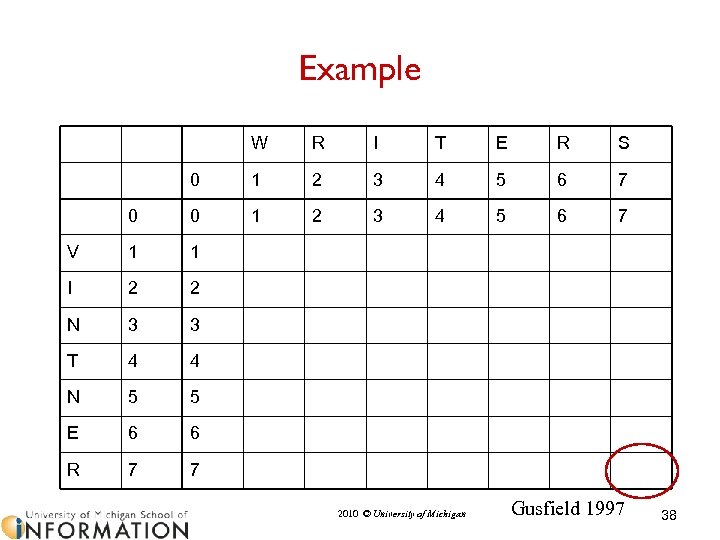

Example (Gusfield 1997)
[figure: edit-distance table for S1 = VINTNER (rows V, I, N, T, N, E, R) against S2 = WRITERS (columns W, R, I, T, E, R, S); row 0 is initialized to 0-7 and column 0 to 0-7, following D(0, j) = j and D(i, 0) = i]


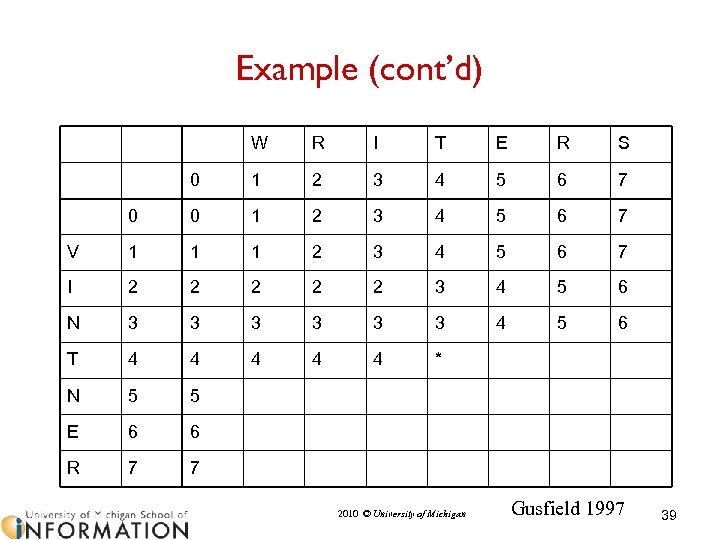

Example (cont’d) (Gusfield 1997)
[figure: the same table being filled row by row; row V becomes 1, 1, 2, 3, 4, 5, 6, 7, rows I, N and T are partially filled, and one cell is marked *]


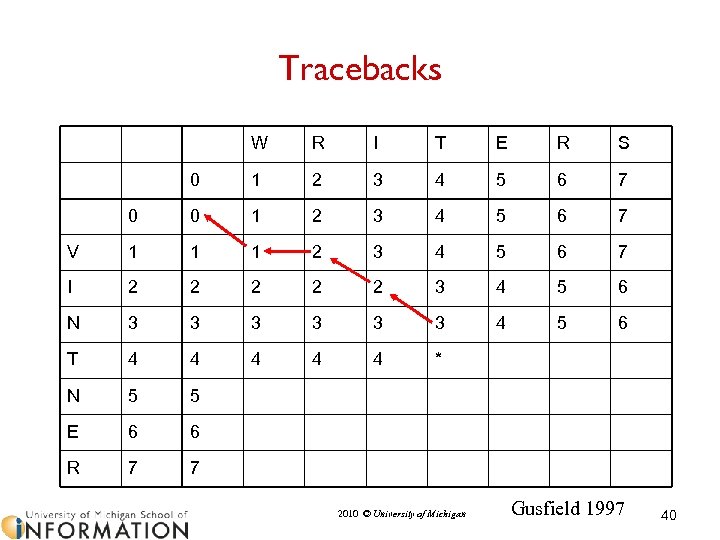

Tracebacks (Gusfield 1997)
[figure: traceback pointers drawn on the VINTNER/WRITERS table]

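A sketch of a traceback that turns the filled table into an edit transcript, using the letters M, R, I, D (match, replace, insert, delete) for operations that transform S1 into S2. When several optimal transcripts exist, this sketch arbitrarily prefers the diagonal move, then deletion, then insertion, so it returns just one of them.

```python
def edit_transcript(s1: str, s2: str) -> tuple[int, str]:
    """Return (distance, transcript) turning s1 into s2 with M/R/I/D operations."""
    l1, l2 = len(s1), len(s2)
    d = [[0] * (l2 + 1) for _ in range(l1 + 1)]
    for i in range(l1 + 1):
        d[i][0] = i
    for j in range(l2 + 1):
        d[0][j] = j
    for i in range(1, l1 + 1):
        for j in range(1, l2 + 1):
            t = 0 if s1[i - 1] == s2[j - 1] else 1
            d[i][j] = min(d[i - 1][j] + 1, d[i][j - 1] + 1, d[i - 1][j - 1] + t)

    # Walk back from D(l1, l2) to D(0, 0), following a move that explains each cell value
    ops = []
    i, j = l1, l2
    while i > 0 or j > 0:
        if i > 0 and j > 0:
            t = 0 if s1[i - 1] == s2[j - 1] else 1
            if d[i][j] == d[i - 1][j - 1] + t:
                ops.append("M" if t == 0 else "R")
                i, j = i - 1, j - 1
                continue
        if i > 0 and d[i][j] == d[i - 1][j] + 1:
            ops.append("D")   # delete s1[i - 1]
            i -= 1
        else:
            ops.append("I")   # insert s2[j - 1]
            j -= 1
    return d[l1][l2], "".join(reversed(ops))


print(edit_transcript("vintner", "writers"))
```

Every R, I and D in the transcript costs 1, so their count equals the edit distance.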

Weighted edit distance
• Used to emphasize the relative cost of different edit operations
• Useful in bioinformatics
  – Homology information
  – BLAST
  – BLOSUM
  – http://eta.embl-heidelberg.de:8000/misc/mat/blosum50.html

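A sketch of the same dynamic program with pluggable costs: `sub_cost(a, b)` and `indel_cost(c)` are hypothetical caller-supplied functions. In bioinformatics the costs would instead come from a scoring matrix such as BLOSUM, and alignment tools usually maximize a similarity score rather than minimize a cost, so this is only an analogy. With unit costs it reduces to the Levenshtein distance. Making a substitution cost 2 (the same as a deletion plus an insertion) gives l1 + l2 - 2·LCS instead.

```python
from typing import Callable


def weighted_edit_distance(s1: str, s2: str,
                           sub_cost: Callable[[str, str], float],
                           indel_cost: Callable[[str], float]) -> float:
    """Edit distance where each operation's cost depends on the characters involved."""
    l1, l2 = len(s1), len(s2)
    d = [[0.0] * (l2 + 1) for _ in range(l1 + 1)]
    for i in range(1, l1 + 1):
        d[i][0] = d[i - 1][0] + indel_cost(s1[i - 1])
    for j in range(1, l2 + 1):
        d[0][j] = d[0][j - 1] + indel_cost(s2[j - 1])
    for i in range(1, l1 + 1):
        for j in range(1, l2 + 1):
            d[i][j] = min(d[i - 1][j] + indel_cost(s1[i - 1]),
                          d[i][j - 1] + indel_cost(s2[j - 1]),
                          d[i - 1][j - 1] + sub_cost(s1[i - 1], s2[j - 1]))
    return d[l1][l2]


def unit_sub(a: str, b: str) -> float:
    return 0.0 if a == b else 1.0


def costly_sub(a: str, b: str) -> float:
    return 0.0 if a == b else 2.0   # substitution priced like a delete plus an insert


def unit_indel(_: str) -> float:
    return 1.0


print(weighted_edit_distance("vintner", "writers", unit_sub, unit_indel))    # 5.0
print(weighted_edit_distance("vintner", "writers", costly_sub, unit_indel))  # 6.0
```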

Links
• Web sites:
  – http://www.merriampark.com/ld.htm
  – http://odur.let.rug.nl/~kleiweg/lev/
• Demo:
  – http://nayana.ece.ucsb.edu/imsearch/imsearch.html


IR Winter 2010 … Index compression. IR toolkits. …


Inverted index compression
• Compress the postings
• Observations
  – Inverted lists are sorted (e.g., by docID or term frequency)
  – Small numbers tend to occur more frequently
• Implications
  – “d-gap” (store differences): d1, d2 - d1, d3 - d2, …
  – Exploit the skewed frequency distribution: fewer bits for small (high-frequency) integers
• Binary code, unary code, γ-code

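A sketch of d-gap encoding and decoding for one sorted posting list, applied to the Brutus postings from the earlier example. The large docIDs turn into mostly small gaps, which is what the variable-length integer codes exploit.

```python
from itertools import accumulate


def to_gaps(doc_ids: list[int]) -> list[int]:
    """d1, d2 - d1, d3 - d2, ... for a sorted posting list."""
    return [d - prev for prev, d in zip([0] + doc_ids, doc_ids)]


def from_gaps(gaps: list[int]) -> list[int]:
    """Undo to_gaps with a running sum."""
    return list(accumulate(gaps))


brutus = [1, 2, 4, 11, 31, 45, 173, 174]
print(to_gaps(brutus))  # [1, 1, 2, 7, 20, 14, 128, 1]
```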

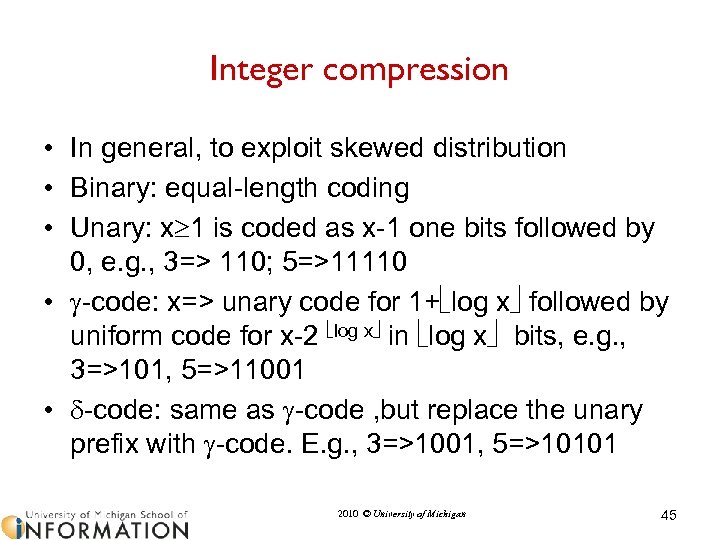

Integer compression
• In general, the goal is to exploit a skewed distribution
• Binary: equal-length coding
• Unary: $x \ge 1$ is coded as $x-1$ one bits followed by a 0, e.g., 3 => 110; 5 => 11110
• γ-code: $x \Rightarrow$ unary code for $1 + \lfloor \log_2 x \rfloor$, followed by the uniform (binary) code for $x - 2^{\lfloor \log_2 x \rfloor}$ in $\lfloor \log_2 x \rfloor$ bits, e.g., 3 => 101, 5 => 11001
• δ-code: same as the γ-code, but the unary prefix is replaced with its γ-code, e.g., 3 => 1001, 5 => 10101

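A sketch of the three codes using exactly the conventions above (unary as x-1 ones then a 0), plus a γ decoder for a concatenated bit string. Bits are Python strings for readability; a real index would pack them into bytes. The decoder assumes well-formed input.

```python
def unary(x: int) -> str:
    """x >= 1 as (x - 1) one bits followed by a 0."""
    if x < 1:
        raise ValueError("x must be >= 1")
    return "1" * (x - 1) + "0"


def _offset_bits(x: int) -> tuple[int, str]:
    """Return (n, bits) with n = floor(log2 x) and x - 2**n written in n bits."""
    n = x.bit_length() - 1
    return n, (format(x - (1 << n), "b").zfill(n) if n else "")


def gamma(x: int) -> str:
    """Unary code of 1 + floor(log2 x), then the offset in floor(log2 x) bits."""
    if x < 1:
        raise ValueError("x must be >= 1")
    n, offset = _offset_bits(x)
    return unary(1 + n) + offset


def delta(x: int) -> str:
    """Like gamma, but the prefix is the gamma code of 1 + floor(log2 x)."""
    if x < 1:
        raise ValueError("x must be >= 1")
    n, offset = _offset_bits(x)
    return gamma(1 + n) + offset


def decode_gamma(bits: str) -> list[int]:
    """Decode a concatenation of gamma codes (assumes well-formed input)."""
    out, i = [], 0
    while i < len(bits):
        n = 0
        while bits[i] == "1":   # count the ones of the unary prefix
            n += 1
            i += 1
        i += 1                  # skip the terminating 0
        out.append((1 << n) + (int(bits[i:i + n], 2) if n else 0))
        i += n
    return out


print(gamma(3), gamma(5), delta(3), delta(5))  # 101 11001 1001 10101
```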

Text compression
• Compress the dictionaries
• Methods
  – Fixed length codes
  – Huffman coding
  – Ziv-Lempel codes


Fixed length codes
• Binary representations
  – ASCII
  – Representational power: $2^k$ symbols, where k is the number of bits


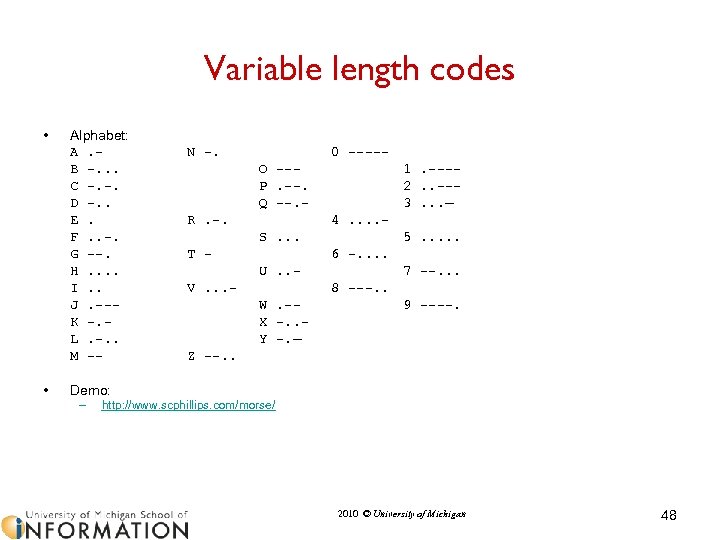

Variable length codes
• Example: Morse code
• Alphabet:
  – A .-, B -..., C -.-., D -.., E ., F ..-., G --., H ...., I .., J .---, K -.-, L .-.., M --
  – N -., O ---, P .--., Q --.-, R .-., S ..., T -, U ..-, V ...-, W .--, X -..-, Y -.--, Z --..
  – 0 -----, 1 .----, 2 ..---, 3 ...--, 4 ....-, 5 ....., 6 -...., 7 --..., 8 ---.., 9 ----.
• Demo:
  – http://www.scphillips.com/morse/


Most frequent letters in English
• Some letters are used more frequently than others…
• Most frequent letters:
  – E T A O I N S H R D L U
• Demo:
  – http://www.amstat.org/publications/jse/secure/v7n2/count-char.cfm
• Also bigrams:
  – TH HE IN ER AN RE ND AT ON NT


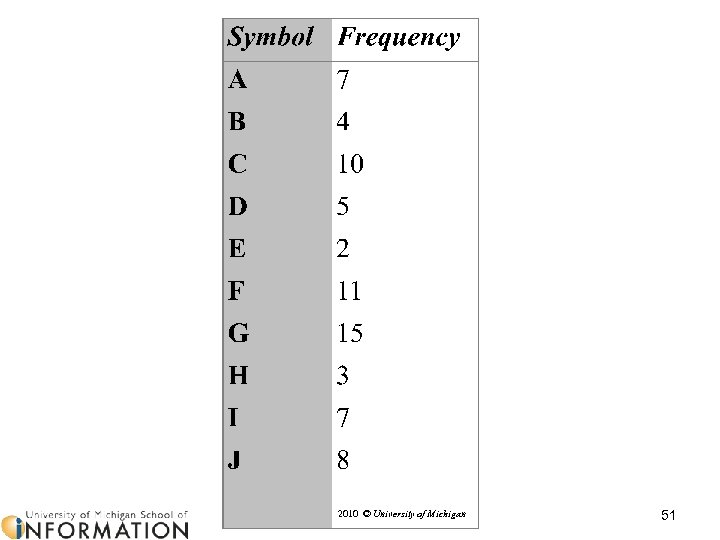

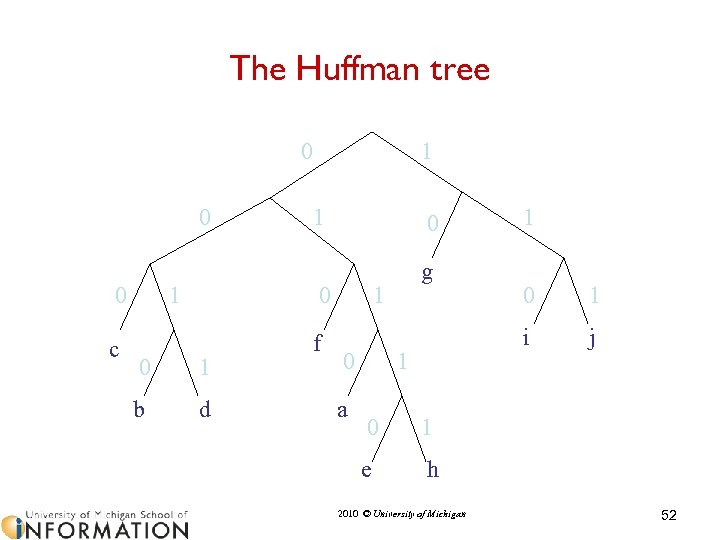

Huffman coding
• Developed by David Huffman (1952)
• Average of 5 bits per character (37.5% compression relative to 8-bit ASCII)
• Based on frequency distributions of symbols
• Algorithm: iteratively build a tree of symbols, starting with the two least frequent symbols

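A compact sketch of Huffman's construction using a priority queue (Python's `heapq`): repeatedly merge the two least frequent subtrees, prefixing 0 to the codes on one side and 1 on the other. Tie-breaking is arbitrary, so the exact bit patterns may differ from any particular tree in the slides, although every Huffman code for the same frequencies gives the same total encoded length. The sample text is hypothetical.

```python
import heapq
from collections import Counter
from itertools import count


def huffman_code(text: str) -> dict[str, str]:
    """Build a Huffman code (symbol -> bit string) from the symbol frequencies in text."""
    freq = Counter(text)
    if not freq:
        return {}
    tie = count()  # unique tie-breaker so heapq never compares the dicts
    heap = [(f, next(tie), {sym: ""}) for sym, f in freq.items()]
    heapq.heapify(heap)
    if len(heap) == 1:                      # a single symbol still needs one bit
        return {sym: "0" for sym in heap[0][2]}
    while len(heap) > 1:
        f1, _, left = heapq.heappop(heap)   # the two least frequent subtrees
        f2, _, right = heapq.heappop(heap)
        merged = {s: "0" + c for s, c in left.items()}
        merged.update({s: "1" + c for s, c in right.items()})
        heapq.heappush(heap, (f1 + f2, next(tie), merged))
    return heap[0][2]


def huffman_decode(bits: str, code: dict[str, str]) -> str:
    """Decode greedily; unambiguous because no codeword is a prefix of another."""
    inverse = {c: s for s, c in code.items()}
    out, buf = [], ""
    for b in bits:
        buf += b
        if buf in inverse:
            out.append(inverse[buf])
            buf = ""
    if buf:
        raise ValueError("trailing bits do not form a complete codeword")
    return "".join(out)


text = "abracadabra"  # hypothetical sample text
code = huffman_code(text)
encoded = "".join(code[ch] for ch in text)
print(len(encoded), huffman_decode(encoded, code) == text)  # 23 True
```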


The Huffman tree
[figure: binary code tree over the symbols a-j, with branches labeled 0 and 1]


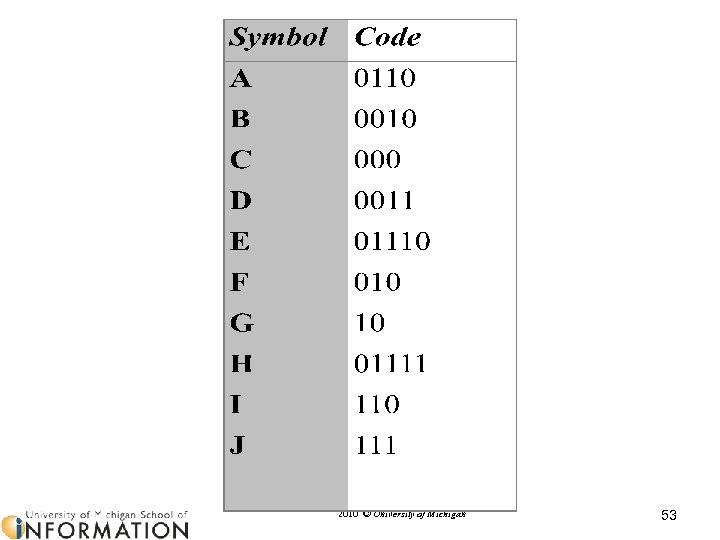


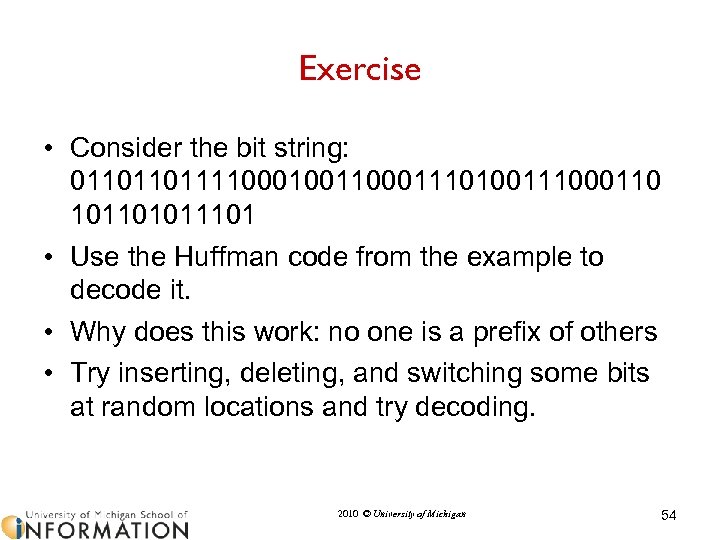

Exercise
• Consider the bit string: 01101101111000100110001110100111000110101101011101
• Use the Huffman code from the example to decode it.
• Why does this work? No codeword is a prefix of another.
• Try inserting, deleting, and switching some bits at random locations, and then try decoding.


Extensions
• Word-based
• Domain/genre-dependent models


Links on text compression
• Data compression:
  – http://www.data-compression.info/
• Calgary corpus:
  – http://links.uwaterloo.ca/calgary.corpus.html
• Huffman coding:
  – http://www.compressconsult.com/huffman/
  – http://en.wikipedia.org/wiki/Huffman_coding
• LZ:
  – http://en.wikipedia.org/wiki/LZ77


Open Source IR Toolkits
• Smart (Cornell)
• MG (RMIT & Melbourne, Australia; Waikato, New Zealand)
• Lemur (CMU/Univ. of Massachusetts)
• Terrier (Glasgow)
• Clair (University of Michigan)
• Lucene (Open Source)
• Ivory (University of Maryland; cloud computing)


Smart
• The most influential IR system/toolkit
• Developed at Cornell since the 1960s
• Vector space model with many weighting options
• Written in C
• The Cornell/AT&T groups have used the Smart system to achieve top TREC performance


MG
• A highly efficient toolkit for retrieval of text and images
• Developed by people at the Univ. of Waikato, Univ. of Melbourne, and RMIT in the 1990s
• Written in C, running on Unix
• Vector space model with many compression and speed-up tricks
• People have used it to achieve good TREC performance


Lemur/Indri
• An IR toolkit emphasizing language models
• Developed at CMU and the Univ. of Massachusetts in the 2000s
• Written in C++, highly extensible
• Vector space and probabilistic models, including language models
• Achieves good TREC performance with a simple language model


Terrier
• A large-scale retrieval toolkit with many applications (e.g., desktop search) and TREC support
• Developed at the University of Glasgow, UK
• Written in Java, open source
• “Divergence from randomness” retrieval model and other modern retrieval formulas


Lucene
• Open source IR toolkit
• Initially developed by Doug Cutting in Java
• Has since been ported to several other languages
• Good for building IR/Web applications
• Many applications have been built using Lucene (e.g., the Nutch search engine)
• Its retrieval algorithms currently have poor accuracy


What You Should Know
• What an inverted index is
• Why an inverted index helps make search fast
• How to construct a large inverted index
• How to preprocess documents to reduce the index terms
• How to compress an index
• IR toolkits
