f9c7d9095937c95a235764c0c0215354.ppt

- Количество слайдов: 29

Document Classification with Naïve Bayes -How to Build Yahoo Automatically Andrew Mc. Callum Just Research & CMU www. cs. cmu. edu/~mccallum Joint work with Kamal Nigam, Jason Rennie, Kristie Seymore, Tom Mitchell, Sebastian Thrun, Roni Rosenfeld, Andrew Ng.

2

3

4

5

Document Classification Testing Data: “planning (Planning) language semantics proof intelligence” . Categories: ML Training Data: learning algorithm reinforcement intelligence network. . . Planning Semantics planning temporal reasoning plan language. . . programming semantics types language proof. . . Garb. Coll. garbage collection memory optimization region. . . Multimedia. . . GUI. . . 6

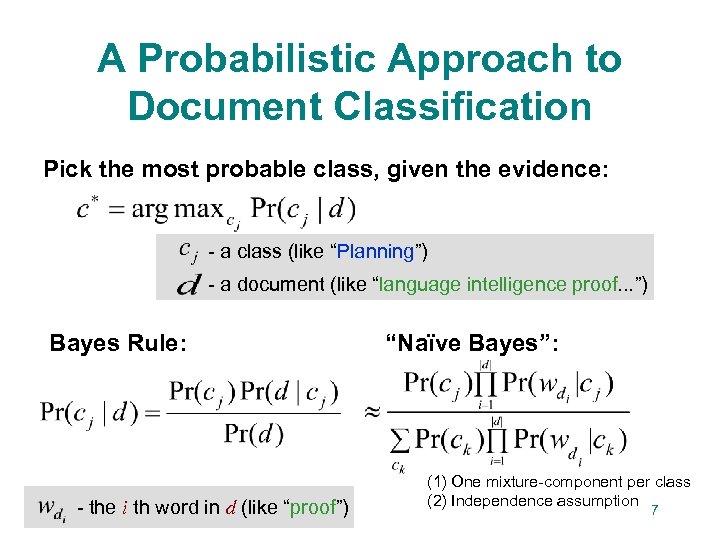

A Probabilistic Approach to Document Classification Pick the most probable class, given the evidence: - a class (like “Planning”) - a document (like “language intelligence proof. . . ”) Bayes Rule: - the i th word in d (like “proof”) “Naïve Bayes”: (1) One mixture-component per class (2) Independence assumption 7

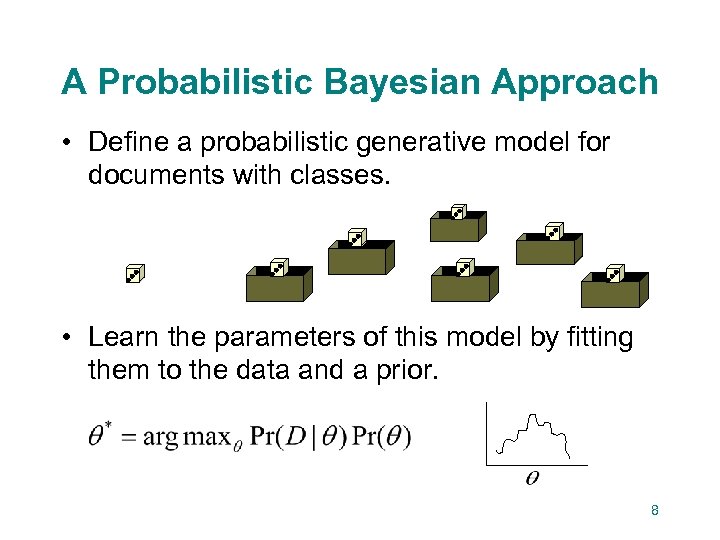

A Probabilistic Bayesian Approach • Define a probabilistic generative model for documents with classes. • Learn the parameters of this model by fitting them to the data and a prior. 8

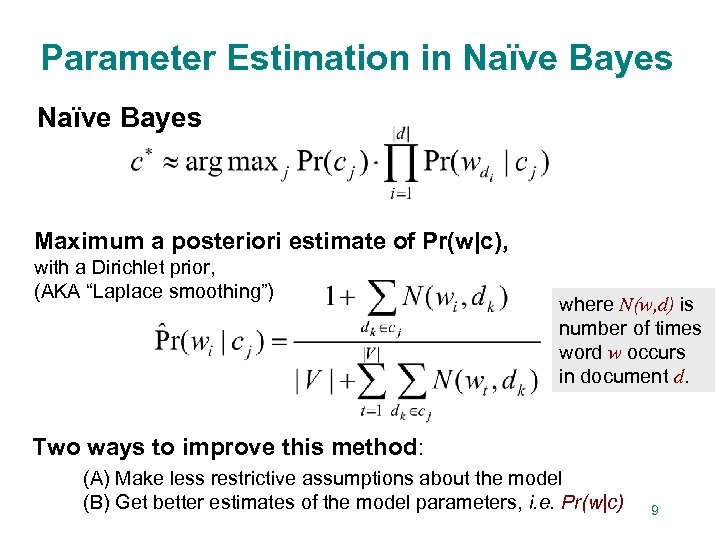

Parameter Estimation in Naïve Bayes Maximum a posteriori estimate of Pr(w|c), with a Dirichlet prior, (AKA “Laplace smoothing”) where N(w, d) is number of times word w occurs in document d. Two ways to improve this method: (A) Make less restrictive assumptions about the model (B) Get better estimates of the model parameters, i. e. Pr(w|c) 9

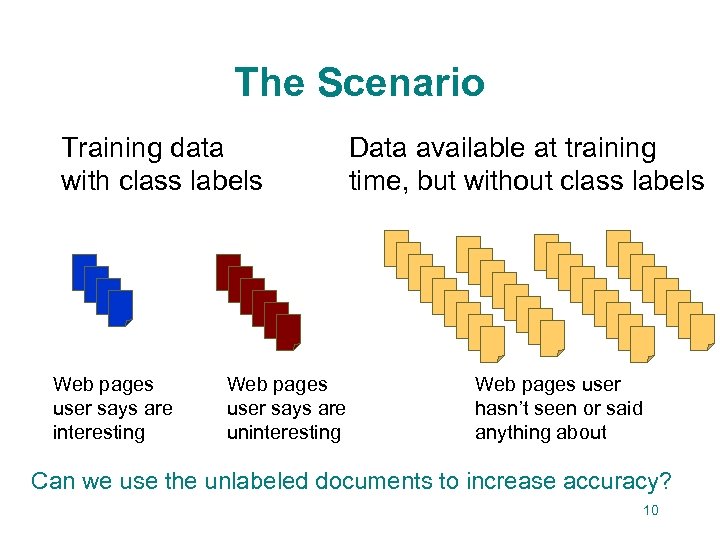

The Scenario Training data with class labels Web pages user says are interesting Web pages user says are uninteresting Data available at training time, but without class labels Web pages user hasn’t seen or said anything about Can we use the unlabeled documents to increase accuracy? 10

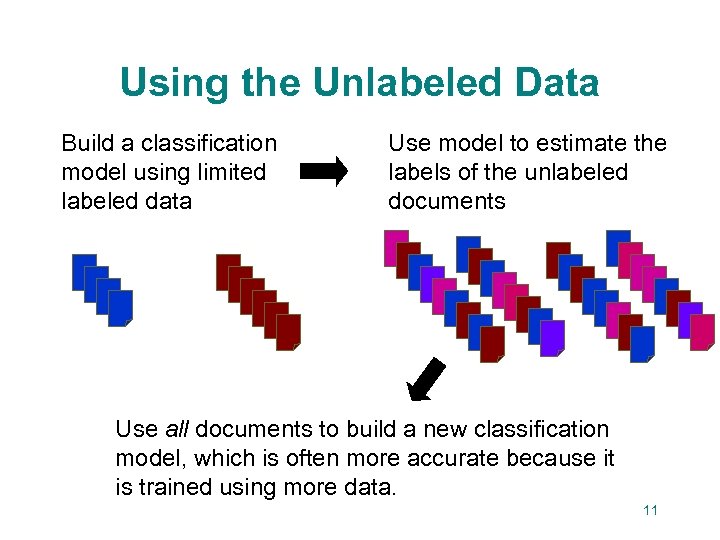

Using the Unlabeled Data Build a classification model using limited labeled data Use model to estimate the labels of the unlabeled documents Use all documents to build a new classification model, which is often more accurate because it is trained using more data. 11

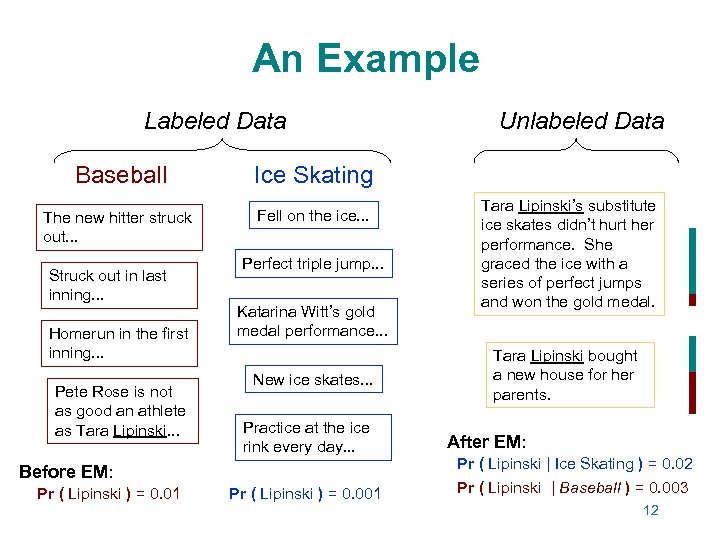

An Example Labeled Data Baseball Ice Skating The new hitter struck out. . . Fell on the ice. . . Unlabeled Data Struck out in last inning. . . Homerun in the first inning. . . Pete Rose is not as good an athlete as Tara Lipinski. . . Perfect triple jump. . . Katarina Witt’s gold medal performance. . . New ice skates. . . Practice at the ice rink every day. . . Before EM: Pr ( Lipinski ) = 0. 01 Pr ( Lipinski ) = 0. 001 Tara Lipinski’s substitute ice skates didn’t hurt her performance. She graced the ice with a series of perfect jumps and won the gold medal. Tara Lipinski bought a new house for her parents. After EM: Pr ( Lipinski | Ice Skating ) = 0. 02 Pr ( Lipinski | Baseball ) = 0. 003 12

![Filling in Missing Labels with EM [Dempster et al ‘ 77], [Ghahramani & Jordan Filling in Missing Labels with EM [Dempster et al ‘ 77], [Ghahramani & Jordan](https://present5.com/presentation/f9c7d9095937c95a235764c0c0215354/image-13.jpg)

Filling in Missing Labels with EM [Dempster et al ‘ 77], [Ghahramani & Jordan ‘ 95], [Mc. Lachlan & Krishnan ‘ 97] Expectation Maximization is a class of iterative algorithms for maximum likelihood estimation with incomplete data. • E-step: Use current estimates of model parameters to “guess” value of missing labels. • M-step: Use current “guesses” for missing labels to calculate new estimates of model parameters. • Repeat E- and M-steps until convergence. Finds the model parameters that locally maximize the probability of both the labeled and the unlabeled data. 13

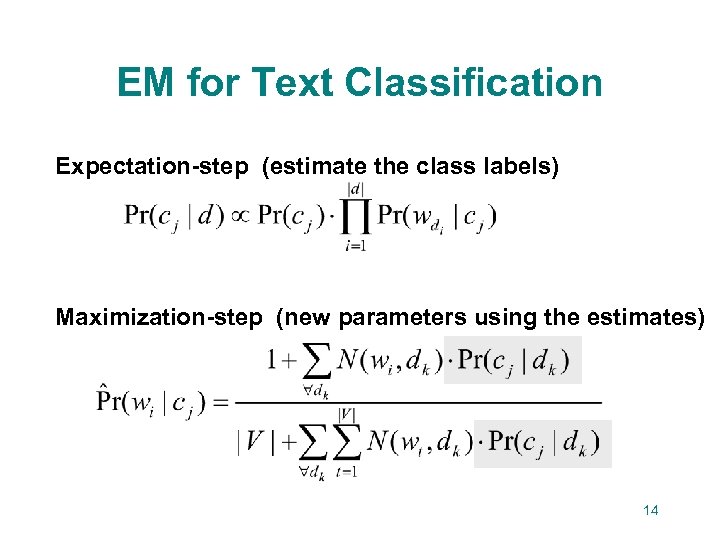

EM for Text Classification Expectation-step (estimate the class labels) Maximization-step (new parameters using the estimates) 14

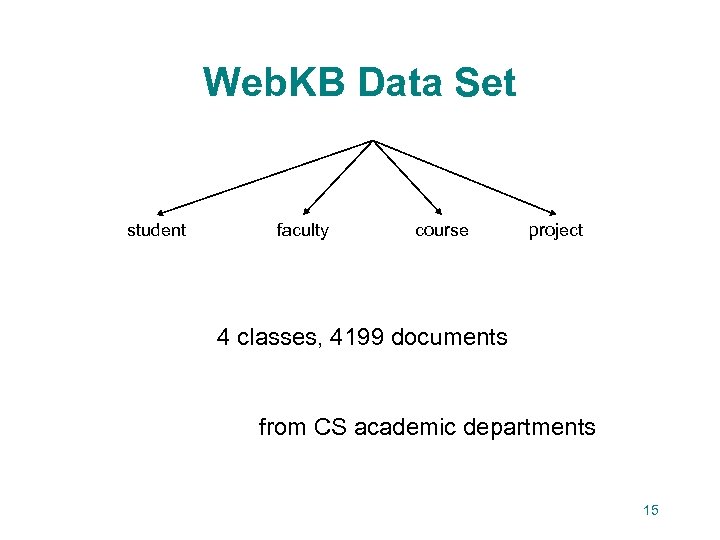

Web. KB Data Set student faculty course project 4 classes, 4199 documents from CS academic departments 15

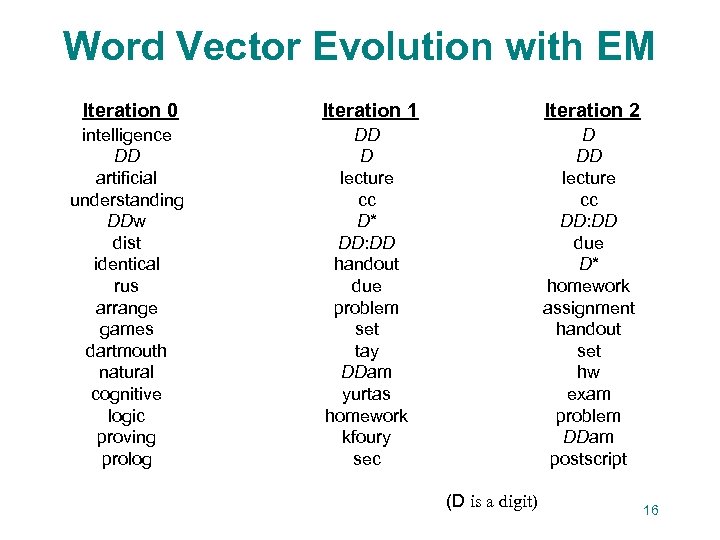

Word Vector Evolution with EM Iteration 0 Iteration 1 Iteration 2 intelligence DD artificial understanding DDw dist identical rus arrange games dartmouth natural cognitive logic proving prolog DD D lecture cc D* DD: DD handout due problem set tay DDam yurtas homework kfoury sec D DD lecture cc DD: DD due D* homework assignment handout set hw exam problem DDam postscript (D is a digit) 16

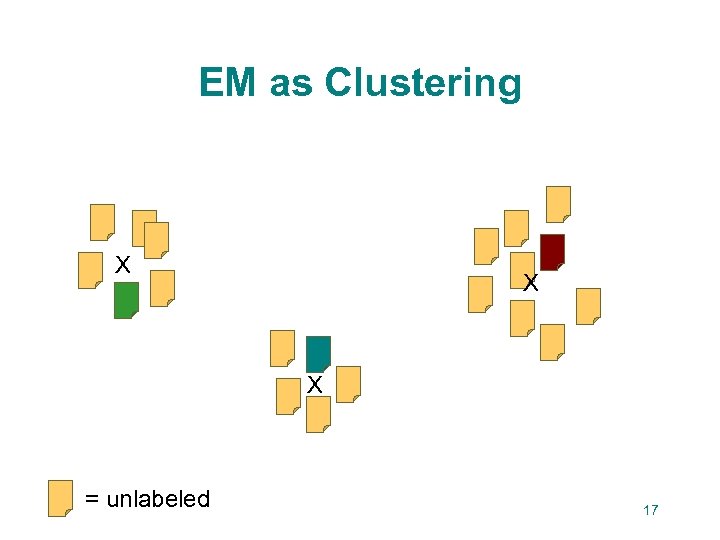

EM as Clustering X X X = unlabeled 17

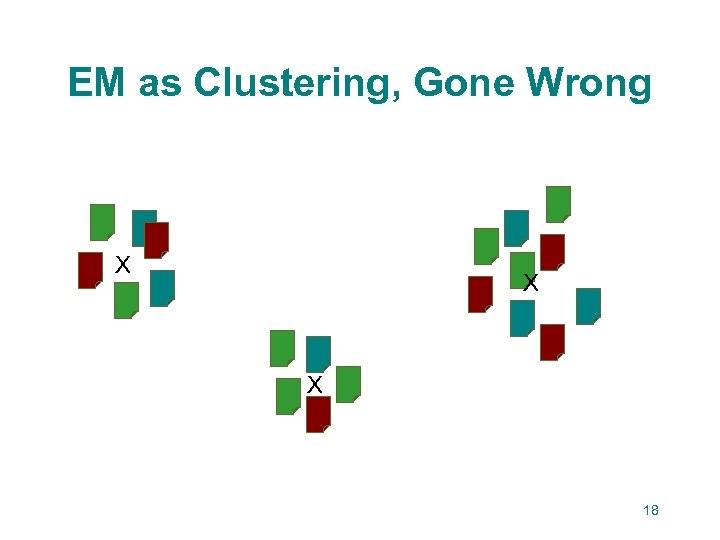

EM as Clustering, Gone Wrong X X X 18

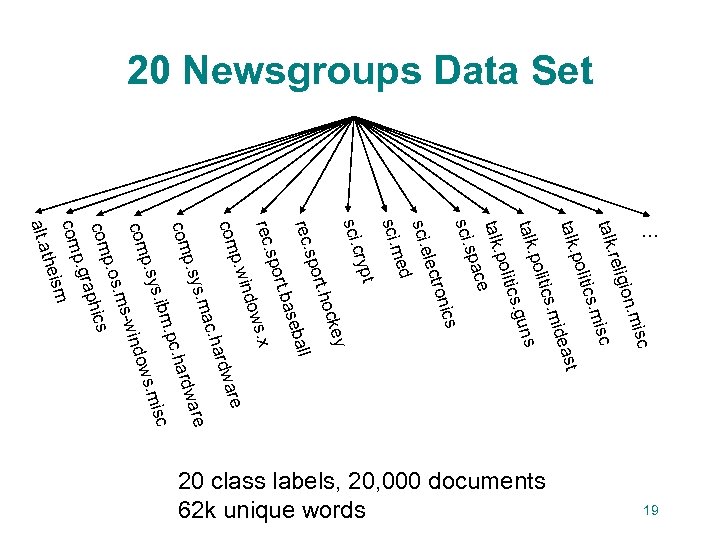

20 Newsgroups Data Set isc ion. m relig talk. isc ics. m polit st talk. idea ics. m polit talk. uns ics. g polit talk. pace sci. s s onic lectr sci. e ed sci. m rypt sci. c ckey t. ho spor ll rec. seba t. ba spor rec. ws. x ndo p. wi re com rdwa c. ha s. ma are p. sy ardw com. pc. h c s. ibm. mis p. sy ows com wind. msp. os com ics aph p. gr com m theis alt. a … 19 20 class labels, 20, 000 documents 62 k unique words

Newsgroups Classification Accuracy varying # labeled documents 20

Newsgroups Classification Accuracy varying # unlabeled documents 21

Web. KB Classification Accuracy varying # labeled documents 22

Web. KB Classification Accuracy varying weight of unlabeled data 23

Web. KB Classification Accuracy varying # labeled documents and selecting unlabeled weight by CV 24

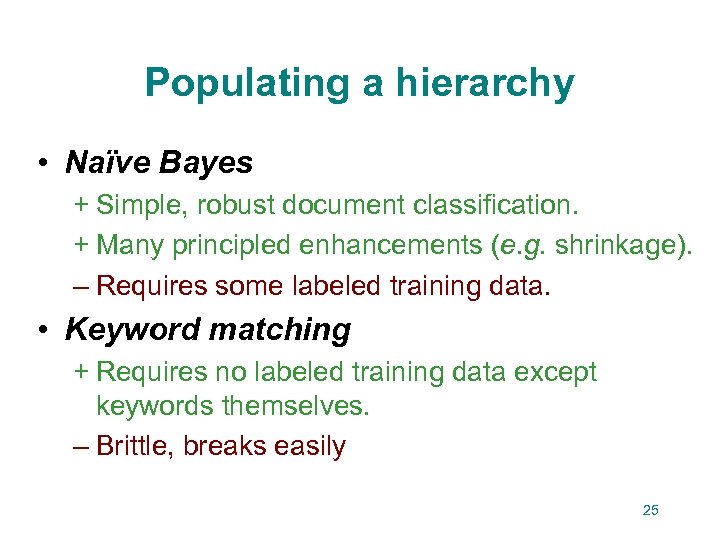

Populating a hierarchy • Naïve Bayes + Simple, robust document classification. + Many principled enhancements (e. g. shrinkage). – Requires some labeled training data. • Keyword matching + Requires no labeled training data except keywords themselves. – Brittle, breaks easily 25

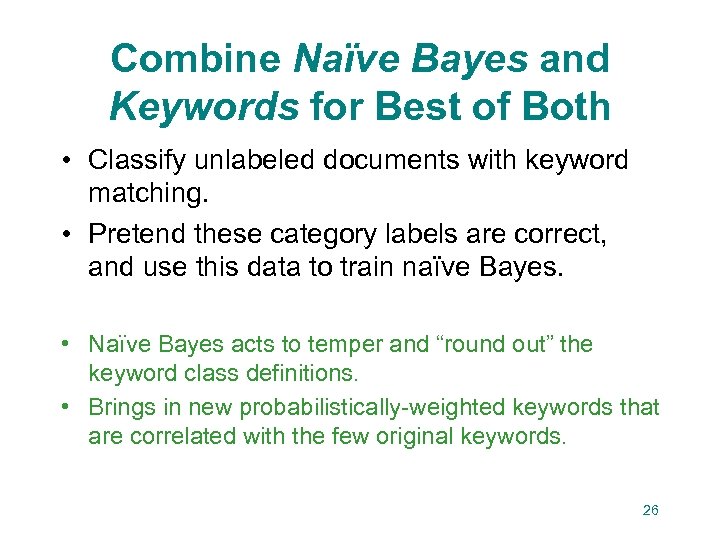

Combine Naïve Bayes and Keywords for Best of Both • Classify unlabeled documents with keyword matching. • Pretend these category labels are correct, and use this data to train naïve Bayes. • Naïve Bayes acts to temper and “round out” the keyword class definitions. • Brings in new probabilistically-weighted keywords that are correlated with the few original keywords. 26

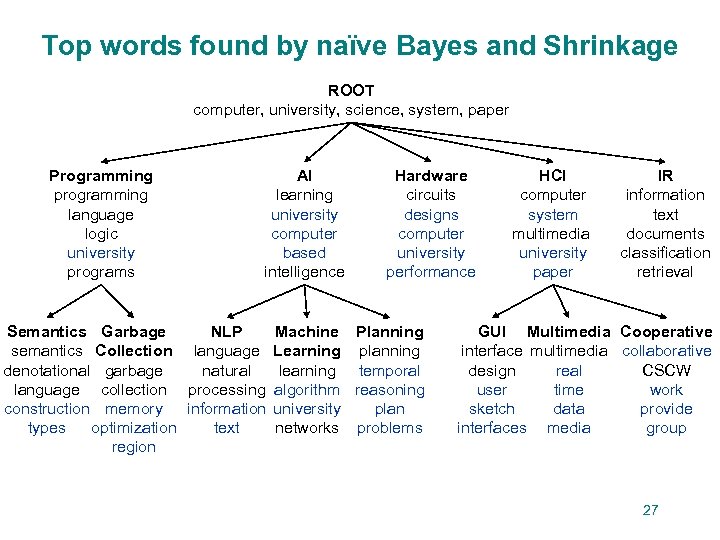

Top words found by naïve Bayes and Shrinkage ROOT computer, university, science, system, paper Programming programming language logic university programs AI learning university computer based intelligence Hardware circuits designs computer university performance Semantics Garbage NLP Machine Planning semantics Collection language Learning planning denotational garbage natural learning temporal language collection processing algorithm reasoning construction memory information university plan types optimization text networks problems region HCI computer system multimedia university paper IR information text documents classification retrieval GUI Multimedia Cooperative interface multimedia collaborative design real CSCW user time work sketch data provide interfaces media group 27

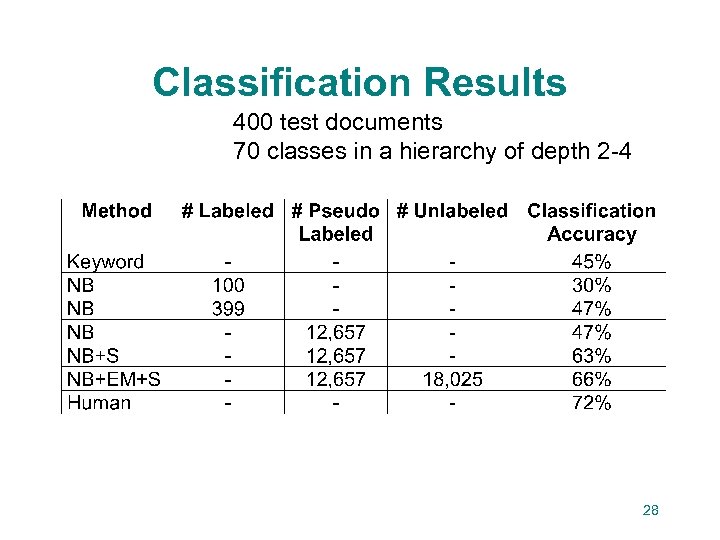

Classification Results 400 test documents 70 classes in a hierarchy of depth 2 -4 28

Conclusions • Naïve Bayes is a method of document classification based on Bayesian statistics. • Many parameters to estimate. Requires much labeled training data. • We can build on its probabilistic, statistical foundations to improve performance (e. g. unlabeled data + EM) • These techniques are accurate and robust enough to build useful Web services. 29

f9c7d9095937c95a235764c0c0215354.ppt